Abstract

Online tools are increasingly being used by students to cheat. File-sharing and homework-helper websites offer to aid students in their studies, but are vulnerable to misuse, and are increasingly reported as a major source of academic misconduct. Chegg.com is the largest such website. Despite this, there is little public information about the use of Chegg as a cheating tool. This is a critical omission, as for institutions to effectively tackle this threat, they must have a sophisticated understanding of its use. To address this gap, this work reports on a comprehensive audit of Chegg usage conducted within an Australian university engineering school. We provide a detailed analysis of the growth of Chegg, its use within an Australian university engineering school, and the wait time to receive solutions. Alarmingly, we found that over half of audited units had cheating content on Chegg, indicating that Chegg is broadly used to cheat, and that 50% of questions asked on Chegg are answered within 1.5 h. This makes Chegg an appealing tool for academic misconduct in both assignment tasks and online exams. We further investigate the growth of Chegg and show its use is above pre-pandemic levels. This work provides valuable insights to educators and institutions looking to improve the integrity of their courses through assessment and policy development. Finally, to better understand and tackle this form of misconduct, we call on education institutions to be more transparent in reporting misconduct data and for homework-helper websites to improve defences against misuse.

Introduction

Academic integrity is of critical importance to the modern tertiary education sector and underpins pedagogical approaches to teaching and learning. To ensure the integrity of their courses, academics and institutions must be aware of the latest methods students use to cheat. Universities must be agile in their response to new trends in academic integrity and student misconduct, especially given the new challenges and opportunities offered by the digital era. While early academic integrity research placed emphasis on plagiarism (Walker, 1998), new forms of misconduct are growing, including homework-helper, contract cheating and file-sharing websites (Curtis et al., 2022; Lancaster & Cotarlan, 2021a), automatic text-spinning and paraphrasing tools (Prentice & Kinden, 2018; Rogerson & McCarthy, 2017) and AI tools (Finnie-Ansley et al., 2022). Of interest in this paper is the growing prevalence of homework-helper websites, in particular Chegg.com (henceforth referred to as Chegg), the largest such website (Chegg Inc, 2022; Nasdaq Inc., 2022).

Homework-helper websites offer to aid students in their learning; however, they also represent a growing threat to academic integrity. In this article we draw a distinction between contract-cheating websites (e.g. essay mills), which exist exclusively for the purpose of cheating, and homework-helper websites, which present as a legitimate service but whose business model is extremely vulnerable to misuse. We also make a distinction between homework-helper and file-sharing websites. The latter term is commonly used in literature, but is not preferred by the authors of this article, as file sharing is only one of the services offered by homework-helper websites (Lancaster & Cotarlan, 2021a).

Homework-helper websites offer to aid students in their studies through a range of services, for example question & answer (Q&A) services, file-sharing (e.g. study notes and assessment) and citation assistance. Of concern in this paper are Q&A services, which are identified as a concerning source of cheating (Broemer & Recktenwald, 2021; Lancaster & Cotarlan, 2021a). Through these Q&A services, students can look up solutions to questions on a database or submit their own questions to be solved by the website’s ‘tutors’ (these are then added to the database). By far the largest homework-helper website is Chegg, with a market capitalisation of 3.7 billion USD and 7.3 million subscribers (Chegg Inc, 2022; Nasdaq Inc., 2022). Other large homework-helper sites include CourseHero, Studocu and Bartleby. These websites generally operate under a subscription model by which students pay a monthly fee (14.95 USD for Chegg as of writing) to access the solution database and to ask their own questions. While these sites purport to be for legitimate study, they are highly vulnerable to misuse, and there are limited mechanisms to prevent students using these Q&A services to cheat. Broemer and Recktenwald (2021) proposed that Chegg’s Q&A service is primarily used for cheating, a view further supported by Lancaster and Cotarlan (2021a). Figure 1 provides context on the use of these sites by showing (a) a unique assessment question, (b) Google search results identifying solutions to the question on (c-d) Chegg and (e) CourseHero.

Considering a student’s motivations to cheat, homework-helper websites are highly appealing. A small portion of students routinely cheat, while a much larger group, approximately 44%, fall within the cheat-curious category – these are students who may cheat under certain circumstances (Bretag et al., 2018, 2019; Rigby et al., 2015). Drivers of cheating behaviour include a perception of low risk (Diekhoff et al., 1999), perception that there are lots of opportunities to cheat (Bretag et al., 2019) and perception of norms (Curtis et al., 2018) (i.e., the perception that cheating is the norm, or that others are cheating).

Homework-helper websites embody these motivators. They appear high in search engine results pages (often as the first result), and thus may appear when students engage in normal and healthy study behaviour (e.g. researching an assignment problem). It takes just one student to upload an item to a homework-helper website for it to appear in common search engines like Google. The appearance of these links provides a low barrier to entry, provides ample opportunity and feeds into a low perception of risk. Further, the fact that a peer has uploaded the assessment to a homework-helper site feeds into the perception that others are engaging in this behaviour. Combined, these factors leave cheat-curious students vulnerable. Finally, due to the large subscriber base and ease of uploading questions, these websites can rapidly include unique assignment questions (Christodoulou, 2022).

The global COVID-19 pandemic has increased rates of academic misconduct and usage of homework-helper websites (Comas-Forgas et al., 2021; Erguvan, 2021; Lancaster & Cotarlan, 2021a). Subsequently, the pandemic has seen an increased focus from tertiary education institutions on modern trends in academic misconduct (Erguvan, 2021; Reedy et al., 2021; Turner et al., 2022). A recent study by Lancaster and Cotarlan (2021a) examined the impact of COVID-19 on Chegg usage, finding a 196% increase following the transition to online learning. More recently, an international survey of 1,000 students by Slade et al. (2024) found a third had used a file-sharing or homework-helper website, a more than tenfold increase over five years (Bretag et al., 2019; Slade et al., 2024). Further, of those who had downloaded content, 48% had done so to get an answer for a test or exam, and these answers were then commonly shared with other students. This trend is particularly concerning for engineering and other STEM disciplines. STEM is known to be overrepresented in academic misconduct (Bretag et al., 2019; Lancaster & Cotarlan, 2021a). For example, a large-scale Australian survey of students found engineering students were 1.8 × more likely to engage in cheating behaviour (Bretag et al., 2019). Adding to this concern, Chegg’s own data shows that a majority (59%) of their userbase are STEM students (Chegg Inc, 2022; Nasdaq Inc., 2022).

While there is growing concern about homework-helper websites in the tertiary education sector, there remains little information on the use of these websites. To demonstrate, as of the date of writing (2023), a Scopus search for ‘Chegg,’ the largest homework-helper website, returns nine journal articles, with only four related to academic misconduct; the others relate to Chegg’s textbook hiring service or are unrelated to the Chegg website (e.g. related to geology) (Busch, 2017; Emery-Wetherell & Wang, 2023; Lancaster & Cotarlan, 2021a; Ruggieri, 2020). Lancaster and Cotarlan (2021a) studied the impact of COVID-19 on Chegg usage, finding a 196% increase due to COVID-19 and the shift to online learning; Ruggieri (2020) found 38 – 71% of physics students reported Chegg usage, with increased usage in more advanced units; Busch (2017) explored methods to reduce usage of sites like Chegg; and Emery-Wetherell and Wang (2023) explored cheating via Chegg in an introductory statistics course, explored ways to discourage cheating, and provided code to help identify cheating students.

Broader searches uncover additional literature. In a valuable conference paper, Broemer and Recktenwald (2021) presented a detailed investigation of Chegg usage in a 2-h online mechanical engineering exam, identifying 129 unique posts to Chegg, with 71% of posts answered during the exam (50% answered within 1 h). Interestingly, many answered posts were not viewed during the exam, even by the uploader. Manoharan and Speidel (2020) uploaded assignment questions to Chegg to investigate factors such as solution quality and the time for a solution to be provided, finding that easy questions were answered quickly and correctly, while complex questions were not answered or received substandard answers. Interestingly, Manoharan and Speidel (2020) ensured the questions they uploaded were clearly identifiable as formative assessment, which violates Chegg’s policy and which Chegg tutors are required to report. None of these questions were identified as attempts to cheat. Finally, Somers et al. (2023) developed a tool to automatically detect the upload of questions to file-sharing and homework-helper sites like Chegg.

As Chegg is the largest homework-helper website, this lack of investigation represents a major gap in our understanding of academic misconduct and cheating. With such sparse information, developing effective strategies and policies is near impossible. To address this gap, this article aims to present a detailed understanding of Chegg usage within an Australian technology university engineering school. This article provides insights into the growth of Chegg’s homework-helper service, prevalence of Chegg usage, and time required to receive a solution on Chegg. The findings provide a valuable resource for institutions and academics in understanding the challenge presented by homework-helper websites.

Methodology

The first objective of this study was to build an accurate understanding of the growth of Chegg. While Chegg does not openly publish the number of questions asked on their platform, each question has a publish date in the page’s source code. For example, the first mechanical engineering question on Chegg was asked on 20-Sep-2005 with the address: https://www.chegg.com/homework-help/questions-and-answers/need-solution-problems-q8. Further, each question has a unique number; the above question is number 8. These numbers are sequential. As of writing this paper (24-Jul-2023), the most recent mechanical engineering question is number 117666519 with the address: https://www.chegg.com/homework-help/questions-and-answers/create-excel-program-determines-forces-member-supports-dimension-force-p-varies-q117666519. Using this data, the date and question number were extracted for the first question of each year. This provided accurate data on the number of questions uploaded over any 12-month period, and the total number of questions at each time point. While not used in this study, further information can be obtained from the website source code, including ‘publishedDate’ and ‘answerCreatedDate.’ We share this as it may be useful to the reader, noting that the source code and variables differ between the free-preview and paid-subscription webpages. A verification of the relationship between question ID and date-time is provided in the supplementary information. Note that in the second half of 2023, Chegg changed their numbering system, and the relationship between question ID and date-time no longer follows that observed prior to June-2023. As such, this analysis is not presented past June-2023.
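
As an illustration of the ID-based counting described above, the following is a minimal Python sketch, not the authors' actual tooling. It assumes the question ID is always the trailing `-q<digits>` part of the URL (as in the two example addresses) and that IDs are sequential without gaps. The function names and the `first_ids` mapping in the usage note are hypothetical.

```python
import re
from datetime import date

# Assumption: the question ID is the trailing "-q<digits>" of the URL,
# as in the two example addresses quoted in the text.
QUESTION_ID_PATTERN = re.compile(r"-q(\d+)$")


def question_id(url: str) -> int:
    """Extract the numeric question ID from a Chegg Q&A URL."""
    match = QUESTION_ID_PATTERN.search(url.strip())
    if match is None:
        raise ValueError(f"no question ID found in URL: {url!r}")
    return int(match.group(1))


def questions_per_period(first_ids: dict) -> dict:
    """Given {period label: ID of the first question in that period},
    in chronological order, return the number of questions asked in
    each period except the last (whose end is not yet known)."""
    labels = list(first_ids)
    return {
        labels[i]: first_ids[labels[i + 1]] - first_ids[labels[i]]
        for i in range(len(labels) - 1)
    }


first_url = ("https://www.chegg.com/homework-help/questions-and-answers/"
             "need-solution-problems-q8")
latest_url = ("https://www.chegg.com/homework-help/questions-and-answers/"
              "create-excel-program-determines-forces-member-supports-"
              "dimension-force-p-varies-q117666519")

first_id = question_id(first_url)
latest_id = question_id(latest_url)

# Mean questions per day between the two dates quoted in the text.
elapsed_days = (date(2023, 7, 24) - date(2005, 9, 20)).days
mean_questions_per_day = (latest_id - first_id) / elapsed_days
```

With a mapping such as `{"2019": id_a, "2020": id_b, "2021": id_c}` (placeholder IDs, not values from this study), `questions_per_period` returns the count for 2019 and 2020 as simple differences of consecutive first-question IDs.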

To understand the scope of Chegg usage within the Australian engineering education context, an audit was conducted within an engineering school of an Australian technology university. During the second half of 2020, unit-coordinators (i.e. the staff member in charge of a given unit, unit referring to an individual unit of study) were asked to provide current and historic assessment task descriptions; these included take-home assignments and online exams. All unit-coordinators were contacted, a total of 50 units. Following provision of assessment task descriptions, key sentences and paragraphs were extracted and searched for using both the Google and inbuilt Chegg search engine. This work was completed by a staff member experienced in searching for assessment content online. When content on Chegg was identified which matched the assessment tasks, it was recorded, and a Digital Millennium Copyright Act (DMCA) takedown request was sent through to Chegg’s copyright office, along with a request to provide the upload and solution date and time. During the time period this study was conducted, Chegg responded to these requests for additional information. Using this, the time to solution was calculated as the difference between the question upload time and solution time. All assessment tasks used in this study were summative assessment items where the upload of these to homework-helper websites, like Chegg, contravened the university’s academic integrity policy and is considered cheating. The engineering school has an annual enrolment of approximately 800 equivalent full-time students, where a full-time load is eight units per year (e.g. four units per semester, two semesters per year). In the audit year, 845 equivalent full-time students were enrolled.
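
The time-to-solution calculation described above is a simple timestamp difference. The sketch below shows one way it could be computed. The function name and the example timestamps are hypothetical and are not taken from the audit dataset.

```python
from datetime import datetime, timezone
from typing import Optional


def time_to_solution_hours(uploaded_at: datetime,
                           answered_at: Optional[datetime]) -> Optional[float]:
    """Return hours between question upload and solution,
    or None if the question was never answered."""
    if answered_at is None:
        return None
    delta = answered_at - uploaded_at
    if delta.total_seconds() < 0:
        raise ValueError("solution time precedes upload time")
    return delta.total_seconds() / 3600.0


# Hypothetical timestamps for illustration only.
uploaded = datetime(2020, 6, 1, 9, 0, tzinfo=timezone.utc)
answered = datetime(2020, 6, 1, 10, 30, tzinfo=timezone.utc)
hours = time_to_solution_hours(uploaded, answered)
```

Keeping unanswered questions as `None` rather than dropping them allows the proportion of questions eventually solved to be computed from the same records.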

Results

The total number of questions in Chegg’s Q&A section and number of questions per year is presented in Fig. 2. On first appearance, the growth (blue line) appears exponential; however, deeper analysis reveals more complexity. In the early years, 2005–2009, Chegg grew by between 2 and 7 × relative to the previous year; however, due to the low number of total questions, this growth is small relative to later years. In Nov-2013 Chegg was listed on the New York Stock Exchange (NYSE), and in the following years the question rate rapidly increased. Between 2016 and 2019, the question rate remained relatively stagnant at between 7.5 and 9.5 × 10⁶ per year. This dramatically increased to 22 × 10⁶ following the COVID-19 pandemic (declared a pandemic by the WHO in Mar-2020). More granular data is presented in the Fig. 2 inset, which shows the number of questions asked on the Chegg platform per month in the 2019–2023 calendar years. A clear annual trend is observed with usage peaking in April and November, presumably aligning to annual tertiary education calendars. April-2020 shows an unusually large increase correlated with the transition to online learning (Lancaster & Cotarlan, 2021a); this increase is sustained into 2021. Usage in 2022 is down from the 2020–2021 pandemic peaks, but still above pre-pandemic norms. In Nov-2022, ChatGPT was released by OpenAI, presenting new concerns for academic integrity (Mrabet & Studholme, 2023; Rahman & Watanobe, 2023), and commentators predicted a shift away from homework-helper and file-sharing websites like Chegg (Singh, 2023). Early data from 2023 does not support these predictions, with 2023 usage being above 2022 (though below the 2020–2021 peak correlated with the COVID-19 transition to online learning), although this is early data and these trends could shift as the full impact of generative AI is realised.

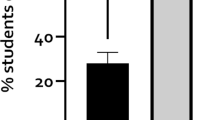

Of the 50 unit coordinators contacted during the audit, 41 (82%) provided assessment task descriptions, accounting for 750 equivalent full-time students (89% of the total). A breakdown of the audit findings is provided in Fig. 3a. Of the respondent units, a majority (56%) had assessment content found on Chegg. Through this process, 1180 solutions were found on Chegg which directly matched assessment content, with the largest unit having 394 matches identified. We reiterate that the uploading of these 1180 assessment items to Chegg constituted academic misconduct, as these were efforts to subvert assessment. The full dataset of items identified on Chegg is provided in the supplementary information, including the question ID, question date, answer date, question, and solution. Figure 3b shows the number of matching items found online per unit, ranked in descending order. For units with many matches identified, the same assessment task appeared multiple times. Note that each matching item identified represented a unique case of assessment being uploaded to Chegg. As both present and historic assessment tasks were investigated, the identified solutions are spread over several years, with 2020 accounting for 32% of solutions, 2019 33%, 2018 15%, 2017 12%, and the earliest found item originating from 2011.
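
The headline audit proportions follow directly from the counts reported above. The short Python check below reproduces that arithmetic. The variable names are illustrative, and the count of units with content is inferred here from the reported 56% rather than read from the dataset.

```python
# Counts as reported in the text.
units_contacted = 50
units_responded = 41
efts_enrolled = 845          # equivalent full-time students in the audit year
efts_covered = 750           # students covered by responding units
share_with_content = 0.56    # share of respondent units with content on Chegg
matches_found = 1180

response_rate_pct = round(100 * units_responded / units_contacted)
coverage_pct = round(100 * efts_covered / efts_enrolled)

# Inferred (not read from the dataset): number of units with content on Chegg.
units_with_content = round(share_with_content * units_responded)

# Mean matches per affected unit; the distribution is heavily skewed,
# with the largest unit alone accounting for 394 matches.
mean_matches_per_affected_unit = matches_found / units_with_content
```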

Figure 4a shows the solution time from the 1180 items identified on Chegg. The curve shape appears analogous to the step response of a first order system. Upon first submission, questions were answered rapidly with 25% answered within 42 min, 50% within 1.5 h, and 75% within 4.5 h. Over time however, solution rate decreases, with only 87% answered within 24 h, asymptotically approaching 98%. Solution time has also been reducing, as demonstrated in Fig. 4b. In 2015 it took 1 h for 25% of questions to be solved, while in 2020 this reduced by 30% to 0.7 h. This reduction has been more dramatic for the time to solve 50% and 75% of questions, which has reduced by 61% (3.6 h down to 1.4 h) and 94% (62 h down to 3.5 h) respectively. Evidently, solution time is reducing, and it is reasonable to expect that these will reduce further. Finally, it is important to consider the proportion of questions which are eventually solved. This rate has remained relatively stable between 95 – 99%, without a distinct year-to-year trend.

Fig. 4 Time to solution from 1180 items identified on Chegg. (a) Cumulative distribution of percentage of questions answered at a given time. (b) Time to solution for various years for the 25th, 50th and 75th percentile. (c) Total proportion of questions solved by year. Results are not provided prior to 2015 as insufficient data was available.
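
Statistics of the kind reported for Fig. 4 (share answered within a window, and time for a given share to be answered) can be derived from a list of per-question solution times. The following is a minimal sketch under the assumption that unanswered questions are recorded as `None` and counted in the denominator. The function names and the sample data are hypothetical and do not come from the study's dataset.

```python
import math
from typing import List, Optional


def fraction_answered_within(solution_hours: List[Optional[float]],
                             window_hours: float) -> float:
    """Share of ALL questions (answered or not) solved within the window."""
    if not solution_hours:
        raise ValueError("no questions supplied")
    in_time = sum(1 for h in solution_hours
                  if h is not None and h <= window_hours)
    return in_time / len(solution_hours)


def time_to_reach_fraction(solution_hours: List[Optional[float]],
                           fraction: float) -> Optional[float]:
    """Smallest time by which at least `fraction` of all questions were
    answered, or None if that share is never reached."""
    answered = sorted(h for h in solution_hours if h is not None)
    needed = math.ceil(fraction * len(solution_hours))
    if needed == 0:
        return 0.0
    if needed > len(answered):
        return None
    return answered[needed - 1]


# Hypothetical solution times in hours; None marks an unanswered question.
sample = [0.5, 0.7, 1.0, 1.5, 2.0, 4.5, 10.0, 30.0, None, None]

share_within_1h = fraction_answered_within(sample, 1.0)
median_time = time_to_reach_fraction(sample, 0.5)
never_reached = time_to_reach_fraction(sample, 0.9)
```

Including unanswered questions in the denominator is what lets the cumulative curve level off below 100%, as described for Fig. 4a.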

Discussion

This study provides three valuable insights to academics and institutions on the use of Chegg as a tool for academic misconduct. These relate to (1) the growth of Chegg, (2) the prevalence of Chegg as a cheating tool, and (3) the rapid rate at which questions are answered. These are discussed below.

The Growth of Chegg

Over the past decade, Chegg has grown dramatically from a business with revenue of 213 million USD and 460 thousand subscribers, to a behemoth with revenue of 775 million USD and 7.8 million subscribers, an increase of 4.5 × and 17.0 × respectively (Chegg Inc, 2013, 2021). While Chegg can be used for both learning (legitimate) and cheating (non-legitimate) uses, there is a growing body of evidence that Chegg is a significant tool for cheating (Broemer & Recktenwald, 2021; Lancaster & Cotarlan, 2021a). As such, it is important to understand how the growth of Chegg might impact its use as a tool for academic misconduct. These figures however represent publicly available data through annual reports submitted to the U.S. Securities and Exchange Commission. To address this need, this manuscript provides new insights into the growth of Chegg and its use in the Australian engineering education context.

Over this same period, the annual number of questions asked on Chegg’s Q&A service has increased from 810 thousand per year (2011) to 25 million per year (2021), an increase of 29.9 ×. The greatest driver of growth appeared to be the global COVID-19 pandemic, which saw many courses internationally move to online-only modes. Over this period, annual questions asked increased from 9.6 million (2019) to 22.1 million (2020), an increase of 2.3 ×. This reflects the findings of Lancaster and Cotarlan (2021a), who noted a dramatic increase in the number of questions being asked on Chegg following the transition to online education.
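
For readers reproducing the growth multiples, the calculation is a ratio of annual question counts. The sketch below uses the 2019 and 2020 figures quoted above. The helper name is illustrative.

```python
def growth_factor(earlier: float, later: float) -> float:
    """Ratio of a later annual count to an earlier one."""
    if earlier <= 0:
        raise ValueError("earlier count must be positive")
    return later / earlier


# Annual questions in millions, as quoted in the text.
questions_2019 = 9.6
questions_2020 = 22.1

pandemic_growth = round(growth_factor(questions_2019, questions_2020), 1)
```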

While much focus has been placed on the increased misconduct risk due to the COVID-19 pandemic, it is important to note that this isn’t the first time Chegg has seen dramatic growth and may not be the last. Both 2016 and 2017 saw more than a doubling in annual Chegg questions, over the previous year. While the move to online learning is correlated with increased misconduct (Maryon et al., 2022), it would be remiss of educational institutions to assume this was the only significant driver. Pre-pandemic, Chegg had already shown incredible capacity for growth. Based upon previous trends, Chegg usage can be expected to grow in the coming years. With this, unless action is taken, tertiary education institutions can expect increased misconduct through the use of Chegg and similar sites.

The future of Chegg, and similar file-sharing websites, is challenged by the rise of generative AI such as ChatGPT. Commentators have proposed that these tools will replace file-sharing and homework-helper sites both as tools for learning and for academic misconduct (Singh, 2023). Multiple articles have also demonstrated the effectiveness of large language models, like ChatGPT, in solving university-level assessment and exams (Gilson et al., 2023; Lo, 2023; Nikolic et al., 2023). While early, the data presented in the Fig. 2 inset does not support this prediction, with 2023 Chegg usage being in line with previous years, though below the 2020–2021 peak. We propose that the growth of generative AI will not replace file-sharing and homework-helper websites; rather, a new status quo will develop where both fill different niche uses. While there are limited tools to combat misconduct through file-sharing and homework-helper sites (Somers et al., 2023), significant effort is being invested in developing tools to combat misconduct via generative AI. For example, both TurnItIn (a current market leader in plagiarism detection) and GPTZero (a recent startup) are developing detectors for AI-written text (Weber-Wulff et al., 2023). There remains a market gap in tools able to combat academic misconduct through file-sharing and homework-helper websites.

In summary, from this analysis it is clear that Chegg has experienced year-on-year growth, driven by both intrinsic (e.g. listing on NYSE) and extrinsic (e.g. COVID-19 pandemic) factors. While we note that Chegg can be used for both learning and cheating, the findings of this study should be of concern to educators and institutions alike. As Chegg has grown, so too has the opportunity for it to be used as a cheating tool. With this, unless action is taken, tertiary education institutions can expect increased misconduct through the use of Chegg and similar sites.

Within the Australian context, there is additional cause for concern. Currently, 89% of Chegg’s revenue comes from the United States (Chegg Inc, 2021), with relatively little revenue coming from other countries like Australia. If Australia were to follow the trajectory of the United States, we can expect significant growth in Chegg usage in Australia. Institutions within the United States should also cautiously interpret the data presented in this study, given the much greater Chegg saturation in the United States.

Prevalence of Chegg as a Cheating Tool

The true rate of cheating within tertiary education institutions has long been debated, with Curtis et al. (2022) providing a summary of current literature. Self-reporting studies of contract cheating estimate rates of between 2 and 3.5% (Bretag et al., 2019; Curtis & Clare, 2017), while an incentivised truth-telling study estimated truer rates to be 7.9% for contract cheating and 11.4% for outsourcing (Curtis et al., 2022). Data from the Tertiary Education Quality and Standards Agency (Bretag et al., 2018, 2019; Rigby et al., 2015) classified student behaviours into the categories of ‘won’t-cheat’ (50%), ‘cheat-curious’ (44%), ‘outsourcing’ (4%) and ‘buy’ (2%).

A more recent survey of 1,000 students by Slade et al. (2024) found 6% had uploaded exam questions to homework-helper or file-sharing sites, while 16% had downloaded answers to exams. The impact of this behaviour is later magnified, with 71% of respondents who downloaded material on-sharing this to other students. Further, amongst the respondents, Chegg was the second-best known file-sharing or homework-helper site after CourseHero, but had the highest rate of paid users. These findings by Slade et al. (2024) highlight the threat presented by these sites, and interestingly demonstrate higher rates of cheating behaviour than those observed by Curtis et al. (2022).

Our results harmonise with these findings of Slade et al. (2024) but show the other side of the equation. While Slade et al. (2024) identified a high rate of students cheating via uploading to and downloading from homework-helper and file-sharing sites, our work identifies the result of this behaviour – in this case, a high rate of cheating content on Chegg. A weakness of our work however is that it does not provide visibility to the rates of students using these sites. This gap is filled by research like Slade et al. (2024) and other literature highlighted in our manuscript, which together show high rates of cheating through these sites.

The results of the present study are significant when considered in the context of these ‘buy,’ ‘outsource’ and ‘cheat-curious’ groups. Only one student in the ‘buy’ or ‘outsource’ group is required to upload a question to a homework-helper site, for the ‘cheat-curious’ students to be exposed to these solutions via online search engines. Given the combined size of these ‘buy’ and ‘outsourcing’ groups (6%), it is likely that many units will have a student from one of these categories who may upload assessment. Once uploaded, the ‘cheat-curious’ students are exposed to a solution, which can be accessed with a low barrier of entry, which when combined with other factors which increase cheating (e.g. deadlines, stress, assumption that other students are cheating) create an environment where these students may cheat.

In this work, we found 56% of investigated units had assessment content on Chegg. This material was presumably uploaded by students within the ‘buy’ and ‘outsource’ categories. Once this assessment content has been uploaded, the 44% ‘cheat-curious’ students are vulnerable to misconduct.

One weakness of this study is that it only considers the number of assessment items uploaded to Chegg; it did not consider the number of students who accessed these solutions. For the institution investigated, both uploading assessment and accessing solutions constitute academic misconduct, as both are actions intended to subvert the assessment design. In this study, we only have visibility of half the transaction. At its most benign, these results show that at least one student uploaded assessment content for each of the 23 units with assessment content on Chegg; however, it is reasonable to assume the rates are much higher.

These findings paint a picture where units are highly vulnerable to cheating through Chegg. A majority of the units investigated in this study had their integrity compromised through the use of Chegg. While this study only presents analysis from a single engineering school, it is reasonable to assume these results can be generalised to other institutions and subject areas. Other institutions should take these results as a warning bell, and should consider how the integrity of their degrees might be vulnerable to misconduct through Chegg and similar services. Further, as detailed, this study only considers one half of the transaction, blind to the rates of students downloading solutions from Chegg to cheat. Future studies which investigate this question would provide a valuable contribution.

Solution Time on Chegg

A critical omission in institutions’ understanding of the challenge and risks of homework-helper websites is the time delay between submitting a question and receiving a solution (i.e. solution time). This critical parameter dictates how effective these tools can be for cheating, and the types of assessment they can effectively be exploited for. In this work, we identify solution times as a function of year, finding the time to solve 50% of questions has dropped from 3.6 h (2015) to 1.4 h (2020). This is similar to Broemer and Recktenwald (2021), who found 50% of questions were answered within 1 h. If the current trend continues, these solution times will reduce further.

The results reveal that the vast majority (89%) of questions are answered within 24 h. This makes Chegg a very effective means of cheating on assessment items. Students can post a question with high certainty that they will have a solution the following day. More alarmingly, the short wait for a solution makes Chegg an effective option to cheat on timed online assessment (e.g. online quizzes or exams). In this study 40% of questions were answered within 1 h, and 58% within 2 h. As common exam durations are 1 or 2 h, students can upload assessment questions to homework-helper sites like Chegg with the expectation that many questions will be answered within the exam duration. These results are interesting when considered in the context of generative AI, which can give instantaneous answers to questions. Despite this, our data (Fig. 2 inset) shows Chegg usage in 2023, following the release of ChatGPT, was still tracking above pre-pandemic levels. It is yet to be determined how generative AI will disrupt the use of homework-helper and file-sharing sites like Chegg.

During the shift towards online education there has been significant discussion of the integrity of online exams (Abdel-Hameed et al., 2021). Institutions have been concerned about the inability to ensure integrity in online exams, which has seen some institutions use electronic proctoring software, albeit with some controversy (Kharbat & Abu Daabes, 2021). Studies are inconclusive as to whether online proctoring impacts average scores in online exams, with some finding an impact (Alessio et al., 2017) and others finding no impact (Yates & Beaudrie, 2009) (although the validity of the study by Yates and Beaudrie (2009) has been disputed (Englander et al., 2011)). While this work does not comment on the use of electronic proctoring, the data presented does demonstrate risks inherent in non-proctored online exams.

Conclusion

Students are increasingly turning to online tools to learn and cheat. Homework-helper websites like Chegg can provide valuable support to students, although often these sites are vulnerable to misuse and represent a significant risk to academic integrity. To effectively address these challenges, universities must have a sophisticated understanding of how homework-helper websites are misused. However, currently there is little published data. In this work we reveal the growth of Chegg, its penetration within an Australian technology university engineering school, and solution time. We show that Chegg was used for misconduct across a majority of audited mechanical engineering units. We further show that the rapid rate of Chegg solutions makes it an effective tool to cheat on both assessment and online exams. We also provide insights into the rapid growth of Chegg. While Chegg can be used for legitimate learning, its growth, coupled with its short solution time and use as a cheating tool in the majority of units audited in this study, paints a picture of a tool which can be effectively used to cheat. The data in this work provides critical information for academics and institutions looking to improve the integrity of their courses through assessment and policy development. To support these efforts, we implore institutions to have greater transparency regarding academic misconduct. We also implore homework-helper websites, if they wish to be considered legitimate tools, to significantly increase efforts to reduce misconduct and improve engagement with university integrity processes.

References

Abdel-Hameed, F. S. M., Tomczyk, Ł, & Hu, C. (2021). The editorial of special issue on education, IT, and the COVID-19 pandemic. Education and Information Technologies, 26(6), 6563–6566. https://doi.org/10.1007/s10639-021-10781-z

Alessio, H. M., Malay, N., Maurer, K., Bailer, A. J., & Rubin, B. (2017). Examining the effect of proctoring on online test scores. Online Learning Journal, 21(1), 146–161. https://doi.org/10.24059/olj.v21i1.885

Bretag, T., Curtis, G., McNeill, M., & Slade, C. (2018). Academic integrity in Australian higher education: A national priority. https://web.archive.org/web/20220317020107/https://www.teqsa.gov.au/sites/default/files/academic-integrity-infographic.pdf?v=1574919157. Accessed 22 Jul 2024.

Bretag, T., Harper, R., Burton, M., Ellis, C., Newton, P., Rozenberg, P., Saddiqui, S., & van Haeringen, K. (2019). Contract cheating: A survey of Australian university students. Studies in Higher Education, 44(11), 1837–1856. https://doi.org/10.1080/03075079.2018.1462788

Broemer, E., & Recktenwald, G. (2021). Cheating and Chegg: A retrospective. 2021 ASEE Virtual Annual Conference Content Access Proceedings. https://doi.org/10.18260/1-2--36792

Busch, H. (2017). One method for inhibiting the copying of online homework. The Physics Teacher, 55(7), 422–423. https://doi.org/10.1119/1.5003744

Chegg Inc. (2021). Chegg 2021 annual report. https://s21.q4cdn.com/596622263/files/doc_financials/2021/ar/2022-04-14-Chegg-Inc.-18637-Proxy-Annual-Report-Combo-(Final-pdf).pdf

Chegg Inc. (2022). Chegg Q3–22 Investor Presentation. https://s21.q4cdn.com/596622263/files/doc_presentations/presentation/2022/11/Chegg-Q3-22-Investor-Deck-FINAL.pdf

Chegg Inc. (2013). Chegg 2013 annual report. https://d1lge852tjjqow.cloudfront.net/CIK-0001364954/853c36b7-7d2f-4f75-9619-ed3f969144ff.pdf

Christodoulou, M. (2022). The billion-dollar industry helping students at major Australian universities cheat online assessments. Australian Broadcasting Corporation. https://www.abc.net.au/news/2022-07-30/cheating-rife-australian-unis-online-assessments-covid/101277426

Comas-Forgas, R., Lancaster, T., Calvo-Sastre, A., & Sureda-Negre, J. (2021). Exam cheating and academic integrity breaches during the COVID-19 pandemic: An analysis of internet search activity in Spain. Heliyon, 7(10), e08233. https://doi.org/10.1016/j.heliyon.2021.e08233

Curtis, G. J., & Clare, J. (2017). How prevalent is contract cheating and to what extent are students repeat offenders? Journal of Academic Ethics, 15(2), 115–124. https://doi.org/10.1007/s10805-017-9278-x

Curtis, G. J., Cowcher, E., Greene, B. R., Rundle, K., Paull, M., & Davis, M. C. (2018). Self-control, injunctive norms, and descriptive norms predict engagement in plagiarism in a theory of planned behavior model. Journal of Academic Ethics, 16(3), 225–239. https://doi.org/10.1007/s10805-018-9309-2

Curtis, G. J., McNeill, M., Slade, C., Tremayne, K., Harper, R., Rundle, K., & Greenaway, R. (2022). Moving beyond self-reports to estimate the prevalence of commercial contract cheating: An Australian study. Studies in Higher Education, 47(9), 1844–1856. https://doi.org/10.1080/03075079.2021.1972093

Diekhoff, G. M., Labeff, E. E., Shinohara, K., & Yasukawa, H. (1999). College cheating in Japan and the United States. Research in Higher Education, 40(3), 343–353. https://doi.org/10.1023/A:1018703217828

Emery-Wetherell, M., & Wang, R. (2023). How to use academic and digital fingerprints to catch and eliminate contract cheating during online multiple-choice examinations: A case study. Assessment and Evaluation in Higher Education. https://doi.org/10.1080/02602938.2023.2175348

Englander, F., Fask, A., & Wang, Z. (2011). Comment on The impact of online assessment on grades in community college distance education mathematics courses by Ronald W Yates and Brian Beaudrie. American Journal of Distance Education, 25(2), 114–120. https://doi.org/10.1080/08923647.2011.565243

Erguvan, I. D. (2021). The rise of contract cheating during the COVID-19 pandemic: A qualitative study through the eyes of academics in Kuwait. Language Testing in Asia, 11(1), 34. https://doi.org/10.1186/s40468-021-00149-y

Finnie-Ansley, J., Denny, P., Becker, B. A., Luxton-Reilly, A., & Prather, J. (2022). The robots are coming: Exploring the implications of OpenAI Codex on introductory programming (pp. 10–19). Australasian Computing Education Conference. https://doi.org/10.1145/3511861.3511863

Gilson, A., Safranek, C. W., Huang, T., Socrates, V., Chi, L., Taylor, R. A., & Chartash, D. (2023). How does ChatGPT perform on the United States medical licensing examination? The implications of large language models for medical education and knowledge assessment. JMIR Medical Education, 9, e45312. https://doi.org/10.2196/45312

Nasdaq Inc. (2022). Chegg, Inc. Common Stock. https://www.nasdaq.com/market-activity/stocks/chgg

Kharbat, F. F., & Abu Daabes, A. S. (2021). E-proctored exams during the COVID-19 pandemic: A close understanding. Education and Information Technologies, 26(6), 6589–6605. https://doi.org/10.1007/s10639-021-10458-7

Lancaster, T., & Cotarlan, C. (2021a). Contract cheating by STEM students through a file sharing website: A Covid-19 pandemic perspective. International Journal for Educational Integrity, 17(1), 3. https://doi.org/10.1007/s40979-021-00070-0

Lo, C. K. (2023). What is the impact of ChatGPT on education? A rapid review of the literature. Education Sciences, 13(4), 410. https://doi.org/10.3390/educsci13040410

Manoharan, S., & Speidel, U. (2020). Contract cheating in computer science: A case study. Proceedings of 2020 IEEE International Conference on Teaching, Assessment, and Learning for Engineering, TALE 2020 (pp. 91–98). IEEE. https://doi.org/10.1109/TALE48869.2020.9368454

Maryon, T., Dubre, V., Elliott, K., Escareno, J., Fagan, M. H., Standridge, E., & Lieneck, C. (2022). COVID-19 academic integrity violations and trends: A rapid review. Education Sciences, 12(12), 901. https://doi.org/10.3390/educsci12120901

Mrabet, J., & Studholme, R. (2023). ChatGPT: A friend or a foe? Proceedings of 3rd IEEE International Conference on Computational Intelligence and Knowledge Economy, ICCIKE 2023, 269–274. https://doi.org/10.1109/ICCIKE58312.2023.10131713

Nikolic, S., Daniel, S., Haque, R., Belkina, M., Hassan, G. M., Grundy, S., Lyden, S., Neal, P., & Sandison, C. (2023). ChatGPT versus engineering education assessment: A multidisciplinary and multi-institutional benchmarking and analysis of this generative artificial intelligence tool to investigate assessment integrity. European Journal of Engineering Education, 48(4), 559–614. https://doi.org/10.1080/03043797.2023.2213169

Prentice, F. M., & Kinden, C. E. (2018). Paraphrasing tools, language translation tools and plagiarism: An exploratory study. International Journal for Educational Integrity, 14(1), 1–16. https://doi.org/10.1007/s40979-018-0036-7

Rahman, M. M., & Watanobe, Y. (2023). ChatGPT for education and research: Opportunities, threats, and strategies. Applied Sciences, 13(9), 5783. https://doi.org/10.3390/app13095783

Reedy, A., Pfitzner, D., Rook, L., & Ellis, L. (2021). Responding to the COVID-19 emergency: Student and academic staff perceptions of academic integrity in the transition to online exams at three Australian universities. International Journal for Educational Integrity, 17(1), 1–32. https://doi.org/10.1007/s40979-021-00075-9

Rigby, D., Burton, M., Balcombe, K., Bateman, I., & Mulatu, A. (2015). Contract cheating & the market in essays. Journal of Economic Behavior and Organization, 111, 23–37. https://doi.org/10.1016/j.jebo.2014.12.019

Rogerson, A. M., & McCarthy, G. (2017). Using Internet based paraphrasing tools: Original work, patchwriting or facilitated plagiarism? International Journal for Educational Integrity, 13(1), 2. https://doi.org/10.1007/s40979-016-0013-y

Ruggieri, C. (2020). Students’ use and perception of textbooks and online resources in introductory physics. Physical Review Physics Education Research, 16(2), 020123. https://doi.org/10.1103/PhysRevPhysEducRes.16.020123

Singh, M. (2023). Edtech Chegg tumbles as ChatGPT threat prompts revenue warning. Reuters. https://www.reuters.com/markets/us/edtech-chegg-slumps-revenue-warning-chatgpt-threatens-growth-2023-05-02/

Slade, C., Curtis, G. J., & Thomson, S. (2024). Understanding how and why students use academic file-sharing and homework-help websites: Implications for academic integrity. Higher Education Research and Development. https://doi.org/10.1080/07294360.2024.2349290

Somers, R., Cunningham, S., Dart, S., Thomson, S., Chua, C., & Pickering, E. (2023). AssignmentWatch: An automated detection and alert tool for reducing academic misconduct associated with file-sharing websites. IEEE Transactions on Learning Technologies, 17, 310–318. https://doi.org/10.1109/tlt.2023.3234914

Turner, K. L., Adams, J. D., & Eaton, S. E. (2022). Academic integrity, STEM education, and COVID-19: A call to action. Cultural Studies of Science Education, 17(2), 331–339. https://doi.org/10.1007/s11422-021-10090-4

Walker, J. (1998). Student plagiarism in universities: What are we doing about it? Higher Education Research & Development, 17(1), 89–106. https://doi.org/10.1080/0729436980170105

Weber-Wulff, D., Anohina-Naumeca, A., Bjelobaba, S., et al. (2023). Testing of detection tools for AI-generated text. International Journal for Educational Integrity, 19, 26. https://doi.org/10.1007/s40979-023-00146-z

Yates, R. W., & Beaudrie, B. (2009). The impact of online assessment on grades in community college distance education mathematics courses. American Journal of Distance Education, 23(2), 62–70. https://doi.org/10.1080/08923640902850601

Acknowledgements

The authors sincerely thank the staff members who provided their assessment tasks for audit purposes. We further thank Karen Whelan for her guidance in drafting this manuscript. We acknowledge the School of Mechanical, Medical and Process Engineering (MMPE), Queensland University of Technology (QUT), which provided funding to support this work. Clancy Schuller was supported with funding from MMPE QUT. Edmund Pickering is supported by a QUT Centre for Biomedical Technologies research fellowship. We acknowledge review by QUT’s Human Research Ethics Coordinator, who deemed that this work did not fall under the criteria of human research.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions

Author information

Contributions

EP was responsible for conceptualization, writing and analysis. CS was responsible for data collection and reviewing. EP was the lead author in this work.

Ethics declarations

Conflict of Interests

The authors have no conflicts to declare.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pickering, E., Schuller, C. Chegg’s Growth, Response Rate, and Prevalence as a Cheating Tool: Insights From an Audit within an Australian Engineering School. J Acad Ethics (2024). https://doi.org/10.1007/s10805-024-09551-6

DOI: https://doi.org/10.1007/s10805-024-09551-6