Abstract

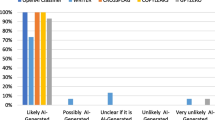

This study explores the capability of academic staff, assisted by the Turnitin Artificial Intelligence (AI) detection tool, to identify the use of AI-generated content in university assessments. Twenty-two experimental submissions were produced using OpenAI’s ChatGPT tool, with prompting techniques used to reduce the likelihood of AI detectors identifying AI-generated content. These submissions were marked by 15 academic staff members alongside genuine student submissions. Although the AI detection tool identified 91% of the experimental submissions as containing AI-generated content, only 54.8% of the content was identified as AI-generated, underscoring the challenges of detecting AI content when advanced prompting techniques are used. When academic staff members marked the experimental submissions, only 54.5% were reported to the academic misconduct process, emphasising the need for greater awareness of how the results of AI detectors may be interpreted. Student submissions and AI-generated content received similar grades (AI mean grade: 52.3; student mean grade: 54.4), showing the capabilities of AI tools in producing human-like responses in real-life assessment situations. Recommendations include adjusting the overall strategies for assessing university students in light of the availability of new Generative AI tools. This may include reducing the overall reliance on assessments where AI tools may be used to mimic human writing, or using AI-inclusive assessments. Comprehensive training must be provided for both academic staff and students so that academic integrity may be preserved.

Data Availability

The full commentaries left by academic staff members when marking the test submissions cannot be shared because of the confidentiality of the assessment results and associated comments. All other data generated or analysed during this study are included in this published article.

Acknowledgements

The authors are very grateful for the support of the academic staff who participated in the study and the ongoing support of the university Examinations Office team who provided technical assistance throughout the project.

Funding

No funding was received for this study.

Author information

Contributions

Mike Perkins conceived and designed the study. Data collection and analysis were performed by the authors Mike Perkins, Darius Postma, James McGaughran, and Don Hickerson. The first draft of the manuscript was written by Mike Perkins, and all authors subsequently revised the manuscript. All authors have read and approved the final manuscript.

Ethics declarations

Competing interests

Mike Perkins, Darius Postma, James McGaughran, and Don Hickerson are currently employed by the university where the study took place. Jasper Roe was previously employed at the same university. This study was not connected to or funded by Turnitin.

Ethics Approval and Consent to Participate

The study was approved by the institution’s Human Ethics Committee before commencement, and all participants provided informed consent with the option to opt out of the study at any point in time.

LLM Usage

This study used Generative AI tools to produce draft text and to revise wording throughout the production of the manuscript. Multiple versions of ChatGPT were used over different time periods, all based on the underlying GPT-4 Large Language Model. The authors reviewed, edited, and take responsibility for all outputs of the tools used in this study.

Preprint Publication

The initial version of this manuscript, prior to peer review, was posted on the arXiv preprint server and is available under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) licence at https://arxiv.org/abs/2305.18081.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Perkins, M., Roe, J., Postma, D. et al. Detection of GPT-4 Generated Text in Higher Education: Combining Academic Judgement and Software to Identify Generative AI Tool Misuse. J Acad Ethics 22, 89–113 (2024). https://doi.org/10.1007/s10805-023-09492-6

DOI: https://doi.org/10.1007/s10805-023-09492-6