Abstract

Questioning is a critical instructional strategy for teachers to support students’ knowledge construction in inquiry-oriented science teaching. Existing literature has delineated the characteristics and functions of effective questioning strategies. However, attention has focused primarily on the format of questions, such as open-ended questions that prompt student interaction or class discourse, rather than on the science content embedded in questions and how questions guide students toward learning objectives. Insufficient attention has also been paid to the connections within a chain of questions that a teacher uses to scaffold students’ conceptual understanding, especially when students encounter difficulties. Furthermore, existing methods of analyzing questions from the massive information in class discourse are unwieldy for large-scale analysis. Science teacher education needs an instrument to assess, across a large sample, pre-service teachers’ (PSTs’) competencies of not only asking open-ended questions to solicit students’ thoughts but also analyzing the information collected from students’ responses and determining the logic of consecutive responses. This study presented such an instrument and used it to analyze patterns in the questioning chains of 60 PSTs when they taught a science lesson during a methods course and another lesson during student teaching. Cohen’s Kappa was calculated to examine the inter-rater reliability of the coders. The PSTs’ orientations in the two videos were determined, and the correlation between them was calculated to test the validity of the instrument. Using data from this instrument, we identified patterns of the PSTs’ science teaching, discussed the importance of guiding questions in inquiry teaching, and suggested quantitative studies with this instrument.

Introduction

Questioning is an important strategy for teachers to interact with students (Benedict-Chambers et al., 2017; Chin & Osborne, 2008). In an inquiry-oriented setting, questioning is a promising method to diagnose students’ ideas, prompt productive discourses, and promote student thinking (Chin, 2007; Chin & Osborne, 2008). Chin (2007) defined the format of productive questioning as a chain of I (a teacher initiates a question)-R (students respond to that question)-F (the teacher asks a follow-up question)-R-F in comparison to I-R-E (the teacher evaluates students’ responses). Similarly in Socratic questioning, a series of questions are used to “drive his students to the conclusions to which he had wanted all along to drive them” (Gardner, 1999, p. 99). The model of I-R-F-R-F shares two features that separate teacher questions in inquiry teaching from those in traditional settings: (1) questions are for teachers to leverage student thinking yet hold students accountable for their knowledge construction; (2) questions are logically connected that gradually guide students toward learning objectives. The two features are involved in many instructional models, such as the 5E learning cycle (Bybee, 2000) and the learning-assistant model (Brewe et al., 2009). However, it remains unclear how science teachers put questions in a chain, how a question chain may potentially contribute to student learning, and what competencies teachers need to ask effective questions.

One reason is that existing instruments for questioning analysis focus primarily on the features or formats of individual questions and pay insufficient attention to the science content knowledge embedded in question chains. Although certain questions have been found effective in promoting class discourse or affective learning outcomes, their impact on students’ conceptual understanding remains unknown. For instance, Morris and Chi (2020) defined constructive questions as those that require students to go beyond presented materials, and active questions as those that require students to recall information or background knowledge. However, it is unclear in what direction students go beyond presented materials when prompted by constructive questions, or how recalling background knowledge contributes to students’ understanding of lesson objectives. Overlooking the science content embedded in questions and its relation to lesson objectives would lead to an overemphasis on open-ended questions. Open-ended questions are productive in prompting student thinking (Scott et al., 2006), but they may contribute little to students’ conceptual understanding because they contain no purposeful interventions that regulate student thinking, especially when it ventures off on a tangent. In the education of pre-service teachers (PSTs), overemphasizing open-ended questions may mislead PSTs toward a hands-off manner of inquiry teaching (Crawford, 2014), in which open-ended questions are assumed to be sufficient for students to develop sophisticated understanding as long as they articulate their thoughts.

Another challenge in preparing PSTs for questioning lies in the assessment of PSTs’ questions. A common method is discourse analysis of selected scenarios that involve teacher questioning (Erdogan & Campbell, 2008; Van Zee et al., 2001). This method provides rich information such as language use and conversational context but is unwieldy for large-scale analyses of questions from multiple scenarios or a large sample of PSTs. Furthermore, it may be inaccurate to infer a PST’s competence in questioning from 1–2 scenarios since teacher questioning is related to situational contingencies (Roth, 1996; Wang & Sneed, 2019). Teacher educators need an instrument that lets them easily extract critical information about a PST’s competence in questioning from numerous or lengthy scenarios of science teaching. In this study, we designed such an instrument that measures teachers’ practice of questioning. Like Soysal (2023), who examined teachers’ questioning in monologic, declarative, and dialectical patterns, we focused on the teacher side of the I-(R)-F-(R)-F model and investigated how different patterns of I-F-F from a teacher could potentially intervene with student learning. We did not include students’ responses in the analysis for two reasons. First, existing studies have established the connection between effective teaching practice and positive student learning outcomes (Araceli Ruiz-Primo & Furtak, 2006; McCutchen et al., 2002; Vescio et al., 2008). In this study, we designed an instrument to describe PSTs’ efforts of inquiry teaching via questioning and their potential impact on student learning. After validating this instrument, a future step would be to use students’ data to reflect on the actual impact of PSTs’ questioning. Secondly, we designed this instrument for large-scale qualitative analyses. Including students’ data would greatly increase the workload of coding and thus reduce the practicability of this instrument.
To thoroughly introduce our instrument, we investigated its validity and reliability (Q1 and Q2) and demonstrated its use in depicting PSTs’ practice of questioning in their inquiry teaching (Q3). Our research questions are the following:

Q1. Was this coding scheme valid to measure the PSTs’ questioning in science teaching?

Q2. Was this coding scheme reliable to measure the PSTs’ questioning in science teaching?

Q3. What patterns of science teaching from the PSTs were suggested by this coding scheme when they were required to teach a lesson through inquiry teaching?

Theoretical Framework

Teacher Questioning in Inquiry Teaching

Despite its various forms, inquiry teaching has an essential feature: students construct scientific knowledge by internalizing their first-hand experience with natural phenomena (Crawford, 2014). Three activities are important in this process: raising questions, conducting investigations, and formulating explanations (Bell et al., 2005). According to students’ accountability in the three activities, four types of inquiry teaching are defined along the inquiry spectrum (Table 1). From confirmation to open inquiry, there is a shift from teacher-centered to student-centered in the three inquiry activities of Question, Investigation, and Explanation. These three activities, which lie at the core of science (National Research Council [NRC], 2012), correspond to the stages of Engage, Explore, and Explain in the 5E learning cycle (Bybee, 2014). Engage is for students to develop curiosity or questions about the learning objectives associated with a science concept. Explore is for students to access activities reflective of learning objectives. Explain is for students to reflect on exploration experiences and develop understandings of learning objectives. The three phases of Engage, Explore, and Explain accommodate the three activities of Question, Investigation, and Explanation, which compose a complete cycle of scientific inquiry. Thus, we applied the first three E phases in this study to reify inquiry teaching that contextualized teacher questioning.

Teacher guidance is important in inquiry teaching to prevent students’ knowledge construction from proceeding in random or unproductive directions (Crawford, 2014). Questioning is an advantageous approach to teacher guidance because it maintains students’ sense of agency in problem solving (Kawalkar & Vijapurkar, 2013; Lehtinen et al., 2019). Researchers have argued that teacher questioning in inquiry teaching functions to elicit students’ thinking and promote their conceptual understanding (Chin, 2007; Chin & Osborne, 2008). Correspondingly, two types of questions can be derived that function differently in helping students achieve a learning objective: (1) questions aimed to gauge students’ understanding by prompting them to articulate their thoughts; (2) questions aimed to regulate student reasoning in a direction toward a learning objective. This categorization focuses on the science content knowledge embedded in questions rather than on other factors like cognitive demands or functions in prompting different formats of interaction. For example, Kawalkar and Vijapurkar (2013, pp. 14–16, Table 2) specified that teacher questioning should progress in a sequence of exploring prerequisites, generating ideas and explanations, probing further understanding, refining conceptions and explanations, guiding towards scientific conceptions, and eventually achieving the intended teaching goal. From the perspective of knowledge construction, this process can be represented as: probing (prerequisite) - guiding (the generation of ideas) - probing (further understanding) - guiding (the refinement of ideas) - guiding (toward scientific conceptions) - (achieving the goal). In this study, we labeled the two types of questions probing and guiding questions. Details about the two types of questions will be discussed later.

Questioning Assessment via Discourse Analysis

Discourse analysis is the examination of language use and interactional contexts in ongoing teaching events (Kelly, 2014). In science teaching, studies about the communicative approach have reached a similar finding: dialogic discourses enable deeper conceptual understanding among students than authoritative discourses such as teacher lecturing and evaluative questioning in the format of I-R-E (Almahrouqi & Scott, 2012; Krystyniak & Heikkinen, 2007; Manz, 2012; Vrikki & Evagorou, 2023). The reason is that dialogic discourses engage students in conversation with peers or a teacher. However, it is unclear how engaging in the conversation of dialogic discourses leverages students’ conceptual understanding. Teacher intervention is neglected in this process. For example, Almahrouqi and Scott (2012) found that the students receiving dialogic discourses demonstrated a sophisticated understanding of electric circuits such as “the battery just organizes the charges and supplies them with energy” (p. 303). Their transcript showed how the teacher solicited different opinions from students and then “the teacher uses this difference in points of view as a starting point to move towards the accepted scientific view” (p. 296). However, the authors did not explain how the students developed this understanding from their misconceptions that “charges run out” and “the wire does not have charges” (p. 296). It is unclear what practice from the teacher led to the transition from students’ different views to the accepted scientific view. Students’ conceptual understanding cannot take place automatically after they participate in group or class discussion (Smart & Marshall, 2013). Mortimer and Scott (2003) proposed five dimensions for discourse analysis: teaching purpose, science content, communicative approach, patterns of discourse, and teacher intervention. Regarding questioning, the dimension of teacher intervention has not received as much attention as the other four dimensions.
In a more recent study, Vrikki and Evagorou (2023) reemphasized the importance of dialogic pattern of questioning as opposed to the authoritative one. They claimed that dialogic questioning promoted student responses but did not specify how students’ engagement in class dialogues contributed to their conceptual understanding.

A review of the literature revealed three themes regarding discourse analyses of teacher questioning. First, the primary focus was on formats of questions, with insufficient attention to the science content embedded in questions. For example, Reinsvold and Cochran (2012) identified three categories of closed-ended, open-ended, and task-oriented questions and found that teacher questions during class discourses were primarily closed-ended and task-oriented. Smith and Hackling (2016) subcategorized open-ended questions into questions for different instructional activities, such as questions soliciting student observation and those soliciting student explanations. Most of the studies were in favor of open-ended questions, which seemed to epitomize inquiry teaching, as opposed to closed-ended questions in lecturing. The difference between the two lies in whether a question prompts active responses from students (i.e., open-ended) or terminates a conversation (i.e., closed-ended), but not in how a question facilitates student learning. Secondly, questions were analyzed with a small sample of teachers or lessons, such as two middle-school teachers (Aranda et al., 2020), two lessons from one teacher (Reinsvold & Cochran, 2012), three lessons from three teachers (Smith & Hackling, 2016), and six lessons from two teachers (Morris & Chi, 2020). Teacher questioning is contingent on science content and instructional context. Questions extracted from a small sample of lessons may not represent a science teacher’s general practice of questioning. Thus, the analysis may give a biased picture of the teacher’s competency of questioning. Thirdly, questions from teachers were analyzed separately, with limited attention to the connections between them and how they could potentially facilitate student learning. For example, Soysal (2022) identified eight types of challenging questions and investigated how each type was associated with the participants’ conceptual tools and ontological commitments.
We found two exceptions (Lehtinen et al., 2019; Smith & Hackling, 2016). Smith and Hackling (2016) claimed that a sequence of questions consistent with inquiry teaching was a teacher using open-idea questions to initiate class discussion, followed up with open-description questions to elicit student observation, then using open-explanation/reason questions to prompt student explanation, and ending with closed-ended questions to help students draw a conclusion. Lehtinen et al. (2019) found that the participating teachers used more non-specific guidance in the opening-up stage and more specific guidance in the closing-down stage. Both studies suggest an important feature of questioning in inquiry teaching in terms of a sequence from open-ended or divergent questions to specific guidance or convergent questions in the I-R-F-R-F chain (Chin, 2007).

Our Instrument for Questioning Analysis

Our instrument is composed of two parts: (1) discourse codes within a vignette of science teaching (Table 2); (2) vignette levels determined by the pattern of discourse codes (Table 3). We first defined open-ended or divergent questions as probing questions (pq, Table 2) aimed to collect information about student understanding of science content, such as questions soliciting student observation or explanation (Reinsvold & Cochran, 2012; Smith & Hackling, 2016). We defined specific guidance or convergent questions as guiding questions (gq) aimed to regulate student learning along a desired direction. Unlike closed-ended questions that require brief answers from students (Reinsvold & Cochran, 2012), guiding questions engage students in deep scientific reasoning in directions designated by teachers. The third type of question was checking questions (cq), which teachers use to interact with students for temperature checking. We labeled non-questioning discourses as “statement,” including lecturing (le) that intervenes with student learning by imparting knowledge directly to students, transition (tr) that shows a teacher’s engagement with student reasoning but contains no explicit intervention, error (er) that diverts student learning with negative intervention, and direction (di) for instructional directives. For large-scale analyses, we ignored the minute differences between questions for different activities because such differences entail intensive but peripheral information for the identification of intervention patterns. For example, we ignored the difference between questions soliciting students’ observation and those soliciting explanation and coded them both as probing questions that gauge students’ existing knowledge. Our focus was on the patterns of discourse codes. Repeated codes collapse into one set.
For example, a chain of “pq-tr-pq-tr-pq-tr-pq-tr” that described a teacher repeatedly paraphrasing students’ answers after each probing question would collapse into “pq-tr” that meant the teacher gauged students’ understanding without explicit intervention.
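The collapsing step can be illustrated with a short Python sketch. It assumes one reading of "repeated codes collapse into one set", namely that each distinct code is kept once, in order of first appearance. The function name `collapse_chain` is hypothetical and is not part of the instrument.

```python
def collapse_chain(chain: str, sep: str = "-") -> str:
    """Collapse repeated discourse codes in a chain.

    Assumption (not stated in this form in the text): each distinct
    code is kept once, in the order in which it first appears.
    """
    seen = []
    for code in chain.split(sep):
        code = code.strip()
        if code and code not in seen:
            seen.append(code)
    return sep.join(seen)


# The example chain from the text: repeated probing and paraphrasing.
print(collapse_chain("pq-tr-pq-tr-pq-tr-pq-tr"))  # pq-tr
```

Under this reading, the order of the remaining codes reflects when each kind of discourse first occurred, not how often it occurred.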

There were seven vignette levels based on seven different patterns of discourse codes that belonged to three categories of teachers’ potential intervention in student learning (Table 3). The category of Q is marked by the presence of “gq,” which describes situations where students construct content knowledge related to a learning objective with a teacher’s indirect intervention through questioning. “Qa” is completely student-centered with little teacher lecturing. It describes an ideal situation of inquiry teaching where students are prompted toward a learning objective solely by teachers’ guiding questions. “Qb” involves some teacher lecturing, but the lecturing is not directly related to the lesson objective. Take a lesson about floating and sinking, for instance: the definitions of volume and mass are prerequisite knowledge without which students cannot continue with their exploration. Thus, lecturing on the concept of volume or mass would be coded as “le,” and the vignette level is still at the Q level because the target knowledge about floating and sinking is not lectured by the teacher but constructed by students. The category of D is marked by the presence of “le*,” which describes a teacher’s intervention of directly imparting target content knowledge in an authoritative format. Students are no longer the agents of knowledge construction. “Da” is interactive lecturing where a teacher uses questions to check students’ understanding during direct instruction. “Db” is teacher-dominant lecturing where a teacher pays little attention to student understanding once direct instruction starts. Q and D levels are separated by the presence of “le*,” i.e., whether the lesson objective is achieved by students (Q) or their teacher (D). The category of N describes situations where a teacher contributes little to student learning due to the absence of any explicit interventions (i.e., gq or le).
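The category rules described above can be sketched as a small decision function. This is only an illustration under stated assumptions, because the full definitions are given in Table 3, which is not reproduced here. The sketch assumes that "le*" takes precedence over "gq", that an "er" code moves a Q or D vignette to "Qe" or "De", that any of pq, gq, or cq counts as a question during lecturing for "Da", and that a vignette with neither "gq" nor "le*" is "N". The function name `vignette_level` is hypothetical.

```python
QUESTION_CODES = {"pq", "gq", "cq"}


def vignette_level(chain: str) -> str:
    """Assign a vignette level from a chain of discourse codes.

    Illustrative sketch only; the precedence rules below are
    assumptions, not the authors' Table 3.
    """
    codes = {c.strip() for c in chain.split("-") if c.strip()}
    has_error = "er" in codes

    if "le*" in codes:  # target knowledge imparted directly: D category
        if has_error:
            return "De"
        # Assumed: any question alongside le* means interactive lecturing.
        return "Da" if codes & QUESTION_CODES else "Db"

    if "gq" in codes:  # indirect intervention through questioning: Q category
        if has_error:
            return "Qe"
        # "le" here is lecturing on prerequisite, non-target knowledge.
        return "Qb" if "le" in codes else "Qa"

    return "N"  # no explicit intervention found


print(vignette_level("pq-tr"))     # N
print(vignette_level("pq-gq"))     # Qa
print(vignette_level("le-pq-gq"))  # Qb
print(vignette_level("le*-cq"))    # Da
```

In practice the level is a coder's judgment made while watching the video, so a rule of this kind could at most serve as a consistency check on recorded codes.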

Teacher Knowledge of Questioning Suggested by Our Instrument

Science teachers need to possess certain knowledge to be able to ask effective questions. Adopting the framework of pedagogical content knowledge (Magnusson et al., 1999; Park & Oliver, 2008), we defined four components of teachers’ competency of questioning regarding a scientific topic (Table 4). For convenience, we referred to this knowledge in this study as Pedagogical Content Knowledge framework applied in Questioning (PCK-Q). The four components describe teachers’ understanding of science content and its representation in learning activities (knowledge of curriculum), awareness of students’ strengths and difficulties after probing their understanding (knowledge of students), preference of using questions in their responses to students (orientation), and ability of selecting appropriate questions in response to students (knowledge of instructional strategy). We did not incorporate the component of assessment because: (1) we focused exclusively on one method of assessment, i.e., questioning, rather than teachers’ knowledge of various methods of assessment; (2) we were more interested in how teachers respond to students after they assess students’ understanding.

Teachers’ PCK-Q can be inferred from their practice of questioning indicated by vignette levels (Table 3) in their science teaching. For example, a teacher who is more question-oriented is likely to have more vignettes belonging to the Q category (i.e., Qa, Qb, and Qe). A teacher who has stronger knowledge of curriculum is likely to have fewer vignettes with errors (i.e., Qe and De). We have validated the process of deriving PCK-Q from discourse codes in the context of college introductory physics (Wang et al., 2023). In that study, we collected multiple teaching videos from each participant. We found that the knowledge of curriculum, students, and instructional strategies were contingent on the context of discourse, especially science content. For example, a teacher more knowledgeable about classical mechanics than electromagnetism would demonstrate stronger knowledge in those three components while teaching classical mechanics. Their orientation was more consistent across content areas. In this study, we coded two teaching videos from each PST because of the large sample size. We used the component of orientation relatively independent from instructional topics to validate the instrument (i.e., Tables 2 and 3) in the context of K–8 science.

Methods

Context and Participants

We used mixed methods for this study (Ary et al., 2010). The participants were 60 PSTs from a science methods course selected through convenience sampling. All the PSTs were female. They were self-contained teacher candidates in a 2-year program of teacher preparation. This program was composed of four blocks, with each block lasting one semester. In the first block, the PSTs took courses about general issues in education, such as classroom management and equity in education. In the second block, the PSTs took instructional methods courses about different disciplines, like mathematics and science. In the first two blocks, the PSTs were placed with a practicing teacher in the field as their mentor 1 day per week. Their major task was to observe and facilitate lessons taught by their mentor teacher. The PSTs conducted their student teaching in the third and fourth blocks, when they were in the field 4 days a week and had more opportunities to be involved in teaching practice. In this program, the PSTs took only one science methods course, in the second block, where the 5E model and questioning were important learning objectives (Wang & Sneed, 2019). All five Es typically cannot fit in one lesson, and the PSTs had limited opportunities to teach in the field. Thus, the PSTs were required to practice with Engage, Explore, and Explain, which correspond to the three key activities of inquiry (Table 1) and compose a complete cycle of investigation. At the end of this course, the PSTs individually taught a science lesson that involved the three Es. During student teaching in the third block, the PSTs taught 3 science lessons on their own without any support from their mentor teacher. They were not required to use the 5E model or inquiry teaching in these lessons.

The PSTs recorded their teaching with a device that included a microphone carried by a PST for audio recording and an iPad on a robotic set following the microphone for video recording. From all the videos submitted from the PSTs, we selected the 60 participants’ individual lesson from the methods course in Block 2 and the first science lesson during student teaching in Block 3. In this paper, we referred to the former as course assignment (CA) and the latter as student teaching (ST). The PSTs had to discuss both CA and ST with their mentor teacher to align with his/her science teaching. Since the PSTs were novices, they often taught a topic that had already been addressed by their mentor teacher. To most of them, the task in both CA and ST was to review a scientific topic and deepen students’ understanding about it. In CA, the number of students in the lessons varied from 5 to 20, which depended on the availability of students as approved by PSTs’ mentor teacher. The length of CA ranged from 20 to 40 min. In ST, all PSTs had a full class of around 20 students. The length of ST ranged from 40 to 60 min. Overall, ST was more authentic than CA.

Video Coding

While coding a video, we first separated it into several episodes of instructional activities that represented how a teacher unpacked the lesson objective. For example, a lesson about floating and sinking might be chunked into four episodes: (1) the PST solicited students’ prior knowledge about floating and sinking; (2) students predicted whether certain objects would float or sink; (3) students worked in groups to test their predictions; (4) the PST summarized the features of objects that float with a T-chart. Multiple vignettes of teacher-student interaction unfolded in an episode. A vignette was a scenario of a teacher interacting with certain student(s) for a specific teaching purpose, which we referred to as a learning task. The vignette shifted when the task of a teacher-student interaction changed. For example, the vignette shifted when a PST switched the discussion from the properties of objects that sink to the properties of those that float. Another example of vignette shift was when a PST finished the conversation with one table of students and moved to another table during a laboratory.

In a vignette, we focused exclusively on PSTs’ discourse and coded them based on Table 2. For example, a PST asked three questions about the properties of objects that sank in a class discussion where all students participated. After each question, the PST paraphrased students’ answers without any lecturing. The discourse below was coded as “pq-tr-pq-tr-pq-tr.” According to Table 3, this vignette had a level of “N” because the PST probed students’ understanding without any explicit interventions. We provided a complete spectrum of examples for all levels (Table 3) in the Appendix.

“According to your lab, what is in common between the objects that sank?” (pq) - “Jack said heavy objects sank. Interesting.” (tr) - “What else? Katie.” (pq) - “Oh, metals sank. Thanks, Katie.” (tr) - “Brittney, how about you?” (pq) - “OK, you agree with them.” (tr)

To answer the third research question, vignette levels within an episode needed to be clustered into a quantitative value that describes how a teacher intervened with student learning in an instructional activity. When an episode contained multiple vignettes, its level was determined by the most salient or frequent vignette level. For example, an episode with four vignettes of “Da|Db|Da|N” would be labeled as “Da,” which indicated that the PST intervened with student learning in this episode mainly through interactive lecturing. Since the level of N denoted little teacher intervention, it had the least priority compared to the other levels in determining the episode level. For example, an episode with five vignettes of “N|N|N|Qb|N” had a level of “Qb,” which indicated that there was intervention from the PST to students’ learning through guiding questions in this instructional activity. To avoid confusion, we used the sign of “-” to connect PST discourse in a chain (e.g., “pq-tr-pq-tr-pq”) and the sign of “|” to connect multiple vignettes or episodes in a sequence (e.g., “N|N|N|Qb|N”).
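The episode-level rule can be sketched as follows. The sketch covers only the frequency part of "most salient or frequent", since salience is a coder's judgment. It assumes that "N" is ignored whenever any other level is present and that ties go to the level that appears first; the tie-breaking rule is not specified in the text. The function name `episode_level` is hypothetical.

```python
from collections import Counter


def episode_level(vignettes: str, sep: str = "|") -> str:
    """Derive an episode level from a sequence of vignette levels.

    Assumptions: "N" has the lowest priority and is dropped when any
    other level occurs; ties go to the level seen first.
    """
    levels = [v.strip() for v in vignettes.split(sep) if v.strip()]
    if not levels:
        raise ValueError("an episode needs at least one vignette level")

    # Drop "N" unless the episode contains nothing else.
    candidates = [lv for lv in levels if lv != "N"] or levels

    # Counter.most_common orders equal counts by first appearance.
    return Counter(candidates).most_common(1)[0][0]


# The two examples given in the text.
print(episode_level("Da|Db|Da|N"))    # Da
print(episode_level("N|N|N|Qb|N"))    # Qb
```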

Data Analysis

Video Coding

We used a pair-coding method that assigned a coder-reviewer pair to each video. As shown in Fig. 1, BW (initials of a pseudonym) was the coder who coded the video based on Table 2 and Table 3. TP (initials of a pseudonym) was the reviewer who watched the same video and marked in a different color any disagreement with the vignette or episode codes. To help the reviewer locate the codes in the video, the coder recorded the time stamp of the beginning of a vignette as “hour.minute.second.” In addition, key words were added to briefly describe the codes of “le*” and “gq” since they weighed more heavily in determining a vignette level. Thus, the codes also served as information locators to guarantee that the discussion for inter-rater agreement was based on objective evidence from videos rather than subjective impressions, feelings, or preferences about a vignette. Finally, the coder and reviewer met to discuss and reconcile any disagreement marked by the reviewer with text of a different color, but kept the disagreement documented. For example, the record of “pq(00.08.24)-pq-gq(Tennis ball hollow?)/pq-tr” under Episode 2 suggests that the coder took the teacher’s question with the key words of “Tennis ball hollow” as a guiding question. However, the reviewer disagreed and took that question as a probing question. To reach an agreement, they needed to locate that question in the vignette that started at 8 min and 24 s and discuss it in reference to the context of the conversation. If the reviewer successfully convinced the coder, the coder’s code of “gq” would be struck through (i.e., “gq(Tennis ball hollow?)/pq”), which documented both the disagreement and the final decision on this code.

Among the videos from the 60 PSTs, 56 ST videos (the first three excluded) and 58 CA videos that had good audio quality during PST-student interaction were chosen for the analysis of inter-rater reliability (IRR). We used the first three ST videos to adjust the instrument and train Raters 2–4. Because the coding scheme was new to Raters 2–4, more ST videos were coded between Raters 2–4 and Rater 1, who designed the instrument, than among Raters 2–4 (Table 5). After that, we evenly distributed the CA videos among the four raters. Each video was watched by two raters. We did not directly examine the inter-rater agreement among all the raters but between each pair of raters based on the videos that they both analyzed. The final vignette/episode levels of a video represented the consensus of two raters in the research team.

Reliability and Validity Consideration

We calculated Cohen’s Kappa (Cohen, 1960) between different pairs of raters for both ST and CA videos to examine the IRR of the coding scheme. As for validity, we examined the criterion-related validity which describes whether a measurement relates well with a current or future criterion (Heale & Twycross, 2015). We adopted criterion-related validity to gauge whether the PSTs’ practice of questioning measured by this instrument was aligned with two criteria associated with the properties of the PSTs’ teaching in this study. First, many PSTs revisited a science concept taught by their mentor teacher rather than teaching a new concept. Their students had already developed some understanding of that concept. As novice science teachers, the PSTs were not skilled yet in promoting students’ conceptual learning in depth or using questions to support students’ difficulties. Thus, their intervention to student learning was likely to be limited to repeating similar information that the students had received (i.e., N) or correcting students’ misconceptions through lecturing (i.e., Da or Db). It is reasonable to infer that the levels of “N,” “Da,” or “Db” probably had higher frequencies than other levels (Table 3) in both CA and ST videos. Secondly, CA and ST were 3–4 months apart, between which the participating PSTs did not receive any intervention targeting questioning. Thus, the PSTs’ orientation towards questioning was unlikely to change drastically. Their orientation measured from the CA and ST videos should be highly correlated. To examine Criterion 1, we summarized the percentages of the seven levels (i.e., Qa to N) among all the PSTs’ teaching videos for CA and ST respectively. To examine Criterion 2, we calculated the Pearson’s correlation between the PSTs’ orientations from CA and ST.
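For readers unfamiliar with the statistic, Cohen's Kappa for one pair of raters can be computed as in the sketch below, using the standard formula kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e is the agreement expected by chance. The function name `cohens_kappa` and the level lists are hypothetical illustrations, not data from this study.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's Kappa for two raters who labeled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)

    # Observed agreement: share of items given the same label.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: product of the raters' marginal proportions per label.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)

    if p_e == 1.0:
        raise ValueError("kappa is undefined when both raters use one identical label")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical episode levels assigned by two raters to six episodes.
coder = ["N", "N", "Da", "Qb", "Da", "N"]
reviewer = ["N", "Da", "Da", "Qb", "Da", "N"]
print(round(cohens_kappa(coder, reviewer), 3))  # 0.739
```

Here the raters agree on five of six episodes (p_o = 5/6) and the chance agreement is 13/36, which gives kappa = 17/23.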

We calculated orientation in two ways. First, orientation was the ratio of episodes where PSTs intervened through questioning to all episodes with PSTs’ intervention, that is, the percentage of Q levels (i.e., “Qa” + “Qb” + “Qe”) among all Q and D episodes. In the second method, we excluded the levels of Qe and De because they reflected errors from the PSTs more than their orientation. We hypothesized that the remaining levels formed a hierarchy from most questioning oriented (“Qa”) to least (“Db”) and assigned scores to them as Qa (+2), Qb (+1), Da (−1), and Db (−2). Qe, De, and N were assigned the score of 0. We added the episode scores in a video and divided the sum by the number of episodes. A positive net score would indicate that a PST was more questioning oriented, and a negative net score that a PST was more lecturing oriented.
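
The two calculations can be sketched as follows. This is an illustrative reading rather than the study’s script: the function names and the sample video are hypothetical, and the denominator of the second method is an assumption. By default the sketch divides by the number of Qa, Qb, Da, and Db episodes only, which is consistent with the later remark that a video coded entirely as “N” yields a zero denominator; the flag `count_zero_levels` switches to dividing by all episodes.

```python
Q_LEVELS = {"Qa", "Qb", "Qe"}
D_LEVELS = {"Da", "Db", "De"}
SCORES = {"Qa": 2, "Qb": 1, "Da": -1, "Db": -2}  # Qe, De, and N score 0


def orientation_ratio(levels):
    """Method 1: share of Q episodes among all Q and D episodes."""
    q = sum(level in Q_LEVELS for level in levels)
    d = sum(level in D_LEVELS for level in levels)
    if q + d == 0:
        return None  # no intervention episodes, so the ratio is undefined
    return q / (q + d)


def orientation_score(levels, count_zero_levels=False):
    """Method 2: mean episode score.

    Assumption: by default only Qa, Qb, Da, and Db episodes enter the
    denominator; set count_zero_levels=True to divide by all episodes.
    """
    scored = [SCORES.get(level, 0) for level in levels
              if count_zero_levels or level in SCORES]
    if not scored:
        return None  # nothing to average, so the score is undefined
    return sum(scored) / len(scored)


# Hypothetical episode levels for one video.
video = ["N", "Qa", "Db", "Da", "N", "Qe", "Db"]
print(orientation_ratio(video))   # 2 Q episodes out of 5 Q and D episodes
print(orientation_score(video))   # (2 - 2 - 1 - 2) / 4
```

Returning `None` for undefined cases keeps such videos out of any later correlation instead of silently treating them as zero.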

Patterns of the PSTs’ Science Teaching

We used the CA videos to investigate the patterns of the PSTs’ science teaching when they took the methods course and received the instruction about open-ended questions. In CA, the PSTs were required to implement inquiry teaching by incorporating the phases of Engage, Explore, and Explain from the 5E model. We communicated to the PSTs the definitions of the three phases adapted from existing frameworks (Bybee, 2014; Liu et al., 2009; Wang et al., 2019). In an ideal situation where PSTs asked appropriate questions throughout a lesson, we should presumably see a high percentage of the “N” level in Engage when the PSTs assessed students’ prior knowledge, or a high percentage of “Qa” or “Qb” when the PSTs organized students’ thinking. In Explore, we should see a high percentage of “Qa” or “Qb” when the PSTs helped students explore questions and generate new ideas. In Explain, we should see a high percentage of “Qa” or “Qb” when PSTs led the students toward a deeper understanding based on their ideas generated from Explore. “Da” or “Db” should also have a relatively high percentage when the students needed direct instruction from the PSTs. We went through the episodes from the CA videos pertaining to the three E phases, calculated the percentages of the seven levels, and summarized the most representative patterns of the PSTs’ science teaching that were aligned with or ran against our hypotheses.
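
The per-phase tally described above can be sketched as a simple count. The input format (a list of phase and level pairs), the function name, and the sample episodes are hypothetical, and the ordering of the seven levels in `LEVELS` is only an assumption for display.

```python
from collections import Counter

# Assumed display order of the seven levels.
LEVELS = ["Qa", "Qb", "Qe", "Da", "Db", "De", "N"]


def level_percentages(episodes):
    """Percentage of each level within each phase.

    episodes: iterable of (phase, level) pairs, one per coded episode.
    """
    by_phase = {}
    for phase, level in episodes:
        by_phase.setdefault(phase, Counter())[level] += 1
    return {
        phase: {level: 100 * counts[level] / sum(counts.values())
                for level in LEVELS}
        for phase, counts in by_phase.items()
    }


# Hypothetical coded episodes pooled across several videos.
episodes = [
    ("Engage", "N"), ("Engage", "N"), ("Engage", "Da"), ("Engage", "Qa"),
    ("Explore", "Qb"), ("Explore", "Db"),
]
table = level_percentages(episodes)
print(table["Engage"]["N"])
```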

Findings

Q1. Was this coding scheme valid to measure the PSTs’ questioning in science teaching?

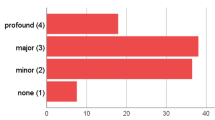

There were 51 PSTs who had both ST and CA videos for the validity analysis. We summarized in Table 6 the descriptive statistics of the percentages of all episode levels, including mean, median, and standard deviation. Three patterns stood out. First, the level of “N” (i.e., no identifiable intervention via questioning or lecturing, Table 3) had the highest percentage in both ST and CA videos. The PSTs did not explicitly intervene in student learning in nearly half of the episodes in a video. This result matched our hypothesis that the PSTs were probably unprepared to deepen student understanding when they revisited a concept, or that instruction on open-ended questions alone had probably not developed their skill of asking guiding questions. Second, the percentages of “D” levels (i.e., Da + Db + De, intervention via lecturing) in total were higher than those of “Q” levels (i.e., Qa + Qb + Qe, intervention via questioning) in total. In other words, the PSTs preferred to use direct instruction when they intervened in student learning. This result further supported that the PSTs were not skilled in providing guidance through questioning when it was not explicitly addressed. Third, the standard deviations were close to or larger than the means of all seven levels, which suggested great variance in the PSTs’ practice of questioning. This could result from the PSTs’ drastically different competencies of questioning or from their different teaching contexts and content. We leaned toward the second explanation because there was no evidence suggesting huge differences in the participating PSTs’ teaching competencies. In other words, the PSTs’ practice of questioning was likely to be affected by the science content they taught or the teaching context, which resonated with the context-contingent nature of questioning (Roth, 1996). To achieve more accuracy in measurement, a PST’s PCK-Q may need to be derived from multiple videos or science concepts rather than one.

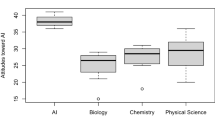

Both methods of calculating orientation might return errors mathematically, such as the denominator being 0 when all episode levels in a video were “N.” There were 48 out of 51 complete pairs of orientation calculated through the first method (i.e., the sum of Q levels divided by the sum of Q and D levels). The PSTs’ orientation had a mean of 14.5%, a median of 0.0%, and a standard deviation of 26.1% in ST. The counterparts in CA were 19.2% for mean, 0.0% for median, and 29.3% for standard deviation. The correlation between the PSTs’ orientations through the first method was 0.51 (moderate, Cohen et al., 2007). There were 44 out of 51 complete pairs of orientation calculated through the second method (i.e., Qa = +2, Qb = +1, Da = −1, Db = −2). The PSTs’ orientation had −0.88 for mean, −1.25 for median, and 1.07 for standard deviation in ST. The counterparts in CA were −1.05 for mean, −1.2 for median, and 0.96 for standard deviation. The correlation between the PSTs’ orientations through the second method was 0.31 (moderate). There was consistency in orientation between ST and CA.
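
The handling of incomplete pairs can be sketched as below: a PST enters the correlation only when orientation is defined for both videos. The function name and the sample values are hypothetical and do not reproduce the study’s data; `None` stands for an orientation that could not be computed because of a zero denominator.

```python
import math


def pearson_on_complete_pairs(st_values, ca_values):
    """Pearson's r over PSTs whose orientation is defined in both videos.

    Returns (r, number_of_complete_pairs).
    """
    pairs = [(s, c) for s, c in zip(st_values, ca_values)
             if s is not None and c is not None]
    n = len(pairs)
    if n < 2:
        raise ValueError("need at least two complete pairs")
    mean_s = sum(s for s, _ in pairs) / n
    mean_c = sum(c for _, c in pairs) / n
    cov = sum((s - mean_s) * (c - mean_c) for s, c in pairs)
    var_s = sum((s - mean_s) ** 2 for s, _ in pairs)
    var_c = sum((c - mean_c) ** 2 for _, c in pairs)
    if var_s == 0 or var_c == 0:
        raise ValueError("correlation is undefined for constant values")
    return cov / math.sqrt(var_s * var_c), n


# Hypothetical orientations for five PSTs; None marks an undefined value.
st = [0.0, 0.5, None, 1.0, 0.25]
ca = [0.1, 0.4, 0.3, None, 0.2]
r, n_pairs = pearson_on_complete_pairs(st, ca)
print(n_pairs, round(r, 2))
```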

Q2. Was this coding scheme reliable to measure the PSTs’ questioning in science teaching?

We summarized the coefficients of Cohen’s Kappa for ST and CA videos in Table 7. All the Kappa coefficients were within the range of 0.61–0.80 (substantial agreement) or 0.81–1.00 (almost perfect agreement) according to Landis and Koch (1977). In the 56 ST videos, the agreement coefficients between Rater 1 and the other three raters (i.e., 0.63, 0.62, and 0.66) were lower than those among Raters 2–4 (i.e., 0.81, 0.77, and 0.87). This was probably because Raters 2–4 were calibrating their use of this instrument, considering that ST videos also served to train Raters 2–4 with the coding scheme. Besides, since more videos were coded between Rater 1 and Raters 2–4, the likelihood of disagreement also increased. After ST video coding, this difference diminished in the coding of the 58 CA videos. The agreement coefficients between Rater 1 and Raters 2–4 increased (i.e., 0.78, 0.77, and 0.72) and those among Raters 2–4 decreased (i.e., 0.66, 0.81, and 0.80). None of the coefficients were extremely high, especially between Rater 1 and Raters 2–4, which suggested that none of the raters blindly deferred to the opinions of others but analyzed videos independently. Generally, the IRR was satisfactory. There was substantial agreement between the raters in coding both ST and CA videos.
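
For reference, the Landis and Koch (1977) bands can be written as a small lookup. The function name is hypothetical; the band labels follow Landis and Koch’s own wording, in which the top band is “almost perfect.” The coefficients passed in are the ones reported in the paragraph above.

```python
def landis_koch_label(kappa):
    """Map a kappa coefficient to the Landis and Koch (1977) agreement band."""
    if kappa > 1.0:
        raise ValueError("kappa cannot exceed 1")
    if kappa < 0:
        return "poor"
    bands = [
        (0.20, "slight"),
        (0.40, "fair"),
        (0.60, "moderate"),
        (0.80, "substantial"),
        (1.00, "almost perfect"),
    ]
    for upper, label in bands:
        if kappa <= upper:
            return label


# Coefficients reported above for the ST and CA videos.
st_kappas = [0.63, 0.62, 0.66, 0.81, 0.77, 0.87]
ca_kappas = [0.78, 0.77, 0.72, 0.66, 0.81, 0.80]
st_labels = [landis_koch_label(k) for k in st_kappas]
ca_labels = [landis_koch_label(k) for k in ca_kappas]
print(st_labels)
print(ca_labels)
```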

Q3. What patterns of science teaching from the PSTs were suggested by this coding scheme when they were required to teach a lesson through inquiry teaching?

The structure of most CA videos was aligned with inquiry teaching through the three phases of Engage, Explore, and Explain. For example, a lesson about animal adaptation was composed of three episodes: students brainstorming cases of animal adaptation (Engage), students working in stations on how birds pick up various types of food with different beaks (Explore), and students reflecting on their lab about how different beaks helped birds survive in different environments (Explain). A close look at the episode levels indicated by questioning chains revealed something different. We summarized in Table 8 the percentages of the seven levels (i.e., Qa–N) in the three E phases from the CA videos. In Engage, the level of “N” had the highest percentage (56.7%), followed by the D levels with a total of 30.0% (i.e., “Da” + “Db” + “De”), and then the Q levels with a total of 13.3% (i.e., “Qa” + “Qb” + “Qe”). In most cases, the PSTs were aware of the need to gauge their students’ prior knowledge, with questions such as “What do you know about energy?” and “Why do you think birds have different beaks?” The PSTs sometimes lectured students on the target knowledge during Engage, which ran against the constructivist nature of inquiry teaching. Scaffolding student thinking via guiding questions happened less frequently, such as “Can the ridges on your shoes do the same thing [increasing friction]?”

In Explore, the level of “N” also had the highest percentage (35.9%). In those cases, the PSTs normally asked students a series of questions to gauge their understanding from various perspectives, such as “In your food chain, what role does the sun play?,” “How about the grass?,” and “How about the animals?” The students gave answers based on their existing knowledge without being prompted by the PSTs’ questions to think more deeply. The percentage of Q levels increased from 13.3% to 20.3%, and the percentage of D levels increased from 30.0% to 43.8%. The PSTs intervened in student learning more than they did in Engage, mainly through direct instruction. For example, a PST played a video that thoroughly explained the phases in the life cycle of a butterfly rather than a video showing the phases without any narration. In this case, the phase of Explore functioned as lecturing, where students received knowledge directly from an authoritative resource. In Explain, the level of “Db” had the highest percentage (45.5%), followed by “Da” (22.7%). The level of “N” decreased to 21.2%. The Q levels decreased greatly to a total of 6%. This result suggests that the PSTs were aware of the importance of Explain for summarizing target knowledge. However, the agent of knowledge construction was primarily the PSTs instead of the students.

We identified six representative patterns of the PSTs’ science teaching across the three E phases, i.e., Engage|Explore|Explain (Table 9). In the lessons following the patterns of “N|N|N,” “N|N|D,” and “D|D|D,” we found that the questions raised by the PSTs were more random and scattered. For instance, a PST asked the probing question of “Why is the sun much larger than the planets?” after the probing question of “What causes day and night?” in a lesson about the sun, moon, and earth. The two open-ended questions lacked the necessary logical connection and were unlikely to guide student thinking in a productive direction. Besides, the PSTs tended to follow their questions with encouraging statements regardless of the quality of the students’ answers, such as “There is no right or wrong” and “It is OK if you cannot answer this question.” Although these statements eased students’ anxiety, they might divert student attention away from the content knowledge in their answers because any answer was acceptable. In these cases, there was little evidence that the students developed new ideas on their own as prompted by the PSTs’ questions. In comparison, the questions were more purposeful and more closely related to the lesson objective in the lessons following the patterns of “D|Q|Q,” “Q|Q|D,” and “Q|Q|Q.” In a lesson about magnetism, for example, the PST tried to challenge students’ conclusion that “Metals can be picked up by a magnet” by asking the guiding questions of “Can you pick up the penny with your magnet?” and “How about the piece of aluminum foil?” The students tested again and answered “No,” which led them to realize that not all metals are magnetic. The pattern of “Q|Q|D” was more inquiry-oriented than “D|Q|Q” because the lab in the former was more exploratory in nature and that in the latter was more confirmatory. The pattern of “Q|Q|Q” was the most inquiry-oriented and probably the most difficult. We witnessed only two lessons of “Q|Q|Q” among all the CA videos. These findings suggest that addressing open-ended questions alone could not automatically equip PSTs with the skill of using questions to guide student learning.

Discussion and Implications

The objective of this study is to introduce a coding scheme for large-scale qualitative analyses of teachers’ practice of questioning and its potential impact on student learning. This instrument is focused on the teacher side of the I-(R)-F-(R)-F model (Chin, 2007; Chin & Osborne, 2008) and examines the dimension of teacher intervention in discourse analysis (Mortimer & Scott, 2003; Soysal, 2023) by involving science content knowledge in the coding of questions. We defined seven levels of teacher intervention from questioning chains (Table 3) that belonged to three categories of Q (questioning-oriented intervention), D (direct-instruction-oriented intervention), and N (no explicit intervention). Through this method, we tracked the details in discourses while avoiding the laborious work of scrutinizing peripheral information (e.g., minute differences between formats of questions) in analyzing teacher questioning. Unlike existing studies of question analysis from a small sample (fewer than 10) of lessons (Aranda et al., 2020; Morris & Chi, 2020; Reinsvold & Cochran, 2012; Smith & Hackling, 2016), we thoroughly analyzed a total of 114 videos (i.e., 56 ST and 58 CA) across various contexts in science teaching. Thus, this instrument is feasible for large-scale qualitative analyses of multiple science lessons in PST education. In addition, the time stamps and key words associated with codes as information locators (Fig. 1) enabled raters to quickly pinpoint evidence from teaching videos, which supported evidence-based rather than opinion-based reflection or discussion over coding and thereby the accuracy and objectivity of question analyses. Although our instrument simplifies video coding, it may still be limited for large-scale quantitative studies because it relies on human effort to dissect a video. Combining our instrument with machine learning may overcome that limit.

Our data support the IRR, theoretical validity (i.e., theoretical support and descriptive statistics), and criterion-related validity (i.e., Pearson correlation) of this instrument (Heale & Twycross, 2015). The moderate correlation between orientations calculated by both methods supports the possibility of measuring teachers’ PCK-Q (Table 4) using this instrument. Orientation calculated via the first method (i.e., the percentage of Q levels to all levels with intervention) described how often a teacher used questioning to intervene in student learning. It had a higher correlation coefficient (i.e., 0.51) than that of orientation calculated via the second method (i.e., 0.31), which assigned different scores to the levels from Qa to Db. Given that orientation is consistent and the correlation should theoretically be high, the lower correlation via the second method suggests that the levels of teacher intervention (Table 3) may not be hierarchical. It is more appropriate to treat the seven levels from Qa to N as nominal data. Similarly, PSTs’ other components of PCK-Q (Table 4) could be inferred from the frequencies of specific levels across multiple videos. Take knowledge of curriculum, for example: a PST with strong science content knowledge is less likely to make errors in teaching practice. Thus, this component could be quantified by the percentage of the PST not making errors (i.e., 1 − the percentage of Qe and De levels to all the levels). We have validated this scoring method in the context of college physics (Wang et al., 2023), where over five videos about different physics concepts were collected from each novice teacher. Unfortunately, this method did not apply to this study because we only collected two videos from each PST. This could be the direction of future work.
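
The error-free share mentioned above can be sketched as follows, pooling episode levels from several videos of one teacher. The function name and the sample levels are hypothetical, and pooling all episodes before taking the share is an assumption about how multiple videos would be combined.

```python
ERROR_LEVELS = {"Qe", "De"}


def error_free_share(videos):
    """1 minus the share of Qe and De levels among all levels.

    videos: list of videos, each a list of episode levels.
    Assumption: episodes from all videos are pooled before dividing.
    """
    levels = [level for video in videos for level in video]
    if not levels:
        return None  # no coded episodes, so the share is undefined
    errors = sum(level in ERROR_LEVELS for level in levels)
    return 1 - errors / len(levels)


# Hypothetical levels from two videos of the same teacher.
videos = [["N", "Qe", "Da"], ["De", "Qa"]]
share = error_free_share(videos)
print(share)
```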

From examining the patterns of CA videos after the PSTs learned the 5E model (Bybee, 2000; Bybee, 2014) and open-ended questions for inquiry teaching, we found that questioning was not the primary way in which the PSTs intervened in student learning. In this sense, many of the science lessons were not aligned with inquiry teaching in how the target knowledge was constructed, even though the structure of activities in the three E phases matched the format of inquiry teaching. In the lessons with the pattern of “N|N|N” or “N|N|D” (Table 9), some PSTs continuously asked probing questions without clear evidence of how they processed the information collected from students to direct their subsequent instruction. They might have oversimplified inquiry teaching as asking open-ended questions until students automatically picked up the target knowledge from their own answers or until direct instruction became necessary. Correspondingly in PST education, overemphasizing open-ended questions in inquiry teaching (Kawalkar & Vijapurkar, 2013; Lehesvuori et al., 2018; Lehtinen et al., 2019; Reinsvold & Cochran, 2012) may overshadow the importance of teacher guidance in student learning and divert PSTs’ attention from the science content embedded in their questions or how their questions add value to student understanding.

Effective guidance via teacher questioning is a unique dimension of inquiry teaching that is not entailed by the dialogic nature of communication, the active role of students, or the exploratory structure of a lesson (Lehtinen et al., 2019; Reinsvold & Cochran, 2012). In other words, content engagement and extended explanation from students as prompted by open-ended questions cannot guarantee the efficacy of inquiry teaching. By analogy, students randomly “creating” a science model from their imagination cannot be counted as a sophisticated thinking skill even though “create,” defined as “produce new or original work,” is at the top of Bloom’s taxonomy (Armstrong, 2017). Instead, students should create a science model based on evidence, existing work, and logical deduction. Similarly, teacher questions should be carefully designed along a logical chain that matches how students may unpack and comprehend a lesson objective. Given that teacher practice is directly connected to student learning (Araceli Ruiz-Primo & Furtak, 2006; McCutchen et al., 2002; Vescio et al., 2008), teaching that lacks an explicit effort of purposeful guidance referring to specific science content knowledge is unlikely to yield positive student learning outcomes. In applying this instrument in PST education, we expect to convey to PSTs that the questioning chain of I (teacher initiates a question)-R (students respond)-N (teacher follows with an open-ended question without explicit effort of intervention)-R (students respond)-N, as one example of the I-R-F-R-F model for inquiry teaching (Chin, 2007), may be as ineffective as I-R-E in supporting student learning.

Data Availability

The datasets generated and/or analyzed during the current study are not publicly available in order to protect the privacy of the research participants, who are identifiable from the teaching videos that constitute the research data.

References

Almahrouqi, A., & Scott, P. (2012). Classroom discourse and science learning: Issues of engagement, quality and outcome. In D. Jorde & J. Dillon (Eds.), Science education research and practice in Europe: Retrospective and prospective (pp. 291–307). Sense publishers.

Araceli Ruiz-Primo, M., & Furtak, E. M. (2006). Informal formative assessment and scientific inquiry: Exploring teachers’ practices and student learning. Educational Assessment, 11(3–4), 237–263.

Aranda, M. L., Lie, R., Selcen Guzey, S., Makarsu, M., Johnston, A., & Moore, T. J. (2020). Examining teacher talk in an engineering design-based science curricular unit. Research in Science Education, 50(2), 469–487.

Armstrong, P. (2017, June 10). Bloom’s Taxonomy. Vanderbilt University. Retrieved from https://cft.vanderbilt.edu/guides-sub-pages/blooms-taxonomy

Ary, D., Jacobs, L. C., Razavieh, A., & Sorensen, C. (2010). Introduction to research in education (8th ed.). Thomson Learning.

Banchi, H., & Bell, R. (2008). The many levels of inquiry. Science and Children, 46(2), 26–29.

Bell, R. L., Smetana, L., & Binns, I. (2005). Simplifying inquiry instruction. The Science Teacher, 72(7), 30–33.

Benedict-Chambers, A., Kademian, S. M., Davis, E. A., & Palincsar, A. S. (2017). Guiding students towards sensemaking: Teacher questions focused on integrating scientific practices with science content. International Journal of Science Education, 39(15), 1977–2001.

Brewe, E., Kramer, L., & O’Brien, G. (2009). Modeling instruction: Positive attitudinal shifts in introductory physics measured with CLASS. Physical Review Special Topics-Physics Education Research, 5(1), 013102.

Bybee, R. W. (2000). Teaching science as inquiry. In J. Minstrell & E. van Zee (Eds.), Inquiring into inquiry learning and teaching in science (pp. 20–46). American Association for the Advancement of Science.

Bybee, R. W. (2014). The BSCS 5E instructional model: Personal reflections and contemporary implications. Science and Children, 51(8), 10–13.

Chin, C. (2007). Teacher questioning in science classrooms: Approaches that stimulate productive thinking. Journal of Research in Science Teaching, 44(6), 815–843.

Chin, C., & Osborne, J. (2008). Students’ questions: A potential resource for teaching and learning science. Studies in Science Education, 44(1), 1–39.

Cohen, J. (1960). A coefficient of agreement for nominal scales. Educational and Psychological Measurement, 20(1), 37–46.

Cohen, L., Manion, L., & Morrison, K. (2007). Research methods in education (6th ed.). Routledge. https://doi.org/10.4324/9780203029053

Crawford, B. A. (2014). From inquiry to scientific practices in the science classroom. In N. G. Lederman & S. K. Abell (Eds.), Handbook of research on science education (Vol. 2, pp. 515–541). Routledge.

Erdogan, I., & Campbell, T. (2008). Teacher questioning and interaction patterns in classrooms facilitated with differing levels of constructivist teaching practices. International Journal of Science Education, 30(14), 1891–1914.

Gardner, M. R. (1999). The true teacher and the furor to teach. In S. Appel (Ed.), Psychoanalysis and pedagogy (pp. 93–102). Bergin & Garvey.

Heale, R., & Twycross, A. (2015). Validity and reliability in quantitative studies. Evidence-based Nursing, 18(3), 66–67.

Kawalkar, A., & Vijapurkar, J. (2013). Scaffolding Science Talk: The role of teachers’ questions in the inquiry classroom. International Journal of Science Education, 35(12), 2004–2027.

Kelly, G. J. (2014). Discourse practices in science learning and teaching. In N. G. Lederman & S. K. Abell (Eds.), Handbook of research on science education (Vol. 2, pp. 321–336). Routledge.

Krystyniak, R. A., & Heikkinen, H. W. (2007). Analysis of verbal interactions during an extended, open-inquiry general chemistry laboratory investigation. Journal of Research in Science Teaching, 44(8), 1160–1186.

Landis, J. R., & Koch, G. G. (1977). The measurement of observer agreement for categorical data. Biometrics, 33(1), 159–174. https://doi.org/10.2307/2529310

Lehesvuori, S., Ramnarain, U., & Viiri, J. (2018). Challenging transmission modes of teaching in science classrooms: Enhancing learner-centredness through dialogicity. Research in Science Education, 48(5), 1049–1069. https://doi.org/10.1007/s11165-016-9598-7

Lehtinen, A., Lehesvuori, S., & Viiri, J. (2019). The connection between forms of guidance for inquiry-based learning and the Communicative approaches applied—a case study in the context of Pre-service teachers. Research in Science Education, 49(6), 1547–1567. https://doi.org/10.1007/s11165-017-9666-7

Liu, T. C., Peng, H., Wu, W. H., & Ming-Sheng, L. (2009). The effects of mobile natural-science learning based on the 5E learning cycle: A case study. Journal of Educational Technology & Society, 12(4), 344–358.

Magnusson, S., Krajcik, J., & Borko, H. (1999). Nature, sources, and development of pedagogical content knowledge for science teaching. In J. Gess-Newsome & N. G. Lederman (Eds.), Examining pedagogical content knowledge (pp. 95–132). Springer.

Manz, E. (2012). Understanding the codevelopment of modeling practice and ecological knowledge. Science Education, 96(6), 1071–1105.

McCutchen, D., Abbott, R. D., Green, L. B., Beretvas, S. N., Cox, S., Potter, N. S., & Gray, A. L. (2002). Beginning literacy: Links among teacher knowledge, teacher practice, and student learning. Journal of Learning Disabilities, 35(1), 69–86.

Morris, J., & Chi, M. T. (2020). Improving teacher questioning in science using ICAP theory. The Journal of Educational Research, 113(1), 1–12.

Mortimer, E. F., & Scott, P. (2003). Meaning making in science classrooms. Open University Press.

National Research Council (NRC). (2012). A Framework for K-12 science education: Practices, crosscutting concepts, and core ideas. The National Academies Press.

Park, S., & Oliver, J. S. (2008). Revisiting the conceptualisation of pedagogical content knowledge (PCK): PCK as a conceptual tool to understand teachers as professionals. Research in Science Education, 38(3), 261–284.

Reinsvold, L. A., & Cochran, K. F. (2012). Power dynamics and questioning in elementary science classrooms. Journal of Science Teacher Education, 23(7), 745–768.

Roth, W. M. (1996). Teacher questioning in an open-inquiry learning environment: Interactions of context, content, and student responses. Journal of Research in Science Teaching, 33(7), 709–736.

Scott, P. H., Mortimer, E. F., & Aguiar, O. G. (2006). The tension between authoritative and dialogic discourse: A fundamental characteristic of meaning making interactions in high school science lessons. Science Education, 90(4), 605–631.

Smart, J. B., & Marshall, J. C. (2013). Interactions between classroom discourse, teacher questioning, and student cognitive engagement in middle school science. Journal of Science Teacher Education, 24(2), 249–267.

Smith, P. M., & Hackling, M. W. (2016). Supporting teachers to develop substantive discourse in primary science classrooms. Australian Journal of Teacher Education, 41(4), 151–173.

Soysal, Y. (2022). Science teachers’ challenging questions for encouraging students to think and speak in novel ways. Science & Education, 1–41. https://doi.org/10.1007/s11191-022-00411-6

Soysal, Y. (2023). Exploring middle school science teachers’ error-reaction patterns by classroom discourse analysis. Science & Education, 1–41. https://doi.org/10.1007/s11191-023-00431-w

Van Zee, E. H., Iwasyk, M., Kurose, A., Simpson, D., & Wild, J. (2001). Student and teacher questioning during conversations about science. Journal of Research in Science Teaching, 38(2), 159–190.

Vescio, V., Ross, D., & Adams, A. (2008). A review of research on the impact of professional learning communities on teaching practice and student learning. Teaching and Teacher Education, 24(1), 80–91.

Vrikki, M., & Evagorou, M. (2023). An analysis of teacher questioning practices in dialogic lessons. International Journal of Educational Research, 117, 102107.

Wang, J., & Sneed, S. (2019). Exploring the design of scaffolding pedagogical instruction for elementary preservice teacher education. Journal of Science Teacher Education, 30(5), 483–506. https://doi.org/10.1080/1046560X.2019.1583035

Wang, J., Sneed, S., & Wang, Y. (2019). Validating a 3E rubric assessing pre-service science teachers’ practical knowledge of inquiry teaching. Eurasia Journal of Mathematics, Science and Technology Education, 16(2), em1814. https://doi.org/10.29333/ejmste/112547

Wang, J., Wang, Y., Wipfli, K., Thacker, B., & Hart, S. (2023). Measuring learning assistants’ use of questioning in online courses about introductory physics. Physical Review Physics Education Research, 19(1), 1–18.

Funding

The research reported here is supported by the National Science Foundation, through Grant# 1838339 to Texas Tech University. The opinions, findings, and conclusions or recommendations expressed are our own and do not necessarily reflect the views of the National Science Foundation.

Ethics declarations

Ethics Approval

This study was approved by the human research protection program of Texas Tech University, IRB No. 2019-579.

Informed Consent

Informed consent was obtained from all individual participants included in the study.

Conflict of Interest

The authors declare no competing interests.

Appendix

Examples of vignette levels (Qa-N)

Lesson objective: Students will understand the difference between reflection and refraction.

Episode: Students are conducting two labs about reflection and refraction. In the first lab, they shine torch light on a mirror. In the second lab, they place a pencil in a half-full glass of water.

Vignette: The teacher approaches a group of three students who have finished the first lab and checks their findings.

Qa vignette: pq-pq-pq-di-gq-pq-tr-gq-tr-pq-tr-di

Teacher: What happened when you shined the torch light on the mirror? (pq)

Students: It bounced off.

Teacher: How did you know that? (pq)

Students: Because it was reflection.

Teacher: What is reflection? (pq)

Students: Bounce-off is reflection. (sign of conceptual difficulty)

Teacher: Let’s break apart this process a little. (di) Was there anything on the ceiling when you shined the torch light on the mirror? (gq)

Students: Yes, there was a light spot on the ceiling.

Teacher: How did the light get there? (pq)

Students: The light hit the mirror, got bounced.

Teacher: Exactly. (tr) Without the mirror, would the torch light reach the ceiling when it was shined horizontally? (gq)

Students: No, because light moves straight.

Teacher: That’s right. (tr) Now do you know what reflection means? (pq)

Students: Yes, it means light changes direction, because it hits the mirror, gets bounced.

Teacher: Good thinking (tr). Now you can go on with the next lab. (di)

Qb vignette: pq-pq-pq-di-pq-le-cq-le-cq-gq-gq-tr-di

Teacher: What happened when you shined the torch light on the mirror? (pq)

Students: It bounced off.

Teacher: How did you know that? (pq)

Students: Because it was reflection.

Teacher: What is reflection? (pq)

Students: Because bounce-off is reflection. (sign of conceptual difficulty)

Teacher: Let’s break apart this process a little. (di) How does light travel? (pq)

Student: Like spread out.

Teacher: Light travels in a straight line, it cannot change direction on its own. (le) Does that make sense? (cq)

Student: Yes.

Teacher: So you saw a light spot on the ceiling (le), right? (cq) Without the mirror, would the torchlight reach the ceiling when it was shined horizontally? (gq)

Students: No.

Teacher: Then what is the function of the mirror in this lab? (gq)

Students: Change the direction of light.

Teacher: Yes, that’s what bounce-off is. (tr) Now you can go on with the next lab. (di)

Qe vignette: pq-pq-pq-di-pq-le-cq-le-cq-gq-gq-tr-er-di

Teacher: What happened when you shined the torch light on the mirror? (pq)

Students: It bounced off.

Teacher: How did you know that? (pq)

Students: Because it was reflection.

Teacher: What is reflection? (pq)

Students: Because bounce-off is reflection. (sign of conceptual difficulty)

Teacher: Let’s break apart this process a little. (di) How does light travel? (pq)

Student: Like spread out.

Teacher: Light travels in a straight line, it cannot change direction on its own. (le) Does that make sense? (cq)

Student: Yes.

Teacher: So you saw a light spot on the ceiling (le), right? (cq) Without the mirror, would the torchlight reach the ceiling when it was shined horizontally? (gq)

Students: No.

Teacher: Then what is the function of the mirror in this lab? (gq)

Students: Change the direction of light.

Teacher: Yes, that’s what bounce-off is. (tr) Only smooth surfaces like a mirror can bounce off light. (er) Now you can go on with the next lab. (di)

Da vignette: pq-pq-pq-le*-cq-le-gq-le*-pq-tr-di

Teacher: What happened when you shined the torch light on the mirror? (pq)

Students: It bounced off.

Teacher: How did you know that? (pq)

Students: Because it was reflection.

Teacher: What is reflection? (pq)

Students: Because bounce-off is reflection. (sign of conceptual difficulty)

Teacher: Reflection is the change of the direction of light when it hits a surface, like the mirror. (le*) Did you see a light spot on the ceiling? (cq)

Student: Yes.

Teacher: Light travels in a straight line; it cannot change direction on its own. (le) Without the mirror, would you be able to see that? (gq)

Student: No.

Teacher: So the mirror reflects the light and changes its direction. That’s what reflection is. (le*) What else could reflect light? (pq)

Students: The screen of an iPad.

Teacher: Exactly. (tr) Now you can go on with the next lab. (di)

Db vignette: pq-pq-pq-le*-cq-di

Teacher: What happened when you shined the torchlight on the mirror? (pq)

Students: It bounced off.

Teacher: How did you know that? (pq)

Students: Because it was reflection.

Teacher: What is reflection? (pq)

Students: Because bounce-off is reflection. (sign of conceptual difficulty)

Teacher: Reflection is the change of the direction of light when it hits a surface, like the mirror. Light travels in a straight line. It cannot change direction on its own. When light hits the mirror, the mirror reflects the light and changes its direction. Another example of reflection is when you see your image in a lake. (le*) Does it make sense? (cq)

Students: Yes.

Teacher: Now you can go on with the next lab. (di)

De vignette: pq-pq-pq-le*-er-cq-di

Teacher: What happened when you shined the torchlight on the mirror? (pq)

Students: It bounced off.

Teacher: How did you know that? (pq)

Students: Because it was reflection.

Teacher: What is reflection? (pq)

Students: Because bounce-off is reflection. (sign of conceptual difficulty)

Teacher: Reflection is the change of the direction of light when it hits a surface, like the mirror. Light travels in a straight line. It cannot change direction on its own. When light hits the mirror, the mirror reflects the light and changes its direction. Another example of reflection is when you see your image in a lake. (le*) Reflection only happens on smooth surfaces. (er) Does it make sense? (cq)

Students: Yes.

Teacher: Now you can go on with the next lab. (di)

N vignette: pq-pq-tr-pq-pq-pq-tr-di

Teacher: What happened when you shined the torchlight on the mirror? (pq)

Student: It bounced off.

Teacher: How did you know that? (pq)

Student: There was light on the ceiling.

Teacher: Good observation. (tr) What do you think bounced off the light? (pq)

Student: The mirror.

Teacher: Is that reflection or refraction? (pq)

Student: Reflection.

Teacher: Why? (pq)

Student: Because light bounced off, that’s reflection. (sign of conceptual difficulty)

Teacher: Good thinking. (tr) Now you can go on with the next lab. (di)
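
The pattern string that heads each vignette (for example, pq-pq-pq-le*-cq-di) is a hyphen-separated sequence of the codes that appear in parentheses after the teacher's utterances, so the two can be checked against each other and tallied mechanically. The following is a minimal Python sketch of that check, not the procedure used in this study. The function names (parse_chain, codes_from_turns) and the regular expression are illustrative assumptions, the codes are treated as opaque labels, and the sample turns are abbreviated with "..." from the Db vignette.

```python
import re
from collections import Counter

# Assumption: a code is two lowercase letters with an optional "*",
# written in parentheses, e.g. "(pq)" or "(le*)". Longer notes such as
# "(sign of conceptual difficulty)" do not match and are ignored.
CODE_IN_TURN = re.compile(r"\(([a-z]{2}\*?)\)")


def parse_chain(pattern: str) -> list[str]:
    """Split a hyphen-separated pattern string into its ordered codes."""
    return [code for code in pattern.strip().split("-") if code]


def codes_from_turns(turns: list[str]) -> list[str]:
    """Collect the parenthesised codes from teacher turns, in order."""
    codes: list[str] = []
    for turn in turns:
        codes.extend(CODE_IN_TURN.findall(turn))
    return codes


# Pattern string and (abbreviated) teacher turns from the Db vignette.
db_pattern = "pq-pq-pq-le*-cq-di"
db_teacher_turns = [
    "What happened when you shined ... on the mirror? (pq)",
    "How did you know that? (pq)",
    "What is reflection? (pq)",
    "Reflection is the change of the direction of light ... (le*) "
    "Does it make sense? (cq)",
    "Now you can go on with the next lab. (di)",
]

chain = parse_chain(db_pattern)

# The heading pattern should reproduce the codes attached to the turns.
assert chain == codes_from_turns(db_teacher_turns)

# Frequency of each code in the chain.
counts = Counter(chain)
print(counts)
```

Keeping le and le* as distinct labels preserves the starred variant that appears in the Da, Db, and De patterns but not in the Qe pattern.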

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, J., Wang, Y., Moore, Y. et al. Analyzing the Patterns of Questioning Chains and Their Intervention on Student Learning in Science Teacher Preparation. Int J of Sci and Math Educ 22, 809–836 (2024). https://doi.org/10.1007/s10763-023-10408-4
