Abstract

Educational Robotics is rapidly gaining attention as an effective methodology for developing skills and engaging students while respecting their individual learning styles. It is often combined with two other methodologies, Coding and Tinkering, which share a similar hands-on approach. To fully exploit their inclusive features, teachers need to be prepared to introduce them into the classroom, yet in-service teachers are often not fully prepared to face this challenge. Many actions have been established to remedy this situation, but a proper method for assessing whether these actions are successful has not yet been developed. This paper presents a methodology for introducing in-service teachers to Educational Robotics, Coding and Tinkering and for assessing the outcomes. A total of 184 in-service teachers were assessed and the results analysed. The final considerations draw a picture of the situation within the sample chosen for the present study, observing that the intervention seemed to be successful in providing key notions and examples and in improving teachers’ self-confidence.


1 Introduction

A key component of the education of the future is the development of twenty-first-century skills, whose definition and assessment are still a matter of research. Some of the major findings are reported in Dede (2010), which reviews frameworks whose aim is to define, comprehend and assess this set of new skills. Technology seems to hold a special place in each framework, probably because of its direct connection with future employability and quality of life. At the European policy level, for example, introducing technological literacy has been the focus of several actions. One result of these actions is a study on the skills needed for the key enabling technologies (Fig. 1). However, the Organisation for Economic Co-operation and Development (OECD) states that education systems and institutions experience several barriers to the adoption of new technologies (OECD 2016).

Fig. 1 Skills for key enabling technologies in Europe (OECD 2016)

So, even if twenty-first-century skills and technologies in the classroom are acknowledged as crucial for future citizens, teachers today find it hard to figure out how to train their students to develop such skills. Several studies report examples of the effectiveness of new approaches to teaching and learning that might aid teachers on their path to innovation (Benitti 2012; Scaradozzi et al. 2016b; Cesaretti et al. 2017). For example, Educational Robotics (ER) can be used to motivate children’s learning (Mubin et al. 2013), to enhance STEM learning across age levels and genders (Atmatzidou and Demetriadis 2016), with people with certain impairments, such as ASD (Kaboski et al. 2015) or hearing impairments (Kose et al. 2014), and with underprivileged students in Africa (Eguchi 2015). ER is an integrated approach to STEM (English 2016; Sanders 2008) which exploits specific pedagogical methodologies to help develop skills (Benitti 2012; Atmatzidou and Demetriadis 2016) while allowing for different speeds and learning styles (Urschitz and Moro 2014). ER is a powerful and versatile means of addressing several questions, and a variety of applications are possible, even with support teachers (Agatolio et al. 2017). Not only ER, but also other activities such as Tinkering or Making can be incorporated into daily classroom activities. Tinkering is a bottom-up approach, characterized by hands-on activities in which pupils can experiment and play freely with materials, both technological and waste (Resnick and Rosenbaum 2013). Creativity, innovation and entrepreneurship are some of the main characteristics of Tinkering and Making, which are usually employed in an informal environment. 
The design and construction of physical objects are usually perceived as playful, but in schools, where activities are structured and guided, Tinkering and Making can also support deep student learning, stimulating young people’s interest in STEM careers and even encouraging them to open up to others by reporting their own positive learning experiences (Vossoughi and Bevan 2014; Bevan et al. 2015; Blikstein et al. 2017). Another field of interest is Coding. Learning how to program has always been an issue and nations worldwide have tried to introduce it into the education system (Bottino 2014; Rolandsson and Skogh 2014; Weintrop and Wilensky 2016). But learning to code is not just an end in itself. It can lead students to learn the design process, how to develop an idea, how to break down big problems into small ones and many other abilities that are not only related to the world of computers. Some tools have been developed over the years to help students create meaningful artefacts, like Scratch (https://scratch.mit.edu/), a free educational programming language developed by the Lifelong Kindergarten Group at the Massachusetts Institute of Technology (MIT).

Understanding the need for innovation and the potential of these new approaches, the Italian Ministry of Education (MIUR) put into action the National Plan for Digital School (PNSD), which aims to set up a comprehensive framework of renewal for Italian schools. Many activities have been supported by PNSD to introduce students to digital technologies (Avvisati et al. 2013; Bottino 2014). One of these activities is to train in-service teachers in didactic innovation. PNSD establishes that the professional development of teachers will take place in the poli (schools leading other sets of schools) and in the snodi formativi territoriali (schools which host training courses for a particular area of Italy). Even if this large and comprehensive strategy has made it possible to bring new technologies to schools, and schools to technology, with widespread coverage over the country, projects often rely on external experts to carry out activities. But to fully exploit the potential of new methodologies, schools cannot rely only on external experts or on small and fragmented activities in what remains of after-school time. Research on introducing technology into schools emphasizes that preparing teachers to deal with the use of technology and new methodologies in their teaching activity is the paramount concern (Lee and Lee 2014; OECD 2010; Rockland et al. 2010; Wang et al. 2014). Other studies indicate that a more extended and structured programme to bring technology into school curricula can help introduce several skills from an early stage of education (Scaradozzi et al. 2015, 2016a). This can be achieved only if teachers are trained to carry out meaningful activities, as they are the ones in charge of students’ education, balancing contents and experiences and articulating teaching to match the way students learn. Research in this field has produced a variety of results. 
Aldunate and Nussbaum (2013) found that the sooner teachers become acquainted with technology, the better they can cope with it and use it in daily classroom activities. Heitink et al. (2016) found a mismatch between what teachers think about their own abilities and the abilities they actually show in practice, stating that this is a threat to the effectiveness of using ICT in teaching and calling for explicit attention to teachers’ reasoning about their technology use in practice. Understanding users’ acceptance of technology in education has been the focus of several studies, and as a result some theories have spread across the scientific world, such as the technology acceptance model (TAM) (Davis 1989), the theory of planned behaviour (TPB) (Ajzen 1991), and the unified theory of acceptance and use of technology (UTAUT) (Venkatesh et al. 2003). But research in this field goes further. When measuring teachers’ technology usage, not only ICT integration has been investigated, but also pedagogical and ethical issues, ways to measure and improve teachers’ ICT integration proficiency, and internal and external factors that facilitate or hinder teachers’ ICT use at school (Hsu 2017).

This paper describes a training course for in-service teachers dealing with three new methodologies and technologies for teaching: Educational Robotics (ER), Coding and Tinkering. The course also dealt with Classroom Debate and Storytelling, but the authors chose to focus on the themes most relevant to the present issue. While the motivation for this kind of training course has been explained earlier in this section, its objectives are as follows:

- to provide teachers with key notions and examples of the tools and techniques that are proper to each of the above-mentioned methodologies;

- to improve teachers’ self-confidence in using technology in the classroom;

- to evaluate how much they have learnt from this course.

The course was designed to introduce teachers to each methodology, to let them experience a specific activity based on that methodology and, finally, to assess them anonymously. Details on the design and implementation of the course can be found in Sect. 2. Section 3 summarizes the main findings from the statistical analysis of data gathered through the assessment instruments. Section 4 draws some considerations from the analysis.

2 Methods

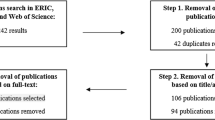

The course followed the schedule reported in Table 1. A total of 15 h was dedicated to the introduction of five different methodologies. During the four workshops teachers dealt with both theoretical aspects and practical activities. About 400 teachers were enrolled in the course offered by the snodo formativo territoriale. The whole course was delivered to groups of about 30 teachers at a time. Groups were created according to the availability of teachers and host facilities. Courses were delivered from May 2017 to June 2017 in Silvi and Giulianova, in the province of Teramo (Italy).

The next subsections provide full details on the research design and on the activities carried out during the workshops.

2.1 Participants

Participants were enrolled during the planned training course. The authors had no control over the enrolment of teachers. All teachers came from the province of Teramo. The aim of the study was presented to teachers at the beginning of the training course. They could choose whether to participate, so only volunteers took part in the study. Teachers participating in the study and those who did not received the same training, the same access to contents and the same opportunity to address questions to educators. They also worked together during the activities. Volunteers were also asked to fill in a form requesting personal information (age, gender, educational qualification, background), information about their professional life (years spent in the teaching career, the educational stage and subject(s) currently taught, and the province of Italy) and information connected with the topics of the training course (whether they currently use technology in their classroom and which kind). The total number of teachers, ranging from kindergarten to upper secondary school, considered for the statistical study described in Sect. 2.4 is 184. This sample is described in Table 2 and Fig. 2. Personal data and data on their professional life are reported in Table 2. Figure 2 shows the histogram of teachers’ usage of technology in the classroom by category. “Robot” stands for the use of robots, either as kits or already assembled, in classroom activities. “LIM” (Lavagna Interattiva Multimediale) is the Italian acronym for the interactive whiteboard. “Tablet/PC” stands for the use of a PC or tablet in teaching activities. “Boards” means electronic boards such as Arduino, Raspberry Pi and the many other options the market offers. “SW_Coding” is software dedicated to computer programming (e.g. Scratch). 
“SW_Didactical” is software dedicated to didactic purposes (for example, learning geometry or visualizing history and geography). “Text Editor” is the category for software dedicated to text editing. “AV_Editor” means software for audio–video editing. “Other” collects all those technologies teachers could not classify satisfactorily in the previous categories. Figure 2 shows that the clear majority of teachers had previous experience in using tablets and PCs to work with students. LIM also seems to be very popular in teachers’ work. This may indicate a good result for the Italian policies aiming at integrating this device into all classrooms. On the other hand, the use of robots, software for coding activities, boards and other technological instruments is low in our sample. Prior to this course, less than 10% of teachers had used robots or software dedicated to coding in classroom activities and less than 5% had used boards or other technological means. This pattern is consistent with the fact that the Piano Nazionale Informatica (PNI) and other policies addressing the use of computers at school started before PNSD and have produced results over the past years. Moreover, the increased availability of PCs and tablets as personal devices in recent years may help to reach even the schools that cannot afford such devices. The wide availability of PCs, tablets and smartphones makes it possible for teachers to exploit the philosophy of BYOD (bring your own device) and the potential of blended learning. By contrast, robots and, more specifically, educational robots are still a heavy cost for schools that fail to access funds. Moreover, the use of robots and electronic boards requires more specific technological knowledge, which still weighs on the efforts and goodwill of teachers.

2.2 Workshops Description

2.2.1 Workshop 1

The first step in Workshop 1 was to highlight that the core of the course was not about devices, but about a different approach that teachers can use in their class, allowing students to become creators of knowledge by working on meaningful projects. To create effective learning, Papert proposes the practical construction of a public entity, which could be tangible but also very abstract (Papert 1972; Papert and Harel 1991). The trainer also presented the definition of Computational Thinking (CT) given by Wing (2011), who defines it as “the thought processes involved in formulating problems and their solutions […]. The solution can be carried out by a human or machine, or more generally, by combinations of humans and machines.” After this brief theoretical introduction, teachers applied this pedagogical vision by working on their first course project. Kindergarten teachers used devices suitable for 4- to 5-year-old children (Bee-Bot, Fig. 3, and Scratch Jr., Fig. 4) to create stimulating scenarios. By practising, they could see how to construct programming sequences for the Bee-Bot, how to make creative mats on which robots move and how to create simple digital products with Scratch Jr.

Primary school teachers built robots that could act out the story “Two monsters” by David McKee. They built a lion robot using the Lego WeDo kit (Fig. 5) and transformed it into a monster (Fig. 6). Then, they programmed it to create behaviours in line with the main character of McKee’s story, using motor and sound blocks in Scratch.

Secondary school teachers built a robot to help NASA explore Mars using the Lego Mindstorms EV3 kit, Education edition (Fig. 7). They were then challenged to use simple programming concepts to accomplish a task: the rover had to cover a given distance (1 m) and teachers had to be as precise as possible. This challenge aimed to make them aware of how much mathematics and problem solving could be introduced in a practical and playful activity.
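The mathematics behind the 1 m challenge can be made explicit with a short calculation. The following Python sketch converts a target distance into wheel rotations and motor degrees through the wheel circumference. The 56 mm wheel diameter is an assumption made for illustration (the text does not state it), the wheel is assumed to be driven directly by the motor without slipping, and the function names are hypothetical.

```python
import math

# Assumed wheel diameter in metres; not given in the text, so measure the real wheel.
ASSUMED_WHEEL_DIAMETER_M = 0.056


def rotations_for_distance(distance_m, wheel_diameter_m=ASSUMED_WHEEL_DIAMETER_M):
    """Return how many wheel rotations cover distance_m without slipping."""
    if distance_m < 0 or wheel_diameter_m <= 0:
        raise ValueError("distance must be >= 0 and diameter must be > 0")
    circumference_m = math.pi * wheel_diameter_m
    return distance_m / circumference_m


def motor_degrees_for_distance(distance_m, wheel_diameter_m=ASSUMED_WHEEL_DIAMETER_M):
    """Return the motor rotation in degrees, assuming the wheel is driven directly."""
    return 360.0 * rotations_for_distance(distance_m, wheel_diameter_m)


if __name__ == "__main__":
    print(round(rotations_for_distance(1.0), 2))
    print(round(motor_degrees_for_distance(1.0)))
```

Comparing the computed value with the distance the rover actually travels can open a discussion on measurement error, wheel slip and rounding.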

Primary and secondary teachers experienced a possible model for organizing their present (and hopefully future) building activities: the assignment of roles. Each group was made up of four people with different roles: the designer (responsible for the project and coordinator of the team, with the task of communicating building instructions to teammates), the warehouse worker (responsible for the robotic kit, this student looks for Lego pieces inside the box), the technical-assembler (responsible for assembling the robot, this student builds it receiving instructions from the designer and Lego pieces from the warehouse worker), and the validator (responsible for checking whether the robot is correctly assembled, following the instructions on the computer).

At the end of the activity, all groups of teachers shared their tangible (in the case of robots) or abstract (in the case of programming sequences) artefacts, comparing different solutions and teaching each other how to solve diverse types of problems.

2.2.2 Workshop 2

Workshop 2 started with a reflection on possible flows and frameworks that a teacher could adopt when designing an educational progression. The trainer proposed two models: the Lego 4C Framework (https://education.lego.com/en-us/about-us) and the TMI model (Martinez and Stager 2013).

Lego 4C framework is made up of 4 phases:

- Connect: the challenge, project or task is introduced, allowing students to ask clarifying questions and build on their existing knowledge. In this phase they can connect to a real problem, meaningful for them, and find the motivations to decide to start the project.

- Construct: students have to create something concrete or abstract to fulfil the project they have in mind. Pupils can create artefacts together, working and experimenting in group.

- Contemplate: students think about their invention and reflect on possible improvements; they could also show and explain their products to other classmates.

- Continue: after the Contemplate phase, pupils could have new ideas to realize or problems to solve, so they start again with the Connect phase.

The TMI model is characterized by 3 phases: Think, Make and Improve:

- Think: the moment in which any group of learners have a brainstorming and decide what and how to create, defining goals and roles to maintain during the project.

- Make: the creation phase, when students can build, experiment and play.

- Improve (Fix or Make Better): pupils can share their inventions, they discuss with peers or with the educator how to improve or how to fix their creations.

The TMI cycle can model a process in which students build their own products to solve a specific problem or challenge, repeating steps 1 to 3 as many times as needed (Fig. 8).

The connections between the two models are easy to see: the Connect phase corresponds to the Think step, Construct to the Make step, Contemplate to the Improve step and Continue to the loop structure of the TMI model. The workshop then continued with a practical activity: kindergarten teachers built a more complex robot than in their previous experience (a robotic lion using the Lego WeDo kit), while primary and secondary teachers installed a sensor in the robot they had previously built and programmed their artefact to interact with the environment. For example, they used the motion sensor of the Lego WeDo kit to measure the distance from an object (Fig. 9) or programmed the robot rover to avoid obstacles on Mars following the code in Fig. 10.

Thus, teachers had the possibility to create a simple “intelligence” for their robot that goes beyond ON–OFF logic and can be extended to integrate other sensors. At the end of Workshop 2 the trainer and the educators discussed some ER projects, analysing objectives, activities and results obtained. It was a moment to inspire teachers and to give them powerful ideas they can replicate in future projects.
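The step from ON–OFF logic to sensor-driven behaviour can be illustrated with a simple decision rule. The Python sketch below is not the code of Fig. 10; it is a hypothetical example that assumes a distance reading in centimetres and an arbitrary 20 cm threshold, with names chosen only for illustration.

```python
# Hypothetical threshold; a real activity would tune it by experiment.
ASSUMED_SAFE_DISTANCE_CM = 20.0


def choose_action(distance_cm, safe_distance_cm=ASSUMED_SAFE_DISTANCE_CM):
    """Decide the rover's next move from one distance-sensor reading."""
    if distance_cm < 0:
        raise ValueError("distance cannot be negative")
    if distance_cm < safe_distance_cm:
        return "turn"      # obstacle too close: steer away
    return "forward"       # path is clear: keep driving


def plan_route(readings_cm, safe_distance_cm=ASSUMED_SAFE_DISTANCE_CM):
    """Apply the rule to a sequence of simulated sensor readings."""
    return [choose_action(r, safe_distance_cm) for r in readings_cm]


if __name__ == "__main__":
    # Simulated readings, invented for the example.
    print(plan_route([80.0, 45.0, 15.0, 60.0]))
```

The same structure extends to other sensors by swapping the reading and the threshold, which is the kind of generalisation the workshop aimed to suggest.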

2.2.3 Workshop 3

This workshop presented two innovative didactic methodologies: Digital Storytelling and Classroom Debate. Both were introduced in terms of their theoretical foundations and their practical aspects. As stated before, these two methodologies will not be discussed further.

2.2.4 Workshop 4

Workshop 4 was dedicated to introducing Tinkering activities into an educational project. Teachers created a very inexpensive robot (cost of materials about 3 €), using waste materials, markers and motors. Then, they freely created and tested “scribbling machines” (Fig. 11). At the end of these activities the trainer introduced the definition of Tinkering, reflecting on the different styles students can adopt in a making activity. In fact, students can act as planners (relying on formal rules, formulas and calculations) or as tinkerers (tending to react to the specific details of the particular situation). Tinkerers are often left out or alienated, especially in STEM classes, which particularly emphasize the planner style (Resnick and Rosenbaum 2013).

Teachers have to recognise these different styles of learning to let students express themselves in their own way, because there are multiple paths students can take to create an artefact. The workshop continued with a practical activity. Kindergarten and primary school teachers worked with the Makey Makey board, exploring the potential of this device and some possible connections with Italian curricular subjects. Secondary school teachers worked with the Arduino board, creating their first simple circuits and discovering how many powerful projects students could develop with it.

2.3 Assessment Instruments

The variables of interest were teachers’ basic knowledge of and self-confidence towards the proposed methodologies and tools. Both were measured at two different moments. The first measure provides the entry level for the course, the “Baseline” (BL). The last measure provides the “Post-Training” (PT) level. Self-confidence towards the proposed methodologies was measured by means of a questionnaire (B1). It was presented to teachers twice: during the preliminary seminar (B1-BL) and during the final seminar (B1-PT). For each methodology the questionnaire asked three questions: one on proficiency in the theoretical knowledge, one on the mastery of techniques and tools and one on the capability to design a didactic path employing their knowledge of the tools and techniques of that particular methodology. The full questionnaire is reported in the Appendix. These three questions per methodology aimed at measuring self-confidence in two dimensions: knowledge of the tools and theories behind each methodology and capability to take on the challenge of designing a didactic path. By administering B1 twice, the authors aimed at detecting how teachers’ assessment of their own competence changed following participation in the course. To this end a 10-point Likert scale with 0.5-point sensitivity was chosen. This kind of answer makes it possible to translate the intensity of an attitude or perception into a numeric value on a scale with a high degree of precision. Teachers were able to give their opinion or position on each question with the degree of detail they wanted. The use of Likert-type scales, the administration procedure and the wording of questions all contributed to making administration easy and consistent, so as to deal with methodological threats. A factor analysis and a reliability analysis were carried out using IBM SPSS v. 25. The items considered for these analyses are those regarding ER, Coding and Tinkering (9 items). 
Data were gathered from teachers who were not interested in participating in the whole experiment and only filled in B1 at the beginning of the course. In total, 285 teachers participated in this part of the study. Sample size was not an issue because this number of observations respects the rule of thumb of having at least 10–15 participants per item. Moreover, the Kaiser–Meyer–Olkin (KMO) measure of sampling adequacy for the dataset is 0.862, a good value. The correlation matrix (Table 3) shows good correlations between items. None of the correlations falls below r = 0.3. On the other hand, it should be noted that correlation is high (r > 0.9) for the two questions concerning methodologies and tools (item pairs 1 and 2, 3 and 4, 5 and 6).

Bartlett’s test indicates that we can reject the null hypothesis (H0: no correlation between variables, rejected with p < 0.05), so we can proceed with factor analysis. Figure 12 shows the scree plot. Factors with values above the point at which the curve flattens should be retained. In this case we have two factors. Together they explain about 84.5% of the total variance, and factor 1 alone explains 71.85% of the total variance. Factor 1 has high loadings on every item (more than 0.7 for each item), while factor 2 shows very low loadings (most of them less than 0.3) except for items 3, 4 and 8 (higher than 0.3 and less than 0.6). A reliability analysis was also carried out on the self-confidence questionnaire, and Cronbach’s alpha showed that B1 reaches good reliability (alpha = 0.945). All items seemed worthy of retention.
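For readers unfamiliar with the reliability coefficient mentioned above, the following Python sketch shows one way Cronbach’s alpha can be computed from a respondents-by-items matrix, using the standard formula alpha = k/(k − 1) · (1 − Σ item variances / variance of total scores). It is not the SPSS procedure used in the study; the function name and the tiny data set are invented for illustration only.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Compute Cronbach's alpha.

    responses: list of rows, one per respondent, each row holding that
    respondent's score on every item (all rows the same length).
    """
    n_items = len(responses[0])
    if n_items < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    if any(len(row) != n_items for row in responses):
        raise ValueError("all rows must have the same number of items")

    # Sample variance of each item (column) across respondents.
    item_variances = [variance([row[i] for row in responses]) for i in range(n_items)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")

    return (n_items / (n_items - 1)) * (1 - sum(item_variances) / total_variance)


if __name__ == "__main__":
    # Invented scores for three respondents on two items (not study data).
    print(cronbach_alpha([[1, 2], [2, 1], [3, 3]]))
```

Values close to 1 indicate that the items vary together, which is what the reported alpha of 0.945 suggests for B1.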

Finally, as a measure of criterion validity we compared the mean scores in B1 with previous experience with robots, boards and software for coding activities. On the contingency table of B1 results (scores above or below the mean value of all scores) against previous experience (“some” or “none”), Cohen’s kappa coefficient showed moderate agreement between the two measures (κ = 0.45).
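As a minimal sketch of how an agreement coefficient of this kind is obtained, the Python function below computes Cohen’s kappa from a square contingency table as (observed agreement − expected agreement) / (1 − expected agreement). The function name is hypothetical and the counts in the example are invented; they are not the study’s contingency table.

```python
def cohen_kappa(table):
    """Cohen's kappa for a square contingency table (list of rows of counts)."""
    size = len(table)
    if size < 2 or any(len(row) != size for row in table):
        raise ValueError("table must be square with at least two categories")
    total = sum(sum(row) for row in table)
    if total == 0:
        raise ValueError("table is empty")

    # Proportion of cases on the diagonal (both classifications agree).
    observed = sum(table[i][i] for i in range(size)) / total
    row_totals = [sum(row) for row in table]
    col_totals = [sum(table[r][c] for r in range(size)) for c in range(size)]
    # Agreement expected by chance from the marginal totals.
    expected = sum(row_totals[i] * col_totals[i] for i in range(size)) / total ** 2
    if expected == 1:
        raise ValueError("kappa is undefined when expected agreement is 1")
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # Rows: B1 above / below the mean; columns: "some" / "none" experience.
    # Counts are invented for the example.
    print(cohen_kappa([[20, 5], [10, 15]]))
```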

To measure basic knowledge about the methodologies and tools covered by the course, the test B2 was built by the expert educators. It was presented to teachers twice: first during the preliminary seminar (B2-BL) and then during the final seminar (B2-PT). Two questions per methodology were formulated: one testing teachers’ knowledge of the theoretical foundations of the methodology and one testing their basic knowledge of a specific tool usually used for activities with that methodology. Each question provided three possible answers: one correct, one wrong and one partially wrong. Teachers could choose either to answer the question or not. They were instructed to choose the best option amongst the three. B2-BL gives an overview of how much teachers already knew about the methodologies before the training. B2-PT tells us how much this changed after they completed the course. This gives information both on how far these methodologies are already in use, or at least known, and on the changes in basic knowledge that can reasonably be considered an effect of the training course, thus providing a measure of its effectiveness.

2.4 Statistical Methods

Data from B1-BL, B1-PT, B2-BL and B2-PT were recoded. The 10-point Likert scale that respondents to B1-BL and B1-PT used was discretized and divided into five classes: class I was associated with a very low level, class II with a low level, class III with a medium level, class IV with a good level and class V with a very good level. This was necessary due to the granularity of the data. The answers to B2-BL and B2-PT were also classified. For each methodology, each subject could give two correct answers (class III), only one correct answer (class II) or no correct answers, i.e. missing and/or wrong answers to both questions (class I). So, class I represents the lowest level of knowledge, class II the medium level and class III the highest. To establish whether the differences among the values collected for a specific variable are due to chance (null hypothesis, H0) or not (alternative hypothesis, H1), statistical tests were applied: the p value is compared with the significance level below which H0 is rejected and H1 is accepted. In our study H1 established that (1) differences at BL were due to individual, previous self-awareness (B1) or knowledge/competence (B2) and (2) differences between BL and PT were due to the training. Since the variables collected are categorical (expressed as percentages per class), the non-parametric χ2 test and McNemar test were chosen. Comparisons among classes at BL and at PT were performed by χ2 test to evaluate the significance of the differences; the Bonferroni correction for multiple comparisons was applied, so significance was accepted for p < 0.005 for B1-BL and B1-PT (ten pairwise comparisons among five classes) and p < 0.0167 for B2-BL and B2-PT (three pairwise comparisons among three classes). To compare BL and PT values the McNemar test was applied; significance was accepted for p < 0.05.
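The recoding and the corrected significance thresholds can be sketched in a few lines of Python. Two points are assumptions, since the text does not specify them: that B1 scores run from 1 to 10 and are grouped into equal-width classes, and that a partially wrong B2 answer does not count as correct. The function names are hypothetical. The Bonferroni thresholds, by contrast, follow directly from dividing 0.05 by the number of pairwise comparisons among the classes.

```python
import math

ROMAN = ["I", "II", "III", "IV", "V"]


def b1_class(score):
    """Map a B1 score to one of five classes.

    Assumption: scores run from 1 to 10 in 0.5 steps and the classes are
    equal-width bins (1-2 -> I, 2.5-4 -> II, ..., 8.5-10 -> V).
    """
    if not 1 <= score <= 10:
        raise ValueError("score must be between 1 and 10")
    return ROMAN[math.ceil(score / 2) - 1]


def b2_class(answers):
    """Map the two B2 answers for one methodology to class I, II or III.

    answers: two values among "correct", "partial", "wrong" or None (missing).
    Assumption: a partially wrong answer does not count as correct.
    """
    if len(answers) != 2:
        raise ValueError("expected exactly two answers")
    n_correct = sum(1 for a in answers if a == "correct")
    return ROMAN[n_correct]


def bonferroni_threshold(n_classes, alpha=0.05):
    """Per-comparison significance level for all pairwise class comparisons."""
    n_comparisons = math.comb(n_classes, 2)
    return alpha / n_comparisons


if __name__ == "__main__":
    print(b1_class(6.5), b2_class(["correct", None]))
    print(bonferroni_threshold(5), bonferroni_threshold(3))
```

With five classes there are ten pairwise comparisons and with three classes there are three, which reproduces the thresholds of 0.005 and 0.0167 used for B1 and B2.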

3 Results

Data from questionnaires B1-BL, B1-PT, B2-BL and B2-PT were analysed using MS Excel and Mathworks Matlab R2017b. Results from this procedure are reported in the following subsections divided by methodologies.
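The BL-versus-PT comparisons reported in the following subsections rely on the McNemar test. As a rough illustration, the Python sketch below computes a two-sided exact McNemar p value for one class, assuming that each teacher’s BL and PT class labels can be paired. The text does not say which variant of the test was run in Excel or Matlab, so the exact binomial form used here is an assumption, and the function names and example data are hypothetical.

```python
import math


def discordant_counts(before, after, target_class):
    """Count teachers who left or entered target_class between BL and PT.

    before, after: paired lists of class labels, one pair per teacher.
    Returns (left, entered).
    """
    if len(before) != len(after):
        raise ValueError("paired lists must have the same length")
    pairs = list(zip(before, after))
    left = sum(1 for x, y in pairs if x == target_class and y != target_class)
    entered = sum(1 for x, y in pairs if x != target_class and y == target_class)
    return left, entered


def mcnemar_exact_p(b, c):
    """Two-sided exact McNemar p value from the two discordant counts."""
    n = b + c
    if n == 0:
        return 1.0  # no discordant pairs: no evidence of change
    # Binomial tail probability under H0 (each discordant pair is a fair coin).
    tail = sum(math.comb(n, k) for k in range(min(b, c) + 1)) / 2 ** n
    return min(1.0, 2 * tail)


if __name__ == "__main__":
    # Invented class labels for four teachers (not study data).
    bl = ["I", "I", "II", "III"]
    pt = ["III", "I", "III", "III"]
    left, entered = discordant_counts(bl, pt, "I")
    print(left, entered, mcnemar_exact_p(left, entered))
```

Only the teachers who change category contribute to the statistic, which is why the test suits a before-and-after design on the same sample.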

3.1 Educational Robotics

Figure 13 summarizes the outcomes of the course evaluated through questionnaires B1 and B2 at baseline (BL) and post-training (PT). The figure shows the significance of the differences between the columns within each histogram (B1-BL, B1-PT, B2-BL, B2-PT), between the columns in B1-BL and B1-PT and between those in B2-BL and B2-PT. At baseline, all columns in B1 are significantly different from each other, showing a descending trend from class I, which is the most populated class, to class V. After training, the distribution takes a different shape: columns still differ significantly from each other, except that no significant differences were found between class I and class V or between class II and class IV. All columns in B2-BL and B2-PT are significantly different from each other. Comparing B1-BL and B1-PT, significant differences are found for classes I, III, IV and V. B2-BL and B2-PT show significantly different values for classes II and III.

3.2 Coding

Figure 14 summarizes the outcomes of the course evaluated through questionnaires B1 and B2 at baseline (BL) and post-training (PT). The figure shows the significance of the differences between the columns within each histogram (B1-BL, B1-PT, B2-BL, B2-PT), between the columns in B1-BL and B1-PT and between those in B2-BL and B2-PT. At baseline, all columns in B1 are significantly different from each other, except for class II with respect to class II and class IV to class IV; class I is significantly the most populated class of the histogram. After training, the distribution takes a different shape, showing a significant difference between class III and all the other columns, between class II and class IV and between class IV and class V. All columns in B2-BL and B2-PT are significantly different from each other except for class II and class III at baseline. Comparing B1-BL and B1-PT, significant differences are found for classes I, III, IV and V. B2-BL and B2-PT show significantly different values for classes II and III.

3.3 Tinkering

Figure 15 summarizes the outcomes of the course evaluated through questionnaires B1 and B2 at baseline (BL) and post-training (PT). The figure shows the significance of the differences between the columns within each histogram (B1-BL, B1-PT, B2-BL, B2-PT), between the columns in B1-BL and B1-PT and between those in B2-BL and B2-PT. At baseline, all columns in B1 are significantly different from each other, except for classes IV and V, which are also the only empty classes; class I is significantly higher than all the others. After training, the distribution takes a different shape, showing a significant difference between class V and all the other columns and between class I and class III. Columns in B2-BL show no significant difference, while in B2-PT class II and class III are significantly higher than class I. B1-BL and B1-PT show significant differences between the corresponding columns of the histograms, and so do B2-BL and B2-PT.

4 Discussion and Conclusion

Hsu (2017) noticed a lack of attention to investigating the extent to which teachers improve their ICT integration over time. Moreover, she states that more than 40% of research designed activities in K12 education did not utilize devices to provide students with constructivist or collaborative learning. This study aims at evaluating the effect of a training course on in-service teachers’ knowledge and self-confidence in using technology in practice. Specifically, the course was designed to transfer not just basic technological skills, but also knowledge about new approaches to teaching and reasoning about technology in the classroom. Two measurements made it possible to conduct the analysis reported in Sect. 3. Figures 13, 14 and 15 allow us to make some considerations. Similar trends in questionnaires and tests can be noticed: B1-BL histograms are unimodal, strongly right-skewed distributions, while B1-PT histograms are unimodal and more symmetric than B1-BL; B2-BL histograms for ER and Coding have similar shapes (strong negative skew), while B2-BL for Tinkering has an almost flat distribution among the three categories, but all three B2-PT distributions are strongly negatively skewed. The differences amongst classes in each histogram were tested by a chi-squared test (with Bonferroni correction) to determine whether they were due to chance or statistically significant. Differences between the first and second measurements were tested by the McNemar test. Results were presented in the previous section. In B1-BL, class I for ER, Coding and Tinkering is significantly higher than all the other columns. This suggests that the entry level for all three methodologies was very low, i.e. that teachers’ self-confidence was very low. This may relate to the fact that they did not have much previous experience with technologies; in particular, teachers had little experience with robots, boards or software for coding activities in their classrooms (Fig. 2). 
On the other hand, B2-BL suggests that most of the teachers had some early general notions of ER and Coding. Nothing can be said in this respect about Tinkering, because all three classes are equally represented, as can be seen in Fig. 15 (left). At the end of the course, class I for B1 is significantly lower than the corresponding class at baseline. This suggests that teachers’ self-confidence became more positive. In fact, classes III, IV and V of B1-PT are significantly higher than the corresponding classes in B1-BL, even though class V is significantly lower than classes III and IV. However, the rise of class V in B1-PT is not the most prominent amongst the three higher classes. This can be connected to the limited time teachers had to discover these new methodologies: a total of 15 h in one week may be too short a period to become fully confident. Teachers may need further exploration of the three methodologies, especially in a real environment. A useful addition to their training could therefore be the design of a project that integrates the methodologies they learnt during the course with the subject they teach at school. This could be feasible in future courses by adding more dedicated hours. The research design could be improved by adding a follow-up study examining whether and how participants implement activities in their real environment, for example by creating an activity, organising the work accordingly and then evaluating the outcomes, exploiting both their knowledge of the methodologies and their personal richness as experienced teachers. Nevertheless, the improvement of teachers’ self-confidence is a promising factor. Heitink et al. (2016) studied teachers’ professional reasoning about their pedagogical use of technology. 
Their study evaluated volunteers (more positive and more confident than the average teacher, as stated by the authors); thus, their results were not influenced by a lack of self-confidence or of positive perceptions toward technology. Still, they found a mismatch between teachers’ reasoning about technology and their use of technology in practice.
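
The paired comparison between baseline and post-training mentioned above can be illustrated with a short sketch. It is not the analysis code used in this study: it assumes that each class is treated as a yes/no outcome per teacher at each measurement, it uses the exact (binomial) form of the McNemar test, and the function names and counts are hypothetical.

```python
from math import comb


def mcnemar_exact(b: int, c: int) -> float:
    """Two-sided exact McNemar p-value from the two discordant counts.

    b: teachers in the class at baseline but not at post-training
    c: teachers not in the class at baseline but in it at post-training
    Concordant pairs do not enter the test.
    """
    n = b + c
    if n == 0:
        return 1.0  # no discordant pairs, no evidence of change
    k = min(b, c)
    # Probability of a split at least this extreme under Binomial(n, 0.5)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


def bonferroni(p_values):
    """Bonferroni adjustment: multiply each p-value by the number of tests."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical counts, for illustration only (not data from this study)
p_change = mcnemar_exact(b=0, c=10)
adjusted = bonferroni([p_change, 0.5])
```

With these invented counts, all ten discordant teachers moved in the same direction, so the p-value is small; a balanced split such as 5 and 5 would give a p-value of 1.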

B2-PT in both ER and Coding shows a marked increase in class III and a decrease in class II with respect to B2-BL. At baseline, teachers seemed to have a partial knowledge of ER and Coding (class II contains all the teachers who gave only one correct answer to B2), while at post-training their level of knowledge seemed to improve. For Tinkering, we can observe that class I significantly decreases in B2-PT, while classes II and III significantly increase. This leads us to think that the course effectively conveyed some notions about this methodology. It seems that Tinkering is not as popular as the other two methodologies (B2-BL shows no significant differences between classes), but at the end of the course a good share of teachers improved their basic knowledge (about 40% answered one question correctly and about 55% answered both questions correctly).
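
The grouping of B2 answers into classes can be sketched as follows. This is a minimal illustration rather than the scoring procedure used in the study: the text states that class II corresponds to one correct answer, while the mapping of zero correct answers to class I and two correct answers to class III is an assumption inferred from the discussion above. The function names and the sample scores are hypothetical.

```python
from collections import Counter

# Assumed mapping from number of correct answers (out of the two B2
# questions on one methodology) to class label. Only class II is
# defined explicitly in the text; I and III are inferred.
B2_CLASSES = {0: "I", 1: "II", 2: "III"}


def b2_class(correct_count: int) -> str:
    """Return the class label for a teacher's number of correct answers."""
    if correct_count not in B2_CLASSES:
        raise ValueError("correct_count must be 0, 1 or 2")
    return B2_CLASSES[correct_count]


def class_shares(correct_counts):
    """Share of teachers falling in each class, as fractions of the sample."""
    counts = Counter(b2_class(n) for n in correct_counts)
    total = len(correct_counts)
    return {label: counts.get(label, 0) / total for label in ("I", "II", "III")}


# Invented scores for four teachers, for illustration only
shares = class_shares([0, 1, 1, 2])
```

Computing the shares at baseline and at post-training for each methodology gives the class frequencies that the histograms compare.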

We can observe that at the end of the course both the self-confidence and the basic knowledge of teachers improved. Practice during workshops, knowledge gained through small lectures, discussions among peers and the exchange of experience may all have contributed to the improvement of their self-confidence with the three methodologies. Research in education can benefit from a wider exploitation of quantitative, qualitative and mixed-methods approaches, but the literature on ER, Coding and Tinkering has a wide variety of qualitative studies and still few that employ quantitative methods (Benitti 2012; Brennan 2014; Vossoughi and Bevan 2014). While ER and Coding are very commonly applied in both formal and informal settings, Tinkering is still applied more in informal settings (Hjorth et al. 2016). This paper attempts to quantify how self-confident in-service teachers are and how many notions were transferred to them, before and after a training course. This information can give useful suggestions to those interested in digging deeper into the associated phenomena, be they researchers developing a more comprehensive study, entrepreneurs willing to invest in the field, or policy makers willing to understand the actual situation of in-service teachers, balanced between the past and the future of education. Nevertheless, assessing the outcomes of a course and quantifying variables is not an easy task. In this work, some points could be improved in the future by refining the design of the experiment and the assessment instruments. This work was the initial step toward deeper and more comprehensive research on the introduction of new methodologies in twenty-first century education. Teachers’ training is a key component in this process. Next steps will include a larger sample and the introduction of other tests in addition to the ones presented in this paper. The sample presented here was obtained by convenience sampling. 
It will be useful to expand the experiment on other occasions to include a control group, in order to test for the maturation effect. Moreover, further courses will start in other geographical areas of Italy, allowing us to extend the generalizability of results and to establish comparisons between different areas. Extending our assessment instruments will probably result in a more accurate picture of in-service teachers. To give an example, in the overall evaluation, answers to B2 seem to be sufficiently positive from the beginning (B2-BL), especially for ER and Coding. This may be due to previous knowledge of the methodologies, but it could also be related to the fact that just two general questions are not sufficient to evaluate completely a teacher’s knowledge of a methodology. It may be useful to strengthen this test with further questions and to evaluate its psychometric properties.

Change history

02 May 2019

The article listed above was initially published with incorrect copyright information.

Abbreviations

- 4C: Connect, Construct, Contemplate, Continue
- ASD: Autistic spectrum disorder
- BL: Baseline
- BYOD: Bring your own device
- CT: Computational thinking
- HSS: Humanities and Social Sciences
- ICT: Information Communication Technology
- KMO: Kaiser–Meyer–Olkin measure of sampling adequacy
- LIM: Lavagna Interattiva Multimediale (interactive whiteboard)
- MIUR: Ministero dell’Istruzione, dell’Università e della Ricerca
- MIT: Massachusetts Institute of Technology
- NASA: National Aeronautics and Space Administration
- OECD: Organisation for Economic Co-operation and Development
- PCA: Principal component analysis
- PNI: Piano Nazionale Informatica (National Plan for teaching Informatics at school)
- PNSD: Piano Nazionale Scuola Digitale
- PT: Post-training
- ST: Science and Technology
- STEM: Science, technology, engineering and mathematics
- TAM: Technology acceptance model
- TMI: Think Make Improve
- TPB: Theory of planned behaviour
- UTAUT: Unified theory of acceptance and use of technology

References

Agatolio, F., Pivetti, M., Di Battista, S., Menegatti, E., & Moro, M. (2017). A training course in educational robotics for learning support teachers. In D. Alimisis, M. Moro, & E. Menegatti (Eds.), Educational robotics in the makers era (pp. 43–57). Cham: Springer.

Ajzen, I. (1991). The theory of planned behavior. Organizational Behavior and Human Decision Processes, 50(2), 179–211.

Aldunate, R., & Nussbaum, M. (2013). Teacher adoption of technology. Computers in Human Behavior, 29(3), 519–524. https://doi.org/10.1016/j.chb.2012.10.017.

Atmatzidou, S., & Demetriadis, S. (2016). Advancing students’ computational thinking skills through educational robotics: A study on age and gender relevant differences. Robotics and Autonomous Systems, 75(Part B), 661–670. https://doi.org/10.1016/j.robot.2015.10.008.

Avvisati, F., Hennessy, S., Kozma, R. B., & Vincent-Lancrin, S. (2013). Review of the Italian strategy for digital schools. OECD Education Working Papers (90), 0_1.

Benitti, F. B. V. (2012). Exploring the educational potential of robotics in schools: A systematic review. Computers & Education, 58(3), 978–988. https://doi.org/10.1016/j.compedu.2011.10.006.

Bevan, B., Petrich, M., & Wilkinson, K. (2015). Tinkering is serious play. Educational Leadership, 72(4), 28–33.

Blikstein, P., Kabayadondo, Z., Martin, A., & Fields, D. (2017). An assessment instrument of technological literacies in makerspaces and fabLabs. Journal of Engineering Education, 106(1), 149–175.

Bottino, R. M. (2014). ICT as a catalyst of innovation. In R. Huang, Kinshuk, & J. K. Price (Eds.), ICT in education in global context (pp. 3–18). Berlin: Springer.

Brennan, K. (2014). Constructionism in the classroom: Three experiments in disrupting technocentrism. In G. Futschek & C. Kynigos (Eds.), Constructionism and creativity, proceedings of the 3rd international constructionism conference 2014 (pp. 1–8). Wien, Austria. Retrieved from http://constructionism2014.ifs.tuwien.ac.at/papers/1.3_1-8524.pdf.

Cesaretti, L., Storti, M., Mazzieri, E., Screpanti, L., Paesani, A., Principi, P., & Scaradozzi, D. (2017). An innovative approach to School-Work turnover programme with Educational Robotics. Mondo Digitale, 16(72). Retrieved from http://mondodigitale.aicanet.net/2017-5/best_papers_didamatica_2017/MD72_03_paper_64.pdf.

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13, 319–340.

Dede, C. (2010). Comparing frameworks for 21st century skills. In J. Bellanca & R. Brandt (Eds.), 21st century skills: Rethinking how students learn (Vol. 20, pp. 51–76). Bloomington: Solution Tree Press.

Eguchi, A. (2015). Educational robotics to promote 21st century skills and technological understanding among underprivileged undergraduate students. In 2015 IEEE integrated STEM education conference (ISEC) (pp. 76–82). IEEE.

English, L. D. (2016). STEM education K-12: Perspectives on integration. International Journal of STEM Education, 3(1), 3.

Heitink, M., Voogt, J., Verplanken, L., van Braak, J., & Fisser, P. (2016). Teachers’ professional reasoning about their pedagogical use of technology. Computers & Education, 101, 70–83.

Hjorth, M., Smith, R. C., Loi, D., Iversen, O. S., & Christensen, K. S. (2016). Educating the reflective educator: Design processes and digital fabrication for the classroom. Paper presented at the 6th annual conference on creativity and fabrication in education.

Hsu, S. (2017). Developing and validating a scale for measuring changes in teachers’ ICT integration proficiency over time. Computers & Education, 111, 18–30.

Kaboski, J. R., Diehl, J. J., Beriont, J., Crowell, C. R., Villano, M., Wier, K., et al. (2015). Brief report: A pilot summer robotics camp to reduce social anxiety and improve social/vocational skills in adolescents with ASD. Journal of Autism and Developmental Disorders, 45(12), 3862–3869.

Kose, H., Akalin, N., & Uluer, P. (2014). Socially interactive robotic platforms as sign language tutors. International Journal of Humanoid Robotics, 11(01), 1450003.

Lee, Y., & Lee, J. (2014). Enhancing pre-service teachers’ self-efficacy beliefs for technology integration through lesson planning practice. Computers & Education, 73, 121–128.

Martinez, S. L., & Stager, G. (2013). Invent to learn: Making, tinkering, and engineering in the classroom. Torrance: Constructing Modern Knowledge Press.

Mubin, O., Stevens, C. J., Shahid, S., Al Mahmud, A., & Dong, J. (2013). A review of the applicability of robots in education. Technology for Education and Learning, 1, 1–7. https://doi.org/10.2316/Journal.209.2013.1.209-0015.

OECD. (2010). Inspired by technology, driven by pedagogy: A systemic approach to technology-based school innovations. Paris: OECD Publishing.

OECD. (2016). Innovating education and educating for innovation: The power of digital technologies and skills. Paris: OECD Publishing.

Papert, S. (1972). Teaching children thinking. Programmed Learning and Educational Technology, 9(5), 245–255.

Papert, S., & Harel, I. (1991). Situating constructionism. Constructionism, 36(2), 1–11.

Resnick, M., & Rosenbaum, E. (2013). Designing for tinkerability. In M. Honey & D. E. Kanter (Eds.), Design, make, play: Growing the next generation of STEM innovators (pp. 163–181). New York: Routledge.

Rockland, R., Bloom, D. S., Carpinelli, J., Burr-Alexander, L., Hirsch, L. S., & Kimmel, H. (2010). Advancing the “E” in K-12 STEM education. Journal of Technology Studies, 36(1), 53–64. https://doi.org/10.21061/jots.v36i1.a.7.

Rolandsson, L., & Skogh, I.-B. (2014). Programming in school: Look back to move forward. ACM Transactions on Computing Education (TOCE), 14(2), 12.

Sanders, M. E. (2008). Stem, stem education, stemmania. Technology Teacher, 68(4), 20–26.

Scaradozzi, D., Pachla, P., Screpanti, L., Costa, D., Berzano, M., & Valzano, M. (2016a). Innovative robotic tools for teaching STREM at the early stage of education. Paper presented at the 10th international technology, education and development conference (INTED), Valencia, Spain.

Scaradozzi, D., Screpanti, L., Cesaretti, L., Mazzieri, E., Storti, M., Brandoni, M., & Longhi, A. (2016b). “Rethink Loreto: We build our smart city!” A stem education experience for introducing smart city concept with the educational robotics. Paper presented at the 9th annual international conference of education, research and innovation (ICERI 2016), Seville, Spain.

Scaradozzi, D., Sorbi, L., Pedale, A., Valzano, M., & Vergine, C. (2015). Teaching robotics at the primary school: An innovative approach. Procedia-Social and Behavioral Sciences, 174, 3838–3846.

Urschitz, T., & Moro, M. (2014). Low threshold: How inclusive is Constructionism. Paper presented at the international conference on constructionism, Vienna, Austria.

Venkatesh, V., Morris, M. G., Davis, G. B., & Davis, F. D. (2003). User acceptance of information technology: Toward a unified view. MIS Quarterly, 27, 425–478.

Vossoughi, S., & Bevan, B. (October, 2014). Making and tinkering: A review of the literature. National Research Council Committee on Out of School Time STEM, pp. 1–55. Retrieved from http://sites.nationalacademies.org/cs/groups/dbassesite/documents/webpage/dbasse_089888.pdf.

Wang, S.-K., Hsu, H.-Y., Campbell, T., Coster, D. C., & Longhurst, M. (2014). An investigation of middle school science teachers and students use of technology inside and outside of classrooms: Considering whether digital natives are more technology savvy than their teachers. Educational Technology Research and Development, 62(6), 637–662.

Weintrop, D., & Wilensky, U. (2016). Bringing blocks-based programming into high school computer science classrooms. Paper presented at the annual meeting of the American Educational Research Association (AERA), Washington DC, USA.

Wing, J. (2011). Research notebook: Computational thinking—What and why? The Link Magazine, Spring. Carnegie Mellon University, Pittsburgh. Retrieved from http://link.cs.cmu.edu/article.php?a=600.

Acknowledgements

The authors would like to express their gratitude to Dr. Marta Balietti for her professional support in analysing data. A special thank you goes out to the Headmaster of Istituto Comprensivo “Giovanni Pascoli” (Silvi, TE) and I.I.S. “Crocetti-Cerulli” (Giulianova, TE) for having made the fulfilment of the project possible. This material is based upon work supported by the Italian Ministry of Education with programme: Fondi Strutturali Europei – Programma Operativo Nazionale “Per la scuola, competenze e ambienti per l’apprendimento” 2014–2020. FSE Azione 10.8.4 “Formazione del personale della scuola e della formazione su tecnologie e approcci metodologici innovativi”. Individuazione degli “Snodi formativi territoriali”.

Appendix

Questionnaire B1 (self-confidence)

1. How much do you know about the methodologies related to Educational Robotics?

2. How much do you know the tools (like software, kit, …) to carry out an activity of Educational Robotics in the classroom?

3. How much do you know about the methodologies related to Coding?

4. How much do you know the tools (like software, IDE, …) to carry out an activity of Coding in the classroom?

5. How much do you know about the methodologies related to Tinkering?

6. How much do you know the tools to carry out an activity of Tinkering in the classroom?

7. How much do you feel to be able to prepare an Educational Robotics activity?

8. How much do you feel to be able to prepare a Coding activity?

9. How much do you feel to be able to prepare a Tinkering activity?

Test B2 (knowledge)

1. With the term “Educational Robotics” we intend:

   A. The robotic kits that can be employed while teaching

   B. The whole set of tools and methodologies based on constructionism, which states that learning derives by the possibility to build an object.

   C. The method designed by Lego to use robots in schools

2. In an Educational Robotics kit, a generic sensor can be used

   A. To acquire data from environment and to allow the robot to interact with the environment

   B. To prevent the robot from hitting obstacles

   C. To make the robot sensitive to emotions

3. Tinkering in an educational environment is

   A. A method characterized by a bottom-up approach through which students explore, make and iteratively make experiments in a project-based or problem-based context

   B. A method that students at school can use to successfully manage projects

   C. A method characterized by a top-down approach, specific calculations and structured design, to help students to carry out projects or to solve problems

4. To carry out projects using the tinkering methodology in the classroom we need

   A. A Makey Makey board

   B. Lego boxes

   C. It depends on the kind of the project, in general it can be useful to consider waste material

5. Computational thinking can be defined as

   A. A mental process to make possible for an agent, being it a machine or a person, to solve problems by giving instructions to follow autonomously

   B. Being able to write computer programs

   C. A passion for computer science

6. Scratch is a tool

   A. That allows to create funny videogames

   B. Which allows interdisciplinary link letting students free to create personal projects (stories, games, simulations, animated cartoon…)

   C. Which allows teachers to present students with fixed exercises that train computational thinking and train programming skill (for example labyrinth’s solution)


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Scaradozzi, D., Screpanti, L., Cesaretti, L. et al. Implementation and Assessment Methodologies of Teachers’ Training Courses for STEM Activities. Tech Know Learn 24, 247–268 (2019). https://doi.org/10.1007/s10758-018-9356-1

DOI: https://doi.org/10.1007/s10758-018-9356-1