Abstract

AI-powered chat technology is an emerging topic worldwide, particularly in areas such as education, research, writing, publishing, and authorship. This study explores the factors driving students' acceptance of ChatGPT in higher education. It employs the unified theory of acceptance and use of technology (UTAUT2), extended with Personal innovativeness, to explain students' Behavioral intention to use ChatGPT and their Use behavior. The study uses data from a sample of 503 Polish state university students, and the model is tested with the PLS-SEM method. Results indicate that Habit has the strongest effect (0.339) on Behavioral intention, followed by Performance expectancy (0.260) and Hedonic motivation (0.187). Behavioral intention has the strongest effect (0.424) on Use behavior, followed by Habit (0.255) and Facilitating conditions (0.188). The model explains 72.8% of the variance in Behavioral intention and 54.7% of the variance in Use behavior. Although the study is limited by its sample size and selection, it is expected to serve as a starting point for further research on ChatGPT-like technology in university education, given that this technology has only recently been introduced.

Introduction

The integration of Artificial Intelligence (AI) into various sectors of society has become a prominent focus of academic inquiry and application, with education standing out as a particularly noteworthy arena (Nazaretsky et al., 2022a). AI's foray into education can be traced back to the development of early chatbots in the 1960s (Weizenbaum, 1966). However, a marked improvement in AI's capabilities, particularly in generative AI, became apparent in the last decade (Adiwardana et al., 2020; Vaswani et al., 2017). Among the torchbearers of this evolution is ChatGPT, a generative AI application with transformative potential for education (OpenAI, 2023).

Generative AI, such as ChatGPT, operates differently from traditional AI models. Instead of merely recognizing patterns and making predictions, it produces novel outputs, including text, audio, and video. Large Language Models (LLMs), such as those behind ChatGPT or LLaMA, are rooted in deep neural network architectures, specifically the transformer design, and harness billions of parameters to make strides in various natural language processing tasks (OpenAI, 2023; Touvron et al., 2023). Notably, educators have leveraged the capabilities of such LLMs for diverse purposes, ranging from enriching learning experiences through dynamic feedback to facilitating language acquisition (Cukurova et al., 2023).

However, the infusion of such AI technologies into post-secondary education is not without challenges. The surge of interest in AI's educational potential over the last decade has been accompanied by exaggerated claims and often inconclusive findings (Bates et al., 2020). Even though a considerable volume of studies on AI in education has emerged in recent years, signifying booming interest, the actual effects of applications like ChatGPT on higher education institutions and stakeholders remain unclear (Zawacki-Richter et al., 2019).

Moreover, this technological fervor is accompanied by grave concerns about intellectual property, data privacy, potential biases, and equitable access to resources (Li et al., 2022). The deployment of generative AI in pedagogical contexts raises pertinent questions about pedagogy, learning paradigms, authorship, and more (Williamson et al., 2023; Zembylas, 2023). For instance, as students turn to AI for academic aid, intricate issues of plagiarism, erosion of original thought, and challenges to established pedagogies arise (Rudolph et al., 2023). Such developments resonate with broader ethical considerations surrounding factuality, fairness, and transparency, emphasizing the need for sustainable AI systems in education (Kasneci et al., 2023). Consequently, there is a pressing need to explore not only the promises but also the potential impacts, limitations, and ethical ramifications of LLM-enabled educational applications (Strzelecki, 2023). Research must tackle the intricacies of design and implementation, ascertain whether such AI deployments are appropriate in educational settings, and articulate overarching principles and guidelines for ethical use (Hahn et al., 2021).

This investigation, therefore, situates itself within this rich tapestry of debates, possibilities, and challenges. By delving deeper into the matrix of technology adoption, artificial intelligence, and the nuances presented by applications like ChatGPT, we aim to contribute to a more informed, equitable, and constructive discourse that respects both the transformative potential of AI and the foundational tenets of education in the twenty-first century.

ChatGPT, a chatbot tool, uses artificial intelligence to facilitate human-like conversations (Vaswani et al., 2017). This innovative natural language processing tool is capable of providing assistance with various tasks, including email composition, essay writing, and coding. OpenAI developed ChatGPT, which was officially launched on November 30, 2022, and has since attracted a great deal of attention (OpenAI, 2023).

Despite its impressive capabilities, ChatGPT is currently in a research and feedback-gathering phase and is freely accessible to the public. However, a paid subscription version, ChatGPT Plus, was launched on February 1, 2023, offering faster response times and priority access to new features and improvements. Milmo (2023) reports that ChatGPT has become the fastest-growing application in history, reaching approximately 100 million active users within two months of its release, according to Forbes. In addition to answering basic queries, the model offers a variety of functions, including generating AI art prompts, writing code, and crafting essays. The language model is fine-tuned with both supervised and reinforcement learning techniques, with human AI trainers participating in conversations in which they play both the user and the AI assistant. Despite its many strengths, ChatGPT has limitations, such as sensitivity to the specific wording of questions and a lack of quality control, which can lead to erroneous responses (Altman, 2023).

ChatGPT presents challenges to the academic community wherever it is available (Teubner et al., 2023). While some academics embrace the development, others oppose its use for fear that it may facilitate cheating or academic misconduct. Proponents argue that adapting to the technology and designing policies for academic integrity will equip students with the skills and ethical mindset needed to operate in an AI environment. The chatbot generates coherent and informative text and can be accessed in many countries (Liebrenz et al., 2023). Academics are at loggerheads over the use of AI chatbots in educational settings: some promote a framework for appropriate use, whereas others, like Hsu (2023), advocate an outright ban. The escalating debate underscores a need to reshape higher education curricula to emphasize critical thinking, ethical perspectives, creativity, problem-solving, and future-proof skills, preparing students for an AI-influenced professional landscape.

Although ChatGPT has swiftly gained popularity as a cutting-edge natural language processing tool, research into how university students perceive and use it remains sparse. This gap matters because understanding how students engage with AI tools is crucial for deploying them effectively in educational contexts. A deeper understanding is therefore needed of the factors that shape students' attitudes toward ChatGPT, their perceptions of its usefulness, and the difficulties they encounter while using it. Closing this research gap can clarify how best to integrate ChatGPT into the education system in ways that support student learning and academic success.

This paper seeks to fill that research gap by examining the acceptance and use of ChatGPT among university students. We investigate the determinants that foster or inhibit its acceptance and use, adopting the "Unified Theory of Acceptance and Use of Technology 2" (UTAUT2) model by Venkatesh et al. (2012) as our theoretical framework. We extend this model with "Personal Innovativeness", a construct introduced by Agarwal and Prasad (1998) in the IT domain, treating it as a potential antecedent of behavioral intention.

We begin by outlining the origins of ChatGPT and summarizing the controversy surrounding its use. We then explain how we extended the UTAUT2 model to evaluate students' acceptance and use of ChatGPT, and present a measurement scale adapted specifically for ChatGPT in university settings. Next, we report the results of estimating the model with partial least squares structural equation modeling (PLS-SEM). We then discuss our findings, highlighting the uniqueness and contributions of our study, and conclude by addressing its limitations and recommending avenues for future research.

Literature Review

The literature indicates a burgeoning interest in the application of ChatGPT within higher education, spanning general and scientific educational domains. Studies highlight its potential for personalizing learning and enhancing engagement but caution against uncritical adoption due to biases and academic integrity concerns (Kasneci et al., 2023; Rudolph et al., 2023). Cotton et al. (2023) and Tlili et al. (2023) discuss maintaining academic integrity, while Williamson et al. (2023) encourage more research into AI's educational impact. Naumowa (2023) stresses critical thinking when using AI, whereas Hsu (2023), Ivanov and Soliman (2023), Lim et al. (2023), Crawford et al. (2023), Sullivan et al. (2023), and Cooper (2023) explore its ethical and practical implications, calling for ethical guidelines and theoretical frameworks.

The role of ChatGPT in academic authorship raises concerns about integrity and the recognition of contributions. Van Dis et al. (2023) recognize its benefits in democratizing knowledge but call for debates on authorship and accountability. Lund et al. (2023) discuss the potential impact of AI on academia and the need for policies on its ethical use, while Thorp (2023) cautions against naming AI as a co-author. Perkins (2023) advises higher education institutions (HEIs) to update their academic integrity policies to account for AI tools. Conversations with ChatGPT reveal its capabilities and limitations, particularly regarding plagiarism and misuse in education (Eysenbach, 2023). Interviews with ChatGPT explore its potential for improving content creation and academic research, with a focus on ethical implications (Lund & Wang, 2023; Pavlik, 2023). These dialogues underscore the importance of critically evaluating ChatGPT's impact on academia and the media.

Methodology

The acceptance and use of technology has become a significant topic of interest in recent years, as individuals increasingly rely on technology for daily tasks. One of the most prominent theories used to explain and predict technology acceptance is the “Unified Theory of Acceptance and Use of Technology” (UTAUT) (Venkatesh et al., 2003). UTAUT “synthesizes the concepts and user experiences that provide the foundation for theories on the user acceptance process of an information system” (Yu et al., 2021). It was constructed by integrating and synthesizing eight pre-existing models of information technology acceptance. The model includes four constructs, “Performance expectancy”, “Social influence”, “Effort expectancy”, and “Facilitating conditions”, which have been found to significantly influence the intention to use and the actual use of a technology. Additionally, individual differences such as age, gender, voluntariness of use, and experience are treated as moderators of these four constructs.

UTAUT was later extended into UTAUT2, which adds three new constructs: “Hedonic motivation”, “Price value”, and “Habit” (Venkatesh et al., 2012). UTAUT2 is a prominent theoretical model that aims to understand the factors influencing individuals' adoption and use of new technologies in organizational and personal contexts (Tamilmani et al., 2021). Developed through extensive empirical research, UTAUT2 provides a comprehensive framework for researchers and practitioners to identify key factors in technology acceptance and use. In higher education, UTAUT2 has been used to identify factors affecting students' or teachers' intentions to use different technology tools, such as e-learning systems (Raza et al., 2022), mobile applications (Ameri et al., 2020; Kang et al., 2015), immersive virtual and augmented reality (Bower et al., 2020; Faqih & Jaradat, 2021), and learning management software (Alotumi, 2022; Jakkaew & Hemrungrote, 2017; Kumar & Bervell, 2019). Recent studies also examine how these factors are affected by contextual factors, such as the COVID-19 pandemic (Osei et al., 2022; Zacharis & Nikolopoulou, 2022), and how they can inform the design and implementation of educational technology tools.

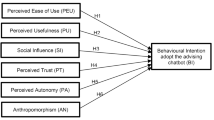

Our study argues that “Performance expectancy”, “Effort expectancy”, “Social influence”, “Facilitating conditions”, “Hedonic motivation”, “Price value”, and “Habit” can significantly influence students’ “Behavioral intention” to use ChatGPT in higher education. We propose extending the well-established UTAUT2 theory by also adding “Personal innovativeness” as a factor influencing “Behavioral intention” to use ChatGPT. Personal innovativeness (PI) has been acknowledged as an important factor affecting technology adoption and usage (Agarwal & Prasad, 1998). A number of scholars “have suggested that personality traits, such as personal innovativeness, play a significant role in technology adoption in the domain of Information Technology (IT)” (Farooq et al., 2017; Sitar-Taut & Mican, 2021). Personal innovativeness reflects a person’s tendency to independently experiment with and implement new IT developments, and is seen as a stable, context-specific characteristic that strongly influences the acceptance and adoption of IT (Dajani & Abu Hegleh, 2019; Twum et al., 2022).

The goal of our research is to examine how the seven UTAUT2 factors and PI influence students' behavioral intention to use ChatGPT, as well as ChatGPT's effectiveness in assisting them with their studies. The study defines each factor and explores how students' perceptions of ChatGPT influence their continued use.

Performance expectancy (PE) “influences individuals' behavioral intention to adopt new technology. It refers to the extent to which individuals believe that using a system will help them attain gains in job performance or enhance their performance in learning processes” (Venkatesh et al., 2003). In this study, PE pertains to the extent to which students believe that using ChatGPT can improve their academic results or efficiency. We hypothesize that:

- H1: “Performance expectancy will have a direct positive influence on the Behavioral intention to use ChatGPT in higher education by students.”

Effort expectancy (EE) is defined “as the degree of ease or effort associated with the use of technology” (Venkatesh et al., 2003). EE draws on constructs from earlier models, namely perceived ease of use (TAM), complexity (MPCU), and ease of use (IDT). Research has shown that EE is a critical predictor of technology acceptance and directly affects individuals' “Behavioral intention” to use technology. In this study, EE denotes the extent to which students feel that interacting with ChatGPT is easy and demands minimal effort. Hence, it is proposed that:

- H2: “Effort expectancy will have a direct positive influence on the Behavioral intention to use ChatGPT in higher education by students.”

Social influence (SI) is defined “as the extent to which important others, such as family and friends, believe that an individual should use a particular technology” (Venkatesh et al., 2003). The influence of social circles, including family members, teachers, co-workers, elders, friends, and peers, has been shown to positively impact users' intention to use a technology. In this research, SI indicates the extent to which students perceive that peers, instructors, or other key figures in their social circle endorse or motivate them to engage with ChatGPT. We hypothesize that:

- H3: “Social influence will have a direct positive influence on the Behavioral intention to use ChatGPT in higher education by students.”

Facilitating conditions (FC) refer to “the level of accessibility to resources and support needed to accomplish a task” (Venkatesh et al., 2003). In university settings, FC emphasize the importance of access to reliable technical infrastructure, knowledge, training, and support, which can affect students' willingness to use educational systems. In this context, FC denote the extent to which students feel they can access the AI tool even when demand is high due to its popularity, along with their access to technical assistance and training for ChatGPT. We therefore hypothesize that:

- H4: “Facilitating conditions will have a direct positive influence on the Behavioral intention to use ChatGPT in higher education by students.”

- H5: “Facilitating conditions will have a direct positive influence on the ChatGPT Use behavior in higher education by students.”

Hedonic motivation (HM) refers “to the pleasure or enjoyment derived from using a technology, and its effect on the intention of users has been found to be statistically significant” (Venkatesh et al., 2012). The findings of various studies suggest that users are more likely to continue using a technology if they experience pleasure or enjoyment while using it. In this context, HM denotes how much students perceive ChatGPT as fun or pleasurable to engage with, and their enjoyment in exploring new AI technological tools. Hence, it is proposed that:

- H6: “Hedonic motivation will have a direct positive influence on the Behavioral intention to use ChatGPT in higher education by students.”

Price value (PV) is “an individual's trade-off between the perceived benefits of using the system and its monetary cost” (Venkatesh et al., 2012). In this study, PV refers to how students weigh the benefits of ChatGPT against the cost they bear when purchasing access to online services used for learning, such as a paid ChatGPT Plus subscription. Hence, it is proposed that:

- H7: “Price value will have a direct positive influence on the Behavioral intention to use ChatGPT in higher education by students.”

Habit (HT) is defined “as the extent to which an individual tends to perform behaviors automatically because of prior learning and experiences with the technology” (Venkatesh et al., 2012). Venkatesh et al. (2012) and Limayem et al. (2007) have explained HT as a perceptual construct, and it has been found to be a significant predictor of “Behavioral intention” and technology use (Tamilmani et al., 2019). In the context of this study, HT pertains to how deeply students have ingrained the use of ChatGPT into their academic habits, including how often they use it, how long they engage with it, and how seamlessly it fits into their academic processes. We therefore hypothesize that:

- H8: “Habit will have a direct positive influence on the Behavioral intention to use ChatGPT in higher education by students.”

- H9: “Habit will have a direct positive influence on the ChatGPT Use behavior in higher education by students.”

The connection between Personal Innovativeness (PI) and the acceptance and use of technology has been thoroughly investigated in the literature (Slade et al., 2015). This characteristic can be interpreted “as a willingness to adopt the latest technological gadgets or a propensity for risk-taking, which may be associated with trying out new features and advancements in the IT domain” (Farooq et al., 2017). In this study, personal innovativeness refers to students' eagerness to adopt cutting-edge tools such as ChatGPT, as well as their belief in their capacity to learn and master new technological skills. Hence, it is proposed that:

- H10: “Personal innovativeness will have a direct positive influence on the Behavioral intention to use ChatGPT in higher education by students.”

Behavioral intention (BI) is a fundamental concept in the study of technology adoption and use behavior (Ajzen, 1991; Davis, 1986; Fishbein & Ajzen, 1975). It refers to “individuals' willingness and intention to use a particular technology for a specific task or purpose” (Venkatesh et al., 2003, 2012). In the context of this study, BI is investigated to understand students' willingness to use ChatGPT in their studies.

- H11: “Behavioral intention to use will have a direct positive influence on the ChatGPT Use behavior in higher education by students.”
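The hypotheses above define the structural part of the research model. As an illustrative sketch only (the `PATHS` dictionary and the helper functions are hypothetical and not part of the authors' SmartPLS setup), the paths H1 to H11 can be encoded in Python to check how many arrows point at each endogenous construct:

```python
from collections import Counter

# Hypothesized structural paths (source construct, target construct),
# transcribed from H1 to H11 in the text.
PATHS = {
    "H1": ("PE", "BI"),
    "H2": ("EE", "BI"),
    "H3": ("SI", "BI"),
    "H4": ("FC", "BI"),
    "H5": ("FC", "UB"),
    "H6": ("HM", "BI"),
    "H7": ("PV", "BI"),
    "H8": ("HT", "BI"),
    "H9": ("HT", "UB"),
    "H10": ("PI", "BI"),
    "H11": ("BI", "UB"),
}


def predictors_per_construct(paths):
    """Count how many arrows point at each endogenous construct."""
    return Counter(target for _, target in paths.values())


def exogenous_constructs(paths):
    """Constructs that only send arrows and never receive one."""
    sources = {source for source, _ in paths.values()}
    targets = {target for _, target in paths.values()}
    return sorted(sources - targets)


print(predictors_per_construct(PATHS))  # Counter({'BI': 8, 'UB': 3})
print(exogenous_constructs(PATHS))      # ['EE', 'FC', 'HM', 'HT', 'PE', 'PI', 'PV', 'SI']
```

Behavioral intention receives eight arrows (the seven UTAUT2 predictors plus PI), and Use behavior receives three (FC, HT, and BI).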

Use behavior (UB) captures users' actual use of a technology. In the model, actual use refers to the extent to which users employ a technology for a specific task or purpose. The original study by Venkatesh et al. (2012) did not specify how actual use was measured. In this study, use of ChatGPT was measured on a seven-point frequency scale ranging from “never” to “several times a day”.

Venkatesh et al. (2016) recommended using UTAUT2 as a baseline model for formulating hypotheses about the relationships between the proposed variables and user adoption of technology. Additionally, Dwivedi et al. (2019) noted that previous research in this field typically used only a subset of the UTAUT2 model and frequently omitted moderators. In line with this practice, the current study employed a modified version of UTAUT2 that does not include age, gender, and experience as moderators.

Our study used a research model (Fig. 1) that includes seven constructs from the UTAUT2 scale (Venkatesh et al., 2012), which is commonly employed to evaluate technology acceptance. To further enhance the model, we integrated the PI variable from Agarwal and Prasad (1998). Data were collected using a seven-point Likert scale ranging from "strongly disagree" to "strongly agree", except for use behavior, which was measured on a seven-option frequency scale ranging from “never” to “several times a day”. Descriptive statistics and the measurement scale are provided in Appendix Table 3.

Sample Characteristics

First, a pilot study was conducted with a representative sample of 36 first-cycle (bachelor's) students (18 female and 18 male) to test the scales developed for this study before administering them to the target audience. The reliability and validity criteria were met for each construct, and discriminant validity was confirmed (Hair et al., 2013). To determine the final sample size, we note that, according to Hair et al. (2022), PLS-SEM studies require a minimum sample size of 189 to detect R2 values of at least 0.1 at a 5% significance level. Moreover, Arnold (1990) suggested that a statistical power of at least 95% is commonly sought in social science research.

As of December 31, 2021, there were 1,218,200 students enrolled in Polish universities. To calculate the sample size for this population with a 95% confidence level and a 5% margin of error, we use the following formula (Yamane, 1967):

$$n = \frac{z^2 \, p \, (1 - p)}{e^2}$$

where n is the sample size, z is the z-score associated with the confidence level (1.96 for 95%), p is the estimated proportion of the population with the desired characteristic, and e is the margin of error (0.05). Since no prior estimate of p is available, we use p = 0.5, which maximizes the required sample size. Plugging in these values gives n = (1.96² × 0.5 × 0.5) / 0.05² ≈ 384.2, which rounds up to a minimum sample size of 385.
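The calculation above can be reproduced with a short Python sketch. The formula in the text is the infinite-population expression usually attributed to Cochran; the finite-population formula commonly associated with Yamane (1967), n = N / (1 + N e²), is included here only for comparison and is not the calculation the authors report. The function names are illustrative.

```python
import math


def sample_size_infinite(z=1.96, p=0.5, e=0.05):
    """n = z^2 * p * (1 - p) / e^2, rounded up; p = 0.5 maximizes p * (1 - p)."""
    return math.ceil(z**2 * p * (1 - p) / e**2)


def sample_size_yamane(N, e=0.05):
    """Finite-population formula n = N / (1 + N * e^2), rounded up (comparison only)."""
    return math.ceil(N / (1 + N * e**2))


N = 1_218_200  # university students in Poland as of 31 December 2021
print(sample_size_infinite())  # 385
print(sample_size_yamane(N))   # 400
```

For a population this large, both formulas give similar minimums, and both are below the 503 valid responses collected.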

The questionnaire was distributed as a web survey created with Google Forms. A total of 13,388 students from the University of Economics in Katowice, Poland, were invited by email to participate between 13 and 21 March 2023. To reduce response bias, students were assured of the confidentiality and anonymity of their responses and that participation was voluntary. After removing 3 responses with zero variance, 503 valid responses remained, which exceeds the minimum sample size calculated above.

The sample consists of 268 males (53.3%), 212 females (42.1%), and 23 respondents (4.6%) who preferred not to disclose their gender. Regarding study level, 25 students (5%) are in the first year, 162 (32.2%) in the second year, and 192 (38.2%) in the third year of the bachelor's degree program. Additionally, 52 students (10.3%) are in the first year and 64 (12.7%) in the second year of the master's degree program, while 8 (1.6%) are PhD students.
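As a quick consistency check, the reported percentages can be recomputed from the counts with a few lines of Python. The dictionary keys are illustrative labels; the counts are those given in the text.

```python
# Sample composition as reported in the text (counts of valid responses).
GENDER = {"male": 268, "female": 212, "undisclosed": 23}
STUDY_LEVEL = {
    "bachelor, year 1": 25,
    "bachelor, year 2": 162,
    "bachelor, year 3": 192,
    "master, year 1": 52,
    "master, year 2": 64,
    "PhD": 8,
}
N = 503


def shares(counts, total):
    """Percentage of the total for each category, rounded to one decimal."""
    return {k: round(100 * v / total, 1) for k, v in counts.items()}


# Both breakdowns must account for every valid response.
assert sum(GENDER.values()) == N
assert sum(STUDY_LEVEL.values()) == N
print(shares(GENDER, N))  # {'male': 53.3, 'female': 42.1, 'undisclosed': 4.6}
print(shares(STUDY_LEVEL, N))
```

Both breakdowns sum to 503, and the computed shares match the reported percentages (the first-year bachelor's share rounds to 5.0%).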

Results

We implemented PLS-SEM with the path weighting scheme in SmartPLS 4 (version 4.0.9.2), using the standard initial weights and a limit of 3000 iterations to estimate our model (Ringle et al., 2022). To determine the statistical significance of the PLS-SEM results, we applied nonparametric bootstrapping with 5000 subsamples. In assessing the reflectively specified constructs, we examined the indicator loadings: a loading greater than 0.7 indicates that the construct explains over half of the indicator’s variance, signifying an acceptable degree of indicator reliability. The loadings, presented in Appendix Table 3, exceed this lower bound except for FC4, which was removed from the model and excluded from further analysis.
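The bootstrapping step can be illustrated with a minimal Python sketch using NumPy. It assumes construct scores are already available and simply re-estimates standardized path coefficients by ordinary least squares on 5000 resamples of respondents. SmartPLS instead re-runs the full PLS algorithm, including the measurement model, on every subsample, so this is a simplification. The data and coefficient values below are hypothetical, generated only for illustration.

```python
import numpy as np


def standardized_paths(X, y):
    """Standardized path coefficients: OLS of z-scored y on z-scored X."""
    Xz = (X - X.mean(axis=0)) / X.std(axis=0)
    yz = (y - y.mean()) / y.std()
    coef, *_ = np.linalg.lstsq(Xz, yz, rcond=None)
    return coef


def bootstrap_paths(X, y, n_boot=5000, seed=0):
    """Nonparametric bootstrap: resample respondents with replacement."""
    rng = np.random.default_rng(seed)
    n = len(y)
    estimate = standardized_paths(X, y)
    draws = np.empty((n_boot, X.shape[1]))
    for b in range(n_boot):
        idx = rng.integers(0, n, size=n)  # indices of one bootstrap subsample
        draws[b] = standardized_paths(X[idx], y[idx])
    se = draws.std(axis=0, ddof=1)  # bootstrap standard error
    t_values = estimate / se
    ci_low, ci_high = np.percentile(draws, [2.5, 97.5], axis=0)
    return estimate, se, t_values, ci_low, ci_high


# Hypothetical scores: three predictors of one endogenous construct.
rng = np.random.default_rng(42)
n = 503
X = rng.normal(size=(n, 3))
y = X @ np.array([0.40, 0.25, 0.20]) + rng.normal(scale=0.8, size=n)

estimate, se, t_values, ci_low, ci_high = bootstrap_paths(X, y)
for j in range(X.shape[1]):
    print(f"path {j}: beta={estimate[j]:.3f}, t={t_values[j]:.2f}, "
          f"95% CI=[{ci_low[j]:.3f}, {ci_high[j]:.3f}]")
```

A path is usually judged significant at the 5% level when |t| exceeds about 1.96, or when the 95% percentile confidence interval excludes zero.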

Composite reliability (ρc) is a criterion for evaluating reliability, where values from 0.70 to 0.95 indicate acceptable to good reliability (Hair et al., 2022). Cronbach’s alpha measures internal consistency reliability and uses thresholds similar to those of ρc. An additional reliability coefficient, ρA (Dijkstra & Henseler, 2015), provides an exact and consistent alternative. Convergent validity of the measurement models is assessed by calculating the average variance extracted (AVE) across all the indicators of each reflective construct; an AVE of 0.50 or higher is considered acceptable (Sarstedt et al., 2022). All measurement instruments met these quality criteria (Table 1).
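The reliability and convergent validity criteria can be computed directly from standardized loadings and raw item scores. The following Python sketch implements the standard formulas for ρc, AVE, and Cronbach's alpha. The loadings in the example are hypothetical and are not taken from Appendix Table 3. ρA is omitted because it also requires the estimated indicator weights.

```python
import numpy as np


def composite_reliability(loadings):
    """rho_c = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    lam = np.asarray(loadings, dtype=float)
    squared_sum = lam.sum() ** 2
    error_var = np.sum(1.0 - lam**2)  # error variance of each standardized indicator
    return squared_sum / (squared_sum + error_var)


def average_variance_extracted(loadings):
    """AVE = mean of the squared standardized loadings."""
    lam = np.asarray(loadings, dtype=float)
    return float(np.mean(lam**2))


def cronbach_alpha(items):
    """Cronbach's alpha for a respondents x indicators matrix of raw scores."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    sum_item_var = items.var(axis=0, ddof=1).sum()
    total_var = items.sum(axis=1).var(ddof=1)
    return k / (k - 1) * (1.0 - sum_item_var / total_var)


loadings = [0.85, 0.80, 0.75]  # hypothetical loadings for one construct
rho_c = composite_reliability(loadings)
ave = average_variance_extracted(loadings)
print(f"rho_c = {rho_c:.3f}, AVE = {ave:.3f}")  # rho_c = 0.843, AVE = 0.642
print("rho_c acceptable:", 0.70 <= rho_c <= 0.95, "| AVE acceptable:", ave >= 0.50)
```

Squaring a loading gives the share of the indicator's variance explained by its construct, which is why AVE is the mean squared loading and why a loading above 0.7 corresponds to roughly half the variance explained.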

The heterotrait-monotrait ratio of correlations (HTMT) proposed by Henseler et al. (2015) is the preferred method for assessing discriminant validity in PLS-SEM. High HTMT values indicate a problem with discriminant validity; a threshold of 0.90 is recommended when the constructs are conceptually similar, and a stricter threshold of 0.85 when they are more distinct (Henseler et al., 2015). All values are below the 0.85 threshold (Appendix Table 4).
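The HTMT statistic compares the average correlation between indicators of different constructs with the average correlation among indicators of the same construct. A minimal Python sketch following the definition by Henseler et al. (2015) is shown below; it assumes positively coded indicators and takes an indicator correlation matrix as input. The example matrix is hypothetical: three indicators per construct, all with loading 0.8, and a latent correlation of 0.5.

```python
import numpy as np


def htmt(corr, block_a, block_b):
    """Heterotrait-monotrait ratio for two reflective constructs.

    corr    : indicator correlation matrix
    block_a : column indices of construct A's indicators (at least two)
    block_b : column indices of construct B's indicators (at least two)
    """
    corr = np.asarray(corr, dtype=float)
    # Mean heterotrait-heteromethod correlation (between the two blocks).
    hetero = corr[np.ix_(block_a, block_b)].mean()

    def monotrait(block):
        # Mean correlation among one construct's indicators, diagonal excluded.
        sub = corr[np.ix_(block, block)]
        return sub[np.triu_indices(len(block), k=1)].mean()

    return hetero / np.sqrt(monotrait(block_a) * monotrait(block_b))


lam, phi = 0.8, 0.5
R = np.full((6, 6), lam * lam * phi)  # between-construct indicator correlations
R[:3, :3] = lam * lam                 # within construct A
R[3:, 3:] = lam * lam                 # within construct B
np.fill_diagonal(R, 1.0)

value = htmt(R, [0, 1, 2], [3, 4, 5])
print(round(float(value), 3), "below 0.85:", value < 0.85)  # 0.5 below 0.85: True
```

With equal loadings, HTMT recovers the latent correlation (0.5 here). With raw survey data, the input matrix would come from `np.corrcoef(data, rowvar=False)`.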

The next stage involves assessing the coefficient of determination (R2), which measures the proportion of variance explained in each endogenous construct and hence the model's explanatory power. R2 ranges from 0 to 1, with higher values indicating a greater degree of explained variance. According to Hair et al. (2011), R2 values of 0.25, 0.50, and 0.75 can generally be interpreted as weak, moderate, and substantial, respectively. To evaluate the magnitude of an exogenous construct's impact, f2 values of 0.02, 0.15, and 0.35 are used as standard reference points for small, medium, and large effects; an effect size below 0.02 indicates a negligible effect (Sarstedt et al., 2022).
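The effect size f2 compares the R2 of an endogenous construct with and without a given predictor: f2 = (R2 included - R2 excluded) / (1 - R2 included). The Python sketch below applies this formula together with the cut-offs cited above; the R2 values in the f2 example are hypothetical, and the function names are illustrative.

```python
def f_squared(r2_included, r2_excluded):
    """Cohen's f2: change in R2 when one predictor is omitted from the model."""
    return (r2_included - r2_excluded) / (1.0 - r2_included)


def label_f2(f2):
    """Cut-offs cited in the text (Sarstedt et al., 2022)."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"


def label_r2(r2):
    """Rule-of-thumb cut-offs cited in the text (Hair et al., 2011)."""
    if r2 >= 0.75:
        return "substantial"
    if r2 >= 0.50:
        return "moderate"
    if r2 >= 0.25:
        return "weak"
    return "below weak"


# Hypothetical: R2 drops from 0.60 to 0.55 when one predictor is removed.
f2 = f_squared(0.60, 0.55)
print(round(f2, 3), label_f2(f2))  # 0.125 small
# R2 values reported for Behavioral intention and Use behavior.
print(label_r2(0.728), label_r2(0.547))  # moderate moderate
```

Because f2 divides by the unexplained variance (1 - R2 included), the same drop in R2 yields a larger effect size in a model that already explains most of the variance.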

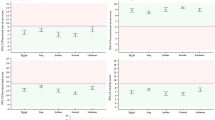

PLS-SEM findings are illustrated in Fig. 2, with standardized path coefficients shown on the arrows and R2 values shown inside the constructs. The primary observation is that HT has the strongest effect (0.339) on BI, followed by PE (0.260) and HM (0.187); the model explains 72.8% of the variance in "Behavioral intention" (R2 = 0.728). SI (0.093), PI (0.086), PV (0.083), and EE (0.079) also have positive effects on BI, but their f2 effect sizes fall below the 0.02 threshold. In turn, BI has the strongest effect (0.424) on UB, followed by HT (0.255) and FC (0.188). These three variables account for 54.7% of the variance in "Use behavior". Table 2 presents the results of the significance tests for the structural model's path coefficients and the verification of the hypotheses.

Discussion

Our study enhances knowledge of student perceptions of ChatGPT's application in tertiary education. Although the existing literature on this subject is scarce, particularly regarding higher education, our results are important for broadening discussions about the deployment of AI-driven chat tools in academic settings. We used the UTAUT2 scale to evaluate the acceptance and use of ChatGPT, with all eight exogenous variables meeting the established standards for reliability and validity. Our study confirms that three UTAUT2 constructs, “Performance expectancy”, “Habit”, and “Hedonic motivation”, are positively associated with “Behavioral intention”, consistent with Zwain's (2019) study on users' acceptance of learning management systems.

Our research affirms the strong associations of “Performance expectancy” and “Habit” with “Behavioral intention”, which is consistent with previous studies by Venkatesh et al. (2012) and Lewis et al. (2013) on how emerging technologies are accepted in higher education. In the case of ChatGPT, an AI-powered conversation platform, students demonstrate a high level of comfort in adopting the new technology, and the frequency of their use contributes to the development of routine actions. “Habit” has been found to have a significant positive impact on “Behavioral intention” in most UTAUT2-based studies in higher education. For instance, it has been shown to positively influence the acceptance and use of lecture capture systems (Farooq et al., 2017), mobile learning during social distancing (Sitar-Taut & Mican, 2021), and the Google Classroom platform for mobile learning (Alotumi, 2022; Jakkaew & Hemrungrote, 2017; Kumar & Bervell, 2019). Nevertheless, our results differ from those of Twum et al. (2022), who found no direct effect of “Habit” on “Behavioral intention” to use e-learning, and of Raman and Don (2013) and Ain et al. (2016), who found no direct effect of “Habit” on “Behavioral intention” to use learning management systems. Consistent with the original UTAUT2 model, our study confirms the significant relationship between “Habit” and “Use behavior”.

In our study, “Performance expectancy” emerged as the second-strongest predictor of “Behavioral intention”. This finding is consistent with other studies that have demonstrated a significant positive relationship between “Performance expectancy” and “Behavioral intention” in areas such as mobile learning (Arain et al., 2019), e-learning platforms (Azizi et al., 2020; Nikolopoulou et al., 2020), and learning management software (Raman & Don, 2013). In addition, “Performance expectancy”, an original UTAUT construct, has been shown to be a significant predictor of “Behavioral intention” in studies such as Raffaghelli et al.'s (2022) exploration of students' acceptance of an early warning system in higher education and Mehta et al.'s (2019) investigation of the influence of values on e-learning adoption. Our findings suggest that students with high “Performance expectancy” are more likely to adopt useful technology such as ChatGPT.

Our study found that “Hedonic motivation” has a positive effect on “Behavioral intention” to use ChatGPT. This finding is in line with earlier studies on the use of emerging technologies in higher education, including Google Classroom (Kumar & Bervell, 2019), animation (Dajani & Abu Hegleh, 2019), mobile technologies (Hu et al., 2020), blended learning (Azizi et al., 2020), and augmented reality technology (Faqih & Jaradat, 2021). However, our findings contradict those of studies that examined the use of learning management systems (Ain et al., 2016) and the Blackboard learning system (Raza et al., 2022).

“Effort expectancy”, “Social influence”, and “Price value” were found to have positive and significant effects on “Behavioral intention”, although their f² effect sizes are below 0.02, the conventional threshold for a small effect. With respect to “Effort expectancy”, students' responses had the highest mean values among all the variables, suggesting that students find ChatGPT easy to use and encounter few problems with it. This suggests that using this technology in higher education requires very little effort, so ease of use contributes little to “Behavioral intention”. Comparable outcomes have been reported for mobile learning during social distancing (Sitar-Taut & Mican, 2021), Google Classroom (Alotumi, 2022; Kumar & Bervell, 2019), mobile learning (Ameri et al., 2020; Arain et al., 2019; Kang et al., 2015; Nikolopoulou et al., 2020), e-learning platforms (El-Masri & Tarhini, 2017; Twum et al., 2022), and learning management systems (Ain et al., 2016; Zwain, 2019).
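The f² statistic quantifies how much a single predictor adds to the explained variance of an endogenous construct such as “Behavioral intention”. A minimal Python sketch of the usual definition, f² = (R²_included − R²_excluded) / (1 − R²_included), labeled with Cohen's conventional cutoffs of 0.02, 0.15, and 0.35. The reduced-model R² in the example (0.724) is a hypothetical value chosen for illustration, not a result from this study; PLS-SEM software such as SmartPLS reports f² directly.

```python
def cohens_f2(r2_included: float, r2_excluded: float) -> float:
    """Cohen's f² for one predictor.

    r2_included: R² of the endogenous construct with the predictor in the model.
    r2_excluded: R² after the predictor is removed and the model re-estimated.
    """
    if r2_included >= 1.0:
        raise ValueError("R² of the full model must be below 1")
    return (r2_included - r2_excluded) / (1.0 - r2_included)


def classify_f2(f2: float) -> str:
    """Label an f² value using Cohen's conventional cutoffs."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"


# 0.728 is the reported R² of Behavioral intention; 0.724 is HYPOTHETICAL.
f2 = cohens_f2(0.728, 0.724)
print(f"f² = {f2:.4f} ({classify_f2(f2)})")
```

A predictor can be statistically significant yet have an f² below 0.02: with a sample of several hundred respondents, small path coefficients can be detected even when removing the predictor barely changes R².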

“Social influence” has a very low impact on “Behavioral intention” to use ChatGPT. Early adopters of AI-enabled chat, who typically have a higher level of education, may be less swayed by external factors. Our study found little evidence of social pressure to adopt ChatGPT, possibly because it is a new technology that has not yet been widely adopted. Nonetheless, as academic institutions develop regulations on AI tools such as ChatGPT, “Social influence” may gain significance. Previous studies have found “Social influence” to have a significant effect on “Behavioral intention” in areas such as mobile learning (Kang et al., 2015) and e-learning systems (El-Masri & Tarhini, 2017), while others, such as Alotumi (2022) and Kumar & Bervell (2019) on Google Classroom acceptance, found no such effect.

Likewise, “Price value” has a minimal effect on “Behavioral intention” to use ChatGPT. This may be because ChatGPT is currently free of charge during its research preview. Although ChatGPT Plus, which offers the GPT-4 language model, is available for $20 per month, the difference from the free GPT-3.5-based version is unlikely to be noticeable for everyday student tasks. Students may also interpret “Price value” as learning value, since they need to invest time and effort in learning a new technology. If ChatGPT is no longer offered free of charge in the future, “Price value” could have a more significant impact. Previous studies have found “Price value” to have a significant effect on “Behavioral intention” in areas such as lecture capture systems (Farooq et al., 2017) and blended learning (Azizi et al., 2020), while others did not find this effect for mobile learning (Nikolopoulou et al., 2020) and e-learning (Osei et al., 2022).

The last UTAUT2 construct, “Facilitating conditions”, was found to have no significant effect on “Behavioral intention” but a significant effect on “Use behavior”, a relationship included in the original UTAUT2 model. Previous studies on the adoption of animation (Dajani & Abu Hegleh, 2019) and Google Classroom (Alotumi, 2022; Kumar & Bervell, 2019) in higher education have usually found that “Facilitating conditions” have no effect. By contrast, “Facilitating conditions” have been found to significantly affect “Behavioral intention” in areas such as mobile learning (Yu et al., 2021) and augmented reality technology in higher education (Faqih & Jaradat, 2021).

“Personal innovativeness” is the fourth variable with only a minimal positive effect on “Behavioral intention”, as its f² is below 0.02. This may be because students have not yet fully experimented with ChatGPT and may not know enough about how to use it. In previous studies, “Personal innovativeness” was found to have a significant effect on “Behavioral intention” in areas such as animation (Dajani & Abu Hegleh, 2019), lecture capture systems (Farooq et al., 2017), mobile learning during social distancing in higher education (Sitar-Taut & Mican, 2021), and e-learning (Twum et al., 2022).

The model explains 72.8% of the variance in “Behavioral intention”, which is considered substantial explanatory power. Moreover, “Behavioral intention” has a direct and significant effect on “Use behavior”, and the model explains 54.7% of the variance in “Use behavior”, which is considered moderate explanatory power. Both explained variances are higher than those reported by Maican et al. (2019) for communication and collaboration applications in academic environments and by Hoi (2020) for students' acceptance and use of mobile devices for language learning.
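Whether an R² counts as “substantial” or “moderate” depends on the cutoffs applied, and the text does not state which ones it uses. The Python sketch below assumes the rule-of-thumb values 0.67, 0.33, and 0.19 that are common in PLS-SEM reporting; under them, the two reported R² values receive the labels used above. Under the stricter 0.75/0.50/0.25 scheme that also appears in the PLS-SEM literature, 0.728 would fall just short of “substantial”. The function name `describe_r2` and the "very weak" label are illustrative.

```python
# ASSUMED rule-of-thumb cutoffs (highest first); the study does not name its thresholds.
CUTOFFS = ((0.67, "substantial"), (0.33, "moderate"), (0.19, "weak"))


def describe_r2(r2: float) -> str:
    """Return a qualitative label for the R² of an endogenous construct."""
    for threshold, label in CUTOFFS:
        if r2 >= threshold:
            return label
    return "very weak"  # illustrative label for values below the lowest cutoff


# R² values reported in this study.
reported = {"Behavioral intention": 0.728, "Use behavior": 0.547}
for construct, r2 in reported.items():
    print(f"{construct}: {r2:.1%} of variance explained ({describe_r2(r2)})")
```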

Recent research reflects a growing discussion of how AI-driven tools can be integrated and applied in education. Several initiatives have been proposed to strengthen educators' proficiency with various AI-enhanced educational platforms. For example, educators can complete specialized training modules to familiarize themselves with platforms that assess student essays (Nazaretsky et al., 2022a). Similarly, specific platforms tailored for educators can be introduced in educational settings to enhance the learning experience (Cukurova et al., 2023). There are also instruments designed to gauge the degree to which educators trust and embrace AI-driven tools, addressing issues such as trustworthiness, the lack of human-like attributes, and the clarity of AI decision-making (Nazaretsky et al., 2022b).

Our investigation focuses on a different facet of education: students' acceptance and use of generative AI tools. Its value lies in shedding light on the determinants that shape the adoption of such tools. It should be noted that ChatGPT has no adaptive capabilities and is not tailored for academic use, which indicates a need for more research on its potential role in learning environments.

Practical Implications

ChatGPT might support personalized learning and the integration of AI into education. For students, ChatGPT can serve as an efficient, tailored tool for acquiring information, understanding concepts, learning languages, and completing tasks. It can act as a digital assistant that provides immediate, personalized feedback and guidance, serving as a grammar checker, content recommender, and query solver across a spectrum of disciplines and languages. Its ability to present complex concepts in simpler terms can aid comprehension and make abstract educational materials more accessible. Additionally, it can act as a research facilitator, providing swift access to relevant information and a strategic roadmap for research studies.

ChatGPT's transformative potential for learning also deserves emphasis: it can create tailored content, interactive learning experiences, and customized assessments, thereby offering a more individualized learning pathway. Using AI technologies such as ChatGPT to supplement traditional educational strategies could create a dynamic learning ecosystem that caters to each learner's unique needs and learning styles, providing individualized attention that human instructors may be unable to offer because of time and resource constraints. ChatGPT also has potential as a creative tool to stimulate critical thinking, enhance metacognition, and foster innovative problem-solving skills among students. By helping students organize and summarize information, develop essays, and create tests based on study material, ChatGPT could be instrumental in promoting deeper learning.

However, caution is warranted regarding ethical implications, data security, and potential overreliance on AI technologies. AI should be used as a tool, not as a replacement for human teachers; human judgment and contextual understanding must remain integral to the educational process. When done responsibly and ethically, the integration of AI technologies such as ChatGPT into education can create enriched, personalized, and adaptive learning experiences. Achieving this requires a concerted effort to ensure appropriate use, including iterative feedback loops between ChatGPT, human instructors, and students, along with careful attention to potential challenges and pitfalls.

Limitations

The questionnaire was circulated during a turbulent period for AI, when each month brought substantial changes to the tool. Respondents who began using ChatGPT early in its rollout can be viewed as pioneers. Additionally, factors such as the time of the academic year (for example, assignment deadlines) or the specific subject of study might affect how the tool is used. The study is also limited by its sample, which consisted only of students from one state university in Poland, although the sample was diverse in terms of study experience. We did not consider study program as a moderating variable; students in different programs, such as IT, business, management, and finance, may use AI chat to different degrees.

Conclusions

The study aimed to test students' acceptance of ChatGPT using the UTAUT2 framework, which is widely used in technology acceptance research. It confirmed the significant impact of “Habit”, “Performance expectancy”, and “Hedonic motivation” on students' “Behavioral intention” to use ChatGPT. Because the use of ChatGPT in higher education has not yet been widely explored, there are many directions for future research. For example, future studies could test and further develop the scale used in this study.

Data Availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

Adiwardana, D., Luong, M.-T., So, D. R., Hall, J., Fiedel, N., Thoppilan, R., Yang, Z., Kulshreshtha, A., Nemade, G., Lu, Y., & Le, Q. V. (2020). Towards a human-like open-domain chatbot. http://arxiv.org/abs/2001.09977

Agarwal, R., & Prasad, J. (1998). A conceptual and operational definition of personal innovativeness in the domain of information technology. Information Systems Research, 9(2), 204–215. https://doi.org/10.1287/isre.9.2.204

Ain, N., Kaur, K., & Waheed, M. (2016). The influence of learning value on learning management system use. Information Development, 32(5), 1306–1321. https://doi.org/10.1177/0266666915597546

Ajzen, I. (1991). The theory of planned behavior. Organizational Behavior and Human Decision Processes, 50(2), 179–211. https://doi.org/10.1016/0749-5978(91)90020-T

Alotumi, M. (2022). Factors influencing graduate students’ behavioral intention to use Google Classroom: Case study-mixed methods research. Education and Information Technologies, 27(7), 10035–10063. https://doi.org/10.1007/s10639-022-11051-2

Altman, S. (2023). ChatGPT is incredibly limited, but good enough at some things to create a misleading impression of greatness. it’s a mistake. Twitter. https://twitter.com/sama/status/1601731295792414720. Accessed 15 May 2023.

Ameri, A., Khajouei, R., Ameri, A., & Jahani, Y. (2020). Acceptance of a mobile-based educational application (LabSafety) by pharmacy students: An application of the UTAUT2 model. Education and Information Technologies, 25(1), 419–435. https://doi.org/10.1007/s10639-019-09965-5

Arain, A. A., Hussain, Z., Rizvi, W. H., & Vighio, M. S. (2019). Extending UTAUT2 toward acceptance of mobile learning in the context of higher education. Universal Access in the Information Society, 18(3), 659–673. https://doi.org/10.1007/s10209-019-00685-8

Arnold, S. F. (1990). Mathematical statistics. Prentice Hall.

Azizi, S. M., Roozbahani, N., & Khatony, A. (2020). Factors affecting the acceptance of blended learning in medical education: Application of UTAUT2 model. BMC Medical Education, 20(1), 367. https://doi.org/10.1186/s12909-020-02302-2

Bates, T., Cobo, C., Mariño, O., & Wheeler, S. (2020). Can artificial intelligence transform higher education? International Journal of Educational Technology in Higher Education, 17(1), 42. https://doi.org/10.1186/s41239-020-00218-x

Bower, M., DeWitt, D., & Lai, J. W. M. (2020). Reasons associated with preservice teachers’ intention to use immersive virtual reality in education. British Journal of Educational Technology, 51(6), 2215–2233. https://doi.org/10.1111/bjet.13009

Cooper, G. (2023). Examining science education in ChatGPT: An exploratory study of generative artificial intelligence. Journal of Science Education and Technology, 32(3), 444–452. https://doi.org/10.1007/s10956-023-10039-y

Cotton, D. R. E., Cotton, P. A., & Shipway, J. R. (2023). Chatting and cheating: Ensuring academic integrity in the era of ChatGPT. Innovations in Education and Teaching International, 1–12. https://doi.org/10.1080/14703297.2023.2190148

Crawford, J., Cowling, M., & Allen, K.-A. (2023). Leadership is needed for ethical ChatGPT: Character, assessment, and learning using artificial intelligence (AI). Journal of University Teaching and Learning Practice, 20(3). https://doi.org/10.53761/1.20.3.02

Cukurova, M., Miao, X., & Brooker, R. (2023). Adoption of artificial intelligence in schools: unveiling factors influencing teachers’ engagement. In N. Wang, G. Rebolledo-Mendez, N. Matsuda, O. C. Santos, & V. Dimitrova (Eds.), Artificial Intelligence in Education (pp. 151–163). https://doi.org/10.1007/978-3-031-36272-9_13

Dajani, D., & Abu Hegleh, A. S. (2019). Behavior intention of animation usage among university students. Heliyon, 5(10), e02536. https://doi.org/10.1016/j.heliyon.2019.e02536

Davis, F. D. (1986). A technology acceptance model for empirically testing new end-user information systems: Theory and results [Massachusetts Institute of Technology]. https://dspace.mit.edu/handle/1721.1/15192

Dijkstra, T. K., & Henseler, J. (2015). Consistent and asymptotically normal PLS estimators for linear structural equations. Computational Statistics & Data Analysis, 81, 10–23. https://doi.org/10.1016/j.csda.2014.07.008

Dwivedi, Y. K., Rana, N. P., Jeyaraj, A., Clement, M., & Williams, M. D. (2019). Re-examining the unified theory of acceptance and use of technology (UTAUT): Towards a revised theoretical model. Information Systems Frontiers, 21(3), 719–734. https://doi.org/10.1007/s10796-017-9774-y

El-Masri, M., & Tarhini, A. (2017). Factors affecting the adoption of e-learning systems in Qatar and USA: Extending the unified theory of acceptance and use of technology 2 (UTAUT2). Educational Technology Research and Development, 65(3), 743–763. https://doi.org/10.1007/s11423-016-9508-8

Eysenbach, G. (2023). The role of ChatGPT, generative language models, and artificial intelligence in medical education: A conversation with ChatGPT and a call for papers. JMIR Medical Education, 9, e46885. https://doi.org/10.2196/46885

Faqih, K. M. S., & Jaradat, M.-I.R.M. (2021). Integrating TTF and UTAUT2 theories to investigate the adoption of augmented reality technology in education: Perspective from a developing country. Technology in Society, 67, 101787. https://doi.org/10.1016/j.techsoc.2021.101787

Farooq, M. S., Salam, M., Jaafar, N., Fayolle, A., Ayupp, K., Radovic-Markovic, M., & Sajid, A. (2017). Acceptance and use of lecture capture system (LCS) in executive business studies. Interactive Technology and Smart Education, 14(4), 329–348. https://doi.org/10.1108/ITSE-06-2016-0015

Fishbein, M., & Ajzen, I. (1975). Belief, attitude, intention, and behavior: An introduction to theory and research. Addison Wesley.

Hahn, M. G., Navarro, S. M. B., De La Fuente Valentin, L., & Burgos, D. (2021). A systematic review of the effects of automatic scoring and automatic feedback in educational settings. IEEE Access, 9, 108190–108198. https://doi.org/10.1109/ACCESS.2021.3100890

Hair, J. F., Ringle, C. M., & Sarstedt, M. (2011). PLS-SEM: Indeed a silver bullet. Journal of Marketing Theory and Practice, 19(2), 139–152. https://doi.org/10.2753/MTP1069-6679190202

Hair, J. F., Ringle, C. M., & Sarstedt, M. (2013). Partial least squares structural equation modeling: Rigorous applications, better results and higher acceptance. Long Range Planning, 46(1–2), 1–12. https://doi.org/10.1016/j.lrp.2013.01.001

Hair, J. F., Hult, G. T. M., Ringle, C., & Sarstedt, M. (2022). A primer on partial least squares structural equation modeling (PLS-SEM) (3rd ed.). Sage.

Henseler, J., Ringle, C. M., & Sarstedt, M. (2015). A new criterion for assessing discriminant validity in variance-based structural equation modeling. Journal of the Academy of Marketing Science, 43(1), 115–135. https://doi.org/10.1007/s11747-014-0403-8

Hoi, V. N. (2020). Understanding higher education learners’ acceptance and use of mobile devices for language learning: A Rasch-based path modeling approach. Computers & Education, 146, 103761. https://doi.org/10.1016/j.compedu.2019.103761

Hsu, J. (2023). Should schools ban AI chatbots? New Scientist, 257(3422), 15. https://doi.org/10.1016/S0262-4079(23)00099-4

Hu, S., Laxman, K., & Lee, K. (2020). Exploring factors affecting academics’ adoption of emerging mobile technologies-an extended UTAUT perspective. Education and Information Technologies, 25(5), 4615–4635. https://doi.org/10.1007/s10639-020-10171-x

Ivanov, S., & Soliman, M. (2023). Game of algorithms: ChatGPT implications for the future of tourism education and research. Journal of Tourism Futures, 9(2), 214–221. https://doi.org/10.1108/JTF-02-2023-0038

Jakkaew, P., & Hemrungrote, S. (2017). The use of UTAUT2 model for understanding student perceptions using google classroom: A case study of introduction to information technology course. 2017 International Conference on Digital Arts, Media and Technology (ICDAMT), 205–209. https://doi.org/10.1109/ICDAMT.2017.7904962

Kang, M., Liew, B. Y. T., Lim, H., Jang, J., & Lee, S. (2015). Investigating the determinants of mobile learning acceptance in Korea using UTAUT2. In G. Chen, V. Kumar, Kinshuk, R. Huang, & S. C. Kong (Eds.), Lecture Notes in Educational Technology (pp. 209–216). https://doi.org/10.1007/978-3-662-44188-6_29

Kasneci, E., Sessler, K., Küchemann, S., Bannert, M., Dementieva, D., Fischer, F., Gasser, U., Groh, G., Günnemann, S., Hüllermeier, E., Krusche, S., Kutyniok, G., Michaeli, T., Nerdel, C., Pfeffer, J., Poquet, O., Sailer, M., Schmidt, A., Seidel, T., … Kasneci, G. (2023). ChatGPT for good? On opportunities and challenges of large language models for education. Learning and Individual Differences, 103, 102274. https://doi.org/10.1016/j.lindif.2023.102274

Kumar, J. A., & Bervell, B. (2019). Google Classroom for mobile learning in higher education: Modelling the initial perceptions of students. Education and Information Technologies, 24(2), 1793–1817. https://doi.org/10.1007/s10639-018-09858-z

Lewis, C. C., Fretwell, C. E., Ryan, J., & Parham, J. B. (2013). Faculty use of established and emerging technologies in higher education: A unified theory of acceptance and use of technology perspective. International Journal of Higher Education, 2(2). https://doi.org/10.5430/ijhe.v2n2p22

Li, C., Xing, W., & Leite, W. (2022). Building socially responsible conversational agents using big data to support online learning: A case with Algebra Nation. British Journal of Educational Technology, 53(4), 776–803. https://doi.org/10.1111/bjet.13227

Liebrenz, M., Schleifer, R., Buadze, A., Bhugra, D., & Smith, A. (2023). Generating scholarly content with ChatGPT: Ethical challenges for medical publishing. The Lancet Digital Health, 5(3), e105–e106. https://doi.org/10.1016/S2589-7500(23)00019-5

Lim, W. M., Gunasekara, A., Pallant, J. L., Pallant, J. I., & Pechenkina, E. (2023). Generative AI and the future of education: Ragnarök or reformation? A paradoxical perspective from management educators. International Journal of Management Education, 21(2), 100790. https://doi.org/10.1016/j.ijme.2023.100790

Limayem, M., Hirt, S. G., & Cheung, C. M. K. (2007). How habit limits the predictive power of intention: The case of information systems continuance. MIS Quarterly, 31(4), 705–737.

Lund, B. D., & Wang, T. (2023). Chatting about ChatGPT: How may AI and GPT impact academia and libraries? Library Hi Tech News, 40(3), 26–29. https://doi.org/10.1108/LHTN-01-2023-0009

Lund, B. D., Wang, T., Mannuru, N. R., Nie, B., Shimray, S., & Wang, Z. (2023). ChatGPT and a new academic reality: Artificial Intelligence-written research papers and the ethics of the large language models in scholarly publishing. Journal of the Association for Information Science and Technology, 74(5), 570–581. https://doi.org/10.1002/asi.24750

Maican, C. I., Cazan, A.-M., Lixandroiu, R. C., & Dovleac, L. (2019). A study on academic staff personality and technology acceptance: The case of communication and collaboration applications. Computers & Education, 128, 113–131. https://doi.org/10.1016/j.compedu.2018.09.010

Mehta, A., Morris, N. P., Swinnerton, B., & Homer, M. (2019). The influence of values on e-learning adoption. Computers & Education, 141, 103617. https://doi.org/10.1016/j.compedu.2019.103617

Milmo, D. (2023). ChatGPT reaches 100 million users two months after launch. The Guardian. https://www.theguardian.com/technology/2023/feb/02/chatgpt-100-million-users-open-ai-fastest-growing-app. Accessed 15 May 2023.

Naumova, E. N. (2023). A mistake-find exercise: A teacher’s tool to engage with information innovations, ChatGPT, and their analogs. Journal of Public Health Policy, 44(2), 173–178. https://doi.org/10.1057/s41271-023-00400-1

Nazaretsky, T., Ariely, M., Cukurova, M., & Alexandron, G. (2022a). Teachers’ trust in AI-powered educational technology and a professional development program to improve it. British Journal of Educational Technology, 53(4), 914–931. https://doi.org/10.1111/bjet.13232

Nazaretsky, T., Cukurova, M., & Alexandron, G. (2022b). An instrument for measuring teachers’ trust in AI-based educational technology. ACM International Conference Proceeding Series, 56–66. https://doi.org/10.1145/3506860.3506866

Nikolopoulou, K., Gialamas, V., & Lavidas, K. (2020). Acceptance of mobile phone by university students for their studies: An investigation applying UTAUT2 model. Education and Information Technologies, 25(5), 4139–4155. https://doi.org/10.1007/s10639-020-10157-9

OpenAI. (2023). ChatGPT: Optimizing language models for dialogue. https://openai.com/blog/chatgpt/. Accessed 15 May 2023.

Osei, H. V., Kwateng, K. O., & Boateng, K. A. (2022). Integration of personality trait, motivation and UTAUT 2 to understand e-learning adoption in the era of COVID-19 pandemic. Education and Information Technologies, 27(8), 10705–10730. https://doi.org/10.1007/s10639-022-11047-y

Pavlik, J. V. (2023). Collaborating with ChatGPT: Considering the implications of generative artificial intelligence for journalism and media education. Journalism & Mass Communication Educator, 78(1), 84–93. https://doi.org/10.1177/10776958221149577

Perkins, M. (2023). Academic integrity considerations of AI large language models in the post-pandemic era: ChatGPT and beyond. Journal of University Teaching and Learning Practice, 20(2). https://doi.org/10.53761/1.20.02.07

Raffaghelli, J. E., Rodríguez, M. E., Guerrero-Roldán, A.-E., & Bañeres, D. (2022). Applying the UTAUT model to explain the students’ acceptance of an early warning system in Higher Education. Computers & Education, 182, 104468. https://doi.org/10.1016/j.compedu.2022.104468

Raman, A., & Don, Y. (2013). Preservice teachers’ acceptance of learning management software: An application of the UTAUT2 model. International Education Studies, 6(7), 157–164. https://doi.org/10.5539/ies.v6n7p157

Raza, S. A., Qazi, Z., Qazi, W., & Ahmed, M. (2022). E-learning in higher education during COVID-19: Evidence from blackboard learning system. Journal of Applied Research in Higher Education, 14(4), 1603–1622. https://doi.org/10.1108/JARHE-02-2021-0054

Ringle, C. M., Wende, S., & Becker, J.-M. (2022). SmartPLS 4. SmartPLS GmbH.

Rudolph, J., Tan, S., & Tan, S. (2023). ChatGPT: Bullshit spewer or the end of traditional assessments in higher education? Journal of Applied Learning & Teaching, 6(1), 1–22.

Sarstedt, M., Ringle, C. M., & Hair, J. F. (2022). Partial least squares structural equation modeling. In C. Homburg, M. Klarmann, & A. Vomberg (Eds.), Handbook of market research (pp. 587–632). Springer International Publishing. https://doi.org/10.1007/978-3-319-57413-4_15

Sitar-Taut, D.-A., & Mican, D. (2021). Mobile learning acceptance and use in higher education during social distancing circumstances: An expansion and customization of UTAUT2. Online Information Review, 45(5), 1000–1019. https://doi.org/10.1108/OIR-01-2021-0017

Slade, E. L., Dwivedi, Y. K., Piercy, N. C., & Williams, M. D. (2015). Modeling consumers’ adoption intentions of remote mobile payments in the United Kingdom: Extending UTAUT with innovativeness, risk, and trust. Psychology & Marketing, 32(8), 860–873. https://doi.org/10.1002/mar.20823

Strzelecki, A. (2023). To use or not to use ChatGPT in higher education? A study of students’ acceptance and use of technology. Interactive Learning Environments, 1–14. https://doi.org/10.1080/10494820.2023.2209881

Sullivan, M., Kelly, A., & McLaughlan, P. (2023). ChatGPT in higher education: Considerations for academic integrity and student learning. Journal of Applied Learning & Teaching, 6(1), 1–10. https://doi.org/10.37074/jalt.2023.6.1.17

Tamilmani, K., Rana, N. P., Wamba, S. F., & Dwivedi, R. (2021). The extended unified theory of acceptance and use of technology (UTAUT2): A systematic literature review and theory evaluation. International Journal of Information Management, 57, 102269. https://doi.org/10.1016/j.ijinfomgt.2020.102269

Tamilmani, K., Rana, N. P., & Dwivedi, Y. K. (2019). Use of ‘habit’ is not a habit in understanding individual technology adoption: A review of UTAUT2 based empirical studies. In A. Elbanna, Y. K. Dwivedi, D. Bunker, & D. Wastell (Eds.), Smart working, living and organising (pp. 277–294). https://doi.org/10.1007/978-3-030-04315-5_19

Teubner, T., Flath, C. M., Weinhardt, C., van der Aalst, W., & Hinz, O. (2023). Welcome to the era of ChatGPT et al. Business & Information Systems Engineering, 65(2), 95–101. https://doi.org/10.1007/s12599-023-00795-x

Thorp, H. H. (2023). ChatGPT is fun, but not an author. Science, 379(6630), 313–313. https://doi.org/10.1126/science.adg7879

Tlili, A., Shehata, B., Adarkwah, M. A., Bozkurt, A., Hickey, D. T., Huang, R., & Agyemang, B. (2023). What if the devil is my guardian angel: ChatGPT as a case study of using chatbots in education. Smart Learning Environments, 10(1), 15. https://doi.org/10.1186/s40561-023-00237-x

Touvron, H., Lavril, T., Izacard, G., Martinet, X., Lachaux, M.-A., Lacroix, T., Rozière, B., Goyal, N., Hambro, E., Azhar, F., Rodriguez, A., Joulin, A., Grave, E., & Lample, G. (2023). LLaMA: Open and efficient foundation language models. http://arxiv.org/abs/2302.13971

Twum, K. K., Ofori, D., Keney, G., & Korang-Yeboah, B. (2022). Using the UTAUT, personal innovativeness and perceived financial cost to examine student’s intention to use E-learning. Journal of Science and Technology Policy Management, 13(3), 713–737. https://doi.org/10.1108/JSTPM-12-2020-0168

van Dis, E. A. M., Bollen, J., Zuidema, W., van Rooij, R., & Bockting, C. L. (2023). ChatGPT: Five priorities for research. Nature, 614(7947), 224–226. https://doi.org/10.1038/d41586-023-00288-7

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, Ł., & Polosukhin, I. (2017). Attention is all you need. In I. Guyon, U. Von Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, & R. Garnett (Eds.), Advances in neural information processing systems (Vol. 30). Curran Associates, Inc. https://proceedings.neurips.cc/paper/2017/file/3f5ee243547dee91fbd053c1c4a845aa-Paper.pdf

Venkatesh, V., Morris, M. G., Davis, G. B., & Davis, F. D. (2003). User acceptance of information technology: Toward a unified view. MIS Quarterly, 27(3), 425–478. https://doi.org/10.2307/30036540

Venkatesh, V., Thong, J. Y. L., & Xu, X. (2012). Consumer acceptance and use of information technology: Extending the unified theory of acceptance and use of technology. MIS Quarterly, 36(1), 157–178. https://doi.org/10.2307/41410412

Venkatesh, V., Thong, J., & Xu, X. (2016). Unified theory of acceptance and use of technology: A synthesis and the road ahead. Journal of the Association for Information Systems, 17(5), 328–376. https://doi.org/10.17705/1jais.00428

Weizenbaum, J. (1966). ELIZA—a computer program for the study of natural language communication between man and machine. Communications of the ACM, 9(1), 36–45. https://doi.org/10.1145/365153.365168

Williamson, B., Macgilchrist, F., & Potter, J. (2023). Re-examining AI, automation and datafication in education. Learning, Media and Technology, 48(1), 1–5. https://doi.org/10.1080/17439884.2023.2167830

Yamane, T. (1967). Statistics: An introductory analysis (2nd ed.). Harper and Row.

Yu, C.-W., Chao, C.-M., Chang, C.-F., Chen, R.-J., Chen, P.-C., & Liu, Y.-X. (2021). Exploring behavioral intention to use a mobile health education website: An extension of the UTAUT 2 model. SAGE Open, 11(4), 1–12. https://doi.org/10.1177/21582440211055721

Zacharis, G., & Nikolopoulou, K. (2022). Factors predicting University students’ behavioral intention to use eLearning platforms in the post-pandemic normal: An UTAUT2 approach with ‘Learning Value.’ Education and Information Technologies, 27(9), 12065–12082. https://doi.org/10.1007/s10639-022-11116-2

Zawacki-Richter, O., Marín, V. I., Bond, M., & Gouverneur, F. (2019). Systematic review of research on artificial intelligence applications in higher education – where are the educators? International Journal of Educational Technology in Higher Education, 16(1), 39. https://doi.org/10.1186/s41239-019-0171-0

Zembylas, M. (2023). A decolonial approach to AI in higher education teaching and learning: Strategies for undoing the ethics of digital neocolonialism. Learning, Media and Technology, 48(1), 25–37. https://doi.org/10.1080/17439884.2021.2010094

Zwain, A. A. A. (2019). Technological innovativeness and information quality as neoteric predictors of users’ acceptance of learning management system. Interactive Technology and Smart Education, 16(3), 239–254. https://doi.org/10.1108/ITSE-09-2018-0065

Ethics declarations

Ethics

The study was conducted according to the guidelines of the Declaration of Helsinki and approved by the Faculty of Informatics and Communication of the University of Economics in Katowice.

Conflicts of Interest

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Strzelecki, A. Students’ Acceptance of ChatGPT in Higher Education: An Extended Unified Theory of Acceptance and Use of Technology. Innov High Educ 49, 223–245 (2024). https://doi.org/10.1007/s10755-023-09686-1

DOI: https://doi.org/10.1007/s10755-023-09686-1