Abstract

Many healthcare practices expose people to risks of harmful outcomes. However, the major theories of moral philosophy struggle to assess whether, when and why it is ethically justifiable to expose individuals to risks, as opposed to actually harming them. Sven Ove Hansson has proposed an approach to the ethical assessment of risk imposition that encourages attention to factors including questions of justice in the distribution of advantage and risk, people’s acceptance or otherwise of risks, and the scope individuals have to influence the practices that generate risk. This paper investigates the ethical justifiability of preventive healthcare practices that expose people to risks including overdiagnosis. We applied Hansson’s framework to three such practices: an ‘ideal’ breast screening service, a commercial personal genome testing service, and a guideline that lowers the diagnostic threshold for hypertension. The framework was challenging to apply, not least because healthcare has unclear boundaries and involves highly complex practices. Nonetheless, the framework encouraged attention to issues that would be widely recognised as morally pertinent. Our assessment supports the view that at least some preventive healthcare practices that impose risks including that of overdiagnosis are not ethically justifiable. Further work is however needed to develop and/or test refined assessment criteria and guidance for applying them.


Introduction

In traditional Russian roulette, a player (P) voluntarily exposes her or himself to a one in six possibility of inflicting a lethal wound when pulling the trigger (see Footnote 1). Whatever we might think about this as a pastime, P does not seem to be wronged. In contrast, if P changes the game by firing the roulette gun at an unsuspecting victim (V), the situation becomes morally murky. Even if the chamber is empty, it seems plausible to claim that P has wronged V by exposing her to the possibility of a lethal wound, whether or not V is actually harmed. This example points to the challenge of morally appraising risk: if it is wrong to harm V, then to what extent is it wrong to impose the possibility of that same harm on V? [1] If the gun had 1000 chambers and only one bullet, would P be less wrong in playing Russian roulette with V? According to various scholars, while moral philosophy relies extensively on the idea of harming as a wrong, prevailing normative theories have trouble explaining when and why it is wrong to impose risks whose potential harms are not inevitable or certain [1, 12, 13, 26]. Sven Ove Hansson has recently attempted to address this problem and to specify the conditions under which risk imposition may be ethically justifiable in the context of complex social practices [12, 13].

This paper explores the ethical justifiability of imposing risk in healthcare, with a focus on disease prevention or early detection practices offered to individuals with the ostensible goal of reducing disease burden in populations. Specifically, we use Hansson’s approach to consider the ethical acceptability of preventive healthcare practices that impose risks associated with overdiagnosis.

The paper is organised into six sections. The first introduces the multivalent concept of risk, and its connection to related concepts such as uncertainty. The second summarises key challenges in the ethical appraisal of risk imposition, including the failure of traditional ethical analysis approaches. Section three outlines Hansson’s criteria for such appraisal. In section four, overdiagnosis (ODx) and associated risks are explained, while section five uses Hansson’s criteria to ethically evaluate the risks associated with preventive practices that can generate overdiagnosis. The various challenges that arise in the process are discussed in section six. We conclude that Hansson’s criteria for risk evaluation, while difficult to apply, nonetheless are valuable in raising important questions about the ethical justifiability of risk-imposing healthcare practices.

Risk, Probability and Uncertainty

Risk is a slippery concept. It is a term of art in many disciplines, and tends to be used with a different meaning (or at least a different inflection) in each. It has been an area of active interdisciplinary research for more than 40 years. It is also an everyday term, so it carries common-use meanings in addition to technical ones. Hansson (on whom we will rely heavily in this analysis) notes that in common usage, a risky situation is one in which an ‘undesirable event’ will possibly, but not certainly, occur [14]. Negative valence (the undesirability of the event in question) is generally built into the concept of risk, even though risk-taking can be positive or generate benefits (for example in the context of successful investment). Hansson outlines five meanings of the word risk commonly used across disciplines, shown in Box 1 [14].

Hansson notes that although the technical definitions in Box 1 sometimes enter into the philosophical literature, philosophers tend to use the word risk in the informal sense, that is, to refer to “a state of affairs in which a desirable or undesirable event may or may not occur” [14, n.p.], and that the technical definitions have not influenced the common-language usage of the word.

Terminological matters are further complicated by the relation between risk and related concepts such as uncertainty, ignorance and indeterminacy. Brian Wynne, for example, has produced a taxonomy that seeks to tease apart these risk-related concepts (Box 2) [37].

This taxonomy (which Wynne characterised as a taxonomy of uncertainty rather than of risk) was created in part to connect up the co-constitutive scientific, social, cultural and moral dimensions of uncertainty and risk, and to argue that normative responsibilities and commitments are concealed in the ‘natural’ discourse of the sciences. His taxonomy helpfully draws out dimensions of risk [37], but also calls to mind Hansson’s warning that in practice these distinctions are often less clear than we would like them to be. Probabilities, for example, are very rarely clearly known, so all decisions are made under some degree of uncertainty [14]. Hansson has argued that it may be more fruitful to focus on action—specifically the actions of imposing and taking risk—and to ask under what conditions it might be justified to override someone’s assumed prima facie right not to be exposed to risk [14]. It is to these questions—and the inadequacy of traditional ethical theory in dealing with them—that we now turn. In what follows, we use the term ‘risk’ in Hansson’s informal sense to refer to situations where an unwanted event may occur, the probability of which may be known or unknown. Thus our usage incorporates Wynne’s notions of risk and uncertainty; we assume, along with Hansson, that the line between risk and uncertainty is less clear than we would like it to be.

The Challenge of Ethically Appraising Risk Imposition

As noted in the introduction, imposing risk poses a puzzle for moral theories that tend to appraise determinate rather than probabilistic situations [1, 13, 26]. Determinate moral theories can tell us that it is wrong to kill an innocent, but not whether it is likewise wrong to impose a risk of death on an innocent, even if the activity does not cause her death [1]. The probability entailed by the risk seems morally important: many would agree that an action with a 99.9% chance of killing an innocent is more morally blameworthy than one with a 0.0001% chance of killing her, but moral theories lack a principled way of determining just what probability (or, indeed, what associated degree) of harm is morally acceptable. Because most of our actions increase the likelihood of some morally condemned outcome (if only by creating a very slight possibility of some harm to others), prohibiting all actions that entail risk would lead to paralysis. Yet various approaches to moral decision making, including utilitarianism, deontology, natural rights theory and contract theory, all lack an obvious way to respond to questions about when and why it can be ethically justifiable to impose risk on others [1, 13, 26]. See Box 3.

Hansson works from the recognition that when human actions and their consequences are morally appraised according to the tenets of particular moral theories, the focus is typically on one or a limited set of actors, actions and outcomes, and both the range of actions and their consequences are assumed to be determinate. The Russian roulette example draws our attention to ethical discomfort with imposing risk (in this case of a lethal wound) via a single action, but it is an artificial example and of limited use because it effectively eliminates the need to consider potential beneficial as well as potential harmful consequences of actions. Hansson stresses that in many social situations there is a complex mix of actions and outcomes at play. Prevailing moral theories might tell us that action X and its certain consequence Y are impermissible, but they say little about how to evaluate action X*, which has a mixture of unknown and/or uncertain consequences, including Y [13].

The challenge thus lies in morally appraising situations in which potential actions have a complex and as yet uncertain and/or unknown set of consequences. Such situations are common in healthcare, where services and interventions typically have many variants, each of which may have an indeterminate mixture of outcomes at both population and individual levels. In the “Overdiagnosis” section, we examine the risks of ODx and associated harms generated by some preventive healthcare practices; before that, the section “Hansson’s criteria for the ethical acceptability of risk imposition” summarises his criteria for assessing the ethical acceptability of risk imposition.

Hansson’s Criteria for the Ethical Acceptability of Risk Imposition

In response to the challenge of morally appraising risk imposition, Hansson proposes a set of criteria based on a loosely contractarian approach that considers not only values and probabilities, but also duties, agency, intentions, consent, rights and equity. The central premise of his argument is that everyone has a prima facie right not to be exposed to risk, but that this right can be overridden in certain circumstances [12, 13].

We do not reproduce Hansson’s arguments in detail, but start with his conclusion about when it is acceptable to impose risk:

Exposure of a person to risk is acceptable if:

- (i) this exposure is part of a persistently justice-seeking social practice of risk-taking that works to her advantage and which she de facto accepts by making use of its advantages, and

- (ii) she has as much influence over her risk-exposure as every similarly risk-exposed person can have without loss of the social benefits that justify the risk exposure [13, p. 108].

Hansson aims to specify the conditions under which risk imposition is morally acceptable. By ‘social practice of risk taking’, he means collections of activities about which decisions can be made regarding risk exposure. This contrasts with having to make a separate decision about each individual risk-exposing activity. For example, he takes driving cars to be a complex risk-imposing social practice that is to everyone’s mutual benefit and is therefore permissible. He recognises that not all individuals will be similarly risk-exposed, but conceives of complex chains of risk exchanges that are morally acceptable to the extent that the risks taken by one group, for example in their workplace, are “exchanged” for risks taken by others who might live in proximity to polluting factories, where both the work in question and the polluting factory’s products are to the mutual advantage of all concerned. Given the difficulty of working out the risk exchanges across societal wholes, he recommends considering risks and benefits as they occur within sectors, such as healthcare or transportation [13].

Hansson’s de facto acceptance clause aims to protect individuals. The requirement that individuals de facto accept and benefit from participating in the relevant social practice aims to ensure that individuals cannot be treated as impersonal and interchangeable entities, as, for example, occurs in expected utility approaches that rely upon aggregations. The justice clause aims to exclude situations in which some individuals are exposed to risk for the benefit of others in exchanges that provide some level of advantage to all, but are nonetheless unfair. Here Hansson has in mind something like exploitative and dangerous labour conditions that provide some minimal level of income to workers. These render workers better off in some sense than if they had no employment, but the vast majority of benefits accrue unfairly to their employers and perhaps other elite groups who are not exposed to the work risks. Hansson does not require a perfectly just system of risk distribution, partly because it is unclear what this would entail. Rather, his justice clause requires that the social practices in question aim towards social justice, and in particular that they seek to limit the risk exposures of people who suffer other social and economic disadvantages [13].

Hansson’s second criterion concerns influence, control and the limits of veto powers that individuals have over others’ potentially risk-imposing activities. It seeks to exclude situations in which otherwise acceptable, justice-seeking risks are imposed by a benevolent dictator; or where each individual is able to dictate their own conditions of risk exposure and so effectively preclude social practices. It is a requirement for social cooperation in which each individual has maximal equal influence [13].

The examples Hansson provides are quite brief and general and we have not found a detailed application of his criteria to a specific social practice. In the next section, we explain ODx and the imposition of risk involved in disease prevention or early detection practices associated with ODx, before investigating whether these healthcare practices meet Hansson’s criteria in the subsequent section, “Assessing the ethical acceptability of imposing risk of ODx using Hansson’s criteria”.

Overdiagnosis

There is longstanding recognition that health measures aimed at early detection or prevention may entail uncertain outcomes involving harm [32]. However, the risks of preventive healthcare have received renewed attention in the context of overdiagnosis (ODx) arising from widespread uptake of interventions such as cancer and other disease screening. ODx refers to the phenomenon of individuals receiving diagnoses (often accompanied by interventions) that, on balance, lead to greater harm than benefit, making ODx one of the risks of various preventive practices (see Footnote 2) [18, 23, 30]. ODx is a counter-intuitive phenomenon, as detecting instances of disease is usually considered to be beneficial (given assumptions about the availability of effective treatments and the value of knowledge about prognoses). ODx upends these assumptions: the diagnosis brings more harms than benefits, often because the detected condition would not have progressed to advanced disease, so detecting it confers no benefit. ODx is also counterfactual in that the diagnosis and any associated interventions cause harm on balance: people’s overall health states and experiences are worse than they would otherwise have been without the diagnostic intervention.

As well as being counter-intuitive and counterfactual, ODx is mostly undetectable at the individual level. In any population diagnosed with a particular disease, it is rarely possible to distinguish individuals who receive a health-benefitting diagnosis from those who are overdiagnosed. Instead, ODx is primarily visible as a population-level phenomenon [8].

ODx is associated with several known precursors, including systematic programs of disease identification such as cancer screening programs, and the broadening of diagnostic criteria for particular diseases, which creates a larger cohort who then carry the disease label [23].

Overdiagnosis as an Example of Risk Imposition

Healthcare is inherently risky as it always involves the possibility of iatrogenic harm [32]. However, some risks are willingly undertaken by individuals in response to their own requests for healthcare and are, at least to some extent, mitigated by fully informed and valid consent. These two features of request and consent suggest that therapeutic care is not a good fit with Hansson’s notion of social practices involving risk imposition. In contrast, preventive healthcare interventions are social practices in that they are part of the organised efforts of society to improve health. They are offered to healthy people in a systemic and usually state-sanctioned way rather than in response to an individual health concern. Thus, prima facie, preventive practices seem to be the kinds of social practices that involve potentially ethically problematic risk imposition.

Preventive care clearly falls within the scope of actions identified by Hansson as containing a mixture of outcomes that are indeterminate both in terms of value and probability; that is, preventive care is risky. For any individual patient, a preventive care action such as screening has an unknown mixture of benefits and harms. The individual may have the consequences of serious disease averted, or she may receive an ODx from which she gains no overall benefit. Once we turn to ODx, both the probability of ODx occurring, and the probability and nature of specific harms associated with ODx, are uncertain. As mentioned, ODx generally cannot be identified at the individual level, so people can be offered only population-level information about the likelihood of ODx occurring. This information is often expressed as a probability estimate. Numerical probability estimates are widely used in healthcare, but are by no means as reliable as the probability of throwing a six with a fair die; in Wynne’s terms they are uncertain [37]. There is no general probability of ODx. Instead, the probability of being overdiagnosed must be specified in relation to particular diseases and particular diagnostic situations (e.g. the probability of screening-detected breast cancer ODx [22, 35], or the probability of gestational diabetes ODx during routine antenatal care). Calculating the probability of disease-specific ODx is so epistemically challenging that the methods and results are often bitterly contested [28]. Probability estimates may lack precision or have questionable transferability due to sampling and study design issues [6]. For example, estimates of the probability of ODx generated by breast cancer screening lie between 10 and 50% [34], analogous to a game of Russian roulette when the gun might contain one, two or three bullets. In addition, there are various ways of describing the probability of ODx for a condition. 
For example, the rate of ODx generated by breast-cancer screening can be expressed in relation to numbers of women invited to be screened, women actually screened, or cancers detected by screening [20].
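The effect of the choice of denominator can be made concrete with a short worked sketch. The notation below is ours, not the source's, and it assumes for simplicity that the same count of overdiagnosed cancers serves as the numerator in every expression (published estimates do not always do this) and that each screened woman contributes at most one screen-detected cancer.

```latex
% N_ODx : number of overdiagnosed screen-detected breast cancers (assumed fixed)
% N_inv : women invited to screening
% N_scr : women actually screened
% N_det : cancers detected by screening (assumed at most one per screened woman)
r_{\mathrm{inv}} = \frac{N_{\mathrm{ODx}}}{N_{\mathrm{inv}}}, \qquad
r_{\mathrm{scr}} = \frac{N_{\mathrm{ODx}}}{N_{\mathrm{scr}}}, \qquad
r_{\mathrm{det}} = \frac{N_{\mathrm{ODx}}}{N_{\mathrm{det}}}
% Since N_det <= N_scr <= N_inv, it follows that
r_{\mathrm{inv}} \le r_{\mathrm{scr}} \le r_{\mathrm{det}}
```

Because the denominator shrinks from invited women to screened women to detected cancers, the same number of overdiagnosed cases yields a progressively larger percentage, which may make figures for a single screening program appear to disagree.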

As well as uncertainty about the probability of ODx for any particular condition, there is further uncertainty about the nature, magnitude and likelihood of specific sequelae (both harmful and beneficial) of ODx itself, and how to deal with variations in individuals’ valuation of these [8]. One set of physical, psychological, social and economic harms arises from the offer of intervention and the imposed need to select an option from a menu on which there are no risk-free alternatives. Once the spectre of undiagnosed but potentially treatable disease is raised, the individual is faced with a series of options in which various undesirable events or harms are possible, including: making a poor decision, becoming anxious about doing so, experiencing regret, being pressured and/or negatively judged by others for whichever option she chooses and so forth. The complexity of the systems in which these options play out means that each one carries a mixture of outcomes with uncertain values and probabilities. A second set of harms arises from receiving a diagnostic label (such as anxiety, unwarranted patienthood or change in identity), while a third set flows from the cascade of interventions that follow diagnosis (including the costs of time off work and ongoing healthcare).

Thus while the risks arising from ODx are not unique in healthcare, ODx makes a good case study for examining the ethical acceptability of risk imposition in Hansson’s terms. This is because ODx is a systemic problem, arising from preventive practices that are offered and delivered as services to individuals with justifications based on population level evidence of benefit, and often organised, at least to some extent, at a population level. ODx reflects structuring decisions made within healthcare systems [6].

Current Approaches to Decision-Making About Exposure to Risk of Harm Through ODx

The introduction of preventive practices that risk ODx is justified at a population level by weighing up potential benefits and burdens (a variety of expected utility analysis), based on the assumption that for an action to be acceptable, the likely overall benefits must outweigh the likely overall harms [13]. Using this approach, for example, the benefit of reducing breast cancer mortality in women who are screened has served as the justification for governments to introduce population screening for breast cancer, despite the risks (of ODx and other harms, such as pain from mammography or false positives). This expected utility approach tallies risks and potential benefits at a population level to reach a decision about implementing, continuing or discontinuing interventions whose effects can be measured at a population level. The approach relies on assigning probabilities and values to each outcome on the assumption that these can be tallied up in a conclusive way.
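The expected utility reasoning described above can be written out as a worked formula. This is a standard textbook formulation offered as a sketch; the split into beneficial and harmful outcomes, and the convention of scoring every outcome relative to not intervening, are our simplifying assumptions rather than features of any particular screening evaluation.

```latex
% Outcomes o_1..o_n of intervention a (e.g. screening), with probabilities p_i(a)
% and values u(o_i) on one common scale, scored relative to not intervening (u = 0).
\mathrm{EU}(a) = \sum_{i=1}^{n} p_i(a)\, u(o_i)
% Let B = {i : u(o_i) > 0} (benefits) and H = {i : u(o_i) < 0} (harms, including ODx).
% "Likely benefits outweigh likely harms" is then the condition
\sum_{i \in B} p_i(a)\, u(o_i) \;>\; \sum_{i \in H} p_i(a)\, \bigl|u(o_i)\bigr|
\quad\Longleftrightarrow\quad \mathrm{EU}(a) > 0
```

Every term requires both a probability and a value expressed on a single shared scale, so the conclusion can be no more secure than those inputs; outcomes with u(o_i) = 0 drop out of both sides.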

There are recognised problems with an expected utility approach to decision making in conditions of risk [13, 26]. First, it is practically impossible to derive a single metric that does justice to the variety of potential outcomes, values and disvalues at stake in any decision. Some outcomes may be incommensurable, the significance of a particular disvalued outcome may vary with context and across individuals, and people (including policy leaders) have different levels of tolerance for risk. Some benefits and harms will be unknown because they have not yet occurred or been recognised, and there may be neither agreement about, nor an explicit method for enumerating, what counts as a relevant harm.

Next, the probability estimates required for expected utility approaches may be derived from different sources and will be more or less uncertain. Importantly, not all of the harms associated with ODx can be assessed quantitatively. The possible loss of trust in healthcare secondary to high rates of ODx is one example: it illustrates the challenge of creating an adequate hybrid metric and undermines the idea that the harms associated with ODx, and hence the magnitude of the risk, can be fully quantified.

Finally, the focus on probabilities and (dis)utilities excludes other morally relevant features. It takes more than knowledge of the probabilities and dis/values associated with specific outcomes to make a morally competent decision. Risks do not arise in a vacuum but attach to people for whom it matters morally why and by whom the risk is imposed, whether and how the people have contributed or consented to the risk, what relevant duties are observed or breached in the imposing of risk and how the risks are distributed in comparison with potential benefits between affected individuals [2, 12].

We note that one approach to addressing the ethical problem of imposing risk of ODx is via valid patient consent, typically through informed or shared decision making (SDM). This approach assumes that it is ethically acceptable to perform actions that impose risk of ODx if the risk-exposed individual understands and voluntarily agrees [3, 13]. Several authors have, however, identified the ethical inadequacy of consent as a response to the risk of ODx [6, 29, 31]. First, the information available for an SDM approach is of poor quality, including a lack of consensus about the probability of ODx itself occurring and the nature of the associated harms [31]. Second, narrow SDM approaches may not adequately support autonomy in situations where the information is complex or there are strong social pressures towards one decision rather than another [9, 10]. Both of these conditions apply in at least some overdiagnosing practices. Third, relying upon informed consent represents a potentially unfair shifting of the epistemic burden onto individual patients rather than policy makers or healthcare professionals who have training and expertise in navigating complex medical and epidemiological data [29]. Finally, decision aids often simplify information and understate the degree of uncertainty, presenting the decision as a clear choice between absolute numbers of persons harmed and benefited. Forcing this kind of trade-off seems likely to trigger well-recognised cognitive biases and moral intuitions (for example, willingness to allow many people to be harmed to save one life, or valuing identified over statistical lives), which complicates the ethical justification of such decision-making [6].

In summary, ODx occurs in the context of complex and systemic healthcare practices, and raises a number of challenging questions concerning risk, for which current population level and individual level approaches seem ethically inadequate. This suggests that overdiagnosing practices may benefit from investigation using Hansson’s approach to analysing the ethics of risk imposition [13].

Assessing the Ethical Acceptability of Imposing Risk of ODx Using Hansson’s Criteria

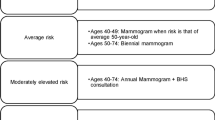

Any attempt to ethically appraise the imposition of risk of ODx immediately faces the problem that ODx is heterogeneous and the different practices associated with ODx have widely varying potential benefits and harms. This suggests that reasoning about the ethical acceptability of risk imposition in ODx needs to proceed case by case, so as to be responsive to the differing features of each case. Here we focus on three quite different interventions that risk ODx. Although the interventions differ, they all fit within Hansson’s notion of a social practice, as they all: (1) rely upon the use of population level data for estimates of risks and benefits, and (2) are widely promoted and/or enforced via a combination of the market, professional standard-setting, or health system structuring. Thus, we propose, these three practices all represent collective forms of social practice of the kind in which Hansson is interested. The first is a breast cancer screening program that is publicly funded, free at the point of service, and linked with high-quality, universally accessible clinical care (‘Ideal breast cancer screening’) [17, 19]. The second is the introduction and marketing of a new commercial diagnostic technology for personal genome sequencing [16]. The third is the promulgation through health systems of a clinical practice guideline, developed by experts, that lowers the diagnostic threshold for hypertension [4, 36].

To facilitate the application of Hansson’s criteria to these three overdiagnosing practices we re-framed them as four questions, responses to which can be found in Tables 1, 2, 3 and 4 with a summary in Table 5:

1. Is the risk exposure part of a persistently justice-seeking social practice of risk taking?
2. Does the social practice of risk-taking work to individuals’ advantage?
3. Do individuals de facto accept the risk exposure?
4. Does each risk-exposed individual have as much influence over her risk-exposure as every similarly risk-exposed person can have without loss of the social benefits that justify the risk exposure?

Tables 1, 2, 3, 4 and 5 show that none of these three interventions meets Hansson’s criteria for justified risk imposition. The ideal breast cancer screening program is, broadly speaking, a justice-seeking social practice, but it is not obviously to individual women’s benefit, it is arguably not widely understood to be a risk-taking practice, and individuals generally have little influence over the nature of their risk exposure, as they are poorly informed as consumers and excluded from the design and implementation of the program. Commercial personal genome sequencing services are not justice-seeking, nor do they permit equal influence over risk exposure; the responses to the other two questions are equivocal and would depend upon the particularities of the service in question. Assessing the acceptability of risk imposition associated with the development and implementation of clinical practice guidelines (CPGs) is complex. While the in-principle aims of CPGs might be justifiable on Hansson’s account, the particularities of each CPG are relevant, and our example of a CPG that lowers the diagnostic threshold fails to meet any of Hansson’s criteria. The formulation of recommendations requires complex assessments of benefits, risks and various ethical, legal and social considerations. Conflicts of interest are highly likely within expert groups [13], and may be particularly problematic among expert committees that lower diagnostic thresholds in medicine [24].

Challenges with Implementing Hansson’s Criteria

As Tables 1, 2, 3, 4 and 5 show, applying Hansson’s criteria to examples of overdiagnosing practices is a complex matter. A major challenge relates to treating healthcare (or aspects of it) as a risk-imposing social practice. Healthcare is highly complex and the organisation and funding of it varies considerably between jurisdictions. Healthcare is more obviously akin to the social practices that Hansson identifies (such as driving cars) in countries with universally accessible healthcare free at the point of need, but less obviously so in countries with for-profit healthcare or mixed public–private systems. In addition, the boundaries of healthcare are poorly delineated and contested even in publicly-funded universal systems, making it difficult to determine what should be considered part of the complex exchange of risks and benefits Hansson envisages within practices [13].

Taking healthcare as a notional whole, it is far from clear that accepting the risks of ODx should be a necessary part of accessing the overall benefits of healthcare, especially where the risk of ODx results from redefining diseases, as occurred with the guideline for hypertension. In addition, the overall functioning of the healthcare system does not seem to rely upon the presence of specific services or interventions in the way that, perhaps, the traffic system as a whole requires all of its various elements (although even this is arguable).

Scoping and defining the social practice of interest is crucial to the application of Hansson’s criteria because the way that this is done has implications for assessing whether the practice works to an individual’s advantage, her acceptance of it and her degree of influence over her risk exposure. If we take the practice to be healthcare as a whole, then it is probably uncontroversial that having a universal healthcare system is to the advantage of all individuals. But if we focus on specific preventive practices that risk ODx, it becomes more problematic to claim that they advantage individuals. Resolution of the problem would depend on investigating people’s views about the value of having particular screening programs or diagnostic thresholds in their healthcare system.

Assessing individuals’ de facto acceptance of a practice is also challenging. If the practice is universal healthcare per se, individuals’ acceptance or rejection of it will be difficult to gauge, although people could be asked via political processes such as referendums. If the practice is defined at the level of a service or intervention, empirical assessment of its acceptance might seem more feasible. However, it would be problematic to assume that individuals who accept an overdiagnosing practice fully (or even adequately) understand its implications and recognise relevant nuances, such as a contested evidence base or influential conflicts of interest. It is a further empirical question whether people understand the balance of risks and benefits, even when they express a strong opinion for or against a practice. Assessment of acceptance is further complicated if there are no alternatives: people’s tolerance of risks in an existing system is not a good indicator of what they would accept if they had more options.

The scope for people to have equal influence over their risk exposure in healthcare is in some senses highly limited. Health services and practices are mostly shaped by expert medical knowledge, implemented within government policy and in competition with other priorities. There is often little room for ordinary citizens to contribute to policy making regarding the nature and extent of risks that could acceptably be imposed, given the kinds of benefits that might accompany those risks. Initiatives such as citizens’ juries offer mechanisms in keeping with the democratic ethos Hansson espouses, but no healthcare system has contemplated using deliberative processes to inform all of its decisions. Some have attempted this in a limited way, for example the NICE Citizens’ Council in the UK [27], but the resource intensiveness of this process and the limitations on it suggest that it would not be practicable to enlist citizens in all necessary decisions. In fact, it is unclear how this criterion can ever be met, as even in Hansson’s example of driving, citizens have few formal opportunities to influence traffic regulations. The criterion could be formally fulfilled by saying that everyone was equally voiceless regarding traffic laws, but fulfilling it in this manner renders the criterion powerless: denying everyone influence over the practice in question defeats the object of the criterion.

Finally, Hansson says little about whether all the criteria must be met in order to justify exposing individuals to risk, or whether meeting each criterion admits of degrees or must be absolute. As we have shown, none of our three examples met all the criteria. Prima facie, then, we might conclude that all three practices involve unjustifiable imposition of risk of ODx.

Despite the challenges in implementing Hansson’s criteria for specific practices, we believe that the exercise has been valuable. Healthcare practices, especially preventive and early detection practices, are risky. As the harms are potentially serious and far-reaching, we need a way of morally appraising the acceptability of imposing the relevant risks. To date, Hansson offers the most comprehensive criteria for this task, which justifies investigating them in detail. Applying Hansson’s criteria has illuminated the complexity of practices that impose risk and the challenges of assessing the justifiability of those practices, while also identifying reasons why some risk-imposing practices may be unjustifiable.

Conclusion

The results of our analysis are thought-provoking in that none of the three preventive and early detection practices that risk ODx meets Hansson’s proposed criteria for ethically justifiable risk imposition. Should we therefore conclude that these three practices are unjustified in imposing risks of ODx? Such a conclusion is consistent with views about the ethical unacceptability of at least some practices that risk ODx [7, 8, 29, 31]. The value of Hansson’s approach lies in forcing a step back to ask specific and pertinent questions about particular healthcare practices. In so doing, it helps us to move beyond potentially simplistic tallies of benefits and burdens and, importantly, to incorporate considerations of justice, both substantive and procedural. Prima facie, this seems morally correct. It would be difficult to argue that healthcare practices that risk ODx should not be broadly justice-seeking. The other ideas in Hansson’s account also seem consistent with much contemporary moral and political reasoning: practices that risk ODx should be acceptable to the population in question and be to their overall advantage, and citizens should have some say over the implementation of these practices. That the three instances we have considered fail Hansson’s criteria is perhaps a strong indication that at least some healthcare practices that impose risk of ODx are not justifiable.

Further work to refine Hansson’s criteria will be necessary to enhance their value for ethical analyses of risk-imposing practices. This will include investigating ways of assessing new risk-imposing interventions and potential changes to health policies or services that would shift risks across populations and individuals [15]. Following our attempt to apply the criteria to concrete examples, we conclude that their primary contribution may be in broadening the range of morally relevant factors that should be taken into account when decisions are made about interventions that will impose risk. Whether or not they can constitute a system for normative reasoning that clearly applies to preventive healthcare practices will require further testing, application and debate.

Notes

Russian roulette is a game of chance in which a person spins the cylinder of a handgun loaded with one bullet and five empty chambers, then aims the gun at their own head and pulls the trigger.

References

Altham, J. E. J. (1984). II—Ethics of risk. Proceedings of the Aristotelian Society, 84(1), 15–30.

Baylis, F., Kenny, N. P., & Sherwin, S. (2008). A relational account of public health ethics. Public Health Ethics, 1(3), 196–209.

Beauchamp, T. L., & Childress, J. F. (2013). Principles of biomedical ethics (7th ed.). New York: Oxford University Press.

Bell, K. J. L., Doust, J., & Glasziou, P. (2018). Incremental benefits and harms of the 2017 American College of Cardiology/American Heart Association high blood pressure guideline. JAMA Internal Medicine, 178(6), 755.

Biller-Andorno, N., & Jüni, P. (2014). Abolishing mammography screening programs? A view from the Swiss Medical Board. The New England Journal of Medicine, 370(21), 1965–1967.

Carter, S. M. (2017). Overdiagnosis, ethics, and trolley problems: Why factors other than outcomes matter-an essay by Stacy Carter. BMJ, 358(2), j3872.

Carter, S. M., & Barratt, A. (2017). What is overdiagnosis and why should we take it seriously in cancer screening? Public Health Research & Practice. https://doi.org/10.17061/phrp2731722.

Carter, S. M., Degeling, C., Doust, J., & Barratt, A. (2016). A definition and ethical evaluation of overdiagnosis. Journal of Medical Ethics, 42(11), 705–714.

Cribb, A., & Entwistle, V. A. (2011). Shared decision making: Trade-offs between narrower and broader conceptions. Health Expectations, 14(2), 210–219.

Entwistle, V. A., Carter, S. M., Trevena, L., Flitcroft, K., Irwig, L., McCaffrey, K., et al. (2008). Communicating about screening. BMJ, 337, a1591.

Gilbert Welch, H., Schwartz, L., & Woloshin, S. (2011). Overdiagnosed: Making people sick in the pursuit of health. Boston, MA: Beacon Press.

Hansson, S. O. (2003). Ethical criteria of risk acceptance. Erkenntnis, 59, 291–309.

Hansson, S. O. (2013). The ethics of risk: Ethical analysis in an uncertain world. Basingstoke: Palgrave Macmillan.

Hansson, S. O. (2018). Risk. In E. N. Zalta (Ed.), The Stanford Encyclopedia of Philosophy (Fall 2018 ed.). https://plato.stanford.edu/archives/fall2018/entries/risk/.

Hansson, S. O. (2018). How to perform an ethical risk analysis (eRA). Risk Analysis, 38(9), 1820–1829.

Hawkins, A. K., & Ho, A. (2012). Genetic counseling and the ethical issues around direct to consumer genetic testing. Journal of Genetic Counseling, 21(3), 367–373.

Hersch, J., Barratt, A., Jansen, J., Irwig, L., McGeechan, K., Jacklyn, G., et al. (2015). Use of a decision aid including information on overdetection to support informed choice about breast cancer screening: A randomised controlled trial. The Lancet, 385(9978), 1642–1652.

Hofmann, B. (2014). Diagnosing overdiagnosis: Conceptual challenges and suggested solutions. European Journal of Epidemiology, 29(9), 599–604.

Houssami, N. (2017). Overdiagnosis of breast cancer in population screening: Does it make breast screening worthless? Cancer Biology & Medicine, 14(1), 1–8.

Independent UK Panel on Breast Cancer Screening. (2012). The benefits and harms of breast cancer screening: An independent review. The Lancet, 380(9855), 1778–1786.

Marmot, M. G., Altman, D. G., Cameron, D. A., Dewar, J. A., Thompson, S. G., Wilcox, M., et al. (2012). The benefits and harms of breast cancer screening: an independent review: A report jointly commissioned by Cancer Research UK and the Department of Health (England). British Journal of Cancer, 108(11), 2205–2240.

Morrell, S., Barratt, A., Irwig, L., Howard, K., Biesheuvel, C., & Armstrong, B. (2010). Estimates of overdiagnosis of invasive breast cancer associated with screening mammography. Cancer Causes & Control, 21(2), 275–282.

Moynihan, R., Doust, J., & Henry, D. (2012). Preventing overdiagnosis: How to stop harming the healthy. BMJ, 344, e3502.

Moynihan, R. N., Cooke, G. P. E., Doust, J. A., Bero, L., Hill, S., & Glasziou, P. P. (2013). Expanding disease definitions in guidelines and expert panel ties to industry: A cross-sectional study of common conditions in the United States. PLoS Medicine, 10(8), e1001500.

Moynihan, R., Nickel, B., Hersch, J., Beller, E., Doust, J., Compton, S., et al. (2015). Public opinions about overdiagnosis: A national community survey. PLoS ONE, 10(5), e0125165.

Munthe, C. (2011). The price of precaution and the ethics of risk. Dordrecht: Springer.

NICE. (n.d.). Citizens Council. https://www.nice.org.uk/get-involved/citizens-council. Accessed September 21, 2018.

Quanstrum, K. H., & Hayward, R. A. (2010). Lessons from the mammography wars. The New England Journal of Medicine, 363(11), 1076–1079.

Rogers, W. A. (2019). Analysing the ethics of breast cancer overdiagnosis: A pathogenic vulnerability. Medicine, Health Care and Philosophy, 22(1), 129–140.

Rogers, W. A., & Mintzker, Y. (2016). Getting clearer on overdiagnosis. Journal of Evaluation in Clinical Practice, 22(4), 580–587.

Rogers, W. A., Craig, W. L., & Entwistle, V. A. (2017). Ethical issues raised by thyroid cancer overdiagnosis: A matter for public health? Bioethics, 31(8), 590–598.

Skrabanek, P. (1990). Why is preventive medicine exempted from ethical constraints? Journal of Medical Ethics, 16, 187–190.

Welch, H. G., & Black, W. C. (2010). Overdiagnosis in cancer. Journal of the National Cancer Institute, 102(9), 605–613.

Welch, H. G., & Passow, H. J. (2014). Quantifying the benefits and harms of screening mammography. JAMA Internal Medicine, 174(3), 448–454.

Welch, H. G., Prorok, P. C., O’Malley, A. J., & Kramer, B. S. (2016). Breast-cancer tumor size, overdiagnosis, and mammography screening effectiveness. The New England Journal of Medicine, 375(15), 1438–1447.

Whelton, P. K., Carey, R. M., Aronow, W. S., Casey, D. E., Jr., Collins, K. J., Dennison Himmelfarb, C., et al. (2018). 2017 ACC/AHA/AAPA/ABC/ACPM/AGS/APhA/ASH/ASPC/NMA/PCNA Guideline for the Prevention, Detection, Evaluation, and Management of High Blood Pressure in Adults: Executive Summary: A Report of the American College of Cardiology/American Heart Association Task Force on Clinical Practice Guidelines. Hypertension, 71(6), 1269–1324.

Wynne, B. (1992). Uncertainty and environmental learning. Global Environmental Change, 2(2), 111–127.

Acknowledgements

Wendy Rogers was supported by a Future Fellowship (FT130100346) from the Australian Research Council and a 2018 Residency from the Brocher Foundation. Stacy Carter was supported by the National Health and Medical Research Council Centre for Research Excellence 1104136 and a 2018 Residency from the Brocher Foundation.

Funding

This study was funded by Future Fellowship FT130100346 from the Australian Research Council (Rogers) and Centre for Research Excellence 1104136 from the National Health and Medical Research Council (Carter). Rogers and Carter both also received support in the form of a month-long residency at the Brocher Foundation, Switzerland, in 2018.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest. The research in this paper is conceptual and therefore did not involve human participants or require review by a research ethics committee.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Rogers, W.A., Entwistle, V.A. & Carter, S.M. Risk, Overdiagnosis and Ethical Justifications. Health Care Anal 27, 231–248 (2019). https://doi.org/10.1007/s10728-019-00369-7

DOI: https://doi.org/10.1007/s10728-019-00369-7