Abstract

Careful consideration of the interpretation of quantum mechanics serves not only a better philosophical understanding of the physical world, but can also be instrumental for model building. After a summary of the author’s general views and their mathematical support, it is shown what new insights can be gained, in principle, concerning features such as the Standard Model of the elementary particles and the search for new approaches to bring the gravitational force in line with quantum mechanics. Questions to be asked include the cure for the formal non-convergence of renormalised perturbation expansions, the necessary discreteness of physical variables at the Planck scale, and the need to reconcile this discreteness with diffeomorphism invariance in General Relativity. Finally, a program is proposed to attempt to derive the propagation laws for cellular automaton models of the universe.

1 Introduction

Most modern theories of the elementary particles and of the forces by which they interact assume the validity of quantum mechanics as it is usually defined. The Copenhagen interpretation states precisely how the rules for quantum dynamics can be derived from classical dynamical laws, and subsequently how the resulting Schrödinger equation can be derived and solved. The resulting wave function dictates how probability distributions evolve in time. This interpretation is unique, and this skeleton of structures can now serve as a basis on which new theories are formulated. The mathematical basis of the skeleton is taken to be sufficiently solid for this new task; it is assumed that no further modifications are needed.

The present author fully agrees that quantum mechanics as an underlying framework is perfectly acceptable. Hilbert space is a vector space for which one can choose an orthonormal set of basis elements, to be referred to as the primary states a system can be in, and the state of a physical system is identified with a normalised vector in this space. The Schrödinger equation then dictates how a state vector evolves. Born’s rule dictates that the probabilities can be identified with the absolute squares of the components of the state vector as it evolves.

There are, however, three questions that are not answered in a completely satisfactory way by the Copenhagen scheme. The first is a pragmatic question. In practice, Hilbert space is very large, particularly when special or general relativity is included, and one consequence is that the interactions assumed in the postulated Schrödinger equation more often than not lead to divergent expressions, which have to be renormalised before they can be used at all to describe the world we see. One can perform these renormalisations order by order in a perturbation expansion, and often the first terms of this expansion approach the desired answer rapidly, as for the anomalous magnetic moment of charged, spinning particles. But in some cases the convergence is poor, so that one can ask a fundamental question: can we even define what the exact expressions ought to be, even if we cannot calculate them in practice? Does the perturbation series lead to a meaningful result at all? Mathematicians have strong reasons to expect that the entire perturbation expansion diverges in practically all of these theories.

T. Padmanabhan had his own ideas about how a convergent theory of gravity could, in principle, be arrived at [1]. He considers a hierarchy of ‘shells’ in which the coarse-grained cells are regarded as the result of partially integrating out the underlying shells. This should be repeated ad infinitum, but would the procedure converge [2, 3, 4, 5]? Are pictures such as these physically acceptable? We proceed by asking our second question.

When electrons are described as they orbit an atomic nucleus, we know explicitly what the correct Schrödinger equation is: we believe that the classical theory is valid in the classical limit, and thus Copenhagen gives us a unique Schrödinger equation. But the other elementary particles, and at higher orders the electrons as well, also respond to forces other than the electromagnetic ones. We can register these forces by doing experiments, and the outcome of these observations is a fairly complex structure of Abelian and non-Abelian gauge forces, each with its own interaction strength, in combination with a couple of other direct interaction effects. Taken together, we have more than 20 freely adjustable ‘constants of nature’. They can be measured with reasonable accuracy, but should there not be a theory that explains those numbers precisely, making them calculable before we measure anything? The Copenhagen doctrine cannot provide answers to such questions.

A third question, finally, is extremely fundamental. Even in the early days of quantum mechanics, the question was asked what ‘really happens’ when we observe some quantum phenomenon. Heated debates at the time could not lead to complete agreement, and the Copenhagen school then decided to ‘agree that we disagree’, since the answer to the question was deemed irrelevant: all we see is the outcome of measurements, and we know what our theories say about them; ‘let us just agree on that’. Do not ask questions that cannot be answered by doing measurements.

It is this last dictum of Copenhagen that the present author decided not to follow. It is true that the answer cannot come from measurements, but the question that can be answered is: what could have happened in an experiment that gave the observed results? Could the particles we experimented upon have been behaving as totally classical objects? Maybe uncertainties in the initial state gave rise to uncertainties in the finally observed state, while the outcomes of the measurements nevertheless agree with the known quantum theory [6, 7].

We believe that the descriptions given within the Copenhagen doctrine have been shown to be totally reliable, which implies that we do not need new measurements; one can now merely ask the question: “Assuming that quantum mechanics predicts the statistical abundances and correlations correctly, which behavioural law could have been followed to give these answers?” The question is meaningful [8], and here we shall argue that it is also important, since the answer(s) may be extremely helpful in trying to answer the other two questions.

The most logical starting point is to assume some basic evolution law that does not require Hilbert-space procedures, but merely deterministic laws based on cause and effect. We call such evolution laws ‘classical’ for short, even if they are not entirely classical in the standard sense. Classical evolution equations are usually differential equations, whereas we also allow for discrete jumps.

2 Models

Our starting point is first to consider the completely general case of a ‘classical’ model in the sense described above [9]. For the time being we take a system that can be in a finite number of physical states. At integer time steps, \(t=n\,\delta t\), with \(n\) an integer, states evolve into other states; we take this evolution law to be invertible, so that no two states evolve into the same state. Inevitably then, after some number \(N_1\) of steps, the system returns to its initial state. If \(N_1\) equals the total number of states allowed, this ends the description of what happens: our system is periodic. Alternatively, some states may be left over; these must belong to other periodic sequences. Altogether, this world is described by a finite number, \(k\), of state sequences, each consisting of \(N_i\) members, \(i=1,\ \dots , k\). The total number of states is \(N=\sum _iN_i\).
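
The decomposition into periodic sequences can be made concrete. The following Python sketch assumes a hypothetical toy law, \(s\mapsto s^3 \bmod 11\) on the states \(0,\dots,10\), chosen only because it is invertible; it follows each orbit until it closes and records the periods \(N_i\), whose sum is the total number of states \(N\).

```python
from typing import Callable, List


def cycle_decomposition(step: Callable[[int], int], n_states: int) -> List[List[int]]:
    """Split the states {0, ..., n_states - 1} into the periodic sequences of an
    invertible (bijective) evolution law `step`."""
    seen = [False] * n_states
    cycles: List[List[int]] = []
    for start in range(n_states):
        if seen[start]:
            continue
        cycle, s = [], start
        while not seen[s]:
            seen[s] = True
            cycle.append(s)
            s = step(s)
        # For a bijection, every orbit closes on its own starting state.
        if s != start:
            raise ValueError("evolution law is not invertible")
        cycles.append(cycle)
    return cycles


# Hypothetical toy law on M = 11 states: s -> s**3 mod 11.
# It is a bijection because gcd(3, 10) = 1, so every state lies on a cycle.
M = 11


def toy_step(s: int) -> int:
    return s**3 % M


cycles = cycle_decomposition(toy_step, M)
periods = [len(c) for c in cycles]
print(cycles)
print(periods, sum(periods))   # prints [1, 1, 4, 4, 1] 11
```

For a law that is not invertible, an orbit may close on a state other than its starting point, which the function reports as an error; this is why invertibility is needed for the periodic picture.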

We now introduce Hilbert space just for our convenience, declaring every physical state to be an element of an orthonormal basis for this Hilbert space. The classical evolution law can be written as a unitary matrix \(U_{\delta t}\) mapping every state onto its successor. After time \(\Delta t=n\,\delta t\), Each periodic sequence has the property

so a simple derivation yields that the eigenvalues of \(U(t)\) are

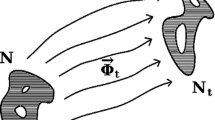

Here, \(E^{\,0}_i\) is a real number depending on the sequence \(i\). It is arbitrary because the label \(i\) of the sequence we are in is conserved by the evolution law, so each sequence may be given its own constant energy offset. The energy spectra are sketched in Fig. 1.

Fig. 1 The energy spectrum of a deterministic theory. (a) The case of a single cycle of 11 states. The total energy range \(E^{\mathrm {\,max}}\) equals \(2\pi /\delta t\), where \(\delta t\) is the smallest time step. The separation \(\delta E\) equals \(2\pi /P\) where \(P\) is the period. (b) There could be many cycles, depending on the initial state. Cycles with periods \(N_i=4,\ 13,\ 9\) and 7 are shown. Since they share the same time steps \(\delta t\), their energies have the same range, but the separations \(\delta E_i\) are different. Then, we can freely add relative shifts \(E^{\,0}_i\) for the different sequences, since \(E^{\,0}_i\) are absolutely conserved in time. (c) When merged, we see that the energy levels can become quite complex, but the equality of some separations can still be recognised.

It is easy to verify that the energy spectrum, which does not depend on the basis chosen, specifies our evolution operator entirely up to a change of basis. Thus the problem of interpreting quantum mechanical phenomena boils down to understanding the energy spectrum.
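
As a numerical check of the spectrum (2.2), the sketch below builds the permutation matrix \(U_{\delta t}\) for the four cycles of Fig. 1(b) (periods 4, 13, 9 and 7), takes \(\delta t=1\) and all \(E^{\,0}_i=0\) (both assumptions), and reads off the energies from the eigenvalues \(e^{-iE\,\delta t}\). It uses NumPy; the function names are illustrative.

```python
import numpy as np


def permutation_matrix(cycle_lengths):
    """Unitary U_dt of a deterministic law made of disjoint cycles, U|s> = |successor(s)>."""
    n = sum(cycle_lengths)
    U = np.zeros((n, n))
    start = 0
    for length in cycle_lengths:
        for j in range(length):
            U[start + (j + 1) % length, start + j] = 1.0
        start += length
    return U


def energies(U, dt):
    """Energies E in [0, 2*pi/dt) defined by the eigenvalues exp(-i E dt) of U."""
    phases = np.mod(-np.angle(np.linalg.eigvals(U)), 2 * np.pi)
    phases[np.isclose(phases, 2 * np.pi)] = 0.0   # fold round-off just below 2*pi back to 0
    return np.sort(phases / dt)


dt = 1.0                      # assumed time step
cycles = [4, 13, 9, 7]        # periods N_i of Fig. 1(b)
E = energies(permutation_matrix(cycles), dt)

# Spectrum with all offsets E0_i = 0: E = 2*pi*k / (N_i * dt), k = 0, ..., N_i - 1
predicted = np.sort(np.concatenate([2 * np.pi * np.arange(N) / (N * dt) for N in cycles]))
print(len(E), np.allclose(E, predicted))
```

All energies lie in the interval \([0, 2\pi/\delta t)\), the range \(E^{\mathrm{\,max}}\) of Fig. 1, and each cycle contributes equally spaced levels with separation \(2\pi/(N_i\,\delta t)\).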

We can thus write almost any classical system as if it were a quantum system, in quantum notation, and it is not hard to use the squared norms of the state-vector components to describe probabilities, so that Born’s probability ansatz can be employed without any loss of generality. This shows that if we encounter a quantum system with an energy spectrum of the form (2.2), it may be interpreted as a classical system of the kind described here.
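
A minimal sketch of why Born’s rule is compatible with the classical evolution: for a permutation matrix, squaring the amplitudes after the evolution gives the same result as moving the classical probabilities along the deterministic law. The six-state law used here (two 3-cycles) and the random wave function are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(seed=1)

n = 6
step = np.array([2, 0, 1, 4, 5, 3])   # hypothetical law: state s evolves into step[s]
U = np.zeros((n, n))
U[step, np.arange(n)] = 1.0           # permutation matrix: U|s> = |step[s]>

# Arbitrary normalised wave function over the automaton's basis states
psi = rng.normal(size=n) + 1j * rng.normal(size=n)
psi /= np.linalg.norm(psi)

born_after = np.abs(U @ psi) ** 2     # Born rule applied after the unitary evolution
p_classical = np.zeros(n)
p_classical[step] = np.abs(psi) ** 2  # probabilities carried along the classical law
print(np.allclose(born_after, p_classical))
```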

The converse, however, is in general not true: if we consider just any quantum system, its energy spectrum need not be of the form (2.2). On the other hand, the spectrum (2.2) is sufficiently general to approximate given quantum systems in the large-size limit, where the spectrum becomes continuous. This is why we do not stop here: we search for quantum systems that mimic some classical spectrum accurately enough to serve as useful models.

Next, we have to worry about locality. The prototype of a model with locality is the cellular automaton [10]. In a cellular automaton (CA), the physical variables are updated in integer time steps \(t=n\,\delta t\), and the cells are separated by a lattice mesh parameter \(a\). At first sight, one might worry that this is not a strict form of locality, but it is actually the closest substitute for locality on a lattice: it takes a time \(\delta t\) for a signal to be passed on to a neighbouring cell, so signals cannot move faster than \(c=a/\delta t\), and to define locality we cannot ask for more than that.¹
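
The speed limit \(c=a/\delta t\) can be observed directly in a simulation. The sketch below uses a hypothetical one-dimensional automaton on a ring: elementary rule 30, made invertible by the standard second-order construction \(s_{t+1}(x)=f\big(s_t(x-1),s_t(x),s_t(x+1)\big)\oplus s_{t-1}(x)\), so that it permutes states as in Sect. 2. Flipping a single cell at \(t=0\) changes nothing outside the ‘light cone’ \(|x-x_0|\le t\).

```python
import numpy as np


def step(prev, cur, rule=30):
    """One time step of a second-order 1D automaton on a ring of cells:
    new(x) = f(cur(x-1), cur(x), cur(x+1)) XOR prev(x).
    Returns the pair (cur, new), i.e. the state at the next time step."""
    left, right = np.roll(cur, 1), np.roll(cur, -1)
    pattern = (left << 2) | (cur << 1) | right      # neighbourhood code 0..7
    f = (rule >> pattern) & 1                        # elementary-rule lookup
    return cur, f ^ prev


L, T, x0 = 64, 20, 32
rng = np.random.default_rng(0)
prev = rng.integers(0, 2, L)
cur = rng.integers(0, 2, L)

# Two histories that differ in a single cell at t = 0: the "signal"
cur_flipped = cur.copy()
cur_flipped[x0] ^= 1
h1, h2 = (prev, cur), (prev.copy(), cur_flipped)

max_spread = []
for t in range(1, T + 1):
    h1, h2 = step(*h1), step(*h2)
    diff = np.nonzero(h1[1] != h2[1])[0]
    max_spread.append(int(np.abs(diff - x0).max()) if diff.size else 0)
print(max_spread)   # never exceeds t: at most one cell (distance a) per step dt

# Invertibility: swapping the pair reverses time, so the law is a permutation
back = (h1[1], h1[0])
for _ in range(T):
    back = step(*back)
restored = np.array_equal(back[1], prev) and np.array_equal(back[0], cur)
print(restored)
```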

Can we construct realistic models that approach the desired quantum energy spectra while respecting locality? For a long time it was thought that this had been proven impossible. Some very ‘plausible’ assumptions had been employed, but these assumptions were made without first exploring the world of classical models, as we did above. For instance, it is often assumed that Alice and Bob can modify the settings of their detectors without affecting the ‘statistical independence’ of all physical variables in the intermediate regions of space and time. Our starting points above, consisting of complete lists of periodic sequences of states, never needed the assumption of statistical independence; in fact, we are not doing statistics at all, but instead consider single, pure, classical states. The Gedanken experiments proposed by Bell and others could not be analysed at all without invoking statistical arguments (such as the variables in a random number generator used to decide about the settings), and this invalidates the conclusions drawn [11, 12].

In our earlier attempts it seemed hard to generate plausible models. As long as the energy spectra are not right, the chance of success is small. But by looking at the energy spectra, we found the right procedure. There are two key notions to pay attention to: fast variables and the perturbation expansion.

3 Fast variables and the perturbation expansion

As mentioned in Sect. 1, much of our knowledge of the fundamental particles is based on the fact that most of the interaction parameters are small, so that they can be incorporated in our models through power expansions. This is usually done in terms of the degrees of freedom we have, written in quantum notation. One never encounters fundamental restrictions on the energy spectrum that way. Classical CA models, however, do not carry small, tuneable interaction constants, so we must invent a new way to introduce them. This we do by introducing fast variables [13]. A fast variable is here meant to be a variable that follows a periodic path with a very short period. Typically, their periods \(P\) will obey

\[P\ll \frac{2\pi}{E^{\mathrm {\,max}}}, \qquad (3.1)\]

where \(E^{\mathrm {\,max}}\) now denotes an upper limit on the energies of the quantum particles observed in any experiment. It can also be interpreted as a limit on the time resolution of our observations in practice, or as the typical energy scale of the quantum transitions considered. If those energies are small compared to the level spacing \(2\pi/P\) of our fast variables, then we may safely assume that those variables stay in their lowest energy modes. Now, as we saw in Sect. 2, the energy spectrum of fast, periodic variables takes the form (2.2), that is,

\[E_n=E_0+\frac{2\pi n}{P},\qquad n=0,1,\dots ,N_{\mathrm {fast}}-1,\qquad P=N_{\mathrm {fast}}\,\delta t. \qquad (3.2)\]

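This means that only the lowest energy level, \(E_0\), plays a role. We may assume that both the initial state and the final state are in this lowest energy mode for the fast variable. This means that we have to use states that are not the realistic states for the fast variables, but states in which the fast variable is in a particular superposition. We are tempted to make a \(1/(E_n-E_0)\) expansion. In this expansion, the interaction Hamiltonian emerges as

\[H^{\mathrm {eff}}=\langle E_0|H^{\mathrm {int}}|E_0\rangle-\sum_{n\neq 0}\frac{\langle E_0|H^{\mathrm {int}}|E_n\rangle\,\langle E_n|H^{\mathrm {int}}|E_0\rangle}{E_n-E_0}+\dots \qquad (3.3)\]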

The interaction Hamiltonian may bring about a permutation of the slow variables that depends on the values of the fast variables, so all its matrix elements are 0 or 1. However, the state \(|E_0\rangle \) is normalised to unit norm, being the uniform superposition of the positions the fast variable visits, and this introduces factors \((1/\sqrt{N_{\mathrm {fast}}})^2=1/N_{\mathrm {fast}}\), where \(N_{\mathrm {fast}}\) counts the number of states the fast variable visits before completing its period.
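
The factor \(1/N_{\mathrm{fast}}\) can be checked in a few lines. The sketch assumes a hypothetical interaction that swaps two slow states (\(\sigma_x\)) whenever the fast variable sits at one particular position \(k_0\); all its matrix elements are 0 or 1, yet its expectation value in the uniform superposition \(|E_0\rangle\) is \(\sigma_x/N_{\mathrm{fast}}\).

```python
import numpy as np

N = 12        # N_fast: number of states on the fast cycle (hypothetical)
k0 = 5        # hypothetical fast-variable position that triggers the slow swap

sigma_x = np.array([[0.0, 1.0], [1.0, 0.0]])   # swap of two slow states (hermitian)
proj_k0 = np.zeros((N, N))
proj_k0[k0, k0] = 1.0
H_int = np.kron(proj_k0, sigma_x)              # fast (x) slow; entries are 0 or 1

E0 = np.ones(N) / np.sqrt(N)                   # lowest-energy state: uniform superposition
shift = np.roll(np.eye(N), 1, axis=0)          # one step of the fast cycle, |k> -> |k+1>
assert np.allclose(shift @ E0, E0)             # |E0> is invariant under the cycle

# Partial matrix element <E0| H_int |E0>, leaving an operator on the slow states
B = np.kron(E0.reshape(N, 1), np.eye(2))       # embeds slow state s as |E0> (x) |s>
H_eff_1 = B.T @ H_int @ B
print(H_eff_1 * N)                             # sigma_x up to rounding: H_eff_1 = sigma_x / N
```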

\(N_{\mathrm {fast}}\) may be large, which now enables us to employ a perturbation expansion. Before, this was impossible because we were dealing with realistic states, where small numbers do not exist. We could view this as an application of statistics, but here, everything is completely calculable. We now see how quantum mechanics can emerge in a deterministic system. Some fast variables will always be too fast for us to detect, so that we are forced to use averages, but this gives us the small numbers needed for setting up perturbation expansions.
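
To see that the \(1/(E_n-E_0)\) expansion behaves as claimed, the sketch below compares the two lowest exact eigenvalues of a small fast-plus-slow toy system with those of the effective Hamiltonian truncated at second order, \(\langle E_0|H^{\mathrm{int}}|E_0\rangle-\sum_{n\neq0}|\langle E_0|H^{\mathrm{int}}|E_n\rangle|^2/(E_n-E_0)\). The sizes, period, coupling \(g\) and trigger position \(k_0\) are hypothetical choices; with \(g\) small compared with the level spacing \(2\pi/P\), the second-order result is much closer to the exact levels than the first-order term \(g\,\sigma_x/N_{\mathrm{fast}}\) alone.

```python
import numpy as np

# Hypothetical toy: fast cycle of N states with period P, slow two-state system,
# interaction g * |k0><k0| (x) sigma_x (the slow pair is swapped when fast = k0).
N, P, g, k0 = 8, 1.0, 0.1, 3
sigma_x = np.array([[0.0, 1.0], [1.0, 0.0]])

n = np.arange(N)
E = 2 * np.pi * n / P                                      # fast levels E_n, with E_0 = 0
F = np.exp(2j * np.pi * np.outer(n, n) / N) / np.sqrt(N)   # column m = |E_m>, position basis
H_fast = F @ np.diag(E) @ F.conj().T

proj_k0 = np.zeros((N, N))
proj_k0[k0, k0] = 1.0
H = np.kron(H_fast, np.eye(2)) + g * np.kron(proj_k0, sigma_x)
exact = np.linalg.eigvalsh(H)[:2]                          # two lowest levels

# Effective slow Hamiltonian to second order in 1/(E_n - E_0)
amp = F[k0, :]                                             # <k0|E_m>
c0 = abs(amp[0]) ** 2                                      # = 1/N
second = sum(c0 * abs(amp[m]) ** 2 / (E[m] - E[0]) for m in range(1, N))
H_eff = g * c0 * sigma_x - g**2 * second * np.eye(2)
approx = np.linalg.eigvalsh(H_eff)
first_order = np.array([-g * c0, g * c0])

print(exact, approx)
print(np.abs(exact - first_order).max(), np.abs(exact - approx).max())
```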

It may seem that the type of interaction chosen between the fast and the slow variables is non-Newtonian, that is, action without reaction: the fast variable dictates transitions among the slow ones, but not vice versa, which keeps the fast variable in its lowest energy state. This is actually not true in the quantum expressions: even if the fast variable does not react visibly to the states of the slow ones, the interaction Hamiltonian is hermitian. As such it affects the statistical distributions of the fast variables, eventually causing transitions into higher energy states. It is just that the amplitudes are very low.

The addition of the fast variables may clarify some issues raised by critics, who could not understand how a classical model such as a cellular automaton could ever evolve through wave functions \(\psi \) while the observable physical features are probability distributions. At extremely high energies, or extremely high accuracies, the excited states of the fast variables will produce linear sequences of energy eigenvalues, betraying their classical nature, but this does not invalidate the use of wave functions and operators at low energies. The energy eigenstates are purely mathematical features, and they behave much more like wave functions than like probability distributions, indeed at all energy scales. At very high energies, we do expect sizeable deviations from standard quantum field theories, even though quantum mechanics itself is not affected.

Curiously, critics find the underlying mathematics difficult to understand. Actually, the mathematics of all of the above is quite basic and should not present any problem. Superpositions, collapsing wave functions and Born’s probability interpretation are all derived in a completely straightforward way from the unitary transformation matrices relating different basis choices.²

The reason why other critics still do not accept some of these views may be more serious, however: the cellular automata do not respect the essential symmetry features of the quantised fields in the Standard Model. This emphatically includes rotation symmetry and Lorentz invariance. The conservation of spin, in all directions, is an essential ingredient in Bell’s theorem, more essential perhaps than many people realise. If this is felt as an objection against my views, I accept it; more study is needed to understand the origin of the rich symmetry structures of our world, in spite of a cellular structure at ultra-tiny distance scales. We briefly discuss the symmetries in the next section.

As stated in Sect. 1, most perturbation expansions are formally divergent, but if the first few terms decrease sufficiently rapidly then our expressions make sense and our results may be compared with observations. The important improvement this entails for perturbative field theories is that the theory itself may be defined by a CA evolution law that is interpreted as being exact, and perturbation expansions are only needed for comparison with experiment. Whenever the expansion diverges too badly, we replace it by simulations of the CA itself. This may be hard in practice because the fast variable(s) may move to many different positions, but, at least in principle, no unreliable approximations are needed to define our theories.
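
The behaviour of a formally divergent expansion whose first terms still decrease can be illustrated with a classic toy example, not taken from field theory: Euler’s series \(\sum_n (-1)^n n!\,g^n\), the asymptotic expansion of \(\int_0^\infty e^{-t}/(1+g\,t)\,dt\). For \(g=0.1\) (an arbitrary choice) the partial sums approach the exact integral up to roughly \(n\approx 1/g\) and then run away, so the best accuracy is obtained by truncating near the smallest term.

```python
import math

import numpy as np

g = 0.1   # hypothetical small coupling

# Reference value of I(g) = int_0^inf exp(-t) / (1 + g t) dt by composite Simpson's rule;
# the integrand is negligible (< e^-60) beyond t = 60.
t = np.linspace(0.0, 60.0, 600_001)          # 600 000 intervals (an even number)
f = np.exp(-t) / (1.0 + g * t)
h = t[1] - t[0]
exact = h / 3 * (f[0] + f[-1] + 4 * f[1:-1:2].sum() + 2 * f[2:-1:2].sum())

# Partial sums of the formal expansion sum_n (-1)^n n! g^n (zero radius of convergence)
partial, errors = 0.0, []
for n in range(31):
    partial += (-1) ** n * math.factorial(n) * g**n
    errors.append(abs(partial - exact))

best = int(np.argmin(errors))
print(f"exact = {exact:.8f}")
print(f"best truncation at n = {best}, error = {errors[best]:.1e}")
print(f"error after n = 30 terms: {errors[30]:.1e}")
```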

As for locality, there is no reason to worry at all. In the past, locality was usually thought to be a property of the quantum system, to which the deterministic underlying theory was subservient. Locality was regarded as a property of observed phenomena rather than of the theory itself. However, it should eventually amount to ascertaining that no signals of any form can travel faster than light, and this feature is much easier to check by inspecting the classical equations for the dynamics. We mentioned already that classical lattice theories naturally impose speed limits on signals. Even the fast variables can easily be localised in space, so that they also cannot transport signals faster than these speed limits.

4 Standard model

Knowing how quantum theories can be linked to systems such as cellular automata, we can now try to set up such a formalism for the quantised fields used in the Standard Model (SM). For reasons to be spelled out in the next section, one expects the dynamical variables for the Standard Model to live close to the Planck scale. This means that direct observations will be unlikely. We really need to use accurate mathematical arguments to proceed there, and it will be important to keep many options open.

As observed in the previous section, the most conspicuous feature of the Standard Model is its rich symmetry structure. Perhaps the three local gauge groups, \(U(1),\ SU(2),\) and \(SU(3)\), will be the easiest to handle, since they are local and compact. What we find is that the physically relevant states are the ones invariant under these gauge transformations. This reminds us of the fast variables, where the relevant state is the lowest energy eigenstate, which is invariant under shifts along the periodic orbit. This leads to the suggestion that quantum gauge theories can emerge from classical systems if the latter are thought to run very fast over special orbits in the space of gauge transformations, so that, just as for the other fast variables, only the gauge-invariant state survives. Perhaps \(U(1),\ SU(2)\) and \(SU(3)\) are the essential spaces in which all fast variables move around. We suggest that this must be a discrete space, which would imply that the real local gauge group is a discrete subgroup of \(U(1)\times SU(2)\times SU(3)\), as sometimes used in lattice gauge field simulation models.
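
The statement that only gauge-invariant states survive can be illustrated with a hypothetical discrete gauge group \(\mathbb{Z}_N\subset U(1)\) acting on states of integer charge \(q\) by the phase \(e^{2\pi i kq/N}\). Averaging over all group elements, in the spirit of a fast variable running over the group orbit, yields the projector onto invariant states, which keeps only charges that are multiples of \(N\). The values of \(N\) and of the charge range are arbitrary.

```python
import numpy as np

N = 6                                  # hypothetical discrete gauge group Z_N inside U(1)
charges = np.arange(-7, 8)             # basis states labelled by an integer charge q


def U(k: int) -> np.ndarray:
    """Action of the group element exp(2*pi*i*k/N) on the charge-q basis states."""
    return np.diag(np.exp(2j * np.pi * k * charges / N))


# Average over the whole group orbit: the projector onto gauge-invariant states
proj = sum(U(k) for k in range(N)) / N
invariant = np.isclose(np.diag(proj).real, 1.0)
print(charges[invariant])              # only the charges that are multiples of N survive
```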

At least one symmetry group of the Standard Model is non-compact, besides being continuous. This is the Lorentz group, or the Poincaré group. Such symmetries would be the most difficult to understand if they were to emerge in a cellular automaton. It may be helpful to realise that limits in the Lorentz boost parameters may be expected as soon as matter is present, if we may assume that there are limits for the amounts of energy admissible within a given volume. Thus, eventually the inclusion of the gravitational force might be unavoidable. How to implement such ideas we do not know, but this may be a reason to suspect that, as long as we do not include gravitation, Lorentz invariance will only be approximately obeyed.

An important step will be the realisation of the Dirac equation. There were earlier attempts in lower dimensions and in the absence of mass, by Wetterich [6, 7]. In the case of 3+1 dimensions, and/or with a mass term, the energy spectrum shows that we shall need fast variables, Sect. 3. This has not yet been done explicitly, as far as we know. It will be of much interest to extend such a model into QCD, by including couplings of the Dirac fields to the link variables of the local gauge group \(SU(3)\). It all depends on how well continuum limits will be arrived at. This we cannot foresee. It will be very worthwhile to make some attempts, since we might arrive at a simulation technique for QCD in which we can follow in Minkowskian time how events evolve.

Next, we need a dynamical degree of freedom that explains the existence of the Higgs field. The most conspicuous feature there is the very low value of the Higgs mass compared with the Planck mass. This has proven to be difficult to understand. A possible connection with cosmology was suggested by Bezrukov et al. [14], and Jegerlehner [15]. Here, we mention that, due to Goldstone’s theorem [16, 17], a low-mass scalar field must be related to a global symmetry. Since the field of the neutral Higgs particle is formed by the invariant length of the Higgs isospinor field, the Goldstone symmetry here must involve global scale transformations of the Higgs isospinor. It is almost, but not quite, an exact symmetry at the Planck scale, broken by about one part in \(10^{34}\), the square of the ratio of the Higgs mass to the Planck mass. One suggestion could be that, as for everything else in this theory, the Higgs field takes integer values only. Perhaps statistical laws force the vacuum values to be rather small integers, while there must be an upper limit to the size of the Higgs isospinor. If this size limit is chosen as a sufficiently large integer, it might violate the Higgs scale invariance by the required small amount.
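
The quoted number follows from simple arithmetic. The sketch uses approximate values for the Higgs boson mass (about 125.25 GeV) and the non-reduced Planck mass \(\sqrt{\hbar c/G}\approx 1.221\times 10^{19}\) GeV; with the reduced Planck mass the squared ratio would be larger by a factor \(8\pi\).

```python
import math

m_higgs = 125.25        # GeV, approximate measured Higgs boson mass
m_planck = 1.22089e19   # GeV, approximate (non-reduced) Planck mass sqrt(hbar c / G)

ratio_sq = (m_higgs / m_planck) ** 2
print(f"(m_H / M_P)^2 = {ratio_sq:.2e}")          # prints (m_H / M_P)^2 = 1.05e-34
print("order of magnitude:", round(math.log10(ratio_sq)))
```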

Spinor fields can be introduced in the automaton theory by accepting Boolean variables; these give rise to anticommuting field variables. A study of the role of fermions in cellular automaton theories was made by Wetterich [6, 7]. An interesting question is the origin of the rich Yukawa interactions displayed by the observed fermions; they too are expansion parameters and must be tuned down by factors \(1/N\). We emphasise these observations, hoping that one day such features will be calculable.
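
One standard way to turn Boolean variables into anticommuting operators is the Jordan–Wigner construction; it is shown here only as an illustration and is not necessarily the construction used in [6, 7]. Each site carries one bit, and the operator that lowers bit \(j\) is dressed with parity factors \(Z\) on all sites before \(j\); the sketch checks the canonical anticommutation relations for three sites.

```python
from functools import reduce

import numpy as np

I2 = np.eye(2)
Z = np.diag([1.0, -1.0])                    # parity (-1)^bit of one site
lower = np.array([[0.0, 1.0], [0.0, 0.0]])  # maps bit value 1 to bit value 0


def annihilator(j: int, n: int) -> np.ndarray:
    """Jordan-Wigner operator a_j on n Boolean sites: Z on sites < j, 'lower' on site j."""
    ops = [Z] * j + [lower] + [I2] * (n - j - 1)
    return reduce(np.kron, ops)


def anticommutator(A: np.ndarray, B: np.ndarray) -> np.ndarray:
    return A @ B + B @ A


n = 3
a = [annihilator(j, n) for j in range(n)]
identity = np.eye(2**n)
for i in range(n):
    for j in range(n):
        # {a_i, a_j} = 0 and {a_i, a_j^dagger} = delta_ij (matrices are real, so .T is the adjoint)
        assert np.allclose(anticommutator(a[i], a[j]), 0.0)
        assert np.allclose(anticommutator(a[i], a[j].T), identity * (i == j))
print("canonical anticommutation relations hold for", n, "sites")
```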

5 Gravity: nature’s Rubik cube

Unfortunately, some of the schemes suggested above may be highly premature. The reason for this suspicion is that at the Planck scale the gravitational force will surely take over. To stay in line with our general considerations, it will be mandatory to search for a classical model for gravity as well. We should ask for invariance under local diffeomorphisms or, if possible, some discrete substitute for that. Our problem is that diffeomorphism invariance does not square well with discreteness. Yet we believe that our approach is more promising than some of the usual attempts to ‘quantise gravity’: instead, we propose to first ‘discretise gravity’.

In fact we have a more direct indication that the allowed classical states may be sparse at the Planck scale; this is suggested when we analyse Hawking radiation emitted by a black hole. Since this radiation is thermal, one can derive the state density by applying statistical mechanics. This gives us approximately one physical degree of freedom on each surface element of Planckian dimensions on a black hole horizon. It is tempting to believe that this also points to discreteness of states as seen by freely falling observers in the same region of space-time. These states should include all structures involved with the local curvature of space and time.
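
For a sense of scale, the Bekenstein–Hawking entropy \(S=k_B\,A/(4\,l_P^2)\), with \(l_P^2=\hbar G/c^3\), counts the horizon area \(A\) in Planck units. The sketch evaluates it for a black hole of one solar mass (an illustrative choice), using approximate values of the constants; the result, of order \(10^{77}\), corresponds to roughly one degree of freedom per few Planck-sized area elements.

```python
import math

# Approximate SI values (CODATA for the constants; M_sun rounded)
G = 6.67430e-11          # m^3 kg^-1 s^-2
c = 2.99792458e8         # m s^-1
hbar = 1.054571817e-34   # J s
M_sun = 1.989e30         # kg

l_planck_sq = hbar * G / c**3          # Planck area l_P^2, m^2
r_s = 2 * G * M_sun / c**2             # Schwarzschild radius, m
area = 4 * math.pi * r_s**2            # horizon area, m^2

n_cells = area / l_planck_sq           # number of Planck-sized area elements
entropy = area / (4 * l_planck_sq)     # Bekenstein-Hawking entropy S / k_B
print(f"r_s = {r_s:.3e} m, A / l_P^2 = {n_cells:.2e}, S / k_B = {entropy:.2e}")
```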

In a discrete space-time, the ultimate diffeomorphic transformation is the permutation of two space-time points. From there, one might hope to return to the continuum limit. This sounds like a very hard problem because of the paucity of clues available: how do the laws of physics change under a two-point permutation?

There may be better ways to pose such questions. Which space-time transformation may be easier to handle? Here, we propose a transformation that came about from the study of quantum black holes. There, we found important space-time effects when an ultra-fast particle is added to or removed from a system. Such a particle carries a gravitational field in the form of a shock wave [18], or a Cherenkov flash of gravitational radiation. The creation or annihilation of an energetic particle causes space-time to be ripped apart into two pieces, the part in front of and the part behind the particle. The parts are glued together again after a shift, whose magnitude follows from Einstein’s equations. This is a simple example of a diffeomorphism transformation generated by a particle.

If, however, two or more particles are created or annihilated, the combination of two such fissures results in a more complicated diffeomorphism, as the two may not commute. This situation is reminiscent of what one needs to do to solve Rubik’s cube: a single move causes quite a few of the faces to interchange colours, but by applying carefully chosen sequences of such moves, one may end up with a single two-point permutation. Perhaps we should indeed try to formulate the effects of a single permutation of two neighbouring space-time points similarly, but the author has not yet succeeded in completing this space-time Rubik’s cube puzzle. The question will be whether such procedures can be discretised.
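
The Rubik’s cube idea, that combinations of large moves can act on very few points, is captured by the commutator \(a\,b\,a^{-1}b^{-1}\) of two permutations. The sketch uses abstract permutations only, not a model of space-time: two hypothetical ‘moves’ that each disturb five points and overlap in a single point have a commutator that moves just three points.

```python
def compose(p, q):
    """(p o q)(x) = p[q[x]]: apply q first, then p."""
    return tuple(p[x] for x in q)


def inverse(p):
    inv = [0] * len(p)
    for x, y in enumerate(p):
        inv[y] = x
    return tuple(inv)


def cycle(points, n):
    """Permutation of range(n) sending points[i] -> points[i + 1], cyclically."""
    p = list(range(n))
    for i, x in enumerate(points):
        p[x] = points[(i + 1) % len(points)]
    return tuple(p)


def moved(p):
    """Set of points that the permutation p does not leave fixed."""
    return {x for x, y in enumerate(p) if x != y}


n = 9
a = cycle([0, 1, 2, 3, 4], n)      # a "move" disturbing five points
b = cycle([4, 5, 6, 7, 8], n)      # another move, overlapping a in point 4 only
comm = compose(a, compose(b, compose(inverse(a), inverse(b))))
print(sorted(moved(a)), sorted(moved(b)), sorted(moved(comm)))
# prints [0, 1, 2, 3, 4] [4, 5, 6, 7, 8] [0, 4, 5]
```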

Needless to say, this last section contains just wild speculations on where to go next. These were brought up merely to emphasise that questions can be phrased in ways different from the usual ones, and that we need to consider such questions to make more lasting progress.

Data availability

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

Notes

1 If the CA is on a lattice, one expects signals to move faster in particular lattice directions than in others. This is not seen to happen in the real world, as we have rotation invariance. This topic is to be handled later.

2 Notably, the Born rule, linking probabilities to wave functions, seemed to be a big mystery for my critics. If we turn to the basic real states of an automaton, the Born probabilities travel along the same paths as the wave functions, so that the operation of squaring one to get the other remains rigorously valid during the evolution. Born’s rule is not valid when applied among superpositions alone, but careful analysis reveals that this is never needed for the physical interpretation.

References

[1] Padmanabhan, T., Chakraborty, S.: Microscopic origin of Einstein’s field equations and the raison d’être for a positive cosmological constant. Phys. Lett. B 824, 136828 (2022). arXiv:2112.09446

[2] Callan, C.G.: Broken scale invariance in scalar field theory. Phys. Rev. D 2, 1541 (1970)

[3] Symanzik, K.: Small distance behaviour and power counting. Commun. Math. Phys. 18, 227 (1970)

[4] Wilson, K.G., Fisher, M.E.: Critical exponents in 3.99 dimensions. Phys. Rev. Lett. 28, 240 (1972)

[5] ’t Hooft, G.: Dimensional regularisation and the renormalization group. Nucl. Phys. B 61, 455 (1973)

[6] Wetterich, C.: Probabilistic cellular automata for interacting fermionic quantum field theories. Nucl. Phys. B 963, 115296 (2021). arXiv:2007.06366 [quant-ph]

[7] Wetterich, C.: Fermionic quantum field theories as probabilistic cellular automata. arXiv e-prints arXiv:2111.06728 [hep-lat] (2021)

[8] Bell, J.S.: On the Einstein Podolsky Rosen paradox. Physics 1, 195 (1964)

[9] ’t Hooft, G.: The Cellular Automaton Interpretation of Quantum Mechanics. Fundamental Theories of Physics, vol. 185. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-41285-6. arXiv:1405.1548

[10] Zuse, K.: Rechnender Raum. Friedrich Vieweg & Sohn, Braunschweig (1969). Transl.: Calculating Space. MIT Technical Translation AZT-70-164-GEMIT, Massachusetts Institute of Technology (Project MAC), Cambridge, Mass. 02139. Adrian German and Hector Zenil (eds.)

[11] Hossenfelder, S., Palmer, T.N.: Rethinking Superdeterminism. Front. Phys. 8, 139 (2020). arXiv:1912.06462 [quant-ph]

[12] Hossenfelder, S.: Superdeterminism: A Guide for the Perplexed. arXiv e-prints arXiv:2010.01324v2 [quant-ph] (2020)

[13] ’t Hooft, G.: Explicit construction of Local Hidden Variables for any quantum theory up to any desired accuracy. arXiv e-prints arXiv:2103.04335 [quant-ph] (2021)

[14] Bezrukov, F., Kalmykov, M.Y., Kniehl, B.A., Shaposhnikov, M.: Higgs boson mass and new physics. J. High Energy Phys. 1210, 140 (2012). arXiv:1205.2893v2

[15] Jegerlehner, F.: The Standard Model of particle physics as a conspiracy theory and the possible role of the Higgs boson in the evolution of the Early Universe. Acta Phys. Pol. B 52, 575 (2021). arXiv:2106.00862

[16] Goldstone, J.: Field theories with superconductor solutions. Nuovo Cim. 19, 154 (1961)

[17] Nambu, Y., Jona-Lasinio, G.: Dynamical model of elementary particles based on an analogy with superconductivity. I. Phys. Rev. 122, 345 (1961)

[18] Aichelburg, P.C., Sexl, R.U.: On the gravitational field of a massless particle. Gen. Rel. Grav. 2, 303 (1971). https://doi.org/10.1007/BF00758149

Additional information

In Memory of Professor T Padmanabhan.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

’t Hooft, G. Quantum mechanics, statistics, standard model and gravity. Gen Relativ Gravit 54, 56 (2022). https://doi.org/10.1007/s10714-022-02939-y

DOI: https://doi.org/10.1007/s10714-022-02939-y