Abstract

Automated verification techniques for stochastic games allow formal reasoning about systems that feature competitive or collaborative behaviour among rational agents in uncertain or probabilistic settings. Existing tools and techniques focus on turn-based games, where each state of the game is controlled by a single player, and on zero-sum properties, where two players or coalitions have directly opposing objectives. In this paper, we present automated verification techniques for concurrent stochastic games (CSGs), which provide a more natural model of concurrent decision making and interaction. We also consider (social welfare) Nash equilibria, to formally identify scenarios where two players or coalitions with distinct goals can collaborate to optimise their joint performance. We propose an extension of the temporal logic rPATL for specifying quantitative properties in this setting and present corresponding algorithms for verification and strategy synthesis for a variant of stopping games. For finite-horizon properties the computation is exact, while for infinite-horizon it is approximate using value iteration. For zero-sum properties it requires solving matrix games via linear programming, and for equilibria-based properties we find social welfare or social cost Nash equilibria of bimatrix games via the method of labelled polytopes through an SMT encoding. We implement this approach in PRISM-games, which required extending the tool’s modelling language for CSGs, and apply it to case studies from domains including robotics, computer security and computer networks, explicitly demonstrating the benefits of both CSGs and equilibria-based properties.

1 Introduction

Stochastic multi-player games are a versatile modelling framework for systems that exhibit cooperative or competitive behaviour in the presence of adversarial or uncertain environments. They can be viewed as a collection of players (agents) with strategies for determining their actions based on the execution so far. These models combine nondeterminism, representing the adversarial, cooperative and competitive choices, stochasticity, modelling uncertainty due to noise, failures or randomness, and concurrency, representing simultaneous execution of interacting agents. Examples of such systems appear in many domains, from robotics and autonomous transport, to security and computer networks. A game-theoretic approach also facilitates the design of protocols that use penalties or incentives to ensure robustness against selfish participants. However, the complex interactions involved in such systems make their correct construction a challenge.

Formal verification for stochastic games provides a means of producing quantitative guarantees on the correctness of these systems (e.g. “the control software can always safely stop the vehicle with probability at least 0.99, regardless of the actions of other road users”), where the required behavioural properties are specified precisely in quantitative extensions of temporal logic. The closely related problem of strategy synthesis constructs an optimal strategy for a player, or coalition of players, which guarantees that such a property is satisfied.

A variety of verification algorithms for stochastic games have been devised, e.g., [13, 14, 24, 25, 75]. In recent years, further progress has been made: verification and strategy synthesis algorithms have been developed for various temporal logics [5, 19, 42, 45] and implemented in the PRISM-games tool [51], an extension of the PRISM probabilistic model checker [44]. This has allowed modelling and verification of stochastic games to be used for a variety of non-trivial applications, in which competitive or collaborative behaviour between entities is a crucial ingredient, including computer security and energy management.

A limitation of the techniques implemented in PRISM-games to date is that they focus on turn-based stochastic multi-player games (TSGs), whose states are partitioned among a set of players, with exactly one player taking control of each state. In this paper, we propose and implement techniques for concurrent stochastic multi-player games (CSGs), which generalise TSGs by permitting players to choose their actions simultaneously in each state. This provides a more realistic model of interactive agents operating concurrently, and making action choices without already knowing the actions being taken by other agents. Although algorithms for CSGs have been known for some time (e.g., [14, 24, 25]), their implementation and application to real-world examples has been lacking.

A further limitation of existing work is that it focuses on zero-sum properties, in which one player (or a coalition of players) aims to optimise some objective, while the remaining players have the directly opposing goal. In PRISM-games, properties are specified in the logic rPATL (probabilistic alternating-time temporal logic with rewards) [19], a quantitative extension of the game logic ATL [1]. This allows us to specify that a coalition of players can achieve a high-level objective, regarding the probability of an event’s occurrence or the expectation of a reward measure, irrespective of the other players’ strategies. Extensions have allowed players to optimise multiple objectives [5, 20], but again in a zero-sum fashion.

In this work, we move beyond zero-sum properties and consider situations where two players (or two coalitions of players) in a CSG have distinct objectives to be maximised or minimised. The goals of the players (or coalitions) are not necessarily directly opposing, and so it may be beneficial for players to collaborate. For these nonzero-sum scenarios, we use the well studied notion of Nash equilibria (NE), where it is not beneficial for any player to unilaterally change their strategy. In particular, we use subgame-perfect NE [61], where this equilibrium criterion holds in every state of the game, and we focus on two specific variants of equilibria: social welfare and social cost NE, which maximise and minimise, respectively, the sum of the objectives of the players.

We propose an extension of the rPATL logic which adds the ability to express quantitative nonzero-sum properties based on these notions of equilibria, for example “the two robots have navigation strategies which form a (social cost) Nash equilibrium, and under which the combined expected energy usage until completing their tasks is below k”. We also include some additional reward properties that have proved to be useful when applying our methods to various case studies.

We provide a formal semantics for the new logic and propose algorithms for CSG verification and strategy synthesis for a variant of stopping games, including both zero-sum and nonzero-sum properties. Our algorithms extend the existing approaches for rPATL model checking, and employ a combination of exact computation through backward induction for finite-horizon properties and approximate computation through value iteration for infinite-horizon properties. Both approaches require the solution of games for each state of the model in each iteration of the computation: we solve matrix games for the zero-sum case and find optimal NE for bimatrix games for the nonzero-sum case. The former can be done with linear programming; we perform the latter using labelled polytopes [52] and a reduction to SMT.

We have implemented our verification and strategy synthesis algorithms in a new release, version 3.0, of PRISM-games [48], extending both the modelling and property specification languages to support CSGs and nonzero-sum properties. In order to investigate the performance, scalability and applicability of our techniques, we have developed a large selection of case studies taken from a diverse set of application domains including: finance, computer security, computer networks, communication systems, robot navigation and power control.

These illustrate examples of systems whose modelling and analysis requires stochasticity and competitive or collaborative behaviour between concurrent components or agents. We demonstrate that our CSG modelling and verification techniques facilitate insightful analysis of quantitative aspects of such systems. Specifically, we show cases where CSGs allow more accurate modelling of concurrent behaviour than their turn-based counterparts and where our equilibria-based extension of rPATL allows us to synthesise better performing strategies for collaborating agents than can be achieved using the zero-sum version.

The paper combines and extends the conference papers [45, 46]. In particular, we: (i) introduce the definition of social cost Nash equilibria for CSGs and model checking algorithms for verifying temporal logic specifications using this definition; (ii) provide additional details and proofs of model checking algorithms, for example for combinations of finite- and infinite-horizon objectives; (iii) present an expanded experimental evaluation, including a wider range of properties, extended analysis of the case studies and a more detailed evaluation of performance, including efficiency improvements with respect to [45, 46].

Related work Various verification algorithms have been proposed for CSGs, e.g. [14, 24, 25], but without implementations, tool support or case studies. PRISM-games 2.0 [51], which we have built upon in this work, provided modelling and verification for a wide range of properties of stochastic multi-player games, including those in the logic rPATL, and multi-objective extensions of it, but focusing purely on the turn-based variant of the model (TSGs) in the context of two-coalitional zero-sum properties. GIST [17] allows the analysis of \(\omega \)-regular properties on probabilistic games, but again focuses on turn-based, not concurrent, games. GAVS+ [21] is a general-purpose tool for algorithmic game solving, supporting TSGs and (non-stochastic) concurrent games, but not CSGs. Three further tools, PRALINE [10], EAGLE [74] and EVE [34], support the computation of NE [58] for the restricted class of (non-stochastic) concurrent games. In addition, EVE has recently been extended to verify if an LTL property holds on some or all NE [35]. Computing NE is also supported by MCMAS-SLK [12] via strategy logic and general purpose tools such as Gambit [57] can compute a variety of equilibria but, again, not for stochastic games.

Work concerning nonzero-sum properties includes [18, 75], in which the existence of NE for stochastic games and the complexity of finding them are studied, but without practical algorithms. The complexity of finding subgame-perfect NE for quantitative reachability properties is studied in [11], while [33] considers the complexity of equilibrium design for temporal logic properties and lists social welfare requirements and implementation as future work. In [65], a learning-based algorithm for finding NE for discounted properties of CSGs is presented and evaluated. Similarly, [53] studies NE for discounted properties and introduces iterative algorithms for strategy synthesis. A theoretical framework for price-taking equilibria of CSGs is given in [2], where players try to minimise their costs, which include a price common to all players and dependent on the decisions of all players. A notion of strong NE for a restricted class of CSGs is formalised in [27] and an approximation algorithm for checking the existence of such NE for discounted properties is introduced and evaluated. The existence of stochastic equilibria with imprecise deviations for CSGs and a PSPACE algorithm to compute such equilibria are considered in [8]. Finally, we note that the concept of equilibrium is used to analyse different applications, such as cooperation among agents in stochastic games [39], and to design protocols based on quantum secret sharing [67].

2 Preliminaries

We begin with some basic background from game theory, and then describe CSGs, illustrating each with examples. For any finite set X, we will write \({ Dist }(X)\) for the set of probability distributions over X and for any vector \(v \in \mathbb {Q}^n\) for \(n \in \mathbb {N}\) we use v(i) to denote the ith entry of the vector.

2.1 Game theory concepts

We first introduce normal form games, which are simple one-shot games where players make their choices concurrently.

Definition 1

(Normal form game) A (finite, n-person) normal form game (NFG) is a tuple \(\mathsf N = (N,A,u)\) where:

-

\(N=\{1,\dots ,n\}\) is a finite set of players;

-

\(A = A_1 \times \cdots \times A_n\) and \(A_i\) is a finite set of actions available to player \(i \in N\);

-

\(u = (u_1,\dots ,u_n)\) and \(u_i :A \rightarrow \mathbb {Q}\) is a utility function for player \(i \in N\).

In a game \(\mathsf N \), players select actions simultaneously, with player \(i \in N\) choosing from the action set \(A_i\). If each player i selects action \(a_i\), then player j receives the utility \(u_j(a_1,\dots ,a_n)\).

Definition 2

(Strategies and strategy profile) A (mixed) strategy \(\sigma _i\) for player i in an NFG \(\mathsf N \) is a distribution over its action set, i.e., \(\sigma _i \in { Dist }(A_i)\). We let \(\varSigma ^i_\mathsf N \) denote the set of all strategies for player i. A strategy profile (or just profile) \(\sigma = (\sigma _1,\dots ,\sigma _n)\) is a tuple of strategies for each player.

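Under a strategy profile \(\sigma = (\sigma _1,\dots ,\sigma _n)\) of an NFG \(\mathsf N \), the expected utility of player i is defined as follows:

\[ u_i(\sigma ) = \sum _{(a_1,\dots ,a_n) \in A} u_i(a_1,\dots ,a_n) \cdot \Big ( \prod _{j=1}^n \sigma _j(a_j) \Big ) \]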
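
To make the definition concrete, the following Python sketch computes the expected utility of one player under a mixed strategy profile by summing over all action tuples. It is an illustration only: the function name `expected_utility`, the dictionary-based encoding and the matching-pennies example are assumptions introduced here, not part of the paper or of PRISM-games.

```python
from fractions import Fraction
from itertools import product


def expected_utility(utility, profile):
    """Expected utility of one player under a mixed strategy profile.

    utility: dict mapping each action tuple (a_1, ..., a_n) to a number.
    profile: list of dicts, one per player, mapping actions to probabilities.
    """
    total = Fraction(0)
    # Sum over all action tuples, weighting each utility by the product of
    # the probabilities that the players assign to their own component.
    for actions in product(*(list(sigma) for sigma in profile)):
        prob = Fraction(1)
        for sigma, a in zip(profile, actions):
            prob *= sigma[a]
        total += prob * utility[actions]
    return total


# Hypothetical example (not from the paper): matching pennies, player 1.
u1 = {("h", "h"): 1, ("h", "t"): -1, ("t", "h"): -1, ("t", "t"): 1}
half = Fraction(1, 2)
uniform = [{"h": half, "t": half}, {"h": half, "t": half}]
print(expected_utility(u1, uniform))  # 0
```

Exact rational arithmetic is used so that the result can be compared directly with hand calculations.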

A two-player NFG is also called a bimatrix game as it can be represented by two distinct matrices \(\mathsf Z _1, \mathsf Z _2 \in \mathbb {Q}^{l \times m}\) where \(A_1 = \{a_1,\dots ,a_l\}\), \(A_2 = \{b_1,\dots ,b_m\}\), \(z^1_{ij} = u_1(a_i,b_j)\) and \(z^2_{ij} = u_2(a_i,b_j)\).

A two-player NFG is constant-sum if there exists \(c \in \mathbb {Q}\) such that \(u_1(\alpha ) {+} u_2(\alpha ) = c\) for all \(\alpha \in A\) and zero-sum if \(c = 0\). A zero-sum, two-player NFG is often called a matrix game as it can be represented by a single matrix \(\mathsf Z \in \mathbb {Q}^{l \times m}\) where \(A_1 = \{a_1,\dots ,a_l\}\), \(A_2 = \{b_1,\dots ,b_m\}\) and \(z_{ij} = u_1(a_i,b_j) = - u_2(a_i,b_j)\). For zero-sum, two-player NFGs, in the bimatrix game representation we have \(\mathsf Z _1 =-\mathsf Z _2\).

2.1.1 Matrix games

We require the following classical result concerning matrix games, which introduces the notion of the value of a matrix game (and zero-sum NFG).

Theorem 1

(Minimax theorem [76, 77]) For any zero-sum NFG \(\mathsf N = (N,A,u)\) and corresponding matrix game \(\mathsf Z \), there exists \(v^\star \in \mathbb {Q}\), called the value of the game and denoted \({ val }(\mathsf Z )\), such that:

-

there is a strategy \(\sigma _1^\star \) for player 1, called an optimal strategy of player 1, such that under this strategy the player’s expected utility is at least \(v^\star \) regardless of the strategy of player 2, i.e. \(\inf _{\sigma _2 \in \varSigma ^2_\mathsf N } u_1(\sigma _1^\star ,\sigma _2) \geqslant v^\star \);

-

there is a strategy \(\sigma _2^\star \) for player 2, called an optimal strategy of player 2, such that under this strategy the player’s expected utility is at least \(-v^\star \) regardless of the strategy of player 1, i.e. \(\inf _{\sigma _1 \in \varSigma ^1_\mathsf N } u_2(\sigma _1,\sigma _2^\star ) \geqslant -v^\star \).

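The value of a matrix game \(\mathsf Z \in \mathbb {Q}^{l \times m}\) can be found by solving the following linear programming (LP) problem [76, 77]. Maximise v subject to the constraints:

\[ x_1 z_{1j} + \cdots + x_l z_{lj} \geqslant v \;\; \text{for all } 1 \leqslant j \leqslant m, \qquad x_i \geqslant 0 \;\; \text{for all } 1 \leqslant i \leqslant l, \qquad x_1 + \cdots + x_l = 1 . \]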

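In addition, the solution for \((x_1,\dots ,x_l)\) yields an optimal strategy for player 1. The value of the game can also be found by solving the following dual LP problem. Minimise v subject to the constraints:

\[ y_1 z_{i1} + \cdots + y_m z_{im} \leqslant v \;\; \text{for all } 1 \leqslant i \leqslant l, \qquad y_j \geqslant 0 \;\; \text{for all } 1 \leqslant j \leqslant m, \qquad y_1 + \cdots + y_m = 1 \]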

and in this case the solution \((y_1,\dots ,y_m)\) yields an optimal strategy for player 2.

Example 1

Consider the (zero-sum) NFG corresponding to the well known rock-paper-scissors game, where each player \(i\in \{1,2\}\) chooses “rock” (\(r_i\)), “paper” (\(p_i\)) or “scissors” (\(s_i\)). The matrix game representation is:

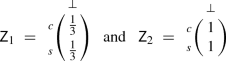

where the utilities for winning, losing and drawing are 1, \(-1\) and 0 respectively. The value for this matrix game is the solution to the following LP problem. Maximise v subject to the constraints:

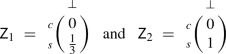

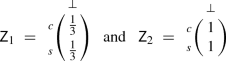

which yields the value \(v^\star =0\) with optimal strategy \(\sigma _1^\star = (1/3,1/3,1/3)\) for player 1 (the optimal strategy for player 2 is the same).
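
The claimed solution can be checked without an LP solver. The Python sketch below does not solve the LP; it only verifies a candidate: it computes the best value v that the uniform strategy guarantees to player 1 (feasibility for the maximisation problem) and the best bound that the uniform strategy guarantees to player 2 (feasibility for the dual). When the two bounds coincide, they equal the value of the game. The helper names are assumptions made for this illustration.

```python
from fractions import Fraction

# Utilities of player 1: rows r_1, p_1, s_1; columns r_2, p_2, s_2
# (win = 1, lose = -1, draw = 0, as stated in the example).
Z = [[0, -1, 1],
     [1, 0, -1],
     [-1, 1, 0]]

third = Fraction(1, 3)
x = [third, third, third]  # candidate strategy for player 1
y = [third, third, third]  # candidate strategy for player 2


def guaranteed_by_row_player(Z, x):
    """Largest v such that sum_i x_i * z_ij >= v for every column j."""
    return min(sum(x[i] * Z[i][j] for i in range(len(Z)))
               for j in range(len(Z[0])))


def guaranteed_by_column_player(Z, y):
    """Smallest v such that sum_j y_j * z_ij <= v for every row i."""
    return max(sum(y[j] * Z[i][j] for j in range(len(Z[0])))
               for i in range(len(Z)))


lower = guaranteed_by_row_player(Z, x)
upper = guaranteed_by_column_player(Z, y)
print(lower, upper)  # 0 0
```

By contrast, a deterministic strategy such as always playing rock only guarantees \(-1\), since the opponent can respond with paper.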

2.1.2 Bimatrix games

For bimatrix games (and nonzero-sum NFGs), we use the concept of Nash equilibria (NE), which represent scenarios for players with distinct objectives in which it is not beneficial for any player to unilaterally change their strategy. In particular, we will use variants called social welfare optimal NE and social cost optimal NE. These variants are equilibria that maximise or minimise, respectively, the total utility of the players, i.e., the sum of the individual player utilities.

Definition 3

(Best and least response) For NFG \(\mathsf N = (N,A,u)\), strategy profile \(\sigma = (\sigma _1,\dots ,\sigma _n)\) and player i strategy \(\sigma _i'\), we define the sequence of strategies \(\sigma _{-i} = (\sigma _1,\dots ,\sigma _{i-1},\sigma _{i+1},\dots ,\sigma _n)\) and profile \(\sigma _{-i}[\sigma _i'] = (\sigma _1,\dots ,\sigma _{i-1},\sigma _i',\sigma _{i+1},\dots ,\sigma _n)\). For player i and strategy sequence \(\sigma _{-i}\):

-

a best response for player i to \(\sigma _{-i}\) is a strategy \(\sigma ^\star _i\) for player i such that \(u_i(\sigma _{-i}[\sigma ^\star _i]) \geqslant u_i(\sigma _{-i}[\sigma _i])\) for all strategies \(\sigma _i\) of player i;

-

a least response for player i to \(\sigma _{-i}\) is a strategy \(\sigma ^\star _i\) for player i such that \(u_i(\sigma _{-i}[\sigma ^\star _i]) \leqslant u_i(\sigma _{-i}[\sigma _i])\) for all strategies \(\sigma _i\) of player i.

Definition 4

(Nash equilibrium) For NFG \(\mathsf N = (N,A,u)\), a strategy profile \(\sigma ^\star \) of \(\mathsf N \) is a Nash equilibrium (NE) and \(\langle u_i(\sigma ^\star )\rangle _{i \in N}\) NE values if \(\sigma _i^\star \) is a best response to \(\sigma _{-i}^\star \) for all \(i \in N\).

Definition 5

(Social welfare NE) For NFG \(\mathsf N = (N,A,u)\), an NE \(\sigma ^\star \) of \(\mathsf N \) is a social welfare optimal NE (SWNE) and \(\langle u_i(\sigma ^\star )\rangle _{i \in N}\) corresponding SWNE values if \(u_1(\sigma ^\star ){+}\cdots {+}u_n(\sigma ^\star )\geqslant u_1(\sigma ){+} \cdots {+}u_n(\sigma )\) for all NE \(\sigma \) of \(\mathsf N \).

Definition 6

(Social cost NE) For NFG \(\mathsf N = (N,A,u)\), a profile \(\sigma ^\star \) of \(\mathsf N \) is a social cost optimal NE (SCNE) and \(\langle u_i(\sigma ^\star )\rangle _{i \in N}\) corresponding SCNE values if it is an NE of \(\mathsf N ^{-}= (N,A,{-}u)\) and \(u_1(\sigma ^\star ){+}\cdots {+}u_n(\sigma ^\star )\leqslant u_1(\sigma ){+} \cdots {+}u_n(\sigma )\) for all NE \(\sigma \) of \(\mathsf N ^{-}= (N,A,{-}u)\).

The notion of SWNE is standard [59] and corresponds to the case where utility values represent profits or rewards. We introduce the dual notion of SCNE for the case where utility values correspond to losses or costs. In our experience of modelling with stochastic games, such situations are common: example objectives in this category include minimising the probability of a fault occurring or minimising the expected time to complete a task. Representing SCNE directly is a more natural approach than the alternative of simply negating utilities, as above.

The following demonstrates the relationship between SWNE and SCNE.

Lemma 1

For NFG \(\mathsf N = (N,A,u)\), a strategy profile \(\sigma ^\star \) of \(\mathsf N \) is an NE of \(\mathsf N ^{-} = (N,A,{-}u)\) if and only if \(\sigma ^\star _i\) is a least response to \(\sigma ^\star _{-i}\) of player i in \(\mathsf N \) for all \(i \in N\). Furthermore, \(\sigma ^\star \) is a SWNE of \(\mathsf N ^{-}\) if and only if \(\sigma ^\star \) is a SCNE of \(\mathsf N \).

Lemma 1 can be used to reduce the computation of SCNE profiles and values to those of SWNE profiles and values (or vice versa). This is achieved by negating all utilities in the NFG or bimatrix game, computing an SWNE profile and corresponding SWNE values, and then negating the SWNE values to obtain an SCNE profile and corresponding SCNE values for the original NFG or bimatrix game.

Finding NE and NE values in bimatrix games is in the class of linear complementarity problems (LCPs). More precisely, \((\sigma _1,\sigma _2)\) is an NE profile and (u, v) are the corresponding NE values of the bimatrix game \(\mathsf Z _1,\mathsf Z _2 \in \mathbb {Q}^{l \times m}\) where \(A_1 = \{a_1,\dots ,a_l\}\), \(A_2 = \{b_1,\dots ,b_m\}\) if and only if for the column vectors \(x \in \mathbb {Q}^l\) and \(y \in \mathbb {Q}^m\) where \(x_i = \sigma _1(a_i)\) and \(y_j = \sigma _2(b_j)\) for \(1 \leqslant i \leqslant l\) and \(1 \leqslant j \leqslant m\), we have:

\[ x^T(\mathbf {1}u - \mathsf Z _1 y) = 0, \qquad y^T(\mathbf {1}v - \mathsf Z _2^T x) = 0, \tag{1} \]

\[ \mathbf {1}u - \mathsf Z _1 y \geqslant \mathbf {0}, \qquad \mathbf {1}v - \mathsf Z _2^T x \geqslant \mathbf {0} \tag{2} \]

and \(\mathbf {0}\) and \(\mathbf {1}\) are vectors or matrices with all components 0 and 1, respectively.

Example 2

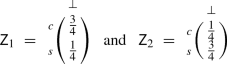

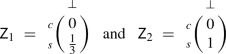

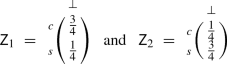

We consider a nonzero-sum stag hunt game [62] where, if players decide to cooperate, this can yield a large utility, but if the others do not, then the cooperating player gets nothing while the remaining players get a small utility. A scenario with 3 players, where two form a coalition (assuming the role of player 2), yields a bimatrix game:

where \( nc _i\) and \( c _i\) represent player 1 and coalition 2 not cooperating and cooperating, respectively, and \( hc _2\) represents half the players in the coalition cooperating. A strategy profile \(\sigma ^* = ((x_1,x_2),(y_1,y_2,y_3))\) is an NE and (u, v) the corresponding NE values of the game if and only if, from Eqs. (1) and (2):

There are three solutions to this LCP problem which correspond to the following NE profiles:

-

player 1 and the coalition pick \( nc _1\) and \( nc _2\), respectively, with NE values (2, 4);

-

player 1 selects \( nc _1\) and \(c_1\) with probabilities 5/9 and 4/9 and the coalition selects \( nc _2\) and \(c_2\) with probabilities 2/3 and 1/3, with NE values (2, 4);

-

player 1 and the coalition select \(c_1\) and \(c_2\), respectively, with NE values (6, 9).

For instance, in the first case, neither player 1 nor the coalition believes the other will cooperate: the best they can do is act alone. The third maximises the joint utility and is the only SWNE profile, with corresponding SWNE values (6, 9).

To find SCNE profiles and SCNE values for the same set of utility functions, using Lemma 1 we can negate all the utilities of the players in the game and look for NE profiles in the resulting bimatrix game; again, there are three:

-

player 1 and the coalition select \(c_1\) and \( nc _2\), respectively, with NE values \((0,-4)\);

-

player 1 selects \( nc _1\) and \(c_1\) with probabilities 1/2 and 1/2 and the coalition selects \( nc _2\) and \( hc _2\) with probabilities 1/2 and 1/2, with NE values \((-2,-4)\);

-

player 1 and the coalition select \( nc _1\) and \(c_2\), respectively, with NE values \((-2,0)\).

The third is the only SCNE profile, with corresponding SCNE values (2, 0).
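
The equilibria listed in this example can be checked mechanically. The Python sketch below tests the NE condition by comparing each player's expected utility against every pure deviation, which suffices because expected utility is linear in a player's own mixed strategy. It then applies the negation of Lemma 1 for the social cost case. The payoff entries are an assumption: the matrices are given only in the figure, so the values below were chosen to be consistent with the NE profiles and NE values stated in the text. The function names are also introduced here for illustration, and the code only compares the listed profiles; it does not enumerate all NE.

```python
from fractions import Fraction as F

# ASSUMPTION: payoff entries are not in the text (they are in the figure);
# these are chosen to agree with the equilibria listed in the example.
# Rows: nc_1, c_1. Columns: nc_2, hc_2, c_2.
Z1 = [[2, 2, 2], [0, 4, 6]]
Z2 = [[4, 2, 0], [4, 6, 9]]


def values(Z1, Z2, x, y):
    """Expected utilities (u, v) of player 1 and the coalition."""
    idx = [(i, j) for i in range(len(x)) for j in range(len(y))]
    u = sum(x[i] * Z1[i][j] * y[j] for i, j in idx)
    v = sum(x[i] * Z2[i][j] * y[j] for i, j in idx)
    return u, v


def is_ne(Z1, Z2, x, y):
    """True if no pure deviation improves either player's expected utility."""
    u, v = values(Z1, Z2, x, y)
    rows = [sum(Z1[i][j] * y[j] for j in range(len(y))) for i in range(len(x))]
    cols = [sum(x[i] * Z2[i][j] for i in range(len(x))) for j in range(len(y))]
    return max(rows) <= u and max(cols) <= v


def negate(Z):
    return [[-z for z in row] for row in Z]


# Profiles listed in the example for the original and the negated game.
listed_ne = [([1, 0], [1, 0, 0]),
             ([F(5, 9), F(4, 9)], [F(2, 3), 0, F(1, 3)]),
             ([0, 1], [0, 0, 1])]
listed_ne_negated = [([0, 1], [1, 0, 0]),
                     ([F(1, 2), F(1, 2)], [F(1, 2), F(1, 2), 0]),
                     ([1, 0], [0, 0, 1])]

for x, y in listed_ne:
    print(is_ne(Z1, Z2, x, y), values(Z1, Z2, x, y))
for x, y in listed_ne_negated:
    print(is_ne(negate(Z1), negate(Z2), x, y), values(Z1, Z2, x, y))

# Among the listed profiles: maximise the sum for SWNE, minimise it for SCNE
# (utilities are always read from the original, non-negated matrices).
swne = max(listed_ne, key=lambda p: sum(values(Z1, Z2, *p)))
scne = min(listed_ne_negated, key=lambda p: sum(values(Z1, Z2, *p)))
```

Under the assumed matrices, every listed profile passes the check, and the selected profiles are the third in each list.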

In this work, we compute the SWNE values for a bimatrix game (or, via Lemma 1, the SCNE values) by first identifying all the NE values of the game. For this, we use the Lemke-Howson algorithm [52], which is based on the method of labelled polytopes [59]. Other well-known methods include those based on support enumeration [64] and regret minimisation [69]. Given a bimatrix game \(\mathsf Z _1,\mathsf Z _2 \in \mathbb {Q}^{l \times m}\), we denote the sets of deterministic strategies of players 1 and 2 by \(I = \{1,\dots ,l\}\) and \(M = \{1,\dots ,m\}\) and define \(J = \{l{+}1,\dots ,l{+}m\}\) by mapping \(j \in M\) to \(l{+}j \in J\). A label is then defined as an element of \(I \cup J\). The sets of strategies for players 1 and 2 can be represented by:

\[ X = \{ x \in \mathbb {Q}^l \mid \mathbf {1}x = 1 \wedge x \geqslant \mathbf {0} \}, \qquad Y = \{ y \in \mathbb {Q}^m \mid \mathbf {1}y = 1 \wedge y \geqslant \mathbf {0} \} . \]

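The strategy set Y is then divided into regions Y(i) and Y(j) (polytopes) for \(i \in I\) and \(j \in J\) such that Y(i) contains strategies for which the deterministic strategy i of player 1 is a best response and Y(j) contains strategies which choose action j with probability 0:

\[ Y(i) = \{ y \in Y \mid \forall k \in I .\; \mathsf Z _1(i,:)\, y \geqslant \mathsf Z _1(k,:)\, y \}, \qquad Y(j) = \{ y \in Y \mid y_{j-l} = 0 \} \]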

where \(\mathsf Z _1(i,:)\) is the ith row vector of \(\mathsf Z _1\). A vector y is then said to have label k if \(y \in Y(k)\), for \(k \in I \cup J\). The strategy set X is divided analogously into regions X(j) and X(i) for \(j \in J\) and \(i\in I\) and a vector x has label k if \(x \in X(k)\), for \(k \in I \cup J\). A pair of vectors \((x,y) \in X {\times } Y\) is completely labelled if the union of the labels of x and y equals \(I \cup J\).

The NE profiles of the game are the vector pairs that are completely labelled [52, 72]. The corresponding NE values can be computed through matrix-vector multiplication. A SWNE profile and corresponding SWNE values can then be found through an NE profile with NE values that maximise the sum.
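
The labelling can be illustrated with a short Python sketch that computes the labels of a pair (x, y) and tests whether the pair is completely labelled. This is only a check of the labelling condition for a given pair, not the Lemke-Howson algorithm or the SMT encoding. The function names and the 2x2 coordination game are assumptions made for this illustration.

```python
from fractions import Fraction as F


def labels_of_y(Z1, y):
    """Labels of y: i in I if row i is a best response of player 1 to y,
    and l+j in J if y assigns probability 0 to column j (1-indexed)."""
    l, m = len(Z1), len(y)
    payoff = [sum(Z1[i][j] * y[j] for j in range(m)) for i in range(l)]
    best = max(payoff)
    labels = {i + 1 for i in range(l) if payoff[i] == best}
    labels |= {l + j + 1 for j in range(m) if y[j] == 0}
    return labels


def labels_of_x(Z2, x):
    """Labels of x: l+j in J if column j is a best response of player 2 to x,
    and i in I if x assigns probability 0 to row i (1-indexed)."""
    l, m = len(x), len(Z2[0])
    payoff = [sum(x[i] * Z2[i][j] for i in range(l)) for j in range(m)]
    best = max(payoff)
    labels = {l + j + 1 for j in range(m) if payoff[j] == best}
    labels |= {i + 1 for i in range(l) if x[i] == 0}
    return labels


def completely_labelled(Z1, Z2, x, y):
    """True if the labels of x and y together cover I and J."""
    l, m = len(x), len(y)
    return (labels_of_x(Z2, x) | labels_of_y(Z1, y)) == set(range(1, l + m + 1))


# Hypothetical coordination game (not from the paper).
C1 = [[2, 0], [0, 1]]
C2 = [[2, 0], [0, 1]]
print(completely_labelled(C1, C2, [1, 0], [1, 0]))  # True
print(completely_labelled(C1, C2, [1, 0], [0, 1]))  # False
print(completely_labelled(C1, C2, [F(1, 3), F(2, 3)], [F(1, 3), F(2, 3)]))  # True
```

In this small game the two pure coordination profiles and the mixed profile are completely labelled, while the miscoordinated pure profile is not.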

2.2 Concurrent stochastic games

We now define concurrent stochastic games [71], where players repeatedly make simultaneous choices over actions that update the game state probabilistically.

Definition 7

(Concurrent stochastic game) A concurrent stochastic multi-player game (CSG) is a tuple \(\mathsf G = (N, S, \bar{S}, A, \varDelta , \delta , { AP }, { L })\) where:

-

\(N=\{1,\dots ,n\}\) is a finite set of players;

-

S is a finite set of states and \(\bar{S} \subseteq S\) is a set of initial states;

-

\(A = (A_1\cup \{\bot \}) {\times } \cdots {\times } (A_n\cup \{\bot \})\) where \(A_i\) is a finite set of actions available to player \(i \in N\) and \(\bot \) is an idle action disjoint from the set \(\cup _{i=1}^n A_i\);

-

\(\varDelta :S \rightarrow 2^{\cup _{i=1}^n A_i}\) is an action assignment function;

-

\(\delta :S {\times } A \rightarrow { Dist }(S)\) is a probabilistic transition function;

-

\({ AP }\) is a set of atomic propositions and \({ L }:S \rightarrow 2^{{ AP }}\) is a labelling function.

A CSG \(\mathsf G \) starts in an initial state \({\bar{s}}\in \bar{S}\) and, when in state s, each player \(i \in N\) selects an action from its available actions \(A_i(s) \,{\mathop {=}\limits ^{\mathrm{{\tiny def}}}}\varDelta (s) \cap A_i\) if this set is non-empty, and from \(\{ \bot \}\) otherwise. Supposing each player i selects action \(a_i\), the state of the game is updated according to the distribution \(\delta (s,(a_1,\dots ,a_n))\). A CSG is a turn-based stochastic multi-player game (TSG) if for any state s there is precisely one player i for which \(A_i(s) \ne \{ \bot \}\). Furthermore, a CSG is a Markov decision process (MDP) if there is precisely one player i such that \(A_i(s) \ne \{ \bot \}\) for all states s.

A path \(\pi \) of \(\mathsf G \) is a sequence \(\pi = s_0 \xrightarrow {\alpha _0}s_1 \xrightarrow {\alpha _1} \cdots \) where \(s_i \in S\), \(\alpha _i\in A\) and \(\delta (s_i,\alpha _i)(s_{i+1})>0\) for all \(i \geqslant 0\). We denote by \(\pi (i)\) the \((i{+}1)\)th state of \(\pi \), \(\pi [i]\) the action associated with the \((i{+}1)\)th transition and, if \(\pi \) is finite, \( last (\pi )\) the final state. The length of a path \(\pi \), denoted \(|\pi |\), is the number of transitions appearing in the path. Let \( FPaths _\mathsf G \) and \( IPaths _\mathsf G \) (\( FPaths _\mathsf{G ,s}\) and \( IPaths _\mathsf{G ,s}\)) be the sets of finite and infinite paths (starting in state s).

We augment CSGs with reward structures of the form \(r=(r_A,r_S)\), where \(r_A :S{\times }A \rightarrow \mathbb {Q}\) is an action reward function (which maps each state and action tuple pair to a rational value that is accumulated when the action tuple is selected in the state) and \(r_S :S \rightarrow \mathbb {Q}\) is a state reward function (which maps each state to a rational value that is incurred when the state is reached). We allow both positive and negative rewards; however, we will later impose certain restrictions to ensure the correctness of our model checking algorithms.

A strategy for a player in a CSG resolves the player’s choices in each state. These choices can depend on the history of the CSG’s execution and can be randomised. Formally, we have the following definition.

Definition 8

(Strategy) A strategy for player i in a CSG \(\mathsf G \) is a function of the form \(\sigma _i : FPaths _\mathsf{G } \rightarrow { Dist }(A_i \cup \{ \bot \})\) such that, if \(\sigma _i(\pi )(a_i)>0\), then \(a_i \in A_i( last (\pi ))\). We denote by \(\varSigma ^i_\mathsf G \) the set of all strategies for player i.

As for NFGs, a strategy profile for \(\mathsf G \) is a tuple \(\sigma = (\sigma _1,\dots ,\sigma _{n})\) of strategies for all players and, for player i and strategy \(\sigma _i'\), we define the sequence \(\sigma _{-i}\) and profile \(\sigma _{-i}[\sigma _i']\) in the same way. For strategy profile \(\sigma =(\sigma _1,\dots ,\sigma _{n})\) and state s, we let \( FPaths ^\sigma _\mathsf{G ,s}\) and \( IPaths ^\sigma _\mathsf{G ,s}\) denote the finite and infinite paths from s under the choices of \(\sigma \). We can define a probability measure \({ Prob }^{\sigma }_\mathsf{G ,s}\) over the infinite paths \( IPaths ^{\sigma }_\mathsf{G ,s}\) [43]. This construction is based on first defining the probabilities for finite paths from the probabilistic transition function and choices of the strategies in the profile. More precisely, for a finite path \(\pi = s_0 \xrightarrow {\alpha _0}s_1 \xrightarrow {\alpha _1} \cdots \xrightarrow {\alpha _{m-1}} s_m\) where \(s_0=s\), the probability of \(\pi \) under the profile \(\sigma \) is defined by:

\[ \mathbf {P}^\sigma (\pi ) = \prod _{j=0}^{m-1} \Big ( \prod _{i=1}^{n} \sigma _i(s_0 \xrightarrow {\alpha _0} \cdots \xrightarrow {\alpha _{j-1}} s_j)(\alpha _j(i)) \Big ) \cdot \delta (s_j,\alpha _j)(s_{j+1}) . \]

Next, for each finite path \(\pi \), we define the basic cylinder \(C^\sigma (\pi )\) that consists of all infinite paths in \( IPaths ^\sigma _\mathsf{G ,s}\) that have \(\pi \) as a prefix. Finally, using properties of cylinders, we can then construct the probability space \(( IPaths ^{\sigma }_\mathsf{G ,s}, {\mathcal {F}}^\sigma _s, { Prob }^{\sigma }_\mathsf{G ,s})\), where \({\mathcal {F}}^\sigma _s\) is the smallest \(\sigma \)-algebra generated by the set of basic cylinders \(\{ C^\sigma (\pi ) \mid \pi \in FPaths ^{\sigma }_\mathsf{G ,s} \}\) and \(Prob^{\sigma }_\mathsf{G ,s}\) is the unique measure such that \(Prob^{\sigma }_\mathsf{G ,s}(C^\sigma (\pi )) = \mathbf {P}^\sigma (\pi )\) for all \(\pi \in FPaths ^\sigma _\mathsf{G ,s}\).
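
As a small illustration of how the probability of a finite path combines the players' randomised choices with the transition function, consider the following Python sketch. The two-state CSG fragment, the strategies and all names are hypothetical and chosen only to keep the arithmetic easy to follow.

```python
from fractions import Fraction as F

# Hypothetical two-player CSG fragment: delta[(state, (a1, a2))] is a
# distribution over successor states.
delta = {
    ("s0", ("a", "c")): {"s0": F(1, 2), "s1": F(1, 2)},
    ("s0", ("a", "d")): {"s1": F(1)},
    ("s0", ("b", "c")): {"s0": F(1)},
    ("s0", ("b", "d")): {"s1": F(1)},
    ("s1", ("a", "c")): {"s1": F(1)},
}


def sigma1(history):
    """Player 1: randomise in s0, play the only available action in s1."""
    if history[-1] == "s0":
        return {"a": F(1, 2), "b": F(1, 2)}
    return {"a": F(1)}


def sigma2(history):
    """Player 2: randomise in s0, play the only available action in s1."""
    if history[-1] == "s0":
        return {"c": F(1, 3), "d": F(2, 3)}
    return {"c": F(1)}


def path_probability(path, strategies):
    """path = [s_0, alpha_0, s_1, ..., s_m]; each strategy maps a history
    (a path prefix ending in a state) to a distribution over actions."""
    prob = F(1)
    for k in range(0, len(path) - 1, 2):
        history, alpha, succ = path[:k + 1], path[k + 1], path[k + 2]
        # Probability that every player picks its component of alpha ...
        for sigma, a in zip(strategies, alpha):
            prob *= sigma(history).get(a, 0)
        # ... times the probability of the transition to the successor.
        prob *= delta[(path[k], alpha)].get(succ, 0)
    return prob


path = ["s0", ("a", "c"), "s0", ("b", "d"), "s1"]
print(path_probability(path, [sigma1, sigma2]))  # 1/36
```

The first step contributes (1/2)(1/3)(1/2) and the second (1/2)(2/3)(1), giving 1/36.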

For random variable \(X : IPaths _\mathsf{G } \rightarrow \mathbb {Q}\), we can then define for any profile \(\sigma \) and state s the expected value \(\mathbb {E}^{\sigma }_\mathsf{G ,s}(X)\) of X in s with respect to \(\sigma \). These random variables X represent an objective (or utility function) for a player, which includes both finite-horizon and infinite-horizon properties. Examples of finite-horizon properties include the probability of reaching a set of target states T within k steps or the expected reward accumulated over k steps. These properties can be expressed by the random variables:

\[ X(\pi ) = {\left\{ \begin{array}{ll} 1 &{} \text{if } \pi (j) \in T \text{ for some } j \leqslant k \\ 0 &{} \text{otherwise} \end{array}\right. } \qquad Y(\pi ) = \sum _{i=0}^{k-1} \big ( r_A(\pi (i),\pi [i]) + r_S(\pi (i)) \big ) \]

respectively. Examples of infinite-horizon properties include the probability of reaching a target set T and the expected cumulative reward until reaching a target set T (where paths that never reach the target have infinite reward), which can be expressed by the random variables:

\[ X(\pi ) = {\left\{ \begin{array}{ll} 1 &{} \text{if } \pi (j) \in T \text{ for some } j \in \mathbb {N} \\ 0 &{} \text{otherwise} \end{array}\right. } \qquad Y(\pi ) = {\left\{ \begin{array}{ll} \infty &{} \text{if } \pi (j) \notin T \text{ for all } j \in \mathbb {N} \\ \sum _{i=0}^{k_{\min }-1} \big ( r_A(\pi (i),\pi [i]) + r_S(\pi (i)) \big ) &{} \text{otherwise} \end{array}\right. } \]

where \(k_{\min } = \min \{ j \in \mathbb {N}\mid \pi (j) \in T \}\), respectively.

Let us first focus on zero-sum games, which are by definition two-player games. As for NFGs (see Definition 1), for a two-player CSG \(\mathsf G \) and a given objective X, we can consider the case where player 1 tries to maximise the expected value of X, while player 2 tries to minimise it. The above definition yields the value of \(\mathsf G \) with respect to X if it is determined, i.e., if the maximum value that player 1 can ensure equals the minimum value that player 2 can ensure. Since the CSGs we consider are finite state and finitely-branching, it follows that they are determined for all the objectives we consider [55]. Formally we have the following.

Definition 9

(Determinacy and optimality) For a two-player CSG \(\mathsf G \) and objective X, we say that \(\mathsf G \) is determined with respect to X if, for any state s:

\[ \sup _{\sigma _1 \in \varSigma ^1} \inf _{\sigma _2 \in \varSigma ^2} \mathbb {E}^{\sigma _1,\sigma _2}_{\mathsf{G},s}(X) \; = \; \inf _{\sigma _2 \in \varSigma ^2} \sup _{\sigma _1 \in \varSigma ^1} \mathbb {E}^{\sigma _1,\sigma _2}_{\mathsf{G},s}(X) \]

and call this the value of \(\mathsf G \) in state s with respect to X, denoted \({ val }_\mathsf G (s,X)\). Furthermore, a strategy \(\sigma _1^\star \) of player 1 is optimal with respect to X if we have \(\smash {\mathbb {E}^{\sigma _1^\star ,\sigma _2}_\mathsf{G ,s}(X) \geqslant { val }_\mathsf G (s,X)}\) for all \(s\in S\) and \(\sigma _2 \in \varSigma ^2\) and a strategy of player 2 is optimal with respect to X if \(\smash {\mathbb {E}^{\sigma _1,\sigma _2^\star }_\mathsf{G ,s}(X) \leqslant { val }_\mathsf G (s,X)}\) for all \(s\in S\) and \(\sigma _1 \in \varSigma ^1\).

Example 3

Consider the (non-probabilistic) CSG shown in Fig. 1 corresponding to two players repeatedly playing the rock-paper-scissors game (see Example 1). Transitions are labelled with action pairs, where \(A_i = \{r_i,p_i,s_i,t_i\}\) for \(1 \leqslant i \leqslant 2\), with \(r_i\), \(p_i\) and \(s_i\) representing playing rock, paper and scissors, respectively, and \(t_i\) restarting the game. The CSG starts in state \(s_0\) and states \(s_1\), \(s_2\) and \(s_3\) are labelled with atomic propositions corresponding to when a player wins or there is a draw in a round of the rock-paper-scissors game.

For the zero-sum objective to maximise the probability of reaching \(s_1\) before \(s_2\), i.e. player 1 winning a round of the game before player 2, the value of the game is 1/2 and the optimal strategy of each player i is to choose \(r_i\), \(p_i\) and \(s_i\), each with probability 1/3 in state \(s_0\) and \(t_i\) otherwise.
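
The value 1/2 can be checked with a few lines of Python. The sketch below assumes that player 1 plays the uniform mix in \(s_0\) in every round and that player 2 repeats one fixed action; the encoding of the game as a `BEATS` relation is ours. Since a draw restarts the game, the probability x of player 1 winning first satisfies x = win + draw · x.

```python
from fractions import Fraction

ACTIONS = ["r", "p", "s"]
BEATS = {("r", "s"), ("p", "r"), ("s", "p")}  # (winning action, losing action)


def win_before_lose(mix1, a2):
    """Probability that player 1 wins a round before player 2 does.

    Player 1 uses the distribution mix1 in s0 in every round and player 2
    always answers with a2. Assumes win + lose > 0, so draws cannot go on
    forever with probability 1.
    """
    win = sum(p for a1, p in mix1.items() if (a1, a2) in BEATS)
    lose = sum(p for a1, p in mix1.items() if (a2, a1) in BEATS)
    # Solve x = win + (1 - win - lose) * x for x.
    return win / (win + lose)


uniform = {a: Fraction(1, 3) for a in ACTIONS}
guarantee = min(win_before_lose(uniform, a2) for a2 in ACTIONS)
```

Against the uniform mix every round is won, lost or drawn with probability 1/3 whatever player 2 does, which is why the guarantee is 1/2; by symmetry, player 2 can cap the value at 1/2 in the same way.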

For nonzero-sum CSGs, with an objective \(X_i\) for each player i, we will use NE, which can be defined as for NFGs (see Definition 4). In line with the definition of zero-sum optimality above (and because the model checking algorithms we will later introduce are based on backward induction [70, 77]), we restrict our attention to subgame-perfect NE [61], which are NE in every state of the CSG.

Definition 10

(Subgame-perfect NE) For CSG \(\mathsf G \), a strategy profile \(\sigma ^\star \) is a subgame-perfect Nash equilibrium for objectives \(\langle X_i \rangle _{i \in N}\) if and only if \(\mathbb {E}^{\sigma ^\star }_\mathsf{G ,s}(X_i) \geqslant \sup _{\sigma _i \in \varSigma _i} \mathbb {E}^{\sigma ^\star _{-i}[\sigma _i]}_\mathsf{G ,s}(X_i)\) for all \(i \in N\) and \(s \in S\).

Furthermore, because we use a variety of objectives, including infinite-horizon objectives, where the existence of NE is an open problem [7], we will in some cases use \(\varepsilon \)-NE, which do exist for any \(\varepsilon >0\) for all the properties we consider.

Definition 11

(Subgame-perfect \(\varepsilon \)-NE) For CSG \(\mathsf G \) and \(\varepsilon >0\), a strategy profile \(\sigma ^\star \) is a subgame-perfect \(\varepsilon \)-Nash equilibrium for objectives \(\langle X_i \rangle _{i \in N}\) if and only if \(\mathbb {E}^{\sigma ^\star }_\mathsf{G ,s}(X_i) \geqslant \sup _{\sigma _i \in \varSigma _i} \mathbb {E}^{\sigma ^\star _{-i}[\sigma _i]}_\mathsf{G ,s}(X_i) - \varepsilon \) for all \(i \in N\) and \(s \in S\).
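
To make the inequality concrete, the following sketch checks the \(\varepsilon \)-NE condition in the simplest setting, a one-shot two-player game given by two utility matrices. This is an illustration only: the function name and the example game are assumptions, and for a CSG the condition has to hold in every state and over all strategies rather than for a single matrix pair. In the one-shot case the supremum over deviations is attained by a pure action, which is what the code exploits.

```python
from fractions import Fraction


def is_eps_ne(U1, U2, x, y, eps=0):
    """Check whether the mixed profile (x, y) is an eps-NE of (U1, U2)."""
    rows, cols = range(len(x)), range(len(y))
    exp1 = sum(x[i] * y[j] * U1[i][j] for i in rows for j in cols)
    exp2 = sum(x[i] * y[j] * U2[i][j] for i in rows for j in cols)
    # Best unilateral deviations; a pure action suffices in a one-shot game.
    best1 = max(sum(y[j] * U1[i][j] for j in cols) for i in rows)
    best2 = max(sum(x[i] * U2[i][j] for i in rows) for j in cols)
    return exp1 >= best1 - eps and exp2 >= best2 - eps


# Hypothetical coordination game with two pure equilibria.
U1 = [[2, 0], [0, 1]]
U2 = [[1, 0], [0, 2]]
half = Fraction(1, 2)

pure_is_ne = is_eps_ne(U1, U2, [1, 0], [1, 0])
mixed_is_ne = is_eps_ne(U1, U2, [half, half], [half, half])
mixed_is_quarter_ne = is_eps_ne(U1, U2, [half, half], [half, half],
                                eps=Fraction(1, 4))
```

Here the uniform profile is not an NE, since each player gains 1/4 by deviating, but it is an \(\varepsilon \)-NE for \(\varepsilon = 1/4\).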

Example 4

In [10] a non-probabilistic concurrent game is used to model medium access control. Two users with limited energy share a wireless channel and choose to transmit (\(t_i\)) or wait (\(w_i\)) and, if both transmit, the transmissions fail due to interference. We extend this to a CSG by assuming that transmissions succeed with probability q if both transmit. Figure 2 presents a CSG model of the protocol where each user has enough energy for one transmission. The states are labelled with the status of each user, where the first value represents if the user i has transmitted or not transmitted their message (\( tr _i\) and \( nt _i\) respectively) and the second if there is sufficient energy to transmit or not (1 and 0 respectively).

If the objectives are to maximise the probability of a successful transmission, there are two subgame-perfect SWNE profiles, one when user 1 waits for user 2 to transmit before transmitting and another when user 2 waits for user 1 to transmit before transmitting. Under both profiles, both users successfully transmit with probability 1. If the objectives are to maximise the probability of being one of the first to transmit, then there is only one SWNE profile corresponding to both users immediately trying to transmit. In this case the probability of each user successfully transmitting is q.

3 Property specification: extending the logic rPATL

In order to formalise properties of CSGs, we propose an extension of the logic rPATL, previously defined for zero-sum properties of TSGs [19]. In particular, we add operators to specify nonzero-sum properties, using (social welfare or social cost) Nash equilibria, and provide a semantics for this extended logic on CSGs.

Definition 12

(Extended rPATL syntax) The syntax of our extended version of rPATL is given by the grammar:

\[ \begin{array}{rcl} \phi & := & \texttt {true} \mid \mathsf {a} \mid \lnot \phi \mid \phi \wedge \phi \mid \langle \! \langle {C} \rangle \! \rangle {\texttt {P}}_{\sim q}[\,{\psi }\,] \mid \langle \! \langle {C} \rangle \! \rangle {\texttt {R}}^{r}_{\sim x}[\,{\rho }\,] \mid \langle \! \langle {C{:}C'} \rangle \! \rangle _{{{\,\mathrm{opt}\,}}\sim x}(\theta ) \\ \theta & := & {\texttt {P}}_{}[\,{\psi }\,]{+}{\texttt {P}}_{}[\,{\psi }\,] \mid {\texttt {R}}^{r}_{}[\,{\rho }\,]{+}{\texttt {R}}^{r}_{}[\,{\rho }\,] \\ \psi & := & {\texttt {X}\,}\phi \mid \phi {\ \texttt {U}^{\leqslant k}\ }\phi \mid \phi {\ \texttt {U}\ }\phi \\ \rho & := & \texttt {I}^{=k} \mid \texttt {C}^{\leqslant k} \mid {\texttt {F}\ }\phi \end{array} \]

where \(\mathsf {a} \) is an atomic proposition, C and \(C'\) are coalitions of players such that \(C' = N {\setminus } C\), \({{\,\mathrm{opt}\,}}\in \{ \min ,\max \}\), \(\sim \,\in \{<, \leqslant , \geqslant , >\}\), \(q \in \mathbb {Q}\cap [0, 1]\), \(x \in \mathbb {Q}\), r is a reward structure and \(k \in \mathbb {N}\).

rPATL is a branching-time temporal logic for stochastic games, which combines the probabilistic operator \({\texttt {P}}\) of PCTL [38], PRISM’s reward operator \({\texttt {R}}\) [44], and the coalition operator \(\langle \! \langle {C} \rangle \! \rangle \) of ATL [1]. The syntax distinguishes between state (\(\phi \)), path (\(\psi \)) and reward (\(\rho \)) formulae. State formulae are evaluated over states of a CSG, while path and reward formulae are both evaluated over paths.

The core operators from the existing version of rPATL [19] are \(\langle \! \langle {C} \rangle \! \rangle {\texttt {P}}_{\sim q}[\,{\psi }\,]\) and \(\langle \! \langle {C} \rangle \! \rangle {\texttt {R}}^{r}_{\sim x}[\,{\rho }\,]\). A state satisfies a formula \(\langle \! \langle {C} \rangle \! \rangle {\texttt {P}}_{\sim q}[\,{\psi }\,]\) if the coalition of players C can ensure that the probability of the path formula \(\psi \) being satisfied is \({\sim } q\), regardless of the actions of the other players (\(N{\setminus }C\)) in the game. A state satisfies a formula \(\langle \! \langle {C} \rangle \! \rangle {\texttt {R}}^{r}_{\sim x}[\,{\rho }\,]\) if the players in C can ensure that the expected value of the reward formula \(\rho \) for reward structure r is \({\sim } x\), whatever the other players do. Such properties are inherently zero-sum in nature as one coalition tries to maximise an objective (e.g., the probability of \(\psi \)) and the other tries to minimise it; hence, we call these zero-sum formulae.

The most significant extension we make to the rPATL logic is the addition of nonzero-sum formulae. These take the form \(\langle \! \langle {C{:}C'} \rangle \! \rangle _{{{\,\mathrm{opt}\,}}\sim x}(\theta )\), where C and \(C'\) are two coalitions that represent a partition of the set of players N, and \(\theta \) is the sum of either two probabilistic or two reward objectives. Their meaning is as follows:

-

\(\langle \! \langle {C{:}C'} \rangle \! \rangle _{\max \sim x}(\theta )\) is satisfied if there exists a subgame-perfect SWNE profile between coalitions C and \(C'\) under which the sum of the objectives of C and \(C'\) in \(\theta \) is \({\sim } x\);

-

\(\langle \! \langle {C{:}C'} \rangle \! \rangle _{\min \sim x}(\theta )\) is satisfied if there exists a subgame-perfect SCNE profile between coalitions C and \(C'\) under which the sum of the objectives of C and \(C'\) in \(\theta \) is \({\sim } x\).

Like the existing zero-sum formulae, the new nonzero-sum formulae still split the players into just two coalitions, C and \(C' = N{\setminus }C\). This means that the model checking algorithm (see Sect. 4) reduces to finding equilibria in two-player CSGs, which is more tractable than for larger numbers of players. Technically, therefore, we could remove the second coalition \(C'\) from the syntax. However, we retain it for clarity about which coalition corresponds to each of the two objectives, and to allow a later extension to more than two coalitions [47].

Both types of formula, zero-sum and nonzero-sum, are composed of path (\(\psi \)) and reward (\(\rho \)) formulae, used in probabilistic and reward objectives included within \({\texttt {P}}\) and \({\texttt {R}}\) operators, respectively. For path formulae, we follow the existing rPATL syntax from [19] and allow next (\({\texttt {X}\,}\phi \)), bounded until (\(\phi {\ \texttt {U}^{\leqslant k}\ }\phi \)) and unbounded until (\(\phi {\ \texttt {U}\ }\phi \)). We also allow the usual equivalences such as \({\texttt {F}\ }\phi \equiv \texttt {true}{\ \texttt {U}\ }\phi \) (i.e., probabilistic reachability) and \({\texttt {F}^{\leqslant k}\ }\phi \equiv \texttt {true}{\ \texttt {U}^{\leqslant k}\ }\phi \) (i.e., bounded probabilistic reachability).

For reward formulae, we introduce some differences with respect to [19]. We allow instantaneous (state) reward at the kth step (instantaneous reward \(\texttt {I}^{=k}\)), reward accumulated over k steps (bounded cumulative reward \(\texttt {C}^{\leqslant k}\)), and reward accumulated until a formula \(\phi \) is satisfied (expected reachability \({\texttt {F}\ }\phi \)). The first two, adapted from the property specification language of PRISM [44], were not previously included in rPATL, but proved to be useful for the case studies we present later in Sect. 7.2. For the third (\({\texttt {F}\ }\phi \)), [19] defines several variants, which differ in the treatment of paths that never reach a state satisfying \(\phi \). We restrict our attention to the most commonly used one, which is the default in PRISM, where paths that never satisfy \(\phi \) have infinite reward. In the case of zero-sum formulae, adding the additional variants is straightforward based on the algorithm of [19]. On the other hand, for nonzero-sum formulae, currently no algorithms exist for these variants.

As for other probabilistic temporal logics, it is useful to consider numerical queries, which represent the value of an objective, rather than checking whether it is above or below some threshold. In the case of zero-sum formulae, these take the form \(\langle \! \langle {C} \rangle \! \rangle {\texttt {P}}_{\min =?}[\,{\psi }\,]\), \(\langle \! \langle {C} \rangle \! \rangle {\texttt {P}}_{\max =?}[\,{\psi }\,]\), \(\langle \! \langle {C} \rangle \! \rangle {\texttt {R}}^{r}_{\min =?}[\,{\rho }\,]\) and \(\langle \! \langle {C} \rangle \! \rangle {\texttt {R}}^{r}_{\max =?}[\,{\rho }\,]\). For nonzero-sum formulae, numerical queries are of the form \(\langle \! \langle {C{:}C'} \rangle \! \rangle _{\max =?}(\theta )\) and \(\langle \! \langle {C{:}C'} \rangle \! \rangle _{\min =?}(\theta )\), which return the SWNE and SCNE values, respectively.

Example 5

Consider a scenario in which two robots (\( rbt _1\) and \( rbt _2\)) move concurrently over a square grid of cells, where each is trying to reach their individual goal location. Each step of the robot involves transitioning to an adjacent cell, possibly stochastically. Examples of zero-sum formulae, where \(\mathsf {crash}, \mathsf {goal}_1, \mathsf {goal}_2\) denote the obvious atomic propositions labelling states, include:

-

\(\langle \! \langle { rbt _1} \rangle \! \rangle {\texttt {P}}_{\max =?}[\,{\lnot \mathsf {crash}\ {\texttt {U}}^{\leqslant 10}\ \mathsf {goal}_1}\,]\) asks what is the maximum probability with which the first robot can ensure that it reaches its goal location within 10 steps and without crashing, no matter how the second robot behaves;

-

\(\langle \! \langle { rbt _2} \rangle \! \rangle {\texttt {R}}^{r_{\mathsf {crash}}}_{\leqslant 1.5}[\,{{\texttt {F}\ }\mathsf {goal}_2}\,]\) states that, no matter the behaviour of the first robot, the second robot can ensure the expected number of times it crashes before reaching its goal is less than or equal to 1.5 (\(r_{\mathsf {crash}}\) is a reward structure that assigns 1 to states labelled \(\mathsf {crash}\) and 0 to all other states).

Examples of nonzero-sum formulae include:

-

\(\langle \! \langle { rbt _1{:} rbt _2} \rangle \! \rangle _{\max \geqslant 2}({\texttt {P}}_{}[\,{{\texttt {F}\ }\mathsf {goal}_1}\,]{+}{\texttt {P}}_{}[\,{\lnot \mathsf {crash} \ {\texttt {U}}^{\leqslant 10} \mathsf {goal}_2}\,])\) states the robots can collaborate so that both reach their goal with probability 1, with the additional condition that the second has to reach its goal within 10 steps without crashing;

-

\(\langle \! \langle { rbt _1{:} rbt _2} \rangle \! \rangle _{\min =?}({\texttt {R}}^{r_{\mathit{steps}}}_{}[\,{{\texttt {F}\ }\mathsf {goal}_1}\,]{+}{\texttt {R}}^{r_{\mathit{steps}}}_{}[\,{{\texttt {F}\ }\mathsf {goal}_2}\,])\) asks what is the sum of expected reachability values when the robots collaborate and each wants to minimise their expected steps to reach their goal (\(r_{\mathit{steps}}\) is a reward structure that assigns 1 to all state and action tuple pairs).

Examples of more complex nested formulae for this scenario include the following, where \(r_{\mathit{steps}}\) is as above:

-

\(\langle \! \langle { rbt _1} \rangle \! \rangle {\texttt {P}}_{\max =?}[\,{ {\texttt {F}\ }\langle \! \langle { rbt _2} \rangle \! \rangle {\texttt {R}}^{r_{\mathit{steps}}}_{\geqslant 10}[\,{{\texttt {F}}\, \mathsf {goal}_2}\,]}\,]\) asks what is the maximum probability with which the first robot can get to a state where the expected time for the second robot to reach their goal is at least 10 steps;

-

\(\langle \! \langle { rbt _1, rbt _2} \rangle \! \rangle {\texttt {P}}_{\geqslant 0.75}[\,{ {\texttt {F}\ }\langle \! \langle { rbt _1{:} rbt _2} \rangle \! \rangle _{\min \leqslant 5}({\texttt {R}}^{r_{\mathit{steps}}}_{}[\,{{\texttt {F}}\, \mathsf {goal}_1}\,]{+}{\texttt {R}}^{r_{\mathit{steps}}}_{}[\,{{\texttt {F}}\, \mathsf {goal}_2}\,])}\,]\) states the robots can collaborate to reach, with probability at least 0.75, a state where the sum of the expected times for the robots to reach their goals is at most 5.

Before giving the semantics of the logic, we define coalition games which, for a CSG \(\mathsf G \) and coalition (set of players) \(C\subseteq N\), reduce \(\mathsf G \) to a two-player CSG \(\mathsf G ^C\), with one player representing C and the other \(N{\setminus }C\). Without loss of generality we assume the coalition of players is of the form \(C = \{1,\dots ,n'\}\).

Definition 13

(Coalition game) For CSG \(\mathsf G = (N, S, \bar{s}, A, \varDelta , \delta , { AP }, { L })\) and coalition \(C = \{1,\dots ,n'\} \subseteq N\), the coalition game \(\mathsf G ^C = ( \{1,2\}, S, \bar{s}, A^C, \varDelta ^C, \delta ^C, { AP }, { L })\) is a two-player CSG where:

-

\(A^C = (A^C_1\cup \{ \bot \}) {\times } (A^C_2\cup \{ \bot \})\);

-

\(A^C_1 = (A_1\cup \{\bot \}) {\times } \cdots {\times } (A_{n'}\cup \{\bot \}) \setminus \{(\bot ,\dots ,\bot )\}\);

-

\(A^C_2 = (A_{n'+1}\cup \{\bot \}) {\times } \cdots {\times } (A_n\cup \{\bot \}) \setminus \{(\bot ,\dots ,\bot )\}\);

-

\(a_1^C = (a_1,\dots ,a_{n'}) \in \varDelta ^C(s)\) if and only if either \(\varDelta (s) \cap A_j =\varnothing \) and \(a_j=\bot \) or \(a_j \in \varDelta (s)\) for all \(1 \leqslant j \leqslant n'\) and \(a_2^C = (a_{n'+1},\dots ,a_n) \in \varDelta ^C(s)\) if and only if either \(\varDelta (s) \cap A_j =\varnothing \) and \(a_j=\bot \) or \(a_j \in \varDelta (s)\) for all \(n'+1 \leqslant j \leqslant n\) for \(s \in S\);

-

for any \(s \in S\), \(a^C_1 \in A^C_1\) and \(a^C_2 \in A^C_2\) we have \(\delta ^C(s,(a^C_1,a^C_2))=\delta (s,(a_1,a_2))\) where \(a_i=(\bot ,\dots ,\bot )\) if \(a^C_i=\bot \) and \(a_i=a^C_i\) otherwise for \(1 \leqslant i \leqslant 2\).

Furthermore, for a reward structure \(r=(r_A,r_S)\) of \(\mathsf G \), by abuse of notation we also use r for the corresponding reward structure \(r=(r^C_A,r^C_S)\) of \(\mathsf G ^C\) where:

-

for any \(s \in S\), \(a^C_1 \in A^C_1\) and \(a^C_2 \in A^C_2\) we have \(r^C_A(s,(a^C_1,a^C_2))=r_A(s,(a_1,a_2))\) where \(a_i=(\bot ,\dots ,\bot )\) if \(a^C_i=\bot \) and \(a_i=a^C_i\) otherwise for \(1 \leqslant i \leqslant 2\);

-

for any \(s \in S\) we have \(r^C_S(s) =r_S(s)\).
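
The construction of the coalition's available actions in a single state can be sketched as follows. This is a minimal illustration, assuming that the actions available to each player in the state are given as a dictionary; the name `coalition_actions` and the use of `None` for the idle action \(\bot \) are choices made for the example.

```python
from itertools import product

BOT = None  # stands for the idle action ⊥


def coalition_actions(members, available):
    """Joint actions of a coalition in one state.

    Each member contributes one of its available actions, or ⊥ when it has
    no action available in the state.
    """
    choices = [sorted(available.get(p, [])) or [BOT] for p in members]
    return list(product(*choices))


# Hypothetical state of a three-player CSG: player 3 has nothing to do.
available = {1: {"n", "s"}, 2: {"e"}, 3: set()}

first_coalition = coalition_actions([1, 2], available)
second_coalition = coalition_actions([3], available)
```

With the coalition \(C = \{1,2\}\), player 1 of the coalition game chooses among the tuples in `first_coalition`, while player 2 (representing player 3 of the original game) has only the idle tuple.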

Our logic includes both finite-horizon (\({\texttt {X}\,}\), \({\texttt {U}^{\leqslant k}}\), \(\texttt {I}^{=k}\), \(\texttt {C}^{\leqslant k}\)) and infinite-horizon (\({\texttt {U}}\), \({\texttt {F}}\)) temporal operators. For the latter, the existence of SWNE or SCNE profiles is an open problem [7], but we can check for \(\varepsilon \)-SWNE or \(\varepsilon \)-SCNE profiles for any \(\varepsilon >0\). Hence, we define the semantics of the logic in the context of a particular \(\varepsilon \).

Definition 14

(Extended rPATL semantics) For a CSG \(\mathsf G \), \(\varepsilon >0\) and a formula \(\phi \) in our rPATL extension, we define the satisfaction relation \({\,\models \,}\) inductively over the structure of \(\phi \). The propositional logic fragment \((\texttt {true}\), \(\mathsf {a} \), \(\lnot \), \(\wedge )\) is defined in the usual way. For a zero-sum formula and state \(s \in S\) of CSG \(\mathsf G \), we have:

\[ \begin{array}{rcl} s {\,\models \,}\langle \! \langle {C} \rangle \! \rangle {\texttt {P}}_{\sim q}[\,{\psi }\,] & \Leftrightarrow & \exists \sigma _1 \in \varSigma ^1 .\, \forall \sigma _2 \in \varSigma ^2 .\, \mathbb {E}^{\sigma _1,\sigma _2}_{\mathsf{G}^C,s}(X^\psi ) \sim q \\ s {\,\models \,}\langle \! \langle {C} \rangle \! \rangle {\texttt {R}}^{r}_{\sim x}[\,{\rho }\,] & \Leftrightarrow & \exists \sigma _1 \in \varSigma ^1 .\, \forall \sigma _2 \in \varSigma ^2 .\, \mathbb {E}^{\sigma _1,\sigma _2}_{\mathsf{G}^C,s}(X^{r,\rho }) \sim x \end{array} \]

For a nonzero-sum formula and state \(s \in S\) of CSG \(\mathsf G \), we have:

\[ s {\,\models \,}\langle \! \langle {C{:}C'} \rangle \! \rangle _{{{\,\mathrm{opt}\,}}\sim x}(\theta ) \;\Leftrightarrow \; \exists \sigma _1^\star \in \varSigma ^1, \sigma _2^\star \in \varSigma ^2 .\, \big ( \mathbb {E}^{\sigma _1^\star ,\sigma _2^\star }_{\mathsf{G}^C,s}(X^\theta _1) + \mathbb {E}^{\sigma _1^\star ,\sigma _2^\star }_{\mathsf{G}^C,s}(X^\theta _2) \big ) \sim x \]

where \((\sigma _1^\star ,\sigma _2^\star )\) is a subgame-perfect \(\varepsilon \)-SWNE profile if \({{\,\mathrm{opt}\,}}= \max \), or a subgame-perfect \(\varepsilon \)-SCNE profile if \({{\,\mathrm{opt}\,}}= \min \), for the objectives \((X^\theta _1,X^\theta _2)\) in \(\mathsf G ^{C}\). For an objective \(X^{\psi }\), \(X^{r,\rho }\) or \(X^\theta _i\) (\(1 \leqslant i \leqslant 2\)), and path \(\pi \in IPaths _\mathsf{G ^C,s}\):

\[ X^\psi (\pi ) = \begin{cases} 1 & \text{if } \pi {\,\models \,}\psi \\ 0 & \text{otherwise} \end{cases} \qquad X^{r,\rho }(\pi ) = rew (r,\rho )(\pi ) \qquad X^\theta _i(\pi ) = \begin{cases} X^{\psi ^i}(\pi ) & \text{if } \theta = {\texttt {P}}_{}[\,{\psi ^1}\,]{+}{\texttt {P}}_{}[\,{\psi ^2}\,] \\ X^{r_i,\rho ^i}(\pi ) & \text{if } \theta = {\texttt {R}}^{r_1}_{}[\,{\rho ^1}\,]{+}{\texttt {R}}^{r_2}_{}[\,{\rho ^2}\,] \end{cases} \]

For a temporal formula and path \(\pi \in IPaths _\mathsf{G ^C,s}\):

\[ \begin{array}{rcl} \pi {\,\models \,}{\texttt {X}\,}\phi & \Leftrightarrow & \pi (1) {\,\models \,}\phi \\ \pi {\,\models \,}\phi _1 {\ \texttt {U}^{\leqslant k}\ }\phi _2 & \Leftrightarrow & \exists i \leqslant k .\, \big ( \pi (i) {\,\models \,}\phi _2 \wedge \forall j < i .\, \pi (j) {\,\models \,}\phi _1 \big ) \\ \pi {\,\models \,}\phi _1 {\ \texttt {U}\ }\phi _2 & \Leftrightarrow & \exists i \in \mathbb {N}.\, \big ( \pi (i) {\,\models \,}\phi _2 \wedge \forall j < i .\, \pi (j) {\,\models \,}\phi _1 \big ) \end{array} \]

For a reward structure r, reward formula and path \(\pi \in IPaths _\mathsf{G ^C,s}\):

\[ \begin{array}{rcl} rew (r,\texttt {I}^{=k})(\pi ) & = & r_S(\pi (k)) \\ rew (r,\texttt {C}^{\leqslant k})(\pi ) & = & \sum _{i=0}^{k-1} \big ( r_A(\pi (i),\pi [i]) + r_S(\pi (i)) \big ) \\ rew (r,{\texttt {F}\ }\phi )(\pi ) & = & \begin{cases} \infty & \text{if } \forall j \in \mathbb {N}.\, \pi (j) {\,\not \models \,}\phi \\ \sum _{i=0}^{k_\phi -1} \big ( r_A(\pi (i),\pi [i]) + r_S(\pi (i)) \big ) & \text{otherwise} \end{cases} \end{array} \]

where \(k_\phi = \min \{ k \mid \pi (k) {\,\models \,}\phi \}\).
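
The path semantics of the next and bounded until operators can be illustrated on a finite path prefix. The sketch below assumes that the sets of states satisfying the subformulae have already been computed (here passed as `sat`, `sat1` and `sat2`, names chosen for the example) and that the prefix contains at least the first k+1 states of the path.

```python
def holds_next(path, sat):
    """pi |= X phi: the second state of the path satisfies phi."""
    return path[1] in sat


def holds_bounded_until(path, sat1, sat2, k):
    """pi |= phi1 U<=k phi2, decided on the first k+1 states of the path."""
    for s in path[:k + 1]:
        if s in sat2:
            return True       # phi2 reached, phi1 held at all earlier states
        if s not in sat1:
            return False      # phi1 violated before phi2 was reached
    return False              # phi2 not reached within k steps


# Hypothetical prefixes over states a, b, g with Sat(phi1)={a}, Sat(phi2)={g}.
too_short = holds_bounded_until(["a", "a", "g"], {"a"}, {"g"}, k=1)
in_time = holds_bounded_until(["a", "a", "g"], {"a"}, {"g"}, k=2)
violated = holds_bounded_until(["a", "b", "g"], {"a"}, {"g"}, k=2)
next_ok = holds_next(["a", "g"], {"g"})
```

The objective \(X^\psi \) is the indicator of such a check, so its expected value is the probability of the set of paths on which the check succeeds.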

Using the notation above, we can also define the numerical queries mentioned previously. For example, for state s we have:

\[ \begin{array}{rcl} \langle \! \langle {C} \rangle \! \rangle {\texttt {P}}_{\max =?}[\,{\psi }\,] & = & \sup _{\sigma _1 \in \varSigma ^1} \inf _{\sigma _2 \in \varSigma ^2} \mathbb {E}^{\sigma _1,\sigma _2}_{\mathsf{G}^C,s}(X^\psi ) \\ \langle \! \langle {C} \rangle \! \rangle {\texttt {R}}^{r}_{\max =?}[\,{\rho }\,] & = & \sup _{\sigma _1 \in \varSigma ^1} \inf _{\sigma _2 \in \varSigma ^2} \mathbb {E}^{\sigma _1,\sigma _2}_{\mathsf{G}^C,s}(X^{r,\rho }) \end{array} \]

As the zero-sum objectives appearing in the logic are either finite-horizon or infinite-horizon and correspond to either probabilistic until or expected reachability formulae, we have that CSGs are determined (see Definition 9) with respect to these objectives [55], and therefore values exist. More precisely, for any CSG \(\mathsf G \), coalition C, state s, path formula \(\psi \), reward structure r and reward formula \(\rho \), the values \({ val }_\mathsf{G ^C}(s,X^\psi )\) and \({ val }_\mathsf{G ^C}(s,X^{r,\rho })\) of the game \(\mathsf G ^C\) in state s with respect to the objectives \(X^\psi \) and \(X^{r,\rho }\) are well defined. This determinacy result also yields the following equivalences:

\[ \langle \! \langle {C} \rangle \! \rangle {\texttt {P}}_{\max =?}[\,{\psi }\,] \equiv \langle \! \langle {N{\setminus }C} \rangle \! \rangle {\texttt {P}}_{\min =?}[\,{\psi }\,] \qquad \langle \! \langle {C} \rangle \! \rangle {\texttt {R}}^{r}_{\max =?}[\,{\rho }\,] \equiv \langle \! \langle {N{\setminus }C} \rangle \! \rangle {\texttt {R}}^{r}_{\min =?}[\,{\rho }\,] \]

Also, as for other probabilistic temporal logics, we can represent negated path formulae by inverting the probability threshold, e.g.: \(\langle \! \langle {C} \rangle \! \rangle {\texttt {P}}_{\geqslant q}[\,{\lnot \psi }\,] \equiv \langle \! \langle {C} \rangle \! \rangle {\texttt {P}}_{\leqslant 1-q}[\,{\psi }\,]\) and \(\langle \! \langle {C{:}C'} \rangle \! \rangle _{\max \geqslant q }({\texttt {P}}_{}[\,{\psi _1}\,]{+}{\texttt {P}}_{}[\,{\psi _2}\,]) \equiv \langle \! \langle {C{:}C'} \rangle \! \rangle _{\min \leqslant 2-q }({\texttt {P}}_{}[\,{\lnot \psi _1}\,]{+}{\texttt {P}}_{}[\,{\lnot \psi _2}\,])\), notably allowing the ‘globally’ operator \({\texttt {G}\ }\phi \equiv \lnot ({\texttt {F}\ }\lnot \phi )\) to be defined.
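
The arithmetic behind the nonzero-sum equivalence can be spelled out as follows. As a sketch, fix a profile and write \(p_i\) for the probability of \(\psi _i\) under that profile (the symbols \(p_1, p_2\) are introduced here only for illustration), so that the probability of \(\lnot \psi _i\) is \(1-p_i\):

\[ (1-p_1) + (1-p_2) = 2 - (p_1+p_2) \qquad \text{and hence} \qquad p_1+p_2 \geqslant q \;\Leftrightarrow \; (1-p_1) + (1-p_2) \leqslant 2-q . \]

Since the transformation \(p_i \mapsto 1-p_i\) reverses each player's preference order, a profile maximising the sum of the original objectives is one minimising the sum of the negated objectives, which is why \(\max \) is replaced by \(\min \).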

4 Model checking for extended rPATL against CSGs

We now present model checking algorithms for the extended rPATL logic, introduced in the previous section, on a CSG \(\mathsf G \). Since rPATL is a branching-time logic, this works by recursively computing the set \({ Sat }(\phi )\) of states satisfying formula \(\phi \) over the structure of \(\phi \), as is done for rPATL on TSGs [19].

If \(\phi \) is a zero-sum formula of the form \(\langle \! \langle {C} \rangle \! \rangle {\texttt {P}}_{\sim q}[\,{\psi }\,]\) or \(\langle \! \langle {C} \rangle \! \rangle {\texttt {R}}^{r}_{\sim x}[\,{\rho }\,]\), this reduces to computing values for a two-player CSG (either \(\mathsf G ^C\) or \(\mathsf G ^{N \setminus C}\)) with respect to \(X^\psi \) or \(X^{r,\rho }\). In particular, for \(\sim \, \in \{ \geqslant , > \}\) and \(s \in S\) we have:

\[ \begin{array}{rcl} s \in { Sat }(\langle \! \langle {C} \rangle \! \rangle {\texttt {P}}_{\sim q}[\,{\psi }\,]) & \Leftrightarrow & { val }_{\mathsf{G}^C}(s,X^\psi ) \sim q \\ s \in { Sat }(\langle \! \langle {C} \rangle \! \rangle {\texttt {R}}^{r}_{\sim x}[\,{\rho }\,]) & \Leftrightarrow & { val }_{\mathsf{G}^C}(s,X^{r,\rho }) \sim x \end{array} \]

and, since CSGs are determined for the zero-sum properties we consider, for \(\sim \, \in \{ < , \leqslant \}\) we have:

\[ \begin{array}{rcl} s \in { Sat }(\langle \! \langle {C} \rangle \! \rangle {\texttt {P}}_{\sim q}[\,{\psi }\,]) & \Leftrightarrow & { val }_{\mathsf{G}^{N \setminus C}}(s,X^\psi ) \sim q \\ s \in { Sat }(\langle \! \langle {C} \rangle \! \rangle {\texttt {R}}^{r}_{\sim x}[\,{\rho }\,]) & \Leftrightarrow & { val }_{\mathsf{G}^{N \setminus C}}(s,X^{r,\rho }) \sim x \end{array} \]

Without loss of generality, for such formulae we focus on computing \({ val }_\mathsf{G ^C}(s,X^\psi )\) and \({ val }_\mathsf{G ^C}(s,X^{r,\rho })\) and, to simplify the presentation, we denote these values by \({\texttt {V}}_\mathsf{G ^C}(s,\psi )\) and \({\texttt {V}}_\mathsf{G ^C}(s,r,\rho )\) respectively.
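
Once the values have been computed, obtaining the satisfying set is a simple comparison per state. The following sketch assumes the values are held in a dictionary from states to numbers; the function name and the string encoding of the relation \(\sim \) are assumptions made for the example.

```python
import operator

RELATIONS = {"<": operator.lt, "<=": operator.le,
             ">=": operator.ge, ">": operator.gt}


def sat_from_values(values, rel, bound):
    """States whose computed game value meets the threshold `rel bound`."""
    compare = RELATIONS[rel]
    return {s for s, v in values.items() if compare(v, bound)}


# Hypothetical values of a probabilistic objective in three states.
values = {"s0": 0.5, "s1": 1.0, "s2": 0.0}
at_least_half = sat_from_values(values, ">=", 0.5)
below_half = sat_from_values(values, "<", 0.5)
```

For the relations \(<\) and \(\leqslant \), the dictionary would hold the values of the game \(\mathsf G ^{N \setminus C}\) rather than \(\mathsf G ^C\), as described above.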

If, on the other hand, \(\phi \) is a nonzero-sum formula of the form \(\langle \! \langle {C{:}C'} \rangle \! \rangle _{{{\,\mathrm{opt}\,}}\sim x}(\theta )\) then, from the semantics for \(\langle \! \langle {C{:}C'} \rangle \! \rangle _{{{\,\mathrm{opt}\,}}\sim x}(\theta )\) (see Definition 14), computing \({ Sat }(\phi )\) reduces to the computation of subgame-perfect SWNE or SCNE values for the objectives \((X^\theta _1,X^\theta _2)\) and a comparison of their sum to the threshold x. Again, to simplify the presentation, we will use the notation \({\texttt {V}}_\mathsf{G ^C}(s,\theta )\) for the SWNE values of the objectives \((X^\theta _1,X^\theta _2)\) in state s of \(\mathsf G ^C\).

For the remainder of this section, we fix a CSG \(\mathsf G = (N, S, \bar{s}, A, \varDelta , \delta , { AP }, { L })\) and coalition C of players and assume that the available actions of players 1 and 2 of the (two-player) CSG \(\mathsf G ^C\) in a state s are \(\{a_1,\dots ,a_l\}\) and \(\{b_1,\dots ,b_m\}\), respectively. We also fix a value \(\varepsilon >0\) which, as discussed in Sect. 3, is needed to define the semantics of our logic, in particular for infinite-horizon objectives where we need to consider \(\varepsilon \)-SWNE profiles.

Assumptions Our model checking algorithms require several assumptions on CSGs, depending on the operators that appear in the formula \(\phi \). These can all be checked using standard graph algorithms [23]. In the diverse set of model checking case studies that we later present in Sect. 7.2, these assumptions have not limited the practical applicability of our model checking algorithms.

For zero-sum formulae, the only restriction is for infinite-horizon reward properties on CSGs with both positive and negative reward values.

Assumption 1

For a zero-sum formula of the form \(\langle \! \langle {C} \rangle \! \rangle {\texttt {R}}^{r}_{\sim x}[\,{{\texttt {F}\ }\phi }\,]\), from any state s where \(r_S(s)<0\) or \(r_A(s,a)<0\) for some action a, under all profiles of \(\mathsf G \), with probability 1 we reach either a state satisfying \(\phi \) or a state where all rewards are zero and which cannot be left with probability 1 under all profiles.

Without this assumption, the values computed during value iteration can oscillate, and therefore fail to converge (see “Appendix A”). This restriction is not applied in the existing rPATL model checking algorithms for TSGs [19] since that work assumes that all rewards are non-negative.

The remaining two assumptions concern nonzero-sum formulae that contain infinite-horizon objectives. We restrict our attention to a class of CSGs that can be seen as a variant of stopping games [20], as used for multi-objective TSGs. Compared to [20], we use a weaker, objective-dependent assumption, which ensures that, under all profiles, with probability 1, eventually the outcome of each player’s objective does not change by continuing.

Assumption 2

For nonzero-sum formulae, if \({\texttt {P}}_{}[\,{\phi _1 {\ \texttt {U}\ }\phi _2}\,]\) is a probabilistic objective, then \({ Sat }(\lnot \phi _1 \vee \phi _2)\) is reached with probability 1 from all states under all profiles of \(\mathsf G \).

Assumption 3

For nonzero-sum formulae, if \({\texttt {R}}^{r}_{}[\,{{\texttt {F}\ }\phi }\,]\) is a reward objective, then \({ Sat }(\phi )\) is reached with probability 1 from all states under all profiles of \(\mathsf G \).

As for Assumption 1, without these restrictions, value iteration may not converge since values can oscillate (see “Appendices B, C”). Notice that Assumption 1 is not required for nonzero-sum properties containing negative rewards since Assumption 3 is itself a stronger restriction.

4.1 Model checking zero-sum properties

In this section, we present algorithms for zero-sum properties, i.e., for computing the values \({\texttt {V}}_\mathsf{G ^C}(s,\psi )\) or \({\texttt {V}}_\mathsf{G ^C}(s,r,\rho )\) for path formulae \(\psi \) or reward formulae \(\rho \) in all states s of \(\mathsf G ^C\). We split the presentation into finite-horizon properties, which can be solved exactly using backward induction [70, 77], and infinite-horizon properties, for which we approximate values using value iteration [15, 68]. Both cases require the solution of matrix games, for which we rely on the linear programming approach presented in Sect. 2.1.1.

4.1.1 Computing the values of zero-sum finite-horizon formulae

Finite-horizon properties are defined over a bounded number of steps: the next or bounded until operators for probabilistic formulae, and the instantaneous or bounded cumulative reward operators. Computation of the values \({\texttt {V}}_\mathsf{G ^C}(s,\psi )\) or \({\texttt {V}}_\mathsf{G ^C}(s,r,\rho )\) for these is done recursively, based on the step bound, using backward induction and solving matrix games in each state at each iteration. The actions of each matrix game correspond to the actions available in that state; the utilities are constructed from the transition probabilities \(\delta ^C\) of the game \(\mathsf G ^C\), the reward structure r (in the case of reward formulae) and the values already computed recursively for successor states.

Next This is the simplest operator, over just one step, and so in fact requires no recursion, just solution of a matrix game for each state. If \(\psi = {\texttt {X}\,}\phi \), then for any state s we have that \({\texttt {V}}_\mathsf{G ^C}(s,\psi ) = { val }(\mathsf Z )\) where \(\mathsf Z \in \mathbb {Q}^{l \times m}\) is the matrix game with:

\[ z_{i,j} = \sum _{s' \in { Sat }(\phi )} \delta ^C(s,(a_i,b_j))(s') \]

Bounded Until If \(\psi = \phi _1 {\ \texttt {U}^{\leqslant k}\ }\phi _2\), we compute the values for the path formulae \(\psi _{n} = \phi _1 \ {\texttt {U}}^{\leqslant n}\ \phi _2\) for \(0 \leqslant n \leqslant k\) recursively. For any state s:

\[ {\texttt {V}}_{\mathsf{G}^C}(s,\psi _n) = \begin{cases} 1 & \text{if } s \in { Sat }(\phi _2) \\ 0 & \text{else if } s \not \in { Sat }(\phi _1) \\ 0 & \text{else if } n = 0 \\ { val }(\mathsf Z ) & \text{otherwise} \end{cases} \]

where \({ val }(\mathsf Z )\) equals the value of the matrix game \(\mathsf Z \in \mathbb {Q}^{l \times m}\) with:

\[ z_{i,j} = \sum _{s' \in S} \delta ^C(s,(a_i,b_j))(s') \cdot v^{s'}_{n-1} \]

and \(v^{s'}_{n-1} = {\texttt {V}}_\mathsf{G ^C}(s',\psi _{n-1})\) for all \(s' \in S\).
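
The recursion for bounded until can be sketched in Python as follows. Several simplifications are assumed for the sake of a short, self-contained example: every state has exactly two actions per player, so the matrix game is solved with the standard closed form for 2×2 games instead of linear programming, and the recursion uses the usual case split (value 1 in states satisfying \(\phi _2\), value 0 in states violating \(\phi _1\) or at step bound 0, and the matrix game value otherwise). The toy game and all identifiers are hypothetical.

```python
from fractions import Fraction


def val_2x2(Z):
    """Value of a 2x2 zero-sum matrix game (row player maximises)."""
    (a, b), (c, d) = Z
    maximin = max(min(a, b), min(c, d))   # best pure guarantee for the rows
    minimax = min(max(a, c), max(b, d))   # best pure guarantee for the columns
    if maximin == minimax:                # pure saddle point
        return maximin
    # No saddle point: both players mix, standard closed form.
    return (a * d - b * c) / (a + d - b - c)


def bounded_until_values(states, delta, sat1, sat2, k):
    """Values of phi1 U<=k phi2 in all states, by backward induction."""
    v = {s: Fraction(1 if s in sat2 else 0) for s in states}   # step bound 0
    for _ in range(k):
        nxt = {}
        for s in states:
            if s in sat2:
                nxt[s] = Fraction(1)
            elif s not in sat1:
                nxt[s] = Fraction(0)
            else:
                # z[i][j]: expected value of the successors under (a_i, b_j)
                Z = [[sum((p * v[t] for t, p in delta[s][(i, j)].items()),
                          Fraction(0))
                      for j in range(2)] for i in range(2)]
                nxt[s] = val_2x2(Z)
        v = nxt
    return v


# Toy game: in s0 the goal is reached iff both players pick the same action;
# otherwise the game stays in s0.
states = ["s0", "goal"]
delta = {"s0": {(i, j): ({"goal": Fraction(1)} if i == j
                         else {"s0": Fraction(1)})
                for i in range(2) for j in range(2)}}
values = [bounded_until_values(states, delta, {"s0", "goal"}, {"goal"}, n)["s0"]
          for n in range(4)]
# values == [0, 1/2, 3/4, 7/8]
```

In this example the value for bound n is \(1 - 2^{-n}\), a non-decreasing sequence that approaches the value of the unbounded until formula.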

Instantaneous Rewards If \(\rho = \texttt {I}^{=k}\), then for the reward structure r we compute the values for the reward formulae \(\rho _{n} = \texttt {I}^{=n}\) for \(0 \leqslant n \leqslant k\) recursively. For any state s:

\[ {\texttt {V}}_{\mathsf{G}^C}(s,r,\rho _n) = \begin{cases} r_S(s) & \text{if } n = 0 \\ { val }(\mathsf Z ) & \text{otherwise} \end{cases} \]

where \({ val }(\mathsf Z )\) equals the value of the matrix game \(\mathsf Z \in \mathbb {Q}^{l \times m}\) with:

\[ z_{i,j} = \sum _{s' \in S} \delta ^C(s,(a_i,b_j))(s') \cdot v^{s'}_{n-1} \]

and \(v^{s'}_{n-1} = {\texttt {V}}_\mathsf{G ^C}(s',r,\rho _{n-1})\) for all \(s' \in S\).

Bounded Cumulative Rewards If \(\rho = \texttt {C}^{\leqslant k}\), then for the reward structure r we compute the values for the reward formulae \(\rho _{n} = \texttt {C}^{\leqslant n}\) for \(0 \leqslant n \leqslant k\) recursively. For any state s:

\[ {\texttt {V}}_{\mathsf{G}^C}(s,r,\rho _n) = \begin{cases} 0 & \text{if } n = 0 \\ { val }(\mathsf Z ) & \text{otherwise} \end{cases} \]

where \({ val }(\mathsf Z )\) equals the value of the matrix game \(\mathsf Z \in \mathbb {Q}^{l \times m}\) with:

\[ z_{i,j} = r_A(s,(a_i,b_j)) + r_S(s) + \sum _{s' \in S} \delta ^C(s,(a_i,b_j))(s') \cdot v^{s'}_{n-1} \]

and \(v^{s'}_{n-1} = {\texttt {V}}_\mathsf{G ^C}(s',r,\rho _{n-1})\) for all \(s' \in S\).

4.1.2 Computing the values of zero-sum infinite-horizon formulae

We now discuss how to compute the values \({\texttt {V}}_\mathsf{G ^C}(s,\psi )\) and \({\texttt {V}}_\mathsf{G ^C}(s,r,\rho )\) for infinite-horizon properties, i.e., when the path formula \(\psi \) is an until operator, or for the expected reachability variant of the reward formulae \(\rho \). In both cases, we approximate these values using value iteration, adopting a similar recursive computation to the finite-horizon cases above, solving matrix games in each state and at each iteration, which converges in the limit to the desired values.

Following the approach typically taken in probabilistic model checking tools to implement value iteration, we estimate convergence of the iterative computation by checking the maximum relative difference between successive iterations. However, it is known [36] that, even for simpler probabilistic models such as MDPs, this convergence criterion cannot be used to guarantee that the final computed values are accurate to within a specified error bound. Alternative approaches that resolve this by computing lower and upper bounds for each state have been proposed for MDPs (e.g. [9, 36]) and extended to both single- and multi-objective solution of TSGs [3, 42]; extensions could be investigated for CSGs. Another possibility is to use policy iteration (see, e.g., [14]).
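
The convergence check described above can be sketched as follows. The function names, the default tolerance and the treatment of zero values are assumptions made for the example, and the one-step update is passed in as a function so that the sketch stays independent of any particular operator. As noted in the text, stopping on a small relative difference does not bound the distance to the true values.

```python
def max_rel_diff(old, new):
    """Maximum relative difference between two value vectors."""
    diff = 0.0
    for s in new:
        if new[s] != 0:
            diff = max(diff, abs(new[s] - old[s]) / abs(new[s]))
        elif old[s] != 0:
            diff = float("inf")   # value dropped to zero: treat as unconverged
    return diff


def iterate(step, v0, eps=1e-6, max_iters=100000):
    """Apply `step` until successive vectors differ by less than eps."""
    v = v0
    for n in range(1, max_iters + 1):
        new = step(v)
        if max_rel_diff(v, new) < eps:
            return new, n
        v = new
    raise RuntimeError("no convergence within max_iters iterations")


# Hypothetical one-state update whose iterates are 1 - 2**(-n).
def halve_gap(v):
    return {"s0": (1.0 + v["s0"]) / 2.0}


approx, iterations = iterate(halve_gap, {"s0": 0.0})
```

Here the iteration stops once the relative change falls below the tolerance, returning a value slightly below the limit 1.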

Until If \(\psi = \phi _1 {\ \texttt {U}\ }\phi _2\), the probability values can be approximated through value iteration using the fact that \(\langle {\texttt {V}}_\mathsf{G ^C}(s,\phi _1 {\ \texttt {U}^{\leqslant k}\ }\phi _2) \rangle _{k \in \mathbb {N}}\) is a non-decreasing sequence converging to \({\texttt {V}}_\mathsf{G ^C}(s,\phi _1 {\ \texttt {U}\ }\phi _2)\). We compute \({\texttt {V}}_\mathsf{G ^C}(s,\phi _1 {\ \texttt {U}^{\leqslant k}\ }\phi _2)\) for increasingly large k and estimate convergence as described above, based on the difference between values in successive iterations. However, we can potentially speed up convergence by first precomputing the set of states \(S^{\psi }_0\) for which the value of the zero-sum objective \(X^\psi \) is 0 and the set of states \(S^{\psi }_1\) for which the value is 1 using standard graph algorithms [23]. We can then apply value iteration to approximate \({\texttt {V}}_\mathsf{G ^C}(s,\phi _1 {\ \texttt {U}\ }\phi _2) = \lim _{k \rightarrow \infty } {\texttt {V}}_\mathsf{G ^C}(s,\phi _1 {\ \texttt {U}\ }\phi _2,k)\) where:

\[ {\texttt {V}}_{\mathsf{G}^C}(s,\phi _1 {\ \texttt {U}\ }\phi _2,n) = \begin{cases} 1 & \text{if } s \in S^{\psi }_1 \\ 0 & \text{else if } s \in S^{\psi }_0 \\ 0 & \text{else if } n = 0 \\ { val }(\mathsf Z ) & \text{otherwise} \end{cases} \]

where \({ val }(\mathsf Z )\) equals the value of the matrix game \(\mathsf Z \in \mathbb {Q}^{l \times m}\) with:

\[ z_{i,j} = \sum _{s' \in S} \delta ^C(s,(a_i,b_j))(s') \cdot v^{s'}_{n-1} \]

and \(v^{s'}_{n-1} = {\texttt {V}}_\mathsf{G ^C}(s',\phi _1 {\ \texttt {U}\ }\phi _2,n-1)\) for all \(s' \in S\).

Expected Reachability If \(\rho = {\texttt {F}\ }\phi \) and the reward structure is r, then we first make all states of \(\mathsf G ^C\) satisfying \(\phi \) absorbing, i.e., we remove all outgoing transitions from such states. Second, we find the set of states \(S^\rho _\infty \) for which the reward is infinite; as in [19], this involves finding the set of states satisfying the formula \(\langle \! \langle {C} \rangle \! \rangle {\texttt {P}}_{<1}[\,{{\texttt {F}\ }\phi }\,]\) and we can use the graph algorithms of [23] to find these states. Again following [19], to deal with zero-reward cycles we need to use value iteration to compute a greatest fixed point. This involves first computing upper bounds on the actual values, by changing all zero reward values to some value \(\gamma >0\) to construct the reward structure \(r_\gamma =(r_A^\gamma ,r_S^\gamma )\) and then applying value iteration to approximate \({\texttt {V}}_\mathsf{G ^C}(s,r_\gamma ,\rho ) = \lim _{k \rightarrow \infty } {\texttt {V}}_\mathsf{G ^C}(s,r_\gamma ,\rho _k)\) where:

\[ {\texttt {V}}_{\mathsf{G}^C}(s,r_\gamma ,\rho _n) = \begin{cases} 0 & \text{if } s \in { Sat }(\phi ) \\ \infty & \text{else if } s \in S^\rho _\infty \\ 0 & \text{else if } n = 0 \\ { val }(\mathsf Z ) & \text{otherwise} \end{cases} \]

where \({ val }(\mathsf Z )\) equals the value of the matrix game \(\mathsf Z \in \mathbb {Q}^{l \times m}\) with:

\[ z_{i,j} = r_A^\gamma (s,(a_i,b_j)) + r_S^\gamma (s) + \sum _{s' \in S} \delta ^C(s,(a_i,b_j))(s') \cdot v^{s'}_{n-1} \]

and \(v^{s'}_{n-1} = {\texttt {V}}_\mathsf{G ^C}(s',r_\gamma ,\rho _{n{-}1})\) for all \(s' \in S\). Finally, using these upper bounds as the initial values we again perform value iteration as above, except now using the original reward structure r, i.e., to approximate \({\texttt {V}}_\mathsf{G ^C}(s,r,\rho ) = \lim _{k \rightarrow \infty } {\texttt {V}}_\mathsf{G ^C}(s,r,\rho _k)\). The choice of \(\gamma \) can influence value iteration computations in opposing ways: increasing \(\gamma \) can speed up convergence when computing over-approximations, while potentially slowing it down when computing the actual values.
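
The need for the two-phase computation can be seen on a very small example. The sketch below assumes a single non-target state in which player 1 has one action and player 2 (the minimiser) chooses between a zero-reward self-loop and a move to the target with reward 1, so the matrix game in that state is a single row whose value is its minimum entry. All names and numbers are hypothetical. The true value is 1, since looping forever never reaches the target and therefore has infinite reward.

```python
def value_iteration(reward, v0, iters=1000):
    """Iterate v = min(reward['loop'] + v, reward['go'] + 0) from v0.

    'loop' returns to the same state, 'go' moves to the target (value 0).
    """
    v = v0
    for _ in range(iters):
        v = min(reward["loop"] + v, reward["go"])
    return v


r = {"loop": 0.0, "go": 1.0}

# Starting from 0 with the original rewards gets stuck at the wrong value.
naive = value_iteration(r, 0.0)

# Phase 1: replace zero rewards by gamma > 0 to obtain an upper bound.
gamma = 0.25
r_gamma = {a: (x if x > 0 else gamma) for a, x in r.items()}
upper = value_iteration(r_gamma, 0.0)

# Phase 2: iterate with the original rewards, starting from the upper bound.
value = value_iteration(r, upper)
```

Starting from zero, the zero-reward loop lets the iteration rest at 0, whereas starting from the upper bound it settles on the correct value 1.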

4.2 Model checking nonzero-sum properties

Next, we show how to compute subgame-perfect SWNE and SCNE values for the two objectives corresponding to a nonzero-sum formula. As for the zero-sum case, the approach taken depends on whether the formula contains finite-horizon or infinite-horizon objectives. We now have three cases:

1. when both objectives are finite-horizon, we use backward induction [70, 77] to compute (precise) subgame-perfect SWNE and SCNE values;

2. when both objectives are infinite-horizon, we use value iteration [15, 68] to approximate the values;

3. when there is a mix of the two types of objectives, we convert the problem to two infinite-horizon objectives on an augmented model.

We describe these three cases separately in Sects. 4.2.1, 4.2.2 and 4.2.3, respectively, focusing on the computation of SWNE values. Then, in Sect. 4.2.4, we explain how to adapt this for SCNE values.

In a similar style to the algorithms for zero-sum properties, in all three cases the computation is an iterative process that analyses a two-player game for each state at each step. However, this now requires finding SWNE or SCNE values of a bimatrix game, rather than solving a matrix game as in the zero-sum case. We solve bimatrix games using the approach presented in Sect. 2.1.2 (see also the more detailed discussion of its implementation in Sect. 6.2).

Another important aspect of our algorithms is that, for efficiency, if we reach a state where the value of one player’s objective cannot change (e.g., the goal of that player is reached or can no longer be reached), then we switch to the simpler problem of solving an MDP to find the optimal value for the other player in that state. This is possible since the only SWNE profile in that state corresponds to maximising the objective of the other player. More precisely:

- the first player (whose objective cannot change) is indifferent, since its value will not be affected by the choices of either player;

- the second player cannot do better than the optimal value of its objective in the corresponding MDP where both players collaborate;

- for any NE profile, the value of the first player is fixed and the value of the second is less than or equal to the optimal value of its objective in the MDP.

We use the notation \({\texttt {P}}^{\max }_\mathsf{G ,s}(\psi )\) and \({\texttt {R}}^{\max }_\mathsf{G ,s}(r,\rho )\) for the maximum probability of satisfying the path formula \(\psi \) and the maximum expected reward for the random variable \( rew (r,\rho )\), respectively, when the players collaborate in state s. These values can be computed through standard MDP model checking [6, 22].
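As an illustration of the collaborative MDP view, the following Python sketch approximates a maximum reachability probability by treating every joint action of the two players as a single MDP action. The data layout `delta[s][(a, b)]`, the function name `max_reach_prob` and the use of plain value iteration from below are assumptions made for this example; standard MDP model checkers may use other methods.

```python
def max_reach_prob(delta, targets, eps=1e-10, max_iters=100_000):
    """Approximate the maximum probability of reaching `targets`.

    delta[s][(a, b)] = {successor: probability}; both players collaborate,
    so the maximum ranges over all joint actions (a, b) available in s.
    """
    v = {s: (1.0 if s in targets else 0.0) for s in delta}
    for _ in range(max_iters):
        new = {}
        for s, actions in delta.items():
            if s in targets:
                new[s] = 1.0
            else:
                new[s] = max(sum(p * v[t] for t, p in dist.items())
                             for dist in actions.values())
        done = max(abs(new[s] - v[s]) for s in delta) < eps
        v = new
        if done:
            break
    return v


# Two joint actions in s0; the collaborating players pick the better one.
delta = {
    "s0": {("a", "c"): {"goal": 0.5, "sink": 0.5},
           ("b", "c"): {"goal": 0.9, "sink": 0.1}},
    "goal": {("a", "c"): {"goal": 1.0}},
    "sink": {("a", "c"): {"sink": 1.0}},
}
probs = max_reach_prob(delta, {"goal"})
print(probs["s0"])
```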

4.2.1 Computing SWNE values of finite-horizon nonzero-sum formulae

As for the zero-sum case, for a finite-horizon nonzero-sum formula \(\theta \), we compute the SWNE values \({\texttt {V}}_\mathsf{G ^C}(s,\theta )\) for all states s of \(\mathsf G ^C\) in a recursive fashion based on the step bound. We now solve bimatrix games at each step, which are defined in a similar manner to the matrix games for zero-sum properties: the actions of each bimatrix game correspond to the actions available in that state and the utilities are constructed from the transition probabilities \(\delta ^C\) of the game \(\mathsf G ^C\), the reward structure (in the case of reward formulae) and the values already computed recursively for successor states.

For any state formula \(\phi \) and state s we let \(\eta _{\phi }(s)\) equal 1 if \(s \in { Sat }(\phi )\) and 0 otherwise. Recall that probability and reward values of the form \({\texttt {P}}^{\max }_\mathsf{G ,s}(\psi )\) and \({\texttt {R}}^{\max }_\mathsf{G ,s}(r,\rho )\), respectively, are computed through standard MDP verification. Below, we explain the computation for both types of finite-horizon probabilistic objectives (next and bounded until) and reward objectives (instantaneous and bounded cumulative), as well as combinations of each type.

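Next If \(\theta = {\texttt {P}}_{}[\,{{\texttt {X}\,}\phi ^1}\,]{+}{\texttt {P}}_{}[\,{{\texttt {X}\,}\phi ^2}\,]\), then \({\texttt {V}}_\mathsf{G ^C}(s,\theta )\) equals SWNE values of the bimatrix game \((\mathsf Z _1, \mathsf Z _2) \in \mathbb {Q}^{l \times m}\) where:

\[ z^1_{i,j} = \sum _{s' \in S} \delta ^C(s,(a_i,b_j))(s') \cdot \eta _{\phi ^1}(s') \qquad z^2_{i,j} = \sum _{s' \in S} \delta ^C(s,(a_i,b_j))(s') \cdot \eta _{\phi ^2}(s') \]

Again, since next is a 1-step property, no recursion is required.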
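A small Python sketch of the next case follows, under the assumption that each player's utility for a joint action is the one-step probability of moving to a state satisfying its own \(\phi ^i\). The function `pure_swne` is a deliberate simplification: it searches only pure-strategy equilibria, whereas the approach described in Sect. 2.1.2 handles mixed equilibria via labelled polytopes. All function names and the example game are hypothetical.

```python
def next_bimatrix(delta_s, rows, cols, sat1, sat2):
    """Utilities = one-step probability of landing in each player's goal set.

    delta_s[(a, b)] = {successor: probability} for the current state.
    """
    Z1 = [[sum(p for t, p in delta_s[(a, b)].items() if t in sat1) for b in cols]
          for a in rows]
    Z2 = [[sum(p for t, p in delta_s[(a, b)].items() if t in sat2) for b in cols]
          for a in rows]
    return Z1, Z2


def pure_swne(Z1, Z2):
    """Best pure Nash equilibrium by sum of utilities, or None if none exists."""
    best = None
    for i in range(len(Z1)):
        for j in range(len(Z1[0])):
            row_ok = all(Z1[i][j] >= Z1[k][j] for k in range(len(Z1)))     # player 1 cannot gain
            col_ok = all(Z2[i][j] >= Z2[i][k] for k in range(len(Z1[0])))  # player 2 cannot gain
            if row_ok and col_ok:
                cand = (Z1[i][j], Z2[i][j])
                if best is None or sum(cand) > sum(best):
                    best = cand
    return best


delta_s = {
    ("a1", "b1"): {"g12": 0.5, "none": 0.5},
    ("a1", "b2"): {"g1": 1.0},
    ("a2", "b1"): {"g2": 1.0},
    ("a2", "b2"): {"none": 1.0},
}
Z1, Z2 = next_bimatrix(delta_s, ["a1", "a2"], ["b1", "b2"],
                       sat1={"g1", "g12"}, sat2={"g2", "g12"})
print(Z1, Z2, pure_swne(Z1, Z2))
```

In this example `a1` and `b1` are strictly dominant, so the profile `(a1, b1)` is the only equilibrium and the pure search is sufficient; in general a mixed equilibrium may be the only one or may have higher social welfare.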

Bounded Until If \(\theta = {\texttt {P}}_{}[\,{\phi _1^1 {\ \texttt {U}^{\leqslant k_1}\, } \phi _2^1}\,]\,+\,{\texttt {P}}_{}[\,{\phi _1^2 {\ \texttt {U}^{\leqslant k_2}\, } \phi _2^2}\,]\), we compute SWNE values for the objectives for the nonzero-sum formulae \(\theta _{n+n_1,n+n_2}={\texttt {P}}_{}[\,{\phi _1^1 {\ \texttt {U}^{\leqslant n+n_1}\, } \phi _2^1}\,]\,+\,{\texttt {P}}_{}[\,{\phi _1^2 {\ \texttt {U}^{\leqslant n+n_2}\, } \phi _2^2}\,]\) for \(0 \leqslant n \leqslant k\) recursively, where \(k = \min \{k_1,k_2\}\), \(n_1 = k_1{-}k\) and \(n_2 = k_2{-}k\). In this case, there are three situations in which the value of the objective of one of the players cannot change, and hence we can switch to MDP verification. The first is when the step bound is zero for only one of the corresponding objectives, the second is when a state satisfying \(\phi _2^i\) is reached by only one player i (and therefore the objective is satisfied by that state) and the third is when a state satisfying \(\lnot \phi _1^i \wedge \lnot \phi _2^i\) is reached by only one player i (and therefore the objective is not satisfied by that state). For any state s, if \(n = 0\), then:

On the other hand, if \(n>0\), then:

where \({ val }(\mathsf Z _1, \mathsf Z _2)\) equals SWNE values of the bimatrix game \((\mathsf Z _1,\mathsf Z _2)\in \mathbb {Q}^{l \times m}\):

and \((v^{s',1}_{(n-1)+n_1},v^{s',2}_{(n-1)+n_2}) = {\texttt {V}}_\mathsf{G ^C}(s',\theta _{(n-1)+n_1,(n-1)+n_2})\) for all \(s' \in S\).
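The indexing of the recursion can be made concrete with a short Python sketch. It lists the pairs of step bounds \((n{+}n_1, n{+}n_2)\) visited for \(0 \leqslant n \leqslant k\); the function name `bound_schedule` is an assumption introduced for illustration.

```python
def bound_schedule(k1, k2):
    """Pairs of step bounds (n + n1, n + n2) for n = 0, ..., k."""
    k = min(k1, k2)
    n1, n2 = k1 - k, k2 - k  # at least one of these offsets is zero
    return [(n + n1, n + n2) for n in range(k + 1)]


schedule = bound_schedule(3, 5)
print(schedule)
```

For \(k_1 = 3\) and \(k_2 = 5\), the base case \(n = 0\) corresponds to the bounds (0, 2): only the first objective has a zero step bound, so its value is fixed and the second objective is handled by MDP verification. The final pair (3, 5) recovers the original formula.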

Next and Bounded Until If \(\theta = {\texttt {P}}_{}[\,{{\texttt {X}\,}\phi ^1}\,]{+}{\texttt {P}}_{}[\,{\phi _1^2 {\ \texttt {U}^{\leqslant k_2}\, } \phi _2^2}\,]\), then \({\texttt {V}}_\mathsf{G ^C}(s,\theta )\) equals SWNE values of the bimatrix game \((\mathsf Z _1, \mathsf Z _2) \in \mathbb {Q}^{l \times m}\) where:

In this case, since the value for objectives corresponding to next formulae cannot change after the first step, we can always switch to MDP verification after this step. The symmetric case is similar.

Instantaneous Rewards If \(\theta = {\texttt {R}}^{r_1}_{}[\,{\texttt {I}^{=k_1}}\,]{+}{\texttt {R}}^{r_2}_{}[\,{\texttt {I}^{=k_2}}\,]\), we compute SWNE values of the objectives for the nonzero-sum formulae \(\theta _{n+n_1,n+n_2}={\texttt {R}}^{r_1}_{}[\,{\texttt {I}^{=n+n_1}}\,]+{\texttt {R}}^{r_2}_{}[\,{\texttt {I}^{=n+n_2}}\,]\) for \(0 \leqslant n \leqslant k\) recursively, where \(k = \min \{k_1,k_2\}\), \(n_1 = k_1{-}k\) and \(n_2 = k_2{-}k\). Here, there is only one situation in which the value of the objective of one of the players cannot change: when one of the step bounds equals zero. Hence, this is the only time we switch to MDP verification. For any state s, if \(n = 0\), then:

On the other hand, if \(n>0\), then \({\texttt {V}}_\mathsf{G ^C}(s,\theta _{n+n_1,n+n_2})\) equals SWNE values of the bimatrix game \((\mathsf Z _1, \mathsf Z _2) \in \mathbb {Q}^{l \times m}\) where:

and \((v^{s',1}_{(n-1)+n_1},v^{s',2}_{(n-1)+n_2}) = {\texttt {V}}_\mathsf{G ^C}(s',\theta _{(n-1)+n_1,(n-1)+n_2})\) for all \(s' \in S\).

Bounded Cumulative Rewards If \(\theta = {\texttt {R}}^{r_1}_{}[\,{\texttt {C}^{\leqslant k_1}}\,]{+}{\texttt {R}}^{r_2}_{}[\,{\texttt {C}^{\leqslant k_2}}\,]\), we compute values of the objectives for the formulae \(\theta _{n+n_1,n+n_2}={\texttt {R}}^{r_1}_{}[\,{\texttt {C}^{\leqslant n+n_1}}\,]+{\texttt {R}}^{r_2}_{}[\,{\texttt {C}^{\leqslant n+n_2}}\,]\) for \(0 \leqslant n \leqslant k\) recursively, where \(k = \min \{k_1,k_2\}\), \(n_1 = k_1{-}k\) and \(n_2 = k_2{-}k\). As for instantaneous rewards, the only time we can switch to MDP verification is when one of the step bounds equals zero. For state s, if \(n = 0\):

and if \(n>0\), then \({\texttt {V}}_\mathsf{G ^C}(s,\theta _{n+n_1,n+n_2})\) equals SWNE values of the bimatrix game \((\mathsf Z _1, \mathsf Z _2) \in \mathbb {Q}^{l \times m}\):

and \((v^{s',1}_{(n-1)+n_1},v^{s',2}_{(n-1)+n_2}) = {\texttt {V}}_\mathsf{G ^C}(s',\theta _{(n-1)+n_1,(n-1)+n_2})\) for all \(s' \in S\).

Bounded Instantaneous and Cumulative Rewards If \(\theta = {\texttt {R}}^{r_1}_{}[\,{\texttt {I}^{= k_1}}\,]{+}{\texttt {R}}^{r_2}_{}[\,{\texttt {C}^{\leqslant k_2}}\,]\), we compute values of the objectives for the formulae \(\theta _{n+n_1,n+n_2}={\texttt {R}}^{r_1}_{}[\,{\texttt {I}^{= n+n_1}}\,]+{\texttt {R}}^{r_2}_{}[\,{\texttt {C}^{\leqslant n+n_2}}\,]\) for \(0 \leqslant n \leqslant k\) recursively, where \(k = \min \{k_1,k_2\}\), \(n_1 = k_1{-}k\) and \(n_2 = k_2{-}k\). Again, here we can only switch to MDP verification when one of the step bounds equals zero. For state s, if \(n = 0\):

and if \(n>0\), then \({\texttt {V}}_\mathsf{G ^C}(s,\theta _{n+n_1,n+n_2})\) equals SWNE values of the bimatrix game \((\mathsf Z _1, \mathsf Z _2) \in \mathbb {Q}^{l \times m}\):

and \((v^{s',1}_{(n-1)+n_1},v^{s',2}_{(n-1)+n_2}) = {\texttt {V}}_\mathsf{G ^C}(s',\theta _{(n-1)+n_1,(n-1)+n_2})\) for all \(s' \in S\). The symmetric case follows similarly.

4.2.2 Computing SWNE values of infinite-horizon nonzero-sum formulae

We next show how to compute SWNE values \({\texttt {V}}_\mathsf{G ^C}(s,\theta )\) for infinite-horizon nonzero-sum formulae \(\theta \) in all states s of \(\mathsf G ^C\). As for the zero-sum case, we approximate these using a value iteration approach. Each step of this computation is similar in nature to the algorithms in the previous section, where a bimatrix game is solved for each state, and a reduction to solving an MDP is used once the objective of one of the players can no longer change.

A key aspect of the value iteration algorithm is that, while the SWNE (or SCNE) values take the form of a pair, with one value for each player, convergence is defined over the sum of the two values. This is because there is not necessarily a unique pair of such values, but the maximum (or minimum) of the sum of NE values is uniquely defined. Convergence of value iteration is estimated in the same way as for the zero-sum computation (see Sect. 4.1.2), by comparing values in successive iterations. As previously, this means that we are not able to guarantee that the computed values are within a particular error bound of the exact values.
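The convergence criterion over sums can be sketched in Python as follows. The use of a maximum absolute difference and the threshold `eps` are assumptions for illustration; the function name `converged` is hypothetical.

```python
def converged(prev, curr, eps=1e-6):
    """Compare successive iterations on the sum of the two players' values.

    prev[s] and curr[s] are pairs (value for player 1, value for player 2).
    """
    return max(abs(sum(curr[s]) - sum(prev[s])) for s in curr) < eps


# The individual values differ between iterations, but their sum does not.
prev = {"s": (0.3, 0.7)}
curr = {"s": (0.7, 0.3)}
print(converged(prev, curr))
```

A componentwise comparison would report a difference of 0.4 for this pair of iterations, even though the social welfare value is unchanged.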

Below, we give the algorithms for the cases of two infinite-horizon objectives. The notation used is as in the previous section: for any state formula \(\phi \) and state s we let \(\eta _{\phi }(s)\) equal 1 if \(s \in { Sat }(\phi )\) and 0 otherwise; and values of the form \({\texttt {P}}^{\max }_\mathsf{G ,s}(\psi )\) and \({\texttt {R}}^{\max }_\mathsf{G ,s}(r,\rho )\) are computed through standard MDP verification.

Until If \(\theta = {\texttt {P}}_{}[\,{\phi _1^1 {\ \texttt {U}\ }\phi _2^1}\,]{+}{\texttt {P}}_{}[\,{\phi _1^2 {\ \texttt {U}\ }\phi _2^2}\,]\), values for any state s can be computed through value iteration as the limit \({\texttt {V}}_\mathsf{G ^C}(s,\theta ) = \lim _{n \rightarrow \infty } {\texttt {V}}_\mathsf{G ^C}(s,\theta ,n)\) where:

where \({ val }(\mathsf Z _1, \mathsf Z _2)\) equals SWNE values of the bimatrix game \((\mathsf Z _1,\mathsf Z _2)\in \mathbb {Q}^{l \times m}\):

and \((v^{s',1}_{n-1},v^{s',2}_{n-1}) = {\texttt {V}}_\mathsf{G ^C}(s',\theta ,n{-}1)\) for all \(s' \in S\).

As can be seen, there are two situations in which we switch to MDP verification. These correspond to the two cases where the value of the objective of one of the players cannot change: when a state satisfying \(\phi _2^i\) is reached for only one player i (and therefore the objective is satisfied by that state) and when a state satisfying \(\lnot \phi _1^i \wedge \lnot \phi _2^i\) is reached for only one player i (and therefore the objective is not satisfied by that state).
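The case distinction just described can be summarised in a small Python sketch that classifies a state according to whether each player's until objective is already decided. The function names, the string labels and the representation of \({ Sat }(\phi _1^i)\) and \({ Sat }(\phi _2^i)\) as Python sets are assumptions made for this example.

```python
def status(s, sat_phi1, sat_phi2):
    """Status of the objective phi1 U phi2 in state s."""
    if s in sat_phi2:
        return "satisfied"  # the objective is satisfied by this state
    if s not in sat_phi1:
        return "violated"   # neither phi1 nor phi2 holds
    return "open"           # the outcome still depends on future choices


def until_step_kind(s, obj1, obj2):
    """Select the computation used for state s in one value iteration step."""
    st1, st2 = status(s, *obj1), status(s, *obj2)
    if st1 != "open" and st2 != "open":
        return "both fixed"
    if st1 != "open":
        return "MDP for player 2"  # player 1's value is fixed
    if st2 != "open":
        return "MDP for player 1"  # player 2's value is fixed
    return "bimatrix game"


# Each objective is a pair (Sat(phi_1^i), Sat(phi_2^i)).
obj1 = ({"s0", "s1"}, {"g1"})
obj2 = ({"s0", "g1"}, {"g2"})
for s in ["s0", "s1", "g1", "g2"]:
    print(s, until_step_kind(s, obj1, obj2))
```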

Expected Reachability If \(\theta = {\texttt {R}}^{r_1}_{}[\,{{\texttt {F}\ }\phi ^1}\,]{+}{\texttt {R}}^{r_2}_{}[\,{{\texttt {F}\ }\phi ^2}\,]\), values can be computed through value iteration as the limit \({\texttt {V}}_\mathsf{G ^C}(s,\theta ) = \lim _{n \rightarrow \infty } {\texttt {V}}_\mathsf{G ^C}(s,\theta ,n)\) where:

where \({ val }(\mathsf Z _1, \mathsf Z _2)\) equals SWNE values of the bimatrix game \((\mathsf Z _1,\mathsf Z _2)\in \mathbb {Q}^{l \times m}\):

and \((v^{s',1}_{n-1},v^{s',2}_{n-1}) = {\texttt {V}}_\mathsf{G ^C}(s',\theta ,n{-}1)\) for all \(s' \in S\).

In this case, the only situation in which the value of the objective of one of the players cannot change is when only one of their goals is reached, i.e., when a state satisfying \(\phi ^i\) is reached for only one player i. This is therefore the only time we switch to MDP verification.

4.2.3 Computing SWNE values of mixed nonzero-sum formulae