Abstract

The article considers the multivariate stochastic orders of upper orthants, lower orthants and positive quadrant dependence (PQD) among simple max-stable distributions and their exponent measures. It is shown for each order that it holds for the max-stable distribution if and only if it holds for the corresponding exponent measure. The finding is non-trivial for upper orthants (and hence PQD order). From dimension \(d\ge 3\) these three orders are not equivalent and a variety of phenomena can occur. However, every simple max-stable distribution PQD-dominates the corresponding independent model and is PQD-dominated by the fully dependent model. Among parametric models the asymmetric Dirichlet family and the Hüsler-Reiß family turn out to be PQD-ordered according to the natural order within their parameter spaces. For the Hüsler-Reiß family this holds true even for the supermodular order.


1 Introduction

Research on stochastic orderings and inequalities spans several decades and a vast literature, culminating for instance in the two monographs of Shaked and Shanthikumar (2007) and Müller and Stoyan (2002), the latter with a view towards applications and stochastic models that appear in queuing theory, survival analysis, statistical physics or portfolio optimisation. Li and Li (2013) summarises developments of stochastic orders in reliability and risk management. While the scientific activities in finance, insurance, welfare economics or management science have been a driving force for many advances in the area, applications of stochastic orders are numerous and not limited to these fields. Importantly, such orderings often play a role in robust inference, when only partial knowledge about a highly complex stochastic model is available.

Within the Extremes literature, related notions of positive dependence are well-known. It is a long-standing result that multivariate extreme value distributions exhibit positive association (Marshall and Olkin 1983). More generally, max-infinitely divisible distributions have this property as shown in Resnick (1987), while Beirlant et al. (2004) summarise further implications in terms of positive dependence notions. Recently, an extremal version of the popular MTP2 property (Fallat et al. 2017; Karlin and Rinott 1980) has been studied in the context of multivariate extreme value distributions, especially Hüsler-Reiß distributions, and linked to graphical modelling, sparsity and implicit regularisation in multivariate extreme value models (Röttger et al. 2023). Without any hope of being exhaustive, further fundamental scientific activity of the last decade on comparing stochastic models with a focus on multivariate extremes includes for instance an ordering of multivariate risk models based on extreme portfolio losses (Mainik and Rüschendorf 2012), inequalities for mixtures on risk aggregation (Chen et al. 2022), a comparison of dependence in multivariate extremes via tail orders (Li 2013) or stochastic ordering for conditional extreme value modelling (Papastathopoulos and Tawn 2015). Yuen and Stoev (2014) use stochastic dominance results from Strokorb and Schlather (2015) in order to derive bounds on the maximum portfolio loss and extend their work in Yuen et al. (2020) to a distributionally robust inference for extreme Value-at-Risk.

In this article we go back to some fundamental questions concerning stochastic orderings among multivariate extreme value distributions. We focus on the order of positive quadrant dependence (PQD order, also termed concordance order), which is defined via orthant orders. Answers are given to the following questions.

- What is the relation between orders among max-stable distributions and corresponding orders among their exponent measures? (Theorem 4.1 and Corollary 4.2)

- Can we find characterisations in terms of other typical dependency descriptors (stable tail dependence function, generators, max-zonoids)? (Theorem 4.1)

- What is the role of the fully independent and fully dependent models in this framework? (Corollary 4.3)

- What is the role of Choquet/Tawn-Molchanov models in this framework? (Corollary 4.4 and Lemma 4.12)

For lower orthants, the answers are readily deduced from standard knowledge in Extremes. It is dealing with the upper orthants that makes this work interesting. The proof of our most fundamental characterisation result, Theorem 4.1, is based on Proposition B.9 below, which may be of independent interest. Stochastic orders are typically considered for probability distributions only. In order to make sense of the first question, we introduce corresponding orders for exponent measures, which turn out to be natural in this context, cf. Definition 3.2.

Second, we turn our attention to two popular parametric families of multivariate extreme value distributions that are closed under taking marginal distributions.

- Can we find order relations among the Dirichlet and Hüsler-Reiß parametric models? (Theorem 4.5 and Theorem 4.7)

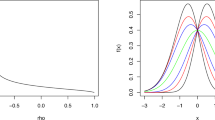

The answers are affirmative. For the Hüsler-Reiß model the result may even be strengthened to the supermodular order, which is otherwise beyond the scope of this article. To give an impression of the result for the Dirichlet family, Fig. 1 depicts six angular densities of the trivariate max-stable Dirichlet model. Aulbach et al. (2015) already showed that the symmetric models associated with the top row densities are decreasing in the lower orthant sense. Our new result covers the asymmetric case depicted in the bottom row; we show that the associated multivariate extreme value distributions are decreasing in the (even stronger) PQD-sense (with a more streamlined proof).

Accordingly, our text is structured as follows. Section 2 recalls some basic representations of multivariate max-stable distributions and examples of parametric models that are relevant for subsequent results. In Section 3 we review the relevant multivariate stochastic orderings together with important closure properties. This section also contains our (arguably natural) definition of corresponding order notions for exponent measures. All main results are then given in Section 4. Proofs and auxiliary results are postponed to Appendices 1 and 2. Appendix 3 contains background material on how we obtained the illustrations (max-zonoid envelopes for bivariate Hüsler-Reiß and Dirichlet families) depicted in Figs. 2, 4, 5 and 8.

Fig. 1 Angular densities (heat maps) of the symmetric max-stable Dirichlet model (top) and of the asymmetric max-stable Dirichlet model (bottom), cf. (4) for an expression of the density. Larger values are represented by brighter colours. The corresponding max-stable distributions are stochastically ordered in the PQD sense, increasing from left to right (Theorem 4.5). The black, blue and red boxes encode the matching with Figs. 6 and 7.

2 Prerequisites from multivariate extremes

Our main results concern stochastic orderings among max-stable distributions, or, equivalently, orderings among their respective exponent measures, cf. Theorem 4.1 below. Therefore, this section reviews some basic well-known results from the theory of multivariate extremes. Second, we will take a closer look at three marginally closed parametric families, the Dirichlet family, the Hüsler-Reiß family and the Choquet (Tawn-Molchanov) family of max-stable distributions, each model offering a different insight into phenomena of orderings among multivariate extremes.

2.1 Max-stable random vectors and their exponent measures

In order to clarify our terminology, we recall some definitions and basic facts about representations for max-stable distributions, cf. also Resnick (1987) or Beirlant et al. (2004). Operations and inequalities between vectors are meant componentwise. We abbreviate \({\varvec{0}} = (0,0,\ldots ,0)^\top \in \mathbb {R}^d\). A random vector \({\varvec{X}}=(X_1,\ldots ,X_d)^\top \in \mathbb {R}^d\) is called max-stable if for all \(n \ge 1\) there exist suitable norming vectors \({\varvec{a}_n}>{\varvec{0}}\) and \({\varvec{b}_n}\in \mathbb {R}^d\), such that the distributional equality

$$\begin{aligned} \max _{i=1,\ldots ,n} {\varvec{X}_i} {\mathop {=}\limits ^{d}} {\varvec{a}_n}{\varvec{X}}+{\varvec{b}_n} \end{aligned}$$

holds, where \({\varvec{X}_1},\ldots ,{\varvec{X}_n}\) are i.i.d. copies of \({\varvec{X}}\). According to the Fisher-Tippett theorem the marginal distributions \(G_i(x)=\mathbb {P}(X_i \le x)\) are univariate max-stable distributions, that is, either degenerate to a point mass or a generalised extreme value (GEV) distribution of the form \(G_{\xi }((x-\mu )/\sigma )\) with \(G_{\xi }(x)=\exp (-(1+\xi x)_+^{-{1}/{\xi }})\) if \(\xi \ne 0\) and \(G_{0}(x)= \exp (-e^{-x})\), where \(\xi \in \mathbb {R}\) is a shape parameter, while \(\mu \in \mathbb {R}\) and \(\sigma >0\) are the location and scale parameters, respectively. We write \(\textrm{GEV}(\mu , \sigma ,\xi )\) for short.

A max-stable random vector \({\varvec{X}}=(X_1,\ldots ,X_d)^\top\) is called simple max-stable if it has standard unit Fréchet marginals, that is, \(\mathbb {P}(X_i \le x)=\exp (-1/x)\), \(x>0\), for all \(i=1,\ldots ,d\). Any max-stable random vector \({\varvec{X}}\) with GEV margins \(X_i \sim \textrm{GEV}(\mu _i,\sigma _i,\xi _i)\) can be transformed into a simple max-stable random vector \({\varvec{X}^*}\) and vice versa via the componentwise order-preserving transformations

$$\begin{aligned} X_i^* = \bigg (1+\xi _i \frac{X_i-\mu _i}{\sigma _i}\bigg )^{1/\xi _i} \qquad \text {and} \qquad X_i = \sigma _i \frac{(X_i^*)^{\xi _i}-1}{\xi _i} + \mu _i \end{aligned}$$

(with the usual interpretation of \((1+\xi x)^{1/\xi }\) as \(e^x\) for \(\xi =0\)). In this sense simple max-stable random vectors can be interpreted as a copula-representation for max-stable random vectors with non-degenerate margins, which encapsulates their dependence structure.
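The marginal standardisation can be checked numerically. The following minimal Python sketch assumes the standard transformation \(X_i^* = (1+\xi _i (X_i-\mu _i)/\sigma _i)^{1/\xi _i}\) and its inverse; all function names are hypothetical and serve illustration only.

```python
import math

def gev_cdf(x, mu, sigma, xi):
    """CDF of GEV(mu, sigma, xi), i.e. G_xi((x - mu) / sigma) as in the text."""
    z = (x - mu) / sigma
    if xi == 0.0:
        return math.exp(-math.exp(-z))
    t = 1.0 + xi * z
    if t <= 0.0:
        # x lies outside the support: below it for xi > 0, above it for xi < 0
        return 0.0 if xi > 0 else 1.0
    return math.exp(-t ** (-1.0 / xi))

def unit_frechet_cdf(y):
    """CDF of the standard unit Frechet distribution."""
    return math.exp(-1.0 / y) if y > 0 else 0.0

def to_unit_frechet(x, mu, sigma, xi):
    """Map a GEV(mu, sigma, xi) value inside the support to the unit Frechet scale."""
    z = (x - mu) / sigma
    if xi == 0.0:
        return math.exp(z)          # limit of (1 + xi z)^(1/xi) as xi -> 0
    return (1.0 + xi * z) ** (1.0 / xi)

def from_unit_frechet(y, mu, sigma, xi):
    """Inverse map from the unit Frechet scale back to GEV(mu, sigma, xi)."""
    if xi == 0.0:
        return mu + sigma * math.log(y)
    return mu + sigma * (y ** xi - 1.0) / xi

# The GEV probability of {X <= x} agrees with the Frechet probability of {X* <= x*}.
x, mu, sigma, xi = 3.0, 1.0, 2.0, 0.5
y = to_unit_frechet(x, mu, sigma, xi)
print(gev_cdf(x, mu, sigma, xi), unit_frechet_cdf(y))
```

Since both maps are increasing, orthant probabilities of \({\varvec{X}}\) translate directly into orthant probabilities of \({\varvec{X}^*}\).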

There are different ways to describe the distribution of such simple max-stable random vectors. The following will be relevant for us. Note that such vectors take values in the open upper orthant \((0,\infty )^d\) almost surely. Here and hereinafter we shall denote the i-th indicator vector by \({\varvec{e}_i}\) (all components of \({\varvec{e}_i}\) are zero except for the i-th component, which takes the value one).

Theorem/Definition 2.1

(Representations of simple max-stable distributions). A random vector \({\varvec{X}}=(X_1,\ldots ,X_d)^\top\) with distribution function \(G({\varvec{x}})=\exp (-V({\varvec{x}}))\), \({\varvec{x}}\in (0,\infty ]^d\), is simple max-stable if and only if the exponent function V can be represented in one of the following equivalent ways:

- (i) Spectral representation (de Haan 1984). There exists a finite measure space \((\Omega ,\mathcal {A},\nu )\) and a measurable function \(f: \Omega \rightarrow [0,\infty )^d\) such that \(\int _{\Omega } f_i({\omega }) \; \nu (\textrm{d}{\omega })=1\) for \(i=1,\ldots ,d\), and

$$\begin{aligned} V(x_1,\ldots ,x_d)=\int _{\Omega } \max _{i=1,\ldots ,d}\frac{f_i(\omega )}{x_i} \; \nu (\textrm{d}\omega ). \end{aligned}$$

- (ii) Exponent measure (Resnick 1987). There exists a \((-1)\)-homogeneous measure \(\Lambda\) on \([0,\infty )^d \setminus \{{\varvec{0}}\}\), such that

$$\begin{aligned} \Lambda \Big ( \big \{y \in [0,\infty )^d \,:\, y_i > 1\big \}\Big ) = 1 \end{aligned}$$

for \(i=1,\ldots ,d\), and

$$\begin{aligned} V(x_1,x_2,\ldots ,x_d) = \Lambda \Big ( \big \{y \in [0,\infty )^d \,:\, y_i > x_i \text { for some } i \in \{1,\ldots ,d\} \big \}\Big ). \end{aligned}$$

- (iii) Stable tail dependence function (Ressel 2013). There exists a 1-homogeneous and max-completely alternating function \(\ell : [0,\infty )^d \rightarrow [0,\infty )\), such that \(\ell ({\varvec{e}_i})=1\) for \(i=1,\ldots ,d\), and

$$\begin{aligned} V(x_1,\ldots ,x_d) = \ell \bigg (\frac{1}{x_1},\ldots ,\frac{1}{x_d}\bigg ) \end{aligned}$$

(cf. Appendix 2 for the notion of max-complete alternation).

In fact, the spectral representation can be seen as a polar decomposition of the exponent measure \(\Lambda\), cf. e.g. Resnick (1987) or Beirlant et al. (2004). Importantly, it is not uniquely determined by the law of \({\varvec{X}}\). Typical choices for the measure space \((\Omega ,\mathcal {A},\nu )\) include (i) the unit interval with Lebesgue measure or (ii) a sphere \(\Omega =\lbrace {\varvec{\omega }}\in [0,\infty )^d : \; \Vert {\varvec{\omega }} \Vert =1\rbrace\) with respect to some norm \(\Vert \cdot \Vert\), for instance the \(\ell _p\)-norm

$$\begin{aligned} \Vert {\varvec{x}} \Vert _p = \bigg (\sum _{i=1}^d \vert x_i \vert ^p\bigg )^{1/p} \end{aligned}$$

for some \(p\ge 1\). For (i) it is then the spectral map f which contains all dependence information. For (ii) one usually considers the component maps \(f_i({\varvec{\omega }})=\omega _i\), so that the measure \(\nu\), then often termed angular measure, absorbs the dependence information. For a given spectral representation \((\Omega ,\mathcal {A},\nu ,f)\) one may rescale \(\nu\) to a probability measure and absorb the rescaling constant into the spectral map f. The resulting random vector \({\varvec{Z}}=(Z_1,\ldots ,Z_d)^\top\) such that \(\mathbb {E}(Z_i)={1}\), \(i=1,\ldots ,d\), and

$$\begin{aligned} V(x_1,\ldots ,x_d) = \mathbb {E} \max _{i=1,\ldots ,d} \frac{Z_i}{x_i} \end{aligned}$$

has been termed generator of \({\varvec{X}}\), cf. Falk (2019). A useful observation is the following; for a given vector \({\varvec{x}}\) with values in \(\mathbb {R}^d\) and a subset \(A \subset \{1,\ldots ,d\}\), let \({\varvec{x}}_A\) be the subvector with components in A.

Lemma 2.2

Let \({\varvec{Z}}\) be a generator for the max-stable law \({\varvec{X}}\), then \({\varvec{Z}}_A\) is a generator for \({\varvec{X}}_A\).

The stable tail dependence function \(\ell\) goes back to Huang (1992) and has also been called D-norm (Falk et al. 2004) of \({\varvec{X}}\). Since \(\ell\) is 1-homogeneous, it suffices to know its values on the unit simplex \(\triangle _d=\lbrace {\varvec{x}}\in [0,\infty )^d:\, \Vert {\varvec{x}} \Vert _1 =1 \rbrace\); the restriction of \(\ell\) to \(\triangle _d\) is called the Pickands dependence function.

There exist further descriptors of the dependence structure, e.g. in terms of point processes or the LePage representation, cf. e.g. Resnick (1987) or, in a very general context, Davydov et al. (2008). Copulas of max-stable random vectors on standard uniform margins are called extreme value copulas (Gudendorf and Segers 2010).

Let us close with a representation that allows for some interesting geometric interpretations. Molchanov (2008) introduced a convex body \(K\subset [0,\infty )^d\), which can be interpreted (up to rescaling) as selection expectation of a random cross polytope associated with the (normalised) spectral measure \(\nu\). It turns out that the stable tail dependence function is in fact the support function of K

$$\begin{aligned} \ell ({\varvec{x}}) = \sup \big \{ \langle {\varvec{x}}, {\varvec{k}} \rangle \,:\, {\varvec{k}} \in K \big \}, \quad {\varvec{x}} \in [0,\infty )^d. \end{aligned}$$

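The convex body K is called max-zonoid (or dependency set) of \({\varvec{X}}\) and it is uniquely determined by the law of \({\varvec{X}}\). In fact

$$\begin{aligned} K = \big \{{\varvec{k}} \in [0,\infty )^d \,:\, \langle {\varvec{k}}, {\varvec{x}} \rangle \le \ell ({\varvec{x}}) \text { for all } {\varvec{x}} \in [0,\infty )^d \big \}. \end{aligned}$$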

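In general, it is difficult to translate one representation from Theorem 2.1 into another apart from the obvious relations

$$\begin{aligned} \ell ({\varvec{x}}) = \mathbb {E} \max _{i=1,\ldots ,d} (x_i Z_i) = \int _{\Omega } \max _{i=1,\ldots ,d} x_i f_i(\omega ) \; \nu (\textrm{d}\omega ) = \Lambda \Big ( \big \{y \in [0,\infty )^d \,:\, \max _{i=1,\ldots ,d} x_i y_i > 1 \big \}\Big ) \end{aligned}$$

for \({\varvec{x}} \ge {\varvec{0}}\). For convenience, we have added material in Appendix 3 on how to obtain the boundary of a max-zonoid K from the stable tail dependence function \(\ell\) in the bivariate case, which will help to illustrate some of the results below.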

2.2 Parametric models

Several parametric models for max-stable random vectors have been summarised for instance in Beirlant et al. (2004). In what follows we turn our attention to two of the most popular parametric models, the Dirichlet and Hüsler-Reiß families, as well as the Choquet model (Tawn-Molchanov model), which will reveal some interesting phenomena and (counter-)examples of stochastic ordering relations.

2.2.1 Dirichlet model

Coles and Tawn (1991) compute densities of angular measures of simple max-stable random vectors constructed from non-negative functions on the unit simplex \(\triangle _d\). In particular, the following asymmetric Dirichlet model has been introduced. We summarise some equivalent characterisations, each of which may serve as a definition of the asymmetric Dirichlet model. This model has gained popularity due to its flexibility and simple structure forming the basis of Dirichlet mixture models (Boldi and Davison 2007; Sabourin and Naveau 2014).

Theorem/Definition 2.3

(Multivariate max-stable Dirichlet distribution). A random vector \({\varvec{X}}=(X_1,\ldots ,X_d)^\top\) is simple max-stable Dirichlet distributed with parameter vector \({\varvec{\alpha }}=(\alpha _1,\ldots ,\alpha _d)^\top \in (0,\infty )^d\), we write

$$\begin{aligned} {\varvec{X}} \sim \textrm{MaxDir}(\alpha _1,\ldots ,\alpha _d)=\textrm{MaxDir}({\varvec{\alpha }}) \end{aligned}$$

for short, if and only if one of the following equivalent conditions is satisfied:

- (i) (Gamma generator) A generator of \({\varvec{X}}\) is the random vector

$$\begin{aligned} {\varvec{\alpha }}^{-1}{\varvec{\Gamma }}=(\Gamma _1/\alpha _1, \Gamma _2/\alpha _2,\ldots ,\Gamma _d/\alpha _d)^\top , \end{aligned}$$

where \({\varvec{\Gamma }}=(\Gamma _1,\ldots ,\Gamma _d)^\top\) consists of independent Gamma distributed variables \(\Gamma _i\sim \Gamma (\alpha _i)\), \(\alpha _i>0\), \(i=1,\ldots ,d\). Here, the Gamma distribution \(\Gamma (\alpha _i)\) has the density

$$\begin{aligned} \gamma _{\alpha _i}(x)=\frac{ x^{\alpha _i-1}}{\Gamma (\alpha _i)}\exp \big (-{x}\big ). \end{aligned}$$

- (ii) (Dirichlet generator) A generator of \({\varvec{X}}\) is the random vector

$$\begin{aligned} ({\varvec{\alpha }}^{-1}\Vert {\varvec{\alpha }}\Vert _1){\varvec{D}} =(\alpha _1+\ldots +\alpha _d) \cdot (D_1/\alpha _1,D_2/\alpha _2,\ldots ,D_d/\alpha _d)^\top , \end{aligned}$$

where \({\varvec{D}}\) follows a Dirichlet distribution \(\textrm{Dir}(\alpha _1,\ldots ,\alpha _d)\) on the unit simplex \(\triangle _d\) with density

$$\begin{aligned} d({\omega _1,\ldots ,\omega _d})=\Gamma (\Vert {\varvec{\alpha }}\Vert _1)\prod _{i=1}^d\frac{\omega _i^{\alpha _i-1}}{\Gamma (\alpha _i)}, \quad (\omega _1,\ldots ,\omega _d)^\top \in \triangle _d. \end{aligned}$$

- (iii) (Angular measure) The density of the angular measure of \({\varvec{X}}\) on \(\triangle _d\) is given by

$$\begin{aligned} h(\omega _1,\ldots ,\omega _d)=\frac{\Gamma (\Vert {\varvec{\alpha }}\Vert _1+1)}{\Vert {\varvec{\alpha }}{\varvec{\omega }}\Vert _1}\prod _{i=1}^d\frac{\alpha _i^{\alpha _i}\omega _i^{\alpha _i-1}}{\Gamma (\alpha _i)(\Vert {\varvec{\alpha }}{\varvec{\omega }}\Vert _1)^{\alpha _i}}, \quad (\omega _1,\ldots ,\omega _d)^\top \in \triangle _d. \end{aligned}$$(4)

To the best of our knowledge the representation through the Gamma generator, albeit inspired by Aulbach et al. (2015) from the fully symmetric case, is new in this generality. We have added a proof in Appendix 2. An advantage of the representation with the Gamma generator is that it reveals immediately the closure of the model with respect to taking marginal distributions, cf. Lemma 2.2, a result that has been previously obtained in Ballani and Schlather (2011), but with a one-page proof and some intricate density calculations.
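The agreement between the Gamma generator and the angular density (4) can be illustrated numerically in the bivariate case. The sketch below is a rough check under stated assumptions, not part of the proof: it assumes \(\ell ({\varvec{x}})=\mathbb {E}\max _i x_i Z_i\) for a generator \({\varvec{Z}}\), it assumes that the density (4) for \(d=2\) is taken with respect to Lebesgue measure in \(\omega _1\), and all function names are hypothetical.

```python
import math
import random

def angular_density(w1, alpha):
    """Angular density (4) for d = 2, evaluated at (w1, 1 - w1) with 0 < w1 < 1."""
    w = (w1, 1.0 - w1)
    s = alpha[0] * w[0] + alpha[1] * w[1]            # ||alpha * omega||_1
    dens = math.gamma(alpha[0] + alpha[1] + 1.0) / s
    for a, wi in zip(alpha, w):
        dens *= a ** a * wi ** (a - 1.0) / (math.gamma(a) * s ** a)
    return dens

def ell_from_density(x, alpha, n_grid=20000):
    """Midpoint rule for the integral of max(x1*w1, x2*w2) * h(w1, w2) over the simplex."""
    total = 0.0
    for k in range(n_grid):
        w1 = (k + 0.5) / n_grid
        total += max(x[0] * w1, x[1] * (1.0 - w1)) * angular_density(w1, alpha)
    return total / n_grid

def ell_from_gamma_generator(x, alpha, n_sim=200000, seed=1):
    """Monte Carlo estimate of E max_i x_i * Gamma_i / alpha_i with independent Gamma_i."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_sim):
        total += max(xi * rng.gammavariate(a, 1.0) / a for xi, a in zip(x, alpha))
    return total / n_sim

alpha = (2.0, 3.0)
x = (1.0, 2.0)
print(ell_from_density(x, alpha), ell_from_gamma_generator(x, alpha))
```

Both estimates should agree up to Monte Carlo error, and the marginal constraint \(\ell ({\varvec{e}_i})=1\) can be checked by passing `(1.0, 0.0)` or `(0.0, 1.0)` for `x`.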

Lemma 2.4

(Closure of Dirichlet model under taking marginals). Let \({\varvec{X}} = (X_1,\ldots ,X_d)^\top \sim \textrm{MaxDir}(\alpha _1,\ldots ,\alpha _d)=\textrm{MaxDir}({\varvec{\alpha }})\) and \(A \subset \{1,\ldots ,d\}\), then \({\varvec{X}}_A \sim \textrm{MaxDir}({\varvec{\alpha }}_A)\).

The angular density representation on the other hand is useful to see that different parameter vectors \({\varvec{\alpha }} \ne {\varvec{\beta }}\) lead in fact to different multivariate distributions \(\textrm{MaxDir}({\varvec{\alpha }}) \ne \textrm{MaxDir}({\varvec{\beta }})\) for \(d\ge 2\), so that \((0,\infty )^d\) is indeed the natural parameter space for this model.

2.2.2 Hüsler-Reiß model

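The multivariate Hüsler-Reiß distribution (Hüsler and Reiß 1989) forms the basis of the popular Brown-Resnick process (Kabluchko et al. 2009) and has sparked significant interest from the perspectives of spatial modelling (Davison et al. 2019) and more recently in connection with graphical modelling of extremes (Engelke and Hitz 2020). The natural parameter space for this model is the convex cone of conditionally negative definite symmetric \(d\times d\)-matrices, whose diagonal entries are zero,

$$\begin{aligned} \mathcal {G}_d = \Big \{ {\varvec{\gamma }} \in [0,\infty )^{d \times d} \,:\, \gamma _{ij}=\gamma _{ji}, \; \gamma _{ii}=0 \text { for all } i,j, \; {\varvec{v}}^\top {\varvec{\gamma }} {\varvec{v}} \le 0 \text { for all } {\varvec{v}} \in \mathbb {R}^d \text { with } v_1+\ldots +v_d=0 \Big \}. \end{aligned}$$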

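It is well-known, cf. e.g. Berg et al. (1984, Ch. 3), that for a given \({\varvec{\gamma }} \in \mathcal {G}_d\), there exists a zero mean Gaussian random vector \({\varvec{W}}=(W_1,\ldots ,W_d)^\top\) with incremental variance

$$\begin{aligned} \mathbb {E}(W_i-W_j)^2 = \gamma _{ij}, \quad i,j \in \{1,\ldots ,d\}, \end{aligned}$$(5)

although its distribution is not uniquely specified by this condition. For instance, select \(i \in \{1,\ldots ,d\}\). Imposing additionally the linear constraint “\(W_i=0\) almost surely” leads to \({\varvec{W}} \sim \mathcal {N}({\varvec{0}}, {\varvec{\Sigma }_i})\) with

$$\begin{aligned} ({\varvec{\Sigma }_i})_{jk} = \frac{1}{2}\big (\gamma _{ij}+\gamma _{ik}-\gamma _{jk}\big ), \quad j,k \in \{1,\ldots ,d\}, \end{aligned}$$

which satisfies (5).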
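As a quick sanity check of this construction, the following Python sketch builds a covariance matrix from a parameter matrix \({\varvec{\gamma }}\) and verifies the incremental variances. It assumes the standard formula \(({\varvec{\Sigma }_i})_{jk} = (\gamma _{ij}+\gamma _{ik}-\gamma _{jk})/2\) for the pinned covariance; the example matrix (squared distances of the points 0, 1, 3 on the real line) and the function names are hypothetical.

```python
def sigma_pinned(gamma, i):
    """Covariance matrix of W under the constraint W_i = 0 (indices are 0-based)."""
    d = len(gamma)
    return [[0.5 * (gamma[i][j] + gamma[i][k] - gamma[j][k]) for k in range(d)]
            for j in range(d)]

def incremental_variances(sigma):
    """Matrix of Var(W_j - W_k) = Sigma_jj + Sigma_kk - 2 Sigma_jk."""
    d = len(sigma)
    return [[sigma[j][j] + sigma[k][k] - 2.0 * sigma[j][k] for k in range(d)]
            for j in range(d)]

# Squared distances between the points 0, 1, 3 are conditionally negative definite.
gamma = [[0.0, 1.0, 9.0],
         [1.0, 0.0, 4.0],
         [9.0, 4.0, 0.0]]

for i in range(3):
    sigma = sigma_pinned(gamma, i)
    print(i, sigma, incremental_variances(sigma) == gamma)
```

Each choice of the pinned index gives a different covariance matrix, while the incremental variances stay the same.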

Theorem/Definition 2.5

(Multivariate Hüsler-Reiß distribution, cf. Kabluchko (2011) Theorem 1). Let \({\varvec{\gamma }} \in \mathcal {G}_d\) and (5) be valid. Consider the simple max-stable random vector \({\varvec{X}}=(X_1,\ldots ,X_d)^\top\) defined by the generator \({\varvec{Z}}=(Z_1,\ldots ,Z_d)^\top\) with

$$\begin{aligned} Z_i = \exp \bigg ( W_i - \frac{\textrm{Var}(W_i)}{2} \bigg ), \quad i=1,\ldots ,d. \end{aligned}$$

Then the distribution of \({\varvec{X}}\) depends only on \({\varvec{\gamma }}\) and not on the specific choice of a zero mean Gaussian distribution satisfying (5). We call \({\varvec{X}}\) simple Hüsler-Reiß distributed with parameter matrix \({\varvec{\gamma }}\) and write for short

$$\begin{aligned} {\varvec{X}} \sim \textrm{HR}({\varvec{\gamma }}). \end{aligned}$$

We also note that for \({\varvec{\gamma }}_1, \, {\varvec{\gamma }}_2 \in \mathcal {G}_d\), the distributions \(\textrm{HR}({\varvec{\gamma }}_1)\) and \(\textrm{HR}({\varvec{\gamma }}_2)\) coincide if and only if \({\varvec{\gamma }}_1 = {\varvec{\gamma }}_2\), so that \(\mathcal {G}_d\) is indeed the natural parameter space for these models. This follows directly from the observation that the multivariate Hüsler-Reiß model is also closed under taking marginal distributions and the equivalent statement for bivariate Hüsler-Reiß models, which can be seen for instance from (19) below. Indeed, we also state the following lemma for clarity. It follows directly from the generator representation of \(\textrm{HR}({\varvec{\gamma }})\) and Lemma 2.2.

Lemma 2.6

(Closure of Hüsler-Reiß model under taking marginals). Let \({\varvec{X}} = (X_1,\dots ,X_d)^\top \sim \textrm{HR}({\varvec{\gamma }})\) and \(A \subset \{1,\dots ,d\}\), then \({\varvec{X}}_A \sim \textrm{HR}({\varvec{\gamma }}_{A \times A})\), where \({\varvec{\gamma }}_{A \times A}\) is the restriction of \({\varvec{\gamma }}\) to the components of A in both rows and columns.
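The claim that the law depends only on \({\varvec{\gamma }}\) can be illustrated by simulation in the bivariate case. The sketch below assumes the log-Gaussian generator \(Z_i=\exp (W_i-\textrm{Var}(W_i)/2)\) and the relation \(\ell ({\varvec{x}})=\mathbb {E}\max _i x_i Z_i\); it compares two different Gaussian vectors with the same incremental variance \(\gamma\) against the classical closed form of the bivariate Hüsler-Reiß stable tail dependence function, which is taken from the standard literature and is an assumption here. Function names are hypothetical.

```python
import math
import random

def std_normal_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def ell_hr_closed_form(x1, x2, gamma):
    """Classical bivariate Huesler-Reiss stable tail dependence function (assumed form)."""
    r = math.sqrt(gamma)
    return (x1 * std_normal_cdf(r / 2.0 + math.log(x1 / x2) / r)
            + x2 * std_normal_cdf(r / 2.0 + math.log(x2 / x1) / r))

def ell_hr_monte_carlo(x1, x2, gamma, pinned=True, n_sim=200000, seed=7):
    """Estimate E max(x1 Z1, x2 Z2) with Z_i = exp(W_i - Var(W_i) / 2)."""
    rng = random.Random(seed)
    sd = math.sqrt(gamma)
    total = 0.0
    for _ in range(n_sim):
        b = rng.gauss(0.0, sd)
        if pinned:
            w, var = (0.0, b), (0.0, gamma)                       # W_1 = 0 almost surely
        else:
            w, var = (b / 2.0, -b / 2.0), (gamma / 4.0, gamma / 4.0)  # symmetric choice
        total += max(x1 * math.exp(w[0] - var[0] / 2.0),
                     x2 * math.exp(w[1] - var[1] / 2.0))
    return total / n_sim

print(ell_hr_closed_form(1.0, 2.0, 1.0),
      ell_hr_monte_carlo(1.0, 2.0, 1.0, pinned=True),
      ell_hr_monte_carlo(1.0, 2.0, 1.0, pinned=False))
```

All three numbers should agree up to Monte Carlo error. For very small and very large \(\gamma\) the closed form approaches \(\max (x_1,x_2)\) and \(x_1+x_2\), in line with the boundary cases discussed in Remark 2.8.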

It is well-known that up to a change of location and scale parameters Hüsler-Reiß distributions are the only possible limit laws of maxima of triangular arrays of multivariate Gaussian distributions, a finding which can be traced back to Hüsler and Reiß (1989) and Brown and Resnick (1977). The following version will be convenient for us.

Theorem 2.7

(Triangular array convergence of maxima of Gaussian vectors, cf. Kabluchko (2011) Theorem 2). Let \(u_n\) be a sequence such that \(\sqrt{2\pi } u_n e^{u_n^2/2}/n \rightarrow 1\) as \(n \rightarrow \infty\). For each \(n \in \mathbb {N}\) let \({\varvec{Y}^{(n)}_1},{\varvec{Y}^{(n)}_2},\dots , {\varvec{Y}^{(n)}_n}\) be independent copies of a d-variate zero mean unit-variance Gaussian random vector with correlation matrix \((\rho ^{(n)}_{ij})_{i,j \in \{1,\dots ,d\}}\). Suppose that for all \(i,j \in \{1,\dots ,d\}\)

$$\begin{aligned} 4 \log (n) \big (1-\rho ^{(n)}_{ij}\big ) \rightarrow \gamma _{ij} \in [0,\infty ) \end{aligned}$$

as \(n \rightarrow \infty\). Then the matrix \({\varvec{\gamma }}=(\gamma _{ij})_{i,j \in \{1,\dots ,d\}}\) is necessarily an element of \(\mathcal {G}_d\). Let \({\varvec{M}}^{(n)}\) be the componentwise maximum of \({\varvec{Y}^{(n)}_1},{\varvec{Y}^{(n)}_2},\dots , {\varvec{Y}^{(n)}_n}\). Then the componentwise rescaled vector \(u_n({\varvec{M}}^{(n)}-u_n)\) converges in distribution to the Hüsler-Reiß distribution \(\textrm{HR}({\varvec{\gamma }})\).

Remark 2.8

In the bivariate case we have \(\gamma _{12}=\gamma _{21}=\gamma \in [0,\infty )\) and the boundary case \(\gamma =0\) leads to a degenerate random vector with fully dependent components, whereas \(\gamma \uparrow \infty\) leads to a random vector with independent components. More generally, one might also admit the value \(\infty\) for \(\gamma _{ij}\) in the multivariate case, as long as the resulting matrix \({\varvec{\gamma }}\) is negative definite in the extended sense, cf. Kabluchko (2011). This extension corresponds to a partition of \({\varvec{X}}\) into independent subvectors \({\varvec{X}} = \bigsqcup _A {\varvec{X}}_A\), where each \({\varvec{X}}_A\) is a Hüsler-Reiß random vector in the usual sense. Here \(\gamma _{ij}=\infty\) precisely when i and j are in different subsets of the partition. Theorem 2.7 extends to this situation as well. In fact, it has been formulated in this generality in Kabluchko (2011).

2.2.3 Choquet model / Tawn-Molchanov model

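A popular way to summarise extremal dependence information within a random vector is by considering its extremal coefficients, which in the case of a simple max-stable random vector \({\varvec{X}}=(X_1,X_2,\dots ,X_d)^\top\) may be expressed as

$$\begin{aligned} \theta (A) = -\log \mathbb {P}\Big (\max _{i \in A} X_i \le 1\Big ), \quad A \subset \{1,\dots ,d\}, \; A \ne \emptyset , \end{aligned}$$

or, equivalently,

$$\begin{aligned} \theta (A) = \ell ({\varvec{e}}_A) = \mathbb {E} \max _{i \in A} Z_i = \Lambda \Big ( \big \{y \in [0,\infty )^d \,:\, y_i > 1 \text { for some } i \in A \big \}\Big ) = \int _{\Omega } \max _{i \in A} f_i(\omega ) \; \nu (\textrm{d}\omega ), \qquad {\varvec{e}}_A = \sum _{i \in A} {\varvec{e}_i}, \end{aligned}$$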

where \(\ell\) is the stable tail dependence function, \({\varvec{Z}}\) a generator, \(\Lambda\) the exponent measure and \((\Omega ,\mathcal {A},\nu ,f)\) a spectral representation for \({\varvec{X}}\). Loosely speaking, the coefficient \(\theta (A)\), which takes values in [1, |A|], can be interpreted as the effective number of independent variables among the collection \((X_i)_{i \in A}\). We have \(\theta (\{i\})=1\) for singletons \(\{i\}\) and naturally \(\theta (\emptyset )=0\).

The following result can be traced back to Schlather and Tawn (2002) and Molchanov (2008). Accordingly, the associated max-stable model, which can be parametrised by its extremal coefficients, has been introduced as Tawn-Molchanov model in Strokorb and Schlather (2015). It is essentially an application of the Choquet theorem (see Molchanov, 2017, Section 1.2 and Berg et al., 1984, Theorem 6.6.19), which also holds for not necessarily finite capacities (see Schneider and Weil, 2008, Theorem 2.3.2). Therefore, it has been relabelled Choquet model in Molchanov and Strokorb (2016), cf. Appendix 2 for background on complete alternation. We write \(\mathcal {P}_d\) for the power set of \(\{1,\dots ,d\}\) henceforth.

Theorem 2.9

- (a) Let \(\theta : \mathcal {P}_d \rightarrow \mathbb {R}\). Then \(\theta\) is the extremal coefficient function of a simple max-stable random vector in \((0,\infty )^d\) if and only if \(\theta (\emptyset )=0\), \(\theta (\{i\})=1\) for all \(i=1,\dots ,d\) and \(\theta\) is union-completely alternating.

- (b) Let \(\theta : \mathcal {P}_d \rightarrow \mathbb {R}\) be an extremal coefficient function. Let

$$\begin{aligned} \ell ^*({\varvec{x}}) = \int _0^\infty \theta (\{i \,:\, x_i \ge t\}) \, dt, \quad {\varvec{x}} \in [0,\infty )^d \end{aligned}$$

be the Choquet integral with respect to \(\theta\). Then \(\ell ^{*}\) is a valid stable tail dependence function, which retrieves the given extremal coefficients \(\ell ^{*}({\varvec{e}}_A)=\theta (A)\) for all \(A \in \mathcal {P}_d\). Its max-zonoid is given by

$$\begin{aligned} K^* = \big \{{\varvec{k}} \in [0,\infty )^d \,:\, \langle {\varvec{k}}, {\varvec{e}}_A \rangle \le \theta (A) \text { for all } A \in \mathcal {P}_d \big \}. \end{aligned}$$

- (c) Let \(\ell\) be any stable tail dependence function with extremal coefficient function \(\theta\) and K its corresponding max-zonoid. Then

$$\begin{aligned} \ell ({\varvec{x}}) \le \ell ^*({\varvec{x}}), \quad {\varvec{x}} \ge 0 \qquad \text {and} \qquad K \subset K^*. \end{aligned}$$

Example 2.10

(Choquet model in the bivariate case). Let \(\ell\) be a bivariate stable tail dependence function and \(\theta =\ell (1,1)\in [1,2]\) the bivariate extremal coefficient. Then the associated Choquet model is given by the max-zonoid \(K^*= \{(x_1,x_2) \in [0,1]^2:\, x_1+x_2 \le \theta \}\) or the stable tail dependence function \(\ell ^*(x_1,x_2)=\max (x_1 + (\theta -1) x_2, (\theta -1) x_1 + x_2)\). Figure 2 displays a situation, where the original \(\ell\) stems from an asymmetric Dirichlet model.
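The Choquet integral from Theorem 2.9 reduces to a finite sum, since the set \(\{i : x_i \ge t\}\) only changes at the distinct values of the components of \({\varvec{x}}\). The following Python sketch evaluates it for a set function stored as a dictionary and compares it with the bivariate formula of Example 2.10. The data layout (frozensets of 0-based indices) and the function names are hypothetical choices for illustration.

```python
from itertools import combinations

def nonempty_subsets(d):
    """All non-empty subsets of {0, ..., d-1} as frozensets."""
    return [frozenset(c) for r in range(1, d + 1) for c in combinations(range(d), r)]

def choquet_ell(x, theta):
    """Choquet integral of x >= 0 with respect to theta (theta of the empty set is 0)."""
    levels = sorted(set(v for v in x if v > 0))
    total, prev = 0.0, 0.0
    for t in levels:
        # {i : x_i >= s} is constant for s in (prev, t]
        active = frozenset(i for i, v in enumerate(x) if v >= t)
        total += (t - prev) * theta[active]
        prev = t
    return total

# Bivariate case with extremal coefficient theta = 1.5
theta_biv = {frozenset({0}): 1.0, frozenset({1}): 1.0, frozenset({0, 1}): 1.5}
x = (0.3, 1.2)
print(choquet_ell(x, theta_biv), max(x[0] + 0.5 * x[1], 0.5 * x[0] + x[1]))

# Trivariate boundary cases: independence (theta(A) = |A|) and full dependence (theta(A) = 1)
indep = {s: float(len(s)) for s in nonempty_subsets(3)}
dep = {s: 1.0 for s in nonempty_subsets(3)}
x3 = (0.2, 1.5, 0.7)
print(choquet_ell(x3, indep), choquet_ell(x3, dep))
```

In the two boundary cases the Choquet integral returns the sum and the maximum of the components, respectively.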

In geometric terms, for any given max-zonoid \(K \subset [0,1]^d\) the associated Choquet max-zonoid \(K^* \subset [0,1]^d\) is bounded by \(2^d-1\) hyperplanes, one for each direction \({\varvec{e}}_A\), which is the supporting hyperplane of the max-zonoid K in the direction of \({\varvec{e}}_A\).

The Choquet model is a spectrally discrete max-stable model, whose exponent measure has its support contained in the rays through the vectors \({\varvec{e}}_A\), \(A \subset \{1,\dots ,d\}\), \(A \ne \emptyset\). While its natural parameter space is the set of extremal coefficients, we can also describe it via the mass that the model puts on those rays. To this end, let \(\tau : \mathcal {P}_d \setminus \{\emptyset \} \rightarrow \mathbb {R}\) be given as follows

where we assume \(a_1,a_2,\dots , a_n\) to be the distinct elements from \(A \subset \{1,\dots ,d\}\). Then the spectral representation \((\Omega ,\mathcal {A},\nu ^{*},f)\) with \(\Omega =\{{\varvec{\omega }} \in [0,\infty )^d:\, \Vert {\varvec{\omega }} \Vert _\infty = 1\}\), \(f_i({\varvec{\omega }})=\omega _i\) and

corresponds to the stable tail dependence function \(\ell ^{*}\) from Theorem 2.9. In terms of an underlying generator for which (6) holds true, we may express \(\tau\) as

cf. Papastathopoulos and Strokorb (2016) Lemma 3. Moreover, we recover \(\theta\) from \(\tau\) via

which makes the analogy between extremal coefficient functions \(\theta\) and capacity functionals of random sets even more explicit.

However, there are two drawbacks with representing the Choquet model by the collection of coefficients \(\tau (A)\), \(A \subset \{1,\dots ,d\}\), \(A \ne \emptyset\). First, this representation is specific to the dimension, in which the model is considered, that is, we cannot simply turn to a subset of these coefficients when considering marginal distributions. Second, one may easily forget that one has in fact not \(2^d-1\) degrees of freedom among these coefficients, but \(2^d-1-d\), since \(\theta (\{i\})=1\) for singletons \(\{i\}\), which is only encoded through d linear constraints for \(\tau\) as follows

A third parametrisation of the Choquet model, which has received little attention so far, but is very relevant for the ordering results in this article (cf. Lemma 4.12) and does not have such drawbacks, is the following. Instead of extremal coefficients, let us consider the following tail dependence coefficients for \(A \subset \{1,\dots ,d\}\), \(A \ne \emptyset\):

Then it is easily seen that

Since \(\theta (\emptyset )=0\), and with \(a_1,\dots ,a_n\) being the distinct elements of A, the first identity may also be expressed as

In particular \(\chi (\{i\})=\theta (\{i\})=1\) for \(i=1,\dots ,d\), and these operations show explicitly, how \(\theta\) and \(\chi\) can be recovered from each other. While \(\theta\) resembles a capacity functional, \(\chi\) can be seen as an analog of an inclusion functional, since

whereas

where \(b_1,b_2,\dots ,b_m\) are the distinct elements of \(\{1,2,\dots ,d\} \setminus A\).

To sum up, we may consider three different parametrisations for the Choquet model:

- (i) by the \(2^d-1\) extremal coefficients \(\theta (A)\), \(A \in \mathcal {P}_d\), \(A \ne \emptyset\),

- (ii) by the \(2^d-1\) tail dependence coefficients \(\chi (A)\), \(A \in \mathcal {P}_d\), \(A \ne \emptyset\),

- (iii) by the \(2^d-1\) mass coefficients \(\tau (A)\), \(A \in \mathcal {P}_d\), \(A \ne \emptyset\).

For (i) and (ii) the constraint for standard unit Fréchet margins is encoded via \(\chi (\{i\})=\theta (\{i\})=1\) for \(i=1,\dots ,d\). For (iii) it amounts to the d conditions from (8). Only (i) and (ii) do not depend on the dimension, in which the model is considered.

3 Prerequisites from stochastic orderings

A wealth of stochastic orderings and associated inequalities have been summarised in Müller and Stoyan (2002) and Shaked and Shanthikumar (2007), the most fundamental order being the usual stochastic order

$$\begin{aligned} F \le _{\textrm{st}} G \end{aligned}$$

between two univariate distributions F and G, which is defined as \(F(x)\ge G(x)\) for all \(x\in \mathbb {R}\). This means that draws from F are less likely to attain large values than draws from G.

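For multivariate distributions, definitions of orderings are less straightforward and there are many more notions of stochastic orderings. We will focus on upper orthants, lower orthants and the PQD order here. A subset \(U \subset \mathbb {R}^d\) is called an upper orthant if it is of the form

$$\begin{aligned} U = U_{\varvec{a}} = \lbrace {\varvec{x}} \in \mathbb {R}^d :\, x_1> a_1,\dots ,x_d > a_d \rbrace \end{aligned}$$

for some \({\varvec{a}}\in \mathbb {R}^d\). Similarly, a subset \(L \subset \mathbb {R}^d\) is called a lower orthant if it is of the form

$$\begin{aligned} L = L_{\varvec{a}} = \lbrace {\varvec{x}} \in \mathbb {R}^d :\, x_1 \le a_1,\dots ,x_d \le a_d \rbrace \end{aligned}$$

for some \({\varvec{a}}\in \mathbb {R}^d\).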

Definition 3.1

(Multivariate orders LO, UO, PQD, Shaked and Shanthikumar (2007), Sections 6.G and 9.A, Müller and Stoyan (2002), Sections 3.3 and 3.8).

Let \({\varvec{X}},{\varvec{Y}}\in \mathbb {R}^d\) be two random vectors.

- \({\varvec{X}}\) is said to be smaller than \({\varvec{Y}}\) in the upper orthant order, denoted \({\varvec{X}}\le _{\textrm{uo}}{\varvec{Y}}\), if \(\mathbb {P}({\varvec{X}}\in U)\le \mathbb {P}({\varvec{Y}}\in U)\) for all upper orthants \(U \subset \mathbb {R}^d\).

- \({\varvec{X}}\) is said to be smaller than \({\varvec{Y}}\) in the lower orthant order, denoted \({\varvec{X}}\le _{\textrm{lo}}{\varvec{Y}}\), if \(\mathbb {P}({\varvec{X}}\in L)\ge \mathbb {P}({\varvec{Y}}\in L)\) for all lower orthants \(L \subset \mathbb {R}^d\).

- \({\varvec{X}}\) is said to be smaller than \({\varvec{Y}}\) in the positive quadrant dependence (PQD) order, denoted \({\varvec{X}}\le _{\textrm{PQD}}{\varvec{Y}}\), if we have the relations \({\varvec{X}}\le _{\textrm{uo}}{\varvec{Y}}\) and \({\varvec{X}}\ge _{\textrm{lo}}{\varvec{Y}}\).

Note that the PQD order (also termed concordance order) is a dependence order. If \({\varvec{X}}\le _{\textrm{PQD}}{\varvec{Y}}\) holds, it implies that \({\varvec{X}}\) and \({\varvec{Y}}\) have identical univariate marginals. Several equivalent characterizations of these orders are summarised in the respective sections of Müller and Stoyan (2002) and Shaked and Shanthikumar (2007). In relation to portfolio properties, it is interesting to note that for non-negative random vectors \({\varvec{X}}, {\varvec{Y}} \in [0,\infty )^d\)

In addition, if \({\varvec{X}}, {\varvec{Y}} \in [0,\infty )^d\) and \({\varvec{X}}\le _{\textrm{lo}}{\varvec{Y}}\), then

for all \({\varvec{a}}\in [0,\infty )^d\) and all Bernstein functions g, provided that the expectation exists, cf. Shaked and Shanthikumar (2007) 6.G.14 and 5.A.4 for this fact and Appendix 2 for a definition of Bernstein functions. In particular, such functions are non-negative, monotonically increasing and concave and therefore form a natural class of utility functions, see e.g. Brockett and Golden (1987) and Caballé and Pomansky (1996). Important examples of Bernstein functions include the identity function, \(g(x)=\log (1+x)\) or \(g(x)=(1+x)^\alpha - 1\) for \(\alpha \in (0,1)\).

The multivariate orders from Definition 3.1 have several useful closure properties. We refer to Müller and Stoyan (2002) Theorem 3.3.19 and Theorem 3.8.7 for a systematic collection, including

- independent or identical concatenation,

- marginalisation,

- distributional convergence,

- applying increasing transformations to the components,

- taking mixtures.

In what follows, we will need a corresponding notion of multivariate orders not only for probability measures on \(\mathbb {R}^d\), but also for exponent measures as introduced in Section 2. While the support of an exponent measure \(\Lambda\) is contained in \([0,\infty )^d\setminus \{{\varvec{0}}\}\), its total mass is infinite. We only know for sure that \(\Lambda (B)\) is finite for Borel sets \(B\subset \mathbb {R}^d\) bounded away from the origin in the sense that there exists \(\varepsilon >0\) such that \(B \cap L_{\varepsilon {\varvec{e}}} = \emptyset\) (recall \(L_{\varepsilon {\varvec{e}}} = \lbrace {\varvec{x}} \in \mathbb {R}^d :\, x_1 \le \varepsilon ,\dots ,x_d \le \varepsilon \rbrace\)). This means that we need to adopt a different view of lower orthants and work with their complements instead, a subtlety that did not matter previously when defining such notions for probability measures only. The following notion seems natural in view of Definition 3.1 and the results of Section 4. Figure 3 illustrates the restriction to fewer admissible test sets for these orders for exponent measures.

Fig. 3 Illustration of test sets for multivariate orders for exponent measures in dimension \(d=2\), cf. Definition 3.2. Left: \(\Lambda\) is locally finite on the (closed) grey area for all \(\varepsilon >0\), its total (infinite) mass is contained in the union of such sets; middle: admissible complement of a lower orthant \(\mathbb {R}^2\setminus L_{\varvec{a}}\) (blue area) for testing lower orthant order for \(\Lambda\); right: admissible upper orthants \(U_{\varvec{a}}\), \(U_{\varvec{b}}\), \(U_{\varvec{c}}\) (three red areas) for testing upper orthant order for \(\Lambda\)

Definition 3.2

(Multivariate orders for exponent measures). Let \(\Lambda ,\widetilde{\Lambda }\) be two infinite measures on \(\mathbb {R}^d\) with mass contained in \([0,\infty )^d\setminus \{{\varvec{0}}\}\) and taking finite values on Borel sets bounded away from the origin.

- \(\Lambda\) is said to be smaller than \(\widetilde{\Lambda }\) in the upper orthant order, denoted \(\Lambda \le _{\textrm{uo}}\widetilde{\Lambda }\), if \(\Lambda (U)\le \widetilde{\Lambda }(U)\) for each upper orthant \(U \subset \mathbb {R}^d\) that is bounded away from the origin.

- \(\Lambda\) is said to be smaller than \(\widetilde{\Lambda }\) in the lower orthant order, denoted \(\Lambda \le _{\textrm{lo}}\widetilde{\Lambda }\), if \(\Lambda (\mathbb {R}^d \setminus L)\le \widetilde{\Lambda }(\mathbb {R}^d \setminus L)\) for all lower orthants \(L \subset \mathbb {R}^d\) such that \(\mathbb {R}^d \setminus L\) is bounded away from the origin.

- \(\Lambda\) is said to be smaller than \(\widetilde{\Lambda }\) in the positive quadrant dependence (PQD) order, denoted \(\Lambda \le _{\textrm{PQD}} \widetilde{\Lambda }\), if we have the relations \(\Lambda \le _{\textrm{uo}} \widetilde{\Lambda }\) and \(\Lambda \ge _{\textrm{lo}} \widetilde{\Lambda }\).

Remark 3.3

Exponent measures \(\Lambda\) and \(\widetilde{\Lambda }\) are Radon measures on \([0,\infty ]^d \setminus \{{\varvec{0}}\}\) (the one-point uncompactification of \([0,\infty ]^d\)). Any Borel set \(B\subset [0,\infty ]^d \setminus \{{\varvec{0}}\}\) bounded away from the origin is relatively compact in this space, hence \(\Lambda (B)\) and \(\widetilde{\Lambda }(B)\), including \(\Lambda (U)\), \(\widetilde{\Lambda }(U)\), \(\Lambda (\mathbb {R}^d \setminus L)\) and \(\widetilde{\Lambda }(\mathbb {R}^d \setminus L)\) as above, are all finite.

4 Main results

First we present some fundamental characterisations of LO, UO and PQD order among simple max-stable distributions and their exponent measures, then we study these orders among the introduced parametric families. While we focus on simple max-stable distributions in what follows, we would like to stress that applying componentwise identical isotonic transformations to random vectors preserves orthant and concordance orders; in this sense the following properties can be seen as statements about the respective copulas. In particular, among max-stable random vectors, it suffices to establish these orders among simple max-stable random vectors and they translate immediately to all counterparts with different marginal distributions, cf. (1).

4.1 Fundamental results

We start by assembling the most fundamental relations for multivariate orders among simple max-stable random vectors. While the statements about lower orthant orders are almost immediate from existing theory and definitions, the relations for upper orthants are a bit more intricate and non-standard in the area. In particular, showing that “\(\Lambda \le _{\textrm{uo}} \widetilde{\Lambda }\) implies \(G \le _{\textrm{uo}} \widetilde{G}\)” turns out to be non-trivial. The key ingredient in the proof of the following theorem (cf. Appendix 1) is Proposition B.9 for part b).

Theorem 4.1

(Orthant orders characterisations). Let G and \(\widetilde{G}\) be d-variate simple max-stable distributions with exponent measures \(\Lambda\) and \(\widetilde{\Lambda }\), generators \({\varvec{Z}}\) and \(\widetilde{\varvec{Z}}\), stable tail dependence functions \(\ell\) and \(\widetilde{\ell }\) and max-zonoids K and \(\widetilde{K}\), respectively.

- (a) The following statements are equivalent.

  - (i) \(G\le _{\textrm{lo}} \widetilde{G}\);

  - (ii) \(\Lambda \le _{\textrm{lo}} \widetilde{\Lambda }\);

  - (iii) \(\mathbb {E}(\max _{i=1,\dots ,d}(a_i Z_i)) \le \mathbb {E}(\max _{i=1,\dots ,d}(a_i \widetilde{Z}_i))\) for all \({\varvec{a}} \in (0,\infty )^d\);

  - (iv) \(\ell \le \widetilde{\ell }\);

  - (v) \(K \subset \widetilde{K}\).

- (b) The following statements are equivalent.

  - (i) \(G\le _{\textrm{uo}} \widetilde{G}\);

  - (ii) \(\Lambda \le _{\textrm{uo}} \widetilde{\Lambda }\);

  - (iii) \(\mathbb {E}(\min _{i \in A}(a_i Z_i)) \le \mathbb {E}(\min _{i \in A}(a_i \widetilde{Z}_i))\) for all \({\varvec{a}} \in (0,\infty )^d\) and \(A \subset \{1,\dots ,d\}\), \(A \ne \emptyset\).

- (c) If \(d=2\), the following statements are equivalent.

  - (i) \(G\le _{\textrm{PQD}} \widetilde{G}\);

  - (ii) \(G\le _{\textrm{uo}} \widetilde{G}\);

  - (iii) \(G\ge _{\textrm{lo}} \widetilde{G}\).
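As a numerical illustration of part c), the sketch below checks both orthant comparisons on a grid for a bivariate example. It uses the symmetric logistic model with stable tail dependence function \(\ell _\alpha (x_1,x_2)=(x_1^{1/\alpha }+x_2^{1/\alpha })^{\alpha }\), \(\alpha \in (0,1]\), which is not discussed in this article and is chosen here only because it is available in closed form. The function names, the grid and the two parameter values are hypothetical.

```python
import math

def ell_logistic(x1, x2, alpha):
    """Stable tail dependence function of the symmetric logistic model."""
    return (x1 ** (1.0 / alpha) + x2 ** (1.0 / alpha)) ** alpha

def cdf(x1, x2, alpha):
    """G(x) = exp(-ell(1/x1, 1/x2)) for x in (0, inf)^2."""
    return math.exp(-ell_logistic(1.0 / x1, 1.0 / x2, alpha))

def survival(x1, x2, alpha):
    """P(X1 > x1, X2 > x2) by inclusion-exclusion with unit Frechet margins."""
    return 1.0 - math.exp(-1.0 / x1) - math.exp(-1.0 / x2) + cdf(x1, x2, alpha)

grid = [0.25 * k for k in range(1, 41)]
alpha, alpha_tilde = 0.3, 0.7  # alpha_tilde is closer to independence

# Lower orthants: larger distribution function for the more dependent model.
lo_holds = all(cdf(s, t, alpha) >= cdf(s, t, alpha_tilde) for s in grid for t in grid)
# Upper orthants: larger joint survival probability for the same model.
uo_holds = all(survival(s, t, alpha) >= survival(s, t, alpha_tilde) for s in grid for t in grid)
print(lo_holds, uo_holds)
```

With unit Fréchet margins fixed, the joint survival function and the distribution function differ only by terms that do not depend on the dependence structure, which is why the two comparisons coincide in dimension two.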

The assumption \(d=2\) is important in part c); these equivalences are no longer true in higher dimensions, cf. Example 4.13 below. Theorem 4.1 implies further that the orthant ordering of two generators \({\varvec{Z}}\) and \({\widetilde{\varvec{Z}}}\) implies the respective ordering of the corresponding distributions G and \({\widetilde{G}}\) and exponent measures \({\Lambda }\) and \({\widetilde{\Lambda }}\). However, the converse is false and most generators will not satisfy orthant orderings, even when the corresponding distributions do. An interesting case for this phenomenon is the Hüsler-Reiß family, cf. Example 4.11 below. The following corollary is another immediate consequence of Theorem 4.1.

Corollary 4.2

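(PQD/concordance order characterisation). Let G and \(\widetilde{G}\) be d-variate simple max-stable distributions with exponent measures \(\Lambda\) and \(\widetilde{\Lambda }\), then

$$\begin{aligned} G \le _{\textrm{PQD}} \widetilde{G} \quad \iff \quad \Lambda \le _{\textrm{PQD}} \widetilde{\Lambda }. \end{aligned}$$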

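It is well-known that for any stable tail dependence function \(\ell\) of a simple max-stable random vector

$$\begin{aligned} \ell _{\textrm{dep}}({\varvec{x}}) = \max (x_1,\dots ,x_d) \le \ell ({\varvec{x}}) \le x_1+\dots +x_d = \ell _{\textrm{indep}}({\varvec{x}}), \qquad {\varvec{x}} \in [0,\infty )^d, \end{aligned}$$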

where \(\ell _{\textrm{dep}}\) represents the degenerate max-stable random vector, whose components are fully dependent, and \(\ell _{\textrm{indep}}\) corresponds to the max-stable random vector with completely independent components. From the perspective of stochastic orderings this means that every max-stable random vector is dominated by the fully independent model, while it dominates the fully dependent model with respect to the lower orthant order. It seems less well-known that the converse ordering holds true for upper orthants, so that we arrive at the following corollary.
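Before stating it, here is a brief sketch of why the order reverses for upper orthants, using criterion (iii) of Theorem 4.1 b). The sketch assumes only that a generator \({\varvec{Z}}\) has non-negative components with \(\mathbb {E}(Z_i)=1\); the two boundary generators named below are one possible choice made for illustration. For \({\varvec{a}} \in (0,\infty )^d\) and \(A \subset \{1,\dots ,d\}\) with at least two elements,

$$\begin{aligned} 0 \le \mathbb {E}\big (\min _{i \in A}(a_i Z_i)\big ) \le \min _{i \in A}\big (a_i\, \mathbb {E}(Z_i)\big ) = \min _{i \in A} a_i. \end{aligned}$$

The lower bound is attained by the generator that puts the value d in one uniformly chosen coordinate and zero elsewhere, which corresponds to independence. The upper bound is attained by the constant generator \((1,\dots ,1)^\top\), which corresponds to full dependence. For singletons \(A=\{i\}\) all three expectations equal \(a_i\).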

Corollary 4.3

(PQD/concordance for independent and fully dependent model). Let \(G_{\textrm{indep}}\), \(G_{\textrm{dep}}\) and G be d-dimensional simple max-stable distributions, where \(G_{\textrm{indep}}\) represents the model with fully independent components, and \(G_{\textrm{dep}}\) represents the model with fully dependent components. Then

$$\begin{aligned} G_{\textrm{indep}} \le _{\textrm{PQD}} G \le _{\textrm{PQD}} G_{\textrm{dep}}. \end{aligned}$$

Similarly Theorem 2.9 can be strengthened as follows. Whilst previously only the lower orthant order was known, we have in fact PQD/concordance ordering.

Corollary 4.4

(PQD/concordance for the associated Choquet model). Let \({\varvec{X}}\) be a simple max-stable random vector with extremal coefficients \((\theta (A))\), \(A \subset \{1,\dots ,d\}\), \(A \ne \emptyset\) and \({\varvec{X}}^*\) the Choquet (Tawn-Molchanov) random vector with identical extremal coefficients. Then

4.2 Parametric models

In general, parametric families of multivariate distributions do not necessarily exhibit stochastic orderings. One of the few more interesting known examples among multivariate max-stable distributions is the Dirichlet family, for which it has been shown that it is ordered in the symmetric case (Aulbach et al., 2015, Proposition 4.4), that is, for \(\alpha \le \beta\) we have

Figure 4 illustrates (15) in the bivariate situation and shows, by means of a bivariate example, that these distributions are not necessarily ordered in the asymmetric case.

Fig. 4 Top: Nested max-zonoids (left) and ordered (hypographs of) Pickands dependence functions (right) from the fully symmetric Dirichlet family for \(\alpha \in \lbrace 0.0625, 0.25, 1, 4\rbrace\). Smaller values of \(\alpha\) correspond to larger sets and larger Pickands dependence functions and are closer to the independence model represented by the box \([0,1]^2\) or the constant function, which is identically 1. The fully dependent model is represented in black. Bottom: Non-nested max-zonoids and non-ordered Pickands dependence function from the asymmetric Dirichlet family for \((\alpha _1,\alpha _2) \in \{(0.15,12),(4,0.2)\}\)

Here, we extend (15) in several ways: (i) going beyond the symmetric situation considering the fully asymmetric model, (ii) considering PQD/concordance order, (iii) shortening the proof by exploiting a connection to the theory of majorisation, cf. Appendix 2. Figure 5 provides an illustration of the stochastic ordering for the asymmetric Dirichlet family in the bivariate case. In Fig. 1 we see how the mass of the angular measure of the symmetric and asymmetric Dirichlet model is more concentrated from left plot to right plot. This also corresponds to their stochastic ordering, with the right one being the most dominant model in terms of PQD order.

Fig. 5 Nested max-zonoids and ordered Pickands dependence functions of the asymmetric max-stable Dirichlet family for \((\alpha _1,\alpha _2)\in \lbrace (0.25,0.25),(1,0.25),(1,1),(1,4),(4,4)\rbrace\). Componentwise smaller values of \((\alpha _1,\alpha _2)\) correspond to larger sets and larger Pickands dependence functions and are closer to the independence model

Theorem 4.5

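(PQD/concordance order of Dirichlet family). Consider the max-stable Dirichlet family from Theorem/Definition 2.3. If \({\alpha }_i \le {\beta }_i\), \(i=1,\dots ,d\), then

$$\begin{aligned} \textrm{MaxDir}({\varvec{\alpha }}) \le _{\textrm{PQD}} \textrm{MaxDir}({\varvec{\beta }}). \end{aligned}$$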

Example 4.6

In order to draw attention to some further consequences of Theorem 4.5, let \({\varvec{X}} \sim \textrm{MaxDir}({\varvec{\alpha }})\) and \({\varvec{Y}} \sim \textrm{MaxDir}({\varvec{\beta }})\) where \(\alpha _i \le \beta _i\), \(i=1,\dots ,d\), so that \({\varvec{X}} \le _{\textrm{PQD}} {\varvec{Y}}\), hence \({\varvec{X}} \le _{\textrm{uo}} {\varvec{Y}}\) and \({\varvec{X}} \ge _{\textrm{lo}} {\varvec{Y}}\), which implies

cf. (12), (13) and Lemma 2.4. Exemplarily, we consider a range of trivariate symmetric and asymmetric max-stable Dirichlet distributions \(\textrm{MaxDir}(\alpha _1,\alpha _2,\alpha _3)\) with parameters \((\alpha _1,\alpha _2,\alpha _3)\) given in Fig. 1. The colouring is chosen such that red models PQD-dominate blue models, which PQD-dominate black models.

In addition, we consider the portfolio with equal weights (1, 1, 1) and the resulting min-projections \(\min (X_1,X_2,X_3)\) and max-projections \(\max (X_1,X_2,X_3)\), where \((X_1,X_2,X_3)\sim \textrm{MaxDir}(\alpha _1,\alpha _2,\alpha _3)\). Figures 6 and 7 display their distribution functions on the Gumbel scale. As is common for extreme value distributions, we show the equivalent return level plot instead of the quantile function Q; it displays the return levels \(Q(1-p)\) for the return period of 1/p observations. The plots of these functions are based on empirical estimates from one million simulated observations from the respective models, and their orderings are as expected from the theory, i.e. quantile functions increase as the dominance of the model grows, while distribution functions decrease.
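For the max-projection the plotted quantities have a closed form, which the following sketch evaluates instead of simulating. It relies on \(\mathbb {P}(\max (X_1,X_2,X_3)\le x)=\exp (-\theta /x)\) with \(\theta =\ell (1,1,1)\in [1,3]\), so that on the standard Gumbel scale the return level for the return period T equals \(\log \theta -\log (-\log (1-1/T))\). The function name and the chosen values of \(\theta\) are hypothetical; no values from the Dirichlet models of Fig. 1 are used.

```python
import math

def gumbel_return_level_max(theta, period):
    """Return level of log(max(X1, X2, X3)) for a given return period.

    Solves exp(-theta * exp(-y)) = 1 - 1/period for y.
    """
    p = 1.0 / period
    return math.log(theta) - math.log(-math.log(1.0 - p))

for period in (10, 50, 100):
    dep = gumbel_return_level_max(1.0, period)    # full dependence, theta = 1
    mid = gumbel_return_level_max(2.0, period)    # hypothetical intermediate model
    indep = gumbel_return_level_max(3.0, period)  # independence, theta = 3
    print(period, round(dep, 3), round(mid, 3), round(indep, 3))
```

On this scale the dependence structure enters only through the additive shift \(\log \theta\), so the band between the fully dependent and the fully independent case has constant width \(\log 3\).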

Fig. 6 Distribution functions (left) and return levels (right) for return periods 10 to 100 (on logarithmic scale) of \(\min (X_1,X_2,X_3)\), where \((X_1,X_2,X_3)\sim \textrm{MaxDir}(\alpha _1,\alpha _2,\alpha _3)\) on standard Gumbel scale with \({\varvec{\alpha }}=(\alpha _1,\alpha _2,\alpha _3)\) as chosen in Fig. 1. Top: symmetric case; bottom: asymmetric case. Black, blue and red colouring encodes the matching with Fig. 1. The grey areas represent the range between the fully dependent (dashed line) and fully independent (dotted line) cases

Fig. 7 Distribution functions (left) and return levels (right) for return periods 10 to 100 (on logarithmic scale) of \(\max (X_1,X_2,X_3)\), where \((X_1,X_2,X_3)\sim \textrm{MaxDir}(\alpha _1,\alpha _2,\alpha _3)\) on standard Gumbel scale with \({\varvec{\alpha }}=(\alpha _1,\alpha _2,\alpha _3)\) as chosen in Fig. 1. Top: symmetric case; bottom: asymmetric case. Black, blue and red colouring encodes the matching with Fig. 1. The grey areas represent the range between the fully dependent (dashed line) and fully independent (dotted line) cases

Another prominent family of multivariate max-stable distributions that turns out to be stochastically ordered in the PQD/concordance order is the Hüsler-Reiß family. It can be shown using the limit result from Theorem 2.7 together with Slepian’s normal comparison lemma and some closure properties of the PQD/concordance order. Figure 8 provides an illustration in terms of nested max-zonoids and ordered Pickands dependence functions in the bivariate case. However, while these models are ordered, we would like to point out that none of the typically chosen families of log-Gaussian generators satisfy any of the orthant orders, cf. Example 4.11.

Theorem 4.7

(PQD/concordance order of Hüsler-Reiß family). Consider the max-stable Hüsler-Reiß family from Theorem/Definition 2.5. If \({\gamma }_{i,j} \le {\widetilde{\gamma }}_{i,j}\) for all \(i,j \in \{1,\dots ,d\}\), then

Remark 4.8

With almost identical proof, cf. Appendix 2, we may even deduce

where \(\ge _{\textrm{sm}}\) denotes the supermodular order, cf. Müller and Stoyan (2002) Section 3.9 or Shaked and Shanthikumar (2007) Section 9.A.4. We have therefore included the respective arguments in the proof, too, although considering the supermodular order is otherwise beyond the scope of this article.

Remark 4.9

Theorem 4.7 includes the assumption that both parameter matrices \({\varvec{\gamma }}\) and \(\widetilde{\varvec{\gamma }}\) constitute a valid set of parameters for the Hüsler-Reiß model, i.e. they need to be elements of \(\mathcal {G}_d\). In dimensions \(d \ge 3\) it is possible that increasing (or decreasing) any of the parameters of a given valid \({\varvec{\gamma }}\) will result in a set of parameters that is not valid for the Hüsler-Reiß model.

Remark 4.10

Since the orthant orders are closed under independent conjunction, Theorem 4.7 extends to the generalised Hüsler-Reiß model, where we can allow some parameter values of \({\varvec{\gamma }}\) to assume the value \(\infty\), as long as \({\varvec{\gamma }}\) remains negative definite in the extended sense (see Remark 2.8).

Example 4.11

(Ordering of G/\(\widetilde{G}\) does not imply generator ordering for \({\varvec{Z}}\)/\(\widetilde{\varvec{Z}}\) – the case of Hüsler-Reiß log-Gaussian generators). Consider the non-degenerate bivariate Hüsler-Reiß model with \(\gamma _{12}=\gamma _{21}=\gamma \in (0,\infty )\) and let additionally \(a\in [0,1]\). Then the zero mean bivariate Gaussian model \((W_1,W_2)^\top\) with \(\textrm{Var}(W_1)=\gamma a^2\), \(\textrm{Var}(W_2)=\gamma (1-a)^2\), \(\textrm{Cov}(W_1,W_2)=0.5\gamma \cdot (a^2+(1-a)^2-1)\) satisfies \(\mathbb {E}((W_1-W_2)^2)=\gamma\), hence leads to a generator for the bivariate Hüsler-Reiß distribution in the sense of Theorem/Definition 2.5. WLOG \(a \in (0,1]\) (otherwise consider \(1-a\) instead of a). Then \(\log (Z_1)\) follows a non-degenerate univariate Gaussian distribution with mean \(-0.5 \gamma a^2\) and variance \(\gamma a^2\). The family of such distributions is not ordered in \(\gamma >0\) (cf. e.g. Shaked and Shanthikumar (2007) Example 1.A.26 or Müller and Stoyan (2002) Theorem 3.3.13). Hence, the bivariate family \((\log (Z_1),\log (Z_2))^\top\) cannot be ordered according to orthant order either, nor can any multivariate family for which this constitutes a marginal family. Accordingly, the corresponding log-Gaussian generators \({\varvec{Z}}\) of the Hüsler-Reiß model will not be ordered, even when the resulting max-stable model and exponent measures are ordered as seen in Theorem 4.7.
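The lack of order in the family of normal distributions with mean \(-\sigma ^2/2\) and variance \(\sigma ^2\) can be seen numerically from crossing distribution functions. The sketch below is an illustration only; the two variance values and the two evaluation points are arbitrary choices, and the function names are hypothetical.

```python
import math

def normal_cdf(x, mean, var):
    """Distribution function of N(mean, var) via the error function."""
    return 0.5 * (1.0 + math.erf((x - mean) / math.sqrt(2.0 * var)))

def log_generator_cdf(x, var):
    """Distribution function of log(Z_1) ~ N(-var/2, var), where var = gamma * a**2."""
    return normal_cdf(x, -0.5 * var, var)

var_small, var_large = 1.0, 4.0
for x in (-3.0, 3.0):
    diff = log_generator_cdf(x, var_small) - log_generator_cdf(x, var_large)
    print(x, diff)  # the sign of the difference changes between the two points
```

A change of sign means that neither distribution function lies below the other everywhere, so the two laws are not comparable in the usual stochastic order.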

While Dirichlet and Hüsler-Reiß families are ordered in the PQD/concordance sense according to the natural ordering of their parameter spaces, we would like to provide some examples that show that UO and LO ordering among simple max-stable distributions are in fact not equivalent.

To this end, we revisit the Choquet max-stable model from Section 2.2.3. We write \(\textrm{Choquet}_{\textrm{EC}}(\theta )\) for the simple max-stable Choquet distribution if it is parameterised by its extremal coefficients \(\theta (A)\), \(A \subset \{1,\dots ,d\}\), \(A \ne \emptyset\) and \(\textrm{Choquet}_{\textrm{TD}}(\chi )\) if it is parameterised by its tail dependence coefficients \(\chi (A)\), \(A \subset \{1,\dots ,d\}\), \(A \ne \emptyset\).

Lemma 4.12

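(LO and UO order of Choquet family/Tawn-Molchanov model). Consider the family of max-stable Choquet models from Section 2.2.3. Then the LO order is characterised by the ordering of extremal coefficients, that is

$$\begin{aligned} \textrm{Choquet}_{\textrm{EC}}(\theta ) \le _{\textrm{lo}} \textrm{Choquet}_{\textrm{EC}}(\widetilde{\theta }) \quad \iff \quad \theta \le \widetilde{\theta }, \end{aligned}$$

and the UO order is characterised by the ordering of tail dependence coefficients, that is

$$\begin{aligned} \textrm{Choquet}_{\textrm{TD}}(\chi ) \le _{\textrm{uo}} \textrm{Choquet}_{\textrm{TD}}(\widetilde{\chi }) \quad \iff \quad \chi \le \widetilde{\chi }. \end{aligned}$$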

As we know already from Theorem 4.1 part c), \(\chi \le \widetilde{\chi }\) is equivalent to \(\theta \ge \widetilde{\theta }\) in dimension \(d=2\); alternatively, this is easily seen from \(\theta _{12}+\chi _{12}=2\). Starting from dimension \(d=3\), this is no longer the case and one can easily construct examples where only LO or UO ordering holds.

Example 4.13

Table 1 lists valid parameter sets for four different trivariate Choquet models. Among these, we can easily read off that

- \(B \le _{\textrm{uo}}D\), but there is no order between B and D according to lower orthants;

- \(C \le _{\textrm{lo}} B\), but there is no order between B and C according to upper orthants.

Of course, it is still possible that Choquet models are ordered according to PQD order, e.g.

- \(A \le _{\textrm{PQD}}B\).

It is also possible to have both LO and UO order in the same direction, e.g.

- \(A \le _{\textrm{uo}}C\) and \(A \le _{\textrm{lo}}C\).

However, note that such an order can only arise if the bivariate marginal distributions all agree.
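The last situation can be reproduced with a small computation. The sketch below does not use Table 1; it builds two hypothetical trivariate Choquet models from mass coefficients, assuming the representation \(\ell ({\varvec{x}})=\sum _{K}\tau (K)\max _{i\in K}x_i\), so that \(\theta (A)\) sums the masses of all sets K meeting A and \(\chi (A)\) sums the masses of all sets K containing A. It then compares the coefficients setwise in the sense of Lemma 4.12. All names and values are illustrative assumptions.

```python
from itertools import combinations

D = 3
SUBSETS = [frozenset(c) for r in range(1, D + 1)
           for c in combinations(range(1, D + 1), r)]

def theta_from_tau(tau):
    """theta(A): total mass of all sets K that intersect A."""
    return {A: sum(m for K, m in tau.items() if K & A) for A in SUBSETS}

def chi_from_tau(tau):
    """chi(A): total mass of all sets K that contain A."""
    return {A: sum(m for K, m in tau.items() if A <= K) for A in SUBSETS}

def dominated(c, c_tilde, tol=1e-12):
    """True if c(A) <= c_tilde(A) for every non-empty A."""
    return all(c[A] <= c_tilde[A] + tol for A in SUBSETS)

def tau_model(single, pair, triple):
    """Symmetric mass coefficients: the same mass on all sets of equal size."""
    by_size = {1: single, 2: pair, 3: triple}
    return {A: by_size[len(A)] for A in SUBSETS}

# Two hypothetical models; in each, the masses of all K containing i sum to 1.
tau_p = tau_model(single=0.5, pair=0.0, triple=0.5)
tau_q = tau_model(single=0.25, pair=0.25, triple=0.25)

theta_p, theta_q = theta_from_tau(tau_p), theta_from_tau(tau_q)
chi_p, chi_q = chi_from_tau(tau_p), chi_from_tau(tau_q)

lo_q_le_p = dominated(theta_q, theta_p)  # extremal coefficients ordered
uo_q_le_p = dominated(chi_q, chi_p)      # tail dependence coefficients ordered
same_pairs = all(abs(chi_p[A] - chi_q[A]) < 1e-12 for A in SUBSETS if len(A) == 2)
print(lo_q_le_p, uo_q_le_p, same_pairs)
```

In this example both coefficient families are ordered in the same direction, and the pairwise coefficients of the two models coincide, in line with the remark on bivariate marginal distributions.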

Availability of data and material

Not Applicable.

Code availability

Not Applicable.

References

Aulbach, S., Falk, M., Zott, M.: The space of \(D\)-norms revisited. Extremes 18(1), 85–97 (2015). https://doi.org/10.1007/s10687-014-0204-y

Ballani, F., Schlather, M.: A construction principle for multivariate extreme value distributions. Biometrika 98(3), 633–645 (2011). https://doi.org/10.1093/biomet/asr034

Beirlant, J., Goegebeur, Y., Teugels, J., Segers, J.: Statistics of extremes: theory and applications, with contributions from Daniel De Waal and Chris Ferro. Wiley Series in Probability and Statistics. John Wiley & Sons, Ltd., Chichester (2004). https://doi.org/10.1002/0470012382

Berg, C., Christensen, J.P.R., Ressel, P.: Harmonic analysis on semigroups. Theory of positive definite and related functions. Graduate Texts in Mathematics, vol. 100. Springer-Verlag, New York (1984). https://doi.org/10.1007/978-1-4612-1128-0

Boldi, M.-O., Davison, A.C.: A mixture model for multivariate extremes. J. R. Stat. Soc. Ser. B Stat. Methodol. 69(2), 217–229 (2007). https://doi.org/10.1111/j.1467-9868.2007.00585.x

Brockett, P.L., Golden, L.L.: A class of utility functions containing all the common utility functions. Management Sci. 33(8), 955–964 (1987). https://doi.org/10.1287/mnsc.33.8.955

Brown, B.M., Resnick, S.I.: Extreme values of independent stochastic processes. J. Appl. Probability 14(4), 732–739 (1977). https://doi.org/10.2307/3213346

Caballé, J., Pomansky, A.: Mixed risk aversion. J. Econom. Theory 71(2), 485–513 (1996). https://doi.org/10.1006/jeth.1996.0130

Chen, Y., Liu, P., Liu, Y., Wang, R.: Ordering and inequalities for mixtures on risk aggregation. Math. Finance 32(1), 421–451 (2022). https://doi.org/10.1111/mafi.12323

Coles, S.G., Tawn, J.A.: Modelling extreme multivariate events. J. Roy. Statist. Soc. Ser. B Stat. Methodol. 53(2), 377–392 (1991). https://doi.org/10.1111/j.2517-6161.1991.tb01830.x

Davison, A., Huser, R., Thibaud, E.: Spatial extremes. In: Handbook of environmental and ecological statistics. Chapman & Hall/CRC Handb. Mod. Stat. Methods, pp. 711–744. CRC Press, Boca Raton, FL (2019)

Davydov, Y., Molchanov, I., Zuyev, S.: Strictly stable distributions on convex cones. Electron. J. Probab. 13(11), 259–321 (2008). https://doi.org/10.1214/EJP.v13-487

de Haan, L.: A spectral representation for max-stable processes. Ann. Probab. 12(4), 1194–1204 (1984). https://www.jstor.org/stable/2243357

Engelke, S., Hitz, A.S.: Graphical models for extremes. J. R. Stat. Soc. Ser. B. Stat. Methodol. 82(4), 871–932 (2020). With discussions. https://doi.org/10.1111/rssb.12355

European Mathematical Society: Envelope. In: Encyclopedia of Mathematics. EMS Press (2020). https://encyclopediaofmath.org/wiki/Envelope. Accessed 29 Aug 2022

Falk, M.: Multivariate Extreme Value theory and D-Norms. Springer Series in Operations Research and Financial Engineering, Springer, Cham. (2019). https://doi.org/10.1007/978-3-030-03819-9

Falk, M., Hüsler, J., Reiß, R.-D.: Laws of small numbers: extremes and rare events, extended edn. Birkhäuser Verlag, Basel (2004). https://doi.org/10.1007/978-3-0348-7791-6

Fallat, S., Lauritzen, S., Sadeghi, K., Uhler, C., Wermuth, N., Zwiernik, P.: Total positivity in Markov structures. Ann. Statist. 45(3), 1152–1184 (2017). https://doi.org/10.1214/16-AOS1478

Gudendorf, G., Segers, J.: Extreme-value copulas. In: Copula Theory and its Applications. Lecture Notes in Statistics, vol. 198, pp. 127–145. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-12465-5_6

Huang, X.: Statistics of bivariate extremes, PhD Thesis, Erasmus University, Rotterdam, Tinbergen Institute Research series No. 22 (1992)

Hüsler, J., Reiß, R.-D.: Maxima of normal random vectors: between independence and complete dependence. Statist. Probab. Lett. 7(4), 283–286 (1989). https://doi.org/10.1016/0167-7152(89)90106-5

Kabluchko, Z.: Extremes of independent Gaussian processes. Extremes 14(3), 285–310 (2011). https://doi.org/10.1007/s10687-010-0110-x

Kabluchko, Z., Schlather, M., de Haan, L.: Stationary max-stable fields associated to negative definite functions. Ann. Probab. 37(5), 2042–2065 (2009). https://doi.org/10.1214/09-AOP455

Karlin, S., Rinott, Y.: Classes of orderings of measures and related correlation inequalities. I. Multivariate totally positive distributions. J. Multivariate Anal. 10(4), 467–498 (1980). https://doi.org/10.1016/0047-259X(80)90065-2

Li, H.: Dependence comparison of multivariate extremes via stochastic tail orders. In: Stochastic orders in reliability and risk. Lecture Notes in Statistics, vol. 208, pp. 363–387. Springer, New York (2013). https://doi.org/10.1007/978-1-4614-6892-9_19

Li, H., Li, X. (eds.): Stochastic orders in reliability and risk. In honor of Professor Moshe Shaked, Papers from the International Workshop (SORR2011) held in Xiamen, June 27–29, 2011. Lecture Notes in Statistics, Springer, New York (2013). https://doi.org/10.1007/978-1-4614-6892-9

Mainik, G., Rüschendorf, L.: Ordering of multivariate risk models with respect to extreme portfolio losses. Stat. Risk Model. 29(1), 73–105 (2012). https://doi.org/10.1524/strm.2012.1103

Marshall, A.W., Olkin, I.: Domains of attraction of multivariate extreme value distributions. Ann. Probab. 11(1), 168–177 (1983). https://www.jstor.org/stable/2243583

Marshall, A.W., Olkin, I., Arnold, B.C.: Inequalities: Theory of Majorization and Its Applications. Springer Series in Statistics, second edn, Springer, New York. (2011). https://doi.org/10.1007/978-0-387-68276-1

Marshall, A.W., Proschan, F.: An inequality for convex functions involving majorization. J. Math. Anal. Appl. 12, 87–90 (1965). https://doi.org/10.1016/0022-247X(65)90056-9

Molchanov, I.: Convex geometry of max-stable distributions. Extremes 11(3), 235–259 (2008). https://doi.org/10.1007/s10687-008-0055-5

Molchanov, I.: Theory of random sets, Vol. 87 of Probability Theory and Stochastic Modelling, 2nd edn. Springer-Verlag, London (2017). https://doi.org/10.1007/978-1-4471-7349-6

Molchanov, I., Strokorb, K.: Max-stable random sup-measures with comonotonic tail dependence. Stochastic Process. Appl. 126(9), 2835–2859 (2016). https://doi.org/10.1016/j.spa.2016.03.004

Müller, A., Stoyan, D.: Comparison methods for stochastic models and risks. Wiley Series in Probability and Statistics, John Wiley & Sons, Ltd., Chichester (2002). https://www.wiley.com/-p-9780471494461

Papastathopoulos, I., Strokorb, K.: Conditional independence among max-stable laws. Statist. Probab. Lett. 108, 9–15 (2016). https://doi.org/10.1016/j.spl.2015.08.008

Papastathopoulos, I., Tawn, J.A.: Stochastic ordering under conditional modelling of extreme values: drug-induced liver injury. J. R. Stat. Soc. Ser. C. Appl. Stat. 64(2), 299–317 (2015). https://doi.org/10.1111/rssc.12074

Resnick, S.I.: Extreme values, regular variation, and point processes, Vol. 4 of Applied Probability. A Series of the Applied Probability Trust, Springer-Verlag, New York (1987). https://doi.org/10.1007/978-0-387-75953-1

Ressel, P.: Homogeneous distributions–and a spectral representation of classical mean values and stable tail dependence functions. J. Multivariate Anal. 117, 246–256 (2013). https://doi.org/10.1016/j.jmva.2013.02.013

Röttger, F., Engelke, S., Zwiernik, P.: Total positivity in multivariate extremes. Ann. Statist. 51(3), 962–1004 (2023). https://doi.org/10.1214/23-aos2272

Sabourin, A., Naveau, P.: Bayesian Dirichlet mixture model for multivariate extremes: a re-parametrization. Comput. Statist. Data Anal. 71, 542–567 (2014). https://doi.org/10.1016/j.csda.2013.04.021

Schlather, M., Tawn, J.: Inequalities for the extremal coefficients of multivariate extreme value distributions. Extremes 5(1), 87–102 (2002). https://doi.org/10.1023/A:1020938210765

Schneider, R., Weil, W.: Stochastic and integral geometry, Probability and its Applications (New York). Springer-Verlag, Berlin. (2008). https://doi.org/10.1007/978-3-540-78859-1

Shaked, M., Shanthikumar, J.G.: Stochastic Orders. Springer Series in Statistics, Springer, New York. (2007). https://doi.org/10.1007/978-0-387-34675-5

Slepian, D.: The one-sided barrier problem for Gaussian noise. Bell System Tech. J. 41, 463–501 (1962). https://doi.org/10.1002/j.1538-7305.1962.tb02419.x

Strokorb, K., Schlather, M.: An exceptional max-stable process fully parameterized by its extremal coefficients. Bernoulli 21(1), 276–302 (2015). https://doi.org/10.3150/13-BEJ567

Tong, Y.L.: Probability inequalities in multivariate distributions. Academic Press [Harcourt Brace Jovanovich, Publishers], New York-London-Toronto (1980)

Yuen, R., Stoev, S.: Upper bounds on value-at-risk for the maximum portfolio loss. Extremes 17(4), 585–614 (2014). https://doi.org/10.1007/s10687-014-0198-5

Yuen, R., Stoev, S., Cooley, D.: Distributionally robust inference for extreme Value-at-Risk. Insurance Math. Econom. 92, 70–89 (2020). https://doi.org/10.1016/j.insmatheco.2020.03.003

Acknowledgements

We would like to thank two anonymous referees who have positively challenged us in two directions: (i) not only considering the order according to lower orthants, but to study the PQD-order, and (ii) extending the bivariate to the multivariate Hüsler-Reiß model. Their encouragement to pursue these routes has helped us to significantly broaden the scope of this work. MC thankfully acknowledges funding from the Maths DTP 2020 – Engineering and Physical Sciences Research Council grant EP/V520159/1 – at the School of Mathematics of Cardiff University.

Author information

Contributions

These authors contributed equally to this work.

Ethics declarations

Ethics approval

Not Applicable.

Consent to participate

Not Applicable.

Consent for publication

Not Applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1 Proofs

1.1 Proofs concerning fundamental order relations

Proof of Theorem 4.1

In what follows, let \({\varvec{X}} \sim G\) and \(\widetilde{\varvec{X}} \sim \widetilde{G}\).

(a)

Because of (13), it suffices to compare \(G({\varvec{x}})\) and \(\widetilde{G}({\varvec{x}})\) for \({\varvec{x}} \in (0,\infty )^d\) only. The same is true for \(\ell\) and \(\widetilde{\ell }\) as they are continuous on \([0,\infty )^d\). At the same time the test sets for the relation \(\Lambda \le _{\textrm{lo}}\widetilde{\Lambda }\) in Definition 3.2 are precisely of the form \(\mathbb {R}^d \setminus L_{{\varvec{x}}}\), where \({\varvec{x}} \in (0,\infty )^d\). So the equivalence of (i), (ii), (iii) and (iv) follows directly from the relations

$$\begin{aligned} G({\varvec{x}}) =\mathbb {P}({\varvec{X}} \in L_{\varvec{x}}) =\exp (-\Lambda ([0,\infty ]^d \setminus L_{\varvec{x}})) =\exp (-\Lambda (\mathbb {R}^d \setminus L_{\varvec{x}})) \end{aligned}$$

with

$$\begin{aligned} \Lambda (\mathbb {R}^d \setminus L_{\varvec{x}}) = \ell (1/x_1,\dots ,1/x_d)= \mathbb {E}(\max (Z_1/x_1,\dots ,Z_d/x_d)), \end{aligned}$$

and the respective tilde-counterparts. Likewise, the equivalence of (iv) and (v) is immediate from (3) and (2).

(b)

We start by showing the equivalence between (ii) and (iii). The test sets for the relation \(\Lambda \le _{\textrm{uo}}\widetilde{\Lambda }\) in Definition 3.2 are precisely the upper orthants \(U_{{\varvec{x}}}\), where at least one component of \({\varvec{x}}\) is larger than zero. Let \({\varvec{a}} \in (0,\infty )^d\) and \(A \subset \{1,\dots ,d\}\), \(A \ne \emptyset\). Define \({\varvec{x}} \in \mathbb {R}^d\) by setting \(x_i=1/a_i\) if \(i \in A\) and \(x_i=-1\) else. Then \(U_{\varvec{x}}\) is an admissible test set and

$$\begin{aligned} \Lambda (U_{\varvec{x}}) = \Lambda \bigg (\Big \{{\varvec{y}} \in [0,\infty )^d\setminus \{{\varvec{0}}\} \,:\, \min _{i\in A}(a_iy_i)>1\Big \}\bigg ) =\mathbb {E}\Big (\min _{i \in A}(a_i Z_i)\Big ). \end{aligned} \tag{16}$$

Likewise, \(\widetilde{\Lambda }(U_{\varvec{x}})=\mathbb {E}(\min _{i \in A}(a_i \widetilde{Z}_i))\) and we may deduce the implication (ii)\(\Rightarrow\)(iii). Conversely, assume (iii) and note that the same argument implies \(\Lambda (U_{\varvec{x}})\le \widetilde{\Lambda }(U_{\varvec{x}})\) for any \({\varvec{x}}\) that has at least one positive component, whilst all other components of \({\varvec{x}}\) are negative. What remains to be seen is the same relation for upper orthants \(U_{\varvec{x}}\), for which at least one component of \({\varvec{x}}\) is positive, but where among the non-positive components, there may be zeroes. Let \({\varvec{x}} \in \mathbb {R}^d\) be such a vector. For \(n \in \mathbb {N}\) let \({\varvec{x}}_n \in \mathbb {R}^d\) be the vector obtained from \({\varvec{x}}\) by replacing its zero entries by \(1/n\). Then \(\Lambda (U_{{\varvec{x}}_n})\le \widetilde{\Lambda }(U_{{\varvec{x}}_n})\) for all \(n \in \mathbb {N}\) by the previous argument, whilst \(U_{{\varvec{x}}_n} \uparrow U_{\varvec{x}}\), such that \(\Lambda (U_{{\varvec{x}}_n}) \rightarrow \Lambda (U_{{\varvec{x}}})\) and \(\widetilde{\Lambda }(U_{{\varvec{x}}_n}) \rightarrow \widetilde{\Lambda }(U_{{\varvec{x}}})\) as \(n \rightarrow \infty\). This shows (iii)\(\Rightarrow\)(ii).

Next, we establish (i)\(\Rightarrow\)(iii). Assume (i). Since the order UO is closed under marginalisation, it suffices to consider \(A=\{1,\dots ,d\}\) in (iii), see also Lemma 2.2. Set \(x_i=1/a_i\), \(i=1,\dots ,d\), such that (16) holds (as well as the tilde-version) and note that the closure of \(U_{\varvec{x}}\) in \([0,\infty ]^d \setminus \{{\varvec{0}}\}\) is a continuity set for each of the \((-1)\)-homogeneous measures \(\Lambda\) and \(\widetilde{\Lambda }\). Hence, since each max-stable vector satisfies its own domain-of-attraction conditions (cf. e.g. Resnick (1987), Section 5.4.2), we have

$$\begin{aligned} \Lambda (U_{\varvec{x}})=\lim _{n \rightarrow \infty } n \mathbb {P}({\varvec{X}} \in n U_{\varvec{x}})=\lim _{n \rightarrow \infty } n \mathbb {P}({\varvec{X}} \in U_{n{\varvec{x}}}) \end{aligned}$$

and the analog for \(\widetilde{\Lambda }\) and \(\widetilde{\varvec{X}}\). The implication (i)\(\Rightarrow\)(iii) follows.

Lastly, let us establish (iii)\(\Rightarrow\)(i). Suppose (iii) holds. We abbreviate \(\chi ^{(a)}(A)=\mathbb {E}(\min _{i \in A}(a_i Z_i))\) and analogously \(\widetilde{\chi }^{(a)}(A)=\mathbb {E}(\min _{i \in A}(a_i \widetilde{Z}_i))\), such that (iii) translates into

$$\begin{aligned} \chi ^{(a)}(A) \le \widetilde{\chi }^{(a)}(A) \end{aligned}$$

for all \({\varvec{a}} \in (0,\infty )^d\) and \(A \subset \{1,\dots ,d\}\), \(A \ne \emptyset\). With \(\theta ^{(a)}(A)=\mathbb {E}(\max _{i \in A}(a_i Z_i))\) we find that

$$\begin{aligned} \chi ^{(a)}(A)=\sum _{I \subset A, \, I \ne \emptyset } (-1)^{|I|+1} \theta ^{(a)}(I) \qquad \text {and} \qquad \theta ^{(a)}(A)=\sum _{I \subset A, \, I \ne \emptyset } (-1)^{|I|+1} \chi ^{(a)}(I) \end{aligned}$$

(similarly to (9) and analogously for the tilde-version), where \(\theta ^{(a)}\) can be interpreted as a directional extremal coefficient function. It is easily seen that \(\theta ^{(a)}\) with \(\theta ^{(a)}(\emptyset )=0\) is union-completely alternating, cf. Lemma B.3.

Because of (12), in order to arrive at (i), it suffices to establish

$$\begin{aligned} \mathbb {P}\Big (\min _{i=1,\dots ,d}(a_i X_i)>1\Big ) \le \mathbb {P}\Big (\min _{i=1,\dots ,d}(a_i \widetilde{X}_i)>1\Big ) \end{aligned}$$

for all \({\varvec{a}} \in (0,\infty )^d\). In the notation of Lemma B.3 and with \(g(x)=1-\exp (-x)\), the left-hand side can be rewritten as

$$\begin{aligned} \mathbb {P}\Big (\min _{i=1,\dots ,d}(a_i X_i)>1\Big )&= 1-\sum _{I \subset \{1,\dots ,d\}, \, I \ne \emptyset } (-1)^{|I|+1} \mathbb {P}\Big (\max _{i \in I}(a_i X_i) \le 1\Big )\\&= - \sum _{I \subset \{1,\dots ,d\}} (-1)^{|I|+1} \exp \big (-\ell ({\varvec{a}}_I)\big )\\&= \sum _{I \subset \{1,\dots ,d\}} (-1)^{|I|+1} - \sum _{I \subset \{1,\dots ,d\}} (-1)^{|I|+1} \exp \big (-\theta ^{(a)}(I)\big )\\&= \sum _{I \subset \{1,\dots ,d\}} (-1)^{|I|+1} g\big (\theta ^{(a)}(I)\big ) \end{aligned}$$

(and analogously for the tilde-version). The assertion then follows directly from Proposition B.9, since \(g\) is a Bernstein function.

(c)

The statement follows from the relation

$$\begin{aligned} \mathbb {E}(\min (a_1Z_1,a_2Z_2)) + \mathbb {E}(\max (a_1Z_1,a_2Z_2)) = \mathbb {E}(\min (a_1\widetilde{Z}_1,a_2 \widetilde{Z}_2)) + \mathbb {E}(\max (a_1 \widetilde{Z}_1,a_2 \widetilde{Z}_2)), \end{aligned}$$

as both sides are equal to \(a_1+a_2\). \(\square\)
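The final identity in part (b), which expresses \(\mathbb {P}(\min _i (a_i X_i)>1)\) as an alternating sum of \(g(\theta ^{(a)}(I))\), can be checked numerically in the two boundary cases. The following is a minimal Python sketch and not part of the original proof. It assumes the standard closed forms \(\theta ^{(a)}(I)=\sum _{i \in I} a_i\) for the independent model and \(\theta ^{(a)}(I)=\max _{i \in I} a_i\) for the fully dependent model, both with unit Fréchet margins; the function names and the weight vector `a` are hypothetical.

```python
from itertools import combinations
from math import exp, prod


def nonempty_subsets(d):
    """Yield all non-empty index subsets of {0, ..., d-1} as tuples."""
    for k in range(1, d + 1):
        yield from combinations(range(d), k)


def upper_orthant_prob(theta, d):
    """Alternating sum of g(theta(I)) with g(x) = 1 - exp(-x).

    The empty set contributes g(0) = 0, so only non-empty I are summed.
    """
    return sum(
        (-1) ** (len(I) + 1) * (1.0 - exp(-theta(I)))
        for I in nonempty_subsets(d)
    )


# Hypothetical weights a_1, ..., a_d (any positive numbers would do).
a = [0.5, 1.2, 2.0]
d = len(a)

# Assumed directional extremal coefficient functions of the two models.
theta_indep = lambda I: sum(a[i] for i in I)  # independent model
theta_dep = lambda I: max(a[i] for i in I)    # fully dependent model

p_indep = upper_orthant_prob(theta_indep, d)
p_dep = upper_orthant_prob(theta_dep, d)

# Direct computation of P(min_i a_i X_i > 1) with unit Frechet margins,
# i.e. P(X_i <= x) = exp(-1/x) for x > 0.
closed_indep = prod(1.0 - exp(-ai) for ai in a)
closed_dep = 1.0 - exp(-min(a))

print(p_indep, closed_indep)
print(p_dep, closed_dep)
```

In both cases the alternating sum should agree with the direct computation up to floating-point error, and the value for the independent model should not exceed that of the fully dependent model.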

Proof of Corollary 4.3

In view of (14) and Theorem 4.1 b), it suffices to investigate the upper and lower bounds of \(\mathbb {E}(\min _{i \in A}(a_i Z_i))\) for \({\varvec{a}} \in (0,\infty )^d\) and \(A \subset \{1,\dots ,d\}\), \(A \ne \emptyset\), where \({\varvec{Z}}\) is a generator for G. We have

and the upper and lower bounds are attained by generators of the fully dependent model (\({\varvec{Z}}\) being almost surely \({\varvec{e}}=(1,1,\dots ,1)^\top\)) and the independent model (\({\varvec{Z}}\) being uniformly distributed among the set \(\{d{\varvec{e}_1},d{\varvec{e}_2},\dots ,d{\varvec{e}}_d\}\)), respectively, which implies the assertion. \(\square\)
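For illustration, the quantity \(\mathbb {E}(\min _{i \in A}(a_i Z_i))\) can be evaluated exactly for the two extreme generators named above. The sketch below is a hypothetical helper written for this purpose, not code from the article; the weights `a` and the zero-based index sets are arbitrary choices.

```python
def expected_min_fully_dependent(a, A):
    """E min_{i in A} a_i Z_i when Z = (1, ..., 1) almost surely."""
    return min(a[i] for i in A)


def expected_min_independent(a, A):
    """E min_{i in A} a_i Z_i when Z is uniform on {d e_1, ..., d e_d}."""
    d = len(a)
    total = 0.0
    for j in range(d):
        # Atom d * e_j, which carries probability 1/d.
        z = [float(d) if i == j else 0.0 for i in range(d)]
        total += min(a[i] * z[i] for i in A) / d
    return total


a = [0.5, 1.2, 2.0]  # hypothetical positive weights
for A in [(0,), (0, 1), (1, 2), (0, 1, 2)]:
    lower = expected_min_independent(a, A)
    upper = expected_min_fully_dependent(a, A)
    print(A, lower, upper)
```

For a singleton \(A=\{i\}\) both generators return \(a_i\), as required by the marginal standardisation \(\mathbb {E}(Z_i)=1\). As soon as \(A\) contains two or more indices, the independent generator gives zero, because each atom has only one non-zero component.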

Proof of Corollary 4.4

The lower orthant order \({\varvec{X}}^* \, \ge _{\textrm{lo}}\, {\varvec{X}}\) is known from Theorem 2.9. Let \({\varvec{Z}}\) and \({\varvec{Z}}^*\) be generators of the respective models. Since they share identical extremal coefficients, they also share identical tail dependence coefficients \(\chi (A)=\mathbb {E}(\min _{i \in A} Z_i)=\mathbb {E}(\min _{i \in A} Z^*_i)\), \(A \subset \{1,\dots ,d\}\), \(A \ne \emptyset\), which can be retrieved from \(\theta\) via (9). In general, we have for \(A \subset \{1,\dots ,d\}\), \(A \ne \emptyset\), \({\varvec{a}} \in (0,\infty )^d\)

The Choquet model attains the lower bound, since with (7) and (11)

So by Theorem 4.1 we also have \({\varvec{X}}^* \, \le _{\textrm{uo}}\, {\varvec{X}}\), hence the assertion. \(\square\)

Proof of Lemma 4.12

For the LO part, \(\theta \le \widetilde{\theta }\) implies the inclusion of the associated max-zonoids \(K^* \subset \widetilde{K}^*\) or Choquet integrals \(\ell ^* \le \widetilde{\ell }^*\) (cf. Theorem 2.9), so that the claim follows directly from Theorem 4.1 part a). For the UO part, note from the Proof of Corollary 4.4 that for \(A \subset \{1,\dots ,d\}\), \(A \ne \emptyset\), \({\varvec{a}} \in (0,\infty )^d\)

if \({\varvec{Z}}\) and \(\widetilde{\varvec{Z}}\) are generators of the respective models, hence the assertion with Theorem 4.1 part b). \(\square\)

1.2 Proofs concerning the Dirichlet and Hüsler-Reiß models

Proof of Theorem 2.3

The equivalence of (ii) and (iii) has been verified in Coles and Tawn (1991). The equivalence of (i) and (ii) follows similarly to Aulbach et al. (2015) (3) from the fact that \({\varvec{D}}\) is distributed like \({\varvec{\Gamma }}/\Vert {\varvec{\Gamma }}\Vert _1\) and the independence of \({\varvec{\Gamma }}/\Vert {\varvec{\Gamma }} \Vert _1\) and \(\Vert {\varvec{\Gamma }} \Vert _1\). More precisely, let \(\ell _1\) and \(\ell _2\) be the stable tail dependence functions that arise from the generators (i) and (ii), respectively. Then \(\ell _1\) and \(\ell _2\) can be expressed as follows for any \({\varvec{x}} \in [0,\infty )^d\)

It suffices to note \(\mathbb {E}\Vert {\varvec{\Gamma }} \Vert _1 = \Vert {\varvec{\alpha }}\Vert _1\) in order to conclude \(\ell _1=\ell _2\). \(\square\)

In order to prove Theorem 4.5 we will use a simple inequality that follows from the theory of majorisation (Marshall et al. 2011).

Proposition A.1

(Marshall and Proschan (1965) Corollary 3, Marshall et al. (2011) Proposition B.2.b.) Let \(g:\mathbb {R}\rightarrow \mathbb {R}\) be continuous and convex and let \(X_1,X_2,\dots\) be a sequence of independent and identically distributed random variables, then

$$\begin{aligned} \mathbb {E}g\Big (\frac{1}{n}\sum _{i=1}^n X_i\Big ) \end{aligned}$$

is nonincreasing in \(n=1,2,\dots\).

Corollary A.2

Let \(g:\mathbb {R}\rightarrow \mathbb {R}\) be continuous and convex, and let \(Z^{(\alpha )} \sim \Gamma (\alpha )\) follow a univariate Gamma distribution with shape parameter \(\alpha >0\), then

$$\begin{aligned} \mathbb {E}g\big ({Z^{(\alpha )}}/{\alpha }\big ) \end{aligned}$$

is nonincreasing in \(\alpha \in (0,\infty )\).

Proof

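We first consider the case \(\alpha =(k/n) \cdot \beta\) for some natural numbers \(1\le k < n\). Let \(\Gamma _1,\Gamma _2,\dots\) be independent and identically distributed random variables following a \(\Gamma (\beta /n)\) distribution. Then Proposition A.1, applied to the rescaled variables \(n\Gamma _i/\beta\), gives

$$\begin{aligned} \mathbb {E}g\Big (\frac{1}{\alpha }\sum _{i=1}^k \Gamma _i\Big ) \ge \mathbb {E}g\Big (\frac{1}{\beta }\sum _{i=1}^n \Gamma _i\Big ). \end{aligned}$$

By the convolution stability of the Gamma distribution

$$\begin{aligned} \sum _{i=1}^k \Gamma _i \sim \Gamma (\alpha ) \qquad \text {and} \qquad \sum _{i=1}^n \Gamma _i \sim \Gamma (\beta ). \end{aligned}$$

Hence, the assertion is shown for \(\alpha\) and \(\beta\) that differ by a rational multiplier.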

If we only know \(\alpha < \beta\), consider a decreasing sequence \(\beta _n \downarrow \beta\), such that \(\alpha\) and \(\beta _n\) differ by a rational multiplier. This gives \(\mathbb {E}g({Z^{(\alpha )}}/{\alpha }) \ge \limsup _{n \rightarrow \infty } \mathbb {E}g({Z^{(\beta _n)}}/{\beta _n})\) by the above argument. On the other hand, Fatou’s lemma gives \(\mathbb {E}g({Z^{(\beta )}}/{\beta }) \le \liminf _{n \rightarrow \infty } \mathbb {E}g({Z^{(\beta _n)}}/{\beta _n})\). This finishes the proof. \(\square\)
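As a simple sanity check of Corollary A.2 (an illustration that is not part of the original argument), take the convex function \(g(x)=x^2\) and assume that \(Z^{(\alpha )}\) has shape \(\alpha\) and unit scale, so that \(\mathbb {E}Z^{(\alpha )}=\textrm{Var}(Z^{(\alpha )})=\alpha\). Then

$$\begin{aligned} \mathbb {E}g\big ({Z^{(\alpha )}}/{\alpha }\big ) = \frac{\textrm{Var}(Z^{(\alpha )}) + (\mathbb {E}Z^{(\alpha )})^2}{\alpha ^2} = \frac{\alpha +\alpha ^2}{\alpha ^2} = 1+\frac{1}{\alpha }, \end{aligned}$$

which is indeed nonincreasing in \(\alpha \in (0,\infty )\) and tends to \(g(1)=1\) as \(\alpha \rightarrow \infty\), in line with \(Z^{(\alpha )}/\alpha\) concentrating around its mean.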

Proof of Theorem 4.5

If \({\varvec{\alpha }}={\varvec{\beta }}\), the statement is clear. Else, because the parameter space of the Dirichlet model is \((0,\infty )^d\), we can find a chain of parameter vectors \({\varvec{\alpha }}={\varvec{\alpha }}^{(0)}\le {\varvec{\alpha }}^{(1)}\le \dots \le {\varvec{\alpha }}^{(m)}={\varvec{\beta }}\), such that for each \(i=0,\dots ,m-1\), the vectors \({\varvec{\alpha }}^{(i)}\) and \({\varvec{\alpha }}^{(i+1)}\) differ in only one component. Hence it suffices to consider the case where \({\varvec{\alpha }}\) and \({\varvec{\beta }}\) differ in only one component. Without loss of generality, let this be the first component.
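One way to build such a chain is to raise the components of \({\varvec{\alpha }}\) to those of \({\varvec{\beta }}\) one coordinate at a time. The following Python sketch illustrates this construction under the assumption \({\varvec{\alpha }}\le {\varvec{\beta }}\) componentwise; the function name `coordinate_chain` is hypothetical and the article does not prescribe a particular chain.

```python
def coordinate_chain(alpha, beta):
    """Return a chain alpha = a^(0) <= a^(1) <= ... <= a^(m) = beta in which
    consecutive vectors differ in exactly one component."""
    if len(alpha) != len(beta):
        raise ValueError("alpha and beta must have the same dimension")
    if any(x <= 0 for x in alpha):
        raise ValueError("parameters must lie in (0, infinity)^d")
    if any(x > y for x, y in zip(alpha, beta)):
        raise ValueError("alpha <= beta must hold componentwise")

    chain = [tuple(alpha)]
    current = list(alpha)
    for i, b in enumerate(beta):
        if current[i] != b:
            # Raise the i-th component to its target value; components that
            # already agree are skipped so that no vector is repeated.
            current[i] = b
            chain.append(tuple(current))
    return chain


print(coordinate_chain((1.0, 2.0, 3.0), (1.5, 2.0, 4.0)))
```

Every intermediate vector stays in \((0,\infty )^d\) because each of its components equals the corresponding component of either \({\varvec{\alpha }}\) or \({\varvec{\beta }}\).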

Let \({\varvec{Z}}\) be a Gamma generator for \(\textrm{MaxDir}({\varvec{\alpha }})\) and \(\widetilde{\varvec{Z}}\) be a Gamma generator for \(\textrm{MaxDir}({\varvec{\beta }})\) in the sense of Theorem/Definition 2.3. Then we may assume that \(Z_i=\widetilde{Z}_i\) for \(i=2,\dots ,d\), whereas \(\alpha _1Z_1\sim \Gamma (\alpha _1)\) and \(\beta _1 \widetilde{Z}_1 \sim \Gamma (\beta _1)\) are independent of \((Z_2,\dots ,Z_d)^\top\), and \(\alpha _1<\beta _1\) by assumption. We will need to show (cf. Theorem 4.1) that for fixed \({\varvec{a}} \in (0,\infty )^d\) and \(A \subset \{1,\dots ,d\}\), \(A \ne \emptyset\)

Due to the setting above, it suffices to consider only subsets A with \(1 \in A\), and due to the marginal standardisation \(\mathbb {E}(Z_1)=1\), it suffices to restrict our attention to \(A \setminus \{1\} \ne \emptyset\). Setting \(V_A= \min _{i \in A \setminus \{1\}}{(a_iZ_i)}\) and \(W= \max _{i=2,\dots ,d}{(a_iZ_i)}\) this means the assertion will follow from