Abstract

Context

Tangled commits are changes to software that address multiple concerns at once. For researchers interested in bugs, tangled commits mean that they actually study not only bugs, but also other concerns irrelevant for the study of bugs.

Objective

We want to improve our understanding of the prevalence of tangling and the types of changes that are tangled within bug fixing commits.

Methods

We use a crowd sourcing approach for manual labeling to validate which changes contribute to bug fixes for each line in bug fixing commits. Each line is labeled by four participants. If at least three participants agree on the same label, we have consensus.

Results

We estimate that between 17% and 32% of all changes in bug fixing commits modify the source code to fix the underlying problem. However, when we only consider changes to the production code files, this ratio increases to 66% to 87%. We find that about 11% of lines are hard to label, leading to active disagreements between participants. Due to confirmed tangling and the uncertainty in our data, we estimate that 3% to 47% of data is noisy without manual untangling, depending on the use case.

Conclusion

Tangled commits have a high prevalence in bug fixes and can lead to a large amount of noise in the data. Prior research indicates that this noise may alter results. As researchers, we should be skeptics and assume that unvalidated data is likely very noisy, until proven otherwise.

1 Introduction

Detailed and accurate information about bug fixes is important for many different domains of software engineering research, e.g., program repair (Gazzola et al. 2019), bug localization (Mills et al. 2018), and defect prediction (Hosseini et al. 2019). Such research suffers from mislabeled data, e.g., because commits are mistakenly identified as bug fixes (Herzig et al. 2013) or because not all changes within a commit are bug fixes (Herzig and Zeller 2013). A common approach to obtain information about bug fixes is to mine software repositories for bug fixing commits and assume that all changes in the bug fixing commit are part of the bug fix, e.g., with the SZZ algorithm (Śliwerski et al. 2005). Unfortunately, prior research showed that the reality is more complex. The term tangled commit was established by Herzig and Zeller (2013) to characterize the problem that commits may address multiple issues, together with the concept of untangling, i.e., the subsequent separation of these concerns. Multiple prior studies established through manual validation that tangled commits naturally occur in code bases, for example, Herzig and Zeller (2013), Nguyen et al. (2013), Kirinuki et al. (2014), Kochhar et al. (2014), Kirinuki et al. (2016), Wang et al. (2019), and Mills et al. (2020). Moreover, Nguyen et al. (2013), Kochhar et al. (2014), Herzig et al. (2016), and Mills et al. (2020) have independently shown that tangling can have a negative effect on experiments, e.g., due to noise in the training data that reduces model performance as well as due to noise in the test data which significantly affects performance estimates.

However, we identified four limitations regarding the knowledge on tangling within the current literature. The first and most common limitation of prior work is that it either only considered a sample of commits, or that the authors failed to determine whether the commits were tangled or not for a large proportion of the data. Second, the literature considers this problem from different perspectives: most literature considers tangling at the commit level, whereas others break this down to the file level within commits. Some prior studies focus on all commits, while others only consider bug fixing commits. Due to these differences, in combination with limited sample sizes, the prior work is unclear regarding the prevalence of tangling. For example, Kochhar et al. (2014) and Mills et al. (2020) found a high prevalence of tangling within file changes, but their estimates for the prevalence of tangling varied substantially with 28% and 50% of file changes affected, respectively. Furthermore, how the lower boundary of 15% tangled commits that Herzig and Zeller (2013) estimated relates to the tangling of file changes is also unclear. Third, there is little work on what kind of changes are tangled. Kirinuki et al. (2014) studied the type of code changes that are often tangled with other changes and found that these are mostly logging, checking of pre-conditions, and refactorings. Nguyen et al. (2013) and Mills et al. (2020) provide estimations on the types of tangled commits on a larger scale, but their results contradict each other. For example, Mills et al. (2020) estimate six times more refactorings in tangled commits than Nguyen et al. (2013). Fourth, these studies are still relatively coarse-grained and report results at the commit and file level.

Despite the well-established research on tangled commits and their impact on research results, their prevalence and content are not yet well understood, neither for commits in general, nor with respect to bug fixing commits. Due to this lack of understanding, we cannot estimate how severe the threat to the validity of experiments due to tangling is, and how many tools developed based on tangled commits may be negatively affected.

Our lack of knowledge about tangled commits notwithstanding, researchers need data about bug fixing commits. Currently, researchers rely on three different approaches to mitigate this problem: (i) seeded bugs; (ii) no or heuristic untangling; and (iii) manual untangling. First, we have data with seeded bugs, e.g., the SIR data (Do et al. 2005), the Siemens data (Hutchins et al. 1994), and through mutation testing (Jia and Harman 2011). While there is no risk of noise in such data, it is questionable whether the data is representative of real bugs. Moreover, applications like bug localization or defect prediction cannot be evaluated based on seeded bugs.

The second approach is to assume that commits are either not tangled or that heuristics are able to filter tangling. Examples of such data are ManyBugs (Le Goues et al. 2015), Bugs.jar (Saha et al. 2018), as well as all defect prediction data sets (Herbold et al. 2019). The advantage of such data sets is that they are relatively easy to collect. The drawback is that the impact of noise due to tangled commits is unclear, even though modern variants of the SZZ algorithm can automatically filter some tangled changes like comments, whitespaces (Kim et al. 2006) or even some refactorings (Neto et al. 2018).

Third, there are also some data sets that were manually untangled. While the creation of such data is very time consuming, such data sets are the gold standard. They represent real-world defects and do not contain noise due to tangled commits. To the best of our knowledge, there are only very few such data sets, i.e., Defects4J (Just et al. 2014) with Java bugs, BugsJS (Gyimesi et al. 2019) with JavaScript bugs, and the above-mentioned data by Mills et al. (2020). Due to the effort required to manually validate changes, the gold standard data sets contain only samples of bugs from each studied project, but no data covers all reported bugs within a project, which limits the potential use cases.

This article fills this gap in our current knowledge about tangling in bug fixing commits. We provide a new large-scale data set that contains validated untangled bug fixes for the complete development history of 23 Java projects and partial data for five further projects. In comparison to prior work, we label all data on a line-level granularity. We have (i) labels for each changed line in a bug fixing commit; (ii) accurate data about which lines contribute to the semantic change of the bug fix; and (iii) the kind of change contained in the other lines, e.g., whether it is a change to tests, a refactoring, or a documentation change. This allows us not only to untangle the bug fix from all other changes, but also gives us valuable insights into the content of bug fixing commits in general and the prevalence of tangling within such commits.

Through our work, we also gain a better understanding of the limitations of manual validation for the untangling of commits. Multiple prior studies indicate that there is a high degree of uncertainty when determining whether a change is tangled or not (Herzig and Zeller 2013; Kirinuki et al. 2014, 2016). Therefore, we present each commit to four different participants who labeled the data independently from each other. We use the agreement among the participants to gain insights into the uncertainty involved in the labeling, while also finding lines where no consensus was achieved, i.e., that are hard for researchers to classify.

Due to the massive amount of manual effort required for this study, we employed the research turk approach (Herbold 2020) to recruit participants for the labeling. The research turk is a means to motivate a large number of researchers to contribute to a common goal, by clearly specifying the complete study together with an open invitation and clear criteria for participation beforehand (Herbold et al. 2020). In comparison to surveys or other studies where participants are recruited, participants in the research turk actively contribute to the research project, in our case by labeling data and suggesting improvements of the manuscript. As a result, 45 of the participants we recruited became co-authors of this article. Since this is, to the best of our knowledge, a new way to conduct research projects about software engineering, we also study the effectiveness of recruiting and motivating participants.

Overall, the contributions of this article are the following.

- The Line-Labelled Tangled Commits for Java (LLTC4J) corpus of manually validated bug fixing commits covering 2,328 bugs from 28 projects that were fixed in 3,498 commits that modified 289,904 lines. Each changed line is annotated with the type of change, i.e., whether the change modifies the source code to correct the problem causing the bug, a whitespace or documentation change, a refactoring, a change to a test, a different type of improvement unrelated to the bug fix, or whether the participants could not determine a consensus label.

- Empirical insights into the different kinds of changes within bug fixing commits which indicate that, in practice, most changes in bug fixing commits are not about the actual bug fix, but rather related changes to non-production artifacts such as tests or documentation.

- We introduce the concept of problematic tangling for different uses of bug data to understand the noise caused by tangled commits for different research applications.

- We find that researchers tend to unintentionally mislabel lines in about 7.9% of the cases. Moreover, we found that identifying refactorings and other unrelated changes seems to be challenging, which is shown through 14.3% of lines without agreement in production code files, most of which are due to a disagreement whether a change is part of the bug fix or unrelated.

- This is the first use of the research turk method and we showed that this is an effective research method for large-scale studies that could not be accomplished otherwise.

The remainder of this article is structured as follows. We discuss the related work in Section 2. We proceed with the description of the research protocol in Section 3. We present and discuss the results for our research questions in Section 4 and Section 5. We report the threats to the validity of our work in Section 6. Finally, we conclude in Section 7.

2 Related Work

We focus the discussion of the related work on the manual untangling of commits. Other aspects, such as automated untangling algorithms (e.g., Kreutzer et al. 2016; Pârtachi et al. 2020), the separation of concerns into multiple commits (e.g., Arima et al. 2018; Yamashita et al. 2020), the tangling of features with each other (Strüder et al. 2020), the identification of bug fixing or inducing commits (e.g., Rodríguez-Pérez et al. 2020), or the characterization of commits in general (e.g., Hindle et al. 2008), are out of scope.

2.1 Magnitude of Tangling

The tangling of commits is an important issue, especially for researchers working with software repository data, that was first characterized by Kawrykow and Robillard (2011). They analyzed how often non-essential commits occur in commit histories, i.e., commits that do not modify the logic of the source code and found that up to 15.5% of all commits are non-essential. Due to the focus on non-essential commits, the work by Kawrykow and Robillard (2011) only provides a limited picture on tangled commits in general, as tangling also occurs if logical commits for multiple concerns are mixed within a single commit.

The term tangled change (commit) was first used in the context of repository mining by Herzig and Zeller (2013) (extended in Herzig et al. (2016)). The term tangling itself was already coined earlier in the context of the separation of concerns (e.g., Kiczales et al., 1997). Herzig and Zeller (2013) studied the commits of five Java projects over a period of at least 50 months and tried to manually classify which of the commits were tangled, i.e., addressed multiple concerns. Unfortunately, they could not determine if the commits are tangled for 2,498 of the 3,047 bug fixing commits in their study. Of the bug fixing commits they could classify, they found that 298 were tangled and 251 were not tangled. Because they could not label large amounts of the commits, they estimate that at least 15% of the bug fixing commits are tangled.

Nguyen et al. (2013) also studied tangling in bug fixing commits, but used the term mixed-purpose fixing commits. They studied 1296 bug fixing commits from eight projects and identified 297 tangled commits, i.e., 22% of commits that are tangled. A strength of the study is that the authors did not only label the tangled commits, but also identified which file changes in the commit were tangled. Moreover, Nguyen et al. (2013) also studied which types of changes are tangled and found that 4.9% were due to unrelated improvements, 1.1% due to refactorings, 1.8% for formatting issues, and 3.5% for documentation changes unrelated to the bug fix. We note that it is unclear how many of the commits are affected by multiple types of tangling and, moreover, that the types of tangling were analyzed for only about half of the commits. Overall, this is among the most comprehensive studies on this topic in the literature. However, there are two important issues that are not covered by Nguyen et al. (2013). First, they only explored if commits and files are tangled, but not how much of a commit or file is tangled. Second, they do not differentiate between problematic tangling and benign tangling (see Section 4.2.2), and, most importantly, between tangling in production files and tangling in other files. Thus, we cannot estimate how strongly tangling affects different research purposes.

Kochhar et al. (2014) investigated the tangling of a random sample of the bug fixing commits for 100 bugs. Since they were interested in the implications of tangling on bug localization, they focused on finding out how many of the changed files actually contributed to the correction of the bug, and how many file changes are tangled, i.e., did not contribute to the bug fix. They found that 358 out of 498 file changes were corrective, the remaining 140 changes, i.e., 28% of all file changes in bug fixing commits were tangled.

Kirinuki et al. (2014) and Kirinuki et al. (2016) are a pair of studies with a similar approach: they used an automated heuristic to identify commits that may be tangled and then manually validated if the identified commits are really tangled. Kirinuki et al. (2014) identified 63 out of 2,000 commits as possibly tangled and found through manual validation that 27 of these commits were tangled and 23 were not tangled. For the remaining 13 commits, they could not decide if the commits are tangled. Kirinuki et al. (2016) identified 39 out of 1,000 commits as potentially tangled and found through manual validation that 21 of these commits were tangled, while 7 were not tangled. For the remaining 11 commits, they could not decide. A notable aspect of the work by Kirinuki et al. (2014) is that they also analyzed what kind of changes were tangled. They found that tangled changes were mostly due to logging, condition checking, or refactorings. We further note that these studies should not be seen as an indication of low prevalence of tangled commits, just because only 48 out of 3,000 commits were found to be tangled, since only 102 commits were manually validated. These commits are not from a representative random sample, but rather from a sample that was selected such that the commits should be tangled according to a heuristic. Since we have no knowledge about how good the heuristic is at identifying tangled commits, it is unclear how many of the 2,898 commits that were not manually validated are also tangled.

Tao and Kim (2015) investigated the impact of tangled commits on code review. They manually investigated 453 commits and found that 78 of these commits were tangled in order to determine ground truth data for the evaluation of their code review tool. From the description within the study, it is unclear if the sample of commits is randomly selected, if these are all commits in the four target projects within the study time frame that changed multiple lines, or if a different sampling strategy was employed. Therefore, we cannot conclude how the prevalence of tangling in about 17% of the commits generalizes beyond the sample.

Wang et al. (2019) also manually untangled commits. However, they had the goal to create a data set for the evaluation of an automated algorithm for the untangling of commits. They achieved this by identifying 50 commits that they were sure were tangled commits, e.g., because they referenced multiple issues in the commit message. They hired eight graduate students to perform the untangling in pairs. Thus, they employed an approach for the untangling that is also based on the crowd sourcing of work, but within a much more closely controlled setting and with only two participants per commit instead of four participants. However, because Wang et al. (2019) only studied tangled commits, no conclusions regarding the prevalence of the tangling can be drawn based on their results.

Mills et al. (2020) extends Mills et al. (2018) and contains the most comprehensive analysis of tangled commits to date. They manually untangled the changes for 803 bugs from fifteen different Java projects. They found that only 1,154 of the 2,311 changes of files within bug fixing commits contributed to bug fixes, i.e., a prevalence of 50% of tangling. Moreover, they provide insights into what kinds of changes are tangled: 31% of tangled changes are due to tests, 9% due to refactorings, and 8% due to documentation. They also report that 47% of the tangled changes are due to adding source code. Unfortunately, Mills et al. (2020) flagged all additions of code as tangled changes. While removing added lines makes sense for the use case of bug localization, this also means that additions that are actually the correction of a bug would be wrongly considered to be tangled changes. Therefore, the actual estimation of prevalence of tangled changes is between 32% and 50%, depending on the number of pure additions that are actually tangled. We note that the findings by Mills et al. (2020) are not in line with prior work, i.e., the ratio of tangled file changes is larger than the estimation by Kochhar et al. (2014) and the percentages for types of changes are different from the estimations by Nguyen et al. (2013).

Dias et al. (2015) used a different approach to get insights into the tangling of commits: they integrated an interface for the untangling of commits directly within an IDE and asked the developers to untangle commits when committing their local changes to the version control system. They approached the problem of tangled commits by grouping related changes into clusters. Unfortunately, the focus of the study is on the developer acceptance, as well as on the changes that developers made to the automatically presented clusters. It is unclear if the clusters are fully untangled, and also if all related changes are within the same cluster. Consequently, we cannot reliably estimate the prevalence or contents of tangled commits based on their work.

2.2 Data Sets

Finally, there are data sets of bugs where the data was untangled manually, but where the focus was only on getting the untangled bug fixing commits, not on the analysis of the tangling. Just et al. (2014) did this for the Defects4J data and Gyimesi et al. (2019) for the BugsJS data. While these data sets do not contain any noise, they have several limitations that we overcome in our work. First, we do not restrict the bug fixes but allow all bugs from a project. Defects4J and BugsJS both only allow bugs that are fixed in a single commit and also require that a test that fails without the bug fix was added as part of the bug fix. While bugs that fulfill these criteria were manually untangled for Defects4J, BugsJS has additional limitations. BugsJS requires that bug fixes touch at most three files, modify at most 50 lines of code, and do not contain any refactorings or other improvements unrelated to the bug fix. Thus, BugsJS is rather a sample of bugs that are fixed in untangled commits than a sample of bugs that was manually untangled. While these data sets are gold standard untangled data sets, they are not suitable to study tangling. Moreover, since both data sets only contain samples, they are not suitable for research that requires all bugs of a specific release or within a certain time frame of a project. Therefore, our data is suitable for more kinds of research than Defects4J and BugsJS, and because our focus is not solely on the creation of the data set, but also on the understanding of tangling within bug fixing commits, we also provide a major contribution to the knowledge about tangled commits.

2.3 Research Gap

Overall, there is a large body of work on tangling, but the studies are limited by their sampling strategies, by the inability to label all data, or because their focus was on different aspects. Thus, we cannot form conclusive estimates, neither regarding the types of tangled changes, nor regarding the general prevalence of tangling within bug fixing commits.

3 Research Protocol

Within this section, we discuss the research protocol, i.e., the research strategy we pre-registered (Herbold et al. 2020) to study our research questions and hypotheses. The description and section structure of our research protocol is closely aligned with the pre-registration, but contains small deviations, e.g., regarding the sampling strategy. All deviations are described as part of this section and summarized in Section 3.8.

3.1 Terminology

We use the term “the principal investigators” to refer to the authors of the registered report, “the manuscript” to refer to this article that resulted from the study, and “the participants” to refer to researchers and practitioners who collaborated on this project, many of whom are now co-authors of this article.

Moreover, we use the term “commit” to refer to observable changes within the version control system, which is in line with the terminology proposed by Rodríguez-Pérez et al. (2020). We use the term “change” to refer to the actual modifications within commits, i.e., what happens in each line that is modified, added, or deleted as part of a commit.

3.2 Research Questions and Hypotheses

Our research is driven by two research questions for which we derived three hypotheses. The first research question is the following.

RQ1: What percentage of changed lines in bug fixing commits contributes to the bug fix and can we identify these changes?

We want to get a detailed understanding of both the prevalence of tangling, as well as what kind of changes are tangled within bug fixing commits. When we speak of contributing to the bug fix, we mean a direct contribution to the correction of the logical error. We derived two hypotheses related to this research question.

- H1: Fewer than 40% of changed lines in bug fixing commits contribute to the bug fix.

- H2: A label is a consensus label when at least three participants agree on it. Participants fail to achieve a consensus label on at least 10.5% of lines.

We derived hypothesis H1 from the work by Mills et al. (2018), who found that 496 out of 1344 changes to files in bug fixing commits contributed to bug fixes. We derived our expectation from Mills et al. (2018) instead of Herzig et al. (2013) due to the large degree of uncertainty due to unlabeled data in the work by Herzig et al. (2013). We derived H2 based on the assumption that there is a 10% chance that participants misclassify a line, even if they have the required knowledge for correct classification. We are not aware of any evidence regarding the probability of random mistakes in similar tasks and, therefore, used our intuition to estimate this number. Assuming a binomial distribution B(k|p, n) with the probability of random mislabels p = 0.1 and n = 4 participants that label each commit, we do not get a consensus for k ≥ 2 participants randomly mislabeling the line, i.e.,

$$\sum_{k=2}^{4} B(k \mid p = 0.1, n = 4) = \sum_{k=2}^{4} \binom{4}{k}\, 0.1^{k}\, 0.9^{4-k} = 0.0523.$$

Thus, if we observe 10.5% of lines without consensus, this would be twice as many as expected given the assumption of 10% random errors, indicating that lack of consensus is not only due to random errors. We augment the analysis of this hypothesis with a survey among participants, asking them how often they were unsure about the labels.
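The expected share of lines without consensus under purely random errors can be checked numerically. The following is a minimal sketch using only the values stated above (p = 0.1, n = 4, no consensus for k ≥ 2 random mislabels); the function names are illustrative and not part of the study's tooling.

```python
from math import comb


def binomial_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k mislabels among n independent participants."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def prob_no_consensus(n: int = 4, p: float = 0.1, min_mislabels: int = 2) -> float:
    """Probability that at least `min_mislabels` of n participants mislabel a line."""
    return sum(binomial_pmf(k, n, p) for k in range(min_mislabels, n + 1))


expected = prob_no_consensus()
print(f"expected share without consensus: {expected:.4f}")      # 0.0523
print(f"twice the expected share:         {2 * expected:.3f}")  # 0.105
```

Doubling the expected share of about 5.2% gives the 10.5% threshold used in H2.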

The second research question regards our crowd working approach to organize the manual labor required for our study.

RQ2: Can gamification motivate researchers to contribute to collaborative research projects?

- H3: The leaderboard motivates researchers to label more than the minimally required 200 commits.

We derived H3 from prior evidence that gamification (Werbach and Hunter 2012) is an efficient method for the motivation of computer scientists, e.g., as demonstrated on Stack Overflow (Grant and Betts 2013). Participants can view a nightly updated leaderboard, both to check their progress, as well as where they would currently be ranked in the author list. We believe that this has a positive effect on the amount of participation, which we observe through larger numbers of commits labeled than minimally required for co-authorship. We augment the analysis of this hypothesis with a survey among participants, asking them if they were motivated by the leaderboard and the prospect of being listed earlier in the author list.

3.3 Materials

This study covers bug fixing commits that we re-use from prior work (see Section 3.5.1). We use SmartSHARK to process all data (Trautsch et al. 2018). We extended SmartSHARK with the capability to annotate the changes within commits. This allowed us to manually validate which lines in a bug fixing commit are contributing to the semantic changes for fixing the bugs (Trautsch et al. 2020).

3.4 Variables

We now state the variables we use as foundation for the construct of the analysis we conduct. The measurement of these variables is described in Section 3.6.1 and their analysis is described in Section 3.6.2.

For bug fixing commits, we measure the following variables as percentages of lines with respect to the total number of changed lines in the commit.

- Percentage contributing to bug fixes.

- Percentage of whitespace changes.

- Percentage of documentation changes.

- Percentage of refactorings.

- Percentage of changes to tests.

- Percentage of unrelated improvements.

- Percentage where no consensus was achieved (see Section 3.6.1).
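As an illustration of how these variables relate to line labels, the following minimal sketch computes the percentages for a single commit from one final label per changed line. The label strings and the function name are assumptions made for this example and do not reflect the identifiers used in SmartSHARK.

```python
from collections import Counter

# Hypothetical label names, one per variable listed above.
LABELS = (
    "bugfix",
    "whitespace",
    "documentation",
    "refactoring",
    "test",
    "unrelated",
    "no_consensus",
)


def label_percentages(line_labels):
    """Return the percentage of changed lines per label for one commit."""
    total = len(line_labels)
    if total == 0:
        return {label: 0.0 for label in LABELS}
    counts = Counter(line_labels)
    return {label: 100.0 * counts[label] / total for label in LABELS}


commit = ["bugfix", "test", "test", "documentation"]
print(label_percentages(commit))
```

Because every changed line carries exactly one final label, the percentages of a commit with at least one changed line sum to 100.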

We provide additional results regarding the lines without consensus to understand what the reasons for not achieving consensus are. These results are an extension of our registered protocol. However, we think that details regarding potential limitations of our capabilities as researchers are important for the discussion of research question RQ1. Concretely, we consider the following cases:

- In the registration, we planned to consider whitespace and documentation changes within a single variable. Now, we use separate variables for both. Our reason for this extension is to enable a better understanding of how many changes are purely cosmetic without affecting functionality (whitespace) and distinguish this from changes that modify the documentation. We note that we also used the term “comment” instead of “documentation” within the registration. Since all comments (e.g., in code) are a form of documentation, but not all documentation (e.g., sample code) is a comment, we believe that this terminology is clearer.

- Lines where all labels are either test, documentation, or whitespace. We use this case, because our labeling tool allows labeling of all lines in a file with the same label with a single click and our tutorial describes how to do this for a test file. This leads to differences between how participants approached the labeling of test files: some participants always use the button to label the whole test file as test, other participants used a more fine-grained approach and also labeled whitespace and documentation changes within test files.

- Lines that were not labeled as bug fix by any participant. For these lines, we have consensus that this is not part of the bug fix, i.e., for our key concern. Disagreements may be possible, e.g., if some participants identified a refactoring, while others marked this as unrelated improvement.
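The consensus rule and the two no-consensus cases described above can be sketched as follows. This is an illustrative reading and not the study's implementation: the label strings are assumed, and since the two cases can overlap, the order in which they are checked is a choice made for this example.

```python
from collections import Counter
from typing import Optional, Sequence

# Assumed label names for changes that do not touch production logic.
NON_CODE_LABELS = {"test", "documentation", "whitespace"}


def consensus_label(labels: Sequence[str], min_agree: int = 3) -> Optional[str]:
    """Return the label chosen by at least `min_agree` participants, else None."""
    if not labels:
        return None
    label, count = Counter(labels).most_common(1)[0]
    return label if count >= min_agree else None


def no_consensus_case(labels: Sequence[str]) -> str:
    """Classify a line by the reason it lacks a consensus label."""
    if consensus_label(labels) is not None:
        return "consensus"
    if set(labels) <= NON_CODE_LABELS:
        return "only test/documentation/whitespace"
    if "bugfix" not in labels:
        return "no bugfix label"
    return "other"


print(no_consensus_case(["test", "test", "whitespace", "documentation"]))
print(no_consensus_case(["refactoring", "refactoring", "unrelated", "unrelated"]))
print(no_consensus_case(["bugfix", "bugfix", "unrelated", "unrelated"]))
```

The last call shows the remaining situation, in which participants disagree on whether the line belongs to the bug fix at all.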

A second deviation from our pre-registered protocol is that we present the results for the consensus for two different views on the data:

- all changes, i.e., as specified in registration; and

- only changes in Java source code files that contain production code, i.e., Java files excluding changes to tests or examples.

Within the pre-registered protocol, we do not distinguish between all changes and changes to production code. We now realize that both views are important. The view of all changes is required to understand what is actually part of bug fixing commits. The view on Java production files is important, because changes to non-production code can be easily determined automatically as not contributing to the bug fix, e.g., using regular expression matching based on the file ending “.java” and the file path to exclude folders that contain tests and example code. The view on Java production files enables us to estimate the amount of noise that cannot be automatically removed. Within this study, we used the regular expressions shown in Table 1 to identify non-production code. We note that we have some projects which also provide web applications (e.g., JSP Wiki), that also have production code in other languages than Java, e.g., JavaScript. Thus, we slightly underestimate the amount of production code, because these lines are only counted in the overall view, and not in the production code view.
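To make the idea of automatically filtering non-production code concrete, the following is a minimal sketch of such a path-based filter. The patterns are hypothetical placeholders chosen for this example; the expressions actually used in the study are those of its Table 1.

```python
import re

# Hypothetical patterns for folders with tests and example code.
# These are NOT the expressions from the study's Table 1.
NON_PRODUCTION_PATTERNS = [
    re.compile(r"(^|/)(test|tests)/", re.IGNORECASE),
    re.compile(r"(^|/)(example|examples|sample|samples)/", re.IGNORECASE),
]


def is_java_production_file(path: str) -> bool:
    """True for .java files outside of test and example folders."""
    if not path.endswith(".java"):
        return False
    return not any(pattern.search(path) for pattern in NON_PRODUCTION_PATTERNS)


for path in [
    "src/main/java/org/apache/Foo.java",
    "src/test/java/org/apache/FooTest.java",
    "examples/Demo.java",
    "web/app.js",
]:
    print(path, is_java_production_file(path))
```

A filter of this kind treats non-Java production code, such as the JavaScript file in the usage example, as non-production, which matches the slight underestimation noted above.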

Additionally, we measure variables related to the crowd working.

- Number of commits labeled per participant.

- Percentage of correctly labeled lines per participant, i.e., lines where the label of the participant agrees with the consensus.
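The second variable can be illustrated with a short sketch that compares each participant's label with the consensus label of the line. The data layout (one dictionary per line, mapping participant to label) and the decision to skip lines without consensus are assumptions made for this example, not details taken from the study.

```python
from collections import Counter, defaultdict


def consensus_label(labels, min_agree=3):
    """Return the label chosen by at least `min_agree` participants, else None."""
    if not labels:
        return None
    label, count = Counter(labels).most_common(1)[0]
    return label if count >= min_agree else None


def participant_accuracy(lines):
    """Percentage of lines per participant whose label matches the consensus.

    `lines` is a list of dicts mapping participant -> label for one line.
    Assumption: lines without a consensus label are not counted.
    """
    agree = defaultdict(int)
    total = defaultdict(int)
    for labels in lines:
        consensus = consensus_label(list(labels.values()))
        if consensus is None:
            continue
        for participant, label in labels.items():
            total[participant] += 1
            agree[participant] += int(label == consensus)
    return {p: 100.0 * agree[p] / total[p] for p in total}


lines = [
    {"a": "bugfix", "b": "bugfix", "c": "bugfix", "d": "test"},
    {"a": "test", "b": "test", "c": "test", "d": "test"},
    {"a": "bugfix", "b": "bugfix", "c": "test", "d": "test"},  # no consensus
]
print(participant_accuracy(lines))
```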

We collected this data using nightly snapshots of the number of commits that each participant labeled, i.e., a time series per participant.

We also conducted an anonymous survey among participants who labeled at least 200 commits with a single question to gain insights into the difficulty of labeling tangled changes in commits for the evaluation of RQ1.

- Q1: Please estimate the percentage of lines in which you were unsure about the label you assigned.
- A1: One of the following categories: 0%–10%, 11%–20%, 21%–30%, 31%–40%, 41%–50%, 51%–60%, 61%–70%, 71%–80%, 81%–90%, 91%–100%.

Finally, we asked all participants who labeled at least 250 commits a second question to gain insights into the effect of the gamification:

- Q2: Would you have labeled more than 200 commits, if the authors would have been ordered randomly instead of by the number of commits labeled?
- A2: One of the following categories: Yes, No, Unsure.

3.5 Subjects

This study has two kinds of subjects: bugs for which the lines contributing to the fix are manually validated and the participants in the labeling who were recruited using the research turk approach.

3.5.1 Bugs

We use manually validated bug fixes. For this, we harness previously manually validated issue types similar to Herzig et al. (2013) and validated trace links between commits and issues for 39 Apache projects (Herbold et al. 2019). The focus of this data set is the Java programming language. The usage of manually validated data allows us to work from a foundation of ground truth and avoids noise in our results caused by the inclusion of commits that are not fixing bugs. Overall, there are 10,878 validated bug fixing commits for 6,533 fixed bugs in the data set.

Prior studies that manually validated commits limited the scope to bugs that were fixed in a single commit, and commits in which the bug was the only referenced issue (e.g., Just et al. 2014; Gyimesi et al. 2019; Mills et al. 2020). We broaden this scope in our study and also allow issues that were addressed by multiple commits, as long as the commits only fixed a single bug. This is the case for 2,283 issues which were addressed in 6,393 commits. Overall, we include 6,279 bugs fixed in 10,389 commits in this study. The remaining 254 bugs are excluded, because the validation would have to cover the additional aspect of differentiating between multiple bug fixes. Otherwise, it would be unclear to which bug(s) the change in a line would contribute, making the labels ambiguous.

Herbold et al. (2019) used a purposive sampling strategy for the determination of projects. They selected only projects from the Apache Software Foundation, which is known for the high quality of the developed software, the many contributors both from the open source community and from the industry, as well as the high standards of their development processes, especially with respect to issue tracking (Bissyandé et al. 2013). Moreover, the 39 projects cover different kinds of applications, including build systems (ant-ivy), web applications (e.g., jspwiki), database frameworks (e.g., calcite), big data processing tools (e.g., kylin), and general purpose libraries (commons). Thus, our sample of bug fixes should be representative for a large proportion of Java software. Additionally, Herbold et al. (2019) defined criteria on project size and activity to exclude very small or inactive projects. Thus, while the sample is not randomized, this purposive sampling should ensure that our sample is representative for mature Java software with well-defined development processes in line with the discussion of representativeness by Baltes and Ralph (2020).

3.5.2 Participants

In order to only allow participants that have a sufficient amount of programming experience, each participant must fulfill one of the following criteria: 1) an undergraduate degree majoring in computer science or a closely related subject; or 2) at least one year of programming experience in Java, demonstrated either by industrial programming experience using Java or through contributions to Java open source projects.

Participants were regularly recruited, e.g., by advertising during virtual conferences, within social media, or by asking participants to invite colleagues. Interested researchers and practitioners signed up for this study via an email to the first author, who then checked if the participants are eligible. Upon registration, participants received guidance on how to proceed with the labeling of commits (see Appendix A). Participants became co-authors of this manuscript if

1. they manually labeled at least 200 commits;
2. their labels agree with the consensus (Section 3.6.1) for at least 70% of the labeled lines;
3. they contributed to the manuscript by helping to review and improve the draft, including the understanding that they take full responsibility for all reported results and that they can be held accountable with respect to the correctness and integrity of the work; and
4. they were not involved in the review or decision of acceptance of the registered report.

The first criterion guarantees that each co-author provided a significant contribution to the analysis of the bug fixing commits. The second criterion ensures that participants carefully labeled the data, while still allowing for disagreements. Only participants who fulfill the first two criteria received the manuscript for review. The third criterion ensures that all co-authors agree with the reported results and the related responsibility and ethical accountability. The fourth criterion avoids conflicts of interest.Footnote 9

3.6 Execution Plan

The execution of this research project was divided into two phases: the data collection phase and the analysis phase.

3.6.1 Data Collection Phase

The primary goal of this study is to gain insights into which changed lines contribute to bug fixing commits and which additional activities are tangled with the correction of the bug. Participants were shown the textual differences of the source code for each bug with all related bug fixing commits. The participants then assigned one of the following labels to all changed lines:

- contributes to the bug fix;
- only changes to whitespaces;
- documentation change;
- refactoring;
- change to tests; and
- unrelated improvement not required for the bug fix.

Figure 1 shows a screenshot of the web application that we used for labeling. The web application ensured that all lines were labeled, i.e., participants could not submit incomplete labels for a commit. The web application further supported the participants with the following convenience functions:

- Buttons to mark all changes in a file with the same label. This could, e.g., be used to mark all lines in a test file with a single click as test change.
- Heuristic pre-labeling of lines as documentation changes using regular expressions.
- Heuristic pre-labeling of lines with only whitespace changes.
- Heuristic pre-labeling of lines as refactoring by automatically marking changed lines as refactorings, in case they were detected as refactorings by the RefactoringMiner 1.0 (Tsantalis et al. 2018).

Fig. 1 Screenshot of VisualSHARK (Trautsch et al. 2020) that was used for the labeling of the data

All participants were instructed not to trust the pre-labels and check if these labels are correct. Moreover, we did not require differentiation between whitespaces and documentation/test changes in files that were not production code files, e.g., within test code or documentation files such as the project change log.

Each commit was shown to four participants. Consensus is achieved if at least three participants agree on the same label. If this is not the case, no consensus for the line is achieved, i.e., the participants could not clearly identify which type of change a line is.
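The consensus rule can be expressed in a few lines. The sketch below is illustrative only; the function name, the label strings, and the use of `None` for "no consensus" are assumptions made for this example and do not describe the study's tooling.

```python
from collections import Counter
from typing import Optional, Sequence


def consensus_label(labels: Sequence[str], required: int = 3) -> Optional[str]:
    """Return the label chosen by at least `required` participants, else None."""
    if not labels:
        return None
    # The most frequent label is the only candidate for consensus.
    label, votes = Counter(labels).most_common(1)[0]
    return label if votes >= required else None
```

With four labels per line, a 3:1 split still yields consensus, whereas a 2:2 or 2:1:1 split does not.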

The data collection phase started on May 16th, 2020 and ended on October 14th, 2020; registration had already closed on September 30th, 2020.Footnote 10 The participants started by watching a tutorial videoFootnote 11 and then labeled the same five commits that are shown in the tutorial themselves to get to know the system and to avoid mislabels due to usability problems.

Participants could always check their progress, as well as the general progress for all projects and the number of commits labeled by other participants in the leaderboard. However, due to the computational effort, the leaderboard did not provide a live view of the data, but was only updated once every day. All names in the leaderboard were anonymized, except the name of the currently logged in participant and the names of the principal investigators. The leaderboard is also part of a gamification element of our study, as participants can see how they rank in relation to others and may try to improve their ranks by labeling more commits.

The participants are allowed to decide for which project they want to perform the labeling. The bugs are then randomly selected from all bugs of that project, for which we do not yet have labels by four participants. We chose this approach over randomly sampling from all projects to allow participants to gain experience in a project, which may improve both the labeling speed and the quality of the results. Participants must finish labeling each bug they are shown before the next bug can be drawn. We decided on this for two reasons. First, skipping bugs could reduce the validity of the results for our second research question, i.e., how good we actually are at labeling bug fixes at this level of granularity, because the sample could be skewed towards simpler bug fixes. Second, this could lead to cherry picking, i.e., participants could skip bugs until they find particularly easy bugs. This would be unfair to the other participants. The drawback of this approach is that participants are forced to label bugs, even in case they are unsure. However, we believe that this drawback is countered by our consensus labeling that requires agreement of three participants: even if all participants are unsure about a commit, if three come to the same result, it is unlikely that they are wrong.

3.6.2 Analysis Phase

The analysis took place after the data collection phase was finished on October 14th, 2020. In this phase, the principal investigators conducted the analysis as described in Section 3.7. The principal investigators also informed all participants of their consensus ratio. All participants who met the first two criteria for co-authorship received a draft of the manuscript for review. Due to the number of participants, this was conducted in multiple batches. In between, the principal investigators improved the manuscript based on the comments of the participants. All participants who reviewed the draft were added to the list of authors. Those who failed to provide a review were added to the acknowledgements, unless they specifically requested not to be added. Finally, all co-authors received a copy of the final draft one week in advance of the submission for their consideration.

3.6.3 Data Correction Phase

Due to a bug in the data collection software that we only found after the data collection was finished, we required an additional phase for the correction of data. The bug led to possibly corrupt data in case line numbers in the added and deleted code overlapped, e.g., single line modifications. We computed all lines that were possibly affected by this bug and found that 28,827 lines possibly contained corrupt labels, i.e., about 10% of our data. We asked all co-authors to correct their data through manual inspection by relabeling all lines that could have been corrupted. For this, we used the same tooling as for the initial labeling, with the difference that all labels that were not affected by the bug were already set, so that only the lines affected by the bug needed to be relabeled.Footnote 12 The data correction phase took place from November 25th, 2020 until January 17th, 2021. We deleted all data that was not corrected by January 18th, which resulted in 679 fewer issues for which the labeling was finished. We invited all co-authors to re-label these issues between January 18th and January 21st. Through this, the data for 636 issues was finished again, but we still lost the data for 43 issues as the result of this bug. The changes to the results were very minor and the analysis of the results was not affected. However, we note that the analysis of consensus ratios and participation (Section 5.1) was done prior to the correction phase. The bug affected only a small fraction of lines that should only have a negligible impact on the individual consensus ratios, as data by all participants was affected equally. We validated this intuition by comparing the consensus ratios of participants who corrected their data before and after the correction and found that there were no relevant changes. Since some participants could not participate in the data correction and we had to remove some labeled commits, the number of labeled commits per author could now be below 200. This altered the results due to outliers, caused by single very large commits. This represents an unavoidable trade-off arising from the need to fix the potentially corrupt data.

3.7 Analysis Plan

The analysis of data consists of four aspects: the contribution to bug fixes, the capability to label bug fixing commits, the effectiveness of gamification, and the confidence level of our statistical analysis.

3.7.1 Contributions to Bug Fixes

We used the Shapiro-Wilk test (Shapiro and Wilk 1965) to determine if the nine variables related to defects are normally distributed. Since the data is not normal, we report the median, median absolute deviation (MAD), and an estimation for the confidence interval of the median based on the approach proposed by Campbell and Gardner (1988). We reject H1 if the upper bound of the confidence interval of the median lines contributing to the bug fix in all code is greater than 40%. Within the discussion, we provide further insights about the expectations on lines within bug fixing commits based on all results, especially also within changes to production code files.
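To make the reported statistics concrete, the following sketch computes the median, the MAD, and a rank-based confidence interval for the median. It uses the normal-approximation rank formula commonly attributed to Campbell and Gardner (1988); whether the study used exactly this variant, this rounding, or a scaled MAD is an assumption, and the function name is hypothetical.

```python
import math
from statistics import NormalDist, median


def median_with_ci(values, confidence=0.95):
    """Return (median, MAD, (lower, upper)) using a rank-based interval."""
    data = sorted(values)
    n = len(data)
    if n == 0:
        raise ValueError("need at least one value")
    med = median(data)
    # Unscaled median absolute deviation (assumption: no consistency constant).
    mad = median([abs(v - med) for v in data])
    # Quantile of the standard normal distribution for a two-sided interval.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    half_width = z * math.sqrt(n) / 2
    # 1-based ranks of the order statistics that bound the median.
    lower_rank = max(1, round(n / 2 - half_width))
    upper_rank = min(n, round(1 + n / 2 + half_width))
    return med, mad, (data[lower_rank - 1], data[upper_rank - 1])
```

The interval bounds are observed values from the sample, which is why this approach does not require normally distributed data.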

3.7.2 Capability to Label Bug Fixing Commits

We use the confidence interval for the number of lines without consensus for this evaluation. We reject H2 if the lower bound of the confidence interval of the median number of lines without consensus is less than 10.5%. Additionally, we report Fleiss’ κ (Fleiss 1971) to estimate the reliability of the consensus, which is defined as

$$\kappa = \frac{\bar{P} - \bar{P}_{e}}{1 - \bar{P}_{e}}$$

where \(\bar {P}\) is the mean agreement of the participants per line and \(\bar {P}_{e}\) is the sum of the squared proportions of the label assignments. We use the table from Landis and Koch (1977) for the interpretation of κ (see Table 2).
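A minimal sketch of this computation is given below, following the standard definition of Fleiss' κ. The input layout (one row per line, one column per label, each cell holding the number of participants who chose that label) and the function name are assumptions made for this example.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa for a table of per-item label counts."""
    n_items = len(counts)
    n_raters = sum(counts[0])
    if n_raters < 2:
        raise ValueError("need at least two raters per item")
    if any(sum(row) != n_raters for row in counts):
        raise ValueError("every item needs the same number of raters")
    n_categories = len(counts[0])
    # Mean agreement per item (P bar).
    p_bar = sum(
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ) / n_items
    # Sum of squared proportions of the label assignments (P bar e).
    proportions = [
        sum(row[j] for row in counts) / (n_items * n_raters)
        for j in range(n_categories)
    ]
    p_e = sum(p * p for p in proportions)
    if p_e == 1.0:
        raise ValueError("kappa is undefined if only one label is ever used")
    return (p_bar - p_e) / (1 - p_e)
```

For the setting of this study, each row would sum to four, one count per participant who labeled the line.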

Additionally, we estimate the probability of random mistakes to better understand if the lines without consensus can be explained by random mislabels, i.e., mislabels that are the consequence of unintentional mistakes by participants. If we assume that all lines with consensus are labeled correctly, we can use the minority votes in those lines to estimate the probability of random mislabels. Specifically, we have a minority vote if three participants agreed on one label, and one participant selected a different label. We assume that random mislabels follow a binomial distribution (see Section 3.2) to estimate the probability that a single participant randomly mislabels a bug fixing line. Following Brown et al. (2001), we use the approach from Agresti and Coull (1998) to estimate the probability of a mislabel p, because we have a large sample size. Therefore, we estimate

$$p = \frac{n_{1} + \frac{1}{2} z_{\alpha}^{2}}{n + z_{\alpha}^{2}}$$

as the probability of a mislabel of a participant with \(n_{1}\) the number of minority votes in lines with consensus, \(n\) the total number of individual labels in lines with consensus, and \(z_{\alpha}\) the \(1-\frac {1}{2}\alpha \) quantile of the standard normal distribution, with \(1-\alpha\) the confidence level. We get the confidence interval for p as

$$p \pm z_{\alpha} \sqrt{\frac{p (1-p)}{n + z_{\alpha}^{2}}}$$

We estimate the overall probabilities of errors, as well as the probabilities of errors in production files for the different label types to get insights into the distribution of errors. Moreover, we can use the estimated probabilities of random errors to determine how many lines without consensus are expected. If \(n_{total}\) is the number of lines, we can expect that there are

$$n_{total} \cdot \binom{4}{2} p^{2} (1-p)^{2}$$

lines without consensus under the assumption that they are due to random mistakes. If we observe more lines without consensus, this is a strong indicator that this is not a random effect, but due to actual disagreements between participants.
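The estimation can be sketched as follows. The first function implements the Agresti and Coull (1998) point estimate and interval. The second function rests on an assumption that should be checked against Section 3.2: it treats a line as lacking consensus when exactly two of the four labels are random mislabels. All function and parameter names are hypothetical.

```python
import math
from statistics import NormalDist


def agresti_coull(n_minority, n_labels, alpha):
    """Agresti-Coull estimate of the mislabel probability and its interval."""
    z = NormalDist().inv_cdf(1 - alpha / 2)
    n_adjusted = n_labels + z * z
    p = (n_minority + z * z / 2) / n_adjusted
    half_width = z * math.sqrt(p * (1 - p) / n_adjusted)
    return p, (p - half_width, p + half_width)


def expected_no_consensus(n_total, p, raters=4, mistakes=2):
    """Expected number of lines with exactly `mistakes` random mislabels.

    Assumption: two random mislabels among four labels break the consensus.
    """
    pmf = math.comb(raters, mistakes) * p ** mistakes * (1 - p) ** (raters - mistakes)
    return n_total * pmf
```

Comparing the output of `expected_no_consensus` with the observed number of lines without consensus corresponds to the comparison described in the preceding paragraph.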

We note that the calculation of the probability of mislabels and the number of expected non-consensus lines was not described in the pre-registered protocol. However, since the approach to model random mistakes as binomial distribution was already used to derive H2 as part of the registration, we believe that this is rather the reporting of an additional detail based on an already established concept from the registration and not a substantial deviation from our protocol.

In addition to the data about the line labels, we use the result of the survey among participants regarding their perceived certainty rates to give further insights into the limitations of the participants to conduct the task of manually labeling lines within commits. We report the histogram of the answers given by the participants and discuss how the perceived difficulty relates to the actual consensus that was achieved.

3.7.3 Effectiveness of Gamification

We evaluate the effectiveness of the gamification element of the crowd working by considering the number of commits per participant. Concretely, we use a histogram of the total number of commits per participant. The histogram tells us whether there are participants who have more than 200 commits, including how many commits were actually labeled. Moreover, we create line plots for the number of commits over days, with one line per participant that has more than 200 commits. This line plot is a visualization of the evolution of the prospective ranking of the author list. If the gamification is efficient, we should observe two behavioral patterns: 1) that participants stopped labeling right after they gained a certain rank; and 2) that participants restarted labeling after a break to increase their rank. The first observation would be in line with the results from Anderson et al. (2013) regarding user behavior after achieving badges on Stack Overflow. We cannot cite direct prior evidence for our second conjecture, other than that we believe that participants who were interested in gaining a certain rank, would also check if they still occupy the rank and then act on this.

We combine the indications from the line plot with the answer to the survey question Q2. If we can triangulate from the line plot and the survey that the gamification was effective for at least 10% of the overall participants, we believe that having this gamification element is worthwhile and we fail to reject H3. If less than 10% were motivated by the gamification element, this means that future studies could not necessarily expect a positive benefit, due to the small percentage of participants that were motivated. We additionally quantify the effect of the gamification by estimating the additional effort that participants invested measured in the number of commits labeled greater than 200 for the subset of participants where the gamification seems to have made a difference.

3.7.4 Confidence Level

We compute all confidence intervals such that we have a family-wise confidence level of 95%. We need to adjust the confidence level for the calculation of the confidence intervals, due to the number of intervals we determine. Specifically, we determine 2 ⋅ 9 = 18 confidence intervals for the ratios of line labels within commits (see Section 3.4) and 27 confidence intervals for our estimation of the probability of random mistakes. Due to the large amount of data we have available, we decided for an extremely conservative approach for the adjustment of the confidence level (see Section 3.7.2). We use Bonferroni correction (Dunnett 1955) for all 18 + 27 = 45 confidence intervals at once, even though we could possibly consider these as separate families. Consequently, we use a confidence level of \(1-\frac {0.05}{45} = 0.99\bar {8}\) for all confidence interval calculations.Footnote 13
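The adjustment amounts to a short calculation, sketched here with a hypothetical helper function. The second value shows the standard normal quantile that corresponds to a two-sided interval at the adjusted level.

```python
from statistics import NormalDist


def bonferroni_confidence(family_alpha: float, n_intervals: int) -> float:
    """Per-interval confidence level for a given family-wise error rate."""
    return 1 - family_alpha / n_intervals


# 18 intervals for the line label ratios and 27 for the random mistakes.
level = bonferroni_confidence(0.05, 18 + 27)
# Quantile of the standard normal distribution for a two-sided interval.
z = NormalDist().inv_cdf(1 - (1 - level) / 2)
```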

3.8 Summary of Deviations from Pre-Registration

We deviated from the pre-registered research protocol in several points, mostly through the expansion on details.

- The time frame of the labeling shifted to May 16th–October 14th. Additionally, we had to correct a part of the data due to a bug between November 25th and January 21st.
- We updated H2 with a threshold of 10.5% of lines, due to a wrong calculation in the registration (see footnote 6).
- We consider the subset of mislabels on changes to production code files, as well as mislabels with respect to all changes.
- We have additional details because we distinguish between whitespace and documentation lines.
- We have additional analysis for lines without consensus to differentiate between different reasons for no consensus.
- We extend the analysis with an estimation of the probability of random mislabels, instead of only checking the percentage of lines without consensus.

4 Experiments for RQ1

We now present our results and discuss their implications for RQ1 on the tangling of changes within commits.

4.1 Results for RQ1

In this section, we first present the data demographics of the study, e.g., the number of participants, and the amount of data that was labeled. We then present the results of the labeling. All labeled data of the LLTC4J corpus and the analysis scripts we used to calculate our results can be found online in our replication package.Footnote 14

4.1.1 Data Demographics

Of 79 participants registered for this study, 15 participants dropped out without performing any labeling. The remaining 64 participants labeled data. The participants individually labeled 17,656 commits. This resulted in 1,389 commits labeled by one participant, 683 commits labeled by two participants, 303 commits that were labeled by three participants, 3,498 commits labeled by four participants, and five commits that were part of the tutorial were labeled by all participants. Table 3 summarizes the completed data for each project. Thus, we have validated all bugs for 23 projects and incomplete data about bugs for five projects. We have a value of Fleiss’ κ = 0.67, which indicates that we have substantial agreement among the participants.

4.1.2 Content of Bug Fixing Commits

Table 4 summarizes the overall results of the commit labeling. Overall, 289,904 lines were changed as part of the bug fixing commits. Only 25.1% of the changes were part of bug fixes. The majority of changed lines were modifications of tests with 39.6%. Documentation accounts for another 14.0% of the changes. For 8.9% of the lines there was no consensus among the participants and at least one participant labeled the line as bug fix. For an additional 1.5% of lines, the participants marked the line as either documentation, whitespace change or test change, but did not achieve consensus. We believe this is the result of different labeling strategies for test files (see Section 3.4). For 4.5% of the lines no participant selected bug fix, but at least one participant selected refactoring or unrelated improvement. When we investigated these lines, we found that the majority of cases were due to different labeling strategies: some participants labeled updates to test data as unrelated improvements, others labeled them as tests. How this affected production code files is discussed separately in Section 4.1.3.

256,689 of the changed lines were Java code, with 144,863 lines in production code files and 111,826 lines in other code files. The other code is almost exclusively test code. Within the production code files, 49.2% of the changed lines contributed to bug fixes and 21.7% were documentation. Refactorings and unrelated improvements only represent 4.3% of the lines. In 14.3% of the lines, the participants did not achieve consensus with at least one participant labeling the line as bug fix.

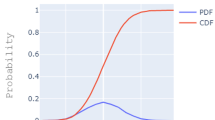

Figure 2 summarizes the results of labeling per commit, i.e., the percentages of each label in bug fixing commits. We found that a median of 25.0% of all changed lines contribute to a bug fix. When we restrict this to production code files, this ratio increases to 75.0%. We note that while the median for all changes is roughly similar to the overall percentage of lines that are bug fixing, this is not the case for production code files. The median of 75.0% per commit is much higher than the 49.2% of all production lines that are bug fixing. The histograms provide evidence regarding the reason for this effect. With respect to all changed lines, Fig. 2b shows that there are many commits with a relatively small percentage of bug fixing lines close to zero, i.e., we observe a peak on the left side of the histogram. When we focus the analysis on the production code files, Fig. 2c shows that we instead observe that there are many commits with a large percentage of bug fixing lines (close to 100%), i.e., we observe a peak on the right side of the histogram, but still a long tail of lower percentages. This tail of lower percentages influences the ratio of lines more strongly than the median per commit, because the ratio is not robust against outliers.
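The difference between the two statistics can be reproduced with a small example. The commit sizes below are invented for illustration and are not taken from the data set; the function names are likewise hypothetical.

```python
from statistics import median

# Hypothetical (bug fixing lines, changed production lines) per commit.
commits = [(2, 2), (5, 5), (3, 4), (10, 100)]


def pooled_ratio(commits):
    """Bug fixing lines divided by all changed lines, over all commits."""
    return sum(fix for fix, _ in commits) / sum(total for _, total in commits)


def median_ratio(commits):
    """Median of the per-commit ratios of bug fixing lines."""
    return median(fix / total for fix, total in commits)
```

In this example, a single large commit with few bug fixing lines dominates the pooled ratio, while the median of the per-commit ratios barely moves.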

The most common change to be tangled with bug fixes are test changes and documentation changes with a median of 13.0% and 10.2% of lines per commit, respectively. When we restrict the analysis to production code files, all medians other than bug fix drop to zero. For test changes, this is expected because they are, by definition, not in production code files. For other changes, this is due to the extremeness of the data which makes the statistical analysis of most label percentages within commits difficult. What we observe is that while many commits are not pure bug fixes, the type of changes differs between commits, which leads to commits having percentages of exactly zero for all labels other than bug fix. This leads to extremely skewed data, as the median, MAD, and CI often become exactly zero such that the non-zero values are – from a statistical point of view – outliers. The last column of the table in Fig. 2a shows for how many commits the values are greater than zero. We can also use the boxplots in Fig. 2b and c to gain insights into the ratios of lines, which we analyze through the outliers.

The boxplots reveal that documentation changes are common in production code files; the upper quartile is at around 30% of changed lines in a commit. Unrelated improvements are tangled with 2.9% of the commits and are usually less than about 50% of the changes, while refactorings are tangled with 7.8% of the commits and are usually less than about 60% of the changes. Whitespace changes are more common, i.e., 46.9% of the commits are tangled with some formatting. However, the ratio is usually below 40% and there are no commits that contain only whitespace changes, i.e., pure reformatting of code. The distribution of lines without consensus shows that while we have full consensus for 71.3% of the commits, the consensus ratios for the remaining commits are distributed over the complete range up to no consensus at all.

As a side note, we also found that the pre-labeling of lines with the RefactoringMiner was not always correct. Sometimes logical changes were marked as refactoring, e.g., because side effects were ignored when variables were extracted or code was reordered. Overall, 21.6% of the 23,682 lines marked by RefactoringMiner have a consensus label of bug fix. However, the focus of our study is not the evaluation of RefactoringMiner and we further note that we used RefactoringMiner 1.0 (Tsantalis et al. 2018) and not the recently released version 2.0 (Tsantalis et al. 2020), which may have resolved some of these issues.

4.1.3 Analysis of Disagreements

Based on the minority votes in lines with consensus, we estimate the probability of random mistakes in all changes as 7.5% ± 0.0; when we restrict this to production code files, we estimate the probability as 9.0% ± 0.0. We note that the confidence intervals are extremely small, due to the very large number of lines in our data. Figure 3 shows the expected number of lines without consensus given these probabilities versus the observed lines without consensus. For all changes, we additionally report a cleaned version of the observed data. With the cleaned version, we take the test/doc/whitespace and no bug fix labels into account, i.e., lines where there is consensus that the line is not part of the bug fix. The results indicate that there are more lines without consensus than could be expected under the assumption that all mislabels in our data are the result of random mislabels.

Table 5 provides additional details regarding the expectation of the mislabels per type in production code files. The data in this table is modeled using a more fine-grained view on random mistakes. This view models the probabilities for all labels separately, both with respect to expected mislabels, as well as the mislabel that occurs. The estimated probabilities are reported in Table 7 in the Appendix. Using the probabilities, we calculated the expected number of two random mistakes for each label type, based on the distribution of consensus lines. Table 5 confirms that we do not only observe more lines without consensus, but also more pairs of mislabels than could be expected given a random distribution of mistakes for all labels. However, the mislabels are more often of type bug fix, unrelated improvement, or refactoring than of the other label types. Table 8 in the Appendix shows a full resolution of the individual labels we observed in lines without consensus and confirms that disagreements between participants whether a line is part of a bug fix, an unrelated improvement, or a refactoring, are the main driver of lines without consensus in production code files.

In addition to the view on disagreements through the labels, we also asked the participants who labeled at least 200 commits how confident they were in the correctness of their labels. Figure 4 shows the results of this survey. 55% of the participants estimate that they were not sure in 11%–20% of lines, another 20% estimate 21%–30% of lines. Only one participant estimates a higher uncertainty of 51%–60%. This indicates that participants have a good intuition about the difficulty of the labeling. If we consider that we have about 8% random mistakes and 12% lines without consensus, this indicates that about 20% of the lines should have been problematic for participants. We note that there are also three participants who selected between 71%–100% of lines. While we cannot know for sure, we believe that these three participants misread the question, which is in line with up to 10% of participants found by Podsakoff et al. (2003).

Fig. 4 Answers of the participants regarding their labeling confidence. Participants were not informed about the distribution of consensus ratios of participants or their individual consensus ratio before answering the survey to avoid bias. 35 out of 48 participants with at least 200 commits answered this question

4.2 Discussion of RQ1

We now discuss our results for RQ1 with respect to our hypotheses to gain insights into the research question, put our results in the context of related work, and identify consequences for researchers who analyze bugs.

4.2.1 Prevalence of Tangled Commits

Tangling is common in bug fixing commits. We estimate that the average number of bug fixing lines per commit is between 22% and 38%. The 22% of lines are due to the lower bound of the confidence interval for the median bug fixing lines per commit. The 38% of lines assume that all 8.9% of the lines without consensus would be bug fixing and that the median would be at the upper bound of the confidence interval. However, we observe that a large part of the tangling is not within production code files, but rather due to changes in documentation and test files. Only half of the changes within bug fixing commits are to production code files. We estimate that the average number of bug fixing lines in production code files per commit is between 60% and 93%.

4.2.2 Impact of Tangling

The impact of tangling on software engineering research depends on the type of tangling as well as the research application. Moreover, tangling is not, by definition, bad. For example, adding new tests as part of a bug fix is actually a best practice. In the following, we use the term benign tangling to refer to tangled commits that do not negatively affect research and problematic tangling to refer to tangling that results in noise within the data without manual intervention. Specifically, we discuss problematic tangling for the three research topics we already mentioned in the motivation, i.e., program repair, bug localization, and defect prediction.

If bug fixes are used for program repair, only changes to production code are relevant, i.e., all other tangling is benign because it can easily be ignored using regular expressions, as we did in this article. In production code files, the tangling of whitespace and documentation changes is also benign. Refactorings and unrelated improvements are problematic, as they are not required for the bug fix and needlessly complicate the problem of program repair. If bug fixes are used as training data for a program repair approach (e.g., Li et al. 2020), this may lead to models that are too complex as they would mix the repair with additional changes. If bug fixes are used to evaluate the correctness of automatically generated fixes (e.g., Martinez et al. 2016), this comparison may be more difficult or noisy, due to the tangling. Unrelated improvements are especially problematic if they add new features. This sort of general program synthesis is usually out of scope of program repair and is rather considered a different problem, e.g., neural machine translation (Tufano et al. 2019). Therefore, it introduces noise in dedicated program repair analysis tasks as described above.
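The filtering of non-production files with regular expressions can be sketched as follows. This is only a minimal illustration under stated assumptions: the patterns, the function names `classify_path` and `production_files`, and the example paths are hypothetical and are not the expressions used for this article. Real projects usually need project-specific rules.

```python
import re

# Hypothetical pattern: a path counts as test code if it lies in a
# "test" or "tests" directory or if the file name ends with Test(s).java.
TEST_RE = re.compile(r"(^|/)tests?/|Tests?\.java$")


def classify_path(path: str) -> str:
    """Classify a changed file as 'production', 'test', or 'other'."""
    if not path.endswith(".java"):
        # Assumption: everything that is not a Java file (change logs,
        # build files, documentation) is not production code.
        return "other"
    if TEST_RE.search(path):
        return "test"
    return "production"


def production_files(paths):
    """Keep only the changed files that are classified as production code."""
    return [p for p in paths if classify_path(p) == "production"]


changed = [
    "src/main/java/org/example/IOUtils.java",
    "src/test/java/org/example/IOUtilsTest.java",
    "src/changes/changes.xml",
]
print(production_files(changed))
```

With such a filter, changes to tests and documentation files never reach the program repair data, which is why this kind of tangling is benign for that use case.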

For bug localization, tangling outside of production code files is also irrelevant, as these changes are ignored for the bug localization anyway. Within production code files, all tangling is irrelevant, as long as a single line in a file is bug fixing, assuming that the bug localization is at the file level.

For defect prediction, we assume that a state-of-the-art SZZ variant that accounts for whitespace, documentation, and automatically detectable refactorings (Neto et al. 2018) is used to determine the bug fixing and bug inducing commits. Additionally, we assume that a heuristic is used to exclude non-production changes, which means that changes to non-production code files are not problematic for determining bug labels. For the bug fixes, we have the same situation as with bug localization: labeling of files would not be affected by tangling, as long as a single line is bug fixing. However, all unrelated improvements and non-automatically detectable refactorings may lead to the labeling of additional commits as inducing commits and are, therefore, potentially problematic. Finally, defect prediction features can also be affected by tangling. For example, the popular metrics by Kamei et al. (2013) for just-in-time defect prediction compute change metrics for commits as a whole, i.e., not based on changes to production code only, but rather based on all changes. Consequently, all tangling is problematic with respect to these metrics. According to the histogram for overall tangling in Fig. 2, this affects most commits.

Table 6 summarizes the presence of problematic tangling within our data. We report ranges, due to the uncertainty caused by lines without consensus. The lower bound assumes that all lines without consensus are bug fixing and that all refactorings could be automatically detected, whereas the upper bound assumes that the lines without consensus are unrelated improvements and refactorings could not be automatically detected. We observe that all use cases are affected differently, because different types of tangled changes cause problems. Program repair has the highest lower bound, because any refactoring or unrelated improvement is problematic. Bug localization is less affected than defect prediction, because only the bug fixing commits are relevant and the bug inducing commits do not matter. If bug localization data sets also adopted automated refactoring detection techniques, the numbers would be the same as for defect prediction bug fix labels. However, we want to note that the results from this article indicate that the automated detection of refactorings may be unreliable and could lead to wrong filtering of lines that actually contribute to the bug fix. Overall, we observe that the noise varies between applications and also between our best case and worst case assumptions. In the best case, we observe as little as 2% problematically tangled bugs (file changes for bug fixes in defect prediction), whereas in the worst case this increases to 47% (total number of bugs with noise for defect prediction). Since prior work already established that problematic tangling may lead to wrong performance estimations (Kochhar et al. 2014; Herzig et al. 2016; Mills et al. 2020) as well as the degradation of performance of machine learning models (Nguyen et al. 2013), this is a severe threat to the validity of past studies.
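The idea behind the reported ranges can be illustrated with a small sketch for file-level bug localization, where a file change is unproblematic as long as at least one line is bug fixing. The label names, the function names, and the example data are hypothetical. The sketch only covers the treatment of lines without consensus and ignores the assumptions about refactoring detection, so it is not the computation behind Table 6.

```python
from typing import Dict, List, Tuple

BUGFIX = "bugfix"
UNRELATED = "unrelated"
NO_CONSENSUS = "no_consensus"


def has_bugfix(labels: List[str], no_consensus_as: str) -> bool:
    """Check for a bug fixing line after resolving lines without consensus."""
    resolved = [no_consensus_as if label == NO_CONSENSUS else label
                for label in labels]
    return BUGFIX in resolved


def problematic_share_range(file_changes: Dict[str, List[str]]) -> Tuple[float, float]:
    """Return (best case, worst case) share of problematic file changes.

    Best case: lines without consensus count as bug fixing.
    Worst case: lines without consensus count as unrelated improvements.
    """
    total = len(file_changes)
    if total == 0:
        raise ValueError("at least one file change is required")
    best = sum(not has_bugfix(labels, BUGFIX)
               for labels in file_changes.values())
    worst = sum(not has_bugfix(labels, UNRELATED)
                for labels in file_changes.values())
    return best / total, worst / total


# Hypothetical line labels for four changed production code files.
example = {
    "A.java": ["bugfix", "refactoring"],
    "B.java": ["no_consensus", "whitespace"],
    "C.java": ["refactoring"],
    "D.java": ["bugfix", "no_consensus"],
}
print(problematic_share_range(example))
```

In this toy example, only `B.java` changes its status between the two assumptions, which is exactly the kind of file change that widens the reported ranges.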

4.2.3 Reliability of Labels

Participants have a probability of random mistakes of about 7.5% overall and 9.0% in production code files. Due to these numbers, we find that our approach to use multiple labels per commit was effective. Using the binomial distribution to determine the likelihood of at least three random mislabels, we expect that 111 lines with a consensus label are wrong, i.e., an expected error rate of 0.09% in consensus labels. This error rate is lower than, e.g., the error rate if only two people had to agree. In this case, the random error rate would grow to about 0.37%. We fail to achieve consensus on 8.9% of all lines and 14.3% of lines in production code files. Our data indicates that most lines without consensus are not due to random mistakes, but rather due to disagreements between participants about whether a line contributes to the bug fix or not. This indicates that the lines without consensus are indeed hard to label. Our participant survey supports our empirical analysis of the labeled data. A possible problem with the reliability of the work could also be participant-dependent “default behavior” in case they were uncertain. For example, participants could have labeled lines as bug fixing in case they were not sure, which would reduce the reliability of our work and also possibly bias the results towards more bug fixing lines. However, we have no data regarding default behavior and believe that this should be studied in an independent and controlled setting.
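The type of binomial calculation referred to above can be sketched as follows. The function name `prob_at_least` and the mistake rate of 10% in the example are hypothetical and chosen only for illustration. The sketch assumes that all labels are independent and share the same mistake rate, and it counts any combination of mistakes regardless of whether the wrong labels coincide. It is therefore not expected to reproduce the exact figures reported in the text.

```python
from math import comb


def prob_at_least(k: int, n: int, p: float) -> float:
    """Probability of at least k random mistakes among n independent labels."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability between 0 and 1")
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Hypothetical mistake rate per label, for illustration only.
mistake_rate = 0.10

# Four labels per line, consensus requires three: a wrong consensus label
# needs at least three random mistakes.
four_labelers = prob_at_least(3, 4, mistake_rate)

# Two labels per line that must agree: both labels must be mistakes.
two_labelers = prob_at_least(2, 2, mistake_rate)

print(f"{four_labelers:.4%} vs. {two_labelers:.4%}")
```

Under these assumptions, requiring three of four labels to agree yields a lower random error rate than requiring two of two labels to agree for any mistake rate strictly between 0 and 1, which is the effect described above.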

4.2.4 Comparison to Prior Work

Due to the differences in approaches, we cannot directly compare our results with those of prior studies that quantified tangling. However, the fine-grained labeling of our data allows us to restrict our results to mimic settings from prior work. From the description in their paper, Herzig and Zeller (2013) flagged as tangled only those commits that contained source code changes for multiple issues or clean up of the code. Thus, we assume that they did not consider documentation changes or whitespace changes as tangling. This definition is similar to our definition of problematic tangling for program repair. The range of 12%–35% affected bugs that we estimate includes their lower bound of 15% on tangled commits. Thus, our work seems to replicate the findings from Herzig and Zeller (2013), even though our methodologies are completely different. Whether the same holds true for the 22% of tangled commits reported by Nguyen et al. (2013) is unclear, because they do not specify how they handle non-production code. Assuming they ignore non-production code, our results would replicate those by Nguyen et al. (2013) as well.

Kochhar et al. (2014) and Mills et al. (2020) both consider tangling for bug localization, but have conflicting results. Both studies defined tangling similarly to our definition of problematic tangling for bug localization, except that they did not restrict the analysis to production code files, but rather to all Java files. When we use the same criteria, we have between 39% and 48% problematic tangling in Java file changes. Similar to what we discussed in Section 4.2.2, the range is the result of treating lines without consensus either as bug fixes or as unrelated improvements. Thus, our results replicate the work by Mills et al. (2020) who found between 32% and 50% tangled file changes. We could not confirm the results by Kochhar et al. (2014) who found that 28% of file changes are problematic for bug localization.

Regarding the types of changes, we can only compare our work with the data from Nguyen et al. (2013) and Mills et al. (2020). The percentages are hard to compare, due to the different levels of abstraction we consider. However, we can compare the trends in our data with those of prior work. The results regarding tangled test changes are similar to Mills et al. (2020). For the other types of changes, the ratios we observe are more similar to the results reported by Mills et al. (2020) than those of Nguyen et al. (2013). We observe only few unrelated improvements, while these are as common as tangled documentation changes in the work by Nguyen et al. (2013). Similarly, Mills et al. (2020) observed about as many refactorings as documentation changes. In our data, documentation changes are much more common than refactorings, both in all changes as well as in changes to production code files. A possible explanation for this is the difference in methodology: refactoring and unrelated improvement labels are quite common in the lines without consensus. Thus, such changes are common among the difficult lines. This could mean that we underestimate the number of tangled refactorings and unrelated improvements, because many of them are hidden in the lines without consensus. However, Nguyen et al. (2013) and Mills et al. (2020) may also overestimate the number of unrelated improvements and refactorings, because they had too few labelers to have the active disagreements we observed. Regarding the other contradictions between the trends observed by Nguyen et al. (2013) and Mills et al. (2020), our results indicate that the findings by Mills et al. (2020) generalize to our data: we also find more refactorings than other unrelated improvements.

4.2.5 Anecdotal Evidence on Corner Cases

Due to the scope of our analysis, we also identified some interesting corner cases that should be considered in future work on bug fixes. Sometimes, only tests were added as part of a bug fixing commit, i.e., no production code was changed. Usually, this indicates that the reported issue was indeed not a bug and that the developers added the tests to demonstrate that the case works as expected. In this case, we have a false positive bug fixing commit, due to a wrong issue label. However, sometimes the label was correct, but the bug fixing commit still only added tests. An example of this is the issue IO-466 (Footnote 15), where the bug was present, but was already fixed as a side effect of the correction of IO-423. This means we have an issue that is indeed a bug and a correct link from the version control system to the issue tracking system, but we still have a false positive for the bug fixing commit. This shows that even if the issue types and issue links are manually validated, there may be false positives in the bug fixing commits, if there is no check that production code is modified.

Unfortunately, it is not as simple as that. We also found some cases where the linked commit only contained the modification of the change log, but no change to production code files. An example of this is NET-270 (Footnote 16). This is an instance of missing links: the actual bug fix is in the parent of the linked commit, which did not reference NET-270. Again, we have a correct issue type, correct issue link, but no bug fix in the commit, because no production code file was changed. However, a directly related fix exists and can be identified through manual analysis.

The question is how we should deal with such cases in future work. We do not have clear answers. Identifying that these commits do not contribute to the bug fix is trivial, because they do not change production code files. However, always discarding such commits as not bug fixing may remove valuable information, depending on the use case. For example, if we study test changes as part of bug fixing, the test case is still important. If we apply a manual correction, or possibly even identify a reliable heuristic, to find fixes in parent commits, the second case is still important. From our perspective, these examples show that even the best heuristics will always produce noise and that even with manual validations, there are not always clear decisions.
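A check for whether a bug fixing commit modifies production code at all could look like the following sketch. The rule in `is_production`, the function names, and the commit identifiers and paths in the example are hypothetical. In line with the discussion above, the flagged commits are meant as candidates for manual review, not as commits that should be discarded automatically.

```python
from typing import Dict, List


def is_production(path: str) -> bool:
    """Hypothetical rule: Java files outside of test directories."""
    return path.endswith(".java") and "/test/" not in f"/{path}"


def commits_without_production_changes(commits: Dict[str, List[str]]) -> List[str]:
    """Return the ids of commits that change no production code file."""
    return [commit_id for commit_id, paths in commits.items()
            if not any(is_production(path) for path in paths)]


# Hypothetical commits, given as a mapping from commit id to changed files.
linked_commits = {
    "c1": ["src/main/java/Foo.java", "src/test/java/FooTest.java"],
    "c2": ["src/test/java/BarTest.java"],   # only tests were added
    "c3": ["src/changes/changes.xml"],      # only the change log was modified
}
print(commits_without_production_changes(linked_commits))
```

The sketch flags both corner cases discussed in this section, i.e., commits that only add tests and commits that only modify the change log, but it cannot decide which of them still carry valuable information for a given use case.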