Abstract

Spatial skills can predict mathematics performance, with many researchers investigating how and why these skills are related. However, a literature review on spatial ability revealed a multiplicity of spatial taxonomies and analytical frameworks that lack convergence, presenting a confusing terrain for researchers to navigate. We expose two central challenges: (1) the ways spatial ability is defined and subdivided are often not based on well-evidenced theoretical and analytical frameworks, and (2) the sheer variety of spatial assessments available complicates the selection of appropriate measures. These challenges impede progress in designing spatial skills interventions for improving mathematics thinking based on causal principles, selecting appropriate metrics for documenting change, and analyzing and interpreting student outcome data. We offer solutions by providing a practical guide for navigating and selecting among the various major spatial taxonomies and instruments used in mathematics education research. We also identify current limitations of spatial ability research and suggest future research directions.

Introduction

Spatial ability can be broadly defined as imagining, maintaining, and manipulating spatial information and relations. Over the past several decades, researchers have found reliable associations between spatial abilities and mathematics performance (e.g., Newcombe, 2013; Young et al., 2018a). However, the sheer plurality of spatial taxonomies and analytical frameworks that scholars use to describe spatial skills, the lack of theoretically grounded spatial taxonomies, and the variety of spatial assessments available make it very difficult for education researchers to select appropriate spatial measures for their investigations. Education researchers also face the daunting task of selecting the ideal spatial skills to target when designing studies and interventions to enhance student learning and the development of reasoning in STEM (science, technology, engineering, and mathematics) more broadly. To address these needs, we provide a review that focuses on the relationship between spatial skills and mathematical thinking and learning. Our specific contribution is to offer a guide for educational researchers who recognize the importance of measuring spatial skills but who are themselves not spatial skills scholars. This guide will help researchers navigate and select among the various major taxonomies on spatial reasoning and among the various instruments for assessing spatial skills for use in mathematics education research.

We offer three central objectives for this paper. First, we aim to provide an updated review of the ways spatial ability is defined and subdivided. Second, we list some of the currently most widely administered instruments used to measure subcomponents of spatial ability. Third, we propose an organizational framework that acknowledges this complex picture and, rather than offering overly optimistic proposals for resolving long-standing complexities, offers ways for math education researchers to operate within this framework from an informed perspective. This review offers guidance through this complicated state of the literature to help STEM education researchers select appropriate spatial measures and taxonomies for their investigations, assessments, and interventions. We review and synthesize several lines of the spatial ability literature and provide researchers exploring the link between spatial ability and mathematics education with a guiding framework for research design. To foreshadow, this framework identifies three major design decisions that can help guide scholars and practitioners seeking to use spatial skills to enhance mathematics education research. The framework provides a theoretical basis to select: (1) a spatial ability taxonomy, (2) corresponding analytical frameworks, and (3) spatial tasks for assessing spatial performance (Fig. 1). This guiding framework is intended to provide educational researchers and practitioners with a common language and decision-making process for conducting research and instruction that engages learners’ spatial abilities. The intent is that investigators’ use of this framework may enhance their understanding of the associative and causal links between spatial and mathematical abilities, and thereby improve the body of mathematics education research and practice.

The Importance of Spatial Reasoning for Mathematics and STEM Education

Spatial ability has been linked to the entrance into, retention in, and success within STEM fields (e.g., Shea et al., 2001; Wolfgang et al., 2003), while deficiencies in spatial abilities have been shown to create obstacles for STEM education (Harris et al., 2013; Wai et al., 2009). Although spatial skills are not typically taught in the general K-16 curriculum, these lines of research have led some scholars to make policy recommendations for explicitly teaching children about spatial thinking as a viable way to increase STEM achievement and retention in STEM education programs and career pathways (Sorby, 2009; Stieff & Uttal, 2015). Combined, the findings suggest that spatial ability serves as a gateway for entry into STEM fields (Uttal & Cohen, 2012) and that educational institutions should consider the importance of explicitly training students’ spatial thinking skills as a way to further develop students’ STEM skills.

Findings from numerous studies have demonstrated that spatial ability is critical for many domains of mathematics education, including basic numeracy and arithmetic (Case et al., 1996; Gunderson et al., 2012; Hawes et al., 2015; Tam et al., 2019) and geometry (Battista et al., 2018; Davis, 2015), as well as more advanced topics such as algebra word problem-solving (Oostermeijer et al., 2014), calculus (Sorby et al., 2013), and interpreting complex quantitative relationships (Tufte, 2001). For example, scores on the mathematics portion of the Program for International Student Assessment (PISA) are significantly positively correlated with scores on tests of spatial cognition (Sorby & Panther, 2020). Broadly, studies have found evidence of the connections between success on spatial tasks and mathematics tasks in children and adults. For example, first-grade girls’ spatial skills were correlated with the frequency of retrieval and decomposition strategies when solving arithmetic problems (Laski et al., 2013), and these early spatial ability scores were the strongest predictors of their sixth-grade mathematics reasoning abilities (Casey et al., 2015). In adults (n = 101), spatial ability scores were positively associated with mathematics abilities measured through PISA mathematics questions (Schenck & Nathan, 2020).

Though there is a clear connection between spatial and mathematical abilities, understanding the intricacies of this relationship is difficult. Some scholars have sought to determine which mathematical concepts engage spatial thinking. For example, studies on specific mathematical concepts found spatial skills were associated with children’s one-to-one mapping (Gallistel & Gelman, 1992), missing-term problems (Cheng & Mix, 2014), mental computation (Verdine et al., 2014), and various geometry concepts (Hannafin et al., 2008). Schenck and Nathan (2020) identified associations between several specific sub-components of spatial reasoning and specific mathematics skills of adults. Specifically, adults’ mental rotation skills correlated with performance on questions about change and relationships, spatial orientation skills correlated with quantity questions, and spatial visualization skills correlated with questions about space and shape. Burte and colleagues (2017) proposed categories of mathematical concepts such as problem type, problem context, and spatial thinking level to target math improvements following spatial intervention training. Their study concluded that mathematics problems that included visual representations, were set in real-world contexts, and involved spatial thinking are more likely to show improvement after embodied spatial training.

However, these lines of work are complicated by the variety of problem-solving strategies students employ when solving mathematics problems and issues with generalizability. While some students may rely on a specific spatial ability to solve a particular mathematics problem, others may use non-spatial approaches or apply spatial thinking differently for the same assessment item. For example, some students solving graphical geometric problem-solving tasks utilized their spatial skills by constructing and manipulating mental images of the problem, while others created external representations such as isometric sketches, alleviating the need for some aspects of spatial reasoning (Buckley et al., 2019). Though this difference could be attributed to lower spatial abilities in the students who used external representations, it could also be attributed to high levels of discipline-specific knowledge seen in domains such as geoscience (Hambrick et al., 2012), physics (Kozhevnikov & Thorton, 2006), and chemistry (Stieff, 2007). Though some amount of generalization is needed in spatial and mathematics education research, investigators should take care not to overgeneralize findings about connections between specific spatial abilities and specific mathematics domains.

This selective review shows ample reasons to attend to spatial abilities in mathematics education research and the design of effective interventions. However, studies across this vast body of work investigating the links between spatial abilities and mathematics performance use different spatial taxonomies, employ different spatial measures, and track improvement across many different topics of mathematics education. This variety makes it difficult for mathematics education scholars to draw clear causal lines between specific spatial skills interventions and specific mathematics educational improvements and for educators to follow clear guidance as to how to improve mathematical reasoning through spatial skills development.

The Varieties of Approaches to Explaining the Spatial-Mathematics Connection

Meta-analyses have suggested that domain-general reasoning skills such as fluid reasoning and verbal skills may mediate the relationships between spatial and mathematical skills (Atit et al., 2022), and that the mathematical domain is a moderator with the strongest association between logical reasoning and spatial skills (Xie et al., 2020). Despite these efforts, the specific nature of these associations remains largely unknown. Several lines of research have suggested processing requirements shared among mathematical and spatial tasks could account for these associations. Brain imaging studies have shown similar brain activation patterns in both spatial and mathematics tasks (Amalric & Dehaene, 2016; Hawes & Ansari, 2020; Hubbard et al., 2005; Walsh, 2003). Hawes and Ansari’s (2020) review of psychology, neuroscience, and education spatial research described four possible explanatory accounts (spatial representations of numbers, shared neuronal processing, spatial modeling, and working memory) for how spatial visualization was linked to numerical competencies. They suggest integrating the four accounts into a single underlying mechanism that explains lasting neural and behavioral correlations between spatial and numerical processes. In a study of spatial and mathematical thinking, Mix et al. (2016) showed a strong within-domain factor structure and overlapping variance irrespective of task-specificity. They proposed that the ability to recognize and decompose objects (i.e., form perception), visualize spatial information, and relate distances in one space to another (i.e., spatial scaling) are shared processes required when individuals perform a range of spatial reasoning and mathematical reasoning tasks.

Efforts to date to document the relationship between mathematics performance and spatial skills or to enhance mathematics through spatial skills interventions show significant limitations in their theoretical framing. Most notably, there is currently no commonly accepted definition of spatial ability or its exact sub-components in the literature (Carroll, 1993; Lohman, 1988; McGee, 1979; Michael et al., 1957; Yilmaz, 2009). For example, many studies designed to investigate and improve spatial abilities have tended to focus on either a particular spatial sub-component or a particular mathematical skill. Much of the research has primarily focused on measuring only specific aspects of object-based spatial ability, such as mental rotation. Consequently, there is insufficient guidance for mathematics and STEM education researchers to navigate the vast landscape of spatial taxonomies and analytical frameworks, select the most appropriate measures for documenting student outcomes, design potential interventions targeting spatial abilities, select appropriate metrics, and analyze and interpret outcome data.

One notable program of research that has been particularly attentive to the spatial qualities of mathematical reasoning is the work by Battista et al. (2018). They collected think-aloud data about emerging spatial descriptions from individual interviews and teaching experiments with elementary and middle-grade students to investigate the relationship between spatial reasoning and geometric reasoning. Across several studies, the investigators seldom observed the successful application of generalized object-based spatial skills of the type typically measured by psychometric instruments of spatial ability. Rather, they found that students’ geometric reasoning succeeded when “spatial visualization and spatial analytic reasoning [were] based on operable knowledge of relevant geometric properties of the spatial-geometric objects under consideration” (Battista et al., 2018, p. 226; emphasis added). By highlighting the ways that one’s reasoning aligns with geometric properties, Battista and colleagues shifted the analytic focus away from both general psychological constructs, which can be vague and overly broad, and narrow sets of task-specific skills, toward intermediate-level constructs that are relevant for describing topic- and task-specific performance while identifying forms of reasoning that may generalize beyond the specific tasks and objects at hand. For example, property-based spatial analytic reasoning might focus on an invariant geometric property, such as the property of rectangles that their diagonals always bisect each other, to guide the decomposition and transformation of rectangles and their component triangles in service of a geometric proof. Establishing bridges and analytic distinctions between education domain-centric analyses of this sort and traditional psychometric accounts about domain-general spatial abilities is central to our review and broader aims to relate mathematical reasoning processes to spatial processes.

Selecting a Spatial Taxonomy

As noted, a substantial body of empirical evidence indicates that students’ spatial abilities figure into their mathematical reasoning, offering promising pathways toward interventions designed to improve math education. To capitalize on this association, one of the first decisions mathematics education researchers must make is selecting a spatial taxonomy that suits the data collected and analyzed. A spatial taxonomy is an organizational system for classifying spatial abilities and, thus, serves an important role in shaping the theoretical framework for any inquiry as well as interpreting and generalizing findings from empirical investigations. However, the manner in which spatial abilities are subdivided, defined, and named has changed over the decades of research on this topic. In practice, the decision for how to define and select spatial abilities is often difficult for researchers who are not specialists due to the expansive literature in this area.

In an attempt to make the vast number of spatial definitions and subcomponents more navigable for mathematics researchers and educators, we describe three general types of spatial taxonomies that are reflected in the current literature: those that (1) classify according to different specific spatial abilities, (2) distinguish between different broad spatial abilities, and (3) treat spatial abilities as derived from a single, or unitary, factor structure. Although this is not a comprehensive account, these spatial taxonomies were chosen to highlight the main sub-factor dissociations in the literature.

Specific-Factor Structures

Since the earliest conceptualization (e.g., Galton, 1879), the communities of researchers studying spatial abilities have struggled to converge on one all-encompassing definition or provide a complete list of its subcomponents. Though the literature provides a variety of definitions of spatial ability that focus on the capacity to visualize and manipulate mental images (e.g., Battista, 2007; Gaughran, 2002; Lohman, 1979; Sorby, 1999), some scholars posit that it may be more precise to define spatial ability as a constellation of quantifiably measurable skills based on performance on tasks that load on specific individual spatial factors (Buckley et al., 2018). Difficulties directly observing the cognitive processes and neural structures involved in spatial reasoning have, in practice, spurred substantive research focused on uncovering the nature of spatial ability and its subcomponents. Historically, scholars have used psychometric methods to identify a variety of specific spatial subcomponents, including closure flexibility/speed (Carroll, 1993), field dependence/independence (McGee, 1979; Witkin, 1950), spatial relations (Carroll, 1993; Lohman, 1979), spatial orientation (Guilford & Zimmerman, 1948), spatial visualization (Carroll, 1993; McGee, 1979), and speeded rotation (Lohman, 1988). However, attempts to dissociate subfactors were often met with difficulty due to differing factor analytic techniques and variations in the spatial ability tests that were used (D'Oliveira, 2004). The subsequent lack of cohesion in this field of study led to different camps of researchers adopting inconsistent names for spatial subcomponents (Cooper & Mumaw, 1985; McGee, 1979) and divergent factorial frameworks (Hegarty & Waller, 2005; Yilmaz, 2009). Such a lack of convergence is clearly problematic for the scientific study of spatial ability and its application to mathematics education research.

In the last few decades, several attempts have been made to dissociate subcomponents of spatial ability further. Yilmaz (2009) combined aspects of the models described above with studies identifying dynamic spatial abilities and environmental spatial abilities to divide spatial ability into eight factors, which acknowledge several spatial skills (e.g., environmental ability and spatiotemporal ability) needed in real-life situations. More recently, Buckley et al. (2018) proposed an extended model for spatial ability. This model combines many ideas from the previously described literature and the spatial factors identified in the Cattell-Horn-Carroll theory of intelligence (see Schneider & McGrew, 2012). It currently includes 25 factors that can also be divided into two broader categories of static and dynamic, with the authors acknowledging that additional factors may be added as research warrants. It is unclear how a dissociation of this many subfactors could be practically applied in empirical research, which we regard as an important goal for bridging theory and research practices.

Dissociation Between Spatial Orientation and Rotational Spatial Visualization

Though specific definitions vary, many authors of the models discussed above agree on making a dissociation between spatial orientation and visualization skills. While perspective-taking tasks (a subfactor of spatial orientation) and rotational spatial visualization tasks both often involve a form of rotation, several studies have indicated that these skills are psychometrically separable. Measures for these skills often ask participants to anticipate the appearance of arrays of objects after either a rotation (visualization) of the objects or a change in the objects’ perspective (perspective-taking). Findings show that visualization and perspective-taking tasks have different error patterns and activate different neural processes (e.g., Huttenlocher & Presson, 1979; Kozhevnikov & Hegarty, 2001; Wraga et al., 2000). Perspective rotation tasks often lead to egocentric errors such as reflection errors when trying to reorient perspectives, while object rotation task errors are not as systematic (Kozhevnikov & Hegarty, 2001; Zacks et al., 2000). For example, to solve a spatial orientation/perspective-taking task (Fig. 2A), participants may imagine their bodies moving to a new position or viewpoint with the objects of interest remaining stationary. In contrast, the objects in a spatial visualization task are often rotated in one’s imagination (Fig. 2B). Behavioral and neuroscience evidence is consistent with these findings, suggesting a dissociation between an object-to-object representational system and a self-to-object representational system (Hegarty & Waller, 2004; Kosslyn et al., 1998; Zacks et al., 1999). Thus, within the specific-factor structure of spatial ability, spatial orientation/perspective-taking can be considered a separate factor from spatial visualization/mental rotation (Thurstone, 1950).

Fig. 2 Exemplars of spatial orientation, mental rotation, and non-rotational spatial visualization tasks. The spatial orientation task (A) is adapted from Hegarty and Waller’s (2004) Object Perspective/Spatial Orientation Test. The mental rotation task (B) is adapted from Vandenberg and Kuse’s (1978) Mental Rotation Test. The non-rotational spatial visualization task (C) is adapted from Ekstrom et al.’s (1976) Paper Folding Task.

Dissociation Between Mental Rotation and Non-rotational Spatial Visualization

The boundaries between specific factors of spatial ability are often blurred and context dependent. To address this, Ramful and colleagues (2017) have created a three-factor framework that clarifies the distinctions between spatial visualization and spatial orientation (see the “Dissociation Between Spatial Orientation and Rotational Spatial Visualization” section) by treating mental rotation as a separate factor. Their framework is unique in that they used mathematics curricula, rather than solely basing their analysis on a factor analysis, to identify three sub-factors of spatial ability: (1) mental rotation, (2) spatial orientation, and (3) spatial visualization. Mental rotation describes how one imagines how a two-dimensional or three-dimensional object would appear after it has been turned (Fig. 2B). Mental rotation is a cognitive process that has received considerable attention from psychologists (Bruce & Hawes, 2015; Lombardi et al., 2019; Maeda & Yoon, 2013). Spatial orientation, in contrast, involves egocentric representations of objects and locations and includes the notion of perspective-taking (Fig. 2A). Spatial visualization in their classification system (previously an umbrella term for many spatial skills that included mental rotation) describes mental transformations that do not require mental rotation or spatial orientation (Linn & Peterson, 1985) and can be measured through tasks like those shown in Fig. 2C that involve operations such as paper folding and unfolding. Under this definition, spatial visualization may involve complex sequences in which intermediate steps may need to be stored in spatial working memory (Shah & Miyake, 1996). In mathematics, spatial visualization skills often correlate with symmetry, geometric translations, part-to-whole relationships, and geometric nets (Ramful et al., 2017).

Summary and Implications

As described above, decades of research on spatial ability have involved scholars using factor-analytic methods to identify and define various spatial sub-components. The results of these efforts have created a multitude of specific-factor structures, with models identifying anywhere from two to 25 different spatial subcomponents. However, there are two dissociations that may be particularly important for mathematics education research. The first is the dissociation between spatial orientation and spatial visualization abilities. Spatial orientation tasks typically involve rotating one’s perspective for viewing an object or scene, while spatial visualization tasks require imagining object rotation. The second dissociation is between mental rotation and non-rotational spatial visualization. While this distinction is relatively recent, it separates the larger spatial visualization sub-component into tasks that involve rotating imagined objects and tasks that involve a sequence of visualization steps requiring neither mental rotation nor spatial orientation. The historical focus on psychometric accounts of spatial ability strove to identify constructs that could apply generally to various forms of reasoning, yet it has contributed to a complex literature that may be difficult for scholars who are not steeped in the intricacies of spatial reasoning research to parse and effectively apply to mathematics education.

Studies of mathematical reasoning and learning that rely on specific-factor structures can yield different results and interpretations depending on their choices of factors. For example, Schenck et al. (2022) fit several models using different spatial sub-factors to predict undergraduates’ production of verbal mathematical insights. The authors demonstrated that combining mental rotation and non-rotational spatial visualization into a single factor (per McGee, 1979) rather than separating them (per Ramful et al., 2017) can lead to conflicting interpretations of the relevance of these skills for improving mathematics. Some scholars argue that a weakness of many traditional specific-factor structures of spatial ability is that they rely on exploratory factor analysis rather than confirmatory factor analyses informed by a clear theoretical basis of spatial ability (Uttal et al., 2013; Young et al., 2018b). Finding differing results based on small and reasonable analytic choices presents a serious problem for reaching convergence on the role of particular spatial abilities in particular mathematics concepts.
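
The sensitivity to factor choice described above can be illustrated with a small simulation. The following is a minimal sketch on synthetic data, not a reanalysis of Schenck et al. (2022). The function names, the sample size, the correlation of roughly 0.5 between the two skills, and the assumption that only non-rotational visualization drives the outcome are all hypothetical choices made for illustration.

```python
import numpy as np


def fit_ols(predictors, outcome):
    """Return ordinary least squares slopes (the intercept is dropped)."""
    design = np.column_stack([np.ones(len(outcome)), *predictors])
    coefs, *_ = np.linalg.lstsq(design, outcome, rcond=None)
    return coefs[1:]


def simulate_and_fit(seed=0, n=2000):
    """Fit a combined-factor and a separated-factor model to synthetic scores."""
    rng = np.random.default_rng(seed)

    # Hypothetical standardized scores: the two skills share half their variance.
    shared = rng.normal(size=n)
    mental_rotation = np.sqrt(0.5) * shared + np.sqrt(0.5) * rng.normal(size=n)
    visualization = np.sqrt(0.5) * shared + np.sqrt(0.5) * rng.normal(size=n)

    # Assumption for illustration: only non-rotational visualization
    # contributes to the (synthetic) mathematics outcome.
    math_score = visualization + rng.normal(size=n)

    # Model A: one combined factor (average of the two scores).
    composite = (mental_rotation + visualization) / 2
    (b_composite,) = fit_ols([composite], math_score)

    # Model B: the two skills entered as separate predictors.
    b_rotation, b_visualization = fit_ols(
        [mental_rotation, visualization], math_score
    )

    return {
        "combined": {"composite": float(b_composite)},
        "separate": {
            "mental_rotation": float(b_rotation),
            "visualization": float(b_visualization),
        },
    }


if __name__ == "__main__":
    for model, slopes in simulate_and_fit().items():
        print(model, {name: round(value, 2) for name, value in slopes.items()})
```

Under these assumptions, the combined model yields a sizeable slope for the composite, which a reader might take as evidence that both skills matter, whereas the separated model attributes the effect to visualization alone. Which specification is appropriate depends on the taxonomy a study adopts, which is the decision this section is meant to inform.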

Broad-Factor Structures

Alternative approaches to factor-analytic methods rely on much broader distinctions between spatial ability subcomponents. We refer to these alternatives as broad-factor structure approaches since their categorizations align with theoretically motivated combinations of specific spatial ability subfactors. Some scholars who draw on broad-factor structures have argued for a partial dissociation between large-scale and small-scale spatial abilities (Ferguson et al., 2015; Hegarty et al., 2006, 2018; Jansen, 2009; Potter, 1995). Large-scale spatial abilities involve reasoning about larger-scale objects and space, such as physical navigation and environmental maps. Small-scale spatial abilities are defined as those that predominantly rely on mental transformations of shapes or objects (e.g., mental rotation tasks). A meta-analysis (Wang et al., 2014) examining the relationship between small- and large-scale abilities provided further evidence that these two factors should be defined separately. Hegarty et al. (2018) recommend measuring large-scale abilities through sense-of-direction measures and navigation activities. These scholars suggest that small-scale abilities, such as mental rotation, may be measured through typical spatial ability tasks like those discussed in the “Choosing Spatial Tasks in Mathematics Education Research” section of this paper.

Other lines of research that use broad-factor structures have drawn on linguistic, cognitive, and neuroscientific findings to develop a 2 × 2 classification system that distinguishes between intrinsic and extrinsic information along one dimension, and static and dynamic tasks along an orthogonal dimension (Newcombe & Shipley, 2015; Uttal et al., 2013). Intrinsic spatial skills involve attention to a single object's spatial properties, while extrinsic spatial skills predominantly rely on attention to the spatial relationships between objects. The second dimension in this classification system defines static tasks as those that involve recognizing and thinking about objects and their relations. In contrast, dynamic tasks often move beyond static coding of the spatial features of an object and its relations to imagining spatial transformations of one or more objects.

Uttal and colleagues (2013) describe how this 2 × 2 broad-factor classification framework can be mapped onto Linn and Peterson’s (1985) three-factor model, breaking spatial ability into spatial perception, mental rotation, and spatial visualization sub-factors. Spatial visualization tasks fall into the intrinsic classification and can address static and dynamic reasoning depending on whether the objects are unchanged or require spatial transformations. The Embedded Figures Test (Fig. 3A; Witkin et al., 1971) is an example of an intrinsic-static classification, while Ekstrom and colleagues’ (1976) Form Board Test and Paper Folding Test (Fig. 3B) are two examples of spatial visualization tasks that measure the intrinsic-dynamic classification. Mental rotation tasks (e.g., the Mental Rotations Test of Vandenberg & Kuse, 1978) also represent the intrinsic-dynamic category. Spatial perception tasks (e.g., water level tasks; Fig. 3C; see Inhelder & Piaget, 1958) capture the extrinsic-static category in the 2 × 2 because they require coding spatial position information between objects or gravity without manipulating them. Furthermore, Uttal et al. (2013) address a limitation of Linn and Peterson’s (1985) model by including the extrinsic/dynamic classification, which they note can be measured through spatial orientation and navigation instruments such as the Guilford-Zimmerman Spatial Orientation Task (Fig. 3D; Guilford & Zimmerman, 1948).

Fig. 3 Exemplar tasks that map to Uttal and colleagues’ (2013) framework. The intrinsic-static task (A) is adapted from Witkin and colleagues’ (1971) Embedded Figures Test. The intrinsic-dynamic task (B) is adapted from Ekstrom and colleagues’ (1976) Paper Folding Task. The extrinsic-static task (C) is adapted from Piaget and Inhelder’s (1956) water level tasks. The extrinsic-dynamic task (D) is adapted from Guilford and Zimmerman’s (1948) Spatial Orientation Survey Test.
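
The mapping from instruments to cells of the 2 × 2 classification can be written down as a small lookup table, which may help when checking which cells a planned test battery covers. The sketch below encodes only the task-to-cell assignments stated in the text above. The class names, the shortened task labels, and the helper functions are hypothetical and are not part of any published instrument or software.

```python
from collections import defaultdict
from enum import Enum


class Scope(Enum):
    INTRINSIC = "intrinsic"   # spatial properties of a single object
    EXTRINSIC = "extrinsic"   # spatial relations between objects


class Motion(Enum):
    STATIC = "static"         # coding objects and relations as given
    DYNAMIC = "dynamic"       # imagining spatial transformations


# Task-to-cell assignments as described in the text (labels shortened).
TASK_CELLS = {
    "Embedded Figures Test": (Scope.INTRINSIC, Motion.STATIC),
    "Form Board Test": (Scope.INTRINSIC, Motion.DYNAMIC),
    "Paper Folding Test": (Scope.INTRINSIC, Motion.DYNAMIC),
    "Mental Rotations Test": (Scope.INTRINSIC, Motion.DYNAMIC),
    "Water level task": (Scope.EXTRINSIC, Motion.STATIC),
    "Guilford-Zimmerman Spatial Orientation": (Scope.EXTRINSIC, Motion.DYNAMIC),
}


def cell_label(scope, motion):
    """Format a cell of the 2 x 2 as, e.g., 'intrinsic-dynamic'."""
    return f"{scope.value}-{motion.value}"


def tasks_by_cell(task_cells=TASK_CELLS):
    """Group task names under the cell they are assigned to."""
    grouped = defaultdict(list)
    for task, (scope, motion) in task_cells.items():
        grouped[cell_label(scope, motion)].append(task)
    return dict(grouped)


def uncovered_cells(selected_tasks, task_cells=TASK_CELLS):
    """List the cells that a chosen battery of tasks leaves unmeasured."""
    covered = {task_cells[task] for task in selected_tasks}
    all_cells = {(scope, motion) for scope in Scope for motion in Motion}
    return sorted(cell_label(s, m) for s, m in all_cells - covered)


if __name__ == "__main__":
    battery = ["Mental Rotations Test", "Paper Folding Test"]
    print(tasks_by_cell())
    print("Not covered by battery:", uncovered_cells(battery))
```

For instance, a battery consisting only of the Mental Rotations Test and the Paper Folding Test would cover the intrinsic-dynamic cell and leave the other three cells unmeasured. A lookup of this kind assumes each task belongs to exactly one cell, which is itself a simplification.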

Though Uttal et al.’s (2013) classification provides a helpful framework for investigating spatial ability and its links to mathematics (Young et al., 2018b), it faces several challenges. Some critics posit that spatial tasks often require a combination of spatial subcomponents and cannot be easily mapped onto one domain in the framework (Okamoto et al., 2015). For example, a think-aloud task might ask students to describe a different viewpoint of an object. The student may imagine a rotated object (intrinsic-dynamic), imagine moving their body to the new viewpoint (extrinsic-dynamic), use a combination of strategies, or employ a non-spatial strategy such as logical deduction. Additionally, an experimental study by Mix et al. (2018) testing the 2 × 2 classification framework using confirmatory factor analysis on data from children in kindergarten, 3rd, and 6th grades failed to find evidence for the static-dynamic dimension at any age or for the overall 2 × 2 classification framework. This study demonstrates that there are limitations to this framework in practice. It suggests that other frameworks with less dimensionality may be more appropriate for understanding children's spatial abilities.

Even in light of these challenges, broad-factor taxonomies can benefit researchers who do not expect specific sub-factors of spatial ability to be relevant for their data or those controlling for spatial ability as part of an investigation of a related construct. Currently, no validated and reliable instruments have been explicitly designed to assess these broad-factor taxonomies. Instead, the scholars proposing these broad-factor taxonomies suggest mapping existing spatial tasks, which are usually tied to specific sub-factors of spatial ability, to the broader categories.

Unitary-Factor Structure

Many scholars understand spatial ability to be composed of a set of specific or broad factors. Neuroimaging studies have even provided preliminary evidence of a distinction between object-based abilities such as mental rotation and orientation skills (e.g., Kosslyn & Thompson, 2003). However, there is also empirical support for considering spatial ability as a unitary construct. Early studies (Spearman, 1927; Thurstone, 1938) identified spatial ability as one factor separate from general intelligence that mentally operates on spatial or visual images. Evidence for a unitary model of spatial ability points to a common genetic network that supports all spatial abilities (Malanchini et al., 2020; Rimfeld et al., 2017). When a battery of 10 gamified measures of spatial abilities was given to 1,367 twin pairs, results indicated that the tests assessed a single spatial ability factor and that the one-factor model of spatial ability fit better than the two-factor model, even when controlling for a common genetic factor (Rimfeld et al., 2017). In another study, Malanchini et al. (2020) administered 16 spatial tests clustered into three main sub-components: Visualization, Navigation, and Object Manipulation. They then conducted a series of confirmatory factor analyses to fit one-factor (Spatial Ability), two-factor (Spatial Orientation and Object Manipulation), and three-factor models (Visualization, Navigation, and Object Manipulation). The one-factor model gave the best model fit, even when controlling for general intelligence.

A unitary structure is beneficial for researchers interested in questions about general associations between mathematics and spatial ability or for those using spatial ability as a moderator in their analyses. However, to date, no valid and reliable instruments have been created to fit within the unitary taxonomy, such as those that include various spatial items. Instead, researchers who discuss spatial ability as a unitary construct often choose one or multiple well-known spatial measures based on a particular sub-factor of spatial ability (e.g., Boonen et al., 2013; Burte et al., 2017). This issue motivates the need for an evidence-based, theory-grounded task selection procedure as well as the need to develop a unitary spatial cognition measure. In the absence of a single spatial cognition measure designed to assess spatial ability from a unitary perspective, researchers will need to think critically about selecting measures and analytic frameworks for their studies to cover a range of spatial ability sub-factors and address the limitations of such decisions.

Summary

This section reviewed ways spatial abilities have been historically defined and subdivided, with a focus on three of the most widely reported taxonomies: specific-factor structure, broad-factor structure, and unitary structure. The specific-factor structure taxonomy includes subcomponents, such as spatial orientation and rotational and non-rotational spatial visualization, that primarily arise using factor-analytic methods such as exploratory factor analyses. However, discrepancies in factor analytic techniques and test variations led to divergent nomenclature and factorial frameworks. A few dissociations in spatial skills arose from these well-supported methods, such as the distinction between spatial orientation and perspective-taking. The broad-factor structures taxonomy dissociates spatial abilities based on theoretically motivated categories, such as large-scale and small-scale spatial abilities. While these classifications may be helpful for investigating the links between spatial abilities and mathematics, there is currently no empirical evidence to support using these frameworks in practice. The unitary structure taxonomy is based on factor-analytic evidence for a single, overarching spatial ability factor that is separate from general intelligence. Despite the potential advantages of simplicity, there are currently no valid and reliable instruments for measuring a single spatial factor, so a single spatial score must either be based on an instrument that measures a specific factor or be imputed across multiple instruments. An additional complexity of directly applying existing measures to mathematics education research is that mathematical task performance often involves a variety of spatial and non-spatial skills.

Choosing Spatial Tasks in Mathematics Education Research

The context of mathematical reasoning and learning often leads to scenarios where the choice of spatial sub-components influences interpretations. Given the complex nature of spatial ability and the reliance on exploratory rather than confirmatory analyses, there is a need for dissociation approaches with clearer theoretical foundations. Due to the absence of comprehensive spatial cognition measures that address the possible broad-factor and unitary structure of spatial ability, researchers often resort to well-established spatial measures focusing on specific sub-factors, necessitating critical consideration in task selection and analytical frameworks. Thus, there is a need for evidence-based, theory-grounded task selection procedures to help address the current limitations in spatial ability as it relates to mathematics education research.

With so many spatial ability taxonomies to choose from, education researchers must carefully select tasks and surveys that match their stated research goals and theoretical frameworks, the spatial ability skills of interest, and the populations under investigation. As mentioned, mathematics education researchers often select spatial tasks based on practical motivations, such as access or familiarity, rather than theoretical ones. These decisions can be complicated by the vast number of spatial tasks, with little guidance for which ones best align with the various spatial taxonomies. In recent years, there has been a concerted effort by groups such as the Spatial Intelligence and Learning Center (spatiallearning.org) to collect and organize a variety of spatial measurements in one place. However, there is still work to be done to create a list of spatial instruments that researchers can easily navigate. To help guide researchers with these decisions, we have compiled a list of spatial instruments referenced in this paper and matched them with their associated spatial sub-components and intended populations (Table 1). These instruments primarily consist of psychometric tests initially designed to determine suitability for occupations such as in the military before being adapted for use with university and high school students (Hegarty & Waller, 2005). As such, the majority of instruments are intended to test specific spatial sub-components derived from factor-analytic methods and were created by psychologists for use in controlled laboratory-based studies rather than in classroom contexts (Atit et al., 2020; Lowrie et al., 2020). Therefore, we have organized Table 1 by specific spatial sub-components described in the “Specific-Factor Structures” section that overlap with skills found in mathematics curricula as proposed by Ramful and colleagues (2017).

Comparing the instruments in these ways reveals several important gaps that must be addressed to measure spatial cognition in a way that correlates with mathematics and spatial abilities across the lifespan. In particular, this analysis reveals an over-representation of certain spatial sub-components, such as mental rotation and spatial visualization, which also map to quadrants of the 2 × 2 (intrinsic-extrinsic/static-dynamic) classification system described in the “Broad-Factor Structures” section. It shows a pressing need for more tasks explicitly designed for other broad sub-components, such as the extrinsic-static classification. It also reveals that the slate of available instruments is dominated by tasks that have only been tested on adults and includes few measures that test more than one subcomponent. These disparities are important for educational considerations and are taken up in the final section.

Due to the sheer number of spatial tasks, the observation that these tasks may not load consistently on distinct spatial ability factors, and the lack of tasks that address broad and unitary factor structures, it is not possible in the scope of this review to discuss every task-factor relationship. As a practical alternative, we have grouped spatial ability tasks into three aggregated categories based on their specific-factor dissociations, as discussed in the previous section: spatial orientation tasks, non-rotational spatial visualization tasks, and mental rotation tasks (for examples, see Fig. 2). We have chosen these three categories for two reasons: (1) there is empirical evidence linking these spatial sub-categories to mathematical thinking outcomes, and (2) these categories align with Ramful et al.’s (2017) three-factor framework, which is one of the only spatial frameworks that was designed with links to mathematical thinking in mind. We acknowledge that other scholars may continue to identify different aggregations of spatial reasoning tasks, including those used with mechanical reasoning and abstract reasoning tasks (e.g., Tversky, 2019; Wai et al., 2009). In our aggregated categories, mechanical reasoning tasks would align with either mental rotation or non-rotational tasks depending on the specific task demands. In contrast, abstract reasoning tasks would align most closely with non-rotational spatial visualization tasks.

As there are no universally accepted measures of spatial ability for each spatial factor, we have narrowed our discussion to include exemplars of validated, cognitive, paper-and-pencil spatial ability tasks. These tasks have been historically associated with various spatial ability factors rather than merely serving as measures of general intelligence or visuospatial working memory (Carroll, 1993) and are easily implemented and scored by educators and researchers without specialized software or statistical knowledge. Notably, this discussion of spatial ability tasks and instruments excludes self-report questionnaires such as the Navigational Strategy Questionnaire (Zhong & Kozhevnikov, 2016) and the Santa Barbara Sense of Direction Scale (Hegarty et al., 2002); navigation simulations such as the Virtual SILC Test of Navigation (Weisberg et al., 2014) and SOIVET-Maze (da Costa et al., 2018); and tasks that involve physical manipulation such as the Test of Spatial Ability (Verdine et al., 2014). As such, we were unable to find any published, validated instruments for large-scale spatial orientation, a sub-factor of spatial orientation, that meet our inclusion criteria.

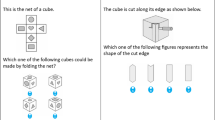

Additionally, we would like to highlight one instrument that does not fit into the categories presented in the following sections but may be of use to researchers. The Spatial Reasoning Instrument (SRI; Ramful et al., 2017) is a multiple-choice test that consists of three spatial subscales (spatial orientation, spatial visualization, and mental rotation). Notably, the questions that measure spatial visualization are specifically designed not to require mental rotation or spatial orientation. Unlike previously mentioned instruments, the SRI is not a speed test, though students are given a total time limit. This instrument targets middle school students and was designed to align more closely with students’ mathematical curricular experiences rather than a traditional psychological orientation. Mathematical connections in the SRI include visualizing lines of symmetry, using two-dimensional nets to answer questions about corresponding three-dimensional shapes, and reflecting objects.

In the next sections, we detail the types of tasks and instruments commonly used to measure spatial orientation, non-rotational spatial visualization, and mental rotation. Ultimately, these help form a guide for navigating and selecting among the various instruments for assessing spatial skills in relation to mathematical reasoning.

Spatial Orientation Tasks

Much like spatial ability more generally, spatial orientation skills fit into the broad distinctions of large-scale (e.g., wayfinding, navigation, and scaling abilities) and small-scale (e.g., perspective-taking and directional sense) skills, with small-scale spatial orientation skills being shown to be correlated with larger-scale spatial orientation skills (Hegarty & Waller, 2004; Hegarty et al., 2002). Aspects of mathematical thinking that may involve spatial orientation include scaling, reading maps and graphs, identifying orthogonal views of objects, and determining position and location. Although few empirical studies have attempted to determine statistical associations between spatial orientation and mathematics, spatial orientation has been correlated with some forms of scholastic mathematical reasoning. One area of inquiry showed associations between spatial orientation and early arithmetic and number line estimation (Cornu et al., 2017; Zhang & Lin, 2015). In another, spatial orientation skills were statistically associated with problem-solving strategies and flexible strategy use during high school-level geometric and non-geometric tasks (Tartre, 1990). Studies of disoriented children as young as three years old show that they reorient themselves based on the Euclidean geometric properties of distance and direction, which may contribute to children's developing abstract geometric intuitions (Izard et al., 2011; Lee et al., 2012; Newcombe et al., 2009).

Historically, the Guilford-Zimmerman (GZ) Spatial Orientation Test (1948) was used to measure spatial orientation. Critics have shown that this test may be too complicated and confusing for participants (Kyritsis & Gulliver, 2009) and that the task involves both spatial orientation and spatial visualization (Lohman, 1979; Schultz, 1991). To address these problems with the GZ Spatial Orientation Test, Kozhevnikov and Hegarty (2001) developed the Object Perspective Taking Test, which was later revised into the Object Perspective/Spatial Orientation Test (see Fig. 2A; Hegarty & Waller, 2004). Test takers are prevented from physically moving the test booklet, and all items involve an imagined perspective change of at least 90°. Unlike previous instruments, results from the Object Perspective/Spatial Orientation Test showed a dissociation between spatial orientation and spatial visualization factors (though they were highly correlated) and correlated with self-reported judgments of large-scale spatial cognition. A similar instrument, the Perspective Taking Test for Children, has been developed for younger children (Frick et al., 2014a, 2014b). Additionally, simpler versions of these tasks that ask participants to match an object to one that has been drawn from an alternative point of view have also been used, such as those in the Spatial Reasoning Instrument (Ramful et al., 2017).

Non-Rotational Spatial Visualization Tasks

With differing definitions of spatial visualization, measures of this spatial ability sub-component often include tasks that evaluate other spatial ability skills, such as cross-sectioning tasks (e.g., the Mental Cutting Test, CEEB, 1939; the Santa Barbara Solids Test, Cohen & Hegarty, 2012), that may require elements of spatial orientation or mental rotation. Though these tasks may be relevant for mathematical thinking, this section focuses on tasks that do not overtly require mental rotation. Non-rotational spatial visualization may be involved in several aspects of mathematical thinking, including reflections (Ramful et al., 2015), visual-spatial geometry (Hawes et al., 2017; Lowrie et al., 2019), visualizing symmetry (Ramful et al., 2015), symbolic comparison (Hawes et al., 2017), and imagining problem spaces (Fennema & Tartre, 1985). A recent study by Lowrie and Logan (2023) posits that developing students’ non-rotational spatial visualization abilities may be related to better mathematics scores by improving students’ generalized mathematical reasoning skills and spatial working memory.

The three tests for non-rotational spatial visualization come from the Kit of Factor-Referenced Cognitive Tests developed by Educational Testing Service (Ekstrom et al., 1976). These instruments were developed for research on cognitive factors in adult populations. The first instrument is the Paper Folding Test (PFT), one of the most commonly used tests for measuring spatial visualization (see Fig. 2C). In this test, participants view diagrams of a square sheet of paper being folded and then punched with a hole. They are asked to select the picture that correctly shows the resulting holes after the paper is unfolded. Though this task assumes participants imagine unfolding the paper without the need to rotate, studies have shown that problem attributes (e.g., number and type of folds and fold occlusions) impact PFT accuracy and strategy use (Burte et al., 2019a).

The second instrument is the Form Board Test. Participants are shown an outline of a complete geometric figure with a row of five shaded pieces. The task is to decide which of the shaded pieces will make the complete figure when put together. During the task, participants are told that the pieces can be turned but not flipped and can sketch how they may fit together.

The third instrument, the Surface Development Test, asks participants to match the sides of a net of a figure to the sides of a drawing of a three-dimensional figure. As with the PFT, strategy use may also impact accuracy on these two measures. This led to the development of a similar Make-A-Dice test (Burte et al., 2019b), which increases difficulty by varying the number of squares in a row and requiring consecutive folds in different directions rather than just increasing the number of folds. Additionally, none of these three instruments were explicitly designed to test non-rotational spatial visualization but rather a broader definition of spatial visualization that includes mental rotation. Thus, it is possible that some participants’ strategies may include mental rotation or spatial orientation.

Other common types of spatial visualization tasks include embedded figures adapted from the Gottschaldt Figures Test (Gottschaldt, 1926). These tasks measure spatial perception, field-independence, and the ability to disembed shapes from a background, which may be a necessary problem-solving skill (Witkin et al., 1977). One instrument, the Embedded Figures Test, originally consisted of 24 trials during which a participant is presented with a complex figure, then a simple figure, and then shown the complex figure again with instructions to locate the simple figure within it (Witkin, 1950). Others have used Witkin’s (1950) stimuli as a basis to develop various embedded figures tests, including the Children’s Embedded Figures Test (Karp & Konstadt, 1963) and the Group Embedded Figures Test (Oltman et al., 1971).

Mental Rotation Tasks

Mental rotation can be broadly defined as a cognitive operation in which a mental image is formed and rotated in space. Though mental rotation skills are often subsumed under spatial visualization or spatial relations sub-components, they can be treated as a separate skill from spatial orientation and spatial visualization (Linn & Peterson, 1985; Shepard & Metzler, 1971). As many definitions of general spatial ability include a “rotation” aspect, several studies have investigated the links between mental rotation and mathematics. For young children, cross-sectional studies have shown mixed results. In some studies, significant correlations were found between mental rotation and both calculation and arithmetic skills (Bates et al., 2021; Cheng & Mix, 2014; Gunderson et al., 2012; Hawes et al., 2015). Conversely, Carr et al. (2008) found no significant associations between mental rotation and standardized mathematics performances in similar populations. In middle school-aged children (11–13 years), mental rotation skill was positively associated with geometry knowledge (Battista, 1990; Casey et al., 1999) and problem-solving (Delgado & Prieto, 2004; Hegarty & Kozhevnikov, 1999). Studies of high school students and adults have indicated that mental rotation is associated with increased accuracy on mental arithmetic problems (Geary et al., 2000; Kyttälä & Lehto, 2008; Reuhkala, 2001).

Behavioral and imaging evidence suggests that mental rotation tasks invoke visuospatial representations that correspond to object rotation in the physical world (Carpenter et al., 1999; Shepard & Metzler, 1971). This process develops from 3 to 5 years of age with large individual differences (Estes, 1998) and shows varying performance across individuals irrespective of other intelligence measures (Borst et al., 2011). Several studies have also demonstrated significant gender differences, with males typically outperforming females (e.g., Voyer et al., 1995). However, this gap may be decreasing across generations (Richardson, 1994), suggesting it is due at least in part to sociocultural factors such as educational experiences rather than exclusively based on genetic factors. Historically, three-dimensional mental rotation ability has fallen under the spatial visualization skill, while two-dimensional mental rotation occasionally falls under a separate spatial relations skill (e.g., Carroll, 1993; Lohman, 1979). Thus, mental rotation measures often include either three-dimensional or two-dimensional stimuli rather than a mixture of both.

Three-Dimensional Mental Rotation Tasks

In one of the earliest studies of three-dimensional mental rotation, Shepard and Metzler (1971) presented participants with pictures of pairs of objects and asked them to answer as quickly as possible whether the two objects were the same or different, regardless of differences in orientation. The stimuli showed pairs of objects that were either the same object differing only in orientation or mirror images of one another. This design provided a useful control since the mirror images had comparable visual complexity but could not be rotated to match the original. Results revealed a positive linear association between reaction time and the angular difference in the orientation of objects. In combination with participant post-interviews, this finding illustrated that, to compare the two objects accurately, participants first imagined one object rotated into the same orientation as the other, and that they perceived the two-dimensional pictures as three-dimensional objects in order to complete the imagined rotation. Additional studies have replicated these findings over the last four decades (Uttal et al., 2013). Shepard and Metzler-type stimuli have been used in many different instruments, including the Purdue Spatial Visualization Test: Rotations (Guay, 1976) and the Mental Rotation Test (see Fig. 2B; Vandenberg & Kuse, 1978). However, recent studies have shown that some items on the Mental Rotation Test can be solved using analytic strategies, such as a global-shape strategy to eliminate answer choices, rather than relying on mental rotation strategies (Hegarty, 2018).
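The linear association between angular disparity and reaction time can be illustrated with a simple least-squares fit. The following is a minimal sketch under stated assumptions: the reaction times are synthetic values generated from an assumed intercept and slope, not data from Shepard and Metzler (1971), and the helper name `fit_line` is hypothetical.

```python
import random
from statistics import mean


def fit_line(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


# Synthetic illustration only: reaction times are generated from an assumed
# intercept (1.0 s) and an assumed slope (0.02 s per degree) plus random noise.
rng = random.Random(0)
angles = list(range(0, 181, 20))  # angular disparity between the two objects, in degrees
reaction_times = [1.0 + 0.02 * a + rng.gauss(0.0, 0.05) for a in angles]

slope, intercept = fit_line(angles, reaction_times)
print(f"Estimated slope: {slope:.4f} s per degree")
print(f"Estimated intercept: {intercept:.2f} s")
```

A positive fitted slope corresponds to the pattern described above: the larger the angular difference between the two objects, the longer the comparison takes.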

One common critique of the Shepard and Metzler-type stimuli is that the classic cube configurations’ complex design is not appropriate for younger populations, leading to few mental rotation studies on this population. Studies have shown that children under 5 years of age have severe difficulties solving standard mental rotation tasks, with children between the ages of 5 and 9 solving such tasks at chance (Frick et al., 2014a, 2014b). To combat this, studies with pre-school age children often lower task demands by reducing the number of answer choices, removing mirrored and incongruent stimuli, and using exclusively images of two-dimensional objects (Krüger, 2018; Krüger et al., 2013). In response, some scholars have begun developing appropriate three-dimensional mental rotation instruments for elementary school students, such as the Rotated Colour Cube Test (Lütke & Lange-Küttner, 2015). In this instrument, participants are presented with a stimulus consisting of a single cube with different colored sides and are asked to identify an identical cube that has been rotated. However, studies on both three-dimensional and two-dimensional rotation have found that cognitive load depends more on the stimulus angle orientation than the object’s complexity or dimensionality (Cooper, 1975; Jolicoeur et al., 1985).

Two-Dimensional Mental Rotation Tasks

To measure two-dimensional mental rotation, tasks for all populations feature similar stimuli. These tasks, often referred to as spatial relations or speeded rotation tasks, typically involve single-step mental rotation (Carroll, 1993). One common instrument for two-dimensional mental rotation is the Card Rotation Test (Ekstrom et al., 1976). This instrument presents an initial figure and asks participants to select the rotated but not reflected items. Importantly, these tasks can be modified for various populations (Krüger et al., 2013). One standardized instrument for pre-school and early primary school-age children, the Picture Rotation Test, demonstrates how easily these two-dimensional stimuli can be modified (Quaiser-Pohl, 2003).

Summary

This section aims to provide an updated review of the various ways in which spatial ability has been historically measured and critically evaluates these assessment tools. As the majority of these measures were designed based on specific-factor structures outlined in the “Specific-Factor Structures” section, we chose to organize our discussion by grouping assessments based on the specific factor each was intended to capture. We also decided to focus on the spatial sub-components that have been suggested to be linked to mathematical thinking, including spatial orientation, spatial visualization, and mental rotation. Ultimately, we found that although there are many spatial measures that researchers can choose from, there is a need for additional measures that address gaps in population and include more than one spatial subcomponent. Additionally, there is a critical need for spatial assessments that can be used in contexts outside of controlled laboratory and one-on-one settings to more deeply understand the complex connections between spatial ability and mathematics education in more authentic learning settings such as classrooms.

A Guiding Framework

We contend that the decisions made regarding the choice of spatial subdivisions, analytical frameworks, and spatial measures will impact both the results and interpretations of findings from studies on the nature of mathematical reasoning in controlled studies. One way these decisions affect the outcomes of a study is that they may change the specific spatial ability sub-components that reliably predict mathematics performance. This is because some factors of spatial ability have been shown to be more strongly associated with certain sub-domains of mathematics than with others (Delgado & Prieto, 2004; Schenck & Nathan, 2020), but it is unclear how generalizable these findings are as students may use a variety of spatial and non-spatial strategies. Additionally, some models and classifications of spatial ability, such as Uttal et al.’s (2013) classification and the unitary model of spatial ability, currently do not have validated instruments. Thus, selecting a spatial skills instrument poorly suited to the mathematical skills or population under investigation may fail to show a suitable predictive value. This can result in an overall weaker model of the dependent variable and lead the research team to conclude that spatial reasoning overall is not relevant to the domain of mathematical reasoning of interest. These limitations are often not discussed in the publications we reviewed and, perhaps, may not even be realized by many education researchers. However, as noted, it can be difficult for education researchers to select an appropriate framework among the many alternatives that match their specific domains of study.

Due to the various spatial taxonomies and the assumptions and design decisions needed for choosing the accompanying analytical frameworks, we assert that it is beneficial for most education researchers who do not identify as spatial cognition researchers to avoid attempts to create a specific, universal taxonomy of spatial ability. The evidence of the ways individuals interact with spatial information through the various spatial subcomponents may be based on a particular scholar's perspective of spatial ability, which should inform their choices of spatial taxonomies and analytical frameworks and measures based on their goals.

To help education researchers who may be unfamiliar with the vast literature on spatial ability navigate this large and potentially confusing landscape in service of their study objectives, we have designed a guide in the form of a flowchart that enables them to match spatial taxonomies to analytic frameworks (Fig. 4). Our guide, understandably, does not include every possible spatial taxonomy or study aim. Instead, it offers a helpful starting point for incorporating spatial skills into an investigation of mathematical reasoning by focusing on how researchers can draw on specific factor taxonomies and current validated measures of spatial ability in controlled studies.

The first question in the flowchart, Q1, asks researchers to decide how spatial ability will be used in their investigation: either as a covariate or as the main variable of interest. If spatial ability is a covariate, the most appropriate taxonomy would be the unitary model to capture the many possible ways participants could utilize spatial thinking during mathematical reasoning. However, as mentioned in the above section, this model has no validated measure. Thus, we recommend researchers select several measures that cover a variety of specific spatial subcomponents, or a measure designed to test more than one spatial subcomponent, such as the Spatial Reasoning Instrument (Ramful et al., 2017). We would then suggest using an analytical framework with a single composite score across multiple tasks to combat issues such as task-related biases (Moreau & Wiebels, 2021).
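One way to form such a composite, sketched here under stated assumptions, is to standardize each task's scores across participants and then average the resulting z-scores for each participant. The equal weighting of tasks, the function names `z_scores` and `composite_scores`, and the example scores are illustrative assumptions rather than a procedure prescribed by the sources cited above.

```python
from statistics import mean, stdev


def z_scores(values):
    """Standardize raw scores to mean 0 and sample standard deviation 1."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def composite_scores(task_scores):
    """Average standardized scores across tasks for each participant.

    task_scores maps a task name to a list of raw scores, with participants
    listed in the same order for every task.
    """
    standardized = [z_scores(scores) for scores in task_scores.values()]
    return [mean(per_person) for per_person in zip(*standardized)]


# Hypothetical raw scores for five participants on three spatial tasks.
scores = {
    "spatial_visualization": [12, 15, 9, 18, 14],
    "mental_rotation_2d": [30, 42, 25, 47, 36],
    "mental_rotation_3d": [8, 11, 5, 14, 10],
}

composite = composite_scores(scores)
for participant, value in enumerate(composite, start=1):
    print(f"Participant {participant}: composite z = {value:+.2f}")
```

Standardizing before averaging keeps a task with a wider raw score range from dominating the composite. Other weighting schemes are possible and would need to be justified for a given study.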

If spatial ability is the main variable of interest, answering Q2 in the flowchart directs the researcher to consider whether they are interested in investigating the role of spatial ability as a general concept or as one or more specific sub-components. For example, suppose the researcher is interested in understanding links between spatial ability and a specific mathematics domain. In that case, we recommend using the unitary model of spatial ability and following the recommendations outlined above for using spatial ability as a covariate. To illustrate, Casey et al. (2015) found that children’s early spatial skills were long-term predictors of later math reasoning skills. In their analysis, the authors identified two key spatial skills, mental rotation and spatial visualization, that previous work by Mix and Cheng (2012) found to be highly associated with mathematics performance. To measure these constructs, Casey and colleagues administered three spatial tasks to participants: a spatial visualization measure, a 2-D mental rotation measure, and a 3-D mental rotation measure. The authors were interested in the impact of overall spatial ability on analytical math reasoning and in partially replicating previous findings rather than whether these two factors impacted mathematics performance. Thus, they combined these three spatial scores into a single composite score.

For investigations centering on one or more specific spatial sub-components, we recommend novice researchers use sub-components from specific-factor taxonomies (e.g., mental rotation, spatial visualization, spatial orientation). Specific-factor taxonomies are used in a variety of lines of research, including mathematics education. Studies exploring the association between spatial ability and mathematics often focus on a particular sub-factor. For example, some studies have focused on the association between mental rotation and numerical representations (e.g., Rutherford et al., 2018; Thompson et al., 2013), while others have focused on spatial orientation and mathematical problem solving (e.g., Tartre, 1990). Similarly, scholars investigating spatial training efficacy often use spatial tasks based on a single factor or a set of factors as pre- and post-test measures and in intervention designs (e.g., Bruce & Hawes, 2015; Gilligan et al., 2019; Lowrie et al., 2019; Mix et al., 2021).

The third question in the flowchart, Q3, asks researchers to select whether their investigation will focus on one particular spatial sub-component or several to provide guidance for analytic frames. In new studies, the sub-components of interest may be selected based on prior studies for confirmatory analyses or on a theoretical basis for exploratory studies. If only a single spatial sub-component is of interest to the investigation, we suggest an analytic approach that includes a single score from one task. If multiple spatial sub-components are relevant to the investigation, we recommend using a single score from one task for each sub-component of interest.
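The decision logic of Q1 through Q3 can be summarized in a short Python sketch. This is only an illustrative encoding of the recommendations described in this section, not a validated tool; the function name `recommend`, its argument names, and the returned labels are hypothetical.

```python
def recommend(role, focus=None, n_subcomponents=None):
    """Map answers to Q1-Q3 onto a taxonomy and an analytic framework.

    role: "covariate" or "main" (Q1).
    focus: "general" or "specific" (Q2; only needed when role is "main").
    n_subcomponents: number of sub-components of interest (Q3; only
        needed when focus is "specific").
    """
    unitary = {
        "taxonomy": "unitary",
        "analysis": "single composite score across multiple tasks",
    }
    # Q1: as a covariate, spatial ability is treated with the unitary model.
    if role == "covariate":
        return unitary
    if role != "main":
        raise ValueError("role must be 'covariate' or 'main'")
    # Q2: general spatial ability follows the covariate recommendations.
    if focus == "general":
        return unitary
    if focus != "specific":
        raise ValueError("focus must be 'general' or 'specific'")
    # Q3: one sub-component or several.
    if n_subcomponents is None or n_subcomponents < 1:
        raise ValueError("n_subcomponents must be a positive integer")
    if n_subcomponents == 1:
        analysis = "single score from one task"
    else:
        analysis = "single score from one task for each sub-component"
    return {"taxonomy": "specific-factor", "analysis": analysis}


# Example: a study of mental rotation and spatial orientation together.
plan = recommend("main", focus="specific", n_subcomponents=2)
```

Task selection, the final step, is left out of the sketch because it depends on practical considerations that do not reduce to a fixed rule.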

Task selection, the final step in the flowchart, will depend on practical considerations such as which spatial sub-components are relevant, population age, and time constraints. Though thousands of spatial tasks are available, the tasks listed in Table 1, which also identifies corresponding broad and specific spatial sub-components, can be a useful starting point for designing a study. We recommend that researchers acknowledge that students may solve mathematical problems in various spatial and non-spatial ways and, thus, their results may not generalize to all students or all mathematical tasks and domains. We also remind researchers that the majority of the measures described in the “Choosing Spatial Tasks in Mathematics Education Research” section are designed as psychometric instruments for use in tightly controlled studies. The guidance above is not intended for studies that involve investigating spatial ability in classrooms or other in situ contexts.

Conclusions and Lingering Questions

Researchers largely agree that spatial ability is essential for mathematical reasoning and success in STEM fields (National Research Council, 2006). The two goals of this review were, first, to summarize the relevant spatial ability literature, including the various factor structures and measures, in an attempt to more clearly understand the elements of spatial ability that may relate most closely to mathematics education; and second, to provide recommendations for education researchers and practitioners for selecting appropriate theoretical taxonomies, analytical frameworks, and specific instruments for measuring, interpreting, and improving spatial reasoning for mathematics education. Our review exposed a wide array of spatial taxonomies and analytical frameworks developed by spatial ability scholars for understanding and measuring spatial reasoning. However, this review shows no convergence on a definition of spatial ability or agreement regarding its sub-components, no universally accepted set of standardized measures to assess spatial skills, and, most importantly, no consensus on the nature of the link between mathematical reasoning and spatial ability. Thus, this review exposes several challenges to understanding the relationship between spatial skills and performance in mathematics. One is that the connections between mathematical reasoning and spatial skills, while supported, are complicated by the divergent descriptions of spatial taxonomies and analytical frameworks, the sheer volume of spatial measures one encounters as a potential consumer, and the lack of a universally accepted means of mapping spatial measures to mathematical reasoning processes. These challenges should be seen as the responsibility of the educational psychology research communities. The lack of progress on these issues impedes progress in designing effective spatial skills interventions for improving mathematics thinking and learning based on clear causal principles, selecting appropriate metrics for documenting change, and analyzing and interpreting student outcome data.

Our primary contribution in the context of these challenges is to provide a guide, well situated in the research literature, for navigating and selecting among the various major spatial taxonomies and validated instruments for assessing spatial skills for use in mathematics education research and instructional design. In order to anchor our recommendations, we first summarized much of the history and major findings of spatial ability research as it relates to education (“Selecting a Spatial Taxonomy” section). In this summary, we identified three major types of spatial taxonomies (specific, broad, and unitary) and provided recommendations for associated analytical frameworks. We then discussed the plethora of spatial ability tasks that investigators and educators must navigate (“Choosing Spatial Tasks in Mathematics Education Research” section). To make the landscape more tractable, we divided these tasks into three categories shown to be relevant to mathematics education (spatial orientation, mental rotation, and non-rotational spatial visualization; see Table 1) and mapped these tasks to their intended populations. We acknowledge that researchers and educators often select spatial tasks and analytic frameworks for practical rather than theoretical reasons, which can undermine the validity of their own research and assessment efforts. To provide educators with a stronger evidence-based foundation, we then offered a guiding framework (“A Guiding Framework” section) in the form of a flowchart to assist investigators in selecting appropriate spatial taxonomies and analytic frameworks as a precursor to making well-suited task selections to meet their particular needs. A guide of this sort provides some of the best steps forward for utilizing the existing resources for understanding and improving education through the lens of spatial abilities. We focused on providing a tool to guide the decision-making of investigators seeking to relate spatial skills with mathematics performance based on the existing resources, empirical findings, and the currently dominant theoretical frameworks.

Several limitations remain, however. One is that the vast majority of published studies administered spatial skills assessments using paper-and-pencil instruments. In recent years, testing has moved online, posing new challenges regarding the applicability and reliability of past instruments and findings. Updating these assessments will naturally take time until research using online instruments and new immersive technologies catches up (see Uttal et al., 2024, for discussion). A second limitation is that studies investigating the associations between spatial ability and mathematics have often focused on a particular spatial ability or particular mathematical skill. There are many unknowns about which spatial abilities map to which areas of mathematics performance. This limitation can only be addressed through careful, systematic, large-scale studies. A third limitation is that many of the instruments in the published literature were developed for and tested on adult populations. This greatly limits their applicability to school-aged populations. Again, this limitation can only be addressed through more research that extends this work across a broader developmental range. Fourth, many spatial ability instruments reported in the literature include tasks that may be solved using various strategies, some of which are non-spatial, thus calling into question their construct validity, that is, whether they measure the specific spatial skills they claim to measure. For example, some tasks in assessments such as the Paper Folding Test may be effectively solved through non-spatial methods such as logic or counting rather than pure spatial visualization. Thus, there is a pressing need for process-level data, such as immediate retrospective reports and eye tracking (cf. Just & Carpenter, 1985), to accurately describe the various psychological processes involved and how they vary by age, individual differences, and assessment context. A fifth limitation relates to the 2 × 2 classification system using intrinsic and extrinsic information along one dimension and static and dynamic tasks along the other (Newcombe & Shipley, 2015; Uttal et al., 2013). In mapping existing tasks to this system, it became clear that there is a need for more development of extrinsic-static tasks and instruments. We found no studies investigating the link between mathematical reasoning and extrinsic-static spatial abilities, perhaps because of the lack of appropriate assessments. The sixth, and arguably greatest, limitation is that scholarly research on spatial ability still lacks a convergent taxonomy and offers no clear picture as to which aspects of spatial thinking are most relevant to STEM thinking and learning. More research is needed to test additional models of spatial ability, such as the unitary model, and to expand spatial ability assessment tools to capture the complex and multifaceted nature of spatial thinking needed in mathematics education environments.

The objectives of this paper were to provide researchers with an updated review of spatial ability and its measures and to provide a guide for researchers new to spatial cognition to help navigate this vast literature when making study design decisions. Overall, research to understand the structure of spatial ability more deeply is at a crossroads. Spatial ability is demonstrably relevant for the development of mathematics reasoning and offers a malleable factor that may have a profound impact on the design of future educational interventions and assessments. Synthesizing these lines of research highlighted several areas that remain unexplored and in need of future research and development. STEM education and workforce development remain essential for scientific and economic advancements, and spatial skills are an important aspect of success and retention in technical fields. Thus, it is critical to further understand the connections between spatial and mathematical abilities as ways to increase our understanding of the science of learning and inform the design of future curricular interventions that transfer skills for science, technology, engineering, and mathematics.

References

Amalric, M., & Dehaene, S. (2016). Origins of the brain networks for advanced mathematics in expert mathematicians. Proceedings of the National Academy of Sciences, 113(18), 4909–4917. https://doi.org/10.1073/pnas.1603205113

Atit, K., Power, J. R., Pigott, T., Lee, J., Geer, E. A., Uttal, D. H., Ganley, C. M., & Sorby, S. A. (2022). Examining the relations between spatial skills and mathematical performance: A meta-analysis. Psychonomic Bulletin & Review, 29, 699–720. https://doi.org/10.3758/s13423-021-02012-w

Atit, K., Uttal, D. H., & Stieff, M. (2020). Situating space: Using a discipline-focused lens to examine spatial thinking skills. Cognitive Research: Principles and Implications, 5(19), 1–16.

Bates, K. E., Gilligan-Lee, K., & Farran, E. K. (2021). Reimagining mathematics: The role of mental imagery in explaining mathematical calculation skills in childhood. Mind, Brain, and Education, 15(2), 189–198.

Battista, M. T. (1990). Spatial visualization and gender differences in high school geometry. Journal for Research in Mathematics Education, 21(1), 47–60. https://doi.org/10.2307/749456

Battista, M. T. (2007). The development of geometric and spatial thinking. In F. K. Lester (Ed.), Second handbook of research on mathematics teaching and learning (pp. 843–908). Information Age Publishing.

Battista, M. T., Frazee, L. M., & Winer, M. L. (2018). Analyzing the relation between spatial and geometric reasoning for elementary and middle school students. In K. S. Mix & M. T. Battista (Eds.), Visualizing Mathematics. Research in Mathematics Education (pp. 195–228). Springer, Cham. https://doi.org/10.1007/978-3-319-98767-5_10

Boonen, A. J. H., van der Schoot, M., van Wesel, F., de Vries, M. H., & Jolles, J. (2013). What underlies successful word problem solving? A path analysis in sixth grade students. Contemporary Educational Psychology, 38, 271–279. https://doi.org/10.1016/j.cedpsych.2013.05.001

Borst, G., Ganis, G., Thompson, W. L., & Kosslyn, S. M. (2011). Representations in mental imagery and working memory: Evidence from different types of visual masks. Memory & Cognition, 40(2), 204–217. https://doi.org/10.3758/s13421-011-0143-7

Bruce, C. D., & Hawes, Z. (2015). The role of 2D and 3D mental rotation in mathematics for young children: What is it? Why does it matter? And what can we do about it? ZDM, 47(3), 331–343. https://doi.org/10.1007/s11858-014-0637-4

Buckley, J., Seery, N., & Canty, D. (2018). A heuristic framework of spatial ability: A review and synthesis of spatial factor literature to support its translation into STEM education. Educational Psychology Review, 30, 947–972. https://doi.org/10.1007/s10648-018-9432-z

Buckley, J., Seery, N., & Canty, D. (2019). Investigating the use of spatial reasoning strategies in geometric problem solving. International Journal of Technology and Design Education, 29, 341–362. https://doi.org/10.1007/s10798-018-9446-3

Burte, H., Gardony, A. L., Hutton, A., & Taylor, H. A. (2017). Think3d!: Improving mathematical learning through embodied spatial training. Cognitive Research: Principles and Implications, 2(13), 1–8. https://doi.org/10.1186/s41235-017-0052-9

Burte, H., Gardony, A. L., Hutton, A., & Taylor, H. A. (2019). Knowing when to fold ‘em: Problem attributes and strategy differences in the Paper Folding Test. Personality and Individual Differences, 146, 171–181.

Burte, H., Gardony, A. L., Hutton, A., & Taylor, H. A. (2019). Make-A-Dice test: Assessing the intersection of mathematical and spatial thinking. Behavior Research Methods, 51(2), 602–638. https://doi.org/10.3758/s13428-018-01192-z

Carpenter, P. A., Just, M. A., Keller, T. A., Eddy, W., & Thulborn, K. (1999). Graded functional activation in the visuospatial system with the amount of task demand. Journal of Cognitive Neuroscience, 11(1), 9–24. https://doi.org/10.1162/089892999563210