Abstract

Blended learning combines online and traditional classroom instruction, aiming to optimize educational outcomes. Despite its potential, student engagement with online components remains a significant challenge. Gamification has emerged as a popular solution to bolster engagement, though its effectiveness is contested, with research yielding mixed results. This study addresses this gap by examining the impact of adaptive gamified assessments on young learners' motivation and language proficiency within a blended learning framework. Under Self-Determination Theory (SDT) and Language Assessment Principles, the study evaluates how adaptive gamified tests affect learner engagement and outcomes. A 20-week comparative experiment involved 45 elementary school participants in a blended learning environment. The experimental group (n = 23) took the adaptive gamified test, while the control group (n = 22) engaged with non-gamified e-tests. Statistical analysis using a paired t-test in SPSS revealed that the implementation of adaptive gamified testing in the blended learning setting significantly decreased learner dissatisfaction (t(44) = 10.13, p < .001, SD = 0.87). Moreover, this approach markedly improved learners' accuracy rates (t(44) = -25.75, p < .001, SD = 2.09), indicating enhanced language proficiency and motivation, as also reflected in the attitude scores (t(44) = -14.47, p < .001, SD = 4.73). The adaptive gamified assessment primarily enhanced intrinsic motivation related to competence, with 69% of students in the experimental group reporting increased abilities. The findings suggest that adaptive gamified testing is an effective instructional method for fostering improved motivation and learning outcomes.

1 Introduction

In the rapidly evolving landscape of educational technology, blended learning (BL) has become a prominent approach, seamlessly integrating face-to-face and online learning experiences (Hill & Smith, 2023; Rasheed et al., 2020). While previous research has emphasized the widespread adoption and benefits of BL, including improved academic achievement (Boelens et al., 2017; Hill & Smith, 2023), challenges faced by students, teachers, and educational institutions in its implementation are also recognized (Rasheed et al., 2020). Designing effective blended learning (BL) presents several key challenges, including diminished learner attention and a decline in motivation, which result in decreased engagement and participation in courses (Khaldi et al., 2023). Furthermore, students may face difficulties with preparatory tasks and quizzes prior to in-person classes, often due to inadequate motivation (Albiladi & Alshareef, 2019; Boelens et al., 2017).

Gamification, defined as the educational use of game mechanics and design principles extending beyond traditional games (Schell, 2008), has garnered attention. Studies highlight its positive impact on learning motivation, emphasizing the mediating role of psychological needs under the self-determination theory (Deci & Ryan, 2016). This positions gamification as a potential solution for addressing challenges in blended learning. Recent systematic research underscores the significance of leveraging gamification in online environments to enhance student engagement (Bizami et al., 2023; Jayawardena et al., 2021).

The increasing popularity of gamified tests has positively influenced academia, supporting blended learning models and formal education settings (Bolat & Taş, 2023; Saleem et al., 2022). Recent findings suggest that gamified assessments contribute to higher process satisfaction among students compared to traditional assessments (Cortés-Pérez et al., 2023; Sanchez et al., 2020). The advent of machine learning algorithms has given rise to adaptive gamified assessments, offering a novel approach to personalized testing and feedback, thereby enhancing learning autonomy (Llorens-Largo et al., n.d.). Therefore, this study focuses on investigating the impact of gamified assessment on blended learning.

While existing research has explored the impact of gamification in online environments (Can & Dursun, 2019; Ramírez-Donoso et al., 2023), a noticeable gap remains in understanding the specific effects of gamified tests in online settings, particularly within the context of K-12 education. Research on adaptive gamified assessments is limited, emphasizing the need for further exploration (Bennani et al., 2022). Consequently, this study primarily focuses on investigating adaptive gamified assessments, with research objectives centered around motivation and knowledge levels in early education. The research questions are as follows:

1. Does adaptive gamified assessment enhance learners' motivation in blended learning? What is the effect of the adaptive gamified assessment on learners' motivation?

2. Does adaptive gamified assessment improve learners' academic performance in blended learning?

To address the challenges present in blended learning, this research contributes to the field by providing insights into the effects of machine learning-based gamified assessments on motivation and performance, offering valuable recommendations for the improvement of blended learning. The findings could also facilitate the design and adoption of blended learning, particularly in the context of K-12 education.

The subsequent sections present a literature review and the theoretical framework, outline the chosen methodology, report and discuss the results, and conclude with implications and avenues for future research.

2 Literature review

2.1 Blended learning challenges and benefits

Blended learning has emerged as a popular educational model, distinct from traditional instructional methods. It represents a convergence of in-person and online learning, leveraging the strengths of each to enhance the educational experience (Poon, 2013). The hybrid approach combines classroom effectiveness and socialization with the technological benefits of online modules, offering a compelling alternative to conventional teaching models. Research has identified significant improvements in academic performance attributable to blended learning's efficiency, flexibility, and capacity (Hill & Smith, 2023). The approach also facilitates increased interaction between staff and students, promotes active engagement, and provides opportunities for continuous improvement (Can & Dursun, 2019).

Despite these advantages, blended learning is not without its challenges, particularly for students, teachers, and educational institutions during implementation. Boelens et al. (2017) highlight that students often face self-regulation challenges, including poor time management and procrastination. The degree of autonomy in blended courses requires heightened self-discipline, especially online, to mitigate learner isolation and the asynchronous nature of digital interactions (Hill & Smith, 2023). Isolation can be a critical issue, as students engaged in pre-class activities such as reading and assignments often do so in solitude, which can lead to a decrease in motivation and an increase in feelings of alienation (Chuang et al., 2018; Yang & Ogata, 2023).

Teachers, on the other hand, encounter obstacles in technological literacy and competency. Personalizing learning content, providing feedback, and assessing each student can demand considerable time and effort (Cuesta Medina, 2018; Bower et al., 2015). These challenges can adversely affect teachers' perceptions and attitudes towards technology (Albiladi & Alshareef, 2019). Furthermore, from a systems perspective, implementing Learning Management Systems (LMSs) that accommodate diverse learning styles is a significant hurdle. It necessitates a custom approach to effectively support differentiated learning trajectories (Albiladi & Alshareef, 2019; Boelens et al., 2017; Brown, 2016). Current research efforts are thus focused on enhancing the effectiveness of blended learning and its facilitation of independent learning practices.

2.2 Gamification in education

Gamification in education signifies the integration of game design elements into teaching activities that are not inherently game-related. This approach is distinct from game-based learning, where the primary focus is on engaging learners in gameplay to acquire knowledge. Gamification introduces game dynamics into non-gaming environments to enrich the learning experience (Alsawaier, 2018).

With the progression of technology, gamification has become increasingly prevalent within educational frameworks, aiming to amplify student engagement, motivation, and interactivity (Oliveira et al., 2023). Empirical evidence supports that gamification can effectively address issues such as the lack of motivation and frustration in educational contexts (Alt, 2023; Buckley & Doyle, 2016). Components like levels and leaderboards have been successful as external motivators, promoting a competitive spirit among learners (Mekler et al., 2017). Furthermore, research indicates that gamification can have enduring effects on student participation, fostering beneficial learning behaviors (Alsawaier, 2018).

Despite these positive aspects, some scholarly inquiries have presented a more nuanced view, suggesting that gamification does not unilaterally enhance academic outcomes. These varying results invite deeper investigation into the conditions under which gamification can truly enhance the educational experience (Oliveira et al., 2023). In light of such findings, recent gamified designs have increasingly emphasized personalization, taking into account the unique characteristics, needs, and preferences of each student. Studies have explored the tailoring of gamification frameworks to align with diverse student profiles (Dehghanzadeh et al., 2023; Ghaban & Hendley, 2019), learning styles (Hassan et al., 2021), pedagogical approaches, and knowledge structures (Oliveira et al., 2023). However, the literature still presents contradictory findings, and there is a relative dearth of research focusing on learning outcomes (Oliveira et al., 2023).

2.3 Adaptive assessment in education

Adaptive learning harnesses technological advancements to create a supportive educational environment where instructional content is tailored to individual learning processes (Muñoz et al., 2022). This pedagogical approach is grounded in the principle of differentiated instruction, allowing for the personalization of educational resources to meet diverse learning requirements (Reiser & Tabak, 2014).

Adaptive assessments, stemming from the philosophy of adaptive learning, dynamically adjust the difficulty of questions based on a learner's previous answers, terminating the assessment once enough data is collected to form a judgment (Barney & Fisher Jr, 2016). In the digital age, with the proliferation of e-learning, there has been a significant shift towards adaptive computer-based assessments (Muñoz et al., 2022), utilizing AI-based modeling techniques (Coşkun, 2023), and emotion-based adaptation in e-learning environments (Boughida et al., 2024). These assessments are characterized by their ability to modify testing parameters in response to student performance, employing machine learning algorithms to ascertain a student’s proficiency level.
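The adjustment loop described above can be illustrated with a deliberately simple sketch. This is not the algorithm used by any particular platform: it assumes a one-up/one-down staircase rule, a hypothetical five-level difficulty scale, and a fixed item budget as the stopping rule (a simplification of "terminating once enough data is collected"). All names are illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class StaircaseQuiz:
    """Toy adaptive quiz: difficulty steps up after a correct answer, down after a miss."""

    min_level: int = 1       # hypothetical easiest difficulty
    max_level: int = 5       # hypothetical hardest difficulty
    level: int = 3           # hypothetical starting difficulty
    max_items: int = 10      # assumed stopping rule: a fixed item budget
    history: list = field(default_factory=list)

    def record(self, correct: bool) -> None:
        """Log the answer at the current level, then move one step up or down."""
        self.history.append((self.level, correct))
        step = 1 if correct else -1
        self.level = max(self.min_level, min(self.max_level, self.level + step))

    def finished(self) -> bool:
        """Stop once the item budget is spent."""
        return len(self.history) >= self.max_items

    def estimate(self) -> float:
        """Crude proficiency proxy: mean difficulty of the items presented so far."""
        if not self.history:
            return float(self.level)
        return sum(lvl for lvl, _ in self.history) / len(self.history)


quiz = StaircaseQuiz(max_items=4)
for answer in [True, True, False, True]:
    quiz.record(answer)
print(quiz.finished(), quiz.level, quiz.estimate())
```

Production systems typically replace the staircase with a statistical model of item difficulty and learner ability; the sketch only shows the feedback loop between responses and the next item's difficulty.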

Prior studies on adaptive methods have revealed several advantages, such as delivering personalized feedback promptly, forecasting academic achievement, and facilitating interactive learning support. These advantages extend to potentially enhancing learner engagement and outcomes (Muñoz et al., 2022). However, adapting instruction to cater to varied skill levels remains a challenge, as does addressing the issues of demotivation and anxiety among students undergoing assessment (Akhtar et al., 2023). Consequently, current research is concentrated on boosting student motivation and engagement in adaptive assessments.

In the field of gamified education, adaptive gamification aims to merge adaptive learning principles with game elements to enrich the learning experience. This approach has been explored through the use of data mining techniques on student logs to foster motivation within adaptive gamified web-based environments (Hassan et al., 2021). Despite these innovative efforts, empirical research on gamified adaptive assessment is limited, as the field is still developing.

2.4 Integration of blended learning and gamified assessment

The combination of blended learning with gamified assessment has been recognized for its potential to increase student engagement, a critical factor often lacking in online learning compared to traditional classroom settings (Dumford & Miller, 2018; Hill & Smith, 2023). Studies investigating the role of gamification within online learning environments have found that it can enhance students’ achievement by fostering greater interaction with content (Taşkın & Kılıç Çakmak, 2023). Moreover, gamified activities that demand active participation can promote active engagement (Özhan & Kocadere, 2020).

Investigations into the efficacy of gamified assessment in online environments suggest that students may reap the benefits of its motivational potential. For instance, research has adapted motivational formative assessment tools from massively multiplayer online role-playing games (MMORPGs) for use in cMOOCs, demonstrating positive outcomes (Danka, 2020). Another study compared the effects of traditional online assessment environments to those employing gamified elements, such as point systems, observing the impact on student task completion and quality in mathematics assessments (Attali & Arieli-Attali, 2015). Collectively, these studies indicate that gamified tests can indirectly benefit learning by enhancing the instructional content.

While many studies affirm the efficacy of gamified tests as a valuable, cost-effective tool for educators in blended learning environments (Sanchez et al., 2020), there is a noted gap in research addressing individual differences within gamified testing. Particularly, empirical research on adaptive gamified assessment is scarce, with more focus on the computational aspects of system development than on the impacts on motivation and academic achievement. Furthermore, while studies suggest that gamified tests may enhance the 'testing effect'—the phenomenon where testing an individual's memory improves long-term retention—most of this research is centered in higher education (Pitoyo & Asib, 2020).

The use of gamification spans various educational levels, from primary and secondary schooling to university and lifelong learning programs. However, research focusing on the implementation of gamification in primary and secondary education tends to prioritize the perspective of educators and the application within instructional activities (Yang & Ogata, 2023), rather than the online assessment itself. Therefore, this study aims to advance the empirical understanding of the application of gamification in assessments and its potential to improve learning outcomes, particularly in early education.

3 Theoretical framework

3.1 Self-determination theory (SDT)

Self-Determination Theory (SDT) is a well-known theory of motivation that offers an in-depth understanding of human needs, motivation, and well-being within social and cultural environments (Chiu, 2021). Gamification, which applies gaming elements in non-game settings, frequently employs SDT to address educational challenges in both gamified and online learning platforms (Chapman et al., 2023). SDT distinguishes itself by its focus on autonomous versus controlled forms of motivation and the impact of intrinsic and extrinsic motivators, as characterized by Ryan and Deci (2000). Unlike intrinsic motivation, which is driven by internal desires, extrinsic motivation relies on external incentives such as rewards, points, or deadlines to elicit behaviors—commonly seen in the reward structures of gamified learning environments. In these adaptive gamified assessments, the provision of points and rewards for task completion serves to regulate extrinsic motivation, offering various rewards and titles each time a student completes an exercise task.

SDT is a comprehensive theory that explores the intricacies of human motivation. A subset of SDT, Cognitive Evaluation Theory, postulates that three innate psychological needs—autonomy, competence, and relatedness—propel individuals to act (Deci & Ryan, 2012). Autonomy is experienced when individuals feel they have control over their learning journey, making choices that align with their self-identity, such as selecting specific content areas or types of questions in an adaptive gamified assessment. Competence emerges when individuals encounter optimal challenges that match their skills, where adaptive gamified assessments can adjust in difficulty and provide feedback, thereby promoting skill acquisition and mastery. Relatedness is the sense of connection with others, fostered by supportive and engaging learning environments. In gamified contexts, this can be achieved through competitive elements and parental involvement in the learning process, enhancing the learning atmosphere.

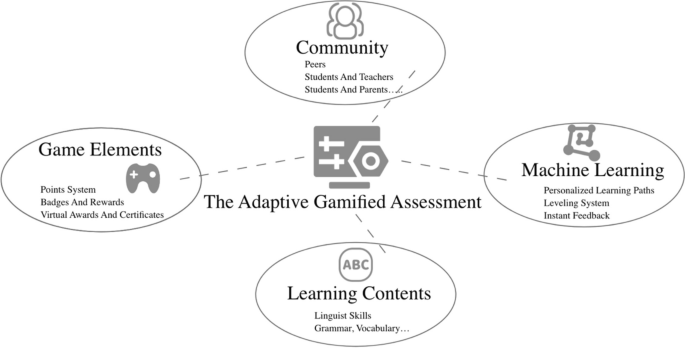

The fulfillment of these psychological needs, particularly those of autonomy and competence, is central to fostering intrinsic motivation according to SDT. Figure 1 illustrates the adaptive gamified assessment process and how it aligns with SDT.

3.2 Principles of language assessment

The adaptive gamified assessment in this study utilizes Quizizz, an online educational technology platform that offers formative gamified tests to help students develop academic skills in various subjects, including English language (Göksün & Gürsoy, 2019). Drawing on the five principles of language assessment as outlined by Brown and Abeywickrama (2004), this study analyzes the adaptive gamified assessment. These principles—authenticity, practicality, reliability, validity, and washback—are foundational in foreign language teaching and assessment.

Practicality refers to the flexibility of the test to operate without constraints of time, resources, and technical requirements. Quizizz’s adaptive assessments are seamlessly integrated into blended learning environments, designed for time efficiency, and require minimal resources, making them suitable for a broad range of educational contexts. The platform's user-friendly design ensures that assessments are easily administered and completed by students, necessitating only an internet connection and a digital device (Göksün & Gürsoy, 2019).

Reliability is the extent to which an assessment consistently yields stable results over time and across different learner groups, providing dependable measures of language proficiency. Quizizz's algorithms adapt task difficulty based on learner responses, offering consistent outcomes and measuring student performance reliably over time (Munawir & Hasbi, 2021).

Validity concerns the assessment's ability to accurately measure language abilities in alignment with intended learning outcomes and real-world language application. Quizizz's assessments measure language skills that correlate directly with curriculum-defined learning outcomes, ensuring that results are valid representations of a student's language capabilities. The gamified context also mirrors competitive real-life scenarios, enhancing the authenticity of language use (Priyanti et al., 2019).

Authenticity indicates that assessments should mirror real-life language usage, providing tasks that are meaningful and indicative of actual communication situations. Quizizz's assessments incorporate tasks resembling real-world communicative scenarios, such as reading passages, interactive dialogues, and written responses that reflect authentic language use (Brown & Abeywickrama, 2004).

Washback refers to the influence of assessments on teaching and learning practices, which should be constructive and foster language learning. Quizizz's immediate feedback from adaptive assessments can positively affect teaching and learning. Instructors can utilize the results to pinpoint student strengths and areas for improvement, customizing their teaching strategies accordingly. Students benefit from being challenged at the appropriate level, bolstering motivation and facilitating the acquisition of new language skills in a gradual, supportive manner (Munawir & Hasbi, 2021).

Previous research has demonstrated that Quizizz has a significant impact on academic performance across various educational institutions (Munawir & Hasbi, 2021). As an exemplar of gamified adaptive assessment, Quizizz is designed to be practical and reliable while offering valid and authentic assessments of language proficiency. Moreover, it strives for a positive washback effect on the learning process, promoting effective language learning strategies and accommodating personalized education.

4 Methodology

4.1 Research design

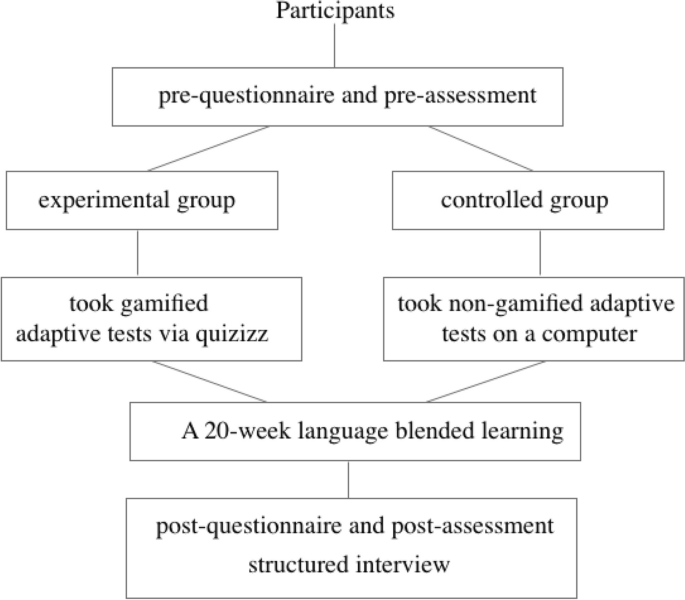

This study employed a controlled experimental design within a quantitative research framework. The methodology involved several stages, as illustrated in Fig. 2. Firstly, participants were selected based on their responses to a pre-questionnaire and a pre-assessment, ensuring comparable baseline levels in English proficiency and computer literacy among all participants. Subsequently, participants were randomly assigned to either the control or the experimental group to ensure variability and minimize bias. Over a period of 20 weeks, a blended language learning intervention was administered to both groups. This intervention involved accessing identical online learning resources before and after traditional classroom sessions, with equal amounts of offline instruction time. Daily assessments were conducted throughout the intervention period. The experimental group completed gamified adaptive tests via Quizizz, while the control group undertook non-gamified adaptive tests on a computer. Upon completion of the intervention, surveys were conducted to assess the motivation levels of both groups and compare their English language proficiency. Data were collected from both pre- and post-assessments, as well as responses from the questionnaires and structured interviews.
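The random assignment step can be sketched as a shuffle followed by a split. The example below is an illustration only: the participant IDs, the seed, and the function name are hypothetical, and the study does not report which randomization procedure was actually used. The group sizes (22 and 23) are the ones reported for this sample of 45.

```python
import random


def assign_groups(participant_ids, n_control, seed=None):
    """Shuffle the participants and split them into (control, experimental)."""
    rng = random.Random(seed)         # a seeded generator makes the split reproducible
    shuffled = list(participant_ids)  # copy so the caller's sequence is untouched
    rng.shuffle(shuffled)
    return shuffled[:n_control], shuffled[n_control:]


# Hypothetical IDs 1..45; 22 go to control, the remaining 23 to experimental.
control, experimental = assign_groups(range(1, 46), n_control=22, seed=0)
print(len(control), len(experimental))
```

Fixing the seed is a design choice that lets the allocation be audited later; leaving it as `None` draws a fresh allocation on every run.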

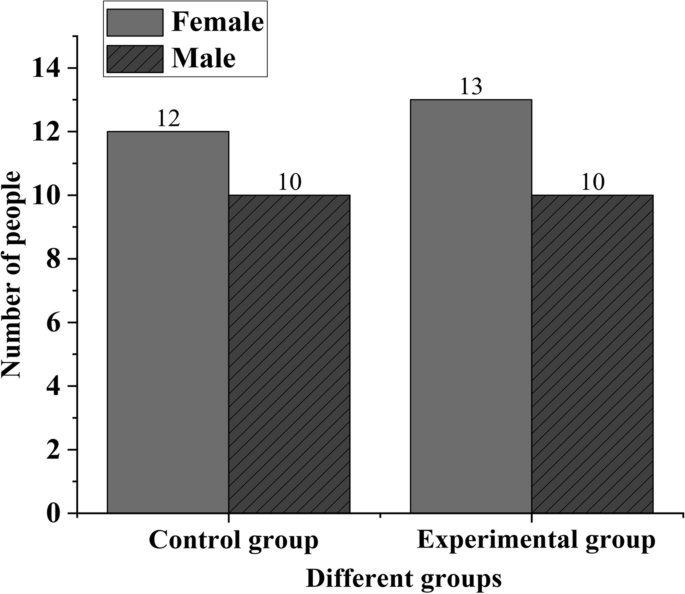

4.2 Participants

Forty-five English learners from primary schools in China, aged 8 to 10 years (M = 9.40, SD = 0.62), participated in this study. The sample comprised 25 girls (55.56%) and 20 boys (44.44%). Insights into students' previous experiences, motivations for formative assessments, and attitudes toward language learning were gathered through a pre-questionnaire. Informed consent was obtained from all participants and their guardians, and confidentiality and anonymity were maintained throughout the study. Participants (see Fig. 3) were randomly divided into a control group (n = 22; 12 girls and 10 boys) and an experimental group (n = 23; 13 girls and 10 boys). The experimental group received instructions on completing and utilizing the adaptive gamified assessment, Quizizz, while the control group completed non-gamified adaptive tests on a computer. Both groups adopted the same blended learning model and were informed of identical deadlines for weekly formative assessments, requiring an accuracy rate of over 90%. Immediate feedback was provided on the accuracy rates, and participants were informed they could attempt the assessment again if the target was not met.
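The retry rule for the weekly formative assessments can be expressed as a small check. This is a sketch under stated assumptions: "over 90%" is read as strictly greater than 90%, accuracy is taken as correct answers divided by total items, and the function name is hypothetical. Integer arithmetic is used so that a score of exactly 90% is handled without floating-point rounding.

```python
def meets_target(correct: int, total: int, target_percent: int = 90) -> bool:
    """Return True when accuracy is strictly above the target percentage."""
    if total <= 0 or not 0 <= correct <= total:
        raise ValueError("need 0 <= correct <= total and total > 0")
    # Compare correct/total > target_percent/100 without dividing.
    return correct * 100 > total * target_percent


print(meets_target(19, 20))  # 95% accuracy: target met
print(meets_target(18, 20))  # exactly 90%: not "over 90%", so a retry is offered
```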

4.3 Instruments

The study utilized Quizizz's Adaptive Question Bank mode, offering a range of question difficulties and allowing students to progress at their own pace. The questionnaire was adapted from the Student Evaluation of Educational Quality (SEEQ), which has demonstrated a high level of reliability, with Cronbach's alpha ranging from 0.88 to 0.97. Additionally, according to Pecheux and Derbaix (1999), the questionnaire was designed to be as concise as possible for young learners and was administered in their native language, Chinese.

The questionnaire includes a 5-point Likert scale used to measure students' attitudes toward adaptive gamified tests. The response options are scored as follows: strongly agree = 9, agree = 7, neutral = 5, disagree = 3, and strongly disagree = 1. The statements cover four aspects of gamified testing: Engagement and Enjoyment, exemplified by 'You enjoy learning new words through game tests. Game tests make learning grammar and vocabulary more fun for you.'; Anxiety and Confidence, as indicated by 'Game tests help you feel less worried about making mistakes in learning.'; Understanding and Retention, highlighted by 'Playing educational games helps you understand your lessons better.'; and Preference over traditional testing methods, as shown by 'You prefer taking quizzes when they are like games compared to regular tests.' The total score across these statements provides a cumulative measure of students' attitude toward gamified language tests. In addition, participants are asked to express their overall satisfaction with the blended learning experience as a percentage. This metric is instrumental in assessing the role of gamified testing within the blended learning framework. Finally, binary yes/no questions address specific components and potential effects of gamified tests, such as the impact of leaderboards and rewards on motivation and willingness to spend extra time on gamified tests.
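The cumulative attitude score can be computed by mapping each response to its point value and summing. The point values below are the ones stated in the questionnaire description; the function name, the case-insensitive matching, and the sample responses are illustrative assumptions, not part of the study's instrument.

```python
# Point values as stated for the questionnaire's response options.
LIKERT_POINTS = {
    "strongly agree": 9,
    "agree": 7,
    "neutral": 5,
    "disagree": 3,
    "strongly disagree": 1,
}


def attitude_total(responses):
    """Sum the point values of one student's responses (hypothetical helper)."""
    total = 0
    for response in responses:
        key = response.strip().lower()
        if key not in LIKERT_POINTS:
            raise ValueError(f"unknown response option: {response!r}")
        total += LIKERT_POINTS[key]
    return total


# Made-up responses from one student, for illustration only.
print(attitude_total(["Strongly agree", "agree", "neutral", "agree"]))
```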

Moreover, to explore the impact of adaptive gamified assessment on motivation, structured interviews were conducted with the experimental group. The questions, adapted from Chiu (2021), primarily focused on aspects of motivation such as amotivation, external regulation, intrinsic motivation, and the psychological needs related to relatedness, autonomy, and competency, as seen in Table 1. Responses were quantified on the same Likert scale, with options ranging from 'strongly agree' to 'strongly disagree.'

5 Results and discussion

5.1 Comparison of language learning attitude scores and satisfaction of participants

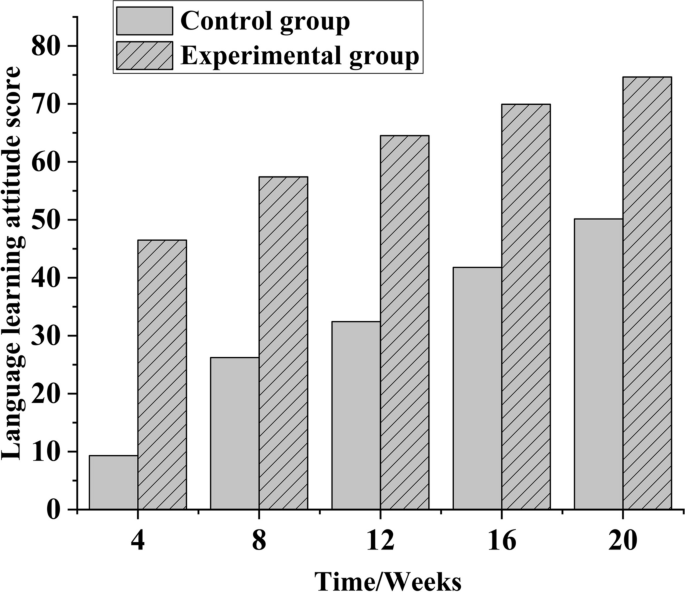

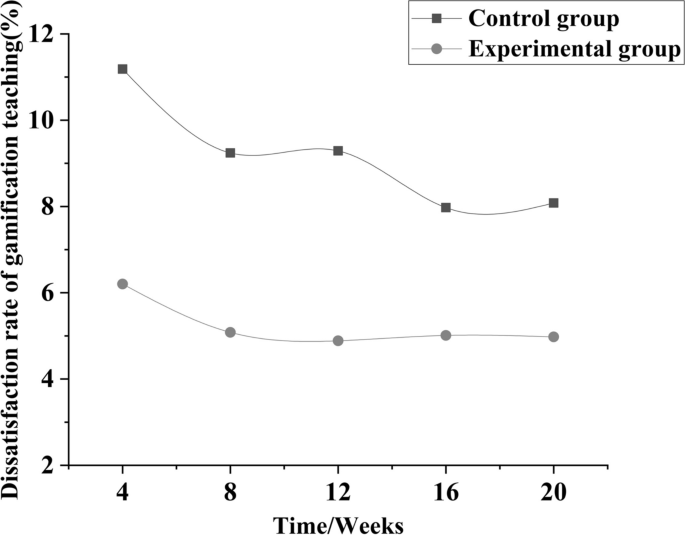

To analyze the impact of adaptive gamified assessments on learners, the trajectory of language learning attitude scores and satisfaction percentage for two groups over the course of the experiment was explored, with results depicted in Fig. 4 and Fig. 5.

In Fig. 4, the total score of language learning attitude for the control group's online assessment and the experimental group's adaptive gamified assessment demonstrates an increasing trend as the experiment progressed. After 4 weeks, the language learning attitude scores of the control and experimental groups were 10 and 47, respectively. By week 16, the experimental group's score increased to 70, and after 20 weeks, the control group's score was 50, while the experimental group's score reached 75. A paired-samples t-test conducted via SPSS indicated that the attitude scores were significantly higher in the experimental group than in the control group (t(44) = -14.47, p < 0.001, SD = 4.73), as detailed in Table 2. This significant difference in attitude scores demonstrates the effectiveness of the adaptive gamified assessment in enhancing the language learning attitude of students over the duration of the experiment.
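For readers unfamiliar with the statistic, a paired-samples t-test works on the per-pair differences: t is the mean difference divided by its standard error, with n - 1 degrees of freedom, so 45 pairs give the df = 44 reported here. The sketch below uses only the Python standard library and made-up numbers. It is not the study's SPSS analysis, and the sign convention (first sample minus second) is an assumption.

```python
import math
import statistics


def paired_t(first, second):
    """Return (t, degrees of freedom, SD of differences) for paired samples."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two samples of equal length with at least 2 pairs")
    diffs = [a - b for a, b in zip(first, second)]
    n = len(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD of the differences
    if sd_diff == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = statistics.mean(diffs) / (sd_diff / math.sqrt(n))
    return t, n - 1, sd_diff


# Hypothetical scores for four pairs, for illustration only.
t, df, sd = paired_t([1, 2, 3, 4], [2, 4, 5, 8])
print(round(t, 2), df, round(sd, 2))
```

A negative t under this convention means the second set of scores is higher on average than the first.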

Figure 5 reveals that as the experiment progressed, the students' dissatisfaction rates with gamified online learning decreased significantly in both groups. Initially, after 4 weeks, the average dissatisfaction rate for the control and experimental groups was 11% and 6%, respectively. As the experiment continued, the dissatisfaction rates declined, dropping to about 5% in the experimental group and 8% in the control group after 20 weeks. Paired t-test results further show a significant decrease in dissatisfaction (t(44) = 10.13, p < 0.001, SD = 0.87). This suggests a marked downward trend in students' dissatisfaction with gamified online learning over the duration of the study, in accordance with their attitudes towards adaptive gamified assessment.

Our research found that students maintain a positive attitude towards the blended learning model of online assessment, which aligns with previous research (Abduh, 2021; Albiladi & Alshareef, 2019), indicating that e-assessment can benefit online learning and teaching. However, a deeper comparison between non-gamified and gamified adaptive testing groups in terms of satisfaction and students' subjective perceptions reveals differences. The experimental group, which incorporated gamified adaptive testing, demonstrated a more positive attitude, corroborating the positive role of gamification in education as outlined by Bolat and Taş (2023). Gamified assessment promotes student motivation in a manner consistent with previous research (Bennani et al., 2022), and our study has similarly shown that gamified assessment positively influences learners' behaviors and attitudes (Özhan & Kocadere, 2020).

This result appears to contradict the findings of Kwon and Özpolat (2021), which suggest that gamification of assessments had a significantly adverse effect on students' perceptions of satisfaction and their experience of courses in higher education. Our findings, however, indicate that adaptive gamified assessments enhance motivation and engagement, thus contributing positively to the learning process for young learners. Furthermore, the motivational levels in the experimental group remained stable, whereas motivation in the control group decreased over time. This suggests that adaptive gamified assessments may help to sustain or enhance learner motivation within a blended learning environment.

5.2 Effect of adaptive gamified assessment on learners' motivation

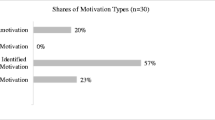

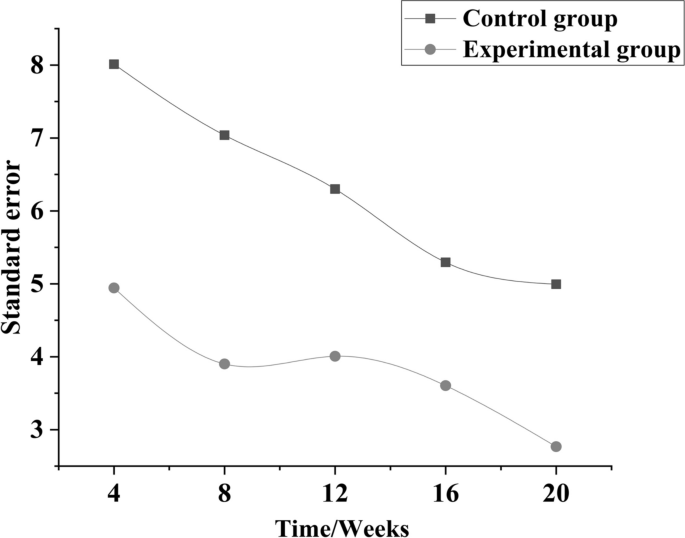

To further examine the effect of adaptive gamified assessments, the standard error of dissatisfaction for both groups was evaluated, while also including a statistical analysis of the distribution of motivation within the experimental group. The outcomes of these analyses are depicted in Fig. 6.

In Fig. 6, a notable decrease in standard error scores for both the control and experimental groups is observed as the experiment progresses. Initially, after 4 weeks, the standard error scores stood at 8 for the control group and 5 for the experimental group. At the end of the 20-week study period, these scores had diminished to 5.4 and 2.8, respectively.

This study's findings are consistent with previous research on the benefits of personalization in gamification. Rodrigues et al. (2021) reported that personalized gamification mitigated negative perceptions of common assessment activities while simultaneously motivating and supporting learners. This reinforces the pivotal role of adaptive assessment in tailoring learning experiences compared to traditional e-assessment methods. Furthermore, structured interviews conducted with the experimental group revealed the distribution of students' motivation in Table 3. For younger learners, external motivation induced by gamified testing was found to be predominant, with 73% of the children acknowledging its influence. Notably, the tests' impact on students' intrinsic motivation was also significant, especially regarding the sense of competency; 69% of students reported feeling an enhancement in their abilities. This finding presents a nuanced difference from Dahlstrøm's (2012) proposition that gamified products and services could both facilitate and undermine intrinsic motivation through supporting or neglecting the basic psychological needs for autonomy and competence. It suggests an alternate conclusion: the gamified adaptive assessment enhances intrinsic motivation and participation. Of course, the effectiveness of such interventions is significantly dependent on individual and contextual factors, thus highlighting the adaptive gamified approach's role in effectively moderating these effects.

5.3 Impact of adaptive gamified assessment on academic performance

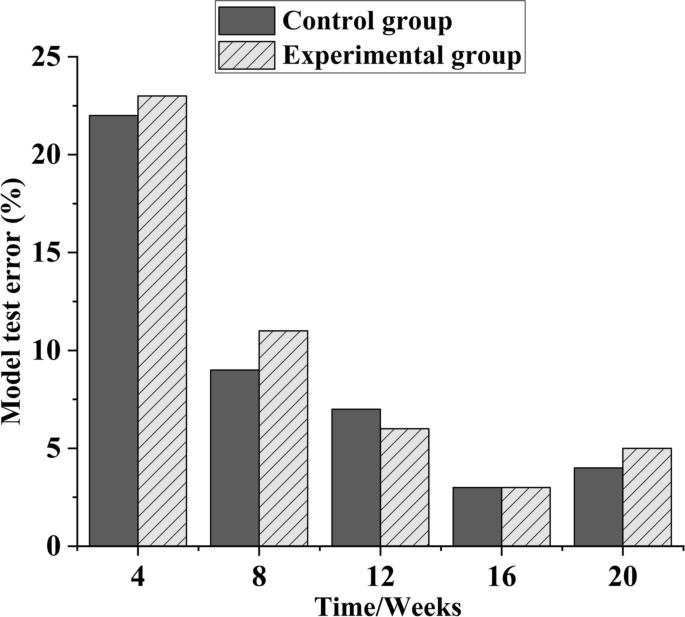

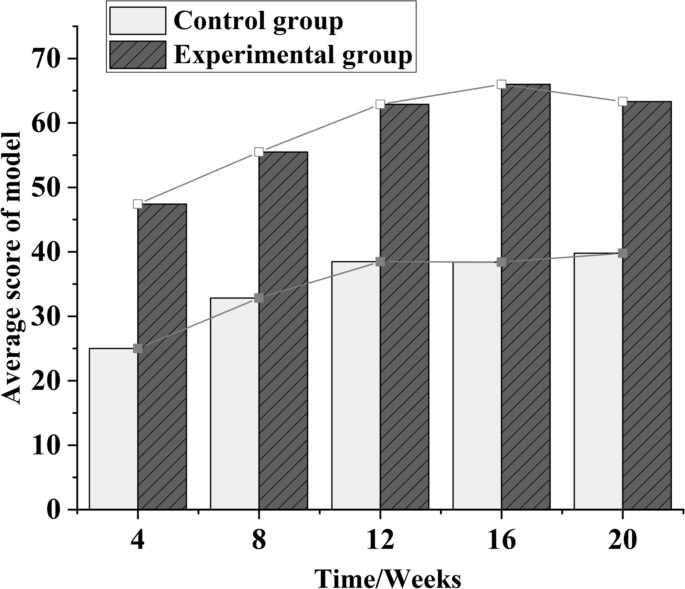

To evaluate the impact of adaptive gamified assessment on learners’ academic performance, the errors and system score data from the model tests of different groups were organized. Figure 7 depicts the error variation of the system model test, while Fig. 8 analyzes the change curve of the system’s average score data.

Figure 7 demonstrates that systematic errors in model testing decreased for both groups over the course of the experiment. After 4 weeks, the model test errors were 22% for the control group and 23% for the experimental group. After 16 weeks, both groups reached a minimum test error of 3%. After 20 weeks, however, model test errors rebounded in both groups. Consequently, setting the experiment duration to 16 weeks appears to improve the accuracy of the gamified assessment effectively. A paired-samples t-test (Table 4) indicates a significant reduction in standard error (t(44) = -25.75, p < 0.001, SD = 2.09), reinforcing the effectiveness of the adaptive gamified strategy in reducing learners' errors and, consequently, improving their efficiency and knowledge acquisition.
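For readers less familiar with the statistic reported above, a paired-samples t value is the mean of the paired differences divided by its standard error, with n − 1 degrees of freedom, so t(44) corresponds to 45 paired observations, which matches the 45 participants. The following is a minimal Python sketch of that calculation, not the SPSS procedure used in the study. The function name `paired_t` and the scores are hypothetical and serve only to illustrate the formula.

```python
import math
import statistics


def paired_t(before, after):
    """Return (t, df) for a paired-samples t-test on two equal-length lists."""
    if len(before) != len(after):
        raise ValueError("paired samples must have equal length")
    # Differences are taken as before - after, so an increase gives a negative t.
    diffs = [b - a for b, a in zip(before, after)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD (n - 1 denominator)
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1


# Hypothetical illustrative scores, NOT data from the study.
pre = [10, 12, 9, 11, 13]
post = [14, 15, 13, 14, 18]

t_stat, df = paired_t(pre, post)
print(f"t({df}) = {t_stat:.2f}")
```

Because the differences are computed as pre minus post, scores that rise over time yield a negative t value, the same sign convention as the negative statistics reported for accuracy and attitude.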

As shown in Fig. 8, the average learning scores of students in both groups increased as the experiment progressed. After 4 weeks, the average learning score was 25 for the control group and 48 for the experimental group. After 16 weeks, these scores had increased to 36 and 66, respectively. By week 20, the average score for the experimental group had decreased slightly to 63, indicating that learners' average scores peaked at around 16 weeks. A comprehensive evaluation, which included a comparison of average learning scores and standard deviation (SD) changes, was used to assess the impact of the gamified assessment. The results are detailed in Table 5.

These comparisons reveal that adaptive gamified assessments can enhance students' online learning experiences. This supports the findings of Attali and Arieli-Attali (2015), who demonstrated that under a points-gamification condition, participants, particularly eighth graders, showed higher likeability ratings. Additionally, the effect of gamified assessment on students' final scores was mediated by intrinsic motivation levels. This contrasts with previous studies on gamification in education, such as Alsawaier (2018), which indicated that students in gamified courses exhibited less motivation and lower final exam scores than those in non-gamified classes. Furthermore, the element of peer competition introduced by gamification was more meaningful to students with better results, aligning with the findings of Göksün and Gürsoy (2019). Adaptive gamified tests, serving as a formative assessment platform, have been found to positively influence young learners' learning outcomes. Moreover, gamified testing could reduce language anxiety, consistent with the study by Hong et al. (2022). Compared to traditional gamified assessments, adaptive assessments are better equipped to address issues of repetition and learner capability fit, and they align more closely with the principles of scaffolding in education, thereby enhancing students' academic performance.

6 Conclusion

This research explores the influence of adaptive gamified assessment within a blended learning context on young learners' motivation and academic performance. Grounded in Self-Determination Theory (SDT), this investigation categorizes student motivation and analyzes their engagement and learning capabilities in relation to non-gamified and gamified adaptive tests. The findings suggest that the gamified adaptive test can significantly help learners improve their motivation and foster enhanced language proficiency performance in a blended learning environment.

The study verifies the enhancing effect of gamified evaluation on the internalization of students' motivation (Özhan & Kocadere, 2020) and confirms the regulatory role of gamified elements in blended learning, aiding in increasing student participation and satisfaction (Jayawardena et al., 2021). Furthermore, the positive role of gamification in language learning and as a tool for reinforcing assessment is corroborated (Priyanti et al., 2019). This study extends our understanding of the motivational impacts of gamification in younger education settings, suggesting that while previous research indicated a lesser effect on intrinsic motivation for young learners (Mekler et al., 2017), the adaptive mode of gamified assessment could enhance students' sense of competency and, thereby, their intrinsic motivation. Additionally, this research integrates the relationship between motivation and academic level, suggesting that the transition from external motivations provided by rewards in adaptive gamified assessments to enjoying personalized feedback and growth can enhance satisfaction in blended learning, facilitating the internalization of motivation towards participation and language proficiency.

In terms of managerial and policy implications, the introduction of gamification into blended learning environments is advisable, not only as a teaching method but also as an assessment tool. Gamified assessment, with its interactive nature, can be used to alleviate negative impacts of language learning, such as anxiety and lack of confidence, especially for young learners who may benefit from guided external motivational factors. Educators should implement a variety of formative assessments using technology in evaluation activities, especially to promote active learning.

However, the short duration of the experiment and the limited sample size are insufficient to substantiate long-term positive effects of gamification. Future research should examine students' intrinsic motivation in more detail, with longitudinal tracking to observe the internalization of motivation. The inclusion of delayed tests can help study the long-term effects of gamification. Further research could also compare adaptive gamified experiments with non-adaptive gamified experiments to clarify how gamification influences the internalization of students' motivation.

Data availability

All data and questionnaires are provided in the attachment.

Data will be available on request due to privacy/ethical restrictions.

Code availability

Not Applicable.

References

Abduh, M. Y. M. (2021). Full-time online assessment during COVID-19 lockdown: EFL teachers’ perceptions. Asian EFL Journal, 28(1.1), 26–46.

Akhtar, H., Silfiasari, Vekety, B., & Kovacs, K. (2023). The effect of computerized adaptive testing on motivation and anxiety: a systematic review and meta-analysis. Assessment, 30(5), 1379–1390.

Albiladi, W. S., & Alshareef, K. K. (2019). Blended learning in English teaching and learning: A review of the current literature. Journal of Language Teaching and Research, 10(2), 232–238.

Alsawaier, R. S. (2018). The effect of gamification on motivation and engagement. The International Journal of Information and Learning Technology, 35(1), 56–79.

Alt, D. (2023). Assessing the benefits of gamification in mathematics for student gameful experience and gaming motivation. Computers & Education, 200, 104806.

Attali, Y., & Arieli-Attali, M. (2015). Gamification in assessment: Do points affect test performance? Computers & Education, 83, 57–63.

Barney, M., & Fisher, W. P., Jr. (2016). Adaptive measurement and assessment. Annual Review of Organizational Psychology and Organizational Behavior, 3, 469–490.

Bennani, S., Maalel, A., & Ben Ghezala, H. (2022). Adaptive gamification in E-learning: A literature review and future challenges. Computer Applications in Engineering Education, 30(2), 628–642.

Bizami, N. A., Tasir, Z., & Kew, S. N. (2023). Innovative pedagogical principles and technological tools capabilities for immersive blended learning: A systematic literature review. Education and Information Technologies, 28(2), 1373–1425.

Boelens, R., De Wever, B., & Voet, M. (2017). Four key challenges to the design of blended learning: A systematic literature review. Educational Research Review, 22, 1–18.

Bolat, Y. I., & Taş, N. (2023). A meta-analysis on the effect of gamified-assessment tools’ on academic achievement in formal educational settings. Education and Information Technologies, 28(5), 5011–5039.

Boughida, A., Kouahla, M. N., & Lafifi, Y. (2024). emoLearnAdapt: A new approach for an emotion-based adaptation in e-learning environments. Education and Information Technologies, 1–55. https://doi.org/10.1007/s10639-023-12429-6

Bower, M., Dalgarno, B., Kennedy, G., Lee, M. J. W., & Kenney, J. (2015). Design and implementation factors in blended synchronous learning environments: Outcomes from a cross-case analysis. Computers & Education, 86, 1–17.

Brown, M. G. (2016). Blended instructional practice: A review of the empirical literature on instructors’ adoption and use of online tools in face-to-face teaching. The Internet and Higher Education, 31, 1–10.

Brown, H. D., & Abeywickrama, P. (2004). Language assessment: Principles and classroom practices. Pearson Education.

Buckley, P., & Doyle, E. (2016). Gamification and student motivation. Interactive Learning Environments, 24(6), 1162–1175.

Meşe, C., & Dursun, Ö. Ö. (2019). Effectiveness of gamification elements in blended learning environments. Turkish Online Journal of Distance Education, 20(3), 119–142.

Chapman, J. R., Kohler, T. B., Rich, P. J., & Trego, A. (2023). Maybe we’ve got it wrong. An experimental evaluation of self-determination and Flow Theory in gamification. Journal of Research on Technology in Education, 1–20. https://doi.org/10.1080/15391523.2023.2242981

Chiu, T. K. (2021). Digital support for student engagement in blended learning based on self-determination theory. Computers in Human Behavior, 124, 106909.

Chuang, H. H., Weng, C. Y., & Chen, C. H. (2018). Which students benefit most from a flipped classroom approach to language learning? British Journal of Educational Technology, 49(1), 56–68.

Cortés-Pérez, I., Zagalaz-Anula, N., López-Ruiz, M. D. C., Díaz-Fernández, Á., Obrero-Gaitán, E., & Osuna-Pérez, M. C. (2023). Study based on gamification of tests through Kahoot!™ and reward game cards as an innovative tool in physiotherapy students: A preliminary study. Healthcare, 11(4), 578. https://doi.org/10.3390/healthcare11040578

Coşkun, L. (2023). An advanced modeling approach to examine factors affecting preschool children’s phonological and print awareness. Education and Information Technologies, 1–28. https://doi.org/10.1007/s10639-023-12216-3

Cuesta Medina, L. (2018). Blended learning: Deficits and prospects in higher education. Australasian Journal of Educational Technology, 34(1). https://doi.org/10.14742/ajet.3100

Dahlstrøm, C. (2012). Impacts of gamification on intrinsic motivation. Education and Humanities Research, 1–11.

Danka, I. (2020). Motivation by gamification: Adapting motivational tools of massively multiplayer online role playing games (MMORPGs) for peer-to-peer assessment in connectivist massive open online courses (cMOOCs). International Review of Education, 66(1), 75–92.

Deci, E. L., & Ryan, R. M. (2016). Optimizing students’ motivation in the era of testing and pressure: A self-determination theory perspective. In Building autonomous learners (pp. 9–29). https://doi.org/10.1007/978-981-287-630-0_2

Deci, E. L., & Ryan, R. M. (2012). Self-determination theory. Handbook of Theories of Social Psychology, 1(20), 416–436.

Dehghanzadeh, H., Farrokhnia, M., Dehghanzadeh, H., Taghipour, K., & Noroozi, O. (2023). Using gamification to support learning in K‐12 education: A systematic literature review. British Journal of Educational Technology, 55(1), 34–70. https://doi.org/10.1111/bjet.133

Dumford, A. D., & Miller, A. L. (2018). Online learning in higher education: Exploring advantages and disadvantages for engagement. Journal of Computing in Higher Education, 30, 452–465.

Ghaban, W., & Hendley, R. (2019). How different personalities benefit from gamification. Interacting with Computers, 31(2), 138–153.

Göksün, D. O., & Gürsoy, G. (2019). Comparing success and engagement in gamified learning experiences via Kahoot and Quizizz. Computers & Education, 135, 15–29.

Hassan, M. A., Habiba, U., Majeed, F., & Shoaib, M. (2021). Adaptive gamification in e-learning based on students’ learning styles. Interactive Learning Environments, 29(4), 545–565.

Hill, J., & Smith, K. (2023). Visions of blended learning: Identifying the challenges and opportunities in shaping institutional approaches to blended learning in higher education. Technology, Pedagogy and Education, 32(3), 289–303.

Hong, J. C., Hwang, M. Y., Liu, Y. H., & Tai, K. H. (2022). Effects of gamifying questions on English grammar learning mediated by epistemic curiosity and language anxiety. Computer Assisted Language Learning, 35(7), 1458–1482.

Jayawardena, N. S., Ross, M., Quach, S., Behl, A., & Gupta, M. (2021). Effective online engagement strategies through gamification: A systematic literature review and a future research agenda. Journal of Global Information Management (JGIM), 30(5), 1–25.

Khaldi, A., Bouzidi, R., & Nader, F. (2023). Gamification of e-learning in higher education: a systematic literature review. Smart Learning Environments, 10(1). https://doi.org/10.1186/s40561-023-00227-z

Kwon, H. Y., & Özpolat, K. (2021). The dark side of narrow gamification: Negative impact of assessment gamification on student perceptions and content knowledge. INFORMS Transactions on Education, 21(2), 67–81.

Llorens-Largo, F., Gallego-Durán, F. J., Villagrá-Arnedo, C. J., Compañ-Rosique, P., Satorre-Cuerda, R., & Molina-Carmona, R. (2016). Gamification of the learning process: Lessons learned. IEEE Revista Iberoamericana de Tecnologias del Aprendizaje, 11(4), 227–234. https://doi.org/10.1109/rita.2016.2619138

Mekler, E. D., Brühlmann, F., Tuch, A. N., & Opwis, K. (2017). Towards understanding the effects of individual gamification elements on intrinsic motivation and performance. Computers in Human Behavior, 71, 525–534.

Munawir, A., & Hasbi, N. P. (2021). The effect of using Quizizz to EFL students’ engagement and learning outcome. English Review: Journal of English Education, 10(1), 297–308.

Muñoz, J. L. R., Ojeda, F. M., Jurado, D. L. A., Peña, P. F. P., Carranza, C. P. M., Berríos, H. Q., … & Vasquez-Pauca, M. J. (2022). Systematic review of adaptive learning technology for learning in higher education. Eurasian Journal of Educational Research, 98(98), 221–233.

Oliveira, W., Hamari, J., Shi, L., Toda, A. M., Rodrigues, L., Palomino, P. T., & Isotani, S. (2023). Tailored gamification in education: A literature review and future agenda. Education and Information Technologies, 28(1), 373–406.

Özhan, ŞÇ., & Kocadere, S. A. (2020). The effects of flow, emotional engagement, and motivation on success in a gamified online learning environment. Journal of Educational Computing Research, 57(8), 2006–2031.

Pecheux, C., & Derbaix, C. (1999). Children and attitude toward the brand: A new measurement scale. Journal of Advertising Research, 39(4), 19–19.

Pitoyo, M. D., & Asib, A. (2020). Gamification-Based Assessment: The Washback Effect of Quizizz on Students’ Learning in Higher Education. International Journal of Language Education, 4(1), 1–10.

Poon, J. (2013). Blended learning: An institutional approach for enhancing students’ learning experiences. Journal of Online Learning and Teaching, 9(2), 271.

Priyanti, N. W. I., Santosa, M. H., & Dewi, K. S. (2019). Effect of Quizizz towards the eleventh-grade English students’ reading comprehension in mobile learning context. Language and Education Journal Undiksha, 2(2), 71–80.

Ramírez-Donoso, L., Pérez-Sanagustín, M., Neyem, A., Alario-Hoyos, C., Hilliger, I., & Rojos, F. (2023). Fostering the use of online learning resources: Results of using a mobile collaboration tool based on gamification in a blended course. Interactive Learning Environments, 31(3), 1564–1578.

Rasheed, R. A., Kamsin, A., & Abdullah, N. A. (2020). Challenges in the online component of blended learning: A systematic review. Computers & Education, 144, 103701.

Reiser, B. J., & Tabak, I. (2014). Scaffolding. In The Cambridge Handbook of the Learning Sciences (pp. 44–62). https://doi.org/10.1017/cbo9781139519526.005

Rodrigues, L., Palomino, P. T., Toda, A. M., Klock, A. C., Oliveira, W., Avila-Santos, A. P., … & Isotani, S. (2021). Personalization improves gamification: Evidence from a mixed-methods study. Proceedings of the ACM on Human-Computer Interaction, 5(CHI PLAY), 1–25.

Ryan, R. M., & Deci, E. L. (2000). Intrinsic and extrinsic motivation: Classic definitions and new directions. Contemporary Educational Psychology, 25, 54–67.

Saleem, A. N., Noori, N. M., & Ozdamli, F. (2022). Gamification applications in E-learning: A literature review. Technology, Knowledge and Learning, 27(1), 139–159.

Sanchez, D. R., Langer, M., & Kaur, R. (2020). Gamification in the classroom: Examining the impact of gamified quizzes on student learning. Computers & Education, 144, 103666.

Schell, J. (2008). The Art of Game Design: A Book of Lenses. Elsevier Inc.

Taşkın, N., & Kılıç Çakmak, E. (2023). Effects of gamification on behavioral and cognitive engagement of students in the online learning environment. International Journal of Human-Computer Interaction, 39(17), 3334–3345.

Yang, C. C., & Ogata, H. (2023). Personalized learning analytics intervention approach for enhancing student learning achievement and behavioral engagement in blended learning. Education and Information Technologies, 28(3), 2509–2528.

Funding

Open access funding provided by SCELC, Statewide California Electronic Library Consortium

Author information

Contributions

Zhihui Zhang, 60%, conceived and designed the experiments, performed the experiments, prepared the figures and/or tables, drafted the work.

Xiaomeng Huang, 40%, analyzed the data, performed the experiments, wrote the code, designed the software or performed the computation work, drafted the work, revised it.

Ethics declarations

Ethics approval

Consent to participate—(include appropriate consent statements).

Conflicts of interest/Competing interests

There are no conflicts of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhang, Z., Huang, X. Exploring the impact of the adaptive gamified assessment on learners in blended learning. Educ Inf Technol (2024). https://doi.org/10.1007/s10639-024-12708-w

DOI: https://doi.org/10.1007/s10639-024-12708-w