Abstract

Background

Electronic portfolios (ePortfolios) are valuable tools to scaffold workplace learning. Feedback is an essential element of the learning process, but its quality is often poor when incorporated in ePortfolios, and research on how to incorporate feedback into an ePortfolio design is scarce.

Objectives

To compare the ease of use, usefulness and attitude among three feedback formats integrated in an ePortfolio: open-text feedback, structured-text feedback and speech-to-text feedback.

Methods

In a mixed-methods experiment, we tested three feedback formats in an ePortfolio prototype with 85 participants from different healthcare disciplines. Participants provided feedback on students’ behaviour after observing video-recorded simulation scenarios. They then completed a questionnaire derived from the Technology Acceptance Model (TAM). The experiment ended with a semi-structured interview.

Results

Structured-text feedback received the highest scores on perceived ease of use, usefulness, and attitude. This type of feedback was preferred over open-text feedback (currently the standard) and speech-to-text feedback. However, the qualitative results indicated that speech-to-text feedback is potentially valuable for feedback input on-premise. Respondents would use it to record short feedback immediately after an incident as a reminder for more extensive written feedback later, or to record oral feedback given to a student.

Implications

Structured-text feedback was recommended over open-text feedback. The quality of the speech-to-text technology used in this experiment was insufficient for use in a professional ePortfolio, but the technology holds the potential to improve the feedback process and should be considered when designing new versions of ePortfolios for healthcare education.

1 Introduction

In healthcare education, there is a general shift towards competency-based education where students demonstrate learned knowledge and skills to achieve specific predetermined competencies (Ross et al., 2022). To acquire these new knowledge and skills, feedback, which can include self-, peer-, patient- and instructor feedback (Holmboe et al., 2015), is considered crucial to students’ learning process (Baird et al., 2016; Carter et al., 2017; Sidebotham et al., 2018; Tekian et al., 2017; Tickle et al., 2021; Van Ostaeyen et al., 2022).

Since the early 2000s, ePortfolios have replaced paper-based portfolios and have gone through a series of technical advancements (Buzzetto-More, 2006; Gray et al., 2019; Segaran & Hasim, 2021). Nevertheless, the input formats for feedback among stakeholders in ePortfolios have barely evolved. They are heavily text-based (Bing-You et al., 2017; Birks et al., 2016; Bramley et al., 2021), which may be a legacy from the original paper-based portfolios and challenges with handling media in the early days of the Internet (Marty et al., 2023; Siegle, 2002). In addition, feedback is often suboptimal and research on how to incorporate feedback into an ePortfolio design is scarce (Tickle et al., 2022).

Given the discrepancy between technological advancement and feedback format, there is a need to investigate new ways to capture feedback in ePortfolios. To improve the use of feedback in ePortfolios within healthcare education, we compared preferences for three feedback formats among participants from different disciplines within healthcare education. This leads to the following research objectives:

(1) to compare the usefulness, ease of use and attitude towards three feedback formats: open-text feedback, structured-text feedback and speech-to-text feedback.

(2) to explore recommendations for the design of feedback formats for future ePortfolios.

1.1 Implementation of feedback in ePortfolios

An ePortfolio (also known as a digital portfolio, online portfolio, e-portfolio, or eFolio) can be defined as a collection of electronic evidence assembled and managed by a user, usually online (Cedefop, 2023). The digital switch has brought several practical advantages to students and supervisors. ePortfolios offer wireless synchronization, protection against the loss of documents, no size restrictions, reduced printing costs, increased security, less duplication, ease of use, and digital notifications when feedback is provided (Janssens et al., 2022). Moores and Parks (2010) proposed 12 tips for introducing ePortfolios in health professions education, which have been updated with the introduction of newer technologies and possibilities (Siddiqui et al., 2022).

1.2 Benefits and challenges of effective feedback in clinical education

Effective feedback is defined as a process whereby learners obtain information about their work in order to appreciate the similarities and differences between the appropriate standards for any given work, and the qualities of work itself, in order to generate improved work (Baud & Molley, 2013). Effective feedback is central to supporting cognitive, technical and professional development in healthcare education (Archer, 2010). It plays an important role in helping the learner to reflect and in allowing progress to be made on the experience ladder. When it is systematically delivered from credible sources, individual feedback can change clinical performance (Veloski et al., 2006).

Research mainly focusses on the content of the feedback and provides guidelines on how to give feedback during assessments (Bing-You et al., 2017; Van der Leeuw & Slootweg, 2013). Some of the principles of effective feedback are that it should be clear, specific and based on direct observations. It should be communicated in an appropriate setting and focus on the performance, not on the individual. The wording should be neutral and non-judgmental and should emphasize positive aspects by being descriptive rather than evaluative (Qureshi, 2017).

Bing-You et al. (2017) argue that there are significant gaps in feedback. First, most of the feedback given to students is given orally or in writing. More progressive forms such as feedback cards, audio tapes and videotapes of feedback interactions are described in the literature in only a limited number of cases. Moreover, evaluations of the preferred type of feedback and its use are lacking.

Second, written feedback is time-consuming and, therefore, not given on the spot. Healthcare professionals work in a fast-paced environment and struggle to provide timely feedback and reflection on actions. Students and mentors on the work floor are challenged to balance teaching moments with the demands of patient care. An example of this struggle for qualitative feedback is described by Kamath et al. (2015) in their experimental study with a head-mounted high-definition video camera (GoPro HERO3). They recorded a patient during induction of general anaesthesia to provide qualitative feedback. This study highlights the need for a more dynamic and different type of feedback. The introduction of smartphones on the work floor in the healthcare disciplines opens up more opportunities for digital reflection (Marty et al., 2023).

Nonetheless, despite the attention to feedback, healthcare students have long indicated that they receive insufficient feedback (De Sumit et al., 2004; Liberman et al., 2005) and that it is often mainly noted down by the student, at the end of an internship (Tickle et al., 2022).

1.3 Speech-to-text Technology in Education

The domain of Human-Computer Interaction (HCI), which studies how humans and computers interact, has evolved at a fast pace over the years, making computers more ubiquitous, invisible and easier to work with (Weiser, 1991). Speech technology has proven its benefits and is integrated in commercially successful products such as smartphones, car controls and home speakers (Hoy, 2018).

In an educational context, Automatic Speech Recognition (ASR) technology and speech-to-text technology are less common (Shadiev et al., 2014). The technology supports deaf and hard-of-hearing students by compensating for missed spoken classroom information and translating auditory information into visual form (Kushalnagar et al., 2015). It can also help international students or students with cognitive or physical limitations to understand lectures better, take notes during these lectures, and finish their homework (Shadiev et al., 2014). As the technology matured, the target group broadened, and speech-to-text was adopted to assist not only students with special needs but also the general student population in a broad range of contexts, such as improving students’ understanding of learning content and offering guidance for reflective writing and homework (Hwang et al., 2012; Shadiev et al., 2014).

The usefulness and user experience of Natural User Interfaces (NUI) and other gesture interfaces are frequently evaluated by researchers and software developers (Guerino & Valentim, 2020). In a recent study comparing speech with text input for document processing, college students preferred typing over speech input even though they perceived speech input as simple and helpful (Tsai, 2021). Results showed that functional barriers (i.e. usage, value, and risk barriers) and psychological barriers (i.e. tradition and image barriers) positively affected users’ resistance to change (Tsai, 2021).

In sum, there is a discrepancy between available technology (e.g. AI, audio and video recordings, speech recognition) and the options for feedback implemented in current ePortfolios (Birks et al., 2016; Slade & Downer, 2020). Research is needed to confirm that new technology will increase the quality and efficiency of workplace-based feedback and assessment in professional education (Marty et al., 2023; van der Schaaf et al., 2017).

2 Method

2.1 Study Design

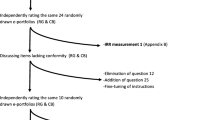

A within-subject, mixed-methods experiment was conducted between 15/02/2021 and 30/03/2021. The study is part of the SBO SCAFFOLD project to develop an evidence-based ePortfolio to support workplace learning in healthcare education. We compared three different feedback formats in an ePortfolio prototype: open-text feedback, structured-text feedback and speech-to-text feedback. Fig. 1 shows the sequence of the experiment.

First, a participant watched a video showing a fictitious scenario involving a patient, student and mentor in the hospital workplace (Fig. 2). Secondly, the participant provided feedback in the ePortfolio prototype. Participants were invited to imagine that they were in practice and to observe and give feedback as they would in real life. The participants gave feedback to the student in the videos through three different formats (described in detail in Data Collection Tools): open-text input, answers to three structured-text questions, or spoken input transcribed by the speech-to-text module. Thirdly, after watching 2 different scenarios and providing feedback twice in the same feedback format, participants completed a survey derived from the Technology Acceptance Model (TAM) (Davis, 1989) to measure their acceptance of the presented feedback format. Finally, at the end of the experiment, when the participant had tested all 3 feedback formats, a qualitative post-interview was conducted in which participants were asked about their experience and the use of the different feedback formats in real situations.

2.2 Participants

Eighty-five participants from different disciplines in healthcare education were recruited: 35 Midwives (EQF level 6), 23 Nurses (EQF level 5) and 27 Nurses (EQF level 6) (Europass, 2022). Participants had different roles: 26 students, 24 workplace mentors and 35 supervisors (Table 1), all from the region of Flanders, Belgium. All of them were familiar with the use of an ePortfolio, which only offered open-text feedback. Participants were recruited through mailings and posters at three different educational organisations. They received a small financial compensation for their contribution and transport costs. All participants signed an informed consent form before coming to the lab.

2.3 Data collection

The experiment took place under lab conditions because Covid countermeasures at the time restricted external visitors in hospitals. The experiment lasted between 60 and 90 min. Participants received a personal login for the ePortfolio and were assigned to one of four groups that determined the order of scenario presentation.

2.4 Scenarios through video

The researchers created eight videos of approximately three minutes each. Each video reflected a possible scenario between a student and a mentor in the workplace. The actors in these videos were the researchers that are involved in the project. All scenarios took place in a simulated hospital room or in the corridor next to the room where a simulation patient was being treated. For example, one scenario was an intravenous insertion to be performed by a student who was confronted with a patient showing little trust in her skills.

To prevent bias and order effects, the videos were presented in a different order depending on the participant's assigned group, resulting in a balanced design (Table 2). This study used a within-subjects design, in which participants had to provide feedback in all conditions (i.e. using all three feedback formats). Each participant watched 2 videos per format, thus 6 scenarios in total.

2.5 Feedback Formats

In the following paragraph we describe the technical details for the ePortfolio prototype that was used. Each time after watching a video scenario on the tablet, participants were presented with the general ePortfolio feedback page. They were instructed to press the ‘Add Feedback’ button. On the feedback page, participants were presented with one out of the three different feedback formats (i.e. open-, structured- and speech-to-text), depending on which group they were assigned. Each feedback module required different user actions in the ePortfolio.

Open-Text Feedback: the open-text feedback module (Fig. 3) showed an open text field with the title “Add feedback”. This type of open text input was common in ePortfolios, and had been used by participants before. There was no space restriction to add feedback.

Structured-Text Feedback: the structured-text feedback module (Fig. 4) had three questions with accompanying open text fields for the answers: (1) What were the strong points? What did the student do well? (2) What were the working points? What went less well? and (3) What concrete tips can you give the student to improve a subsequent similar situation? The questions were based on Pendleton’s rule (Pendleton, 1984), a model to provide feedback in advanced life support training.

Speech-to-text: The speech-to-text module (Fig. 5) converted speech fragments into text using advanced deep learning neural network algorithms for automatic speech recognition (ASR) (Google Cloud, n.d.). For our prototype, the API-powered module of Google was used. To record a fragment, the user tapped the record button, talked to the computer, and terminated the recording when finished. The recording was transcribed by default; however, the user could choose not to initiate transcription. Technically, the recorded audio file was sent to the Google cloud through the API, where the cloud processed the file and returned a written transcript. This process was done through a synchronous request: it could only transcribe one file at a time, while other files had to wait in a queue. The transcription typically took a few seconds, after which the user received a written interpretation of the audio file. Finally, the audio file was wiped from the cloud and stored on the mandated servers.

Along with the audio file, the transcribed text document was saved and could be accessed and edited by the user. The ePortfolio was a prototype used in Belgium, where Dutch was the main language.
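The one-file-at-a-time behaviour described above can be illustrated with a minimal sketch. It is not the prototype's code: the `Recording` class, the `process_queue` function and the `fake_speech_to_text` stand-in are hypothetical names introduced here, and no real cloud API is called.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Recording:
    user: str
    audio: bytes
    transcribe: bool = True  # transcription is on by default; the user may opt out
    transcript: Optional[str] = None


def process_queue(
    pending: deque,
    speech_to_text: Callable[[bytes], str],
) -> list:
    """Handle recordings strictly one at a time, in arrival order."""
    done = []
    while pending:
        rec = pending.popleft()  # next file waiting in the queue
        if rec.transcribe:
            # Blocking call: no other file is processed until it returns.
            rec.transcript = speech_to_text(rec.audio)
        done.append(rec)  # audio and transcript stay together for later editing
    return done


def fake_speech_to_text(audio: bytes) -> str:
    """Hypothetical stand-in for the cloud service; NOT a real API call."""
    return f"<transcript of {len(audio)} bytes>"


queue = deque([
    Recording("mentor_a", b"\x00" * 10),
    Recording("mentor_b", b"\x00" * 20, transcribe=False),
])
results = process_queue(queue, fake_speech_to_text)
```

Because each call blocks until the transcript is returned, a long recording delays every file queued behind it, which is the trade-off of a synchronous request.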

2.6 Survey feedback formats

Numerous theoretical models have been developed to explain users’ acceptance of technology; the Technology Acceptance Model (TAM) is one of the most cited. This model builds on the Theory of Reasoned Action (TRA) (Ajzen, 1991) in an effort to understand acceptance related specifically to the uptake of new technologies (Davis, 1989). The survey consisted of three subscales with 14 items for Perceived Usefulness (PU), 13 items for Perceived Ease of Use (PEOU) and 4 items for Attitude towards the technology. All 31 items were statements that had to be rated on a 5-point Likert scale ranging from “totally disagree” (1) to “totally agree” (5). In total, 8 items were reverse scored for the analysis, and the results reflect the average score for the subscale items. For example, PU was measured with items such as “In general, I think this feedback module is useful for my job as a supervisor” or “This feedback module helps me to save time”. The PEOU subscale consisted of items such as “I often make mistakes when using this feedback module” or “Interacting with this feedback module requires a lot of mental effort”. Finally, an example of the Attitude subscale is “Usage of this feedback module provides a lot of advantages”.
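The scoring rule described above (reverse scoring on a 5-point scale, then averaging per subscale) can be sketched as follows. This is an illustration only, not the analysis script used in the study: the item identifiers, the choice of which items are reverse scored, and the responses are all hypothetical.

```python
from statistics import mean

LIKERT_MIN, LIKERT_MAX = 1, 5


def reverse_score(rating: int) -> int:
    """Flip a rating on the 1-5 scale so that 1 becomes 5, 2 becomes 4, and so on."""
    return LIKERT_MIN + LIKERT_MAX - rating


def subscale_score(responses: dict, reversed_items: set) -> float:
    """Average the item ratings of one subscale after reverse scoring."""
    scored = [
        reverse_score(rating) if item in reversed_items else rating
        for item, rating in responses.items()
    ]
    return mean(scored)


# Hypothetical PEOU responses from one participant (item names are made up).
peou_responses = {"peou_01": 4, "peou_02": 2, "peou_03": 5, "peou_04": 1}
# Assumed negatively worded items, e.g. "I often make mistakes when using ...".
peou_reversed = {"peou_02", "peou_04"}

peou = subscale_score(peou_responses, peou_reversed)
```

Reverse scoring ensures that a higher subscale mean always indicates a more favourable evaluation, regardless of how an individual item was worded.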

2.7 Post-Interview

After the experiment, the qualitative post-interview was conducted by the same researcher. This phase involved a personal semi-structured interview lasting 10 to 15 min. All 85 participants participated in the post-interviews, but due to changes in the interview script, the first 12 participants did not rank the three formats by preference and were excluded from that analysis. The following questions were asked: Are there positive or negative remarks on the different feedback formats and do you see areas for improvement? Can you rank your preferred feedback formats from 1 to 3 and explain why? What is the possible impact of real-life situations in the workplace when using the feedback formats? All interviews were conducted in (omitted) (the native language of the participants) and were transcribed by the interviewer.

2.8 Data analysis

The data from the usability survey of the different feedback formats were analysed with JASP software (JASP Team, 2022). Separate repeated-measures ANOVA models were fitted for the three subscales (i.e. PEOU, PU and Attitude) with feedback format condition as a within-subjects factor with three levels (i.e. open, structured, speech-to-text). Degrees of freedom were corrected using Greenhouse–Geisser estimates when Mauchly’s test indicated that the assumption of sphericity had been violated, and partial eta squared effect sizes are reported. Pairwise comparisons between feedback format conditions were conducted with Holm-adjusted p values, and Cohen’s d effect sizes are reported.
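As a reading aid, the two quantities mentioned above can be sketched in a few lines. This is not the JASP procedure itself: the function names are introduced here for illustration, partial eta squared is recovered from a reported F statistic and its degrees of freedom, and the three unadjusted p values passed to the Holm step are hypothetical.

```python
def partial_eta_squared(f_value: float, df_effect: float, df_error: float) -> float:
    """Effect size recovered from an F statistic: SS_effect / (SS_effect + SS_error)."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def holm_adjust(p_values: list) -> list:
    """Holm step-down adjustment; returns adjusted p values in the input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices, smallest p first
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        candidate = min(1.0, (m - rank) * p_values[idx])
        running_max = max(running_max, candidate)  # keep adjusted values monotone
        adjusted[idx] = running_max
    return adjusted


# F statistics and degrees of freedom as reported in the Results section.
pu_effect = partial_eta_squared(14.185, 2, 168)
attitude_effect = partial_eta_squared(10.211, 1.84, 154.21)

# Hypothetical unadjusted p values for three pairwise comparisons.
adjusted = holm_adjust([0.01, 0.04, 0.03])
```

Rounded to two decimals, the two effect sizes come out at 0.14 and 0.11, and the hypothetical p values become 0.03, 0.06 and 0.06 in their original order.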

To analyse the data from the post-interviews, thematic content analysis was used (Guest et al., 2014) in order to find common themes in participants’ responses. This means that data were explored without any predetermined framework and themes were inductively drawn from the data. Two researchers (ODR, JM) read the open-ended responses and interview notes several times to get acquainted with the data. Once completed, the emerging themes were discussed between the same researchers.

3 Results

We first describe the quantitative results from the survey, followed by the qualitative results from the post-interviews. The sample of participants comprised 10 men and 75 women. They were between 18 and 58 years old; the mean age was 35.5 years (SD 12.1).

3.1 Survey on evaluation of feedback formats

Table 3 presents the self-reported perceived Usefulness (PU), Ease of Use (PEOU) and Attitude towards the feedback formats used in the ePortfolio. The results are elaborated in the following paragraphs.

Usefulness

The analysis confirmed that the three feedback format conditions significantly differed in reported PU, F(2,168) = 14.185, p < 0.001, ηp2 = 0.14 (see Fig. 6). Post-hoc analysis revealed a significant difference in reported usefulness between all conditions. The structured-feedback format was perceived as the most useful, followed by respectively the open-feedback format and the speech-to-text format.

Ease of Use

Similarly, the analysis for PEOU, F(1.85,155.75) = 52.654, p < 0.001, ηp2 = 0.39, also indicated there were differences between the feedback formats (Fig. 7). Consistently, participants reported a greater subjective experience of ease of use for both the structured- and open-feedback formats. Compared to these formats, the speech-to-text feedback format was perceived as more difficult to use (with a mean difference of 0.81 on the scale).

Attitude

Finally, the analysis for attitude also confirmed a similar difference between the feedback formats, F(1.84,154.21) = 10.211, p < 0.001, ηp2 = 0.11. Users reported a more positive attitude towards, and thus preferred, both the structured- and open-feedback formats compared to the speech-to-text format (Fig. 8).

3.2 Preferred feedback format

In the post-interview, participants were asked to rank the three feedback formats in order of preference. Results of the non-parametric Friedman test indicated a statistically significant difference in preference between feedback formats, χ2(2) = 26.042, p < 0.001, Kendall’s W = 0.020. Post hoc analysis with Conover’s comparisons showed that structured feedback was ranked higher than open- and speech-to-text feedback, all p’s < 0.001. There was no significant difference in ranking between open and speech-to-text feedback, p = 0.559.
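For readers unfamiliar with the test, the sketch below computes the Friedman statistic and Kendall's W from complete rankings without ties, using the textbook formulas. The four rankings are hypothetical and are not the study data, and the function names are introduced here for illustration only.

```python
def friedman_chi_square(rankings: list) -> float:
    """Friedman statistic for complete rankings without ties."""
    n = len(rankings)      # number of participants
    k = len(rankings[0])   # number of formats ranked
    rank_sums = [sum(row[j] for row in rankings) for j in range(k)]
    return 12 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3 * n * (k + 1)


def kendalls_w(chi_square: float, n: int, k: int) -> float:
    """Kendall's coefficient of concordance derived from the Friedman statistic."""
    return chi_square / (n * (k - 1))


# Hypothetical rankings (1 = most preferred); columns: open, structured, speech.
rankings = [
    [2, 1, 3],
    [2, 1, 3],
    [3, 1, 2],
    [2, 1, 3],
]

chi_square = friedman_chi_square(rankings)
w = kendalls_w(chi_square, n=len(rankings), k=3)
```

W ranges from 0 (no agreement between participants) to 1 (identical rankings), so it serves as the effect size that accompanies the Friedman test.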

3.3 Thematic analysis of post-interviews

The qualitative post-interview revealed that, although participants did not score the speech-to-text feedback as the best format, many participants would like to use this type of oral feedback in their daily job. However, the quality of the transcription of the speech-to-text feedback was below participants’ expectations.

The analysis of the qualitative post-interviews resulted in eight themes, later refined to three based on their similarities: (1) Usability and quality of feedback, (2) New contexts of use, and (3) Shortcomings and recommendations to speech-to-text feedback. Table 4 summarizes the outcome per feedback format and theme.

Usability and quality of feedback

Below, we structure the positive and negative comments about the perceived usability and quality of feedback for each feedback format:

(1) Open-text feedback was the common way to provide feedback in ePortfolios. Consequently, the participants perceived this format as easy to use and especially suited to mentors and supervisors already experienced in providing feedback. It was praised because users felt free to write feedback as they were used to doing, and ideally, this form of feedback resulted in a well-considered and nuanced story. However, participants recognized the risk of telling an incomplete or too one-sided story (e.g. only negative). Some participants critically reflected on this format and mentioned that open-text feedback did not challenge them to improve the quality of their feedback, as the directed questions (structured feedback) did, or as incorporating audio-recorded feedback fragments into the portfolio during the day does with speech-to-text feedback.

(2) Structured-text feedback in this experiment proposed three questions: 1. What were the strong points? What did the student do well?; 2. What were the working points? What went less well?; 3. What concrete tips can you give the student to improve a subsequent similar treatment? The majority of participants mentioned that the questions guided them to organise their thoughts and to provide balanced feedback with positive elements and points of improvement. However, the feedback format with an open box under each question gave some participants, especially experienced mentors and supervisors, the feeling of repetition if answers to questions overlapped with feedback that they already had written under another question. Some participants recommended adding a fourth text box with an open place to add a personalised title, followed by feedback that did not fit into one of the pre-given boxes.

“This (structured-text) feedback format takes longer than open-text feedback. For the student this is clear, he receives concrete action points; to me, it doesn't matter because I'm already doing this (giving structured feedback) anyway” – R35.

(3) Speech-to-text feedback was new for all participants. In general, participants found it hard to provide oral feedback to the computer or felt awkward doing so. Some found the feedback lacked structure and could, therefore, be confusing for the person receiving it. The intonation was lost when converting speech to text, so some nuances were not fully conveyed. Because of the need to rework and correct mistakes in the transcriptions, the tool was not time-efficient for the participants, rather the opposite. Participants spontaneously came up with solutions to improve the usability of the tool; an overview can be found below in the section on shortcomings and recommendations to speech-to-text feedback. However, they believed that, provided the technology improves, the content and the quality of speech-to-text feedback could become more accurate and detailed compared to the open- and structured-text formats. The main reason was that the recording could be made on-premise.

New contexts of use

Nowadays, mentors and supervisors often give feedback at home at the end of the day or on a free day, because of the high workload during patient care. The use of oral feedback during work shifts would give the possibility to shift away from the computer and give feedback immediately on-premise, in the hospital or workplace.

Instead of recording a complete feedback comment on a scenario, as was tested in this experiment, participants mentioned they would rather make short recordings when they noticed an important event in the workplace related to the student’s positive or negative performance or attitude. They would then provide more elaborate written feedback later on. By recording just a couple of sentences during the day, the feedback would be richer and more accurate.

Respondents also suggested recording the oral feedback when it is given to the student. The recordings would avoid possible discussions with students about what had or had not been said and would save time when writing the final assessment. In sum, the use of speech-to-text feedback in ePortfolios to take notes and to record oral feedback would be beneficial for the amount of feedback given to the students, a crucial element in ePortfolios. To make this shift from providing feedback at home or during shift hours to feedback on-premise, accessibility on the smartphone instead of a portable computer is important to consider when designing an ePortfolio. This shift to a new context of use was not encountered with structured-text and open-text feedback.

“I would record something quickly, during working hours. At home I would reread the transcript on PC and wonder if it came across the way I wanted it to” – R44.

“The advantage of recording immediately is that it is faster, and it is a reminder to myself. It feels like having a paper with me to quickly write something down” – R35.

Shortcomings and recommendations to speech-to-text feedback

The quality of the transcriptions was the biggest shortcoming of the speech-to-text format. The lack of punctuation and sentences that were misunderstood or transcribed completely differently were among the technical issues encountered. None of the participants could publish the transcribed text without first making corrections and reworking the text. Participants speaking a dialect or whose native language was not Dutch in particular experienced poor-quality speech-to-text transcription. In addition, the AI had difficulty transcribing specialized healthcare jargon.

Some participants were not able to record the feedback in one take or needed to retake the audio fragment. They requested a pause or stop button when recording feedback, or a memo list on the screen in the form of a post-it note, which they could consult while providing oral feedback to structure their monologue. Additionally, they preferred to be alone in a room to make the recording, which is not always possible in a real-life context in the hospital or other healthcare settings with shared lunch- and workspaces. The quality of the recording during the experiment was not influenced by environmental variables, but participants warned about possible problems such as Internet availability in the care unit, background noise, restrictions and rules around smartphone use, or transcription with different voices.

“I would prefer to leave feedback (with voice recording) without having anyone around me. Feedback has to grow, is a process, it will be incomplete if I say something quickly”. – R76

To improve the quality of the transcriptions, instructions could be provided. Based on the analysis of the interviews, we list participants’ recommendations:

- provide tutorials on how to make a speech-to-text recording in the form of a video tutorial or text: pay attention to articulation, avoid non-lexical utterances (e.g. “huh”, “uh”, or “erm”), avoid the use of dialects, use complete sentences and no phrases in bullet points, use formal wording, keep the microphone close to the source, limit the background noise

- make speech-to-text available in different languages to allow participants to record in their native language

- introduce speech-to-text as a tool to make notes and record oral feedback when given to a student instead of using it for final feedback. This would be beneficial for the amount of feedback given to the student during the period of the student’s internship

- combine the speech-to-text format with the structured-text feedback format. In this way, the participant will not need to provide long speech input, but shorter answers to the structured questions

- create pause and stop buttons during the recording to avoid having to repeat the recording

- create a “post-it note” list which can be personalised with bullet points and which is visible on the side of the screen while giving the oral feedback. It will help participants to structure their thoughts during the recording.

4 Discussion

To our knowledge, this study is novel, since feedback formats in ePortfolios are underexplored. The input formats for feedback among stakeholders in ePortfolios have barely evolved; they are predominantly based on text input and neglect new interface possibilities (Bing-You et al., 2017; Birks et al., 2016; Bramley et al., 2021). However, new technology is expected to increase the quality and efficiency of workplace-based feedback and assessment in professional education (Marty et al., 2023; van der Schaaf et al., 2017). Up until now, ePortfolios in healthcare education have often been designed without a focus on the users’ experiences. Understanding how users perceive their experiences can therefore lead to improved practices and policies with regard to ePortfolios and should be considered in future research (Slade & Downer, 2020; Strudler & Wetzel, 2008).

In this experimental study, we focussed on ePortfolio users to compare three feedback formats. Structured-text feedback received the highest scores on perceived usefulness, perceived ease of use and attitude towards technology. Participants preferred this type of feedback over open-text feedback, currently the standard, and the less common speech-to-text feedback. An unintended but valuable result of this study was the interest in using speech-to-text feedback not to record and transcribe complete feedback comments to students, as was foreseen in the experiment, but for short recordings or to record conversations with a student as proof that they had occurred. These insights are valuable and differ from the initial purpose of the system.

5 Limitations

Previous research has already shown that a major limitation of speech-to-text technology is its accuracy, and that only speech-to-text output with reasonable accuracy is useful and meaningful for students (Colwell et al., 2005; Hwang et al., 2012). Ranchal et al. (2013) observed that speakers using the technology adapt and moderate their speed and volume; they speak less spontaneously and with better fluency. Clear pronunciation, avoidance of non-lexical utterances (e.g. “huh”, “uh”, or “erm”), the use of short sentences, and good positioning relative to the microphone are common guidelines to improve the quality of transcriptions. In this paper, we add some recommendations on how the transcripts can be improved, e.g. with a pause button or written notes next to the recording button.

All recommendations should be read bearing in mind that this ePortfolio prototype used the Google Cloud speech-to-text application in Dutch in November 2021, probably the most advanced commercially available product on the market at that time (Google Cloud, n.d.). However, our experiment indicated that ease of use, usability and attitude scored below the expectations of the participants. The product website mentioned that it is challenging for the API to create transcripts of specialized domain jargon (legal, medical, …), which certainly applies to this experiment. Outdoor or noisy environments can also affect the resulting transcript, but this effect could be minimized in this experiment thanks to the lab setting in which it took place. It should, however, be considered in subsequent research in hospital settings.

Our study was conducted with respondents who had good computer skills and were experienced in giving feedback. Years of typing experience and typing speed were not questioned or captured in advance, which is a limitation of our study. However, we noticed that this impacted the preferred feedback format: especially the older participants (mentors and supervisors) preferred speech technology because of their lower typing skills in comparison with the younger students. This study was limited to the region of Flanders, Belgium; the results may vary in regions with different computer and feedback skills.

Another shortcoming of the study was that participants were questioned in a lab, outside their work context. This was due to the COVID-19 regulations at that time, which restricted external visitors in hospitals. Because of this, we could not take into account all real-life situations that could have had an impact on the preferred feedback format, such as being with several people in a crowded room when feedback was given or receiving calls during the feedback. Also, the cases on which participants provided feedback were recorded on video in several simulated scenarios and were thus not real-life scenarios in the hospital. However, the lab environment gave researchers the possibility to test with consistent quality of output and more control in an experimental design. The differences with the real-life context were later discussed in a qualitative post-interview, which gives us the possibility to prepare a follow-up study on a smaller scale with a more evolved prototype.

The aim for future research is to translate the recommendations into a working prototype of an ePortfolio. The present study focussed on the preference for a feedback format, not on the content or the quality of the feedback given. Future research will, among other things, compare the quality of the given feedback between the different feedback formats, and study how feedback loops can be created and how competencies of students can be inputted and visualised. In this upcoming stage, different mock-ups will first be tested in a lab setting and later also tested and implemented in a hospital setting.

6 Conclusion

This study compares preferences for three feedback formats used in an ePortfolio for healthcare education using a mixed method design. It goes even further by giving recommendations for the design of feedback formats for future ePortfolios based on user-experience. Structured-text feedback was the preferred feedback format in this study in terms of usefulness, ease of use and attitude, all p’s < 0.001. There was no significant difference in the rating between open-text feedback and speech-to-text feedback in the top three, p = 0.559. It is, thus, recommended to offer structured-text as a feedback format in ePortfolios.

However, these findings need to be nuanced with the qualitative data, which indicate that participants had high expectations of speech-to-text technology but that the quality of the speech-to-text transcriptions in the experiment was below expectations. This could be because the participants’ language was Dutch rather than English. Participants mentioned that they would use speech-to-text in a different way than offered in the experiment. The use of oral feedback would give them the possibility to shift away from the computer and give the feedback immediately on-premise. Instead of recording complete feedback reports, as tested in this study, participants mentioned they would rather make oral notes as a reminder for more elaborate written feedback later on. In sum, the quality of the speech-to-text technology used in this experiment was insufficient for use in an ePortfolio, but it holds potential to improve the process of feedback and increase the amount of feedback given to the students, a crucial element in ePortfolios and workplace learning.

The authors provide recommendations for designers and managers developing ePortfolios with feedback modules, among them a list of recommendations from participants to increase the quality of speech-to-text feedback.

Data availability

The datasets generated and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Ajzen, I. (1991). The theory of planned behavior. Organizational Behavior and Human Decision Processes, 50(2), 179–211. https://doi.org/10.1016/0749-5978(91)90020-T

Archer, J. C. (2010). State of the science in health professional education: Effective feedback. Medical Education, 44(1), 101–108. https://doi.org/10.1111/j.1365-2923.2009.03546.x

Baird, K., Gamble, J., & Sidebotham, M. (2016). Assessment of the quality and applicability of an e-portfolio capstone assessment item within a bachelor of midwifery program. Nurse Education in Practice, 20, 11–16. https://doi.org/10.1016/j.nepr.2016.06.00

Boud, D., & Molloy, E. (2013). Rethinking models of feedback for learning: The challenge of design. Assessment & Evaluation in Higher Education, 38, 698–712.

Bing-You, R., Hayes, V., Varaklis, K., Trowbridge, R., Kemp, H., & McKelvy, D. (2017). Feedback for Learners in Medical Education: What Is Known? A Scoping Review. Academic Medicine, 92(9), 1346–1354. https://doi.org/10.1097/ACM.0000000000001578

Birks, M., Hartin, P., Woods, C., Emmanuel, E., & Hitchins, M. (2016). Students’ perceptions of the use of eportfolios in nursing and midwifery education. Nurse Education in Practice, 18, 46–51.

Bramley, A. L., Thomas, C. J., Mc Kenna, L., & Itsiopoulos, C. (2021). E-portfolios and Entrustable Professional Activities to support competency-based education in dietetics. Nursing & Health Sciences, 23(1), 148–156. https://doi.org/10.1111/nhs.12774

Buzzetto-More, N. (2006). Using electronic portfolios to build information literacy. Glob Digital Business Rev., 1(1), 6–11.

Carter, A. G., Creedy, D. K., & Sidebotham, M. (2017). Critical thinking evaluation in reflective writing: Development and testing of carter assessment of critical thinking in midwifery. Midwifery, 54, 73–80. https://doi.org/10.1016/j.midw.2017.08.003

Cedefop. (2023, March 3). https://www.cedefop.europa.eu/en/tools/vet-glossary/glossary?search=eportfolio

Colwell, C., Jelfs, A., & Mallett, E. (2005). Initial requirements of deaf students for video: Lessons learned from an evaluation of a digital video application. Learning, Media and Technology, 30(2), 201–217.

Davis, F. D. (1989). Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Quarterly, 13(3), 319–339.

De Sumit, K., Henke, P. K., Ailawadi, G., Dimick, J. B., & Colletti, L. M. (2004). Attending, house officer, and medical student perceptions about teaching in the third-year medical school general surgery clerkship. Journal of the American College of Surgeons, 199(6), 932–942.

Europass. (2022, February 14).

Google Cloud. (n.d.). Retrieved January 25, 2023, from https://cloud.google.com/speech-to-text

Gray, M., Downer, T., & Capper, T. (2019). Australian midwifery student’s perceptions of the benefits and challenges associated with completing a portfolio of evidence for initial registration: Paper based and ePortfolios. Nurse Education in Practice, 39, 37–44. https://doi.org/10.1016/j.nepr.2019.07.003

Guerino, G. C., & Valentim, N. M. C. (2020). Usability and user experience evaluation of natural user interfaces: A systematic mapping study. IET Software, 14(5), 451–467. https://doi.org/10.1049/iet-sen.2020.0051

Guest, G., MacQueen, K., & Namey, E. (2014). Introduction to Applied Thematic Analysis. Applied Thematic Analysis, 3–20. https://doi.org/10.4135/9781483384436.n1

Holmboe, E. S., Yamazaki, K., Edgar, L., Conforti, L., Yaghmour, N., Miller, R. S., & Hamstra, S. J. (2015). Reflections on the First 2 Years of Milestone Implementation. Journal of Graduate Medical Education, 7(3), 506–511. https://doi.org/10.4300/JGME-07-03-43

Hoy, M. B. (2018). Alexa, Siri, Cortana, and More: An Introduction to Voice Assistants. Medical Reference Services Quarterly, 37(1), 81–88. https://doi.org/10.1080/02763869.2018.1404391

Hwang, A., Truong, K., & Mihailidis, A. (2012). Using participatory design to determine the needs of informal caregivers for smart home user interfaces. Proceedings of the 6th International Conference on Pervasive Computing Technologies for Healthcare, 41–48. https://doi.org/10.4108/icst.pervasivehealth.2012.248671

Janssens, O., Haerens, L., Valcke, M., Beeckman, D., Pype, P., & Embo, M. (2022). The role of ePortfolios in supporting learning in eight healthcare disciplines: A scoping review. Nurse Education in Practice, 63(May), 103418. https://doi.org/10.1016/j.nepr.2022.103418

JASP Team. JASP [Computer software]. (Version 0.16.3). (2022).

Kamath, A., Schwartz, A., Simpao, A., Lingappan, A., Rehman, M., & Galvez, J. (2015). Induction of general anesthesia is in the eye of the beholder—Objective feedback through a wearable camera. Journal of Graduate Medical Education, 7(2), 268–269. https://doi.org/10.4300/JGME-D-14-00680.1

Kushalnagar, R. S., Behm, G. W., Kelstone, A. W., & Ali, S. S. (2015). Tracked Speech-To-Text Display: Enhancing Accessibility and Readability of Speech-To-Text Categories and Subject Descriptors. ASSETS, 15, 223–230.

Liberman, A. S., Liberman, M., Steinert, Y., McLeod, P., & Meterissian, S. (2005). Surgery residents and attending surgeons have different perceptions of feedback. Medical Teacher, 27(5), 470–472. https://doi.org/10.1080/0142590500129183

Marty, A. P., Linsenmeyer, M., George, B., Young, J. Q., Breckwoldt, J., & ten Cate, O. (2023). Mobile technologies to support workplace-based assessment for entrustment decisions: guidelines for programs and educators: AMEE Guide No. 154. Medical Teacher, 1–11. https://doi.org/10.1080/0142159x.2023.2168527

Moores, A., & Parks, M. (2010). Twelve tips for introducing E-Portfolios with undergraduate students. Medical Teacher, 32(1), 46–49. https://doi.org/10.3109/01421590903434151

Pendleton, D. (1984). The consultation: An approach to learning and teaching (p. 6). Oxford University Press.

Qureshi, N. S. (2017). Giving effective feedback in medical education. The Obstetrician & Gynaecologist, 19(3), 243–248. https://doi.org/10.1111/tog.12391

Ranchal, R., Taber-Doughty, T., Guo, Y., Bain, K., Martin, H., Paul Robinson, J., & Duerstock, B. S. (2013). Using speech recognition for real-time captioning and lecture transcription in the classroom. IEEE Transactions on Learning Technologies, 6(4), 299–311. https://doi.org/10.1109/TLT.2013.21

Ross, S., Pirraglia, C., Aquilina, A. M., & Zulla, R. (2022). Effective competency-based medical education requires learning environments that promote a mastery goal orientation: A narrative review. Medical Teacher, 44(5), 527–534. https://doi.org/10.1080/0142159X.2021.2004307

Segaran, M. K., & Hasim, Z. (2021). SELF-REGULATED LEARNING THROUGH ePORTFOLIO: A META-ANALYSIS. Malaysian Journal of Learning and Instruction, 18(1), 131–156. https://doi.org/10.32890/MJLI2021.18.1.6

Shadiev, R., Hwang, W., Chen, N., & Huang, Y. (2014). Review of Speech-to-Text Recognition Technology for Enhancing Learning. Journal of Educational Technology & Society, 17(4), 65–84.

Siddiqui, Z. S., Fisher, M. B., Slade, C., Downer, T., Kirby, M. M., McAllister, L., Isbel, S. T., & Brown Wilson, C. (2022). Twelve tips for introducing E-Portfolios in health professions education. Medical Teacher, 0(0), 1–6. https://doi.org/10.1080/0142159X.2022.2053085

Sidebotham, M., Baird, K., Walters, C., & Gamble, J. (2018). Preparing student midwives for professional practice: Evaluation of a student e-portfolio assessment item. Nurse Education in Practice, 32(July), 84–89. https://doi.org/10.1016/j.nepr.2018.07.008

Siegle, D. (2002). Creating a living portfolio: Documenting student growth with electronic portfolios. Gifted Child Today, 25(3), 60–63.

Slade, C., & Downer, T. (2020). Students’ conceptual understanding and attitudes towards technology and user experience before and after use of an ePortfolio. Journal of Computing in Higher Education, 32(3), 529–552. https://doi.org/10.1007/s12528-019-09245-8

Strudler, N., & Wetzel, K. (2008). Costs and Benefits of Electronic Portfolios in Teacher Education: Faculty Perspectives. Journal of Computing in Teacher Education, 24(4), 135–142. http://search.ebscohost.com/login.aspx?direct=true&db=ehh&AN=33063380&site=ehost-live&scope=site

Tekian, A., Watling, C. J., Roberts, T. E., Steinert, Y., & Norcini, J. (2017). Qualitative and quantitative feedback in the context of competency-based education. Medical Teacher, 39(12), 1245–1249. https://doi.org/10.1080/0142159X.2017.1372564

Tickle, N., Gamble, J., & Creedy, D. (2021). Feasibility of a novel framework to routinely survey women online about their continuity of care experiences with midwifery students. Nurse Education in Practice, 55, 84–89. https://doi.org/10.1016/j.nepr.2021.103176

Tickle, N., Creedy, D., Carter, A., & Gamble, J. (2022). The use of eportfolios in pre-registration health professional clinical education: An integrative review. Nurse Education Today, 117(June), 105476. https://doi.org/10.1016/j.nedt.2022.105476

Tsai, L. L. (2021). Why College Students Prefer Typing over Speech Input: The Dual Perspective. IEEE Access, 9, 119845–119856. https://doi.org/10.1109/ACCESS.2021.3107457

Van der Leeuw, R. M., & Slootweg, I. A. (2013). Twelve tips for making the best use of feedback. Medical Teacher, 35(5), 348–351. https://doi.org/10.3109/0142159X.2013.769676

van der Schaaf, M., Donkers, J., Slof, B., Moonen-van Loon, J., van Tartwijk, J., Driessen, E., Badii, A., Serban, O., & Ten Cate, O. (2017). Improving workplace-based assessment and feedback by an E-portfolio enhanced with learning analytics. Educational Technology Research and Development, 65(2), 359–380. https://doi.org/10.1007/s11423-016-9496-8

Van Ostaeyen, S., Embo, M., Schellens, T., & Valcke, M. (2022). Training to Support ePortfolio Users During Clinical Placements: A Scoping Review. Medical Science Educator, 32(4), 921–928. https://doi.org/10.1007/s40670-022-01583-0

Veloski, J., Boex, J., Grasberger, M., Evans, A., & Wolfson, D. B. (2006). Systematic review of the literature on assessment, feedback and physicians’ clinical performance. Medical Teacher, 28(2), 117–128.

Weiser, M. (1991). The computer for the 21st century. Scientific American (International Edition), 265, 66–75.

Acknowledgements

We would like to thank Yannick Gils and Iwan Lemmens from Cronos Foursevens for the development of the prototype and the technical input in this paper. We thank Helena Demey and Clara Wasiak for their help with the recruitment and other support. Finally, we would like to thank our participants who, although they were healthcare professionals, were willing to participate in our study during the COVID-19 pandemic.

Ethics declarations

Ethics approval and consent to participate

Ethical approval was provided by Ghent University, Faculty of Political and Social Sciences. This study was funded by Flemish Research Foundation as a part of a funded research project, http://www.sbo-scaffold.com/EN. FWO-registration number of the fellowship/project: S003219N_.

Permission to perform the research was obtained from the deans, program directors, heads of department, appointed student representatives, and departmental staff. The full procedure was also subjected to the legal requirements of admission and selection of all four universities and in agreement with the federal legislation.

Conflict of Interest

None.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

De Ruyck, O., Embo, M., Morton, J. et al. A comparison of three feedback formats in an ePortfolio to support workplace learning in healthcare education: a mixed method study. Educ Inf Technol 29, 9667–9688 (2024). https://doi.org/10.1007/s10639-023-12062-3

DOI: https://doi.org/10.1007/s10639-023-12062-3