Abstract

The purpose of this study was to examine the effects of technology support, teachers’ confidence in using technology, their beliefs about using technology, and their perceived skills on their use of instructional and application software. The data were from 1335 K-12 teachers who took part in the Technology Uses and Perceptions Survey (TUPS) study. Teachers were located in 40 different institutions across the state of Florida. The findings showed that perceived technology skills were the most significant factor in teachers’ use of both instructional and application software. Therefore, professional development targeting teachers’ perceived skills or technology competencies might help increase their use of instructional and application software. Confidence and comfort using technology had direct, positive effects on both instructional and application software use. In addition, the total effect of support from technology specialists was larger for instructional software use than for application software use. As evidenced by the models developed in this research, teachers’ perceived technology skills directly and positively affected their beliefs about the usefulness of instructional and application software. By emphasizing the combined effect of a collective set of factors in explaining teacher use of technology, this study addresses gaps in a literature on technology use that has concentrated largely on demographic factors and technology availability.


1 Introduction

A considerable number of resources, reform efforts, and policy changes have been devoted to equipping K-12 schools with technology that enables all students to be successful (Ritzhaupt et al. 2012). As access to technology, software, and applications has expanded, educators and researchers have paid increasing attention to technology integration and use (Fernandes et al. 2020; Russell et al. 2003). Given this growing interest in technology and its use over the past two decades, understanding teachers’ viewpoints and perceptions about technology use in K-12 settings has become critically important (Mueller et al. 2008).

The literature on technology use in schools is abundant. Although existing research enriches our understanding of technology use, a comprehensive and detailed account of teacher use of technology and its related factors is still limited. The first issue is that research on technology use has focused mostly on teachers’ integration of computers (laptop or desktop) (e.g., Inan and Lowther 2010; Tondeur et al. 2008). Little research has examined specific software that is closely aligned with instruction, or the applications teachers use in and out of the classroom for professional purposes.

The second issue is that, while the benefits of teacher use of technology are well recognized, research identifying the factors that contribute to this use has focused mainly on demographic variables of schools or teachers, such as teacher background (age, gender, and teaching experience) (Hsu and Kuan 2013; Shiue 2007), and on a few more specific factors, such as teacher readiness (Inan and Lowther 2010), pressure to use technology (O’Dwyer et al. 2003a, b), and contextual variables of schools, such as availability of and access to technology (Pelgrum 2001) and school policy on technology (Tondeur et al. 2008). Technology use is a complex domain to uncover. Even though demographic factors are important in explaining teacher use of technology, other significant factors are worth investigating. Rapid advances in technology, school-level reforms, and new opportunities to integrate technology into the classroom make it crucial to take a fresh look at the role of other factors that account for technology use in K-12 settings (Mueller et al. 2008). Given that the teacher is the most influential factor in integrating technology into the classroom (Scherer et al. 2019), a singular emphasis on teachers’ demographic characteristics is not sufficient. Researchers today need to focus more on individual variation among teachers in beliefs, attitudes, and skills (Mishra and Koehler 2006).

Another issue that limits our perspective is that the conceptualization of the factors affecting technology use, and their alignment with the technology use being measured, vary widely. For example, Karaca et al. (2013) examined the effect of teacher attitudes and beliefs on technology use, defined as the incorporation of technology into various professional practices, such as course delivery and communication. The operational definition of technology was generic and unspecified; it could have referred to anything from tablets to overhead projectors. Likewise, attitudes and beliefs were measured with general statements such as “technology use makes the lessons more student centered.” On the other hand, there are studies in which the alignment between the studied factors and technology use was well established. Mathews and Guarino (2000) used teachers’ perceptions of their ability to use computers to predict their actual use of computers. The instruments for both technology ability and technology use measured a specific technology: computers. For consistency in research, we believe that what is intended to be measured should align with the technology actually being used in the classroom.

The current study is unique in that it uses a specific set of instructional and application software and asks teachers to report their beliefs about, skills with, and perceived use of each. For example, teachers reported their perceived skill with simulation software, their belief in its usefulness, and the extent to which they use it. Hew and Brush (2007) supported the interconnectedness of skills, beliefs, and technology use with a conceptual model built on previous studies. They argue that these factors are related to and influence one another. Therefore, studies are needed that examine the correlates of technology use with well-defined factors so as to preserve the interrelations among them.

Lastly, previous studies investigating technology use incorporate crucial factors in ways that are either dispersed or blurry. Tondeur et al. (2008) examined teacher (structural and cultural) and school (cultural and contextual) characteristics and associated them with teachers’ computer use. Although the set of characteristics was broad enough to address important factors, it risks losing the real focus of the investigation. Including numerous related variables to explain teachers’ computer use does not always pay off. Similarly, simply adding factors to account for technology use can make it harder to identify the most and least important ones. Ritzhaupt et al. (2012) demonstrated how teacher, school, and contextual characteristics affected student use of technology through classroom technology integration and teacher use of technology. Although predicting teacher use of technology was not the primary purpose of that study, the researchers relied mainly on demographic variables to explain it. Indeed, a number of factors were shown to be more strongly related to technology use than some teacher characteristics. A limited focus on a few factors needs to be replaced with a more inclusive approach centered on pivotal factors.

As Miranda and Russell (2012) point out, previous research on technology use handled factors in isolation. They also argue that researchers overlooked the interactions among the factors being studied. The factors that surface across the literature are highly interrelated, which requires them to be addressed together in the context of technology use (Ertmer 1999). For these reasons, hypothesized models of technology use first need to identify a particular focus and context. Then, using specific variables and the intercorrelations among them, researchers need to provide a comprehensive, detailed picture of what drives teachers to use technology.

2 Conceptual framework

Our conceptual framework consists of technology use, the factors shown to affect technology use, and how each factor is related to technology use and other factors. Based on previous research and relevant literature on technology use, we developed a hypothesized model to test the interrelationships between the factors and teacher use of technology, shown in Fig. 1.

2.1 Technology use: Instructional and application software

The meaning of “technology use” varies widely, and “with so many different types of technology uses emerging, defining it has become even more complex” (Russell et al. 2003, p. 300). Research acknowledges that technology use is a multi-faceted phenomenon (O’Dwyer et al. 2003a, b) that has been conceptualized in a variety of ways and classifications (Nelson and Hawk 2020; Tondeur et al. 2008). Across the literature, two approaches to conceptualizing technology use are prevalent.

The first approach is technology use for professional purposes. It addresses the instructional purposes of technology use, that is, how technology is used for instruction, teaching, and learning (Russell et al. 2003). This conceptualization was also highlighted by Bebell et al. (2004), who examined the multi-faceted nature of teachers’ technology use and developed seven separate dimensions of it: teachers’ use of technology for delivering instruction, class preparation, professional email, accommodation, teacher-directed student use during class, teacher-directed student use to create products, and grading (O’Dwyer et al. 2003a, b). Miranda and Russell (2012) employed this approach by measuring teacher-directed student use of technology to complete assigned work. This dimension represents technology use for teaching and learning purposes in the classroom, including the ways in which and the frequency with which teachers use technology.

The second approach defines technology use in terms of the incorporation of a particular technology as a tool. It is the adoption of particular software, applications, or tools that teachers use to complete school-related activities (Hogarty et al. 2003). To measure teachers’ technology use under this approach, as Bebell et al. (2004) put forward, composite measures (i.e., summated scores) represent the observed use of general technologies, mostly through self-reported frequencies. This approach has also been embraced by a considerable number of researchers (e.g., Asadi et al. 2019; Morquin et al. 2019; Ritzhaupt et al. 2012). For example, Niederhauser and Stoddart (2001) explored the software teachers used for instruction to engage students in learning. They found several categories of software, such as drill-and-practice, keyboarding, Reader Rabbit, interactive and educational games, exploratory tool programs, and mental mathematics games.

A discussion of why one approach might be chosen over the other is beyond the scope of this paper. Despite the lack of a clear and universal definition, we adopt the second approach to operationalize teachers’ technology use. The definition of technology use we embrace for the current study is primarily based on any use of 1) application software and 2) instructional software that supports instruction and helps teachers in their profession. In essence, it emphasizes teacher use of particular types of technology, such as drill-and-practice software, spreadsheets, or web browsers.

Instructional software use includes the incorporation of computer-assisted learning software. Instructional software is subject-dependent. For example, a science teacher could deliver instruction about the respiratory system using a simulation, and a mathematics teacher could have students practice algebra problems with drill-and-practice software. Hogarty et al. (2003) conducted an exploratory analysis of survey data to identify examples of instructional software use. They showed that tutorials, simulations, drill and practice, integrated learning systems, games, and similar technologies represent subject-dependent software.

Application software comprises context-dependent tools and devices. It mostly involves teachers’ use of basic software to solve problems, prepare instructional materials, or share and discuss ideas with colleagues (Fernandes et al. 2020; Morrison and Lowther 2010). In the investigation by Hogarty et al. (2003), word processors, spreadsheets, presentation programs, web browsers, and similar technologies emerged as application software.

Technology use, whether of instructional or application software, is a multifaceted and complex process influenced by numerous interrelated factors (Ertmer et al. 2001; Hogarty et al. 2003). Teachers’ experiences, dispositions, assumptions about teaching and learning, beliefs, skills, and perceptions interact within the context in which they teach and use technology to accomplish professional tasks (Tondeur et al. 2008). It is therefore essential to examine the potential factors that explain the extent of teachers’ technology use (Leem and Sung 2019; Siyam 2019). The factors hypothesized to be related to technology use and examined in this study are technology support, confidence and comfort using technology, perceived technology skills, and perceived usefulness of particular technologies.

2.2 Technology support and technology use

School districts and other reform efforts provide a variety of resources and support structures to help teachers integrate technology into their classrooms. The literature often addresses school support through a person who is knowledgeable about technology use and can facilitate it. Nelson et al. (2019) describe technology support as an essential ingredient of teachers’ technology use. However, findings on the effect of technology support on technology use are mixed. For example, Inan and Lowther (2010) found the direct effect of teachers’ perceived support (both technical and overall) for technology integration to be non-significant, and Ritzhaupt et al. (2012) and Robinson (2003) reported similar findings. Conversely, some researchers found that perceived support from technical staff or coordinators had a strong effect on technology integration (Hsu and Kuan 2013; Razak et al. 2018). These conflicting findings call for further research to clarify how support affects teacher use of technology. Moreover, the indirect effect of technology support, in the form of both technical and pedagogical support, has been shown to be significant and important. Inan and Lowther (2010) found that support had strong, positive indirect effects on technology use through technology proficiency and beliefs. Karaca et al. (2013) produced similar findings for the indirect effect of support through technology skills and teachers’ beliefs about the usefulness of technology.

2.3 Confidence in using technology and technology use

General confidence and comfort using technology refers to the state of being comfortable integrating technology effectively into teaching (Hogarty et al. 2003). A number of studies have shown that comfort level with technology is associated with technology use (e.g., Valtonen et al. 2020; Spiteri and Rundgren 2020; Anderson and Putman 2020). Bebell et al. (2004) provided evidence of a positive and moderately strong direct effect of confidence with technology on technology use. Researchers have also found evidence of a potential indirect effect of comfort and confidence. Miranda and Russell (2012) tested the indirect effect of teachers’ confidence using technology through the perceived importance and benefits of technology for teaching, and reported a weak but significant indirect effect on technology use.

2.4 Belief, skills, and technology use

Regardless of how complex a technology is, teachers need the skills/competencies and beliefs/attitudes required to use it in the classroom (Spiteri and Rundgren 2020). Beliefs about technology use can be defined as a teacher’s sense of whether she or he has the skills to integrate technology (Hew and Brush 2007). Teachers’ beliefs and attitudes towards technology use are their perceptions of the value of technology and its use (Karaca et al. 2013). Wozney et al. (2006) proposed that teachers deliberate about the value of a particular technology and what it is capable of. A general inference is that the more positive the beliefs teachers hold, the more they use technology in the classroom (Ertmer 2005).

A number of researchers have indicated that beliefs about technology distinguish teachers who use technology from those who do not (Mueller et al. 2008). Nelson and Hawk (2020) found that beliefs about the importance of technology were a strong predictor of technology use. Other scholars reported similar results (Inan and Lowther 2010; Karaca et al. 2013). Hsu and Kuan (2013) reported a strong, positive relationship between teachers’ perceptions of technology effectiveness, in terms of value and efficiency, and technology integration.

Another important factor in explaining teachers’ technology use is their beliefs about the skills they have for a particular technology. Teacher technology skills have been operationalized as teachers’ own perceptions of their proficiency and competency in using technology (Baylor and Ritchie 2002). They are ‘capability beliefs’ (Lumpe and Chambers 2001) that are fundamentally tied to particular technologies, software, or applications.

Technology skills have been identified as one of the correlates of teachers’ use of technology (Hew and Brush 2007; Robinson 2003; Spiteri and Rundgren 2020). Karaca et al. (2013) found a strong, positive relationship between technology competencies and technology integration. Mathews and Guarino (2000) modeled the relationship between technology ability and technology usage and found a strong, positive effect of ability on usage.

Several studies have examined the indirect effect of teachers’ perceived skills on technology use (Hew and Brush 2006; Inan and Lowther 2010; Robinson 2003). Karaca et al. (2013) underline the indirect effect of competencies on technology use through teacher beliefs. They argue that increased competencies foster positive beliefs and attitudes, which ultimately result in more frequent use of technology.

2.5 Interrelationships among factors affecting technology use

As outlined in the previous sections, technology use has been investigated in terms of both direct and indirect effects. Most of these investigations used mediation analysis procedures, such as those in the structural equation modeling (SEM) family. The variable of interest in these studies is technology use, and the relationships among the factors themselves are often overlooked. Yet these procedures are powerful precisely because they can estimate mediation (indirect) and moderation effects. To take advantage of this feature, it is imperative to lay out how the factors accounting for teachers’ technology use are associated with one another.

We synthesized the relevant literature and found that the aforementioned factors are related to one another. First, teachers’ perceived skills for a particular technology are related to their perceived usefulness of (i.e., belief about) that technology (Bebell et al. 2004; Ertmer 2005; Inan and Lowther 2010; Leem and Sung 2019). As teachers gain skills to use technology in the classroom, their beliefs about its usefulness become more positive (Albirini 2006). Second, technology support affects teachers’ perceptions of their skills and their confidence in using technology (Ertmer 2005). For example, Inan and Lowther (2010) reported that both technical and overall support improved teachers’ perceptions of their proficiency and skills in using technology. Karaca et al. (2013) also examined how support (from both principals and colleagues) affects teachers’ technology competencies and reported parallel findings. Third, the association of confidence and comfort in using technology with teachers’ technology skills and their beliefs about its usefulness is another interrelation worth examining. Miranda and Russell (2012) reported that confidence indirectly affects teachers’ beliefs about the usefulness of technology, although the effect is weak in magnitude.
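Because these factors are linked to one another as well as to use, a factor such as technology support can influence technology use both directly and through other factors. The sketch below is a minimal illustration, assuming a recursive (acyclic) path model that loosely follows the relationships described above and made-up standardized coefficients; neither the path structure nor the numbers are estimates from this study, and the model in Fig. 1 may differ. In a recursive path model, the total effect of one variable on another is the sum, over all directed paths connecting them, of the products of the coefficients along each path; the indirect effect is the total effect minus the direct path.

```python
# Hypothetical standardized path coefficients (illustrative only, NOT study results).
# Each key is (cause, effect); the model is assumed to be recursive (no feedback loops).
PATHS = {
    ("support", "skills"): 0.30,
    ("support", "confidence"): 0.25,
    ("support", "use"): 0.10,
    ("confidence", "skills"): 0.40,
    ("confidence", "usefulness"): 0.15,
    ("confidence", "use"): 0.20,
    ("skills", "usefulness"): 0.50,
    ("skills", "use"): 0.45,
    ("usefulness", "use"): 0.20,
}


def total_effect(cause, effect, paths=PATHS):
    """Sum, over every directed path from cause to effect, the product of its coefficients."""
    if cause == effect:
        return 1.0  # base case: the path has reached its target
    return sum(coef * total_effect(target, effect, paths)
               for (source, target), coef in paths.items() if source == cause)


def decompose(cause, effect, paths=PATHS):
    """Split the total effect into the direct path and everything routed through mediators."""
    direct = paths.get((cause, effect), 0.0)
    total = total_effect(cause, effect, paths)
    return {"direct": direct, "indirect": total - direct, "total": total}


print(decompose("support", "use"))
```

With these hypothetical values, support has a direct effect of 0.10 on use but a total effect of about 0.38, so most of its influence would flow through confidence and skills. Summing products along paths is equivalent to the matrix expression (I − B)⁻¹ − I for a recursive model with coefficient matrix B; the path-tracing form is used here only to keep the sketch dependency-free.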

2.6 Demographic variables as control

Research draws attention to a number of demographic characteristics that are correlated with teacher use of technology. Gender (e.g., Islahi 2019; Tondeur et al. 2008), previous experience with technology (Ritzhaupt et al. 2012; Ifinedo et al. 2020), and teaching experience (Inan and Lowther 2010) are among the factors shown to affect technology use. For instance, female teachers’ use of technology was reported to be lower than that of their male counterparts (Scherer and Siddiq 2015). Experience with technology is another important correlate of technology use in the classroom: teachers who use technology more frequently in the classroom have more experience with technology (Tondeur et al. 2008). Teaching experience is also important in the context of technology use. Researchers have mostly reported a non-significant direct effect of teaching experience on technology integration (e.g., Ritzhaupt et al. 2012). Russell et al. (2003), however, demonstrated that technology use for different purposes differed by years of teaching experience: teachers with 15+ years of experience used technology less than those with 6–15 years of experience. Moreover, Inan and Lowther (2010) reported a significant indirect effect of years of teaching on technology integration. Because these variables have been shown to help explain teachers’ use of technology, they were added to the conceptual/hypothesized model, and their effects on technology use and the variance they explain were taken into account. However, we treated them as control variables to partial out their individual effects on technology use. These variables are substantially different from the main variables of interest: technology support, confidence and comfort, skills, and beliefs about the usefulness of technology.

3 A hypothesized model

Research on technology use has identified several important factors, as outlined above. Yet most of these factors have been examined in isolation from one another (O’Dwyer et al. 2003a, b; Zhao and Frank 2003). We believe that a more comprehensive picture of the factors affecting technology use is needed (as also suggested by Tondeur et al. 2008). Based on the theoretical foundations and previous research laid out in the previous section, we developed the hypothesized model shown in Fig. 1.

Our hypothesized model includes four distinct yet interrelated blocks, each with one or two variables. The block of primary interest for this study is use, defined by teachers’ use of technology. Lightly colored variables denote the control variables. In the last decade, a plethora of studies have built similar models to test technology use, most of them using an analytic procedure from the SEM family, such as path modeling or multilevel SEM. Comparing our model with those in the literature showed that some models lacked comfort and confidence using technology (Inan and Lowther 2010; Karaca et al. 2013), beliefs about the usefulness of technology (Ritzhaupt et al. 2012), teachers’ perceived skills, competence, or proficiency in using technology (Tondeur et al. 2008), or technology support (Nelson et al. 2019). Although the choice of variables depends largely on researchers’ preferences and the limitations of their studies, more emphasis is needed on the combined effect of a collective set of factors (Sang et al. 2011). Addressing these gaps in SEM-based studies of the potential correlates of technology use, our hypothesized model provides a more holistic and clearer depiction of teacher use of technology.

As the related literature shows, support, confidence, and beliefs are among the most frequently studied and most influential variables in teachers’ use of technology in their instruction (Scherer et al. 2019; Leem and Sung 2019; Scherer and Siddiq 2015; Zhao and Frank 2003). However, teachers may differ in their beliefs about, skills with, and perceived use of particular technologies (van Braak et al. 2004), and factors such as support, beliefs, and confidence may affect technology use to different degrees. It is therefore necessary to explore the variables that affect the use and integration of particular technologies. To meet this need, we created two path models including support, confidence, and beliefs to explore teachers’ use of instructional and application software. Accordingly, the purpose of this study is to examine the effects of technology support, teachers’ confidence in using technology, their beliefs about using technology, and their perceived technology skills on the use of instructional and application software, controlling for teacher characteristics. Three interrelated research questions formed the basis of the current work: 1) What are the effects of technology support, teachers’ confidence in using technology, their beliefs about using technology, and their perceived technology skills on the use of instructional software? 2) What are the effects of technology support, teachers’ confidence in using technology, their beliefs about using technology, and their perceived technology skills on the use of application software? 3) How do the relative effects of these factors compare between teacher use of instructional and application software?

4 Method

4.1 Participants

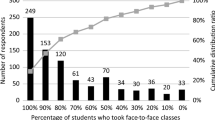

The data were from 1335 K-12 teachers who took part in the Technology Uses and Perceptions Survey (TUPS) study. Teachers were located in 40 different institutions across the state of Florida. Approximately 81% of participating teachers were female. The highest degree earned by participants was a bachelor’s degree (44%), master’s degree (49%), specialist degree (4%), or doctorate (3%). Regarding ethnicity, 82% identified as White, 8% as Black, 6% as Hispanic or Latino, and the remainder as mixed or other. Teachers taught at elementary (52%), middle (22%), high (25%), and mixed-level (1%) schools. The average years of teaching experience was 19.3 (SD = 21.8), and the average experience with technology was 15.9 (SD = 22.4).

4.2 Data and instrument

The original TUPS is a data collection instrument designed to obtain a better picture of how teachers use technology in their teaching, their level of experience with technology, and their comfort with and attitudes toward technology (Dawson and Ritzhaupt 2015). It has 10 domains and 185 items: 1) access and support (7 items), 2) preparation for technology use (6 items), 3) perceptions of professional development (5 items), 4) perceptions of technology use (12 items), 5) comfort and confidence using technology (11 items), 6) technology integration (16 items), 7) teacher use of technology (32 items), 8) student use of technology (32 items), 9) perceived technology skills (32 items), and 10) perceptions of technology usefulness (32 items). The first version of the instrument was validated by Hogarty et al. (2003). The revised instrument was then investigated further for evidence of validity and reliability by Ritzhaupt et al. (2017). These researchers found that TUPS might not be appropriately treated as a series of ten separate scales, because some of the domains did not show a unidimensional structure. They also suggested using the items individually.

In this study, we used the item-level data of TUPS rather than the domains themselves. The aim was to tap into interpretable latent constructs for which TUPS provides data but which have not been well explored. We therefore used relevant theories and findings from previous research to construct meaningful indexes from selected TUPS items to represent the underlying constructs of interest. This strategy is also common in large-scale national (the National Teacher and Principal Survey) and international (the Teaching and Learning International Survey) assessments, which let researchers develop latent constructs to test (Rutkowski et al. 2010).

4.3 Variables and indexes

The teacher use of technology indexes included a total of eight TUPS items measuring teachers’ use of a variety of technologies. Based on information from Hogarty et al. (2003), we used confirmatory factor analysis (CFA) to validate and group these technologies. Our analysis, driven by theoretical considerations, led us to group them into two categories: instructional software and application software. The instructional software category (4 items) included subject-dependent technologies: drill-and-practice software, instructional games, simulations, and tutorials. By contrast, the application software category (4 items) included more context-dependent technologies: word processors, spreadsheets, presentation software, and web browsers. Teachers reported how frequently they used these technologies on a scale from not at all (1) to multiple times per day (6). A separate model was fitted for each technology use category.

The perceived technology skills indexes provided information on teachers’ perceived skills with the instructional software and the application software. Teachers rated their skills on a six-point scale ranging from none (1) to very high (6). Each index had four items, corresponding to the four technologies in one of the technology use indexes.

The technology usefulness indexes measured the extent to which teachers find the instructional software and the application software useful, on a six-point scale from none (1) to very high (6). Each index had four items, corresponding to the four technologies in one of the technology use indexes.

The confidence and comfort using technology index had four items: 1) I am prepared to effectively integrate technology into my teaching; 2) I am comfortable using technology in my teaching; 3) I use technology effectively in my teaching; and 4) I am developing expertise in the uses of technology in teaching. Items were rated on a five-point Likert scale from strongly disagree (1) to strongly agree (5). We selected these four items from TUPS to construct this index based on previous research. The same index was used in the models for both technology use categories.

The support from technology specialist (technical support) index represented, with three items, the support provided by technology specialists either in the school or from the district. Based on previous studies on technology support, logic, and the available data, we selected three items from TUPS to construct this index, rated from strongly disagree (1) to strongly agree (5). The items were: 1) The technology specialist models techniques to integrate technology into my teaching; 2) The technology specialist provides professional development; and 3) The technology specialist adequately assists me in planning and implementing the use of technology in my teaching. Again, the same index was used in the models for both technology use categories.
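Since these indexes serve as composites (i.e., summated scores), a minimal sketch of how such scores could be computed may be useful. The item keys, the example responses, and the rule of leaving respondents with any missing item unscored are hypothetical assumptions for illustration; the study does not describe its scoring code or its treatment of missing responses. Only two of the eight indexes are shown; the others would follow the same pattern with their own items and scale bounds.

```python
# Hypothetical item keys for two of the eight indexes (names are illustrative only).
INDEXES = {
    "instructional_use": ["drill_practice", "instr_games", "simulations", "tutorials"],
    "tech_support": ["models_techniques", "provides_pd", "assists_planning"],
}

# Response-scale bounds per index: use items run 1-6, agreement items run 1-5.
SCALES = {"instructional_use": (1, 6), "tech_support": (1, 5)}


def summated_score(responses, index):
    """Return one respondent's summated score for an index, or None if any item is missing."""
    low, high = SCALES[index]
    values = [responses.get(item) for item in INDEXES[index]]
    if any(v is None for v in values):
        return None  # assumption: incomplete responses are left unscored
    for v in values:
        if not low <= v <= high:
            raise ValueError(f"response {v} outside {low}-{high} scale for {index}")
    return sum(values)


def score_range(index):
    """Minimum and maximum possible summated score for an index."""
    low, high = SCALES[index]
    n_items = len(INDEXES[index])
    return n_items * low, n_items * high


teacher = {"drill_practice": 3, "instr_games": 2, "simulations": 4, "tutorials": 1,
           "models_techniques": 4, "provides_pd": 5, "assists_planning": 3}
print(summated_score(teacher, "instructional_use"))  # 10
print(summated_score(teacher, "tech_support"))       # 12
print(score_range("instructional_use"))              # (4, 24)
```

Under this scheme, a four-item use index ranges from 4 to 24 and the three-item support index from 3 to 15.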

Teacher characteristics were included as control variables to partial out their potential effects on the relationships among the other variables. Gender was entered as a dichotomous variable, and experience with technology and teaching experience as continuous variables. The teacher use of technology variables were regressed on all of these control variables.

4.4 Analysis procedures

Our analysis began by determining whether multilevel modeling was needed, given the nested structure of our data: teachers (level 1) are grouped within schools (level 2). First, we fitted an unconditional model, with no predictors, for each technology use variable. To examine between-school variation, we calculated the intraclass correlation (ICC), the proportion of the total variance that lies between schools. We also estimated the design effect (deff) to determine whether multilevel modeling was the appropriate procedure (Peugh 2010), using the formula deff = 1 + [(average cluster size) − 1] × ICC suggested by Muthén and Satorra (1995), and evaluated it with the “design effect smaller than two” rule (Hox and Maas 2002). The average cluster size was 33.38. The ICCs for instructional and application software use were .016 and .013, respectively. Although there are no established cutoffs for ICCs (Heck and Thomas 2015), based on the variation reported for similar variables in previous studies and on the variables under consideration, we judged that approximately 1–2% of the total variance in technology use was relatively low. Moreover, the deffs for both technology use variables were below two. Given these two criteria, we proceeded with single-level analysis, as suggested by Peugh (2010).
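The deff check can be reproduced in a few lines. The following Python sketch is illustrative only: it assumes the average cluster size equals the 1335 teachers divided by the 40 institutions mentioned in the abstract (about 33.38, matching the reported value), and the function name design_effect is ours, not part of Mplus or any library.

```python
def design_effect(avg_cluster_size: float, icc: float) -> float:
    """Design effect for two-level data: deff = 1 + (average cluster size - 1) * ICC."""
    return 1.0 + (avg_cluster_size - 1.0) * icc


# Assumption: 1335 teachers spread over 40 institutions (about 33.38 per cluster).
avg_cluster = 1335 / 40

# ICCs from the unconditional models reported in the text.
iccs = {"instructional software": 0.016, "application software": 0.013}

for label, icc in iccs.items():
    deff = design_effect(avg_cluster, icc)
    # "Design effect smaller than two" rule (Hox and Maas 2002)
    decision = "single-level is defensible" if deff < 2 else "consider multilevel"
    print(f"{label}: deff = {deff:.3f} ({decision})")
```

Both values (about 1.52 and 1.42) fall below two, consistent with the decision to proceed with single-level analysis.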

To construct the eight indexes, we selected items from the TUPS data set based on theory, findings from previous research, and discussion among the researchers. Single-level CFA was the primary method for developing the composites (i.e., summated scores) and for construct validation, because it adjusts estimates of construct validity for measurement error (Brown 2015). We used Mplus 7 (Muthén and Muthén 1998–2012) with a robust maximum likelihood estimator (MLR) to obtain standard errors that are robust to violations of normality. We treated all TUPS items used in this study as continuous. Each index was scaled by fixing its first factor loading to 1.
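A summated composite is the unweighted sum of an index’s item responses. The Python sketch below illustrates this for the two technology use indexes; the item keys and the teacher’s responses are hypothetical placeholders, not TUPS variable names or data.

```python
# Hypothetical item keys for the two technology use indexes (1 = not at all, 6 = multiple times per day).
INDEXES = {
    "instructional_use": ["drill_and_practice", "instructional_games", "simulations", "tutorials"],
    "application_use": ["word_processors", "spreadsheets", "presentation_software", "web_browsers"],
}


def summated_score(responses: dict, items: list, low: int = 1, high: int = 6) -> int:
    """Unit-weighted composite: the sum of the item responses in one index.

    Raises KeyError if an item is missing and ValueError if a response is off the scale.
    """
    values = [responses[item] for item in items]
    if any(not low <= v <= high for v in values):
        raise ValueError("response outside the rating scale")
    return sum(values)


# One hypothetical teacher's frequency ratings.
teacher = {
    "drill_and_practice": 2, "instructional_games": 3, "simulations": 1, "tutorials": 2,
    "word_processors": 6, "spreadsheets": 4, "presentation_software": 5, "web_browsers": 6,
}

for name, items in INDEXES.items():
    print(name, summated_score(teacher, items))
```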

Global fit indices are reported in Table 1 and were evaluated against the cutoff criteria suggested by Hu and Bentler (1999): χ2 test p > .05; Tucker-Lewis Index (TLI) > .95; Comparative Fit Index (CFI) > .95; Standardized Root Mean Square Residual (SRMR) < .08; and Root Mean Square Error of Approximation (RMSEA) < .06. For composite reliability, we estimated factor rho (ρ) for each index (Raykov 1997, 2004). Factor rho is a better alternative to Cronbach’s alpha (Kline 2015) with large samples (Raykov 1997), because alpha tends to underestimate composite reliability and approximates it closely only under restrictive conditions (e.g., a unidimensional congeneric test, uncorrelated errors, and uniformly high factor loadings) (Raykov and Marcoulides 2011).
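To make the reliability argument concrete, the Python sketch below computes factor rho from standardized loadings, ρ = (Σλ)² / [(Σλ)² + Σ(1 − λ²)], assuming a one-factor model with uncorrelated errors, and compares it with Cronbach’s alpha computed from the same model-implied correlations. The four loadings are hypothetical values chosen within the range of loadings reported for these indexes, not estimates from this study. With unequal (congeneric) loadings, alpha comes out slightly below rho, which illustrates the underestimation described above.

```python
def factor_rho(loadings):
    """Composite reliability from standardized loadings of a one-factor model with uncorrelated errors."""
    common = sum(loadings) ** 2
    unique = sum(1.0 - lam ** 2 for lam in loadings)  # standardized error variances
    return common / (common + unique)


def alpha_from_loadings(loadings):
    """Cronbach's alpha for the correlation matrix implied by the same one-factor model."""
    k = len(loadings)
    # Variance of the unit-weighted sum = sum of all entries of the implied correlation matrix:
    # k unit diagonal entries plus lambda_i * lambda_j for every i != j.
    total_var = sum(loadings) ** 2 + sum(1.0 - lam ** 2 for lam in loadings)
    return k / (k - 1) * (1.0 - k / total_var)


# Hypothetical standardized loadings (not estimates from this study).
loadings = [0.70, 0.75, 0.78, 0.80]
rho = factor_rho(loadings)
alpha = alpha_from_loadings(loadings)
print(f"factor rho = {rho:.3f}, Cronbach's alpha = {alpha:.3f}")
```

When all loadings are equal, the two coefficients coincide for standardized items.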

As Table 1 shows, the constructed indexes provided adequate to good fit to the TUPS data. Moreover, the factor loadings ranged from .694 to .799, evidencing adequate convergent validity (Brown 2015). No major problems were observed in correlated residuals or standardized residuals. For reliability, factor rho values ranged from .62 to .92, indicating that the indexes measured their intended constructs with adequate reliability.

Figure 1 illustrates our hypothesized model as a conceptual diagram. To examine the expected relationships among variables, we used path analysis from the structural equation modeling (SEM) family (Kline 2015). Path analysis allowed us to specify both direct and indirect effects on teacher use of instructional and application software. We fitted two separate models using the same analytic procedures described above. To test indirect effects, we used the Mplus default, Sobel standard errors.

5 Results

We examined the effects of technology support, teachers’ confidence using technology, and their attitudes toward using technology on their use of a variety of instructional and application software, controlling for teacher characteristics. We fitted a separate model for each software type; parameter estimates are presented in Figs. 2 and 3. The figures show only standardized direct effects; indirect effects are reported in the tables. The direct effects of the control variables are reported in Appendix Table 5.

5.1 Teacher use of subject-dependent instructional software

Figure 2 shows how support, confidence, and attitudes contributed to teacher use of subject-dependent instructional software. The fit indices for model 1 were χ2(11) = 53.716, p < .001; TLI = .922; CFI = .961; SRMR = .030; RMSEA = .054, indicating good fit on CFI, SRMR, and RMSEA, although the TLI fell below the .95 cutoff and the χ2 test was significant. The model explained 28% of the variance in instructional software use.

The strongest direct effect on teacher use of instructional software was that of perceived skills, β = .418 (.027). This implies that teachers who perceive their skills with particular subject-dependent software as higher tend to use that software more. The effect of usefulness was considerably smaller, β = .114 (.027). Technical support and confidence and comfort had relatively weak effects, β = .082 (.024) and β = .062 (.024), respectively. Apart from effects on teacher use, two direct effects are notable: the effect of perceived skills on usefulness, β = .563 (.021), and the effect of confidence and comfort on perceived skills, β = .389 (.024). Indirect effects on teacher use of subject-dependent instructional software are reported in Table 2.

As Table 2 shows, the strongest indirect effect was that of confidence and comfort on teacher use of instructional software through perceived skills for that software, β = .163 (.015). This parallels the direct effect of confidence and comfort on teachers’ perceived skills. These results underscore the importance of teachers’ perceived skills for instructional software use. A similar pattern appears for the indirect effect of perceived skills on teacher use of instructional software mediated by usefulness, β = .064 (.016), consistent with the strong direct effect of perceived skills on usefulness.

Interestingly, two indirect effects were negative. Both ran from support to teacher use of instructional software, mediated by 1) perceived skills only, β = −.027 (.011), and 2) perceived skills and usefulness, β = −.004 (.002). This result is expected: an indirect effect is the product of its component paths, and the direct effect of support from technology specialists on perceived skills was itself negative and weak (β = −.065 (.026)).
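The indirect effects in Table 2 follow the product-of-coefficients logic: each is the product of the direct paths along its chain. As a rough check, the Python sketch below multiplies the standardized direct effects reported in Section 5.1 and computes a first-order Sobel standard error for the two-path chains. It is only an approximation from rounded standardized estimates; Mplus derives its standard errors from the full model, so small discrepancies from Table 2 are expected. The helper names are ours.

```python
import math


def indirect_effect(*paths):
    """Product-of-coefficients indirect effect along one chain of direct paths."""
    product = 1.0
    for beta in paths:
        product *= beta
    return product


def sobel_se(a, se_a, b, se_b):
    """First-order (Sobel) standard error of the product a * b."""
    return math.sqrt(b ** 2 * se_a ** 2 + a ** 2 * se_b ** 2)


# Standardized direct effects (estimate, SE) reported for model 1.
conf_to_skills = (0.389, 0.024)
support_to_skills = (-0.065, 0.026)
skills_to_useful = (0.563, 0.021)
skills_to_use = (0.418, 0.027)
useful_to_use = (0.114, 0.027)

chains = {
    "confidence -> skills -> use": (conf_to_skills, skills_to_use),
    "skills -> usefulness -> use": (skills_to_useful, useful_to_use),
    "support -> skills -> use": (support_to_skills, skills_to_use),
}
for label, ((a, se_a), (b, se_b)) in chains.items():
    print(f"{label}: {indirect_effect(a, b):.3f} (SE approx. {sobel_se(a, se_a, b, se_b):.3f})")

# Three-path chain: point estimate only.
three = indirect_effect(support_to_skills[0], skills_to_useful[0], useful_to_use[0])
print(f"support -> skills -> usefulness -> use: {three:.3f}")
```

These rough values (.163, .064, −.027, and −.004, with standard errors of about .015, .015, and .011) are close to those reported in Table 2.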

5.2 Teacher use of context-dependent application software

Figure 3 presents the standardized direct effects of support, confidence, and attitudes on teacher use of context-dependent application software. The fit indices for model 2 were χ2(11) = 29.574, p < .05; TLI = .963; CFI = .982; SRMR = .029; RMSEA = .036, indicating good fit on all indices except the χ2 test. The model explained 41% of the variance in application software use.
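The fit of both models can be checked against the Hu and Bentler (1999) cutoffs listed in Section 4.4 with a short Python sketch. It also recomputes RMSEA from χ2, df, and N as √[max(χ2 − df, 0) / (df(N − 1))], assuming N = 1335 (the sample size given in the abstract); some programs use N instead of N − 1, which does not change the rounded values here. The χ2 criterion is left out because both tests were significant, as is common with samples this large.

```python
import math


def rmsea(chi2: float, df: int, n: int) -> float:
    """RMSEA point estimate: sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


# Cutoffs as listed in Section 4.4 (Hu and Bentler 1999); the chi-square criterion is omitted.
CUTOFFS = {
    "TLI": lambda v: v > 0.95,
    "CFI": lambda v: v > 0.95,
    "SRMR": lambda v: v < 0.08,
    "RMSEA": lambda v: v < 0.06,
}

# Fit statistics reported for the two path models.
MODELS = {
    "model 1 (instructional)": {"chi2": 53.716, "df": 11, "TLI": 0.922, "CFI": 0.961, "SRMR": 0.030, "RMSEA": 0.054},
    "model 2 (application)": {"chi2": 29.574, "df": 11, "TLI": 0.963, "CFI": 0.982, "SRMR": 0.029, "RMSEA": 0.036},
}
N = 1335  # assumption: full analytic sample from the abstract

for name, fit in MODELS.items():
    recomputed = rmsea(fit["chi2"], fit["df"], N)
    missed = [index for index, meets in CUTOFFS.items() if not meets(fit[index])]
    print(f"{name}: RMSEA recomputed = {recomputed:.3f}; cutoffs missed: {missed or 'none'}")
```

The recomputed RMSEA values agree with the reported .054 and .036, and only the TLI of model 1 falls short of its cutoff.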

The results for teacher use of application software closely resembled those for instructional software. For example, the strongest direct effect on teacher use of application software was that of perceived skills (β = .518 (.027)). The one exception was that the direct effect of technology support on teacher use of application software was not statistically significant (β = .025 (.021), p = .238). As in the first model, the indirect effects on teacher use of context-dependent application software showed a similar pattern (Table 3). For example, the strongest indirect effect was that of confidence and comfort on teacher use of application software through perceived skills for that software, β = .252 (.020). Notably, all indirect effects of technology support were statistically significant.

5.3 Total effects of support, confidence, and attitudes on technology use

Regarding total effects (direct plus indirect effects) on teacher use of technology, perceived skills for the particular software had the strongest total effect in both models (Table 4).

Notably, the total effect of confidence and comfort on teacher use of both subject- and context-dependent software was larger than the corresponding total effect of usefulness. The total effect of support from technology specialists on teacher use of technology was not statistically significant.
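In a recursive path model, a total effect equals the direct effect plus the sum of the coefficient products along every indirect path. The Python sketch below enumerates those paths using only the model 1 coefficients reported in Section 5.1; for perceived skills, for example, it gives .418 + .563 × .114 ≈ .482. Any path not reported in the text (for example, a possible path from confidence to usefulness) is assumed to be zero, so these totals are illustrative and are not expected to reproduce Table 4 exactly.

```python
# Standardized direct paths for model 1 as reported in Section 5.1, keyed by (source, target).
# Assumption: paths not reported in the text are absent (coefficient 0).
PATHS = {
    ("support", "skills"): -0.065,
    ("confidence", "skills"): 0.389,
    ("skills", "usefulness"): 0.563,
    ("skills", "use"): 0.418,
    ("usefulness", "use"): 0.114,
    ("support", "use"): 0.082,
    ("confidence", "use"): 0.062,
}


def total_effect(source: str, target: str) -> float:
    """Sum of coefficient products over every directed path from source to target.

    Valid only for recursive (acyclic) linear path models.
    """
    if source == target:
        return 1.0
    return sum(beta * total_effect(mid, target)
               for (start, mid), beta in PATHS.items() if start == source)


for cause in ("skills", "confidence", "support"):
    direct = PATHS.get((cause, "use"), 0.0)
    total = total_effect(cause, "use")
    print(f"{cause}: direct = {direct:.3f}, indirect = {total - direct:.3f}, total = {total:.3f}")
```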

5.4 The comparison of relative effects on teacher use of instructional and application software

Across the eight direct and eight indirect effects specified in models 1 and 2, we observed similar patterns: 1) the direct effects on teacher use of technology were always positive but differed in magnitude; 2) the strongest direct effect on teacher use of technology in both models was that of perceived skills; 3) the second strongest was that of usefulness; 4) the direct effects of confidence and comfort were relatively small and can be considered weak; and 5) the indirect effect of confidence and comfort through perceived skills was the strongest of the indirect effects. The only difference between the two models was that technology support had a statistically non-significant effect on teachers’ use of application software. In general, model 2 produced stronger effects on teacher use of technology. For example, perceived skills in application software (β = .570) mattered more than perceived skills in instructional software (β = .417). However, support from technology specialists had a stronger effect on teacher use of instructional software.

6 Discussion

By placing more emphasis on the combined effect of a collective set of factors in explaining teacher use of technology, we sought to address gaps in the literature on technology use (i.e., the effect of demographic factors and technology availability). In addition, we used aligned variables (i.e., perceived skills with, and perceived usefulness of, a particular type of software and use of that same software) to make our measurement more robust.

According to our findings, perceived technology skills were the strongest predictor of teachers’ use of instructional and application software. Teachers who perceived themselves as skilled in using particular software tended to use that software in class more. This is consistent with previous studies concluding that perceived skills or technology competency directly and positively influenced teachers’ use of technology (Celik et al. 2014; Inan and Lowther 2010; Kabakci Yurdakul and Çoklar 2014; Mathews and Guarino 2000). Therefore, professional development targeting teachers’ perceived skills or technology competencies might contribute to increasing their use of instructional or application software. Because technology is constantly renewed and changed, teachers inevitably need to update their competencies in using instructional and application software (Tondeur et al. 2017). For this reason, professional development can be considered important for keeping teachers’ perceived skills up to date. In addition, teachers’ lack of skills and knowledge about technology is one of the barriers to technology integration in education (Pelgrum 2001). Bauer and Kenton (2005) reported that teachers who were highly skilled with technology were innovative and adept at overcoming obstacles to technology use. In light of the current findings, teachers with greater technology competency may also be more successful in solving problems related to instructional or application software use.

Teachers’ beliefs about the usefulness of instructional and application software directly and positively affected their use of these technologies. Teachers who think that instructional and application software will contribute to their profession and performance are more likely to use such software. In many studies, perceived usefulness has been found to be the strongest factor directly affecting attitudes toward and intention to use ICT (Chien et al. 2014; Oye et al. 2014; Teo 2011). In our models, teachers’ beliefs about usefulness also increased their actual use of software, but usefulness was a weaker predictor than perceived skills. One possible explanation is that teachers’ perceived usefulness of ICT can be conceptualized in more than one dimension based on teaching and learning objectives (e.g., teaching preparation, evaluation, cooperation, and feedback; Niederhauser and Perkmen 2010; Scherer et al. 2015). Because perceived usefulness of any technology is multidimensional, teachers’ usefulness perceptions for instructional and application software may differ, and this difference may affect their technology use at different levels, as shown in this study. To encourage teachers to use more instructional and application software in their teaching, their awareness of the benefits these technologies can bring to the teaching process could be raised.

As the models show, teachers’ perceived technology skills for instructional and application software directly and positively affected their beliefs about usefulness. Perceived technology skills also had an indirect effect on teachers’ use of technology through usefulness, which shows another role they play in the model. Consistent with these results, previous studies found that teachers’ competencies in using ICT directly and positively influenced their perceived usefulness of ICT (Liaw and Huang 2013; Niederhauser and Perkmen 2010), and a positive relationship between perceived usefulness of ICT and teachers’ technology self-efficacy has been observed (Scherer et al. 2015). Accordingly, as teachers become more proficient with technology, the perceived benefits of instructional and application software increase. For example, a science teacher who is highly skilled with a planetary simulation program can better anticipate how the software’s features will contribute to teaching; the teacher’s skill level with the program helps determine the educational benefit the teacher sees in it.

We found that the total effect of support from technology specialists was larger for instructional software use than for application software use. This is consistent with previous studies showing that technological support given to teachers has a positive effect on their use of technology (Drent and Meelissen 2008; Hsu and Kuan 2013). Instructional software is mostly subject-dependent technology used in teaching a subject, whereas application software is used for pre-class preparation or for communicating with other teachers and students (Morrison and Lowther 2010). Instructional software use requires higher-level knowledge than application software use. Therefore, for effective technology integration, it is more important for technology specialists in schools to support teachers in using technology in their lessons than merely to provide hardware and IT help. In this regard, the pedagogical knowledge of the staff who support teachers should be taken into account alongside their technological knowledge, considering that technology is mostly used to support existing practices rather than to transform pedagogical practices (Mioduser et al. 2003; Tondeur et al. 2013). It is also crucial to introduce possible uses of emerging technologies in education during teachers’ professional development. Teachers need hands-on experience with these technologies through professional learning opportunities, which can make them more aware of the instructional uses of these technologies. As their awareness increases, they become more likely to perceive a particular technology as useful.

Confidence and comfort using technology had direct and positive effects on the use of both instructional and application software. As teachers feel more comfortable using technology in their teaching, their use of instructional and application software increases. Stress and anxiety about using technology negatively affect teachers’ technology use and are an obstacle to technology integration (Shu et al. 2011; Tu et al. 2005).

Although demographic variables such as gender were not focal predictors in our models, we did account for their direct effects on technology use. By adding the four control variables, we partialed out their effects on teacher use of technology, so the estimated effects in our models are adjusted for these variables. Future research may also analyze the effects of other variables, such as innovativeness and technostress, on teachers’ instructional and application software use. In addition, to better understand the process of teachers’ technology integration, future work may explore the challenges of using emerging technologies with a qualitative research approach. For example, focus groups in which teachers elaborate on how they use emerging technologies and discuss their purposes would provide a more in-depth understanding of teachers’ technology use.

7 Study limitations

Our study has limitations related to its data and design. First, we do not draw causal inferences from our path models: TUPS data are cross-sectional, and this is a correlational observational study. Given the nature of the data and the research design, the term “effect” always denotes a statistical parameter representing a linear relationship between an independent and a dependent variable, controlling for the other independent variables. Second, the selection of variables was based on our data-informed and theory-based decisions, and we were limited to the scope of TUPS and the variables it provided. A number of other variables, or common causes, might be theoretically related to our hypothesized model but could not be used in this study, which might lead to omitted variable bias. Third, the effect of technology support was relatively weak and sometimes statistically non-significant. We believe this may reflect the items used to create the technology support index; indexes with different items might yield stronger effects of technology support.

Notes

The term “effect” in this study signifies a linear relationship from an exogenous to an endogenous variable. It does not represent a causal claim; it is used for consistency with SEM terminology.

References

Albirini, A. (2006). Teachers’ attitudes toward information and communication technologies: The case of Syrian EFL teachers. Computers & Education, 47(4), 373–398.

Anderson, S. E., & Putman, R. S. (2020). Special education teachers’ experience, confidence, beliefs, and knowledge about integrating technology. Journal of Special Education Technology, 35(1), 37–50.

Asadi, Z., Abdekhoda, M., & Nadrian, H. (2019). Understanding and predicting teachers’ intention to use cloud computing in smart education. Interactive Technology and Smart Education, 14–27.

Bauer, J., & Kenton, J. (2005). Toward technology integration in the schools: Why it isn't happening. Journal of Technology and Teacher Education, 13(4), 519–546.

Baylor, A. L., & Ritchie, D. (2002). What factors facilitate teacher skill, teacher morale, and perceived student learning in technology-using classrooms? Computers & Education, 39(4), 395–414.

Bebell, D., Russell, M., & O’Dwyer, L. (2004). Measuring teachers’ technology uses: Why multiple measures are more revealing. Journal of Research on Technology in Education, 37(1), 45–63.

Brown, T. A. (2015). Confirmatory factor analysis for applied research (2nd ed.). New York: The Guilford Press.

Celik, I., Sahin, I., & Aktürk, A. O. (2014). Analysis of the relations among the components of technological pedagogical and content knowledge (TPACK): A structural equation model. Journal of Educational Computing Research, 51(1), 1–22.

Chien, S. P., Wu, H. K., & Hsu, Y. S. (2014). An investigation of teachers’ beliefs and their use of technology-based assessments. Computers in Human Behavior, 31, 198–210.

Dawson, K., & Ritzhaupt, A. (2015). Technology uses and perceptions survey: Final report. Florida: Alachua County Public Schools.

Drent, M., & Meelissen, M. (2008). Which factors obstruct or stimulate teacher educators to use ICT innovatively? Computers & Education, 51(1), 187–199.

Ertmer, P. A. (1999). Addressing first-and second-order barriers to change: Strategies for technology integration. Educational Technology Research and Development, 47(4), 47–61.

Ertmer, P. A. (2005). Teacher pedagogical beliefs: The final frontier in our quest for technology integration? Educational Technology Research and Development, 53(4), 25–39.

Ertmer, P. A., Gopalakrishnan, S., & Ross, E. (2001). Technology-using teachers: Comparing perceptions of exemplary technology use to best practice. Journal of Research on Technology in Education, 33(5).

Fernandes, G. W. R., Rodrigues, A. M., & Ferreira, C. A. (2020). Professional development and use of digital technologies by science teachers: A review of theoretical frameworks. Research in Science Education, 50(2), 673–708.

Heck, R. H., & Thomas, S. L. (2015). An introduction to multilevel modeling techniques: MLM and SEM approaches using Mplus (3rd ed.). New York: Routledge.

Hew, K. F., & Brush, T. (2006). Integrating technology in K-12 teaching and learning: Current knowledge gaps and recommendations for future research. Educational Technology Research and Development, 55(3), 223–252.

Hew, K. F., & Brush, T. (2007). Integrating technology into K-12 teaching and learning: Current knowledge gaps and recommendations for future research. Educational Technology Research and Development, 55(3), 223–252.

Hogarty, K. Y., Lang, T. R., & Kromrey, J. D. (2003). Another look at technology use in classrooms: The development and validation of an instrument to measure teachers’ perceptions. Educational and Psychological Measurement, 63(1), 139–162.

Hox, J. J., & Maas, C. J. M. (2002). Sample sizes for multilevel modeling. In J. Blasius, J. J. Hox, E. de Leeuw, & P. Schmidt (Eds.), Social science methodology in the new millennium: Proceedings of the fifth international conference on logic and methodology (2nd expanded ed.). Opladen: Leske + Budrich.

Hsu, S., & Kuan, P. Y. (2013). The impact of multilevel factors on technology integration: The case of Taiwanese grade 1–9 teachers and schools. Educational Technology Research and Development, 61(1), 25–50.

Hu, L. T., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling, 6, 1–55.

Ifinedo, E., Rikala, J., & Hämäläinen, T. (2020). Factors affecting Nigerian teacher educators’ technology integration: Considering characteristics, knowledge constructs, ICT practices and beliefs. Computers & Education, 146, 1–17.

Inan, F. A., & Lowther, D. L. (2010). Factors affecting technology integration in K-12 classrooms: A path model. Educational Technology Research and Development, 58(2), 137–154.

Islahi, F. (2019). Exploring teacher attitude towards information technology with a gender perspective. Contemporary Educational Technology, 10(1), 37–54.

Kabakci Yurdakul, I., & Çoklar, A. N. (2014). Modeling preservice teachers’ TPACK competencies based on ICT usage. Journal of Computer Assisted Learning, 30(4), 363–376.

Karaca, F., Can, G., & Yildirim, S. (2013). A path model for technology integration into elementary school settings in Turkey. Computers & Education, 68, 353–365.

Kline, R. B. (2015). Principles and practices of structural equation modeling (4th ed.). New York: Guilford Press.

Leem, J., & Sung, E. (2019). Teachers' beliefs and technology acceptance concerning SMART mobile devices for SMART education in South Korea. British Journal of Educational Technology, 50(2), 601–613.

Liaw, S. S., & Huang, H. M. (2013). Perceived satisfaction, perceived usefulness and interactive learning environments as predictors to self-regulation in e-learning environments. Computers & Education, 60(1), 14–24.

Lumpe, A. T., & Chambers, E. (2001). Assessing teachers’ context beliefs about technology use. Journal of Research on Technology in Education, 34(1), 93–107.

Mathews, J. G., & Guarino, A. J. (2000). Predicting teacher computer use: A path analysis. International Journal of Instructional Media, 27(4), 385–392.

Mioduser, D., Nachmias, R., Tubin, D., & Forkosh-Baruch, A. (2003). Analysis schema for the study of domains and levels of pedagogical innovation in schools using ICT. Education and Information Technologies, 8(1), 23–36.

Miranda, H., & Russell, M. (2012). Understanding factors associated with teacher-directed student use of technology in elementary classrooms: A structural equation modeling approach. British Journal of Educational Technology, 43(4), 652–666.

Mishra, P., & Koehler, M. J. (2006). Technological pedagogical content knowledge: A framework for teacher knowledge. Teachers College Record, 108, 1017–1054.

Morquin, D., Challoo, L., & Green, M. (2019). Teachers’ perceptions regarding the use of Google classroom and Google docs, E-Learn: World Conference on E-Learning in Corporate, Government, Healthcare, and Higher Education (pp. 21–30). Association for the Advancement of Computing in Education (AACE).

Morrison, G. M., & Lowther, D. L. (2010). Integrating computer technology into the classroom (4th ed.). Upper Saddle River: Pearson, Merrill Prentice Hall.

Mueller, J., Wood, E., Willoughby, R., Ross, C., & Specht, J. (2008). Identifying discriminating variables between teachers who fully integrate computers and teachers with limited integration. Computers & Education, 51, 1523–1537.

Muthén, L. K., & Muthén, B. O. (1998-2012). Mplus user’s guide (7th ed.). Los Angeles: Muthén & Muthén.

Muthén, B. O., & Satorra, A. (1995). Complex sample data in structural equation modeling. Sociological Methodology, 25, 267–316.

Nelson, M. J., & Hawk, N. A. (2020). The impact of field experiences on prospective preservice teachers’ technology integration beliefs and intentions. Teaching and Teacher Education, 89, 1–11.

Nelson, M. J., Voithofer, R., & Cheng, S. L. (2019). Mediating factors that influence the technology integration practices of teacher educators. Computers & Education, 128, 330–344.

Niederhauser, D. S., & Stoddart, T. (2001). Teachers’ instructional perspectives and use of educational software. Teaching and Teacher Education, 17(1), 15–31.

Niederhauser, D. S., & Perkmen, S. (2010). Beyond self-efficacy: Measuring pre-service teachers’ instructional technology outcome expectations. Computers in Human Behavior, 26(3), 436–442.

O’Dwyer, L., Russell, M., & Bebell, D. (2003a). Elementary teachers’ use of technology: Characteristics of teachers, schools, and districts associated with technology use. Boston: Technology and Assessment Study Collaborative, Boston College.

O’Dwyer, L., Russell, M., & Bebell, D. (2003b). Elementary teachers' use of technology: Characteristics of teachers, schools, and districts associated with technology use. inTASC Publications, 2.

Oye, N. D., Iahad, N. A., & Rahim, N. A. (2014). The history of UTAUT model and its impact on ICT acceptance and usage by academicians. Education and Information Technologies, 19(1), 251–270.

Pelgrum, W. J. (2001). Obstacles to the integration of ICT in education: Results from a worldwide educational assessment. Computers & Education, 37, 163–178.

Peugh, J. L. (2010). A practical guide to multilevel modeling. Journal of School Psychology, 48, 85–112.

Raykov, T. (1997). Scale reliability, Cronbach’s coefficient alpha, and violations of essential tau equivalence with fixed congeneric components. Multivariate Behavioral Research, 32, 329–353.

Raykov, T. (2004). Behavioral scale reliability and measurement invariance evaluation using latent variable modeling. Behavior Therapy, 35, 299–331.

Raykov, T., & Marcoulides, G. A. (2011). Introduction to psychometric theory. New York: Taylor and Francis Group.

Razak, N. A., Jalil, H. A., Krauss, S. E., & Ahmad, N. A. (2018). Successful implementation of information and communication technology integration in Malaysian public schools: An activity systems analysis approach. Studies in Educational Evaluation, 58, 17–29.

Ritzhaupt, A. D., Dawson, K., & Cavanaugh, C. (2012). An investigation of factors influencing student use of technology in K-12 classrooms using path analysis. Journal of Educational Computing Research, 46(3), 229–254.

Ritzhaupt, A. D., Huggins-Manley, A. C., Dawson, K., Ağaçlı-Doğan, N., & Doğan, S. (2017). Validity and appropriate uses of the revised technology uses and perceptions survey (TUPS). Journal of Research on Technology in Education, 49(1-2), 73–87.

Robinson, W. I. (2003). External and internal factors which predict teachers’ computer usage in K-12 classrooms. Detroit: Wayne State University.

Russell, M., Bebell, D., O’Dwyer, L., & O’Connor, K. (2003). Examining teacher technology use: Implications for preservice and inservice teacher preparation. Journal of Teacher Education, 54(4), 297–310.

Rutkowski, L., Gonzalez, E., Joncas, M., & von Davier, M. (2010). International large-scale assessment data: Issues in secondary analysis and reporting. Educational Researcher, 39(2), 142–151.

Sang, G., Valcke, M., van Braak, J., Tondeur, J., & Zhu, C. (2011). Predicting ICT integration into classroom teaching in Chinese primary schools: Exploring the complex interplay of teacher-related variables. Journal of Computer Assisted Learning, 27, 160–172.

Scherer, R., & Siddiq, F. (2015). Revisiting teachers’ computer self-efficacy: A differentiated view on gender differences. Computers in Human Behavior, 53, 48–57.

Scherer, R., Siddiq, F., & Teo, T. (2015). Becoming more specific: Measuring and modeling teachers' perceived usefulness of ICT in the context of teaching and learning. Computers & Education, 88, 202–214.

Scherer, R., Siddiq, F., & Tondeur, J. (2019). The technology acceptance model (TAM): A meta-analytic structural equation modeling approach to explaining teachers’ adoption of digital technology in education. Computers & Education, 128, 13–35.

Shiue, Y. M. (2007). Investigating the sources of teachers’ instructional technology use through the decomposed theory of planned behavior. Journal of Educational Computing Research, 36(4), 425–453.

Shu, Q., Tu, Q., & Wang, K. (2011). The impact of computer self-efficacy and technology dependence on computer-related technostress: A social cognitive theory perspective. International Journal of Human-Computer Interaction, 27(10), 923–939.

Siyam, N. (2019). Factors impacting special education teachers’ acceptance and actual use of technology. Education and Information Technologies, 24(3), 2035–2057.

Spiteri, M., & Rundgren, S. N. C. (2020). Literature review on the factors affecting primary teachers’ use of digital technology. Technology, Knowledge and Learning, 25(1), 115–128.

Teo, T. (2011). Factors influencing teachers’ intention to use technology: Model development and test. Computers & Education, 57(4), 2432–2440.

Tondeur, J., Valcke, M., & van Braak, J. (2008). A multidimensional approach to determinants of computer use in primary education: Teacher and school characteristics. Journal of Computer Assisted Learning, 24(6), 494–506.

Tondeur, J., Kershaw, L. H., Vanderlinde, R. R., & van Braak, J. (2013). Getting inside the black box of technology integration in education: Teachers’ stimulated recall of classroom observations. Australasian Journal of Educational Technology, 29(3), 434–449.

Tondeur, J., Aesaert, K., Pynoo, B., van Braak, J., Fraeyman, N., & Erstad, O. (2017). Developing a validated instrument to measure preservice teachers’ ICT competencies: Meeting the demands of the 21st century. British Journal of Educational Technology, 48(2), 462–472.

Tu, Q., Wang, K. L., & Shu, Q. (2005). Computer-related technostress in China. Communications of the ACM, 48(4), 77–81.

Valtonen, T., Leppänen, U., Hyypiä, M., Sointu, E., Smits, A., & Tondeur, J. (2020). Fresh perspectives on TPACK: Pre-service teachers’ own appraisal of their challenging and confident TPACK areas. Education and Information Technologies, 1–20.

van Braak, J., Tondeur, J., & Valcke, M. (2004). Explaining different types of computer use among primary school teachers. European Journal of Psychology of Education, 19(4), 407–422.

Wozney, L., Venkatesh, V., & Abrami, P. (2006). Implementing computer technologies: Teachers’ perceptions and practices. Journal of Technology and Teacher Education, 14(1), 173–207.

Zhao, Y., & Frank, K. A. (2003). Factors affecting technology uses in schools: An ecological perspective. American Educational Research Journal, 40(4), 807–840.

Acknowledgements

We thank Dr. Albert Ritzhaupt and Dr. Kara Dawson of the University of Florida, who provided us with the data set and gave feedback throughout the writing of the manuscript.

Funding

Open access funding provided by University of Oulu including Oulu University Hospital.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dogan, S., Dogan, N.A. & Celik, I. Teachers’ skills to integrate technology in education: Two path models explaining instructional and application software use. Educ Inf Technol 26, 1311–1332 (2021). https://doi.org/10.1007/s10639-020-10310-4

DOI: https://doi.org/10.1007/s10639-020-10310-4