Abstract

P-time event graphs are discrete event systems suitable for modeling processes in which tasks must be executed in predefined time windows. Their dynamics can be represented by max-plus linear-dual inequalities (LDIs), i.e., systems of linear dynamical inequalities in the primal and dual operations of the max-plus algebra. We define a new class of models called switched LDIs (SLDIs), which allow switching between different modes of operation, each corresponding to a set of LDIs, according to a sequence of modes called a schedule. In this paper, we focus on the analysis of SLDIs when the considered schedule is fixed and either periodic or intermittently periodic. We show that SLDIs can model a wide range of applications including single-robot multi-product processing networks, in which every product has different processing requirements and corresponds to a specific mode of operation. Based on the analysis of SLDIs, we propose algorithms to compute: i. minimum and maximum cycle times for these processes, improving the time complexity of other existing approaches; ii. a complete trajectory of the robot including start-up and shut-down transients.


1 Introduction

Time-window constraints arise in different settings. Typical examples from manufacturing are the bakery and the semi-conductor industries, where some processes (e.g., yeast fermentation in the former case, metal deposition in the latter) must be executed under strict temporal constraints in order to obtain the desired quality of the final product (Hecker et al. 2014; Manier and Bloch 2003). In transportation, time windows can be used to specify admissible pickup and delivery times for customers (Solomon and Desrosiers 1988). From the pharmaceutical domain, high-throughput screening systems are another example where, due to chemical requirements, the time allowed to elapse between two operations is restricted by lower and/or upper bounds (Mayer and Raisch 2004).

P-time event graphs (P-TEGs) are event graphs (Footnote 1) in which tokens are forced to sojourn in places in predefined time windows. Given their ability to model time-window constraints, they have been applied to solve scheduling and control problems in various types of systems including bakeries, electroplating lines, and cluster tools (Declerck 2021; Špaček et al. 1999; Becha et al. 2017; Kim et al. 2003). The signal describing firing times of transitions in P-TEGs evolves, non-deterministically, according to max-plus linear-dual inequalities (LDIs), i.e., dynamical inequalities that are linear in the primal operations and in the dual operations of the max-plus algebra (see Eq. 2 in Sect. 2.4).

Since temporal upper bound constraints can be considered as specifications that a system driven exclusively by lower time bounds (i.e., a max-plus linear system) needs to satisfy, several authors have addressed the problem of limiting the sojourn time of tokens in places from a control point of view. This resulted in a variety of techniques; we mention for instance (Katz 2007; Maia et al. 2011; Maia and Andrade 2011; Declerck 2016), in which the authors find sufficient conditions for the control of max-plus linear systems subject to constraints using, respectively, geometric control theory, max-plus spectral theory, residuation theory, and model predictive control. Other sufficient conditions were discovered in Amari et al. (2012). Note that, in its most general formulation, this control problem is still open, as no necessary and sufficient condition for its solution has been found. The reason for this can be traced to the fact that a more fundamental problem, namely, checking the existence of solutions of LDIs, has not yet been fully understood. On the other hand, the steady-state version of the problem was solved for a large class of instances in Gonçalves et al. (2017), exploiting the results of Katz (2007). Moreover, the simple nature of the dynamics of P-TEGs has led to a number of theoretical results regarding the analysis of their cycle time (Declerck et al. 2007; Lee et al. 2014; Špaček and Komenda 2017; Zorzenon et al. 2022), which is defined as the temporal difference between the occurrence of two repetitions of the same event in the system, assuming events occur in a periodic manner (for a formal definition, see Sect. 3.2).

Such a simple characterization comes, however, at the cost of limited modeling power. To illustrate this, consider the example of flow shops, which are manufacturing systems consisting of a sequence of machines of unitary capacity where all the jobs (i.e., parts to be processed) must visit all the machines in the same order (see Fig. 1). P-TEGs are the ideal tool for representing flow shops where all the jobs are identical. Suppose instead that jobs of different types are present, each of which requires different processing times in machines, and that the entrance order of jobs is periodic and repeats every \(V\in \mathbb {N}\) jobs. An example of periodic entrance order of period \(V = 2\) for the flow shop of Fig. 1 is \(\mathsf {a}\mathsf {b}\mathsf {a}\mathsf {b}\mathsf {a}\mathsf {b}\ldots \) In this case, P-TEGs can still be adopted to model the system (this is shown formally in Proposition 5), but:

- the number of transitions in the resulting P-TEGs increases (linearly) with V, which leads to a rather high computational complexity for the cycle time analysis when large values of V are considered;

- for a different entrance order of jobs, a new P-TEG must be built.

Moreover, when the number of jobs to be processed is infinite (Footnote 2) and the entrance order is not periodic, no P-TEG can model the manufacturing system.

With the aim of overcoming these limitations, in this paper we introduce a new class of dynamical systems called switched max-plus linear-dual inequalities (SLDIs). SLDIs extend the modeling power of P-TEGs by allowing switching among different modes of operation, each corresponding to a system of LDIs. Let us take the example of the flow shop again. By assigning a mode of operation to each job type, we can model the manufacturing system by SLDIs in which different job entrance orders simply correspond to different schedules, i.e., sequences of modes. A first advantage of SLDIs is thus that, with a single dynamical system, one can represent all possible entrance orders of jobs in the flow shop, including non-periodic ones.

It is worth noting that this property is not exclusive to SLDIs, as P-time Petri nets can also be used to model flow shops under any job order (Khansa et al. 1996; Bonhomme 2013). In fact, there exists a strong relation between the two models (which is briefly discussed in Sect. 6). However, in contrast to P-time Petri nets, the dynamics of SLDIs possesses another appealing feature: a switched-linear formulation in the max-plus algebra. By exploiting the abundance of existing results in this algebraic framework (see, e.g., Cuninghame-Green 1979; Baccelli et al. 1992; Butkovič 2010; Hardouin et al. 2018), in this paper we will derive low-complexity algorithms for the cycle time computation, considering two types of schedules: periodic and intermittently periodic schedules. In the example of the flow shop, the first type of schedule corresponds to periodic arrivals of jobs of different types in the manufacturing system (as in schedule \(\mathsf {a}\mathsf {b}\mathsf {a}\mathsf {b}\mathsf {a}\mathsf {b}\ldots \)), whereas the second one is a generalization in which the entrance order of jobs alternates between periodic and non-periodic regimes. An example of a schedule of the second type for the flow shop of Fig. 1 is \(\mathsf {a}\mathsf {a}\mathsf {b}\mathsf {a}\mathsf {b}\mathsf {a}\mathsf {b}\ldots \mathsf {a}\mathsf {b}\mathsf {b}\mathsf {b}\mathsf {a}\mathsf {b}\mathsf {a}\mathsf {b}\mathsf {a}\ldots \), which consists of an initial transient \(\mathsf {a}\) followed by the periodic regime \(\mathsf {a}\mathsf {b}\mathsf {a}\mathsf {b}\ldots \), an intermediate transient \(\mathsf {b}\), and the final periodic subschedule \(\mathsf {b}\mathsf {a}\mathsf {b}\mathsf {a}\ldots \) (Footnote 3). In the cycle time analysis for this kind of schedule, one is interested in finding the cycle times that can be achieved in each periodic regime. The motivation for studying intermittently periodic schedules is two-fold:

1. they are ubiquitous in applications (as discussed in Sect. 4.4), and

2. differently from systems described by only lower time-bounds, the cycle time analysis in this class of schedules can produce non-trivial results in the presence of time-window constraints.

Here, by "non-trivial" we mean that the cycle times of the periodic subschedules in an intermittently periodic schedule may be different from those obtained by studying the periodic subschedules independently.

The present article enhances and extends the recent conference paper (Zorzenon et al. 2022) in several ways. Besides simplifying the preliminaries in Sect. 2 and adding a number of simple and illustrative examples, an important contribution of this extended paper is to systematically investigate the initial conditions of P-TEGs (in Sect. 3) and their relation to SLDIs. Two types of initial conditions are presented, loose and strict, and it is proven that P-TEGs with strict initial conditions can be represented by SLDIs but not by pure LDIs (Sect. 4.2). In Sect. 4, after formally presenting SLDIs, the cycle time analysis in periodic and intermittently periodic trajectories is discussed; intermittently periodic trajectories are introduced for the first time in this extended version. In Zorzenon et al. (2022), the correctness of the low-complexity method for computing the cycle time in SLDIs under periodic schedules was proven using tools from automata and regular languages theory; in this paper, the proof is entirely based on algebraic arguments. Although the initial inspiration for the algorithm was drawn from analogies between multi-precedence graphs and automata theory, the new proof relies on more established results, which hopefully makes it less arduous to read.

In Sect. 5, the analysis of the case study considered in Zorzenon et al. (2022) has been deepened further. The case study consists of a robotic job shop example derived from Kats et al. (2008), where parts of different type are required to visit a sequence of processing stations in different order, and are transported by a single robot. The authors of Kats et al. (2008) proved that the cycle time analysis in this class of systems can be performed in strongly polynomial time complexity \(\mathcal {O}(V^4n^4)\), where V is the period of the entrance order of different types of parts in the system, and n is the number of processing stations. In this paper, we show that the complexity can be reduced to \(\mathcal {O}(Vn^3 + n^4)\) using SLDIs. Computational tests show that the advantage is not only theoretical, but translates into a tangibly faster cycle time analysis. This makes our algorithm an appealing optimization subroutine for the solution of (NP-hard) cyclic scheduling problems in which the goal is to find the optimal path of the robot. Additionally, in this paper we show how to derive a complete (intermittently periodic) trajectory of the system, consisting of a start-up transient (where parts are initially introduced into the system), a periodic regime, and a shut-down transient (in which all parts are removed from the stations).

Finally, Sect. 6 provides concluding remarks, comparisons with related classes of dynamical systems, and suggestions for future work.

1.1 Notation

The set of positive, respectively non-negative, integers is denoted by \(\mathbb {N}\), respectively \(\mathbb {N}_0\). The set of non-negative real numbers is denoted by \(\mathbb {R}_{\ge 0}\). Moreover, \(\mathbb {R}_{\text {max}}:= \mathbb {R} \cup \{-\infty \}\), \(\mathbb {R}_{\text {min}}:= \mathbb {R}\cup \{\infty \}\), and \(\overline{\mathbb {R}}:= \mathbb {R}_{\text {max}} \cup \{\infty \}=\mathbb {R}_{\text {min}}\cup \{-\infty \}\). If \(A\in \overline{\mathbb {R}}^{n\times n}\), we will use notation \(A^\sharp \) to indicate \(-A^\top \). Given \(a, b \in \mathbb {Z}\) with \(b \ge a\), \([\![{a, b}]\!]\) denotes the discrete interval \(\{a, a + 1, a + 2, \ldots , b\}\).

2 Preliminaries

In this section, some basic concepts of max-plus algebra (Sect. 2.1) and precedence graphs (Sect. 2.2) are recalled; for a more detailed discussion of those topics, we refer to Baccelli et al. (1992); Butkovič (2010); Hardouin et al. (2018). Sections 2.3 and 2.4 present the non-positive circuit weight problem (introduced in Zorzenon et al. 2022) and max-plus linear-dual inequalities.

2.1 Max-plus algebra

The max-plus algebra operates on the set of real numbers extended with \(-\infty \) and \(+\infty \), and is endowed with operations \(\oplus \) (addition), \(\otimes \) (multiplication), \(\boxplus \) (dual addition), and \(\boxtimes \) (dual multiplication), defined as follows: for all \(a,b\in \overline{\mathbb {R}}\),

\[ a\oplus b = \max (a,b),\quad a\otimes b = \begin{cases} a+b & \text {if } a,b\ne -\infty ,\\ -\infty & \text {otherwise},\end{cases}\quad a\boxplus b = \min (a,b),\quad a\boxtimes b = \begin{cases} a+b & \text {if } a,b\ne +\infty ,\\ +\infty & \text {otherwise}.\end{cases} \]

These operations can be extended to matrices; given \(A,B\in \overline{\mathbb {R}}^{m\times n}\), \(C\in \overline{\mathbb {R}}^{n\times p}\), for all \(i\in [\![1,m]\!]\), \(j\in [\![1,n]\!]\), \(h\in [\![1,p]\!]\),

\[ (A\oplus B)_{ij} = A_{ij}\oplus B_{ij},\quad (A\boxplus B)_{ij} = A_{ij}\boxplus B_{ij},\quad (A\otimes C)_{ih} = \bigoplus _{j=1}^{n} A_{ij}\otimes C_{jh},\quad (A\boxtimes C)_{ih} = \mathop {\boxplus }\limits _{j=1}^{n} A_{ij}\boxtimes C_{jh}. \]

When the meaning is clear from the context, we will denote the max-plus multiplication between matrices A and C, \(A\otimes C\), simply by AC. The symbols \(\mathcal {E}\), \(\mathcal {T}\), and \(E_\otimes \) denote, respectively, the neutral element for \(\oplus \), \(\boxplus \), and \(\otimes \), i.e., \(\mathcal {E}_{ij} = -\infty \) and \(\mathcal {T}_{ij} = +\infty \) for all i, j, and \(E_\otimes \) is a square matrix with \((E_\otimes )_{ij} = 0\) if \(i=j\) and \((E_\otimes )_{ij}=-\infty \) if \(i\ne j\). Given a square matrix A, its rth power is defined recursively by \(A^0 = E_\otimes \) and, for all \(r\ge 1\), \(A^r = A^{r-1}\otimes A\). Moreover, the Kleene star of a matrix \(A\in \overline{\mathbb {R}}^{n\times n}\) is

\[ A^* = \bigoplus _{r\in \mathbb {N}_0} A^r. \]

The partial order relation \(\le \) between two matrices A and B of the same size is defined elementwise: \(A\le B\) if and only if \(A_{ij} \le B_{ij}\) for all i, j. Analogously to the standard algebra, we define the max-plus trace of matrix \(A\in \overline{\mathbb {R}}^{n\times n}\) by \({{\,\textrm{tr}\,}}(A) = \bigoplus _{i = 1}^n A_{ii}\). The product and dual product between scalar \(\lambda \in \overline{\mathbb {R}}\) and matrix \(A\in \overline{\mathbb {R}}^{m\times n}\) are given by

\[ (\lambda \otimes A)_{ij} = \lambda \otimes A_{ij},\quad (\lambda \boxtimes A)_{ij} = \lambda \boxtimes A_{ij}. \]

If \(\lambda \notin \{-\infty ,+\infty \}\), the two expressions coincide. We will therefore simply write \(\lambda A\) in place of \(\lambda \otimes A\) or \(\lambda \boxtimes A\) when \(\lambda \in \mathbb {R}\). When \(\lambda \in \mathbb {R}\), we indicate by \(\lambda ^{-1}\) the element such that \(\lambda ^{-1}\otimes \lambda = \lambda \otimes \lambda ^{-1} = 0\); thus, in the standard algebra, \(\lambda ^{-1}\) coincides with \(-\lambda \). When \(\lambda \in \{-\infty ,+\infty \}\), \(\lambda \) does not have a multiplicative inverse; nevertheless, we will use symbol \(\lambda ^{-1}\) to denote \(-\lambda \).
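As an informal illustration of the operations of this section, the following Python sketch implements the scalar products, the matrix products, and the matrix power on nested lists. All function names (`otimes`, `boxtimes`, `mat_otimes`, `mat_boxtimes`, `identity`, `mat_power`) are hypothetical helpers chosen for this example and are not taken from the paper; infinite entries are represented by `math.inf`.

```python
import math

NEG_INF, POS_INF = -math.inf, math.inf

def otimes(a, b):
    # Max-plus product: ordinary sum, with -inf absorbing (even against +inf).
    return NEG_INF if NEG_INF in (a, b) else a + b

def boxtimes(a, b):
    # Dual product: ordinary sum, with +inf absorbing (even against -inf).
    return POS_INF if POS_INF in (a, b) else a + b

def mat_otimes(A, C):
    # Entry (i, h) is the maximum over j of A[i][j] (x) C[j][h].
    return [[max(otimes(a, C[j][h]) for j, a in enumerate(row))
             for h in range(len(C[0]))] for row in A]

def mat_boxtimes(A, C):
    # Entry (i, h) is the minimum over j of A[i][j] [x] C[j][h].
    return [[min(boxtimes(a, C[j][h]) for j, a in enumerate(row))
             for h in range(len(C[0]))] for row in A]

def identity(n):
    # Neutral element of the max-plus matrix product.
    return [[0.0 if i == j else NEG_INF for j in range(n)] for i in range(n)]

def mat_power(A, r):
    # A^0 is the identity and A^r = A^(r-1) (x) A.
    result = identity(len(A))
    for _ in range(r):
        result = mat_otimes(result, A)
    return result

A = [[1.0, NEG_INF], [2.0, 3.0]]
print(mat_power(A, 2))  # [[2.0, -inf], [5.0, 6.0]]
```

The only difference between the primal and dual products is the value assigned to the sum of \(-\infty \) and \(+\infty \), which is why the two scalar functions are kept separate.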

2.2 Precedence graphs

A directed graph is a pair \((N,E)\) where \(N\) is a finite set of nodes and \(E\subseteq N\times N\) is the set of arcs. A weighted directed graph is a triplet \((N,E,w)\), where \((N,E)\) is a directed graph, and \(w:E\rightarrow \mathbb {R}\) is a function that associates a weight w((i, j)) to each arc \((i,j)\in E\) of graph \((N,E)\). A sequence of \(r+1\) nodes \(\rho =(i_1,i_2,\ldots ,i_{r+1})\), \(r\ge 0\), such that \((i_j,i_{j+1})\in E\) for all \(j\in [\![1,r]\!]\) is a path of length r; a path \(\rho \) such that \(i_1 = i_{r+1}\) is called a circuit. The weight of a path is the sum (in conventional algebra) of the weights of the arcs composing it; conventionally, the weight of a path of length \(r=0\), i.e., \(\rho =(i_1)\), is equal to 0.

The precedence graph associated with matrix \(A\in \mathbb {R}_{\text {max}}^{n\times n}\) is the weighted directed graph \(\mathcal {G}(A)=(N,E,w)\), where \(N=[\![1,n]\!]\), and there is an arc \((j,i)\in E\) of weight \(w((j,i))=A_{ij}\) if and only if \(A_{ij}\ne -\infty \). We say that \(\mathcal {G}(A)\) is a parametric precedence graph when elements of A are functions of some real parameters \(\lambda _1,\ldots ,\lambda _p\), i.e., \(A = A(\lambda _1,\ldots ,\lambda _p)\).
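The convention that entry \(A_{ij}\) is the weight of the arc from node j to node i is easy to get backwards, so a small Python sketch may help. The names `precedence_graph` and `path_weight` are hypothetical, and nodes are numbered from 0 rather than from 1.

```python
import math

NEG_INF = -math.inf

def precedence_graph(A):
    # Arc (j, i) with weight A[i][j] exists if and only if A[i][j] is finite.
    n = len(A)
    return {(j, i): A[i][j]
            for i in range(n) for j in range(n) if A[i][j] != NEG_INF}

def path_weight(arcs, path):
    # Conventional sum of arc weights; a path of length 0 has weight 0.
    return sum(arcs[(u, v)] for u, v in zip(path, path[1:]))

A = [[NEG_INF, 3.0], [1.0, NEG_INF]]
arcs = precedence_graph(A)
print(arcs)                          # {(1, 0): 3.0, (0, 1): 1.0}
print(path_weight(arcs, [0, 1, 0]))  # 4.0
```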

There are important connections between the max-plus algebra and precedence graphs. For instance, element (i, j) of the rth max-plus power of a matrix \(A\in \mathbb {R}_{\text {max}}^{n\times n}\), \((A^r)_{ij}\), corresponds to the maximum weight of all paths in \(\mathcal {G}(A)\) of length r from node j to node i. A direct consequence is that \((A^*)_{ij}\) is equal to the largest weight of all paths (of any length) from node j to i. Observe that a precedence graph \(\mathcal {G}(A)\) does not contain circuits with positive weight if and only if \({{\,\textrm{tr}\,}}(A^*) = 0\); in the presence of at least one circuit with positive weight, \({{\,\textrm{tr}\,}}(A^*) = \infty \). In the following, we indicate by \(\Gamma \) the set of all precedence graphs that do not contain circuits with positive weight, i.e.,

\[ \Gamma = \{\mathcal {G}(A)\ |\ {{\,\textrm{tr}\,}}(A^*) = 0\}. \]

The following proposition will be used later to verify the existence of, and compute, trajectories satisfying time-window constraints in (switched) max-plus linear-dual inequalities.

Proposition 1

(Butkovič 2010; Baccelli et al. 1992) Let A be an \(n\times n\) matrix with elements in \(\mathbb {R}_{\text {max}}\). Inequality \(A\otimes x \le x\) admits a solution \(x\in \mathbb {R}^n\) if and only if \({\mathcal {G}(A)}\in \Gamma \). In this case, any column of \(A^*\) solves the inequality, i.e., \(A\otimes (A^*)_{\cdot ,i} \le (A^*)_{\cdot ,i}\) for all \(i\in [\![1,n]\!]\).

The maximum circuit mean of precedence graph \(\mathcal {G}(A)\), denoted by \(\text {mcm}(A)\), is the maximum weight-over-length ratio of all circuits of positive length in the graph; this value coincides with the largest max-plus eigenvalue (the max-plus spectral radius) of A, i.e., the largest \(\lambda \in \mathbb {R}_{\text {max}}\) such that \(A\otimes x = \lambda x\) for some vector x with elements from \(\mathbb {R}_{\text {max}}\). For a matrix of dimension \(n\times n\), the maximum circuit mean can be computed through the following formula (Baccelli et al. 1992):

\[ \text {mcm}(A) = \bigoplus _{k=1}^{n} {{\,\textrm{tr}\,}}(A^k)^{\frac{1}{k}}, \]

where \(a^{\frac{1}{k}}\) (corresponding to \(\frac{a}{k}\) in the standard algebra) is the kth max-plus root of \(a\in \mathbb {R}_{\text {max}}\); a more efficient algorithm that returns the same value in time complexity \(\mathcal {O}(n\times m)\) in the worst case, where m is the number of edges in \(\mathcal {G}(A)\), is due to Karp (1978).
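A direct, unoptimized transcription of the trace-based formula is sketched below: it takes the maximum over \(k\in [\![1,n]\!]\) of the max-plus trace of \(A^k\) divided by k. The function names are hypothetical, entries are assumed to lie in \(\mathbb {R}_{\text {max}}\) (no \(+\infty \)), and the cost is \(\mathcal {O}(n^4)\), so this is only meant to make the definition concrete; it is not Karp's algorithm.

```python
import math

NEG_INF = -math.inf

def mat_otimes(A, B):
    # Max-plus product of square matrices with entries in R_max (no +inf).
    n = len(A)
    return [[max(A[i][k] + B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def mcm(A):
    # Maximum over k = 1..n of tr(A^k) / k, i.e., the maximum circuit mean.
    n = len(A)
    best, power = NEG_INF, A
    for k in range(1, n + 1):
        trace = max(power[i][i] for i in range(n))
        best = max(best, trace / k)
        power = mat_otimes(power, A)
    return best

# One circuit 0 -> 1 -> 0 of weight 3 + 1 = 4 and length 2.
print(mcm([[NEG_INF, 3.0], [1.0, NEG_INF]]))  # 2.0
```

For a graph without circuits the function returns `-inf`, in agreement with the convention that the maximum over an empty set of circuits is \(-\infty \).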

We recall that it is possible to check whether \(\mathcal {G}(A)\in \Gamma \) and, when \(\mathcal {G}(A)\in \Gamma \), to compute \(A^*\) in time \(\mathcal {O}(n^3)\) in the worst case, using the Floyd-Warshall algorithm (Hougardy 2010). In the case \(\mathcal {G}(A)\notin \Gamma \), computing \(A^*\) is an NP-hard problem; fortunately, the algorithms presented in the next sections will never face this issue in practice.
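The check \(\mathcal {G}(A)\in \Gamma \) and the computation of \(A^*\) can be sketched with a longest-path variant of the Floyd-Warshall recursion, as below. This is a minimal illustration under the assumption that A has entries in \(\mathbb {R}_{\text {max}}\); the names `kleene_star` and `solves` are hypothetical. The second function checks the inequality of Proposition 1 on a given vector.

```python
import math

NEG_INF = -math.inf

def kleene_star(A):
    # Return A* if G(A) has no circuit of positive weight, otherwise None.
    n = len(A)
    # Start from the identity "plus" A: best paths of length 0 and 1.
    S = [[max(A[i][j], 0.0 if i == j else NEG_INF) for j in range(n)]
         for i in range(n)]
    for k in range(n):
        for i in range(n):
            for j in range(n):
                S[i][j] = max(S[i][j], S[i][k] + S[k][j])
    if any(S[i][i] > 0 for i in range(n)):
        return None  # a positive-weight circuit was detected
    return S

def solves(A, x):
    # True if A (x) x <= x holds componentwise.
    return all(max(a + xj for a, xj in zip(row, x)) <= xi
               for row, xi in zip(A, x))

A = [[NEG_INF, -3.0], [2.0, NEG_INF]]      # single circuit of weight -1
star = kleene_star(A)
print(star)                                # [[0.0, -3.0], [2.0, 0.0]]
columns = [[row[i] for row in star] for i in range(len(star))]
print(all(solves(A, col) for col in columns))  # True
```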

2.3 The non-positive circuit weight problem

Given a parametric precedence graph \(\mathcal {G}(A)\), where \(A= A(\lambda _1,\ldots ,\lambda _p)\), the non-positive circuit weight problem (NCP) consists in characterizing the set \(\Lambda _{{}_{\text {NCP}}}(A) = \{(\lambda _1,\dots ,\lambda _p)\in \mathbb {R}^p\ |\ \mathcal {G}(A)\in \Gamma \}\) of all values of parameters \(\lambda _1, \dots ,\lambda _p\) for which \(\mathcal {G}(A)\) does not contain circuits with positive weight. Specific classes of the NCP find applications in the analysis of periodic trajectories in max-plus dynamical systems. An application example is shown in Sect. 2.4.

2.3.1 The PIC-NCP

When matrix \(A\) has the form

\[ A(\lambda ) = \lambda P\oplus \lambda ^{-1}I\oplus C \]

for arbitrary matrices \(P,I,C\in \mathbb {R}_{\text {max}}^{n\times n}\) (called proportional, inverse (Footnote 4), and constant matrix, respectively), then the problem is referred to as the proportional-inverse-constant-NCP (PIC-NCP). In this case, \(\Lambda _{{}_{\text {NCP}}}(\lambda P\oplus \lambda ^{-1}I\oplus C)=[\lambda _{\text {min}},\lambda _{\text {max}}]\cap \mathbb {R}\) is an interval, and its extreme points can be found either in weakly polynomial time using linear programming solvers such as the interior-point method, or in strongly polynomial time \(\mathcal {O}(n^4)\) using Algorithm 1 (Zorzenon et al. 2022). The functioning of the latter algorithm is briefly described in the following.

We remark that the PIC-NCP represents a generalization of the max-plus subeigenproblem (see Gaubert 1995), i.e., the problem of finding a real \(\lambda \) such that the inequality

\[ I\otimes x \le \lambda x \]

admits a solution \(x\in \mathbb {R}^{n}\) for a given matrix \(I\in \mathbb {R}_{\text {max}}^{n\times n}\). Indeed, the above inequality can be rewritten (by multiplying both sides by \(\lambda ^{-1}\)) as

\[ \lambda ^{-1}I\otimes x \le x \]

and, from Proposition 1, admits a solution \(x\in \mathbb {R}^n\) if and only if the precedence graph \(\mathcal {G}(\lambda ^{-1}I)\) does not have circuits with positive weight; the PIC-NCP thus simplifies into the max-plus subeigenproblem when matrices P and C are \(\mathcal {E}\). We recall from (Gaubert 1995, Lemma 1) that the least solution of the subeigenproblem is the max-plus spectral radius of matrix I, i.e.,

\[ \lambda _{\text {min}} = \text {mcm}(I). \]

When matrices P and C are not \(\mathcal {E}\), a more sophisticated approach is necessary to solve the PIC-NCP. In particular, in Algorithm 1 some pre-computations are first performed (lines 3-4) to simplify the problem into an equivalent PI-NCP (a PIC-NCP where matrix C is \(\mathcal {E}\)) by redefining P as \(C^* \otimes P \otimes C^*\) and I as \(C^* \otimes I \otimes C^*\). Then, through the for-loop of lines 5-7, a matrix S is constructed such that, in every new iteration of the loop, the spectral radius of matrix \(I\otimes S^*\) and the inverse of the spectral radius of matrix \(P\otimes S^*\) approximate \(\lambda _{\text {min}}\) and \(\lambda _{\text {max}}\), respectively, with increasing accuracy. It can be shown (see Zorzenon et al. 2022) that after at most \(\left\lfloor {\frac{n}{2}} \right\rfloor \) iterations, the two quantities converge to the desired values (line 10), i.e.,

\[ \lambda _{\text {min}} = \text {mcm}(I\otimes S^*),\quad \lambda _{\text {max}} = \left( \text {mcm}(P\otimes S^*)\right) ^{-1}. \]

If some conditions on matrices C and S do not hold, the algorithm can be terminated prematurely as no \(\lambda \) solving the PIC-NCP exists (lines 1-2 and 8-9).

Algorithm 1 \(\mathsf {Solve\_NCP}(P,I,C)\) (from Zorzenon et al. 2022).

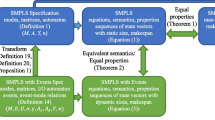

2.3.2 The MPIC-NCP

A natural generalization of the PIC-NCP is the multivariable PIC-NCP (MPIC-NCP), in which matrix A takes the form

\[ A(\lambda _1,\ldots ,\lambda _q) = \bigoplus _{i=1}^{q}\left( \lambda _iP_i\oplus \lambda _i^{-1}I_i\right) \oplus C \]

for some matrices \(P_i,I_i,C\in \mathbb {R}_{\text {max}}^{n\times n}\) and parameters \(\lambda _i\in \mathbb {R}\), for all \(i\in [\![1,q]\!]\). In this case, it can be shown that the problem becomes "trivially intractable" in q (Footnote 5), in the sense that the set \(\Lambda _{{}_{\text {NCP}}}(A)\) corresponds, in the worst case, to the solution of a system of \(3^q-1\) (i.e., exponentially many) non-redundant linear inequalities (in the conventional sense) in variables \(\lambda _1,\dots ,\lambda _q\); in other words, \(\Lambda _{{}_{\text {NCP}}}(A)\) is a polytope with at most \(3^q-1\) facets living in a q-dimensional space. Consequently, it is unrealistic to expect to efficiently solve the MPIC-NCP, since the solution set \(\Lambda _{{}_{\text {NCP}}}(A)\) cannot be described in polynomial space. Nevertheless, it is possible to verify the non-emptiness of \(\Lambda _{{}_{\text {NCP}}}(A)\) in (weakly) polynomial time from the following observation. Proposition 1 suggests that the parameters \(\lambda _i\) such that \(\mathcal {G}(A)\in \Gamma \) are those for which the inequality

\[ \left( \bigoplus _{i=1}^{q}\left( \lambda _iP_i\oplus \lambda _i^{-1}I_i\right) \oplus C\right) \otimes x \le x \]

admits a solution \(x\in \mathbb {R}^{n}\). We can rewrite the inequality above in the standard algebra as the system

\[ \forall i\in [\![1,n]\!],\quad \max _{j\in [\![1,n]\!]}\left( \max \left( \max _{h\in [\![1,q]\!]}\left( \lambda _h + (P_h)_{ij}\right) ,\ \max _{h\in [\![1,q]\!]}\left( -\lambda _h + (I_h)_{ij}\right) ,\ C_{ij}\right) + x_j\right) \le x_i, \]

which is equivalent to

\[ \forall i,j\in [\![1,n]\!],\ h\in [\![1,q]\!],\quad \begin{cases} \lambda _h + (P_h)_{ij} + x_j \le x_i,\\ -\lambda _h + (I_h)_{ij} + x_j \le x_i,\\ C_{ij} + x_j \le x_i. \end{cases} \]

The system above consists of at most (Footnote 6) \((2q+1)n^2\) linear inequalities in \(n+q\) real unknowns \(x_1,\dots ,x_n,\lambda _1,\dots ,\lambda _q\). Therefore, the non-emptiness of its solution set can be checked in polynomial time using linear programming techniques.

2.4 Max-plus linear-dual inequalities

In the following, we define max-plus linear-dual inequalities (LDIs) from a purely formal point of view. Their application to describe the dynamics of P-time event graphs is discussed in the next section. Let \(A^0,A^1\in \mathbb {R}_{\text {max}}^{n\times n}\), \(B^0,B^1\in \mathbb {R}_{\text {min}}^{n\times {n}}\), and \(K\in \mathbb {N}\cup \{+\infty \}\). LDIs are systems of \((\oplus ,\otimes )\)- and \((\boxplus ,\boxtimes )\)-linear dynamical inequalities in the dater function \(x:[\![1,K]\!]\rightarrow \mathbb {R}^n\) of the form

\[ \begin{array}{ll} \forall k\in [\![1,K]\!], &{} A^0\otimes x(k)\le x(k)\le B^0\boxtimes x(k),\\ \forall k\in [\![1,K-1]\!], &{} A^1\otimes x(k)\le x(k+1)\le B^1\boxtimes x(k). \end{array} \tag{2} \]

A finite (when \(K\in \mathbb {N}\)) or infinite (when \(K=+\infty \)) trajectory \(\{x(k)\}_{k\in [\![1,K]\!]}\) of length K is consistent if it satisfies Eq. 2 for all k. It is often useful in practice to restrict attention to the simple class of 1-periodic trajectories, which are those of the form \(\{\lambda ^{k-1}x(1)\}_{k\in [\![1,K]\!]}\); in the standard algebra, 1-periodic trajectories are those that satisfy: \(\forall k\in [\![1,K]\!]\), \(i\in [\![1,n]\!]\), \(x_i(k) = (k-1)\times \lambda + x_i(1)\). The number \(\lambda \) is called the period or cycle time of the 1-periodic trajectory.
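To make the notion of consistency concrete, the sketch below checks a finite trajectory against LDIs. It assumes that Eq. 2 requires \(A^0\otimes x(k)\le x(k)\le B^0\boxtimes x(k)\) for every k and \(A^1\otimes x(k)\le x(k+1)\le B^1\boxtimes x(k)\) for every k but the last, which is the form consistent with the characteristic matrices defined in Sect. 3. The function names and the one-transition example (a single place with one token and time window [2, 3]) are illustrative assumptions.

```python
import math

NEG_INF, POS_INF = -math.inf, math.inf

def lower(A, x):
    # (A (x) x)_i = max_j (A_ij + x_j), with A in R_max and x finite.
    return [max(a + xj for a, xj in zip(row, x)) for row in A]

def upper(B, x):
    # (B [x] x)_i = min_j (B_ij + x_j), with B in R_min and x finite.
    return [min(b + xj for b, xj in zip(row, x)) for row in B]

def within(lo, x, hi):
    return all(l <= xi <= h for l, xi, h in zip(lo, x, hi))

def is_consistent(traj, A0, A1, B0, B1):
    # Check the assumed form of the LDIs along a finite trajectory.
    for k, x in enumerate(traj):
        if not within(lower(A0, x), x, upper(B0, x)):
            return False
        if k + 1 < len(traj) and not within(lower(A1, x), traj[k + 1], upper(B1, x)):
            return False
    return True

def one_periodic(x1, lam, K):
    # x(k) = (k - 1) * lam + x(1) for k = 1..K (index 0 holds x(1)).
    return [[xi + k * lam for xi in x1] for k in range(K)]

A0, B0 = [[NEG_INF]], [[POS_INF]]
A1, B1 = [[2.0]], [[3.0]]
print(is_consistent(one_periodic([0.0], 2.5, 5), A0, A1, B0, B1))  # True
print(is_consistent(one_periodic([0.0], 4.0, 5), A0, A1, B0, B1))  # False
```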

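In the following, we recall how to verify the existence of 1-periodic trajectories for given LDIs. Substituting in Eq. 2 the formula \(x(k) = \lambda ^{k-1} x(1)\), we obtain

\[ \begin{array}{l} A^0\otimes \lambda ^{k-1}x(1)\le \lambda ^{k-1}x(1)\le B^0\boxtimes \lambda ^{k-1}x(1),\\ A^1\otimes \lambda ^{k-1}x(1)\le \lambda ^{k}x(1)\le B^1\boxtimes \lambda ^{k-1}x(1), \end{array} \]

which, after multiplying left and right hand sides of the above inequalities by \((\lambda ^{k-1})^{-1}\) (Footnote 7), simplifies to

\[ \begin{array}{l} A^0\otimes x(1)\le x(1)\le B^0\boxtimes x(1),\\ A^1\otimes x(1)\le \lambda x(1)\le B^1\boxtimes x(1). \end{array} \tag{3} \]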

We recall the following result.

Proposition 2

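(Cuninghame-Green 1979) Let \(x,y\in \mathbb {R}^n\), \(A,B\in \mathbb {R}_{\max }^{n\times n}\). Then (Footnote 8)

\[ A\otimes x\le y \ \Leftrightarrow \ x\le A^\sharp \boxtimes y \]

and

\[ \left( A\otimes x\le x \ \text {and}\ B\otimes x\le x\right) \ \Leftrightarrow \ (A\oplus B)\otimes x\le x. \]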

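Because of Proposition 2 we can rewrite Eq. 3 as

\[ \left( \lambda B^{1\sharp }\oplus \lambda ^{-1}A^1\oplus A^0\oplus B^{0\sharp }\right) \otimes x(1)\le x(1), \]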

which, from Proposition 1, admits solution \(x(1)\in \mathbb {R}^n\) if and only if \(\mathcal {G}(\lambda B^{1\sharp } \oplus \lambda ^{-1} A^1 \oplus A^0\oplus B^{0\sharp })\in \Gamma \). Note that we obtained a PIC-NCP with matrices \(P = B^{1\sharp }\), \(I = A^1\), and \(C = A^0 \oplus B^{0\sharp }\). Therefore, a consistent 1-periodic trajectory exists if and only if \(\Lambda _{{}_{\text {NCP}}}(\lambda B^{1\sharp } \oplus \lambda ^{-1}A^1 \oplus A^0 \oplus B^{0\sharp }) = [\lambda _{\text {min}},\lambda _{\text {max}}]\cap \mathbb {R}\) is nonempty, where \(\lambda _{\text {min}}\) and \(\lambda _{\text {max}}\) can be found in time \(\mathcal {O}(n^4)\) using Algorithm 1.
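The condition above can be tested for one candidate value of \(\lambda \) at a time. The sketch below is not Algorithm 1 (which returns the whole interval); it only builds the matrix \(\lambda B^{1\sharp } \oplus \lambda ^{-1} A^1 \oplus A^0\oplus B^{0\sharp }\) for a given \(\lambda \) and checks, with a Floyd-Warshall-type recursion, that its precedence graph has no circuit of positive weight. All function names and the one-transition example with time window [2, 3] are assumptions made for illustration.

```python
import math

NEG_INF, POS_INF = -math.inf, math.inf

def sharp(B):
    # B^sharp = -B^T; entries equal to +inf become -inf.
    n = len(B)
    return [[-B[j][i] for j in range(n)] for i in range(n)]

def pic_matrix(lam, A0, A1, B0, B1):
    # lam * B1^sharp "plus" lam^-1 * A1 "plus" A0 "plus" B0^sharp, entrywise max.
    P, Q, n = sharp(B1), sharp(B0), len(A0)
    return [[max(lam + P[i][j], -lam + A1[i][j], A0[i][j], Q[i][j])
             for j in range(n)] for i in range(n)]

def no_positive_circuit(M):
    # Longest-path Floyd-Warshall; a positive diagonal entry reveals a bad circuit.
    n = len(M)
    S = [[max(M[i][j], 0.0 if i == j else NEG_INF) for j in range(n)]
         for i in range(n)]
    for k in range(n):
        for i in range(n):
            for j in range(n):
                S[i][j] = max(S[i][j], S[i][k] + S[k][j])
    return all(S[i][i] <= 0 for i in range(n))

def admits_period(lam, A0, A1, B0, B1):
    return no_positive_circuit(pic_matrix(lam, A0, A1, B0, B1))

A0, B0 = [[NEG_INF]], [[POS_INF]]
A1, B1 = [[2.0]], [[3.0]]
print([lam for lam in (1.5, 2.0, 2.5, 3.0, 3.5)
       if admits_period(lam, A0, A1, B0, B1)])  # [2.0, 2.5, 3.0]
```

In this example the admissible cycle times are exactly those in [2, 3], which matches the time window of the only place.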

3 P-time event graphs

Definition 1

(From Calvez et al. 1997) An ordinary (or unweighted) P-time Petri net (P-TPN) is a 5-tuple \((\mathcal {P},\mathcal {T},E,m,\iota )\), where \((\mathcal {P}\cup \mathcal {T},E)\) is a directed graph in which the set of nodes is partitioned into the set of places, \(\mathcal {P}\), and the set of transitions, \(\mathcal {T}\), the set of arcs, \(E\), is such that \(E\subseteq (\mathcal {P}\times \mathcal {T})\cup (\mathcal {T}\times \mathcal {P})\), \(m:\mathcal {P}\rightarrow \mathbb {N}_0\) is a map such that m(p) represents the number of tokens initially residing in place \(p\in \mathcal {P}\) (also called initial marking of p), and

\[ \iota :\mathcal {P}\rightarrow \{[\tau ^-,\tau ^+]\ |\ \tau ^-\in \mathbb {R}_{\ge 0},\ \tau ^+\in \mathbb {R}_{\ge 0}\cup \{+\infty \},\ \tau ^-\le \tau ^+\} \]

is a map that associates to every place \(p\in \mathcal {P}\) a time interval \(\iota (p)=[\tau ^-_p,\tau ^+_p]\).

In the following, we briefly describe the dynamics of an ordinary P-TPN (Footnote 9). A transition t is enabled when either it has no upstream places or each upstream place p of t contains at least one token which has resided in p for a time between \(\tau ^-_{p}\) and \(\tau ^+_{p}\) (extremes included). When transition t is enabled, it may fire; its firing causes one token to be removed instantaneously from each of the upstream places of t, and one token to be added, again instantaneously, to each of the downstream places of t. If a token sojourns more than \(\tau ^+_{p}\) time instants in a place p, then the token becomes dead.

A P-time event graph (P-TEG) is an ordinary P-TPN in which every place has exactly one upstream and one downstream transition. Let \(|\mathcal {T}|=n\) be the number of transitions in a P-TEG and let \(x:[\![1,K]\!]\rightarrow \mathbb {R}^n\) be a dater function of length \(K\in \mathbb {N}\cup \{+\infty \}\), i.e., a function such that \(x_i(k)\) represents the time at which transition \(t_i\) fires for the kth time. Since the \((k+1)\)st firing of any transition cannot occur before the kth, we require the dater to be a non-decreasing function, i.e., \(\forall i\in [\![1,n]\!]\), \(x_i(k+1)\ge x_i(k)\). The evolution of the marking in a P-TEG is entirely described by its corresponding dater trajectory \(\{x(k)\}_{k\in [\![1,K]\!]}\), and if a non-decreasing dater trajectory exists for which no token death occurs, then it is said to be consistent for the P-TEG. It is always possible to transform a P-TEG into an equivalent one whose places have at most 1 initial token each (Špaček and Komenda 2017). Therefore, in the following we will only focus on P-TEGs in which the initial marking m(p) is either 0 or 1 for each place \(p\in \mathcal {P}\). Under this assumption, a consistent trajectory for a given P-TEG must satisfy LDIs as in Eq. 2, where matrices \(A^0,A^1\in {\mathbb {R}}_{ \text{ max }}^{n\times n}\), \(B^0,B^1\in \mathbb {R}_{\text {min}}^{n\times n}\) are called characteristic matrices of the P-TEG, and are defined as follows. If there exists a place \(p_{ij}\) with initial marking \(\mu \in \{0,1\}\), upstream transition \(t_j\) and downstream transition \(t_i\), then \(A^\mu _{ij}=\tau ^-_{p_{ij}}\) and \(B^\mu _{ij}=\tau ^+_{p_{ij}}\); otherwise, \(A^\mu _{ij} = -\infty \) and \(B^\mu _{ij} = \infty \).
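The construction of the characteristic matrices from the places of a P-TEG can be sketched as follows. The tuple layout `(upstream, downstream, marking, tau_min, tau_max)`, the 0-based transition indices, and the function name are hypothetical choices for this example. If several places share the same pair of transitions and the same marking, the sketch intersects their time windows, which is an assumption not discussed in the text.

```python
import math

NEG_INF, POS_INF = -math.inf, math.inf

def characteristic_matrices(n, places):
    # places: iterable of (upstream j, downstream i, marking mu, tau_min, tau_max)
    A = [[[NEG_INF] * n for _ in range(n)] for _ in range(2)]
    B = [[[POS_INF] * n for _ in range(n)] for _ in range(2)]
    for j, i, mu, tau_min, tau_max in places:
        # Row = downstream transition, column = upstream transition.
        A[mu][i][j] = max(A[mu][i][j], tau_min)
        B[mu][i][j] = min(B[mu][i][j], tau_max)
    return A[0], A[1], B[0], B[1]

# Two transitions: a token-free place from t_1 to t_2 with window [1, 2],
# and a place from t_2 back to t_1 holding one token with window [0, +inf].
places = [(0, 1, 0, 1.0, 2.0), (1, 0, 1, 0.0, POS_INF)]
A0, A1, B0, B1 = characteristic_matrices(2, places)
print(A0)  # [[-inf, -inf], [1.0, -inf]]
print(B0)  # [[inf, inf], [2.0, inf]]
print(A1)  # [[-inf, 0.0], [-inf, -inf]]
print(B1)  # [[inf, inf], [inf, inf]]
```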

Fig. 2 Illustration of the heat treatment line of Example 1

Before introducing some structural properties of P-TEGs, it is useful to clarify the role of initial conditions for these dynamical systems.

3.1 Initial conditions

Depending on the P-TEG’s intended application, the restrictiveness of initial conditions may vary; in the following, we introduce two alternatives.

3.1.1 Loose initial conditions

Inequalities Eq. 2 suggest that x(1) can assume any value in \(\mathbb {R}^n\), as long as it satisfies

\[ A^0\otimes x(1)\le x(1)\le B^0\boxtimes x(1). \tag{4} \]

Note that Eq. 4 does not restrict the first firing time based on the arrival times of the initial tokens in the Petri net; the arrival times of initial tokens are, in fact, not even defined. For this reason, we say that the initial conditions of a P-TEG are loose if no restriction other than Eq. 4 applies to x(1).

P-TEGs with loose initial conditions evolve entirely according to LDIs, and are suitable to model manufacturing systems operating in periodic regime, after a transient period has passed (an example is given in Sect. 5.1). Another scenario where loose initial conditions may be convenient is when the time-window constraints need to be fulfilled only after the occurrence of the first events, as shown in the following example.

Example 1

(Heat treatment line) This example is adapted from Zorzenon et al. (2020). Consider a simple heat treatment line, schematically represented in Fig. 2, consisting of a furnace, which performs a heat treatment, and an autonomous guided vehicle that receives processed pieces and transports them to the next stage. Both the furnace and the vehicle have unitary capacity, i.e., they can process and transport one piece at a time, respectively. The heat treatment must last between 2 and 3 time units, and the autonomous vehicle takes (at least) 0.5 time units both to transport a processed piece to the next stage and to travel back to the furnace. Customer demand requires that the time difference between subsequent unloadings of processed pieces from the autonomous guided vehicle must not exceed 4 time units; the specification needs to be met for all pieces after the first one. Moreover, each piece must spend at least 6 time units in the processing line, from the moment it enters the furnace to the moment it is removed from the vehicle, in order to synchronize with other processing stages. Initially, the furnace is empty and the vehicle is waiting for a piece at the furnace. The P-TEG in Fig. 3 models the described plant; a firing of transitions \(t_1\), \(t_2\), and \(t_3\) represents, respectively, the arrival of an unprocessed piece in the furnace, the loading of a processed piece onto the autonomous guided vehicle, and the unloading of a piece from the vehicle.

The characteristic matrices of the P-TEG are

It is possible to verify that

is a consistent trajectory for the P-TEG under loose initial conditions. Observe that the first firing time of transition \(t_3\) does not violate the upper bound associated with place \(p_{33}\) (i.e., the place that is both upstream and downstream of transition \(t_3\)), even though \(x_3(1) = 6 > 4 = B_{33}^1\). Indeed, the sojourn time of the initial token in place \(p_{33}\) does not restrict the dynamics of the P-TEG. This is convenient from a practical point of view, as the constraint on the processing rate must be enforced only after the first piece leaves the plant.
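Consistency under loose initial conditions can be checked mechanically. The following Python sketch assumes that Eq. 2 has the element-wise reading \(x_j(k)+A^0_{ij}\le x_i(k)\le x_j(k)+B^0_{ij}\) and \(x_j(k)+A^1_{ij}\le x_i(k+1)\le x_j(k)+B^1_{ij}\). The matrix entries are our own reading of the plant description above, and the tested trajectory is a hypothetical one with period 4; neither is copied from the displayed equations of this example.

```python
import math

NEG, POS = -math.inf, math.inf
n = 3


def full(value):
    """Return an n x n matrix filled with `value`."""
    return [[value] * n for _ in range(n)]


# Entry [i][j] refers to the place going from transition t_{j+1} to t_{i+1}.
# Superscript 0: place without initial token; superscript 1: one initial token.
A0, B0, A1, B1 = full(NEG), full(POS), full(NEG), full(POS)
A0[1][0], B0[1][0] = 2.0, 3.0  # furnace: heat treatment lasts 2 to 3 units
A0[2][1] = 0.5                 # vehicle: transport takes at least 0.5 units
A0[2][0] = 6.0                 # each piece spends at least 6 units in the line
A1[1][2] = 0.5                 # vehicle travels back before the next loading
A1[0][1] = 0.0                 # furnace has unitary capacity
B1[2][2] = 4.0                 # at most 4 units between subsequent unloadings


def window_ok(x_from, x_to, low, up):
    """Check x_from[j] + low[i][j] <= x_to[i] <= x_from[j] + up[i][j]."""
    return all(
        x_from[j] + low[i][j] <= x_to[i] <= x_from[j] + up[i][j]
        for i in range(n)
        for j in range(n)
    )


def is_consistent(traj):
    """Check the assumed form of Eq. 2 along a finite trajectory."""
    same_k = all(window_ok(x, x, A0, B0) for x in traj)
    next_k = all(window_ok(x, y, A1, B1) for x, y in zip(traj, traj[1:]))
    return same_k and next_k


# Hypothetical trajectory: x(k) = [4(k-1), 4(k-1)+3, 4(k-1)+6].
trajectory = [[4.0 * k, 4.0 * k + 3.0, 4.0 * k + 6.0] for k in range(5)]
print(is_consistent(trajectory))
```

In this sketch the first firing of \(t_3\) occurs at time 6, which exceeds \(B^1_{33}=4\), yet the check succeeds because no inequality links \(x_3(1)\) to the arrival time of the initial token in \(p_{33}\).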

3.1.2 Strict initial conditions

For the considered application, it may be necessary to impose further restrictions on the initial conditions. Here we take into account the amount of time that initial tokens have resided in places prior to the initial time \(t_0\in \mathbb {R}\); we call this value the time tag of the token (see Footnote 10). Time tags can be useful, for instance, in manufacturing, to specify that some machines have already been processing a part since time \(t_0-\tau \), or in transportation, to indicate that a vehicle has left a station at time \(t_0-\tau \), for \(\tau \ge 0\).

Let \(\rho \) be a function that associates a time tag to every place with an initial token in a P-TEG. Formally, if there is a place \(p_{ij}\) with marking \(m(p_{ij}) = 1\), upstream transition \(t_j\), and downstream transition \(t_i\), then we denote \(\rho (p_{ij}) = \rho _{ij}\in \mathbb {R}_{\ge 0}\), otherwise \(\rho (p_{ij})\) is not defined. Then, in addition to Eq. 2, the first firing time of the transitions of the P-TEG must satisfy, for all \(i,j\in [\![1,n]\!]\),

for each i, j, the inequality specifies that the first firing time of transition \(t_i\) must not violate the time-window constraint \([A^1_{ij},B^1_{ij}]\) associated with place \(p_{ij}\), considering that the initial token of this place arrived at time \(t_0 - \rho _{ij}\). In the max-plus algebra, the latter inequalities can be expressed as

where

and

Note that other possible definitions for \(\underline{\Delta }\) and \(\overline{\Delta }\), corresponding to different requirements for the first firings of transitions, may be considered. In general, given any \(\underline{\Delta }\in \mathbb {R}_{\text {max}}^{n\times n}\), \(\overline{\Delta }\in \mathbb {R}_{\text {min}}^{n\times n}\) such that \((\underline{\Delta },\overline{\Delta }) \ne (\mathcal {E},\mathcal {T})\), inequality Eq. 5 restricts the set of consistent trajectories for a P-TEG; hence, we say that the initial conditions of a P-TEG are strict if x(1) is required to satisfy them for some \((\underline{\Delta },\overline{\Delta })\ne (\mathcal {E},\mathcal {T})\). We will refer to consistent trajectories with either loose or strict initial conditions depending on whether they satisfy only Eq. 2 or also Eq. 5. Note that, without loss of generality, we can assume that \(t_0=0\), as P-TEGs (with either loose or strict initial conditions) are time-invariant systems, i.e., if \(\{x(k)\}_{k\in [\![1,K]\!]}\) is a consistent trajectory, then \(\{t_0 \otimes x(k)\}_{k\in [\![1,K]\!]}\) is consistent as well for any \(t_0\in \mathbb {R}\). In other words, the choice of the initial time \(t_0\) does not affect the dynamics of P-TEGs.

Example 2

(Heat treatment line, cont.) Consider again the P-TEG of Fig. 3. It is not difficult to see that, if we assign a time tag to each initial token of the P-TEG, no consistent trajectory that satisfies strict initial conditions can be found; indeed, it is not possible to fire transition \(t_3\) before time \(4-\rho _{33}\), for any time tag \(\rho _{33}\in \mathbb {R}_{\ge 0}\). So, let us modify the configuration of the initial tokens as in Fig. 4; time tags are indicated in the figure.

The interpretation is that:

- a piece is inside the heat treatment line since time \(t_0 - 3\), as \(\rho _{31} = 3\),

- an autonomous guided vehicle is at the unloading location with a processed piece from time \(t_0\), as \(\rho _{32} = 0.5 = A^1_{32}\),

- the furnace has completed the last heat treatment at time \(t_0 - 0.5\), as \(\rho _{12} = 0.5\), and

- the first processed piece is required to leave the heat treatment plant before time \(t_0 + 3\), as \(\rho _{33} = 1\).
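The bounds that these time tags impose on the first firing times can be computed directly from the rule stated above: the initial token of place \(p_{ij}\) arrived at time \(t_0-\rho _{ij}\), so \(t_0-\rho _{ij}+A^1_{ij}\le x_i(1)\le t_0-\rho _{ij}+B^1_{ij}\). The Python sketch below applies this rule; the time tags are those listed above, whereas the time windows are an assumption carried over from the description in Example 1 (they are not read from the matrices of this example). Only the constraints induced by time tags are evaluated, not the remaining inequalities of Eq. 2.

```python
import math

# Places with an initial token, keyed by (i, j): upstream transition t_j,
# downstream transition t_i. Each value is (time tag, lower bound, upper bound).
# The windows are assumptions based on the plant description of Example 1.
tagged_places = {
    (3, 1): (3.0, 6.0, math.inf),   # piece inside the line since t0 - 3
    (3, 2): (0.5, 0.5, math.inf),   # vehicle reaches the unloading location at t0
    (1, 2): (0.5, 0.0, math.inf),   # last heat treatment completed at t0 - 0.5
    (3, 3): (1.0, -math.inf, 4.0),  # token of p_33 arrived at t0 - 1
}


def first_firing_windows(places, t0=0.0, n=3):
    """Return, for each transition i, the interval allowed for x_i(1)."""
    lower = {i: -math.inf for i in range(1, n + 1)}
    upper = {i: math.inf for i in range(1, n + 1)}
    for (i, _j), (tag, low, up) in places.items():
        arrival = t0 - tag  # arrival time of the initial token
        lower[i] = max(lower[i], arrival + low)
        upper[i] = min(upper[i], arrival + up)
    return {i: (lower[i], upper[i]) for i in lower}


windows = first_firing_windows(tagged_places)
print(windows)
```

Under these assumptions, the window obtained for \(x_3(1)\) with \(t_0=0\) collapses to the single value 3: the lower bound comes from \(\rho _{31}\) and the 6-unit minimum residence time, the upper bound from \(\rho _{33}\) and the 4-unit limit between unloadings. Shifting \(t_0\) shifts every window by the same amount, in agreement with time invariance.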

The characteristic matrices for this example are

and the matrices \(\underline{\Delta }\) and \(\overline{\Delta }\) are

Assuming that \(t_0 = 0\), the following is a consistent trajectory for the P-TEG under strict initial conditions:

Despite their usefulness in applications, strict initial conditions present an additional complexity: the inequalities describing the dynamics of P-TEGs with strict initial conditions are not (pure) LDIs. This means that mathematical results in LDIs can be directly applied to P-TEGs with loose but not with strict initial conditions; this will be made evident in the following section. In Sect. 4.2, we will see that the dynamics of P-TEGs with strict initial conditions falls in the category of switched LDIs.

3.2 Structural properties

In this section we recall the definition of some structural properties of P-TEGs. These properties can be equivalently stated for P-TEGs under loose or strict initial conditions.

A P-TEG is said to be consistent if it admits a consistent, non-decreasing trajectory \(\{x(k)\}_{k\in \mathbb {N}}\) of infinite length. The non-decreasingness of the dater trajectory, equivalent to having \(x(k+1)\ge x(k)\) for all \(k\in [\![1,K-1]\!]\), is a natural requirement, as the \((k+1)\)st firing of a transition cannot occur before the kth one; by Proposition 2, to guarantee that this condition is always satisfied, it is sufficient to replace matrix \(A^1\) with \(A^1 \oplus E_\otimes \).
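The effect of replacing \(A^1\) with \(A^1\oplus E_\otimes \) can be illustrated numerically. The Python sketch below assumes that \(E_\otimes \) denotes the max-plus identity matrix (0 on the diagonal, \(-\infty \) elsewhere) and that \(\oplus \) is the entry-wise maximum; the matrix \(A^1\) and the vector x used here are hypothetical.

```python
import math

NEG = -math.inf


def max_plus_identity(n):
    """Max-plus identity: 0 on the diagonal, -inf elsewhere."""
    return [[0.0 if i == j else NEG for j in range(n)] for i in range(n)]


def oplus(X, Y):
    """Max-plus matrix addition, i.e., entry-wise maximum."""
    return [[max(a, b) for a, b in zip(rx, ry)] for rx, ry in zip(X, Y)]


def otimes_vec(M, x):
    """Max-plus matrix-vector product: (M x)_i = max_j (M_ij + x_j)."""
    return [max(m + v for m, v in zip(row, x)) for row in M]


# Hypothetical matrix: the only constraint is x_1(k+1) >= x_2(k) + 2.
A1 = [[NEG, 2.0], [NEG, NEG]]
A1_nd = oplus(A1, max_plus_identity(2))

x = [1.0, 3.0]
print(otimes_vec(A1, x))     # lower bound on x(k+1) without the identity
print(otimes_vec(A1_nd, x))  # lower bound on x(k+1) with the identity
```

With the modified matrix, the lower bound on \(x(k+1)\) is never smaller than \(x(k)\) itself, since the diagonal zeros contribute the terms \(x_i(k)+0\) to each maximum.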

We say that a trajectory \(\{x(k)\}_{k\in \mathbb {N}}\) is delay-bounded if there exists a positive real number M such that, for all \(i,j\in [\![1,n]\!]\) and for all \(k\in \mathbb {N}\), \(x_i(k)-x_j(k)<M\); a P-TEG admitting a consistent delay-bounded trajectory of the dater function is said to be boundedly consistent. Although in consistent P-TEGs it is possible to find a marking evolution such that no time-window constraint is violated, if the stronger property of bounded consistency does not hold, any consistent, infinite trajectory will accumulate unbounded delay between the firing times of two distinct transitions. This phenomenon is usually not desirable in manufacturing systems represented by P-TEGs, where the firings of transitions represent the start or end of processes, and the kth product entering the system is finished when all transitions have fired for the kth time. Indeed, in this context it implies that the total time the kth product spends in the manufacturing system increases without bound with k.

Analogously to LDIs, in P-TEGs we say that dater trajectories of the form \(\{\lambda ^{k-1}x(1)\}_{k\in [\![1,K]\!]}\) are 1-periodic with period \(\lambda \in \mathbb {R}_{\ge 0}\). Clearly, in P-TEGs with loose initial conditions, 1-periodic trajectories can be found in time complexity \(\mathcal {O}(n^4)\) using Algorithm 1, as their evolution satisfies LDIs. To our knowledge, no algorithm that checks whether a P-TEG is consistent has been found until now; on the other hand, bounded consistency of P-TEGs with loose initial conditions can be verified in time \(\mathcal {O}(n^4)\). This fact comes from the following result.

Theorem 3

(Zorzenon et al. 2020) A P-TEG with loose initial conditions is boundedly consistent if and only if it admits a consistent 1-periodic trajectory.

On the other hand, an analogous result for the case with strict initial conditions does not hold: boundedly consistent P-TEGs with strict initial conditions may admit no 1-periodic trajectory, as shown in the following example.

Example 3

Using an algorithm that will be presented in Sect. 4.2, it can be shown that the P-TEG with strict initial conditions in Fig. 5 admits no 1-periodic trajectory. However, assuming that \(t_0 = 0\), it admits the following delay-bounded (2-periodic) trajectory:

Therefore, it is boundedly consistent.

The following example illustrates the discussed properties in the case of P-TEGs with loose initial conditions.

Example 4

Consider the P-TEG represented in Fig. 6, in which time windows are parametrized with respect to label \(\textsf{z}\); in Table 1, values of time windows are given for \(\textsf{z}\in \{\mathsf {\MakeLowercase {A}},\mathsf {\MakeLowercase {B}},\mathsf {\MakeLowercase {C}}\}\). The matrices characterizing the P-TEG labeled \(\textsf{z}\) are:

We analyze structural properties of the P-TEGs under loose initial conditions.

Since lower and upper bounds for the sojourn times of the two places with an initial token coincide, once the vector of first firing times \(x_\textsf{z}(1)\) is chosen (such that the first inequality in Eq. 2 is satisfied for \(k=1\), i.e., \(x_{\textsf{z},2}(1)\ge x_{\textsf{z},1}(1)\)), the only infinite trajectory \(\{x_\textsf{z}(k)\}_{k\in \mathbb {N}}\) that is a candidate to be consistent for the P-TEG labeled \(\textsf{z}\) is deterministically given by

However, for the case \(\textsf{z}=\mathsf {\MakeLowercase {A}}\) it is easy to see that, for any valid choice of the vector of first firing times, the candidate trajectory \(\{x_\mathsf {\MakeLowercase {A}}(k)\}_{k\in \mathbb {N}}\) is not consistent (as for a sufficiently large k, \(x_{\mathsf {\MakeLowercase {A}},2}(k) < x_{\mathsf {\MakeLowercase {A}},1}(k)\), i.e., the first inequality of Eq. 2 is violated). For \(\textsf{z}=\mathsf {\MakeLowercase {B}}\), candidate trajectories \(\{x_\mathsf {\MakeLowercase {B}}(k)\}_{k\in \mathbb {N}}\), despite being consistent, are not delay-bounded and result in the infinite accumulation of tokens in the place between \(t_1\) and \(t_2\) for \(k\rightarrow \infty \). On the other hand, \(\{x_\mathsf {\MakeLowercase {C}}(k)\}_{k\in \mathbb {N}}\) is consistent and delay-bounded (in fact, it is 1-periodic with period 1). Thus we can conclude that the P-TEG labeled \(\mathsf {\MakeLowercase {A}}\) is not consistent, the one labeled \(\mathsf {\MakeLowercase {B}}\) is consistent but not boundedly consistent, and the one labeled \(\mathsf {\MakeLowercase {C}}\) is boundedly consistent. Of course, we would have reached the same conclusion regarding bounded consistency by using Theorem 3.
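The three behaviors can be reproduced with a short simulation. The Python sketch below assumes the structure suggested by the discussion above: each of the two transitions has a self-loop place with one initial token and a deterministic sojourn time \(d_i\), so that \(x_i(k+1)=x_i(k)+d_i\), and the first inequality of Eq. 2 requires \(x_2(k)\ge x_1(k)\). The sojourn times are hypothetical values chosen to match the qualitative behavior described for each label; they are not taken from Table 1. The classification relies on a finite horizon and is therefore only a heuristic illustration.

```python
def classify(d1, d2, x_first=(0.0, 0.0), horizon=1000):
    """Classify the unique candidate trajectory x_i(k+1) = x_i(k) + d_i."""
    x = list(x_first)
    gaps = []
    for _ in range(horizon):
        if x[1] < x[0]:
            # The constraint x_2(k) >= x_1(k) is violated.
            return "not consistent"
        gaps.append(x[1] - x[0])
        x = [x[0] + d1, x[1] + d2]
    if gaps[-1] == gaps[0]:
        return "delay-bounded"
    return "consistent, unbounded delay"


# Hypothetical sojourn times (d1, d2) for the three labels.
hypothetical = {"a": (2.0, 1.0), "b": (1.0, 2.0), "c": (1.0, 1.0)}
for label, (d1, d2) in hypothetical.items():
    print(label, classify(d1, d2))
```

With these values, the difference \(x_2(k)-x_1(k)\) decreases by one unit per firing for label a, increases by one unit per firing for label b, and stays constant for label c.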

4 Switched max-plus linear-dual inequalities

This section introduces the class of dynamical systems called switched max-plus linear-dual inequalities (SLDIs), and demonstrates its usefulness by means of simple examples. In Sect. 4.2, the relationship between SLDIs and P-TEGs with strict initial conditions is examined. Methods to efficiently verify the existence of specific trajectories are then presented in Sects. 4.3 and 4.4.

4.1 Mathematical description

We start by defining switched LDIs (SLDIs) as the natural extension of LDIs in which matrices \(A^0,A^1,B^0,B^1\) may be different for all k. Formally, SLDIs are a 5-tuple \(\mathcal {S}=(\Sigma ,A^0,A^1,B^0,B^1)\), where \(\Sigma =\{\mathsf {\MakeLowercase {A}}_1,\ldots ,\mathsf {\MakeLowercase {A}}_m\}\) is a finite alphabet whose symbols are called modes, and \(A^0,A^1:\Sigma \rightarrow \mathbb {R}_{\text {max}}^{n\times n}\), \(B^0,B^1:\Sigma \rightarrow \mathbb {R}_{\text {min}}^{n\times n}\) are functions that associate a matrix to each mode of \(\Sigma \); for the sake of simplicity, given a mode \(\textsf{z}\in \Sigma \), we will write \(A^0_\textsf{z},A^1_\textsf{z},B^0_\textsf{z},B^1_\textsf{z}\) in place of \(A^0(\textsf{z}),A^1(\textsf{z}),B^0(\textsf{z}),B^1(\textsf{z})\), respectively. We denote by \(\Sigma ^*\) and \(\Sigma ^\omega \) the sets of finite and infinite concatenations of modes from \(\Sigma \), respectively. A schedule w is an element of \(\Sigma ^* \cup \Sigma ^\omega \), i.e., it is either a finite or an infinite sequence of modes \(w = w_1w_2\ldots w_{K}\) with \(w_k\in \Sigma \) for all \(k\in [\![1,K]\!]\), where \(K\in \mathbb {N}\cup \{+\infty \}\) denotes the length of schedule w.

The dynamics of SLDIs \(\mathcal {S}\) under schedule \(w\in \Sigma ^*\cup \Sigma ^\omega \) is expressed by the following system of inequalities:

where function \(x:[\![1,K]\!]\rightarrow \mathbb {R}^n\) is called the dater of \(\mathcal {S}\) associated with schedule w. Term \(x_i(k)\) represents the occurrence time of event i associated with mode \(w_{k}\) (see Footnote 11). Similar to P-TEGs, it is natural to assume the following non-decreasingness condition for the dater of SLDIs: for all \(k,h\in [\![1,K]\!]\), \(h>k\), such that \(w_k = w_h\), \(x(h) \ge x(k)\). The implication is that events occurring during a later execution of a mode cannot occur before events that took place during an earlier execution of that mode. Note that this does not imply that x(k) is non-decreasing over k; this stronger condition would indeed unnecessarily limit the modeling expressiveness of SLDIs, as illustrated by the dater trajectory in Example 6.

For convenience, given a finite sequence of modes \(v=v_1v_2\ldots v_V\in \Sigma ^*\) of length \(V\in \mathbb {N}\) and a number \(K\in \mathbb {N}\), in the remainder of the paper we will denote by \(v^K\in \Sigma ^*\) the sequence of length \(V \cdot K\) formed by concatenating sequence v with itself K times, i.e.,

congruently, if \(K = +\infty \), \(v^K\in \Sigma ^\omega \) denotes an infinite concatenation of sequence v.

We now show possible applications of SLDIs with two simple examples.

Example 5

(Heat treatment line, cont.) Consider again the heat treatment line of Example 1. Now, suppose that two types of parts can be processed in the system: part \(\mathsf {\MakeLowercase {A}}\) and part \(\mathsf {\MakeLowercase {B}}\); in this example, a schedule \(w\in \Sigma ^*\cup \Sigma ^\omega \) represents the entrance order of parts in the heat treatment line. As illustrated in Fig. 7, the two parts require different heating times; pieces of type \(\mathsf {\MakeLowercase {A}}\) must be heated in the furnace for a time between 2 and 3 time units (as in Example 1), whereas pieces of type \(\mathsf {\MakeLowercase {B}}\) between 3 and 4 time units. Moreover, the processing rate requirement changes depending on the type \(w_k\in \{\mathsf {\MakeLowercase {A}},\mathsf {\MakeLowercase {B}}\}\) of the kth part entering the plant: the \((k+1)\)st part must be unloaded from the autonomous guided vehicle at most 4 time units after the kth one if \(w_k=\mathsf {\MakeLowercase {A}}\), and at most 5 time units later if \(w_k=\mathsf {\MakeLowercase {B}}\). As in Example 1, we consider loose initial conditions, i.e., we suppose that the processing rate requirement needs to hold for all pieces after the first one.

Illustration of the multi-product heat treatment line of Example 5

P-TEG representing the heat treatment line if only parts of type \(\mathsf {\MakeLowercase {B}}\) were to be processed. The case where only parts of type \(\mathsf {\MakeLowercase {A}}\) are to be processed is shown in Fig. 3

This system can be modeled by SLDIs \(\mathcal {S} = (\Sigma ,A^0,A^1,B^0,B^1)\), where \(\Sigma = \{\mathsf {\MakeLowercase {A}},\mathsf {\MakeLowercase {B}}\}\),

Clearly, if \(w = \mathsf {\MakeLowercase {A}}\mathsf {\MakeLowercase {A}}\mathsf {\MakeLowercase {A}}\ldots = \mathsf {\MakeLowercase {A}}^K\) is a concatenation of only mode \(\mathsf {\MakeLowercase {A}}\), the dynamics of the system can be described by the P-TEG of Fig. 3; similarly, if \(w = \mathsf {\MakeLowercase {B}}^K\), then the SLDIs are equivalent to the dynamics of the P-TEG of Fig. 8.

In this simple example, the following trajectory satisfies all the time-window constraints, for any schedule \(w\in \{\mathsf {\MakeLowercase {A}},\mathsf {\MakeLowercase {B}}\}^*\cup \{\mathsf {\MakeLowercase {A}},\mathsf {\MakeLowercase {B}}\}^\omega \):

The example shows that SLDIs are capable of modeling flow shops with time-window constraints, i.e., manufacturing systems where different jobs (in this case, parts) are processed in each machine (the furnace and the autonomous guided vehicle) of the system in the same order.
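To make the switching mechanism concrete, the following Python sketch checks a candidate trajectory against every schedule of a given length. It assumes that Eq. 6 applies the matrices of mode \(w_k\) both to the constraints on \(x(k)\) and to those linking \(x(k)\) with \(x(k+1)\), in the element-wise form \(x_j(k)+A_{ij}\le x_i(\cdot )\le x_j(k)+B_{ij}\). The matrix entries are our reading of the description of the two part types, and the trajectory is a hypothetical one; neither is copied from the displayed equations of this example.

```python
import itertools
import math

NEG, POS = -math.inf, math.inf
n = 3


def mode_matrices(heat_min, heat_max, max_unload_gap):
    """Build (A0, B0, A1, B1) for one part type (assumed plant layout)."""
    A0 = [[NEG] * n for _ in range(n)]
    B0 = [[POS] * n for _ in range(n)]
    A1 = [[NEG] * n for _ in range(n)]
    B1 = [[POS] * n for _ in range(n)]
    A0[1][0], B0[1][0] = heat_min, heat_max  # heating window of this part type
    A0[2][1] = 0.5                           # transport to the next stage
    A0[2][0] = 6.0                           # minimum time spent in the line
    A1[1][2] = 0.5                           # vehicle travels back to the furnace
    A1[0][1] = 0.0                           # furnace has unitary capacity
    B1[2][2] = max_unload_gap                # processing rate requirement
    return A0, B0, A1, B1


modes = {"a": mode_matrices(2.0, 3.0, 4.0), "b": mode_matrices(3.0, 4.0, 5.0)}


def window_ok(x_from, x_to, low, up):
    return all(
        x_from[j] + low[i][j] <= x_to[i] <= x_from[j] + up[i][j]
        for i in range(n)
        for j in range(n)
    )


def is_consistent(traj, schedule):
    """Check the assumed form of Eq. 6 for a trajectory under a schedule."""
    for k, mode in enumerate(schedule):
        A0, B0, A1, B1 = modes[mode]
        if not window_ok(traj[k], traj[k], A0, B0):
            return False
        if k + 1 < len(schedule) and not window_ok(traj[k], traj[k + 1], A1, B1):
            return False
    return True


K = 4
# Hypothetical trajectory: x(k) = [4(k-1), 4(k-1)+3, 4(k-1)+6].
trajectory = [[4.0 * k, 4.0 * k + 3.0, 4.0 * k + 6.0] for k in range(K)]
results = {
    "".join(w): is_consistent(trajectory, w)
    for w in itertools.product("ab", repeat=K)
}
print(all(results.values()))
```

The hypothetical trajectory works for every schedule because a heating time of exactly 3 units lies in both heating windows and a period of 4 units satisfies both processing rate requirements. A slower trajectory with period 5 would instead be accepted only when no part of type a is followed by another part.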

Example 6

(Starving philosophers problem) We present a variant of the famous dining philosophers problem, which we call the starving philosophers problem. There are \(p\in \mathbb {N}\) philosophers sitting at a table eating spaghetti; on the table, there are p chopsticks, and each philosopher \(i\in [\![1,p-1]\!]\) needs both the ith and the \((i+1)\)st chopstick to start eating, whereas the pth philosopher needs the pth and the 1st chopstick. The ith philosopher takes \(c_{ij}\) time units to grab the jth chopstick and \(e_i\) time units to eat; philosophers are allowed to grab two chopsticks at the same time and are not forced to stop eating after \(e_i\) time units. After eating, a philosopher instantaneously puts the chopsticks on the table, so that they can be used by other philosophers. In our version of the problem, dining can take a "macabre" turn: if the ith philosopher does not eat for more than \(s_i\) time units, s/he will starve. The objective of the problem is to find a dining order such that no philosopher starves.

The problem is a metaphor for an issue encountered in concurrent programming, namely, resource starvation. Consider p critical processes (the philosophers) that need to access some shared resources (the chopsticks); for safety reasons, it might be desirable to prevent some processes from not receiving the requested resources for too long. Thus, a scheduling plan should guarantee safe operation by granting each process the permission to access the resources at the right time.

Here we suppose that there are \(p = 4\) philosophers at the table, and that the following periodic dining order is imposed: initially, the second and the fourth philosophers eat (they can do so concurrently, as they do not need to share chopsticks); after that, the first philosopher eats once while, in the meantime, the third philosopher eats twice in a row; finally, the eating order repeats from the beginning. The order in which philosophers eat before repeating the periodic sequence is referred to as the dining cycle.

Since the considered dining order is periodic, it is possible to describe all trajectories that are valid for the system by means of a P-TEG (with time tags, if we suppose that the ith philosopher should start eating for the first time before time \(t_0+s_i\) for all \(i\in [\![1,p]\!]\)). The P-TEG for this example is shown in Fig. 9, where a firing of transitions \(t_{s,i}\) and \(t_{f,i}\) represents that the ith philosopher has started and finished eating for the first time in a dining cycle, and a firing of transitions \(t_{s,3}'\) and \(t_{f,3}'\) indicates that the third philosopher has started and finished eating for the second time in a dining cycle, respectively. Note that the dimension of the dater function for this problem increases not only with the number of philosophers, but also with the number of times a philosopher eats in a dining cycle; furthermore, observe that P-TEGs can represent infinite eating orders only if they are periodic.

P-TEG for the starving philosophers problem. Depending on the color of the place that contains it (black or one of two further colors), a token represents, respectively, a philosopher eating, a philosopher waiting to eat, or a chopstick being grabbed. The time tag associated with each place with an initial token is 0. Note that a token in the place with upstream transition \(t_{f,3}\) and downstream transition \(t_{s,3}'\) indicates that the third philosopher is both grabbing the third and the fourth chopstick and waiting to eat, hence the double color of this place

The same system can be represented more compactly by SLDIs. Let \(\Sigma = \{\textsf{i},\mathsf {\MakeLowercase {P}}_1,\mathsf {\MakeLowercase {P}}_2,\mathsf {\MakeLowercase {P}}_3,\mathsf {\MakeLowercase {P}}_4\}\) be the alphabet associated with the SLDIs, where \(\textsf{i}\) is the auxiliary initial mode, which will be used to impose strict initial conditions on the system (strict initial conditions are analyzed in more depth in Sect. 4.2), and \(\mathsf {\MakeLowercase {P}}_i\) corresponds to the ith philosopher. A meaningful schedule for this system is any sequence \(w\in \Sigma ^{K}\) such that \(w_1 = \textsf{i}\) and, for all \(k\in [\![2,K]\!]\), \(w_k = \mathsf {\MakeLowercase {P}}_{i_k}\) for some \(i_k\in [\![1,p]\!]\). Whereas the first mode \(w_1 = \textsf{i}\) does not have physical interpretation besides mathematically characterizing the initial conditions for the system, for all \(k\in [\![2,K]\!]\), \(w_k\) describes the eating order of philosophers. The interpretation of schedule w is as follows; consider \(k\in [\![2,K]\!]\), \(w_k = \mathsf {\MakeLowercase {P}}_i\) and \(w_{k+1} = \mathsf {\MakeLowercase {P}}_j\):

- if the ith and jth philosophers require access to the same chopstick, then philosopher i will eat once before philosopher j;

- otherwise, the ith and jth philosophers will eat independently of each other, i.e., philosopher i will start (and finish) eating either before or after or at the same time as philosopher j. In this case schedules \(u w_k w_{k+1} v\) and \(u w_{k+1} w_k v\) are representative of the same behavior of the system (see Footnote 12), for \(u\in \Sigma ^*\) and \(v\in \Sigma ^*\cup \Sigma ^\omega \).

A possible schedule corresponding to the chosen dining order is then given by

We will design \(A^0,A^1,B^0,B^1\) such that any dater function \(x(k)\in \mathbb {R}^{p+1} = \mathbb {R}^5\) satisfying Eq. 6 assumes the following interpretation, from which the evolution of the system can be obtained: for all \(k\in [\![2,K]\!]\), if \(w_k = \mathsf {\MakeLowercase {P}}_i\), then \(x_i(k)\) and \(x_5(k)\) represent, respectively, the time at which the ith philosopher starts and finishes eating; therefore, assuming \(w_{k+1} = \mathsf {\MakeLowercase {P}}_j\), if both the ith and the jth philosophers require access to the hth chopstick, then \(x_i(k)+e_i+c_{jh} \le x_j(k+1)\) (sequential behavior), otherwise, if they do not need to share chopsticks, \(x_i(k)\) could also be greater than \(x_j(k+1)\) (concurrent behavior). For all philosophers \(i\in [\![1,4]\!]\) such that \(w_k\ne \mathsf {\MakeLowercase {P}}_i\), \(x_i(k)\) is an auxiliary variable that stores the time at which the ith philosopher will eat next. For all \(i\in [\![1,5]\!]\), element \(x_i(1)\) will be assigned to the initial time \(t_0\), in order to manage the initial conditions (for more details, see Sect. 4.2).

For example, consider a value of \(k\in [\![2,K-1]\!]\) such that \(w_k = \mathsf {\MakeLowercase {P}}_1\); with the above interpretation, in order to represent the dynamics of the system, the dater function must satisfy

where Eq. 7a imposes the time between starting and finishing eating for the 1st philosopher, Eq. 7b forces the 1st philosopher to start eating again only after s/he has grabbed the 1st and 2nd chopsticks once more, but before starving, Eqs. 7c and 7d force the 2nd and 4th philosophers to start eating only after grabbing the chopsticks left by the 1st philosopher, and Eqs. 7e–7g are auxiliary constraints that impose \(x_i(k+1) = x_i(k)\) for all philosophers \(i\in [\![2,4]\!]\). Finally, for the initial condition, we want to impose that

to make sure that philosophers start eating for the first time after grabbing the chopsticks and before starving.

In order to get the above interpretation for the dater function, the matrices for the initial mode \(\textsf{i}\) can be defined as

For mode \(\mathsf {\MakeLowercase {P}}_1\) we define

for the sake of brevity, we leave it to the reader to derive the matrices for modes \(\mathsf {\MakeLowercase {P}}_2,\mathsf {\MakeLowercase {P}}_3,\mathsf {\MakeLowercase {P}}_4\).

Gantt chart of a possible trajectory for the starving philosophers problem. Opaque bars indicate either that a chopstick is being grabbed or that a philosopher is eating; transparent bars represent chopsticks being used by a philosopher to eat. Different colors correspond to different philosophers. The dashed line indicates the period of the trajectory

We consider the following numerical parameters:

The Gantt chart of Fig. 10 represents a valid trajectory for the P-TEG of Fig. 9 (see Footnote 13) and for the SLDIs defined above, supposing that the initial time \(t_0\) is equal to 0.

The first 5 elements of the dater trajectory for the SLDIs are:

It is worth noting that, differently from P-TEGs, the dater function of SLDIs does not need to be non-decreasing: for instance, in this example we have

as the time at which the first philosopher (\(w_4 = \mathsf {\MakeLowercase {P}}_1\)) stops eating for the first time is later than the time at which the third philosopher (\(w_5 = \mathsf {\MakeLowercase {P}}_3\)) stops eating for the first time.

The three main advantages of using SLDIs instead of P-TEGs for this problem are the following:

1. higher computational efficiency: the dater function for the SLDIs has smaller dimension compared to the P-TEG. As we shall see, this corresponds to lower computational complexity for analyzing trajectories of the system;

2. lower modeling effort: the P-TEG in Fig. 9 can only represent the dining order specified above; to analyze a different dining order, a new P-TEG needs to be provided. On the other hand, for the SLDIs different dining orders simply correspond to different schedules w;

3. larger modeling expressiveness: only SLDIs are able to represent dining orders that are not periodic (with a dater function of finite dimension).

When schedule w is fixed, we can extend the definition of some properties of P-TEGs to SLDIs in a natural way. For instance, if there exists a trajectory of the dater \(\{x(k)\}_{k\in [\![1,K]\!]}\) that satisfies Eq. 6, then the trajectory is consistent for the SLDIs under schedule w, and we say that w is a consistent schedule for the SLDIs (or that the SLDIs are consistent under schedule w). Note that, different from the simple case of Example 5, there are SLDIs for which not all schedules admit consistent trajectories.

The definitions of delay-bounded trajectory and bounded consistency are generalized to SLDIs in a similar fashion. The interpretation of bounded consistency of a schedule w is analogous to the one of P-TEGs; when a process consisting of several tasks (the start and end of which are represented by events) is modeled by SLDIs under a schedule w that is not boundedly consistent, then either the execution of every possible sequence of tasks following w will lead to the violation of some time window constraints (if w is not even consistent), or we will certainly observe an infinite accumulation of delay between the start or end of some tasks (if the only consistent trajectories are not delay-bounded).

4.2 SLDIs and P-TEGs with strict initial conditions

As discussed in Section 3.1.2, the dynamics of P-TEGs with strict initial conditions are not pure LDIs. In this subsection, we prove that they can be expressed by means of SLDIs under specific types of schedules, with the immediate consequence that any property of SLDIs also holds for P-TEGs with strict initial conditions.

We want to prove that inequalities

can be written as SLDIs. For this aim, let us define an auxiliary variable \(x(0)\in \mathbb {R}^n\). Note that the first inequality of Eq. 8 is equivalent to

where \(\mathbb {E}_{ij} = 0\) for all \(i,j\in [\![1,n]\!]\). Indeed, the first of the latter inequalities can be rewritten as

which admits as solution all x(0) that satisfy \(x_i(0) = x_j(0)\) for all \(i,j\in [\![1,n]\!]\); therefore, solutions can be parametrized in \(t_0\in \mathbb {R}\) as \(x(0) = t_0 \tilde{e}\). Recalling that P-TEGs (as well as SLDIs) are time-invariant systems (see Section 3.1.2), this proves that Eq. 8 is equivalent to SLDIs \(\mathcal {S} = (\{\textsf{i},\mathsf {\MakeLowercase {A}}\},A^0,A^1,B^0,B^1)\) under schedule \(w = \textsf{i}\mathsf {\MakeLowercase {A}}\mathsf {\MakeLowercase {A}}\mathsf {\MakeLowercase {A}}\mathsf {\MakeLowercase {A}}\ldots = \textsf{i}\mathsf {\MakeLowercase {A}}^{K}\) (i.e., mode \(\textsf{i}\) followed by a sequence of K modes \(\mathsf {\MakeLowercase {A}}\)), where

The following result is an immediate consequence of this fact.

Proposition 4

The P-TEG characterized by matrices \(A^0,A^1,B^0,B^1\) under strict initial conditions determined by matrices \(\underline{\Delta }\) and \(\overline{\Delta }\) is (boundedly) consistent if and only if schedule \(w = \textsf{i}\mathsf {\MakeLowercase {A}}^{+\infty }\) is (boundedly) consistent for the SLDIs \(\mathcal {S}\) defined as above.

Although Proposition 4 alone does not answer the question of how to verify (bounded) consistency of P-TEGs with strict initial conditions (see Footnote 14), it suggests that any result regarding SLDIs under schedules of the form \(w = \textsf{i}\mathsf {\MakeLowercase {A}}^{K}\) holds automatically for P-TEGs with strict initial conditions.

In the following, we provide an example for the application of this fact. Let us study the existence of 1-periodic trajectories for P-TEGs with strict initial conditions. From the above discussion, such trajectories correspond to "ultimately" 1-periodic trajectories of the form

for SLDIs \(\mathcal {S} = (\{\textsf{i},\mathsf {\MakeLowercase {A}}\},A^0,A^1,B^0,B^1)\) under schedule \(w = \textsf{i}\mathsf {\MakeLowercase {A}}^K\). We proceed with a strategy similar to the one seen in Section 2.4: substituting formula \(x(k+2) = \lambda ^{k}x(2)\) for all \(k\in [\![1,K-1]\!]\) into Eq. 6, we get

multiplying by \(\lambda ^{-k+1}\) in the third and fourth inequalities, we obtain

By defining the extended dater vector \(\tilde{x} = [x^\top (1)\ \ x^\top (2)]^\top \) and using Proposition 2, the inequalities can be rewritten in terms of \(\tilde{x}\) as

where

Clearly, Eq. 9 defines a PIC-NCP, whose solution can be found in time \(\mathcal {O}(n^4)\) using Algorithm 1. The conclusion is that periods of consistent 1-periodic trajectories for P-TEGs under strict initial conditions can be obtained in the same strongly polynomial time complexity as for P-TEGs under loose initial conditions.

4.3 Analysis of periodic schedules

In this subsection, we analyze bounded consistency and cycle times of SLDIs when schedule \(w\in \Sigma ^*\cup \Sigma ^\omega \) is periodic, i.e., when it can be written as \(w = v^K\), \(K\in \mathbb {N}\cup \{+\infty \}\), and \(v=v_1\cdots v_V\in \Sigma ^*\) is a finite subschedule of length V. We define v-periodic trajectories of period \(\lambda \in \mathbb {R}_{\ge 0}\) for SLDIs under schedule \(w=v^K\) as those dater trajectories that, for all \(k\in [\![1,K-1]\!]\), \(h\in [\![1,V]\!]\), satisfy

\(\Lambda _{{}_{\text {SLDI}}}^{v}(\mathcal {S})\) denotes the set of all periods (or cycle times) \(\lambda \) for which there exists a consistent v-periodic trajectory. Their relationship with 1-periodic trajectories in P-TEGs is illustrated in the following example.

Example 7

Let us analyze the SLDIs \(\mathcal {S}\), with \(\Sigma =\{\mathsf {\MakeLowercase {A}},\mathsf {\MakeLowercase {B}},\mathsf {\MakeLowercase {C}}\}\), and \(A^0_\textsf{z},A^1_\textsf{z},B^0_\textsf{z},B^1_\textsf{z}\) defined as in Example 4; now label \(\textsf{z}\in \Sigma \) is to be interpreted as a mode. Thus, for each event k, the dynamics of the SLDIs may switch among those specified by the P-TEGs labeled \(\mathsf {\MakeLowercase {A}}\), \(\mathsf {\MakeLowercase {B}}\), and \(\mathsf {\MakeLowercase {C}}\). We consider periodic schedules \((\mathsf {\MakeLowercase {A}}\mathsf {\MakeLowercase {C}})^K\) and \((\mathsf {\MakeLowercase {A}}\mathsf {\MakeLowercase {B}})^K\); observe that for \(w=v^K\), with \(v\in \{\mathsf {\MakeLowercase {A}}\mathsf {\MakeLowercase {C}},\mathsf {\MakeLowercase {A}}\mathsf {\MakeLowercase {B}}\}\) (i.e., \(v_1=\mathsf {\MakeLowercase {A}}\) and \(v_2=\mathsf {\MakeLowercase {C}}\) or \(v_2=\mathsf {\MakeLowercase {B}}\)), the SLDIs following w can be written as:

By defining \(\tilde{x}(k) = [x^\top (2(k-1)+1),x^\top (2(k-1)+2)]^\top \), the above set of inequalities can be rewritten as LDIs:

where

To see the equivalence of Eqs. 10 and 11, observe that the second block of Eq. 11a reads

From this transformation, we can easily conclude that \(\mathcal {S}\) is boundedly consistent under \(v^{+\infty }\) if and only if the LDIs with characteristic matrices \(A^0_v,A^1_v,B^0_v,B^1_v\) are boundedly consistent, and that all consistent v-periodic trajectories of \(\mathcal {S}\) coincide with consistent 1-periodic trajectories of the LDIs; hence, using Algorithm 1 we obtain

It is worth noting that, although P-TEGs labeled \(\mathsf {\MakeLowercase {A}}\) and \(\mathsf {\MakeLowercase {B}}\) are not boundedly consistent, the SLDIs under schedule \((\mathsf {\MakeLowercase {A}}\mathsf {\MakeLowercase {B}})^{+\infty }\) are. Thus, in general it is not possible to infer bounded consistency of SLDIs under a fixed schedule w solely based on the analysis of each mode appearing in w.

By generalizing the procedure shown in Example 7, we can derive the following proposition through some algebraic manipulations (to set up equivalent LDIs) and applying Theorem 3 (for a formal proof, see Appendix 1).

Proposition 5

SLDIs \(\mathcal {S}\) are boundedly consistent under schedule \(w=v^{+\infty }\) if and only if they admit a v-periodic trajectory. Moreover, set \(\Lambda _{{}_{\text {SLDI}}}^{v}(\mathcal {S})\) coincides with \(\Lambda _{{}_{\text {NCP}}}(\lambda {P}_{v}\oplus \lambda ^{-1}I_{v}\oplus {C}_{v})\), where

\(P_h = B_{v_h}^{1\sharp }\), \(I_h = A_{v_h}^1\), and \(C_h = A_{v_h}^0\oplus B_{v_h}^{0\sharp }\) for all \(h\in [\![1,V]\!]\).

Proposition 5 directly provides an algorithm to compute the minimum and maximum cycle times of SLDIs under a fixed periodic schedule. Indeed, these values come from solving the NCP for the parametric precedence graph \(\mathcal {G}(\lambda P_v\oplus \lambda ^{-1}I_v\oplus C_v)\). However, this approach results in a slow (although strongly polynomial time) algorithm when the length of subschedule v is large; indeed, its time complexity is \(\mathcal {O}((Vn)^4) = \mathcal {O}(V^4n^4)\), as the considered precedence graph has Vn nodes.

To speed up the computation of \(\Lambda _{{}_{\text {SLDI}}}^{v}(\mathcal {S})\), we may note the following fact: the longer subschedule v is, the larger is the number of \(-\infty \)’s compared to real numbers in \(\lambda P_v\oplus \lambda ^{-1}I_v\oplus C_v\). In other words, the matrix becomes sparser and sparser with larger values of V. Moreover, the real entries of the matrix have a recognizable pattern. The following theorem, proven in Appendix 2, exploits this observation, achieving time complexity \(\mathcal {O}(Vn^3+n^4)\) for computing the set \(\Lambda _{{}_{\text {SLDI}}}^{v}(\mathcal {S})\). The resulting complexity is thus linear in the length of subschedule v.
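To give a rough feel for the gap between the two bounds, the sketch below evaluates their leading terms for sample sizes. Constant factors are ignored, which is an assumption (asymptotic bounds do not fix them), so the numbers only indicate orders of magnitude. The function names are illustrative.

```python
def dense_bound(V, n):
    """Leading term of the O((V n)^4) bound for the Vn-node precedence graph."""
    return (V * n) ** 4


def structured_bound(V, n):
    """Leading terms of the O(V n^3 + n^4) bound, constants ignored."""
    return V * n ** 3 + n ** 4


# Hypothetical sizes: n = 10 transitions, subschedule of length V = 100.
print(dense_bound(100, 10), structured_bound(100, 10))
```

For these sample values the first expression is \(10^{12}\) and the second is \(1.1\times 10^{5}\); doubling V multiplies the first by 16 but increases the second by less than a factor of 2.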

Theorem 6

Precedence graph \(\mathcal {G}(\lambda P_v \oplus \lambda ^{-1}I_v \oplus C_v)\) does not contain circuits with positive weight if and only if the following conditions hold:

1. for all \(h\in [\![1,V]\!]\), \(\mathcal {G}(C_{h})\in \Gamma \);

2. for all \(h\in [\![1,V-1]\!]\), \(\mathcal {G}(\mathbb {C}^{P}_h)\in \Gamma \) and \(\mathcal {G}(\mathbb {C}^{I}_{h+1})\in \Gamma \), where

$$ \begin{array}{ll} \forall h\in [\![1,V-1]\!], \quad &{} \mathbb {C}^{P}_h = \mathbb {P}_h(\mathbb {P}_{h+1}(\cdots (\mathbb {P}_{V-1}\mathbb {I}_{V-1})^*\cdots )^*\mathbb {I}_{h+1})^*\mathbb {I}_{h},\\ \forall h\in [\![2,V]\!], &{}\mathbb {C}^{I}_h = \mathbb {I}_h(\mathbb {I}_{h-1}(\cdots (\mathbb {I}_{2}\mathbb {P}_{2})^*\cdots )^*\mathbb {P}_{h-1})^*\mathbb {P}_{h},\\ \forall h\in [\![1,V]\!],&{} \mathbb {P}_h = C_{h}^*P_{h}C_{{h+1}}^*, \quad \quad \mathbb {I}_h = C_{{h+1}}^*I_{h}C_{h}^*,\\ &{} C_{V+1} = C_1; \end{array} $$

3. \(\lambda \in \Lambda _{{}_{\text {NCP}}}(\lambda \mathbb {M}^{P}\oplus \lambda ^{-1}\mathbb {M}^{I}\oplus \mathbb {M}^{C})\), where

$$ \begin{array}{rcl} \mathbb {M}^P &{}=&{} \mathbb {P}_1(\mathbb {C}^{P}_2)^*\mathbb {P}_{2}(\mathbb {C}^{P}_{3})^*\cdots (\mathbb {C}^{P}_{V-1})^*\mathbb {P}_{V-1} \mathbb {P}_V,\\ \mathbb {M}^I &{}=&{}\mathbb {I}_V(\mathbb {C}^{I}_{V-1})^*\mathbb {I}_{V-1}(\mathbb {C}^{I}_{V-2})^*\cdots (\mathbb {C}^{I}_{2})^*\mathbb {I}_2\mathbb {I}_1,\\ \mathbb {M}^C &{}=&{} \mathbb {C}^{P}_1\oplus \mathbb {C}^{I}_V. \end{array} $$

The time complexity is analyzed as follows. Item 1 of Theorem 6 requires checking for circuits with positive weight in V precedence graphs with n nodes each; since each check takes time \(\mathcal {O}(n^3)\), all operations in item 1 are performed in time \(\mathcal {O}(Vn^3)\). As for item 2, since \(\mathbb {C}^P_h = \mathbb {P}_h(\mathbb {C}^P_{h+1})^* \mathbb {I}_h\) and \(\mathbb {C}^I_h = \mathbb {I}_h (\mathbb {C}^I_{h-1})^* \mathbb {P}_h\), there are \(\mathcal {O}(V)\) multiplications and Kleene star operations to be performed, each of which requires \(\mathcal {O}(n^3)\) operations; the total computational time is thus \(\mathcal {O}(Vn^3)\). In item 3, \(\mathbb {M}^P\), \(\mathbb {M}^I\), and \(\mathbb {M}^C\) can be obtained by performing \(\mathcal {O}(V)\) multiplications and Kleene star operations and \(\mathcal {O}(1)\) additions on \(n\times n\) matrices; finally, set \(\Lambda _{{}_{\text {NCP}}}(\lambda \mathbb {M}^{P}\oplus \lambda ^{-1}\mathbb {M}^{I}\oplus \mathbb {M}^{C})\) is computable in time \(\mathcal {O}(n^4)\) using Algorithm 1.
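The \(\mathcal {O}(n^3)\) building blocks used in this analysis can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes that \(\Gamma \) denotes the set of precedence graphs without positive-weight circuits, it represents matrices as lists of lists with `float("-inf")` for the max-plus zero, and it infers the base case \(\mathbb {C}^P_{V-1} = \mathbb {P}_{V-1}\mathbb {I}_{V-1}\) from the innermost factor of the formula in item 2. The names `mp_mul`, `mp_star`, and `backward_chain` are hypothetical.

```python
NEG_INF = float("-inf")  # max-plus zero element


def mp_mul(A, B):
    """Max-plus product: (A (x) B)[i][j] = max_k (A[i][k] + B[k][j])."""
    cols = range(len(B[0]))
    return [[max(a + B[k][j] for k, a in enumerate(row)) for j in cols]
            for row in A]


def mp_star(A):
    """Kleene star of a square matrix via a Floyd-Warshall-type recursion.

    Returns None when the precedence graph of A has a circuit of positive
    weight (the star is then not finite); runs in O(n^3).
    """
    n = len(A)
    D = [row[:] for row in A]
    for k in range(n):
        for i in range(n):
            for j in range(n):
                D[i][j] = max(D[i][j], D[i][k] + D[k][j])
    if any(D[i][i] > 0 for i in range(n)):
        return None                       # positive-weight circuit found
    for i in range(n):
        D[i][i] = max(D[i][i], 0.0)       # add the max-plus identity
    return D


def backward_chain(P, I):
    """Compute [C^P_1, ..., C^P_{V-1}] from bold P_h, I_h (h = 1..V-1).

    Uses C^P_h = P_h (C^P_{h+1})^* I_h, starting from the assumed base case
    C^P_{V-1} = P_{V-1} I_{V-1}. Returns None if some C^P_h has a
    positive-weight circuit, i.e., if item 2 fails for the P-chain.
    """
    chain, next_star = [], None
    for Ph, Ih in zip(reversed(P), reversed(I)):
        left = Ph if next_star is None else mp_mul(Ph, next_star)
        C = mp_mul(left, Ih)
        next_star = mp_star(C)
        if next_star is None:
            return None
        chain.append(C)
    return chain[::-1]
```

Each loop iteration of `backward_chain` performs a constant number of products and one star on \(n\times n\) matrices, which is where the \(\mathcal {O}(Vn^3)\) total for item 2 comes from; the chain for \(\mathbb {C}^I_h\) would be built symmetrically in the forward direction.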

The formulas of Theorem 6 generalize those found in (Gaubert and Mairesse 1999, Theorem 5.2) for the throughput evaluation in max-plus automata (or heap models). The formula in (Gaubert and Mairesse 1999, Theorem 5.2) can indeed be recovered from Theorem 6 by considering the special case \(P_h = C_h = \mathcal {E}\) for all h, i.e., the case in which there are no upper-bound constraints and no relations between \(x_i(k)\) and \(x_j(k)\) for any i, j, k; to do so, observe that in this particular case Algorithm 1 simplifies significantly, see (Zorzenon et al. 2022, Remark 3).

4.4 Analysis of intermittently periodic schedules

We conclude this section with the analysis of particular trajectories of SLDIs with intermittently periodic schedules, i.e., schedules that can be factorized in the form

$$ w = u_0 v_1^{m_1} u_1 v_2^{m_2} u_2 \cdots v_q^{m_q} u_q, $$

where

- \(u_0,\ldots ,u_{q},v_1,\ldots ,v_{q}\) are finite subschedules of lengths \(U_0,\ldots ,U_q\in \mathbb {N}_0\) and \(V_1,\ldots ,V_{q}\in \mathbb {N}\), respectively,

- \(2\le m_1,\ldots ,m_{q-1}<+\infty \),

- \(m_q\) is either an element of \(\mathbb {N}\) or \(+\infty \); in the second case, \(u_q\) is the empty string (of length \(U_q=0\)) and the schedule is called ultimately periodic.

We call \(u_0,\ldots ,u_q\) the transient subschedules and \(v_1,\ldots ,v_q\) the periodic subschedules of the schedule w. Observe that the factorization of an intermittently periodic schedule into transient and periodic subschedules may not be unique; for example, schedule \(w = \mathsf {\MakeLowercase {A}}\mathsf {\MakeLowercase {A}}\mathsf {\MakeLowercase {B}}\mathsf {\MakeLowercase {A}}\mathsf {\MakeLowercase {B}}\mathsf {\MakeLowercase {A}}\) can be factorized into \(w = \mathsf {\MakeLowercase {A}}(\mathsf {\MakeLowercase {A}}\mathsf {\MakeLowercase {B}})^2 \mathsf {\MakeLowercase {A}}\) (\(u_0=\mathsf {\MakeLowercase {A}}\), \(v_1=\mathsf {\MakeLowercase {A}}\mathsf {\MakeLowercase {B}}\), \(u_1 = \mathsf {\MakeLowercase {A}}\), \(m_1=2\)), into \(w = \mathsf {\MakeLowercase {A}}\mathsf {\MakeLowercase {A}}(\mathsf {\MakeLowercase {B}}\mathsf {\MakeLowercase {A}})^2\) (\(u_0 = \mathsf {\MakeLowercase {A}}\mathsf {\MakeLowercase {A}}\), \(v_1 = \mathsf {\MakeLowercase {B}}\mathsf {\MakeLowercase {A}}\), \(m_1 = 2\)), or even into \(w = (\mathsf {\MakeLowercase {A}})^2 (\mathsf {\MakeLowercase {B}}\mathsf {\MakeLowercase {A}})^2\) (\(U_0 = U_1 = U_2 = 0\), \(v_1 = \mathsf {\MakeLowercase {A}}\), \(v_2 = \mathsf {\MakeLowercase {B}}\mathsf {\MakeLowercase {A}}\), \(m_1=m_2=2\)). In the remainder of the paper, to indicate unambiguously the intended factorization of a schedule into periodic and transient subschedules, we always write the exponents \(m_h\) of the periodic subschedules \(v_h\) explicitly, and we write the transient subschedules \(u_h\) out in full (even when \(u_h\) itself consists of a repeated sequence of modes).
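The non-uniqueness of the factorization can be verified mechanically. The sketch below assumes that a factorized schedule has the form \(w = u_0 v_1^{m_1} u_1 \cdots v_q^{m_q} u_q\) with all exponents finite, and encodes modes as plain characters (`"a"`, `"b"`); the function name `expand` and the argument layout are illustrative choices.

```python
def expand(transients, periodics):
    """Concatenate u_0 v_1^{m_1} u_1 ... v_q^{m_q} u_q into a single word.

    transients: [u_0, ..., u_q] as strings (possibly empty);
    periodics:  [(v_1, m_1), ..., (v_q, m_q)] with finite exponents m_h.
    """
    if len(transients) != len(periodics) + 1:
        raise ValueError("need exactly one more transient than periodic factor")
    w = transients[0]
    for (v, m), u in zip(periodics, transients[1:]):
        w += v * m + u   # append v_h repeated m_h times, then u_h
    return w


# The three factorizations discussed above yield the same schedule.
print(expand(["a", "a"], [("ab", 2)]))
print(expand(["aa", ""], [("ba", 2)]))
print(expand(["", "", ""], [("a", 2), ("ba", 2)]))
```

All three calls produce the same word, although the factors, and hence the notion of intermittently periodic trajectory attached to them, differ.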

The interpretation of intermittently periodic schedules in systems modeled by SLDIs is as follows: after the start-up of the system (\(u_0\)), a number of operations are executed cyclically (\(v_1^{m_1}\)), after which the system is re-initialized (\(u_1\)) before starting a new sequence of cyclical tasks (\(v_2^{m_2}\)), and so on; finally, after a finite number (q) of alternations between transient and cyclic working regimes, the system is either shut down (\(u_q\)) if \(m_q\in \mathbb {N}\) or works in periodic regime forever if \(m_q = +\infty \).

In the remainder of the paper, we let \(K_h = U_0+\sum _{j = 1}^{h} \left( m_jV_j+U_j\right) \) for all \(h\in [\![0,q]\!]\). The objective of this section is to show that practically relevant trajectories under intermittently periodic schedules can be efficiently analyzed in SLDIs. Namely, we study the existence of intermittently periodic trajectories, that is, trajectories of the dater function \(\{x(k)\}_{k\in [\![1,K_q]\!]}\) such that

$$ \{x(k)\}_{k\in [\![K_{h-1}+1,\,K_{h-1}+m_hV_h]\!]} $$

are \(v_h\)-periodic trajectories of period \(\lambda _h\) for all \(h\in [\![1,q]\!]\). In other words, for all \(h\in [\![1,q]\!]\), \(j\in [\![1,m_h-1]\!]\), \(k\in [\![1,V_h]\!]\), an intermittently periodic trajectory satisfies, for some \(\lambda _h\in \mathbb {R}_{\ge 0}\),

$$ x(K_{h-1}+jV_h+k) = \lambda _h x(K_{h-1}+(j-1)V_h+k). $$

Intermittently periodic trajectories in which \(m_q = +\infty \) are referred to as ultimately periodic trajectories. Intermittently periodic trajectories generalize v-periodic trajectories, as they are \(v_h\)-periodic in each sequence of cyclical tasks \(v_h^{m_h}\).
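The index bookkeeping behind this definition can be sketched as follows. The code assumes that, within the h-th periodic block, event \(K_{h-1}+jV_h+k\) is tied to event \(K_{h-1}+(j-1)V_h+k\) by the period \(\lambda _h\), for \(j\in [\![1,m_h-1]\!]\) and \(k\in [\![1,V_h]\!]\); it handles finite exponents only. The function name `periodicity_pairs` and its argument layout are hypothetical.

```python
def periodicity_pairs(U, V, m):
    """List the event pairs linked by periodicity in each periodic block.

    U = [U_0, ..., U_q], V = [V_1, ..., V_q], m = [m_1, ..., m_q] (finite).
    Returns tuples (h, later, earlier), read as
    x(later) = lambda_h (x) x(earlier), with 1-based event indices.
    """
    if not (len(U) == len(V) + 1 and len(V) == len(m)):
        raise ValueError("expected q+1 transient lengths and q periodic blocks")
    pairs = []
    K_prev = U[0]                                   # K_0 = U_0
    for h, (Vh, mh) in enumerate(zip(V, m), start=1):
        for j in range(1, mh):                      # j = 1, ..., m_h - 1
            for k in range(1, Vh + 1):              # k = 1, ..., V_h
                pairs.append((h, K_prev + j * Vh + k,
                              K_prev + (j - 1) * Vh + k))
        K_prev += mh * Vh + U[h]                    # K_h = K_{h-1} + m_h V_h + U_h
    return pairs


# Hypothetical schedule: one start-up event, then a length-3 block repeated 3 times.
for h, later, earlier in periodicity_pairs(U=[1, 0], V=[3], m=[3]):
    print(f"x({later}) = lambda_{h} x({earlier})")
```

Because the pairs depend on \(U_h\), \(V_h\), and \(m_h\), two factorizations of the same schedule generally produce different sets of constraints.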

Note that the definition of intermittently periodic trajectory depends on the specific factorization of schedule w into transient (\(u_h\)) and periodic (\(v_h\)) subschedules. For instance, let \(w = \mathsf {\MakeLowercase {A}}\mathsf {\MakeLowercase {A}}\mathsf {\MakeLowercase {B}}\mathsf {\MakeLowercase {A}}\mathsf {\MakeLowercase {B}}\mathsf {\MakeLowercase {A}}\). A trajectory \(\{x(k)\}_{k\in [\![1,6]\!]}\) for schedule w factorized as \(\mathsf {\MakeLowercase {A}}(\mathsf {\MakeLowercase {A}}\mathsf {\MakeLowercase {B}})^2\mathsf {\MakeLowercase {A}}\) is intermittently periodic if it satisfies, for some \(\lambda _1\in \mathbb {R}_{\ge 0}\),

$$ x(4) = \lambda _1 x(2), \quad x(5) = \lambda _1 x(3). $$

Considering the factorization \(w = \mathsf {\MakeLowercase {A}}\mathsf {\MakeLowercase {A}}(\mathsf {\MakeLowercase {B}}\mathsf {\MakeLowercase {A}})^2\), the trajectory is intermittently periodic if, for some \(\lambda _1\in \mathbb {R}_{\ge 0}\),

$$ x(5) = \lambda _1 x(3), \quad x(6) = \lambda _1 x(4). $$

According to factorization \(w = (\mathsf {\MakeLowercase {A}})^2 (\mathsf {\MakeLowercase {B}}\mathsf {\MakeLowercase {A}})^2\), instead, the trajectory is intermittently periodic if, for some \(\lambda _1,\lambda _2 \in \mathbb {R}_{\ge 0}\),

$$ x(2) = \lambda _1 x(1), \quad x(5) = \lambda _2 x(3), \quad x(6) = \lambda _2 x(4). $$

Trajectories of this type appear frequently in applications. Typical examples are batch manufacturing systems, where each batch is processed in a periodic workflow, but switching between different batches leads to pauses and irregular transients (see, e.g., Lee and Lee 2012; Fröhlich and Steneberg 2011). Urban railway systems also operate on a similar principle, with trains arriving at stations in a periodic manner during peak and off-peak hours, although the period may vary based on the time of day. Moreover, note that, as shown in Section 4.2, 1-periodic trajectories of P-TEGs with strict initial conditions correspond to a particular case of ultimately periodic trajectories of SLDIs, in which \(q=1\) and \(U_0 = 1\).

Example 8

This example shows how to transform the problem of finding intermittently periodic trajectories into an MPIC-NCP. We consider again the SLDIs of Example 7 under the intermittently periodic schedule

for some \(m_1\ge 2\). The inequalities corresponding to schedule w are: for all \(k\in [\![1,m_1]\!]\),

To analyze the existence of intermittently periodic trajectories, we substitute in Eq. 14, for all \(k\in [\![1,m_1-1]\!]\), \(x(k+2) = \lambda _1^k x(2)\), obtaining: for all \(k\in [\![1,m_1]\!]\),

It is possible to get rid of the term \(\lambda _1^{m_1-1}\) in the penultimate inequalities by performing a change of variable. Let \(\xi :[\![1,3]\!]\rightarrow \mathbb {R}^2\) be defined by

By substituting \(\xi \) into Eq. 15, we obtain