Abstract

Several business cycle models exhibit a recursive timing structure, which enforces delayed propagation of the exogenous shocks driving short-run dynamics. We propose a bootstrap-based empirical strategy to test for the relevance of timing restrictions and the ensuing shock transmission delays in DSGE environments. Under strong identification, we document how likelihood-based tests in bootstrap resamples can be used to empirically assess the short-run restrictions that informational structures place on a given model’s equilibrium representation, thereby enhancing coherence between theory and measurement. We evaluate the size properties of our procedure in short time series through a number of numerical experiments on a popular New Keynesian model of the monetary transmission mechanism. An empirical application to U.S. data from the Great Moderation period allows us to revisit and qualify previous findings in the field, lending support to the conventional (unrestricted) timing protocol, whereby inflation and the output gap do respond on impact to monetary policy innovations.

1 Introduction

Recently developed business cycle models feature a recursive timing structure, according to which decision rules of forward-looking, rationally optimizing agents reflect the presence of delayed information dissemination across the economy. Two main examples in the policy domain stand out: first, models where government spending entails planning lags and thus cannot respond to current economic developments, see e.g. Kormilitsina and Zubairy (2018), Schmitt-Grohé and Uribe (2012); second, monetary frameworks where slow-moving variables, such as consumption and wages, are bound not to respond on impact to unexpected changes in the policy rate, which only gradually propagate in the private sector, see e.g. Altig et al. (2011), Christiano et al. (2005).Footnote 1

Model-wise, timing restrictions in structural modeling are micro-founded via informational constraints that relate agents’ expectation formation and the ensuing decision rules to increasing sequences of nested and temporally asymmetric information sets, see Kormilitsina (2013). The timing mismatch between optimal decisions and the evolution of the state of the economy generates propagation delays for a subset of the exogenous forces (i.e. the structural shocks) driving short-run dynamics, with potentially significant implications for the model’s predictions about the time series properties of endogenous variables, see Angelini and Sorge (2021).

On the positive side, a burgeoning literature in modern macroeconomics has underscored the role of informational frictions in reconciling the theoretical predictions of otherwise standard rational expectations frameworks with observed features of the data, e.g. the persistent and hump-shaped responses of inflation and output measures to unanticipated monetary shocks (Mankiw and Reis 2002), the relationship between inflation illusion and asset prices (Piazzesi and Schneider 2008), or excess return predictability in financial markets (Bacchetta et al. 2009). While empirical work exploiting survey data has been supportive of imperfect information models in general, e.g. Branch (2007), Mankiw et al. (2003), the question of whether macroeconomic data favor the recursive timing assumption over the common (unrestricted) timing protocol, conditional on a given DSGE structure, remains open to debate. We believe this modeling issue is a key concern for the specification of macroeconomic models and their empirical validation: failure to control for the informational transmission channel in estimated models can in principle distort inference on the relative contribution of aggregate shocks to business cycle fluctuations, and/or the assessment of competing policy measures whose effects are shaped, among other things, by agents’ expectations.

To tackle these issues, we provide a handy frequentist procedure, based on a bootstrap variant of the likelihood ratio (LR) test in state-space systems, to empirically assess the relevance of timing restrictions and the ensuing shock transmission delays in small-scale dynamic stochastic general equilibrium (DSGE) environments. Specifically, we test the null hypothesis that a subset of endogenous model variables of interest (e.g. the inflation rate) fails to respond simultaneously and/or fully to the state of the economy (e.g. movements in the nominal interest rate) against the alternative of contemporaneous timing. Upon estimating the model-implied set of endogenous responses across timing structures (restricted versus unrestricted), along with the model’s structural parameters, the information stemming from likelihood-based tests of the rational expectations cross-equation restrictions (CERs) that the model’s structure places on its equilibrium (reduced form) representation can be exploited to evaluate the empirical plausibility of the recursive timing assumption in the DSGE context.

Operationally, we build on recent contributions by Angelini et al. (2022), Bårdsen and Fanelli (2015) and Stoffer and Wall (1991) on estimation and hypothesis testing in state-space models. Stoffer and Wall (1991) propose a nonparametric Monte Carlo bootstrap that abstracts from distributional assumptions that are hardly valid in small to moderate samples. Bårdsen and Fanelli (2015) develop a frequentist approach to sequentially testing cointegration/common-trend restrictions along with conventional rational expectations CERs in DSGE models, arguing in favor of classical likelihood-based tests to handle both the long- and short-run restrictions placed by the model on its reduced form representation. Angelini et al. (2022) emphasize the role of bootstrap resampling as a conceptually simple diagnostic tool for asymptotic inference in estimated state-space models. Among other things, these authors show that, in the case of strong identification, the bootstrap maximum likelihood (ML) estimator of the structural parameters replicates the asymptotic distribution of the ML estimator, and they prove formally that the restricted bootstrap (i.e. with the null hypothesis under investigation imposed in estimation) is consistent. Under these circumstances, not only is the (standard or bootstrap) LR test asymptotically pivotal and chi-square distributed, but the bootstrap also tends to reduce the discrepancy between actual and nominal probabilities of type-I error. In fact, the bootstrap in DSGE models (and, more generally, in frameworks that admit a conventional state-space representation) has the potential to mitigate the over-rejection phenomenon that characterizes tests of non-linear hypotheses relying on first-order asymptotic approximations in short time series, such as those usually employed for business cycle analysis.
Indeed, we find that our resampling method improves upon the asymptotic LR test, as the empirical size of the bootstrap-based LR test tends to approach the chosen nominal level.

The computation of our bootstrap-based LR test for the timing-specific CERs associated with the DSGE model entails the estimation of the structural parameters, which in our setup is accomplished by maximizing the likelihood function of the (locally unique) reduced form equilibrium subject to the CERs. Model estimation via classical ML is not very common in the DSGE literature, and Bayesian methods are typically preferred given their inherent ability to deal with misspecification issues and small-sample inference—see Del Negro et al. (2007), Del Negro and Schorfheide (2009) among others. Bårdsen and Fanelli (2015) emphasize that classical statistical methods can also be useful for empirically evaluating small DSGE models, insofar as they offer a number of indications about the qualitative and/or quantitative features of the data that a given framework fails to adequately capture, with an explicit measure of adequacy being provided by the pre-fixed nominal probability of type-I error associated with the LR test. In a similar vein, the outcome of our testing strategy can be thought of as providing information about possible directions for enhancing coherence between theory and measurement in the class of small-scale DSGE models.

While linear Gaussian state-space systems are in widespread use in macroeconometrics, e.g. Chan and Strachan (2023), likelihood-based inferential analysis in these models remains relatively scant. A main obstacle to establishing the asymptotic properties of conventional likelihood-based tests is the well-known lack of identification of these models in the absence of further restrictions. In fact, since any similarity transform (or rotation) of the vector of latent variables by an arbitrary non-singular (conformable) matrix yields a state-space representation of the system with equivalent second-order properties, information on the autocovariance patterns of the observables fails to ensure identification of the state-space parameters; this in turn violates standard regularity conditions for likelihood-based inference, e.g. Komunjer and Ng (2011). Komunjer and Zhu (2020) ingeniously address this issue by locally re-parameterizing the state-space system in terms of a lower-dimensional canonical parameter which is identified by construction, without affecting the likelihood of the model; this in turn allows them to derive the asymptotic distribution of the LR test, in conventional chi-squared form with known degrees of freedom, under several hypotheses of interest, which can therefore be used to assess the validity of DSGE model specifications.Footnote 2
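The observational-equivalence argument is easy to verify numerically. The sketch below is a minimal illustration, assuming a generic linear state-space model \(x_{t+1} = A x_t + B w_{t+1}\), \(y_t = C x_t\) with \(w \sim iid(0, I)\) and a stable \(A\); the matrices, the rotation \(T\) and the helper names are hypothetical. Rotating the state by a non-singular \(T\) (so that \(A \mapsto T A T^{-1}\), \(B \mapsto T B\), \(C \mapsto C T^{-1}\)) leaves every autocovariance of \(y\) unchanged.

```python
import numpy as np

def state_covariance(A, B):
    """Stationary variance of x_{t+1} = A x_t + B w_{t+1}, w ~ iid(0, I):
    solves Sigma = A Sigma A' + B B' by vectorisation (row-major vec)."""
    n = A.shape[0]
    Q = B @ B.T
    vec_sigma = np.linalg.solve(np.eye(n * n) - np.kron(A, A), Q.reshape(-1))
    return vec_sigma.reshape(n, n)

def autocovariances(A, B, C, max_lag):
    """Gamma_k = Cov(y_{t+k}, y_t) = C A^k Sigma C' for k = 0, ..., max_lag."""
    Sigma = state_covariance(A, B)
    return [C @ np.linalg.matrix_power(A, k) @ Sigma @ C.T for k in range(max_lag + 1)]

# Hypothetical system: 2 latent states, 3 observables
A = np.array([[0.7, 0.1], [0.0, 0.5]])     # stable: eigenvalues 0.7 and 0.5
B = np.array([[1.0, 0.0], [0.3, 0.8]])
C = np.array([[1.0, 0.5], [0.2, 1.0], [0.0, 1.0]])

T = np.array([[1.0, 0.4], [-0.3, 2.0]])    # arbitrary non-singular rotation (det = 2.12)
T_inv = np.linalg.inv(T)
A_rot, B_rot, C_rot = T @ A @ T_inv, T @ B, C @ T_inv

original = autocovariances(A, B, C, max_lag=4)
rotated = autocovariances(A_rot, B_rot, C_rot, max_lag=4)
print(all(np.allclose(g0, g1) for g0, g1 in zip(original, rotated)))  # True
```

Since the autocovariances, and hence the Gaussian likelihood of a zero-mean stationary process, cannot tell the two parameterizations apart, some normalization (such as a canonical parameterization) is needed before standard likelihood theory applies.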

We fully acknowledge the pervasiveness of weak identification (or lack thereof) of structural parameters in richly parameterized DSGE models, e.g. Canova and Sala (2009); Consolo et al. (2009); Mavroeidis (2010); Qu and Tkachenko (2012), and the fact that any direct test for CERs in dynamic macroeconomic models should preferably be set out when (local) parameter identifiability is ensured. Population identification of the deep parameters of the DSGE model, i.e. the existence of an injective mapping from the structural deep parameters to the reduced-form parameters under the CERs, can be checked numerically by means of appropriate rank conditions, e.g. Iskrev (2010); necessary and sufficient (rank/order) identification conditions in terms of equivalent spectral densities, which do not require the numerical evaluation of analytical moments, can also be invoked, e.g. Komunjer and Ng (2011). In order not to shift the focus to identifiability issues, we therefore investigate the properties of the bootstrap-based LR test under the assumption of strong identification, meaning that all the regularity conditions for standard asymptotic inference hold. An advantage of our approach is that, as argued in Angelini et al. (2022), the asymptotic distribution of the bootstrap estimator of the structural parameters reveals possible identification failures. By the same token, failure of either version of the DSGE model (one with recursive timing, the other free of timing restrictions) to pass the LR test for the short-run CERs can be interpreted as an indication to consider alternative structural frameworks and/or shock transmission mechanisms that may capture some of the patterns observed in the data.
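A rank check in the spirit of Iskrev (2010) asks whether the Jacobian of the model-implied moments with respect to the parameters has full column rank. The sketch below is illustrative only: it swaps in a toy AR(1) process \(y_t = \rho y_{t-1} + s\,\varepsilon_t\) for the DSGE model, uses the first four autocovariances as moments, a central-difference Jacobian and a rank tolerance of 1e-6 (all assumptions, with hypothetical helper names). The second parameterization, in which \(\rho = a b\), is deliberately not identified.

```python
import numpy as np

RANK_TOL = 1e-6  # singular values below this are treated as zero (assumption)

def ar1_autocovariances(rho, s, max_lag=3):
    """Autocovariances gamma_k = rho^k s^2 / (1 - rho^2) of a stationary AR(1)."""
    gamma0 = s**2 / (1.0 - rho**2)
    return np.array([gamma0 * rho**k for k in range(max_lag + 1)])

def jacobian(fun, theta, h=1e-6):
    """Central-difference Jacobian (m x p) of fun: R^p -> R^m at theta."""
    theta = np.asarray(theta, dtype=float)
    cols = []
    for i in range(theta.size):
        step = np.zeros_like(theta)
        step[i] = h
        cols.append((fun(theta + step) - fun(theta - step)) / (2.0 * h))
    return np.column_stack(cols)

def moments_ok(th):
    """Identified parameterization theta = (rho, s)."""
    return ar1_autocovariances(th[0], th[1])

def moments_bad(th):
    """Non-identified parameterization theta = (a, b, s) with rho = a * b."""
    return ar1_autocovariances(th[0] * th[1], th[2])

rank_ok = np.linalg.matrix_rank(jacobian(moments_ok, [0.6, 1.0]), tol=RANK_TOL)
rank_bad = np.linalg.matrix_rank(jacobian(moments_bad, [0.8, 0.75, 1.0]), tol=RANK_TOL)
print(rank_ok, rank_bad)  # 2 2: full column rank (2 of 2) vs rank deficiency (2 of 3)
```

The tolerance matters: finite-difference noise leaves a tiny but non-zero singular value in the deficient case, so numpy's default machine-precision tolerance would wrongly report full rank.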

To showcase the validity of our testing procedure, we adopt the hybrid New Keynesian (NK) model popularized by Benati and Surico (2009), and formalize timing restrictions as follows: private sector variables (inflation and output gap) and expectations cannot respond on impact to monetary policy innovations, yet they can fully adjust to other sources of uncertainty and to the model’s states (e.g. past inflation). Since Rotemberg and Woodford (1997), NK structures embodying nominal rigidities and recursive timing with respect to the propagation of monetary policy surprises have been used to shed light on the origins of aggregate fluctuations and the historical evolution of the monetary transmission mechanism in the U.S. economy, see e.g. Altig et al. (2011), Boivin and Giannoni (2006), Christiano et al. (2005). Across all of these studies, no formal test of the imposed timing restrictions is ever performed, meaning that the estimated transmission mechanism necessarily reflects the (arbitrary) way timing restrictions are framed and embedded into the underlying model specification. Conditional on such restrictions being operative, the model’s general equilibrium dynamics are then evaluated in response to cyclical variation in the systematic component of the monetary policy rule as well as to unexpected changes in the Fed funds rate (policy surprises).

We first conduct a battery of Monte Carlo experiments in order to evaluate the empirical size properties of the proposed test, explicitly considering two distinct scenarios: one where information-based timing restrictions produce non-negligible variation in the dynamic adjustment paths of non-policy variables to the non-systematic component of monetary policy; and the other where (with the exclusion of the zero effect on impact) the dynamic properties of the model are almost identical across informational structures (restricted vs. unrestricted). In either case, simulation results robustly indicate that the bootstrap-based approach manages to counterbalance the tendency of the standard LR test to over-reject the hypothesis of structural timing restrictions in small samples, with rejection frequencies close to the \(5\%\) nominal level.
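The empirical size reported in such experiments is the share of Monte Carlo replications, generated under \({\textsf{H}}_{0}\), in which the test rejects at the nominal level. A minimal sketch, assuming each replication delivers one p-value and using the binomial Monte Carlo standard error evaluated at the nominal level; the function name and the p-values in the example are hypothetical.

```python
import numpy as np

def empirical_size(p_values, alpha=0.05):
    """Share of replications rejecting H0 at level alpha (p <= alpha), plus the
    binomial Monte Carlo standard error sqrt(alpha (1 - alpha) / N) at the nominal level."""
    p_values = np.asarray(p_values, dtype=float)
    size = float(np.mean(p_values <= alpha))
    mc_se = float(np.sqrt(alpha * (1.0 - alpha) / p_values.size))
    return size, mc_se

# Hypothetical p-values from a correctly sized test: an even grid on (0, 1)
p_grid = np.linspace(0.005, 0.995, 100)
size, se = empirical_size(p_grid)
print(size, round(se, 4))   # 0.05 0.0218
```

With, say, 1000 replications the standard error at the 5% level is about 0.007, which gives a rough yardstick for judging whether a rejection frequency is close to the nominal level.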

We then use our likelihood-based testing approach to revisit the evidence on the transmission of monetary policy in the so-called Great Moderation period of U.S. macroeconomic history. Characterized by a sharp decline in macroeconomic volatility that began in the mid-1980s, the Great Moderation has attracted a great deal of attention from macroeconomists interested in uncovering the deep causes of this phenomenon. One main view, supported by both system-based and reduced-form evidence, has credibly attributed it to an active monetary policy that managed to stabilize inflationary expectations via commitment to a strong response of the nominal interest rate to deviations of the inflation rate from the policy target; see, among others, Clarida et al. (2000); Fanelli (2012); Hirose et al. (2020); Lubik and Schorfheide (2004). Our estimation results appear to lend support to the conventional (unrestricted) timing protocol, whereby monetary policy shocks (i.e. unexpected exogenous changes in the Federal funds rate) had contemporaneous effects on both inflation and output gap dynamics over the period from 1985 to 2008. This finding calls for some caution in interpreting the responses of inflation and real economic activity to the conduct of monetary policy as estimated in the earlier NK literature, which routinely adopted timing restrictions on the observability of policy shocks without testing them, e.g. Altig et al. (2011), Boivin and Giannoni (2006).

The remainder of the paper is organized as follows. Section 2 reviews the general state-space representation of the first-order approximate solution to DSGE models featuring timing restrictions. Section 3 introduces the testing problem and discusses the bootstrap algorithm used to test for the relevance of shock propagation delays in DSGE environments. Section 4 reports the outcome of our simulation experiments, whereas Sect. 5 presents an empirical application to the U.S. economy. Section 6 concludes.

2 Setup

2.1 General DSGE Model Representation

DSGE models are generally described by an \(n_f\)-dimensional stochastic difference system

where the random processes \(\left( y_t \right)\) and \(\left( x_t \right)\) are defined on the same filtered probability space, and \(E_t\) is the conditional expectation associated with the underlying probability measure. The \(n_y\)-dimensional vector y collects the model’s endogenous jump variables, whereas the \(n_x\)-dimensional vector x contains \(n^1_x\) endogenous predetermined variables as well as \(n^2_x\) exogenous states (where \(n^1_x+n^2_x=n_x\), \(n_y+n_x=n_f\)). Finally, the vector \(\theta\) collects the structural parameters and the scalar \(\sigma \ge 0\) is the perturbation parameter scaling the amount of uncertainty, see Schmitt-Grohé and Uribe (2004).

Let the prime superscript denote one-step-ahead variables. Under the common timing protocol, decision rules for all variables y depend on the whole set of states x. The linearly perturbed (first-order) solution to (1) then reads as

where the conformable matrix \(\kappa (\theta )\) loads the \(n^2_x\)-dimensional vector of structural economic shocks \(\epsilon \sim i.i.d.(0,I_{n^2_x})\) (e.g. preference shocks, supply-side shocks, policy innovations) on the state variables x, and the coefficient matrices \(g_x\) and \(h_x\) are evaluated at the non-stochastic steady state \(({\bar{y}}, {\bar{x}})\) solving (1) when \(\sigma =0\).
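To see how the objects of the linear solution interact, the sketch below simulates the decision rules and traces impulse responses. It assumes the standard Schmitt-Grohé and Uribe (2004) form \(y_t = g_x x_t\), \(x_{t+1} = h_x x_t + \sigma \kappa(\theta) \epsilon_{t+1}\), with variables in deviations from the steady state; the Gaussian draws, the function names and the scalar coefficients in the example are hypothetical. Under unrestricted timing the impact response of y to a shock is \(g_x \kappa\), which is generally non-zero.

```python
import numpy as np

def simulate_linear_solution(g_x, h_x, kappa, T, sigma=1.0, seed=0):
    """Simulate y_t = g_x x_t and x_{t+1} = h_x x_t + sigma * kappa eps_{t+1},
    starting from the steady state (x_0 = 0), with eps ~ iid N(0, I).
    Gaussian draws are an illustrative choice; the model only needs iid(0, I)."""
    rng = np.random.default_rng(seed)
    n_x, n_eps = kappa.shape
    x = np.zeros((T, n_x))
    for t in range(T - 1):
        x[t + 1] = h_x @ x[t] + sigma * kappa @ rng.standard_normal(n_eps)
    y = x @ g_x.T                        # y_t = g_x x_t, row by row
    return y, x

def impulse_response(g_x, h_x, kappa, shock, horizon):
    """Responses of y and x to a one-unit innovation in shock `shock` at t = 0."""
    x = kappa[:, shock].astype(float)    # impact effect on the states
    ys, xs = [], []
    for _ in range(horizon + 1):
        ys.append(g_x @ x)
        xs.append(x)
        x = h_x @ x                      # propagate the states one period ahead
    return np.array(ys), np.array(xs)

# Scalar illustration with hypothetical coefficients
g_x, h_x, kappa = np.array([[2.0]]), np.array([[0.5]]), np.array([[1.0]])
irf_y, irf_x = impulse_response(g_x, h_x, kappa, shock=0, horizon=3)
print(irf_y.ravel().tolist())   # [2.0, 1.0, 0.5, 0.25]
```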

2.2 DSGE Models Under Timing Restrictions

Following Kormilitsina (2013) and Sorge (2020), we are interested in a particular class of limited-information DSGE models, namely those in which some state variables are unobserved in the current period or observed with some lag, and where some endogenous variables that adjust to observed states can serve as additional states forcing variation in other endogenous variables. This approach acknowledges that what matters is not the date at which expectations are formed, but rather the date and the structure of the information set on which expectations are based. In this context, restricted (or limited) information means that, in the face of exogenous shocks that do not occur simultaneously, agents’ expectation formation and the ensuing decision rules are conditioned on different information sets. Accordingly, timing restrictions are naturally formalized by means of fictitious informational sub-periods, characterized by information sets that are heterogeneous across rational decision-makers and by the associated processes of expectation formation and timing of decisions.Footnote 3

Remarkably, being rooted in the theory of perturbation of non-linear systems, this approach allows one to embed the assumed set of timing restrictions directly into the non-linear equilibrium conditions that fully characterize a given DSGE model; approximate (up to second-order) optimal decision rules can then be derived on the basis of the imposed informational structure. To this end, let \(f = [f^y,\, f^x]'\) denote the set of \((n_y+n_x)\) equations of the model, and \({\mathcal {E}}_t\) the collection of (conditional) expectation operators accounting for the heterogeneous information sets, that is

where \(f^{(y, x)}_k\) (\(k\le n_y+n^1_x\)) is the model’s equation used to pin down the k-th endogenous variable in \((y, x^1)\), conditional on the equilibrium values of the other endogenous variables and the relevant states, for which model-consistent expectations (optimal projections) at date t are determined on the basis of the restricted (and in principle different across these equations) information set \(\mathbb {I}_{i, t}\), \(i \le n_y\); and \(f^{(x)}_j\) (\(j \le n^2_x\)) is the possibly nonlinear equation that governs the dynamics of the j-th exogenous state variable \(x_j\). Hence, one can make the DSGE model embody information-based timing restrictions simply by specifying the information sets \(\mathbb {I}_{i,t}\). We maintain, as assumed in the aforementioned literature, that all types of agents (indexed by the information sets \(\mathbb {I}_{i,t}\)) know the actual structure of the model and form expectations rationally. Unlike dynamic structures with persistently dispersed information, e.g. Kasa et al. (2014), the specification of information-based timing restrictions does not involve an infinite regress of expectations, and the underlying model’s representation will generically be finite-dimensional, see Angelini and Sorge (2021).

As detailed in the Appendix, structural timing restrictions in the DSGE setting can be modeled via system partitions of the form

where \(y_u \in y\) collects endogenous variables which respond to the whole set of state variables x, and the \(n_{y_r}\)-dimensional vector \(y_r \in y\) includes variables that can respond to the observed states \(x_u \in x\) and to the best (minimum mean square error) forecast of the unobserved states \(x_r \in x\), whose dynamics obey

where P is a stable square matrix of autoregressive coefficients, and \(\epsilon _{x_r}\) collects the exogenous shocks associated with the states \(x_r\).
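Assuming the unobserved states follow the VAR(1) law \(x_{r,t+1} = P x_{r,t} + \epsilon_{x_r,t+1}\) with zero-mean, serially uncorrelated shocks, the minimum mean square error forecast of \(x_{r,t}\) given information up to \(t-1\) is \(P x_{r,t-1}\), and its forecast error is exactly the current shock. The simulation below checks this with an illustrative P and unit-variance Gaussian shocks (both hypothetical), and compares it with a naive no-change forecast.

```python
import numpy as np

P = np.array([[0.9, 0.0],
              [0.2, 0.5]])                        # illustrative AR matrix
assert np.all(np.abs(np.linalg.eigvals(P)) < 1)   # stability check

rng = np.random.default_rng(1)
T = 20_000
eps = rng.standard_normal((T, 2))                 # shocks to x_r, Var = I (assumption)
x = np.zeros((T, 2))
for t in range(1, T):
    x[t] = P @ x[t - 1] + eps[t]

forecast = x[:-1] @ P.T          # MMSE forecast of x_{r,t} from t-1 info: P x_{r,t-1}
errors = x[1:] - forecast        # equals the shock eps_t up to rounding
naive = x[1:] - x[:-1]           # no-change forecast error, for comparison

print(np.allclose(errors, eps[1:]))                  # True
print(np.mean(errors**2) < np.mean(naive**2))        # True
```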

The non-linear recursive RE solution under timing restrictions is

where the coefficient matrices

are readily constructed via linear transformations of those entering (2)—please see the Appendix for full details on the solution method up to first-order of approximation, and Kormilitsina (2013) for a complete reference and examples.

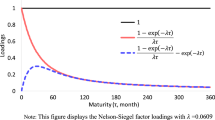

We remark that timing restrictions enrich the autocovariance patterns of the endogenous variables: matrices \({\hat{h}}_{x_r}\) and \({\hat{h}}_{x_{r,-1}}\) (and thereby \({\hat{g}}_{x_r}\) and \({\hat{g}}_{x_{r,-1}}\)) will generally differ from those implied by the counterpart model with unrestricted timing, see Angelini and Sorge (2021). In particular, dynamic impulse responses and other statistics will depend (among other things) on the structure of the matrix \({\hat{j}}_{x_r}\), which maps exogenous states \(x_r\) into partially endogenous variables \(y_r\), and on that of the matrix \({\hat{g}}_{x_{r,-1}}\), which governs the dependence of the fully endogenous variables \(y_u\) on the lagged states \(x_{r,-1}\). Information contained in the likelihood function can therefore be exploited to draw (classical or Bayesian) inference about the relevance of delayed propagation for the shock(s) of interest.Footnote 4
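A deliberately stylized scalar example makes the zero-impact mechanism concrete. It is not the model's solution: we assume \(x_{r,t} = p\, x_{r,t-1} + \epsilon_t\) and let a single variable \(y_r\) load either on \(x_{r,t}\) itself (unrestricted timing) or on its best forecast from \(t-1\) information, \(p\, x_{r,t-1}\) (restricted timing). The loadings and the function name are hypothetical stand-ins for the general-equilibrium coefficients, which generally differ across timing protocols.

```python
import numpy as np

def irf_scalar(p, loading, horizon, restricted):
    """Response of y_r to a unit shock in x_r at t = 0, with x_{r,t} = p x_{r,t-1} + eps_t.
    Unrestricted timing: y_{r,t} = loading * x_{r,t}.
    Restricted timing:   y_{r,t} = loading * p * x_{r,t-1}, the forecast of x_{r,t}
    given information up to t - 1 (the current shock is not yet observed)."""
    x_path = p ** np.arange(horizon + 1)            # x_r after the shock: 1, p, p^2, ...
    if not restricted:
        return loading * x_path
    x_lag = np.concatenate(([0.0], x_path[:-1]))    # x_{r,t-1}, zero before the shock
    return loading * p * x_lag

# Hypothetical loadings: 1.0 (unrestricted) and 0.8 (restricted)
print(irf_scalar(0.5, 1.0, 3, restricted=False).tolist())  # [1.0, 0.5, 0.25, 0.125]
print(irf_scalar(0.5, 0.8, 3, restricted=True).tolist())   # [0.0, 0.4, 0.2, 0.1]
```

From period 1 onward both paths decay at rate p, so in this toy case the informational restriction shows up as a missing impact response plus a rescaled loading; in the full model the lagged-state matrices add further differences in the propagation pattern.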

To frame our testing procedure, we contrast the structural form (6), which embodies the timing restrictions, with the following state-space counterpart

with the non-zero parameters in \({\tilde{h}}_{x}\) and \({\tilde{g}}_{x}\) collected in the vector \(\phi\) (i.e. \({\tilde{g}}_{x}={\tilde{g}}_{x}(\phi )\) and \({\tilde{h}}_{x}={\tilde{h}}_{x}(\phi )\)). Notice that, when the P matrix is non-empty, the time series representation for the endogenous variables y in (6) is in VARMA-type form, even when its unrestricted counterpart (2) admits a finite-order VAR representation. The testing strategy discussed below exploits the Kalman filter to evaluate the likelihood function associated with the minimal state-space representation of the system (6) under the implicit non-linear CERs embodied in (7), maintaining that the regularity (identification) conditions for standard asymptotic inference in the state-space representation of the DSGE model are valid both under the null and the alternative.Footnote 5
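Evaluating the likelihood of a linear Gaussian state-space model with the Kalman filter rests on the prediction-error decomposition. The sketch below is a minimal, unoptimized version in generic notation (an assumption, not the authors' code): A and C play the roles of the transition and observation matrices (for instance \({\tilde{h}}_{x}\) and \({\tilde{g}}_{x}\)), Q is the covariance of the state shocks, R an optional measurement-error covariance, and the filter is initialized at \(x_{1\mid 0} \sim N(x_0, P_0)\). The check at the end compares it with the exact likelihood of a stationary AR(1) observed without noise.

```python
import numpy as np

def kalman_loglik(y, A, C, Q, R, x0, P0):
    """Gaussian log-likelihood of y (T x n_y) for
        x_{t+1} = A x_t + w_t,  w_t ~ N(0, Q)
        y_t     = C x_t + v_t,  v_t ~ N(0, R)
    with x_1 ~ N(x0, P0), via the prediction-error decomposition."""
    x, P = np.array(x0, dtype=float), np.array(P0, dtype=float)
    n_y = y.shape[1]
    loglik = 0.0
    for y_t in y:
        eps = y_t - C @ x                        # innovation y_t - E[y_t | t-1]
        S = C @ P @ C.T + R                      # innovation covariance
        S_inv = np.linalg.inv(S)
        _, logdet = np.linalg.slogdet(S)
        loglik -= 0.5 * (n_y * np.log(2.0 * np.pi) + logdet + eps @ S_inv @ eps)
        K = A @ P @ C.T @ S_inv                  # gain in innovation form
        x = A @ x + K @ eps                      # x_{t+1|t}
        P = A @ P @ A.T + Q - K @ S @ K.T        # P_{t+1|t}
    return loglik

def norm_logpdf(v, var):
    """Log density of N(0, var) at v."""
    return -0.5 * (np.log(2.0 * np.pi * var) + v**2 / var)

# Check: AR(1) with rho = 0.7, observed exactly, stationary initialization
rho = 0.7
y = np.array([[0.3], [-0.1], [0.8], [0.5]])
A, C = np.array([[rho]]), np.array([[1.0]])
Q, R = np.array([[1.0]]), np.array([[0.0]])
P0 = np.array([[1.0 / (1.0 - rho**2)]])
ll_kf = kalman_loglik(y, A, C, Q, R, np.zeros(1), P0)
ll_exact = norm_logpdf(y[0, 0], P0[0, 0]) + sum(
    norm_logpdf(y[t, 0] - rho * y[t - 1, 0], 1.0) for t in range(1, len(y)))
print(np.isclose(ll_kf, ll_exact))  # True
```

For production use, a Cholesky solve in place of the explicit inverse and a symmetrization of P at each step would improve numerical robustness.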

2.3 On the Properties of the RE Equilibrium Under Timing Restrictions

As pointed out in Hespeler and Sorge (2019) and acknowledged in Kormilitsina (2019), different informational partitions typically generate different CERs in equilibrium representations of RE models. In the presence of informational constraints, solving for the endogenous variables requires consistency between optimal projections determined on the basis of restricted information sets and conditional expectations which, by contrast, exploit full information. This in turn may over-constrain the RE forecast errors associated with the model exhibiting timing restrictions, and thus affect the latter’s dynamic stability properties. Recall that the first-order approximate RE solution can be fully characterized by a sequence of RE forecast errors under which the dynamics of the endogenous variables are non-explosive, see e.g. Sims (2002). As a consequence, different ways of restricting the informational structure may have distinct effects on the ability of RE forecast errors to neutralize the model’s unstable behavior. Sorge (2020) provides technical conditions under which, on the assumption that the unrestricted (full information) RE model exhibits saddle-path stability, the model counterpart with timing restrictions admits a locally unique RE equilibrium (i.e. conditions under which the determinacy property is preserved across informational structures). Such conditions hold generically (in the space of the model’s parameters) in nearly all cases of interest, and we explicitly check them in the simulation/estimation exercises reported below.

It is also worth emphasizing that, by their very nature, DSGE models with timing restrictions do not overlap with linear RE models where two types of agents permanently display asymmetric information, such as those examined in e.g. Lubik et al. (2023). In the presence of timing restrictions, fully informed agents observe the histories of all exogenous and endogenous variables, while less informed agents observe only a strict subset of the full information set and need to solve a simple signal extraction problem to gather information about the shocks/states that have not yet materialized and the endogenous variables that have not yet been decided upon. Once all the informational sub-periods within a given unit of time have occurred, information sets are perfectly aligned, and filtering estimates of previously unobserved variables are replaced by the realized shocks, states and endogenous outcomes, on which the current optimal decisions (based on the current unfolding of informational sub-periods) are taken. One distinctive feature of this setting is that a rank condition that is necessary for the existence of RE equilibria (as formalized in Klein 2000) may fail to hold, implying non-existence of dynamically stable solutions to the model exhibiting timing restrictions; however, when an RE solution exists in the restricted information environment, it is certainty equivalent and generically (in a measure-theoretic sense) unique, provided the full information model admits a determinate RE equilibrium, see Sorge (2020).

3 Testing Procedure

We consider the testing problem

by an LR test. The null \({\textsf{H}}_{0}\) incorporates the timing restrictions encoded in the informational partition (4). Let \(\ell _{T}(\phi )\) and \(\ell _{T}(\theta )\) denote the log-likelihoods of the DSGE model under \({\textsf{H}}_{1}\) and \({\textsf{H}} _{0}\), respectively, and \({\hat{\phi }}_{T}=\arg \max _{\phi \in {\mathcal {P}} _{\phi }}\ell _{T}(\phi )\) and \({\hat{\theta }}_{T}=\arg \max _{\theta \in {\mathcal {P}}^{D}}\ell _{T}(\theta )\) be the ML estimators of \(\phi\) and \(\theta\). Estimation of the model under the null (\({\textsf{H}}_{0}\)) and under the alternative (\({\textsf{H}}_{1}\)) is a preliminary step to the computation of the LR test, which reads as

$$\begin{aligned} LR_{T}({\hat{\theta }}_{T})=-2[\ell _{T}({\hat{\theta }}_{T})-\ell _{T}({\hat{\phi }}_{T})]; \end{aligned} \tag{10}$$

see the Appendix for details on the derivation of the LR statistic. The asymptotic properties of the test statistic \(LR_{T}\) are intimately related to those of \({\hat{\theta }}_{T}\) and \({\hat{\phi }}_{T}\), which crucially depend on whether the regularity conditions for inference are valid in the estimated DSGE model.
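For reference, the statistic \(LR_{T} = -2[\ell _{T}({\hat{\theta }}_{T})-\ell _{T}({\hat{\phi }}_{T})]\) and its first-order asymptotic p-value can be computed as below, using the chi-square survival function from SciPy. The degrees of freedom are not pinned down in this section and, in state-space models, depend on how identification is handled (see the discussion of Komunjer and Zhu 2020 in the introduction), so the sketch treats `df` as a user input; the log-likelihood values are made up.

```python
from scipy.stats import chi2

def lr_test(loglik_null, loglik_alt, df):
    """LR = -2 [l_T(theta_hat) - l_T(phi_hat)] with its asymptotic chi-square p-value.
    df must be supplied by the user (it depends on identification of the state-space model)."""
    lr = -2.0 * (loglik_null - loglik_alt)
    return lr, float(chi2.sf(lr, df))

# Hypothetical maximised log-likelihoods under H0 (restricted) and H1 (unrestricted)
lr, p = lr_test(-100.0, -97.0, df=2)
print(lr, round(p, 4))   # 6.0 0.0498
```

This asymptotic p-value is exactly the quantity whose small-sample over-rejection motivates replacing the chi-square reference distribution with a bootstrap one.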

To improve inference in small samples, we employ a nonparametric ‘restricted bootstrap’ algorithm, in which the bootstrap samples are generated using the parameter estimates \({\hat{\theta }}_{T}\) obtained under \({\textsf{H}}_{0}\). The LR test statistic \(LR_{T}({\hat{\theta }}_{T})\), computed as in Eq. (10), is stored along with \({\hat{\theta }}_{T}\). Our procedure is described by the following algorithm, where steps 1–4 define the bootstrap sample, the bootstrap parameter estimators and the related bootstrap LR statistic, and steps 5–7 describe the numerical computation of the p-value associated with the bootstrap LR test.

1. Let the superscript ‘0’ denote any item obtained from the application of the Kalman filter to the state-space representation of the DSGE model under the null \({\textsf{H}}_{0}\). Given the innovation residuals \({\hat{\epsilon }}_{t}^{0}=y_{t}-{\hat{g}}_{x}({\hat{\theta }}_T) {\hat{x}}_{t\mid t-1}\) and the estimated covariance matrices \({\hat{\Sigma }}_{\epsilon ^{0},t}\) produced by the estimation of the restricted DSGE model, construct the standardized innovations as

$$\begin{aligned} {\hat{e}}_{t}^{0}={\hat{\Sigma }}_{\epsilon ^{0},t}^{-1/2}{\hat{\epsilon }}_{t}^{0,c}, \quad t=1,\ldots ,T, \end{aligned} \tag{11}$$

where \({\hat{\Sigma }}_{\epsilon ^{0},t}^{-1/2}\) is the inverse of the square-root matrix of \({\hat{\Sigma }}_{\epsilon ^{0},t}\) and \({\hat{\epsilon }} _{t}^{0,c}\), \(t=1,\ldots ,T\), are the centered residuals \({\hat{\epsilon }}_{t}^{0,c}={\hat{\epsilon }}_{t}^{0}-T^{-1}\sum \nolimits _{t=1}^{T}{\hat{\epsilon }}_{t}^{0}\);

2. Sample, with replacement, T times from \({\hat{e}}_{1}^{0},{\hat{e}}_{2}^{0},\ldots ,{\hat{e}}_{T}^{0}\) to obtain the bootstrap sample of standardized innovations \(e_{1}^{*},e_{2}^{*},\ldots ,e_{T}^{*}\);

3. Mimicking the innovation-form representation of the DSGE model, generate the bootstrap sample \(y_{1}^{*},y_{2}^{*},\ldots ,y_{T}^{*}\) recursively by solving, for \(t=1,\ldots ,T,\) the system

$$\begin{aligned} \left( \begin{array}{c} {\hat{x}}_{t+1\mid t}^{*} \\ y_{t}^{*} \end{array} \right) =\left( \begin{array}{cc} {\hat{h}}_{x}({\hat{\theta }}_T) &{} 0_{n_{m}\times n_{y}} \\ {\hat{g}}_{x}({\hat{\theta }}_T) &{} 0_{n_{y}\times n_{y}} \end{array} \right) \left( \begin{array}{c} {\hat{x}}_{t\mid t-1}^{*} \\ y_{t-1}^{*} \end{array} \right) +\left( \begin{array}{c} K_{t}({\hat{\theta }}_T){\hat{\Sigma }}_{\epsilon ^{0},t}^{1/2} \\ {\hat{\Sigma }}_{\epsilon ^{0},t}^{1/2} \end{array} \right) e_{t}^{*}\, \end{aligned} \tag{12}$$

with initial condition \({\hat{x}}_{1\mid 0}^{*}={\hat{x}}_{1\mid 0}\);

4. From the generated pseudo-sample \(y_{1}^{*},y_{2}^{*},\ldots ,y_{T}^{*}\), estimate the DSGE model under \({\textsf{H}}_{0}\), obtaining the bootstrap estimator \({\hat{\theta }}_{T}^{*}\) and the associated log-likelihood \(\ell _{T}^{*}({\hat{\theta }}_T^{*})\), and estimate the DSGE model under \({\textsf{H}}_{1}\), obtaining the bootstrap estimator \({\hat{\phi }}_{T}^{*}\) and the associated log-likelihood \(\ell _{T}^{*}({\hat{\phi }}_{T}^{*})\); the bootstrap LR test statistic for the CERs is

$$\begin{aligned} LR_{T}^{*}({\hat{\theta }}_{T}^{*})=-2[\ell _{T}^{*}({\hat{\theta }}_T^{*})-\ell _{T}^{*}({\hat{\phi }}_{T}^{*})]; \end{aligned} \tag{13}$$

5. Repeat steps 2–4 B times in order to obtain B bootstrap realizations of \({\hat{\theta }}_{T}\) and \({\hat{\phi }}_{T}\), say \(\{{\hat{\theta }}_{T:1}^{*},{\hat{\theta }}_{T:2}^{*},\ldots ,{\hat{\theta }}_{T:B}^{*}\}\) and \(\{{\hat{\phi }}_{T:1}^{*},{\hat{\phi }}_{T:2}^{*},\ldots ,{\hat{\phi }}_{T:B}^{*}\}\), and the B bootstrap realizations of the associated bootstrap LR test, \(\{LR_{T:1}^{*},LR_{T:2}^{*},\ldots ,LR_{T:B}^{*}\}\), where \(LR_{T:b}^{*}=LR_{T}^{*}({\hat{\theta }}_{T:b}^{*})\), \(b=1,\ldots ,B\);

6. Compute the bootstrap p-value of the test of the timing restrictions as

$$\begin{aligned} {\widehat{p}}_{T,B}^{*}={\hat{G}}_{T,B}^{*}(LR_{T}({\hat{\theta }}_{T}))\quad , \quad {\hat{G}}_{T,B}^{*}(\delta )=B^{-1}\sum _{b=1}^{B} \mathbb {I}\{LR_{T:b}^{*}>\delta \}, \end{aligned} \tag{14}$$

where \(\mathbb {I}\left\{ \cdot \right\}\) is the indicator function;

7. The bootstrap LR test for the timing restrictions at the \(100\eta \%\) (nominal) significance level rejects \({\textsf{H}}_{0}\) if \({\widehat{p}}_{T,B}^{*}\le \eta\).

4 Simulation Experiments

4.1 Model

We showcase the usefulness of our testing procedure by running a series of Monte Carlo simulation experiments based on the hybrid NK model put forward by Benati and Surico (2009) in their exploration of the causes of the so-called Great Moderation.

The canonical NK model with unrestricted timing is fully characterized by the following system of equations

where

The variables \(g_{t}\), \(\pi _{t}\), and \(i_{t}\) stand for the output gap, inflation, and the nominal interest rate, respectively; \(\gamma\) weights the forward-looking component in the dynamic IS curve; \(\alpha\) is price setters’ extent of indexation to past inflation; \(\delta\) is the intertemporal elasticity of substitution in consumption; \(\kappa\) is the slope of the Phillips curve; \(\rho\), \(\varphi _{\pi }\), and \(\varphi _{g}\) are the interest rate smoothing coefficient, the long-run coefficient on inflation, and that on the output gap in the monetary policy rule, respectively; finally, \(\omega ^g_{t}\), \(\omega ^\pi _{t}\) and \(\omega ^i_{t}\) in Eq. (18) are the mutually independent, asymptotically stable AR(1) exogenous shock processes and \(\epsilon ^g_{t}\), \(\epsilon ^\pi _{t}\) and \(\epsilon ^i_{t}\) are the structural innovations.

Exploiting the general DSGE model representation under timing restrictions (3), the restricted NK model takes the form:

where \({\mathcal {E}}_{j,t}=E(\cdot \, | \, \mathbb {I}_{j,t})\), \(j=g,\pi ,i\) is the rational (model-consistent) expectation operator conditioned on the information set \(\mathbb {I}_{j,t}\), with

i.e. private agents do not observe the current monetary policy shock \(\epsilon ^i_t\) and thus cannot infer the current value of the nominal interest rate \(i_t\), which they can only project as a function of the observables in their information set; and

i.e. the monetary policy authority observes at time t the entire history of all the endogenous and exogenous variables up to time t.Footnote 6

Clearly, the information partition supporting this set of timing restrictions is

where

and

Accordingly, the computation of the RE equilibrium under timing restrictions requires the following assignment of variables:

As is known, the model (15)–(18) can admit a continuum of asymptotically stable equilibria (equilibrium indeterminacy) depending on the strength of the monetary authority’s response to inflation. Under these circumstances, short-run dynamics for the endogenous variables can be arbitrarily driven by both structural and non-structural (sunspot) shocks, see e.g. Lubik and Schorfheide (2003). We remark that the NK model under timing restrictions displays, generically in the space of admissible parameters, a locally unique (determinate) RE solution whenever its unrestricted counterpart does. In our Monte Carlo simulation experiments, we explicitly confine attention to the determinate equilibrium version of Benati and Surico (2009)’s model, so that, on the assumption that the structural model is correctly specified, variation in the likelihood across the two information structures (restricted vs. unrestricted) can be ascribed solely to the presence of timing restrictions.Footnote 7

4.2 Monte Carlo Simulations

In this section we investigate the empirical performance of the bootstrap test using the NK structure (15)–(18) as our data generating process (DGP). More specifically, we consider two DSGE-based equilibrium state space representations, denoted as DGP under timing restrictions and DGP with unrestricted timing, respectively. In the former, it is assumed that the data are generated by the determinate equilibrium representation that emerges in the presence of structural timing restrictions embodied in (23); in the latter, artificial series are rather generated by allowing for contemporaneous effects of policy innovations on the inflation rate and the output gap (i.e. when no informational constraints are at work), again imposing equilibrium determinacy. Assuming Gaussian distributions for the structural shocks, and for given initial values, the ML estimation of the model’s parameters, as a preliminary step to the construction of the bootstrap-based LR test, is carried out iteratively by means of a standard BFGS quasi-Newton optimization method, as described in Bårdsen and Fanelli (2015). For either experiment, the nominal significance level is set to \(5\%\).
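The quasi-Newton step can be sketched in Python with SciPy's `minimize(..., method="BFGS")`. The sketch is deliberately reduced: instead of the Kalman-filter likelihood of the full NK state space, it estimates a single AR(1) shock process of the type in Eq. (18) by conditional Gaussian ML. The tanh/exp reparameterisation, which keeps \(|\rho|<1\) and \(\sigma>0\) while letting BFGS search an unconstrained space, is an assumption of this example and not necessarily the transformation used by the authors or by Bårdsen and Fanelli (2015).

```python
import numpy as np
from scipy.optimize import minimize


def neg_loglik(params, w):
    """Negative conditional Gaussian log-likelihood of w_t = rho * w_{t-1} + eps_t."""
    a, b = params
    rho, sigma = np.tanh(a), np.exp(b)        # |rho| < 1 and sigma > 0 by construction
    resid = w[1:] - rho * w[:-1]
    n = resid.size
    return 0.5 * n * np.log(2.0 * np.pi * sigma ** 2) + 0.5 * np.sum(resid ** 2) / sigma ** 2


def fit_ar1_bfgs(w, start=(0.0, 0.0)):
    """Maximise the likelihood by BFGS in the unconstrained (a, b) space."""
    res = minimize(neg_loglik, x0=np.asarray(start, dtype=float), args=(w,), method="BFGS")
    a_hat, b_hat = res.x
    return np.tanh(a_hat), np.exp(b_hat), -res.fun
```

In this toy case the conditional ML estimate of \(\rho\) coincides with OLS, which gives a convenient check on the optimiser.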

In a first experiment, we allow timing restrictions to produce quantitatively non-negligible differences in the propagation of the monetary policy shock relative to the unrestricted model. To this aim, we let the structural innovations display relatively high dispersion (\(\sigma _j=2\), \(j=g,\pi ,i\)), and assign a markedly larger persistence to the monetary shock process (\(\rho _i=0.9>0.1=\rho _j\), \(j=g,\pi\)). We then investigate the empirical size of the LR test, using the restricted model as the actual DGP (column DGP under timing restrictions), and its power, when the unrestricted model serves as the underlying DGP (column DGP with unrestricted timing). For either DGP we consider \(K=500\) simulations and a sample size \(T \in \left\{ 100,500\right\}\) with a burn-in of 200 observations.
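As a rough illustration of the first design, the Python sketch below simulates only the exogenous block: three independent AR(1) shock processes with \(\rho_g=\rho_\pi=0.1\), \(\rho_i=0.9\) and \(\sigma_j=2\), discarding a burn-in of 200 observations. Obtaining actual artificial series would further require passing these processes through the restricted or unrestricted NK solution, which the sketch does not attempt. The helper `unconditional_sd`, based on the standard AR(1) formula \(\sigma_j/\sqrt{1-\rho_j^2}\), makes the asymmetry explicit: roughly 4.59 for the monetary shock process against roughly 2.01 for the other two. Function names are illustrative.

```python
import numpy as np


def simulate_ar1_shocks(rhos, sigmas, T, burn_in=200, seed=0):
    """Independent AR(1) processes w_t = rho * w_{t-1} + eps_t, eps_t ~ N(0, sigma^2)."""
    rng = np.random.default_rng(seed)
    rhos = np.asarray(rhos, dtype=float)
    sigmas = np.asarray(sigmas, dtype=float)
    n_total = T + burn_in
    eps = rng.standard_normal((n_total, rhos.size)) * sigmas
    w = np.zeros((n_total, rhos.size))
    for t in range(1, n_total):
        w[t] = rhos * w[t - 1] + eps[t]
    return w[burn_in:]         # drop burn-in so the zero starting value is forgotten


def unconditional_sd(rho, sigma):
    """Stationary standard deviation of an AR(1) process."""
    return sigma / np.sqrt(1.0 - rho ** 2)


# Ordering (g, pi, i) with the first-experiment calibration
RHOS, SIGMAS = (0.1, 0.1, 0.9), (2.0, 2.0, 2.0)
shocks = simulate_ar1_shocks(RHOS, SIGMAS, T=100)
```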

We estimate five key structural parameters on artificial data: \(\delta\) (shaping the intertemporal channel of monetary policy transmission to non-policy variables); \(\kappa\) (governing the output-inflation trade-off faced by central banks); and the inertial parameters \(\gamma\), \(\alpha\) (both relevant for stabilization goals) and \(\rho\) (capturing policy persistence). Other parameters are calibrated to Benati and Surico (2009)’s posterior median estimates over the Great Moderation—see Table 1.

Results are summarized in Table 2, which reports the estimates for the subset of structural parameters \(\theta ^s=(\kappa , \gamma , \alpha , \rho , \delta ^{-1})'\) from the state-space form (6), when the DGP complies with either information structure (restricted vs. unrestricted). We notice, first, that sample estimates across information structures are roughly equal, speaking in favour of identification of the population deep parameters (which, by definition, are invariant with respect to the timing of decisions). Second, the bootstrap tends to mitigate the discrepancy between actual and nominal probabilities of type-I error. Indeed, when asymptotic critical values taken from the \(\chi _{x}^{2}\) distribution (\(x=\dim (\phi )-\dim (\theta )\)) are employed, the rejection frequency of the LR test for the timing restrictions is \(7.2\%\) and \(11.2\%\) for \(T=500\) and 100 respectively. Therefore, in finite samples our bootstrap-based approach attenuates the tendency of the asymptotic LR test to over-reject the CERs associated with the restricted timing protocol, with rejection frequencies close to the \(5\%\) level. Remarkably, the bootstrap test also shows satisfactory power (column DGP with unrestricted timing).
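The size comparison rests on rejection frequencies computed over \(K\) Monte Carlo replications. A small Python sketch of the two ingredients follows: the asymptotic rule based on the \(\chi_{x}^{2}\) critical value, with \(x=\dim(\phi)-\dim(\theta)\), and the binomial Monte Carlo standard error of an estimated rejection frequency. The function names are illustrative; `scipy.stats.chi2.ppf` is SciPy's quantile function.

```python
import numpy as np
from scipy.stats import chi2


def asymptotic_reject(lr, df, eta=0.05):
    """Reject H0 if LR exceeds the (1 - eta) quantile of chi2(df), df = dim(phi) - dim(theta)."""
    return lr > chi2.ppf(1.0 - eta, df)


def rejection_frequency(p_values, eta=0.05):
    """Share of Monte Carlo replications in which the test rejects at level eta."""
    return float(np.mean(np.asarray(p_values, dtype=float) <= eta))


def mc_standard_error(freq, K):
    """Binomial standard error of a rejection frequency estimated from K replications."""
    return float(np.sqrt(freq * (1.0 - freq) / K))
```

With \(K=500\) and a true rejection probability of \(5\%\), the Monte Carlo standard error is about 0.97 percentage points, so an approximate 95% band is roughly 3.1% to 6.9%. On this back-of-the-envelope reading, the \(7.2\%\) asymptotic rejection frequency for \(T=500\) lies just outside the band, and the \(11.2\%\) for \(T=100\) lies well outside it.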

On empirical grounds, a standard deviation \(\sigma _i=2\) for the monetary policy shock is implausibly large compared both to the historically observed 0.25 ppt increments in central banks’ interest rates and to estimates from structural VARs, which tend to be much smaller (at least from the onset of the Great Moderation until the recent upsurge in aggregate prices). This observation motivates our second experiment, where structural disturbances driving short-run dynamics are assumed to exhibit low volatility (standard deviation equal to 0.1), and all the structural parameters are calibrated to Benati and Surico (2009)’s posterior median estimates over the Great Moderation (see Table 3). In this scenario, with the exception of the (mechanically arising) zero on-impact effect of the monetary policy innovation on the inflation rate and the output gap when timing restrictions are at work, the impulse response functions of the model are almost identical across informational structures (restricted vs. unrestricted), making it relatively harder to distinguish between the two. This notwithstanding, the bootstrap LR test is able to detect the presence of timing restrictions in the underlying DGP (column DGP under timing restrictions), with rejection frequencies approaching the pre-fixed nominal level (see Table 4).

5 Empirical Application

In this section we employ our bootstrap-based testing strategy to evaluate the empirical relevance of timing restrictions in a particular historical juncture of the U.S. economy, i.e. the so-called Great Moderation. Given their focus on empirically evaluating the effectiveness of monetary policy in the US post-WWII macroeconomic history, several studies have developed small- to medium-scale frameworks with recursive timing, under which the model’s responses to a monetary innovation are zero on impact, in keeping with the recursive (Cholesky-type) identification scheme in sVAR systems, in order to pave the way for the implementation of a straightforward impulse response matching procedure—see e.g. Altig et al. (2011), Guerron-Quintana et al. (2017), Rotemberg and Woodford (1997).

In our application, we closely follow Bårdsen and Fanelli (2015) and perform an ML estimation of Benati and Surico (2009)’s NK monetary business cycle model on U.S. quarterly data for the 1985Q1–2008Q3 window (\(T=95\) observations, not including initial lags); the observables include the natural rate of output (proxied by the official measure from the Congressional Budget Office), real GDP, the inflation rate (quarterly growth rate of the GDP deflator) and the short-term nominal interest rate (effective federal funds rate, quarterly averages of monthly values). We intentionally disregard the zero lower bound phase, beginning in December 2008, which entailed non-standard policy measures by the Federal Reserve that are not consistent with the feedback rule embodied in the interest rate equation (17). The length of the chosen sample also reflects our intention of focusing on a determinate (locally unique) RE equilibrium, see e.g. Castelnuovo and Fanelli (2015), and allows us to emphasize the empirical appeal of the bootstrap-based LR test in short data samples.
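A short Python sketch of the data bookkeeping: it checks that the 1985Q1–2008Q3 window contains \(T=95\) quarters and shows one possible way of building the observables. The exact transformations are assumptions of this example, not a description of the authors' data files: the output gap is taken as 100 times the log deviation of real GDP from the CBO potential-output proxy, inflation as 100 times the quarterly log change of the GDP deflator (not annualised), and the policy rate as the quarterly average of monthly effective federal funds rates.

```python
import numpy as np


def quarters_inclusive(start, end):
    """Number of quarters from start to end, both given as (year, quarter), endpoints included."""
    (y0, q0), (y1, q1) = start, end
    return 4 * (y1 - y0) + (q1 - q0) + 1


def output_gap(real_gdp, potential_gdp):
    # Assumed definition: 100 x log deviation of real GDP from the CBO potential-output proxy
    return 100.0 * (np.log(real_gdp) - np.log(potential_gdp))


def quarterly_inflation(deflator):
    # Assumed definition: 100 x quarterly log change of the GDP deflator (not annualised)
    return 100.0 * np.diff(np.log(np.asarray(deflator, dtype=float)))


def quarterly_average(monthly):
    # Quarterly mean of monthly effective fed funds rates; length must be a multiple of 3
    return np.asarray(monthly, dtype=float).reshape(-1, 3).mean(axis=1)
```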

As mentioned, a main disadvantage of classical estimation methods for DSGE models compared to Bayesian techniques lies in the difficulty of handling identification failure for some of the structural parameters of the model \(\theta\), and their reduced form analogs \(({\hat{g}}_x(\theta ), {\hat{h}}_x(\theta ))\), given the non-linear mapping induced by the CERs (identification in population); the relationship between the structural parameters and the sample objective function (here, the likelihood function) is also problematic, for strong identification can be precluded by the nature and size of available data (sample identification).Footnote 8 Besides, given the tight set of (sign and bound) restrictions that theory typically imposes on structural parameters (e.g. the slope of the Phillips curve), estimation approaches that do not exploit prior information may well fail to safeguard against economically implausible estimates, see e.g. An and Schorfheide (2007). To partially address these concerns, the parameter vector \(\theta\) is split into two sub-vectors: \(\theta ^{ng}=(\gamma , \rho , \rho _g, \rho _{\pi }, \rho _i)'\), whose elements are estimated directly via the ML algorithm, and \(\theta ^g=(\delta ^{-1}, \alpha , \kappa , \varphi _g, \varphi _{\pi }, \sigma _j)'\), whose elements are fine-tuned via a numerical grid-search method over pre-specified ranges. Operationally, the log-likelihood function associated with the NK model featuring timing restrictions is maximized over the parameters in \(\theta ^{ng}\) conditional on random draws (15,000 points) from a uniform distribution for each of the parameters in \(\theta ^g\); optimal estimates for the latter are then selected as those that maximize the log-likelihood evaluated at the ML estimate for the free parameters \(\theta ^{ng}\) (see the Notes to Table 5).
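The two-block strategy, uniform random draws for \(\theta^g\) and ML for \(\theta^{ng}\) conditional on each draw, can be sketched in Python on a toy AR(1) likelihood. Here the hypothetical split puts the persistence \(\rho\) in the ML block and the innovation standard deviation \(\sigma\) in the grid block; the draw count (300 rather than 15,000), the ranges and the bounded scalar optimiser are all choices of this example. As in the text, the retained draw is the one delivering the highest log-likelihood at the conditional ML estimate.

```python
import numpy as np
from scipy.optimize import minimize_scalar


def loglik(rho, sigma, w):
    """Conditional Gaussian log-likelihood of the toy AR(1) model."""
    resid = w[1:] - rho * w[:-1]
    n = resid.size
    return -0.5 * n * np.log(2.0 * np.pi * sigma ** 2) - 0.5 * np.sum(resid ** 2) / sigma ** 2


def neg_loglik_rho(rho, sigma, w):
    """Objective for the ML block: minimise over rho with sigma held fixed."""
    return -loglik(rho, sigma, w)


def grid_then_ml(w, sigma_range=(0.5, 5.0), n_draws=300, seed=0):
    """Random uniform draws for the 'grid' block (sigma), ML for the free block (rho)."""
    rng = np.random.default_rng(seed)
    best_ll, best_rho, best_sigma = -np.inf, None, None
    for sigma in rng.uniform(sigma_range[0], sigma_range[1], size=n_draws):
        res = minimize_scalar(neg_loglik_rho, bounds=(-0.99, 0.99),
                              args=(sigma, w), method="bounded")
        if -res.fun > best_ll:             # keep the draw with the highest log-likelihood
            best_ll, best_rho, best_sigma = -res.fun, res.x, sigma
    return best_ll, best_rho, best_sigma
```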
A rank condition for local identifiability (in population), based on the differentiation of the CERs, is then checked along the lines of Iskrev (2010).Footnote 9
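The logic of the Iskrev (2010) check can be sketched in Python: form the Jacobian of the model-implied second moments of the observables with respect to \(\theta\), and verify that it has full column rank at the point of interest. In this toy version the "model" is a scalar AR(1) whose autocovariances are \(\gamma_k=\sigma^2\rho^k/(1-\rho^2)\); a deliberately over-parameterised variant, in which \(\sigma\) is replaced by the hypothetical product \(a\,b\), fails the condition. Central finite differences and the rank tolerance are choices of this example; in the NK application the moments would come from the state-space solution.

```python
import numpy as np


def autocov_moments(theta, n_lags=4):
    """Autocovariances gamma_k = s^2 rho^k / (1 - rho^2), k = 0..n_lags, of an AR(1) observable."""
    rho, s = theta
    k = np.arange(n_lags + 1)
    return s ** 2 * rho ** k / (1.0 - rho ** 2)


def autocov_moments_overparam(theta, n_lags=4):
    """Hypothetical variant in which only the product a * b plays the role of s."""
    rho, a, b = theta
    return autocov_moments((rho, a * b), n_lags)


def numerical_jacobian(f, theta, h=1e-6):
    """Central finite-difference Jacobian of the moment vector f with respect to theta."""
    theta = np.asarray(theta, dtype=float)
    f0 = f(theta)
    J = np.empty((f0.size, theta.size))
    for j in range(theta.size):
        step = np.zeros_like(theta)
        step[j] = h
        J[:, j] = (f(theta + step) - f(theta - step)) / (2.0 * h)
    return J


def locally_identified(f, theta, tol=1e-6):
    """Rank condition: the moment Jacobian must have full column rank at theta."""
    J = numerical_jacobian(f, theta)
    return np.linalg.matrix_rank(J, tol=tol) == J.shape[1]
```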

Three differences between the approach of Bårdsen and Fanelli (2015) and ours require further discussion. First, full-information ML estimation methods for DSGE models are intimately related to the system reduction method employed to derive the reduced form representation of the model under scrutiny; while Bårdsen and Fanelli (2015) adopt the method put forward in Binder and Pesaran (1999), we follow the algorithm outlined in Schmitt-Grohé and Uribe (2004) to compute first-order approximate solutions, which in turn exploits Klein (2000)’s package to determine the unknown coefficient matrices in the equilibrium dynamics for both the control and the state variables. Second, we assume that the exogenous structural innovations \(\epsilon _t^j\) (\(j=g, \pi , i\)) are orthogonal white noises, whose variances are determined numerically via grid search; Bårdsen and Fanelli (2015), instead, do not restrict the covariance matrix of the structural shocks, whose elements are then indirectly recovered by inverting the CERs associated with the law of motion for exogenous state variables (evaluated at the ML estimates for the other structural parameters). Third, since the bootstrap version of the LR test in state-space models is computationally intensive, the values for \(\theta ^g\) in the grid that optimize the log-likelihood function given the observed sample are kept fixed in the bootstrap replications; taking advantage of the existence of a finite-order VAR representation for Benati and Surico (2009)’s model free of timing restrictions, Bårdsen and Fanelli (2015) compute the bootstrap version of their test of the implied CERs by drawing 1500 points from the grid for each bootstrap replication.

As argued in Angelini and Sorge (2021), timing restrictions generally induce moving average (MA) components in equilibrium reduced form representations of DSGE models. As a result, the restricted model generically does not admit a finite-order VAR representation. The estimation of (6) and of its unrestricted analog (8) requires finding the minimal state-space representation associated with the specified NK model among the set of equivalent representations, see e.g. Guerron-Quintana et al. (2013). Provided this representation is at hand, the Kalman filter can be combined with the ML estimation algorithm and the bootstrap procedure to build and evaluate the log-likelihood function of the NK model under the Gaussian assumption, and then compute the LR test for timing restrictions.
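A minimal Python sketch of the Kalman-filter log-likelihood for a linear Gaussian state space, with state equation \(s_t=As_{t-1}+B\varepsilon_t\) and measurement equation \(y_t=Cs_t+v_t\). The notation \(A,B,C,D\) is generic and hypothetical; in the application these matrices would be read off the minimal state-space form of (6) or (8). The filter is started from the unconditional state covariance, which presumes a stationary state vector.

```python
import numpy as np
from scipy.linalg import solve_discrete_lyapunov


def kalman_loglik(y, A, B, C, D=None):
    """Gaussian log-likelihood of y_t = C s_t + v_t, s_t = A s_{t-1} + B eps_t,
    eps_t ~ N(0, I), v_t ~ N(0, D D'), with a stationary initial state."""
    y = np.asarray(y, dtype=float)
    if y.ndim == 1:
        y = y[:, None]                              # one observation vector per row
    Q = B @ B.T
    n_obs = C.shape[0]
    R = np.zeros((n_obs, n_obs)) if D is None else D @ D.T
    s = np.zeros(A.shape[0])                        # predicted state mean
    P = solve_discrete_lyapunov(A, Q)               # solves P = A P A' + Q
    ll = 0.0
    for y_t in y:
        F = C @ P @ C.T + R                         # innovation covariance
        v = y_t - C @ s                             # one-step prediction error
        F_inv = np.linalg.inv(F)
        ll -= 0.5 * (n_obs * np.log(2.0 * np.pi) + np.linalg.slogdet(F)[1] + v @ F_inv @ v)
        K = P @ C.T @ F_inv                         # Kalman gain
        s_filt, P_filt = s + K @ v, P - K @ C @ P   # update with y_t
        s, P = A @ s_filt, A @ P_filt @ A.T + Q     # predict t + 1
    return ll
```

For a scalar AR(1) observed without measurement error, this recursion reproduces the exact Gaussian likelihood, which is a useful sanity check before moving to the full NK state space.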

Estimates for the parameters of interest and bootstrap standard errors are both reported in the second column of Table 5, along with the point estimates obtained by Bårdsen and Fanelli (2015) for exactly the same parameters entering Benati and Surico (2009)’s model under the assumption of unrestricted timing (third column). We notice that the inertial parameters \((\rho , \rho _j)\), \(j=g, \pi , i\) are not precisely estimated in the model embodying timing restrictions, and generally exhibit lower magnitudes relative to their counterparts in the unrestricted model, for the likelihood tends to ascribe a fraction of the persistence in the data to the informational channel (endogenous backward dependence). The bootstrap p-value associated with the LR test for the CERs associated with the conventional (unrestricted) timing protocol is 0.8, while it falls dramatically to 0.005 when recursive timing is imposed instead. Modulo the previously discussed caveat on the adverse impact of weak identification on the asymptotic and bootstrap distributions of estimators, this evidence indicates that information-induced timing restrictions played no significant role in shaping business cycle dynamics over the period of interest. Accordingly, we view the outcome of our test as suggesting that, as far as short-run macroeconomic fluctuations over the Great Moderation period are concerned, the recursive timing protocol adopted in the aforementioned literature is not favored by aggregate data, thereby calling for caution in the interpretation of the estimated responses of inflation and real economic activity to monetary policy shocks as reported in those studies.

6 Conclusion

This paper develops a simple bootstrap-based testing procedure for the relevance of timing restrictions and ensuing shock transmission delays in small-scale DSGE model environments. Remarkably, the computer code is compatible with standard MATLAB packages, such as Sims (2002)’s gensys.m, that are routinely used to compute first-order approximate solutions to dynamic macroeconomic models, and it can be straightforwardly adapted to allow for relatively more sophisticated recursive timing structures than those considered herein, e.g. those involving multi-period informational partitions (Kormilitsina, 2013).

Notes

Further instances in the DSGE literature include, but are not limited to, models of factor hoarding, where employment decisions predate the full realization of aggregate uncertainty (e.g. Burnside and Eichenbaum 1996), and limited participation settings in which households might engage in financial decision-making prior to observing the whole set of current period shocks (e.g. Fuerst 1992).

The literature on solving RE models with limited information structures has a long tradition. The study of the implications of partial current information (“observability”) about endogenous variables traces back to Lucas (1972)’s well-known model of spatially separated markets. Classical contributions in this area include, among others, Pearlman et al. (1986) and Matthews et al. (1994), and, more recently, Baxter et al. (2011). In the case of partial current information, the forecaster is aware of the values of some endogenous variables only with a lag but can observe other current endogenous variables at the time the forecast is made. Hence, specific tools (like the Kalman filter) are needed to extract information from observables regarding current disturbances.

When timing restrictions are operative, \({\hat{j}}_{x_r}\) will necessarily be zero-valued, whereas \({\hat{g}}_{x_{r,-1}}\) will not, for \(y_u\) will adjust to movements in \(y_r\) enforced by the best forecast of \(x_r\).

Minimality of state space solutions to DSGE models is also important for the existence of a VAR representation in the observables, which in turn plays a key role in the estimation/validation of a given model that relies on impulse-response matching techniques, e.g. Franchi and Paruolo (2015). State space representations can typically be manipulated to deliver a locally identified system in minimal form which is also stochastically non-singular, see e.g. Komunjer and Ng (2011).

The assumption of currently unobservable monetary policy shocks on the part of private sector agents (consumers/workers and firms) reproduces the one adopted by seminal studies on the estimation of the monetary policy transmission mechanism in NK settings, see Altig et al. (2011), Boivin and Giannoni (2006) among others. This assumption, embedded in fully-fledged structural models, echoes the recursive identification schemes based on short-run exclusion restrictions that have traditionally been used to identify the macroeconomic effects of monetary policy shocks, with the policy rate placed between slow and fast moving variables, e.g. Christiano et al. (1999).

See Dave and Sorge (2021), Fanelli (2012) for an analysis of identification and estimation issues arising in Benati and Surico (2009)’s model in the presence of equilibrium indeterminacy; Angelini and Sorge (2021), Sorge (2020) for a discussion of the implications of the co-existence of timing restrictions and equilibrium existence/indeterminacy.

It must be remarked, however, that the lack of point identification of the structural parameters of DSGE models can also entail a tight dependence of the Bayesian posterior on the priors specified by the investigator, possibly causing the posterior mode not to be a consistent estimator of the true parameter vector, see e.g. Guerron-Quintana et al. (2013) and references therein.

We would like to refer the reader to the Technical Supplement to Bårdsen and Fanelli (2015) for further details.

Baxter et al. (2011) emphasize the signal extraction problems arising in the presence of imperfect information. They identify problems with instantaneously invertible, asymptotically invertible and non-invertible information sets. Our analysis deals with a simple signal extraction problem, where the only missing signal is the current realization of an exogenous state variable. Therefore, the signal extraction problem consists in substituting the missing state variable with its expected value.

References

Altig, D., Christiano, L. J., Eichenbaum, M., & Linde, J. (2011). Firm-specific capital, nominal rigidities and the business cycle. Review of Economic Dynamics, 14(2), 225–247.

An, S., & Schorfheide, F. (2007). Bayesian analysis of DSGE models. Econometric Reviews, 26(2–4), 113–172.

Andrews, D. W., & Cheng, X. (2012). Estimation and inference with weak, semi-strong, and strong identification. Econometrica, 80(5), 2153–2211.

Angelini, G., Cavaliere, G., & Fanelli, L. (2022). Bootstrap inference and diagnostics in state space models: With applications to dynamic macro models. Journal of Applied Econometrics, 37(1), 3–22.

Angelini, G., & Sorge, M. M. (2021). Under the same (Chole)sky: DNK models, timing restrictions and recursive identification of monetary policy shocks. Journal of Economic Dynamics and Control, 133, 104265.

Bacchetta, P., Mertens, E., & Van Wincoop, E. (2009). Predictability in financial markets: What do survey expectations tell us? Journal of International Money and Finance, 28(3), 406–426.

Bårdsen, G., & Fanelli, L. (2015). Frequentist evaluation of small DSGE models. Journal of Business & Economic Statistics, 33(3), 307–322.

Baxter, B., Graham, L., & Wright, S. (2011). Invertible and non-invertible information sets in linear rational expectations models. Journal of Economic Dynamics and Control, 35(3), 295–311.

Benati, L., & Surico, P. (2009). VAR analysis and the Great Moderation. American Economic Review, 99(4), 1636–1652.

Binder, M., & Pesaran, M. H. (1999). Multivariate rational expectations models and macroeconometric modeling: A review and some new results. In Handbook of Applied Econometrics, Volume 1: Macroeconomics (pp. 111–155).

Boivin, J., & Giannoni, M. P. (2006). Has monetary policy become more effective? The Review of Economics and Statistics, 88(3), 445–462.

Branch, W. A. (2007). Sticky information and model uncertainty in survey data on inflation expectations. Journal of Economic Dynamics and Control, 31(1), 245–276.

Burnside, C., & Eichenbaum, M. (1996). Factor-hoarding and the propagation of business cycle shocks. American Economic Review, 86(5), 1154–1174.

Canova, F., & Sala, L. (2009). Back to square one: Identification issues in DSGE models. Journal of Monetary Economics, 56(4), 431–449.

Castelnuovo, E., & Fanelli, L. (2015). Monetary policy indeterminacy and identification failures in the US: Results from a robust test. Journal of Applied Econometrics, 30(6), 924–947.

Chan, J. C., & Strachan, R. W. (2023). Bayesian state space models in macroeconometrics. Journal of Economic Surveys, 37(1), 58–75.

Christiano, L. J. (2002). Solving dynamic equilibrium models by a method of undetermined coefficients. Computational Economics, 20(1), 21–55.

Christiano, L. J., Eichenbaum, M., & Evans, C. L. (1999). Monetary policy shocks: What have we learned and to what end? Handbook of Macroeconomics, 1, 65–148.

Christiano, L. J., Eichenbaum, M., & Evans, C. L. (2005). Nominal rigidities and the dynamic effects of a shock to monetary policy. Journal of Political Economy, 113(1), 1–45.

Clarida, R., Gali, J., & Gertler, M. (2000). Monetary policy rules and macroeconomic stability: Evidence and some theory. The Quarterly Journal of Economics, 115(1), 147–180.

Consolo, A., Favero, C. A., & Paccagnini, A. (2009). On the statistical identification of DSGE models. Journal of Econometrics, 150(1), 99–115.

Dave, C., & Sorge, M. M. (2021). Equilibrium indeterminacy and sunspot tales. European Economic Review, 140, 103933.

Del Negro, M., & Schorfheide, F. (2009). Monetary policy analysis with potentially misspecified models. American Economic Review, 99(4), 1415–1450.

Del Negro, M., Schorfheide, F., Smets, F., & Wouters, R. (2007). On the fit of New Keynesian models. Journal of Business & Economic Statistics, 25(2), 123–143.

Dufour, J.-M., Khalaf, L., & Kichian, M. (2013). Identification-robust analysis of DSGE and structural macroeconomic models. Journal of Monetary Economics, 60(3), 340–350.

Fanelli, L. (2012). Determinacy, indeterminacy and dynamic misspecification in linear rational expectations models. Journal of Econometrics, 170(1), 153–163.

Franchi, M., & Paruolo, P. (2015). Minimality of state space solutions of DSGE models and existence conditions for their VAR representation. Computational Economics, 46, 613–626.

Fuerst, T. S. (1992). Liquidity, loanable funds, and real activity. Journal of Monetary Economics, 29(1), 3–24.

Guerron-Quintana, P., Inoue, A., & Kilian, L. (2013). Frequentist inference in weakly identified dynamic stochastic general equilibrium models. Quantitative Economics, 4(2), 197–229.

Guerron-Quintana, P., Inoue, A., & Kilian, L. (2017). Impulse response matching estimators for DSGE models. Journal of Econometrics, 196(1), 144–155.

Hespeler, F., & Sorge, M. M. (2019). Solving rational expectations models with informational subperiods: A comment. Computational Economics, 53, 1649–1654.

Hirose, Y., Kurozumi, T., & Van Zandweghe, W. (2020). Monetary policy and macroeconomic stability revisited. Review of Economic Dynamics, 37, 255–274.

Iskrev, N. (2010). Local identification in DSGE models. Journal of Monetary Economics, 57(2), 189–202.

Kasa, K., Walker, T. B., & Whiteman, C. H. (2014). Heterogeneous beliefs and tests of present value models. Review of Economic Studies, 81(3), 1137–1163.

King, R. G., & Watson, M. W. (2002). System reduction and solution algorithms for singular linear difference systems under rational expectations. Computational Economics, 20(1–2), 57–86.

Klein, P. (2000). Using the generalized Schur form to solve a multivariate linear rational expectations model. Journal of Economic Dynamics and Control, 24(10), 1405–1423.

Komunjer, I., & Ng, S. (2011). Dynamic identification of dynamic stochastic general equilibrium models. Econometrica, 79(6), 1995–2032.

Komunjer, I., & Zhu, Y. (2020). Likelihood ratio testing in linear state space models: An application to dynamic stochastic general equilibrium models. Journal of Econometrics, 218(2), 561–586.

Kormilitsina, A. (2013). Solving rational expectations models with informational subperiods: A perturbation approach. Computational Economics, 41(4), 525–555.

Kormilitsina, A. (2019). A reply to reaction on Kormilitsina (2013): “Solving rational expectations models with informational subperiods: A perturbation approach”. Computational Economics, 53(4), 1655–1656.

Kormilitsina, A., & Zubairy, S. (2018). Propagation mechanisms for government spending shocks: A Bayesian comparison. Journal of Money, Credit and Banking, 50(7), 1571–1616.

Liu, X., & Shao, Y. (2003). Asymptotics for likelihood ratio tests under loss of identifiability. The Annals of Statistics, 31(3), 807–832.

Lubik, T. A., Matthes, C., & Mertens, E. (2023). Indeterminacy and imperfect information. Review of Economic Dynamics, 49, 37–57.

Lubik, T. A., & Schorfheide, F. (2003). Computing sunspot equilibria in linear rational expectations models. Journal of Economic Dynamics and Control, 28(2), 273–285.

Lubik, T. A., & Schorfheide, F. (2004). Testing for indeterminacy: An application to U.S. monetary policy. American Economic Review, 94(1), 190–217.

Lucas, R. E. (1972). Expectations and the neutrality of money. Journal of Economic Theory, 4(2), 103–124.

Mankiw, N. G., & Reis, R. (2002). Sticky information versus sticky prices: A proposal to replace the New Keynesian Phillips curve. The Quarterly Journal of Economics, 117(4), 1295–1328.

Mankiw, N. G., Reis, R., & Wolfers, J. (2003). Disagreement about inflation expectations. NBER Macroeconomics Annual, 18, 209–248.

Matthews, K. G. P., Minford, A. P. L., & Blackman, S. (1994). An algorithm for the solution of non-linear forward rational expectations models with current partial information. Economic Modelling, 11(3), 351–358.

Mavroeidis, S. (2010). Monetary policy rules and macroeconomic stability: Some new evidence. American Economic Review, 100(1), 491–503.

Pearlman, J., Currie, D., & Levine, P. (1986). Rational expectations models with partial information. Economic Modelling, 3(2), 90–105.

Piazzesi, M., & Schneider, M. (2008). Inflation illusion, credit, and asset prices. In Asset prices and monetary policy (pp. 147–189). University of Chicago Press.

Qu, Z., & Tkachenko, D. (2012). Identification and frequency domain quasi-maximum likelihood estimation of linearized dynamic stochastic general equilibrium models. Quantitative Economics, 3(1), 95–132.

Rotemberg, J. J., & Woodford, M. (1997). An optimization-based econometric framework for the evaluation of monetary policy. NBER Macroeconomics Annual, 12, 297–346.

Schmitt-Grohé, S., & Uribe, M. (2004). Solving dynamic general equilibrium models using a second-order approximation to the policy function. Journal of Economic Dynamics and Control, 28(4), 755–775.

Schmitt-Grohé, S., & Uribe, M. (2012). What’s news in business cycles. Econometrica, 80(6), 2733–2764.

Sims, C. A. (2002). Solving linear rational expectations models. Computational Economics, 20(1–2), 1–20.

Sorge, M. M. (2020). Computing sunspot solutions to rational expectations models with timing restrictions. The BE Journal of Macroeconomics, 20(2), 20180256.

Stoffer, D. S., & Wall, K. D. (1991). Bootstrapping state-space models: Gaussian maximum likelihood estimation and the Kalman filter. Journal of the American Statistical Association, 86(416), 1024–1033.

Funding

Open access funding provided by Università degli Studi di Salerno within the CRUI-CARE Agreement.


Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose. The authors have no Conflict of interest to declare that are relevant to the content of this article. All authors certify that they have no affiliations with or involvement in any organization or entity with any financial interest or non-financial interest in the subject matter or materials discussed in this manuscript. The authors have no financial or proprietary interests in any material discussed in this article. All authors contributed to the study conception and design, read and approved the final manuscript.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

We wish to thank an anonymous Reviewer for many constructive comments and suggestions, which have helped us to greatly improve the manuscript along several dimensions. We also express our gratitude to Efrem Castelnuovo, Chetan Dave, Christian Matthes, Aleksandar Vasilev (discussant) and workshop participants (Workshop in Empirical and Theoretical Macroeconomics, King’s College London; ICEEE 2023, University of Cagliari; Workshop on Macroeconomic Research, Cracow University of Economics; CFE 2023, HTW Berlin) for insightful discussions on the topic of the present work. Giovanni Angelini and Marco M. Sorge thankfully acknowledge financial support from MUR (PRIN grant 2022H2STF2), University of Bologna (RFO grants) and University of Salerno (FARB). Luca Fanelli gratefully acknowledges financial support from MUR (PRIN grant 20229PFAX5) and the University of Bologna (RFO grants). Any errors are our own.

Appendix


1.1 DSGE Models Under Timing Restrictions

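In the simple case with only two informational sub-periods, the control vector \(y\) and the state vector \(x\) are partitioned as follows

$$\begin{aligned} y=\left( \begin{array}{c} y_u \\ y_r \end{array} \right) , \quad x=\left( \begin{array}{c} x_u \\ x_r \end{array} \right) \end{aligned}$$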

where \(x_u\) comprises endogenous predetermined variables as well as exogenous variables that materialize at the beginning of the first sub-period, \(x_r\) contains exogenous variables whose realizations occur in the second sub-period, and \(y_u\) is the vector of fully endogenous jump variables, i.e. endogenous variables that are conditioned on all the state variables \(x\). Finally, the vector \(y_r\) collects partially endogenous variables, which are decided upon in the first sub-period, when the realizations of only a subset of the state variables are known.

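Kormilitsina (2013)’s solution approach requires that the RE system (1) be partitioned as follows

$$\begin{aligned} f=\left( \begin{array}{c} f^0 \\ f^1 \\ f^{x_r} \end{array} \right) \end{aligned}$$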

so that the sub-system \(f^0\) includes \(n_{y_r}\) equations pinning down endogenous variables \(y_r\), the sub-system \(f^1\) includes \(n_{y_u}\) equations that determine endogenous variables \(y_u\) and \(n_{x_u}\) equations delivering the dynamics of the states \(x_u\), and the sub-system \(f^{x_r}\) describes the evolution of exogenous shocks \(x_r\), represented as a first-order stationary autoregressive process (5).

Letting again \({\mathcal {E}}\) denote the (conditional) expectation operator accounting for timing restrictions, the RE system with informational sub-periods can be rewritten as

and its non-linear, recursive solution represented in general form as

where endogenous (jump) variables in \(y_r\) are only allowed to react to the conditional forecast of the states in \(x_r\) (a function of previous-period variables \(x_{r,-1}\)), as the latter do not belong to the first sub-period information set. Notice that the solution to the filtering problem associated with the autoregressive process (5) is already embedded in the \(h\) function. By the same token, endogenous (jump) variables in \(y_u\) are a function of \(y_r\) (a state variable in the second informational sub-period), and thus of lagged states \(x_{r,-1}\). Notice also that the timing restrictions only involve exogenous variables \(x_r\), which are uncorrelated with the other exogenous variables in \(x_u\); moreover, none of the \(x_r\) variables is observed in the first sub-period, hence the filtering problem does not require using the variance-covariance matrix of the \(\epsilon _{x_r}\) shocks in order to compute an optimal (in the mean-square sense) estimate of the unobserved states.Footnote 10
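As a minimal illustration of this forecasting logic, assume (our reading of (5) and of the second block row of (38)) that, in deviations from steady state, \(x'_r = P x_r + \sigma \epsilon '_{x_r}\) with zero-mean shocks uncorrelated with those driving \(x_u\). The first sub-period forecast of \(x_r\) then depends only on \(x_{r,-1}\), and substituting it into the unrestricted rule for \(y_r\) recovers the second row of (39), using (33) and (37):

$$\begin{aligned} E\left( x_r \mid x_{r,-1}\right) &= P\, x_{r,-1}, \\ y_r &= j_{x_u} x_u + j_{x_r}\, E\left( x_r \mid x_{r,-1}\right) = {\hat{j}}_{x_u} x_u + {\hat{j}}_{x_{r,-1}}\, x_{r,-1}. \end{aligned}$$

A surprise in \(x_r\), i.e. the innovation \(x_r - P x_{r,-1}\), therefore leaves \(y_r\) unaffected on impact and reaches it only with a one-period delay.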

The linear RE solution under timing restrictions is

where the coefficient matrices can be decomposed as follows

The first-order approximation to the DSGE model under timing restrictions then reads as

where the dependence of the reduced form matrices on the structural parameters \(\theta\) has been made explicit.

Provided the rank condition characterized in Sorge (2020) is fulfilled, the solution to the restricted model can be readily constructed via uniquely defined linear transformations of the unrestricted solution (2), regardless of how the latter is computed (e.g. using the algorithms put forward in Christiano (2002), King and Watson (2002), Klein (2000) or Sims (2002)). Specifically, upon partitioning the equilibrium coefficient matrices \((g_x(\theta ), h_x(\theta ))\) in (2) as follows

we can easily map the coefficient matrices under conventional timing into those appearing in (28), i.e.

where \(\nabla (f^1)\) denotes the Jacobian of the sub-system \(f^1\) with respect to the vector \([x'_u, y_u]\), \(f^{1}_{y_r}\) is the matrix of partial derivatives of \(f^1\) with respect to the slow moving endogenous variables collected in the vector \(y_r\), and \(\left[ M \right] _{m}\) is used to denote the selection of the first (or last) m rows of some matrix M.
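One way to see where the correction terms in (35) and (36) come from, offered here as a heuristic first-order check rather than as the formal argument of Sorge (2020), is to track how the timing restriction perturbs \(y_r\) and then require the linearized sub-system \(f^1\) to keep holding. The deviations \(\Delta\) (our notation) are taken relative to the unrestricted solution; under the restriction, \(y_r\) moves by \(-j_{x_r}\) times the forecast error of \(x_r\), and with \(\nabla (f^1)\) invertible the induced adjustment of \([x'_u, y_u]\) follows:

$$\begin{aligned} \Delta y_r &= j_{x_r} P\, x_{r,-1} - j_{x_r} x_r = -j_{x_r}\left( x_r - P x_{r,-1}\right) ,\\ \nabla (f^1)\left( \begin{array}{c} \Delta x'_u \\ \Delta y_u \end{array} \right) + f^1_{y_r} \Delta y_r &= 0 \;\Longrightarrow\; \left( \begin{array}{c} \Delta x'_u \\ \Delta y_u \end{array} \right) = \nabla (f^1)^{-1} f^1_{y_r} j_{x_r}\left( x_r - P x_{r,-1}\right) . \end{aligned}$$

Adding this adjustment to the unrestricted responses \(h_{x_r} x_r\) and \(g_{x_r} x_r\) reproduces the coefficient on \(x_r\) in (36) and the coefficient \(-\nabla (f^1)^{-1} f^1_{y_r} j_{x_r} P\) on \(x_{r,-1}\) in (35).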

Operationally, the first-order approximate solution (and its minimal state space representation) to the general DSGE model under informational constraints can be obtained via the following algorithm:

- Step 1: Compute the steady state \(({\bar{y}}, {\bar{x}})\) of the unrestricted RE model (1);

- Step 2: Arrange the variables in \(y\) and \(x\) into the vectors \([y_u, y_r]\) and \([x_u, x_r]\). Sort the equilibrium conditions into the vectors \(f^0\), \(f^1\) and \(f^{x_r}\), and arrange them into the partition \(f=\left[ f^0; \, f^1; \, f^{x_r} \right]\) accordingly;

- Step 3: Obtain the matrices \(g_x\) and \(h_x\) for the unrestricted RE model, and partition them as follows

  $$\begin{aligned} g_x= \left( \begin{array}{cc} g_{x_u} & g_{x_r} \\ j_{x_{u}} & j_{x_{r}} \end{array} \right) , \quad h_x= \left( \begin{array}{cc} h_{x_{u}} & h_{x_{r}}\\ 0 & P \end{array} \right) \end{aligned}$$ (32)

  where \(g_{x_{u}}\) is \((n_{y_u} \times n_{x_{u}})\)-dimensional, \(g_{x_r}\) is \((n_{y_u} \times n_{x_r})\)-dimensional, \(j_{x_u}\) is \((n_{y_r} \times n_{x_u})\)-dimensional, \(j_{x_r}\) is \((n_{y_r} \times n_{x_r})\)-dimensional, \(h_{x_u}\) is \((n_{x_u} \times n_{x_u})\)-dimensional and \(h_{x_r}\) is \((n_{x_u} \times n_{x_r})\)-dimensional;

- Step 4: Set

  $$\begin{aligned}&{\hat{g}}_{x_u}=g_{x_u},\quad {\hat{j}}_{x_u}=j_{x_u}, \quad {\hat{h}}_{x_u}=h_{x_u}, \end{aligned}$$ (33)

  $$\begin{aligned}&{\hat{g}}_\sigma =0, \quad {\hat{j}}_\sigma =0,\quad {\hat{h}}_\sigma =0; \end{aligned}$$ (34)

- Step 5: Compute the partial derivatives \(f^1_{y'}, f^1_{x'_u}, f^1_{y_u}, f^1_{y_r}\), evaluate them at the steady state \(({\bar{x}}, {\bar{y}})\) and check invertibility of the square matrix

  $$\begin{aligned} \nabla (f^1)=\left[ f^{1}_{y'}g_{x_u} + f^{1}_{x'_{u}},\, f^{1}_{y_u}\right] . \end{aligned}$$

  Then compute

  $$\begin{aligned} \left( \begin{array}{c} {\hat{h}}_{x_{r,-1}}\\ {\hat{g}}_{x_{r,-1}} \end{array} \right) &= -\nabla (f^1)^{-1} f^1_{y_r} j_{x_r} P \end{aligned}$$ (35)

  $$\begin{aligned} \left( \begin{array}{c} {\hat{h}}_{x_r} \\ {\hat{g}}_{x_r} \end{array} \right) &= \left( \begin{array}{c} h_{x_r} \\ g_{x_r} \end{array} \right) +\nabla (f^1)^{-1} f^1_{y_r} j_{x_r} \end{aligned}$$ (36)

  $$\begin{aligned} {\hat{j}}_{x_{r,-1}} &= j_{x_r} P \end{aligned}$$ (37)

- Step 6: Derive the minimal state space representation under timing restrictions as follows

  $$\begin{aligned} \left( \begin{array}{c} x'_{u} \\ x'_{r} \\ x_r \end{array} \right) &= \left( \begin{array}{ccc} {\hat{h}}_{x_u} & {\hat{h}}_{x_r} & {\hat{h}}_{x_{r,-1}} \\ 0_{n_{x_r} \times n_{x_u}} & P & 0_{n_{x_r} \times n_{x_r}} \\ 0_{n_{x_r} \times n_{x_u}} & I_{n_{x_r} \times n_{x_r}} & 0_{n_{x_r} \times n_{x_r}}\end{array} \right) \left( \begin{array}{c} x_u \\ x_r \\ x_{r,-1} \end{array} \right) +\sigma \left( \begin{array}{c} \epsilon _{x_u} \\ \epsilon _{x_r} \\ 0_{n_{x_r} \times 1} \end{array} \right) \end{aligned}$$ (38)

  $$\begin{aligned} \left( \begin{array}{c} y_u \\ y_r \end{array} \right) &= \left( \begin{array}{ccc} {\hat{g}}_{x_u} & {\hat{g}}_{x_r} & {\hat{g}}_{x_{r,-1}} \\ {\hat{j}}_{x_u} & 0_{n_{y_r} \times n_{x_r}} & {\hat{j}}_{x_{r,-1}} \end{array} \right) \left( \begin{array}{c} x_u \\ x_r \\ x_{r,-1} \end{array} \right) \end{aligned}$$ (39)
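Once the unrestricted solution is available, Steps 3 to 6 reduce to a handful of matrix operations. The NumPy sketch below is illustrative only and rests on our own assumptions: the function name `restricted_state_space` and its argument layout are hypothetical, \(\nabla (f^1)\) and \(f^1_{y_r}\) are passed in already evaluated at the steady state (the model-specific part of Step 5), and variables are ordered as \([y_u; y_r]\) and \([x_u; x_r]\) as in Step 2.

```python
import numpy as np


def restricted_state_space(g_x, h_x, nabla_f1, f1_yr, n_yu, n_xu):
    """Hypothetical helper sketching Steps 3-6 of the appendix algorithm.

    Assumptions: rows of g_x are ordered [y_u; y_r], rows/columns of h_x and
    columns of g_x are ordered [x_u; x_r]; nabla_f1 is the square Jacobian of
    f^1 with columns ordered [x'_u, y_u]; f1_yr is the derivative of f^1 with
    respect to y_r. Returns (A, C) such that, for the stacked state
    s = [x_u; x_r; x_{r,-1}], s' = A s + shocks and [y_u; y_r] = C s.
    """
    n_xr = h_x.shape[0] - n_xu
    n_yr = g_x.shape[0] - n_yu

    # Step 3: partition g_x and h_x as in (32); P is the AR matrix of x_r.
    g_xu, g_xr = g_x[:n_yu, :n_xu], g_x[:n_yu, n_xu:]
    j_xu, j_xr = g_x[n_yu:, :n_xu], g_x[n_yu:, n_xu:]
    h_xu, h_xr = h_x[:n_xu, :n_xu], h_x[:n_xu, n_xu:]
    P = h_x[n_xu:, n_xu:]

    # Step 5: correction nabla(f^1)^{-1} f^1_{y_r} j_{x_r}; its rows are
    # stacked as [x'_u; y_u], matching the column order of nabla_f1.
    corr = np.linalg.solve(nabla_f1, f1_yr @ j_xr)
    hg_lag = -corr @ P                          # eq. (35)
    hg_xr = np.vstack([h_xr, g_xr]) + corr      # eq. (36)
    j_lag = j_xr @ P                            # eq. (37)

    # Step 6: assemble (38) and (39), using the Step 4 settings
    # hat_h_xu = h_xu, hat_g_xu = g_xu, hat_j_xu = j_xu.
    A = np.block([
        [h_xu, hg_xr[:n_xu], hg_lag[:n_xu]],
        [np.zeros((n_xr, n_xu)), P, np.zeros((n_xr, n_xr))],
        [np.zeros((n_xr, n_xu)), np.eye(n_xr), np.zeros((n_xr, n_xr))],
    ])
    C = np.block([
        [g_xu, hg_xr[n_xu:], hg_lag[n_xu:]],
        [j_xu, np.zeros((n_yr, n_xr)), j_lag],
    ])
    return A, C
```

A quick sanity check on the output: when the realized \(x_r\) equals its forecast \(P x_{r,-1}\), the two correction terms cancel and the restricted rules coincide with the unrestricted ones, while a surprise in \(x_r\) leaves the \(y_r\) rows of `C @ s` unchanged on impact.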

1.2 LR Test

Here we illustrate the derivation of the LR test on which our bootstrap procedure builds. To this end, we start from the representation in Eq. (8) in the main text, whose innovation form can be written as

where \(K_{t}=K_{t}(\phi _{\theta })\) is the Kalman gain and

are the innovation residuals with covariance matrix

and \(P_{t\mid t-1}=E((x_{t}-{\hat{x}}_{t\mid t-1})(x_{t}-{\hat{x}} _{t\mid t-1})^{\prime }\mid {\mathcal {F}}_{t-1}^{y})\), with \(P_{1\mid 0}\) given. Imposing normality on \(\epsilon _{t}\) in (42), i.e.

the parameter vector \(\phi\) can be estimated by Gaussian maximum likelihood.
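For reference, under this normality assumption each observation contributes the Gaussian log-density of its innovation residual. Writing \(n_y\) (our notation) for the dimension of \(y_t\), the standard prediction-error decomposition gives the term below; we assume, without reproducing (44), that the essential part \(\ell _{\circ ,T}(\phi )\) defined next sums these terms, possibly net of the additive constant:

$$\begin{aligned} \log p\left( y_t \mid {\mathcal {F}}_{t-1}^{y};\phi \right) = -\frac{n_y}{2}\log (2\pi ) - \frac{1}{2}\log \det \Sigma _{\epsilon ^{0},t}(\phi ) - \frac{1}{2}\,\epsilon _t(\phi )^{\prime }\,\Sigma _{\epsilon ^{0},t}(\phi )^{-1}\epsilon _t(\phi ). \end{aligned}$$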

Let \(\ell _{T}(\phi )\) be the Gaussian log-likelihood function associated with the state space model in (40)–(41). The essential part of the log-likelihood \(\ell _{T}(\phi )\), denoted for simplicity by \(\ell _{\circ ,T}(\phi ):=\sum _{t=1}^{T}l(y_{t}\mid {\mathcal {F}}_{t-1}^{y};\phi )\), is given by

where \(\epsilon _{t}\left( \phi \right)\) and \(\Sigma _{\epsilon ^{0},t}\left( \phi \right)\) are defined above. Given \(\ell _{\circ ,T}(\phi )\) in (44), the ML estimator of \(\phi\) solves

and can be computed by combining the Kalman filter with numerical optimization methods. To estimate the structural parameters in \(\theta\), we can consider analogs of systems (40)–(41) and replace \({\tilde{h}}_{x}(\phi )\) and \({\tilde{g}}_{x}(\phi )\) with \({\hat{h}}_{x}(\theta )\) and \({\hat{g}}_{x}(\theta )\). The ML estimator of \(\theta\) is therefore obtained from

The LR test for the timing restrictions is then given in Eq. (10) in the main text.
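To make the "Kalman filter plus numerical optimization" step concrete, the NumPy sketch below evaluates the Gaussian log-likelihood of a linear state space model via the prediction-error decomposition. It is a generic textbook filter under our own assumptions, not the authors' code: the model is written as \(x_t = A x_{t-1} + w_t\), \(y_t = C x_t + v_t\) with \(w_t \sim N(0,Q)\) and \(v_t \sim N(0,R)\), the function name `kalman_loglik` is hypothetical, and the gain computed inside the loop is the filtering gain, which differs from the predictive gain \(K_t\) of the innovation form by a premultiplication by \(A\).

```python
import numpy as np


def kalman_loglik(y, A, C, Q, R, x10, P10):
    """Gaussian log-likelihood of y (T x n_y) for the hypothetical model
    x_t = A x_{t-1} + w_t, y_t = C x_t + v_t, w_t ~ N(0, Q), v_t ~ N(0, R).

    x10 and P10 are the given one-step-ahead initial mean and covariance,
    i.e. x_{1|0} and P_{1|0}. R may be zero (no measurement error, as in
    (39)) as long as the innovation covariance C P C' + R stays nonsingular.
    """
    n_y = y.shape[1]
    x_pred, P_pred = x10, P10
    loglik = 0.0
    for y_t in y:
        eps = y_t - C @ x_pred                    # innovation residual
        S = C @ P_pred @ C.T + R                  # innovation covariance
        _, logdet = np.linalg.slogdet(S)
        loglik -= 0.5 * (n_y * np.log(2.0 * np.pi) + logdet
                         + eps @ np.linalg.solve(S, eps))
        K = np.linalg.solve(S, C @ P_pred).T      # filtering gain P C' S^{-1}
        x_filt = x_pred + K @ eps                 # update with y_t
        P_filt = P_pred - K @ C @ P_pred
        x_pred = A @ x_filt                       # predict x_{t+1|t}
        P_pred = A @ P_filt @ A.T + Q
    return loglik
```

In practice one would map a parameter vector into \((A, C, Q, R)\) and minimize the negative of this function with a numerical optimizer such as `scipy.optimize.minimize`; evaluating the maximized log-likelihood under the unrestricted and the timing-restricted parametrizations provides the two quantities whose comparison underlies the LR statistic.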

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Angelini, G., Fanelli, L. & Sorge, M.M. Is Time an Illusion? A Bootstrap Likelihood Ratio Test for Shock Transmission Delays in DSGE Models. Comput Econ (2024). https://doi.org/10.1007/s10614-024-10640-2

DOI: https://doi.org/10.1007/s10614-024-10640-2