Abstract

Under suitable conditions, generalized method of moments (GMM) estimates can be computed using a comparatively fast computational technique: filtered two-stage least squares (2SLS). This fact is illustrated with a special case of filtered 2SLS—specifically, the forward orthogonal deviations (FOD) transformation. If a restriction on the instruments is satisfied, GMM based on the FOD transformation (FOD-GMM) is identical to GMM based on the more popular first-difference (FD) transformation (FD-GMM). However, the FOD transformation provides significant reductions in computing time when the length of the time series (T) is not small. If the instruments condition is not met, the FD and FOD transformations lead to different GMM estimators. In this case, the computational advantage of the FOD transformation over the FD transformation is not as dramatic. On the other hand, in this case, Monte Carlo evidence provided in the paper indicates that FOD-GMM has better sampling properties—smaller absolute bias and standard deviations. Moreover, if T is not small, the FOD-GMM estimator has better sampling properties than the FD-GMM estimator even when the latter estimator is based on the optimal weighting matrix. Hence, when T is not small, FOD-GMM dominates FD-GMM in terms of both computational efficiency and sampling performance.

Notes

A flop consists of an addition, subtraction, multiplication, or division.

All computations were performed using GAUSS. To calculate elapsed times for the FOD and FD algorithms, the GAUSS command hsec was used to calculate the number of hundredths of a second since midnight before and after calculations were executed.

References

Alvarez, J., & Arellano, M. (2003). The time series and cross-section asymptotics of dynamic panel data estimators. Econometrica, 71(4), 1121–1159.

Arellano, M. (2003). Panel data econometrics. Oxford: Oxford University Press.

Arellano, M., & Bond, S. (1991). Some tests of specification for panel data: Monte Carlo evidence and an application to employment equations. The Review of Economic Studies, 58(2), 277–297.

Arellano, M., & Bover, O. (1995). Another look at the instrumental variable estimation of error-components models. Journal of Econometrics, 68(1), 29–51.

Hayakawa, K. (2009). First difference or forward orthogonal deviation- which transformation should be used in dynamic panel data models?: A simulation study. Economics Bulletin, 29, 2008–2017.

Hayakawa, K., & Nagata, S. (2016). On the behaviour of the GMM estimator in persistent dynamic panel data models with unrestricted initial conditions. Computational Statistics and Data Analysis, 100, 265–303.

Hunger, R. (2007). Floating point operations in matrix-vector calculus (version 1.3). Munich: Technical University of Munich, Associate Institute for Signal Processing. https://mediatum.ub.tum.de/doc/625604. Accessed 5 Aug 2016.

Keane, M. P., & Runkle, D. E. (1992). On the estimation of panel-data models with serial correlation when instruments are not strictly exogenous. Journal of Business & Economic Statistics, 10, 1–9.

Phillips, R. F. (2019). A numerical equivalence result for generalized method of moments. Economics Letters, 179, 13–15.

Schmidt, P., Ahn, S. C., & Wyhowski, D. (1992). Comment. Journal of Business & Economic Statistics, 10, 10–14.

Strang, G. (2003). Introduction to linear algebra. Wellesley: Wellesley-Cambridge Press.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This paper has benefited from the comments of several anonymous referees.

Appendix A: Floating Point Operations for One-Step GMM


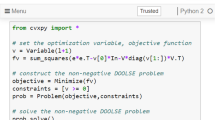

A floating point operation (flop) is an addition, subtraction, multiplication, or division. This appendix shows how the number of flops required to calculate \(\widehat{\delta }\) via the formulas in (5) and (6) depends on N, T, and k, when at most k instruments are used per period.

The flop counts for \(\widehat{\delta }\) rest on the following facts, which are used repeatedly throughout this appendix. Let \(\varvec{B}\), \(\varvec{E}\), and \(\varvec{H}\) be \(q\times r\), \(q\times r\), and \(r\times s\) matrices, respectively, and let d be a scalar. Then computing \(d\varvec{B}\), \(\varvec{B} \pm \varvec{E}\), and \(\varvec{BH}\) requires qr, qr, and \(qs\left( 2r-1\right) \) flops, respectively (see Hunger 2007).
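These three counting rules can be written down as small helpers. The following is a minimal Python sketch, not code from the paper (which used GAUSS); the function names are illustrative. The brute-force counter walks through the textbook definition of a matrix product to confirm the \(qs\left( 2r-1\right) \) rule.

```python
def flops_scale(q: int, r: int) -> int:
    """Flops for d*B with B of size q x r: one multiplication per entry."""
    return q * r


def flops_add(q: int, r: int) -> int:
    """Flops for B + E or B - E with q x r matrices: one operation per entry."""
    return q * r


def flops_matmul(q: int, r: int, s: int) -> int:
    """Flops for BH with B of size q x r and H of size r x s."""
    return q * s * (2 * r - 1)


def count_matmul_by_loops(q: int, r: int, s: int) -> int:
    """Count operations entry by entry, following the definition of BH."""
    count = 0
    for _ in range(q):          # rows of the product
        for _ in range(s):      # columns of the product
            count += r          # r multiplications per inner product
            count += r - 1      # r - 1 additions to sum the r terms
    return count


if __name__ == "__main__":
    print(flops_matmul(3, 4, 5), count_matmul_by_loops(3, 4, 5))
```

Every flop count in the remainder of this appendix is an application of `flops_matmul` or `flops_add` to matrices of the stated dimensions.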

Appendix A.1: Floating Point Operations Using Differencing and All Available Instruments

To calculate \(\varvec{\tilde{y}}_{i} =\varvec{D}\varvec{y}_{i}\) (\(i=1,\ldots ,N\)), a total of \(N\left( T-1\right) \left( 2T-1\right) \) flops are needed. After the \(\varvec{\tilde{y}}_{i}\)s are calculated, the number of flops required to compute \(\varvec{\tilde{s}} =\sum _{i}\varvec{Z}_{i}^{\prime } \varvec{\tilde{y}}_{i}=\left( \varvec{Z}_{1}^{\prime },\ldots ,\varvec{Z}_{N}^{\prime }\right) \left( \varvec{\tilde{y}}_{1}^{\prime },\ldots , \varvec{\tilde{y}} _{N}^{\prime }\right) ^{\prime }\) is \(m\left[ 2N\left( T-1\right) -1\right] \), where \(m=T (T -1) /2\) is the number of moment restrictions. Therefore, the total number of flops required to calculate \(\varvec{\tilde{s}}\) is \(N\left( T-1\right) \left( 2T-1\right) +m\left[ 2N\left( T-1\right) -1\right] \). Because m increases with T at a quadratic rate, the number of flops required to compute \(\varvec{\tilde{s}}\) is of order O(\(NT^{3}\)). The same number of flops is needed to compute \(\varvec{\tilde{s}}_{-1}\). Hence, the number of flops needed to compute \(\varvec{\tilde{s}}\) and \(\varvec{\tilde{s}}_{-1}\) increases with N linearly, for given T, and with T at a cubic rate, for given N.

To compute \(\varvec{A} _{N}\) we must first compute \(\varvec{G}=\varvec{D}\varvec{D}^{\prime }\), which requires \(\left( T-1\right) ^{2}\left( 2T-1\right) \) flops. Then the products \(\varvec{GZ}_{i}\) \(\left( i=1,\ldots ,N\right) \) are computed, which requires another \(Nm\left( T-1\right) \left( 2T-3\right) \) flops. The products \(\varvec{Z}_{i}^{\prime }\left( \varvec{GZ}_{i}\right) \) \(\left( i=1,\ldots ,N\right) \) require \(Nm^{2}\left( 2T-3\right) \) flops. Finally, we execute \(N-1\) summations of the \(m\times m\) matrices \(\varvec{Z} _{i}^{\prime }\varvec{GZ}_{i}\) \(\left( i=1,\ldots ,N\right) \) for another \(\left( N-1\right) m^{2}\) flops. From this accounting, we see that \(\left( T-1\right) ^{2}\left( 2T-1\right) +Nm\left( T-1\right) \left( 2T-3\right) + Nm^{2}\left( 2T-3\right) +\left( N-1\right) m^{2}\) flops are required to compute \(\varvec{A} _{N}\). Because m is quadratic in T, the number of flops required to compute \(\varvec{A} _{N}\) is of order O(\(NT^{5}\)). Hence, the number of flops increases with N linearly, for given T, but it increases with T at the rate \(T^{5}\), for given N.

The number of flops required to compute \(\varvec{A} _{N}^{-1}\) increases with T at the rate \(T^{6}\). To see this, note that standard methods for inverting a \(q\times q\) matrix require on the order of \(q^{3}\) operations (see Hunger 2007; Strang 2003, pp. 452–455). The matrix \(\varvec{A} _{N}\) is \(m\times m\), and m increases with T at the rate \(T^{2}\) if all available moment restrictions are exploited. Hence, the number of flops required to invert \(\varvec{A} _{N}\) is of order O(\(T^{6}\)).

No other calculation grows with T and N as quickly as computing \(\varvec{A}_{N}\) and inverting it. For example, after \(\varvec{A} _{N}^{-1}\) is calculated, \(m\left( 2m-1\right) \) flops are required to calculate \(\varvec{\tilde{a}}=\varvec{\tilde{s}}_{-1}^{\prime }\varvec{A} _{N}^{-1}\), while computing \( \varvec{\tilde{a}}\varvec{\tilde{s}}_{-1}\) and \(\varvec{\tilde{a}}\varvec{\tilde{s}}\) requires \(2m-1\) flops each.
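To get a feel for how quickly these counts grow, the formulas of this subsection can be evaluated directly. The sketch below is an illustrative Python transcription (the function name and dictionary keys are not from the paper). The entry for the inverse is only the order of magnitude \(m^{3}\), since the text gives no exact count for inversion.

```python
def fd_flops_all_instruments(N: int, T: int) -> dict:
    """Flop counts for FD one-step GMM when all instruments are used."""
    m = T * (T - 1) // 2  # number of moment restrictions

    # D y_i for every i, then (Z_1', ..., Z_N') times the stacked y-tilde
    s_tilde = N * (T - 1) * (2 * T - 1) + m * (2 * N * (T - 1) - 1)

    # G = DD', the N products G Z_i, the N products Z_i'(G Z_i), the N-1 sums
    A_N = ((T - 1) ** 2 * (2 * T - 1)
           + N * m * (T - 1) * (2 * T - 3)
           + N * m ** 2 * (2 * T - 3)
           + (N - 1) * m ** 2)

    return {
        "m": m,
        "s_tilde": s_tilde,          # same count applies to s_tilde_{-1}
        "A_N": A_N,
        "inverse_order": m ** 3,     # order of magnitude only
        "a_tilde": m * (2 * m - 1),  # s_tilde_{-1}' A_N^{-1}
        "inner_product": 2 * m - 1,  # a_tilde times s_tilde (or s_tilde_{-1})
    }


if __name__ == "__main__":
    for T in (5, 10, 20, 40):
        counts = fd_flops_all_instruments(N=100, T=T)
        print(T, counts["A_N"], counts["inverse_order"])
```

Doubling T in the loop shows the count for \(\varvec{A}_{N}\) growing by a factor that approaches \(2^{5}\) and the inversion order by \(2^{6}\), in line with the rates stated above.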

Appendix A.2: Floating Point Operations Using FOD and All Available Instruments

Calculation of \(\varvec{\ddot{y}}_{i} =\varvec{F}\varvec{y}_{i}\) (\(i=1,\ldots ,N\)) requires \(N\left( T-1\right) \left( 2T-1\right) \) flops. An additional \(t\left( 2N-1\right) \) flops are needed to calculate \(\varvec{\ddot{s}}_{t}=\left( \varvec{z}_{1t},\ldots , \varvec{z}_{Nt}\right) \left( \ddot{y}_{1t},\ldots ,\ddot{y}_{Nt}\right) ^{\prime }\). Therefore, calculation of all of the \(\varvec{\ddot{s}} _{t}\)s (\(t=1,\ldots ,T-1\)) requires \(\ddot{f}_{1}=N\left( T-1\right) \left( 2T-1\right) +\left( 2N-1\right) \sum _{t=1}^{T-1}t=N\left( T-1\right) \left( 2T-1\right) +\left( 2N-1\right) T\left( T-1\right) /2\) flops, which is of order O(\(NT^{2}\)). Calculation of \(\varvec{\ddot{s}}_{t-1}\) (\(t=1,\ldots ,T-1\)) requires another \(\ddot{f}_{2}=\ddot{f}_{1}\) flops.
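The transformation matrices themselves are defined in the main text rather than in this appendix. As a minimal sketch, assuming the standard definitions (\(\varvec{D}\) takes first differences; row t of \(\varvec{F}\) subtracts the mean of all future observations and rescales by \(\sqrt{(T-t)/(T-t+1)}\), as in Arellano and Bover 1995), the following Python code builds both \((T-1)\times T\) matrices with NumPy and checks that \(\varvec{F}\varvec{F}^{\prime }\) is the identity whereas \(\varvec{D}\varvec{D}^{\prime }\) is tridiagonal. The function names are illustrative and do not come from the paper's GAUSS code.

```python
import numpy as np


def fd_matrix(T: int) -> np.ndarray:
    """(T-1) x T first-difference matrix: row t maps y to y_{t+1} - y_t."""
    D = np.zeros((T - 1, T))
    idx = np.arange(T - 1)
    D[idx, idx] = -1.0
    D[idx, idx + 1] = 1.0
    return D


def fod_matrix(T: int) -> np.ndarray:
    """(T-1) x T forward orthogonal deviations matrix (assumed definition)."""
    F = np.zeros((T - 1, T))
    for t in range(1, T):                   # 1-based period t = 1, ..., T-1
        c = np.sqrt((T - t) / (T - t + 1))  # rescaling constant for period t
        F[t - 1, t - 1] = c                 # weight on the current observation
        F[t - 1, t:] = -c / (T - t)         # minus the mean of future observations
    return F


if __name__ == "__main__":
    T = 6
    D, F = fd_matrix(T), fod_matrix(T)
    print(np.round(D @ D.T, 10))   # 2 on the diagonal, -1 next to it
    print(np.round(F @ F.T, 10))   # identity matrix of order T-1
```

Under these assumed definitions the rows of \(\varvec{F}\) are orthonormal, which is presumably why no counterpart of \(\varvec{G}=\varvec{D}\varvec{D}^{\prime }\) enters the FOD flop count.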

On the other hand, computing \( \varvec{S}_{t}=\left( \varvec{z}_{1t},\ldots , \varvec{z}_{Nt}\right) \left( \varvec{z}_{1t},\ldots , \varvec{z}_{Nt}\right) ^{ \prime }\) requires \(t^{2}\left( 2N-1\right) \) flops. Therefore, calculation of \(\varvec{S}_{t}\) (\(t=1,\ldots ,T-1\)) requires \( \ddot{f}_{3}=\left( 2N-1\right) \sum _{t=1}^{T-1}t^{2}=\left( 2N-1\right) T\left( 2T-1\right) \left( T-1\right) /6\) flops, which is of order O(\(NT^{3}\)).

The matrix \(\varvec{S} _{t} \) is a \(t\times t\) matrix, which requires on the order of \(t^{3}\) flops to invert. Given there are \(T-1\) such \(\varvec{S}_{t}\) matrices to invert, the number of operations required to invert all of them is on the order of \(\ddot{f}_{4}= \sum _{t=1}^{T-1}t^{3}=T^{2}\left( T-1\right) ^{2}/4\) flops. In other words, the number of flops required to invert all of the \(\varvec{S}_{t}\) matrices is of order O(\(T^{4}\)).

After \(\varvec{S}_{t}^{-1}\) (\(t=1,\ldots ,T-1 \)) are computed, computing \(\varvec{\ddot{a}}_{t}=\varvec{\ddot{s}} _{t-1}^{ \prime }\varvec{S}_{t}^{-1}\) (\(t=1,\ldots ,T-1\)) requires another \(\ddot{f}_{5}=\sum _{t=1}^{T-1}t\left( 2t-1\right) =T\left( T-1\right) \left( 4T-5\right) /6\) flops, which is of order O(\(T^{3}\)). Next, calculation of \(\varvec{\ddot{a}} _{t}\varvec{\ddot{s}}_{t}\) (\(t=1,\ldots ,T-1\)) requires \( \ddot{f}_{6}=\sum _{t=1}^{T-1}\left( 2t-1\right) =T\left( T-2\right) +1\) flops, and then summing the computed \(\varvec{\ddot{a}}_{t}\varvec{\ddot{s} }_{t}\)s—i.e., \(\sum _{t=1}^{T-1}\varvec{\ddot{a}}_{t} \varvec{\ddot{s}}_{t}\)—is another \(\ddot{f}_{7}=T-2\) flops.
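The closed forms for \(\ddot{f}_{5}\) and \(\ddot{f}_{6}\) follow from the standard identities \(\sum _{t=1}^{n}t=n\left( n+1\right) /2\) and \(\sum _{t=1}^{n}t^{2}=n\left( n+1\right) \left( 2n+1\right) /6\) with \(n=T-1\). As a worked check:

\[
\begin{aligned}
\ddot{f}_{5} &= \sum _{t=1}^{T-1}t\left( 2t-1\right) = 2\sum _{t=1}^{T-1}t^{2}-\sum _{t=1}^{T-1}t = \frac{T\left( T-1\right) \left( 2T-1\right) }{3}-\frac{T\left( T-1\right) }{2} = \frac{T\left( T-1\right) \left( 4T-5\right) }{6}, \\
\ddot{f}_{6} &= \sum _{t=1}^{T-1}\left( 2t-1\right) = T\left( T-1\right) -\left( T-1\right) = \left( T-1\right) ^{2} = T\left( T-2\right) +1 .
\end{aligned}
\]

For instance, with \(T=3\) the sums are \(1+6=7\) and \(1+3=4\), which match \(3\cdot 2\cdot 7/6=7\) and \(3\cdot 1+1=4\).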

Hence, calculation of \(\sum _{t=1}^{T-1} \varvec{\ddot{a}}_{t}\varvec{\ddot{s}}_{t}\) requires \( \sum _{j=1}^{7}\ddot{f}_{j}\) flops. This work increases linearly with N, for given T, and at the rate \(T^{4}\) with T, for given N.

Of course, to compute \(\widehat{\delta }_{F}\) we must also compute \(\sum _{t=1}^{T-1} \varvec{\ddot{a}}_{t}\varvec{\ddot{s}}_{t-1}\), but the \( \varvec{\ddot{a}}_{t}\)s and \(\varvec{\ddot{s}}_{t-1}\)s have already been calculated. Therefore, the remaining calculations required to compute \(\widehat{\delta }_{F}\) are but a small part of the total number of flops required.
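The seven components \(\ddot{f}_{1},\ldots ,\ddot{f}_{7}\) can be tallied numerically. The Python sketch below is illustrative only (the function name is not from the paper): it evaluates each component as an explicit sum over t, checks the sums against the closed forms given in this subsection, and returns the total. As in the text, \(\ddot{f}_{4}\) is an order-of-magnitude figure for the inversions rather than an exact count.

```python
def fod_flops_all_instruments(N: int, T: int) -> dict:
    """Components f1..f7 of the FOD flop count with all instruments used."""
    periods = range(1, T)  # t = 1, ..., T-1; period t has t instruments

    f1 = N * (T - 1) * (2 * T - 1) + (2 * N - 1) * sum(periods)  # F y_i, then s_t
    f2 = f1                                                      # same for s_{t-1}
    f3 = (2 * N - 1) * sum(t ** 2 for t in periods)              # the S_t matrices
    f4 = sum(t ** 3 for t in periods)                            # inversions (order)
    f5 = sum(t * (2 * t - 1) for t in periods)                   # a_t = s_{t-1}' S_t^{-1}
    f6 = sum(2 * t - 1 for t in periods)                         # a_t s_t
    f7 = T - 2                                                   # summing over t

    # Closed forms stated in the text
    assert f1 == N * (T - 1) * (2 * T - 1) + (2 * N - 1) * T * (T - 1) // 2
    assert f3 == (2 * N - 1) * T * (2 * T - 1) * (T - 1) // 6
    assert f4 == T ** 2 * (T - 1) ** 2 // 4
    assert f5 == T * (T - 1) * (4 * T - 5) // 6
    assert f6 == T * (T - 2) + 1

    parts = {"f1": f1, "f2": f2, "f3": f3, "f4": f4, "f5": f5, "f6": f6, "f7": f7}
    parts["total"] = sum(parts.values())
    return parts


if __name__ == "__main__":
    for T in (5, 10, 20, 40):
        print(T, fod_flops_all_instruments(N=100, T=T)["total"])
```

For moderate T and large N the terms of order \(NT^{3}\) and \(NT^{2}\) dominate the total; the \(T^{4}\) term from the inversions takes over only as T grows with N held fixed.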

Appendix A.3: Floating Point Operations Using Differencing and a Subset of Instruments

As shown in Appendix A.1, the number of flops required to calculate \(\varvec{\tilde{s}}\) is \(N\left( T-1\right) \left( 2T-1\right) +m\left[ 2N\left( T-1\right) -1\right] \), where m is the number of moment restrictions. Computation of \(\varvec{\tilde{s}}_{-1}\) requires the same number of flops. Moreover, if \(\varvec{z}_{it}\) contains k or fewer instruments (\(t=1,\ldots ,T-1\)), then m is of order no greater than O(kT). Hence, the number of flops needed to compute \(\varvec{\tilde{s}}\) and \(\varvec{\tilde{s}}_{-1}\) increases with N linearly, for given k and T; with T at a quadratic rate, for given k and N; and with k no faster than linearly, for given N and T.

Derivations provided in Appendix A.1 show that computation of \(\varvec{A} _{N}\) requires \(\left( T-1\right) ^{2}\left( 2T-1\right) +Nm\left( T-1\right) \left( 2T-3\right) + Nm^{2}\left( 2T-3\right) +\left( N-1\right) m^{2}\) flops. Hence, the number of flops increases with N linearly, for given k and T; with T at a cubic rate, for given k and N; and with k at no faster than a quadratic rate, for given N and T.

Because \(\varvec{A}_{N}\) is \(m\times m\), the number of flops required to compute \(\varvec{A} _{N}^{-1}\) is of order no greater than O(\(k^{3}T^{3}\)) (see Hunger 2007; Strang 2003, pp. 452–455). In particular, it is of order O(\(T^{3}\)) for given k, and of order no greater than O(\(k^{3}\)) for given T. And, as noted in Appendix A.1, after \(\varvec{A} _{N}^{-1}\) is calculated, \(m\left( 2m-1\right) \) flops are required to calculate \(\varvec{\tilde{a}}=\varvec{\tilde{s}}_{-1}^{\prime }\varvec{A} _{N}^{-1}\), and \(2m-1\) flops are required to compute each of \(\varvec{\tilde{a}}\varvec{\tilde{s}}_{-1}\) and \(\varvec{\tilde{a}}\varvec{\tilde{s}}\).
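The effect of capping the instrument count can be illustrated by plugging a smaller m into the Appendix A.1 formulas. The Python sketch below makes one assumption that the text does not spell out: period t contributes \(\min (t,k)\) instruments, so that \(m=\sum _{t=1}^{T-1}\min (t,k)\le k\left( T-1\right) \). The function name is illustrative.

```python
def fd_flops_subset(N: int, T: int, k: int) -> dict:
    """FD flop counts when each period uses at most k instruments.

    Assumption (not from the text): period t uses min(t, k) instruments.
    """
    m = sum(min(t, k) for t in range(1, T))  # moment restrictions, <= k(T-1)

    s_tilde = N * (T - 1) * (2 * T - 1) + m * (2 * N * (T - 1) - 1)
    A_N = ((T - 1) ** 2 * (2 * T - 1)
           + N * m * (T - 1) * (2 * T - 3)
           + N * m ** 2 * (2 * T - 3)
           + (N - 1) * m ** 2)

    return {"m": m, "s_tilde": s_tilde, "A_N": A_N, "inverse_order": m ** 3}


if __name__ == "__main__":
    for T in (10, 20, 40, 80):
        counts = fd_flops_subset(N=100, T=T, k=3)
        print(T, counts["m"], counts["A_N"], counts["inverse_order"])
```

With k fixed, doubling T multiplies the count for \(\varvec{A}_{N}\) and the inversion order by roughly \(2^{3}\) once T is large, compared with \(2^{5}\) and \(2^{6}\) when all instruments are used. Setting \(k\ge T-1\) recovers \(m=T\left( T-1\right) /2\).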

Appendix A.4: Floating Point Operations Using FOD and a Subset of Instruments

Calculation of \(\varvec{\ddot{y}}_{i} =\varvec{F}\varvec{y}_{i}\) (\(i=1,\ldots ,N\)) requires \(N\left( T-1\right) \left( 2T-1\right) \) flops. Calculation of \(\varvec{\ddot{s}}_{t}=\left( \varvec{z}_{1t},\ldots , \varvec{z}_{Nt}\right) \left( \ddot{y}_{1t},\ldots ,\ddot{y}_{Nt}\right) ^{\prime }\) requires an additional \(k\left( 2N-1\right) \) flops at most. (“At most” because k is the maximum number of instruments in a \(\varvec{z}_{it}\).) Therefore, calculation of all of the \(\varvec{\ddot{s}} _{t}\)s (\(t=1,\ldots ,T-1\)) requires \(N\left( T-1\right) \left( 2T-1\right) + k \left( 2N-1\right) \left( T-1\right) \) flops at most. Calculation of \(\varvec{\ddot{s}}_{t-1}\) (\(t=1,\ldots ,T-1\)) requires the same number of flops. Therefore, the number of flops required to calculate \(\varvec{\ddot{s}}_t\) and \(\varvec{\ddot{s}}_{t-1}\) (\(t=1,\ldots ,T-1\)) increases with N linearly, for given k and T; it increases with T at a quadratic rate, for given k and N; and it increases with k at most linearly, for given N and T.

Computing \( \varvec{S}_{t}=\left( \varvec{z}_{1t},\ldots , \varvec{z}_{Nt}\right) \left( \varvec{z}_{1t},\ldots , \varvec{z}_{Nt}\right) ^{ \prime }\) requires no more than \(k^{2}\left( 2N-1\right) \) flops. Therefore, calculation of \(\varvec{S}_{t}\) (\(t=1,\ldots ,T-1\)) requires \(k^{2}\left( T-1\right) \left( 2N-1\right) \) flops at most.

The matrix \(\varvec{S} _{t} \) is at most a \(k\times k\) matrix, which requires at most on the order of \(k^{3}\) flops to invert. Given there are \(T-1\) such \(\varvec{S}_{t}\) matrices to invert, the number of operations required to invert all of them is of order no greater than O(\(k^{3}T\)).

Next, calculation of \(\varvec{\ddot{a}}_{t}=\varvec{\ddot{s}} _{t-1}^{ \prime }\varvec{S}_{t}^{-1}\) (\(t=1,\ldots ,T-1\)) requires another \(\left( T-1\right) k\left( 2k-1\right) \) flops at most. Computing \(\varvec{\ddot{a}} _{t}\varvec{\ddot{s}}_{t}\) (\(t=1,\ldots ,T-1\)) requires \( \left( T-1\right) \left( 2k-1\right) \) flops at most. And the sum \(\sum _{t=1}^{T-1}\varvec{\ddot{a}}_{t} \varvec{\ddot{s}}_{t}\) requires \(T-2\) flops.

Hence, the number of flops needed to compute \(\sum _{t=1}^{T-1} \varvec{\ddot{a}}_{t}\varvec{\ddot{s}}_{t}\) increases with N linearly, for given k and T; it increases with T at a quadratic rate, for given k and N; and it increases with k no faster than \(k^{3}\), for given N and T.

Finally, we must also compute the sum \(\sum _{t=1}^{T-1} \varvec{\ddot{a}}_{t}\varvec{\ddot{s}}_{t-1}\), but the \( \varvec{\ddot{a}}_{t}\)s and \(\varvec{\ddot{s}}_{t-1}\)s have already been calculated. Hence, this sum requires little additional computational work.
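The upper bounds of this subsection can be added up in the same way. The following Python sketch is illustrative (the function name and dictionary keys are not from the paper); the inversion entry uses the order-of-magnitude figure \(k^{3}\left( T-1\right) \), since the text gives no exact count for inversion.

```python
def fod_flops_subset_bound(N: int, T: int, k: int) -> dict:
    """Upper bounds on FOD flop counts with at most k instruments per period."""
    transform = N * (T - 1) * (2 * T - 1)                  # F y_i for every i
    s_t = transform + k * (2 * N - 1) * (T - 1)            # all s_t vectors
    parts = {
        "s_t": s_t,
        "s_t_lag": s_t,                                    # same bound for s_{t-1}
        "S_t": k ** 2 * (T - 1) * (2 * N - 1),             # all S_t matrices
        "inverse_order": k ** 3 * (T - 1),                 # T-1 inversions (order)
        "a_t": (T - 1) * k * (2 * k - 1),                  # s_{t-1}' S_t^{-1}
        "a_t_s_t": (T - 1) * (2 * k - 1),                  # inner products
        "sum": T - 2,                                      # adding T-1 scalars
    }
    parts["total"] = sum(parts.values())
    return parts


if __name__ == "__main__":
    previous = None
    for T in (50, 100, 200, 400):
        total = fod_flops_subset_bound(N=100, T=T, k=3)["total"]
        ratio = None if previous is None else round(total / previous, 2)
        print(T, total, ratio)
        previous = total
```

With N and k fixed, each doubling of T multiplies the bound by a factor close to 4, consistent with the quadratic rate stated above; the quadratic term comes entirely from applying \(\varvec{F}\) to the data.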

Cite this article

Phillips, R.F. Quantifying the Advantages of Forward Orthogonal Deviations for Long Time Series. Comput Econ 55, 653–672 (2020). https://doi.org/10.1007/s10614-019-09907-w

DOI: https://doi.org/10.1007/s10614-019-09907-w