Abstract

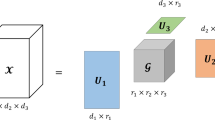

A strongly orthogonal decomposition of a tensor is a rank one tensor decomposition in which, in each mode, the component vectors of any two rank one tensors are either collinear or orthogonal. A strongly orthogonal decomposition with a small number of rank one tensors is favorable in applications; it can be represented by a matrix-tensor multiplication with orthogonal factor matrices and a sparse tensor. Such a decomposition with the minimum number of rank one tensors is a strongly orthogonal rank decomposition. Any tensor has a strongly orthogonal rank decomposition. In this article, computing a strongly orthogonal rank decomposition is equivalently reformulated as solving an optimization problem. Unlike the usual optimization reformulation of the tensor rank decomposition problem, which is ill-posed, the optimization reformulation of the strongly orthogonal rank decomposition of a tensor is well-posed. Each feasible solution of the optimization problem gives a strongly orthogonal decomposition of the tensor, and a global optimizer gives a strongly orthogonal rank decomposition, which is however difficult to compute. An inexact augmented Lagrangian method is proposed to solve the optimization problem. The augmented Lagrangian subproblem is solved by a proximal alternating minimization method, with the advantage that each subproblem has a closed-form solution and the factor matrices are kept orthogonal during the iteration. Hence the algorithm can always return a feasible solution, and therefore a strongly orthogonal decomposition, for any given tensor. Global convergence of this algorithm to a critical point is established without any further assumption. Extensive numerical experiments are conducted, and they show that the proposed algorithm is quite promising in both efficiency and accuracy.
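
To make the matrix-tensor representation concrete, the following is a minimal NumPy sketch for a third-order tensor. It assumes the convention that \((U^{(1)},U^{(2)},U^{(3)})\cdot \mathcal {A}\) multiplies mode i by \(U^{(i)}\); the helper names `multilinear_multiply` and `random_orthogonal`, the tensor sizes, and the chosen nonzero entries are illustrative assumptions and are not taken from the article. Each nonzero entry of the sparse tensor yields one rank one term whose mode-i vector is a row of \(U^{(i)}\), so any two such vectors are either identical or orthogonal.

```python
import numpy as np


def multilinear_multiply(factors, tensor):
    """Apply factors[i] along mode i of a third-order tensor: (U1, U2, U3) . A."""
    u1, u2, u3 = factors
    return np.einsum("ai,bj,ck,ijk->abc", u1, u2, u3, tensor)


def random_orthogonal(n, rng):
    """Draw an n x n orthogonal matrix from the QR factorization of a Gaussian matrix."""
    q, _ = np.linalg.qr(rng.standard_normal((n, n)))
    return q


rng = np.random.default_rng(0)
dims = (3, 4, 2)  # illustrative sizes (assumption)
factors = [random_orthogonal(n, rng) for n in dims]

# A sparse tensor B with three nonzero entries (illustrative values).
core = np.zeros(dims)
core[0, 0, 0], core[1, 2, 0], core[2, 2, 1] = 2.0, -1.5, 0.7

# Build A so that B = (U1, U2, U3) . A, i.e. A = (U1^T, U2^T, U3^T) . B.
tensor = multilinear_multiply([u.T for u in factors], core)

# One rank one term per nonzero entry of B; mode-m vector is a row of U^(m).
terms = []
for idx in zip(*np.nonzero(core)):
    vecs = [factors[m][idx[m], :] for m in range(3)]
    terms.append(core[idx] * np.einsum("i,j,k->ijk", *vecs))
rebuilt = sum(terms)

print(len(terms), np.allclose(rebuilt, tensor))
```

The number of nonzero entries of the sparse tensor equals the number of rank one terms, which is why a sparser tensor corresponds to a shorter decomposition.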

Notes

We can reformulate (23) as an optimization problem with a smooth objective function by packing \(\Vert \mathcal {B}\Vert _1\) into the constraints as well. Optimality conditions can then be derived as in [39]. However, it does not seem wise here to destroy the smoothness of the constraints and to take on the heavy task of computing the normal cone of a feasible set whose constraints involve nonsmooth functions.

References

Absil, P.-A., Hosseini, S.: A collection of nonsmooth Riemannian optimization problems. In: Hosseini, S., Mordukhovich, B., Uschmajew, A. (eds.) Nonsmooth Optimization and Its Applications, International Series of Numerical Mathematics, vol. 170, pp. 1–15. Birkhäuser, Cham (2019)

Absil, P.-A., Mahony, R., Sepulchre, R.: Optimization Algorithms on Matrix Manifolds. Princeton University Press, Princeton (2008)

Anandkumar, A., Ge, R., Hsu, D., Kakade, S.M., Telgarsky, M.: Tensor decompositions for learning latent variable models. J. Mach. Learn. Res. 15, 2773–2832 (2014)

Attouch, H., Bolte, J., Svaiter, B.F.: Convergence of descent methods for semi-algebraic and tame problems: proximal algorithms, forward-backward splitting, and regularized Gauss–Seidel methods. Math. Program. 137, 91–129 (2013)

Bader, B.W., Kolda, T.G.: MATLAB Tensor Toolbox Version 2.6, February 2015. http://www.sandia.gov/~tgkolda/TensorToolbox/

Batselier, K., Liu, H., Wong, N.: A constructive algorithm for decomposing a tensor into a finite sum of orthonormal rank-1 terms. SIAM J. Matrix Anal. Appl. 36, 1315–1337 (2015)

Bertsekas, D.P.: Constrained Optimization and Lagrange Multiplier Methods. Athena Scientific, Belmont (1982)

Bochnak, J., Coste, M., Roy, M.-F.: Real Algebraic Geometry. Ergebnisse der Mathematik und ihrer Grenzgebiete, vol. 36. Springer, Berlin (1998)

Bolte, J., Sabach, S., Teboulle, M.: Proximal alternating linearized minimization for nonconvex and nonsmooth problems. Math. Program. 146, 459–494 (2014)

Chen, J., Saad, Y.: On the tensor SVD and the optimal low rank orthogonal approximation of tensors. SIAM J. Matrix Anal. Appl. 30, 1709–1734 (2009)

Chen, Y., Ye, Y., Wang, M.: Approximation hardness for a class of sparse optimization problems. J. Mach. Learn. Res. 20, 1–27 (2019)

Comon, P.: MA identification using fourth order cumulants. Signal Process. 26, 381–388 (1992)

Comon, P.: Independent component analysis, a new concept? Signal Process. 36, 287–314 (1994)

De Lathauwer, L., De Moor, B., Vandewalle, J.: A multilinear singular value decomposition. SIAM J. Matrix Anal. Appl. 21, 1253–1278 (2000)

De Silva, V., Lim, L.-H.: Tensor rank and the ill-posedness of the best low-rank approximation problem. SIAM J. Matrix Anal. Appl. 30, 1084–1127 (2008)

Donoho, D.L.: For most large underdetermined systems of linear equations the minimal \(1\)-norm solution is also the sparsest solution. Commun. Pure Appl. Math. 59, 797–829 (2006)

Franc, A.: Etude Algébrique des Multitableaux: Apports de l’Algèbre Tensorielle, Thèse de Doctorat, Spécialité Statistiques. Univ. de Montpellier II, Montpellier (1992)

Golub, G.H., Van Loan, C.F.: Matrix Computations, 4th edn. Johns Hopkins University Press, Baltimore (2013)

Håstad, J.: Tensor rank is NP-complete. J. Algorithms 11, 644–654 (1990)

Hillar, C.J., Lim, L.-H.: Most tensor problems are NP-hard. J. ACM 60(6), 1–39 (2013)

Horn, R.A., Johnson, C.R.: Matrix Analysis. Cambridge University Press, New York (1985)

Hu, S.: Bounds on strongly orthogonal ranks of tensors, revised manuscript (2019)

Hu, S., Li, G.: Convergence rate analysis for the higher order power method in best rank one approximations of tensors. Numer. Math. 140, 993–1031 (2018)

Ishteva, M., Absil, P.-A., Van Dooren, P.: Jacobi algorithm for the best low multilinear rank approximation of symmetric tensors. SIAM J. Matrix Anal. Appl. 34, 651–672 (2013)

Jiang, B., Dai, Y.H.: A framework of constraint preserving update schemes for optimization on Stiefel manifold. Math. Program. 153, 535–575 (2015)

Jordan, C.: Essai sur la géométrie à n dimensions. Bull. Soc. Math. 3, 103–174 (1875)

Kolda, T.G.: Orthogonal tensor decompositions. SIAM J. Matrix Anal. Appl. 23, 243–255 (2001)

Kolda, T.G.: A counterexample to the possibility of an extension of the Eckart–Young low-rank approximation theorem for the orthogonal rank tensor decomposition. SIAM J. Matrix Anal. Appl. 24, 762–767 (2003)

Kolda, T.G., Bader, B.W.: Tensor decompositions and applications. SIAM Rev. 51, 455–500 (2009)

Kroonenberg, P.M., De Leeuw, J.: Principal component analysis of three-mode data by means of alternating least squares algorithms. Psychometrika 45, 69–97 (1980)

Kruskal, J.B.: Three-way array: rank and uniqueness of trilinear decompositions, with application to arithmetic complexity and statistics. Linear Algebra Appl. 18, 95–138 (1977)

Landsberg, J.M.: Tensors: Geometry and Applications, Graduate Studies in Mathematics, vol. 128. AMS, Providence (2012)

Leibovici, D., Sabatier, R.: A singular value decomposition of a \(k\)-way array for principal component analysis of multiway data, PTA-k. Linear Algebra Appl. 269, 307–329 (1998)

Liu, Y.F., Dai, Y.H., Luo, Z.Q.: On the complexity of leakage interference minimization for interference alignment. In: 2011 IEEE 12th International Workshop on Signal Processing Advances in Wireless Communications, pp. 471–475 (2011)

Mangasarian, O.L., Fromovitz, S.: The Fritz–John necessary optimality conditions in the presence of equality and inequality constraints. J. Math. Anal. Appl. 7, 34–47 (1967)

Martin, C.D.M., Van Loan, C.F.: A Jacobi-type method for computing orthogonal tensor decompositions. SIAM J. Matrix Anal. Appl. 30, 1219–1232 (2008)

Nie, J.: Generating polynomials and symmetric tensor decompositions. Found. Comput. Math. 17, 423–465 (2017)

Robeva, E.: Orthogonal decomposition of symmetric tensors. SIAM J. Matrix Anal. Appl. 37, 86–102 (2016)

Rockafellar, R.T.: Lagrange multipliers and optimality. SIAM Rev. 35, 183–238 (1993)

Rockafellar, R.T., Wets, R.: Variational Analysis. Grundlehren der Mathematischen Wissenschaften, vol. 317. Springer, Berlin (1998)

Zhang, T., Golub, G.H.: Rank-one approximation to high order tensors. SIAM J. Matrix Anal. Appl. 23, 534–550 (2001)

Acknowledgements

This work is partially supported by the National Science Foundation of China (Grant No. 11771328). The author is very grateful to the anonymous referees for their helpful suggestions and comments in revising this paper.

Appendices

Appendix A. Convergence theorem for PAM

Let \(f : \mathbb {R}^{n_1}\times \dots \times \mathbb {R}^{n_k}\rightarrow \mathbb {R}\cup \{+\infty \}\) be a function of the following structure

where Q is a \(C^1\) (continuously differentiable) function with locally Lipschitz continuous gradient, and \(g_i : \mathbb {R}^{n_i}\rightarrow \mathbb {R}\cup \{+\infty \}\) is a proper lower semicontinuous function for each \(i\in \{1,\dots ,k\}\).
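
The description above matches the block-separable setting treated in [4]. As a sketch under that assumption (the display is a reconstruction from the surrounding text, with \(\mathbf {x}=(\mathbf {x}_1,\dots ,\mathbf {x}_k)\) and \(\mathbf {x}_i\in \mathbb {R}^{n_i}\)), such a structure can be written as

\[
f(\mathbf{x}) \;=\; Q(\mathbf{x}_1,\dots,\mathbf{x}_k) \;+\; \sum_{i=1}^{k} g_i(\mathbf{x}_i),
\]

so that the coupling between the blocks enters only through the smooth part Q, while each possibly nonsmooth term \(g_i\) acts on a single block.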

We introduce the following algorithmic scheme to solve the optimization problem

Since f is proper and lower semicontinuous, \(\mathbf {x}\) is an optimizer of this minimization problem only if it is a critical point of f, i.e., \(0\in \partial f(\mathbf {x})\).

Algorithm A.1

general PAM

Step 1 can be implemented through several methods. In particular, (36), (37) and (38) are fulfilled if for all \(j\in \{1,\dots ,k\}\), \(\mathbf {x}^s_j\) is taken as a minimizer of the optimization problem

We now state the global convergence of Algorithm A.1 for a wide class of objective functions [4, Theorem 6.2].

Theorem A.2

(Proximal Alternating Minimization) Let f be a Kurdyka–Łojasiewicz function and bounded from below. Let \(\{\mathbf {x}^s\}\) be a sequence produced by Algorithm A.1. If \(\{\mathbf {x}^s\}\) is bounded, then it converges to a critical point of f.
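
As an illustration of the proximal alternating minimization idea, the following Python sketch applies it to a toy problem chosen for this example only: minimize \(\frac{1}{2}\Vert \mathbf {x}-\mathbf {y}\Vert ^2+\lambda \Vert \mathbf {x}\Vert _1\) subject to \(\Vert \mathbf {y}\Vert =1\). The toy objective, the function names, and the parameter values `lam` and `alpha` are assumptions and are not the problem studied in the article. The toy shares two features with that setting: an \(l_1\) term in one block, and a norm constraint in the other, and both proximal subproblems have closed-form minimizers (soft thresholding and normalization).

```python
import numpy as np


def soft_threshold(v, tau):
    """Entrywise minimizer of 0.5*(x - v)^2 + tau*|x|."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def objective(x, y, lam):
    """Toy objective: 0.5*||x - y||^2 + lam*||x||_1 (y is kept on the unit sphere)."""
    return 0.5 * np.sum((x - y) ** 2) + lam * np.sum(np.abs(x))


def pam_toy(x, y, lam=0.1, alpha=1.0, iters=50):
    """Alternate exact minimization of each block plus a proximal term (alpha/2)*||. - old||^2."""
    y = y / np.linalg.norm(y)  # start from a feasible point
    history = [objective(x, y, lam)]
    for _ in range(iters):
        # x-block: completing the square gives a soft-thresholding step.
        x = soft_threshold((y + alpha * x) / (1.0 + alpha), lam / (1.0 + alpha))
        # y-block: on the unit sphere the subproblem maximizes <y, x + alpha*y_old>.
        v = x + alpha * y
        norm_v = np.linalg.norm(v)
        if norm_v > 0.0:  # keep the old y in the degenerate case v = 0
            y = v / norm_v
        history.append(objective(x, y, lam))
    return x, y, history


rng = np.random.default_rng(0)
x_final, y_final, history = pam_toy(rng.standard_normal(5), rng.standard_normal(5))
print(history[0], history[-1])
```

Because each block update is a global minimizer of its proximal subproblem, the objective values in `history` cannot increase, and `y_final` stays on the unit sphere throughout. This mirrors, on a much smaller scale, the feasibility-preserving behaviour described for the factor matrices.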

Appendix B. Nonsmooth Lagrange multiplier

The following material can be found in [40, Chapter 10].

Let \(X\subseteq \mathbb {R}^n\) be nonempty and closed, \(f_0 : \mathbb {R}^n\rightarrow \mathbb {R}\) be locally Lipschitz continuous, \(F : \mathbb {R}^n\rightarrow \mathbb {R}^m\) with \(F:=(f_1,\dots ,f_m)\) and each \(f_i\) locally Lipschitz continuous, and \(\theta : \mathbb {R}^m\rightarrow \mathbb {R}\cup \{\pm \infty \}\) be proper, lower semicontinuous, convex with effective domain D.

Consider the following optimization problem

If \(\overline{\mathbf {x}}\) is a locally optimal solution to (40) at which the following constraint qualification is satisfied

then there exists a vector \(\overline{\mathbf {y}}\) such that

A vector \(\overline{\mathbf {y}}\) satisfying (42) is called a Lagrange multiplier, and the pair \((\overline{\mathbf {x}},\overline{\mathbf {y}})\) satisfying (42) is a Karush–Kuhn–Tucker pair with \(\overline{\mathbf {x}}\) a KKT point. Let \(M(\overline{\mathbf {x}})\) be the set of Lagrange multipliers for a KKT point \(\overline{\mathbf {x}}\). Under the constraint qualification (41), the set \(M(\overline{\mathbf {x}})\) is compact.

A particular case is \(\theta =\delta _{\{\mathbf {0}\}}\), the indicator function of the set \(\{\mathbf {0}\}\subset \mathbb {R}^m\). Then problem (40) reduces to

If each \(f_i\) is continuously differentiable for \(i\in \{1,\dots ,m\}\), then the constraint qualification is

It is the basic constraint qualification discussed in [39], an extension of the Mangasarian–Fromovitz constraint qualification [35].
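
For orientation, and assuming the standard form of the basic constraint qualification in [39] for the equality-constrained case with continuously differentiable \(f_i\) (the display below is a sketch under that assumption, not a quotation), the condition at a feasible point \(\overline{\mathbf {x}}\) asks that

\[
0 \in \sum_{i=1}^{m} y_i \nabla f_i(\overline{\mathbf{x}}) + N_X(\overline{\mathbf{x}})
\quad\Longrightarrow\quad
\mathbf{y} = \mathbf{0}.
\]

When \(X=\mathbb {R}^n\), the normal cone \(N_X(\overline{\mathbf {x}})\) is \(\{\mathbf {0}\}\), and the condition says that the gradients \(\nabla f_1(\overline{\mathbf {x}}),\dots ,\nabla f_m(\overline{\mathbf {x}})\) are linearly independent, which is what the Mangasarian–Fromovitz constraint qualification requires when only equality constraints are present.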

The optimality condition (42) becomes

or in a more familiar form as

Appendix C. Proof of Proposition 3.3

Proof

It follows from (16) that \(U^{(i)}_s\in \mathbb {O}(n_i)\) for all \(i\in \{1,\dots ,k\}\) and \(s=1,2,\dots \) and hence the sequence \(\{\mathbb {U}_s\}\) is bounded.

Let \(\Xi _s\in \partial L_{\rho _s}(\mathbb {U}_s,\mathcal {B}_s;\mathcal {X}_s)\) be such that \(\Vert \Xi _s\Vert \le \epsilon _s\) which is guaranteed by (17). Thus,

for some \(\mathcal {W}_s\in \partial \Vert \mathcal {B}_s\Vert _1\), and \(B^{(i)}_s\in N_{\mathbb {O}(n_i)}(U^{(i)}_s)\) for all \(i\in \{1,\dots ,k\}\).

It follows from the last row in (45) and (17) that

By the fact that \(\mathcal {W}_s\) is uniformly bounded (cf. (22)), and \(\epsilon _s\rightarrow 0\), we conclude that \(\rho _s\big (\mathcal {B}_s-(U_s^{(1)},\dots ,U_s^{(k)})\cdot \mathcal {A}-\frac{1}{\rho _s}\mathcal {X}_s\big )\) is bounded. Therefore, the sequence \(\{\mathcal {X}_{s+1}\}\) is bounded by the multiplier update rule (18).

Since \(\mathcal {W}_s\) and \(\mathcal {X}_s\) are both bounded, it follows from (46) that \(\rho _s\big (\mathcal {B}_s-(U_s^{(1)},\dots ,U_s^{(k)})\cdot \mathcal {A}\big )\) is bounded. As \(\{\rho _s\}\) is a nondecreasing sequence of positive numbers and \(\{\mathbb {U}_s\}\) is bounded, we must have that the sequence \(\{\mathcal {B}_s\}\) is bounded.

In conclusion, the sequence \(\{\mathbb {U}_s,\mathcal {B}_s,\mathcal {X}_s\}\) is bounded.

For the feasibility, note that \(\mathbb {U}_*\) satisfies the orthogonality by (16). The rest of the proof is divided into two parts, according to the boundedness of the sequence \(\{\rho _s\}\).

Part I. Suppose first that the penalty sequence \(\{\rho _s\}\) is bounded. By the penalty parameter update rule (19), it follows that \(\rho _s\) stabilizes after some \(s_0\), i.e., \(\rho _s=\rho _{s_0}\) for all \(s\ge s_0\). Thus,

The feasibility result then follows from a standard continuity argument.

Part II. In the following, we assume that \(\rho _s\rightarrow \infty \) as \(s\rightarrow \infty \).

Likewise, it follows from the last row in (45) and (17) that

By the fact that \(\mathcal {W}_s\) and \(\mathcal {X}_s\) are both bounded, \(\rho _s\rightarrow \infty \), and \(\epsilon _s\rightarrow 0\), we have that

Thus, by continuity, we have that \((\mathbb {U}_*,\mathcal {B}_*)\) is a feasible point.

In the following, we show that \((\mathbb {U}_*,\mathcal {B}_*)\) is a KKT point. Let

It follows from the above analysis that \(\{\mathcal {M}_s\}\) is bounded. By the multiplier update rule (18), the system (45) can be rewritten as

The boundedness of \(\{\mathbb {U}_s,\mathcal {B}_s,\mathcal {X}_s,\mathcal {M}_s\}\) and \(\{\Xi _s\}\) implies the boundedness of each \(\{B_s^{(i)}\}\) for all \(i\in \{1,\dots ,k\}\) as well. We assume without loss of generality that

for an infinite index set \(\mathcal {K}\subseteq \{1,2,\dots \}\), where

Taking limits on both sides of (49) along \(\mathcal {K}\), we then have

where \(V^{(i)}_*\) is defined as in (21) with each \(U^{(i)}\) replaced by \(U^{(i)}_*\). By the closedness of subdifferentials, we have

and

Since each \(N_{\mathbb {O}(n_i)}(U^{(i)}_*)\) is a linear subspace, we have shown that \((\mathbb {U}_*,\mathcal {B}_*)\) is a KKT point of (12) with Lagrange multiplier \(\mathcal {X}_*+\mathcal {M}_*\) (cf. (25)). The proof is complete. \(\square \)

Appendix D. Proof of Proposition 4.2

Proof

It is known that for all \(i\in \{1,\dots ,k\}\) each orthogonal group \(\mathbb {O}(n_i)\) is an algebraic set, defined by a system of polynomial equations. Therefore, \(\mathbb {O}(n_i)\) is a semi-algebraic set and its indicator function is semi-algebraic [8]. The \(l_1\)-norm \(\Vert \cdot \Vert _1\) is also semi-algebraic. Also known is that each semi-algebraic function is a Kurdyka–Łojasiewicz function (cf. [9, Appendix]). Thus, as a summation of the \(l_1\)-norm, the indicator functions of the orthogonal groups, and polynomials, the augmented Lagrangian function \(L_{\rho }(\cdot ,\cdot ;\mathcal {X})\) is a Kurdyka–Łojasiewicz function.
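
To make the semi-algebraic descriptions used above explicit, here is a short worked illustration (the transpose notation \(U^{\mathsf{T}}\) and the identity matrix I are notational assumptions). The orthogonal group is the common zero set of finitely many quadratic polynomials in the entries of U,

\[
\mathbb{O}(n) \;=\; \{\, U \in \mathbb{R}^{n\times n} \;:\; (U^{\mathsf{T}} U - I)_{pq} = 0,\ 1 \le p \le q \le n \,\},
\]

and the graph of the absolute value function is cut out by one polynomial equation and one polynomial inequality,

\[
\{\, (t,s) \in \mathbb{R}^2 \;:\; s = |t| \,\} \;=\; \{\, (t,s) \in \mathbb{R}^2 \;:\; s^2 - t^2 = 0,\ s \ge 0 \,\}.
\]

Since the \(l_1\)-norm is a finite sum of absolute values of the entries, and finite sums of semi-algebraic functions are semi-algebraic, the \(l_1\)-norm is semi-algebraic as well.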

If the iteration sequence \(\{(\mathbb {U}_s,\mathcal {B}_s)\}\) generated by Algorithm 4.1 is bounded, and the function \(L_{\rho }(\cdot ,\cdot ;\mathcal {X})\) is bounded from below, then the sequence \(\{(\mathbb {U}_s,\mathcal {B}_s)\}\) converges by Theorem A.2.

For any given \(\mathcal {X}\), it follows immediately from (14) that the function \(L_{\rho }(\cdot ,\cdot ;\mathcal {X})\) is bounded from below, since

In the language of Appendix A, the variable \(\mathcal {B}\) is the block variable with index \(j=0\), and \(U^{(j)}\) is the j-th block variable for \(j\in \{1,\dots ,k\}\). Then,

and the function Q is defined naturally to comprise \(L_\rho \) in (50).

We first show that (38) is satisfied. By (26), we know that (38) is satisfied by \(\alpha _j=\overline{c}\) for all \(j\in \{0,1,\dots ,k\}\).

It follows from (26), (28) and (29) that

Summing up these inequalities, we have

Therefore, the sequence \(\{L_{\rho }(\mathbb {U}_s,\mathcal {B}_s;\mathcal {X})\}\) monotonically decreases to a finite limit.

On the other hand, since each component matrix of \(\mathbb {U}_s\) is an orthogonal matrix, the sequence \(\{\mathbb {U}_s\}\) is bounded. Suppose that the sequence \(\{\mathcal {B}_s\}\) is unbounded. Then, it follows from (50) that the sequence \(\{L_{\rho }(\mathbb {U}_s,\mathcal {B}_s;\mathcal {X})\}\) should diverge to infinity, which is an immediate contradiction. Thus, the iteration sequence \(\{(\mathbb {U}_s,\mathcal {B}_s)\}\) must be bounded, and hence converges by Theorem A.2.

In the following, we show that \(\Vert \Theta _s\Vert \rightarrow 0\) as \(s\rightarrow \infty \). First of all, we derive an upper bound estimate for \(\Vert V^{(j)}_s-\tilde{V}^{(j)}_s\Vert \) as

where the second inequality follows from the fact that \(U^{(i)}_t\in \mathbb {O}(n_i)\) for all \(i\in \{1,\dots ,k\}\) and \(t=1,2,\dots \), and the third from a standard induction.

Likewise, we have

Thus, we have

Since the iteration sequence \(\{(\mathbb {U}_s,\mathcal {B}_s)\}\) converges, we conclude that \(\Vert \Theta _s\Vert \rightarrow 0\) as \(s\rightarrow \infty \). As \(\epsilon >0\) is a given parameter, Algorithm 4.1 terminates after finitely many iterations. \(\square \)

Cite this article

Hu, S. An inexact augmented Lagrangian method for computing strongly orthogonal decompositions of tensors. Comput Optim Appl 75, 701–737 (2020). https://doi.org/10.1007/s10589-019-00128-3

DOI: https://doi.org/10.1007/s10589-019-00128-3