Abstract

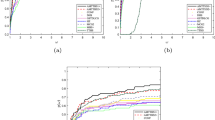

In this paper we propose a new Riemannian conjugate gradient method for optimization on the Stiefel manifold. We introduce two novel vector transports associated with the retraction constructed by the Cayley transform. Both satisfy the Ring-Wirth nonexpansive condition, which is fundamental to the convergence analysis of Riemannian conjugate gradient methods, and one of them is also isometric. It is known that the Ring-Wirth nonexpansive condition does not hold for traditional vector transports such as the differentiated retractions of the QR and polar decompositions. Practical formulae of the new vector transports for low-rank matrices are obtained. Dai’s nonmonotone conjugate gradient method is generalized to the Riemannian case, and global convergence of the new algorithm is established under standard assumptions. Numerical results on a variety of low-rank test problems demonstrate the effectiveness of the new method.
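To make the notion of a retraction constructed by the Cayley transform concrete, the following is a minimal NumPy sketch of the commonly used Cayley-type retraction on the Stiefel manifold. It is an illustration under stated assumptions, not the paper's implementation: the function names `project_tangent` and `cayley_retraction`, the step parameter `t`, and the test dimensions are all hypothetical, and the sketch forms a dense n-by-n skew-symmetric matrix rather than using the low-rank formulae the abstract refers to. The vector transports themselves are not reproduced here.

```python
import numpy as np


def project_tangent(X, G):
    """Project G onto the tangent space at X, i.e. enforce X^T Z + Z^T X = 0."""
    XtG = X.T @ G
    return G - X @ (XtG + XtG.T) / 2


def cayley_retraction(X, Z, t=1.0):
    """Move from X along tangent vector Z via a Cayley transform (dense sketch).

    W is skew-symmetric, so (I - t/2 W)^{-1} (I + t/2 W) is orthogonal and the
    result keeps orthonormal columns. For tangent Z one has W X = Z, so the
    curve leaves X with initial velocity Z.
    """
    n = X.shape[0]
    I = np.eye(n)
    P = I - 0.5 * X @ X.T
    W = P @ Z @ X.T - X @ Z.T @ P  # skew-symmetric by construction
    return np.linalg.solve(I - 0.5 * t * W, (I + 0.5 * t * W) @ X)


# Hypothetical small test problem: a random point and tangent vector.
rng = np.random.default_rng(0)
n, p = 8, 3
X, _ = np.linalg.qr(rng.standard_normal((n, p)))
Z = project_tangent(X, rng.standard_normal((n, p)))

Y = cayley_retraction(X, Z, t=0.5)
feasibility_error = np.linalg.norm(Y.T @ Y - np.eye(p))

# Finite-difference check that the curve starts in direction Z.
h = 1e-6
first_order_error = np.linalg.norm((cayley_retraction(X, Z, t=h) - X) / h - Z)
```

In this sketch `feasibility_error` measures how far the new point is from having orthonormal columns, and `first_order_error` checks that the retraction agrees with the tangent direction to first order. The dense linear solve costs O(n^3), which is why low-rank formulae matter when p is much smaller than n.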

References

Absil, P.-A., Mahony, R., Sepulchre, R.: Optimization Algorithms on Matrix Manifolds. Princeton University Press, Princeton (2008)

Absil, P.-A., Malick, J.: Projection-like retractions on matrix manifolds. SIAM J. Optim. 22, 135–158 (2012)

Balogh, J., Csendes, T., Rapcsák, T.: Some global optimization problems on Stiefel manifolds. J. Glob. Optim. 30, 91–101 (2004)

Barzilai, J., Borwein, J.M.: Two-point step size gradient methods. IMA J. Numer. Anal. 8, 141–148 (1988)

Dai, Y.: A nonmonotone conjugate gradient algorithm for unconstrained optimization. J. Syst. Sci. Complex. 15, 139–145 (2002)

Dai, Y., Yuan, Y.: A nonlinear conjugate gradient method with a strong global convergence property. SIAM J. Optim. 10, 177–182 (1999)

Dennis, J.E., Schnabel, R.B.: Numerical Methods for Unconstrained Optimization and Nonlinear Equations. Prentice-Hall, Englewood Cliffs. Reprinted by SIAM Publications (1993)

do Carmo, M.P.: Riemannian geometry. Translated from the second Portuguese edition by Francis Flaherty. Mathematics: Theory & Applications. Birkhäuser Boston Inc., Boston (1992)

Edelman, A., Arias, T.A., Smith, S.T.: The geometry of algorithms with orthogonality constraints. SIAM J. Matrix Anal. Appl. 20, 303–353 (1998)

Golub, G.H., Van Loan, C.F.: Matrix Computations, 4th edn. The Johns Hopkins University Press, Baltimore (2013)

Gower, J.C., Dijksterhuis, G.B.: Procrustes Problems, Volume 30 of Oxford Statistical Science Series. Oxford University Press, Oxford (2004)

Grubišić, I., Pietersz, R.: Efficient rank reduction of correlation matrices. Linear Algebra Appl. 422, 629–653 (2007)

Higham, N.J.: Functions of Matrices: Theory and Computation. SIAM, Philadelphia (2008)

Huang, W.: Optimization algorithms on Riemannian manifolds with applications. Ph.D. thesis, Department of Mathematics, Florida State University (2013)

Huang, W., Absil, P.-A., Gallivan, K.A.: A Riemannian symmetric rank-one trust-region method. Math. Program. 150, 179–216 (2015)

Huang, W., Absil, P.-A., Gallivan, K.A.: Intrinsic representation of tangent vectors and vector transports on matrix manifolds. Technical Report UCL-INMA-2016.08

Huang, W., Gallivan, K.A., Absil, P.-A.: A Broyden class of quasi-Newton methods for Riemannian optimization. SIAM J. Optim. 25, 1660–1685 (2015)

Jiang, B., Dai, Y.: A framework of constraint preserving update schemes for optimization on Stiefel manifold. Math. Program. 153, 535–575 (2015)

Li, Q., Qi, H.: A sequential semismooth Newton method for the nearest low-rank correlation matrix problem. SIAM J. Optim. 21, 1641–1666 (2011)

Liu, X., Wen, Z., Zhang, Y.: Limited memory block Krylov subspace optimization for computing dominant singular value decompositions. SIAM J. Sci. Comput. 35, 1641–1668 (2013)

Liu, X., Wen, Z., Wang, X., Ulbrich, M., Yuan, Y.: On the analysis of the discretized Kohn–Sham density functional theory. SIAM J. Numer. Anal. 53, 1758–1785 (2015)

Ngo, T.T., Bellalij, M., Saad, Y.: The trace ratio optimization problem. SIAM Rev. 54, 545–569 (2012)

Nishimori, Y., Akaho, S.: Learning algorithms utilizing quasi-geodesic flows on the Stiefel manifold. Neurocomputing 67, 106–135 (2005)

Nocedal, J., Wright, S.J.: Numerical Optimization, 2nd edn. Springer, New York (2006)

Ring, W., Wirth, B.: Optimization methods on Riemannian manifolds and their application to shape space. SIAM J. Optim. 22, 596–627 (2012)

Saad, Y.: Iterative Methods for Sparse Linear Systems, 2nd edn. SIAM, Philadelphia (2003)

Saad, Y.: Numerical Methods for Large Eigenvalue Problems, Revised Edition. SIAM, Philadelphia (2011)

Sato, H.: A Dai–Yuan-type Riemannian conjugate gradient method with the weak Wolfe conditions. Comput. Optim. Appl. 64, 101–118 (2016)

Sato, H., Iwai, T.: A Riemannian optimization approach to the matrix singular value decomposition. SIAM J. Optim. 23, 188–212 (2013)

Sato, H., Iwai, T.: A new, globally convergent Riemannian conjugate gradient method. Optimization 64, 1011–1031 (2015)

Stiefel, E.: Richtungsfelder und Fernparallelismus in n-dimensionalen Mannigfaltigkeiten. Comment. Math. Helv. 8, 305–353 (1935)

Theis, F.J., Cason, T.P., Absil, P.-A.: Soft dimension reduction for ICA by joint diagonalization on the Stiefel manifold. In: Proceedings of the 8th International Conference on Independent Component Analysis and Signal Separation, vol. 5441, pp. 354–361 (2009)

Van Loan, C.F.: The ubiquitous Kronecker product. J. Comput. Appl. Math. 123, 85–100 (2000)

Wen, Z., Milzarek, A., Ulbrich, M., Zhang, H.: Adaptive regularized self-consistent field iteration with exact Hessian for electronic structure calculations. SIAM J. Sci. Comput. 35, A1299–A1324 (2013)

Wen, Z., Yin, W.: A feasible method for optimization with orthogonality constraints. Math. Program. 142, 397–434 (2013)

Wen, Z., Yang, C., Liu, X., Zhang, Y.: Trace-penalty minimization for large-scale eigenspace computation. J. Sci. Comput. 66, 1175–1203 (2016)

Yuan, Y.: Subspace techniques for nonlinear optimization. In: Jeltsch, R., Li, D.O., Sloan, I.H. (eds.) Some Topics in Industrial and Applied Mathematics (Series in Contemporary Applied Mathematics CAM 8), pp. 206–218. Higher Education Press, Beijing (2007)

Zhang, H., Hager, W.W.: A nonmonotone line search technique and its application to unconstrained optimization. SIAM J. Optim. 14, 1043–1056 (2004)

Zhang, X., Zhu, J., Wen, Z., Zhou, A.: Gradient type optimization methods for electronic structure calculations. SIAM J. Sci. Comput. 36, C265–C289 (2014)

Zhang, L., Li, R.: Maximization of the sum of the trace ratio on the Stiefel manifold, I: theory. Sci. China Math. 57, 2495–2508 (2014)

Zhang, L., Li, R.: Maximization of the sum of the trace ratio on the Stiefel manifold, II: computation. Sci. China Math. 58, 1549–1566 (2015)

Zhu, X.: A feasible filter method for the nearest low-rank correlation matrix problem. Numer. Algorithms 69, 763–784 (2015)

Zhu, X., Duan, C.: Gradient methods with approximate exponential retractions for optimization on the Stiefel manifold. Optimization (under review)

Acknowledgements

This research is supported by the National Natural Science Foundation of China (Nos. 11601317 and 11526135) and the University Young Teachers’ Training Scheme of Shanghai (No. ZZsdl15124). The author is very grateful to the coordinating editor and two anonymous referees for their detailed and valuable comments and suggestions, which helped improve the quality of this paper. The author would also like to thank Prof. Zaiwen Wen and Prof. Wotao Yin for sharing their MATLAB code for OptStiefelGBB online.

About this article

Cite this article

Zhu, X. A Riemannian conjugate gradient method for optimization on the Stiefel manifold. Comput Optim Appl 67, 73–110 (2017). https://doi.org/10.1007/s10589-016-9883-4

DOI: https://doi.org/10.1007/s10589-016-9883-4

Keywords

- Riemannian optimization

- Stiefel manifold

- Conjugate gradient method

- Retraction

- Vector transport

- Cayley transform