Abstract

The cloud service provider market has recently expanded its offerings by providing edge as a service. This involves offering resources equivalent to those already available in the cloud, but through data centers located closer to the end user, with the goal of improving service latencies. Application providers face the challenge of selecting appropriate resources, both from the edge and cloud, to deploy their applications in a way that minimizes deployment costs while satisfying latency requirements. This paper presents Edarop (EDge ARchitecture OPtimization), an innovative orchestration mechanism for the optimal allocation of virtual machines in geographically distributed edge and cloud infrastructures. Edarop is capable of handling different edge and cloud vendors, each offering various types of VMs in different regions, with different prices, and network latencies. It also supports multiple simultaneous applications with different latency requirements and load profiles. Edarop employs Integer Linear Programming (ILP) to ensure the globally optimal solution within a reasonable time frame for the considered use cases. Several variants of the mechanism are provided, depending on whether the objective is to minimize cost, response times, or both. These variants are compared to each other and to alternative approaches, with the results showing that, unlike other methods, Edarop consistently respects latency constraints while minimizing the proposed objectives.

1 Introduction

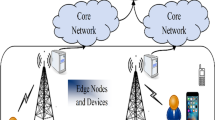

The IaaS public cloud market has been traditionally dominated by a few major vendors (such as Amazon Web Services, Google Cloud, and Microsoft Azure) that provided users with the ability to rent computing resources, such as processing power, storage, and network connectivity, on an as-needed basis. These cloud services are mainly based in large, centralized datacenters with a growing number of points of presence in different worldwide regions. More recently, edge computing has emerged in response to the growing demand for low latency and high performance, as well as the need for real-time data processing of modern applications. Edge computing involves processing data at the edge of a network, close to the source of data generation, rather than in the cloud. Although the edge market is still relatively nascent, it is expected to expand significantly in the coming years, showing a compound annual growth rate (CAGR) of almost 38% from 2023 to 2030 (Footnote 1). Many companies, including large cloud providers, telco companies, or regional datacenter providers, are starting to offer edge resources as a service (including computing, storage and networks) close to the final users. For example, AWS (Amazon Web Services) is now offering two new types of zones, Local and Wavelength Zones (Footnote 2), with lower latency than traditional cloud regions. Local Zones are an extension of an AWS Region to place resources in multiple locations closer to end users, and Wavelength Zones deploy standard AWS compute and storage services to the edge of telecommunication carriers’ 5G networks. Other providers, such as Equinix Metal (Footnote 3), specialize in offering a range of edge infrastructure solutions, including virtual infrastructure and bare-metal infrastructure, to support the deployment of edge computing solutions, with many points of presence around the world.
Also, many telco companies, such as Telefónica (Footnote 4) or Vodafone (Footnote 5), are starting to offer multi-access edge computing architectures that enable the placement of edge resources, methods and technologies in data centers located within the telco operator network.

As a consequence of this growth in the edge market, we are seeing a significant increase in the number of edge and cloud providers, in the number of zones and regions available, and in the variety of resource or instance types available (both virtual and bare-metal), pricing schemes and network latencies. Therefore, for an application provider with multiple geo-distributed users, selecting the most appropriate cloud and edge resources to optimize, for example, the total cost of the deployment while meeting a given latency limit for each application, is an important challenge.

In this paper, we present a novel orchestration mechanism for the optimal allocation of virtual machines (VMs) over geographically distributed cloud and edge infrastructures, called Edarop (EDge ARchitecture OPtimization). This orchestrator can be used and integrated with any existing edge/cloud management platform or simulator, and can deal with different cloud and edge vendors, regions or zones, each offering different instance types (in terms of CPU, memory and storage capacity) and prices, and exhibiting different network latencies with end users. Edarop can also manage simultaneous applications, with different latency requirements, and different workload profiles. For a given application, its workload is characterized by the number of user requests received in each time interval from a given geographic region, assuming that users may be distributed in different geographic regions. We also assume that, associated with each user region, there is at least one edge data center that can serve requests from users located in that region. In addition, there also may be several cloud data centers, so that user requests can also be redirected to these remote clouds if needed.

The goal of Edarop is to find the best VM allocation, instance type selection and workload distribution, across various geographically distributed cloud and edge data centers, that meet the demand of all applications, while minimizing some optimization criteria, such as the total infrastructure cost or the average application latency, and subject to some constraints, such as a budget limit or the maximum latency allowed per application. The approach adopted in Edarop is based on an Integer Linear Programming (ILP) formulation, since, for the considered use cases, a global optimal solution can be reached in a reasonable time interval despite the problem being NP-hard. These use cases are based on realistic application workload information obtained from actual traces [1], and on realistic performance and latency values measured on actual edge and cloud platforms (local and cloud zones from AWS).

The main contributions of this work are the following:

- Several mathematical models with different combinations of optimization criteria (cost and response time) to allocate VMs in multiple edge and cloud regions from the point of view of an application provider (which in this context is a client of the edge and cloud providers). The total response time has been modelled taking into account the network latency from multiple user regions and the response time in the VMs.
- Implementation of the proposed mathematical models to allocate a set of VMs, enabling efficient management of application workloads in edge architectures.
- A set of experiments to compare the different models with each other and with other approaches. All data and code required to repeat the experiments have been made publicly available.

The rest of this paper is organized as follows. Section 2 provides a discussion of related works. Then, Sect. 3 presents the mathematical models used, while Sect. 4 evaluates the different variants of these models. Finally, Sect. 5 summarizes the conclusions of this study and suggests possible directions for future work.

2 Related work

Cost optimization is an established research area in cloud computing [2] and, in recent years, there has been a growing interest in optimization for edge computing and the related field of fog computing. Many approaches have been proposed with different objectives, architectures and optimization techniques. These three criteria will be used to classify the proposals in the state of the art, which are summarized in Table 1.

The first criterion is the objective of the approach. Two of the most common are considered in this work: minimization of monetary cost (simply referred to as “C (cost)” in Table 1) and minimization of response time, sometimes called end-to-end latency or application latency. Another common objective is energy consumption minimization. Less common objectives include resource usage minimization, reliability and privacy preservation. These objectives can be addressed individually or jointly in multi-objective approaches.

The second criterion is the architecture of the system. One of the key differences is whether one or multiple regions are considered, both in the edge and in the cloud. If multiple regions are considered, the problem consists not only in deciding whether to use the edge or the cloud, but also in deciding which region to use. In addition, some approaches take into account that the users can move between regions. This is typical of what has been called the “mobile edge computing” (MEC) paradigm [29]. Finally, the problem may address distributing different elements: applications, requests, tasks, functions in a FaaS (Function as a Service) platform, or data.

The third criterion is the optimization technique used. The most common are heuristic algorithms, such as genetic algorithms, particle swarm optimization, tabu search or greedy algorithms. Other less common approaches include dynamic programming, Lyapunov optimization, reinforcement learning or variations of linear programming.

One of the fields where edge computing is most used is the Internet of Things (IoT). Nan et al. [13] proposes a technique to optimize the placement of IoT applications in fog or cloud regions. It considers that the fog layer has no cost but is less powerful than the cloud. It proposes an algorithm called “Unit-slot optimization” that uses Lyapunov optimization to minimize the cost while guaranteeing the response time. Another work focused on IoT that only considers one cloud is [4], which minimizes deadline misses by moving jobs from the fog to the cloud. The authors consider that the fog nodes have less computing power but also lower latency than the cloud. They propose a Mixed Integer Programming (MIP) model and a Genetic Algorithm (GA). Brogi et al. [6] proposes mixing the GA approach of [30] with the Monte Carlo simulations proposed in [31] to find feasible solutions taking into account Quality of Service (QoS), cost and resource usage, although considering resources in the cloud nodes to be infinite. In [14], an Elitism-based GA is proposed to optimize response time, cost and energy consumption in a fog architecture for IoT services deployed using containers. However, the technique only considers how to distribute the services between different fog devices and it does not consider placement in the cloud. Ali et al. [5] optimizes both the response time and the cost using a Discrete Non-dominated Sorting GA II (DNSGA-II), but they do not consider different regions for the users.

Many works focus on tasks organized in Directed Acyclic Graphs (DAGs). One of the first works in this line is [18], which uses a heuristic based on a utility function of cost and response time. This work is extended in [19], where an algorithm called CMaS (Cost-Makespan aware Scheduling) is proposed. In both works, the location of the users is not taken into account and the communication times only depend on the type of node. Röger et al. [20] formulates, using a DAG, the integer linear programming model for a latency distribution problem and then proposes a greedy heuristic. It minimizes cost while meeting latency constraints, but it is limited to stream processing applications deployed in heterogeneous infrastructures. Another work that uses a DAG is [28], which proposes a two-step heuristic algorithm to optimize both cost and response time using a utility function, but only takes into account communication costs in the fog layer. Guevara and Fonseca [8] introduces different classes of services and proposes two algorithms based on ILP, but only minimizes response time.

This limitation of only optimizing response time applies to other works, such as [22] or [3]. Memari et al. [11] adds memory consumption to the objectives to minimize, but does not consider cost either.

Xu et al. [27] proposes using geo-distributed data centers in the edge and the cloud to minimize latency and cost and provide fault tolerance using a reinforcement learning approach. However, it is only applied to data streaming applications. The approach in [26] takes into account geo-distributed edge clouds, but the objective is to maximize resource revenue and does not minimize response time. Wang and Varghese [25] proposes using reinforcement learning to optimize QoS and cost, but it only considers which services of an application are scheduled in the fog or the cloud, without considering different regions.

Some works include energy among their objectives. Li et al. [10] proposes a set of algorithms to minimize cost, response time and energy consumption, but does not take into account the cost of the edge nodes and only considers one cloud region. Saif et al. [21] proposes using Grey Wolf Optimization for minimizing response time and energy consumption, but does not consider cost. Tychalas et al. [24] proposes using an algorithm based on utilization thresholds for distributing Bag-of-Tasks workloads optimizing cost and energy, but does not consider response time. Pasdar et al. [15] considers cost in the cloud nodes and treats energy as an additional concern when using public clouds. This concern is also considered in [23], in addition to cost and deadline miss minimization. A two-step algorithm is proposed: first clustering, where deep learning is found to be the best approach, and then scheduling, where Earliest Deadline First is used. However, only one edge and one cloud region are taken into account.

Some recent works consider the use of containers in microservices architectures deployed in the edge. Guisheng et al. [9] uses Particle Swarm Optimization to optimize the transmission latency, the average number of failing requests and the imbalance of resource usage, but not cost. On the other hand, [7] does consider cost in the allocation of microservices to edge nodes, but not response time. Another limitation of this work is that only edge nodes can run containers and the cloud layer is only used for monitoring edge servers.

Another recent advance is deploying applications in FaaS architectures that include an edge layer. Pelle [16] proposes a technique based on dynamic programming to optimize the cost with end-to-end latency constraints by leveraging AWS Lambda and Greengrass platforms. It only considers one edge region and one cloud region. Pelle [17] extends this work to consider user mobility with several edge regions and the distribution of data stores. Moreno et al. [12] studies how to limit the impact of the cold-start problem with instance reuse and instance pre-warming in serverless applications. It proposes two heuristic algorithms, edge first and warm first, to optimize the response time and the resource usage, but it does not take cost into account.

In conclusion, despite the extensive research on VM allocation, our review of the related work reveals a gap in the existing approaches. Specifically, no current method proposes an optimal solution for cost and response time, considering that users, edge nodes, and cloud nodes may be located in different regions. As indicated in Table 1, we have not found any study that offers an optimal solution for cost and response time across multiple regions in both the edge and the cloud, denoted as C, RT, (n, n), LP in the table’s nomenclature.

Existing methodologies have limitations, such as the assumption of a single region in the edge or the cloud, the disregard of edge costs, or the focus on distributing containers or functions rather than VMs. The most relevant studies we have identified are [27] and [28]. However, [27] is specific to streaming applications, and [28] is more challenging to apply than our proposal because it requires defining a DAG. Furthermore, neither of these studies proposes a formulation for the optimal solution; both resort to heuristic approaches instead. Therefore, to the best of our knowledge, our paper is the first to propose a formulation to obtain the optimal solution to this problem, thereby addressing these limitations.

3 System model

3.1 Overview

This section details the model proposed for cost and response time optimization of edge architectures. As an overview, the inputs for the model are the infrastructure options (edge and cloud regions, VM types, prices) and the workload for a given scheduling time slot, i.e., for a period of time where the VMs used are not changed. The workload is given as the number of requests for each application coming from each user region. The output will be an allocation, that is, which VMs should be deployed in each edge or cloud region and how many requests should be served by each VM. There are two possible objectives to optimize (cost and response time), so four integer linear programming problems will be proposed, balancing these two objectives in different ways. The proposed models for these problems can be efficiently solved using standard solvers for linear programming, such as COIN CBC [32] or CPLEX [33].

The following subsections provide a detailed formulation of the problems. Table 2 can be used as reference for the notation.

3.2 Inputs

In an edge architecture, there is a set of users sending requests to different applications. Let \(R_r\) denote each of the regions where the users and the data centers of the edge and cloud providers are located. Each region can be classified into one of these three types:

- User regions: Users (clients) are distributed in different user regions around the world.
- Edge regions: These regions include edge data centers close to the user regions, so the latency with these users is low. We assume that each user region has at least one edge region that only serves requests from users in this nearby region.
- Cloud regions: They correspond to traditional cloud data centers, which are further away from users than edge regions and, therefore, exhibit higher latency with user regions. We assume that cloud regions can serve requests from any user region.

Let \(A_a\) denote each application and \(T_a\) denote the maximum response time allowed for that application from the moment the user sends the request until the response is received, i.e., the response time includes network latencies and server processing time. Let \(w_{a,u}\) represent the number of requests for application \(A_a\) from all the users in region \(R_u\) in the scheduling time slot.

Cloud providers offer different types of VMs in each region. Each type might have different performance and pricing. A type of VM in a region will be called an “instance class” and will be denoted as \(I_i\). It is assumed that a VM of instance class \(I_i\) only runs one type of application in a given time slot.

Let \(p_i\) represent the price per scheduling time slot for instance class \(I_i\), \(R_{r_i}\) represent the edge or cloud region where \(I_i\) is located, and \(\text{perf}_{i,a}\) represent the performance, given as the number of requests for application \(A_a\) that can be served in a time slot. Fig. 1 represents a typical response time curve for an application. It is based on the principles of queuing theory, particularly Little’s Law [34]. In order to avoid working in the saturation region, where the system becomes unstable, a Service Level Objective (SLO) \(S_{i,a}\) in the zone before the response time spikes must be selected as the limiting point. The arrival rate corresponding to this SLO is the performance \(\text{perf}_{i,a}\). If a VM of instance class \(I_i\) receives requests of application \(A_a\) at a rate smaller than \(\text{perf}_{i,a}\), it can be guaranteed that the server response time will be smaller than \(S_{i,a}\).
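As an illustration of how an SLO fixes a performance value, the following sketch assumes an M/M/1 queue. This is an assumption made here for simplicity and is not necessarily the model behind Fig. 1. Under that assumption the mean response time is \(1/(\mu - \lambda)\), so the largest arrival rate that keeps it within \(S\) is \(\mu - 1/S\). The function names and the numeric values are hypothetical.

```python
def mm1_response_time(arrival_rate: float, service_rate: float) -> float:
    """Mean response time (s) of an M/M/1 queue; infinite once saturated."""
    if arrival_rate >= service_rate:
        return float("inf")
    return 1.0 / (service_rate - arrival_rate)


def max_rate_for_slo(service_rate: float, slo_seconds: float) -> float:
    """Largest arrival rate (req/s) whose mean response time stays within the SLO."""
    # Solve 1 / (mu - lambda) = S for lambda; clamp to 0 if the SLO is unreachable.
    return max(0.0, service_rate - 1.0 / slo_seconds)


def perf_per_slot(service_rate: float, slo_seconds: float, slot_seconds: int = 3600) -> int:
    """Requests per time slot that can be served without exceeding the SLO."""
    return int(max_rate_for_slo(service_rate, slo_seconds) * slot_seconds)


# Hypothetical VM: 100 req/s service rate, SLO of 0.25 s, one-hour slot.
rate = max_rate_for_slo(100.0, 0.25)
print(rate, mm1_response_time(rate, 100.0), perf_per_slot(100.0, 0.25))
```

In the paper the performance values are measured with a benchmark rather than derived analytically, so a closed form like this one only conveys the shape of the relationship between SLO and sustainable arrival rate.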

Let \(n_{u,r}\) represent the network latency between user region \(R_u\) and edge or cloud region \(R_r\).

3.3 Outputs

There are two sets of decision variables in the linear programming model. The first one, \(X_{a,i}\), denotes the number of VMs of instance class \(I_i\) (which are in region \(R_{r_i}\)) that serve requests for application \(A_a\), and represents how VMs are allocated in the different clouds. The second set of decision variables is \(Y_{a,u,i}\), which denotes the number of requests of application \(A_a\) coming from users in the region \(R_u\) that are served by VMs of instance class \(I_i\), and represents how the requests are distributed between different VMs.
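A minimal sketch of how the two sets of decision variables could be held in Python is shown below. The class `InstanceClass`, the function `total_cost`, the dictionary layout and all values are hypothetical and are not taken from the `edarop` package; they only mirror the notation \(X_{a,i}\), \(Y_{a,u,i}\) and \(p_i\).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceClass:
    """A VM type in a specific edge or cloud region (I_i in the text)."""
    name: str
    region: str   # R_{r_i}
    price: float  # p_i, price per scheduling time slot


def total_cost(X: dict, classes: dict) -> float:
    """Sum the price of every allocated VM over all applications."""
    return sum(count * classes[i].price for (_app, i), count in X.items())


classes = {
    "edge_large": InstanceClass("edge_large", "edge_1", 0.5),
    "cloud_large": InstanceClass("cloud_large", "cloud_1", 0.25),
}
# X[a, i]: number of VMs of instance class i serving application a.
X = {("a0", "cloud_large"): 2, ("a2", "edge_large"): 1}
# Y[a, u, i]: requests of application a from user region u served by class i.
Y = {("a0", "u1", "cloud_large"): 1200, ("a2", "u1", "edge_large"): 300}

print(total_cost(X, classes))
```

Keying the dictionaries by tuples keeps the code close to the subscripts used in the formulation, at the price of some verbosity.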

3.4 Problem formulation

As cost and response time are both important objectives to consider in edge architectures, several problem formulations will be proposed depending on which is the focus. The general approach presented here is called Edarop (EDge ARchitecture OPtimization), and there are four variants.

The first variant, called EdaropC (Edarop Cost), takes only one objective into account: the cost. However, it has the maximum response time per application, \(T_a\), as a constraint. The objective function to minimize, Eq. (1), is the total price of all allocated VMs, and it is subject to the constraints described next.

The constraints presented in Eq. (2) ensure that the aggregated performance of all VMs assigned to an application is sufficient to meet the overall workload demand (i.e., number of requests) for that application.

Constraints from Eq. (3) guarantee that the aggregated performance of all VMs of each instance class assigned to an application is sufficient to meet the overall workload demand assigned to that instance class.

Constraints from Eq. (4) ensure that all requests for an application in a particular user region are served by the instance classes assigned to that application and region.
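The three constraints described so far can be checked for a candidate allocation with a few lines of Python. This is only a sketch: the function name, the dictionary layout and the toy data are hypothetical, and treating Eq. (4) as an equality is an assumption.

```python
def check_capacity_and_demand(X, Y, perf, w):
    """Return the violated constraints (empty list if the allocation is valid).

    X[a, i]: VMs of class i for app a      Y[a, u, i]: requests routed to class i
    perf[i, a]: requests per slot per VM   w[a, u]: requests per slot from region u
    """
    violations = []
    apps = {a for a, _ in w}
    classes = {i for i, _ in perf}
    for a in sorted(apps):
        demand = sum(reqs for (app, _), reqs in w.items() if app == a)
        capacity = sum(X.get((a, i), 0) * perf.get((i, a), 0) for i in classes)
        if capacity < demand:  # Eq. (2): enough aggregated performance per app
            violations.append(("eq2", a))
        for i in sorted(classes):
            assigned = sum(n for (app, _, ic), n in Y.items() if app == a and ic == i)
            if X.get((a, i), 0) * perf.get((i, a), 0) < assigned:  # Eq. (3)
                violations.append(("eq3", a, i))
    for (a, u), reqs in sorted(w.items()):
        served = sum(n for (app, reg, _), n in Y.items() if app == a and reg == u)
        if served != reqs:  # Eq. (4): every request is assigned to some class
            violations.append(("eq4", a, u))
    return violations


w = {("a0", "u0"): 100, ("a0", "u1"): 50}
perf = {("edge", "a0"): 80, ("cloud", "a0"): 120}
X = {("a0", "cloud"): 1, ("a0", "edge"): 1}
Y = {("a0", "u0", "cloud"): 100, ("a0", "u1", "edge"): 50}
print(check_capacity_and_demand(X, Y, perf, w))
```

A checker like this is useful for validating solutions produced by other approaches against the same constraints, independently of the solver.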

Constraints from Eq. (5) enable the analyst to impose a limit on the average of the maximum response time, even when only the cost is optimized. The numerator on the right-hand side of this equation is the total maximum response time for all requests. The maximum response time for a request of application \(A_a\) coming from user region \(R_u\) and served by an instance class \(I_i\) located in region \(R_{r_i}\) can be computed as the sum of the network latency \(n_{u,r_i}\) and the server response time that can be guaranteed in the VM by its SLO, \(S_{i,a}\). The denominator is the total number of requests, which is a constant. Therefore, the division generates the average of the maximum response time.
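The average of the maximum response time described above can be computed directly from a request distribution. The sketch below uses hypothetical names and toy values in milliseconds; `region_of` maps each instance class to its region \(R_{r_i}\).

```python
def average_max_response_time(Y, n, S, region_of, w):
    """Average over all requests of (network latency + SLO of the serving VM)."""
    total_requests = sum(w.values())  # constant denominator
    total_time = sum(
        reqs * (n[u, region_of[i]] + S[i, a])  # maximum response time per request
        for (a, u, i), reqs in Y.items()
    )
    return total_time / total_requests


# Toy data in milliseconds (hypothetical values).
w = {("a0", "u0"): 100, ("a0", "u1"): 50}
Y = {("a0", "u0", "cloud"): 100, ("a0", "u1", "edge"): 50}
region_of = {"cloud": "rc", "edge": "re"}
n = {("u0", "rc"): 100, ("u1", "re"): 10}       # network latency n_{u, r_i}
S = {("cloud", "a0"): 200, ("edge", "a0"): 140}  # SLO S_{i, a}

# 100 requests at 100 + 200 ms and 50 requests at 10 + 140 ms.
print(average_max_response_time(Y, n, S, region_of, w))  # 250.0
```

Because the denominator is a constant, bounding or minimizing this average keeps the problem linear in \(Y_{a,u,i}\).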

Constraints from Eq. (6) guarantee that, for a given application \(A_a\), the response time of its requests does not exceed \(T_a\), the maximum allowed response time for that application. These constraints only have to be fulfilled if there are requests from application \(A_a\) coming from region \(R_u\) assigned to instance class \(I_i\), i.e., if \(Y_{a,u,i}>0\). This condition makes these constraints non-linear, so they must be transformed into two sets of linear constraints, Eqs. (7) and (8).

For the transformation, Boolean indicator variables \(Z_{a,u,i}\) must be introduced. \(Z_{a,u,i}\) is 1 (true) if there are requests of application \(A_a\) coming from users in region \(R_u\) assigned to a VM of instance class \(I_i\). In constraint Eq. (7), M is a large enough number, larger than the maximum number of requests that can be assigned to an instance class. This means that \(Z_{a,u,i}\) has to be 1 if \(Y_{a,u,i}>0\), and when \(Z_{a,u,i}\) is 1, constraints from Eq. (8) guarantee that the maximum response time for \(A_a\) is fulfilled.
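The display equations referenced in this subsection are not reproduced in this text. A reconstruction that follows the descriptions above term by term is given below. Its exact form is an assumption (for instance, the use of an equality in Eq. (4), the index sets of the sums and the variable domains) and should be checked against the original publication.

\[
\min \sum_{a}\sum_{i} p_i \, X_{a,i} \tag{1}
\]

\[
\sum_{i} \text{perf}_{i,a} \, X_{a,i} \ge \sum_{u} w_{a,u} \quad \forall a \tag{2}
\]

\[
\text{perf}_{i,a} \, X_{a,i} \ge \sum_{u} Y_{a,u,i} \quad \forall a, i \tag{3}
\]

\[
\sum_{i} Y_{a,u,i} = w_{a,u} \quad \forall a, u \tag{4}
\]

\[
T_\text{max} \ge \frac{\sum_{a}\sum_{u}\sum_{i} Y_{a,u,i} \, (n_{u,r_i} + S_{i,a})}{\sum_{a}\sum_{u} w_{a,u}} \tag{5}
\]

\[
n_{u,r_i} + S_{i,a} \le T_a \quad \forall a, u, i \text{ such that } Y_{a,u,i} > 0 \tag{6}
\]

\[
Y_{a,u,i} \le M \, Z_{a,u,i} \quad \forall a, u, i \tag{7}
\]

\[
(n_{u,r_i} + S_{i,a}) \, Z_{a,u,i} \le T_a \quad \forall a, u, i \tag{8}
\]

Here \(X_{a,i}\) and \(Y_{a,u,i}\) are assumed to be non-negative integers and \(Z_{a,u,i} \in \{0, 1\}\). Eq. (7) forces \(Z_{a,u,i} = 1\) whenever \(Y_{a,u,i} > 0\), and Eq. (8) then reduces to the condition in Eq. (6); when \(Z_{a,u,i} = 0\), Eq. (8) holds trivially.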

The second variant proposed is called EdaropR (Edarop Response time). Its only objective is to minimize the average of the maximum response time, which is the objective function in Eq. (9). The maximum total cost, \(C_{\text{max}}\), is a constraint.

EdaropR is subject to the same constraints already seen in Eqs. (2), (3), (4), (7) and (8), and it has an additional constraint, Eq. (10), which limits the total cost to \(C_{\text{max}}\).
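The display forms of Eqs. (9) and (10) are not reproduced in this text. Under the same assumptions as for the EdaropC equations, a reconstruction consistent with the definitions of the average of the maximum response time and of the total cost would be:

\[
\min \frac{\sum_{a}\sum_{u}\sum_{i} Y_{a,u,i} \, (n_{u,r_i} + S_{i,a})}{\sum_{a}\sum_{u} w_{a,u}} \tag{9}
\]

\[
\sum_{a}\sum_{i} p_i \, X_{a,i} \le C_\text{max} \tag{10}
\]

The objective in Eq. (9) is the right-hand side of Eq. (5), and the left-hand side of Eq. (10) is the objective of Eq. (1), so each variant turns the other's objective into a bound.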

The two variants proposed so far have an issue: there may be more than one optimal solution for an objective (cost or response time), but the other is not optimized. For instance, EdaropC might find an optimal solution in terms of cost, but there may be other solutions with the same cost and a lower average maximum response time. This is why two additional multi-objective variants have been developed. Both are based on a lexicographic approach [35], which is a decision-making method for multi-objective optimization where the objectives are prioritized in a strict order of importance. Optimization is then performed by sequentially satisfying the objectives in the specified order. We opt for this approach over a weighted formula approach due to the challenges associated with determining specific weights for each objective. Additionally, the lexicographic approach provides the analyst with a more intuitive method for deciding which objective to prioritize.

The variant called EdaropCR (Edarop Cost then Response time) optimizes the cost first using EdaropC and then the average response time, using EdaropR with the maximum cost fixed to the result of the first optimization process. This means that two optimization problems are solved.

The first step for obtaining the solution with EdaropCR is obtaining the solution to the EdaropC problem, using Eqs. (1), (2), (3), (4), (5), (7) and (8). The optimal cost obtained will be called \(C_\text{EdaropC}\). The next step is optimizing the average response time with EdaropR, i.e., solving a second ILP problem using Eq. (9) as objective and the constraints in Eqs. (2), (3), (4), (7), (8) and (10), while setting \(C_\text{max}\) to \(C_\text{EdaropC}\) in the last constraint. The solution for EdaropCR will be the solution obtained by the solver for this second problem.

The last variant is the complementary one, optimizing the average response time first with EdaropR and then the cost, using EdaropC with the maximum response time fixed to the result of the first optimization process, and is therefore called EdaropRC (Edarop Response time then Cost). Again, two problems are solved.

The first step is solving the EdaropR problem using Eq. (9) as objective and Eqs. (2), (3), (4), (7), (8) and (10) as constraints. The optimal response time obtained will be called \(T_\text{EdaropR}\). The next step is optimizing the cost with EdaropC, i.e., solving a second ILP problem using Eq. (1) as objective to optimize the cost, and the constraints in Eqs. (2), (3), (4), (5), (7), and (8), while setting \(T_\text{max}\) to \(T_\text{EdaropR}\) in Eq. (5). The solution obtained by the solver to this second problem is the solution for EdaropRC.
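The effect of the two lexicographic orderings can be illustrated on a small, explicitly enumerated set of candidate solutions. This is not how Edarop works internally, since each stage there is an ILP solve; the enumeration below only shows how fixing the first optimum and then optimizing the second objective selects a solution. The function name, the tolerance and the candidate values are hypothetical.

```python
from typing import Callable, Iterable, Sequence, TypeVar

T = TypeVar("T")


def lexicographic_min(
    candidates: Iterable[T],
    objectives: Sequence[Callable[[T], float]],
    tol: float = 1e-9,
) -> T:
    """Minimize the objectives one after another, in strict priority order."""
    remaining = list(candidates)
    if not remaining:
        raise ValueError("no candidate solutions")
    for objective in objectives:
        best = min(objective(c) for c in remaining)
        # Keep only the candidates that match the optimum of this stage; this
        # plays the role of fixing C_max (or T_max) to the first-stage result.
        remaining = [c for c in remaining if objective(c) <= best + tol]
    return remaining[0]


solutions = [
    {"name": "A", "cost": 10, "resp": 0.30},
    {"name": "B", "cost": 10, "resp": 0.20},
    {"name": "C", "cost": 14, "resp": 0.15},
    {"name": "D", "cost": 16, "resp": 0.15},
]
cost = lambda s: s["cost"]
resp = lambda s: s["resp"]

print(lexicographic_min(solutions, [cost, resp])["name"])  # cost first: B
print(lexicographic_min(solutions, [resp, cost])["name"])  # response time first: C
```

Solutions A and B tie on cost, and C and D tie on response time, which is exactly the situation in which the single-objective variants may return a solution that is not optimized for the other objective.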

4 Evaluation

4.1 Experimental set-up

In order to compare the different variants proposed, a case study has been developed using AWS offerings. Specifically, AWS provides Local and Wavelength Zones to allow users to run low latency applications as part of the EC2 (Elastic Compute Cloud) services. These Local and Wavelength zones have a parent cloud region. For this case study, four local zones (modelled in Edarop as edge regions) in different parts of the world and their parent cloud regions have been selected, as shown in Fig. 2 and Table 3, where the short name for each region that will be used hereafter can also be seen. Figure 2 also shows the user regions employed.

The VM types offered by Amazon in each region can vary. When available, the c5.2xlarge and c5.4xlarge types were selected as input options for the optimization algorithm; when not available, the most similar types were employed instead. Table 3 shows the VM types used and their prices in each region, obtained from the AWS website.

An important parameter in edge computing architectures, which has been incorporated in our optimization model, is latency. In order to measure it, an IP address geolocated in each of the user regions was used. The average time obtained by the ping tool from a VM deployed in each of the edge and cloud regions to the geolocated IP addresses was measured. The results are shown in Table 4. As shown in Fig. 2, it is assumed that a request from a host in a user region can only be sent to the nearest edge region, and therefore, the latencies to other edge regions have not been measured.

Three applications are used in the case study. As workloads for edge architectures are not publicly available [36, 37], workload composition [36] has been used to generate the traces. There are three applications and four user regions, so 12 traces are required. The call rates of the 12 most used microservices in the traces provided in [1] have been used to model the requests per minute received by each application in each region. The traces encompass 12 h of requests to microservices deployed in the Alibaba cloud. The scheduling slot is set to one hour. Figure 3 shows all the resulting traces and Table 5 summarizes them per application. In addition, the table shows \(T_a\), the maximum response time allowed for each application. The workload composition source code has been made public (Footnote 6) to allow for reproducibility.

Another parameter needed for the model is the performance for each application of each VM type used. To obtain this, a synthetic benchmark called “flask_bench” (Footnote 7) has been developed and run in each VM type. This benchmark consists of a client that sends requests to a server implemented in Python using Flask as the web framework. Each request receives a parameter with a number of iterations to select the amount of computation performed. In order to model applications with different computational requirements, a different number of iterations has been selected for each application (see Table 6).

An integration into PKB (PerfKit Benchmarker, Footnote 8) has been developed, making it easy to repeat the experiments or execute them in new VM types. Table 7 shows the performance of each VM type for each application obtained by the benchmark. It also shows \(S_{i,a}\), the Service Level Objective (SLO) for obtaining these performance levels. These values have been selected so that the response times in the VMs do not spike, as explained in Sect. 3.2.

4.2 Results

An open source Python package called “edarop” (Footnote 9) has been developed to apply the optimization techniques presented in this research. This package allows the user to represent in Python the inputs of the problems presented above. It then uses COIN CBC 2.10.8 as a solver to obtain the solutions and converts the outputs to Python variables. The case study presented in the previous sections has been implemented using this package and has also been open sourced (Footnote 10). The results were obtained on an Intel Core i7-4790 CPU at 3.60 GHz with 32 GB of RAM.

As none of the techniques in the related work is directly applicable to the case study, the new techniques will be compared with these two other approaches:

- A Greedy algorithm that selects, for each application and each region, the instance class with the best performance per dollar. If several instance classes tie on this ratio, it selects the one with the smallest latency from the source region. If there are still multiple instance classes with the same ratio and latency, the one with the lowest cost per period is chosen, as it allows for more flexibility.
- Malloovia [38], a state-of-the-art technique for cloud VM allocation. This technique is not designed for edge computing and, thus, it does not take into account the request source region or the latencies between regions. This can lead to invalid solutions where requests from a user region are assigned to be served by an edge region that is not nearby, which is not allowed. Therefore, only cloud regions have been made available to Malloovia. In addition, the model used by Malloovia is different from the model used by Edarop variants, so an adaptation was carried out. The requests from all regions were aggregated to generate a workload that is valid for Malloovia. Furthermore, the solution provided by Malloovia indicates how many VMs are used in each region, but it does not indicate the user region where the requests originated. To be able to compute the maximum response time of the Malloovia solution, it has been assumed that the requests from each user region are divided proportionally according to the performance of the allocated VMs.
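The selection rule of the Greedy baseline can be sketched as a single sort key. The sketch below is an interpretation of the description above, not the code used in the experiments: the class, the function name and the numbers are hypothetical, and the first criterion is expressed as price per unit of performance, so that a lower value means better performance per dollar.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceClass:
    name: str
    region: str
    price: float  # price per time slot
    perf: float   # requests per time slot for the application considered


def greedy_choice(candidates, latency_to_region):
    """Pick one instance class for a given application and user region."""
    return min(
        candidates,
        key=lambda ic: (
            ic.price / ic.perf,            # 1st: best performance per dollar
            latency_to_region[ic.region],  # 2nd: smallest latency from the source region
            ic.price,                      # 3rd: lowest cost per period
        ),
    )


candidates = [
    InstanceClass("cloud_small", "cloud", 1.0, 1000),
    InstanceClass("cloud_large", "cloud", 2.0, 2000),
    InstanceClass("cloud2_small", "cloud2", 1.0, 1000),
    InstanceClass("edge_small", "edge", 1.5, 1000),
]
latency = {"cloud": 0.08, "cloud2": 0.12, "edge": 0.01}  # seconds, hypothetical

print(greedy_choice(candidates, latency).name)
```

In this toy example the edge class is never selected despite its lower latency, because the response time limit \(T_a\) plays no role in the rule. Ties are detected by exact comparison of the ratios, which is sufficient for a sketch but may need a tolerance with real prices.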

The Greedy and Malloovia approaches do not take into account the maximum response time limit for each application that the Edarop model includes. Therefore, the solutions generated by the Greedy and Malloovia approaches may be more cost-effective, but invalid, because they do not respect this limit. This will be shown in the experiments.
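The proportional split assumed for Malloovia, and the check of the response time limit, can be sketched as follows. The function names, region labels, and numbers are hypothetical, and the response time per serving region is taken as a given input rather than derived from the model.

```python
def split_proportionally(requests, allocated_perf):
    """Divide the requests of one user region among the serving regions
    in proportion to the performance allocated in each of them."""
    total = sum(allocated_perf.values())
    if total <= 0:
        raise ValueError("no performance allocated")
    return {region: requests * perf / total
            for region, perf in allocated_perf.items()}


def violating_fraction(requests, allocated_perf, response_time, max_response_time):
    """Fraction of requests served from regions whose response time
    exceeds the limit of the application."""
    shares = split_proportionally(requests, allocated_perf)
    late = sum(n for region, n in shares.items()
               if response_time[region] > max_response_time)
    return late / requests


# Illustrative values only (not taken from the case study).
allocated_perf = {"EU_c": 3000, "AP_c": 1000}   # requests per time slot
response_time = {"EU_c": 0.1, "AP_c": 0.4}      # seconds, seen from EU users
shares = split_proportionally(1000, allocated_perf)
fraction = violating_fraction(1000, allocated_perf, response_time, 0.2)
print(shares, fraction)
```

With these numbers a quarter of the requests is served from the distant region and therefore exceeds the limit, which is the kind of violation an approach that ignores latencies cannot rule out.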

Figure 4 shows the cost per time slot of the different optimization approaches in each time slot, and Fig. 5 shows the corresponding average of the maximum response time. These figures are summarized in Fig. 6 and Table 8, where the total cost and the average of the maximum response time for each approach are displayed.

As Fig. 4 shows, in some time slots (1, 4, 6, 7 and 11), EdaropR has a higher cost than the rest of the approaches, as it obtains a solution that fulfills the maximum cost constraint (\(C_\text{max}\), which has been set to $200) but does not optimize it. EdaropRC has the same maximum response time, as shown in Figs. 5 and 6, but with a lower cost. However, the cost obtained by EdaropRC is still significantly higher than that obtained by EdaropC and EdaropCR. The cost savings achieved by these variants are traded off by their increased average of the maximum response time.

Figure 4 also shows that Malloovia obtains the lowest cost and that the Greedy approach obtains a cost slightly better than EdaropC and EdaropCR. However, Fig. 5 shows that Malloovia has a much higher average of the maximum response time than any other approach and, as can be seen in Table 8, it violates the maximum response time (\(T_a\)) for more than half of the requests of application a0 and all the requests of application a2. The Greedy approach, on the other hand, has an average of the maximum response time between EdaropC and EdaropCR, but it fails to guarantee compliance with the maximum response time for all the requests of application a2, as can be seen in Table 8.

Figure 7 shows how the requests are distributed between edge and cloud regions. As can be seen, EdaropC (Fig. 7a) and EdaropCR (Fig. 7b) send all requests of applications a0 and a1 to cloud regions, but the requests of application a2, as they have a smaller maximum response time limit, are sent to the edge, which is necessary to meet this response time requirement. On the other hand, both the Greedy (Fig. 7e) and Malloovia (Fig. 7f) approaches send all requests to the cloud and, consequently, they do not meet the maximum response time limit required for a2.

Regarding EdaropR (Fig. 7c) and EdaropRC (Fig. 7d), the figures show that both variants send all requests to the edge in order to optimize the average response time.

Figure 6 and Table 8 show that there is no significant difference in cost between EdaropC, EdaropCR, Greedy and Malloovia, but, among these four, EdaropCR has the lowest average of the maximum response time. Figure 6 also shows that the average of the maximum response time obtained by the variants that optimize this parameter first (EdaropR and EdaropRC) is less than half of that of the rest of the approaches, while the cost does not increase in the same proportion.

Figure 8 helps to understand how the different approaches allocate VMs. Different hues have been used to represent different regions (blue for EU, orange for US-E, green for US-W and pink/purple for AP), with lighter colors representing cloud regions and darker colors representing edge regions. It can be seen that in variants EdaropC (Fig. 8a) and EdaropCR (Fig. 8b), mainly cloud regions are used, but some VMs are allocated to edge regions in order to meet the maximum response time requirements of a2. In addition, EdaropCR uses VMs in different regions to optimize the response time at the same cost.

Variants EdaropR (Fig. 8c) and EdaropRC (Fig. 8d) mainly use edge regions. These figures show a counterintuitive result: EdaropR uses cloud VMs, which have higher latencies, in some time slots (for instance, time slots 0, 4 and 11). However, as can be seen in Fig. 9c, which will be analyzed later, there are no requests sent to these VMs but, as there is no optimization of the cost and their cost fits below the maximum allowed, they are allocated in spite of not being used. This demonstrates that EdaropR can be very inefficient from a cost perspective and EdaropRC should be used instead.

The Greedy approach (Fig. 8e) uses different cloud instance classes in order to take advantage of different latencies, but, unlike the Edarop variants, it does not use edge instance classes. Finally, Malloovia (Fig. 8f) is the simplest approach, mainly using VMs of only one instance class, the cheapest one. The other instance class is selected because there is a small number of requests that do not justify selecting a larger instance, even when it has higher performance per dollar, as its capacity will not be used.

Another difference between the variants is how they distribute requests coming from a source region to a destination region, which can be seen in the Sankey diagrams presented in Fig. 9. These diagrams present the source of requests on the left and the destination on the right. Figure 9a shows that EdaropC is inefficient in selecting the destination regions. For instance, many requests from region US-E\(_\text{u}\) are sent to US-W\(_\text{c}\). EdaropCR (Fig. 9b), on the other hand, sends these requests to US-E\(_\text{c}\), reducing the response time. The same happens in other regions, as can be deduced from the fact that there are fewer color changes between the source and destination regions in Fig. 9b than in Fig. 9a.

Both EdaropR (Fig. 9c) and EdaropRC (Fig. 9d) send all requests to the corresponding edge region, optimizing response time. This is the opposite behaviour to the Greedy (Fig. 9e) and Malloovia (Fig. 9f) approaches, which serve all requests in cloud regions. Malloovia is particularly inefficient in this regard, as it concentrates requests from most regions in only one cloud region, AP\(_\text{c}\), because it is the most economical and Malloovia does not consider latencies.

One of the challenges of integer linear programming is that it may take a very long time to obtain the optimal solution, so one of the goals of the experimentation is to assess whether a valid result can be found in a reasonable time. In the experiments above, a maximum time of 120 s was used. If this time is reached during the solver execution, the process is aborted and the best integer solution found so far is reported, along with the best lower bound for the optimal solution.
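When the time limit is reached, the quality of the reported solution can be judged from the distance between the best integer solution and the lower bound. The sketch below uses one common definition of the relative gap for minimization problems; solvers differ in the exact formula, so it should not be read as the value CBC itself reports. The function name and the sample figures are hypothetical.

```python
def relative_gap(best_integer, lower_bound):
    """Relative optimality gap of a minimization problem.

    A gap of 0 means the incumbent is proven optimal; a gap of 0.05 means
    the optimum can be at most 5% cheaper than the incumbent.
    """
    if best_integer == 0:
        return 0.0 if lower_bound == 0 else float("inf")
    return (best_integer - lower_bound) / abs(best_integer)


# Illustrative values only: an incumbent of $105 with a lower bound of $100.
gap = relative_gap(105.0, 100.0)
print(f"{gap:.2%}")
```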

In the experiments carried out, the optimal solution was found in all cases except for time slot 7 of EdaropC and EdaropCR. Figure 10 shows the linear problem creation and solving times for each time slot and each approach, using a logarithmic scale on the Y axis. As can be seen, both times are usually only a few milliseconds. In conclusion, the times required for creating and solving the linear problem are not significant compared to the scheduling window size (1 h).

In summary, the results show that Malloovia finds the most cost-effective allocation, but with the worst average of the maximum response time and violating the maximum response time limit for many requests. The Greedy approach finds a trade-off between cost and response time, similar to EdaropCR, but does not guarantee the maximum response time limit.

EdaropC optimizes cost but does not obtain a good average response time. Conversely, EdaropR optimizes the average response time, but it has a significantly higher cost. These approaches have smaller creation and solving times than EdaropCR and EdaropRC, but the difference is not significant enough to prefer them, taking into account the indicated disadvantages.

EdaropCR and EdaropRC are the approaches that obtain the best trade-off between cost and response time, in addition to guaranteeing the maximum response time. The choice between the two strategies depends on whether the analyst prefers to prioritize savings over response time (EdaropCR) or to prioritize average response time at the expense of slightly higher costs (EdaropRC).
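The difference between the two orderings can be illustrated with a toy example. Edarop solves ILP problems; the sketch below replaces the solver with a brute-force enumeration over a handful of hypothetical candidate allocations, so it only shows the lexicographic idea, and the candidate names, costs, response times, and limits are invented.

```python
def lexicographic_min(candidates, first, second, feasible):
    """Minimize `first`; among the candidates that tie, minimize `second`."""
    pool = [c for c in candidates if feasible(c)]
    best_first = min(first(c) for c in pool)
    tied = [c for c in pool if first(c) == best_first]
    return min(tied, key=second)


# Hypothetical candidate allocations: cost in dollars, response time in seconds.
candidates = [
    {"name": "cloud_far", "cost": 10, "resp": 0.18},
    {"name": "cloud_near", "cost": 10, "resp": 0.12},
    {"name": "edge", "cost": 14, "resp": 0.05},
    {"name": "edge_plus_idle_vms", "cost": 19, "resp": 0.05},
    {"name": "too_slow", "cost": 8, "resp": 0.30},
]
MAX_RESP, MAX_COST = 0.2, 20  # assumed limits


def feasible(c):
    return c["resp"] <= MAX_RESP and c["cost"] <= MAX_COST


def cost(c):
    return c["cost"]


def resp(c):
    return c["resp"]


cost_then_resp = lexicographic_min(candidates, cost, resp, feasible)
resp_then_cost = lexicographic_min(candidates, resp, cost, feasible)
print(cost_then_resp["name"], resp_then_cost["name"])
```

A single-objective variant may return any of the tied candidates: minimizing cost alone could yield `cloud_far`, and minimizing response time alone could yield `edge_plus_idle_vms`, which mirrors the unused VMs observed for EdaropR.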

5 Conclusions

This paper presents Edarop, a novel orchestration mechanism for edge architectures. We develop a mathematical model to compute costs and maximum response times, taking into account that users and datacenters of edge and cloud providers are localized in different geographical regions. We propose several variants, based on linear programming, to optimize cost and/or response time. We analyze and compare these variants to two other approaches: a Greedy algorithm and Malloovia, an optimization technique for cloud computing that does not consider latencies. Our experimentation shows that two of the proposed variants are the most useful: EdaropCR, which optimizes cost first and then response time, and EdaropRC, which uses the opposite order. This way, the analyst can choose which approach to use depending on the preferred objectives. The results show that, unlike the Greedy and Malloovia approaches, all Edarop variants meet the response time requirements while keeping low costs and/or response times.

We have made all the code public to allow for reproducibility. This includes a set of traces with localization data that can be used in other studies.

Future work will include developing a simulator to perform a deeper statistical analysis of the results, such as computing the 95th percentile of the response time or the utilization of the VMs. The work can also be extended to include the deployment in containers in addition to VMs.

Data availability

The datasets generated and analysed during the current study are available on the GitHub repositories https://github.com/asi-uniovi/edge_traces and https://github.com/asi-uniovi/edarop_use_case.

Change history

04 May 2024

The original online version of this article was revised: the biography and photo of the author Jose Luis Diaz were missing and have now been included.

References

Luo, S., Xu, H., Lu, C., Ye, K., Xu, G., Zhang, L., Ding, Y., He, J., Xu, C.: Characterizing microservice dependency and performance: Alibaba trace analysis. In: Proceedings of the ACM Symposium on Cloud Computing, pp. 412–426 (2021)

Kumar, M., Sharma, S.C., Goel, A., Singh, S.P.: A comprehensive survey for scheduling techniques in cloud computing. J. Netw. Comput. Appl. 143, 1–33 (2019). https://doi.org/10.1016/j.jnca.2019.06.006

Abouaomar, A., Cherkaoui, S., Mlika, Z., Kobbane, A.: Resource provisioning in edge computing for latency-sensitive applications. IEEE Internet Things J. 8(14), 11088–11099 (2021). https://doi.org/10.1109/JIOT.2021.3052082

Aburukba, R.O., Landolsi, T., Omer, D.: A heuristic scheduling approach for fog-cloud computing environment with stationary iot devices. J. Netw. Comput. Appl. 180, 102994 (2021). https://doi.org/10.1016/j.jnca.2021.102994

Ali, I.M., Sallam, K.M., Moustafa, N., Chakraborty, R., Ryan, M., Choo, K.: An automated task scheduling model using non-dominated sorting genetic algorithm ii for fog-cloud systems. IEEE Trans. Cloud Comput. 10(04), 2294–2308 (2022). https://doi.org/10.1109/TCC.2020.3032386

Brogi, A., Forti, S., Guerrero, C., Lera, I.: Meet genetic algorithms in monte carlo: optimised placement of multi-service applications in the fog. In: 2019 IEEE International Conference on Edge Computing (EDGE), pp. 13–17 (2019). https://doi.org/10.1109/EDGE.2019.00016

Chen, Y., He, S., Jin, X., Wang, Z., Wang, F., Chen, L.: Resource utilization and cost optimization oriented container placement for edge computing in industrial internet. J. Supercomput. 79(4), 3821–3849 (2023). https://doi.org/10.1007/s11227-022-04801-z

Guevara, J.C., Fonseca, N.L.S.: Task scheduling in cloud-fog computing systems. Peer Peer Netw. Appl. 14(2), 962–977 (2021). https://doi.org/10.1007/s12083-020-01051-9

Guisheng, F., Liang, C., Huiqun, Y., Wei, Q.: Multi-objective optimization of container-based microservice scheduling in edge computing. Comput. Sci. Inf. Syst. 18, 41–41 (2021). https://doi.org/10.2298/CSIS200229041F

Li, C., Liu, J., Lu, B., Luo, Y.: Cost-aware automatic scaling and workload-aware replica management for edge-cloud environment. J. Netw. Comput. Appl. 180, 103017 (2021). https://doi.org/10.1016/j.jnca.2021.103017

Memari, P., Mohammadi, S.S., Jolai, F., Tavakkoli-Moghaddam, R.: A latency-aware task scheduling algorithm for allocating virtual machines in a cost-effective and time-sensitive fog-cloud architecture. J. Supercomput. 78(1), 93–122 (2022). https://doi.org/10.1007/s11227-021-03868-4

Moreno Vozmediano, R., Huedo Cuesta, E., Santiago Montero, R., Martín Llorente, I.: Latency and resource consumption analysis for serverless edge analytics. Preprint (2022). https://doi.org/10.21203/rs.3.rs-1457500/v1

Nan, Y., Li, W., Bao, W., Delicato, F.C., Pires, P.F., Zomaya, A.Y.: Cost-effective processing for delay-sensitive applications in cloud of things systems. In: 2016 IEEE 15th International Symposium on Network Computing and Applications (NCA), pp. 162–169 (2016). https://doi.org/10.1109/NCA.2016.7778612

Natesha, B.V., Guddeti, R.M.R.: Adopting elitism-based genetic algorithm for minimizing multi-objective problems of iot service placement in fog computing environment. J. Netw. Comput. Appl. 178, 102972 (2021). https://doi.org/10.1016/j.jnca.2020.102972

Pasdar, A., Lee, Y.C., Hassanzadeh, T., Almi’ani, K.: Resource recommender for cloud-edge engineering. Information (2021). https://doi.org/10.3390/info12060224

Pelle, I., Czentye, J., Dóka, J., Kern, A., Gerő, B.P., Sonkoly, B.: Operating latency sensitive applications on public serverless edge cloud platforms. IEEE Internet Things J. 8(10), 7954–7972 (2021). https://doi.org/10.1109/JIOT.2020.3042428

Pelle, I., Szalay, M., Czentye, J., Sonkoly, B., Toka, L.: Cost and latency optimized edge computing platform. Electronics 11(4), 561 (2022). https://doi.org/10.3390/electronics11040561

Pham, X.-Q., Huh, E.-N.: Towards task scheduling in a cloud-fog computing system. In: 2016 18th Asia-Pacific Network Operations and Management Symposium (APNOMS), pp. 1–4 (2016). https://doi.org/10.1109/APNOMS.2016.7737240

Pham, X.-Q., Man, N.D., Tri, N.D.T., Thai, N.Q., Huh, E.-N.: A cost- and performance-effective approach for task scheduling based on collaboration between cloud and fog computing. Int. J. Distrib. Sens. Netw. 13(11), 1550147717742073 (2017). https://doi.org/10.1177/1550147717742073

Röger, H., Bhowmik, S., Rothermel, K.: Combining it all: cost minimal and low-latency stream processing across distributed heterogeneous infrastructures. In: Proceedings of the 20th International Middleware Conference. Middleware ’19, pp. 255–267. Association for Computing Machinery, New York, NY, USA (2019). https://doi.org/10.1145/3361525.3361551

Saif, F.A., Latip, R., Hanapi, Z.M., Shafinah, K.: Multi-objective grey wolf optimizer algorithm for task scheduling in cloud-fog computing. IEEE Access 11, 20635–20646 (2023). https://doi.org/10.1109/ACCESS.2023.3241240

Scoca, V., Aral, A., Brandić, I., Nicola, R.D., Uriarte, R.B.: Scheduling latency-sensitive applications in edge computing. In: International Conference on Cloud Computing and Services Science, pp. 158–168. SciTePress, INSTICC (2018). https://doi.org/10.5220/0006706201580168

Shadroo, S., Rahmani, A.M., Rezaee, A.: The two-phase scheduling based on deep learning in the internet of things. Comput. Netw. 185, 107684 (2021). https://doi.org/10.1016/j.comnet.2020.107684

Tychalas, D., Karatza, H.: A scheduling algorithm for a fog computing system with bag-of-tasks jobs: simulation and performance evaluation. Simul. Model. Pract. Theory 98, 101982 (2020). https://doi.org/10.1016/j.simpat.2019.101982

Wang, N., Varghese, B.: Context-aware distribution of fog applications using deep reinforcement learning. J. Netw. Comput. Appl. 203, 103354 (2022). https://doi.org/10.1016/j.jnca.2022.103354

Wei, W., Wang, Q., Yang, W., Mu, Y.: Efficient stochastic scheduling for highly complex resource placement in edge clouds. J. Netw. Comput. Appl. 202, 103365 (2022). https://doi.org/10.1016/j.jnca.2022.103365

Xu, J., Palanisamy, B.: Cost-aware & fault-tolerant geo-distributed edge computing for low-latency stream processing. In: 2021 IEEE 7th International Conference on Collaboration and Internet Computing (CIC), pp. 117–124 (2021). https://doi.org/10.1109/CIC52973.2021.00026

Yadav, A.M., Sharma, S.C., Tripathi, K.N.: A two-step technique for effective scheduling in cloud-fog computing paradigm. In: Gao, X.-Z., Tiwari, S., Trivedi, M.C., Mishra, K.K. (eds.) Advances in Computational Intelligence and Communication Technology, pp. 367–379. Springer, Singapore, Cham (2021)

Akhlaqi, M.Y., Mohd Hanapi, Z.B.: Task offloading paradigm in mobile edge computing-current issues, adopted approaches, and future directions. J. Netw. Comput. Appl. 212, 103568 (2023). https://doi.org/10.1016/j.jnca.2022.103568

Guerrero, C., Lera, I., Juiz, C.: Evaluation and efficiency comparison of evolutionary algorithms for service placement optimization in fog architectures. Future Gener. Comput. Syst. 97, 131–144 (2019). https://doi.org/10.1016/j.future.2019.02.056

Brogi, A., Forti, S., Ibrahim, A.: Predictive Analysis to Support Fog Application Deployment, pp. 191–221. John Wiley & Sons Ltd., Hoboken (2019)

Forrest, J., Ralphs, T., Santos, H.G., Vigerske, S., Hafer, L., Kristjansson, B., Lubin, M., Brito, S., Saltzman, M., Pitrus, B., Matsushima, F.: coin-or/Cbc: Release releases/2.10.8. Zenodo (2022). https://doi.org/10.5281/zenodo.6522795

IBM ILOG CPLEX: V12.1: User’s manual for CPLEX. Int. Bus. Mach. Corp. 46(53), 157 (2009)

Jain, R.: The Art of Computer Systems Performance Analysis- Techniques for Experimental Design, Measurement, Simulation, and Modeling, p. 1685. Wiley, New York (1991)

Lai, L., Fiaschi, L., Cococcioni, M., Deb, K.: Pure and mixed lexicographic-paretian many-objective optimization: state of the art. Nat. Comput. (2022). https://doi.org/10.1007/s11047-022-09911-4

Kolosov, O., Yadgar, G., Maheshwari, S., Soljanin, E.: Benchmarking in the dark: on the absence of comprehensive edge datasets. In: 3rd USENIX Workshop on Hot Topics in Edge Computing (HotEdge 20), pp. 1–11. USENIX Association (2020). https://www.usenix.org/conference/hotedge20/presentation/kolosov

Toczé, K., Schmitt, N., Kargén, U., Aral, A., Brandić, I.: Edge workload trace gathering and analysis for benchmarking. In: 2022 IEEE 6th International Conference on Fog and Edge Computing (ICFEC), pp. 34–41 (2022). https://doi.org/10.1109/ICFEC54809.2022.00012

Entrialgo, J., García, M., Díaz, J.L., García, J., García, D.F.: Modelling and simulation for cost optimization and performance analysis of transactional applications in hybrid clouds. Simul. Model. Pract. Theory 109, 102311 (2021). https://doi.org/10.1016/j.simpat.2021.102311

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature. This research was funded by the project PID2021-124383OB-I00 of the Spanish National Plan for Research, Development and Innovation from the Spanish Ministerio de Ciencia e Innovación.

Contributions

JE: conceptualization, methodology, software, validation, investigation, writing, visualization. RMV: conceptualization, methodology, writing. JL: software, writing, visualization.

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Entrialgo, J., Moreno-Vozmediano, R. & Díaz, J.L. Cost and response time optimization of edge architectures. Cluster Comput (2024). https://doi.org/10.1007/s10586-024-04359-w

DOI: https://doi.org/10.1007/s10586-024-04359-w