Abstract

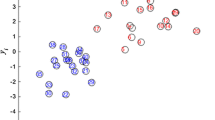

Feature selection is an important step in dealing with high-dimensional data. To select class-related features, the importance of each feature needs to be measured. Existing importance measures cannot reflect the different distributions of the data space and are poorly interpretable. In this paper, a new feature weight calculation method based on potential value estimation is proposed. The potential values capture the different data distributions in different dimensions. The quality (mass) of each data point is another parameter needed to calculate its potential value in the data field; it is related to the density and the classes of the surrounding points. At the same time, the extraction of important features should consider not only the distribution of the feature itself but also its correlation with other features and with the class labels. The method uses \(S_{w}\) (the potential value within a class) and \(S_{b}\) (the potential value between different classes) to calculate the information entropy of each feature, and the most representative features are selected to build the classifier. To speed up computation, each dimension is divided into its own grid. By estimating the potential values of different data points on the same dimension, the correlation between a feature and the label is evaluated. Analysis and experiments show that the proposed method achieves high overall classification accuracy with the fewest features, and its dimensionality-reduction effect is significantly better than that of FRGDF and other mutual information methods.
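The abstract does not give the exact formulas, so the following is only a minimal sketch under stated assumptions, not the authors' implementation. It applies the Gaussian potential commonly used in data field work, \(\varphi(x) = \sum_j m_j \exp(-((x - x_j)/\sigma)^2)\), to one feature at a time. Assumptions: each dimension is split into `n_cells` equal grid cells; the mass of a class in a cell is simply its point count there (a simplification of the density- and class-dependent mass described above); \(S_{w}\) and \(S_{b}\) at a cell are the potentials from the point's own class and from all other classes; and, as a hypothetical stand-in for the paper's entropy measure, a feature is scored by the mass-weighted average binary entropy of \(S_{w}/(S_{w}+S_{b})\). The names `potential_entropy_score`, `rank_features`, `n_cells`, and the default `sigma` are all illustrative.

```python
import math


def potential_entropy_score(values, labels, n_cells=10, sigma=None):
    """Hypothetical per-feature score built from S_w and S_b potentials.

    Lower scores mean same-class potential dominates along this dimension,
    i.e. the feature separates the classes better.
    """
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_cells or 1.0  # constant feature: avoid zero width
    if sigma is None:
        sigma = width  # assumed impact factor: one grid cell
    class_index = {c: k for k, c in enumerate(sorted(set(labels)))}
    n_classes = len(class_index)

    # Grid step: the mass of class k in cell i is the number of its points there.
    mass = [[0] * n_classes for _ in range(n_cells)]
    for v, y in zip(values, labels):
        cell = min(int((v - lo) / width), n_cells - 1)
        mass[cell][class_index[y]] += 1
    centers = [lo + (i + 0.5) * width for i in range(n_cells)]

    total_h, total_n = 0.0, 0
    for i, ci in enumerate(centers):
        # Gaussian data-field potential of each class at this cell centre.
        phi = [
            sum(mass[j][k] * math.exp(-((ci - cj) / sigma) ** 2)
                for j, cj in enumerate(centers))
            for k in range(n_classes)
        ]
        for k in range(n_classes):
            m = mass[i][k]
            if m == 0:
                continue
            s_w = phi[k]              # same-class potential at this cell
            s_b = sum(phi) - phi[k]   # potential from all other classes
            p = s_w / (s_w + s_b)
            # Binary entropy of the within-class share (0 log 0 treated as 0).
            h = -sum(x * math.log(x) for x in (p, 1.0 - p) if x > 0)
            total_h += m * h
            total_n += m
    return total_h / total_n


def rank_features(columns, labels, **kwargs):
    """Return feature indices ordered from most to least class-separating."""
    scores = [potential_entropy_score(col, labels, **kwargs) for col in columns]
    return sorted(range(len(columns)), key=lambda f: scores[f])
```

For example, with `labels = [0, 0, 0, 0, 1, 1, 1, 1]`, a feature whose two classes occupy opposite ends of its range scores close to 0, while a feature whose classes share the same cells scores closer to \(\ln 2\), so `rank_features` puts the first one ahead. Because potentials are computed between grid-cell centres rather than between individual points, the cost per feature grows with the number of cells rather than the number of samples, which is one plausible reading of the grid-based speed-up.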

Acknowledgments

This work was supported by the Shandong Provincial Water Conservancy Scientific Research and Technology Promotion Project (project no. SDSLKY201320, Research on hidden danger intelligent warning system of water conservancy security based on big data). It was also partly supported by the National Natural Science Foundation of China (71271125, 61502260) and the Natural Science Foundation of Shandong Province, China (ZR2011FM028).

About this article

Cite this article

Zhao, L., Jiang, L. & Dong, X. Supervised feature selection method via potential value estimation. Cluster Comput 19, 2039–2049 (2016). https://doi.org/10.1007/s10586-016-0635-0

DOI: https://doi.org/10.1007/s10586-016-0635-0