Abstract

Amid a heated debate on what are possible and what are plausible climate futures, ascertaining evident changes that are attributable to historical climate change can provide a clear understanding of how warmer climates will shape our future habitability. Hence, we detect changes in the streamflow simulated using three different datasets for the historical period (1901–2019) and analyze whether these changes can be attributed to observed climate change. For this, we first calibrate and validate the Soil and Water Integrated Model and then force it with factual (observed) and counterfactual (baseline) climates presented in the Inter-Sectoral Impact Model Intercomparison Project Phase 3a protocol. We assessed the differences in simulated streamflow driven by the factual and counterfactual climates by comparing their trend changes ascertained using the Modified Mann–Kendall test on monthly, seasonal, and annual timescales. In contrast to no trend for counterfactual climate, our results suggest that mean annual streamflow under factual climate features statistically significant decreasing trends, which are − 5.6, − 3.9, and − 1.9 m3s−1 for the 20CRv3-w5e5, 20CRv3, and GSWP3-w5e5 datasets, respectively. Such trends, which are more pronounced after the 1960s, for summer, and for high flows can be attributed to the weakening of the monsoonal precipitation regime in the factual climate. Further, discharge volumes in the recent factual climate dropped compared to the early twentieth-century climate, especially prominently during summer and mainly for high flows whereas earlier shifts found in the center of volume timings are due to early shifts in the nival regime. These findings clearly suggest a critical role of monsoonal precipitation in disrupting the hydrological regime of the Jhelum River basin in the future.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The future climate is uncertain as we are not sure how the responsible factors will evolve and whether the Earth System will follow a more plausible pathway out of infinite possibilities. In contrast, we are sure about changes that are evident in the system and can be detected through evaluating historical observations. However, these detected changes need to be attributed to the responsible factors. Such attribution can indicate how much disruption in the system is solely caused by any single factor, such as climate change, and which system component is most sensitive to it. The Working Group II (WGII) of the IPCC describes that climate change impact is “detected” if a natural or human system has changed relative to the counterfactual (system’s behavior without climate change) baseline scenario (IPCC 2014, chap. 18.2.1), whereas “attribution” elucidates the contribution of climate change to the observed change in a system. According to these definitions, we can attribute a particular detected change in natural, human, or managed system to climate change if we compare the observed state of our system to that of the baseline or the reference state that features no change in climate.

Since the Fourth Assessment Report (AR4), there has been a growing consensus that recent climate change has impacted the overall natural and human systems and that the hydrological cycle has gone through serious alterations, which are distinct on global, regional, and local scales (Khattak et al. 2011). Since the economic progress of a region is highly dependent upon hydrological processes, their changes under climate change may affect socioeconomic development across the globe. This is particularly true for the irrigated agrarian economies, such as Pakistan, where shrinking frozen water reservoirs and altering precipitation patterns under climate change are already affecting the glacial, nival, and pluvial regimes, transforming the overall hydrological system, and subsequently the water yield and its seasonality. For instance, the water availability over the 1951–2005 period has been abridged from 5000 to 1100 m3 per capita per year making the country highly water-stressed (WWF 2007). As climate change will exacerbate existing water resource management problems in the future, it is timely to investigate which changes can be attributed to climate change and which system components are most sensitive to support policymakers in devising efficient action plans to enhance resilience and mitigate future risks.

Hardly few studies explored to which extent the observed changes in the hydrological cycle are attributable to climate change or how the hydrological cycle would look should the climate remain stationary, particularly at the regional scale. For instance, Wei et al. (2022), Zhao et al. (2023), Xu et al. (2022), and Mondal and Mujumdar (2012) have attributed decreasing streamflow of the Han River, Danjiang River, Weihe River, and Mahanadi River basins to climate change, respectively. Similarly, Ahmed et al. (2022) and Luo et al. (2016) attributed the increasing streamflow of the Yangtze River Source Region and the Heihe River to climate change, respectively. These studies suggest that although changes in the hydrological systems are distinct, they are caused solely by observed climate change.

Pakistan is one of the least contributors to greenhouse gas emissions yet one of the most affected countries in the world (Government of Pakistan 2021). Besides this fact, studies mainly focused on analyzing historical changes and future projections of the water availability in Pakistan (Hasson 2016; Hasson et al. 2019). Yet, no study has attributed observed changes in the country’s water resources to historical climate change for any watershed, including the Jhelum River basin (JRB), which feeds Pakistan’s second largest reservoir of Mangla for irrigation and hydropower generation, and in turn, plays a significant role in the socioeconomic development of the country.

Hence, following the Inter-Sectoral Impact Model Intercomparison Project (ISIMIP) framework’s 3a protocol (https://www.isimip.org/), we first set up and evaluate the Soil and Water Integrated Model (SWIM) for the transboundary JRB and then detect changes in the simulated discharge and analyze whether such changes can be attributed to climate change. For impact attribution, we force the validated SWIM model with the long-term datasets of both factual (reanalysis, corresponding to observed) and counterfactual (no climate change baseline) climates provided by the ISIMIP-3a protocol, and then, we compare the simulated discharges under factual climate with those under counterfactual climate. We compare the simulated discharges for quantitative changes, such as their trends in annual mean, median, minimum, and maximum discharges, their 10th, 25th, 75th, and 90th percentiles, and for qualitative changes, such as overall hydrograph alteration, and changes in the center of timings.

2 Study area

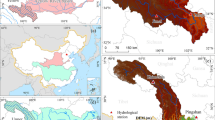

The JRB is a sub-basin of the Indus basin. It is located within the western Himalayas, between 73°–75.62°E and 33°–35°N (Fig. 1). With an elevation range of 438–6111 masl, the basin is around 26,426 km2 in area, 56% of which belongs to India and the rest to Pakistan. The Jhelum River originates from the northwestern slopes of the Pir Punjal mountains and feeds the Mangla reservoir, which is the second largest reservoir in Pakistan after the Tarbela. Commissioned in 1967 with a storage capacity of 5.88 Million Acre Feet (Kayani 2012), the Mangla reservoir regulates irrigated waters for around six million hectares and generates hydropower of around 1000 MW (Archer and Fowler 2008).

The JRB is located at the extreme margins of two prevailing precipitation regimes that are associated with two distinct modes of large-scale circulation, such as the South Asian summer monsoon and the westerly disturbances (Hasson et al. 2013; Hasson et al. 2016) . Hence, the hydrological cycle of JRB is nourished by the monsoonal precipitation regime between July and September and by the westerly precipitation for the rest of the year, both contributing 62% and 38% of around 1200 mm mean annual precipitation, respectively (Mahmood and Babel 2013). Elevated areas receive moisture in the form of snow, mainly from the westerly precipitation, whereas lower valleys mostly receive monsoonal rainfall (Hasson et al. 2017). During winter, around 65% of the basin area is covered by snow, which reduces to around 3% in summer (Hasson et al. 2013; Azmat et al. 2018). Glaciers cover only around 200 km2 of the basin area. The mean annual temperature is around 13.72 °C.

The overall hydrological regime of the basin is dominated by snowmelt and rainfall runoff. The high flow period spans from March to September, which starts with the melting of snow in March and extends with heavy monsoonal rains between July and September. Annual average streamflow at the Azad Pattan gauging site (33.73°N and 73.60°E), upstream of the Mangla reservoir, is around 829 m3s−1, which constitutes around 16% of the total water availability in Pakistan. In the JRB, two hydroelectric power projects, URI-II and Kishenganga, were recently commissioned in 2014 and 2018, respectively. Hence, these projects had little effect on the JRB’s discharge dynamics for the studied period.

3 Dataset

3.1 Hydro-climatic datasets

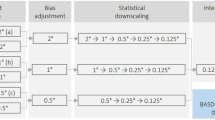

We obtained observed daily mean, maximum, and minimum temperatures (tas, tasmax, tasmin in °C), surface downwelling shortwave radiation (rsds in Wm−2), relative humidity (hurs in %), and precipitation (pr in mm) from three different datasets for factual and counterfactual climates. The details regarding these datasets are added to the supplementary material (SM). These datasets have been prepared at a half-a-degree resolution and included in the ISIMIP-3a protocol (SM, Table A1). The factual climate corresponds to the observed climate, which is represented by the reanalysis data whereas the counterfactual climate is the baseline from which the climate change signal has been removed. We used the counterfactual climate prepared using the ATTRICI (ATTRIbuting Climate Impacts) method described in Mengel et al. (2021). By assuming a smooth annual cycle of the associated scaling coefficients for each day of the year, the ATTRICI method eliminates solely the long-term regional trends in the observed daily climatic variables that are correlated to the fluctuations in the global mean temperature, instead of time (Lange 2019). The ATTRICI approach however retains the short-term natural climate variability caused by events like the El-Nino-Southern Oscillation and ensures that both factual and counterfactual data for a given day have identical ranks in their corresponding statistical distributions. For calibration and validation of the SWIM model, observed daily discharges for the period 1994–2004 were obtained from the Surface Water Hydrology Project (SWHP) of the Water and Power Development Authority (WAPDA) of Pakistan.

3.2 Physiographic datasets

The SWIM model considers basin at three level disaggregation scheme: basin-subbasins-hydrotopes (HRU), where HRUs are unique combinations of soil and LULC within subbasins. For basin delineation and topographical properties, we employed a one-arc second Digital Elevation Model (DEM) from the Shuttle Radar Topography Mission (SRTM) (https://earthexplorer.usgs.gov/), provided by the United States Geological Survey (Farr et al. 2007). The LULC features were taken from the GlobCover global land cover map for the year 2009, generated at 300-m planar resolution based on the imagery of the MERIS sensor on board the ENVISAT satellite (Arino et al. 2010). The GlobCover land use classification was translated into 15 land cover classes adopted by the SWIM. The soil data were acquired from the Harmonized World Soil Database (HWSD), available at a one-kilometer spatial resolution (Fischer et al. 2008). Glacier ice thickness was taken from the Randolph Glacier Inventory version 6.0 (RGI Consortium 2017) prepared by Farinotti et al. (2019).

4 Methods

The present study exclusively focuses on the ISIMIP-3a protocol. According to the guidelines provided in the IPCC AR5 Working Group II, Chapter 18, the ISIMIP-3a component of the third-round framework focuses on both the evaluation and improvement of impact models as well as on the detection and attribution of observed impacts. For evaluation and improvement, we set up the SWIM hydrological model over the JRB and calibrated and validated it against the observed daily discharge over the 1994–2004 period. We objectively selected the calibration period of 1999–2004 as it included a severe drought in Pakistan. This helped in the robust calibration of the snowmelt regime. The model performance was assessed against multiple criteria. The adopted methodology is explained in Fig. 2.

The simulated flows under both factual and counterfactual climates were compared against each other for quantitative changes, such as trends in the annual mean, median, minimum, and maximum discharges, and their 10th, 25th, 75th, and 90th (P10, P25, P75, and P90) percentiles. The trends in annual minimum and maximum are further assessed based on smoothed daily timeseries using a 7-day moving average to eliminate day-to-day variability and to highlight the continued periods of very low and high flows (Stahl et al. 2012). We also quantify how discharges have changed in the recent 30-year climatology relative to the first 30-year climatology of the twentieth century for each dataset using the Tukey test. Further, flow duration curves (FDCs) were compared for the factual and counterfactual discharges to analyze how climate change has changed the overall hydrological regime of JRB. The FDC demonstrates the percentage of time (or duration) a specified discharge is equaled or exceeded during a given period (Vafakhah and Khosrobeigi Bozchaloei 2020). It unfolds the dynamics of high, medium, and low flows (Kim et al. 2014). We also ascertained trends in the center of timing (CT), which refers to the day of a hydrological year at which 50% of the annual discharge volume is reached (Hidalgo et al. 2009). CT is a robust measure as compared to peak discharges, which can occur before or after most of the seasonal flows (Hodgkins et al. 2003).

We employed a nonparametric Mann–Kendall (MK) test for trend detection (Kendall 1948; Mann 1945) and Theil-Sen (Sen 1968; Theil 1950) method to estimate the trend slope. These methods neither require a timeseries to be normally distributed (Tabari and Talaee 2011) nor are sensitive to missing values, outliers, and breaks (Bocchiola and Diolaiuti 2013). The MK test has been widely used to detect monotonic trends in hydrometeorological timeseries and has been thoroughly explained in numerous studies (Hasson et al. 2017; Karki et al. 2017). To prevent the impact of serial dependence of naturally observed timeseries in detecting false trend and its slope magnitude, we used a modified version of MK, which pre-whitens the timeseries for the autoregression process before ascertaining a trend (Hasson et al. 2017; Yue and Wang 2002).

4.1 Hydrological model setup

The study employs SWIM, which is a continuous, semi-distributed, and process-based deterministic eco-hydrological model (Krysanova et al. 2000). The SWIM is developed on top of SWAT (Arnold et al. 1998) and MATSALU models (Krysanova et al. 1989). This is the first SWIM application in Pakistan, although the model has already been successfully applied across the world (Didovets et al. 2021; Hattermann et al. 2017). For instance, the model has been employed to study the impacts of climate change on discharges (Lobanova et al. 2016), comprehend extremes (Aich et al. 2016), assess dynamics of glacial lake outburst floods (Wortmann et al. 2014), investigate impacts of hydrological processes on irrigation (Huang et al. 2015), and for the agriculture studies (Liersch et al. 2013). The settings of the SWIM are added to the supplementary material.

For the JRB, the sensitive parameters (SM, Table A2) have been calibrated and validated over the hydrological years of the 1994–2004 period. The model was calibrated manually considering the second half of the observational record (1999–2004), which encompasses the worst drought period of 1999–2003. This prevented the possible bias compensations from snowmelt to rainfall runoff. We validated the model for the 1994–1999 period. We used multiple efficiency criteria to assess the robustness of the model setup on a daily time step. These include the Nash–Sutcliffe Efficiency (NSE) (Nash and Sutcliffe 1970), Percentage Bias (PBIAS), Volumetric Efficiency (VE), Mean Error (ME), Coefficient of Determination (R2), and Root Mean Square Error (RMSE). Ritter and Muñoz-Carpena (2013) have interpreted the model performance solely based on NSE by subjectively establishing four classes that range between the lower acceptable limit of NSE = 0.65 (Moriasi et al. 2007) and a perfect fit of NSE = 1.0. Using multiple efficiency criteria, Moriasi et al. (2015) considered the watershed-scale model performance “satisfactory” if all the conditions of R2 > 0.60, NSE > 0.50, and PBIAS within ± 15% are satisfied for the daily simulated flows. Here, we considered four performance evaluation classes based on R2, NSE, and PBIAS, as given in SM, Table A3.

5 Results

5.1 Calibration and validation

The SWIM calibration and validation results are summarized in Table 1 and are shown in Fig. 3. For the calibration period, model NSE, R2, and VE are above 0.83, 0.84, and 0.76, respectively whereas PBIAS and ME are negligible and RMSE is above 200 m3s−1. For the validation period, NSE, R2, and VE dropped to 0.76, 0.76, and 0.73, whereas PBIAS increased to around − 5%, ME to around − 46, and RMSE to above 350 m3s−1. Evaluating overall model performance based on multiple criteria is difficult. Moriasi et al. (2015) classified the overall model performance into four categories based on R2, NSE, and PBIAS (SM, Table A3). Based on such criteria, the SWIM model performance can be classified as “Very Good” for the calibration period and “Good” for the validation period. For the whole period, the model efficiency criteria indicate that the SWIM reasonably reproduces the hydrological processes of the JRB, and its overall performance can be classified as “Good.” The mean monthly annual cycle also suggests only small differences between the observed and simulated flows for all periods.

Comparison of observed and simulated discharges for the calibration (1999–2004), validation (1994–1999), and overall (1994–2004) periods, a daily timeseries of observed and simulated discharges for the period (1994–2004), b long-term mean monthly observed and simulated discharges for the calibration, validation, and overall periods, c scatter plots of observed versus simulated daily discharges for overall, calibration, and validation periods in the left, middle, and right columns, respectively

5.2 Streamflow changes

Discharges simulated under counterfactual climates largely exhibit statistically insignificant trends for all indices, seasons, and for all datasets. This indicates that the JRB water availability would not have changed throughout the century should there be no climate change. To quantify how much climate change has impacted the water availability from the JRB, we compared discharges driven by the factual and counterfactual climates more simplistically by only plotting the differences in their annual mean, minimum, maximum, and median estimates (Fig. 4). We calculated the differences as the annual simulated discharges driven by the factual dataset minus those driven by the corresponding counterfactual dataset. All annual quantities are based on the hydrological year (Oct–Sep).

Differences in a annual minimum discharges, b annual maximum discharges, c annual mean discharges, and d annual median discharges between simulations driven by the factual and counterfactual climates for each of the three climate datasets over the 1901–2019 period. Note: differences are calculated as the annual discharges simulated under factual climate minus the annual discharges simulated under counterfactual climates

Qualitative analysis suggests that there was no significant difference between factual and counterfactual discharges at the beginning of the twentieth century, but the difference emerged by 1920 and became stark first around 1930 and then after 1960. Such signal is uniform among the mean, minimum, maximum, and median indices, which all are decreasing under factual climate relative to counterfactual climate throughout the century. Quantitatively, such a decrease is stronger for the 20CRv3-w5e5 as compared to the 20CRv3 and GSWP3-w5e5 datasets for all indices. For instance, annual minimum discharges for the 20CRv3-w5e5 decreased by about 100 m3s−1 in the last decade compared to a negligible decrease for the other two datasets. For the annual maximum and mean discharges, simulations driven by 20CRv3 also follow the pattern of simulations driven by the 20CRv3-w5e5 dataset, both suggesting a higher decrease relative to simulations driven by GSWP3-w5e5. Overall, the largest difference between simulations forced by the factual and counterfactual climates is observed for the annual maximum discharge, followed by the annual mean discharge (Table 2). The 7-daily smoothed time-series analysis also reveals a noticeable decrease in annual maximum discharges (Table 2). Annual trend analysis suggests that the annual maximum factual flows are decreasing at the rates of up to 15 m3s−1 for 20CRv3-w5e5 and 20CRv3, whereas the decreasing trend for the GSWP3-w5e5 is around − 4.5 m3s−1 (Table 2).

Seasonal trend analysis clearly exhibits inter-dataset differences. Table 2 shows that simulations driven by 20CRv3 and 20CRv3-w5e5 factual datasets feature significant decreasing trends for all seasons. In contrast, such trends for the GSWP3-w5e5 are significant only for the summer and autumn seasons, implying that the simulated nival regime driven by this dataset is less affected by climate change. Nevertheless, the decreasing trends in factual discharges are the highest for summer, followed by spring, autumn, and winter seasons. Moreover, such decrease is most obvious for the maximum discharges of the season, followed by mean, median, and minimum discharges, and higher for simulations driven by 20CRv3-w5e5 dataset followed by those driven by 20CRv3 and GSWP3-w5e5 datasets.

We further investigated inter-dataset differences by analyzing trends in factual and counterfactual discharge indices on the monthly basis (Table 3). In addition to insignificant trends for counterfactual simulations, we note significant decreasing trends for the simulations driven by 20CRv3 and 20CRv3-w5e5 factual datasets throughout the year, where such trends are stark for the latter dataset for all indices. In contrast, simulations driven by the GSWP3-w5e5 factual dataset suggest such significant decreasing trends only for the June–November period. In addition, significant increasing trends in most indices are observed for February and March for the simulations driven by the GSWP3-w5e5 factual dataset. These datasets further differ from each other quantitatively. For instance, simulations driven by the 20CRv3-w5e5 factual dataset exhibit stark decreases in monthly mean and maximum factual discharges (above 6 m3s−1) between high flow months of April and September and for minimum and median factual discharges between May and August. In contrast, simulations driven by the 20CRv3 factual dataset feature such stark decreases in relatively fewer months, such as, in May–August for the maximum, and only in June and July for the rest of the indices.

Figure 5 shows the long-term mean annual cycles of all indices from simulations driven by factual and counterfactual datasets. All the simulated discharge indices, driven by three datasets, consistently feature a discharge peak during May–June and a high flow period spanning over April to September. The difference between the simulations driven by factual and counterfactual climates is prominent for the maximum, mean, and median discharges, particularly for summer months. Since summer flows for the JRB are generated from the monsoonal rainfall-runoff (Archer and Fowler 2008), such a decrease may result from the weakening of the monsoonal regime under climate change (Fig. 5). This is confirmed by decreased mean monthly precipitation for monsoon months in factual as compared to counterfactual datasets (SM, Fig A1). Precipitation in factual datasets of 20CRv3 and 20CRv3-w5e5 decreased throughout the year compared to the counterfactual datasets, except in September and October for the former dataset. For GSWP3-w5e5, precipitation in factual climate decreases in all months except in February, March, and June. Subsequently, February and March feature a significant positive trend in discharge indices for the simulations driven by the GSWP3-w5e5 factual dataset (Table 3).

5.3 Comparing climates

We compared annual cycles of the discharge climatology driven by the factual dataset with that driven by the counterfactual dataset to assess how climate change has altered the overall hydrological regime until now. For this, we calculated the discharge climatology for the first 30 years of the twentieth century (p1) and for the last 30 years (p2) for both factual and counterfactual datasets. Comparing p1 with p2 from the counterfactual dataset revealed no significant changes on the monthly and annual scale (Fig. 6a). Same is the case when comparing p1 between counterfactual and factual datasets as changes remain markedly below 10%. On the other hand, p2 from factual datasets is substantially different from that of counterfactual datasets, mainly because factual climatology has changed significantly over the period of 120 years. Main changes are observed during the monsoon months of June to August (Fig. 6b). We have applied the Tukey test to describe the differences between different periods (SM, Fig A2). Differences are up to 50% for July, up to 40% for June and August, and around 20% for the rest of the months. Comparing p1 and p2 from factual datasets also provides similar changes, indicating how much climate change has altered the overall hydrological regime of the JRB, so far.

a Annual cycles of mean monthly discharges for the p1 climate of the twentieth century and p2 climate for simulations driven by both factual (F) and counterfactual (CF) datasets, b monthly percentage change in discharge between the simulations driven by both factual (F) and counterfactual (CF) datasets for the p1 climate (first column), and for the p2 climate (middle column). The third column refers to the percentage change in discharge between the p1 and p2 climates of the simulation driven by the factual dataset. The p1 climate is defined as the initial 30 years, and the p2 climate represents the recent 30 years for each dataset

The timing of peak discharges has also been shifted between the p1 and p2 climates from the simulation driven by the factual dataset. The simulation driven by the GSWP3-w5e5 dataset clearly suggests that the discharge peak in p2 has dampened relative to p1 and shifted to May–June. For 20CRv3-w5e5, the original peak was not clearly designated for the month of June among p1 of factual or counterfactual datasets and p2 of the latter. The 20CRv3 dataset however suggests no change in the timing of peak discharge. Discharge peak in p2 factual climate either shifted from June as in the case of GSWP3-w5e5 or sustained in May as in the case of 20CRv3-w5e5, which is more realistic because the observed discharge of JRB also peaks in May as shown in Fig. 3b. Overall, the discharge has been reduced mainly for the high flow period, where such decrease is higher for pluvial and post-monsoonal regimes than for nival regimes (April–June).

5.4 Flow duration curve and center of timing

Flow duration curves are generally divided into five zones, representing high flows (0–10%), moist conditions (10–40%), mid-range flows (40–60%), dry conditions (60–90%), and low flows (90–100%). These ranges can be used to assess the hydrological state of the river (US Environmental Protection Agency 2007). In simulations driven by all three datasets, the exceedance probability of high flows and moist conditions is reduced in the case of factual relative to counterfactual climate, suggesting a lower frequency of these events in the former climate (Fig. 7). The FDCs of the simulations driven by 20CRv3 and 20CRv3-w5e5 datasets show a relatively high drop in exceedance probability as compared to GSWP3-w5e5. For the simulations driven by the 20CRv3 dataset, the difference between factual and counterfactual exceedance probabilities is more pronounced between high flows and moist condition flows, while in the case of 20CRv3-w5e5 driven simulations, the difference is evident from high to medium flows. In GSWP3-w5e5 driven simulations, the variance between factual and counterfactual exceedance probabilities occurs in the range of high flows and moist condition flows, but it is relatively less pronounced as compared to the simulations driven by 20CRv3 and 20CRv3-w5e5 datasets.

In addition to analyzing transient changes in the median flows (50th percentile), we can see how certain percentiles belonging to low and high flows evolve over time. For this we plot differences in the 10th, 25th, 75th, and 90th percentiles of factual and counterfactual discharges (SM, Fig A2). Differences are calculated as factual minus counterfactual discharge percentiles for each corresponding hydrological year. Low percentiles of 10% and 25% refer to low flow and dry conditions or high exceedance probability, whereas high percentiles of 75% and 90% correspond to high flows and moist conditions or lower exceedance probability (SM, Fig A2). Our results suggest significant falling trends for all types of flows driven by factual datasets except for low flows (P10 and P25) driven by GSWP3-w5e5. The decreasing trends were more pronounced for high flows (90th percentile) relative to the low flows and dry conditions (Table 4). Specifically, for the 20CRv3-w5e5 factual case, the decreasing trend was prominent for the 90th percentile (− 12.30 m3s−1) and less pronounced for the 10th percentile (− 0.93 m3s−1). The same is the case with the 20CRv3 factual scenario; the falling trend was more pronounced for the high flows at the 90th percentile (− 11.79 m3s−1) but less marked for the low flows at the 10th percentile (− 0.48 m3s−1).

For simulations driven by all three datasets, the CT for both factual and counterfactual climates lie within the second half of the hydrological year, mostly varying between May and July (Table 4). The trends for all three datasets indicate that the CT is shifting to earlier days (Table 4 and Fig. 8). Such shifts are mainly due to the shifts in the timings of the snowmelt runoff to earlier dates. Our inter-dataset comparison suggests that such a shift was more pronounced for the simulations driven by the GSWP3-w5e5 dataset (12 days per century) followed by the 20CRv3-w5e5 dataset (8 days per century) and the 20CRv3 dataset (6 days per century). The shift of CT to earlier dates is somehow consistent with an earlier shift of peak discharge in the p2 of factual climate.

6 Discussions

We note that performance for the validation period is relatively lower than that of the calibration period because the model failed to capture a few of the discharge peaks during the wet validation period (Fig. 3a, c). Since NSE is particularly sensitive to the peaks (Krause et al. 2005), its value is, therefore, lower for the validation period. The model’s failure in simulating discharge peaks is probably due to a half-a-degree resolution of input datasets, which is rather coarse for the mountainous JRB and is not able to retain the intense localized precipitation events. We believe that the model can capture the right intensity of streamflow peaks given it is driven by station observations or high-resolution robust datasets. For instance, forcing the semi-distributed hydrological model of the University of British Columbia for the JRB using observed stations, Hasson et al. (2019) reported higher NSE of 0.88, 0.80, and 0.84 for the calibration, validation, and whole periods, respectively. Hence, the use of high-resolution datasets for the complex mountainous catchments can resolve the fine-scale hydro-meteorological processes and improve the model evaluation and robustness of its projections, allowing better comprehension of the climate change impacts. Performing resolution sensitivity experiments is however not the focus of our study. Further, we fixed the LULC throughout the simulation period based on the reports of insignificant changes in the mean annual runoff of the Kunhar subbasin of JRB due to changes in LULC (Akbar & Gheewala 2021). However, LULC changes need to be assessed for the whole JRB and across the whole length of the simulation period from multiple datasets to represent them more realistically in the model. This will be the focus of our future studies.

We found that climate change is responsible for a decrease in the JRB mean annual water availability. Similar decreasing trends have been attributed to climate change by various studies. For instance, Najafi et al. (2017) have attributed decreasing streamflow for the British Columbia basins to anthropogenic climate change. Lv et al. (2021) have reported that Yellow River flows were decreasing between 1958–1993 and 1994–2017 period and that the observed climate change is responsible for such a decrease. Similarly, Kormann et al. (2015) have attributed declining streamflow to climate change for western Austria. In contrast to only decreasing trends, seasonally distinct streamflow changes detected in the Western United States between 1950 and 1999 have also been attributed to observed climate change (Barnett et al. 2008).

We observed earlier shifts in the CT due to an early shift in nival regime. These results are consistent with the reports of Hidalgo et al. (2009) for the Western United States since 1950 where they attributed earlier shifts in CT to climate change. Similar findings are reported for the snowmelt runoff from the mountains of Colorado (Clow 2010). In contrast, shifts in CT are found insignificant for four large Sierra Nevada basins (Maurer et al. 2007).

7 Conclusions

To understand how climate change can shape our future habitability, this study first detects changes in the simulated streamflow of the JRB for the historical period (1901–2019) and then analyzes whether these changes can be attributed to observed climate change. Following the ISIMIP-3a protocol, we forced a well-calibrated and validated SWIM model with three datasets prepared for both factual and counterfactual climate datasets. The intercomparison was performed based on MK trend tests and Sen Slopes for monthly, seasonal, and annual mean, minimum, maximum, and median discharges. We further analyzed flow duration curves, extreme percentiles, and center-of-volume timings to understand differences in low, medium, and high flows and their timings between simulations forced by factual and counterfactual climates. The study concludes that the discharges simulated under the counterfactual climates largely exhibit statistically insignificant trends for all indices, seasons, and for all datasets. This indicates that the JRB water availability would not have changed notably throughout the century should there be no climate change.

The simulations driven by the three datasets differed mainly on the quantitative scale but agreed well qualitatively. Nevertheless, the decreasing trends in factual discharges are the highest for the summer, followed by the spring, autumn, and winter seasons. Moreover, such a decrease is stark for the maximum discharges, followed by mean, median, and minimum discharges, and higher in the simulations driven by the 20CRv3-w5e5 followed by the 20CRv3 and GSWP3-w5e5 datasets. Overall, the discharge has been reduced mainly for the high flow period where such decrease is higher for pluvial monsoonal regimes (monsoon and post-monsoon season) than for nival regime (April–June). Earlier shifts in the CT are due to early shifts in nival regime. Substantial streamflow decrease during summer months clearly suggests a critical role of the monsoonal precipitation regime in disrupting the hydrological cycle of JRB in the future. Further, future studies can focus on disentangling the effect of anthropogenic climate change factors from natural factors.

Data Availability

The climate datasets are obtained from the ISIMIP Repository (https://data.isimip.org). The Harmonized World Soil Database (HWSD) can be accessed at http://www.fao.org/soils-portal/data-hub/soil-maps-anddatabases/harmonized-world-soil-database-v12. The GlobCover Land Cover Map is accessible at http://due.esrin.esa.int/page_globcover.php, and the SRTM Digital Elevation Model can be obtained from https://earthexplorer.usgs.gov. The ice thickness distribution of all glaciers can be accessed at https://doi.org/10.3929/ethz-b-000315707. The observed streamflow datasets analyzed during the current study are available from the Pakistan Water and Power Development Authority (WAPDA). The simulated streamflow datasets can be requested from the corresponding author.

References

Ahmed N, Wang G, Lü H, Booij MJ, Marhaento H, Prodhan FA, Ali S, Imran MA (2022) Attribution of changes in streamflow to climate change and land cover change in Yangtze River Source Region, China. Water 14(2):259. https://doi.org/10.3390/w14020259

Aich V, Liersch S, Vetter T, Fournet S, Andersson JCM, Calmanti S, van Weert FHA, Hattermann FF, Paton EN (2016) Flood projections within the Niger River Basin under future land use and climate change. Sci Total Environ 562:666–677. https://doi.org/10.1016/j.scitotenv.2016.04.021

Akbar H, Gheewala SH (2021) Impact of Climate and Land Use Changes on flowrate in the Kunhar River Basin, Pakistan, for the Period (1992–2014). Arab J Geosci 14:1–11. https://doi.org/10.1007/s12517-021-07058-7/Published

Archer DR, Fowler HJ (2008) Using meteorological data to forecast seasonal runoff on the River Jhelum. Pakistan Journal of Hydrology 361(1–2):10–23. https://doi.org/10.1016/j.jhydrol.2008.07.017

Arino O, Ramos J, Kalogirou V, Defourny P, Achard F (2010) GlobCover 2009. In: Proceedings of ESA Living Planet Symposium, Bergen, Norway

Arnold JG, Srinivasan R, Muttiah RS, Williams JR (1998) Large area hydrologic modeling and assessment part I: Model development. J Am Water Resour Assoc 34(1):73–89. https://doi.org/10.1111/j.1752-1688.1998.tb05961.x

Azmat M, Qamar MU, Huggel C, Hussain E (2018) Future climate and cryosphere impacts on the hydrology of a scarcely gauged catchment on the Jhelum river basin, Northern Pakistan. Sci Total Environ 639:961–976. https://doi.org/10.1016/j.scitotenv.2018.05.206

Barnett TP, Pierce DW, Hidalgo HG, Bonfils C, Santer BD, Das T, Bala G, Wood AW, Nozawa T, Mirin AA, Cayan DR, Dettinger MD (2008) Human-induced changes in the hydrology of the Western United States. Science 319(5866):1080–1083. https://doi.org/10.1126/science.1152538

Bocchiola D, Diolaiuti G (2013) Recent (1980–2009) evidence of climate change in the upper Karakoram. Pakistan Theoretical and Appl Climatol 113(3–4):611–641. https://doi.org/10.1007/s00704-012-0803-y

Clow DW (2010) Changes in the timing of snowmelt and streamflow in Colorado: a response to recent warming. J Clim 23(9):2293–2306. https://doi.org/10.1175/2009JCLI2951.1

Government of Pakistan (2021) Updated nationally determined contributions. Islamic Republic of Pakistan, Islamabad

Didovets I, Lobanova A, Krysanova V, Menz C, Babagalieva Z, Nurbatsina A, Gavrilenko N, Khamidov V, Umirbekov A, Qodirov S, Muhyyew D, Hattermann FF (2021) Central Asian rivers under climate change: Impacts assessment in eight representative catchments. J Hydrol Reg Stud 34:100779

Farinotti D, Huss M, Fürst JJ, Landmann J, Machguth H, Maussion F, Pandit A (2019) A consensus estimate for the ice thickness distribution of all glaciers on Earth. Nat Geosci 12(3):168–173. https://doi.org/10.1038/s41561-019-0300-3

Farr TG, Rosen PA, Caro E, Crippen R, Duren R, Hensley S, Kobrick M, Paller M, Rodríguez E, Roth L, Seal D, Shaffer S, Shimada J, Umland J, Werner M, Oskin M, Burbank D, Alsdorf D (2007) The shuttle radar topography mission. Rev Geophys 45(2):R2004. https://doi.org/10.1029/2005RG000183

Fischer G, Nachtergaele F, Prieler S, van Velthuizen HT, Verelst L, Wiberg D (2008) Global agro-ecological zones assessment for agriculture (GAEZ 2008). IIASA, Laxenburg, Austria and FAO, Rome, Italy

Hasson S (2016) Future water availability from Hindukush-Karakoram-Himalaya upper indus basin under conflicting climate change scenarios. Climate 4(3). https://doi.org/10.3390/cli4030040

Hasson S, Böhner J, Lucarini V (2017) Prevailing climatic trends and runoff response from Hindukush–Karakoram–Himalaya, upper Indus Basin. Earth Syst Dynam 8(2):337–355

Hasson S, Lucarini V, Pascale S (2013) Hydrological cycle over South and Southeast Asian river basins as simulated by PCMDI/CMIP3 experiments. Earth System Dynamics 4(2):199–217. https://doi.org/10.5194/esd-4-199-2013

Hasson S, Pascale S, Lucarini V et al (2016) Seasonal cycle of precipitation over major river basins in south and Southeast Asia: a review of the CMIP5 climate models data for present climate and future climate projections. Atmos Res 180:42–63. https://doi.org/10.1016/j.atmosres.2016.05.008

Hasson S, Saeed F, Böhner J, Schleussner CF (2019) Water availability in Pakistan from Hindukush–Karakoram–Himalayan watersheds at 1.5°C and 2°C Paris Agreement targets. Adv Water Resour 131. https://doi.org/10.1016/j.advwatres.2019.06.010

Hattermann FF, Krysanova V, Gosling SN et al (2017) Cross-scale intercomparison of climate change impacts simulated by regional and global hydrological models in eleven large river basins. Clim Chang 141:561–576. https://doi.org/10.1007/s10584-016-1829-4

Hidalgo HG, Das T, Dettinger MD, Cayan DR, Pierce DW, Barnett TP, Bala G, Mirin A, Wood AW, Bonfils C, Santer BD, Nozawa T (2009) Detection and attribution of streamflow timing changes to climate change in the Western United States. J Clim 22(13):3838–3855. https://doi.org/10.1175/2009JCLI2470.1

Hodgkins GA, Dudley RW, Huntington TG (2003) Changes in the timing of high river flows in New England over the 20th century. J Hydrol 278(1–4):244–252. https://doi.org/10.1016/S0022-1694(03)00155-0

Huang S, Krysanova V, Zhai J, Su B (2015) Impact of intensive irrigation activities on river discharge under agricultural scenarios in the semi-arid Aksu River Basin. Northwest China Water Res Manag 29(3):945–959. https://doi.org/10.1007/s11269-014-0853-2

IPCC (2014) Climate change 2014: impacts, adaptation, and vulnerability. Part A: global and sectoral aspects. Contribution of working group II to the fifth assessment report of the intergovernmental panel on climate change, eds field CB, et al. Cambridge Univ Press, New York

Karki R, Hasson S, Schickhoff U, Scholten T, Boehner J (2017) Rising precipitation extremes across Nepal. Climate 5(1):4. https://doi.org/10.3390/cli5010004

Kayani SA (2012) Mangla Dam Raising Project (Pakistan): general review and socio-spatial impact assessment; Hal-00719226: Islamabad, Pakistan. https://hal.archives-ouvertes.fr/hal-00719226

Kendall MG (1948) Rank correlation methods. Griffin

Khattak MS, Babel MS, Sharif M (2011) Hydro-meteorological trends in the upper Indus River basin in Pakistan. Climate Res 46(2):103–119. https://doi.org/10.3354/cr00957

Kim J-T, Kim G-B, Chung IM, Jeong G-C (2014) Analysis of flow duration and estimation of increased groundwater quantity due to groundwater dam construction. J Eng Geol 24(1):91–98. https://doi.org/10.9720/KSEG.2014.1.91

Kormann C, Francke T, Renner M, Bronstert A (2015) Attribution of high resolution streamflow trends in Western Austria—an approach based on climate and discharge station data. Hydrol Earth Syst Sci 19(3):1225–1245. https://doi.org/10.5194/hess-19-1225-2015

Krause P, Boyle DP, Bäse F (2005) Comparison of different efficiency criteria for hydrological model assessment. Adv Geosci 5:89–97. https://doi.org/10.5194/adgeo-5-89-2005

Krysanova V, Meiner A, Roosaare J, Vasilyev A (1989) Simulation modelling of the coastal waters pollution from agricultural watershed. Ecol Model 49(1):7–29. https://doi.org/10.1016/0304-3800(89)90041-0

Krysanova V, Wechsung F, Arnold J, Srinivasan R, Williams J (2000) SWIM (soil and water integrated model). User manual, PIK report 69. Potsdam Institute for Climate Impact Research, Potsdam

Lange S (2019) Trend-preserving bias adjustment and statistical downscaling with ISIMIP3BASD (v1. 0). Geosci Model Dev 12(7):3055–3070. https://doi.org/10.5194/gmd-12-3055-2019

Liersch S, Cools J, Kone B, Koch H, Diallo M, Reinhardt J, Fournet S, Aich V, Hattermann FF (2013) Vulnerability of rice production in the Inner Niger Delta to water resources management under climate variability and change. Environ Sci Policy 34:18–33. https://doi.org/10.1016/j.envsci.2012.10.014

Lobanova A, Koch H, Liersch S, Hattermann FF, Krysanova V (2016) Impacts of changing climate on the hydrology and hydropower production of the Tagus River basin. Hydrol Process 30(26):5039–5052. https://doi.org/10.1002/hyp.10966

Luo K, Tao F, Moiwo JP, Xiao D (2016) Attribution of hydrological change in Heihe River Basin to climate and land use change in the past three decades. Sci Rep 6(1):33704. https://doi.org/10.1038/srep33704

Lv X, Liu S, Li S, Ni Y, Qin T, Zhang Q (2021) Quantitative estimation on contribution of climate changes and watershed characteristic changes to decreasing streamflow in a typical basin of yellow river. Front Earth Sci 9:752425. https://doi.org/10.3389/feart.2021.752425

Mahmood R, Babel MS (2013) Evaluation of SDSM developed by annual and monthly sub-models for downscaling temperature and precipitation in the Jhelum basin, Pakistan and India. Theoret Appl Climatol 113(1–2):27–44. https://doi.org/10.1007/s00704-012-0765-0

Mann HB (1945) Nonparametric tests against trend. Econometrica: Journal of the Econometric Society 13:245–259. https://www.jstor.org/stable/1907187

Maurer EP, Stewart IT, Bonfils C, Duffy PB, Cayan DR (2007) Detection, attribution, and sensitivity of trends toward earlier streamflow in the Sierra Nevada. J Geophy Res 112:D11118. https://doi.org/10.1029/2006JD008088

Mengel M, Treu S, Lange S, Frieler K (2021) ATTRICI v11—counterfactual climate for impact attribution. Geosci Model Dev 14(8):5269–5284. https://doi.org/10.5194/gmd-14-5269-2021

Mondal A, Mujumdar PP (2012) On the basin-scale detection and attribution of human-induced climate change in monsoon precipitation and streamflow. Water Resour Res 48:1–18. https://doi.org/10.1029/2011WR011468

Moriasi DN, Gitau MW, Pai N, Daggupati P (2015) Hydrologic and water quality models: performance measures and evaluation criteria. Trans ASABE 58(6):1763–1785. https://doi.org/10.13031/trans.58.10715

Moriasi DN, Arnold JG, Van Liew MW, Bingner RL, Harmel RD, Veith TL (2007) Model evaluation guidelines for systematic quantification of accuracy in watershed simulations. Trans ASABE 50(3):885–900. https://doi.org/10.13031/2013.23153

Najafi MR, Zwiers FW, Gillett NP (2017) Attribution of observed streamflow changes in key British Columbia drainage basins. Geophys Res Lett 44(21):11012–11020. https://doi.org/10.1002/2017GL075016

Nash JE, Sutcliffe JV (1970) River flow forecasting through conceptual models part I—a discussion of principles. J Hydrol 10(3):282–290. https://doi.org/10.1016/0022-1694(70)90255-6

RGI Consortium (2017) Randolph glacier inventory – a dataset of global glacier outlines: Version0: Technical Report. Digital Media, USA, Global Land Ice Measurements from Space, Colorado. https://doi.org/10.7265/N5-RGI-60

Ritter A, Muñoz-Carpena R (2013) Performance evaluation of hydrological models: statistical significance for reducing subjectivity in goodness-of-fit assessments. J Hydrol 480:33–45. https://doi.org/10.1016/j.jhydrol.2012.12.004

Sen PK (1968) Estimates of the regression coefficient based on Kendall’s tau. J Am Stat Assoc 63(324):1379–1389. https://doi.org/10.2307/2285891

Stahl K, Tallaksen LM, Hannaford J, Van Lanen HAJ (2012) Filling the white space on maps of European runoff trends: estimates from a multi-model ensemble. Hydrol Earth Syst Sci 16(7):2035–2047. https://doi.org/10.5194/hess-16-2035-2012

Tabari H, Talaee PH (2011) Recent trends of mean maximum and minimum air temperatures in the western half of Iran. Meteorol Atmos Phys 111(3–4):121–131. https://doi.org/10.1007/s00703-011-0125-0

Theil H (1950) A rank invariant method of linear and polynomial regression analysis. i, ii, iii. Proceedings of the Koninklijke Nederlandse Akademie Wetenschappen. Ser A e Math Sci 53(1):386–392

US Environmental Protection Agency (2007) An approach for using load duration curves in the development of TMDLs. Washington DC. http://www.epa.gov/owow/tmdl/techsupp.html

Vafakhah M, Khosrobeigi Bozchaloei S (2020) Regional analysis of flow duration curves through support vector regression. Water Resour Manage 34:283–294

Wei M, Yuan Z, Xu J, Shi M, Wen X (2022) Attribution assessment and prediction of runoff change in the Han River Basin, China. Int J Environ Res Public Health 19(4):2393. https://doi.org/10.3390/ijerph19042393

Wortmann M, Krysanova V, Kundzewicz ZW, Su B, Li X (2014) Assessing the influence of the Merzbacher Lake outburst floods on discharge using the hydrological model SWIM in the Aksu headwaters. Kyrgyzstan/nw China Hydrological Processes 28(26):6337–6350. https://doi.org/10.1002/hyp.10118

WWF Pakistan (2007) Pakistan’s water at risk, water and health related issues and key recommendations. Freshwater & Toxics Programme, Communications Division, WWF Pakistan

Xu J, Gao X, Yang Z, Xu T (2022) Trend and attribution analysis of runoff changes in the Weihe River basin in the last 50 years. Water 14(1):47. https://doi.org/10.3390/w14010047

Yue S, Wang CY (2002) Applicability of prewhitening to eliminate the influence of serial correlation on the Mann-Kendall test. Water Resources Research 38(6):4–7. https://doi.org/10.1029/2001wr000861

Zhao L, He Y, Liu W (2023) Attribution of runoff changes in the Danjiang River Basin in the Qinba Mountains, China. Front Forest Global Change 6:1187515. https://doi.org/10.3389/ffgc.2023.1187515

Acknowledgements

The authors are grateful to the Inter-Sectoral Impact Model Intercomparison Project (ISIMIP) for providing the datasets. We also acknowledge the Water and Power Development Authority (WAPDA) Pakistan for providing the daily streamflow data for the Jhelum Basin. Mustafa Javed, Jürgen Böhner, and Shabeh ul Hasson acknowledge the support from the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under Germany’s Excellence Strategy-EXC 2037 “CLICCS-Climate, Climatic Change, and Society”-Project Number: 390683824, contribution to the Center for Earth System Research and Sustainability (CEN) of Universität Hamburg.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Javed, M., Didovets, I., Böhner, J. et al. Attributing historical streamflow changes in the Jhelum River basin to climate change. Climatic Change 176, 149 (2023). https://doi.org/10.1007/s10584-023-03628-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10584-023-03628-8