Abstract

Mounting evidence suggests members of the general public are not homogeneous in their receptivity to climate science information. Studies segmenting climate change views typically deploy a top-down approach, whereby concepts salient in scientific literature determine the number and nature of segments. In contrast, in two studies using Australian citizens, we used a bottom-up approach, in which segments were determined from perceptions of climate change concepts derived from citizen social media discourse. In Study 1, we identified three segments of the Australian public (Acceptors, Fencesitters, and Sceptics) and their psychological characteristics. We find segments differ in climate change concern and scepticism, mental models of climate, political ideology, and worldviews. In Study 2, we examined whether reception to scientific information differed across segments using a belief-updating task. Participants reported their beliefs concerning the causes of climate change, the likelihood climate change will have specific impacts, and the effectiveness of Australia’s mitigation policy. Next, participants were provided with the actual scientific estimates for each event and asked to provide new estimates. We find significant heterogeneity in the belief-updating tendencies of the three segments that can be understood with reference to their different psychological characteristics. Our results suggest tailored scientific communications informed by the psychological profiles of different segments may be more effective than a “one-size-fits-all” approach. Using our novel audience segmentation analysis, we provide some practical suggestions regarding how communication strategies can be improved by accounting for segments’ characteristics.

In 2016, the Paris Agreement was ratified. Parties to the agreement have pledged to cooperate to keep global temperature increases well below 2 °C above pre-industrial levels (United Nations 2015). The continued cooperation of democratic countries is partly determined by public support. Yet, in America and Australia, public concern for climate change often lags behind other social issues, such as strengthening the economy (Markus 2021; Pew Research Center 2022).

Various interventions have been proposed to increase support for climate policy. For example, telling people the proportion of climate scientists who believe in anthropogenic climate change (97%) enhances concern about climate change and policy support (van der Linden et al. 2015; van der Linden 2021). Such interventions treat the public as a homogeneous entity. However, reception to climate change messages can vary due to differences in motivation, ideology, and worldview (Kahan 2012; Feygina et al. 2010). For example, Hart and Nisbet (2012) exposed Americans to a news story describing climate change risks, before measuring support for mitigation policies. Compared to controls not exposed to an article, liberals who read the news story showed greater support for mitigation policy, whereas conservatives showed reduced support. Thus, when climate change messages clash with a person’s pre-existing political beliefs, they can backfire.

To improve interventions, communicators may use audience segmentation to divide the public into homogeneous groups (Smith 1956). Messages can then be tailored to the characteristics of each group, which may enhance communication effectiveness and mitigate backfire effects (Corner and Randall 2011). A meta-analysis of health communication suggests segmentation approaches are more effective than a “one-size-fits-all” approach, particularly when psychological theory is used to understand the segments (Noar et al. 2007).

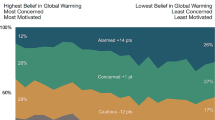

The most established audience segmentation for climate change communication is the Six Americas (Maibach et al. 2011; Yale Program on Climate Change Communication 2022). Six segments were developed from a nationally representative survey of Americans. Although multidimensional, the Six Americas may be ordered on continuous dimensions of belief and concern about climate change. The segments range from the “Alarmed”, the segment most accepting of climate change science; via the “Concerned”; the “Cautious”; the “Disengaged”; the “Doubtful”; to the “Dismissive”, a segment which rejects climate science. Conceptual replications have revealed comparable segments in other Western nations, such as Australia and Germany (Hine et al. 2013; Morrison et al. 2013; Morrison et al. 2018; Neumann et al. 2022; Metag et al. 2017). However, different segments have been observed in non-Western nations, such as India (Leiserowitz et al. 2013) and Singapore (Detenber et al. 2016), indicative of economic, social, political, and cultural differences between countries (Leiserowitz et al. 2021).

Most climate change audience segments, including the Six Americas, have been developed using a top-down approach. Specifically, the audience is statistically grouped across psychological characteristics known to correlate with climate change perceptions, policy support, and pro-environmental behaviour (for a review, see Hine et al. 2014). Alternatively, a bottom-up approach groups segments according to the audience’s views of lay concepts of climate change, such as those found in social media discussions. This overcomes a disadvantage of top-down approaches—they may omit features of climate change salient to citizens, but not to researchers. However, bottom-up approaches are sorely lacking in the climate change domain. Thus, it is unknown to what degree current understandings of segmentation are limited by researchers’ preconceptions. Here we addressed this shortcoming using a bottom-up audience segmentation approach.

We conducted bottom-up segmentation using the Q methodology—an analytical approach to representing viewpoints (Brown 1980). It uses a Q sort task whereby participants rank statements about a topic, usually along a dimension of agreement. Statements can be generated using a bottom-up approach, where statements capture the breadth of conversational possibilities (Brown 1980; Stephenson 1986). Using factor analysis, participants are then segmented based on ranks assigned to statements. Applications of the Q methodology to climate change audiences are rare and limited to small non-representative samples (e.g. Wolf et al. 2009; Hobson and Niemeyer 2012). The current research is the first to apply the Q methodology to nationally representative samples.

We report two studies using representative samples of the Australian public. Study 1 sought to identify audience segments with the Q methodology using bottom-up generated statements derived from prevalent Australian social media discourse on climate change (Andreotta et al. 2019), and determine segment differences with auxiliary measures of various psychological characteristics. The study provided evidence for three different segments: Acceptors, Fencesitters, and Sceptics. Analysis of psychological characteristics indicated segments differed in their mental models, climate change concern and scepticism, political ideology, and environmental worldviews. In Study 2, we replicated the three segments and examined if they differed in their receptivity to climate science information. Participants completed a belief-updating task in which they were asked their beliefs about the contribution of different causes to climate change, the likelihood climate change will have specific impacts, and the effectiveness of Australia’s mitigation policy. They were then given actual scientific estimates before submitting their revised belief estimates. We found considerable heterogeneity across segments in their belief-updating tendencies. These results provide insights into the effectiveness of communicating scientific information to each segment.

1 Study 1

Climate change views may arise from the interaction of cognitive representations, ideology, personality, and affect. Regarding cognitive representation, audience segments typically vary in climate change scepticism (e.g. Yale Program on Climate Change Communication 2022), both epistemic (doubting climate science) and response scepticism (doubting mitigation is possible; Capstick and Pidgeon 2014). Segments may also differ in terms of their mental models—the internalised representations that underpin causal beliefs used to generate descriptions, explanations, and predictions (Granger et al. 2002; Rouse and Morris 1986; Jones et al. 2011). A multinational survey found mitigation policy support is associated with different climate change mental models, formulated as perceived causes of climate change, perceived climate change consequences, and perceived policy effectiveness (Bostrom et al. 2012). To illustrate, consider two common mental models: one features greenhouse gas emissions as the predominant cause of climate change, whereas the other features toxic air pollution as the predominant cause (Kempton et al. 1996; Reynolds et al. 2010; Fleming et al. 2021). Individuals with the second model may be most likely to suggest the ineffective strategy of mitigating climate change by additional filtering of factory smokestacks (Kempton et al. 1996). Thus, knowledge of segments’ mental models provides insight into the logic by which trusted information will be transformed into action or knowledge (Granger et al. 2002), and which policies may be endorsed (Bostrom et al. 2012).

Deliberation through mental models and policy support may depend upon cognitive styles. For example, individuals with a high need for cognition engage in, and enjoy, effortful thinking (Cacioppo and Petty 1982). Those high in need for cognition tend to think openly and critically about issues, potentially leading to greater acceptance of scientific perspectives on climate change (Sinatra et al. 2014). Conversely, individuals with high self-perceived climate change knowledge may be reluctant to seek out and accept scientific information (Stoutenborough and Vedlitz 2014). Those with an elevated consideration-of-future-consequences cognitive style are predisposed to orient themselves to long-term over short-term goals (Strathman et al. 1994), leading to higher support for mitigative policy (Wang 2017). Rejection of anthropogenic climate change may be underpinned by conspiracist ideation, whereby individuals believe climate change to be a “hoax” and may be resistant to updating their views in light of scientific evidence (Lewandowsky et al. 2013; Lewandowsky et al. 2013; Sarathchandra and Haltinner 2021).

Ideological characteristics may underscore the contents of mental models and whether mental models are used in deliberation (Fleming et al. 2021). For example, right-wing political ideologies de-emphasise climate change risks and concerns, thereby legitimising mental models that represent climate change as a natural fluctuation in climate (Leiserowitz 2006; Zia and Todd 2010; Campbell and Kay 2014; Drews and van den Bergh 2016). Similarly, individuals with high system justification, a tendency to perceive the prevailing social system as fair, legitimate, and justifiable (Kay and Jost 2003), are motivated to deny the risks of climate change, as mitigative policies often challenge the status quo (van der Linden 2017; Feygina et al. 2010). Individuals differ in their environmental worldviews, culturally shared values and beliefs about the environment (Thompson et al. 1990; Douglas and Wildavsky 1983). For example, individuals may have an environment-as-ductile worldview that the environment is unable to recover from human impacts or an environment-as-elastic worldview that the environment is readily able to recover from human impacts (Price et al. 2014). Individuals with greater environment-as-ductile worldviews, or reduced environment-as-elastic worldviews, demonstrate greater belief in anthropogenic climate change, environmental concern, and pro-environmental behaviour (Price et al. 2014). Lastly, values may influence climate change scepticism. Individuals with high conservation values (the motivation to preserve the past, respect order, and resist change) and low self-transcendence may be motivated to engage in climate change denial (Corner et al. 2014; Schwartz 2012; Drews and van den Bergh 2016).

Personality may have wide-ranging effects on engagement with, and views of, climate change. Personality mediates the effects of risk perceptions, values, social norms, and environmental concern on pro-environmental attitudes (Yu and Yu 2017). Emission-reducing behaviours are associated with the traits of openness (imaginative, curious), conscientiousness (reliable, organised), and extraversion (sociability, assertiveness; Brick and Lewis 2014).

Affect, particularly worry about climate change, can influence climate change views and support for action (Fleming et al. 2021; van der Linden et al. 2019; Smith and Leiserowitz 2014). For example, experimental and correlational research has supported a gateway belief model, where the influence of the perceived scientific consensus on support for public action is mediated by cognitive (belief in climate change and human causation) and affective variables (worry about climate change; van der Linden et al. 2019). Worry about climate change is one of the largest predictors of mitigative policy support, surpassing the predictive capacity of other emotions (Smith and Leiserowitz 2014).

Accordingly, a complex myriad of psychological characteristics underpin tendencies to process and seek information and engage in pro-environmental behaviour. This study aimed to segment the audience using the Q methodology and examine segment differences in a range of psychological characteristics. Although we used a bottom-up approach to audience segmentation, we adopted a top-down approach to facilitate segment interpretation by incorporating auxiliary measures of the just reviewed psychological characteristics, which may help explain differences in segment membership. Critically, these auxiliary measures were used to facilitate interpretation of audience segments after they had been derived—they did not contribute to the segmentation process itself. We expected each segment would be underscored by a unique signature of psychological characteristics.

1.1 Method

This study was preregistered using the Open Science Framework (https://osf.io/tc8v6).

1.1.1 Participants

Four hundred and thirty-five Australian adults were recruited online by Qualtrics. A targeted and stratified sampling process was used, whereby age (M = 46.71, SD = 17.77) and gender (female = 50.34%) were matched to the general population of Australian adults reported in the national 2016 census. We did not record the data of extremely fast responders, defined as those who completed the study in less than 873 s (a preregistered criterion based on pilot testing).

1.1.2 Materials and procedure

Participants completed the Q sort followed by an inventory of psychological survey scales. Administration of scales was counterbalanced to control order effects (see Supplementary Material). The median time taken to complete the study was 26.17 min (interquartile range = 16.15 min).

Q sort

The Q sort requires a set of statements capturing the breadth of conversational possibilities of an issue (Stephenson 1986). To create our statements, we drew upon previous work that used an inductive process to identify the structure of climate change commentary of Australian tweets (Andreotta et al. 2019). This research revealed five enduring themes of public discourse on climate change: climate change action, climate change consequences, climate change conversations, climate change denial, and the legitimacy of climate science and climate change. For each theme, we selected six tweets that captured the heterogeneity of the theme (see Supplementary Materials). The resulting 30 tweets were transcribed as statements that could be understood without the social context of the original tweet. Where possible, language, sentiment, and tone were preserved. Statements included: “it is important to vote for leaders who will combat climate change” (climate change action), “climate change is a threat to the health and safety of our children” (climate change consequences), “it is shameful that climate change, the greatest problem of our time, is barely discussed in the media” (climate change conversations), “climate change sceptics ignore basic climate science facts” (climate change denial), and “scientists should stop falsely claiming that climate change is a settled science” (legitimacy of climate science and climate change).

The Q sort comprised three phases. In phase 1, participants read each statement and assigned it to one of three categories: (1) like their point of view; (2) unlike their point of view; or (3) neutral or unsure. In phase 2, participants were required to rank statements from “most unlike my point of view” (−4) to “most like my point of view” (+4). The number of statements that could be placed at each rank was predetermined, such that more statements could be placed at the midpoint rather than the extremes (Fig. 1). Thus, participants had to be selective in the statements used to represent their most extreme views. In phase 3, participants responded to open-ended questions prompting them to justify their placement of the statements ranked most extreme.

Fig. 1 Schematic of the Q sort task. Participants progressed through a stack of unsorted statements (A) by dragging the top-most statement into the grey box that best corresponded to their point of view (B), as indicated by the blue solid line. Participants could re-arrange statements at any time during the task. To facilitate this process, statements could be placed in the orange temporary holding area (C), as indicated by the pink dashed line.

Scales

Twenty-eight scales were used to measure cognitive, ideological, personality, and affective psychological characteristics. For brevity, the scales are summarised in Table 1.

1.2 Results and discussion

1.2.1 Segments

To identify segments, we conducted a factor analysis on the Q sort data (collected in phase 2). Unlike traditional survey approaches which characterise factors of statements that generate common response patterns across people, the Q methodology reverses this statistical procedure to identify factors of people with common sorting styles (Brown 1980; Watts and Stenner 2012; McKeown and Thomas 2013). We conducted a principal components analysis using varimax rotation. We extracted a single factor, as the first component accounted for a substantially larger proportion of variance (34.06% after rotation) than the second component. Broadly, this extracted factor represents a dimension of acceptance of anthropogenic climate change. We segmented individuals on the basis of their factor loading: (1) Acceptors, whose positive loading onto the factor is statistically significant (n = 281; 64.60%); (2) Sceptics, whose negative loading onto the factor is statistically significant (n = 36; 8.28%); and (3) Fencesitters, whose loading onto the factor is not statistically significant (n = 118; 27.13%). Fencesitters are necessarily less homogeneous in their climate change views than Acceptors and Sceptics; otherwise, Fencesitters would have emerged as a second factor.
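
The logic of this segmentation step can be illustrated with a minimal Python sketch. It is not the analysis script used in the study. It correlates participants rather than statements, extracts the first principal component by power iteration, and labels each participant by their loading. Varimax rotation is omitted for brevity. The cut-off of 2.58/√(number of statements) is a common Q methodology convention for p < .01 and is an assumption here, as the threshold actually applied is not reported in this section. The function names and the toy nine-statement sorts are hypothetical.

```python
import math

def pearson(x, y):
    """Pearson correlation between two participants' Q sorts."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def first_component_loadings(sorts, iters=500):
    """Loadings of each participant on the first principal component.

    The correlation matrix is computed between people (rows of `sorts`),
    which is the 'reversed' factor analysis used in Q methodology.
    The sign of a component is arbitrary; starting from a vector of ones
    orients it towards the majority viewpoint in this toy example.
    """
    p = len(sorts)
    corr = [[pearson(sorts[i], sorts[j]) for j in range(p)] for i in range(p)]
    v = [1.0] * p
    for _ in range(iters):
        w = [sum(corr[i][j] * v[j] for j in range(p)) for i in range(p)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    eigenvalue = sum(
        v[i] * sum(corr[i][j] * v[j] for j in range(p)) for i in range(p)
    )
    return [math.sqrt(eigenvalue) * x for x in v]

def classify(loadings, n_statements, z=2.58):
    """Label participants by the sign and significance of their loading.

    Assumption: a loading is 'significant' if |loading| >= z / sqrt(n_statements).
    """
    cutoff = z / math.sqrt(n_statements)
    labels = []
    for loading in loadings:
        if loading >= cutoff:
            labels.append("Acceptor")
        elif loading <= -cutoff:
            labels.append("Sceptic")
        else:
            labels.append("Fencesitter")
    return labels

# Hypothetical toy data: five participants ranking nine statements.
base = [-4, -3, -2, -1, 0, 1, 2, 3, 4]
sorts = [
    base,                               # shares the majority view
    base,                               # shares the majority view
    [-4, -3, -2, -1, 1, 0, 2, 3, 4],    # near-identical to the majority view
    base[::-1],                         # mirror image of the majority view
    [1, -2, 3, -4, 0, 4, -3, 2, -1],    # uncorrelated with the majority view
]
loadings = first_component_loadings(sorts)
segments = classify(loadings, n_statements=len(base))
print(segments)
```

Under the assumed convention, the 30 statements used here would imply a cut-off of roughly 0.47, compared with 0.86 for the nine-statement toy example.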

To understand each segment’s perspective, we constructed a “representative” Q sort (Brown 1980; Watts and Stenner 2012). For each segment, the average ranking assigned to each statement by participants, weighted by participants’ factor loading, was calculated. The weighted-averages of statements were then mapped onto the rankings enforced by the structure of the Q sort, known as factor scores (Table 2). Factor scores were not calculated for Fencesitters, as the segment’s sorting style was necessarily heterogeneous. Next, we report the representative Q sorts for Acceptors and Sceptics further elaborated on with the participants’ text justification of their rankings (collected in phase 3).
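
The construction of a representative Q sort can be sketched as follows. This is a minimal illustration under stated assumptions, not the study's code: each statement's ranking is averaged across the participants in a segment, weighted by the magnitude of their factor loading, and the statements are then placed into the forced distribution in order of their weighted averages. The `slots` distribution, the function name, and the toy data are hypothetical; the distribution actually used is the one shown in Fig. 1.

```python
def representative_sort(sorts, weights, slots):
    """Map loading-weighted average rankings onto a forced distribution.

    sorts   -- one list of rankings per participant in the segment
    weights -- one non-negative weight per participant (loading magnitude)
    slots   -- the ranks available in the Q sort grid, sorted ascending
    Ties in the weighted averages are broken by statement order.
    """
    n_statements = len(sorts[0])
    total = sum(weights)
    weighted = [
        sum(w * s[i] for w, s in zip(weights, sorts)) / total
        for i in range(n_statements)
    ]
    # Statements ordered from lowest to highest weighted average.
    order = sorted(range(n_statements), key=lambda i: weighted[i])
    scores = [None] * n_statements
    for statement, rank in zip(order, slots):
        scores[statement] = rank
    return scores

# Hypothetical forced distribution for nine statements.
slots = [-2, -1, -1, 0, 0, 0, 1, 1, 2]

# Hypothetical segment of two participants with loadings 0.9 and 0.6.
segment_sorts = [
    [2, 1, 1, 0, 0, 0, -1, -1, -2],
    [1, 2, 0, 1, 0, -1, 0, -2, -1],
]
factor_scores = representative_sort(segment_sorts, [0.9, 0.6], slots)
print(factor_scores)
```

In this toy case the participant with the larger loading dominates, so the representative sort follows that participant's ordering wherever the two disagree.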

Acceptors believe anthropogenic climate change is occurring (statements: 3, 10, 11, 13, 28), as indicated by an increased frequency of extreme weather and hotter days (statements: 5, 10, 11). Acceptors reject the notion climate change is a hoax (statements: 2, 6). Acceptors claim climate change will cause widespread changes: physical changes in weather (statements: 9, 10, 11); biological changes to ecosystems, such as damage to the Great Barrier Reef (statement: 4); and changes to human systems, threatening agriculture (statement: 6), future generations (statement: 4), and to a lesser degree, the poor (statement: 21). According to Acceptors, these impacts are worse than scientists initially thought (statement: 8), though there are still opportunities to mitigate and adapt (statements: 12, 15, 25, 26). Although Acceptors believe action partly rests on collective society (statement: 27), they believe leaders must take charge (statements: 1, 25) as “there are too many weak and idiot politicians in parliament. we need to vote in people who will take action” otherwise “climate change will spiral out of control”.

Sceptics reject the concept of anthropogenic climate change (statements: 3, 10, 11, 13, 28). According to Sceptics, there is conclusive evidence human activities do not influence climate. Scientists who say otherwise are viewed by Sceptics to rely on “dodgy modelling” and “bullshit thought up by some brain dead idiots in university”. Thus, Sceptics claim climate scientists have overestimated the frequency and intensity of current extreme weather events (statements: 10, 11), and will be incorrect in their projections for the future (statements: 4, 7, 8). Consequently, Sceptics agree scientists changed the name of their area of study from “global warming” to “climate change”, as the world is not warming (statement: 5). As anthropogenic climate change “has nothing to do with science and reality”, Sceptics question the motives of institutions that endorse mitigative action (statements: 2, 5). For example, one Sceptic claimed “the United Nations is hiding behind climate change to acquire money”. Similarly, Sceptics argue against voting for leaders who will combat climate change (statement: 1) as such leaders are only concerned “about what they can get”. These leaders are “going to bankrupt Australia” by “chasing ghosts” as there is no way to “tame mother nature, money can’t”. Citizens who demand solutions for climate change are the usual “torch-and-pitchfork” crowd (statement: 12).

1.2.2 Predictors of segment membership

Segments differed in their psychological attributes (Table 3). To explore statistical differences, we constructed a multinomial regression model that predicted segment membership as a function of the z-scores of psychological characteristics. Due to the large number of related predictors, we used a ridge regression. A ridge regression penalises the estimates of highly correlated terms to achieve greater reliability (a bias-variance tradeoff). For each level of the penalty placed on highly correlated terms (corresponding to a shrinkage parameter λ), different coefficients are estimated. A cross-validation process (k-fold) was used to determine the ideal penalty (λ). Confidence intervals of the coefficient were calculated using a bootstrap procedure (Efron and Tibshirani 1994). One thousand samples were created by sampling participants (with replacement) from the study data. For each sample, coefficients were estimated using the aforementioned ridge regression and cross-validation processes. From the coefficient distributions, 95% confidence intervals were identified.
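
The combination of a ridge penalty, k-fold cross-validation, and bootstrapped confidence intervals can be illustrated with a deliberately simplified sketch. It swaps the multinomial model with many predictors for a single-predictor linear ridge regression, so that the whole procedure fits in a few lines of standard-library Python. The penalty grid, the fold assignment, the function names, and the toy data are all hypothetical, and nothing here reproduces the reported analysis.

```python
import math
import random

def ridge_slope(xs, ys, lam):
    """Ridge estimate of a single slope: Sxy / (Sxx + lambda)."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / (sxx + lam)

def cv_error(xs, ys, lam, k=5):
    """Mean squared prediction error over k held-out folds."""
    n = len(xs)
    error = 0.0
    for fold in range(k):
        train = [i for i in range(n) if i % k != fold]
        held_out = [i for i in range(n) if i % k == fold]
        tx = [xs[i] for i in train]
        ty = [ys[i] for i in train]
        mx, my = sum(tx) / len(tx), sum(ty) / len(ty)
        slope = ridge_slope(tx, ty, lam)
        error += sum((ys[i] - (my + slope * (xs[i] - mx))) ** 2 for i in held_out)
    return error / n

def best_lambda(xs, ys, grid, k=5):
    """Penalty in the grid with the lowest cross-validated error."""
    return min(grid, key=lambda lam: cv_error(xs, ys, lam, k))

def bootstrap_ci(xs, ys, grid, n_boot=1000, seed=0):
    """Percentile 95% interval; the penalty is re-tuned in every resample."""
    rng = random.Random(seed)
    n = len(xs)
    slopes = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        bx = [xs[i] for i in idx]
        by = [ys[i] for i in idx]
        slopes.append(ridge_slope(bx, by, best_lambda(bx, by, grid)))
    slopes.sort()
    return slopes[n_boot * 25 // 1000], slopes[n_boot * 975 // 1000 - 1]

# Hypothetical toy data: one standardised predictor and a noisy outcome.
xs = [(i - 19.5) / 10 for i in range(40)]
ys = [2 * x + 0.3 * math.sin(i) for i, x in enumerate(xs)]
grid = [0.1, 1.0, 10.0, 100.0]  # strictly positive penalties only

lower, upper = bootstrap_ci(xs, ys, grid)
print(round(lower, 2), round(upper, 2))
```

A predictor would be treated as reliable when its interval excludes zero, which mirrors how the intervals in Fig. 2 are read.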

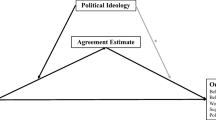

Regression coefficients are presented in Fig. 2. Acceptors (Sceptics) were characterised by lower (greater) epistemic and response scepticism, greater (lower) belief in anthropogenic climate change, greater (lower) worry about climate change, lower (greater) endorsement of environment-as-elastic worldviews, less (more) conservative political ideology, lower (greater) knowledge volume, and greater (lower) belief carbon-emitting human activities cause climate change. High belief in societal consequences of climate change reliably distinguished Acceptors only, whereas low belief of environmental harms climate change causes and greater self-perceived climate change knowledge reliably predicted Sceptics only. Fencesitters were distinguished by greater levels of conspiratorial ideation. Fencesitters and Acceptors were also distinguished by relatively higher and lower belief in the effectiveness of engineering policies, respectively. However, this finding may be a result of suppression via other predictors, as on average, Acceptors had numerically greater belief in the effectiveness of engineering policies than Fencesitters. Likewise, the relatively low coefficient of knowledge volume predicting Acceptor membership should also be cautiously interpreted, as on average, Acceptors had greater knowledge volume than Fencesitters. Personality, need for cognition, consideration of future consequences, system justification, and values were not reliable predictors of segment membership.

Fig. 2 Ridge regression coefficients for each predictor of segment membership. The coefficients (dot) and 95% confidence intervals (error bars) are presented for Acceptors (blue), Fencesitters (yellow), and Sceptics (purple). Predictors are ordered by the magnitude of coefficients. The grey background highlights predictors where confidence intervals contain zero for all segments.

2 Study 2

Study 1 provided evidence for three audience segments that differ in psychological characteristics that transcend climate change (conspiratorial ideation, environmental worldviews, and political ideology) and specific climate change beliefs and mental models that may be more easily changed. However, the association between climate change scepticism and ideological variables suggests some climate change beliefs may be resistant to revision because of motivated reasoning (Bayes and Druckman 2021). For example, the association between climate change scepticism and right-leaning ideology may be due to scepticism becoming a symbol of in-group membership for conservatives—a process known as identity-protective cognition (Kahan et al. 2013). If so, conservatives may be motivated to reject opposing beliefs as these would threaten the material and emotional benefits gained from in-group membership. Thus, segments may differ in their receptivity to climate science information.

To test this idea, in Study 2, we examined whether revision of climate change beliefs differed across segments. We used a belief-updating paradigm (Garrett and Sharot 2017). In this paradigm, in each trial, individuals generate numerical estimates of an event or process, and then are shown an estimate and required to generate another estimate. Although not commonly used in climate change communication research (though, see Sunstein et al. 2017), the belief-updating paradigm allows researchers to quantify belief-updating tendencies in a rigorous manner that generalises across beliefs and accounts for individual base rates (e.g. Garrett and Sharot 2017; Ma et al. 2016). In the current study, we assessed updating across three mental model domains: climate change causes, climate change consequences, and effective mitigation of climate change (Bostrom et al. 2012). The dependent measure of interest was the direction and degree of belief updating following receipt of scientific estimates.

Additionally, we explored two cognitive mechanisms which may account for a relationship between segment membership and belief revision. The first is trust in the source of incoming information. Acceptors may be more likely to trust scientific institutions than Sceptics due to observed differences in political ideology, environmental worldviews, and climate change scepticism (Cook and Lewandowsky 2016; Sunstein et al. 2017). The second mechanism is optimism bias, where individuals tend to revise their beliefs to a greater degree when receiving good news (e.g. initially overestimating an event perceived as bad) than when receiving bad news (e.g. initially overestimating an event perceived as good; Garrett and Sharot 2017; Ma et al. 2016). Sunstein et al. (2017) demonstrated that segments differ in their optimism bias—when revising future-warming estimates, Sceptics updated optimistically, whereas Acceptors updated pessimistically. Accordingly, we explored the possibility segment differences in belief updating can be explained by group differences in an optimism bias. To determine which events were good or bad news, we included a sentiment inventory for participants to indicate their feelings towards each climate change outcome.

Finally, Study 2 was an opportunity to replicate the three segment solution from Study 1. However, the length of the belief-updating paradigm meant it was not practical to include the psychological scales from Study 1.

2.1 Method

2.1.1 Participants

Qualtrics was used to recruit Australian adults (N = 413) using the same targeted and stratified sampling process focussed on age (M = 46.82, SD = 18.04) and gender (female = 47.94%). Along these characteristics, the sample was representative of the Australian population. We did not record the data of extremely fast responders, defined as those who completed the study in less than 664 s (a preregistered criterion based on pilot testing).

2.1.2 Materials and procedure

All materials were presented to participants on a computer screen via a web browser. After providing informed consent and demographic data, participants completed the Q sort task used in Study 1. Next, participants completed the trust inventory, belief-updating tasks (administered in a counterbalanced order, see Supplementary Materials), and sentiment inventory. The median completion time of the study was 25.02 min (interquartile range = 18.28 min).

Trust inventory

Participants were informed they would be shown information from two sources: the peer-reviewed climate science literature and Climate Action Tracker (an organisation that provides scientific analysis of government climate action). For each source, participants read a lay description and indicated their trust of the source on a 7-point Likert scale, ranging from “strongly distrust” (1) to “strongly trust” (7).

Belief-updating tasks

We tested belief updating across three domains with five belief-updating tasks: (1) belief in causes of climate change (three tasks); (2) belief in consequences of climate change (one task); and (3) belief in effectiveness of mitigative policies (one task). Each belief-updating task contained two stages. Firstly, participants provided estimates for climate change drivers or outcomes (see Supplementary Materials for more detail) by entering values from 0 to 100 into text boxes. Secondly, participants were shown their initial estimates alongside the scientific estimate. Participants then provided a new estimate. The presentation of belief-updating tasks was counterbalanced across participants (see Supplementary Materials).
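
To make the two-stage structure concrete, the sketch below shows one possible way to score a single trial. It is an assumption for illustration and not necessarily the dependent measure computed in this study: the shift from the initial to the revised estimate is expressed as a proportion of the gap between the initial estimate and the scientific estimate. The function name and the example values are hypothetical.

```python
def update_toward_evidence(initial, scientific, revised):
    """Proportion of the initial-to-scientific gap closed by the revision.

    1.0 means the revised estimate matches the scientific estimate,
    0.0 means no change, and negative values mean the participant
    moved away from the scientific estimate. Returns None when the
    initial estimate already equals the scientific estimate.
    """
    gap = scientific - initial
    if gap == 0:
        return None
    return (revised - initial) / gap

# A participant who first answers 40, sees a scientific estimate of 100,
# and revises to 70 has closed half of the gap.
print(update_toward_evidence(40, 100, 70))

# A participant who first answers 80, sees 50, and revises to 90
# has moved away from the scientific estimate.
print(update_toward_evidence(80, 50, 90))
```

Scaling by the gap is one way to make trials with different starting points comparable, since a 10-point revision means more when the initial estimate was only 20 points from the scientific value.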

The first three belief-updating tasks concerned causal beliefs. For the first task, participants estimated the percentage of human-driven and nature-driven causes of climate change between 1980 and 2011, separately. For the second task, participants estimated the percentage of climate change caused by each of six mechanisms (e.g. “carbon dioxide emissions” and “changes in solar activity”) between 1750 and 2011. For the third task, participants estimated the percentage of warming caused by greenhouse gas emissions from six human activities (e.g. “electricity use in residential buildings”). Before supplying their estimates, participants were informed greenhouse gas emissions drive most climate change.

Another belief-updating task concerned consequence beliefs. Participants estimated the degree to which nine climate events (e.g. “the number of hot days globally between 1901–2005”) occurred because of anthropogenic climate change.

The final belief-updating task concerned mitigation beliefs. Participants were given information about the Paris Agreement and the Emissions Reduction Fund (hereafter “ERF”), Australia’s central climate policy. Then, participants predicted the change in Australia’s carbon dioxide emissions by the year 2030 (compared to 2005 levels) under Australia’s current climate policies. Unlike other tasks, participants indicated the direction of change of emissions by using a drop-down menu (options: increase, decrease, no change) and the amount of change by entering a percentage (participants who indicated there would be no change had to enter “0”). Alongside both their initial and revised estimates, participants indicated their approval of the ERF on a 7-point Likert scale, ranging from “strongly disagree” (1) to “strongly agree” (7); their level of approval of Australia’s climate policies on the same 7-point Likert scale; and the likelihood Australia will meet the Paris Agreement (as a percentage, from 0 to 100).

Sentiment inventory

Participants indicated their feelings towards each climate change event presented in the belief-updating tasks on a 5-point Likert scale, ranging from “very negative” (1) to “very positive” (5). On the same scale, participants were asked “If Australia met its commitment to the Paris Agreement, how positive or negative would you feel about that?” Participants were not asked about their sentiment towards climate change causes, as responses would be difficult to interpret.

2.2 Results and discussion

2.2.1 Segmentation solution

Following the analysis steps of Study 1, we replicated the Acceptors, Fencesitters, and Sceptics segments. For Acceptors and Sceptics, the factor scores of each statement were near-identical to those obtained in Study 1 (see Supplementary Material for factor scores). Of the sample, 256 participants were Acceptors (61.99%), 114 were Fencesitters (27.60%), and 43 were Sceptics (10.41%).

2.2.2 Belief updating

The dependent variable of interest is update—the degree to which a participant revised their estimate following exposure to scientific information, as a proportion of their initial error. An update score was calculated for each participant and for each belief. The magnitude of an update score is the difference between the initial and revised estimate, divided by the magnitude of difference between the initial and scientific estimate. The sign of the update score conveys whether the update was towards (positive) or away (negative) from the scientific estimate. Thus, an update score of one indicates a revision to match the scientific estimate. Using this formula, update scores could not be defined when the initial estimate equalled the scientific estimate (293 of 9888 updates; 2.96%) and such cases were therefore excluded from further analysis.
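As an illustration of the verbal definition above, the update score can be sketched in a few lines of Python. This is a minimal sketch rather than the analysis code used in the study; the function name `update_score` and its argument names are hypothetical.

```python
from typing import Optional


def update_score(initial: float, revised: float, scientific: float) -> Optional[float]:
    """Return the signed update as a proportion of the initial error.

    Positive values indicate a revision towards the scientific estimate,
    negative values a revision away from it. Returns None when the initial
    estimate equals the scientific estimate, where the score is undefined.
    """
    initial_error = scientific - initial
    if initial_error == 0:
        return None  # undefined case, excluded from analysis in the text
    # Dividing the signed revision by the signed initial error gives a
    # magnitude of |revised - initial| / |scientific - initial| and a sign
    # that is positive only when the revision moves towards the science.
    return (revised - initial) / initial_error
```

For instance, a participant who first estimates 40, sees a scientific estimate of 80, and revises to 60 receives a score of 0.5, whereas a revision to 30 yields a score of −0.25.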

Linear mixed-effects modelling was used to determine whether update varied as a function of segment, trust in source information, or an optimism bias. Each domain of belief (cause, consequence, and mitigation) was modelled separately. The general strategy involved constructing several models, each with a different combination of predictors (known as fixed effects). We then compared the consistency between data and each model, as captured by the Akaike Information Criterion (AIC), to determine the best fitting models (detailed in the Supplementary Materials). For models of cause and consequence beliefs, intercepts were assumed to randomly vary across participants, belief items, and task administration orders (known as random effects). For mitigation beliefs, intercepts were assumed to randomly vary across only task administration orders; as only a single belief was measured, within-unit and between-unit variability cannot be partitioned. All models were fit using maximum likelihood estimation. Predictor coefficients are reported with a 95% confidence interval (CI), estimated using the Wald method. One participant was excluded from analysis, as their data prevented model convergence due to a belief update score several orders of magnitude higher than those of other participants.
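The two generic quantities used in this strategy, the AIC and the Wald interval, can be sketched as follows. This is a minimal illustration of the standard formulas, not the model-fitting code of the study; the helper names and the log-likelihood values and parameter counts in `candidates` are hypothetical and chosen only to show the comparison.

```python
def aic(log_likelihood: float, n_parameters: int) -> float:
    """Akaike Information Criterion; lower values indicate better fit."""
    return 2 * n_parameters - 2 * log_likelihood


def wald_ci(estimate: float, standard_error: float, z: float = 1.96):
    """Approximate 95% Wald interval: estimate +/- z * standard error."""
    half_width = z * standard_error
    return (estimate - half_width, estimate + half_width)


# Hypothetical (log-likelihood, parameter count) pairs, for illustration only;
# these are not values from the study.
candidates = {
    "no main effects": (-5210.0, 5),
    "segment": (-5180.0, 7),
    "trust": (-5205.0, 6),
}
scores = {name: aic(ll, k) for name, (ll, k) in candidates.items()}
best = min(scores, key=scores.get)  # model with the lowest AIC
```

Because the AIC penalises each additional parameter, a model with more predictors is preferred only when its gain in log-likelihood outweighs that penalty.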

Updating varied as a function of segment (Fig. 3). Models with a main effect of segment (and no other predictors) had better fit than models with no main effects. For cause beliefs, Acceptors updated more than Fencesitters (difference = 0.25, CI = [0.12, 0.38]), and Fencesitters updated more than Sceptics (difference = 0.39, CI = [0.19, 0.59]). For consequence beliefs, Acceptors again updated more than Fencesitters (difference = 0.25, CI = [0.15, 0.34]), who again updated more than Sceptics (difference = 0.24, CI = [0.09, 0.40]). For mitigation beliefs, Fencesitters updated the most, although they did not statistically differ from Acceptors (difference = 0.07, CI = [−0.20, 0.34]). However, Acceptors updated more than Sceptics (difference = 0.53, CI = [0.13, 0.92]). Thus, the patterns of segment updating for cause and consequence beliefs are not reflected in mitigation beliefs.

Segments may have differed in belief updating solely due to differences in trust in the source of information. However, this is not well supported by the evidence. For all domains, models with only a main effect of segment had considerably better fit than models with only a main effect of trust. Thus, trust alone cannot account for the effect of segment on belief update.

Generally, the best fitting models for update of cause and consequence beliefs were those including effects for both trust and segment membership. Additionally, there was substantial evidence for interactions. For cause beliefs, greater trust was associated with greater update for Acceptors (0.09 more update per point of trust, CI = [0.01, 0.16]) and Sceptics (0.18 more update per point of trust, CI = [0.08, 0.28]), but not for Fencesitters (0.02 less update per point of trust, CI = [−0.06, 0.10]). For consequence beliefs, greater trust was associated with greater update for Acceptors (0.10 more update per point of trust, CI = [0.04, 0.16]), but not for Sceptics (0.04 more update per point of trust, CI = [−0.04, 0.12]) or Fencesitters (0.04 more update per point of trust, CI = [−0.02, 0.10]). For mitigation beliefs, there was little evidence for an interaction.

Segment differences in updating may be due to differences in optimistic revisions. To explore this possibility, we determined which scientific messages were good news and bad news on the basis of participants’ emotional appraisals of events and their first estimates. For example, if a participant indicated an event was negative and was exposed to a scientific estimate greater than their first estimate, the event is bad news; conversely, if this scientific estimate was instead less than a participant’s first estimate, the event is good news. Combinations of effects for segment and news type (coded as good or bad) were entered into mixed-effects models. We did not model participant update of neutral belief/news, which included 1420 of 3708 consequence belief updates (38.30%) and 90 of 412 mitigation belief updates (21.84%). Of note, we did not model optimistic updating for cause beliefs as we did not collect sentiment data for causes.
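The classification rule described above can be sketched as a small Python function. This is a hedged illustration, not the study's code: the function name `classify_news` is hypothetical, and two details are assumptions rather than statements from the text, namely that the scale midpoint (3) marks a neutral event and that positively appraised events are classified by mirroring the rule given for negative events.

```python
def classify_news(sentiment: int, initial: float, scientific: float,
                  neutral_point: int = 3) -> str:
    """Classify a scientific estimate as "good", "bad", or "neutral" news.

    sentiment: appraisal of the event on the 5-point scale, from
        1 (very negative) to 5 (very positive).
    initial: the participant's first estimate.
    scientific: the scientific estimate shown to the participant.
    neutral_point: assumed midpoint of the sentiment scale.
    """
    # Assumption: midpoint sentiment, or no discrepancy between the two
    # estimates, carries no good or bad news.
    if sentiment == neutral_point or scientific == initial:
        return "neutral"
    event_is_negative = sentiment < neutral_point
    estimate_is_higher = scientific > initial
    # More of a negative event, or less of a positive event, is bad news.
    if event_is_negative == estimate_is_higher:
        return "bad"
    return "good"
```

Under these assumptions, a participant who appraises an event as negative (for example, a rating of 1) and learns that the scientific estimate exceeds their own receives bad news, in line with the example in the text.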

For consequence and mitigation beliefs, the best fitting models were those containing main effects for segment and news type, but no interactions. There was a tendency towards pessimistic updating for all segments, such that updating was larger for bad news than good news (Fig. 4). As the best fitting models contained an effect for segment, segment differences in update could not be solely accounted for by differences in an optimism or pessimism bias.

2.2.3 Change in policy support

Additionally, we identified the predictors of changes in policy support. To do so, we determined the fit of linear mixed-effects models with combinations of main effects and interactions of segment; change in perceived mitigation effectiveness (positively signed when policy is perceived to be more effective); and change in perceived likelihood of Australia satisfying the Paris Agreement (positively signed change when the Paris Agreement is perceived to be more likely to be satisfied).

For both support of the ERF and Australia’s policy, two models had considerably better fit. The first model contained a main effect of segment, a main effect of perceived likelihood Australia will satisfy its Paris Agreement commitment, and an interaction between these two variables. The second model contained the same effects as the first, with an additional main effect of change in perceived mitigation effectiveness. For these models, the coefficient for the interaction between segment and perceived likelihood of meeting the Paris Agreement was only reliably signed (positive) for Acceptors (that is, the confidence interval did not intersect zero). Additionally, participants who updated towards greater perceived effectiveness of the ERF increased their support for the ERF (0.00378 change on the scale per 1% increment of carbon dioxide reduction, CI = [0.00065, 0.00692]), and their support for Australia’s mitigation policies, although not reliably (0.00185 change on the scale per 1% increment of carbon dioxide reduction, CI = [−0.00172, 0.00542]). Overall, these models indicate participants of all segments who reduce their belief in a policy’s effectiveness also reduce their support for that specific policy, but not general policy action. Additionally, Acceptors lowered their support for specific policy to the degree that it harmed Australia’s likelihood of meeting the Paris Agreement. However, detrimental impacts on the Paris Agreement were unrelated to changes in policy support of Fencesitters and Sceptics.

3 General discussion

We used a novel bottom-up approach to segment climate change views. Across two studies, we find consistent evidence for three distinct audience segments: Acceptors, Fencesitters, and Sceptics. In Study 1, we combined our bottom-up approach to segmentation based on the Q sort with a top-down approach to segment interpretation by incorporating auxiliary measures of potentially relevant psychological characteristics. Importantly, these auxiliary measures were used to help interpret the segments once they had been derived—they did not contribute to the segmentation process itself. This combination of approaches revealed the three segments differ in their mental models of climate change and other psychological characteristics. Study 2 demonstrated segments differ in their belief-updating tendencies when exposed to scientific information. Overall, our studies indicate the Australian public are divisible into three audience segments with unique psychological characteristics and belief-updating tendencies. Next, we summarise the characteristic differences between segments, how these can inform communication strategies, and how our segmentation solution differs from previous research.

3.1 Characteristic differences between segments

Segment differences can be understood by referring to their sorting behaviour in the Q sort task, responses on the psychological characteristics measures, and updating tendencies in the belief-updating paradigm. From the Q sorts, it is apparent Acceptors strongly believe in the urgency and reality of climate change. They recognise climate change will have wide-ranging impacts on environment and society, and these impacts may be worse than climate scientists expect. They reject conspiratorial notions of climate change as a hoax, and they want to see political leadership and climate action. By contrast, Sceptics have an alternative perception of reality—one where the science suggests human actions are not influencing climate. Instead, climate change is a hoax manufactured to serve a hidden agenda. Accordingly, climate scientists are thought to use questionable research practices to create the illusion climate change is occurring. They think climate scientists’ forecasts of global warming have been proved wrong and that, because of this, they deliberately changed the name of their field of study from “global warming” to “climate change”. We cannot say anything specific about Fencesitters other than that their sorting responses are more heterogeneous than the other two segments.

Turning to psychological characteristics measures, Acceptors strongly believe climate change is occurring and that carbon-emitting human activities cause climatic changes. They are more worried about the issue than other segments and strongly support climate action. This segment is politically liberal with an environment-as-ductile worldview, meaning they think the natural environment has a limited capacity to recover from damage. By contrast, Sceptics are less likely to believe climate change is occurring and that carbon-emitting human activities cause climatic changes—yet, Sceptics have the greatest self-confidence in their knowledge about climate change. They are therefore sceptical of the need for climate action. This segment is politically conservative with an environment-as-elastic worldview, meaning they think the environment easily recovers from damage. Surprisingly, Sceptics did not show the highest levels of dispositional conspiratorial ideation of all segments, despite their strong endorsement of climate-conspiracy related items in the Q sort. Instead, Fencesitters were the highest in dispositional conspiratorial ideation.

Our finding that dispositional conspiratorial ideation was elevated in Fencesitters, but not Sceptics, seemingly contradicts an extensive literature showing conspiratorial ideation predicts climate change scepticism (Hornsey et al. 2018; Kaiser and Puschmann 2017; Lewandowsky et al. 2013). However, Lewandowsky (2021) recently suggested individuals may deploy conspiratorial explanations for two different reasons: (1) they have a general disposition towards engaging in conspiratorial ideation; and/or (2) they seek to guard against worldview-incongruent information. In the latter case, conspiracy theories may not reflect people’s real attitudes to climate change but may instead be a pragmatic tool to indicate a person’s political stance on the issue. Consistent with this, Fencesitters showed greater general disposition towards conspiracism in the absence of a specific tendency towards climate change conspiracy theorising, and have moderate political ideology and environmental worldviews. By comparison, Sceptics do not show an increased general disposition towards conspiracism, but they do show a specific tendency towards climate change conspiracy theorising, accompanied by politically conservative ideology and environment-as-elastic worldviews. Thus, unlike Fencesitters, Sceptics may be ideologically motivated to believe conspiratorial accounts of climate change.

Finally, segments differed in the degree to which they revised their beliefs towards scientific information. Specifically, for climate change causes and consequences, Acceptors updated their beliefs more than Fencesitters, who in turn updated their beliefs more than Sceptics. For mitigation, Acceptors and Fencesitters revised their beliefs to a comparable degree, and more so than Sceptics. The segment differences in belief updating could not be fully accounted for by trust in information source or an optimism bias. In general, Acceptors and Fencesitters showed high degrees of willingness to revise their beliefs, whereas Sceptics were highly resistant to revising their beliefs. The willingness of Fencesitters but not Sceptics to update their beliefs in response to scientific information confers further support for the notion the two segments may deploy conspiracy theories for different reasons.

We found all segments updated pessimistically, meaning their belief update in response to scientific information was greater for bad news than good news. We do not find evidence for a general optimism bias in Sceptics, which was observed by Sunstein et al. (2017) in their study of the revision of climate change consequence beliefs. This discrepancy may be due to differences in the classification of bad news. Sunstein et al. (2017) classified bad news as the event in which a participant was given an estimate for climate change consequences worse than their initial prediction. In contrast, we measured participant sentiment for each consequence, and only when an event was perceived as negative was an under-estimate labelled as bad news. Thus, our use of sentiment measurement allowed for a more personalised method for categorising news as good or bad. However, other factors could have accounted for differences with previous research, such as the operationalisation of Acceptors or the specific beliefs measured in studies.

3.2 Communicating with the different segments

To bolster public support for mitigative policies, our findings suggest communicators should focus on Fencesitters. Acceptors already trust climate science and support strong leadership to address climate change, whereas Sceptics are few in number and politically motivated to oppose mitigative policy, and are thereby resistant to belief updating. In contrast, Fencesitters show potential for belief change, as they update their beliefs in response to scientific findings and are not characterised by extreme environmental worldviews or political ideology. However, just as Fencesitters could be tipped towards greater climate change acceptance by exposure to scientific information, they could be tipped towards scepticism by exposure to disinformation.

To protect Fencesitters from climate change disinformation, communicators could preemptively use inoculation techniques to build psychological resistance to disinformation before it is encountered (McGuire and Papageorgis 1961). Inoculation involves warning individuals they may be exposed to disinformation and explaining to them the deceptive strategies and rhetorical techniques used by those who seek to mislead (van der Linden et al. 2017). For example, Cook et al. (2017) found individuals could be successfully inoculated against climate change disinformation by alerting them to the use of “fake experts” by the fossil fuel industry. Alternatively, if disinformation has already informed belief, communicators may use various best-practice debunking strategies to correct the disinformation (Lewandowsky et al. 2020; Lewandowsky et al. 2017). For example, communicators could provide clear explanations for the established knowledge that undermines the misinformation alongside an explanation of what is true instead (Lewandowsky et al. 2020; Paynter et al. 2019; Ecker et al. 2020).

Although we emphasise Fencesitters, public support for mitigation policy can be bolstered within Acceptors and potentially Sceptics. Despite worrying about climate change and possessing knowledge of its causes, many Acceptors fail to distinguish effective and ineffective policies (Kempton et al. 1996; Read et al. 1994; Reynolds et al. 2010). To reduce support for ineffective policies, communicators could encourage Acceptors to apply their causal knowledge of greenhouse gases to the policy domain. Alternatively, communicators could directly highlight the ineffectiveness of a policy and the adverse impacts on international agreements. Lastly, messages from climate scientists could be persuasive, as climate scientists are trusted by Acceptors.

Of all segments, Sceptics were most resistant to belief revision when contradicted by science. Thus, communicators may need to deploy unique strategies to foster more positive attitudes towards climate science and policy in this segment. One approach is to leverage people’s motivations to maintain cognitive consistency in attitudes. For example, Gehlbach et al. (2019) found conservatives asked to rate the generally accepted contributions of science to society, such as discovering germs cause disease, had more positive attitudes towards climate science than conservatives asked solely about their climate science attitudes. Alternatively, communicators may avoid climate science entirely by appealing to the benefits of mitigation policy to improve policy endorsement, such as communicating the moral or economic co-benefits (Bain et al. 2015). Notwithstanding, communicators should be wary of Sceptics’ aversion to commonly discussed climate change mitigation policies, which may conflict with more conservative political ideology (Campbell and Kay 2014).

3.3 Comparison to previous segmentation research

We identified segments of Acceptors, Fencesitters, and Sceptics, consolidated on a continuum of climate change scepticism, worry about climate change, and political ideology. Despite using a bottom-up approach, our segmentation solution of segments differing upon a continuum of anthropogenic climate change belief is broadly consistent with the findings of top-down approaches, including the Six Americas segmentation (e.g. Hine et al. 2016; Maibach et al. 2011; Morrison et al. 2013). However, the number of segments on this continuum differs, with top-down approaches identifying between three (Hine et al. 2016) and five or six (Maibach et al. 2011; Morrison et al. 2013) segments, compared to the three segments identified using our bottom-up approach. Moreover, the proportions of individuals in each segment do not neatly reflect the proportions of individuals in each Six Americas segment identified in Australians (Neumann et al. 2022). For example, the proportion of Acceptors is larger than Alarmed and Concerned segments, and the proportion of Sceptics is larger than the Dismissive segment. However, the overall number of segments may be largely subjective and arbitrary; what critically matters is that our bottom-up approach validates previous top-down segmentation work, which suggests that segments simply reflect groupings along a continuum of climate change concern.

Although our results largely replicate existing findings, they extend the literature by identifying the conspiratorial disposition of Fencesitters and the typical mental models held by each segment. Furthermore, we identified the belief-updating tendencies of segments, demonstrating that in some contexts, Fencesitters may be more receptive to climate change science than Acceptors.

3.4 Potential limitations

Before concluding, we consider some potential limitations of our studies. First, the samples used in our studies are not truly representative of all Australians, as we sampled Australians who participated in panel services, and matched the sample to Australian demographics only on gender and age. This limits the external validity of the study and it therefore remains to be seen whether our results would generalise to the Australian population at large. Second, short-form scales were used to measure some of the psychological constructs. In consequence, some of these scales (e.g. those measuring the big five personality traits) were associated with low internal consistency reliability (since the internal consistency reliability of a scale depends both upon the consistency amongst its items and the number of items). The low reliability of these scales may have obscured true differences between segments on the psychological constructs they measure.

3.5 Conclusion

The predominant approach to segmentation of climate change audiences has been top-down, which privileges researcher preconceptions over audience conceptions of climate change. In contrast, we used a bottom-up approach to ensure segmentation reflects lay views on climate change, as defined by the public. However, we did not disregard theory: we complemented our segmentation by examining the psychological characteristics of segments. We found the Australian public is composed of three segments (Acceptors, Fencesitters, and Sceptics) with unique psychological characteristics and belief-revision tendencies. Communication can be enhanced, our results suggest, by conceptualising the public as relatively homogeneous segments, rather than a heterogeneous whole. Yet, many communicators rely on a “one-size-fits-all” approach. For these communicators, our research outlines a comprehensive profile of segments along with recommendations for communicating with each. We suggest communicators should target Fencesitters who hold moderate views and are receptive to belief revision. Care must nevertheless be taken since, although Fencesitters are receptive to scientific information, they are also potentially vulnerable to misinformation and conspiratorial thinking.

Data availability

Data is available at https://github.com/AndreottaM/audience-segmentation.

Materials availability

Materials are available at https://github.com/AndreottaM/audience-segmentation.

Code availability

Code is available at https://github.com/AndreottaM/audience-segmentation.

References

Andreotta M, Nugroho R, Hurlstone MJ, Boschetti F, Farrell S, Walker I, Paris C (2019) Analyzing social media data: a mixed-methods framework combining computational and qualitative text analysis. Behav Res Methods 51(4):1766–1781. https://doi.org/10.3758/s13428-019-01202-8

Bain PG, Milfont TL, Kashima Y, Bilewicz M, Doron G, Garðarsdóttir RB, Gouveia VV, Guan Y, Johansson L-O, Pasquali C, Corral-Verdugo V, Aragones JI, Utsugi A, Demarque C, Otto S, Park J, Soland M, Steg L, González R, Lebedeva N, Madsen OJ, Wagner C, Akotia CS, Kurz T, Saiz JL, Schultz PW, Einarsdóttir G, Saviolidis NM (2015) Co-benefits of addressing climate change can motivate action around the world. Nat Clim Change 6(2):154–157. https://doi.org/10.1038/nclimate2814

Bayes R, Druckman JN (2021) Motivated reasoning and climate change. Curr Opin Behav Sci 42:27–35. https://doi.org/10.1016/j.cobeha.2021.02.009

Bostrom A, O’Connor RE, Böhm G, Hanss D, Bodi O, Ekström F, Halder P, Jeschke S, Mack B, Qu M, Rosentrater L, Sandve A, Sælensminde I (2012) Causal thinking and support for climate change policies: international survey findings. Glob Environ Change 22(1):210–222. https://doi.org/10.1016/j.gloenvcha.2011.09.012

Brick C, Lewis GJ (2014) Unearthing the “Green” personality: core traits predict environmentally friendly behavior. Environ Behav 48(5):635–658. https://doi.org/10.1177/0013916514554695

Brown SR (1980) Political subjectivity: applications of Q methodology in political science. Yale University Press, New Haven and London

Cacioppo JT, Petty RE (1982) The Need for Cognition. J Pers Soc Psychol 42(1):116–131

Campbell TH, Kay AC (2014) Solution aversion: on the relation between ideology and motivated disbelief. J Pers Soc Psychol 107(5):809–824. https://doi.org/10.1037/a0037963

Capstick SB, Pidgeon NF (2014) What is climate change scepticism? Examination of the concept using a mixed methods study of the UK public. Glob Environ Change 24:389–401. https://doi.org/10.1016/j.gloenvcha.2013.08.012

Cook J, Lewandowsky S (2016) Rational irrationality: modeling climate change belief polarization using Bayesian networks. Top Cogn Sci 8(1):160–179. https://doi.org/10.1111/tops.12186

Cook J, Lewandowsky S, Ecker UKH (2017) Neutralizing misinformation through inoculation: exposing misleading argumentation techniques reduces their influence. PLOS ONE 12(5):1–21. https://doi.org/10.1371/journal.pone.0175799

Corner A, Markowitz E, Pidgeon N (2014) Public engagement with climate change: the role of human values. Wiley Interdiscip Rev Clim Change 5(3):411–422. https://doi.org/10.1002/wcc.269

Corner A, Randall A (2011) Selling climate change? The limitations of social marketing as a strategy for climate change public engagement. Glob Environ Change 21(3):1005–1014. https://doi.org/10.1016/j.gloenvcha.2011.05.002

Detenber BH, Rosenthal S, Youqing L, Ho SS (2016) Audience segmentation for campaign design: addressing climate change in Singapore. Int J Commun 10:4736–4758

Douglas M, Wildavsky A (1983) Risk and culture: an essay on the selection of technological and environmental dangers. University of California Press, Berkeley, Los Angeles, and London

Drews S, van den Bergh JC (2016) What explains public support for climate policies? A review of empirical and experimental studies. Clim Policy 16(7):855–876. https://doi.org/10.1080/14693062.2015.1058240

Ecker UKH, O’Reilly Z, Reid JS, Chang EP (2020) The effectiveness of short-format refutational fact-checks. Br J Psychol 111(1):36–54. https://doi.org/10.1111/bjop.12383

Efron B, Tibshirani R (1994) Introduction to the Bootstrap. Chapman & Hall, New York

Enzler HB (2015) Consideration of future consequences as a predictor of environmentally responsible behavior: evidence from a general population study. Environ Behav 47(6):618–643. https://doi.org/10.1177/0013916513512204

Feygina I, Jost JT, Goldsmith RE (2010) System justification, the denial of global warming, and the possibility of “system-sanctioned change”. Pers Soc Psychol Bull 36(3):326–338. https://doi.org/10.1177/0146167209351435

Fleming W, Hayes AL, Crosman KM, Bostrom A (2021) Indiscriminate, irrelevant, and sometimes wrong: causal misconceptions about climate change. Risk Anal 41(1):157–178. https://doi.org/10.1111/risa.13587

Garrett N, Sharot T (2017) Optimistic update bias holds firm: three tests of robustness following Shah et al. Conscious Cogn 50:12–22. https://doi.org/10.1016/j.concog.2016.10.013

Gehlbach H, Robinson CD, Vriesema CC (2019) Leveraging cognitive consistency to nudge conservative climate change beliefs. J Environ Psychol 61:134–137. https://doi.org/10.1016/j.jenvp.2018.12.004

Granger MM, Fischhoff B, Bostrom A, Atman CJ (2002) Risk communication: a mental models approach. Cambridge University Press, Cambridge

Hart PS, Nisbet EC (2012) Boomerang effects in science communication: how motivated reasoning and identity cues amplify opinion polarization about climate mitigation policies. Commun Res 39(6):701–723. https://doi.org/10.1177/0093650211416646

Hine DW, Phillips WJ, Cooksey R, Reser JP, Nunn P, Marks ADG, Loi NM, Watt SE (2016) Preaching to different choirs: how to motivate dismissive, uncommitted, and alarmed audiences to adapt to climate change? Glob Environ Change 36:1–11. https://doi.org/10.1016/j.gloenvcha.2015.11.002

Hine DW, Reser JP, Phillips WJ, Cooksey R, Marks ADG, Nunn P, Watt SE, Bradley GL, Glendon AI (2013) Identifying climate change interpretive communities in a large Australian sample. J Environ Psychol 36:229–239. https://doi.org/10.1016/j.jenvp.2013.08.006

Hine DW, Reser JP, Morrison M, Phillips WJ, Nunn P, Cooksey R (2014) Audience segmentation and climate change communication: conceptual and methodological considerations. Wiley Interdiscip Rev Clim Change 5(4):441–459. https://doi.org/10.1002/wcc.279

Hobson K, Niemeyer S (2012) “What Sceptics Believe”: the effects of information and deliberation on climate change scepticism. Public Underst Sci 22(4):1–17. https://doi.org/10.1177/0963662511430459

Hornsey MJ, Harris EA, Fielding KS (2018) Relationships among conspiratorial beliefs, conservatism and climate scepticism across nations. Nat Clim Change 8(7):614–620. https://doi.org/10.1038/s41558-018-0157-2

Jones NA, Ross H, Lynam T, Perez P, Leitch A (2011) Mental models: an interdisciplinary synthesis of theory and methods. Ecol Soc 16(1). https://doi.org/10.5751/ES-03802-160146

Kahan DM (2012) Cultural cognition as a conception of the cultural theory of risk. In: Roeser S, Hillerbrand R, Sandin P, Peterson M (eds) Handbook of Risk Theory: Epistemology, Decision Theory, Ethics, and Social Implications of Risk. Springer Netherlands, Dordrecht, pp 725–759. https://doi.org/10.1007/978-94-007-1433-5_28

Kahan DM, Peters E, Dawson EC, Slovic P (2013) Motivated numeracy and enlightened self-government. Behav Public Policy 1(1):54–86. https://doi.org/10.1017/bpp.2016.2

Kaiser J, Puschmann C (2017) Alliance of antagonism: counterpublics and polarization in online climate change communication. Commun Public 2(4):1–17. https://doi.org/10.1177/2057047317732350

Kay AC, Jost JT (2003) Complementary justice: effects of “Poor But Happy” and “Poor but Honest” stereotype exemplars on system justification and implicit activation of the justice motive. J Pers Soc Psychol 85(5):823–837. https://doi.org/10.1037/0022-3514.85.5.823

Kempton W, Boster JS, Hartley JA (1996) Environmental values in American culture. MIT Press, Woburn

Leiserowitz A (2006) Climate change risk perception and policy preferences: the role of affect, imagery, and values. Clim Change 77:45–72. https://doi.org/10.1007/s10584-006-9059-9

Leiserowitz A, Roser-Renouf C, Marlon J, Maibach E (2021) Global Warming’s Six Americas: a review and recommendations for climate change communication. Curr Opin Behav Sci 42:97–103. https://doi.org/10.1016/j.cobeha.2021.04.007

Leiserowitz A, Thaker J, Feinberg G, Cooper DK (2013) Global Warming’s Six Indias (tech. rep.). Yale University, New Haven. https://climatecommunication.yale.edu/publications/global-warmings-six-indias/

Lewandowsky S (2021) Conspiracist cognition: chaos, convenience, and cause for concern. J Cult Res 25(1):12–35. https://doi.org/10.1080/14797585.2021.1886423

Lewandowsky S, Cook J, Ecker UKH, Albarracín D, Amazeen MA, Kendeou P, Lombardi D, Newman EJ, Pennycook G, Porter E, Rand DG, Rapp DN, Reifler J, Roozenbeek J, Schmid P, Seifert CM, Sinatra GM, Swire-Thompson B, van der Linden S, Vraga EK, Wood TJ, Zaragoza MS (2020) The debunking handbook 2020 (tech. rep.). https://doi.org/10.17910/b7.1182

Lewandowsky S, Ecker UK, Cook J (2017) Beyond misinformation: understanding and coping with the “Post-Truth” era. J Appl Res Mem Cogn 6(4):353–369. https://doi.org/10.1016/j.jarmac.2017.07.008

Lewandowsky S, Gignac GE, Oberauer K (2013) The role of conspiracist ideation and worldviews in predicting rejection of science. PLOS ONE 8(10). https://doi.org/10.1371/journal.pone.0075637

Lewandowsky S, Oberauer K, Gignac GE (2013) NASA faked the moon landing—therefore, (climate) science is a hoax: an anatomy of the motivated rejection of science. Psychol Sci 24(5):622–633. https://doi.org/10.1177/0956797612457686

Lindeman M, Verkasalo M (2005) Measuring values with the short Schwartz’s value survey. J Pers Assess 85(2):170–178. https://doi.org/10.1207/s15327752jpa8502_09

Lins de Holanda Coelho G, Hanel PH, Wolf LJ (2018) The very efficient assessment of need for cognition: developing a six-item version. Assessment 27(8):1870–1885. https://doi.org/10.1177/1073191118793208

Ma Y, Li S, Wang C, Liu Y, Li W, Yan X, Chen Q, Han S (2016) Distinct oxytocin effects on belief updating in response to desirable and undesirable feedback. PNAS 113(33):9256–9261. https://doi.org/10.1073/pnas.1604285113

Maibach E, Leiserowitz A, Roser-Renouf C, Mertz CK (2011) Identifying like-minded audiences for global warming public engagement campaigns: an audience segmentation analysis and tool development. PLOS ONE 6(3). https://doi.org/10.1371/journal.pone.0017571

Malka A, Krosnick JA, Langer G (2009) The association of knowledge with concern about global warming: trusted information sources shape public thinking. Risk Anal 29(5):633–647. https://doi.org/10.1111/j.1539-6924.2009.01220.x

Markus A (2021) Mapping social cohesion (tech. rep.). Scanlon Foundation Research Institute, Melbourne. https://scanloninstitute.org.au/research/mapping-social-cohesion

McGuire WJ, Papageorgis D (1961) The relative efficacy of various types of prior belief-defense in producing immunity against persuasion. J Abnorm Soc Psychol 62(2):327–337

McKeown B, Thomas DB (2013) Q methodology, 2nd edn. SAGE Publications Ltd., Beverly Hills

Metag J, Füchslin T, Schäfer MS (2017) Global Warming’s Five Germanys: a typology of Germans’ views on climate change and patterns of media use and information. Public Underst Sci 26(4):434–451. https://doi.org/10.1177/0963662515592558

Morrison M, Duncan R, Sherley C, Parton K (2013) A comparison between attitudes to climate change in Australia and the United States. Australas J Environ Manag 20(2):87–100. https://doi.org/10.1080/14486563.2012.762946

Morrison M, Parton K, Hine DW (2018) Increasing belief but issue fatigue: changes in Australian household climate change segments between 2011 and 2016. PLOS ONE 13(6):1–18. https://doi.org/10.1371/journal.pone.0197988

Neumann C, Stanley SK, Leviston Z, Walker I (2022) The Six Australias: concern about climate change (and global warming) is rising. Environ Commun. https://doi.org/10.1080/17524032.2022.2048407

Noar SM, Benac CN, Harris MS (2007) Does tailoring matter? Meta-analytic review of tailored print health behavior change interventions. Psychol Bull 133(4):673–693. https://doi.org/10.1037/0033-2909.133.4.673

Paynter J, Luskin-Saxby S, Keen D, Fordyce K, Frost G, Imms C, Miller S, Trembath D, Tucker M, Ecker U (2019) Evaluation of a template for countering misinformation—real-world autism treatment myth debunking. PLOS ONE 14(1):1–13. https://doi.org/10.1371/journal.pone.0210746

Pew Research Center (2022) Public’s Top Priority for 2022: Strengthening the Nation’s Economy (tech. rep.). Pew Research Center, Washington, DC. https://www.pewresearch.org/politics/2022/02/16/publics-top-priority-for-2022-strengthening-the-nations-economy

Price JC, Walker IA, Boschetti F (2014) Measuring cultural values and beliefs about environment to identify their role in climate change responses. J Environ Psychol 37:8–20. https://doi.org/10.1016/j.jenvp.2013.10.001

Rammstedt B, John OP (2007) Measuring personality in one minute or less: a 10-item short version of the Big Five Inventory in English and German. J Res Personal 41(1):203–212. https://doi.org/10.1016/j.jrp.2006.02.001

Read D, Bostrom A, Morgan MG, Fischhoff B, Smuts T (1994) What do people know about global climate change? 2. Survey studies of educated laypeople. Risk Anal 14(6):971–982. https://doi.org/10.1111/j.1539-6924.1994.tb00066.x

Reynolds TW, Bostrom A, Read D, Morgan MG (2010) Now what do people know about global climate change? Survey studies of educated laypeople. Risk Anal 30(10):1520–1538. https://doi.org/10.1111/j.1539-6924.2010.01448.x

Rouse WB, Morris NM (1986) On looking into the black box: prospects and limits in the search for mental models. Psychol Bull 100(3):349–363. https://doi.org/10.1037/0033-2909.100.3.349

Sarathchandra D, Haltinner K (2021) How believing climate change is a “Hoax” shapes climate skepticism in the United States. Environ Sociol 7(3):225–238. https://doi.org/10.1080/23251042.2020.1855884

Schwartz SH (2012) An overview of the Schwartz theory of basic values. Online Read Psychol Cult 2(1):120

Sinatra GM, Kienhues D, Hofer BK (2014) Addressing challenges to public understanding of science: epistemic cognition, motivated reasoning, and conceptual change. Educ Psychol 49(2):123–138. https://doi.org/10.1080/00461520.2014.916216

Smith N, Leiserowitz A (2014) The role of emotion in global warming policy support and opposition. Risk Anal 34(5):937–948. https://doi.org/10.1111/risa.12140

Smith WR (1956) Product differentiation and market segmentation as alternative marketing strategies. J Mark 21(1):3–8. https://doi.org/10.2307/1247695

Stephenson W (1986) Protoconcursus: the concourse theory of communication. Operant Subj 9(2):37–58

Stoutenborough JW, Vedlitz A (2014) The effect of perceived and assessed knowledge of climate change on public policy concerns: an empirical comparison. Environ Sci Policy 37:23–33. https://doi.org/10.1016/j.envsci.2013.08.002

Strathman A, Gleicher F, Boninger DS, Edwards CS (1994) The consideration of future consequences: weighing immediate and distant outcomes of behavior. J Pers Soc Psychol 66(4):742–752. https://doi.org/10.1037/0022-3514.66.4.742

Sunstein CR, Bobadilla-Suarez S, Lazzaro SC, Sharot T (2017) How people update beliefs about climate change: good news and bad news. Cornell Law Rev 102(6):1431–1444

Thompson M, Ellis R, Wildavsky A (1990) Cultural theory. Westview Press, New York

United Nations (2015) Paris Agreement (tech. rep.). United Nations, Paris. https://unfccc.int/sites/default/files/english_paris_agreement.pdf

van der Linden S, Leiserowitz AA, Feinberg GD, Maibach EW (2015) The scientific consensus on climate change as a gateway belief: experimental evidence. PLOS ONE 10(2):1–8. https://doi.org/10.1371/journal.pone.0118489

van der Linden S (2021) The Gateway Belief Model (GBM): a review and research agenda for communicating the scientific consensus on climate change. Curr Opin Psychol 42:7–12. https://doi.org/10.1016/j.copsyc.2021.01.005

van der Linden S (2017) Determinants and measurement of climate change risk perception, worry, and concern. In: Nisbet MC, Schafer M, Markowitz S, O’Neill S, Thaker J (eds) The Oxford Encyclopedia of Climate Change Communication. Oxford University Press, Oxford

van der Linden S, Leiserowitz A, Maibach E (2019) The gateway belief model: a large-scale replication. J Environ Psychol 62:49–58. https://doi.org/10.1016/j.jenvp.2019.01.009

van der Linden S, Leiserowitz A, Rosenthal S, Maibach E (2017) Inoculating the public against misinformation about climate change. Glob Chall 1(2):1600008. https://doi.org/10.1002/gch2.201600008

Wang X (2017) Understanding climate change risk perceptions in China: media use, personal experience, and cultural worldviews. Sci Commun 39(3):291–312. https://doi.org/10.1177/1075547017707320

Watts S, Stenner P (2012) Doing Q methodological research: theory, method and interpretation. SAGE Publications Ltd., Cornwall

Wolf J, Brown K, Conway D (2009) Ecological citizenship and climate change: perceptions and practice. Environ Polit 18(4):503–521. https://doi.org/10.1080/09644010903007377

Yale Program on Climate Change Communication (2022) Global Warming’s Six Americas. https://climatecommunication.yale.edu/about/projects/global-warmings-six-americas. Accessed 18 Oct 2022

Yu T-Y, Yu T-K (2017) The moderating effects of students’ personality traits on pro-environmental behavioral intentions in response to climate change. Int J Environ Res Public Health 14(12):1–20. https://doi.org/10.3390/ijerph14121472

Zia A, Todd AM (2010) Evaluating the effects of ideology on public understanding of climate change science: how to improve communication across ideological divides? Public Underst Sci 19(6):743–761. https://doi.org/10.1177/0963662509357871

Acknowledgements

The authors are grateful to Blake Cavve for his assistance in annotating data.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions. This research was supported by an Australian Government Research Training Program (RTP) Scholarship from the University of Western Australia and a scholarship from the Commonwealth Scientific and Industrial Research Organisation Research Office awarded to the first author.

Author information

Contributions

Matthew Andreotta: conceptualisation, methodology, software, data curation, writing — original draft, funding acquisition. Fabio Boschetti: formal analysis, writing — review and editing. Simon Farrell: formal analysis, writing — review and editing. Cecile Paris: formal analysis, writing — review and editing. Iain Walker: conceptualisation, writing — review and editing. Mark Hurlstone: conceptualisation, formal analysis, writing — review and editing, supervision.

Ethics declarations

Ethics approval

The studies reported in this paper were approved by the University of Western Australia Human Research Ethics Office (RA/4/20/5104) and the Commonwealth Scientific and Industrial Research Organisation Human Research Ethics Committee (026/19).

Consent to participate

Participants provided informed consent.

Consent for publication

Participants consented to the publication of data.

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Andreotta, M., Boschetti, F., Farrell, S. et al. Evidence for three distinct climate change audience segments with varying belief-updating tendencies: implications for climate change communication. Climatic Change 174, 32 (2022). https://doi.org/10.1007/s10584-022-03437-5

DOI: https://doi.org/10.1007/s10584-022-03437-5