Abstract

Probabilistic projections of baseline (with no additional mitigation policies) future carbon emissions are important for sound climate risk assessments. Deep uncertainty surrounds many drivers of projected emissions. Here, we use a simple integrated assessment model, calibrated to century-scale data and expert assessments of baseline emissions, global economic growth, and population growth, to make probabilistic projections of carbon emissions through 2100. Under a variety of assumptions about fossil fuel resource levels and decarbonization rates, our projections largely agree with several emissions projections under current policy conditions. Our global sensitivity analysis identifies several key economic drivers of uncertainty in future emissions and shows important higher-level interactions between economic and technological parameters, while population uncertainties are less important. Our analysis also projects relatively low global economic growth rates over the remainder of the century. This illustrates the importance of additional research into economic growth dynamics for climate risk assessment, especially if pledged and future climate mitigation policies are weakened or their implementation is delayed. These results showcase the power of using a simple, transparent, and calibrated model. While the simple model structure has several advantages, it also introduces caveats to our results, which point to important areas for further research.

1 Introduction

What is a sound approach to projecting future climate change and its impacts? This is a critical question for understanding the impact of adaptation and mitigation strategies. Projected climatic changes hinge on Earth system properties and future drivers, including anthropogenic carbon dioxide (CO2) emissions. Projections of future anthropogenic CO2 emissions are deeply uncertain; there is no consensus about the probability distribution of future emissions (Ho et al. 2019). Poorly calibrated emissions projections translate into poor climate projections and contribute to poor risk management decisions (Morgan and Keith 2008).

One approach to handling the deep uncertainty associated with future emissions and the resulting radiative forcing, adopted in the reports of the Intergovernmental Panel on Climate Change, or IPCC (IPCC 2014), is to use scenarios that cover an appropriate range of plausible futures. For example, the Shared Socioeconomic Pathways, or SSPs (O’Neill et al. 2014; Riahi et al. 2017), explore a plausible range of future emissions through an internally consistent set of future socioeconomic narratives. These scenarios are useful for creating a set of harmonized assumptions for modeling and impacts studies. As they are not intended to be interpreted as predictions of future socioeconomic, emissions, or climate trajectories, they are explicitly provided without probabilities or likelihoods (van Vuuren et al. 2011). This framework is useful for many purposes. However, the lack of probabilistic information makes the scenarios difficult to integrate into risk assessments of climate change impacts or adaptation, and makes their interpretation susceptible to the cognitive biases that interfere with decision-making under uncertainty (Tversky and Kahneman 1974; Morgan et al. 1992; Webster et al. 2001). For example, one problematic approach is to view all scenarios as equally likely (Wigley and Raper 2001).

Another, more recent example is the controversy over presenting the highest Representative Concentration Pathway, RCP 8.5 (Riahi et al. 2011), as a baseline scenario for impacts studies (Hausfather and Peters 2020a). We use “baseline scenario” to refer to a scenario that includes no effects of mitigation policies beyond those currently implemented, rather than in the term's original sense in the integrated assessment modeling (IAM) literature, where “baseline” refers specifically to a scenario generated by a model run with no forced mitigation through climate policies (van Vuuren et al. 2011). Critics of the interpretation of RCP 8.5 as a baseline radiative forcing pathway raise concerns about the increase in coal's energy share, relative to present trends, that IAMs require to achieve this forcing (Ritchie and Dowlatabadi 2017a; 2017b; Hausfather and Peters 2020a; Burgess et al. 2021). Indeed, as noted by Hausfather and Peters (2020a) and Skea et al. (2021), current International Energy Agency (IEA) projections diverge from the emissions required by higher-emitting scenarios such as RCP 8.5, and O’Neill et al. (2020) list keeping scenarios up to date and adding scenarios in more risk-relevant areas of the scenario space as two key future needs for scenario development. On the other hand, Schwalm et al. (2020) observe that the emissions trajectory associated with the older standalone RCP 8.5 (rather than the newer joint SSP5-8.5 scenario (Kriegler et al. 2014)) closely tracks recent emissions, though this agreement arises from differences between projected and observed land-use change emissions rather than fossil emissions (Hausfather and Peters 2020b). Schwalm et al. (2020) also find that, together with RCP 4.5, RCP 8.5 bounds a reasonable range around projected emissions through 2050, particularly when land-use change emissions are included. As a result, they conclude that RCP 8.5 continues to provide value as a short-to-medium-term mean scenario and a longer-term tail-risk scenario.

Both of these seemingly antagonistic perspectives are driven and justified by different use cases and information needs. In this case, the epistemic value provided by RCP 8.5 depends on the question posed and whether the goal is to identify a range of illustrative outcomes or to estimate the probabilities of outcomes in order to assess and manage risk. This latter consideration is complicated by the underlying deep uncertainties. For example, there is little consensus on key drivers such as future economic growth (Christensen et al. 2018) or the penetration rates of zero-carbon technologies in the global energy mix. Uncertainty about the future strength and direction of mitigation policies further complicates the calculation of probabilities. The potential role of negative emissions technologies (NETs) is another deep uncertainty that directly affects projected emissions trajectories. These considerations demonstrate the need for a transparent and systematic understanding of the assumptions and dynamics that underpin a particular probabilistic projection, so that analysts and decision-makers can better understand and account for the caveats associated with the resulting probabilities.

A key consideration in producing probabilistic projections is the trade-off between two competing modeling objectives: realistic dynamics to capture key processes and utilize interpretable parameters versus sufficiently fast model evaluations to enable careful uncertainty characterization and quantification. The previous literature on probabilistic emissions projections demonstrates a variety of approaches to navigating this trade-off, which are motivated by the underlying research question. When highly detailed, computationally expensive “bottom-up” models are used, such as the Global Change Assessment Model (GCAM) or the Emissions Prediction and Policy Analysis model (EPPA), one option is to focus on the influence of a few uncertain inputs, as this uncertainty space can be captured with a relatively small-to-moderate number of samples (e.g., Capellán-Pérez et al. 2016; Fyke and Matthews 2015). One downside to this approach with complex models is that the impact of interactions between the inputs treated as uncertain and those which are not may be missed. van Vuuren et al. (2008) use a broader set of inputs, but focus on sampling around each of the no-policy IPCC-SRES scenarios rather than sampling the entire probability space. A larger set of samples can be used with an emulator of the full model (e.g., Webster et al. 2002; Sokolov et al. 2009; Webster et al. 2012; Gillingham et al. 2018), as emulation allows for many more model evaluations within a fixed computational budget, resulting in an ability to explore many more sources of uncertainty at the expense of losing some of the dynamical richness of the full model (depending on the type of emulator or response surface used). Gillingham et al. (2018) go further in building emulators of multiple IAMs, which are used to understand the impact of model structural uncertainty on the resulting projections. 
Another approach is the use of a Bayesian statistical model calibrated using historical data (e.g., Raftery et al. 2017; Liu and Raftery 2021). These models can also be run many times, allowing them to fully resolve the tails of the projective distributions, and are flexible enough to capture historical dynamics while representing different future scenarios and potential trend breaks. However, they may have parameters which are less interpretable, potentially resulting in fewer insights from examining sensitivities and marginal parameter distributions.

This spread of approaches is valuable as it reveals the impacts of the underlying modeling assumptions and included uncertainties on the resulting projections. In this study, we add to this literature by using a simple, mechanistically motivated integrated assessment model. The simplicity of the model permits millions of model evaluations without requiring a reduced-form emulator, allowing full statistical calibration of the model on historical data and insights into the dynamics of the full model. This level of simplicity provides epistemic benefits by making full uncertainty quantification computationally tractable (Helgeson et al. 2021), much like the statistical approach of Raftery et al. (2017) and Liu and Raftery (2021). The mechanistically motivated model structure allows us to incorporate theoretical insights about the dynamics and structure of the relationships between interpretable model parameters using prior distributions drawn from the literature.

We focus on projecting baseline CO2 emissions for the remainder of the twenty-first century, only incorporating the effects of those mitigation policies which have had sufficient effect to be reflected in the calibration data (both historical observations and expert assessments made under the baseline assumption). These baseline emissions projections will not necessarily be consistent with the 2010 baselines adopted by the SSPs, as we calibrate, constrain, and initialize our model using more recent observations which have been influenced by past and current policies, as well as by expectations about potential future policies. We also neglect the impact of currently unproven but potentially impactful NETs, as it is unclear which technologies might eventually penetrate on a wide scale, when they might do so, and what their level of negative emissions may be (Fuss et al. 2014; Smith et al. 2015; Vaughan and Gough 2016). It is also unclear whether NETs would be able to reach a critical deployment level in the absence of additional climate policies (Honegger and Reiner 2018), which we do not consider. As a result, these projections are best understood as a reflection of what CO2 emissions might look like without significant changes in mitigation policy implementation or in technological development and deployment patterns. We refrain from projecting global mean temperatures, as we do not consider the effects of carbon-cycle and biogeochemical dynamics and their uncertainties, which can have a large impact on the resulting CO2 concentrations (Booth et al. 2017; Quilcaille et al. 2018), or uncertainties related to climate sensitivity (Goodwin and Cael 2021).

Naturally, the simple structure of our model does mean that some assumptions about the structure are particularly influential, which creates a number of caveats. This analysis illustrates some of the challenges faced in navigating the simplicity-realism trade-off, while also showing the potential for what can be learned using this approach.

2 Modeling overview

In this section, we provide a brief overview of the structure of our model. Full details are available in Section S1 of Online Resource 1. As a starting point, we adopt the overall structure of the DICE model (Nordhaus and Sztorc 2013; Nordhaus 2017). While our model structure is similar to DICE, our analysis differs from one of the typical uses of that model (e.g., Nordhaus 1992) as we do not optimize over the space of abatement policies. We expand on the DICE model structure by allowing population growth to be endogenous and affected by economic growth. We also use a different approach to represent changes in the emissions intensity of the global economy, which in our model is the result of successive penetrations of technologies with varying emissions intensities. The resulting model structure involves a logistic population growth component with an uncertain saturation level. We model global economic output using a Cobb-Douglas production function in a Solow-Swan model of economic growth. Population and economic output influence each other through changes in per-capita consumption and labor inputs.
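To make the structure above concrete, the following Python sketch advances the population and economy modules by one year. It is a minimal illustration under stated assumptions, not the model specified in Section S1 of Online Resource 1: all parameter names and values are hypothetical, labor is taken to be proportional to population, and the rule linking population growth to per-capita consumption is an illustrative stand-in for the paper's actual coupling.

```python
from dataclasses import dataclass


@dataclass
class Params:
    """Hypothetical parameter values in arbitrary units, for illustration only."""
    alpha: float = 0.3        # capital share in the Cobb-Douglas production function
    delta: float = 0.1        # annual capital depreciation rate
    savings: float = 0.22     # savings rate
    tfp_growth: float = 0.01  # annual growth rate of total factor productivity
    pop_rate: float = 0.02    # maximum logistic growth rate of population
    pop_sat: float = 11.0     # uncertain population saturation level (billions)
    cons_ref: float = 10.0    # hypothetical reference per-capita consumption


def step(pop, capital, tfp, p):
    """Advance population, capital, and productivity by one year; also return the year's output."""
    # Cobb-Douglas output, with labor assumed proportional to population.
    output = tfp * capital ** p.alpha * pop ** (1 - p.alpha)
    consumption_pc = (1 - p.savings) * output / pop
    # Hypothetical coupling: population grows faster when per-capita consumption
    # is higher, capped at the maximum rate pop_rate.
    rate = p.pop_rate * min(consumption_pc / p.cons_ref, 1.0)
    # Discrete logistic growth toward the saturation level.
    new_pop = pop + rate * pop * (1 - pop / p.pop_sat)
    # Solow-Swan capital accumulation: savings are invested, capital depreciates.
    new_capital = (1 - p.delta) * capital + p.savings * output
    return new_pop, new_capital, tfp * (1 + p.tfp_growth), output
```

Repeatedly calling `step` from an initial state traces out a joint population and output path; in a calibration setting, each sampled parameter vector would produce one such path.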

Economic output is translated into emissions using a mixture of four emitting technologies: a zero-carbon pre-industrial technology, a high-carbon intensity fossil fuel technology (representative of coal), a lower-carbon intensity fossil fuel technology (representative of oil and gas), and a zero-carbon advanced technology (representative of renewables and nuclear). The lack of NETs in our modeling framework means that we cannot fully explore the bottom of the emissions range captured by the SSP-RCP framework, as several of these scenarios, namely SSP1-1.9, SSP2-2.6, and SSP4-3.4, include their penetration prior to 2100.
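One simple way to represent successive technology penetrations is sketched below. It assumes (our assumption, not necessarily the paper's exact parameterization) that the fraction of the economy that has moved beyond technology i follows a logistic curve with a shared rate κ and technology-specific half-saturation years τi. Technology shares are then differences of adjacent logistic curves, and emissions are output multiplied by the share-weighted emissions intensity, with zero intensity for the pre-industrial and advanced technologies. All numerical values are hypothetical.

```python
import math


def logistic(t, kappa, tau):
    """Logistic curve with rate kappa, equal to 0.5 in year tau."""
    return 1.0 / (1.0 + math.exp(-kappa * (t - tau)))


def tech_shares(t, kappa, taus):
    """Shares of the four technologies in year t.

    taus = (tau2, tau3, tau4): half-saturation years of the coal-like,
    oil/gas-like, and advanced zero-carbon technologies, assumed increasing.
    """
    # cum[i] = fraction of the economy that has moved to technology i or later.
    cum = [1.0] + [logistic(t, kappa, tau) for tau in taus] + [0.0]
    return [cum[i] - cum[i + 1] for i in range(4)]


def emissions(t, output, kappa, taus, intensities):
    """Emissions = output x share-weighted intensity of the two fossil technologies."""
    rho = (0.0, intensities[0], intensities[1], 0.0)  # technologies 1 and 4 emit nothing
    shares = tech_shares(t, kappa, taus)
    return output * sum(s * r for s, r in zip(shares, rho))
```

With a shared rate and increasing half-saturation years, every share stays nonnegative, the shares sum to one, and the advanced technology reaches exactly a 50% share in its half-saturation year.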

We treat all model parameters, including emissions technology penetration dynamics, as uncertain. The statistical model accounts for cross-correlations across the model errors of the three modules as well as independent observation errors. We calibrate the model using century-scale observations of population and global gross domestic product per capita (Bolt and van Zanden 2020) and anthropogenic CO2 emissions (excluding land-use emissions) (Boden et al. 2017; Friedlingstein et al. 2020). As the Bolt and van Zanden (2020) data extend only to 2018, we extend them to 2020 using World Bank data (The World Bank 2020) for gross domestic product per capita and United Nations data for population (United Nations, Department of Economic and Social Affairs, Population Division 2019). We also probabilistically invert (Kraan and Cooke 2000; Fuller et al. 2017) three expert assessments in our calibration procedure to gain additional information about prior distributions and potential future changes to population (United Nations, Department of Economic and Social Affairs, Population Division 2019), economic output (Christensen et al. 2018), and CO2 emissions (Ho et al. 2019) which are not reflected in the historical data. More information about the model calibration procedure is available in Section S2 of Online Resource 1, while full details on the derivation of the likelihood function and the choice of prior distributions are available in Sections S3 and S4 of Online Resource 1, respectively.
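As a rough illustration of how cross-correlated model errors and independent observation errors can enter a likelihood, the sketch below evaluates a Gaussian log-likelihood for yearly residual vectors (population, output, emissions). It is a simplification, not the likelihood derived in Section S3 of Online Resource 1: it treats years as independent, ignoring the temporal structure of the paper's discrepancy process, and the function and argument names are hypothetical.

```python
import numpy as np


def log_likelihood(residuals, disc_cov, obs_sd):
    """Gaussian log-likelihood of data-minus-model residuals.

    residuals: (T, 3) array, one row per year (population, output, emissions).
    disc_cov:  (3, 3) covariance of the cross-correlated model discrepancies.
    obs_sd:    length-3 standard deviations of independent observation errors.
    Years are treated as independent, a simplifying assumption of this sketch.
    """
    cov = disc_cov + np.diag(np.asarray(obs_sd) ** 2)
    chol = np.linalg.cholesky(cov)
    # Whiten each year's residual vector by solving chol @ z = r.
    z = np.linalg.solve(chol, residuals.T)
    logdet = 2.0 * np.sum(np.log(np.diag(chol)))
    n_years, n_out = residuals.shape
    return -0.5 * (np.sum(z ** 2) + n_years * logdet + n_years * n_out * np.log(2 * np.pi))
```

When the discrepancy covariance is diagonal, this reduces to a sum of independent univariate normal log-densities; off-diagonal terms let a shock to, for example, output and emissions in the same year be jointly plausible.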

Two key deep uncertainties affecting probabilistic projections of CO2 emissions are (i) the size of the fossil fuel resource base (Capellán-Pérez et al. 2016; Wang et al. 2017) and (ii) the prior beliefs about the penetration rate of zero- or low-carbon energy technologies (Gambhir et al. 2017). First, we consider the impact of unknown quantities of remaining fossil fuel resources, that is, fossil fuel deposits that are potentially recoverable (as opposed to reserves, which are available for profitable extraction with current prices and technologies) (Rogner 1997). Estimates of the fossil fuel resource base vary widely (McGlade 2012; McGlade et al. 2013; Mohr et al. 2015; Ritchie and Dowlatabadi 2017a). Recent criticisms of the continued use of high-emissions and high-forcing scenarios focus on the plausibility of the amount of fossil fuels, particularly coal, required to generate the emissions associated with these scenarios (Ritchie and Dowlatabadi 2017a; 2017b). For the penetration rate of low-carbon technologies, historical emissions data can provide only limited information about this transition, due to the relatively limited penetration of these technologies to date and to differences from the penetration dynamics of other generating technologies such as coal, oil, and natural gas (Gambhir et al. 2017).

We focus on these deep uncertainties to illustrate the sensitivity of probabilistic projections of emissions, and therefore temperature anomalies, to these assumptions, while recognizing that there are other influential deep uncertainties. To account for the impact of deep uncertainties and to simplify the discussion, we design four scenarios. Our “standard” scenario assumes a fossil fuel resource base consistent with the best-guess resource estimates from Mohr et al. (2015) and uses a truncated normal prior distribution for the half-saturation year of zero-carbon technologies (that is, the year when that technology achieves a 50% share of the energy mix). This distribution assigns a 2.5% prior probability to half-saturation between 2020 and 2050 and has its mode at 2100. Our “low fossil-fuel” and “high fossil-fuel” scenarios use the same prior distribution over the zero-carbon technology half-saturation year, but adopt the low and high resource estimates, respectively, from Mohr et al. (2015). These three scenarios allow us to explore the implications for CO2 emissions of varying assumptions about fossil fuel supplies, with the associated knock-on effects on energy-generating costs.
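The two stated properties of the standard-scenario prior (mode at 2100, 2.5% prior probability of half-saturation between 2020 and 2050) are enough to pin down its spread once the truncation bounds are fixed. The sketch below assumes, hypothetically, that the normal distribution is truncated below at 2020 with no upper truncation, since the bounds are not stated here, and solves for the standard deviation by bisection. Under these assumptions the standard deviation comes out between 25 and 26 years.

```python
import math


def norm_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def prob_between(sd, mode=2100.0, lower=2020.0, a=2020.0, b=2050.0):
    """P(a < tau < b) for a normal(mode, sd) truncated below at `lower` (assumed bound)."""
    def z(x):
        return (x - mode) / sd

    mass = 1.0 - norm_cdf(z(lower))  # probability retained after truncation
    return (norm_cdf(z(b)) - norm_cdf(z(a))) / mass


def solve_sd(target=0.025, lo=10.0, hi=40.0, tol=1e-8):
    """Bisection for the sd giving the target probability; prob_between increases on [lo, hi]."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if prob_between(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

Changing the assumed truncation bounds changes the implied standard deviation, which is one reason such priors are best reported together with their bounds.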

We also examine the sensitivity of our projections to a more pessimistic set of prior beliefs about zero-carbon technology penetration. In this “delayed zero-carbon” scenario, we assign only a 2.5% prior probability to global half-saturation by 2100. To isolate the impact of this prior distribution, we use the same fossil fuel resource constraint as in the standard scenario.

In each of these scenarios, if a set of parameters results in fossil-fuel consumption exceeding the size of the resource base for any fuel, it is excluded from the calibration set. This induces a trade-off between economic growth and fossil-fuel consumption, such that economic growth is slowed down if the economy is still dependent on limited fossil-fuel resources. While we do not explicitly model the prices of fossil fuels, this resulting effect is analogous to the effect of increasing prices due to relative resource scarcity. However, for a fixed rate of economic growth, technology succession can occur earlier than the latest year associated with the fossil fuel constraint, representing changing demand as the driver of technological change, rather than restrictions in supply.
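The exclusion rule above amounts to rejection filtering of parameter sets. A minimal sketch, assuming a hypothetical `simulate` function that returns yearly consumption of each fuel for a parameter set, with consumption and resource base measured in the same (hypothetical) units from the same starting year:

```python
def within_resource_base(annual_use, resource_base):
    """True if cumulative use of every fuel stays within its resource base.

    annual_use:    dict mapping fuel name -> list of yearly consumption.
    resource_base: dict mapping fuel name -> total recoverable resource.
    """
    return all(sum(use) <= resource_base[fuel] for fuel, use in annual_use.items())


def filter_parameter_sets(samples, simulate, resource_base):
    """Keep only parameter sets whose simulated fuel use respects the resource base.

    `simulate` is a hypothetical stand-in for the model run that maps a
    parameter set to per-fuel yearly consumption.
    """
    return [theta for theta in samples if within_resource_base(simulate(theta), resource_base)]
```

Because the rule only removes parameter sets, it acts most strongly on the high-growth, slow-decarbonization end of the distribution, where cumulative fossil use approaches the resource limit.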

By itself, this fossil-fuel constraint does not rule out ahistorical fuel substitution dynamics despite the statistical calibration procedure, as uncertainties in and correlations between the emissions intensities of the fossil-fuel technologies, the half-saturation years of the various technologies, and the rate of technological penetration create large degrees of freedom in mapping historical economic growth to CO2 emissions. For example, some parameter sets might imply a 50% zero-carbon share and/or a less than 10% coal share in 2020 (see Fig. S1 in Online Resource 1). To constrain this behavior, we add a further constraint on technology shares in 2019. Based on fuel share data from the last 10 years (BP 2020), we require that the coal share in 2019 is between 20 and 30% and that the zero-carbon share is between 10 and 20%. These windows were chosen to be relatively wide to acknowledge year-to-year volatility in these shares. This constraint captures one of the most notable differences between our “baseline” scenarios and the no-policy “baseline” SSP-RCP scenarios, as the share of these various technologies has been influenced by past and current policies such as subsidies and tax credits.
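The 2019 technology-share constraint is a second acceptance test applied to each simulation. The sketch below checks the coal and zero-carbon shares against the stated windows; treating the "zero-carbon share" as the advanced technology (renewables and nuclear) rather than the pre-industrial technology is our assumption, and the function name is hypothetical.

```python
def plausible_2019_mix(shares, coal_window=(0.20, 0.30), zero_carbon_window=(0.10, 0.20)):
    """Check simulated 2019 technology shares against the stated windows.

    shares: (pre-industrial, coal-like, oil/gas-like, advanced zero-carbon) shares in 2019.
    Using index 3 as the "zero-carbon share" is an assumption of this sketch.
    """
    coal, zero_carbon = shares[1], shares[3]
    return (coal_window[0] <= coal <= coal_window[1]
            and zero_carbon_window[0] <= zero_carbon <= zero_carbon_window[1])
```

For example, a parameter set implying a 50% zero-carbon share and an 8% coal share in 2019 fails both windows and is discarded.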

Our model simulations also include error terms to account for discrepancies between the data and the model outputs (Brynjarsdóttir and O’Hagan 2014). These error terms are generated from the model discrepancy process (see Section S3 of Online Resource 1), conditional on the discrepancies over a fixed set of years. For example, the discrepancy terms for projections are sampled conditionally on the model-data discrepancies over the 1820–2019 historical period to preserve the calibrated variance structure of the discrepancies.
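As an illustration of conditional sampling of discrepancies, the sketch below assumes each discrepancy series follows a univariate AR(1) process with coefficient phi and innovation standard deviation sigma. This is a simplification: the paper's discrepancy process also has cross-correlations across modules (Section S3 of Online Resource 1). For an AR(1) process, conditioning on the whole historical record reduces to conditioning on its final value.

```python
import random


def project_discrepancy(last_residual, phi, sigma, horizon, rng):
    """Sample a future AR(1) discrepancy path conditional on the last historical residual.

    Assumes e[t] = phi * e[t-1] + N(0, sigma^2) innovations; by the Markov property,
    only the final historical residual (e.g., 2019) is needed to condition on the past.
    """
    path = []
    e = last_residual
    for _ in range(horizon):
        e = phi * e + rng.gauss(0.0, sigma)
        path.append(e)
    return path
```

A usage example: `project_discrepancy(r_2019, phi, sigma, 81, random.Random(seed))` would yield one discrepancy path for 2020 to 2100, added to the model output for that parameter set.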

3 Calibration results

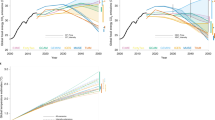

We conduct a hindcasting exercise and perform cross-validation to assess potential biases of the calibrated model and to evaluate out-of-sample projection skill. For the hindcast, we make projections for the period from 1900 to 2019 conditional on data from 1820 to 1899 (see Fig. 1). The median growth simulations slightly underestimate post-2000 economic growth. As a result, the model does not reproduce the rapid growth in emissions starting around 2000, although it does capture the recent slowing of the emissions growth rate. The hindcasts also show a large degree of underconfidence in the post-1950 period.

We further test the model's predictive skill using a k-fold cross-validation procedure. We randomly sample fifty hold-out test data sets (each corresponding to 40 years). The model is re-calibrated with the remaining training data, and we generate simulated data for the held-out years conditional on the training data. The average cross-validation coverage of the 90% credible intervals for the held-out data is 92% for population and economic output and 93% for emissions, suggesting good out-of-sample predictive performance despite the hindcast's underconfidence. The underconfidence seen in the hindcast is likely the result of the accumulation of errors over the 120-year hindcasting period. These errors can grow quite large, as the model's projections and the data do not diverge strongly until later in the twentieth century across all parameter vectors in the calibrated parameter set. However, we note that this level of underconfidence is similar to that seen in Raftery et al. (2017) despite the many differences between the two models, suggesting that this may be a consequence of using a statistically calibrated, relatively simple model.
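The coverage statistic reported above is the fraction of held-out observations that fall inside the central 90% interval of the simulated values for the corresponding year. A minimal sketch of that calculation, with hypothetical function names and linearly interpolated quantiles (one of several common quantile conventions):

```python
def quantile(sorted_vals, q):
    """Linearly interpolated quantile of an already-sorted list, 0 <= q <= 1."""
    pos = q * (len(sorted_vals) - 1)
    i = int(pos)
    frac = pos - i
    if i + 1 < len(sorted_vals):
        return sorted_vals[i] * (1 - frac) + sorted_vals[i + 1] * frac
    return sorted_vals[i]


def interval_coverage(observed, draws, level=0.90):
    """Fraction of held-out observations inside the central credible interval.

    observed: held-out data values, one per year.
    draws:    for each held-out year, a list of simulated values for that year.
    """
    tail = (1.0 - level) / 2.0
    hits = 0
    for y, sims in zip(observed, draws):
        s = sorted(sims)
        if quantile(s, tail) <= y <= quantile(s, 1.0 - tail):
            hits += 1
    return hits / len(observed)
```

Coverage well above the nominal level indicates underconfidence (intervals too wide), and coverage well below it indicates overconfidence; averaging over many hold-out sets reduces the noise in the estimate.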

4 Projections of future CO2 emissions

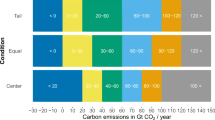

Deep uncertainty about fossil fuel resources causes more variability in CO2 projections than prior beliefs about zero-carbon penetration (Fig. 2c). Under the base scenario assumptions, the median cumulative emissions from 2020 to 2100 are 2200 GtCO2, with the 90% prediction interval covering 1500–3000 GtCO2. The lower end of the prediction interval, as well as the median levels, are only slightly influenced by the fossil fuel constraint, varying by 100–200 GtCO2 at most, which is expected as the constraint does not affect simulations that decarbonize faster than the supply-side limit requires. On the other hand, the supply constraint matters more at the upper end: the upper bound of the low-fossil fuel scenario's prediction interval is 2600 GtCO2, while the high-fossil fuel interval extends to 3400 GtCO2. This is largely the result of varying the coal constraint, as residual coal emissions can get close to the resource limit when the penetration of lower- and zero-emitting technologies is slower and economic growth rates are more rapid. This is consistent with the analysis by Capellán-Pérez et al. (2016), conducted using the more detailed GCAM, which found that, when resource constraints are considered, cumulative CO2 emissions were mainly sensitive to the size of the coal resource base. Both of these results further agree with the argument by Ritchie and Dowlatabadi (2017a) and Ritchie and Dowlatabadi (2017b) that the relative plausibility of higher-emissions scenarios depends on their assumptions about coal resource availability and utilization.
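The cumulative emissions medians and 90% prediction intervals quoted above are summaries across simulations of each simulation's summed annual emissions. A minimal sketch of that summary, with hypothetical function names and linearly interpolated quantiles as an assumed convention:

```python
def quantile(sorted_vals, q):
    """Linearly interpolated quantile of an already-sorted list, 0 <= q <= 1."""
    pos = q * (len(sorted_vals) - 1)
    i = int(pos)
    frac = pos - i
    if i + 1 < len(sorted_vals):
        return sorted_vals[i] * (1 - frac) + sorted_vals[i + 1] * frac
    return sorted_vals[i]


def cumulative_summary(annual_paths):
    """(5th percentile, median, 95th percentile) of cumulative emissions.

    annual_paths: one list of annual emissions (e.g., GtCO2/year for 2020-2100)
    per simulation; each path is summed before quantiles are taken.
    """
    totals = sorted(sum(path) for path in annual_paths)
    return quantile(totals, 0.05), quantile(totals, 0.5), quantile(totals, 0.95)
```

Summing each path before taking quantiles matters: the quantiles of cumulative emissions are not, in general, the sums of year-by-year quantiles.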

Projections of model outputs – Projections of (a) global population (billions of persons), (b) gross world product (trillions of 2011 US$), and (c) annual carbon dioxide emissions from 2020 to 2100 for the considered scenarios. The shaded regions are the central 90% credible intervals. Black dots represent observations from 2000 to 2019. The marginal distributions of each output in 2100 are shown on the right. The relevant quantities from the marker baseline SSP-RCP emissions scenarios (Riahi et al. 2017; Rogelj et al. 2018; O’Neill et al. 2016) are shown by the lines for comparison

The main impact of the technology penetration constraint is to sharply constrain the half-saturation years of the various generating technologies (τi in Fig. S2) and, due to correlations, the inferred emissions intensity of the higher-emissions technology (ρ2 in Fig. S2). Because the half-saturation year of the zero-emissions technology (τ4 in Fig. S2) is sharpened in this way, there is little difference between the standard and delayed zero-carbon scenario penetrations, despite the influence of the CO2 emissions expert assessment from Ho et al. (2019) in the calibration (see Fig. S3 and Fig. S4; including the CO2 expert assessment increases the upper tail areas of the scenarios other than the low fossil fuel scenario, but does not separate the standard and delayed zero-carbon scenarios despite their different priors, as the marginal distributions of the emissions parameters are the same regardless of which assessments are used). The impact of the constraint on emissions projections can be seen in Fig. S5. The technological constraint also induces a typical half-saturation year in the second half of the twenty-first century, with a 90% central credible interval between 2057 and 2086 and a median half-saturation year of 2071 (see Fig. S6 for how the distribution of shares of our technologies changes over the remainder of the century). These quantities are relatively insensitive to the resource constraint.

After accounting for the additional emissions from 2015 to 2019, our base-scenario median estimate is slightly higher than that from the “Continued” forecast from Liu and Raftery (2021), which had median cumulative emissions of 2100 GtCO2 from 2015 to 2100. It should be noted that this Liu and Raftery (2021) scenario assumes that nations will meet their Nationally Determined Contributions (NDCs), and therefore assumes additional mitigation policies beyond those captured by our model's data. The relatively small difference is likely due to the logistic penetration curve in our model, which causes a faster rate of decarbonization after half-saturation than the constant rate of post-NDC carbon intensity decrease in the Liu and Raftery (2021) scenario. However, all of our projections are much lower than the base forecasts by Liu and Raftery (2021). This could be due to their spatial disaggregation: countries and regions whose economies, and therefore emissions, are currently small can still contribute substantial cumulative emissions if they combine rapid economic growth with slow decarbonization and high projected utilization of fossil fuels. In our model, the global economy decarbonizes uniformly, at a rate that (due to the penetration constraint) is heavily influenced by the energy mix in developed countries. This suggests that the level of aggregation may bias our projections towards lower emissions, as globally aggregated projections can mask the importance of regional deviations from the global trend (Pretis and Roser 2017).

Our model’s projections are broadly consistent with the extrapolations from current International Energy Agency (IEA) projections made by Hausfather and Peters (2020a). Indeed, in the IEA Stated Policies scenario (IEA 2020), emissions in 2030 are 36 GtCO2/year, which is the median value of our high fossil fuel scenario (90% predictive interval of 28–46 GtCO2/year) and close to the median (35 GtCO2/year in 2030) of our standard scenario's projections (90% predictive interval 28–45 GtCO2/year) and of our low-fossil fuel projections (median 34 GtCO2/year, 90% predictive interval 27–43 GtCO2/year). The IEA's Sustainable Development scenario, which projects 27 GtCO2/year, lies just in the lower tail of most of our projections. Unsurprisingly, then, the bulk of our predictive distributions, as shown in Fig. 2, corresponds to the range deemed by Hausfather and Peters (2020a) to be “likely” given current policies. However, as with the Hausfather and Peters (2020a) analysis, our model does not account for land-use emissions, which could increase emissions sufficiently to be more consistent with higher-emitting SSP-RCP scenarios (Schwalm et al. 2020). Furthermore, our model structure cannot account for the possibility of technological backsliding, which would result in an increase in global emissions intensities due to, e.g., an increase in coal use in various regions around the world or the replacement of nuclear plants with fossil-fueled generation. These possible trend breaks are also not reflected in projections such as those from the IEA, which are mainly based on extrapolating current trends (Skea et al. 2021). For example, as noted by Skea et al. (2021), this class of projections anticipates an average growth in nuclear generation of 39%.

The projections for the remainder of the century broadly span the area captured by those SSP-RCP scenarios which include additional decarbonization but no NET penetration (see Figs. 2 and 3). In particular, SSP2-4.5 closely tracks the upper range of the standard and delayed zero-carbon scenarios until about 2070, when decarbonization occurs more rapidly in that SSP-RCP scenario than in our projections. SSP4-6.0, on the other hand, is a tail scenario under these assumptions, but characterizes the upper level of the likely range of the high-fossil fuel scenario. The no-policy SSP5-8.5 and SSP3-7.0 scenarios are well outside of our distributions, but for different reasons. Our model considers SSP5-8.5 implausible due to its runaway levels of economic growth (see Fig. 2b), fueled by the wide utilization of extremely large coal reserves. On the other hand, SSP3-7.0 has slower economic growth, but both large population growth (Fig. 2a) and continued fossil fuel use.

Cumulative emissions projections from 2018 to 2100 – Cumulative distribution functions of cumulative emissions projections for the four model scenarios. The grey lines are the cumulative emissions over this period for the labelled IPCC scenarios. The green region represents cumulative emissions which are consistent with at least a 50% probability of achieving the 2 °C Paris Agreement target (Rogelj et al. 2018)

Projected economic growth is crucial for judging whether these projections are well calibrated or biased downward. Due to our choice of calibration period, our projected rates of per-capita economic growth from 2018 to 2100 are lower than 2% per year (see Fig. S7). This is much lower than the expert assessment reported in Christensen et al. (2018), which was used in both this analysis and Gillingham et al. (2018). With the projected levels of growth from our calibrated model and the rate of decarbonization induced by the penetration constraint, almost all of our simulations show carbon intensity declining faster than per-capita output grows. The difference between these two Kaya identity components is greater than in the forecast by Liu and Raftery (2021), which could be the result of our spatial aggregation, as discussed earlier.
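The Kaya identity writes emissions as population × (GDP / population) × (CO2 / GDP), so in log terms the growth rate of emissions is exactly the sum of the three factor growth rates; carbon intensity falling faster than per-capita output grows therefore means per-capita emissions decline. A minimal sketch of this decomposition, with hypothetical function names and illustrative (not model-derived) inputs:

```python
import math


def kaya_log_growth(pop, gdp, co2, t0, t1):
    """Average annual log growth rates of the Kaya factors between indices t0 and t1.

    Uses CO2 = population x (GDP / population) x (CO2 / GDP), so the log growth
    rate of emissions equals the sum of the three factor rates.
    """
    years = t1 - t0

    def rate(series):
        return math.log(series[t1] / series[t0]) / years

    gdp_pc = [y / p for y, p in zip(gdp, pop)]
    intensity = [e / y for e, y in zip(co2, gdp)]
    return {
        "population": rate(pop),
        "gdp_per_capita": rate(gdp_pc),
        "carbon_intensity": rate(intensity),
        "emissions": rate(co2),
    }
```

Comparing the `carbon_intensity` and `gdp_per_capita` entries across simulations is one way to quantify the gap between the two Kaya components discussed above.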

Our base scenario simulations contain a small fraction (4%) of outcomes in which emissions are reduced rapidly enough to be consistent with at least a 50% probability of achieving the 2 °C Paris Agreement target (Rogelj et al. 2018). We note, however, that this comparison considers only CO2 emissions, not the CO2-equivalent emissions of other greenhouse gases, and that we also neglect emissions from land-use change. We classify these simulations using a classification tree (Breiman et al. 1984; Therneau and Atkinson 2019). These states of the world are characterized by combinations of the rate of technological penetration and the emissions intensity of the lower-emitting fossil technology, rather than by the half-saturation year of the zero-carbon technology. In particular, this outcome occurs in the simulations only if the emissions intensity of the lower-emitting fossil technology is below the 44th percentile of its marginal posterior (see Figs. S2 and S8; ρ3). If the rate of technological penetration is sufficiently slow (4%/year, which is the 88th percentile of the marginal posterior; see Figs. S2 and S8; κ), the emissions intensity of the lower-emitting fossil technology must be no greater than its 32nd percentile (approximately 0.044 GtCO2/US$2011). In contrast, no such parameter combinations characterize relatively high-emissions outcomes, likely because of the complex interactions driving rapid economic growth, as well as the influence, at the high end, of residual emissions from coal use and the resource constraint.
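A classification tree of this kind recovers threshold rules on parameters that separate outcome classes. The analysis above used the R package rpart; the sketch below substitutes scikit-learn's `DecisionTreeClassifier`, which also implements CART. The parameter ranges and the labeling rule are hypothetical: in the real analysis the labels would come from running the model on posterior draws, not from a known rule.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier, export_text

# Synthetic parameter samples standing in for posterior draws (hypothetical ranges).
rng = np.random.default_rng(42)
n = 5000
kappa = rng.uniform(0.0, 0.1, n)  # technological penetration rate (1/yr)
rho3 = rng.uniform(0.0, 1.0, n)   # fossil emissions intensity, normalized units
X = np.column_stack([kappa, rho3])

# Hypothetical labeling rule: "low-emissions" outcomes require a low-intensity
# fossil technology AND sufficiently fast penetration.
y = ((rho3 < 0.3) & (kappa > 0.05)).astype(int)

# A shallow CART tree keeps the recovered splits interpretable.
tree = DecisionTreeClassifier(max_depth=2, random_state=0).fit(X, y)
print(export_text(tree, feature_names=["kappa", "rho3"]))
```

The printed splits play the role of the percentile thresholds reported above; limiting the depth trades some accuracy for rules that can be read directly.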

These results depend on our choice of calibration period (Fig. 4). Using data from 1950 onward results in higher projected economic growth and end-of-century emissions, despite a reduced overall level of uncertainty, because this period reflects the growth in both economic output and production after World War II and excludes earlier boom-bust cycles, recessions, and depressions. In contrast, the high-economic-growth tail from the 1820–2019 and 1950–2019 calibrations is absent from the 2000–2019 calibration, because the latter excludes the economic growth of the second half of the twentieth century. This has interesting implications, as even the other calibrations did not produce particularly rapid projected economic growth for the remainder of the twenty-first century. The use of century-scale data also results in lower emissions projections than the use of data starting in the twentieth century, owing to differences across all of the emissions parameters (see Fig. S7). Calibrating on century-scale data yields a higher inferred technological penetration rate, as well as more constrained half-saturation estimates for the fossil technologies. As a result, the shorter data sets produce projections in which emissions peak later in the century, resulting in higher emissions even when economic growth is slower.
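Why the calibration window matters can be seen even with a much cruder estimator than full Bayesian calibration. The sketch below fits an ordinary least-squares trend to a synthetic log-GDP path over three windows; the regime growth rates (1%/yr before 1950, 3.5%/yr in 1950–1999, 2.5%/yr afterwards) are hypothetical and chosen only to mimic a post-war boom. It is a stand-in for intuition, not the model's calibration procedure.

```python
import numpy as np


def trend_growth(years, log_series, start, end):
    """OLS slope of a log series on year over [start, end] (average log growth per year)."""
    mask = (years >= start) & (years <= end)
    x = years[mask] - years[mask].mean()  # centering improves numerical conditioning
    slope, _intercept = np.polyfit(x, log_series[mask], 1)
    return slope


# Synthetic log-GDP path with hypothetical regime growth rates.
years = np.arange(1820, 2020)
annual_growth = np.where(years < 1950, 0.01, np.where(years < 2000, 0.035, 0.025))
log_gdp = np.concatenate([[0.0], np.cumsum(annual_growth[1:])])

for start in (1820, 1950, 2000):
    print(start, round(float(trend_growth(years, log_gdp, start, 2019)), 4))
```

The 1950 window is dominated by the boom regime and yields the highest trend, while the full record dilutes it with slow pre-war growth.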

Model projections using different calibration periods – Model projections based on calibrations using data from 1820–2019, 1950–2019, and 2000–2019 for (a) global population, (b) gross world product, and (c) global CO2 emissions. For each calibration period, the line is the median of the projections, and the ribbon is the 90% credible interval. These projections were made using the “standard” scenario assumptions about fossil fuel resource limits and zero-carbon technology half-saturation year priors
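The median lines and 90% ribbons described in this caption are per-year summaries of the projection ensemble. A minimal sketch, assuming an array with one row per posterior sample and one column per year:

```python
import numpy as np


def summarize_ensemble(ensemble, level=0.90):
    """Per-year median and equal-tailed credible interval of an ensemble.

    ensemble: array of shape (n_samples, n_years)
    Returns (median, lower, upper), each of shape (n_years,).
    """
    tail = (1.0 - level) / 2.0
    lower, median, upper = np.quantile(ensemble, [tail, 0.5, 1.0 - tail], axis=0)
    return median, lower, upper
```

This uses equal-tailed intervals (5th to 95th percentiles); a highest-density interval would be an alternative for strongly skewed distributions, and the caption does not state which was used.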

5 Cumulative emissions sensitivities

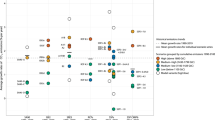

Cumulative emissions variability is driven mainly by uncertainties in interacting economic and technology dynamics, with a much smaller contribution from population dynamics (Fig. 5). Cumulative emissions exhibit statistically significant sensitivities (in the sense that the 95% confidence interval of the sensitivity index does not contain zero) to all model parameters other than the initial population (P0), the half-saturation year of the more-intensive fossil fuel technology (τ2), and the labor force participation rate (π). This illustrates a challenge of constructing parsimonious models for projecting emissions: the first- and higher-order sensitivities to a large number of parameters reflect the complexity of the system dynamics, even for this highly aggregated, relatively simple IAM. Uncertainties in several economic variables, including those characterizing total factor productivity growth, explain a large fraction of the variability in cumulative emissions. This shows the importance of improving our understanding of economic growth dynamics, in addition to technological shifts in the energy sector, if we are to further constrain future emissions projections.

Global sensitivities of cumulative emissions to model variables – Global sensitivity (Sobol’ 1993; 2001) indices for the decomposition of the variance of cumulative emissions from 2018 to 2100 under our standard scenario. The computation of sensitivity index estimates is described in Section S4 of Online Resource 1. Filled green nodes represent first-order sensitivity indices, filled purple nodes represent total-order sensitivity indices, and filled blue bars represent second-order sensitivity indices for the interactions between parameter pairs. Important parameters are labelled with their role in the model. Other model variable names are defined in Table S1. Sensitivity values exceeding thresholds are provided in Tables S4 and S5
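To make the first-order/total-order distinction and the "confidence interval excludes zero" criterion concrete, the sketch below estimates Sobol’ indices with a pick-freeze design: the first-order estimator commonly attributed to Saltelli and the total-order estimator attributed to Jansen, plus percentile-bootstrap intervals. It is applied to the Ishigami test function rather than the IAM, and the exact estimators used in this study are those described in Section S4, which may differ. For Ishigami with a = 7 and b = 0.1 the analytical values are S1 ≈ (0.314, 0.442, 0) and ST ≈ (0.558, 0.442, 0.244): the third input matters only through its interaction with the first, the same pattern described below for TFP growth.

```python
import numpy as np


def ishigami(x, a=7.0, b=0.1):
    """Ishigami test function; inputs are uniform on [-pi, pi]."""
    return np.sin(x[:, 0]) + a * np.sin(x[:, 1]) ** 2 + b * x[:, 2] ** 4 * np.sin(x[:, 0])


def sobol_evaluations(f, d, n, rng):
    """Evaluate f on the A, B, and AB_i matrices of a pick-freeze design."""
    a = rng.uniform(-np.pi, np.pi, size=(n, d))
    b = rng.uniform(-np.pi, np.pi, size=(n, d))
    f_ab = np.empty((n, d))
    for i in range(d):
        ab = a.copy()
        ab[:, i] = b[:, i]  # A with its i-th column replaced by B's
        f_ab[:, i] = f(ab)
    return f(a), f(b), f_ab


def sobol_indices(f_a, f_b, f_ab):
    """First-order (Saltelli-type) and total-order (Jansen) estimators."""
    var = np.var(np.concatenate([f_a, f_b]))
    s1 = np.mean(f_b[:, None] * (f_ab - f_a[:, None]), axis=0) / var
    st = 0.5 * np.mean((f_a[:, None] - f_ab) ** 2, axis=0) / var
    return s1, st


def bootstrap_ci(f_a, f_b, f_ab, rng, n_boot=200, level=0.95):
    """Percentile bootstrap intervals for first- and total-order indices."""
    n = f_a.size
    draws_s1, draws_st = [], []
    for _ in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample rows with replacement
        s1, st = sobol_indices(f_a[idx], f_b[idx], f_ab[idx])
        draws_s1.append(s1)
        draws_st.append(st)
    q = [(1 - level) / 2, 1 - (1 - level) / 2]
    return np.quantile(draws_s1, q, axis=0), np.quantile(draws_st, q, axis=0)


rng = np.random.default_rng(1)
f_a, f_b, f_ab = sobol_evaluations(ishigami, d=3, n=50_000, rng=rng)
s1, st = sobol_indices(f_a, f_b, f_ab)
ci_s1, ci_st = bootstrap_ci(f_a, f_b, f_ab, rng)
significant_total = ci_st[0] > 0  # lower bound above zero: interval excludes zero
print("S1:", s1.round(3), "ST:", st.round(3), "ST significant:", significant_total)
```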

Economic variables matter more through higher-order sensitivities and interactions with other parameters, while variables related to emissions intensities and technological substitution have a more direct influence. This is because economic growth is translated into emissions through the mixture of emitting technologies within the model. While it may be surprising that the half-saturation year of the zero-carbon technology (τ4) has a relatively small influence, this results from the same model dynamics discussed earlier when characterizing low-emissions tail scenarios. As the marginal posterior of τ4 is relatively narrow and is limited to the second half of the twenty-first century, the rate of technological penetration (κ, which influences the shape of the logistic curves) and the emissions intensities of the fossil technologies (ρ2 and ρ3) explain most of the uncertainty in mapping economic output to emissions. These large sensitivities highlight the need for updated accounting of emissions factors to help constrain and update projections of CO2 emissions. The emissions intensity then combines with economic growth, which is dominated by total factor productivity, or TFP (as discussed by Nordhaus (2008) and Gillingham et al. (2018)), as well as by the dynamics of capital, including depreciation (controlled by the capital depreciation rate δ and the capital elasticity of production 1 − λ, where λ is the labor elasticity).
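The role of κ relative to τ4 can be illustrated with a stylized technology mix. The sketch below is hypothetical and is not the model's actual share equations: a single zero-carbon technology follows a logistic curve that reaches half saturation at year τ, and all remaining output is produced at one fossil intensity. Holding τ fixed at 2070, changing κ alone moves the emissions peak by decades, because κ sets how early the logistic curve begins to bite against output growth.

```python
import numpy as np


def logistic_share(t, kappa, tau):
    """Logistic penetration curve equal to 0.5 at the half-saturation year tau."""
    return 1.0 / (1.0 + np.exp(-kappa * (np.asarray(t, dtype=float) - tau)))


def mix_intensity(t, kappa, tau_zero, rho_fossil):
    """Hypothetical two-technology mix: the zero-carbon share is logistic and the
    remaining output is produced at fossil intensity rho_fossil."""
    return (1.0 - logistic_share(t, kappa, tau_zero)) * rho_fossil


years = np.arange(2018, 2101)
output = 100.0 * 1.02 ** (years - 2018)  # hypothetical output path, 2%/yr growth
for kappa in (0.02, 0.05, 0.10):
    emissions = output * mix_intensity(years, kappa, tau_zero=2070, rho_fossil=0.4)
    print(kappa, "peak year:", years[np.argmax(emissions)], "2100:", round(float(emissions[-1]), 1))
```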

It is worth discussing further the importance of TFP growth in this context. The TFP growth rate (α) explains a large degree of cumulative emissions variability through its interactions with other parameters, even though its first-order sensitivity is not statistically significant. In this model, there is a strong interaction between the TFP growth rate and the elasticity of production with respect to capital, as both directly affect production growth. Future TFP growth dynamics are likely to be nonstationary relative to our inferences from the historical record because of the increasing penetration of automation in the global economy; a more detailed representation of these dynamics might include trend breaks in TFP growth and an accounting of the displacement effect of automation on the labor share (Acemoglu and Restrepo 2019). Our model’s inability to account for accelerating TFP growth, and therefore even faster economic growth, could partially explain both our lower growth projections relative to Christensen et al. (2018) and the lack of higher-emissions scenarios in our projections.
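One way to see why α and the capital elasticity 1 − λ interact is a textbook Cobb-Douglas growth model. Assuming Y = A K^(1−λ) L^λ with constant labor, TFP growing at rate α, and capital accumulating as K' = (1 − δ)K + sY, output on the balanced growth path (constant K/Y) satisfies (1 + g)^λ = 1 + α, so g = (1 + α)^(1/λ) − 1. The effect of α on growth is amplified by 1/λ, so neither parameter's influence can be read in isolation. The saving rate s and the values of δ below are hypothetical, and this textbook model may differ from the IAM's economic module.

```python
import numpy as np


def simulate_output(alpha, lam, delta=0.1, saving=0.25, periods=300):
    """Cobb-Douglas output Y = A * K**(1 - lam) * L**lam with constant labor,
    TFP growing at rate alpha, and K' = (1 - delta) * K + saving * Y."""
    tfp, capital, labor = 1.0, 1.0, 1.0
    output = np.empty(periods)
    for t in range(periods):
        output[t] = tfp * capital ** (1 - lam) * labor ** lam
        capital = (1 - delta) * capital + saving * output[t]
        tfp *= 1 + alpha
    return output


for lam in (0.6, 0.7):
    for alpha in (0.01, 0.02):
        y = simulate_output(alpha, lam)
        simulated = y[-1] / y[-2] - 1
        balanced = (1 + alpha) ** (1 / lam) - 1  # long-run (balanced-path) growth
        print(f"lam={lam} alpha={alpha}: simulated {simulated:.5f}, balanced {balanced:.5f}")
```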

We do not observe large sensitivities to population dynamics. This is consistent with the analyses of Raftery et al. (2017), which found only a 2% sensitivity of CO2 emissions in 2100 to population, and Gillingham et al. (2018), which found a 10% sensitivity. This could be the result of our model’s global aggregation, as some regionally focused analyses have found that population growth is the Kaya identity component most typically associated with changes in CO2 emissions (e.g., van Ruijven et al. 2016). However, our analysis does contradict the finding of van Vuuren et al. (2008), which identified population as a major driver of CO2 emissions.

6 Discussion

In this analysis, we produced baseline probabilistic CO2 projections from a simple, mechanistically motivated integrated assessment model calibrated on century-scale historical data under several realizations of different deep uncertainties. This type of modeling exercise has several potential virtues. Our model runs rapidly enough to be statistically calibrated using a long data set and to be subjected to a global sensitivity analysis. The coupled uncertainty and sensitivity analyses allow us to identify important linkages across different modeling components and interpretable model parameters, illustrating the complexity of the joint social-economic-technical system which ultimately produces CO2 emissions. Some of these linkages might not be directly visible with a more computationally expensive model, whose cost might preclude the required number of model evaluations. By comparing the resulting probabilities across the scenarios corresponding to the considered deep uncertainties, we could explore the impacts of varying assumptions; for example, we could see the impact of fossil fuel resource uncertainty on the high-emissions upper tail.
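The computational argument can be quantified with simple arithmetic. A Saltelli-type Sobol’ design, as implemented for example in the SALib Python package, requires N(2d + 2) model runs for d parameters when second-order indices are computed, and N(d + 2) otherwise. The base sample size, parameter count, and run times below are hypothetical, chosen only to contrast a fast simple model with an expensive one.

```python
def saltelli_runs(n_base, n_params, second_order=True):
    """Model evaluations for a Saltelli-type Sobol' design:
    N * (2d + 2) runs with second-order indices, N * (d + 2) without."""
    per_base = 2 * n_params + 2 if second_order else n_params + 2
    return n_base * per_base


def wall_clock_hours(runs, seconds_per_run):
    """Serial wall-clock time in hours."""
    return runs * seconds_per_run / 3600.0


runs = saltelli_runs(n_base=10_000, n_params=20)  # hypothetical sizes: 420,000 runs
for label, sec in [("simple model, ~10 ms/run", 0.01), ("complex model, ~1 h/run", 3600.0)]:
    print(f"{label}: {wall_clock_hours(runs, sec):,.1f} hours")
```

Parallelization divides these times by the number of workers, but it does not change the roughly five-orders-of-magnitude gap between the two cases.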

One clear lesson is that, even for this relatively stylized and highly aggregated model, calibration using historical data is not by itself sufficient to fully constrain the model dynamics. First, the choice of calibration period matters substantially in projecting economic growth and emissions intensities. Second, even this simple model has enough degrees of freedom to produce inaccurate energy-generating technology shares without strong constraints (which still result in underconfident hindcasts). Imposing these constraints yields consistency with the IEA Stated Policies Scenario projections (IEA 2020) through 2030. However, our projections assume that current technological substitution trends will continue or accelerate, and do not account for the possibility of technological backsliding. It would be possible and interesting to use a modeling framework similar to ours to understand the extent to which backsliding and increased economic growth could combine to produce high-emissions outcomes which seem unlikely based on current and historical trends. Our projections are also made under the baseline assumption that no new mitigation policies will be implemented. This assumption is unlikely to hold in practice, particularly as the impacts of climate change become more apparent, and we use it purely as a counterfactual.
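"Underconfident" here means that the hindcast credible intervals contain the observations more often than their nominal level implies, that is, the intervals are wider than necessary. A minimal coverage check is sketched below. The two-standard-error binomial band is a rough heuristic rather than a formal test (it ignores autocorrelation in annual series), and the hindcast data are synthetic.

```python
import numpy as np


def interval_coverage(obs, lower, upper):
    """Fraction of observations falling inside their predictive intervals."""
    obs, lower, upper = (np.asarray(a, dtype=float) for a in (obs, lower, upper))
    return float(np.mean((obs >= lower) & (obs <= upper)))


def coverage_diagnosis(obs, lower, upper, nominal=0.90):
    """Compare empirical coverage with the nominal level using a rough
    two-standard-error binomial band (heuristic, not a formal test)."""
    cov = interval_coverage(obs, lower, upper)
    se = np.sqrt(nominal * (1.0 - nominal) / len(obs))
    if cov > nominal + 2 * se:
        return cov, "underconfident: intervals wider than needed"
    if cov < nominal - 2 * se:
        return cov, "overconfident: intervals too narrow"
    return cov, "consistent with nominal coverage"


# Synthetic hindcast: 200 observations that all fall inside very wide 90% intervals.
rng = np.random.default_rng(3)
obs = rng.normal(size=200)
print(coverage_diagnosis(obs, obs - 5.0, obs + 5.0))
```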

Global aggregation also risks masking local and regional dynamics which could be influential in determining how future emissions change, particularly in regions which have so far either not contributed much to total CO2 emissions or are experiencing rapid economic growth (Pretis and Roser 2017). These dynamics could be strong enough to cause trend breaks from historical behavior, resulting in emissions higher than those projected by our modeling exercise or the IEA, particularly if currently planned mitigation policies are not fully implemented or are abandoned in the face of political or economic pressures. In its current formulation, our model, and therefore our projections, cannot address this possibility, which could produce the type of technological backsliding discussed earlier. Our model could, however, be adapted to explore scenarios which allow for non-constant rates of technological penetration or for an older emitting technology to recapture a larger share of the global energy mix. Another example is the potential impact of population growth in regions such as sub-Saharan Africa, which contributed to the relative lack of sensitivity of emissions to population growth in Raftery et al. (2017). It would be interesting to use a spatially disaggregated version of our model to explore the combinations of population growth, economic growth, and technological penetration which would result in increased emissions outside the likely range reported here.

There are several other caveats that are important to mention. While the economic outputs in our analysis suggest that the higher end of economic growth forecasts, such as those elicited in Christensen et al. (2018), will not be achieved (even before accounting for shocks such as the COVID-19 pandemic), this conclusion depends partly on both our choice of calibration period and the structure of our economic model, which rules out the possibility of trend breaks in growth resulting from, e.g., automation. Additionally, our study is silent on the impacts of negative emissions technologies. Changes in climate policies could result in these technologies becoming viable and widespread before the end of the century. This would shift emissions downward starting from the point when these technologies begin to penetrate. This effect depends, however, on the currently uncertain details of these technologies. One extension of this study might be to include these deep uncertainties, producing projections which are conditional on both the negative emissions technologies and the rate of penetration of a sample technology. Finally, while baseline scenarios are a useful counterfactual, climate policies evolve, and the odds of policies remaining the same through 2100 are nearly zero. The resulting changes in incentives for technology and energy use would necessarily alter the dynamics captured by our model calibration.

It is important to stress that our analysis has also neglected the large effect of the Earth-system response to changes in CO2 (Friedlingstein et al. 2014; Booth et al. 2017; Quilcaille et al. 2018). From the perspective of managing climate risk, emissions projections and forecasts should be understood in the context of these large climate-system uncertainties. It would be unwise to ignore the implications of higher-emissions scenarios for risk analysis by focusing only on their emissions trajectories, as higher levels of radiative forcing and changes to global mean temperature could be obtained from lower emissions levels than those used in scenario generation. Moreover, these scenarios have important value in climate modeling analyses due to their high signal-to-noise ratio. Our analysis here is not intended to downplay the value of these scenarios for understanding the climate system or for climate risk analysis. We also did not model non-CO2 greenhouse gas emissions.

Even with these caveats, simple, mechanistically motivated models have a role to play in understanding the uncertainties associated with future climate risks. Many of the limitations discussed above could be addressed by transparently creating or modifying such a model and comparing projections, parameter distributions, and sensitivities across assumptions. The systems which produce anthropogenic CO2 are sufficiently complex that the ability to map out which parts of the system interact to produce higher or lower emissions is important for improving our understanding of climate risk. More computationally expensive and complex models are valuable for representing these complex system dynamics from the ground up, but may not be amenable to this type of analysis without emulators, which smooth out the model dynamics to some degree and may restrict the number of parameters which can be considered (depending on the type of emulator). Ultimately, all models are wrong (Box 1976), but the use of multiple models with varying levels of complexity, which transparently make different assumptions, can help us gain a holistic view of future climate risk and its drivers.

References

Acemoglu D, Restrepo P (2019) Automation and new tasks: how technology displaces and reinstates labor. J Econ Perspect 33(2):3–30. https://doi.org/10.1257/jep.33.2.3

Boden TA, Andres RJ, Marland G (2017) Global, regional, and national fossil-fuel CO2 emissions (1751–2014) (v. 2017). https://doi.org/10.3334/CDIAC/00001_V2017

Bolt J, van Zanden JL (2020) Maddison style estimates of the evolution of the world economy. A new 2020 update. Working paper. http://reparti.free.fr/maddi2020.pdf

Booth B, Harris G, Murphy J, House J, Jones C, Sexton D, Sitch S (2017) Narrowing the range of future climate projections using historical observations of atmospheric CO2. J Climate 30(8):3039–3053. https://doi.org/10.1175/JCLI-D-16-0178.1

Box GEP (1976) Science and statistics. J Am Stat Assoc 71(356):791–799

BP (2020) bp Statistical Review of World Energy. Tech. rep. https://www.bp.com/content/dam/bp/business-sites/en/global/corporate/pdfs/energy-economics/statistical-review/bp-stats-review-2020-full-report.pdf

Breiman L, Friedman J, Stone CJ, Olshen RA (1984) Classification and regression trees. CRC Press

Brynjarsdóttir J, O’Hagan A (2014) Learning about physical parameters: the importance of model discrepancy. Inverse Problems 30(11):114007. https://doi.org/10.1088/0266-5611/30/11/114007

Burgess M, Ritchie J, Shapland J, Pielke R (2021) IPCC baseline scenarios have over-projected CO2 emissions and economic growth. Environ Res Lett 16(1). https://doi.org/10.1088/1748-9326/abcdd2

Capellán-Pérez I, Arto I, Polanco-Martínez JM, González-Eguino M, Neumann MB (2016) Likelihood of climate change pathways under uncertainty on fossil fuel resource availability. Energy Environ Sci 9(8):2482–2496. https://doi.org/10.1039/C6EE01008C

Christensen P, Gillingham K, Nordhaus W (2018) Uncertainty in forecasts of long-run economic growth. Proc Natl Acad Sci 115(21):5409–5414. https://doi.org/10.1073/pnas.1713628115

Friedlingstein P, Meinshausen M, Arora VK, Jones CD, Anav A, Liddicoat SK, Knutti R (2014) Uncertainties in CMIP5 climate projections due to carbon cycle feedbacks. J Climate 27(2):511–526. https://doi.org/10.1175/JCLI-D-12-00579.1

Friedlingstein P, O’Sullivan M, Jones MW, Andrew RM, Hauck J, Olsen A, Peters GP, Peters W, Pongratz J, Sitch S, Le Quéré C, Canadell JG, Ciais P, Jackson RB, Alin S, Aragão L E O C, Arneth A, Arora V, Bates NR, Becker M, Benoit-Cattin A, Bittig HC, Bopp L, Bultan S, Chandra N, Chevallier F, Chini LP, Evans W, Florentie L, Forster PM, Gasser T, Gehlen M, Gilfillan D, Gkritzalis T, Gregor L, Gruber N, Harris I, Hartung K, Haverd V, Houghton RA, Ilyina T, Jain AK, Joetzjer E, Kadono K, Kato E, Kitidis V, Korsbakken JI, Landschützer P, Lefèvre N, Lenton A, Lienert S, Liu Z, Lombardozzi D, Marland G, Metzl N, Munro DR, Nabel JEMS, Nakaoka SI, Niwa Y, O’Brien K, Ono T, Palmer PI, Pierrot D, Poulter B, Resplandy L, Robertson E, Rödenbeck C, Schwinger J, Séférian R, Skjelvan I, Smith AJP, Sutton AJ, Tanhua T, Tans PP, Tian H, Tilbrook B, van der Werf G, Vuichard N, Walker AP, Wanninkhof R, Watson AJ, Willis D, Wiltshire AJ, Yuan W, Yue X, Zaehle S (2020) Global Carbon Budget 2020. Earth Syst Sci Data 12(4):3269–3340. https://doi.org/10.5194/essd-12-3269-2020

Fuller RW, Wong TE, Keller K (2017) Probabilistic inversion of expert assessments to inform projections about Antarctic ice sheet responses. PLOS ONE 12(12):e0190115. https://doi.org/10.1371/journal.pone.0190115

Fuss S, Canadell JG, Peters GP, Tavoni M, Andrew RM, Ciais P, Jackson RB, Jones CD, Kraxner F, Nakicenovic N, Le Quéré C, Raupach MR, Sharifi A, Smith P, Yamagata Y (2014) Betting on negative emissions. Nat Clim Change 4(10):850–853. https://doi.org/10.1038/nclimate2392

Fyke J, Matthews H (2015) A probabilistic analysis of cumulative carbon emissions and long-term planetary warming. Environ Res Lett 10(11):115007. https://doi.org/10.1088/1748-9326/10/11/115007

Gambhir A, Drouet L, McCollum D, Napp T, Bernie D, Hawkes A, Fricko O, Havlik P, Riahi K, Bosetti V, Lowe J (2017) Assessing the feasibility of global long-term mitigation scenarios. Energies 10(1):89. https://doi.org/10.3390/en10010089

Gillingham K, Nordhaus W, Anthoff D, Blanford G, Bosetti V, Christensen P, McJeon H, Reilly J (2018) Modeling uncertainty in integrated assessment of climate change: a multimodel comparison. J Assoc Environ Resour Econ 5(4):791–826. https://doi.org/10.1086/698910

Goodwin P, Cael BB (2021) Bayesian estimation of Earth’s climate sensitivity and transient climate response from observational warming and heat content datasets. Earth Syst Dyn 12(2):709–723. https://doi.org/10/gngpwz

Hausfather Z, Peters GP (2020a) Emissions – the ‘business as usual’ story is misleading. Nature 577(7792):618–620. https://doi.org/10.1038/d41586-020-00177-3

Hausfather Z, Peters GP (2020b) RCP8.5 is a problematic scenario for near-term emissions. Proc Natl Acad Sci 117(45):27791–27792. https://doi.org/10/ghghkk

Helgeson C, Srikrishnan V, Keller K, Tuana N (2021) Why simpler computer simulation models can be epistemically better for informing decisions. Philos Sci 88(2):213–233. https://doi.org/10.1086/711501

Ho E, Budescu DV, Bosetti V, van Vuuren DP, Keller K (2019) Not all carbon dioxide emission scenarios are equally likely: a subjective expert assessment. Clim Change 155(4):545–561. https://doi.org/10.1007/s10584-019-02500-y

Honegger M, Reiner D (2018) The political economy of negative emissions technologies: consequences for international policy design. Clim Pol 18(3):306–321. https://doi.org/10.1080/14693062.2017.1413322

IEA (2020) World Energy Outlook 2020. Tech. rep., IEA, Paris, France. https://www.iea.org/reports/world-energy-outlook-2020

IPCC (2014) Climate change 2014. Impacts, adaptation, and vulnerability. Cambridge University Press, Cambridge

Kraan B, Cooke R (2000) Uncertainty in compartmental models for hazardous materials — a case study. J Hazard Mater 71(1-3):253–268. https://doi.org/10.1016/S0304-3894(99)00082-5

Kriegler E, Edmonds J, Hallegatte S, Ebi KL, Kram T, Riahi K, Winkler H, van Vuuren DP (2014) A new scenario framework for climate change research: the concept of shared climate policy assumptions. Clim Change 122(3):401–414. https://doi.org/10.1007/s10584-013-0971-5

Liu PR, Raftery AE (2021) Country-based rate of emissions reductions should increase by 80% beyond nationally determined contributions to meet the 2 °C target. Commun Earth Environ 2(1):29. https://doi.org/10.1038/s43247-021-00097-8

McGlade C (2012) A review of the uncertainties in estimates of global oil resources. Energy 47(1):262–270. https://doi.org/10.1016/j.energy.2012.07.048

McGlade C, Speirs J, Sorrell S (2013) Methods of estimating shale gas resources – comparison, evaluation and implications. Energy 59:116–125. https://doi.org/10.1016/j.energy.2013.05.031

Mohr SH, Wang J, Ellem G, Ward J, Giurco D (2015) Projection of world fossil fuels by country. Fuel 141:120–135. https://doi.org/10.1016/j.fuel.2014.10.030

Morgan MG, Keith DW (2008) Improving the way we think about projecting future energy use and emissions of carbon dioxide. Clim Change 90(3):189–215. https://doi.org/10.1007/s10584-008-9458-1

Morgan MG, Henrion M, Small M (1992) Uncertainty: a guide to dealing with uncertainty in quantitative risk and policy analysis, revised ed. Cambridge University Press, Cambridge

Nordhaus W (2008) A question of balance: weighing the options on global warming policies. Yale University Press, New Haven

Nordhaus W, Sztorc P (2013) DICE 2013R: introduction and user’s manual. http://www.econ.yale.edu/~nordhaus/homepage/homepage/documents/DICE_Manual_100413r1.pdf. Access date: April 12, 2019

Nordhaus WD (1992) An optimal transition path for controlling greenhouse gases. Science 258(5086):1315–1319. https://doi.org/10.1126/science.258.5086.1315

Nordhaus WD (2017) Revisiting the social cost of carbon. Proc Natl Acad Sci 114(7):1518–1523. https://doi.org/10.1073/pnas.1609244114

O’Neill BC, Kriegler E, Riahi K, Ebi KL, Hallegatte S, Carter TR, Mathur R, van Vuuren DP (2014) A new scenario framework for climate change research: the concept of shared socioeconomic pathways. Clim Change 122(3):387–400. https://doi.org/10.1007/s10584-013-0905-2

O’Neill BC, Tebaldi C, van Vuuren DP, Eyring V, Friedlingstein P, Hurtt G, Knutti R, Kriegler E, Lamarque JF, Lowe J, Meehl GA, Moss R, Riahi K, Sanderson BM (2016) The scenario model intercomparison project (scenarioMIP) for CMIP6. Geosci Model Dev 9(9):3461–3482. https://doi.org/10.5194/gmd-9-3461-2016

O’Neill BC, Carter TR, Ebi K, Harrison PA, Kemp-Benedict E, Kok K, Kriegler E, Preston BL, Riahi K, Sillmann J, van Ruijven BJ, van Vuuren D, Carlisle D, Conde C, Fuglestvedt J, Green C, Hasegawa T, Leininger J, Monteith S, Pichs-Madruga R (2020) Achievements and needs for the climate change scenario framework. Nat Clim Change 10(12):1074–1084. https://doi.org/10.1038/s41558-020-00952-0

Pretis F, Roser M (2017) Carbon dioxide emission-intensity in climate projections: comparing the observational record to socio-economic scenarios. Energy 135:718–725. https://doi.org/10.1016/j.energy.2017.06.119

Quilcaille Y, Gasser T, Ciais P, Lecocq F, Janssens-Maenhout G, Mohr S (2018) Uncertainty in projected climate change arising from uncertain fossil-fuel emission factors. Environ Res Lett 13(4). https://doi.org/10.1088/1748-9326/aab304

Raftery AE, Zimmer A, Frierson DMW, Startz R, Liu P (2017) Less than 2 °C warming by 2100 unlikely. Nat Clim Change 7(9):637–641. https://doi.org/10.1038/nclimate3352

Riahi K, Rao S, Krey V, Cho C, Chirkov V, Fischer G, Kindermann G, Nakicenovic N, Rafaj P (2011) RCP 8.5—a scenario of comparatively high greenhouse gas emissions. Clim Change 109(1–2):33–57. https://doi.org/10.1007/s10584-011-0149-y

Riahi K, van Vuuren DP, Kriegler E, Edmonds J, O’Neill BC, Fujimori S, Bauer N, Calvin K, Dellink R, Fricko O, Lutz W, Popp A, Cuaresma JC, Kc S, Leimbach M, Jiang L, Kram T, Rao S, Emmerling J, Ebi K, Hasegawa T, Havlik P, Humpenöder F, Da Silva LA, Smith S, Stehfest E, Bosetti V, Eom J, Gernaat D, Masui T, Rogelj J, Strefler J, Drouet L, Krey V, Luderer G, Harmsen M, Takahashi K, Baumstark L, Doelman JC, Kainuma M, Klimont Z, Marangoni G, Lotze-Campen H, Obersteiner M, Tabeau A, Tavoni M (2017) The shared socioeconomic pathways and their energy, land use, and greenhouse gas emissions implications: an overview. Glob Environ Chang 42:153–168. https://doi.org/10.1016/j.gloenvcha.2016.05.009

Ritchie J, Dowlatabadi H (2017a) The 1000 GtC coal question: are cases of vastly expanded future coal combustion still plausible? Energy Econ 65:16–31. https://doi.org/10.1016/j.eneco.2017.04.015

Ritchie J, Dowlatabadi H (2017b) Why do climate change scenarios return to coal? Energy 140:1276–1291. https://doi.org/10.1016/j.energy.2017.08.083

Rogelj J, Shindell D, Jiang K, Fifita S, Forster P, Ginzburg V, Handa C, Kobayashi S, Kriegler E, Mundaca L, Séférian R, Vilariño MV (2018) Mitigation pathways compatible with 1.5 °C in the context of sustainable development. In: Masson-Delmotte V, Zhai P, Pörtner HO, Roberts D, Skea J, Shukla PR, Pirani A, Moufouma-Okia W, Péan C, Pidcock R, Connors S, Matthews JBR, Chen Y, Zhou X, Gomis MI, Lonnoy E, Maycock T, Tignor M, Waterfield T (eds) Global warming of 1.5 °C. An IPCC special report on the impacts of global warming of 1.5 °C above pre-industrial levels and related global greenhouse gas emission pathways, in the context of strengthening the global response to the threat of climate change, sustainable development, and efforts to eradicate poverty, p 82

Rogner HH (1997) An assessment of world hydrocarbon resources. Annu Rev Energy Environ 22(1):217–262. https://doi.org/10.1146/annurev.energy.22.1.217

Schwalm CR, Glendon S, Duffy PB (2020) RCP8.5 tracks cumulative CO2 emissions. Proc Natl Acad Sci 117(33):19656–19657. https://doi.org/10.1073/pnas.2007117117

Skea J, van Diemen R, Portugal-Pereira J, Khourdajie AA (2021) Outlooks, explorations and normative scenarios: approaches to global energy futures compared. Technol Forecast Soc Chang 168:120736. https://doi.org/10.1016/j.techfore.2021.120736

Smith P, Davis SJ, Creutzig F, Fuss S, Minx J, Gabrielle B, Kato E, Jackson RB, Cowie A, Kriegler E, van Vuuren DP, Rogelj J, Ciais P, Milne J, Canadell JG, McCollum D, Peters G, Andrew R, Krey V, Shrestha G, Friedlingstein P, Gasser T, Grübler A, Heidug WK, Jonas M, Jones CD, Kraxner F, Littleton E, Lowe J, Moreira JR, Nakicenovic N, Obersteiner M, Patwardhan A, Rogner M, Rubin E, Sharifi A, Torvanger A, Yamagata Y, Edmonds J, Yongsung C (2015) Biophysical and economic limits to negative CO2 emissions. Nat Clim Chang 6:42. https://doi.org/10.1038/nclimate2870

Sobol’ I M (1993) Sensitivity estimates for nonlinear mathematical models. Math Model Comput Exp 1(4):407–414

Sobol’ I M (2001) Global sensitivity indices for nonlinear mathematical models and their Monte Carlo estimates. Math Comput Simul 55(1):271–280. https://doi.org/10.1016/S0378-4754(00)00270-6

Sokolov AP, Stone PH, Forest CE, Prinn R, Sarofim MC, Webster M, Paltsev S, Schlosser CA, Kicklighter D, Dutkiewicz S, Reilly J, Wang C, Felzer B, Melillo JM, Jacoby HD (2009) Probabilistic forecast for twenty-first-century climate based on uncertainties in emissions (without policy) and climate parameters. J Climate 22(19):5175–5204. https://doi.org/10.1175/2009JCLI2863.1

The World Bank (2020) GDP, PPP (constant 2017 international $) — data. https://data.worldbank.org/indicator/NY.GDP.MKTP.PP.KD

Therneau T, Atkinson B (2019) rpart: recursive partitioning and regression trees. https://CRAN.R-project.org/package=rpart. Access date: December 18, 2019

Tversky A, Kahneman D (1974) Judgment under uncertainty: heuristics and biases. Science 185(4157):1124–1131. https://doi.org/10.1126/science.185.4157.112

United Nations, Department of Economic and Social Affairs, Population Division (2019) Probabilistic population projections, Rev. 1, based on the World Population Prospects 2019

van Ruijven B, Daenzer K, Fisher-Vanden K, Kober T, Paltsev S, Beach R, Calderon S, Calvin K, Labriet M, Kitous A, Lucena A, van Vuuren D (2016) Baseline projections for Latin America: base-year assumptions, key drivers and greenhouse emissions. Energy Econ 56:499–512. https://doi.org/10.1016/j.eneco.2015.02.003

van Vuuren DP, de Vries B, Beusen A, Heuberger PS (2008) Conditional probabilistic estimates of 21st century greenhouse gas emissions based on the storylines of the IPCC-SRES scenarios. Glob Environ Chang 18(4):635–654. https://doi.org/10.1016/j.gloenvcha.2008.06.001

van Vuuren DP, Edmonds J, Kainuma M, Riahi K, Thomson A, Hibbard K, Hurtt GC, Kram T, Krey V, Lamarque JF, Masui T, Meinshausen M, Nakicenovic N, Smith SJ, Rose SK (2011) The representative concentration pathways: an overview. Clim Change 109(1–2):5–31. https://doi.org/10.1007/s10584-011-0148-z

Vaughan NE, Gough C (2016) Expert assessment concludes negative emissions scenarios may not deliver. Environ Res Lett 11(9):095003. https://doi.org/10.1088/1748-9326/11/9/095003

Wang J, Feng L, Tang X, Bentley Y, Hook M (2017) The implications of fossil fuel supply constraints on climate change projections: a supply-side analysis. Futures 86:58–72. https://doi.org/10.1016/j.futures.2016.04.007

Webster M, Babiker M, Mayer M, Reilly J, Harnisch J, Hyman R, Sarofim M, Wang C (2001) Uncertainty in emissions projections for climate models. Tech. Rep. 79, MIT Joint Program, Cambridge, MA. https://globalchange.mit.edu/sites/default/files/MITJPSPGC_Rpt79.pdf

Webster M, Babiker M, Mayer M, Reilly J, Harnisch J, Hyman R, Sarofim M, Wang C (2002) Uncertainty in emissions projections for climate models. Atmos Environ 36(22):3659–3670. https://doi.org/10.1016/S1352-2310(02)00245-5

Webster M, Sokolov AP, Reilly JM, Forest CE, Paltsev S, Schlosser A, Wang C, Kicklighter D, Sarofim M, Melillo J, Prinn RG, Jacoby HD (2012) Analysis of climate policy targets under uncertainty. Clim Change 112(3):569–583. https://doi.org/10.1007/s10584-011-0260-0

Wigley TM, Raper SC (2001) Interpretation of high projections for global-mean warming. Science 293(5529):451–454. https://doi.org/10.1126/science.1061604

Acknowledgements

The authors thank Louise Miltich and Alexander Robinson for their inputs and contributions. We thank Arnulf Grübler, Jonathan Koomey, Robert Lempert, Michael Obersteiner, Brian O’Neill, Hugh Pitcher, Steve Smith, Rob Socolow, Dan Ricciuto, Mort Webster, Xihao Deng, Tony Wong, Jonathan Lamontagne, Emily Ho, Wei Peng, Casey Helgeson, and Billy Pizer for discussions and comments.

Funding

This work was partially supported by the National Science Foundation (NSF) through the Network for Sustainable Climate Risk Management (SCRiM) under NSF cooperative agreement GEO-1240507 and the Penn State Center for Climate Risk Management. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the funding entities.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Author contribution

V.S., R.T., and K.K. designed the research. V.S. and K.K. conducted the research. V.S., Y.G., and K.K. analyzed the results. V.S., Y.G., R.T., and K.K. wrote the paper.

Availability of data and material

All data used in this study is available at https://github.com/vsrikrish/IAMUQ.

Code availability

All code used in this study is available at https://github.com/vsrikrish/IAMUQ.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

ESM 1

(PDF 3.88 MB)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Srikrishnan, V., Guan, Y., Tol, R.S.J. et al. Probabilistic projections of baseline twenty-first century CO2 emissions using a simple calibrated integrated assessment model. Climatic Change 170, 37 (2022). https://doi.org/10.1007/s10584-021-03279-7

DOI: https://doi.org/10.1007/s10584-021-03279-7