Abstract

In this work, we study a global quadrature scheme for analytic functions on compact intervals based on function values on quasi-uniform grids of quadrature nodes. In practice it is not always possible to sample functions at optimal nodes that give well-conditioned and quickly converging interpolatory quadrature rules at the same time. Therefore, we go beyond classical interpolatory quadrature by lowering the degree of the polynomial approximant and by applying auxiliary mapping functions that map the original quadrature nodes to more suitable fake nodes. More precisely, we investigate the combination of the Kosloff Tal-Ezer map and least-squares approximation (KTL) for numerical quadrature: a careful selection of the mapping parameter ensures stability of the scheme, a high accuracy of the approximation and, at the same time, an asymptotically optimal ratio between the degree of the polynomial and the spacing of the grid. We investigate the properties of this KTL quadrature and focus on the symmetry of the quadrature weights, the limit relations for the mapping parameter, as well as the computation of the quadrature weights in the standard monomial and in the Chebyshev bases with the help of a cosine transform. Numerical tests on equispaced nodes show that a static choice of the map's parameter improves the results of the composite trapezoidal rule, while a dynamic approach achieves greater stability and faster convergence, even when the sampling nodes are perturbed. From a computational point of view, the proposed method is practical and can be implemented in a simple and efficient way.


1 Introduction

A classical problem in numerical analysis is the numerical approximation of the integral

$$\begin{aligned}{\mathcal {I}}(f,\Omega ) = \int _{\Omega } f(x)\, \textrm{d}x\end{aligned}$$

from discrete function samples \(\varvec{f}=(f_0,\dots ,f_m)^{\top },\; f_i=f(x_i),\) on a set of distinct quadrature nodes \(\mathcal {X}=\{x_i, \; i=0,\ldots ,m\}\subset \Omega \). If the nodes \(\mathcal {X}\) can be chosen freely in the interval \(\Omega \), the interpolatory Gauss or Clenshaw-Curtis quadrature formulas are the standard choices to approximate the integral \({\mathcal {I}}(f,\Omega )\) if the function f is smooth. In spectral methods, the classical choices for quadrature and interpolation nodes in the reference interval \(\Omega = I = [-1,1]\) are the Chebyshev and Chebyshev-Lobatto nodes given as

$$\begin{aligned}\mathcal {C}_{m+1} = \Big \{\cos \Big (\frac{(2i+1)\pi }{2m+2}\Big ),\; i=0,\ldots ,m\Big \}, \qquad \mathcal {U}_{m+1} = \Big \{\cos \Big (\frac{i\pi }{m}\Big ),\; i=0,\ldots ,m\Big \}.\end{aligned}$$

These nodes display exceptionally suitable properties for the conditioning and the convergence of quadrature rules. For instance, for the Clenshaw-Curtis rule based on the Chebyshev-Lobatto nodes \(\mathcal {U}_{m+1}\), the quadrature weights are all positive [13] and the respective quadrature rule is well-conditioned. Furthermore, as soon as the underlying function is analytic on \(\Omega \) and can be analytically continued to an open Bernstein ellipse around \(\Omega \), this quadrature formula converges geometrically towards the integral \({\mathcal {I}}(f,\Omega )\) [22, Chapter 19].
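The positivity of the Clenshaw-Curtis weights can be checked with a short NumPy sketch. It relies only on the definition of an interpolatory rule: the weights solve \(\textbf{V}^{\top }\varvec{w} = \varvec{\tau }\), where \(\textbf{V}\) collects the Chebyshev polynomials \(T_j\) at the nodes and \(\varvec{\tau }\) holds their exact integrals over \([-1,1]\). The helper names `cheb_moments` and `interpolatory_weights` are illustrative and not taken from the paper.

```python
import numpy as np
from numpy.polynomial.chebyshev import chebvander


def cheb_moments(n):
    """Exact integrals of T_0, ..., T_n over [-1, 1] (zero for odd degrees)."""
    j = np.arange(n + 1)
    tau = np.zeros(n + 1)
    even = j % 2 == 0
    tau[even] = 2.0 / (1.0 - j[even] ** 2)
    return tau


def interpolatory_weights(x):
    """Weights of the interpolatory rule on the nodes x in [-1, 1].

    Solves V^T w = tau with V[i, j] = T_j(x_i); the Chebyshev basis replaces
    the monomials for better conditioning (same weights in exact arithmetic).
    """
    x = np.asarray(x, dtype=float)
    m = len(x) - 1
    V = chebvander(x, m)
    return np.linalg.solve(V.T, cheb_moments(m))


m = 16
x_cl = -np.cos(np.pi * np.arange(m + 1) / m)   # Chebyshev-Lobatto nodes, increasing
w_cc = interpolatory_weights(x_cl)              # Clenshaw-Curtis weights
print(w_cc.min() > 0, w_cc.sum())
```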

However, in practice it is not always possible to sample the function f at arbitrary positions of the interval \(\Omega \), or the knowledge of the function values might be restricted to some a priori fixed node set \(\mathcal {X}\). In these cases, the efficient quadrature schemes based on, for instance, the Chebyshev nodes \(\mathcal {C}_{m+1}\), \(\mathcal {U}_{m+1}\) or the Gauss-Legendre nodes are not directly accessible. Moreover, if only quasi-equispaced nodes are available, a high-order interpolatory quadrature scheme becomes highly ill-conditioned. This ill-conditioning is linked to a strongly oscillating behavior of the quadrature weights and can be described formally by the rapidly increasing sum of the moduli of the quadrature weights. In particular, it can be shown that for interpolatory quadrature formulas on equidistant nodes (the Newton-Cotes formulas) this sum grows exponentially, at least of order \(\mathcal {O}(\frac{2^{m+1}}{(m+1)^3})\) [5, Theorem 5.2.1]. For the respective polynomial interpolation problem on equidistant nodes, a similar exponential ill-conditioning is known and usually referred to as the Runge phenomenon [21, 24].
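The growth of \(\sum _i |w_i|\) for the Newton-Cotes formulas can be observed with a small sketch. As an assumption for illustration, the weights are computed by solving the interpolatory system in the Chebyshev basis; the helper names are hypothetical, and the printed sums are computed values, not figures from the paper.

```python
import numpy as np
from numpy.polynomial.chebyshev import chebvander


def cheb_moments(n):
    """Exact integrals of T_0, ..., T_n over [-1, 1]."""
    j = np.arange(n + 1)
    tau = np.zeros(n + 1)
    even = j % 2 == 0
    tau[even] = 2.0 / (1.0 - j[even] ** 2)
    return tau


def interpolatory_weights(x):
    """Interpolatory weights on x: solve V^T w = tau with V[i, j] = T_j(x_i)."""
    x = np.asarray(x, dtype=float)
    m = len(x) - 1
    return np.linalg.solve(chebvander(x, m).T, cheb_moments(m))


abs_sums = {}
for m in (10, 20, 30):
    w_nc = interpolatory_weights(np.linspace(-1.0, 1.0, m + 1))  # closed Newton-Cotes
    abs_sums[m] = np.abs(w_nc).sum()   # the signed weights always sum to 2
    print(m, w_nc.sum(), abs_sums[m])
```

Since the weights always sum to 2, any excess of \(\sum _i |w_i|\) over 2 comes from negative weights, which is exactly the oscillation described above.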

If resampling of the function f is not practicable, stable and well-conditioned alternatives to an interpolatory formula have to be found. A classical solution in this regard is the use of composite quadrature schemes, in which the node set \(\mathcal {X}\) is subdivided into smaller portions, each endowed with a local interpolatory formula. If the set \(\mathcal {X}\) is equidistant, this approach leads to the well-known composite Newton-Cotes formulas. For smooth functions f, a disadvantage of such a composite scheme is the limited convergence rate compared to the geometric convergence rates that are possible for interpolatory schemes (for a suitably chosen node set \(\mathcal {X}\)).

A further standard approach to stabilize a quadrature rule on a fixed node set \(\mathcal {X}\) is given by least-squares quadrature formulas [11, 12, 14, 25]. In this case, the integral of a polynomial least-squares solution is used to define the quadrature rule. The reduced degree \(n < m\) of the polynomial space in the least-squares approach leads to a better conditioning of the respective formulas. For equidistant nodes it is shown in [26] that the grid size m has to scale as \(n^2\) in terms of the polynomial degree n in order to obtain positive least-squares quadrature weights. Thus, although the least-squares approach leads to stable quadrature rules, the required quadratic scaling between m and n is a considerable drawback of polynomial least-squares formulas if simultaneously a high convergence rate for smooth functions should be achieved. When leaving the polynomial setting, other well-established solutions exist in the literature. One prominent approach which performs very well especially at equispaced nodes is given by quadrature formulas based on rational interpolation, [3, 10, 16, 17].

Here, we will focus on an alternative idea based on a mapping function S that maps a set of quasi-uniform quadrature nodes \(\mathcal {X}\) to a node set \(S(\mathcal {X})\) in which the nodes are shifted towards the boundary of \(\Omega \). These shifted nodes display an improved behavior in terms of conditioning and convergence of the respective quadrature formulas. When the map S is combined with classical interpolatory or least-squares quadrature formulas on the mapped nodes \(S(\mathcal {X})\), the resulting quadrature formulas on the original set \(\mathcal {X}\) are non-polynomial but can be interpreted as polynomial quadrature formulas on the mapped nodes \(S(\mathcal {X})\). This idea has been investigated thoroughly for interpolation problems in [1], in terms of the resulting mapped basis functions, and in [7], where the nodes \(S(\mathcal {X})\) have been referred to as “fake nodes”. In this global approach, which has also been investigated in the contexts of barycentric rational approximation [2] and multivariate approximation [8], no resampling of the function f is necessary, as the given sampling values are directly used on the new nodes \(S(\mathcal {X})\). Furthermore, for the interpolation problem, good conditioning can be ensured by an inheritance property of the Lebesgue constant for the mapped nodes \(S(\mathcal {X})\) (cf. [8, Proposition 3.4]).

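For the domain \(\Omega = I = [-1,1]\), a prominent example of such a mapping function is the Kosloff Tal-Ezer (KT) map \(M_{\alpha }: I \rightarrow I\) given by (cf. [18])

$$\begin{aligned}M_{\alpha }(x) = \frac{\sin (\alpha \pi x/2)}{\sin (\alpha \pi /2)}, \qquad \alpha \in (0,1],\end{aligned}\tag{1.1}$$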

and \(M_{0}(x) := \lim _{\alpha \rightarrow 0^{+}} M_{\alpha }(x) = x\) for \(\alpha = 0\). While \(M_0\) is the identity map on I, the KT map \(M_{\alpha }\) with \(\alpha =1\) maps the open and closed equidistant Newton-Cotes quadrature nodes to the Chebyshev nodes \(\mathcal {C}_{m+1}\) and the Chebyshev-Lobatto nodes \(\mathcal {U}_{m+1}\), respectively. Similarly, if \(\mathcal {X}\) is a quasi-uniform grid in the interval I and the parameter \(\alpha \) is close to 1, the mapped nodes \(M_{\alpha }(\mathcal {X})\) cluster towards the endpoints of I, which improves the conditioning of the corresponding interpolation and least-squares procedures. The properties of the KT map for the conditioning and the accuracy of the weighted least-squares approximation of a function f have been thoroughly studied in [1]. Other maps have also been studied in the literature. In [15], for instance, conformal maps have been applied to accelerate Gauss-type quadrature schemes. Approximation methods and numerical quadrature based on mapped nodes have also been used extensively in spectral methods for PDEs; see [4, Chapter 16] for a broad overview.
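The mapping properties of \(M_1\) stated above are easy to verify numerically. The following sketch implements (1.1) under the hypothetical name `kt_map` and checks that \(M_1\) sends the closed and open equidistant nodes to \(\mathcal {U}_{m+1}\) and \(\mathcal {C}_{m+1}\) (listed in increasing order), and that for \(\alpha < 1\) the mapped nodes are only partially shifted towards the endpoints.

```python
import numpy as np


def kt_map(x, alpha):
    """Kosloff Tal-Ezer map M_alpha on [-1, 1], cf. (1.1); M_0 is the identity."""
    x = np.asarray(x, dtype=float)
    if alpha == 0:
        return x
    return np.sin(alpha * np.pi * x / 2) / np.sin(alpha * np.pi / 2)


m = 8
k = np.arange(m + 1)
closed = -1 + 2 * k / m                              # closed Newton-Cotes nodes
open_ = -1 + (2 * k + 1) / (m + 1)                   # open (midpoint) nodes
lobatto = -np.cos(k * np.pi / m)                     # U_{m+1}, increasing order
cheb = -np.cos((2 * k + 1) * np.pi / (2 * m + 2))    # C_{m+1}, increasing order

print(np.allclose(kt_map(closed, 1.0), lobatto), np.allclose(kt_map(open_, 1.0), cheb))
# For alpha = 0.5 the spacing near the endpoint is smaller than in the interior:
print(np.diff(kt_map(closed, 0.5))[[0, m // 2]])
```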

The goal of this work is to show that the KT map in combination with the least-squares approximant introduced in [1] leads to quickly converging quadrature formulas for analytic functions starting from function values on a quasi-uniform grid \(\mathcal {X}\). Our starting point is the general setting for interpolatory quadrature formulas described in [9]; from there, we move on to the following main setting:

1. We will give up the interpolatory conditions used in [9] and consider more general types of quadrature formulas based on least-squares approximation. We will see that this transition leads to a faster convergence of the quadrature formulas.

2. As the underlying mapping, we will consider the KT map (1.1) with a general parameter \(\alpha \) in [0, 1]. We will study the role of the parameter \(\alpha \) in the convergence of the quadrature formulas. In doing so, the theoretical investigation carried out in [1] plays a central role.

Main results

- In addition to a result given in [9], we show how the composite midpoint rule and the composite Cavalieri-Simpson formula are related to a mapped interpolatory quadrature formula.

- We introduce and analyze the Kosloff Tal-Ezer map as a stabilizing component of interpolatory and least-squares quadrature formulas (referred to as KTI and KTL formulas) for quasi-uniform node sets. A careful selection of the mapping parameter \(\alpha \) ensures, on the one hand, a high accuracy of the approximation and, on the other hand, an asymptotically optimal ratio between the degree of the polynomial approximation and the spacing of the grid.

- We study the symmetry of the KTI and KTL quadrature weights, limit relations for \(\alpha \) converging to \(0^{+}\) and \(1^{-}\), as well as the computation of the quadrature weights in the standard monomial and the Chebyshev basis with the help of a cosine transform.

Organization of this paper

In Sect. 2, we review interpolatory quadrature formulas combined with a mapping of the quadrature nodes. We briefly recapitulate how these formulas can be computed and provide three examples of mapped interpolatory formulas.

In Sects. 3 and 4, we introduce and study the KTI and KTL quadrature formulas and investigate analytic and numerical properties of the corresponding quadrature schemes.

In the last section, Sect. 5, we conduct a series of numerical experiments to compare different parameter choices and the efficiency of the method with respect to other quadrature rules. Our experience with the KTL quadrature scheme shows that the formulas are practical, simple, and can be implemented efficiently.

2 Interpolatory quadrature formulas based on mapped nodes and mapped basis functions

We start this work with some preliminary facts about interpolatory quadrature formulas and the respective adaptations if an additional mapping is involved.

Let \(\mathcal {X} = \{x_0, \ldots , x_m\}\) be an increasingly ordered set of quadrature nodes in the interval \(\Omega = [a,b]\) and f a continuous function on \(\Omega \). An interpolatory quadrature formula \({\mathcal {I}}_{m,\mathcal {X}}(f,\Omega )\) is built upon the unique polynomial \(P_{m} (f)\) of degree at most m interpolating f at the nodes \(\mathcal {X}\). This interpolant can be written in terms of the monomial basis \(\{ 1,x,\ldots ,x^m\}\) as

$$\begin{aligned}P_{m}(f)(x) = \sum _{i=0}^{m} \gamma _{i} x^{i},\end{aligned}$$

where the coefficients \(\gamma _{0},\ldots ,\gamma _{m}\) are determined by the \(m + 1\) interpolatory conditions \(P_{m}(f)(x_i) = f_i = f(x_i)\). Alternatively, \(P_{m} (f)\) can be expressed in terms of the Lagrange basis \(\{ \ell _{0},\ldots ,\ell _{m}\}\) as

$$\begin{aligned}P_{m}(f)(x) = \sum _{i=0}^{m} f_{i}\, \ell _{i}(x),\end{aligned}$$

where the Lagrange polynomials are given as

$$\begin{aligned}\ell _{i}(x) = \prod _{j=0,\, j\ne i}^{m} \frac{x-x_{j}}{x_{i}-x_{j}}, \qquad i=0,\ldots ,m.\end{aligned}$$

With the vector \(\varvec{w}=(w_0,\ldots ,w_m)^{\top }\) consisting of the interpolatory quadrature weights \(w_i={\mathcal {I}}(\ell _i,\Omega ),\;i=0,\ldots ,m\,\), the interpolatory quadrature formula \({\mathcal {I}}_{m,\mathcal {X}}(f,\Omega )\) is given as

$$\begin{aligned}{\mathcal {I}}_{m,\mathcal {X}}(f,\Omega ) = \int _{\Omega } P_{m}(f)(x)\, \textrm{d}x = \sum _{i=0}^{m} w_{i} f_{i} = \varvec{w}^{\top } \varvec{f}.\end{aligned}\tag{2.2}$$

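Going one step further, we consider an additional injective map \(S:\Omega \longrightarrow \mathbb {R}\) included in the interpolation process. The idea of the so-called fake nodes approach (FNA) introduced in [7] is to obtain an interpolant on the nodes \(\mathcal {X}\) by constructing a polynomial interpolant on the fake nodes \(S(\mathcal {X})\). More precisely, if \(P_{m,S(\mathcal {X})} (g)\) denotes the unique polynomial interpolant of the function \(g = f \circ S^{-1}\) on the nodes \(S(\mathcal {X})\), the interpolant of f on \(\mathcal {X}\) is defined as

$$\begin{aligned}R^S_{m,\mathcal {X}}(f) := P_{m,S(\mathcal {X})}(g) \circ S.\end{aligned}$$

The interpolant \(R^S_{m,\mathcal {X}}(f)\) can be expressed in terms of the mapped Lagrange basis \(\{ \lambda ^S_{0},\ldots ,\lambda ^S_{m}\}\), i.e.,

$$\begin{aligned}R^S_{m,\mathcal {X}}(f)(x) = \sum _{i=0}^{m} f_{i}\, \lambda ^S_{i}(x),\end{aligned}$$

where \(\lambda ^S_i:= \ell ^{S}_i\circ S\) and \(\ell ^{S}_i\) is the i-th Lagrange polynomial on the node set \(S(\mathcal {X})\). Then, similarly to (2.2), we obtain the interpolatory quadrature formula

$$\begin{aligned}{\mathcal {I}}^{S}_{m,\mathcal {X}}(f,\Omega ) = \int _{\Omega } R^S_{m,\mathcal {X}}(f)(x)\, \textrm{d}x = \sum _{i=0}^{m} w^S_{i} f_{i} = (\varvec{w}^{S})^{\top } \varvec{f},\end{aligned}\tag{2.3}$$

where \(\varvec{w}^S=(w_0^S,\ldots ,w_m^S)^{\top }\) are the quadrature weights determined by the conditions

$$\begin{aligned}\sum _{i=0}^{m} w^S_{i}\, S(x_i)^{j} = \int _{\Omega } S(x)^{j}\, \textrm{d}x, \qquad j=0,\ldots ,m,\end{aligned}$$

that hold true for every interpolatory quadrature formula. We point out that the vector \(\varvec{w}^S\) can be computed by solving the linear system

$$\begin{aligned}(\textbf{A}^{S})^{\top } \varvec{w}^{S} = \varvec{\tau }^{S},\end{aligned}$$

where \(\textbf{A}^{S}_{ij} := S(x_i)^j\), \(i,j=0,\ldots ,m\), is the well-known Vandermonde matrix for the set \(S(\mathcal {X})\), and \(\varvec{\tau }^S=(\tau _0^S,\ldots ,\tau _m^S)^{\top }\) is the vector of moments related to the basis \(\{1, S(x), \ldots , S(x)^m\}\), i.e.,

$$\begin{aligned}\tau ^{S}_{j} = \int _{\Omega } S(x)^{j}\, \textrm{d}x, \qquad j=0,\ldots ,m.\end{aligned}$$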
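A minimal sketch of this computation follows. As an assumption for numerical stability, the columns \(S(x)^j\) are replaced by \(T_j(S(x))\), which span the same space and therefore give the same interpolatory weights; the moments are approximated by Gauss-Legendre quadrature, which presumes that S is smooth. The function name `mapped_interp_weights` is hypothetical.

```python
import numpy as np
from numpy.polynomial.chebyshev import chebvander
from numpy.polynomial.legendre import leggauss


def mapped_interp_weights(x, S, a, b, n_gauss=200):
    """Weights w^S of the interpolatory rule on [a, b] built on the fake nodes S(x).

    The columns T_j(S(x)) span the same space as S(x)^j, so the weights agree
    with those of (A^S)^T w^S = tau^S, but the system is better conditioned when
    S([a, b]) lies in [-1, 1]. Moments use Gauss-Legendre quadrature (S smooth).
    """
    x = np.asarray(x, dtype=float)
    m = len(x) - 1
    t, wt = leggauss(n_gauss)
    xg = a + (b - a) * (t + 1) / 2               # Gauss-Legendre nodes on [a, b]
    wg = (b - a) / 2 * wt
    tau = chebvander(S(xg), m).T @ wg            # tau_j = int_a^b T_j(S(x)) dx
    A = chebvander(S(x), m)                      # A[i, j] = T_j(S(x_i))
    return np.linalg.solve(A.T, tau)


# With the identity map, five equispaced nodes on [0, 1] give Boole's rule.
w_boole = mapped_interp_weights(np.linspace(0.0, 1.0, 5), lambda x: x, 0.0, 1.0)
print(w_boole * 90)   # compare with (7, 32, 12, 32, 7)
```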

If the map S is at least \(C^1(\Omega )\), we define for \(y=S(x)\) the function

Assuming S is injective, the inverse \(S^{-1}\) is well-defined on \(S(\Omega )\), and we obtain

which leads to the formula

We point out that the smoothness of the map S (and \(S^{-1}\)) is relevant for our objective, since otherwise the regularity of the mapped function g is affected compared to the original function f. Furthermore, we use the expression (2.4) for theoretical purposes only, since computing the quadrature weights by means of such a formula is unstable due to the use of the monomial basis. For this reason, we will later use a more stable basis built upon the Chebyshev polynomials of the first kind.

Example 2.1

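(Composite midpoint rule) Let \(\mathcal {X} = \{ x_{i} = a+(i+\frac{1}{2})\frac{b-a}{m+1}, \; i=0,\ldots ,m\}\) and consider the bijective map

$$\begin{aligned}S: [a,b] \longrightarrow [-1,1], \qquad S(x) = -\cos \Big (\pi \frac{x-a}{b-a}\Big ).\end{aligned}\tag{2.8}$$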

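Then, \(S(\mathcal {X})\) corresponds to the Chebyshev nodes \(\mathcal {C}_{m+1}\) on \([-1,1]\) and the quadrature weights related to the interpolatory quadrature formula (2.3) are given by

$$\begin{aligned}w^S_{i} = \frac{b-a}{m+1}, \qquad i=0,\ldots ,m,\end{aligned}$$

i.e., (2.3) is the composite midpoint rule based on \(m+1\) subintervals of [a, b].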

Proof

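A straightforward check shows that \(S(\mathcal {X})=\mathcal {C}_{m+1}\) are the Chebyshev nodes on \([-1,1]\). Then, if \(\nu : I \longrightarrow \mathbb {R}\) is the Chebyshev weight function, i.e.,

$$\begin{aligned}\nu (y) = \frac{1}{\sqrt{1-y^{2}}},\end{aligned}$$

and

$$\begin{aligned}S^{-1}(y) = a + \frac{b-a}{\pi } \arccos (-y), \qquad y \in (-1,1),\end{aligned}$$

we get

$$\begin{aligned}(S^{-1})'(y) = \frac{b-a}{\pi }\, \nu (y).\end{aligned}$$

From (2.7), we obtain

$$\begin{aligned}w^S_{i} = \frac{b-a}{\pi } \int _{-1}^{1} \ell ^{S}_{i}(y)\, \nu (y)\, \textrm{d}y, \qquad i=0,\ldots ,m,\end{aligned}$$

where \(\ell ^{S}_{i}, i=0,\ldots ,m\), are the Lagrange polynomials computed at the Chebyshev nodes \(\mathcal {C}_{m+1}\).

Using the classical quadrature formula at the Chebyshev nodes [6, 20]

$$\begin{aligned}\int _{-1}^{1} p(y)\, \nu (y)\, \textrm{d}y \approx \frac{\pi }{m+1} \sum _{k=0}^{m} p(y_{k}), \qquad y_{k} \in \mathcal {C}_{m+1},\end{aligned}$$

which has degree of accuracy at least m, we obtain the weights

$$\begin{aligned}w^S_{i} = \frac{b-a}{\pi } \int _{-1}^{1} \ell ^{S}_{i}(y)\, \nu (y)\, \textrm{d}y = \frac{b-a}{m+1} \sum _{k=0}^{m} \ell ^{S}_{i}(y_{k}) = \frac{b-a}{m+1}.\end{aligned}$$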

We note that, since the degree of the Lagrange polynomial \(\ell ^{S}_{i}\) is m, the second equality holds due to the exactness of an interpolatory quadrature rule. \(\square \)

In addition to Example 2.1, we add the following two quadrature rules based on the cosine map in (2.8).

Example 2.2

(Composite trapezoidal rule, [9, Theorem 3.1]) Let \(\mathcal {X} = \{ x_{i} = a+i \frac{b-a}{m}, \; i=0,\ldots ,m\}\) be a set of \(m+1\) equidistant nodes in the interval [a, b] containing both endpoints a and b, and let the map S be given as in (2.8). Then the mapped nodes \(S(\mathcal {X})\) correspond to the Chebyshev-Lobatto nodes \(\mathcal {U}_{m+1}\) on the interval \([-1,1]\), and the respective quadrature weights become

$$\begin{aligned}w^S_{0} = w^S_{m} = \frac{b-a}{2m}, \qquad w^S_{i} = \frac{b-a}{m}, \quad i=1,\ldots ,m-1,\end{aligned}$$

i.e., we obtain the composite trapezoidal rule based on m subintervals of [a, b]. We point out that (2.8) corresponds to \((M_1\circ H)\), where \(H(x) = 2 \cdot \frac{(x-a)}{(b-a)}-1\) and \(M_1\) is the Kosloff Tal-Ezer map introduced in (1.1).
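Examples 2.1 and 2.2 can be confirmed numerically with the fake-nodes construction. The sketch below reuses a hypothetical helper `mapped_interp_weights` (Chebyshev basis on the fake nodes, Gauss-Legendre moments) and the cosine map (2.8), and compares the resulting weights with the closed-form midpoint and trapezoidal weights.

```python
import numpy as np
from numpy.polynomial.chebyshev import chebvander
from numpy.polynomial.legendre import leggauss


def mapped_interp_weights(x, S, a, b, n_gauss=200):
    """Interpolatory weights on [a, b] for the fake nodes S(x) (Chebyshev basis)."""
    x = np.asarray(x, dtype=float)
    m = len(x) - 1
    t, wt = leggauss(n_gauss)
    xg = a + (b - a) * (t + 1) / 2
    tau = chebvander(S(xg), m).T @ ((b - a) / 2 * wt)   # int_a^b T_j(S(x)) dx
    return np.linalg.solve(chebvander(S(x), m).T, tau)


a, b, m = 0.0, 3.0, 12


def S(x):
    """Cosine map (2.8): increasing bijection of [a, b] onto [-1, 1]."""
    return -np.cos(np.pi * (x - a) / (b - a))


x_mid = a + (np.arange(m + 1) + 0.5) * (b - a) / (m + 1)      # Example 2.1 nodes
x_trap = a + np.arange(m + 1) * (b - a) / m                   # Example 2.2 nodes
w_mid = mapped_interp_weights(x_mid, S, a, b)
w_trap = mapped_interp_weights(x_trap, S, a, b)

h = (b - a) / m
trap_expected = np.full(m + 1, h)
trap_expected[[0, -1]] = h / 2
print(np.max(np.abs(w_mid - (b - a) / (m + 1))), np.max(np.abs(w_trap - trap_expected)))
```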

Example 2.3

(Composite Cavalieri-Simpson formula) Let \(\mathcal {X} = \{ x_{i} = a+i \frac{b-a}{2m}, \; i=0,...,2m\}\), and \(\mathcal {X}= \mathcal {X}^{\textrm{e}} \cup \mathcal {X}^{\textrm{o}}\) be the disjoint subdivision of \(\mathcal {X}\) into the nodes with even and odd indices. Further let the map S be given as in (2.8).

Then, the composite Cavalieri-Simpson formula at \(\mathcal {X}\) is a convex combination of the quadrature scheme in Example 2.1 applied to \(\mathcal {X}^{\textrm{o}}\) and of the quadrature rule in Example 2.2 applied to \(\mathcal {X}^{\textrm{e}}\).

Proof

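First, we split \(\mathcal{I}(f,\Omega )\) into the following convex combination

$$\begin{aligned}{\mathcal {I}}(f,\Omega ) = \frac{2}{3}\, {\mathcal {I}}(f,\Omega ) + \frac{1}{3}\, {\mathcal {I}}(f,\Omega ).\end{aligned}$$

Then, we apply the quadrature rule of Example 2.1 to the first integral (using the m odd nodes \(\mathcal {X}^{\textrm{o}}\)) and the scheme of Example 2.2 to the second (using the \(m+1\) even nodes \(\mathcal {X}^{\textrm{e}}\)), thus obtaining, with \(h = \frac{b-a}{2m}\),

$$\begin{aligned}{\mathcal {I}}(f,\Omega ) &\approx \frac{2}{3} \sum _{k=0}^{m-1} 2h\, f(x_{2k+1}) + \frac{1}{3} \Big ( h f(x_0) + 2h \sum _{k=1}^{m-1} f(x_{2k}) + h f(x_{2m}) \Big )\\ &= \frac{h}{3} \Big ( f(x_0) + 4 \sum _{k=0}^{m-1} f(x_{2k+1}) + 2 \sum _{k=1}^{m-1} f(x_{2k}) + f(x_{2m}) \Big ),\end{aligned}$$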

which is the composite Cavalieri-Simpson formula on the nodes \(\mathcal {X}\). \(\square \)
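The weight bookkeeping in this proof can be checked directly. The sketch below assembles \(\frac{2}{3}\) times the midpoint weights of Example 2.1 on the odd nodes and \(\frac{1}{3}\) times the trapezoidal weights of Example 2.2 on the even nodes, using the closed-form weights of these examples, and compares the result with the standard composite Cavalieri-Simpson weights. Both function names are illustrative.

```python
import numpy as np


def simpson_weights(a, b, m):
    """Composite Cavalieri-Simpson weights on the 2m+1 nodes a + i (b-a)/(2m)."""
    h = (b - a) / (2 * m)
    w = np.full(2 * m + 1, 2.0)
    w[1::2] = 4.0
    w[[0, -1]] = 1.0
    return h / 3 * w


def simpson_from_examples(a, b, m):
    """2/3 * (Example 2.1 on odd nodes) + 1/3 * (Example 2.2 on even nodes)."""
    w = np.zeros(2 * m + 1)
    w[1::2] += 2.0 / 3.0 * (b - a) / m        # midpoint weights (b-a)/m on m odd nodes
    trap = np.full(m + 1, (b - a) / m)        # trapezoidal weights, m subintervals
    trap[[0, -1]] = (b - a) / (2 * m)
    w[0::2] += 1.0 / 3.0 * trap
    return w


print(np.allclose(simpson_weights(0.0, 1.0, 5), simpson_from_examples(0.0, 1.0, 5)))
```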

3 Kosloff Tal-Ezer Least-squares (KTL) quadrature

For simplicity, we restrict the integration domain \(\Omega \) to the interval \(\Omega =I=[-1,1]\). In the previous Sect. 2, we considered several examples of well-known composite Newton-Cotes schemes that can be interpreted as mapped Gauss-Chebyshev type formulas in which equidistant nodes are mapped onto Chebyshev or Chebyshev-Lobatto points. This particular mapping can be considered as a special case of the Kosloff Tal-Ezer (KT) map \(M_{\alpha }\) with \(\alpha =1\) as introduced in (1.1).

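In the following, we give a brief overview of KT-generated mapped polynomial methods as developed and studied in [1]. First, we observe that the KT maps given by \(M_{\alpha }(x) = \frac{\sin (\alpha \pi x/2)}{\sin (\alpha \pi /2)}\) for \(\alpha \in (0,1]\) and \(M_0(x) = x\) for \(\alpha = 0\) are strictly increasing functions on I. In particular, the maps \(M_{\alpha }\) are bijections of I onto itself with the inverse mappings

$$\begin{aligned}M_{\alpha }^{-1}(y) = \frac{2}{\alpha \pi } \arcsin \Big ( \sin \Big (\frac{\alpha \pi }{2}\Big )\, y \Big ), \quad \alpha \in (0,1], \qquad M_{0}^{-1}(y) = y.\end{aligned}$$

Further, the derivative of \(M_{\alpha }\) is given by

$$\begin{aligned}M_{\alpha }'(x) = \frac{\alpha \pi }{2}\, \frac{\cos (\alpha \pi x/2)}{\sin (\alpha \pi /2)}, \quad \alpha \in (0,1], \qquad M_{0}'(x) = 1.\end{aligned}$$

If \(\mathbb {P}_n\) denotes the space of polynomials of degree at most n, we can associate to \(M_{\alpha }\) the approximation space of mapped polynomials

$$\begin{aligned}\mathbb {P}_{n}^{\alpha } = \{ p \circ M_{\alpha } : \; p \in \mathbb {P}_{n} \}.\end{aligned}$$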

Note that \(\mathbb {P}_{n}^{0}=\mathbb {P}_{n}\), while \(\mathbb {P}_{n}^{1}\) is a space of functions closely related to trigonometric polynomials (cf. [1]).
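The formulas for \(M_{\alpha }^{-1}\) and \(M_{\alpha }'\) can be sanity-checked numerically for a fixed \(\alpha \in (0,1]\). The sketch below (function names hypothetical) verifies the inverse on a grid, compares the derivative with a central finite difference, and confirms that the derivative is positive for \(\alpha = 0.7\).

```python
import numpy as np


def kt_map(x, alpha):
    """M_alpha(x) for alpha in (0, 1]."""
    return np.sin(alpha * np.pi * x / 2) / np.sin(alpha * np.pi / 2)


def kt_inverse(y, alpha):
    """M_alpha^{-1}(y) for alpha in (0, 1]."""
    return 2.0 / (alpha * np.pi) * np.arcsin(np.sin(alpha * np.pi / 2) * y)


def kt_derivative(x, alpha):
    """M_alpha'(x) for alpha in (0, 1]."""
    return alpha * np.pi / 2 * np.cos(alpha * np.pi * x / 2) / np.sin(alpha * np.pi / 2)


alpha = 0.7
x = np.linspace(-1.0, 1.0, 11)
delta = 1e-6
fd = (kt_map(x + delta, alpha) - kt_map(x - delta, alpha)) / (2 * delta)
print(np.max(np.abs(kt_inverse(kt_map(x, alpha), alpha) - x)),
      np.max(np.abs(fd - kt_derivative(x, alpha))))
```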

If \(\alpha < 1\), it is shown in [1] that polynomial interpolation on the nodes \(M_{\alpha }(\mathcal {X})\) is still ill-conditioned if \(\mathcal {X}\) is, for instance, a set of equidistant nodes in I. In this case, the set \(\mathcal {X}\) is not mapped onto the Chebyshev or Chebyshev-Lobatto nodes, and the polynomial interpolants display Runge-type artifacts. To overcome this issue, the number of nodes in [1] was chosen larger than the dimension of the polynomial space, i.e., \(m>n\), and the following weighted least-squares approximant to the function f was introduced:

$$\begin{aligned}F_{n,\mathcal {X}}^{\alpha }(f) = \mathop {\mathrm {arg\,min}}\limits _{P \in \mathbb {P}_{n}^{\alpha }} \sum _{i=0}^{m} \mu _{i}\, \big | f(x_{i}) - P(x_{i}) \big |^{2},\end{aligned}\tag{3.4}$$

with the weights \(\mu _i\) given by

where \(x_{-1} = -1\) and \(x_{m+1} = 1\) denote the borders of the interval I. For \(\alpha =1\), the weights \(\mu _{i}, \; i=0, \ldots ,m\), correspond to the composite trapezoidal quadrature weights with respect to the node set \(\mathcal {X}\). The use of the special weights \(\mu _i\) is motivated by the fact that, under some conditions on the involved parameters, an upper bound for the condition number of the least-squares approximation (3.4) can be found. Note that (3.4) defines a non-polynomial weighted least-squares approximation for \(\alpha \ne 0\) which is built upon a mapped polynomial basis. Similarly to the interpolatory framework based on mapped basis elements outlined in Sect. 2, we also have in the least-squares setting the relation

$$\begin{aligned}F_{n,\mathcal {X}}^{\alpha }(f) = F_{n,M_{\alpha }(\mathcal {X})}^{0}(g) \circ M_{\alpha },\end{aligned}$$

where g is uniquely determined by the relation \(f = g \circ M_{\alpha }\) and where \(F_{n,M_{\alpha }(\mathcal {X})}^{0}(g)\) represents a polynomial least-squares fit of the function g on the mapped nodes \(M_{\alpha }(\mathcal {X})\).

Definition 3.1

(Kosloff Tal-Ezer Least-squares (KTL) quadrature) Let \(F_{n,\mathcal {X}}^{\alpha } (f)\) be the weighted least-squares approximant of a continuous function f as introduced in (3.4); then we define the KTL quadrature formula \({\mathcal {I}}^{\alpha }_{n,\mathcal {X}}(f,I)\) as

$$\begin{aligned}{\mathcal {I}}^{\alpha }_{n,\mathcal {X}}(f,I) := \int _{-1}^{1} F_{n,\mathcal {X}}^{\alpha }(f)(x)\, \textrm{d}x.\end{aligned}$$

In the particular case \(\# \mathcal {X} = m +1 = n+1 = \textrm{dim}(\mathbb {P}_n^{\alpha })\) the formula \({\mathcal {I}}^{\alpha }_{n,\mathcal {X}}(f,I)\) is interpolatory and will be referred to as KTI quadrature formula.

A first fundamental property of the quadrature formula \({\mathcal {I}}^{\alpha }_{n,\mathcal {X}}(f,I)\) is its exactness for all mapped polynomials in the space \(\mathbb {P}_{n}^{\alpha }\). For the calculation of the quadrature formula, we choose a basis \(\Phi ^{\alpha }=\{\phi _{i}^{\alpha } : i=0, \ldots , n\}\) for the space \(\mathbb {P}_{n}^{\alpha }\). Then, we can write the least-squares approximant as

$$\begin{aligned}F_{n,\mathcal {X}}^{\alpha }(f)(x) = \sum _{i=0}^{n} \gamma _{i}\, \phi _{i}^{\alpha }(x),\end{aligned}\tag{3.7}$$

where the coefficient vector \(\varvec{\gamma }:=(\gamma _0,\dots ,\gamma _n)^{\top }\) is determined by the least-squares solution of the following linear system

$$\begin{aligned}\textbf{W} \textbf{A}^{\alpha } \varvec{\gamma } = \textbf{W} \varvec{f}.\end{aligned}\tag{3.8}$$

In this system

$$\begin{aligned}\textbf{W} = \textrm{diag}\big (\sqrt{\mu _{0}}, \ldots , \sqrt{\mu _{m}}\big )\end{aligned}$$

denotes the diagonal matrix with the square roots of the least-squares weights \(\mu _i\) given in (3.5), the matrix \(\textbf{A}^{\alpha } \in \mathbb {R}^{(m+1) \times (n+1)}\) is defined by the entries \(\textbf{A}_{ij}^{\alpha } = \phi _{j}^{\alpha }(x_{i})\), and \(\varvec{f} = (f(x_0), \ldots , f(x_m))^\top \) is the vector with all samples of f on \(\mathcal {X}\).

We now have two possibilities to calculate the KTL quadrature formula:

1. Based on the decomposition (3.7), we have the formula

$$\begin{aligned}{\mathcal {I}}^{\alpha }_{n,\mathcal {X}}(f,I) = \varvec{\gamma }^\top \varvec{\tau }^{\alpha },\end{aligned}$$

where \(\varvec{\gamma } = ((\textbf{A}^{\alpha })^{\top } \textbf{W}^2 \textbf{A}^{\alpha })^{-1} (\textbf{A}^{\alpha })^{\top }\textbf{W}^2 \varvec{f}\) is the least-squares solution of the weighted system (3.8) and \(\varvec{\tau }^{\alpha } \in \mathbb {R}^{n+1}\) is the moment vector with the entries \(\tau _i^{\alpha }={\mathcal {I}}(\phi _i^{\alpha },I),\; i=0,\ldots , n\).

2. The formula above can be rewritten in the alternative form

$$\begin{aligned}{\mathcal {I}}^{\alpha }_{n,\mathcal {X}}(f,I) = (\varvec{w}^{\alpha })^\top \varvec{f},\end{aligned}$$

with the quadrature weights \(\varvec{w}^{\alpha } = \textbf{W}^2 \textbf{A}^{\alpha } ((\textbf{A}^{\alpha })^{\top } \textbf{W}^2 \textbf{A}^{\alpha })^{-1} \varvec{\tau }^{\alpha } \in \mathbb {R}^{m+1}\). This vector is the solution of the underdetermined linear system

$$\begin{aligned} (\textbf{A}^{\alpha })^{\top } \varvec{w}^{\alpha } = \varvec{\tau }^{\alpha } \end{aligned}$$

with minimal weighted norm \(\Vert \textbf{W}^{-1} \varvec{w}^{\alpha }\Vert _2\).

Note that in the interpolatory case \(n = m\) it is not necessary to construct the weight matrix \(\textbf{W}\). In this case, the matrix \(\textbf{A}^{\alpha }\) is invertible and the linear systems in 1. and 2. can be solved directly. Note also that the formulas in 1. and 2. are analytically the same. From a numerical point of view, small differences can occur in finite precision arithmetic due to a switched order of the operations. The conditioning and the number of computational steps are the same for both formulas. The formula in 1. includes the moments \(\varvec{\tau }^{\alpha }\) explicitly in the rule, while 2. corresponds to the classic quadrature rule formulation in terms of function evaluations.
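Both formulas can be transcribed directly with NumPy. The sketch below is only an illustration under stated assumptions: the weights \(\mu _i\) are replaced by the trapezoidal stand-in \((x_{i+1}-x_{i-1})/2\) (which matches (3.5) only for \(\alpha = 1\)), the moments are approximated by Gauss-Legendre quadrature, and the normal equations are formed explicitly for readability. It checks that formulas 1 and 2 agree and that \((\textbf{A}^{\alpha })^{\top }\varvec{w}^{\alpha } = \varvec{\tau }^{\alpha }\).

```python
import numpy as np
from numpy.polynomial.chebyshev import chebvander
from numpy.polynomial.legendre import leggauss


def kt_map(x, alpha):
    x = np.asarray(x, dtype=float)
    return x if alpha == 0 else np.sin(alpha * np.pi * x / 2) / np.sin(alpha * np.pi / 2)


alpha, m, n = 0.9, 40, 16
x = np.linspace(-1.0, 1.0, m + 1)
xe = np.concatenate(([-1.0], x, [1.0]))            # x_{-1} = -1, x_{m+1} = 1
mu = (xe[2:] - xe[:-2]) / 2                        # stand-in for the weights (3.5)
A = chebvander(kt_map(x, alpha), n)                # A[i, j] = T_j(M_alpha(x_i))
xg, wg = leggauss(200)
tau = chebvander(kt_map(xg, alpha), n).T @ wg      # tau_j = int T_j(M_alpha(x)) dx
f = np.exp(x)

G = A.T @ (mu[:, None] * A)                        # A^T W^2 A
gamma = np.linalg.solve(G, A.T @ (mu * f))         # 1. coefficients of F(f)
Q1 = gamma @ tau
w = mu * (A @ np.linalg.solve(G, tau))             # 2. quadrature weights
Q2 = w @ f
print(Q1, Q2, np.exp(1) - np.exp(-1))
```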

In the next section, we focus on a particular choice of the basis \(\Phi ^{\alpha }\) for the space \(\mathbb {P}_{n}^{\alpha }\), and provide an efficient and stable procedure for the computation of the moment vector \(\varvec{\tau }^{\alpha }\) as well as a convergence analysis for the resulting quadrature formula.

4 Computation and convergence of KTL quadrature

4.1 Computation of KTL weights in the Chebyshev basis

The use of the Chebyshev polynomials \( \{T_{i}(x) = \cos (i \arccos (x)), \, i=0,\ldots ,n\}\) as a basis for the space of polynomials of degree at most n leads to the basis \(\{ \phi _i^{\alpha }(x) = T_i(M_{\alpha }(x)), \, i=0,\ldots ,n\}\) for the mapped space \(\mathbb {P}_n^{\alpha }\). A simple argument provided in [1, Lemma 2.1] shows that the mapped Chebyshev polynomials \(\phi _i^{\alpha }\) form an orthonormal basis with respect to a specific weighted inner product. Further, for this mapped basis, an upper bound for the conditioning of the least-squares problem (3.8), and thus for the calculation of the quadrature weights, is proven in [1]. For equispaced grids \(\mathcal {X}\), this bound essentially depends on the relation between m and n and on the mapping parameter \(\alpha \). We illustrate this conditioning for some ratios n/m in Fig. 1. The figure shows that decreasing the ratio n/m and choosing the parameter \(\alpha \) close to 1 significantly improves the conditioning of the least-squares problem.

Fig. 1: Condition numbers of the matrix \(\textbf{W} \textbf{A}^{\alpha }\) in the least-squares problem (3.8) for different values of m (size of the equispaced grid \(\mathcal {X}\), displayed on the x-axis), the mapping parameter \(\alpha \) (displayed on the y-axis) and the ratio n/m between the degree n of the polynomial space and m. The colors represent the value \(\log _{10}(\text {Cond}(\textbf{W} \textbf{A}^{\alpha }))\).

Using the mapped Chebyshev polynomials as a basis allows us to calculate the KTL quadrature weights \(\varvec{w}^{\alpha }\) in terms of a cosine and a non-equidistant fast Fourier transform. More precisely, the use of the Chebyshev basis leads to the moment vector \(\varvec{\tau }^{\alpha } = (\tau _{0}^{\alpha },\ldots ,\tau _{n}^{\alpha })^{\top }\) with the entries

$$\begin{aligned}\tau _{i}^{\alpha } = \int _{-1}^{1} T_{i}(M_{\alpha }(x))\, \textrm{d}x, \qquad i=0,\ldots ,n.\end{aligned}$$

Because of this particular structure, the moments \(\tau _{i}^{\alpha }\) can be calculated by a cosine transform.

Theorem 4.1

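For \(0 < \alpha \le 1\) and \(i \in \mathbb {N}_0\), the moment

$$\begin{aligned}\tau _{i}^{\alpha } = \int _{-1}^{1} T_{i}(M_{\alpha }(x))\, \textrm{d}x\end{aligned}$$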

corresponds to the i-th coefficient \(\mathcal {F}_{\cos }(g_{\alpha })(i)\) of the continuous cosine transform of the function

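For \(\alpha = 0\), the moments are given by

$$\begin{aligned}\tau _{i}^{0} = \frac{1+(-1)^{i}}{1-i^{2}}, \quad i \in \mathbb {N}_0 \setminus \{1\}, \qquad \tau _{1}^{0} = 0.\end{aligned}$$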

Proof

To compute the moments for \(0 < \alpha \le 1\), we use a change of variables:

By observing that

we obtain

As the cosine series of the Lipschitz function \(g_{\alpha }\) on \([0,\pi ]\) is given by

we have shown that

Finally, for \(\alpha = 0\), we obtain

For \(i = 1\), the evaluation of the integral gives \(\tau _{1}^{0} = 0\). \(\square \)
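The change of variables behind Theorem 4.1 can be checked numerically. In the sketch, `g_alpha` is our own derivation of the transformed integrand \((M_{\alpha }^{-1})'(\cos \theta )\sin \theta \) after substituting \(y = M_{\alpha }(x)\) and \(y = \cos \theta \); the normalization of the cosine transform \(\mathcal {F}_{\cos }\) used in the theorem is not reproduced here and may differ by a constant factor. Both integrals are approximated by Gauss-Legendre quadrature rather than by a fast cosine transform, and the closed form for \(\alpha = 0\) is checked as well.

```python
import numpy as np
from numpy.polynomial.chebyshev import chebvander
from numpy.polynomial.legendre import leggauss


def kt_map(x, alpha):
    x = np.asarray(x, dtype=float)
    return x if alpha == 0 else np.sin(alpha * np.pi * x / 2) / np.sin(alpha * np.pi / 2)


def moments_direct(alpha, n, n_gauss=200):
    """tau_i = int_{-1}^{1} T_i(M_alpha(x)) dx by Gauss-Legendre quadrature in x."""
    xg, wg = leggauss(n_gauss)
    return chebvander(kt_map(xg, alpha), n).T @ wg


def g_alpha(theta, alpha):
    """(M_alpha^{-1})'(cos theta) * sin(theta), for 0 < alpha <= 1."""
    s = np.sin(alpha * np.pi / 2)
    return 2 * s / (alpha * np.pi) * np.sin(theta) / np.sqrt(1 - (s * np.cos(theta)) ** 2)


def moments_cosine(alpha, n, n_gauss=200):
    """tau_i = int_0^pi cos(i theta) g_alpha(theta) d theta, for 0 < alpha <= 1."""
    t, wt = leggauss(n_gauss)
    theta = np.pi * (t + 1) / 2
    i = np.arange(n + 1)[:, None]
    return (np.cos(i * theta) * g_alpha(theta, alpha)) @ (np.pi / 2 * wt)


n = 12
for alpha in (0.3, 0.9, 1.0):
    print(alpha, np.max(np.abs(moments_direct(alpha, n) - moments_cosine(alpha, n))))
```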

We conclude this section with pseudocode for the calculation of the KTL and KTI quadrature weights using the Kosloff Tal-Ezer map and the mapped Chebyshev basis. The main steps are summarized in Algorithm 1 and Algorithm 2.
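Since Algorithms 1 and 2 are not reproduced here, the following is only a sketch of the same steps under stated assumptions, not the authors' implementation. The moments are computed by Gauss-Legendre quadrature instead of a cosine transform, the default \(\mu _i\) are trapezoidal stand-ins (equal to (3.5) only for \(\alpha = 1\)), and the weights \(\varvec{w}^{\alpha } = \textbf{W}^2\textbf{A}^{\alpha }((\textbf{A}^{\alpha })^{\top }\textbf{W}^2\textbf{A}^{\alpha })^{-1}\varvec{\tau }^{\alpha }\) are evaluated through a QR factorization of \(\textbf{W}\textbf{A}^{\alpha }\) to avoid squaring the condition number. The function name `ktl_weights` is hypothetical.

```python
import numpy as np
from numpy.polynomial.chebyshev import chebvander
from numpy.polynomial.legendre import leggauss


def kt_map(x, alpha):
    x = np.asarray(x, dtype=float)
    return x if alpha == 0 else np.sin(alpha * np.pi * x / 2) / np.sin(alpha * np.pi / 2)


def ktl_weights(x, n, alpha, mu=None, n_gauss=300):
    """KTL weights w = W^2 A (A^T W^2 A)^{-1} tau in the mapped Chebyshev basis (sketch).

    mu:  least-squares weights; the default trapezoidal stand-in coincides with
         the weights (3.5) only for alpha = 1.
    tau: computed by Gauss-Legendre quadrature instead of a cosine transform.
    """
    x = np.asarray(x, dtype=float)
    if mu is None:
        xe = np.concatenate(([-1.0], x, [1.0]))      # borders x_{-1} = -1, x_{m+1} = 1
        mu = (xe[2:] - xe[:-2]) / 2
    sw = np.sqrt(mu)                                  # diagonal of W
    A = chebvander(kt_map(x, alpha), n)               # A[i, j] = T_j(M_alpha(x_i))
    xg, wg = leggauss(n_gauss)
    tau = chebvander(kt_map(xg, alpha), n).T @ wg     # moments tau_j
    Q, R = np.linalg.qr(sw[:, None] * A)              # W A = Q R (reduced QR)
    # (A^T W^2 A)^{-1} = R^{-1} R^{-T}, hence w = W Q R^{-T} tau
    return sw * (Q @ np.linalg.solve(R.T, tau))


x = np.linspace(-1.0, 1.0, 81)                        # m = 80 equispaced nodes
w = ktl_weights(x, n=30, alpha=0.9)
print(abs(w @ np.exp(x) - (np.exp(1) - np.exp(-1))))
```

For \(\alpha = 1\) and \(n < m\), this sketch returns exactly the stand-in weights \(\mu _i\), i.e., the composite trapezoidal rule.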

4.2 Why the monomial basis is not so suited for calculations

We continue the previous discussion by analyzing the computation of the quadrature formula using the standard monomial basis instead of the Chebyshev basis. From a computational point of view, the use of the monomial basis is prohibitive even if an additional KT map is used. The main reason is that the matrix \(\textbf{A}^{\alpha }\) in the least-squares problem (3.8), with the entries \(\textbf{A}^{\alpha }_{ij} = M_{\alpha }(x_{i})^{j}\), \(i=0,\ldots ,m\), \(j=0,\ldots ,n\), is a Vandermonde matrix that becomes ill-conditioned already for small degrees n.

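The monomial basis also turns out to be problematic with regard to the moment vectors \(\varvec{\tau }^{\alpha }\). If we use the monomial basis \( \{x^{i}: i=0, \ldots , n \}\) for the space \(\mathbb {P}_n\), it is possible to express the moment vector \(\varvec{\tau }^{\alpha }\) explicitly. For \(\alpha \in (0,1]\), we have

$$\begin{aligned}\tau _{i}^{\alpha } = \int _{-1}^{1} M_{\alpha }(x)^{i}\, \textrm{d}x = \frac{1}{\sin (\alpha \frac{\pi }{2})^{i}} \int _{-1}^{1} \sin \Big ( \alpha \frac{\pi }{2} x\Big )^{i} \textrm{d}x, \qquad i=0,\ldots ,n.\end{aligned}$$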

For the calculation of the integral \(\int _{-1}^{1} \sin ( \alpha \frac{\pi }{2} x)^{i}\text {d}x\), we can make use of the following recursive formula:

Lemma 4.1

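Let \(C\in \mathbb {R} \setminus \{ 0\} \). Then the following recursive formula holds for even \(i \ge 2\):

$$\begin{aligned}\int _{-1}^{1} \sin (Cx)^{i}\, \textrm{d}x = \frac{i-1}{i} \int _{-1}^{1} \sin (Cx)^{i-2}\, \textrm{d}x - \frac{2 \sin (C)^{i-1} \cos (C)}{iC}.\end{aligned}$$

For odd numbers \(i \ge 1\), we have

$$\begin{aligned}\int _{-1}^{1} \sin (Cx)^{i}\, \textrm{d}x = 0.\end{aligned}$$

Proof

For odd numbers i, the statement is trivial since the integrand is an odd function. For even \(i \ge 2\), integration by parts yields

$$\begin{aligned}\int _{-1}^{1} \sin (Cx)^{i}\, \textrm{d}x &= \Big [ -\frac{\sin (Cx)^{i-1}\cos (Cx)}{C} \Big ]_{-1}^{1} + (i-1) \int _{-1}^{1} \sin (Cx)^{i-2} \cos (Cx)^{2}\, \textrm{d}x\\ &= -\frac{2\sin (C)^{i-1}\cos (C)}{C} + (i-1) \int _{-1}^{1} \sin (Cx)^{i-2}\, \textrm{d}x - (i-1) \int _{-1}^{1} \sin (Cx)^{i}\, \textrm{d}x.\end{aligned}$$

Thus, we obtain

$$\begin{aligned}i \int _{-1}^{1} \sin (Cx)^{i}\, \textrm{d}x = (i-1) \int _{-1}^{1} \sin (Cx)^{i-2}\, \textrm{d}x - \frac{2\sin (C)^{i-1}\cos (C)}{C}.\end{aligned}$$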

\(\square \)

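Although Lemma 4.1 provides a simple scheme to calculate the moment vector \(\varvec{\tau }^{\alpha }\), we now show why, from a computational point of view, it makes little sense to compute the moments in this way. For this, we suppose that \(S_i\) is a sequence of numbers satisfying the recursion

$$\begin{aligned}S_{i} = \frac{i-1}{i} S_{i-2} - \frac{2 \sin (C)^{i-1} \cos (C)}{iC}, \qquad i \ge 2 \text { even},\end{aligned}$$

and in which the initial value \(S_0\) is a slight perturbation of the exact moment value \(\int _{-1}^1 1 \textrm{d}x = 2\). Then, by Lemma 4.1, the error \(\mathcal {E}_i\) between \(S_i\) and \(\int _{-1}^1 \sin ( C x)^{i}\text {d}x\) satisfies the recurrence relation

$$\begin{aligned}\mathcal {E}_{i} = \frac{i-1}{i}\, \mathcal {E}_{i-2}.\end{aligned}$$

We will only consider the case when \(i = 2k\) is even (the case of odd i is not relevant, as the odd moments are already known). In this case, we get for the errors

$$\begin{aligned}\mathcal {E}_{2k} = \prod _{j=1}^{k} \frac{2j-1}{2j}\, \mathcal {E}_{0}.\end{aligned}$$

We observe that, for \(C = \alpha \frac{\pi }{2}\), the error of the computed moment \(\tau _{2k}^{\alpha } = \sin (\alpha \frac{\pi }{2})^{-2k} \int _{-1}^{1} \sin (\alpha \frac{\pi }{2} x)^{2k} \textrm{d}x\) is given by

$$\begin{aligned}\frac{\mathcal {E}_{2k}}{\sin (\alpha \frac{\pi }{2})^{2k}} = \mathcal {E}_{0} \prod _{j=1}^{k} \frac{2j-1}{2j \sin (\alpha \frac{\pi }{2})^{2}}.\end{aligned}$$

We show that this error diverges for \(k \longrightarrow \infty \). We fix \(k^{\star } < k\); then

$$\begin{aligned}\Big | \frac{\mathcal {E}_{2k}}{\sin (\alpha \frac{\pi }{2})^{2k}} \Big | = \tilde{G} \prod _{j=k^{\star }+1}^{k} \frac{2j-1}{2j \sin (\alpha \frac{\pi }{2})^{2}} \ge \tilde{G} \Big ( \frac{2k^{\star }-1}{2k^{\star } \sin (\alpha \frac{\pi }{2})^{2}} \Big )^{k-k^{\star }},\end{aligned}$$

where

$$\begin{aligned}\tilde{G} = |\mathcal {E}_{0}| \prod _{j=1}^{k^{\star }} \frac{2j-1}{2j \sin (\alpha \frac{\pi }{2})^{2}}.\end{aligned}$$

Now, if \(\alpha \ne 1\), then \(\sin \big ( \alpha \frac{\pi }{2}\big )^{2}<1\). Furthermore, since \(\frac{2k^{\star }-1}{2k^{\star }} \longrightarrow 1\) for \(k^{\star } \longrightarrow \infty \), there exists a \(k^{\star }\) such that \(\frac{2k^{\star }-1}{2k^{\star }} > \sin \big ( \alpha \frac{\pi }{2}\big )^{2}\). Since \(\tilde{G}\) does not depend on k, we can state that

$$\begin{aligned}\lim _{k \rightarrow \infty } \Big | \frac{\mathcal {E}_{2k}}{\sin (\alpha \frac{\pi }{2})^{2k}} \Big | = \infty .\end{aligned}$$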

This implies that the calculation of the moments \(\tau _i^{\alpha } = \int _{-1}^1 M_{\alpha }(x)^i \textrm{d}x\) via the recursion formula (4.5) is not stable.
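The instability can be observed numerically. The sketch below runs the forward recursion of Lemma 4.1 for \(\alpha = 0.5\) with the perturbed start \(S_0 = 2 + 10^{-12}\), divides by \(\sin (\alpha \frac{\pi }{2})^{i}\) to obtain the monomial moments, and compares them with Gauss-Legendre reference values. The function name and parameter choices are illustrative; the error in \(\tau _{2k}^{\alpha }\) grows roughly like \(\sin (\alpha \frac{\pi }{2})^{-2k}\), in line with the argument above.

```python
import numpy as np
from numpy.polynomial.legendre import leggauss


def sin_power_recursion(C, i_max, S0=2.0):
    """Forward recursion of Lemma 4.1 for int_{-1}^{1} sin(C x)^i dx (even i only)."""
    S = np.zeros(i_max + 1)                           # odd entries stay 0 (odd integrands)
    S[0] = S0
    for i in range(2, i_max + 1, 2):
        S[i] = (i - 1) / i * S[i - 2] - 2 * np.sin(C) ** (i - 1) * np.cos(C) / (i * C)
    return S


alpha = 0.5
C = alpha * np.pi / 2
i_max = 60
powers = np.arange(i_max + 1)

xg, wg = leggauss(200)                                # reference moments tau_i^alpha
tau_ref = np.array([wg @ (np.sin(C * xg) / np.sin(C)) ** i for i in powers])

S = sin_power_recursion(C, i_max, S0=2.0 + 1e-12)     # perturbed initial value S_0
tau_rec = S / np.sin(C) ** powers                     # tau_i = S_i / sin(C)^i
err = np.abs(tau_rec - tau_ref)
print(err[[2, 20, 40, 60]])
```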

4.3 Symmetry of the KTI weights

For the quadrature nodes \(\mathcal {X} = \{x_{i} : i=0,\ldots ,m\} \subset I\), we denote by \(z_i = M_{\alpha }(x_{i})\) the respective mapped nodes. Then, by the simple change of variables \(y = M_{\alpha }(x)\), the interpolatory KTI quadrature weights can be represented as

$$\begin{aligned}w_{i}^{\alpha } = \int _{-1}^{1} \ell ^{\alpha }_{i}(M_{\alpha }(x))\, \textrm{d}x = \int _{-1}^{1} \ell ^{\alpha }_{i}(y)\, (M_{\alpha }^{-1})'(y)\, \textrm{d}y,\end{aligned}\tag{4.8}$$

where

$$\begin{aligned}\ell ^{\alpha }_{i}(y) = \prod _{j=0,\, j\ne i}^{m} \frac{y-z_{j}}{z_{i}-z_{j}}\end{aligned}$$

denotes the i-th Lagrange polynomial with respect to the mapped nodes \(z_i = M_{\alpha }(x_{i})\), \(i=0,\ldots ,m\). We next show a result regarding the symmetry of the weights of the interpolatory KTI quadrature scheme. Such a symmetry of the quadrature weights is useful in cases when the integral of an even or odd function f has to be calculated.

Theorem 4.2

If the nodes \(\mathcal {X} = \{x_0, \ldots , x_m\} \subset I\) are symmetric with respect to the origin, i.e., \(x_{i}+x_{m-i}=0\) for \(i = 0, \ldots , m\), then also the KTI weights satisfy the symmetry relations \(w_i^{\alpha } = w_{m-i}^{\alpha }\).

Proof

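As we consider the interpolatory KTI quadrature formulas, the respective weights \(w_i^{\alpha }\) can be computed as the integrals of the mapped Lagrange basis \(\lambda _{i}^{\alpha }(x)\) relative to the nodes \(x_i\), \(i = 0, \ldots , m\). As the nodes \(x_i\) are symmetric with respect to the origin, so are the mapped nodes \(z_i = M_{\alpha }(x_i)\), and we get

$$\begin{aligned}\ell ^{\alpha }_{i}(-y) = \ell ^{\alpha }_{m-i}(y), \qquad y \in I.\end{aligned}$$

This equation, the representation (4.8) for the interpolatory quadrature formula, and the fact that \((M_{\alpha }^{-1})'\) is an even function give the identity

$$\begin{aligned}w_{i}^{\alpha } = \int _{-1}^{1} \ell ^{\alpha }_{i}(y) (M_{\alpha }^{-1})'(y)\, \textrm{d}y = \int _{-1}^{1} \ell ^{\alpha }_{i}(-y) (M_{\alpha }^{-1})'(-y)\, \textrm{d}y = \int _{-1}^{1} \ell ^{\alpha }_{m-i}(y) (M_{\alpha }^{-1})'(y)\, \textrm{d}y = w_{m-i}^{\alpha },\end{aligned}$$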

and therefore the symmetry of the KTI quadrature weights. \(\square \)
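Theorem 4.2 can be illustrated with a short sketch for a non-equispaced symmetric node set. The hypothetical helper `kti_weights` solves the square system \((\textbf{A}^{\alpha })^{\top }\varvec{w}^{\alpha } = \varvec{\tau }^{\alpha }\) in the mapped Chebyshev basis, with moments from Gauss-Legendre quadrature (an assumption replacing the cosine transform).

```python
import numpy as np
from numpy.polynomial.chebyshev import chebvander
from numpy.polynomial.legendre import leggauss


def kt_map(x, alpha):
    x = np.asarray(x, dtype=float)
    return x if alpha == 0 else np.sin(alpha * np.pi * x / 2) / np.sin(alpha * np.pi / 2)


def kti_weights(x, alpha, n_gauss=300):
    """Interpolatory KTI weights (n = m): solve (A^alpha)^T w = tau^alpha."""
    x = np.asarray(x, dtype=float)
    m = len(x) - 1
    A = chebvander(kt_map(x, alpha), m)               # square (m+1) x (m+1) matrix
    xg, wg = leggauss(n_gauss)
    tau = chebvander(kt_map(xg, alpha), m).T @ wg
    return np.linalg.solve(A.T, tau)


p = np.array([0.15, 0.3, 0.5, 0.65, 0.85, 1.0])
x_sym = np.concatenate((-p[::-1], [0.0], p))          # x_i + x_{m-i} = 0, m = 12
w = kti_weights(x_sym, alpha=0.6)
print(np.max(np.abs(w - w[::-1])))
```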

4.4 The KTI weights in the limit cases \(\alpha \rightarrow 0^+\) and \(\alpha \rightarrow 1^-\)

We use again the notation of the last section, and, in particular, the representation (4.8) for the interpolatory quadrature weights. For \(\alpha \rightarrow 0^+\) and \(\alpha \rightarrow 1^-\), we get the following limit results.

Theorem 4.3

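For the interpolatory KTI quadrature formula, we have the limit relations

\[\lim _{\alpha \rightarrow 0^+} w_i^{\alpha } = \int _{-1}^{1} \ell _i^{0}(x)\,\textrm{d}x\]

and

\[\lim _{\alpha \rightarrow 1^-} w_i^{\alpha } = \int _{-1}^{1} \ell _i^{1}\bigl(\sin (\tfrac{\pi }{2}x)\bigr)\,\textrm{d}x,\]

where \(\ell _i^{0}\) and \(\ell _i^{1}\) denote the Lagrange polynomials with respect to the nodes \(x_i\) and \(\sin (\frac{\pi }{2}x_i)\), \(i = 0,\ldots ,m\), respectively.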

Proof

We show the two limit relations by using Lebesgue’s dominated convergence theorem. We first observe that

and

We now seek an upper bound for \(|\ell ^{\alpha }_{i}|\) as \(\alpha \longrightarrow 1^{-}\). Elementary properties of sine and cosine give the inequality

Since \(x_{j+1} - x_j > 0\) and \(x_{j+1} + x_j < 2\) for all \(j = 0, \ldots , m-1\), we can find an \(\epsilon > 0\) such that

We therefore get the bound

and therefore

Similarly, we seek an upper bound for \(|\ell ^{\alpha }_{i}|\) as \(\alpha \longrightarrow 0^{+}\). The mean value theorem gives

where \(-\alpha \pi / 2 \le \zeta \le \alpha \pi / 2\). Continuing the computation, we obtain the lower bound

for \(0 \le \alpha \le \bar{\alpha } \le 1\), where the second inequality follows from \(|\sin (x)| \le |x|\) for all \(x \in \mathbb {R}\). We therefore also obtain in this second case that

Lebesgue’s dominated convergence theorem now allows us to interchange limit and integral for \(\alpha \longrightarrow 1^{-}\) and \(\alpha \longrightarrow 0^{+}\), which proves the statement. \(\square \)

Based on this theorem, we obtain more precise results for particular node sets. If the starting nodes \(\mathcal {X}\) are equispaced, the KTI quadrature rules obtained for \(\alpha \rightarrow 1^-\) turn out to be particular composite Newton-Cotes formulas. Since \(M_{1}(x)=\sin (\pi x/2)\), the equispaced nodes \(x_k = -1 + 2k/m\), \(k = 0,\ldots ,m\), are mapped onto

\[M_1(x_k) = \sin \Bigl(-\frac{\pi }{2} + \frac{k\pi }{m}\Bigr) = -\cos \Bigl(\frac{k\pi }{m}\Bigr), \qquad k = 0,\ldots ,m,\]

i.e., onto the Chebyshev-Lobatto nodes, and the limits of the weights \(w_{i}^{\alpha }\) for \(\alpha \longrightarrow 1^{-}\) are the composite trapezoidal rule weights in \([-1,1]\) (Example 2.2).

On the other hand, if the starting nodes are the equidistant nodes \(\{ x_{k} = -1+(2k+1)/(m+1), \, k=0,\ldots ,m\}\), which \(M_1\) maps onto the Chebyshev nodes \(-\cos ((2k+1)\pi /(2m+2))\), then the limits of the weights \(w_{i}^{\alpha }\) for \(\alpha \longrightarrow 1^{-}\) are the composite midpoint rule weights in \([-1,1]\) (Example 2.1).
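Both limit cases can be reproduced with a small sketch that uses the same assumptions as before (assumed form \(M_{\alpha }(x) = \sin (\alpha \pi x/2)/\sin (\alpha \pi /2)\), weights as integrals of \(\ell _i^{\alpha }(M_{\alpha }(x))\), Gauss-Legendre integration, hypothetical helper name). By Theorem 4.3 the limit for \(\alpha \rightarrow 1^-\) equals the weights computed with \(M_1(x) = \sin (\pi x/2)\), so the code evaluates them directly at \(\alpha = 1\) and compares them with the trapezoidal and midpoint weights.

```python
import numpy as np


def kti_weights(x, alpha, n_gauss=200):
    """KTI weights w_i = int_{-1}^{1} ell_i(M_alpha(t)) dt for 0 < alpha <= 1.

    Assumes M_alpha(x) = sin(alpha*pi*x/2) / sin(alpha*pi/2), so M_1(x) = sin(pi*x/2).
    """
    def M(s):
        return np.sin(alpha * np.pi * s / 2) / np.sin(alpha * np.pi / 2)

    z = M(np.asarray(x, dtype=float))                  # mapped nodes
    t, g = np.polynomial.legendre.leggauss(n_gauss)
    y = M(t)
    w = np.empty(len(z))
    for i in range(len(z)):
        others = np.delete(z, i)
        w[i] = g @ np.prod((y[:, None] - others) / (z[i] - others), axis=1)
    return w


m = 12
k = np.arange(m + 1)

# Closed equispaced nodes x_k = -1 + 2k/m  ->  Chebyshev-Lobatto nodes -cos(k*pi/m).
x_closed = -1.0 + 2.0 * k / m
trap = np.full(m + 1, 2.0 / m)
trap[[0, -1]] = 1.0 / m                               # composite trapezoidal weights
err_trap = np.max(np.abs(kti_weights(x_closed, 1.0) - trap))

# Open equispaced nodes x_k = -1 + (2k+1)/(m+1)  ->  Chebyshev nodes.
x_open = -1.0 + (2.0 * k + 1.0) / (m + 1)
mid = np.full(m + 1, 2.0 / (m + 1))                   # composite midpoint weights
err_mid = np.max(np.abs(kti_weights(x_open, 1.0) - mid))

print(err_trap, err_mid)
```

Both deviations should be at rounding level. In the variable \(\theta \) with \(\sin (\pi x/2) = -\cos \theta \), the mapped Lagrange polynomials are cosine polynomials of degree m, which the trapezoidal and midpoint rules in \(\theta \) integrate exactly.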

Fig. 2 illustrates the KTI weights on 21 equidistant nodes for a varying parameter \(\alpha \). For \(\alpha = 0\) we obtain the closed Newton-Cotes quadrature weights, whereas for \(\alpha = 1\) we obtain the weights of the composite trapezoidal rule. Positive quadrature weights guarantee a well-conditioned numerical integration: since the KTI rule integrates constants exactly, the weights sum to 2, so positive weights give \(\sum _{i=0}^{m} |w_i^{\alpha }| = 2\), and perturbations of the function values of size at most \(\eta \) change the result by at most \(2\eta \). Therefore, from a computational point of view, a parameter \(\alpha \) close to 1 is preferable. Fig. 3 shows that, as the number of nodes increases and \(\alpha \) is not close to 1, some of the weights become negative, which leads to numerical instability.
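To make the conditioning argument concrete, the sketch below computes KTI weights on 21 equidistant nodes for a few values of \(\alpha \) and reports the smallest weight and \(\sum _i |w_i^{\alpha }|\). It again relies on the assumed form of \(M_{\alpha }\) and on Gauss-Legendre integration rather than on the paper's algorithms, and the chosen \(\alpha \) values are for illustration only; it does not reproduce Fig. 2.

```python
import numpy as np


def kti_weights(x, alpha, n_gauss=200):
    """KTI weights w_i = int_{-1}^{1} ell_i(M_alpha(t)) dt under the assumed map."""
    def M(s):
        if alpha == 0:
            return np.asarray(s, dtype=float)          # identity in the limit alpha -> 0+
        return np.sin(alpha * np.pi * s / 2) / np.sin(alpha * np.pi / 2)

    z = M(np.asarray(x, dtype=float))
    t, g = np.polynomial.legendre.leggauss(n_gauss)
    y = M(t)
    w = np.empty(len(z))
    for i in range(len(z)):
        others = np.delete(z, i)
        w[i] = g @ np.prod((y[:, None] - others) / (z[i] - others), axis=1)
    return w


x = np.linspace(-1.0, 1.0, 21)                       # 21 equidistant nodes, as in Fig. 2
results = {}
for alpha in (0.0, 0.5, 0.9, 1.0):
    w = kti_weights(x, alpha)
    # sum(w) = 2 for every alpha; sum(|w|) bounds the amplification of data errors
    results[alpha] = (w.min(), np.abs(w).sum())
    print(f"alpha = {alpha:.1f}: min w_i = {w.min(): .3e}, sum |w_i| = {np.abs(w).sum():.3e}")
```

For \(\alpha = 0\) the 21-point closed Newton-Cotes rule has negative weights, so \(\sum _i |w_i|\) exceeds 2, while for \(\alpha = 1\) all trapezoidal weights are positive.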

Comparing Figs. 3, 4 and 5, we see that the stability of the KTL quadrature with respect to the parameter \(\alpha \) also depends strongly on the relation between the dimension \(n+1\) of the polynomial approximation space and the number \(m+1\) of grid points. While the KTI quadrature rule with \(n = m\) requires \(\alpha \) close to 1 to obtain positive quadrature weights, this limitation can be relaxed or dropped for the KTL scheme. Fig. 4 shows that for a least-squares formula with ratio \(n/m = 0.5\), positive quadrature weights (and thus stability) are obtained already for \(\alpha \approx 0.9\), while smaller values still lead to negative weights. On the other hand, Fig. 5 shows that for \(m \approx n^2\) the parameter \(\alpha \) has only a minor impact on the KTL weights, and the quadrature rule is stable independently of the chosen \(\alpha \). In Sect. 5, we will further see that a fixed choice of \(\alpha \) is less effective than a dynamic strategy in which \(\alpha \) depends on the degree n.
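The KTL weights can be sketched as follows, assuming an unweighted discrete least-squares fit in the mapped Chebyshev basis \(T_j(M_{\alpha }(x))\), \(j = 0,\ldots ,n\). With \(A_{ij} = T_j(z_i)\) and moments \(\tau _j = \int _{-1}^{1} T_j(M_{\alpha }(x))\,\textrm{d}x\), integrating the least-squares approximant gives \(w = A(A^{\top }A)^{-1}\tau \), the minimum-norm solution of \(A^{\top }w = \tau \). The moments are approximated here by Gauss-Legendre quadrature instead of the cosine transform used in the paper, and the function name ktl_weights is hypothetical.

```python
import numpy as np
from numpy.polynomial import chebyshev as C
from numpy.polynomial import legendre as L


def kt_map(x, alpha):
    """Assumed KT map M_alpha(x) = sin(alpha*pi*x/2) / sin(alpha*pi/2); identity for alpha = 0."""
    x = np.asarray(x, dtype=float)
    if alpha == 0:
        return x.copy()
    return np.sin(alpha * np.pi * x / 2) / np.sin(alpha * np.pi / 2)


def ktl_weights(x, n, alpha, n_gauss=200):
    """Least-squares quadrature weights for span{T_j(M_alpha(x)) : j = 0..n} on the nodes x."""
    A = C.chebvander(kt_map(x, alpha), n)            # A[i, j] = T_j(z_i), shape (m+1, n+1)
    t, g = L.leggauss(n_gauss)
    tau = g @ C.chebvander(kt_map(t, alpha), n)      # tau_j ~ int_{-1}^{1} T_j(M_alpha(x)) dx
    # Minimum-norm solution of A^T w = tau, i.e. w = A (A^T A)^{-1} tau.
    w, *_ = np.linalg.lstsq(A.T, tau, rcond=None)
    return w


m = 40
x = np.linspace(-1.0, 1.0, m + 1)
x = 0.5 * (x - x[::-1])                              # exactly symmetric equidistant nodes
for n in (m // 2, m):
    for alpha in (0.5, 0.7, 0.9, 1.0):
        w = ktl_weights(x, n, alpha)
        print(f"n/m = {n / m:.1f}, alpha = {alpha:.1f}: {np.sum(w < 0)} negative weights")
```

Solving \(A^{\top }w = \tau \) with lstsq avoids forming \(A^{\top }A\), whose condition number is the square of that of A. For \(n = m\) the formula reduces to the interpolatory KTI rule.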

4.5 Parameter dependent convergence of KTL quadrature

Convergence properties of KTL quadrature formulas can be derived from the approximation behavior of the least-squares approximant \(F_{n,\mathcal {X}}^{\alpha }(f)\). This behavior depends on the interplay of the space dimension n, the KT parameter \(\alpha \) and the distribution of the quadrature nodes \(\mathcal {X}\). As the main control parameter for the node set \(\mathcal {X}\) in the quadrature scheme, we consider the maximal distance

\[h = \max _{i=0,\ldots ,m+1} (x_i - x_{i-1})\]

between two consecutive nodes in \(\mathcal {X}\), where \(x_{-1} = -1\) and \(x_{m+1} =1\) denote the boundaries of the interval I. For equidistant nodes \(x_i = -1 + 2i/m \), \(i = 0, \ldots , m\), the distance h corresponds to the spacing \(h = 2/m\). A standard argument (see [1, Theorem 3.2]) shows that the uniform approximation error \(\Vert f - F_{n,\mathcal {X}}^{\alpha }(f)\Vert _{\infty }\) can be bounded by

\[\Vert f - F_{n,\mathcal {X}}^{\alpha }(f)\Vert _{\infty } \le \bigl(1 + \mathcal {K}_{n,\mathcal {X}}^{\alpha }\bigr)\, E_n^{\alpha }(f),\]

where \(\mathcal {K}_{n,\mathcal {X}}^{\alpha } = \sup _{\Vert f\Vert _\infty = 1} \Vert F_{n,\mathcal {X}}^{\alpha }(f)\Vert _{\infty }\) is the operator norm of the approximation operator \(F_{n,\mathcal {X}}^{\alpha }\) (which we refer to as the Lebesgue constant) and \(E_n^{\alpha }(f) = \inf _{p \in \mathbb {P}_n^{\alpha }} \Vert f - p\Vert _{\infty }\) is the best approximation error in the space \(\mathbb {P}_n^{\alpha }\). For the KTL quadrature formula, this bound immediately implies the estimate

\[\bigl|\mathcal {I}(f,I) - \mathcal {I}_{n,\mathcal {X}}^{\alpha }(f,I)\bigr| \le 2\bigl(1 + \mathcal {K}_{n,\mathcal {X}}^{\alpha }\bigr)\, E_n^{\alpha }(f).\]

In particular, any estimates of the best approximation error \(E_n^{\alpha }(f)\) and of the Lebesgue constant \(\mathcal {K}_{n,\mathcal {X}}^{\alpha }\) can be used directly for the quadrature formulas studied in this work. We give a brief summary of the major statements derived in [1] and their implications for the convergence of the KTL scheme.

The case \(\varvec{\alpha }=\varvec{0}\). The space \(\mathbb {P}_n^0\) corresponds to the space \(\mathbb {P}_n\) of algebraic polynomials of degree at most n. The term \(E_n^{0}(f)\) is therefore the best approximation error in \(\mathbb {P}_n\), which converges geometrically to zero as \(n \rightarrow \infty \) if the function f is analytic in a Bernstein ellipse containing the interval I [22, Theorem 8.3].

A sufficient condition for the parameters n and h to guarantee the boundedness of the Lebesgue constant \(\mathcal {K}_{n,\mathcal {X}}^{0}\) is \(n=\mathcal {O}(1/\sqrt{h})\). If the quadrature nodes \(\mathcal {X}\) are equidistant with spacing \(h = \frac{2}{m}\), this implies that \(n = \mathcal {O}(\sqrt{m})\). This is a quite strong restriction on the choice of the polynomial approximation degree n. It implies, however, the root-exponential convergence rate

in which \(\rho >1\) denotes the index of the Bernstein ellipse.
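The Lebesgue constant \(\mathcal {K}_{n,\mathcal {X}}^{\alpha }\) equals \(\max _{x\in I}\sum _i |u_i(x)|\), where \(u_i\) are the cardinal functions of the least-squares operator, i.e., \(F_{n,\mathcal {X}}^{\alpha }(f)(x) = \sum _i f(x_i)\,u_i(x)\). The following sketch estimates this maximum on a fine grid (hence a lower estimate), with the same assumed map and Chebyshev basis as above. The constant 2 in \(n = \lfloor 2\sqrt{m}\rfloor \) is an arbitrary illustrative choice of the scaling \(n = \mathcal {O}(\sqrt{m})\), and lebesgue_constant is a hypothetical name.

```python
import numpy as np
from numpy.polynomial import chebyshev as C


def kt_map(x, alpha):
    """Assumed KT map M_alpha(x) = sin(alpha*pi*x/2) / sin(alpha*pi/2); identity for alpha = 0."""
    x = np.asarray(x, dtype=float)
    if alpha == 0:
        return x.copy()
    return np.sin(alpha * np.pi * x / 2) / np.sin(alpha * np.pi / 2)


def lebesgue_constant(x, n, alpha, n_eval=4001):
    """Grid estimate of K = max_s sum_i |u_i(s)| for the discrete least-squares operator."""
    A = C.chebvander(kt_map(x, alpha), n)            # A[i, j] = T_j(M_alpha(x_i))
    s = np.linspace(-1.0, 1.0, n_eval)
    B = C.chebvander(kt_map(s, alpha), n)            # basis evaluated on the fine grid
    U = B @ np.linalg.pinv(A)                         # U[k, i] = u_i(s_k), since F(f) = U @ f
    return np.abs(U).sum(axis=1).max()


for m in (25, 100, 400):
    x = np.linspace(-1.0, 1.0, m + 1)
    n_sqrt = int(2 * np.sqrt(m))                      # n = O(sqrt(m)) with constant 2
    print(m, n_sqrt, lebesgue_constant(x, n_sqrt, 0.0))

K_interp = lebesgue_constant(np.linspace(-1.0, 1.0, 21), 20, 0.0)   # interpolation, n = m = 20
print(K_interp)
```

The interpolatory case \(n = m\) on equidistant nodes produces a large constant, whereas the least-squares choice \(n = \mathcal {O}(\sqrt{m})\) keeps it moderate.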

The case \(\varvec{\alpha }=\varvec{1}\). If n and h are related by \(n \le c/h\) for a suitable constant \(c > 0\), the Lebesgue constant \(\mathcal {K}_{n,\mathcal {X}}^{1}\) is bounded. For a uniformly distributed set \(\mathcal {X}\), this means that the degree n can be chosen as a linear function \(n = c m\) of m. On the other hand, the best approximation error \(E_n^{1}(f)\) may decay slowly for \(\alpha =1\), namely as \(\mathcal {O}(1/n)\), even for smooth functions f that do not satisfy periodic boundary conditions. This is also visible in our numerical tests and is a drawback of the choice \(\alpha = 1\).

The case \(\varvec{0}<\varvec{\alpha }<\varvec{1}\). For a fixed parameter \(0< \alpha < 1\), the convergence behavior of the formulas is essentially the same as for \(\alpha = 0\): the Lebesgue constant is bounded if n and h satisfy a relation of the form \(n=\mathcal {O}(1/\sqrt{h})\) as \(h \longrightarrow 0\). Furthermore, the best approximation error \(E_{n}^{\alpha }(f)\) also decays geometrically if the function is analytic in a neighborhood of I, implying that \(\mathcal {K}_{n,\mathcal {X}}^{\alpha } E_{n}^{\alpha }(f) = \mathcal {O}(\rho ^{-\sqrt{m}})\) for equidistant nodes \(\mathcal {X}\) and a suitable \(\rho > 1\). In [19], it was shown that the root-exponential rate \(\mathcal {O}(\rho ^{-\sqrt{m}})\), \(\rho > 1\), is the best possible for a stable algorithm approximating an analytic function from equidistant samples.

The case \(\varvec{\alpha _n}=\varvec{4/\pi \arctan (\epsilon ^{1/n})}\). An asymptotic analysis given in [1] shows that the choice \(n=\mathcal {O}(1/h)\) for \(h \longrightarrow 0\) is sufficient for the boundedness of the Lebesgue constant (as for \(\alpha = 1\)). On the other hand, compared with \(\alpha = 1\), a smaller approximation error \(E_n^{\alpha _n}(f)\) can be expected until the small error tolerance \(\epsilon > 0\) is reached (this is also visible numerically). However, geometric convergence to 0, as for a fixed \(\alpha < 1\), can no longer be guaranteed.
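A short sketch of the dynamic parameter: \(\alpha _n\) increases towards 1 as n grows. With \(q = \epsilon ^{1/n}\) one has \(\sin (\alpha _n\pi /2) = \sin (2\arctan q) = 2q/(1+q^2)\), i.e., \(\sin (\alpha _n\pi /2) = \operatorname{sech}(|\log \epsilon |/n)\), which under the assumed form of \(M_{\alpha }\) is the factor appearing in its denominator; the code checks this identity numerically. The function name alpha_n is hypothetical.

```python
import numpy as np


def alpha_n(n, eps=1e-12):
    """Dynamic Kosloff Tal-Ezer parameter alpha_n = (4/pi) * arctan(eps**(1/n))."""
    return 4.0 / np.pi * np.arctan(eps ** (1.0 / np.asarray(n, dtype=float)))


ns = np.array([10, 20, 50, 100, 250, 500])
alphas = alpha_n(ns)
print(np.round(alphas, 4))

# sin(alpha_n*pi/2) = 2q/(1+q^2) = sech(|log eps|/n) with q = eps**(1/n)
identity_gap = np.max(np.abs(np.sin(alphas * np.pi / 2) - 1.0 / np.cosh(abs(np.log(1e-12)) / ns)))
print(identity_gap)
```

For small n, \(\alpha _n\) is close to 0 (almost the identity map), and it approaches 1 only slowly as n grows.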

5 Numerical experiments

In this section, we present numerical experiments that investigate the convergence and stability properties of the KTL and KTI quadrature formulas in more detail. The code for all of the following experiments is publicly available on the GitHub page of this work

https://github.com/GiacomoCappellazzo/KTL_quadrature .

We consider the three test functions

and their respective integrals \(\mathcal {I}(f_k,I)\), \(k \in \{1,2,3\}\), over the interval \(I = [-1,1]\).

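In the following experiments we plot the relative error of the KTL quadrature formula with respect to the exact value of the integral:

\[\frac{\bigl|\mathcal {I}(f,I) - \mathcal {I}_{n,\mathcal {X}}^{\alpha }(f,I)\bigr|}{|\mathcal {I}(f,I)|}.\]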

To compute the reference value of \(\mathcal {I}(f,I)\) to high precision, we used the Matlab command integral(f,-1,1). All three test functions are analytic in an open neighborhood of \([- 1,1]\): the function \(f_{1}\) has poles in the complex plane close to the interval \([-1,1]\), \(f_{2}\) is a highly oscillatory entire function, and \(f_{3}\) has a singularity close to \(x=-1\).
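The error measure can be sketched as follows. Since the test functions \(f_1\), \(f_2\), \(f_3\) are not reproduced here, the code uses Runge's function \(1/(1+25x^2)\) as a hypothetical stand-in, whose integral over \([-1,1]\) is \(\frac{2}{5}\arctan 5\). A high-order Gauss-Legendre rule plays the role of Matlab's integral(f,-1,1), and the composite trapezoidal rule (the KTI rule for \(\alpha = 1\)) serves as the approximation.

```python
import numpy as np


def relative_error(reference, approx):
    """Relative quadrature error |I(f) - Q(f)| / |I(f)|."""
    return abs(reference - approx) / abs(reference)


def f(x):
    # Hypothetical test function (Runge's function), analytic on [-1, 1] with poles at +-i/5.
    return 1.0 / (1.0 + 25.0 * x ** 2)


exact = 0.4 * np.arctan(5.0)                     # closed form of int_{-1}^{1} f(x) dx

# High-order Gauss-Legendre value as a stand-in for Matlab's integral(f, -1, 1).
t, g = np.polynomial.legendre.leggauss(400)
reference = g @ f(t)

errors = []
for m in (10, 20, 40, 80):
    x = np.linspace(-1.0, 1.0, m + 1)
    w = np.full(m + 1, 2.0 / m)
    w[[0, -1]] = 1.0 / m                          # composite trapezoidal weights
    errors.append(relative_error(reference, w @ f(x)))
    print(m, errors[-1])
```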

5.1 KTI formulas for a fixed parameter \(\alpha \)

The following graphs show the results obtained with Algorithm 2, which implements the interpolatory KTI quadrature formula on equidistant nodes \(\mathcal {X}\) mapped by the Kosloff Tal-Ezer map. In particular, we have \(m = n\) and use a fixed mapping parameter \(0 \le \alpha \le 1\). From the discussion in Sect. 4.4 we know that for \(\alpha =1\) the quadrature weights coincide with those of the composite trapezoidal rule. In Fig. 6, the blue, magenta and black curves display the relative quadrature errors of the KTI quadrature rule with \(m+1\) equidistant nodes and parameters \(\alpha =1\), \(\alpha =0.99\) and \(\alpha =0.98\), respectively.

From a theoretical point of view (Sect. 4.5 or [1, Theorem 3.3]), we expect an algebraic convergence rate of index 1 (\(\mathcal {O}(n^{-1})\)) for \(\alpha =1\), whereas for \(0 \le \alpha < 1\) the best approximation error decays geometrically (\(\mathcal {O}(\rho ^{-n})\)) but the Lebesgue constant is not necessarily bounded. The numerical tests show that lowering \(\alpha \) can improve the interpolatory quadrature, but the scheme becomes unstable if \(\alpha \) is not close to 1 and the degree n grows. This phenomenon is explained by the behavior of the KTI quadrature weights discussed in Sect. 4.4: the weights become highly oscillatory and include negative values as soon as \(\alpha \) is too far from 1 and the degree n is large.

5.2 KTL quadrature: \(\alpha \) increasing with the number of nodes

The previous experiment showed that ill-conditioning occurs in the interpolatory setting, in which the number of nodes equals the dimension of the polynomial space (\(n = m\)). To avoid these instabilities, we switch to the KTL quadrature formula and approximate the integral by \(\mathcal {I}_{n,\mathcal {X}}^{\alpha }(f,I)\), computed with Algorithm 1 and discussed in Sect. 3.

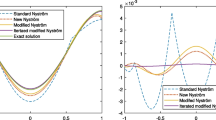

In Fig. 7, the x-axis displays the number m (giving \(m+1\) equidistant nodes) used to determine the KTL approximation of the integral, while the y-axis shows the corresponding relative quadrature error. The blue curve shows the relative error of the composite trapezoidal rule (\(\alpha = 1\)), and the magenta and black curves correspond to the errors obtained for the mapping parameters \(\alpha =0.9\) and \(\alpha =0.7\), respectively. To guarantee the boundedness of the Lebesgue constant, it is sufficient that \(n=\mathcal {O}(\sqrt{m})\), as shown in [1, Corollary 5.2] (see also Sect. 4.5). In this numerical test we chose \(n=4\sqrt{m}\).

As before, we expect for \(\alpha = 1\) an algebraic convergence rate of index 1 (\(\mathcal {O}(m^{-1})\)), while for \(0 \le \alpha < 1\) the convergence rate is root-exponential (\(\mathcal {O}(\rho ^{-\sqrt{m}})\)) in m. The numerical experiment shows that altering \(\alpha \) slightly improves the convergence rate of the KTL scheme, which remains stable even if \(\alpha \) is not close to 1. Fig. 7 shows no evident relationship between the displayed curves and a particular choice of \(\alpha \). This suggests that, instead of a fixed parameter, a more careful choice is preferable: KTL schemes with a linear relation between n and m, in which \(\alpha \) depends on the polynomial degree and/or the number of nodes. Following [1, Theorem 3.3] (see also Sect. 4.5), we choose

\[\alpha _n = \frac{4}{\pi }\arctan \bigl(\epsilon ^{1/n}\bigr).\]

In our experiments, we take \(\epsilon =10^{-12}\) and \(m=2n\). This choice of \(\alpha _n\) corresponds to the red curve in Fig. 7. The results improve significantly compared with the KTL quadrature with a fixed parameter \(\alpha \). We also observe that the method remains stable for different values of \(\alpha \) even as the number of nodes increases: the approximation of the integral of the function \(f_{2}\) does not reach machine precision with 500 nodes, but if we increase m further, the approximation improves and the KTL method shows no signs of instability.
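The dynamic strategy with \(m = 2n\) and \(\epsilon = 10^{-12}\) can be sketched by combining the previous ingredients. The assumptions are the same as before (assumed form of \(M_{\alpha }\), unweighted least squares, Gauss-Legendre moments), and Runge's function is again a hypothetical test function rather than one of \(f_1\), \(f_2\), \(f_3\); the sketch does not reproduce Fig. 7.

```python
import numpy as np
from numpy.polynomial import chebyshev as C
from numpy.polynomial import legendre as L


def kt_map(x, alpha):
    # Assumed KT map for 0 < alpha <= 1 (alpha_n below is never 0).
    return np.sin(alpha * np.pi * np.asarray(x, dtype=float) / 2) / np.sin(alpha * np.pi / 2)


def ktl_weights(x, n, alpha, n_gauss=300):
    # Unweighted least squares in the mapped Chebyshev basis; moments by Gauss-Legendre.
    A = C.chebvander(kt_map(x, alpha), n)
    t, g = L.leggauss(n_gauss)
    tau = g @ C.chebvander(kt_map(t, alpha), n)
    w, *_ = np.linalg.lstsq(A.T, tau, rcond=None)
    return w


def alpha_n(n, eps=1e-12):
    return 4.0 / np.pi * np.arctan(eps ** (1.0 / n))


def f(x):
    # Hypothetical test function (Runge's function), not one of f_1, f_2, f_3.
    return 1.0 / (1.0 + 25.0 * x ** 2)


exact = 0.4 * np.arctan(5.0)
ktl_err, weight_sums = [], []
for n in (10, 20, 40, 80):
    m = 2 * n                                     # linear relation m = 2n, as in the text
    x = np.linspace(-1.0, 1.0, m + 1)
    w = ktl_weights(x, n, alpha_n(n))
    trap = np.full(m + 1, 2.0 / m)
    trap[[0, -1]] = 1.0 / m                       # composite trapezoidal rule for comparison
    ktl_err.append(abs(w @ f(x) - exact) / exact)
    weight_sums.append(w.sum())
    trap_err = abs(trap @ f(x) - exact) / exact
    print(f"n = {n:3d}, m = {m:3d}, alpha_n = {alpha_n(n):.4f}: "
          f"KTL {ktl_err[-1]:.2e}, trapezoidal {trap_err:.2e}")
```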

5.3 KTL quadrature on non-equidistant nodes

So far, equidistant nodes have been used as quadrature nodes in the numerical tests. The KTL quadrature rule, however, also works for quasi-uniform node distributions without any change to the numerical scheme described in Algorithm 1. In the next numerical experiment, the quadrature nodes are defined as

\[x_i = -1 + \frac{2i}{m} + \delta _i, \qquad i = 0,\ldots ,m,\]

where \(\delta _{i}\) is a uniform random variable in \(]-1/m, 1/m[\) for \( i = 1,\ldots ,m-1\), \( \delta _{0}\) is a uniform random variable in \(]0, 1/m[\) and \(\delta _{m}\) is a uniform random variable in \(]-1/m,0[\). This defines a set of quasi-equispaced points in the interval \([-1,1]\). The mapped nodes are no longer Chebyshev or Chebyshev-Lobatto nodes, but they still cluster towards the endpoints of the interval when \(\alpha \) is close to 1. Such clustering is a common property of well-conditioned polynomial interpolation schemes [23, Chapter 5].
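The perturbed nodes can be generated as follows; the sketch also computes the mesh size h of Sect. 4.5, including the boundary gaps. Because \(|\delta _i| < 1/m\), consecutive nodes stay ordered and \(h < 4/m\). NumPy's uniform sampler draws from half-open intervals \([a, b)\), a negligible difference from the open intervals above; the seed and the function names are arbitrary choices for illustration.

```python
import numpy as np


def perturbed_nodes(m, rng):
    """Nodes x_i = -1 + 2i/m + delta_i with the perturbation ranges described above."""
    delta = rng.uniform(-1.0 / m, 1.0 / m, size=m + 1)   # interior nodes: (-1/m, 1/m)
    delta[0] = rng.uniform(0.0, 1.0 / m)                 # left endpoint moves inwards
    delta[m] = rng.uniform(-1.0 / m, 0.0)                # right endpoint moves inwards
    return -1.0 + 2.0 * np.arange(m + 1) / m + delta


def mesh_size(x):
    """Maximal gap h between consecutive nodes, including x_{-1} = -1 and x_{m+1} = 1."""
    return np.max(np.diff(np.concatenate(([-1.0], x, [1.0]))))


m = 50
rng = np.random.default_rng(seed=0)
x = perturbed_nodes(m, rng)
print(mesh_size(x), 4.0 / m)
```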

For the proposed numerical scheme, the distribution of the nodes plays only a minor role for the convergence as long as the nodes are quasi-uniform. We highlight this with a second numerical test using a low-discrepancy sequence of nodes, namely Halton points. Note that, outside the quasi-uniform setting, it is possible to construct unfavourable node distributions for which the quadrature rule does not converge.
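In one dimension, the Halton sequence in base 2 coincides with the van der Corput sequence. The work does not state which variant of the Halton points (starting index, scrambling, affine map to \([-1,1]\)) was used, so the following sketch shows one possible choice; scipy.stats.qmc.Halton offers an alternative implementation. The function name van_der_corput is hypothetical.

```python
import numpy as np


def van_der_corput(count, base=2):
    """First `count` points of the van der Corput sequence (1D Halton sequence) in [0, 1)."""
    points = np.empty(count)
    for k in range(count):
        value, denom, i = 0.0, 1.0, k
        while i > 0:
            denom *= base
            i, digit = divmod(i, base)
            value += digit / denom          # mirror the base-b digits of k about the radix point
        points[k] = value
    return points


m = 50
x = np.sort(2.0 * van_der_corput(m + 1) - 1.0)    # assumed affine map to [-1, 1]
gaps = np.diff(np.concatenate(([-1.0], x, [1.0])))
print(x[:4], gaps.max() / gaps[gaps > 0].min())   # local mesh ratio as a quasi-uniformity check
```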

In Figs. 8 and 9, the red curve shows the relative error for equidistant nodes, while the blue curve shows the error for the perturbed nodes (Fig. 8) and the Halton points (Fig. 9), respectively. The convergence rates for the perturbed and the equidistant nodes are approximately the same.

6 Conclusions

We briefly summarize the most important points of our discussion. In order to obtain well-conditioned and quickly converging quadrature formulas on quasi-uniform grids of an interval, we improved classical interpolatory quadrature formulas with two strategies: (i) we included an auxiliary mapping that maps the quadrature nodes onto a new, more suitable set of fake nodes, on which the interpolatory quadrature is applied using the function values from the original set of nodes; (ii) we reduced the degree of the polynomial spaces with respect to the number of quadrature nodes, leading to a least-squares quadrature formula instead of an interpolatory one. While strategy (i) alone already yields an improvement over a direct interpolatory formula, it does not guarantee fast convergence for the integration of smooth functions. Moreover, if a single map is fixed, instabilities can occur as the number of quadrature nodes grows. To obtain both fast convergence and stability, it turned out that the least-squares idea (ii) is necessary and that a careful choice of the mapping parameters is essential. We analyzed such quadrature strategies in particular for the Kosloff Tal-Ezer map and equidistant nodes. We derived several properties of the corresponding quadrature weights, including symmetries, limit relations and convergence properties depending on the central parameters of the scheme. We also showed how the KTL quadrature weights can be calculated efficiently by using a Chebyshev basis and a fast cosine transform. Our final numerical experiments confirm that the described parameter selections yield KTL quadrature formulas that converge quickly for smooth functions.

References

Adcock, B., Platte, R.B.: A mapped polynomial method for high-accuracy approximations on arbitrary grids. SIAM J. Numer. Anal. 54, 2256–2281 (2016)

Berrut, J.P., De Marchi, S., Elefante, G., Marchetti, F.: Treating the Gibbs phenomenon in barycentric rational interpolation via the S-Gibbs algorithm. Appl. Math. Lett. 103, 106196 (2020)

Bos, L., De Marchi, S., Hormann, K., Klein, G.: On the Lebesgue constant of barycentric rational interpolation at equidistant nodes. Numer. Math. 121, 461–471 (2012)

Boyd, J.P.: Chebyshev and Fourier Spectral Methods. Dover Publications, Second Edition (2013)

Brass, H., Petras, K.: Quadrature Theory: The Theory of Numerical Integration on a Compact Interval. Mathematical Surveys and Monographs Vol 178, American Mathematical Society (2011)

Chebyshev, P.L.: Sur les quadratures. J. Math. Pures Appl. 19(2), 19–34 (1874)

De Marchi, S., Marchetti, F., Perracchione, E., Poggiali, D.: Polynomial interpolation via mapped bases without resampling. J. Comput. Appl. Math. 364, 112347 (2020)

De Marchi, S., Marchetti, F., Perracchione, E., Poggiali, D.: Multivariate approximation at fake nodes. Appl. Math. Comput. 391, 125628 (2021)

De Marchi, S., Elefante, G., Perracchione, E., Poggiali, D.: Quadrature at fake nodes. Dolomites Res. Notes Approx. 14, 27–32 (2021)

Floater, M.S., Hormann, K.: Barycentric rational interpolation with no poles and high rates of approximation. Numer. Math. 107, 315–331 (2007)

Glaubitz, J.: Stable high order quadrature rules for scattered data and general weight functions. SIAM J. Numer. Anal. 58(4), 2144–2164 (2020)

Huybrechs, D.: Stable high-order quadrature rules with equidistant points. J. Comput. Appl. Math. 231(2), 933–947 (2009)

Imhof, J.P.: On the method for numerical integration of Clenshaw and Curtis. Numer. Math. 5, 138–141 (1963)

Migliorati, G., Nobile, F.: Stable high-order randomized cubature formulae in arbitrary dimension. J. Approx. Theory 275, 105706 (2022)

Hale, N., Trefethen, L.N.: New quadrature formulas from conformal maps. SIAM J. Numer. Anal. 46, 930–948 (2008)

Hormann, K., Klein, G., De Marchi, S.: Barycentric rational interpolation at quasi-equidistant nodes. Dolomites Res. Notes Approx. 5, 1–6 (2012)

Hormann, K., Schaefer, S.: Pyramid algorithms for barycentric rational interpolation. Comput. Aided Geom. Des. 42, 1–6 (2016)

Kosloff, D., Tal-Ezer, H.: A modified Chebyshev pseudospectral method with an \({\cal{O}}\)(N\(^{-1}\)) time step restriction. J. Comput. Phys. 104, 457–469 (1993)

Platte, R.B., Trefethen, L.N., Kuijlaars, A.B.J.: Impossibility of fast stable approximation of analytic functions from equispaced samples. SIAM Rev. 53(2), 308–318 (2011)

Mason, J.C., Handscomb, D.C.: Chebyshev Polynomials. Chapman and Hall/CRC (2002)

Runge, C.: Über empirische Funktionen und die Interpolation zwischen äquidistanten Ordinaten. Zeit. Math. Phys. 46, 224–243 (1901)

Trefethen, L.N.: Approximation Theory and Approximation Practice. SIAM (2013)

Trefethen, L.N.: Spectral Methods in MATLAB. SIAM (2000)

Turetskii, A.H.: The bounding of polynomials prescribed at equally distributed points. Proc. Pedag. Inst. Vitebsk 3, 117–127 (1940)

Wilson, M.W.: Discrete Least Squares and Quadrature Formulas. Math. Comput. 24(110), 271–282 (1970)

Wilson, M.W.: Necessary and sufficient conditions for equidistant quadrature formula. SIAM J. Numer. Anal. 4(1), 134–141 (1970)

Acknowledgements

We thank the anonymous referees for their valuable feedback, which improved the quality of the manuscript considerably. This research has been accomplished within the Rete ITaliana di Approssimazione (RITA) and the thematic group on Approximation Theory and Applications of the Italian Mathematical Union. We received the support of GNCS-IN\(\delta \)AM. FM is funded by the ASI - INAF grant “Artificial Intelligence for the analysis of solar FLARES data (AI-FLARES)”.

Funding

Open access funding provided by Università degli Studi di Padova within the CRUI-CARE Agreement.


Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Additional information

Communicated by Elisabeth Larsson.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Cappellazzo, G., Erb, W., Marchetti, F. et al. On Kosloff Tal-Ezer least-squares quadrature formulas. Bit Numer Math 63, 15 (2023). https://doi.org/10.1007/s10543-023-00948-0


DOI: https://doi.org/10.1007/s10543-023-00948-0

Keywords

- Numerical quadrature

- Mapped polynomial bases

- Fake nodes approach

- Kosloff Tal-Ezer map

- Least-squares quadrature