Abstract

Multi-rotor UAVs suffer from a restricted range and flight duration due to limited battery capacity. Autonomous landing on a 2D moving platform offers the possibility to replenish batteries and offload data, thus increasing the utility of the vehicle. Classical approaches rely on accurate, complex and difficult-to-derive models of the vehicle and the environment. Reinforcement learning (RL) provides an attractive alternative due to its ability to learn a suitable control policy exclusively from data during a training procedure. However, current methods require several hours to train, have limited success rates and depend on hyperparameters that need to be tuned by trial-and-error. We address all these issues in this work. First, we decompose the landing procedure into a sequence of simpler, but similar learning tasks. This is enabled by applying two instances of the same RL based controller trained for 1D motion for controlling the multi-rotor’s movement in both the longitudinal and the lateral directions. Second, we introduce a powerful state space discretization technique that is based on i) kinematic modeling of the moving platform to derive information about the state space topology and ii) structuring the training as a sequential curriculum using transfer learning. Third, we leverage the kinematics model of the moving platform to also derive interpretable hyperparameters for the training process that ensure sufficient maneuverability of the multi-rotor vehicle. The training is performed using the tabular RL method Double Q-Learning. Through extensive simulations we show that the presented method significantly increases the rate of successful landings, while requiring less training time compared to other deep RL approaches. Furthermore, for two comparison scenarios it achieves comparable performance than a cascaded PI controller. Finally, we deploy and demonstrate our algorithm on real hardware. For all evaluation scenarios we provide statistics on the agent’s performance. Source code is openly available at https://github.com/robot-perception-group/rl_multi_rotor_landing.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Classical control approaches suffer from the fact that accurate models of the plant and the environment are required for the controller design. These models are necessary to consider non-linearities of the plant behavior. However, they are subjected to limitations, e.g., with regard to disturbance rejection (Rodriguez-Ramos et al., 2019) and parametric uncertainties (Mo & Farid, 2019). To overcome these problems, reinforcement learning (RL) has been studied for robot control in the last decades, including in the context of UAV control (Mo & Farid, 2019). In model-free, (action-) value based RL methods, an approximation of a value function is learned exclusively through interaction with the environment. On this basis, a policy then selects an optimal action while being in a certain state (Sutton & Barto, 2015). Classical RL approaches such as Q-Learning (Sutton & Barto, 2015) store a tabular representation of that value function approximation. Deep Reinforcement Learning (DRL) methods (Mnih et al., 2013), on the other hand, leverage a deep neural network (DNN) to learn an approximate value function over a continuous state and action space, thus making it a powerful approach for many complex applications. Nevertheless, in both RL and DRL, the training itself is in general sensitive to the formulation of the learning task and in particular to the selection of hyperparameters. This can result in long training times, the necessity to perform a time-consuming hyperparameter search and an unstable convergence behavior. Furthermore, especially in DRL, the neural network acts like a black box, i.e. it is difficult to trace back which training experience caused certain aspects of the learned behaviour. The purpose of this work is to address the aforementioned issues for the task of landing a multi-rotor UAV on a moving platform. Our RL based method aims at (i) achieving a high rate of successful landing attempts, (ii) requiring short training time, and (iii) providing intrepretable ways to compute hyperparameters necessary for training.

To achieve these aims, we leverage the tabular off-policy algorithm Double Q-Learning (Hasselt, 2010), due to the few required hyperparameters which are the decay factor \(\gamma \) and the learning rate \(\alpha \). Using a tabular method does not require defining the DNN’s architecture or finding values for additional hyperparameters such as minibatch and buffer size or the number of gradient steps. Furthermore, unlike a NN-based deep learning algorithm, it does not require a powerful GPU for training, making it also suitable for computers with lower performance and less power-consuming. However, tabular methods only provide discrete state and action spaces and therefore suffer from the “curse of dimensionality” (Lampton & Valasek, 2009). Furthermore, the training performance and control performance is influenced by the sampling rate. When the sampling rate and discretization do not match, the performance of the agent can be low, e. g. due to jittering, where the agent rapidly alternates between discrete states. To solve these problems, our method’s novel aspects are outlined below. The first two of these allow us to address the “curse of dimensionality” issue by reducing the complexity of the learning task. The third addresses the problem to find a matching discretization and sampling rate.

-

1.

Under the assumption of a symmetric UAV and decoupled dynamics, common in literature (Wang et al., 2016), our method is able to control the vehicle’s motion in longitudinal and lateral direction with two instances of the same RL agent. Thus, the learning task is simplified to 1D movement only. The vertical and yaw movements are controlled by PID controllers. The concept of using independent controllers for different directions of motion is an approach which is often used, e.g. when PID controllers are applied (Wenzel et al., 2011; Araar et al., 2017)).

-

2.

We introduce a novel state-space discretization approach, motivated by the insight that precise knowledge of the relative position and relative velocity between the UAV and the moving platform is required only when touchdown is imminent. To this end, we leverage a multiresolution technique (Lampton & Valasek, 2009) and augment it with information about the state space topology, derived from a simple kinematics model of the moving platform. This allows us to restructure the learning task of the 1D movement as a sequence of even simpler problems by means of a sequential curriculum, in which learned action values are transferred to the subsequent curriculum step. Furthermore, the discrete state space allows us to accurately track how often a state has been visited during training.

-

3.

We leverage the discrete action space to ensure sufficient maneuverability of the UAV to follow the platform. To this end, we derive equations that compute the values of hyperparameters, such as the agent frequency and the maximum value of the roll/pitch angle of the UAV. The intention of these equations is twofold. First, they link the derived values to the maneuverability of the UAV in an interpretable way. Second, they ensure that the discretization of the state space matches the agent frequency. The aim is to reduce unwanted side effects resulting from the application of a discrete state space, such as jittering.

Section 2 presents related work, followed by Sect. 3 describing the proposed approach in detail. Section 4 presents the implementation and experimental setup. The results are discussed in Sect. 5, with comments on future work given in Sect. 6.

2 Related work

2.1 Classical control approaches

So far, the problem of landing a multi-rotor aerial vehicle has been tackled for different levels of complexity regarding platform movement. One-dimensional platform movement is considered in (Hu et al., 2015) in the context of maritime applications, where a platform is oscillating vertically. Two-dimensional platform movement is treated in (Wenzel et al., 2011; Ling et al., 2014; Gautam et al., 2015; Vlantis et al., 2015; Araar et al., 2017; Borowczyk et al., 2017; Falanga et al., 2017). Three-dimensional translational movement of the landing platform is covered for docking scenarios involving multi-rotor vehicles in Zhong et al. (2016) and Miyazaki et al. (2018). Various control techniques have been applied to enable a multi-rotor UAV to land in one of these scenarios. In Hu et al. (2015) an adaptive robust controller is used to control the vehicle during a descend maneuver onto a vertically oscillating platform while considering the ground effect during tracking of a reference trajectory. The authors of Gautam et al. (2015) apply guidance laws that are based on missile control principles. Vlantis et al. (2015) uses a model predictive controller to land on an inclined moving platform. Falanga et al. (2017) relies on a non-linear control approach involving LQR-controllers. However, the most used controller type is a PID controller. Wenzel et al. (2011) uses four independent PID controllers to follow the platform that has been identified with visual tracking methods. In Ling et al. (2014), the landing maneuver onto a maritime vessel is structured into different phases. Rendezvous with the vessel, followed by aquiring a visual fiducial marker and descending onto the ship. During all phases, PID controllers are used to control the UAV. Perception and relative pose estimation based methods are the focus of Araar et al. (2017), where again four PID controllers provide the UAV with the required autonomous motion capability. A PID controller is also applied in Borowczyk et al. (2017) to handle the final touchdown on a ground vehicle moving up to \(50{\mathrm{km/h}}\). Also the landing on a 3D moving platform can be solved with PID controllers (Zhong et al., 2016; Miyazaki et al., 2018). Although automatic methods for tuning the gains of a PID controller exist, tuning is often a manual, time-consuming procedure. However, learning-based control methods enable obtaining a suitable control policy exclusively from data through interaction with the environment, making it an attractive and superior approach for controlling an UAV to land on a moving platform. Furthermore, methods such as Q-Learning enable the approximation of an optimal action-value function and thus lead to a (near-) optimal action selection policy (Sutton & Barto, 2015).

2.2 Learning-based control approaches

The landing problem has been approached with (Deep) Reinforcement Learning for static platforms (Kooi & Babuška, 2021; Shi et al., 2019; Polvara et al., 2018, 2019) and for moving platforms (Rodriguez-Ramos et al., 2019; Lee et al., 2018). The authors of Kooi and Babuška (2021) accelerate training by means of a curriculum, where a policy is learned using Proximal Policy Optimization (PPO) to land on an inclined, static platform. Their curriculum is tailored, involving several hyperparameters, whereas in this work we present a structured approach for deriving a curriculum for different scenarios. In Polvara et al. (2018) the authors assign different control tasks to different agents. A DQN-agent aligns a quadcopter with a marker on the ground, whereas a second agent commands a descending maneuver before a closed-loop controller handles the touchdown. Polvara et al. (2019) follows a similar approach but allows small pitch and roll maneuvers of the platform. Our approach also leverages separate RL agents, but for controlling the longitudinal and lateral motion. The authors of Shi et al. (2019) present a deep-learning-based robust non-linear controller to increase the precision of landing and close ground trajectory following. A nominal dynamics model is combined with a DNN to learn the ground effect on aerodynamics and UAV dynamics. Lee et al. (2018) present an actor-critic approach for landing using continuous state and action spaces to control the roll and pitch angle of the drone, but no statistics on its performance. In Rodriguez-Ramos et al. (2019), a DRL-framework is introduced for training in simulation and evaluation in real world. The Deep Deterministic Policy Gradient (DDPG) algorithm, an actor-critic approach, is used to command the movement of a multi-rotor UAV in longitudinal and lateral direction with continuous states and actions. Detailed data about the agent’s success rate in landing in simulation is provided. We use this work as a baseline method and show how we outperform it, providing also statistics about our agent’s performance in the real world. However, the baseline method does not provide any systematic and explainable way for deriving hyperparameters used for the learning problem. For our method, we present equations that link values of hyperparameters to problem properties such as the state space discretization and maneuverability of the UAV in an intuitively understandable way.

3 Methodology

3.1 Preliminaries

3.1.1 Problem statement

The goal is to develop a RL based approach that provides a high rate of successful UAV landings on a horizontally moving platform. A landing trial is considered successful if the UAV touches down on the surface of the moving platform. The approach should require less training time than the baseline method and generalize well enough to be deployed on real hardware. Furthermore, required hyperparameters should be determined in an interpretable way.

3.1.2 Base notations

We first define a fly zone \({\mathcal {F}}=[-x_{max},x_{max}]\times [-y_{max},y_{max}] \times [0,z_{max}]\subset {\mathbb {R}}^3[{\textrm{m}}]\) in which the motion of the multi-rotor UAV mr and the moving platform mp are considered, as is illustrated by Fig. 2. Furthermore, for the goal of landing on the moving platform, the RL agent controlling the multi-rotor vehicle has to consider only the relative translational and rotational motion. This is why we express the relative dynamics and kinematics in the stability frame s, to be able to formulate the RL problem from the UAV’s and thus the agent’s point of view. For this purpose we first denote the Euler angles roll, pitch and yaw in the stability frame with \(_s\varvec{\varphi }= (\phi ,\theta ,\psi )^T\). Each landing trial will begin with the UAV being in hover state, leading to the following initial conditions for rotational movement \( {}_s\dot{\varvec{\varphi }}_{mr,0} = {}_s\dot{\varvec{\varphi }}_{mp,0} = {\textbf{0}} {\mathrm{rad/s}} \), \( {}_s\varvec{\varphi }_{mr,0} = {}_s\varvec{\varphi }_{mp,0} = {\textbf{0}} {\textrm{rad}}\). The initial conditions for the multi-rotor vehicle’s translational motion are expressed in the earth-fixed frame e, it is \(_e\dot{{\textbf{r}}}_{mr,0} ={\textbf{0}}\;{\mathrm{m/s}}\) and \(_e{\textbf{r}}_{mr,0} \in {\mathcal {F}}\). Transforming the translational initial conditions of both, multi-rotor vehicle and moving platform into the stability frame allows to fully express the relative motion as the second order differential equations

For the remainder of this work we specify the following values that will serve as continuous observations of the environment (see Sect. 3.3.4 and Fig. 7)

\({\textbf{p}}_c\) denotes the relative position, \({\textbf{v}}_c\) the relative velocity, \({\textbf{a}}_c\) the relative acceleration and \(\varvec{\varphi }_c\) the relative orientation between vehicle and platform, expressed in the stability frame.

3.2 Motion of the landing platform

We introduce the rectilinear periodic movement (RPM) of the platform that is applied during training. With the initial conditions \(_e\dot{{\textbf{r}}}_{mp,0} = {\textbf{0}}{\mathrm{m/s}}\) and \(_e{\textbf{r}}_{mp,0} = {\textbf{0}}{\textrm{m}}\), the translational motion of the platform is expressed as a second order differential equation in the earth-fixed frame e.

where \(r_{mp}\) denotes the maximum amplitude of the trajectory, \(v_{mp}\) the maximum platform velocity and \(\omega _{mp}\) the angular frequency of the rectilinear movement. The platform is not subjected to any rotational movement. As a consequence, the maximum acceleration of the platform that is required as part of the hyperparameter determination in Sec. 3.5 is

3.3 Basis learning task

3.3.1 Dynamic decoupling

The nonlinear dynamics of a multi-rotor vehicle such as a quadcopter can be greatly simplified when the following assumptions are applied. i) Linearization around hover flight, ii) small angles iii) a rigid, symmetric vehicle body, iv) no aerodynamic effects (Wang et al., 2016). Under these conditions, the axes of motion are decoupled. Thus, four individual controllers can be used to independently control the movement in longitudinal, lateral, vertical direction and around the vertical axis. For this purpose, low-level controllers track a setpoint \(\theta _{ref}\) for the pitch angle (longitudinal motion), \(\phi _{ref}\) for the roll angle (lateral motion), \(T_{ref}\) for the total thrust (vertical motion) and \(\psi _{rel}\) for the yaw angle (rotation around vertical axis). We leverage this fact in our controller structure as is further illustrated in Sect. 4. Furthermore, this enables us to introduce a learning task for longitudinal 1D motion only. Its purpose is to learn a policy for the pitch angle setpoint \(\theta _{ref}\) that induces a 1D movement in longitudinal direction, allowing the vehicle to center over the platform moving in longitudinal direction as well. After the training, we then apply a second instance of the trained agent for controlling the lateral motion, where it produces set point values for the roll angle \(\phi _{ref}\). This way, full 2D motion capability is achieved. We use the basis learning task to compose a curriculum later in Sect. 3.4. PID controllers ensure that \(\phi _{rel} =0{\textrm{rad}},\psi _{rel} = 0{\textrm{rad}}\) and \( v_{z_{rel}} = \text {const} < 0{\mathrm{m/s}}\) during training.

3.3.2 Markov decision process

For the RL task formulation we consider the finite, discrete Markov Decision Process (Sutton & Barto, 2015). The goal of the agent is to learn the optimal action value function \(Q^*\) to obtain the optimal policy \(\pi ^* = {{\,\mathrm{\arg \!\max }\,}}_a Q^*(s,a)\), maximizing the sum of discounted rewards \(\Sigma ^{T-t-1}_{k = 0}\gamma ^{k}r_{t+k+1}\).

3.3.3 Discrete action space

We choose a discrete action space comprising three actions, namely increasing pitch, decreasing pitch and do nothing, denoted by

The pitch angle increment is defined by

where \(\theta _{max}\) denotes the maximum pitch angle and \(n_{\theta }\) the number of intervals intersecting the range \([0,\theta _{max}]\). The set of possible pitch angles that can be taken by the multi-rotor vehicle is then

where \(i_{\theta }\) is used to select a specific element.

3.3.4 Discrete state space

For the discrete state space we first scale and clip the continuous observations of the environment that are associated with motion in the longitudinal direction to a value range of \([-1,1]\). For this purpose, we use the function \(\text {clip}(x,x_{min},x_{max})\) that clips the value of x to the range of \([x_{min},x_{max}]\).

where \(p_{max},v_{max},a_{max}\) are the values used for the normalization of the observations. The reason for the clipping is that our state space discretization technique, a crucial part of the sequential curriculum, is derived assuming a worst case scenario for the platform movement in which the multi-rotor vehicle is hovering (see Sect. 3.4) and where scaling an observation with its maximum values, \(p_{max}, v_{max}\) and \(a_{max}\), would constitute a normalization. However, once the multi-rotor vehicle starts moving too, the scaled observation values could exceed the value range of \([-1,1]\). Clipping allows the application of the discretization technique also for a moving multi-rotor vehicle. Next, we define a general discretization function \(d(x,x_1,x_2)\) that can be used to map a continuous observation of the environment to a discrete state value.

We apply (14) to the normalized observations (11)-(13) to determine the discrete state \(s =(p_d, v_d,a_d,i_{\theta }) \in {\mathbb {S}}\), where \(i_{\theta } \in \left\{ 0,\ldots ,2n_{\theta }\right\} \), \({\mathbb {S}}= {\mathbb {N}}_0^{ 3\times 3\times 3\times 2n_{\theta }+1}\) and

In (15)-(17), the normalized values \(\pm p_{goal}\pm ,v_{goal}, \pm a_{goal}\) define the boundaries of the discrete states the agent should learn to reach whereas the normalized values of \(\pm p_{lim}\pm ,v_{lim}, \pm a_{lim}\) denote the limits in the observations the agent should learn not to exceed when controlling the multi-rotor vehicle.

3.3.5 Goal state

We define the goal state \(s^* \in {\mathbb {S}}\)

This means the goal state is reached if \(-p_{goal} \le p_x \le p_{goal}\) and \(-v_{goal}\le v_x \le v_{goal}\) regardless of the values of \(a_d\) and \(i_{\theta }\).

3.3.6 Reward function

Our reward function \(r_t\), inspired by a shaping approach (Rodriguez-Ramos et al., 2018) is given as

where \(r_{term} = r_{suc}\) if \(s=s^*\) and \(r_{term} = r_{fail}\) if \(|p_x|>p_{lim}\) or if the maximum episode duration \(t_{max}\) is reached. In all other cases, \(r_{term} = 0\). \(r_{suc}\) and \(r_{fail}\) will be defined at the end of this section. Positive rewards \(r_p\), \(r_v\) and \(r_{\theta }\) are given for a reduction of the relative position, relative velocity and the pitch angle, respectively. The negative reward \(r_{dur}\) gives the agent an incentive to start moving. Considering the negative weights \(w_{p},w_{v},w_{\theta }, w_{dur}\) and the agent frequency \(f_{ag}\), derived in Sec. 3.5, with which actions are drawn from \({\mathbb {A}}\), we define one time step as \(\Delta t=1/f_{ag}\) and \(r_p,r_v,r_{\theta },r_{dur}\) as

The weights are negative so that decreasing the relative position, relative velocity or the pitch angle yields positive reward values. Clipping \(r_p\) and \(r_v\) to \(\pm r_{p,max}\) and \(\pm r_{v,max}\), as well as scaling \(r_{\theta }\) and \(r_{dur}\) with \(v_{lim}\) is necessary due to their influence on the value of \(r_{max}\). \(r_{max}\) denotes the maximum achievable reward in a non-terminal timestep if \(v_x \le v_{lim}\) and \(a_x \le a_{lim}\) and thus complies with the limits set by the motion scenario described in Sect. 3.4 during the derivation of the sequential curriculum. To ensure the applicability of the curriculum also in situations in which that compliance is not given is why the clipping is applied in (20) and (21). \(r_{max}\) plays a role in the scaling of Q-values that is performed as part of the knowledge transfer during different curriculum steps. It is defined as follows

With \(r_{max}\) derived and the weights \(w_{suc}\) and \(w_{fail}\), we can finally define the success and failure rewards as

All weights of the reward function were determined experimentally.

3.3.7 Double Q-learning

We use the Double Q-Learning algorithm (Hasselt, 2010) to address the problem of overestimating action values and increasing training stabilty. Inspired by Even-Dar and Mansour (2004), we apply a state-action pair specific learning rate that decreases the more often the state-action pair has been visited.

where \( n_c(s_t,a_t)\) denotes the number of visits the state action-pair \(\left( s_t,a_t \right) \) has been experiencing until the timestep t and \(\omega \) is a decay factor. To keep a certain minimal learning ability, the learning rate is constant once the value of \(\alpha _{min}\) has been reached. Sufficient exploration of the state space can be ensured by applying an \(\varepsilon \)-greedy policy for the action selection during training. The exploration rate \(\varepsilon \) is varied according to a schedule for the episode number.

3.4 Curriculum and discretization

Our curriculum and discretization approach builds on Lampton and Valasek (2009), where a multiresolution method for state space discretization is presented. There, starting with a coarse discretization, a region around a pre-known learning goal is defined. Once the agent has learned to reach that region, it is further refined by discretizing it with additional states. Then, training starts anew but only in the refined region, repeatedly until a pre-defined resolution around the learning goal has been reached. Consequently, a complex task is reduced to a series of smaller, quickly-learnable sub tasks. In our novel approach, we introduce a similar multiresolution technique combined with transfer learning as part of a sequential curriculum (Narvekar et al., 2020) to accelerate and stabilize training even further. Each new round of training in the multiresolution setting constitutes a different curriculum step. A curriculum step is an instance of the basis learning task introduced in Sect. 3.3. This means in particular that each curriculum step has its own Q-table. Since the size of the state and action space is the same throughout all steps, knowledge can be easily transferred to the subsequent curriculum step by copying the Q-table that serves then as a starting point for its training. Figure 3 illustrates the procedure of the sequential curriculum.

However, throughout the curriculum the goal regions become smaller, leading to less spacious maneuvers of the multi-rotor vehicle. Thus, the achievable reward, which depends on relative change in position and velocity, is reduced. In order to achieve a consistent adaptability of the Q-values over all curriculum steps, the initial Q-values need to be scaled to match the maximum achievable reward per timestep. Therefore, we define

where \(i\ge 1\) is the current curriculum step. Furthermore, adding more curriculum steps with smaller goal regions possibly leads to previously unconsidered effects, such as overshooting the goal state. This is why in our work, training is not only restricted to the latest curriculum step but performed also on the states of the previous curriculum steps when they are visited by the agent.

Furthermore, the discretization introduced in Sect. 3.3.4, which is now associated with a curriculum step, covers only a small part of the continuous observation space with few discrete states. In order to completely capture the predominant environment characteristics of the subspace in which the agent is expected to learn the task, it is necessary for it to have the knowledge of the state space topology (Anderson & Crawford-Hines, 1994). Against the background of the multiresolution approach, this can be rephrased as the question of how to define suitable goal regions, i.e., the size of the goal states. To this end, we again leverage the insight that accurate knowledge about the relative position and velocity is only required when landing is imminent. We begin by choosing an exponential contraction of the size of the unnormalized goal state \(p_{c,x,goal,i}\) associated with the \(i^{th}\) curriculum step such that

where \(0<\sigma <1\) is a contraction factor, \(l_{mp} \in {\mathbb {R}}[{\textrm{m}}]\) is the edge length of the squared moving platform, \(x_{max}\) is the boundary of the fly zone \({\mathcal {F}}\) (see Sect. 3.1.2) and \(n_{cs}\) is the number of curriculum steps following the initial step. The contraction factor \(\sigma \) plays a role in how easy it is for the agent to reach the goal state. If it is set too low, the agent will receive success rewards only rarely, thus leading to an increased training time. (34) can be solved for \(n_{cs}\) because \(\sigma \), \(l_{mp}\) and \(x_{max}\) are known parameters. To determine the relationship between the contraction of the positional goal state and the contraction of the velocity goal state, we need a kinematics model that relates these variables. For this purpose, we envision the following worst case scenario for the relative motion (1). Assume that the vehicle hovers right above the platform which is located on the ground in the center of the fly zone \({\mathcal {F}}\), with \(v_x = 0{\mathrm{m/s}}\) and \(\psi _{rel} = 0\textrm{rad}\). Now, the platform starts to constantly accelerate with the maximum possible acceleration \(a_{mp,max}\) defined by (7) until it has reached \(x_{max}\) after a time \(t_{0} = \sqrt{2 x_{max}/a_{mp,max}}\). This is considered to be the worst case since the platform never slows down, unlike the rectilinear periodic movement (6) used for training. The evolution of the continuous observations over time is then expressed by

which constitute a simple kinematics model that relates the relative position and relative velocity via the time t. Next, we consider \(p_{max}=x_{max}\), \(v_{max}= a_{mp,max}t_{0}\) and \(a_{max} = a_{mp,max}\) for the normalization (11) - (13) and discretize (35) - (37) via the time \(t_i = \sigma ^i t_0\). This allows us to determine the values of \(p_{lim,i}\), \(v_{lim,i}\), \(a_{lim,i}\) and \(p_{goal,i}\), \(v_{goal,i}\), \(a_{goal,i}\). They are required for the basis learning task presented in Sect. 3.3 that now constitutes the \(i^{th}\) curriculum step. For this purpose, we define with \(i \in \left\{ 0,1,\ldots ,n_{cs}\right\} \)

where \(\beta _p=\beta _v =\beta _{a} = 1/3\) if the ith curriculum step is the curriculum step that was most recently added to the curriculum sequence, and \(\beta _p=\sigma ^2,\beta _v = \sigma ,\beta _{a}=1\) otherwise. Thus, for the latest curriculum step the goal values result from scaling the associated limit value with a factor of 1/3, a value that has been found empirically, and for all previous steps from the discretized time applied to (35) - (37). The entire discretization procedure is illustrated by Fig. 4. Introducing a different scaling value only for the latest curriculum step has been empirically found to improve the agent’s ability to follow the moving platform. The discretization of the acceleration is the same for all curriculum steps and is defined by a contraction factor \(\sigma _a\) which has been empirically set. Finding a suitable value for \(\sigma _a\) has been driven by the notion that if this value is chosen too high, the agent will have difficulties reacting to changes in the relative acceleration. This is due to the fact that the goal state would cover an exuberant range in the discretization of the relative acceleration. However, if it is chosen too low the opposite is the case. The agent would only rarely be able to visit the discrete goal state \(a_{goal,i}\), thus unnecessarily foregoing one of only three discrete states.

Illustration of the mapping of the normalized observations \(p_x\) and \(v_x\) to the discrete states (red - 0, yellow - 1, blue - 2) for a curriculum with three steps \((i=0,i=1,i=2)\). The yellow chequered regions illustrate the size of the goal state when the curriculum step is the last of the sequence

During the training of the different curriculum steps the following episodic terminal criteria have been applied. On the one hand, the episode terminates with success and the success reward \(r_{suc}\) is received if the goal state \(s^*\) of the latest curriculum step is reached if the agent has been in that curriculum step’s discrete states for at least one second without interruption. This is different to all previous curriculum steps where the success reward \(r_{suc}\) is received immediately after reaching the goal state of the respective curriculum step as is explained in Sect. 3.3.6. On the other hand, the episode is terminated with a failure if the multi-rotor vehicle leaves the fly-zone \({\mathcal {F}}\) or if the success terminal criterion has not been met after the maximum episode duration \(t_{max}\). The reward received by the agent in the terminal time step for successful or failing termination of the episode is defined by (29). Once the trained agent is deployed, the agent selects the actions based on the Q-table of the latest curriculum step to which the continuous observations can be mapped (see Fig. 3).

3.5 Hyperparameter determination

We leverage the discrete action space (8) to determine the hyperparameters agent frequency \(f_{ag}\) and maximum pitch angle \(\theta _{max}\) in an interpretable way. The purpose is to ensure sufficient maneuverability of the UAV to enable it to follow the platform. For sufficient maneuverability, the UAV needs to possess two core abilities. i) Produce a maximum acceleration bigger than the one the platform is capable of and ii) change direction of acceleration quicker than the moving platform. Against the background of the assumptions explained in Sect. 3.3.1 complemented with thrust compensation, we consider the first aspect by the maximum pitch angle \(\theta _{max}\)

\(k_{a}\) denotes the multiple of the platform’s maximum acceleration of which the UAV needs to be capable. Figure 5 illustrates how different values for \(k_a\) affect the maximum pitch angle \(\theta _{max}\) for different values of the maximum platform acceleration \(a_{mp,max}\). For the second aspect, we leverage the platform’s known frequency of the rectilinear periodic movement. According to (6), the moving platform requires the duration of one period to entirely traverse the available range of acceleration. Leveraging equation (9), we can calculate the time required by the copter to do the same as \(4n_{\theta }\Delta t\), where \(\Delta t = 1/f_{ag}\). Next, we introduce a factor \(k_{man}\) which specifies how many times faster the UAV should be able to roam through the entire range of acceleration than the moving platform. Against this background, we obtain the agent frequency as

Figure 6 illustrates how the agent frequency \(f_{ag}\) depends on the values of \(k_{man}\) and the angular frequency of the platform \(\omega _{mp}\) executing the rectilinear periodic movement.

Both hyperparameters \(\theta _{max}\) and \(f_{ag}\) are eventually based on the maximum acceleration of the moving platform \(a_{mp,max}\) as is the discretization of the state space. Therefore, we argue that these hyperparameters pose a matching set of values that is suitable to prevent excessive jittering in the agent’s actions.

4 Implementation

4.1 General

We set up the experiments to showcase the following.

-

Empirically show that our method is able to outperform the RL baseline (Rodriguez-Ramos et al., 2019) with regard to the rate of successful landings while requiring a shorter training time.

-

Empirically show that our method is able to perform successful landings for more complex platform trajectories such as an 8-shape.

-

Demonstrate our method on real hardware.

4.2 Simulation environment

The environment is built within the physics simulator Gazebo 11, in which the moving platform and the UAV are realized. We use the RotorS simulator (Furrer et al., 2016) to simulate the latter. Furthermore, all required tasks such as computing the observations of the environment, the state space discretization as well as the Double Q-learning algorithm are implemented as nodes in the ROS Noetic framework using Python 3.

The setup and data flow are illustrated by Fig. 7. The associated parameters are given in Table 1. We obtain \({\textbf{a}}_c\) by taking the first order derivative of \({\textbf{v}}_c\) and applying a first order Butterworth-Filter to it. The cut-off frequency is set to \(0.3 {\textrm{hz}}\).

4.3 Initialization

In Kooi and Babuška (2021), it is indicated that training can be accelerated when the agent is initialized close to the goal state at the beginning of each episode. For this reason, we use the following normal distribution to determine the UAV’s initial position within the fly zone \({\mathcal {F}}\) during the first curriculum step.

We set \(\sigma _{{\mathcal {F}}} = p_{max}/3\), which will ensure that the UAV is initialized close to the center of the flyzone and thus in proximity to the moving platform more frequently. All subsequent curriculum steps as well as the testing of a fully trained agent are then conducted using a uniform distribution over the entire fly zone.

4.4 Training hardware

Each training is run on a desktop computer with the following specifications. Ubuntu 20, AMD Ryzen threadripper 3960x 24-core processor, 128 GB RAM, 6TB SSD. This allows us to run up to four individual trainings in parallel. Note, that being a tabular method, the Double Q-learning algorithm does not depend on a powerful GPU for training since it does not use a neural network.

4.5 Training

We design two training cases, simulation and hardware. Case simulation is similar to the training conditions in the baseline method so that we can compare our approach in simulation. Case hardware is created to match the spatial limitations of our real flying environment, so that we can evaluate our approach on real hardware. For both cases, we apply the rectilinear periodic movement (RPM) of the platform specified by (6) during training. We consider different scenarios for training regarding the maximum velocity of the platform, which are denoted by “RPM \(v_{mp,train}\)”. For each velocity \(v_{mp,train}\), we train four agents using the same parameters. The purpose is to provide evidence of reproducibility of the training results instead of only presenting a manually selected, best result. We choose the same UAV (Hummingbird) of the RotorS package that is also used in the baseline method. Other notable differences between the baseline and the training cases simulation and hardware are summarized in Table 2.

Note, that some of our training cases deal with a higher maximum acceleration of the moving platform than the training case used for the baseline method and are therefore considered as more challenging. For all trainings, we use an initial altitude for the UAV of \(z_{init} = 4{\textrm{m}}\) and a vertical velocity of \(v_z = -0.1{\mathrm{m/s}}\) so that the UAV is descending during an entire episode. The values used for these variables in the baseline method can not be inferred from the paper. For the first curriculum step, the exploration rate schedule is empirically set to \(\varepsilon = 1\) (episode \(0 - 800\)) before it is linearly reduced to \(\varepsilon =0.01\) (episode \(800-2000\)). For all later curriculum steps, it is \(\varepsilon =0\). For choosing these values, we could exploit the discrete state-action space where for each state-action pair the number of visits of the agent can be tracked. The exploration rate schedule presented above is chosen so that most state action pairs have at least received one visit. Other training parameters are presented in Table 3. The training is ended as soon as the agent manages to reach the goal state (18) associated with the latest step in the sequential curriculum in \(96\%\) of the last 100 episodes. This value has been empirically set. For all trainings, we use noiseless data to compute the observations fed into the agent. However, we test selected agents for their robustness against noisy observations as part of the evaluation.

4.6 Initiation of motion

Illustration of the problem of motion initiation. After initialization, commanding a yaw angle of \(\psi _{rel} = \pi /4\) allows the lateral agent to enter a state associated with another action than “do nothing” due to the state change induced by the longitudinal platform movement. The platform movement is now reflected in \(p_y,v_y,a_y\) that are the observations fed into the lateral agent

During training, the yaw controller ensures \(\psi _{rel} = 0\). However, during evaluation in simulation this sometimes led to the situation that the agent commanding the lateral motion of the UAV occasionally was not able to leave its initial state (see Fig. 8). We hypothesize that the reason for this behavior is that the agent is trained on a platform that moves while considering relative motion in its observations. As a consequence, the agent learns a policy that, while being in certain states, exclusively relies on the platform movement to achieve a desired state change. However, for evaluation using \(\psi _{rel} = 0\), the agent controlling the lateral motion observes no relative movement if the UAV is hovering and the platform following a rectilinear trajectory in longitudinal direction. The issue can be addressed by setting initial \(\psi _{rel} \ne 0\). In this case, the platform’s movement shows a component in the values \({p}_{c,y},{v}_{c,y}\) and \({a}_{c,y}\) that are used as observations for the lateral motion’s agent. A change in the states is therefore much more likely, allowing the agent to enter states in which the policy selects an action other than “do nothing”. For this reason, we apply \(\psi _{rel} = \pi /4\) for all experiments.

4.7 Cascaded PI controller baseline

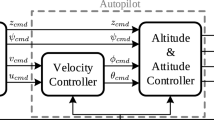

In order to be able to compare the performance of our approach with a non-learning based control method, we implemented a cascaded PI controller with two cascades as is illustrated by Fig. 9.

We apply separate instances for controlling the longitudinal, lateral and vertical movement of the UAV. The properties of the different instances are summarized in Table 4. The outer cascade tracks the setpoint \(0{\textrm{m}}\) for the relative position \(_sr_{rel}\) between the multi-rotor vehicle and the moving platform. For this purpose, it generates a setpoint for the relative velocity \(_s{\dot{r}}_{rel}\) that is tracked by the inner cascade. The inner cascade aims to reach the velocity setpoint by computing setpoints for the attitude angle \(\phi _{ref},\theta _{ref}\) or the thrust \(T_{ref}\), respectively. The attitude angle or thrust is then tracked by the multi-rotor vehicle’s low level controllers. In order to achieve comparability between our RL approach and the cascaded PI controller, we limit the velocity setpoints generated by the outer cascade to the value of \(v_{max} = a_{mp,max}t_0\) (see Sect. 3.4) and the attitude angles generated by the inner cascade to the same angles determined using (44) for the training scenario associated with the agent chosen for comparison.

5 Results

5.1 Evaluation in simulation without noise

In this scenario, all agents trained for case simulation and hardware are evaluated as well in simulation using noiseless observations of the environment, just as in training. Besides a static platform, we use two types of platform trajectories. The first is the rectilinear periodic movement (RPM) specified by (6) and the second is an eight-shaped trajectory defined by

For all landing attempts, we specify an initial altitude of \(z_{init} = 2.5{\textrm{m}}\). A landing attempt is ended once the UAV touches the surface of the moving platform or reaches an altitude that is lower than the platform surface, i. e. misses the platform. If the center of the UAV is located above the moving platform at the moment of touchdown, the landing trial is considered successful. The value of \(z_{init}\) leads to a duration of a landing attempt which corresponds roughly to the time \(t_{max}\) used as maximum episode length during training, see reward function (19). The information regarding the training duration of the agents is summarized in Table 5 for case simulation and case hardware.

The training durations of the different curriculum steps suggest that the majority of the required knowledge is learned during the first curriculum step. The later curriculum steps required significantly fewer episodes to reach the end condition. This is because the exploration rate is \(\varepsilon =0\). Thus, the agent is only exploiting previously acquired knowledge, which is also supported by the accumulated sum of rewards (Fig. 11). This also implies that the decomposition of the landing procedure into several, similar sub tasks is a suitable approach to solve the problem. The achieved success rates in simulation with noiseless observations of the environment are presented in Fig. 10 for training case simulation and in Table 6 for training case hardware. They were determined over a larger set of RPMs than in the baseline method and indicate a good performance of the approach. The success rates become higher when the platform velocity is lower during evaluation compared to the one applied during training. This is to be expected since the equations presented in Sec. 3.5 for hyperparameter determination ensure a sufficient maneuverability up to the velocity of the rectilinear periodic movement used in training. For the training case RPM 0.8, the fourth agent has a comparably poor performance. For the static platform the agent occasionally suffers from the problem of motion initiation as described in Sect. 4.6, despite setting \(\psi _{rel} = \pi /4 {\textrm{rad}}\). For the evaluation case where the platform moves with RPM 0.4, the reason is an oscillating movement, which causes the agent to occasionally overshoot the platform. A similar problem arises for agent four of training case hardware where the agent occasionally expects a maneuver of the platform during the landing procedure. As a consequence, this agent achieves a success rate of only \(82\%\) for a static platform. However, for higher platform velocities, the success rate improves significantly.

Success rates of four agents trained for the case simulation with same parameters on a platform’s rectilinear periodic movement with \(v_{mp,train} = 0.8{\mathrm{m/s}}\) (RPM 0.8 - red), \(v_{mp,train} = 1.2{\mathrm{m/s}}\) (RPM 1.2 - blue) and \(v_{mp,train} = 1.6{\mathrm{m/s}}\) (RPM 1.6 - green). The agents are evaluated on different types of platform movement, indicated by the values of the abscissa. Error bars illustrating the mean success rate and standard deviation are depicted in grey. The success rates have been determined for 150 landing trials for each evaluation scenario

5.2 Selection of agents for further evaluation

We select the first agent of training case simulation which is performing best over all evaluation scenarios and was trained on a rectilinear periodic movement with a platform velocity of \(v_{mp}=1.6{\mathrm{m/s}}\). It is denoted RPM 1.6/1. Its reward curve is depicted in Fig. 11. We compare its results with the RL baseline method and the cascaded PI controller in Table 7. The comparison shows that our approach is able to outperform the RL baseline method. For the RPM 0.4 evaluation scenario with noiseless observations we achieve a success rate of \(99\%\), which is \(+8\% \) better than the baseline. For the RPM 1.2 evaluation scenario, our method is successful in \(99\%\) of the landing trials, increasing the RL baseline’s success rate by \(+26\%\). Note that the maximum radius of the rectilinear periodic movement is \(r_{mp} = \sim 2.5{\textrm{m}}\) in the RL baseline and \(r_{mp}=2{\textrm{m}}\) in our approach. However, the value used for our approach poses a more difficult challenge since the acceleration acting on the platform is higher, due to the same maximum platform velocity, see Table 2. Furthermore, our method requires \(\sim 80\%\) less time to train and \(53\%\) less episodes. Comparison with the cascaded PI controller shows that our method is capable of similar performance than the cascaded PI controller in terms of success rates achieved in the two comparison scenarios. In order to achieve comparability in the first place, we limited the control efforts of the longitudinal and lateral controller’s outer and inner cascade (see Table 4) to values also derived for the training case RPM 1.6. We obtain \(u_o =v_{max} = a_{mp,max}t_0=3.39{\mathrm{m/s}}\) as described in Sect. 3.4 and \(u_i = \theta _{ref} = \phi _{ref} = 21.38^\circ \) by means of (44), respectively. As is further shown by Table 8 the cascaded PI controllers achieve a success rate of \(100\%\) for all types of platform movement. However, the advantage of our learning based method is that it does not require a tuning procedure as is often conducted manually for cascaded PI controllers and therefore time consuming. This is compensated by a smoother flight behaviour enabled by the use of a continuous value range for the control effort of the PI controller.

For training case hardware we select agent 3 for further evaluation, denoted RPM 0.4/3. Its reward curve is also depicted in Fig. 11. It is able to achieve a success rate of \(99\%\) for the evaluation scenario with a static platform, \(100\%\) in case of a platform movement of RPM 0.2, \(99\%\) for RPM 0.4 and \(97\%\) for the eight-shaped trajectory of the platform. It required 2343 episodes to train which took 123min. As already in case simulation, the cascaded PI controller achieves a comparable performance across all types of platform movement as is shown by Table 6. The values for the limits of the control efforts of the longitudinal and lateral controller’s outer and inner cascade have been calculated as \(u_o =v_{max} = a_{mp,max}t_0=0.8{\mathrm{m/s}}\) and \(u_i = \theta _{ref} = \phi _{ref} = 5.59^\circ \)

5.3 Evaluation in simulation with noise

We evaluate the selected agent of case simulation and case hardware as well as the cascaded PI controller for robustness against noise. For this purpose, we define a set of values \(\sigma _{noise}= \left\{ \sigma _{p_x},\sigma _{p_y}, \sigma _{p_z},\sigma _{v_x},\sigma _{v_y},\sigma _{v_z}\right\} \) specifying a level of zero mean Gaussian noise that is added to the noiseless observations in simulation. The noise level corresponds to the noise present in an EKF-based pose estimation of a multi-rotor UAV recorded during real flight experiments.

We evaluate the landing performance again for static, periodic and eight-shaped trajectories of the landing platform in Table 9 for training case simulation and in Table 10 for training case hardware, both also showing the results of the cascaded PI controller. For the selected agent of training case simulation, adding the realistic noise \(\sigma _{noise}\) leads to a slightly reduced performance. However, the achieved success rates are still higher than the agent’s performance in the RL baseline without noise. For the evaluation scenario with RPM 0.4, our success rate is \(+4\%\) higher. With RPM 1.2, it is \(+20\%\) higher. The cascaded PI controllers are unaffected by the specified noise level. They enable a success rate of \(100\%\) across all scenarios of platform movement.

For the selected agent of training case hardware, the drop in performance is slightly more pronounced. The reason is that the fly zone and platform specified for this training case are significantly smaller than for the case simulation. As a consequence, the size of the discrete states is also significantly reduced, whereas the noise level stays the same. Thus, noise affects the UAV more, since it is more likely that the agent takes a suboptimal action due to an observation that was biased by noise. The cascaded PI controller is unaffectd by noise also in the scenario of case hardware, achieving success rates between \(99\%\) and \(100\%\) across the different types of platform movement.

5.4 Evaluation in real flight experiments

5.4.1 General

Unlike the baseline method, we do not evaluate our approach on real hardware in single flights only. Instead, we provide statistics on the agent’s performance for different evaluation scenarios to illustrate the sim-to-real-gap. For this purpose, the selected agent of case hardware was deployed on a quadcopter, see Figs. 1 and 12, that has a mass of \(m_{uav,RL} = 0.72 {\textrm{kg}}\) and a diameter of \(d_{uav,RL}=0.28 {\textrm{cm}}\) and deviates from the UAV used in simulation (mass \(1\%\), diameter \(18\%\)). The cascaded PI controller was tested on a similar quadcopter, the only difference being a different structure carrying the Vicon markers, resulting in a slightly different mass of \(m_{uav,PI} = 0.696{\textrm{kg}}\). The diameter is the same. Both quadcopters are equipped with a Raspberry Pi 4B providing a ROS interface and a LibrePilot Revolution flight controller to enable the tracking of the attitude angles commanded by the respective controllers via ROS. Furthermore, it also controls the commanded descend velocity. A motion capture system (Vicon) provides almost noiseless values of the position and velocity of the moving platform and the quadcopter. However, we do not use these information directly to compute the observations (2)-(5). The reason is that the motion capture system occasionally has wrong detections of markers. This can result in abrupt jumps in the orientation estimate of the UAV. Since the flight controller would immediately react, this could lead to a dangerous condition in our restricted indoor environment. To avoid any dangerous flight condition, we obtain the position and velocity of the UAV from an EKF-based state estimation method running on the flight controller. For this purpose, we provide the flight controller with a fake GPS signal (using Vicon) with a frequency of \(10{\textrm{hz}}\). It is then fused with other noisy sensor data (accelerometer, gyroscope, magnetometer, barometer). The EKF is robust to short periods of wrong orientation estimation. All tested algorithms are run off-board and the setpoint values for the attitude angles generated by the respective controllers are sent to the flight controller via ROS using a WLAN connection. Due to hardware limitations regarding the moving platform, we only evaluate the agent for a static platform and the rectilinear periodic movement. Generally, the Vicon system allows for a state estimate of the copter that has a lower noise level than the one specified by (49). However, the velocity of the platform is determined purely by means of the Vicon system. The rough surface of the ground caused vibrations of the platform that induced an unrealistic high level of noise in the Vicon system’s readings of the platform velocity. For this reason, it is filtered using a low-pass filter with a cut-off frequency of \(10{\textrm{hz}}\).

5.4.2 Evaluation of the RL approach

Figure 13 shows the trajectory of the UAV and moving platform for a landing trial where the platform was executing a rectilinear periodic movement with a maximum velocity of \(0.4{\mathrm{m/s}}\). The depicted x and y component of the multi-rotor vehicle’s trajectory are based on the state estimate calculated by the EKF. All other presented trajectory values are based on the readings of the Vicon system. Table 11 contains the success rates achieved in the experiments with our RL algorithm deployed on real hardware. The starting positions of the UAV were manually selected and as uniformly distributed as possible in an average altitude of \(2.17{\textrm{m}}\). Whereas for the static platform a success rate of \(96\%\) could be reached, there is a noticeable drop in performance for the evaluation scenarios in which the platform is performing the rectilinear periodic movement. We argue that these can be attributed to the following main effects.

-

1.

The deviation in the size of the UAV and the different mass plays a role. Whereas the difference in mass seems negligible, the distribution of mass and thus the inertia of the multi-rotor vehicle is different.

-

2.

A reason for the drop in performance could also be a slightly different behaviour of the low-level controllers than in simulation. They have been tuned manually. A possible solution approach to reduce the sim-to-real gap here could be to vary the controller gains within a small range during training. This should help the agent towards better generalization properties.

-

3.

Trials in which occasional glitches in the state estimate occurred were not treated specially although they could result in extra disturbances the RL controllers had to compensate. Furthermore, we counted also small violations of the boundary conditions as failure, such as leaving the fly zone by a marginal distance even if the agent was able to complete the landing successfully hereafter.

-

4.

The ground effect can play an important role for multi-rotor vehicles (Sanchez-Cuevas et al., 2017). Since it was not considered in the Gazebo based simulation environment used for training, it contributes to the sim-to-real gap.

Furthermore, during the real flight experiments no significant jittering in the agent’s actions could be observed. This substantiates the approach of calculating values for the maximum pitch angle and agent frequency presented in Sect. 3.5.

5.4.3 Evaluation of the cascaded PI controller

Table 12 shows the success rates achieved with the cascaded PI controller. Again, the starting positions of the UAV were manually selected and as uniformly distributed as possible in an average altitude of \(2.09{\textrm{m}}\).

The results show that there is a notable drop in performance for the scenarios RPM 0.2 and RPM 0.4. This was not expected considering the strong results obtained in simulation. Careful investigation of the log files revealed several reasons that could play a role.

-

1.

The multi-rotor vehicle used for testing the cascaded PI controller differed from the one used for the evaluation of the RL controller. Although being identical in terms of size and components, it is \(0.02{\textrm{kg}}\) lighter due to a different structure carrying the markers for the Vicon system.

-

2.

In \(81\%\) of the landing trials a loss of state estimate messages that were sent by the multi-rotor vehicle and received by the ground station running the cascaded PI controller occurred. This happened probably due to issues of ROS queing messages or limited bandwidth of the WLAN module, which was changed. The cascaded PI controller is intended and tuned to run at \(100{\textrm{hz}}\), like in the Gazebo simulation, however, had to handle low frequencies of approximately \(60{\textrm{hz}}\) instead for different lengths of time. As a consequence, the cascaded PI controller’s cycles were executed with a non-constant and often reduced frequency. However, drops in the frequency of the received state estimate were also present for the scenario of a static platform where the cascaded PI controller was able to achieve high success rates. The necessity to transmit attitude setpoints with high frequency can be a disadvantage in situations in which the control method is run off-board and the resulting commands are sent to the multi-rotor vehicle via WLAN. In this regard, our RL based approach is beneficial as it is designed to be run at considerably lower frequencies as is illustrated by Table 2. Drops in frequency could also be noticed in \(58\%\) of the landing trials during the evaluation of the RL controller.

-

3.

All flight experiments were conducted inside a virtual fly box where the tested controller is given authority to control the multi-rotor vehicle. For the RL controller the virtual fly box was set to \([-2{\textrm{m}},2.2{\textrm{m}}]\times [-1{\textrm{m}},1{\textrm{m}}]\times [0.4{\textrm{m}},2.6{\textrm{m}}]\) and for the cascaded PI controller to \([-1{\textrm{m}},1{\textrm{m}}]\times [-1{\textrm{m}},1{\textrm{m}}]\times [0{\textrm{m}},2.3{\textrm{m}}]\). As soon as the virtual fly box is left a fallback controller steers the multi-rotor vehicle back inside before control is handed over to the tested controller again. This maneuver can increase the momentum of the copter which then has to be handled by the limited attitude angles of the tested controller. Leaving the virtual fly box happened frequently during testing of the cascaded PI controller where the initial position of a landing trial was often located outside the fly box, probably due to issues with the altitude estimation. For this reason, leaving the fly box is not counted as a failed attempt for the cascaded PI controller in Table 12.

Besides the aforementioned issues, an additional problem of the cascaded PI controller became obvious during the real world experiments. Without a differential branch it cannot react to the current change in the control errors of the outer and inner cascade. During tuning in simulation a cascaded PI controller turned out to be successful. However, during the real world experiments it could be noted that the cascaded PI controller tends to overshoot the platform in the scenarios RPM 0.2 and RPM 0.4. Here, the RL controller is advantageous because it has learned how to handle the platform’s change in the direction of motion.

5.5 Ablation study

In the context of our approach and in addition to the presented method where the landing task was learned by means of a sequential curriculum leveraging transfer learning between curriculum steps, there are two other ways of how learning the task could be achieved. First, the sequential curriculum is conducted without transfer learning. This means that whenever a new curriculum step is added to the learning sequence the Q-table associated with the latest curriculum step is initialized with zeros. We denote this ablation case Sequential curriculum without transfer learning. And second, we abandon curriculum learning completely. Instead, we create the full sequence of learning steps right in the beginning with all associated Q-tables initialized with zeros. We then conduct one training on the full sequence without adding an additional step after each round of training. This constitutes a scenario in which several separate Q-learning tasks are trained simultaneously. For this reason, we call this ablation case Simultaneous training of sequence. For the training and evaluation, we chose the scenario RPM 1.6. All learning related parameters are the same as presented in Sect. 4.5. We compare these additional two methods with the agent selected for further evaluation in Sect. 5.2 and name this scenario Sequential curriculum with transfer learning. Table 13 summarizes the results.

It is clear that ablation case Sequential curriculum without transfer learning performs worst across all evaluation scenarios in terms of both training duration as well as number of episodes required. Our rationale here is the following. In each new curriculum step the task has to be learned from scratch. However, since in all curriculum steps added to the sequence after the initial step the exploration rate is set to \(\varepsilon =0\), it is even more difficult to learn optimal behaviour. Furthermore, in the early phase after adding a new curriculum step its behaviour is bad due to few updates of the state-action-pairs, therefore acting as a source of disturbance to the previous curriculum steps. For this reason, in order to reach a situation in which the Q-values of the latest curriculum step as well as the Q-values of the previous curriculum steps have sufficiently adapted (\(96\%\) success rate over the last 100 episodes), a significant amount of time is required. The discrepancy between the success rate necessary to end the training and the success rate achieved during evaluation can be explained as follows. As outlined in Sect. 3.4, an episode is terminated with success if the agent spends one second in the latest curriculum step before reaching its goal state. However, during evaluation the multi-rotor vehicle is required to constantly stay above the moving platform, not only for one second. This leaves room for erratic behaviour after this one second period which can be observed for this ablation case. Even though centering the multi-rotor vehicle above the platform is learned in principle, maintaining that centered state for a longer time period is not possible which leads to low success rates. Conceivably, the situation could be improved by choosing a longer time period for maintaining the goal state before the success criterion is met.

Allowing training on all curriculum steps that were initialized with zeros simultaneously creates the possibility to adapt all Q-values of all the separate Q-tables associated with the different steps in the sequence during one training. This solves the problem where the latest untrained curriculum step acts as a disturbance to the previous curriculum steps. As a result, the training time is considerably shorter for the ablation case Simultaneous training of sequence. Also, the erratic behaviour is reduced, leading to higher success rates across all evaluation scenarios. However, at the end of the training the number of unvisited state-action-pairs is quite high, 497 out of 2835 state-action pairs. This indicates that the training is not yet optimal, although the terminal criterion to end the training has been met. This might be solved with a prolongation of the exploration phase.

The best performance in terms of success rates as well as required training time was achieved by the third ablation case Sequential curriculum with transfer learning which constitutes the full method. Here, the erratic behaviour is not present anymore. We hypothize that this is due to the transfer of knowledge between curriculum steps. Not only does this significantly reduce the number of unvisited state-action-pairs (0 out of 2385, a curriculum step adds 567 new state-action-pairs to the sequence) but also the knowledge does not have to be learned from scratch in each new curriculum step but is rather only refined.

6 Conclusion and future work

In this work, we presented a RL based method for autonomous multi-rotor landing. Our method splits the overall task into simpler 1-D tasks, then formulates each of those as a sequential curriculum in combination with a knowledge transfer between curriculum steps. Through rigorous experiments, we demonstrate significantly shorter training time (\(\sim -80\%\)) and higher success rates (up to \(+26\%\)) than the DRL-based actor-critic method presented in Rodriguez-Ramos et al. (2019). For two comparison scenarios, our method achieves a performance comparable to a cascaded PI controller. We present statistics of the performance of our approach on real hardware and show interpretable ways to set hyperparameters. In the future, we plan to test other tabular RL methods such as n-step \(Q(\sigma )\) learning or the use of eligible traces. Furthermore, extending the approach to control the vertical movement and yaw angle of the multi-rotor vehicle is of interest as well as addressing complex landing problems, such as on an inclined or on a flying platform.

References

Anderson, C.W., & Crawford-Hines, S. G. (1994). Multigrid q-learning. technical report cs-94-121

Araar, O., Aouf, N., & Vitanov, I. (2017). Vision based autonomous landing of multirotor uav on moving platform. Journal of Intelligent & Robotic Systems, 85(2), 369–384. https://doi.org/10.1007/s10846-016-0399-z

Borowczyk, A., Nguyen, D. T., Phu-Van Nguyen, A., et al. (2017). Autonomous landing of a multirotor micro air vehicle on a high velocity ground vehicle. IFAC-PapersOnLine, 50(1), 10488–10494. https://doi.org/10.1016/j.ifacol.2017.08.1980

Even-Dar, E., & Mansour, Y. (2004). Learning rates for q-learning. Journal of Machine Learning Research, 5, 1–25.

Falanga, D., Zanchettin, A., & Simovic, A., et al. (2017). Vision-based autonomous quadrotor landing on a moving platform. In 2017 IEEE International Symposium on Safety, Security and Rescue Robotics (SSRR), pp 200–207, https://doi.org/10.1109/SSRR.2017.8088164

Furrer, F., Burri, M., & Achtelik, M., et al. (2016). Robot Operating System (ROS): The Complete Reference (Volume 1), Springer International Publishing, Cham, chap RotorS—A Modular Gazebo MAV Simulator Framework, pp. 595–625.

Gautam, A., Sujit, P., & Saripalli, S. (2015). Application of guidance laws to quadrotor landing. In 2015 International Conference on Unmanned Aircraft Systems (ICUAS), pp. 372–379, https://doi.org/10.1109/ICUAS.2015.7152312

Hasselt, H., et al. (2010). Double q-learning. In J. Lafferty, C. Williams, & J. Shawe-Taylor (Eds.), Advances in Neural Information Processing Systems. (Vol. 23). Curran Associates Inc.

Hu, B., Lu, L., & Mishra, S. (2015). Fast, safe and precise landing of a quadrotor on an oscillating platform. In 2015 American Control Conference (ACC), pp. 3836–3841, https://doi.org/10.1109/ACC.2015.7171928

Kooi, J. E., & Babuška, R. (2021). Inclined quadrotor landing using deep reinforcement learning. In 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp 2361–2368, https://doi.org/10.1109/IROS51168.2021.9636096

Lampton, A., & Valasek, J. (2009). Multiresolution state-space discretization method for q-learning. In 2009 American Control Conference, pp. 1646–1651, https://doi.org/10.1109/ACC.2009.5160474

Lee, S., Shim, T., & Kim, S., et al. (2018). Vision-based autonomous landing of a multi-copter unmanned aerial vehicle using reinforcement learning. In 2018 International Conference on Unmanned Aircraft Systems (ICUAS), pp. 108–114, https://doi.org/10.1109/ICUAS.2018.8453315

Ling, K., Chow, D., & Das, A., et al. (2014). Autonomous maritime landings for low-cost vtol aerial vehicles. In 2014 Canadian Conference on Computer and Robot Vision, pp. 32–39, https://doi.org/10.1109/CRV.2014.13

Miyazaki, R., Jiang, R., & Paul, H., et al. (2018). Airborne docking for multi-rotor aerial manipulations. In 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 4708–4714, https://doi.org/10.1109/IROS.2018.8594513

Mnih, V., Kavukcuoglu, K., & Silver, D., et al. (2013). Playing atari with deep reinforcement learning

Mo, H., & Farid, G. (2019). Nonlinear and adaptive intelligent control techniques for quadrotor uav-a survey. Asian Journal of Control, 21(2), 989–1008. https://doi.org/10.1002/asjc.1758

Narvekar, S., Peng, B., Leonetti, M., et al. (2020). Curriculum learning for reinforcement learning domains: A framework and survey. Journal of Machine Learning Research, 21(181), 1–50.

Polvara, R., Patacchiola, M., & Sharma, S., et al. (2018). Toward end-to-end control for uav autonomous landing via deep reinforcement learning. In 2018 International Conference on Unmanned Aircraft Systems (ICUAS), pp. 115–123, https://doi.org/10.1109/ICUAS.2018.8453449

Polvara, R., Sharma, S., Wan, J., et al. (2019). Autonomous vehicular landings on the deck of an unmanned surface vehicle using deep reinforcement learning. Robotica, 37(11), 1867–1882. https://doi.org/10.1017/S0263574719000316

Rodriguez-Ramos, A., Sampedro, C., & Bavle, H., et al. (2018). A deep reinforcement learning technique for vision-based autonomous multirotor landing on a moving platform. In 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp 1010–1017, https://doi.org/10.1109/IROS.2018.8594472

Rodriguez-Ramos, A., Sampedro, C., Bavle, H., et al. (2019). A deep reinforcement learning strategy for uav autonomous landing on a moving platform. Journal of Intelligent & Robotic Systems, 93(1), 351–366. https://doi.org/10.1007/s10846-018-0891-8

Sanchez-Cuevas, P., Heredia, G., & Ollero, A. (2017). Characterization of the aerodynamic ground effect and its influence in multirotor control. International Journal of Aerospace Engineering. https://doi.org/10.1155/2017/1823056

Shi, G., Shi, X., & O’Connell, M., et al. (2019). Neural lander: Stable drone landing control using learned dynamics. In 2019 International Conference on Robotics and Automation (ICRA), pp. 9784–9790, https://doi.org/10.1109/ICRA.2019.8794351

Sutton, R. S., & Barto, A. G. (2015). Reinforcement Learning: An Introduction Second edition, in progress. London, England: The MIT Press, Cambridge, Massachusetts.

Vlantis, P., Marantos, P., & Bechlioulis, C. P., et al. (2015). Quadrotor landing on an inclined platform of a moving ground vehicle. In 2015 IEEE International Conference on Robotics and Automation (ICRA), pp. 2202–2207, https://doi.org/10.1109/ICRA.2015.7139490

Wang, P., Man, Z., & Cao, Z., et al. (2016). Dynamics modelling and linear control of quadcopter. In 2016 International Conference on Advanced Mechatronic Systems (ICAMechS), pp. 498–503, https://doi.org/10.1109/ICAMechS.2016.7813499

Wenzel, K. E., Masselli, A., & Zell, A. (2011). Automatic take off, tracking and landing of a miniature uav on a moving carrier vehicle. Journal of Intelligent & Robotic Systems, 61(1), 221–238. https://doi.org/10.1007/s10846-010-9473-0

Zhong, D., Zhang, X., & Sun, H., et al. (2016). A vision-based auxiliary system of multirotor unmanned aerial vehicles for autonomous rendezvous and docking. In 2016 International Joint Conference on Neural Networks (IJCNN), pp. 4586–4592, https://doi.org/10.1109/IJCNN.2016.7727801

Acknowledgements

The authors thank Eric Price for his extensive support with the flight experiments. The authors thank the International Max Planck Research School for Intelligent Systems (IMPRS-IS) for supporting Pascal Goldschmid.

Funding

Open Access funding enabled and organized by Projekt DEAL. This work was funded by the Institute of Flight Mechanics and Controls, University of Stuttgart.

Author information

Authors and Affiliations

Contributions

Pascal Goldschmid is the first author, implemented the methods, performed all experiments and prepared the main manuscript. Aamir Ahmad supervised the work, provided guidance to the first author, and revised and edited the manuscript. Both authors reviewed the paper.

Corresponding author

Ethics declarations

Conflict of interest

Both authors’ primary affiliation is the Institute of Flight Mechanics and Controls, University of Stuttgart. Pascal Goldschmid is employed as a research associate and Aamir Ahmad as a tenure-track professor. Both authors are also affiliated with the Perceiving Systems department of the Max Planck Institute for Intelligent Systems.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Supplementary file 1 (mp4 77603 KB)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Goldschmid, P., Ahmad, A. Reinforcement learning based autonomous multi-rotor landing on moving platforms. Auton Robot 48, 13 (2024). https://doi.org/10.1007/s10514-024-10162-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10514-024-10162-8