Abstract

In clinical practice, sleep stage classification (SSC) is a crucial step for physicians in sleep assessment and sleep disorder diagnosis. However, traditional sleep stage classification relies on manual work by sleep experts, which is time-consuming and labor-intensive. Computer-aided diagnosis (CAD) has the potential to become an intelligent assistant for sleep experts, aiding doctors in assessment and decision-making. In recent years, CAD supported by artificial intelligence, especially deep learning (DL) techniques, has been widely applied in SSC. DL offers higher accuracy and lower costs, making a significant impact. In this paper, we systematically review SSC research based on DL methods (DL-SSC). We explore DL-SSC from several important perspectives, including signal and data representation, data preprocessing, deep learning models, and performance evaluation. Specifically, this paper addresses three main questions: (1) What signals can DL-SSC use? (2) What are the various methods to represent these signals? (3) What are the effective DL models? By addressing these questions, this paper provides a comprehensive overview of DL-SSC.

1 Introduction

Sleep is the most fundamental biological process, occupying approximately one-third of human life and playing a vital role in human existence (Siegel 2009). Unfortunately, sleep disorders are prevalent in modern society. A global study involving nearly 500,000 people in 2022 indicated that the insomnia rate among the public reached as high as 40.5% during the COVID-19 pandemic (Jahrami et al. 2022). Sleep disorders are closely associated with various neurologic and psychiatric disorders (Van Someren 2021). For instance, research by Zhang et al. demonstrated a correlation between reduced deep sleep proportion in Alzheimer’s disease patients and the severity of dementia (Zhang et al. 2022b). Additionally, insomnia was found to double the risk of depression in people without depressive symptoms, as stated in Baglioni et al. (2011). Timely and effective treatment of insomnia was able to serve as a primary preventive measure for depression (Clarke and Harvey 2012). In summary, sleep issues have a significant impact on both physiological and psychological well-being, necessitating timely diagnosis. The essential step in clinical sleep disorder diagnosis and assessment is referred to as sleep stage classification (SSC) (Wulff et al. 2010), also known as sleep staging or sleep scoring.

In clinical practice, the gold standard for classifying sleep stages is the polysomnogram (PSG), which includes a set of nocturnal sleep signals such as electroencephalogram (EEG), electrooculogram (EOG), and electromyogram (EMG). The PSG signals are segmented into 30-second units, and the resulting consecutive segments are referred to as sleep epochs, with each epoch belonging to a specific stage category. The criteria for determining the stage category of each epoch are known as R&K (Rechtschaffen 1968) and AASM (Iber 2007), with the former established in 1968 and the latter being the more recent and more commonly used. R&K divides sleep into three basic stages: wakefulness (W), rapid eye movement (REM), and non-rapid eye movement (NREM). NREM can be subdivided into S1, S2, S3, and S4. AASM merges S3 and S4 into a single stage, resulting in five sleep stages: W, N1 (S1), N2 (S2), N3 (S3-S4), and REM. Based on these standards, researchers sometimes describe sleep stages differently. We have listed the various descriptions used in the studies included in this paper in Table 1. Different stages exhibit distinct characteristics during sleep. For example, the N2 stage is typically marked by salient waveforms such as sleep spindles and K complexes (Parekh et al. 2019). Moreover, sleep is a continuous and dynamic process, and there exists contextual information between consecutive epochs (forming sequences) (Rechtschaffen 1968; Iber 2007). For instance, if isolated N3 epochs occur among several consecutive N2 epochs, doctors still classify them as N2 (Wu et al. 2020).
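
The epoch segmentation and the R&K-to-AASM correspondence described above can be sketched in a few lines. This is a minimal illustration, not code from any reviewed study: the function names, the 100 Hz sampling rate, and the synthetic recording are assumptions made for the example.

```python
import numpy as np

EPOCH_SECONDS = 30  # scoring unit used by both R&K and AASM

# R&K labels mapped to AASM labels; S3 and S4 are merged into N3.
RK_TO_AASM = {"W": "W", "S1": "N1", "S2": "N2", "S3": "N3", "S4": "N3", "REM": "REM"}


def segment_into_epochs(signal: np.ndarray, sampling_rate_hz: int) -> np.ndarray:
    """Split a 1D recording into non-overlapping 30-second epochs.

    Trailing samples that do not fill a whole epoch are dropped.
    Returns an array of shape (n_epochs, samples_per_epoch).
    """
    samples_per_epoch = EPOCH_SECONDS * sampling_rate_hz
    n_epochs = len(signal) // samples_per_epoch
    return signal[: n_epochs * samples_per_epoch].reshape(n_epochs, samples_per_epoch)


def rk_to_aasm(labels):
    """Translate a list of R&K stage labels into AASM stage labels."""
    return [RK_TO_AASM[label] for label in labels]


# Hypothetical 100 Hz recording lasting 2 min 10 s (synthetic noise, not real EEG).
recording = np.random.default_rng(0).standard_normal(130 * 100)
epochs = segment_into_epochs(recording, sampling_rate_hz=100)
print(epochs.shape)  # (4, 3000)
print(rk_to_aasm(["W", "S1", "S2", "S3", "S4", "REM"]))
```

Dropping the incomplete trailing epoch is one possible convention; some pipelines pad it instead.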

Manual classification is time-intensive and laborious (Malhotra et al. 2013). In response to the immense demand in healthcare, numerous methods for automatically analyzing EEG for sleep staging have been proposed. These automatic sleep stage classification (ASSC) methods are developed using machine learning (ML) algorithms. Early ASSC combined manual feature extraction with traditional ML: researchers manually extracted features from the time and frequency domains of the signals and classified them with traditional ML methods, such as support vector machines (SVM), to achieve automation (Li et al. 2017; Sharma et al. 2017). However, manual feature engineering is tedious and requires additional prior knowledge (Jia et al. 2021; Eldele et al. 2021). Moreover, due to the significant variability in EEG among different individuals (Subha et al. 2010), it is challenging to extract features that generalize well. Therefore, self-learning methods based on deep learning have begun to be used for sleep staging.

In recent years, deep learning (DL) has become a popular approach for automatic sleep stage classification. This may be because DL methods can automatically extract sleep features and complete classification in an end-to-end manner (Zhang et al. 2022a), avoiding the cumbersome feature extraction and explicit classification steps. In the current context of automatic sleep stage classification based on deep learning (DL-ASSC), there are three key points worth noting. First, signals form the basis of ASSC. Various studies have explored multiple types of signals, which can be broadly grouped into three categories: the first is PSG, including EEG, EOG, and EMG (Guillot et al. 2020; Seo et al. 2020; Supratak et al. 2017); the second is cardiorespiratory signals, including electrocardiogram (ECG), photoplethysmography (PPG), respiratory effort, etc. (Goldammer et al. 2022; Kotzen et al. 2022; Olsen et al. 2022); the third is contactless signals, mainly radar, Wi-Fi and audio signals (Zhai et al. 2022; Yu et al. 2021a; Tran et al. 2023). Second, the same signal can be represented in various forms, and different input representations fed into a DL model might yield different performance (Biswal et al. 2018). Popular data representations fall into three categories: the first directly inputs raw one-dimensional (1D) signals into the network (Seo et al. 2020; Supratak et al. 2017); the second uses transformed-domain data as model input, most commonly two-dimensional (2D) time-frequency spectrograms [usually obtained from the original signal through continuous wavelet transform (Kuo et al. 2022) or short-time Fourier transform (Guillot et al. 2020)]; the third combines both, typically employing a dual-stream structure in which each branch processes a different input form (Phan et al. 2021; Jia et al. 2020a).
Last but not least, ASSC methods employing various DL models continue to emerge. Convolutional neural networks (CNNs), initially designed for image processing, are commonly used by researchers for feature extraction. As a widely recognized foundational model, CNNs are applied extensively in sleep stage classification, either as one-dimensional CNNs on raw signals or, more commonly, as 2D CNNs on transformed-domain representations of the signals. Another classical family of models is recurrent neural networks (RNNs) and their two variants, long short-term memory (LSTM) and gated recurrent unit (GRU). RNNs are adept at handling time series data and can capture temporal information in sleep data. Moreover, in 2017, Google introduced the Transformer (Vaswani et al. 2017), which utilizes the multi-head self-attention (MHSA) mechanism and quickly became an indispensable technique in time-series data modeling. Like RNNs, MHSA can effectively capture the temporal dependencies of sleep data when applied to sleep stage classification. In practice, researchers often design a custom deep neural network (DNN) to suit different needs and tasks. In DL-based ASSC, the most commonly used architecture in existing research is feature extraction + sequence encoding. The feature extractor first maps the input signal to an embedding space, and the sequence encoder then models the temporal (context-dependent) information. A CNN is a common choice for the feature extractor, and the sequence encoder is often implemented with RNN-like models or attention mechanisms.
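
To make the feature extraction + sequence encoding pattern concrete, the following NumPy sketch traces the data flow through an untrained toy model: a strided 1D convolution embeds each epoch, and single-head self-attention mixes information across the epochs of a sequence. All sizes, weights, and function names are assumptions chosen for illustration; it shows tensor shapes only and does not reproduce any model from the reviewed literature.

```python
import numpy as np

rng = np.random.default_rng(0)


def conv1d_features(epoch, kernels, stride):
    """Tiny 1D CNN stand-in: strided convolution, ReLU, global average pooling.

    epoch: (n_samples,), kernels: (n_filters, kernel_size) -> (n_filters,)
    """
    kernel_size = kernels.shape[1]
    windows = np.lib.stride_tricks.sliding_window_view(epoch, kernel_size)[::stride]
    activations = np.maximum(windows @ kernels.T, 0.0)  # (n_windows, n_filters)
    return activations.mean(axis=0)


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(embeddings, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a sequence of epochs."""
    q, k, v = embeddings @ w_q, embeddings @ w_k, embeddings @ w_v
    weights = softmax(q @ k.T / np.sqrt(k.shape[1]))  # (seq_len, seq_len)
    return weights @ v


# Assumed sizes: 10 consecutive epochs of 3000 samples, 16 filters, 5 stages.
seq_len, n_samples, n_filters, n_classes = 10, 3000, 16, 5
sequence = rng.standard_normal((seq_len, n_samples))
kernels = rng.standard_normal((n_filters, 50)) * 0.1
w_q, w_k, w_v = (rng.standard_normal((n_filters, n_filters)) * 0.1 for _ in range(3))
w_out = rng.standard_normal((n_filters, n_classes)) * 0.1

# Feature extractor: one embedding per epoch.
embeddings = np.stack([conv1d_features(e, kernels, stride=25) for e in sequence])
# Sequence encoder: each epoch's representation now depends on its neighbours.
context = self_attention(embeddings, w_q, w_k, w_v)
stage_probs = softmax(context @ w_out)
print(embeddings.shape, context.shape, stage_probs.shape)  # (10, 16) (10, 16) (10, 5)
```

In a real system the weights would be learned, and the attention block could be swapped for an LSTM or GRU without changing the overall two-stage structure.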

DL-SSC research has made significant progress, and some studies have achieved clinically acceptable performance (Phan and Mikkelsen 2022). This topic has been addressed in several review articles. However, earlier publications such as those by Fiorillo et al. (2019) and Faust et al. (2019) do not encompass the developments of recent years. More comprehensive review papers have recently emerged, but they still have some limitations. For instance, the work by Alsolai et al. (2022) focuses more on feature extraction techniques and machine learning methods, with less emphasis on the latest end-to-end deep learning approaches. Sri et al. (2022) and Loh et al. (2020) reviewed the performance of different deep learning models using PSG signals but did not cover aspects such as signal representation and preprocessing. The studies by Phan and Mikkelsen (2022) and Sun et al. (2022) only considered EEG and ECG signals, excluding other types of signals. We have summarized these works in Table 2. Therefore, this paper provides a comprehensive review of recent deep learning-based sleep stage classification. We have examined all the elements required for DL-SSC, including signals, datasets, data preprocessing, data representations, deep learning models, evaluation methods, etc. Specifically, the main topics discussed in this paper include: (1) signals that can be used in DL-SSC; (2) methods to represent data, i.e., how signals can be input into DL models for further processing; (3) effective DL models and their performance.

This paper is organized as follows. Section 2 describes the sources of literature and the search process. Section 3 discusses available signals and summarizes some public datasets. Section 4 discusses PSG-based research, including preprocessing, different data representations, and DL models. Sections 5 and 6 will cover research based on cardiorespiratory signals and non-contact signals, respectively. Finally, Sect. 7 and Sect. 8 will discuss and summarize the findings.

2 Review methodology

We conducted the literature search and screening through the following process; Fig. 1 gives a visual representation of it. We searched well-known literature databases, namely Google Scholar, Web of Science, and PubMed. The relevant studies on sleep stage classification using three different types of signals were identified using the following common keywords and their combinations: (“Deep Learning” OR “Deep Machine Learning” OR “Neural Network”) AND (“Sleep Stage Classification” OR “Sleep Staging” OR “Sleep Scoring”). The keywords specific to each signal type were: (“Polysomnography” OR “Electroencephalogram” OR “Electrooculogram” OR “Electromyogram”), (“Electrocardiogram” OR “Photoplethysmography”), (“Radar” OR “Wi-Fi” OR “Microphone”). For deep neural network models, no specific keywords were set, and the publication or release year of the literature was restricted to 2016 or later. After excluding irrelevant or duplicate studies, the literature was assessed based on the following criteria, which define the inclusion and exclusion standards of the relevant studies:

(1) Task: only studies that performed sleep stage classification tasks were included.

(2) Signal: studies that used one or a combination of the signals mentioned in the text for sleep staging were included. Studies using other signals, such as functional near-infrared spectroscopy (fNIRS), were excluded due to their scarcity (Huang et al. 2021; Arif et al. 2021).

(3) Method: only studies employing deep learning-based methods were included, i.e., those using neural networks with at least two hidden layers. Traditional machine learning methods were generally not reviewed, but a few studies that used a combination of deep neural networks and machine learning classifiers for feature extraction and classification (Phan et al. 2018) were included.

(4) Time: the focus was on studies conducted after 2016 (the earliest relevant study included in this paper was published in 2016).

Finally, the publicly available datasets reviewed in this paper were found through three approaches: mentions in the articles included in this review, Google searches with the keywords “Sleep stage Dataset” and the corresponding signal types, and the PhysioNet and NSRR websites.

Fig. 1 Schematic diagram of the literature selection process. It is divided into five steps: database paper search, duplicate removal, relevance screening, determination of topic compliance, and final inclusion in the review. In the diagram, n represents the number of papers, and the subscripts indicate different types of signals: 1 represents PSG, etc., 2 represents ECG, etc., and 3 represents non-contact signals. The paper search also includes additional database identifiers. This process ensures that the final included papers can summarize the main research content of recent years

3 Signals, datasets and performance metrics

3.1 Signals

The standard signal for sleep studies is PSG. In addition to this, signals containing cardiorespiratory information such as ECG, PPG, respiratory effort, etc., are commonly used. In recent years, signals like radar and Wi-Fi have also been explored due to their simplicity and comfort (Hong et al. 2019). Commonly used signals are listed in Table 3.

3.1.1 PSG signals

PSG signals are the signals obtained from polysomnogram recordings, which are used to monitor sleep stages. A polysomnogram records a set of signals during sleep using multiple electrodes, covering various physiological parameters such as brain activity, eye movements, and muscle activity (Kayabekir 2019). Electrodes on the scalp record electrical signals related to brain neuron activity, known as EEG. Electrodes near the eyes record electrical signals associated with eye movements, known as EOG. Electromyogram or EMG typically requires needle electrodes inserted into muscles to obtain electrical signals related to muscle activity, and during sleep monitoring, EMG is usually recorded near the chin. These three signals together are referred to as PSG. PSG serves as the standard signal for quantifying sleep stages and sleep quality (Yildirim et al. 2019; Tăutan et al. 2020).

EEG contains information necessary for ML or DL analysis in various domains such as time domain, frequency domain, and time-frequency domain. In the time domain, EEG features are mainly reflected in the changes in amplitude over time. Event-related potentials (ERPs) and statistical features can be obtained through time-domain averaging (Aboalayon et al. 2016). The frequency domain mainly describes the distribution characteristics of EEG power across different frequencies. The fast Fourier transform (FFT) can be used to obtain five basic frequency bands as shown in Table 4, each with different implications (Aboalayon et al. 2016). EEG is a non-stationary signal generated by the superposition of electrical activities of numerous neurons (Li et al. 2022d). It possesses variability and time-varying characteristics, meaning it has different statistical properties at different times and frequency bands, and it undergoes rapid changes within short periods (Wang et al. 2021; Stokes and Prerau 2020). Time-frequency analysis is particularly suitable for such non-stationary signals. Common methods include short-time Fourier transform (STFT), continuous wavelet transform (CWT), and Hilbert-Huang transform (HHT), among others. Time-frequency analysis can simultaneously reveal changes in signals over time and frequency (Jeon et al. 2020; Tyagi and Nehra 2017). Figure 2 shows the time waveforms and time-frequency spectrogram of N1 and N2 stages. Due to its rich information features from multiple perspectives, EEG can be used in sleep stage classification tasks in various forms. For example, Biswal et al. (2018) constructed neural networks using raw EEG or time-frequency spectra as inputs. They also compared machine learning methods with expert handcrafted features as inputs, and the results showed that deep learning methods outperformed machine learning methods. 
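
As a rough illustration of the time-frequency representation discussed above, the sketch below computes an STFT log-power spectrogram of a single 30-second epoch using only NumPy. The 2-second Hann window, 50% overlap, 100 Hz sampling rate, and synthetic 13 Hz test tone are assumptions for the example, not settings taken from the cited studies.

```python
import numpy as np


def stft_spectrogram(epoch, sampling_rate_hz, window_seconds=2.0, overlap=0.5):
    """Log-power spectrogram of one epoch via a Hann-windowed STFT.

    Returns (frequencies_hz, spectrogram) where spectrogram has shape
    (n_frames, n_frequency_bins) and is expressed in decibels.
    """
    window_length = int(window_seconds * sampling_rate_hz)
    hop = int(window_length * (1.0 - overlap))
    # Overlapping frames: one row per time step.
    frames = np.lib.stride_tricks.sliding_window_view(epoch, window_length)[::hop]
    windowed = frames * np.hanning(window_length)
    power = np.abs(np.fft.rfft(windowed, axis=1)) ** 2
    frequencies = np.fft.rfftfreq(window_length, d=1.0 / sampling_rate_hz)
    return frequencies, 10.0 * np.log10(power + 1e-12)


fs = 100  # assumed sampling rate in Hz
t = np.arange(30 * fs) / fs
epoch = np.sin(2 * np.pi * 13.0 * t)  # synthetic 13 Hz tone, not real EEG
frequencies, spec = stft_spectrogram(epoch, fs)
print(spec.shape)  # (29, 101)
peak_hz = frequencies[spec.mean(axis=0).argmax()]
print(peak_hz)  # 13.0
```

The resulting 2D array is the kind of input a 2D CNN would consume; the window length trades time resolution against frequency resolution.
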
EOG and EMG signals exhibit different characteristics in different sleep stages and can provide information for identifying sleep stages. For instance, during the REM stage, eye movements are more intense, whereas during the NREM stage, eye movements are relatively stable (Iber 2007). The amplitude of EMG near the chin during the W stage is variable but typically higher than that in other sleep stages (Iber 2007). However, EOG and EMG are usually used as supplements to EEG. Combining EEG, EOG, and EMG in multimodal sleep stage classification is a popular approach (Phan et al. 2021; Jia et al. 2020a). Multimodal approaches can generally improve performance, but continuous attachment of multiple electrodes might affect the natural sleep state of the subjects. Therefore, single-channel EEG is currently the most popular choice in research (Fan et al. 2021).

3.1.2 Cardiorespiratory signals

PSG often needs to be conducted in specialized laboratories and is challenging for long-term monitoring. In contrast, cardiac and respiratory activities are easier to monitor. Many studies have also confirmed the correlation between sleep and cardiac activity (Bonnet and Arand 1997; Tobaldini et al. 2013). This has led people to explore an alternative approach to sleep monitoring apart from PSG.

Research indicates a strong connection between sleep and the activity of the autonomic nervous system (ANS) (Bonnet and Arand 1997; Tobaldini et al. 2013). When the human body is sleeping, it will be repeatedly controlled by the sympathetic and vagus nerves. When sleep changes from wakefulness to the N3 stage, the blood pressure and heart rate controlled by the ANS will also change accordingly (Shinar et al. 2006; Papadakis and Retortillo 2022). This manifests as different features in cardiac and respiratory activities corresponding to changes in sleep stages. For example, one of the features during REM is highly recognizable breathing frequency and potentially more irregular and rapid heart rate (HR). HR during NREM might be more stable, and during the W stage, there is low-frequency heart rate variability (HRV) and significant body movement (Sun et al. 2020). These discriminative features determine the applicability of cardiorespiratory signals in SSC. Cardiorespiratory signals encompass signals containing information about both heart and respiratory activities, primarily including ECG, PPG, and respiratory effort. ECG is a technique used to record cardiac electrical activity, which can directly reflect a person’s respiratory and circulatory systems (Sun et al. 2022). In SSC, raw ECG signals are not directly used; instead, derived signals are employed, such as HR (Sridhar et al. 2020), HRV (Fonseca et al. 2020), ECG-derived respiration (EDR) (Li et al. 2018), RR intervals (RRIs) (Goldammer et al. 2022), RR peak sequences (Sun et al. 2020), and others. An example of an ECG is shown in Fig. 3: the instantaneous heart rate sequence derived from the ECG and the corresponding overnight sleep stage changes (Sridhar et al. 2020). PPG is a low-cost technique measuring changes in blood volume, commonly used to monitor heart rate, blood oxygen saturation, and other information. 
PPG is simple to implement and can be collected at the hand using photodetectors embedded in watches or rings (Kotzen et al. 2022; Radha et al. 2021; Walch et al. 2019). HR and HRV can be derived from PPG, indirectly reflecting sleep stages. A small portion of research also uses raw PPG for classification (Kotzen et al. 2022; Korkalainen et al. 2020). Figure 4 shows examples of PPG signal waveforms corresponding to the five sleep stages (Korkalainen et al. 2020). Similar to EEG, ECG and PPG also have their auxiliary signals. Common choices include combining signals from chest or abdominal respiratory efforts with accelerometer signals (Olsen et al. 2022; Sun et al. 2020). For instance, in Goldammer et al. (2022), the authors derived RR intervals from ECG and combined them with breath-by-breath intervals (BBIs) derived from chest respiratory efforts for W/N1/N2/N3/REM classification. In Walch et al. (2019), the authors used PPG and accelerometer signals collected from the “Apple Watch” to classify W/NREM/REM sleep stages. It’s worth noting that most studies in cardiac and respiratory signal research focus on four-stage (W/L/D/REM, L: light sleep, D: deep sleep) or three-stage (W/NREM/REM) classification.
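
The derived cardiac signals mentioned above follow directly from R-peak timing. The sketch below assumes R-peak times have already been detected (peak detection itself is out of scope) and derives RR intervals, instantaneous heart rate, and RMSSD, which is one common time-domain HRV statistic. The function names and the peak times are hypothetical.

```python
import numpy as np


def rr_intervals_seconds(r_peak_times_s):
    """RR intervals (RRIs): time differences between successive R peaks."""
    return np.diff(np.asarray(r_peak_times_s, dtype=float))


def instantaneous_heart_rate_bpm(rr_s):
    """Beat-by-beat heart rate in beats per minute."""
    return 60.0 / rr_s


def rmssd_ms(rr_s):
    """Root mean square of successive RRI differences, in milliseconds."""
    return float(np.sqrt(np.mean(np.diff(rr_s * 1000.0) ** 2)))


# Hypothetical R-peak times in seconds (made up for illustration).
r_peaks = [0.0, 1.0, 2.0, 2.8, 3.6, 4.6]
rr = rr_intervals_seconds(r_peaks)
hr = instantaneous_heart_rate_bpm(rr)
print(np.round(rr, 3))
print(np.round(hr, 1))
print(round(rmssd_ms(rr), 1))
```

With these made-up peaks the heart rate alternates between roughly 60 and 75 beats per minute, the kind of variation a model would see in an instantaneous heart rate series.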

Fig. 3 The instantaneous heart rate time series derived from the ECG signal throughout the night, and the corresponding changes in sleep stages throughout the night (Sridhar et al. 2020)

Fig. 4 The waveforms of the original PPG signals corresponding to the five different sleep stages (Korkalainen et al. 2020)

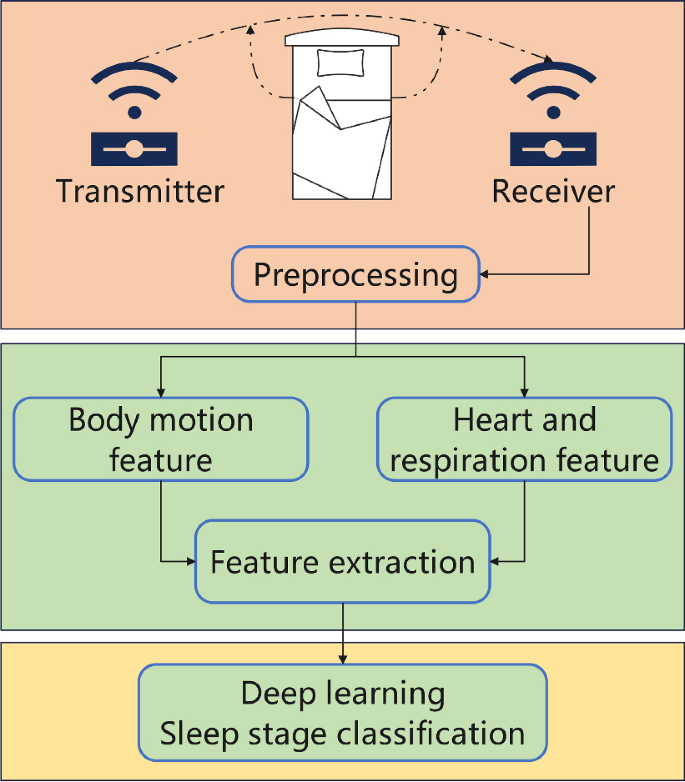

3.1.3 Contactless signals

The use of cardiorespiratory signals can effectively reduce the inconvenience caused to patients during sleep monitoring (compared to PSG). However, it still involves physical contact with the subjects. The development of non-contact sensors (such as biometric radar, Wi-Fi, microphones, etc.) has changed this situation.

In recent years, radar technology has been used for vital sign and activity monitoring (Fioranelli et al. 2019; Hanifi and Karsligil 2021; Khan et al. 2022). In these systems, radar sensors emit low-power radio frequency (RF) signals and extract vital signs, including heart rate, respiration rate, movement, and falls, from reflected signals. Wi-Fi technology has subsequently been developed, utilizing Wi-Fi channel state information (CSI) to monitor vital signs more cost-effectively (Soto et al. 2022; Khan et al. 2021). For example, research by Diraco et al. (2017) used ultra-wideband (UWB) radar and DL methods to monitor vital signs and falls, and Adib (2019) achieved HR measurement and emotion recognition using Wi-Fi. Previous studies have demonstrated that HR, respiration, and movement information can be extracted from RF signals reflected off the human body, which fundamentally still falls under the category of cardiorespiratory signals, and they are also related to sleep stages. Therefore, in principle, we can perform contactless SSC using technologies such as radar or Wi-Fi (Zhao et al. 2017). Subsequent research has proven the feasibility of wireless signals for SSC (Zhai et al. 2022; Zhao et al. 2017; Yu et al. 2021a). Additionally, some research has achieved good results in sleep stage classification by recording nighttime breathing and snoring information through acoustic sensors (Hong et al. 2022; Tran et al. 2023). However, compared to other methods, audio signals might raise concerns about privacy.

3.2 Public datasets

Data is one of the most crucial components in DL. In recent years, the field of sleep stage classification has seen the emergence of several public databases, with the two most prominent ones being PhysioNet (Goldberger et al. 2000) and NSRR (Zhang et al. 2018). Widely used datasets such as Sleep-EDF2013 (SEDF13), Sleep-EDF2018 (SEDF18), and CAP-Sleep are all derived from the open-access PhysioNet database. The Sleep-EDF (SEDF) series is perhaps the most extensively utilized dataset. In SEDF18, each recording comprises 2 EEG channels, 1 EOG channel, and 1 chin EMG channel. The data is divided into two parts: SC (without medication) and ST (with medication). SC includes 153 (nighttime) recordings from 78 subjects who did not take medication. ST comprises 44 recordings from 22 subjects who took medication. The data is annotated using R&K rules, and EEG and EOG have a sampling rate of 100 Hz. Another notable database is NSRR, from which datasets like SHHS (Quan et al. 1997) and MESA (Chen et al. 2015) are derived. Table 5 summarizes some of the public datasets.

Public datasets have significantly propelled the development of DL-SSC research. For instance, they can serve as common references and benchmarks, and can be used directly for data augmentation or transfer learning to enhance model performance. However, existing datasets also present certain challenges. On one hand, different datasets vary in sampling rates and channels. Automated (DL) methods are often designed based on specific datasets, so they can handle only particular input shapes (Guillot et al. 2021). A common solution is to perform operations like resampling and channel selection on different datasets to standardize the input shape (Lee et al. 2024). On the other hand, class imbalance is prevalent in sleep data. Class imbalance refers to a situation where certain categories in the dataset have significantly fewer samples than others. Due to the inherent nature of sleep, the duration of each stage in sleep recordings is not equal (Fan et al. 2020). We have compiled the sample distribution of several datasets in Table 6. The results indicate that N2 constitutes around 40% of the total samples, while N1 has substantially fewer samples. This sample imbalance might introduce biases in model training. In current research, N1 stage recognition generally performs the worst. For example, in the study by Eldele et al. (2021), the F1-score for the N1 class was only around 40.0, while other categories scored around 85. This class imbalance is intrinsic to sleep and cannot be eliminated. However, its impact can be mitigated through certain methods, which we will discuss in Sect. 4.1.2.

3.3 Performance metrics

Sleep staging is essentially a multi-class classification problem, commonly evaluated using performance metrics such as accuracy (ACC), macro F1-score (F1), and Cohen’s Kappa coefficient. Accuracy refers to the ratio between the number of samples correctly classified by the model and the total number of samples. The calculation formula is as follows:

\[ ACC = \frac{TP + TN}{TP + TN + FP + FN} \]

where true positive (TP) is the number of samples correctly predicted as positive class by the model, and true negative (TN) is the number of samples correctly predicted as negative class by the model. TP and TN both represent instances where the model’s prediction matches the actual class, indicating correct predictions. False positive (FP) is the number of negative class samples incorrectly predicted as positive class by the model, and false negative (FN) is the number of positive class samples incorrectly predicted as negative class by the model. FP and FN represent instances where the model’s prediction does not match the actual class, indicating incorrect predictions.

ACC is a commonly used evaluation metric in classification problems, but it may show a “pseudo-high” characteristic when dealing with imbalanced datasets (Thölke et al. 2023). In contrast, the F1-score takes into account both precision (PR) and recall (RE) of the model. PR is the proportion of truly positive samples among all samples predicted as positive by the model (Yacouby and Axman 2020). RE is the proportion of actual positive samples that the model correctly predicts as positive. In classification problems, each class has its own F1-score, known as the per-class F1-score. Taking the average of the F1-scores for all classes yields the more commonly used macro F1-score (MF1). The calculation formulas are as follows:

\[ PR = \frac{TP}{TP + FP}, \qquad RE = \frac{TP}{TP + FN} \]

\[ F1_i = \frac{2 \cdot PR_i \cdot RE_i}{PR_i + RE_i}, \qquad MF1 = \frac{1}{N} \sum_{i=1}^{N} F1_i \]

where the subscript \(i\) denotes the \(i\)-th class and \(N\) is the number of classes.

Cohen’s Kappa coefficient (abbreviated as Kappa) measures the agreement between observers and is used to quantify the consistency between the model’s predicted results and the actual observed results (Hsu and Field 2003). The calculation formula is as follows:

\[ Kappa = \frac{{P_{ec}} - {P_{ei}}}{1 - {P_{ei}}} \]

where \({P_{ec}}\) is the observed agreement (the proportion of samples with consistent actual and predicted labels), and \({P_{ei}}\) is the chance agreement (the expected probability of agreement between predicted and actual labels, calculated based on the distribution of actual and predicted labels). Kappa ranges from -1 to +1, with higher values indicating better agreement.

Among these three commonly used performance metrics, accuracy corresponds to the ratio of correctly classified samples to the total number of samples, ranging from 0 (all misclassified) to 1 (perfect classification). ACC represents the overall measure of a model’s correct predictions across the entire dataset. The basic element of calculation is an individual sample, with each sample having equal weight, contributing the same to ACC. Once the concept of class is considered, there are majority and minority classes, with the majority class obviously having higher weight than the minority class. Therefore, in the face of class-imbalanced datasets, the high recognition rate and high weight of the majority class can obscure the misclassification of the minority class (Grandini et al. 2020). This means that high accuracy does not necessarily indicate good performance across all classes.

MF1 is the macro-average of the F1-scores of each class. MF1 evaluates the algorithm from the perspective of the classes, treating all classes as the basic elements of calculation, with equal weight in the average, thus eliminating the distinction between majority and minority classes (the effect of large and small classes is equally important) (Grandini et al. 2020). This means that high MF1 indicates good performance across all classes, while low MF1 indicates poor performance in at least some classes.

Cohen’s Kappa coefficient is used to measure the consistency between the classification results of the algorithm and the ground truth (human expert classification), ranging from -1 to 1, but typically falling between 0 and 1. From the Kappa formula, it can be seen that Kappa considers both correct and incorrect classifications across all classes. In the case of class imbalance, even if the classifier performs well on the majority class, misclassifications on the minority class can significantly reduce Kappa (Ferri et al. 2009). To illustrate this with a simple binary classification problem, assume there are 100 samples in total for classes 0 and 1, with a ratio of 9 : 1. If a poorly performing model always predicts class 0, it is entirely wrong on class 1, yet the ACC is still as high as 90%. Calculating the F1-score shows that class 0 scores about 0.95 (precision 0.9, recall 1.0) and class 1 scores 0, resulting in an MF1 of only about 0.47. MF1 weighs the majority and minority classes equally and therefore reflects the poor classification performance. The Kappa value is 0, indicating no agreement between the model’s predictions and the ground truth beyond what chance would produce. Even though the overall accuracy is high, it does not indicate real classification ability. In summary, this confirms that in the face of class-imbalanced datasets, MF1 and Kappa can provide more reliable and comprehensive evaluations than accuracy.
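
The binary example can be checked numerically. The sketch below builds the confusion matrix for a model that always predicts class 0 on a 90 : 10 split and computes the three metrics from their standard definitions; the helper names are illustrative. Computed exactly, the per-class F1 of class 0 is 180/190 ≈ 0.947 (its precision is 0.9, not 1.0), so MF1 is about 0.474.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predicted classes."""
    matrix = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        matrix[t, p] += 1
    return matrix


def accuracy(cm):
    return np.trace(cm) / cm.sum()


def per_class_f1(cm):
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp
    fn = cm.sum(axis=1) - tp
    denom = 2 * tp + fp + fn
    # F1 = 2TP / (2TP + FP + FN), equivalent to the harmonic mean of PR and RE.
    # Set to 0 when a class has no true positives, false positives or false negatives.
    return np.divide(2 * tp, denom, out=np.zeros_like(tp), where=denom > 0)


def cohen_kappa(cm):
    n = cm.sum()
    p_observed = np.trace(cm) / n
    # Chance agreement from the marginal distributions of true and predicted labels.
    p_chance = (cm.sum(axis=0) * cm.sum(axis=1)).sum() / n**2
    return (p_observed - p_chance) / (1 - p_chance)


y_true = [0] * 90 + [1] * 10
y_pred = [0] * 100  # degenerate model: always predicts the majority class
cm = confusion_matrix(y_true, y_pred, 2)
f1 = per_class_f1(cm)
print(f"ACC={accuracy(cm):.3f} MF1={f1.mean():.3f} Kappa={cohen_kappa(cm):.3f}")
# ACC=0.900 MF1=0.474 Kappa=0.000
```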

4 ASSC based on PSG signals

The essence of automatic sleep stage classification lies in the analysis of sleep data and the extraction of relevant information. In the process of data analysis, appropriate preprocessing and data representation methods can help the model learn and interpret these signals more effectively. This section provides detailed explanations of the preprocessing of PSG signals, methods of data representation, and deep learning models.

4.1 Preprocessing methods and class imbalance problems

Preprocessing plays a crucial role in the classification of sleep stages. Appropriate preprocessing methods have a positive impact on subsequent feature extraction, whether it is manual feature extraction in traditional machine learning or high-dimensional feature extraction in deep learning (Wang and Yao 2023). Class imbalance is a persistent problem in sleep stage classification, as shown in Table 6. In this section, we will discuss preprocessing methods and approaches to handling class imbalance problems (CIPs).

4.1.1 Preprocessing methods

In PSG studies, most research is based on single-channel EEG, while a smaller portion uses combinations of EEG and other signals. The original EEG signal has a typically low signal-to-noise ratio: it is usually weak in amplitude and contains substantial background noise that needs to be removed before analysis (Al-Saegh et al. 2021). Additionally, the original EEG sometimes needs to be enhanced to better meet the requirements of the task. For these reasons, the following preprocessing methods have appeared in existing studies.

Notch filtering: Used to eliminate 50 Hz or 60 Hz power line interference noise (power frequency interference) (Zhu et al. 2023).

Bandpass filtering: Used to remove noise and artifacts. The cutoff frequencies for filtering are inconsistent across different studies, even for the same signal from the same dataset. For example, Phyo et al. (2022) and Jadhav et al. (2020) applied bandpass filtering with cutoff frequencies of 0.5–49.9 Hz and 0.5–32 Hz for the EEG Fpz-Cz channel of the SEDF dataset, respectively.

Downsampling: Signals from different datasets have varying sampling rates. When utilizing multiple datasets, downsampling is often performed to standardize the rates. Downsampling also reduces computational complexity (Fan et al. 2021).

Data scaling and clipping: Scaling adjusts the signal values proportionally to facilitate subsequent processing by adjusting the amplitude range. Clipping is done to prevent large disturbances caused by outliers during model training. Guillot et al. (2021) first scaled the data to have a unit-interquartile range (IQR) and zero-median and then clipped values greater than 20 times the IQR.

Normalization: Normalization belongs to the broader category of data scaling and is listed separately here for convenience. As the most common preprocessing step, normalization plays a significant role in deep learning. It scales the data proportionally to fit within a specific range or distribution. Normalization brings the data of different features into the same range, ensuring that each feature has a comparable impact on the results during model training, thereby improving training effectiveness. Z-score normalization (standardization) is the most commonly used method: the mean is subtracted from each value and the result is divided by the standard deviation, so that the transformed data have a mean of 0 and a standard deviation of 1. Olesen et al. (2021) applied Z-score normalization to each signal during preprocessing to adapt to differences in devices and baselines while evaluating the generalization ability of the model across five datasets. Additionally, data scaling and data normalization should not be confused, despite their similarities and occasional interchangeable use. Both methods transform the values of numerical variables so that the transformed data points have specific useful properties. In simple terms: scaling changes the range of the data, while normalization changes the shape of the data distribution. Specifically, data scaling focuses on adjusting the amplitude range of the data, such as to between 0 and 100 or between 0 and 1. Data normalization, on the other hand, is a relatively more aggressive transformation that focuses on changing the shape of the data distribution, adjusting the data to a common distribution, typically a Gaussian (normal) distribution (Ali et al. 2014). These two techniques are usually not used simultaneously; in practice, the choice is generally made based on the specific characteristics of the data and the needs of the model.
The relevant characteristics of the data include the presence of outliers, the numerical range of features, and their distribution. For example, when data contain a small number of outliers, scaling is often more appropriate than normalization. In particular, the median and IQR-based scaling method used by Guillot et al. (2021) (often referred to as robust scaling) is especially suitable for data with outliers because it uses the median and interquartile range to scale the data, which limits the influence of extreme values. By contrast, outliers can significantly affect the mean and standard deviation of the data, and thus reduce the effectiveness of normalization based on the mean and standard deviation. Different models also have different requirements. For instance, distance-based algorithms (such as SVM) typically require data scaling, while algorithms that assume data is normally distributed commonly use normalization.
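The difference between robust scaling and Z-score normalization in the presence of an outlier can be illustrated with a small sketch. It uses only the Python standard library; the function names, the toy signal, and the symmetric clipping at plus or minus 20 scaled units are assumptions made for illustration and are not taken from the implementation of Guillot et al. (2021).

```python
import statistics


def robust_scale(signal, clip_factor=20.0):
    """Centre on the median, divide by the IQR, then clip extreme values."""
    q1, _, q3 = statistics.quantiles(signal, n=4, method="inclusive")
    median = statistics.median(signal)
    iqr = q3 - q1
    scaled = [(x - median) / iqr for x in signal]
    # After scaling the IQR equals 1, so a bound of clip_factor in scaled
    # units corresponds to clip_factor times the IQR of the original data.
    return [max(-clip_factor, min(clip_factor, x)) for x in scaled]


def z_score(signal):
    """Subtract the mean and divide by the (population) standard deviation."""
    mean = statistics.fmean(signal)
    std = statistics.pstdev(signal)
    return [(x - mean) / std for x in signal]


# Toy signal: five ordinary values and one large artifact.
signal = [-2.0, -1.0, 0.0, 1.0, 2.0, 500.0]

robust = robust_scale(signal)
standard = z_score(signal)

print(robust)    # ordinary values keep their spread; the artifact is clipped
print(standard)  # the artifact inflates the std and squeezes the other values
```

With robust scaling the five ordinary values remain well separated and the artifact is capped, whereas the Z-score compresses the ordinary values into a very narrow interval because the artifact dominates the mean and standard deviation.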

4.1.2 Class imbalance problems

In the preceding text, we have discussed the problem of class imbalance in sleep data (Table 6). Deep learning heavily relies on data, and when learning from such imbalanced data, the majority class tends to dominate, leading to a rapid decrease in its error rate (Fan et al. 2020). The result of training might be a model biased towards learning the majority class, performing poorly on minority classes. Moreover, when the number of samples in the minority class is very low, the model might overfit to these samples’ features, achieving high performance on the training set but poor generalization to unseen data (Spelmen and Porkodi 2018). The class imbalance problem in sleep cannot be eradicated but can only be suppressed through certain measures. The most common approach in existing research is data augmentation (DA), which falls within the preprocessing domain, while another category manifests during the training process.

DA is a method to expand the number of training samples without collecting substantial amounts of new data (Zhang et al. 2022a). Typically, it generates new samples for minority classes to equalize the sample counts across classes, constructing a new dataset (Fan et al. 2020). Three methods are generally used in existing research to generate new augmented data.

Oversampling: Aims to increase the number of samples in minority classes. Balancing the class distribution through oversampling enhances the ability of classification algorithms to recognize minority classes. To prevent the model from favoring the majority class excessively, Supratak et al. (2017) used “oversampling with replication” during training. By replicating minority stages from the original dataset, all stages had the same number of samples, which kept the model from overfitting to the majority stages. Mousavi et al. (2019) used “oversampling with SMOTE (synthetic minority over-sampling technique)” (Chawla et al. 2002). SMOTE synthesizes new samples by interpolating between an existing minority sample and similar (neighboring) minority samples.

Morphological transformation: A common image augmentation method in image processing is geometric transformation, including rotation, flipping, random scaling, etc. Similar transformations can be performed on physiological signals. Common operations include translation (along the time axis), horizontal or vertical flipping, etc. Noise can also be added to introduce further variability (Fan et al. 2021). Zhao et al. (2022) applied random morphological transformations, deciding with a 50% probability whether to perform cyclic horizontal shifting and horizontal flipping on each EEG epoch.

Generative adversarial networks (GANs): The GAN is a deep learning model proposed by Ian Goodfellow and colleagues in 2014 (Goodfellow et al. 2014). The core of a GAN is the competition between two neural networks (generator and discriminator), with the ultimate goal of generating realistic data. GANs are widely used for image generation and have similar applications in physiological signals. For instance, Zhang and Liu (2018) proposed a conditional deep convolutional generative adversarial network (cDCGAN) to augment EEG training data in the brain-computer interface field. This network can automatically generate artificial EEG signals, effectively improving the classification accuracy of the model in scenarios with limited training data. Kuo et al. (2022) used another variant, the self-attention GAN (SAGAN) (Zhang et al. 2019), which was designed for image generation tasks. The authors applied continuous wavelet transform to the original EEG signal and used SAGAN to augment the obtained spectrograms. A detailed introduction to the GAN model can be found in Sect. 4.3.
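The first two augmentation families above can be sketched in a few lines. This is a simplified illustration using only the Python standard library, not the code of the cited studies: the function names are hypothetical, `interpolate_sample` shows only the interpolation step of SMOTE (the full algorithm also selects the partner among the k nearest minority neighbors), and horizontal flipping is interpreted here as reversing the epoch along the time axis.

```python
import random


def oversample_with_replication(epochs, labels, rng):
    """Duplicate randomly chosen epochs until every class matches the largest one."""
    by_class = {}
    for x, y in zip(epochs, labels):
        by_class.setdefault(y, []).append(x)
    target = max(len(items) for items in by_class.values())
    out_x, out_y = [], []
    for y, items in by_class.items():
        extra = [rng.choice(items) for _ in range(target - len(items))]
        out_x.extend(items + extra)
        out_y.extend([y] * target)
    return out_x, out_y


def interpolate_sample(a, b, rng):
    """SMOTE-style synthesis: a random point on the segment between a and b."""
    lam = rng.random()
    return [ai + lam * (bi - ai) for ai, bi in zip(a, b)]


def random_morph(epoch, rng, p=0.5):
    """Apply a cyclic time shift and a time reversal, each with probability p."""
    out = list(epoch)
    if rng.random() < p:
        k = rng.randrange(len(out))
        out = out[-k:] + out[:-k]  # cyclic shift by k samples
    if rng.random() < p:
        out = out[::-1]            # flip along the time axis
    return out


rng = random.Random(0)
epochs = [[float(i)] * 4 for i in range(10)]  # ten toy "epochs" of 4 samples
labels = ["N2"] * 7 + ["N1"] * 3              # N1 is the minority stage

balanced_x, balanced_y = oversample_with_replication(epochs, labels, rng)
synthetic = interpolate_sample(epochs[7], epochs[8], rng)
augmented = random_morph([1, 2, 3, 4, 5], rng)
```

Replication and interpolation change only the class counts, whereas the morphological transformation alters the waveform itself, which is why its effect on the physiological meaning of an epoch needs to be considered.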

Another category of methods does not belong to preprocessing but is applied during model training. Firstly, there is class weight adjustment, usually performed in the loss function. The basic idea is to introduce class weights into the loss function, giving more weight to minority classes so that training focuses more on their classification performance. Commonly used methods include the weighted cross-entropy loss function (Zhao et al. 2022) and the focal loss function (Lin et al. 2017; Neng et al. 2021). Secondly, there are ensemble learning strategies, which balance the model’s attention to different classes by combining predictions from multiple base models, thus improving performance. Kuo et al. (2021) trained 13 basic CNN models and selected the top 3 best-performing ones to form an ensemble model, achieving an average accuracy of 93.78%. Research focusing on addressing data imbalance problems is summarized in Table 7.

We reviewed current methods for mitigating class imbalance in sleep stage classification. Various methods can achieve performance improvements, but their applicability needs further discussion. For DA, the key is to introduce additional information while minimizing changes to the physiological or medical significance of the signals, thereby increasing data diversity (Rommel et al. 2022). Oversampling typically involves replicating existing samples or synthesizing similar samples based on existing ones. GAN, through adversarial training, can implicitly learn the mapping from latent space to the overall distribution of sleep data, generating samples that better fit the original data distribution and are more diverse (Fan et al. 2020). In morphological transformation methods, however, new samples are obtained simply by flipping, translating, or otherwise altering the original samples. For weak signals like EEG, simple waveform changes can lead to different medical interpretations. Morphological transformations may therefore not bring about sample diversity and could introduce incorrectly labeled new samples, severely disrupting model learning. This was demonstrated by Fan et al. (2020), who compared EEG data augmentation methods such as repeating the minority classes (DAR), morphological change (DAMC), and GAN (DAGAN) on the MASS dataset. The results showed that DAMC performed the worst among all methods, only improving accuracy by 0.9%, while DAGAN improved performance by 3.8%. However, DAGAN introduced additional model training and resource costs. In Fan et al.’s experiments, GAN required 71.69 h of training and 19.63 min to generate synthetic signals, whereas morphological transformations only needed 201 min.

Class weight adjustment is typically done in the loss function, introducing minimal additional computation but usually bringing in new hyperparameters. For instance, the weighted cross-entropy loss function is calculated as follows:

\[ \textit{L} = -\frac{1}{\textit{M}}\sum_{i=1}^{M}\sum_{k=1}^{K} \textit{w}_k \, \textit{y}_i^k \log \hat{y}_i^k \]

where \(\textit{y}_i^k\) is the actual label value of the \(\textit{i}\)-th sample for class \(\textit{k}\) (1 if the sample belongs to class \(\textit{k}\) and 0 otherwise), \(\hat{y}_i^k\) is the predicted probability for class \(\textit{k}\) of the \(\textit{i}\)-th sample, \(\textit{M}\) is the total number of samples, and \(\textit{K}\) is the total number of classes. \(\textit{w}_k\) represents the weight of class \(\textit{k}\), which is typically provided as a hyperparameter by the researchers. Other functions, such as focal loss, introduce two hyperparameters: the modulating (focusing) factor and the class weight. Ensemble learning requires training multiple base models for combined predictions, which adds extra overhead.
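To make the role of the class weights and of the focal-loss hyperparameters concrete, the following is a minimal standard-library sketch. It assumes one-hot labels, averaging over the \(\textit{M}\) samples, and the common focal-loss form in which each term is multiplied by \((1-\hat{y}_i^k)^{\gamma}\); the function names, the small `eps` guard, and the example weights are illustrative choices rather than values from the cited studies.

```python
import math


def weighted_cross_entropy(y_true, y_prob, class_weights, eps=1e-12):
    """Mean over samples of -sum_k w_k * y_ik * log(p_ik); inputs are M x K."""
    total = 0.0
    for y_row, p_row in zip(y_true, y_prob):
        for w, y, p in zip(class_weights, y_row, p_row):
            total -= w * y * math.log(max(p, eps))
    return total / len(y_true)


def focal_loss(y_true, y_prob, class_weights, gamma=2.0, eps=1e-12):
    """Weighted cross-entropy with each term down-weighted by (1 - p)^gamma."""
    total = 0.0
    for y_row, p_row in zip(y_true, y_prob):
        for w, y, p in zip(class_weights, y_row, p_row):
            total -= w * y * (1.0 - p) ** gamma * math.log(max(p, eps))
    return total / len(y_true)


# Two samples, two classes; class 1 plays the role of the minority class.
y_true = [[1, 0], [0, 1]]
y_prob = [[0.9, 0.1], [0.2, 0.8]]

plain = weighted_cross_entropy(y_true, y_prob, [1.0, 1.0])
weighted = weighted_cross_entropy(y_true, y_prob, [1.0, 5.0])
focal = focal_loss(y_true, y_prob, [1.0, 5.0], gamma=2.0)
```

Raising the weight of class 1 increases the contribution of its errors to the loss, while the focal term reduces the contribution of samples that are already classified with high confidence. Both the weights and `gamma` have to be chosen by the researcher, which is the hyperparameter cost noted above.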

In summary, each existing method has its pros and cons. In terms of low cost and ease of application, oversampling and morphological transformation have more advantages. Although weighted loss functions also have low costs, they come with hyperparameter issues. GAN have performance advantages. When researchers can accommodate the additional overhead brought by GAN or pursue higher performance, GAN are worth trying. Additionally, Fan et al. (2020) and Rommel et al. (2022) conducted in-depth comparisons and analyses of different data augmentation methods, and interested readers can refer to their works.

4.2 Data representation

When using DL methods to process sleep data, a crucial issue is transforming sleep signals into suitable representations for subsequent learning by DL models. The choice of representation largely depends on the model’s requirements and the nature of the signal (Altaheri et al. 2023). Appropriate input forms enable the model to effectively learn and interpret sleep information. Signals can be directly represented as raw values, in which case they are in the time domain. Through signal processing methods such as wavelet transforms and Fourier transforms, transform-domain representations of the signal can be obtained. Moreover, a combination of these two approaches is also commonly used. Figure 5 displays the representation methods and their proportions used in the reviewed articles. Figure 6a categorizes the representation methods applicable to PSG data, while Fig. 6b–e provides examples of these different representations.

Fig. 6 Classification of PSG data representation methods, and examples of each representation method. a Classification of representations; b Raw multi-channel signal, [TP\(\times\)C]; c STFT yields a [T\(\times\)F] time-frequency spectrogram; d STFT yields a [T\(\times\)F\(\times\)C] time-frequency spectrogram; e FFT yields an [F\(\times\)C] spatial-frequency spectrum. TP Time Point (sampling point), C Channel (electrode), T Time window (time segment), F Frequency

4.2.1 Raw signal

In the time domain, the raw signal represents the main information as variations in signal amplitude over time. When signals come from multiple channels (electrodes), they can be represented as a 2D matrix of [TP (time point)\(\times\)C (channel)] (for a single channel, it would be [TP\(\times\)1]). This can be visualized as shown in Fig. 6b. Traditionally, specific manually designed features, such as power spectral density features, are extracted from raw signals for input (Xu and Plataniotis 2016). However, DL methods can automatically learn complex features from extensive data. This allows researchers to bypass the manual feature extraction step, directly inputting 1D raw signals with limited or no preprocessing into neural networks. In recent years, this straightforward and effective approach has become increasingly mainstream (as indicated in Fig. 5). Existing studies have directly input raw signals into various DL models, achieving good performance. These include classic CNN architectures like ResNet (He et al. 2016; Seo et al. 2020) and U-Net (Ronneberger et al. 2015; Perslev et al. 2019), as well as CNN models proposed by researchers (Tsinalis et al. 2016; Goshtasbi et al. 2022; Sors et al. 2018). They also include models such as RNNs (long short-term memory or gated recurrent unit) and Transformer (Phyo et al. 2022; Olesen et al. 2021; Lee et al. 2024; Pradeepkumar et al. 2022). In these works, minimal or no preprocessing has been applied. For example, Seo et al. (2020) utilized an improved ResNet to extract representative features from raw single-channel EEG epochs and explicitly emphasized that their method was an “end-to-end model trained in one step”, requiring no additional data preprocessing methods.

4.2.2 Transform domain

Transform-domain data are typically obtained from the raw signals through methods such as short-time Fourier transform (STFT), continuous wavelet transform (CWT), Hilbert-Huang transform (HHT), fast Fourier transform (FFT), and others. STFT, CWT and HHT fall under time-frequency analysis methods, providing time-frequency spectrograms that encompass both time and frequency information. The spectrogram can be regarded as a specific type of image, offering better understanding of the signal’s time-frequency features and patterns of change. As depicted in Fig. 6c and d, spectrograms can be represented as [T (time window)\(\times\) F (frequency)] or in the case of multiple channels, [T\(\times\)F\(\times\)C]. The time-frequency analysis methods differ from one another. For instance, STFT utilizes a fixed-length window for signal analysis, and thus can be considered a static method with respect to time and frequency resolution. In contrast, CWT employs multiple resolution windows, providing dynamic features (Herff et al. 2020; Elsayed et al. 2016). To our knowledge, there is a lack of comprehensive research comparing the performance of different time-frequency analysis methods. For EEG, the energy across different sleep stages not only varies in frequency but also in spatial distribution (Jia et al. 2020a). This spatial information can be introduced through the “spatial-frequency spectrum”, typically implemented using FFT (Cai et al. 2021), as shown in Fig. 6e.

Phan et al. (2019) transformed 30-second epochs of EEG, EOG, and EMG signals into power spectra using STFT (window size of 2 s, 50% overlap, Hamming window, and 256-point FFT). This resulted in a multi-channel image of [T\(\times\)F\(\times\)C], where C = 3. The authors input these spectrogram data into a bidirectional hierarchical RNN model with attention mechanisms for sleep stage classification. The spatial-frequency spectrum introduced EEG electrode spatial information to enhance classification accuracy. Jia et al. (2020a) first conducted frequency domain feature analysis on power spectral density using FFT for five EEG frequency bands (delta, theta, alpha, beta, gamma) closely related to sleep. They placed the frequency domain features of different electrodes in the same frequency band on a 16\(\times\)16 2D map, resulting in five 2D maps representing different frequency bands. Each 2D map was treated as a channel of the image, producing a 5-channel image for each sample representing the spatial distribution of frequency domain features from different frequency bands.
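The shape of the [T\(\times\)F] representation follows directly from the STFT settings quoted above. The sketch below is a plain-Python STFT written for illustration only (in practice a library routine would be used); it assumes a sampling rate of 100 Hz, which is not stated in the text, and the function name and test signal are hypothetical.

```python
import cmath
import math


def stft_power(signal, fs, win_sec=2.0, overlap=0.5, n_fft=256):
    """Return a [T x F] power spectrogram as a list of T frames of F bins."""
    win_len = int(round(win_sec * fs))
    hop = int(round(win_len * (1.0 - overlap)))
    # Hamming window applied to every frame before the transform.
    window = [0.54 - 0.46 * math.cos(2.0 * math.pi * n / (win_len - 1))
              for n in range(win_len)]
    # Precomputed complex exponentials for an n_fft-point DFT (zero-padded).
    twiddle = [cmath.exp(-2j * math.pi * m / n_fft) for m in range(n_fft)]
    n_bins = n_fft // 2 + 1  # one-sided spectrum
    frames = []
    for start in range(0, len(signal) - win_len + 1, hop):
        seg = [signal[start + n] * window[n] for n in range(win_len)]
        frames.append([
            abs(sum(seg[n] * twiddle[(k * n) % n_fft] for n in range(win_len))) ** 2
            for k in range(n_bins)
        ])
    return frames


fs = 100  # assumed sampling rate in Hz
# A 30-second epoch containing a 10 Hz sinusoid as a stand-in for EEG.
epoch = [math.sin(2.0 * math.pi * 10.0 * n / fs) for n in range(30 * fs)]

spec = stft_power(epoch, fs)
print(len(spec), len(spec[0]))  # T frames, F frequency bins
```

Under these assumptions a 30-second epoch yields 29 time frames and 129 frequency bins. Computing the same spectrogram for EEG, EOG, and EMG and stacking the results along a third axis gives the [T\(\times\)F\(\times\)C] image with C = 3.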

In addition to using a single type of input form, some studies use raw signals and transform-domain representations together. In these studies, it is often considered that individual time-domain, frequency-domain, or spatial-domain features alone are insufficient to completely differentiate sleep stages. Their combination offers complementarity, supplementing classification information (Jia et al. 2020a; Cai et al. 2021; Phan et al. 2021; Fang et al. 2023). Researchers usually construct a multi-branch network to process different forms of data separately. Features from multiple branches are fused using specific strategies to achieve better classification results. For example, Jia et al. (2020a) established a multi-branch model, simultaneously inputting spatial-frequency spectrum from 20 EEG channels and raw signals (EEG, EOG, EMG) into the model.

4.3 Deep learning models

In automatic sleep stage classification, DL has become the mainstream method in recent years compared to traditional ML techniques. Figure 7a and b provide a comparative overview of the workflows between the two methods. DL methods automate the feature extraction and classification steps present in ML, enabling an end-to-end approach. In this section, different deep learning models used in relevant studies will be introduced. DL models can be categorized into two subclasses based on their functionality: discriminative models and generative models, as well as hybrid models formed by combinations of these, as depicted in Fig. 8.

4.3.1 Discriminative models

Discriminative models refer to DL architectures that can learn different features from input signals through nonlinear transformations and classify them into predefined categories using probability predictions (Altaheri et al. 2023). Discriminative models are commonly utilized in supervised learning tasks and serve both feature extraction and classification purposes. In the context of sleep stage classification, the two major types of discriminative models widely used are CNN and RNN.

4.3.1.1 CNN

CNN, one of the most common DL models, is primarily used for tasks such as image classification in computer vision, and in recent years, it has been applied to the classification of biological signals such as ECG and EEG (Yang et al. 2015; Morabito et al. 2016). A CNN is composed of a series of neural network layers arranged in a specific order, typically including five types of layers: input layer, convolutional layer, pooling layer, fully connected layer, and output layer (Yang et al. 2015; Morabito et al. 2016), as illustrated in Fig. 9. Starting from the input layer, the initial few layers learn low-level features, while later layers learn high-level features (Altaheri et al. 2023). The convolutional layer is the core building block of a CNN, where feature extraction from the input data is achieved through convolutional kernels. For example, in a 2D convolution, the input data might be a 224\(\times\)224 matrix and the convolutional kernel a 3\(\times\)3 matrix (the size can be adjusted), whose values are referred to as weight parameters. The convolutional kernel is applied to a specific region of the input data, computing the dot product between the data in that region and the kernel. The result of this dot product is written to the output array. After this computation, the kernel moves by a fixed step, known as the “stride” (one unit in this example), and the process is repeated. This procedure continues until the convolutional kernel has scanned the entire input matrix. The dot product results from this series of scans constitute the final output, known as the feature map, representing the features extracted by the convolution. Note that the kernel remains unchanged during its sliding process, meaning all regions of the input share the same set of weight parameters, which is referred to as “weight sharing” and is one of the critical reasons for CNN’s success. The pooling layer performs a similar but distinct operation by scanning the input with a pooling kernel. 
For instance, in the commonly used max pooling, if the pooling kernel size is 3\(\times\)3, the result of each pooling operation is the maximum value from a 3\(\times\)3 region of the input matrix. The essence of pooling is downsampling, aimed at reducing network complexity or computational load. Typically, a series of consecutive convolution-pooling operations are used to extract data features. The feature maps obtained from convolution and pooling are usually flattened and then fed into one or more fully connected layers. As shown in Fig. 9, in the fully connected layers, each node in the input feature map is fully connected to each node in the output feature map, whereas convolutional layers have partial connections. The fully connected layers often use the softmax function to classify the input appropriately, generating probability values between 0 and 1. CNN is one of the most important models in sleep stage classification, with 76% of the studies reviewed in this paper utilizing CNN, as shown in Fig. 10. The CNN variants used in existing research include both standard CNN architectures, as well as various modified versions of CNN. For example, the residual CNN (He et al. 2016), inception-CNN (Szegedy et al. 2015), dense-convolutional (DenseNet) (Huang et al. 2017), 3D-CNN (Ji et al. 2023), and multi-branch CNN (used in ensemble learning) (Kuo et al. 2021), among others, are listed in Table 8, and their structures are shown in Fig. 11.
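The convolution, pooling, and softmax operations described above can be written out directly. The following is a didactic standard-library sketch with no padding and a single kernel; the function names and the small 6\(\times\)6 input are chosen for illustration, and real CNN layers additionally learn many kernels with biases and nonlinear activations.

```python
import math


def conv2d(image, kernel, stride=1):
    """Slide the kernel over the image (no padding) and take dot products."""
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for i in range(0, len(image) - kh + 1, stride):
        row = []
        for j in range(0, len(image[0]) - kw + 1, stride):
            # The same kernel weights are reused at every position (weight sharing).
            row.append(sum(image[i + a][j + b] * kernel[a][b]
                           for a in range(kh) for b in range(kw)))
        out.append(row)
    return out


def max_pool2d(fmap, size, stride=None):
    """Keep the maximum of each size x size region (stride defaults to size)."""
    stride = size if stride is None else stride
    out = []
    for i in range(0, len(fmap) - size + 1, stride):
        out.append([
            max(fmap[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(fmap[0]) - size + 1, stride)
        ])
    return out


def softmax(logits):
    """Convert scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


image = [[float(r * 6 + c) for c in range(6)] for r in range(6)]  # 6 x 6 input
kernel = [[1.0] * 3 for _ in range(3)]                            # 3 x 3 kernel

feature_map = conv2d(image, kernel)     # 4 x 4 feature map
pooled = max_pool2d(feature_map, 2)     # 2 x 2 after 2 x 2 max pooling
probs = softmax([2.0, 1.0, 0.1])
```

With no padding, an N\(\times\)N input and a k\(\times\)k kernel with stride s give an output of side (N − k)/s + 1, so the 224\(\times\)224 input and 3\(\times\)3 kernel of the example above would produce a 222\(\times\)222 feature map at stride 1.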

Fig. 11 a The upper part is a single residual connection block, and the lower part is a cascade of multiple residual blocks; b Replacing conv-layer with attention module; c Inception structure proposed in GoogLeNet (Szegedy et al. 2015); d Using an ensemble learning approach, the outputs of three basic CNNs are fed into a neural network with a hidden fully connected layer for further learning; e A novel CNN variant: DenseNet (Huang et al. 2017) is a convolutional neural network architecture that directly connects each layer to all subsequent layers to enhance feature reuse, facilitate gradient flow, and reduce the number of parameters

Zhou et al. (2021) proposed a lightweight CNN model that utilized the inception structure (as shown in Fig. 11c) to increase network width while reducing the number of parameters. This model took EEG’s STFT spectrogram as input. In a multimodal deep neural network model proposed in Zhao et al. (2021a), which included two parallel 13-layer 1D-CNNs, residual connections (as shown in Fig. 11a) were used to address potential gradient vanishing problems. EEG and ECG features were extracted separately in their respective convolutional branches and were later merged through simple concatenation for input into the classification module. Jia et al. (2020a) proposed a CNN model using EEG, EOG, and EMG. The model had multiple convolutional branches, which extracted different features from the raw signals and from the images generated by FFT from EEG. Features from different data representations were concatenated and input into the classification module. Kanwal et al. (2019) combined EEG and EOG to create RGB images, which were then transformed into high bit depth FFT features using 2D-FFT and classified using DenseNet (as shown in Fig. 11e). Conversely, in Liu et al. (2023b), an end-to-end deep learning model for automatic sleep staging based on DenseNet was designed and built. This model took raw EEG as input and employed two convolutional branches to extract features at different frequency levels. Significant waveform features were extracted using DenseNet modules and enhanced with coordinate attention mechanisms, achieving an overall accuracy of 90% on SEDF. Kuo et al. (2021) designed a CNN model that utilized CWT time-frequency spectrograms as input and combined Inception and residual connections. They also trained other classic CNN models and selected the top 3 models with the highest accuracy as base CNNs. The outputs of these base CNNs were further learned using a fully connected network with a hidden layer, implementing ensemble learning (as shown in Fig. 11d). In Fang et al. (2023), the authors used an ensemble strategy based on Boosting to combine multiple weak classifiers. Additionally, various CNN variants have been introduced in other studies, such as architectures incorporating different attention modules, as seen in Liu et al. (2023a) and Liu et al. (2022b) (as shown in Fig. 11b).

4.3.1.2 RNN

In many real-world scenarios, the input elements exhibit a certain degree of contextual dependency (temporal dependency) rather than being independent of each other. For instance, the variation of stock prices over time and sleep stage signals both reflect this dependency. To capture such relationships, models need to possess a memory capability, enabling them to make predictive outputs based on both current elements and features of previously input elements. This requirement has led to the widespread use of RNN in sleep stage classification tasks. A typical RNN architecture is illustrated in Fig. 12a, which includes an input layer, an output layer, and a hidden layer. Define \(\textit{x}_t\) as the input at time \(\textit{t}\), \(\textit{o}_t\) as the output, \(\textit{s}_t\) as the memory, \(\textit{U}\), \(\textit{V}\), and \(\textit{W}\) as the weight parameter. As shown on the right side of Fig. 12a, when unfolded along the time axis, the RNN repetitively uses the same unit structure at different time steps, incorporating the memory from the previous time step into the hidden layer during each iteration. \(\textit{U}\), \(\textit{V}\), and \(\textit{W}\) are shared across all time steps, enabling all previous inputs to influence future outputs through this recurrence. RNN possess memory capabilities, making them suitable for the demands of sleep stage classification tasks. However, the memory capacity of RNN is limited: it is generally assumed that inputs closer to the current time have a greater impact, while earlier inputs have a lesser impact, restricting RNN to short-term memory. Additionally, RNN face challenges such as high training costs (due to the inability to perform parallel computations in their recurrent structure) and the problem of vanishing gradients (Yifan et al. 2020). To address these issues, two widely used variants of RNN were proposed: LSTM and GRU. The basic unit composition of LSTM is depicted in Fig. 12b. 
Unlike RNN, which have a single hidden state \(\textit{s}\) representing short-term memory, LSTM introduce \(\textit{h}\) as the hidden state (short-term memory). Moreover, LSTM add a cell state \(\textit{c}\) capable of storing long-term memory. The basic unit is controlled by three gates: the input gate, the forget gate, and the output gate. These “gates” are implemented using the sigmoid function, which outputs a value between 0 and 1, indicating the proportion of information allowed to pass through. Among the three gates in LSTM, the forget gate determines how much of the previous cell state \(\textit{c}_{t-1}\) is retained in the current cell state \(\textit{c}_t\), based on the current input \(\textit{x}_t\) and the previous output \(\textit{h}_{t-1}\). After forgetting the irrelevant information, new memories need to be supplemented based on the current input. The input gate determines, based on \(\textit{x}_t\) and \(\textit{h}_{t-1}\), how much new information derived from \(\textit{x}_t\) is written into the cell state \(\textit{c}_t\), where it is added to the part of \(\textit{c}_{t-1}\) retained by the forget gate. The output gate controls how much of the cell state \(\textit{c}_t\) is output based on \(\textit{x}_t\) and \(\textit{h}_{t-1}\). By introducing the cell state \(\textit{c}\) and gate structures, LSTM can maintain longer memories and mitigate issues such as vanishing gradients. However, LSTM are still essentially recurrent structures and thus cannot perform parallel computations (Yifan et al. 2020). GRU, another common variant of RNN, simplifies the architecture by having only two gate structures, reducing the number of parameters and increasing computational efficiency, though it still lacks the capability for parallel computation (Chung et al. 2014).
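The gate mechanism can be made concrete with a single LSTM step. The sketch below follows the standard LSTM formulation and, for readability, assumes that the input, hidden state, and cell state are single numbers; the parameter layout, names, and example values are hypothetical and not taken from any of the cited models.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x_t, h_prev, c_prev, params):
    """One step of a scalar LSTM cell; params maps a name to (w_x, w_h, bias)."""
    def gate(name, activation):
        w_x, w_h, b = params[name]
        return activation(w_x * x_t + w_h * h_prev + b)

    f_t = gate("forget", sigmoid)        # share of c_prev that is kept
    i_t = gate("input", sigmoid)         # share of the candidate that is written
    g_t = gate("candidate", math.tanh)   # candidate new memory
    o_t = gate("output", sigmoid)        # share of the cell state that is exposed
    c_t = f_t * c_prev + i_t * g_t       # updated long-term memory
    h_t = o_t * math.tanh(c_t)           # updated short-term memory / output
    return h_t, c_t


# Illustrative parameters: the forget gate is almost fully open and the
# input gate almost fully closed, so the cell state is carried over.
params = {
    "forget": (0.0, 0.0, 20.0),
    "input": (0.0, 0.0, -20.0),
    "candidate": (1.0, 1.0, 0.0),
    "output": (0.5, 0.5, 0.0),
}

h, c = 0.0, 0.7
for x in [0.3, -1.2, 0.8, 2.0]:   # the same parameters are reused at every step
    h, c = lstm_step(x, h, c, params)
```

Because the cell state is updated additively and can be carried over almost unchanged when the forget gate is open, information can persist over many time steps. The loop also shows why the computation is sequential: each step needs the `h` and `c` produced by the previous one.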

Phan et al. (2018) designed a bidirectional RNN with an attention mechanism to learn features from the STFT of single-channel EEG signals. The authors first divided the EEG epoch into multiple small frames. Using STFT, they transformed these into continuous frame-by-frame feature vectors, which were then input into the model shown in Fig. 13 for training. The training objective was to enable the model to encode the information of the input sequence into high-level feature vectors. Note that this is not an end-to-end process; the RNN was used as a feature extractor, while the final classification was performed by a linear SVM classifier. As an improvement, they later proposed a bidirectional hierarchical LSTM model combined with attention. The model takes STFT transformations of signals (EEG, EOG, EMG) as input. Based on attention, bidirectional LSTM encodes epochs into attention feature vectors, which are further modeled by bidirectional GRU (Phan et al. 2019). Inspired by their work, Guillot et al. (2020) enhanced a model based on GRU and positional embedding, reducing the number of parameters. In the study by Xu et al. (2020), four LSTM models were constructed, each with different input signal lengths (1, 2, 3, and 4 epochs). It was found that each model exhibited varying sensitivity to different sleep stages. The authors combined models with distinct stage sensitivities, resulting in improved classification accuracy.

Fig. 13 Attention-based bidirectional RNN (Phan et al. 2018)

4.3.1.3 Hybrid

There is rich temporal contextual information between consecutive sleep stages, which should not be ignored in either expert manual staging or computer-assisted staging. For instance, if one or more sleep spindles or K-complexes are observed in the second half of the preceding epoch or the first half of the current epoch, the current epoch is classified as N2 stage. Moreover, sleep exhibits continuous stage transition patterns such as N1-N2-N1-N2 and N2-N2-N3-N2 (Iber 2007; Tsinalis et al. 2016). Both intra-epoch features and inter-epoch dependencies within the epoch sequence should be considered simultaneously (Seo et al. 2020). This is a challenge that individual CNN or RNN models cannot effectively address. Hence, the most common type of model in sleep stage classification is actually the hybrid of CNN and RNN (CRNN), which is designed to handle feature extraction and long-term dependency modeling together. As shown in Fig. 14, hybrid models can be generalized into two main components: a feature extractor (FE) and a sequence encoder (SE). CNN is commonly used as the FE, responsible for extracting epoch features and encoding time-invariant information; RNN is typically used as the SE, focusing on representing relationships between epochs and encoding temporal relationships within the epoch sequence (Supratak et al. 2017; Phyo et al. 2022; Phan and Mikkelsen 2022).

Such a hybrid structure is implemented in DeepSleepNet, proposed by Supratak et al. (2017). The model extracts time-invariant features from raw single-channel EEG using a dual-branch CNN with different kernel sizes and encodes temporal information with a bidirectional LSTM featuring residual connections. DeepSleepNet achieved an accuracy of 82.0% on SEDF. In subsequent improvements, the authors significantly reduced the parameter count of the CRNN structure (to approximately 6% of DeepSleepNet) and improved the accuracy to 85.4% (Supratak and Guo 2020). Seo et al. (2020) used the epoch sequence of raw single-channel EEG as input, employed an improved ResNet-50 network to extract representative features at the sub-epoch level, and captured intra- and inter-epoch temporal context from the obtained feature sequence with a bidirectional LSTM. Performance was compared for input sequences of different lengths (L) ranging from 1 to 10, with the model achieving its best accuracy of 83.9% on SEDF and 86.7% on SHHS when L=10. Neng et al. (2021) divided sleep data into three levels: frame, epoch, and sequence, where a frame is a finer division of an epoch and a sequence is a series of epochs. Based on this, they designed models with a frame-level CNN, an epoch-level CRNN, and a sequence-level RNN, essentially aiming at modeling long-term dependencies. The input sequence length of the model was 25 epochs, and it achieved an accuracy of 84.29% on SEDF.
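The FE/SE decomposition discussed above can be illustrated with a deliberately small NumPy sketch. It is not DeepSleepNet or any other cited model: a single random convolution layer with global max pooling stands in for the CNN feature extractor, a plain bidirectional tanh RNN stands in for the LSTM sequence encoder, the weights are untrained, and all names and sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def feature_extractor(epoch, kernels):
    """FE stand-in: 1-D convolution, ReLU, then global max pooling.

    epoch: (n_samples,), kernels: (n_filters, kernel_len) -> (n_filters,)
    np.convolve flips the kernel, which is irrelevant for random filters.
    """
    responses = np.stack([np.convolve(epoch, k, mode="valid") for k in kernels])
    return np.maximum(responses, 0.0).max(axis=1)

def rnn_pass(features, W_in, W_rec):
    """Simple tanh RNN over the epoch sequence; returns every hidden state."""
    h = np.zeros(W_rec.shape[0])
    states = []
    for f in features:
        h = np.tanh(W_in @ f + W_rec @ h)
        states.append(h)
    return np.stack(states)

def sequence_encoder(features, p):
    """SE stand-in: bidirectional RNN followed by a per-epoch linear classifier."""
    fwd = rnn_pass(features, p["W_in_f"], p["W_rec_f"])
    bwd = rnn_pass(features[::-1], p["W_in_b"], p["W_rec_b"])[::-1]
    context = np.concatenate([fwd, bwd], axis=1)   # past and future context per epoch
    return context @ p["W_out"]                    # (n_epochs, n_classes)

# Assumed toy sizes: a sequence of 10 epochs, 3000 samples each, 5 sleep stages.
n_epochs, n_samples, n_filters, kernel_len, hidden, n_classes = 10, 3000, 16, 50, 8, 5
epochs = rng.normal(size=(n_epochs, n_samples))
kernels = rng.normal(scale=0.1, size=(n_filters, kernel_len))
params = {
    "W_in_f": rng.normal(scale=0.1, size=(hidden, n_filters)),
    "W_rec_f": rng.normal(scale=0.1, size=(hidden, hidden)),
    "W_in_b": rng.normal(scale=0.1, size=(hidden, n_filters)),
    "W_rec_b": rng.normal(scale=0.1, size=(hidden, hidden)),
    "W_out": rng.normal(scale=0.1, size=(2 * hidden, n_classes)),
}

epoch_features = np.stack([feature_extractor(e, kernels) for e in epochs])  # FE: per epoch
logits = sequence_encoder(epoch_features, params)                           # SE: across epochs
predicted_stage = logits.argmax(axis=1)
```

The FE runs independently on each epoch, while the SE is the only component that sees neighbouring epochs, which mirrors the split between intra-epoch features and inter-epoch dependencies.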

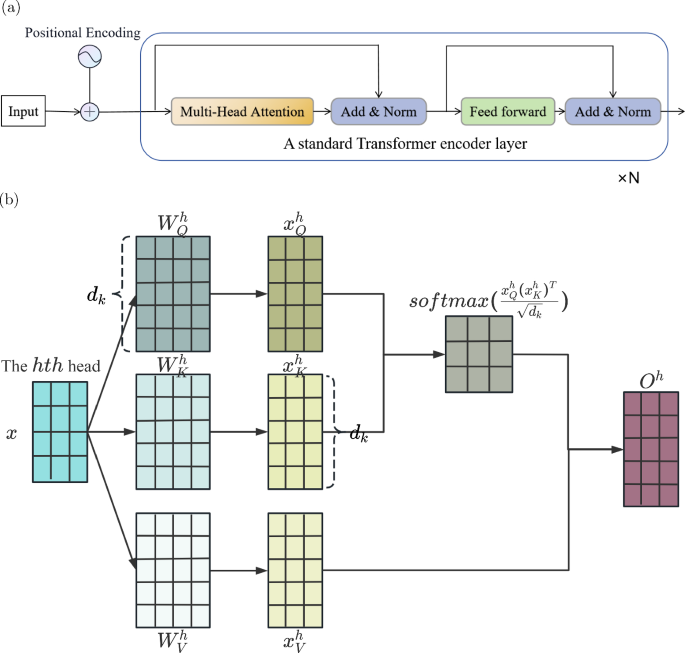

CRNN is the most widely used approach, but RNN suffers from long training times and is difficult to train in parallel. Hence, researchers have explored attention mechanisms and Transformer architectures based on self-attention (Vaswani et al. 2017), which have shown excellent performance in sequential tasks. The self-attention mechanism excels at capturing the inherent relationships and dependencies within input sequences. As depicted in Fig. 15, the basic structure of self-attention involves computing the relationship between each position in the input sequence and every other position, yielding a weight distribution. By performing a weighted summation of the input sequence based on this distribution, an output sequence encapsulating internal dependencies is produced (Guo et al. 2022). The self-attention mechanism is the core of the Transformer, which is divided into two main parts: the encoder and the decoder. In existing research, the encoder part is typically used. The Transformer encoder comprises several key components: positional encoding, multi-head self-attention (MHSA), feed-forward neural network, layer normalization, and residual connections, as illustrated in Fig. 16a. The first operation of the encoder is to encode the position of the input sequence. MHSA can model the relationships within the input time series, but it cannot perceive the local positional information of the input sequence (Foumani et al. 2024). Therefore, positional information is first added to the input using fixed positional encoding based on sine and cosine functions of different frequencies (Vaswani et al. 2017):

$$PE(pos, 2i) = \sin\left(\frac{pos}{10000^{2i/d}}\right), \qquad PE(pos, 2i+1) = \cos\left(\frac{pos}{10000^{2i/d}}\right)$$

where \(\textit{t}\) represents the input sequence data, \(\textit{p}\) represents the matrix calculated by the positional encoding function PE (which is added to \(\textit{t}\)), \(\textit{pos}\) is the position index in the input sequence, \(\textit{d}\) is the dimension of the input embeddings, and \(\textit{i}\) is the index of the dimension in the positional encoding vector. Next, MHSA modeling is performed. MHSA is an extension of self-attention that divides the input sequence into H sub-sequences, utilizing H parallel self-attention heads to capture different interactive information in various projection spaces (each head has different parameters). These H heads can capture different features and relationships of the input elements, and their fusion results in a richer global representation. As shown in Fig. 16b, taking the \(\textit{h}\)-th head and sub-sequence input \(\textit{x}\) as an example, three linear projections are first obtained for \(\textit{x}\), resulting in three copies of \(\textit{x}\) (query (\(\textit{q}\)), key (\(\textit{k}\)), and value (\(\textit{v}\)) matrices). This can be represented as:

$$\textit{x}_q^h = \textit{x}\,\textit{W}_q^h, \qquad \textit{x}_k^h = \textit{x}\,\textit{W}_k^h, \qquad \textit{x}_v^h = \textit{x}\,\textit{W}_v^h$$

where \(\textit{x}_q^h\), \(\textit{x}_k^h\), and \(\textit{x}_v^h\) represent the \(\textit{q}\), \(\textit{k}\), and \(\textit{v}\) copies, respectively, and \(\textit{W}_q^h\), \(\textit{W}_k^h\), and \(\textit{W}_v^h\) represent the learnable projection matrices. The self-attention output of the \(\textit{h}\)-th head is:

$$O^h = \mathrm{softmax}\left(\frac{\textit{x}_q^h \left(\textit{x}_k^h\right)^{\top}}{\sqrt{\textit{d}_k}}\right)\textit{x}_v^h$$

where \(\textit{d}_k\) is the dimension of the \(\textit{h}\)-th head. Assuming there are H heads, each head’s output can be represented as \(O^i\) (\(1 \le i \le H\)). Concatenating the outputs of all heads and applying another linear projection \(W_o\) yields the final output of MHSA. This can be represented as:

$$\mathrm{MHSA} = \mathrm{Concat}\left(O^1, O^2, \ldots, O^H\right) W_o$$

After the multi-head self-attention mechanism, each encoder layer also includes a feed-forward neural network. This network typically consists of two fully connected layers and a nonlinear activation function, such as ReLU. It operates on the inputs at each position to generate new representations for each element. Layer normalization follows the multi-head self-attention and the feed-forward neural network, helping to stabilize the training process and accelerate convergence. It normalizes the inputs of each layer so that the output has a mean of 0 and a standard deviation of 1. Residual connections, which appear alongside layer normalization, add the input of a sub-layer directly to its output. This connection helps to address the problem of vanishing gradients in deep networks and speeds up the training process. These components together form a standard Transformer encoder layer, and the encoder typically stacks multiple such layers. Each layer produces higher-level abstract representations, with the output of one layer serving as the input to the next, thereby extracting deeper features step by step. Compared to the recurrent computations of RNN, the self-attention mechanism can process the entire sequence in parallel, making it easy to accelerate on GPU, similar to CNN (Guo et al. 2022). Furthermore, the self-attention mechanism can directly capture global information. These factors contribute to its widespread application in sequence data tasks, including sleep stage classification.
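The positional encoding and MHSA steps described above can be sketched in NumPy. This is a hedged illustration, not the code of any reviewed study: the function names and toy sizes are hypothetical, the weights are random, the embedding dimension is assumed to be even, and for simplicity each head here projects the full input, as in the standard formulation of Vaswani et al. (2017).

```python
import numpy as np

def positional_encoding(seq_len, d):
    """Fixed sinusoidal encoding: sine on even dimensions, cosine on odd ones."""
    pos = np.arange(seq_len)[:, None]          # position index
    i = np.arange(d // 2)[None, :]             # dimension-pair index
    angle = pos / (10000 ** (2 * i / d))
    pe = np.zeros((seq_len, d))
    pe[:, 0::2] = np.sin(angle)
    pe[:, 1::2] = np.cos(angle)
    return pe

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)    # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def mhsa(x, W_q, W_k, W_v, W_o):
    """Multi-head self-attention.

    x: (seq_len, d); W_q, W_k, W_v: (H, d, d_k); W_o: (H * d_k, d).
    """
    d_k = W_q.shape[-1]
    heads = []
    for h in range(W_q.shape[0]):
        q, k, v = x @ W_q[h], x @ W_k[h], x @ W_v[h]   # three projections of x
        weights = softmax(q @ k.T / np.sqrt(d_k))      # each row sums to 1
        heads.append(weights @ v)                      # output of the h-th head
    return np.concatenate(heads, axis=1) @ W_o         # concatenate, then project

# Toy usage with assumed sizes: 20 epoch embeddings of dimension 16, 4 heads.
rng = np.random.default_rng(0)
seq_len, d, H, d_k = 20, 16, 4, 4
t = rng.normal(size=(seq_len, d))
pe = positional_encoding(seq_len, d)
x = t + pe                                             # add positional information
W_q, W_k, W_v = (rng.normal(scale=0.1, size=(H, d, d_k)) for _ in range(3))
W_o = rng.normal(scale=0.1, size=(H * d_k, d))
out = mhsa(x, W_q, W_k, W_v, W_o)
```

Nothing inside `mhsa` depends on a previous time step, so all positions are handled by a few matrix products, in contrast to the step-by-step loop of an RNN.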

Fig. 16 a Transformer encoder, composed of N stacked standard encoder layers. The encoder layer consists of positional encoding, multi-head self-attention, feed-forward neural network, layer normalization, and residual connections; b The self-attention calculation process of the \(\textit{h}\)-th head
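The remaining encoder-layer components (feed-forward network, residual connection, layer normalization) can be sketched in the same spirit. The sketch below is an assumption-laden illustration: it uses the post-normalization ordering of Vaswani et al. (2017), omits the learnable scale and shift of layer normalization, and replaces the attention sub-layer with a uniform-weight stand-in so that the block stays self-contained. All names are hypothetical.

```python
import numpy as np

def layer_norm(x, eps=1e-6):
    """Normalize each position to zero mean and unit standard deviation."""
    mean = x.mean(axis=-1, keepdims=True)
    std = x.std(axis=-1, keepdims=True)
    return (x - mean) / (std + eps)

def feed_forward(x, W1, b1, W2, b2):
    """Two fully connected layers with ReLU, applied independently per position."""
    return np.maximum(x @ W1 + b1, 0.0) @ W2 + b2

def add_and_norm(x, sublayer_out):
    """Residual connection followed by layer normalization."""
    return layer_norm(x + sublayer_out)

rng = np.random.default_rng(0)
seq_len, d, d_ff = 20, 16, 64              # assumed toy sizes
x = rng.normal(size=(seq_len, d))

# Stand-in for the MHSA sub-layer: uniform attention weights over all positions.
attn_out = np.repeat(x.mean(axis=0, keepdims=True), seq_len, axis=0)

W1 = rng.normal(scale=0.1, size=(d, d_ff))
b1 = np.zeros(d_ff)
W2 = rng.normal(scale=0.1, size=(d_ff, d))
b2 = np.zeros(d)

y = add_and_norm(x, attn_out)                          # attention sub-layer
z = add_and_norm(y, feed_forward(y, W1, b1, W2, b2))   # feed-forward sub-layer
```

Because the output `z` has the same shape as the input `x`, such layers can be stacked N times, with each layer consuming the output of the previous one.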

Attention and Transformer encoders (as shown in Fig. 16a) are often combined with CNNs to form hybrid models, in which they play the role of the SE. For example, in the CNN-Attention model constructed by Zhu et al. (2020), CNN is used to encode epoch features, and self-attention is employed to learn temporal dependencies. AttnSleep, proposed by Eldele et al. (2021), uses CNN for feature extraction and employs a Transformer-encoder module combined with causal convolutions to encode temporal context. Yao and Liu (2023) proposed a CNN-Transformer model for real-time sleep stage classification on energy-constrained wireless devices. The model, applied to single-channel input data of size (3000, 1) (signal length 30 s, sampling rate 100 Hz), extracts features of size (19, 128) through 4 consecutive convolutional layers. The Transformer-encoder is then used to learn temporal information from these features. The downsized model was tested on an Arduino development board, achieving an accuracy of 80% on the SEDF dataset. Lee et al. (2024) and Pradeepkumar et al. (2022) also introduced CNN-Transformer approaches. Additionally, Phan et al. (2022b) proposed a model called SleepTransformer, which entirely eliminates the need for convolutional and recurrent operations. SleepTransformer no longer relies on CNN for epoch feature extraction; instead, the Transformer encoder serves as both FE and SE.

4.3.2 Generative models

In sleep stage classification, one popular generative DL model is GAN. It is important to note that the task reviewed in this paper is a classification task. GAN itself is used for data generation, and although it has a discriminator that performs binary classification, the discriminator's sole purpose is to distinguish between real data and data synthesized by the generator, ultimately helping the generator to produce realistic data. In the current context, GAN is typically used in the data augmentation phase to mitigate issues such as insufficient EEG training data or class imbalance, as described in Sect. 4.1.2. The data augmented by GAN still requires a separate classification model to perform the classification. Several studies have compared the effects of GAN with traditional data augmentation methods (such as SMOTE, morphological transformations, etc.) (Fan et al. 2020; Yu et al. 2023). The results of these studies indicate that sleep data augmentation based on GAN significantly improves classification performance. Fan et al. (2020) compared five data augmentation methods: repeating minority class samples, signal morphological transformations, signal segmentation and recombination, dataset-to-dataset transfer, and GAN. The results showed that GAN increased accuracy by 3.79% and 4.51% on MASS and SEDF, respectively, achieving the most remarkable performance improvement. Cheng et al. (2023a) designed a new GAN model (SleepEGAN), using the model from Supratak and Guo (2020) as the generator and discriminator of the GAN, combined with a CRNN classifier to perform the classification task. After SleepEGAN augmentation on the SHHS dataset, the number of samples in the N1 stage increased from 10,304 to 46,272, and the overall classification accuracy improved to 88.0% (the second-best method achieved 84.2%). In Cheng’s study, the original signals were augmented, while in Kuo et al. (2022), a self-attention GAN was used to augment spectrogram images, and ResNet was employed for classification. On their private dataset, the combination of spectrogram, self-attention GAN and ResNet achieved an accuracy of 95.70%, whereas direct classification achieved only 87.50%. Moreover, Yu et al. (2023), Zhou et al. (2022), Ling et al. (2022), and other studies also utilized GAN for data augmentation. In Yu et al. (2023), the generator and discriminator of the GAN model were both based on the Transformer-encoder.

Figure 8 displays the proportion of deep learning methods included in the reviewed studies, and Tables 9, 10 and 11 summarize key information extracted from the papers. In these tables, we have compiled information on the types of input data, datasets, preprocessing methods, deep learning models, and their reported performance in recent papers.

5 ASSC based on cardiorespiratory signals

Currently, PSG remains the “gold standard” signal in sleep research. However, PSG data collection is time-consuming and labor-intensive, and it can disrupt a subject’s natural sleep patterns. Due to these limitations, sleep monitoring based on PSG struggles to transition from sleep labs to everyday life. Recent studies have demonstrated the correlation between sleep and the respiratory and circulatory systems (Sun et al. 2020). Signals reflecting such activities, such as ECG and PPG, offer unique advantages in terms of signal acquisition, cost, and subject comfort. For example, PPG can be collected using smartwatches. Hence, researchers have started exploring how to perform sleep stage classification using cardiorespiratory signals.