Abstract

Artificial intelligence (AI) has significantly impacted various fields. Large language models (LLMs) like GPT-4, BARD, PaLM, Megatron-Turing NLG, Jurassic-1 Jumbo etc., have contributed to our understanding and application of AI in these domains, along with natural language processing (NLP) techniques. This work provides a comprehensive overview of LLMs in the context of language modeling, word embeddings, and deep learning. It examines the application of LLMs in diverse fields including text generation, vision-language models, personalized learning, biomedicine, and code generation. The paper offers a detailed introduction and background on LLMs, facilitating a clear understanding of their fundamental ideas and concepts. Key language modeling architectures are also discussed, alongside a survey of recent works employing LLM methods for various downstream tasks across different domains. Additionally, it assesses the limitations of current approaches and highlights the need for new methodologies and potential directions for significant advancements in this field.

1 Introduction

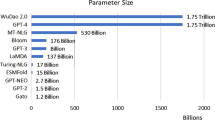

The progression of NLP and AI models has traced a significant path, commencing with rule-based systems circa the mid-1990s, shifting to statistical models by the late 1990s, and ultimately progressing to neural networks in the early 2000s (Ling et al. 2023). The introduction and success of self-attention and Transformer-based neural network architectures (Vaswani et al. 2017) contributed significantly to the increased prevalence of pre-trained language models (PLMs) during the late 2010s. These PLMs are capable of learning universal language representations from extensive datasets without human-provided labels. This form of unsupervised learning is particularly advantageous for various downstream NLP tasks, including answering multiple-choice questions (Robinson et al. 2022), story generation (Cao et al. 2023), and common sense reasoning (Yang et al. 2023). PLMs also help mitigate issues related to overfitting. In recent years, there has been significant progress in the development of LLMs (Wei et al. 2022), as demonstrated by GPT-3 (OpenAI) (Brown et al. 2020), PaLM (Google) (Chowdhery et al. 2022), LLaMA (Meta) (Touvron et al. 2023), Megatron-Turing NLG (NVIDIA) (Smith et al. 2022), and Jurassic-1 Jumbo (AI21 Labs) (Reed et al. 2022), among others. This expansion can largely be credited to the rapid growth of available training data and to advances in computational hardware. Scholars have noted that increasing the model’s size and the quantity of training data consistently improves the model’s capability, conforming to a scaling law (Kaplan et al. 2020). LLMs have emerged as a significant area of AI research due to their superior performance in understanding and generating human-like text compared to smaller models. LLMs have the potential to transform both the natural and social sciences by accelerating research, enhancing the process of discovery, and fostering interdisciplinary collaboration through streamlined literature analysis, creative idea generation, and intricate data interpretation.

The capabilities of LLMs as versatile problem-solving tools have led to their expanded applications beyond simple chatbots (OpenAI 2023). They are now being utilized as assistants or even replacements for human workers or traditional tools in industries such as healthcare, banking, and education. These capabilities are starting to assume significant roles, particularly within the healthcare sector. For instance, GPT-4 is used to transcribe medical dictations directly into electronic health records (EHRs). This task, typically done by medical scribes, is being increasingly automated. Tools like Nuance’s Dragon Medical One (Onitilo et al. 2023) use LLM technology to accurately convert speech to text, allowing doctors to focus more on patient care and less on paperwork. LLMs can process and summarize vast amounts of medical literature quickly (Watanabe and Wiseman 2023), a task often done by research assistants. Tools like Iris.ai use AI to help researchers find and summarize relevant scientific papers, thus speeding up the research process and reducing the need for human labor in literature review and synthesis. However, the direct application of LLMs to domain-specific problems presents significant challenges. Firstly, there is a substantial difference in the types of speech and language used in various contexts, such as a doctor’s office, a courtroom, and online discussions. Even for humans, acquiring such skills and expertise requires extensive training, much of which is hands-on and confidential. Additionally, a single generic LLM solution cannot readily replace the “business models” that individual fields, institutions, and teams use to maximize their utility functions for specific activities. Furthermore, the professional use of LLMs requires in-depth, real-time, and accurate domain knowledge that pre-trained LLMs cannot easily provide.

The development of artificial intelligence has significantly transformed various sectors. Recently, the field of NLP has seen substantial progress, with LLMs becoming a prominent area within it (Sarker 2022). LLMs are capable of generating human-like language and performing a range of language processing tasks due to their training on extensive textual datasets, which include publicly available data and data licensed from third parties. Notable examples include the generative pretrained transformer (GPT) from OpenAI and BARD from Google (Michael 2020; Deng and Lin 2022; Jordan and Mitchell 2015). NLP is a critical aspect of artificial intelligence that focuses on the interactions between machines and humans for communication. Various sectors such as management, finance, retail, law, architecture, and transportation can leverage enhanced computer capabilities in understanding and manipulating human language (Brown et al. 2020). The advancements in LLMs have greatly enhanced our comprehension and application of AI in these fields, and their influence continues to grow, significantly impacting everyday life.

Advancements in AI are expected to further impact the future of learning and discovery significantly. For example, the latest GPT model, GPT-4, incorporates enhanced features such as increased safety, multilingual support, text generation from images, and tools for drug discovery, which are not present in earlier versions like GPT-3 and GPT-3.5. Despite its capabilities, GPT-4 has certain limitations. These include hallucinations, that is, the production of text that appears plausible but contains information that is incorrect, not grounded in the model’s training data, or contextually inappropriate. This phenomenon arises from the model’s probabilistic nature: it predicts the next word or phrase based on learned patterns rather than on a strict verification of factual accuracy (Manakul et al. 2023). Additionally, challenges such as data privacy, algorithmic bias, and the ethical implications of AI-driven decision-making need to be addressed when implementing AI technologies (Korteling et al. 2021; Sarker 2022). To fully leverage AI’s potential for enhancing learning and scientific discovery, these challenges must be resolved and ethical guidelines established. Although large language models have made significant progress in recent years, they still require further development to become truly useful. A major limitation is their lack of interpretability, which hampers understanding the rationale behind the model’s predictions. Ethical considerations include potential risks such as improper use, unethical implementation, compromised integrity, and various other concerns. Overall, while large language models continue to push the boundaries of natural language processing, significant efforts are needed to address their limitations and the related ethical issues.

1.1 Organisation of paper

The structure of this study is as follows: Sect. 2 provides a comprehensive analysis and comparison of results from various recent extensive surveys on LLMs. Section 3 explains the technical aspects related to the framework and structure of LLM systems, while Sect. 4 investigates the word embeddings used in LLMs. The overall taxonomy of the paper is illustrated in Fig. 1. Subsequently, Sects. 5 and 6 explore relevant research conducted across multiple fields. These sections cover a broad range of classifications and methods based on LLMs applicable in diverse settings and domains. The concluding section of the paper addresses the most contentious topics and their potential future development, summarizing the discussion.

2 Prior works

Previous surveys relevant to LLM specialization are concisely reviewed in this section.

Recent review articles have deliberated on the advantages and indispensability of tailoring LLMs for particular domains. The critical risks of using generic LLMs in specialized fields such as medical education are emphasized in Sallam (2023), primarily due to their lack of specificity and precision. Additionally, Shaghaghian et al. (2020) provide recommendations for implementing language models tailored to the legal domain. Initial research on a finance-focused LLM has displayed encouraging outcomes, showing improved performance on financial tasks while maintaining parity on general benchmarks (Wu et al. 2023). Given these developments, it is essential to undertake a thorough examination and precise categorization of domain specialization methods to facilitate the successful application of LLMs across various industries. Research into effective and efficient adaptation of PLMs to different domains encompasses methods such as introducing new model layers or adjusting model parameters, as detailed in surveys (Ding et al. 2022; Guo and Yu 2022). The review (Reis et al. 2021) is one of the most current and relevant surveys of deep learning models that utilize transformers as their core approach for language understanding. It addresses knowledge-encoding strategies for these models and highlights issues such as reliance on context and language. For optimal performance and efficiency in standard NLP tasks, the survey (Zhang and Yang 2018) summarizes and analyzes existing NLP models. The primary value of this survey lies in its detailed information on various architectures and their functionalities. However, LLMs do not benefit from these methods due to the complexity and inaccessibility of their architecture and parameter space. The computational demands and need for effective optimization strategies pose challenges to maintaining the expertise of LLMs.

Several systematic literature reviews have focused on specific applications of NLP and LLMs, such as automated feedback, chatbots, question generation, and essay scoring, to conduct their analyses. For example, Kurdi et al. (2020) performed a comprehensive evaluation of empirical studies addressing the issue of automatic question generation in educational settings. They provided an extensive overview of various generation strategies, tasks, and evaluation techniques described in the existing literature. Semantic-based question generation closely related to source content has significant potential for LLMs. The use of chatbots in educational contexts has been thoroughly investigated by Wollny et al. (2021). Their conclusion was that considerable effort is needed to fully harness the potential of chatbots, including improving their adaptability across diverse educational environments. Automatic essay scoring systems have been examined in a comprehensive literature review (Ramesh and Sanampudi 2022), highlighting the limitations of current systems based on classical machine learning (ML) and deep learning (DL) techniques. Some authors, such as in Mialon et al. (2023), discuss methods to enhance the reasoning capabilities of LLMs, while others, like in Yang et al. (2023) and Zhao et al. (2023), explore the foundations and potential applications of generative artificial intelligence. However, these studies did not fully detail the framework of LLMs. Overall, these extensive literature reviews have identified issues that could be addressed by implementing advanced LLMs (such as GPT-3 or Codex).

The previous research exhibits several limitations, which are detailed as follows:

- Although there are comprehensive analyses (Min et al. 2021; Qiu et al. 2020) of Pretrained Foundation Models (PFMs) and their application to various NLP tasks, these analyses may not be directly applicable to LLMs due to inherent differences.

- Numerous review articles have surfaced, focusing on distinct facets of LLMs, in response to their growing significance and established efficacy.

- Some studies (Yang et al. 2023; Zhao et al. 2023) address elements such as enabling reasoning capabilities in LLMs or examining the foundations and potential applications of generative artificial intelligence. However, these studies may not fully capture the detailed framework of LLMs.

- The systematic analysis and classification of LLM subfields have not been adequately addressed. Identifying the key subfields within the LLM domain and understanding their contribution to the development of general-purpose LLM frameworks is a recognized gap.

This study thoroughly investigates the methodologies employed in constructing general-purpose LLM frameworks, along with current trends and challenges in this domain.

2.1 Motivation

At present, there is no exhaustive and systematic analysis for categorizing LLM subfields within the context of multi-task NLP that includes explicit benefits and comparative analysis. This study rigorously investigates the techniques used in developing versatile LLM frameworks, as well as the current trends and challenges in this domain. The following enumerates the research questions.

1. What are the fundamental theoretical principles of LLMs and how do they facilitate advancements in natural language comprehension?

2. What impact do different design strategies in language modeling and word embeddings have on the performance and capabilities of LLMs?

3. What are the primary factors affecting the performance of LLMs in downstream tasks across various domains, and how do different LLM architectures perform in these contexts?

4. What are the distinctive features of major LLM architectures, and how do these features influence their performance in different applications?

5. What are the current unresolved issues and limitations in the domain of LLMs, and how can these issues be addressed to advance language comprehension?

6. What ethical factors necessitate examination during the advancement and implementation of LLMs, and what strategies exist to alleviate potential biases and ethical dilemmas?

2.2 Contributions

Recent research has extensively investigated methods for domain specialization in LLMs. Many conventional approaches prioritize general-purpose technical solutions that can accommodate diverse domains with minor adjustments and access to pertinent domain-specific data. However, there is currently no comprehensive standardization or summary of methodologies for evaluating different domain specialization strategies, making it difficult to cross-reference them across different application domains. This lack of transparency obscures the current bottlenecks, difficulties, unresolved problems, and potential future research areas, posing a challenge for non-AI specialists. This survey provides an in-depth and systematic analysis of the most recent advancements in LLM domain specialization. The following key points highlight the contributions of this work:

- A comprehensive introduction and context of LLMs is provided to facilitate understanding of advanced concepts.

- The design of LLMs, with an emphasis on language modeling and word embeddings, is thoroughly examined to improve understanding of various methodologies.

- An extensive evaluation of recent studies utilizing LLMs for various downstream tasks across different domains is conducted. Additionally, a summary of notable LLM architectures is included.

- The study has identified several unresolved issues in this field and discussed the potential future directions for LLMs.

3 Methodology for research

This work adhered to the guiding principles outlined by Kitchenham and Charters (Keele et al. 2007). Table 1 illustrates the use of PIOC techniques in developing the research questions.

3.1 Criteria for selecting research studies

Key phrases were chosen to obtain the necessary search results for exploring the research questions within the field. The search string used is: (‘LLM’ OR ‘LLM Architectural features’ OR ‘Generative Language Models’ AND ‘Artificial Intelligence’ OR ‘Neural Networks’ OR ‘deep learning’ AND ‘GPT’).

Table 2 presents the outcomes of the search. Although research in this domain dates back to 2000, this review focuses on papers published from 2014 to 2023 in order to capture the most recent progress.

3.2 Inclusion and exclusion criteria

Only research papers pertinent to the research questions are considered for this study. The included studies cover various aspects, including refining methodologies, examining LLM frameworks, and addressing diverse fields of application. Only peer-reviewed papers written in English were included.

3.3 Study selection results

The search query identified 248 papers from the databases specified in Table 2. Following the removal of duplicate entries and the application of inclusion and exclusion criteria, 49 research papers were identified as appropriate for this study. This number was increased to 61 by integrating significant contributions using snowballing techniques. Subsequently, employing quality assessment standards, a total of 53 studies on large language models (LLMs) were selected for comprehensive analysis. The inclusion and exclusion criteria are detailed in Table 3.

3.4 Criteria for evaluating the quality of studies for selection

Quality assessment criteria are employed to ascertain the relevance of research papers in addressing the research questions. Each study was given a score of either 1 or 0 on each of the criteria specified in Table 4. This study adopts a quality score of 4 as the selection threshold.

4 Background

Language modeling, a longstanding and foundational pursuit in NLP, studies probability distributions over sequences of words (Zhang et al. 2022). Language models (LMs) have proven exceptionally beneficial across a spectrum of computational linguistic tasks, including but not limited to speech recognition and text generation. Figure 2 provides a comprehensive depiction of LLMs. Conventional language models (CLMs) are employed to probabilistically predict the occurrence of linguistic sequences. Both CLMs and pre-trained language models (PLMs) are amenable to training. Presently, data-driven methodologies are ubiquitous across organizations (Wei et al. 2023). PLMs are built by training neural network models on extensive datasets sourced from diverse corpora and are subsequently fine-tuned using datasets and objectives customized to suit their intended applications. The probability of a word sequence within a conventional language model can be approximated through techniques such as n-grams or Hidden Markov Models. The chain rule can be used to calculate this probability: \(P\left( w_1, w_2, \ldots , w_n\right) =P\left( w_1\right) \cdot P\left( w_2 \mid w_1\right) \cdot P\left( w_3 \mid w_1, w_2\right) \cdot \ldots \cdot P\left( w_n \mid w_1, w_2, \ldots , w_{n-1}\right)\), where \(\left( w_1, w_2, \ldots , w_n\right)\) represents a sequence of words. Pre-trained LMs, such as GPT or BERT, utilize neural networks and fine-tuning for various NLP tasks. In these models, the probability of a word sequence is computed using the Transformer architecture. Given a sequence of words \(x_1, x_2, \ldots , x_n\), the probability can be calculated using the following equation:

$$\begin{aligned} P\left( x_1, x_2, \ldots , x_n\right) = \prod _{i=1}^{n} P\left( x_i \mid x_1, x_2, \ldots , x_{i-1}\right) \end{aligned}$$(1)

In this formulation, \(x_i\) denotes the ith word in the input sequence, and \(P\left( x_i \mid x_1, x_2, \ldots , x_{i-1}\right)\) denotes the conditional probability of the ith word given the preceding words \(x_1, \ldots , x_{i-1}\).
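The chain-rule factorization can be illustrated with a small count-based model. The following is a minimal sketch, assuming a bigram (first-order Markov) approximation in which each word depends only on its predecessor; the toy corpus, the `<s>` and `</s>` boundary symbols, and the function names are illustrative choices and do not come from the surveyed works.

```python
from collections import Counter

def train_bigram(corpus):
    """Count bigrams and their left contexts over boundary-padded sentences."""
    contexts, bigrams = Counter(), Counter()
    for sentence in corpus:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        contexts.update(tokens[:-1])              # every token that has a successor
        bigrams.update(zip(tokens, tokens[1:]))   # (previous word, next word) pairs
    return contexts, bigrams

def sequence_probability(sentence, contexts, bigrams):
    """Chain rule under the bigram assumption:
    P(w_1..w_n) is approximated by the product of P(w_i | w_{i-1}),
    each estimated by relative frequency."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    prob = 1.0
    for prev, word in zip(tokens, tokens[1:]):
        if contexts[prev] == 0:
            return 0.0                            # unseen context: no estimate available
        prob *= bigrams[(prev, word)] / contexts[prev]
    return prob

corpus = ["the cat sat", "the cat ran", "the dog sat"]
contexts, bigrams = train_bigram(corpus)
# P(the|<s>) * P(cat|the) * P(sat|cat) * P(</s>|sat) = 1 * 2/3 * 1/2 * 1
print(sequence_probability("the cat sat", contexts, bigrams))
```

Unsmoothed relative frequencies assign zero probability to any sequence containing an unseen bigram, which is one reason practical n-gram models add smoothing and neural models replace the count table altogether.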

4.1 CLMs and PLMs

CLMs and PLMs refer to different types of language models used in NLP. Here are the key differences between the two:

- Context

  - CLM: a CLM generates text conditioned on a given input or context. It takes into account the previous words or context to generate the next word or sequence of words. CLMs are often used in tasks like machine translation, chatbots, and text completion.

  - PLM: a PLM is trained on a significant amount of text from a corpus (Fu-Hao et al. 2022) in order to predict the likelihood of the next word in a sequence. It is able to generate text on its own and does not need to be explicitly conditioned on a particular context in order to do so. PLMs are widely used for various NLP tasks, including text generation, sentiment analysis, named entity recognition, and more.

- Training

  - CLM: a typical CLM undergoes training through supervised learning, wherein it learns to predict the following word or word sequence based on a given context. During the training process, the model is provided with pairs of input contexts and their corresponding target outputs as the training data (Wei et al. 2023).

  - PLM: a PLM undergoes unsupervised learning on an extensive collection of text, where it gains proficiency in predicting the succeeding word in a sequence by analyzing the preceding words. Typical approaches employed to train PLMs encompass next sentence prediction (NSP) and masked language modeling (MLM).

- Autoregressive vs. Autoencoding

  - CLM: CLMs are autoregressive models, meaning they generate text by sequentially predicting each word based on the previous context. Autoregressive models can be slower during generation since they generate words one at a time.

  - PLM: PLMs can be both autoregressive and autoencoding models (Wei et al. 2023). In addition to generating text autoregressively, they can also perform tasks like text classification or named entity recognition by encoding the input text and making predictions based on the learned representations.

- Fine-tuning

  - CLM: CLMs are often fine-tuned on specific downstream tasks. The pretrained model is adapted to the target task by further training on task-specific data. This fine-tuning helps the model specialize in the desired task and improve performance.

  - PLM: PLMs can also be fine-tuned for specific tasks, similar to CLMs. The pretrained PLM serves as a feature extractor, and the additional task-specific layers are trained on the labeled data (Fu-Hao et al. 2022).

As shown in Fig. 3, a chain of Transformers serves as the backbone of the encoder, while a language model that has already been trained is used for decoding. To enhance the input acoustic feature frames and capture short-term temporal variations, the method first applies two convolutional neural network (CNN) layers with 2D filters and subsampling to each speech signal. The stride of each CNN layer is set to 2, reducing the number of acoustic representations to one-fourth. To model the long-term dependencies across all acoustic representations, six Transformers are arranged sequentially, with position embeddings employed to encode the absolute position of each acoustic representation within an utterance. The encoder and decoder are surrounded by green and red dashed lines.

Fig. 3 The acoustic representation encoder and text generation decoder make up the network architecture of the proposed non-autoregressive ASR framework (Fu-Hao et al. 2022)

Overall, CLMs and PLMs serve different purposes in NLP. CLMs are conditioned on specific input contexts and generate text accordingly, while PLMs are pretrained models capable of generating text without explicit conditioning. Both types of models have their own strengths and are widely used in various NLP applications.

With the rise of neural networks and deep learning, PLMs have garnered significant attention. Examples of such models include OpenAI’s GPT series and BERT, which are trained on large textual datasets using unsupervised learning methods (Devlin et al. 2018; Liu et al. 2019). During training, these models predict missing words in sentences or estimate the probability of a word given its context within a broader text (Yang et al. 2019; Lan et al. 2019). PLMs possess the capacity to capture abundant semantic and syntactic information from the training data and can be fine-tuned for specific NLP tasks by utilizing task-specific datasets and objectives. The process of fine-tuning customizes the pre-existing model for a specific use case, such as machine translation, sentiment analysis, or question answering. By leveraging pre-existing knowledge and customizing it for specific tasks, organizations can capitalize on data-driven approaches across various NLP applications.
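The two pre-training signals mentioned above, predicting a word from its preceding context and predicting words that have been hidden, differ mainly in how training examples are built from raw text. The following is a simplified sketch of that construction; the function names, the `[MASK]` symbol, and the default masking rate are illustrative assumptions, and real systems add further steps (such as subword tokenization and random token replacement) that are omitted here.

```python
import random

MASK = "[MASK]"  # placeholder symbol, chosen for illustration

def causal_lm_examples(tokens):
    """Autoregressive objective: predict token i from tokens 0..i-1."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def masked_lm_example(tokens, mask_prob=0.15, seed=0):
    """Masked objective: hide a random subset of tokens and predict them
    from the remaining left and right context."""
    rng = random.Random(seed)
    corrupted, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            corrupted.append(MASK)
            targets[i] = tok          # position -> original token to recover
        else:
            corrupted.append(tok)
    return corrupted, targets

tokens = "large language models learn from unlabeled text".split()
for context, target in causal_lm_examples(tokens)[:3]:
    print(context, "->", target)
print(masked_lm_example(tokens, mask_prob=0.3))
```

In both cases the targets come from the text itself, which is why no human annotation is required during pre-training.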

4.2 Architectures

This section examines Transformer-based language models and provides a synopsis of each. These language models, employing a specialized form of deep neural network architecture known as the Transformer, aim to predict upcoming words in a text or words masked during the training process. Since 2018, the fundamental structure of the Transformer language model has scarcely changed (Radford et al. 2018; Devlin et al. 2018). The Transformer (Vaswani et al. 2017) is an architecture in which representations exchange information through weighted combinations. It utilizes neither recurrent nor convolutional architectures, relying solely on attention mechanisms. To learn the most relevant information from incoming data, the Transformer’s attention mechanism assigns weights to each encoded representation. The output of the attention operation is the weighted sum of the values, where the weight given to each value is determined by a compatibility function between the query and the corresponding key (Vaswani et al. 2017). The evolution of LMs has led to the proliferation of various attention mechanisms (Guo et al. 2022). For example, self-attention was developed in NLP to establish associations between positions in a sequence in order to generate a representation of that sequence. The Transformer model utilizes a mask matrix to establish the visibility of words to each other, facilitating an attention mechanism grounded in self-attention.
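The attention computation described above (a weighted sum of values, with weights derived from query-key compatibility and restricted by a mask matrix) can be sketched in a few lines. This is a minimal illustration, assuming the scaled dot product as the compatibility function and a causal mask in which each position sees only itself and earlier positions; for brevity the input vectors are used directly as queries, keys, and values, whereas a real Transformer layer first applies learned projections.

```python
import math

def softmax(scores):
    """Numerically stable softmax; masked entries (-inf) receive weight 0."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]

def attention(queries, keys, values, mask):
    """Scaled dot-product attention with a visibility mask.
    Assumes every row of the mask has at least one visible position."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        scores = [
            sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) if mask[i][j]
            else float("-inf")
            for j, k in enumerate(keys)
        ]
        weights = softmax(scores)
        # Weighted sum of the value vectors, one output dimension at a time.
        out = [sum(w * v[d] for w, v in zip(weights, values))
               for d in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # three toy token vectors
outputs, weights = attention(x, x, x, causal_mask(len(x)))
for row in weights:
    print([round(w, 3) for w in row])
```

With the causal mask, the first position can attend only to itself, so its weight row is concentrated entirely on the first token; replacing the mask with an all-True matrix gives the bidirectional visibility used by masked language models.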

The initial step involves splitting a string of text into a sequence of tokens; because the available vocabulary is limited, some words are split into multiple smaller subword tokens. Subsequently, each token undergoes an “embedding” process, wherein it is allocated a fixed vector. These vectors are acquired during the pre-training phase (Vaswani et al. 2017). A series of Transformer layers, typically ranging from 10 to 100 layers, is utilized for processing the embeddings. These layers consist of individual feedforward networks, layer normalizations, and self-attention networks at the token level. The self-attention network, a pivotal and innovative component of the Transformer layers, generates “value,” “query,” and “key” vectors for each token, employing projections. Through this mechanism, token embeddings are amalgamated to produce a “contextualized” representation of each token, thereby completing the transformation process. Essentially, this architecture converts each input token into a distribution over forthcoming tokens based on their probabilities. Typically, the number of parameters in a language model ranges between 100 million and 500 billion (Devlin et al. 2018; Brown et al. 2020; Lieber et al. 2021; Smith et al. 2022); autoregressive models tend to possess more parameters than masked models.

The Transformer architecture, as originally formulated, does not inherently encode positional information of tokens within input sequences, despite its potential utility in capturing word order. Consequently, Transformer-based language models integrate various methods to incorporate positional information (Wang et al. 2021; Dufter et al. 2022). These methods include augmenting token embeddings with absolute position embeddings (Vaswani et al. 2017; Radford et al. 2018; Brown et al. 2020; Zhang et al. 2022), utilizing relative position embeddings or biases (Shaw et al. 2018; Dai et al. 2019; Raffel et al. 2020), or employing rotary position embeddings (Su et al. 2021; Chowdhery et al. 2022). Studies suggest that models employing relative position approaches may exhibit improved performance in extrapolating to longer sequences compared to those utilizing absolute position methods (Press et al. 2021). Input sequences for language models typically range between 500 and 2000 tokens in length during pre-training.
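As one concrete instance of the absolute position approach, the sketch below builds fixed sinusoidal position vectors and adds them to token embeddings. It assumes the sine and cosine formulation with base 10000 introduced with the original Transformer; many of the models cited above instead learn their absolute position embeddings, and the function names here are illustrative.

```python
import math

def sinusoidal_position_embeddings(num_positions, dim):
    """Fixed absolute position vectors: even dimensions use sine, odd
    dimensions use cosine, with wavelengths growing geometrically."""
    table = []
    for pos in range(num_positions):
        row = []
        for k in range(dim):
            angle = pos / (10000 ** (2 * (k // 2) / dim))
            row.append(math.sin(angle) if k % 2 == 0 else math.cos(angle))
        table.append(row)
    return table

def add_positions(token_embeddings):
    """Element-wise sum of each token embedding and its position vector."""
    pe = sinusoidal_position_embeddings(len(token_embeddings),
                                        len(token_embeddings[0]))
    return [[t + p for t, p in zip(tok, pos)]
            for tok, pos in zip(token_embeddings, pe)]

# Two identical token vectors become distinguishable once positions are added.
embedded = add_positions([[0.5, 0.5, 0.5, 0.5], [0.5, 0.5, 0.5, 0.5]])
print(embedded[0])
print(embedded[1])
```

Relative and rotary schemes differ in that they inject position into the attention computation itself rather than into the input vectors.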

4.3 Learning methodologies

Studies indicate that deep learning algorithms in the field of computer vision (CV) display superior efficacy compared with traditional learning algorithms across a broad spectrum of tasks. These tasks include but are not limited to classification, identification, detection, and segmentation, as well as more specialized tasks such as matching, tracking, and sequence prediction. The disparity in performance between deep learning and traditional models is considerable across these tasks. Furthermore, both natural language processing (NLP) and graph learning (GL) employ analogous learning methodologies (Samant et al. 2022).

- Supervised learning

Let the training dataset be \(Y = \{(a_i, b_i)\}_{i=1}^n\), where \(a_i\) signifies the ith instance in the training set and \(b_i\) signifies its associated label. The network learns a function \(f(a; \theta )\) by minimizing the following objective function (Zhou et al. 2023):

$$\begin{aligned} \arg \min _{\theta } \frac{1}{n} \sum _{i=1}^{n} L\left( f(a_i;\, \theta ), b_i\right) + \lambda \Omega (\theta ) \end{aligned}$$(2)

where L is a predefined loss function, \(\Omega\) is an additional regularization term, and \(\lambda\) weights the regularization term.

- Semi-supervised learning

Assume that, in addition to the dataset annotated by humans, there is access to another, unlabeled dataset \(Z = \{z_i\}_{i=1}^m\). Utilizing both datasets collectively, a learning approach is devised to acquire an optimal network, as outlined in Zhou et al. (2023):

$$\begin{aligned} \arg \min _{\theta } \left( \frac{1}{n} \sum _{i=1}^{n} L\left( f(a_i; \theta ), b_i\right) + \frac{1}{m} \sum _{i=1}^{m} L_0\left( f_0(z_i;\, \theta _0), R(z_i, Y)\right) + \lambda \Omega (\theta )\right) \end{aligned}$$

Within this framework, a relation function denoted as R is defined to specify the targets for the unlabeled data. These pseudo-labels are merged into the overall training regimen. The dataset Z retains the primary data, and an encoder, denoted as \(f_0\), is employed to generate a new representation of this data. Unsupervised learning and self-supervised learning (SSL) facilitate learning from inherent data characteristics without the presence of labeled data during training, either by examining internal distances or by solving predefined pretext tasks.

-

Reinforcement learning

At each time step t, the agent receives a state \(p_t\) from a state space P. It then chooses an action \(q_t\) from the set of available actions Q according to a policy \(\pi _{\theta }(q_t|p_t)\), where \(\pi _{\theta }\) maps states to actions and is parameterized by \(\theta\). The agent receives a scalar reward \(s_t = s(p_t, q_t)\), where s(p, q) denotes the reward function, and moves to the next state \(p_{t+1}\) according to the dynamics of the environment. This process repeats until the agent reaches a terminal state, which ends the episode; the RL agent then restarts and begins the next episode. The return is the accumulated reward discounted by a factor \(\gamma \in (0, 1]\), defined as \(S_t = S(p_t, q_t) = \sum _{k=0}^{\infty } \gamma ^k s_{t+k}\). The agent’s goal is to maximize the expected long-term return from each state (Zhou et al. 2023):

$$\begin{aligned} \max _{\theta } \mathbb {E}_{\pi _{\theta }}[S_t | p_t, q_t = \pi _{\theta }(p_t)] \end{aligned}$$(3)
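As a small worked example of the discounted return used in this objective, the sketch below computes the return at every step of a finite episode. The function name and the toy reward sequence are assumptions made for illustration; the infinite sum is truncated at the end of the episode.

```python
def all_returns(rewards, gamma):
    """Discounted return at every step t of a finite episode.

    Uses the recursion return_t = reward_t + gamma * return_{t+1},
    which equals sum_k gamma**k * reward_{t+k}.
    """
    returns = []
    running = 0.0
    for reward in reversed(rewards):
        running = reward + gamma * running
        returns.append(running)
    returns.reverse()
    return returns


episode_rewards = [1.0, 0.0, 2.0]  # toy rewards for t = 0, 1, 2
returns = all_returns(episode_rewards, gamma=0.5)
```

With \(\gamma = 0.5\), the reward of 2 received two steps ahead contributes only 0.5 to the return at t = 0, which is how discounting favors earlier rewards.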

4.4 Linguistic units

In English language modeling, tokenization is the process of splitting a text sequence into small units called tokens, which serve as the basic components for language models. The choice of tokenization method depends on the language and the model used. Common tokenization techniques for English language modeling, grouped by unit size, are presented below:

-

Character-level tokenization: this method treats every single character within the text sequence as an individual token. For instance, the sentence “Hello, world!” would be tokenized into [‘H’, ‘e’, ‘l’, ‘l’, ‘o’, ‘,’, ‘ ’, ‘w’, ‘o’, ‘r’, ‘l’, ‘d’, ‘!’]. Character-level tokenization is advantageous when dealing with languages lacking clear word boundaries or for specific tasks like character-level language modeling (Sutskever et al. 2011; Al-Rfou et al. 2019; Xue et al. 2022). For comparison, mT5 (Xue et al. 2020) segments the text into SentencePiece tokens, masks spans of approximately 3 tokens, and uses encoder and decoder transformer stacks of identical depth. In contrast, ByT5 (Xue et al. 2022) processes the text as UTF-8 bytes and masks spans of approximately 20 bytes, and its encoder is three times deeper than its decoder.

-

Word-level tokenization: this technique divides the text sequence into single words or word-like units (Sennrich et al. 2015). For instance, the phrase “Hello, world!” is tokenized as [‘Hello’, ‘,’, ‘world’, ‘!’]. Word-level tokenization is widely used because it gives a straightforward representation of text, allowing the model to capture the meaning of individual words and their relationships within a sentence.

-

Subword-level tokenization: this approach involves the segmentation of words into smaller subword units, which can aid in managing out-of-vocabulary terms or accommodating languages with complex morphology (Mikolov et al. 2012). Subword tokenization techniques such as Byte-Pair Encoding (BPE) (Gage 1994) or Unigram Language Model (ULM) (Kudo 2018) are commonly employed to construct a vocabulary of subword units based on the training corpus. For instance, the term “unhappiness” could be segmented into [‘un’, ‘happiness’] or [‘un’, ‘h’, ‘ap’, ‘p’, ‘i’, ‘ness’]. Tokenization at the subword level enables the model to handle novel words by leveraging the subword units found in the training data.

-

Phrase-level tokenization: in certain scenarios, sequences of words or multi-word expressions have the potential to be regarded as tokens (Suhm 1994; Saon and Padmanabhan 2001). This method entails representing the semantic content of frequently encountered phrases as a singular entity, as opposed to dissecting them into separate words (Levit et al. 2014). For instance, the expression “New York City” could be tokenized as [‘New York City’]. The practice of tokenization at the phrase level proves advantageous, especially when particular phrases carry substantial semantic or contextual relevance within the given task.

The choice of tokenization method depends on the specific requirements of the language modeling task and the characteristics of the language under consideration. Various tokenization strategies possess unique benefits and constraints, prompting researchers to explore different methods to identify the most appropriate one for their particular application (Table 5).
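The four granularities described above can be sketched in a few lines of Python. This is a minimal illustration rather than the tokenizer of any particular model: the function names are hypothetical, the word-level rule is a simple regular expression, the subword function uses greedy longest-match against a hand-supplied vocabulary instead of a learned BPE or ULM vocabulary, and the phrase list is supplied by hand.

```python
import re


def char_tokens(text):
    return list(text)


def word_tokens(text):
    # Runs of word characters are words; each punctuation mark is its own token.
    return re.findall(r"\w+|[^\w\s]", text)


def subword_tokens(word, vocab):
    """Greedy longest-match segmentation (a simplification, not BPE itself)."""
    out, i = [], 0
    while i < len(word):
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                out.append(word[i:j])
                i = j
                break
        else:
            out.append(word[i])  # fall back to a single character
            i += 1
    return out


def phrase_tokens(text, phrases):
    """Word-level tokens with the listed multi-word phrases merged."""
    words = word_tokens(text)
    phrase_words = sorted((p.split() for p in phrases), key=len, reverse=True)
    out, i = [], 0
    while i < len(words):
        for pw in phrase_words:
            if words[i:i + len(pw)] == pw:
                out.append(" ".join(pw))
                i += len(pw)
                break
        else:
            out.append(words[i])
            i += 1
    return out
```

For example, `subword_tokens("unhappiness", {"un", "happiness"})` yields the first segmentation mentioned above, while an empty vocabulary makes the function degrade to character-level tokens.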

4.5 Training dataset

Because the performance gains of generative models could be forecast reliably, the scale of these models expanded markedly between 2019 and 2022. Given adequate data and computational resources, a larger model becomes more proficient at predicting words in textual contexts drawn from the training dataset (Kaplan et al. 2020; Ganguli et al. 2022; Hoffmann et al. 2022). Consequently, a pre-trained LLM handles a broader array of contexts and produces higher-quality, more nuanced, and longer texts that remain more coherent with the preceding passages. Beyond this predictable improvement, a noteworthy transition occurs as models grow: LLMs with between 10 and 100 billion parameters can undertake specialized tasks such as code generation, translation, and human behavior prediction, often matching or surpassing specialized models. Table 6 presents an analysis of several prominent early PLMs. Anticipating the emergence of such capabilities has been difficult, and the additional capabilities that larger models may acquire remain uncertain (Ganguli et al. 2022) (Table 7).

However, as LLMs increase in size, they reveal not only new capabilities but also new failure modes. Biases related to sex, gender, race, and religion emerge in LLMs alongside abilities such as programming and playing chess (Ganguli et al. 2022).

5 Word embeddings

In the domain of LLMs, the term “word embeddings” refers to the representation of words as dense, lower-dimensional vectors within a continuous vector space. These embeddings encapsulate both semantic and syntactic associations among words, derived from their co-occurrence patterns within a specified text corpus (Petukhova et al. 2024). Here, “lower-dimensional” compares the embedding vectors with the original high-dimensional representation, in which each word is a one-hot encoded vector whose size equals the vocabulary size. The word embeddings learned by large language models capture rich contextual detail and encode semantic associations among words. They help the models represent word meanings and support their use in various natural language processing tasks. In this section, various prominent methodologies for producing word embeddings are examined and a concise summary of the typically utilized approaches is provided.

5.1 Latent semantic analysis (LSA)

The objective of LSA is to extract and represent word usage within context (Asudani et al. 2023). This facilitates the identification of clusters of words with similar meanings. LSA, employing a vector space methodology, enables the extraction of word-word, word-passage, and passage-passage relationships (Shaik et al. 2022). These relationships are closely connected with human cognitive processes and semantic representations. LSA substantially enhances our capacity to extract and discern the semantic associations that arise in the thoughts of speakers or writers during communication. Furthermore, LSA can be used to approximate human judgment, aiding in the anticipation of semantic connections among different textual segments and providing computational estimates of semantic similarity between words (Singh et al. 2022; Zhang et al. 2022; Al-Hashedi et al. 2022).

LSA is a two-phase, fully automated mathematical-statistical process. The first stage constructs a matrix in which each row corresponds to a unique word in the input text and each column corresponds to a sentence or another textual unit (e.g., a paragraph or document). For sentence-level input, this representation is called the term-by-sentence matrix (A). Each column vector \(A_i\) of the matrix is the weighted term-frequency vector of the ith sentence. Given s sentences and t distinct words or terms within the input text, A is a \(t \times s\) matrix (\(t \gg s\)). Each cell records the frequency of a term within a specific sentence. Cell values can be determined by several methods, including the count of occurrences, a binary representation of occurrences, TF-IDF, Root Type, Log Entropy, and Modified TF-IDF.

In the second stage of LSA, Singular Value Decomposition (SVD) is applied to matrix A, which decomposes it into the matrices U, \(\Sigma\), and \(V^T\) such that \(A = U \Sigma V^T\) (Ramezani et al. 2023).

Here, U is a \(t\times c\) column-orthogonal matrix whose columns are the left singular vectors. \(\Sigma = \text {diag}(\sigma _1, \sigma _2, \dots , \sigma _c)\) is a \(c\times c\) diagonal matrix whose principal diagonal holds the singular values of A, arranged from greatest to least (Ramezani et al. 2023). \(V^T\) is an orthogonal \(c\times s\) matrix whose rows are the right singular vectors. If \(\text {rank}(A) = r\), the singular values satisfy \(\sigma _1 \ge \sigma _2 \ge \sigma _3 \ge \dots \ge \sigma _r > \sigma _{r+1} = \dots = \sigma _s = 0\).
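The two stages of LSA can be sketched with NumPy as follows. The three toy sentences, the use of raw counts as cell values, and the truncation rank k = 2 are assumptions chosen for illustration; `numpy.linalg.svd` returns the singular values already sorted in descending order.

```python
import numpy as np

sentences = [
    "the cat sat on the mat",
    "the dog sat on the log",
    "cats and dogs are pets",
]
vocab = sorted({w for s in sentences for w in s.split()})

# Stage 1: term-by-sentence matrix A (t x s) with raw counts as cell values.
A = np.array(
    [[s.split().count(w) for s in sentences] for w in vocab], dtype=float
)

# Stage 2: thin SVD, A = U @ diag(sigma) @ Vt.
U, sigma, Vt = np.linalg.svd(A, full_matrices=False)

# Keep the k largest singular values for a low-rank semantic space.
k = 2
A_k = U[:, :k] @ np.diag(sigma[:k]) @ Vt[:k, :]
sentence_vectors = (np.diag(sigma[:k]) @ Vt[:k, :]).T  # one row per sentence
```

Sentences can then be compared in the reduced space, for instance by the cosine similarity of the rows of `sentence_vectors`.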

5.2 Word2Vec

Word2Vec is a word-embedding model proposed by Mikolov et al. (2013) at Google. Word2Vec differs from LSA and multi-model learning (MML) in being a predictive rather than a statistical model. Its underlying linguistic framework is a feedforward neural network model (Bengio et al. 2000). Word2Vec offers two neural architectures, Skip-Gram and continuous bag of words (CBOW). The Skip-Gram model predicts the surrounding words of a target word, whereas the CBOW model predicts the current word by aggregating the word vectors of its neighboring words. By leveraging a contextual window, Word2Vec learns semantic meaning and similarity between words in an unsupervised manner (Zhao et al. 2022; Subba and Kumari 2022; Oubenali et al. 2022). Terms with related meanings (such as “king” and “queen”) typically cluster together within this semantic space. CBOW models are more efficient than Skip-Gram models since they treat the entire context as a single entity, rather than generating multiple training pairs for each word in the context. However, the Skip-Gram model handles rare words better, because it uses each context word individually.
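The difference between the two training setups can be made concrete by listing the examples each one extracts from a sentence. The sketch below only builds the training examples and does not train a network; the function names and the toy sentence are assumptions.

```python
def skipgram_pairs(tokens, window):
    """One (target, context_word) pair per neighbor inside the window."""
    pairs = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((target, tokens[j]))
    return pairs


def cbow_examples(tokens, window):
    """One (context_words, target) example per position."""
    examples = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        examples.append((context, target))
    return examples


tokens = "the king rules the land".split()
sg = skipgram_pairs(tokens, window=1)
cb = cbow_examples(tokens, window=1)
```

For this five-word sentence with a window of 1, Skip-Gram produces eight pairs while CBOW produces five examples, which illustrates why CBOW needs fewer updates per sentence.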

Word2Vec has experienced significant growth in NLP in recent years (Mikolov et al. 2013). The primary function of the trained model is to provide weights for the word embedding technique, which relies on a shallow neural network. These embedding vectors capture the most salient word connections in the training set. The modeling capabilities of Word2Vec extend beyond the NLP domain. During training, Word2Vec models require the specification of the target vector length, denoted by N. Another critical parameter is the window length W, representing the width of the sliding window used to extract training samples from the data. Word2Vec embeddings possess algebraic features. For instance, consider an advanced Word2Vec model trained on English text. Let \(V(w_i)\) be the Word2Vec embedding of word \(w_i\), where \(w_0 =\) “king”, \(w_1 =\) “man”, \(w_2 =\) “woman”, \(w_3 =\) “queen”. According to Mikolov et al. (2013), if “closeness” is measured by cosine similarity, the vector \(V(w_3)\) is most similar to the vector \(V(w_0) - V(w_1) + V(w_2)\). The results suggest that the embeddings generated by Word2Vec effectively represent key elements of linguistic semantics within the domain of NLP.
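The analogy test described above can be sketched as follows. The two-dimensional vectors are hand-made for illustration and are not real Word2Vec embeddings; the function names are likewise hypothetical. The query words themselves are excluded from the candidates, as is common in analogy evaluation.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def analogy(emb, a, b, c):
    """Return the word whose vector is closest to V(a) - V(b) + V(c)."""
    query = [x - y + z for x, y, z in zip(emb[a], emb[b], emb[c])]
    candidates = (w for w in emb if w not in (a, b, c))
    return max(candidates, key=lambda w: cosine(emb[w], query))


# Toy embeddings (assumption): axis 0 ~ "royalty", axis 1 ~ "gender".
toy = {
    "king": [0.9, 0.8],
    "man": [0.1, 0.9],
    "woman": [0.1, -0.9],
    "queen": [0.9, -0.8],
    "apple": [-0.7, 0.1],
}
result = analogy(toy, "king", "man", "woman")
```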

5.3 Global vectors (GloVe)

GloVe, short for Global Vectors for Word Representation, is an unsupervised learning algorithm designed to generate word embeddings. It creates a vector space representation of words based on their co-occurrence statistics within a large text corpus (Pimpalkar 2022). The GloVe algorithm utilizes word co-occurrence data or global statistics to infer semantic relationships between words in the corpus, as opposed to word2vec’s dependence on local context windows for deriving these associations (Badri et al. 2022; Gan et al. 2022; Curto et al. 2022). To identify word co-occurrences, GloVe employs a global matrix factorization technique (Pennington et al. 2014). While word2vec is based on a feedforward neural network model, making it a “neural word embeddings” technique, GloVe is based on a log-bilinear function, classifying it as a “count-based” model. By analyzing the frequency of word pair co-occurrences in a corpus, GloVe captures their relationships. The ratio of the probabilities of two words co-occurring can encode meaning and assist in addressing the word-analogy problem.

The initial stage of GloVe involves the creation of a co-occurrence matrix. Consider a vocabulary of size \(V\), and each word is represented by its index in the vocabulary. The co-occurrence matrix, denoted by \(X\), is a \(V \times V\) matrix, where each element \(X_{ij}\) represents the number of times word \(i\) and word \(j\) co-occur within a specific context window. The objective of GloVe is to acquire word vectors that represent both the semantic and syntactic associations among words. It does so by defining a word vector for each word, denoted by \(w\), and a context vector, denoted by \(c\). The word vectors and context vectors are both of size \(d\), representing the dimensionality of the word embeddings (Pennington et al. 2014).

GloVe defines an objective function that measures how well the word vectors and context vectors reproduce the co-occurrence statistics:

$$\begin{aligned} J = \sum _{i=1}^{V} \sum _{j=1}^{V} \left( w_i^{T} c_j + b_i + b_j - \log X_{ij}\right) ^2 \end{aligned}$$

where \(w_i\) and \(c_j\) are the word vector and context vector for words \(i\) and \(j\), respectively, and \(b_i\) and \(b_j\) are the corresponding bias terms. The objective function \(J\) is optimized using gradient descent or other optimization algorithms. The goal is to minimize the squared difference between the dot product of the word and context vectors plus the biases and the logarithm of the co-occurrence counts. To control the importance of each co-occurrence pair, GloVe introduces a weighting function with a parameter \(\alpha\), resulting in the following equation (Pennington et al. 2014):

$$\begin{aligned} J = \sum _{i=1}^{V} \sum _{j=1}^{V} f(X_{ij}) \left( w_i^{T} c_j + b_i + b_j - \log X_{ij}\right) ^2 \end{aligned}$$

where \(f(X_{ij}) = \min \left( 1, \left( \frac{X_{ij}}{x_{\max }}\right) ^\alpha \right)\) is a weighting function and \(x_{\max }\) is a cutoff on the co-occurrence count, above which every pair receives a weight of 1. By optimizing this objective function, GloVe learns the word vectors and context vectors that capture meaningful relationships between words.
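A direct, unoptimized evaluation of the weighted GloVe objective might look like the sketch below. The function names are hypothetical, the defaults \(x_{\max } = 100\) and \(\alpha = 0.75\) follow the values reported by Pennington et al. (2014), and pairs with a zero count are skipped because their logarithm is undefined and their weight is zero.

```python
import math


def glove_weight(x, x_max=100.0, alpha=0.75):
    return min(1.0, (x / x_max) ** alpha)


def glove_loss(X, W, C, b_w, b_c, x_max=100.0, alpha=0.75):
    """Weighted least-squares objective over nonzero co-occurrence counts."""
    total = 0.0
    for i, row in enumerate(X):
        for j, x_ij in enumerate(row):
            if x_ij > 0:  # zero counts contribute nothing
                dot = sum(wi * cj for wi, cj in zip(W[i], C[j]))
                err = dot + b_w[i] + b_c[j] - math.log(x_ij)
                total += glove_weight(x_ij, x_max, alpha) * err ** 2
    return total


# Toy setup (assumption): two words, 2-d vectors, everything initialized to 0.
X = [[0.0, 100.0], [100.0, 0.0]]
W = [[0.0, 0.0], [0.0, 0.0]]
C = [[0.0, 0.0], [0.0, 0.0]]
loss = glove_loss(X, W, C, b_w=[0.0, 0.0], b_c=[0.0, 0.0])
```

A training loop would update `W`, `C`, and the biases by gradient descent to reduce this value.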

5.4 FastText

This technique involves learning large-scale word embeddings (Bojanowski et al. 2017; Joulin et al. 2016), representing an advancement from Word2Vec. FastText considers each word as a composite of character n-grams (e.g., “unbalanced” = “un” + “balance” + “ed”), rather than as single units, with the objective of learning vector representations by leveraging the character and morphological structure of words. Consequently, words can be represented by averaging the embeddings of their n-grams. Although the initial computational cost is higher, fastText’s efficient representation of words through sub-word components allows it to estimate “out of vocabulary” (OOV) and rare words, as their character-based n-grams are likely shared with other words in the training data.
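The sub-word idea can be sketched as follows. The boundary markers `<` and `>` and the n-gram range of 3 to 6 follow Bojanowski et al. (2017); the function names, the toy n-gram table, and the fallback to a zero vector are assumptions, and real fastText additionally hashes n-grams into buckets, which is omitted here.

```python
def char_ngrams(word, n_min=3, n_max=6):
    """Character n-grams of the word wrapped in boundary markers."""
    marked = f"<{word}>"
    return [
        marked[i:i + n]
        for n in range(n_min, n_max + 1)
        for i in range(len(marked) - n + 1)
    ]


def word_vector(word, ngram_vectors, dim):
    """Average the vectors of the word's known n-grams (zeros if none)."""
    known = [ngram_vectors[g] for g in char_ngrams(word) if g in ngram_vectors]
    if not known:
        return [0.0] * dim
    return [sum(column) / len(known) for column in zip(*known)]


# Toy n-gram table (assumption): a real model learns these during training.
table = {"<he": [1.0, 0.0], "er>": [0.0, 1.0]}
oov_vector = word_vector("her", table, dim=2)
```

Even if "her" never appeared as a whole word during training, it still receives a vector because some of its n-grams are shared with other words.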

The Facebook AI Research team developed the FastText library, which also provides a collection of pre-trained word representations. It includes 2,000,000 frequent words from Common Crawl, each represented by a 300-dimensional vector, for a total of 600,000,000 values. Besides single words, it incorporates hand-crafted n-grams as features. Due to its simple design, text classification can be performed efficiently and accurately (Qiao et al. 2018). Word embedding methods have been widely applied to various text categorization problems. Pre-trained word embeddings can predict word contexts in an unsupervised manner, assuming that words in close proximity within a sentence have similar meanings (Badri et al. 2022; Kowsher et al. 2022). FastText embeddings use morphological cues to represent words accurately, which helps with otherwise problematic words and improves generalizability (Didi et al. 2022). To improve the handling of unfamiliar words, FastText constructs their vectors from n-grams.

Fig. 4 Each input sequence is given a task-specific representation using a feedforward network with ReLU activation and a biLSTM encoder (McCann et al. 2017)

Fig. 5 (Left) The architecture and training objectives of the Transformer (Radford et al. 2018). (Right) Input transformations for fine-tuning on specific tasks

5.5 Contextualized word embeddings (CoVe)

The objective of CoVe (McCann et al. 2017) is to enhance word representation by training an encoder and subsequently adapting it for a different task. Because unknown words are represented by zero vectors, this approach also encounters the OOV issue (McCann et al. 2017; Mars 2022). In the classification network depicted in Fig. 4, biattention conditions each of the two representations on the other, a biLSTM integrates the conditional information, and a maxout network computes a distribution over classes from pooled features.

The BiLSTM accepts a word sequence as input and produces a series of hidden states representing the contextual details of each word. Let the input sequence of words be \(X = [x_1, x_2, \ldots , x_T]\), where T is the length of the sequence. Each word \(x_t\) is associated with a word embedding vector \(e_t\). The hidden states of the BiLSTM at each time step are denoted as \(H = [h_1, h_2, \ldots , h_T]\). The forward pass of the BiLSTM computes the hidden state for each time step t from the input sequence X. The forward hidden states \(h_t\) are computed as follows:

$$\begin{aligned} h_t = \text {LSTM}_{\text {forward}}(e_t, h_{t-1}) \end{aligned}$$

where \(\text {LSTM}_{\text {forward}}\) is the forward LSTM cell function and \(h_{t-1}\) is the previous hidden state. Similarly, the backward pass of the BiLSTM computes the backward hidden states \(g_t\) from the input sequence X:

$$\begin{aligned} g_t = \text {LSTM}_{\text {backward}}(e_t, g_{t+1}) \end{aligned}$$

where \(\text {LSTM}_{\text {backward}}\) is the backward LSTM cell function and \(g_{t+1}\) is the subsequent hidden state. The forward and backward hidden states are concatenated to obtain the final contextualized word representation \(c_t = [h_t, g_t]\). Together, \(h_t\) and \(g_t\) capture the contextual information of the word \(x_t\), considering both preceding and succeeding words. The encoder is trained by optimizing a task-specific objective function, such as language modeling or machine translation. Once trained, it can be repurposed for a different task by using the contextualized word representations \(c_t\) as inputs to downstream models or classifiers (Mars 2022; Andrabi and Wahid 2022; Ghanem and Erbay 2023).
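The forward pass, backward pass, and concatenation can be sketched in plain Python as follows. To keep the example short, the recurrent cell is passed in as a function and a fixed, untrained tanh update stands in for a real LSTM cell; this stand-in, the function names, and the toy embeddings are all assumptions.

```python
import math


def run_direction(cell, embeddings, hidden_size, reverse=False):
    """Run a recurrent cell over the sequence; return one state per position."""
    n = len(embeddings)
    order = range(n - 1, -1, -1) if reverse else range(n)
    state = [0.0] * hidden_size
    states = [None] * n
    for t in order:
        state = cell(embeddings[t], state)
        states[t] = state
    return states


def bidirectional_encode(fwd_cell, bwd_cell, embeddings, hidden_size):
    h = run_direction(fwd_cell, embeddings, hidden_size)  # forward states
    g = run_direction(bwd_cell, embeddings, hidden_size, reverse=True)
    # List concatenation implements c_t = [h_t, g_t].
    return [h_t + g_t for h_t, g_t in zip(h, g)]


def toy_cell(e_t, prev):
    # Stand-in for an LSTM cell (assumption): a fixed tanh update that
    # requires the embedding size to equal the hidden size.
    return [math.tanh(0.5 * e + 0.5 * p) for e, p in zip(e_t, prev)]


embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contextual = bidirectional_encode(toy_cell, toy_cell, embeddings, hidden_size=2)
```

Each element of `contextual` has twice the hidden size: the first half summarizes the words up to position t and the second half summarizes the words from position t onward.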

6 Deep learning for LLM tasks

Text classification (TC) is a fundamental sub-task underpinning all natural language understanding (NLU) tasks. Generally, NLU is employed for tasks requiring reading, understanding, and interpretation. Text data originates from various sources, for example the questions and answers exchanged in customer interactions. While text provides a rich data foundation, its lack of organization makes extracting meaningful insights challenging and time-consuming. TC can be performed using either human or machine labeling. The increasing availability of text data across applications underscores the utility of automated text categorization. Automatic text classification typically falls into two categories: rule-based methods and artificial intelligence-based methods. Rule-based approaches categorize text according to predefined criteria and require extensive domain expertise. AI-based methods, on the other hand, are trained on labeled text samples to classify new texts: machine learning (ML) algorithms learn the relationship between the text and its labels. Traditional ML-based models often follow a two-phase approach. The first step involves manually extracting features from a document, and the second step involves feeding these features into a classifier to generate a prediction. A limitation of this phased approach is that relying on manually extracted features requires complex feature analysis to achieve reasonable performance.

OpenAI has used Google’s Transformer neural network architecture (Vaswani et al. 2017) to build embedding models since 2018. Because it is based solely on attention, the Transformer makes large-scale model training on TPUs considerably more efficient. GPT (Radford et al. 2018), an influential framework built on Transformers, is currently prevalent for text generation tasks, as depicted in Fig. 5. In 2018, Google introduced BERT (Devlin et al. 2018), which is based on a bidirectional Transformer. OpenAI’s GPT-3 model (Brown et al. 2020) continues in the same trajectory, employing larger models trained on expanded datasets. This section highlights notable advancements in NLP and natural language understanding (NLU), demonstrating the application of various deep learning models for diverse language comprehension tasks within LLMs.

6.1 Feed forward neural networks

In the domain of NLP (Radford et al. 2018; Devlin et al. 2018; Liu et al. 2019; Radford et al. 2019; Brown et al. 2020), the efficacy and adaptability of large-scale pretrained language models are noteworthy. It has been evidenced that augmenting the model size (scaling) serves as a dependable strategy for enhancing generalization, thereby facilitating additional functionalities, without encountering performance plateaus (Kaplan et al. 2020; Zhang et al. 2022; Chowdhery et al. 2022; Hoffmann et al. 2022; Wei et al. 2022). Nonetheless, there remains a necessity for more resource-efficient methodologies concerning the training and inference of LLMs, given the considerable computational resources requisite for the development of larger language models.

In a transformer layer, the feed-forward network (FFN) block receives an input vector x from the self-attention block and transforms it into an output vector y. Mathematically, the FFN block can be represented as follows (Liu et al. 2023):

$$\begin{aligned} y = \text {FFN}(x) = f\left( x \cdot K^{\top }\right) \cdot V \end{aligned}$$(9)

where \(d_m\) is the number of memory cells, d is the number of dimensions of the input vector x, and K is a matrix of learnable parameters of shape \(d_m \times d\). The input x is multiplied by \(K^{\top }\) (a matrix product, not an element-wise one), and the resulting intermediate vector is passed through a non-linear function f to obtain the hidden states m, a vector of size \(d_m\). The matrix V, also of shape \(d_m \times d\), is another set of learnable parameters. Multiplying the hidden states m by V yields the d-dimensional output vector y. Alternatively, the FFN block can be interpreted as a neural memory. In this view, it consists of \(d_m\) key-value pairs, with the keys forming the key table K and the values forming the value table V. Each key \(k_i\) is a d-dimensional row of K, and each value \(v_i\) is the corresponding row of V. The memory operation can be represented as follows (Liu et al. 2023):

$$\begin{aligned} y = \sum _{i=1}^{d_m} f\left( x \cdot k_i\right) \cdot v_i = \sum _{i=1}^{d_m} m_i \cdot v_i \end{aligned}$$(10)

In this equation, the query input x is multiplied with each key \(k_i\), and the non-linear function f is applied to each dot product \(x \cdot k_i\) to obtain the memory coefficient \(m_i\) of the i-th memory cell. The values \(v_i\) are the corresponding rows of the value table V. Finally, the output vector y is computed as the sum of the values \(v_i\) weighted by their respective memory coefficients \(m_i\). Both views, the FFN as a multi-layer perceptron [Eq. (9)] and as a neural memory [Eq. (10)], describe the same operations performed in the FFN block of a transformer layer, with the only difference being in how the key-value pairs are represented and utilized.

In 2000, Bengio et al. (2000) introduced the first neural language model. It is built on a feedforward neural network architecture and was trained on a dataset of 14 million words. Conversely, models that rely on premature embeddings demonstrate inferior performance compared to models that utilize manually acquired features (Borgeaud et al. 2022; Schwartz and Dodge 2020; Tay et al. 2022).

Sparse scaling, which increases the number of parameters while keeping the training and inference cost (in FLOPs) the same, is a promising direction. Recent research has scaled up the FFN of a transformer with sparsely activated parameters, yielding a scaled and sparse FFN (S-FFN). Two primary methods have been developed to build an S-FFN. In the first, the S-FFN is viewed as a form of neural memory (Sukhbaatar et al. 2015) and, like a sparse memory, activates only a subset of memory cells upon retrieval (Lample et al. 2019). The second method uses a Mixture-of-Experts (MoE) network (Lepikhin et al. 2020; Fedus et al. 2022; Roller et al. 2021; Gururangan et al. 2021), which contains multiple smaller FFN modules (called “experts”) and activates a subset of these experts based on the input.
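The equivalence of the two views of the FFN block can be checked numerically with a small sketch. ReLU is assumed for the non-linearity f, the shapes follow the description above (K and V each hold one row per memory cell), and the function names and toy values are hypothetical.

```python
def relu(z):
    return max(0.0, z)


def ffn_mlp(x, K, V, f=relu):
    """MLP view: hidden states m = f(x K^T), then output y = m V."""
    m = [f(sum(xi * ki for xi, ki in zip(x, k_row))) for k_row in K]
    d = len(V[0])
    return [sum(m[i] * V[i][c] for i in range(len(V))) for c in range(d)]


def ffn_memory(x, K, V, f=relu):
    """Memory view: sum of values v_i weighted by m_i = f(x . k_i)."""
    y = [0.0] * len(V[0])
    for k_i, v_i in zip(K, V):
        m_i = f(sum(xi * ki for xi, ki in zip(x, k_i)))
        y = [acc + m_i * v for acc, v in zip(y, v_i)]
    return y


x = [1.0, -2.0]                              # d = 2
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]     # 3 memory cells, keys as rows
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]     # matching values as rows
y_mlp = ffn_mlp(x, K, V)
y_mem = ffn_memory(x, K, V)
```

In this toy case only the first memory cell has a positive coefficient, so the output equals its value row; a sparse FFN exploits exactly this kind of selectivity by computing only the cells it expects to be active.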

6.2 Recurrent neural networks (RNN)

Models can be trained on extensive text datasets and the acquired knowledge then applied to downstream tasks through transfer learning (Mikolov et al. 2013). Before the transformer architecture was introduced for transfer learning, unidirectional language models were commonly used despite their inherent limitations, which included a one-directional RNN architecture and a context vector of limited size. Bidirectional Encoder Representations from Transformers (BERT) (Devlin et al. 2018) can improve performance on downstream tasks by addressing these deficiencies.

The LSTM model extends the capabilities of the RNN architecture. Training a basic RNN is known to suffer from vanishing gradients, and the LSTM surpasses the standard RNN owing to its enhanced memory mechanism. Specifically, the LSTM approach outlined in Hope et al. (2017) effectively reduces dimensionality while achieving notable performance in classifying opinions, owing to its memory function. To employ machine learning for sentiment analysis, simplifying the input features is crucial. Researchers in Tarasov (2015) trained long short-term memory models on opinions expressed in restaurant reviews. Several methods were evaluated on the collected data, including simple recurrent neural networks, logistic regression, bidirectional RNNs, and bidirectional long short-term memory networks. Among these, the best performance was observed with a deep bidirectional LSTM featuring multiple hidden layers. The vector representation of each word can be treated as a trainable parameter of the system to facilitate the analysis of the model’s text input. For tasks such as classifying sentiment in movie reviews and assessing the semantic relatedness of sentence pairs, the LSTM model was favored by researchers in Tai et al. (2015). Additionally, Socher et al. (2013) introduced the Sentiment Treebank and recursive neural tensor networks for sentiment analysis. Applying recursive neural tensor networks to the positive/negative classification of individual sentences improved accuracy from 80% to 85%.

6.3 Convolutional neural networks (CNN)

Like conventional neural networks, CNNs are composed of neurons with learnable weights and biases. Each neuron processes multiple inputs via a dot product operation, optionally followed by a nonlinearity. CNNs thus form a feed-forward architecture (LeCun et al. 2010). Convolution primarily aims to identify relevant features in data that exhibit local connectivity. The outputs of the convolutional kernels are passed to the activation function, which is crucial for learning abstract concepts and introduces nonlinearity into the feature space. This nonlinearity produces distinct activation patterns for different responses, which facilitates learning significant distinctions between images. Furthermore, the output of the nonlinear activation function is typically followed by subsampling, which summarizes the output and makes it robust to geometric variations in the input.

The CNN has found applications in NLP, such as language modeling and analysis, despite RNNs being deemed more appropriate for these purposes (Bhatt et al. 2021). Since the inception of CNN as a novel representation learning technique, there has been a shift in the methodology of sentence modeling or structuring in language. Sentence modeling aids developers in crafting functional software by offering insights into the semantic meaning of sentences. Traditional approaches to information retrieval assess data based on individual words or characteristics, which often overlooks the essence of the analyzed statement. In 2014, Kalchbrenner illustrated a dynamic CNN approach with k-max pooling in the training context (Kalchbrenner et al. 2014), as depicted in Fig. 6. A word embedding size of 4 is utilized, and the network comprises two convolutional layers, each with distinct feature maps. The filter widths for the upper and lower layers are 3 and 2, respectively.
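The k-max pooling operation mentioned above can be sketched for a single feature-map row as follows. It keeps the k largest activations while preserving their original order, unlike ordinary max pooling, which keeps one value. The function name and the toy input are assumptions, and the dynamic choice of k as a function of sentence length and layer depth is omitted.

```python
def k_max_pooling(sequence, k):
    """Keep the k largest values of a sequence, in their original order."""
    if k >= len(sequence):
        return list(sequence)
    # Indices of the k largest values (ties resolved in favor of earlier ones).
    top = sorted(range(len(sequence)), key=lambda i: sequence[i], reverse=True)[:k]
    return [sequence[i] for i in sorted(top)]


pooled = k_max_pooling([1, 5, 2, 8, 3], k=3)
```

Preserving the order lets later layers still see which strong feature came first in the sentence.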

Fig. 6 A typical DCNN design for a seven-word input sentence (Kalchbrenner et al. 2014)

Fig. 7 Illustration of the method for modeling locality proposed in Yang et al. (2018)

Through this methodology, connections among words can be discerned without reliance on dictionaries or parsers (Gidaris and Komodakis 2015). Furthermore, Collobert and Weston devised a convolutional neural network (CNN) variant capable of performing several natural language processing tasks simultaneously, including language modeling, chunking, named entity recognition, and semantic role labeling (Collobert 2011). Integrating a max-pooling mechanism enables the extraction of salient details from various segments of the document. Additionally, to improve precision and reduce model size, an intermediate (bottleneck) layer is introduced to learn compact document representations. Moreover, instead of feeding low-dimensional word vectors into CNNs, the approach described in Liu et al. (2017) involves training high-dimensional text embeddings for smaller text categories.

The effect of both word embeddings and CNN architectures on model performance has been investigated. The VDCNN model proposed by Conneau et al. (2016) operates directly on individual characters using small convolutions and pooling operations. Results indicate that VDCNN’s performance improves with increased network depth. Duque et al. (2019) adapted VDCNN’s structure to accommodate the limitations of mobile platforms.

6.4 Attention-based models

The human attention mechanism can be categorized into two distinct classes (Tsotsos et al. 1995). Saliency-based attention, characterized by its external-to-internal (bottom-up) processing, constitutes the initial, unconscious form of attention. For example, individuals are more likely to perceive a voice in a crowded environment if it is loud. In the context of deep learning, this resembles the max-pooling and gating mechanisms (Hochreiter and Schmidhuber 1997; Cho et al. 2014), wherein less suitable values (e.g., smaller ones) are suppressed while more relevant ones are retained. The other attentional class, termed “focused attention,” operates in a top-down manner. This attentional mode is directed towards a specific objective or set of activities, facilitating deliberate and purposeful concentration. The majority of attention mechanisms in deep learning are tailored for singular tasks.

In neural machine translation, neural networks are employed to translate text from one language to another. A significant obstacle in this process is aligning sentences across different languages, particularly longer sentences. To address this challenge and improve translation quality, Bahdanau et al. (2014) introduced the attention mechanism into neural networks. This mechanism enables the network to selectively focus on specific sections of the source text during translation. Since then, various enhancements have been proposed, such as local attention (Luong et al. 2015), supervised attention (Mi et al. 2016; Liu et al. 2016), hierarchical attention (Zhao et al. 2018), and self-attention (Vaswani et al. 2017; Yang et al. 2018). These enhancements aim to better align words and improve translation performance by experimenting with different attention architectures. Figure 7 illustrates the method proposed by Yang et al. (2018), with a window size of 2.
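The idea of selectively focusing on parts of the source sequence can be sketched as follows. This is a simplified illustration under stated assumptions: it uses scaled dot-product scoring rather than the learned scoring function of Bahdanau et al. (2014), and a hard window around a chosen position stands in for the locality mechanisms cited above, whose exact form is not given in the text. The names `attend`, `center`, and `window` are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax; entries equal to -inf receive weight 0."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values, center=None, window=None):
    """Return (weights, context) for one query over a sequence of key/value vectors.

    If center and window are given, positions farther than `window` from
    `center` are masked out before the softmax (a hard local window).
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    if center is not None and window is not None:
        scores = [s if abs(i - center) <= window else float("-inf")
                  for i, s in enumerate(scores)]
    weights = softmax(scores)
    dim = len(values[0])
    # The context vector is the attention-weighted average of the value vectors.
    context = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, context


# Five source positions with 2-dimensional states (made-up numbers).
states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0], [1.0, 0.0]]
global_w, _ = attend([1.0, 0.0], states, states)
local_w, _ = attend([1.0, 0.0], states, states, center=1, window=1)
print([round(w, 3) for w in global_w])
print([round(w, 3) for w in local_w])
```

With the window enabled, all attention mass falls on positions 0 to 2, which mirrors the intent of local attention: the model aligns each target word with a neighbourhood of the source rather than with the entire sentence.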

Labeling texts is the primary objective of text classification, a process widely utilized in various applications including topic categorization (Wang et al. 2012), sentiment analysis (Maas et al. 2011; Pang et al. 2008), and spam identification (Sahami et al. 1998). Self-attention mechanisms are predominantly employed to enhance the representation of documents in these classification endeavors (Letarte et al. 2018; Shen et al. 2018; Lin et al. 2017). Consequently, numerous studies have integrated self-attention with other attention techniques, such as hierarchical self-attention (Yang et al. 2016) and multi-dimensional self-attention (Lin et al. 2017). Architectures incorporating attention models, including transformer (Song et al. 2019; Ambartsoumian and Popowich 2018) and memory networks (Tang et al. 2016; Zhu and Qian 2018), have also been applied in these tasks.

In addition to question answering, document retrieval, entailment classification, paraphrase detection, and recommendation systems based on reviews, text matching represents a significant area of examination in natural language processing and information retrieval. Various innovative techniques, such as memory networks (Sukhbaatar et al. 2015), attention over attention (Cui et al. 2016), inner attention (Wang et al. 2016), structured attention (Kim et al. 2017), and co-attention (Tay et al. 2018; Lu et al. 2016), have been developed in conjunction with attention mechanisms to address this research domain.

6.5 Knowledge graph (KG) based models

The integration of KG data into neural QA systems is an active area of study. Some research investigates two-tower models (Wang et al. 2019), which combine a graph-based knowledge representation with a language-based representation without any interaction between the two components. Other studies use one modality to ground the other: Knowledgeable Reader (Mihaylov and Frank 2018), KagNet (Lin et al. 2019), and KT-NET (Yang et al. 2019) use an encoded representation of a linked KG to enrich the textual representation of each question-answering example. Methods such as MHGRN (Feng et al. 2020) and the graph reasoning model of Lv et al. (2020) take the opposite direction of information flow, using a textual representation to support reasoning over a KG extracted for the given example. In all of these settings, however, interaction between the two modalities is constrained because information can flow in only one direction.

Recent approaches explore deeper integration of the two modalities. Some methods extract the implicit knowledge stored in LMs by training them on structured KG data (Bosselut et al. 2019; Petroni et al. 2019; Hwang et al. 2021) and use it to construct local KGs suitable for QA (Wang et al. 2020; Hwang et al. 2021). However, after training the LM on factual data, several of these techniques discard the static KG, thereby forfeiting structural cues that are valuable for guiding reasoning. A more recent method, QA-GNN (Ren et al. 2021), uses message passing to jointly update the LM and Graph Neural Network (GNN) representations, with the textual component of this joint structure initialized from the LM.

Additionally, other studies explore the viability of pre-training knowledge graphs in conjunction with language models. However, akin to question answering (QA), modality interaction is frequently confined to feeding knowledge into language (Zhang et al. 2019; Shen et al. 2020; Donghan et al. 2022), rather than fostering interactions across multiple layers. The authors of Zhang et al. (2019) have presented a work that closely resembles this, albeit they limit the system’s flexibility by utilizing identical modality configurations for both the LM and KG.

7 Applications of LLMs

Recent advancements in deep learning, in conjunction with numerous PLMs, facilitate the efficient execution of various NLP tasks. To leverage LLMs, tasks can be reformulated as text generation challenges, enabling the application of LLMs to efficiently address these tasks.

7.1 Text generation

Text generation is a critical application of language models (LMs), aiming to generate word sequences based on input data. The diversity of objectives and initial materials introduces numerous challenges in text production. For instance, in automated speech recognition (ASR), a sequence of spoken words serves as input, while a sequence of written words is the corresponding output. Similarly, machine translation involves utilizing an input text sequence along with the target language to generate a text sequence in the target language. Generating a story exemplifies the task of generating text from a given topic. Decoding plays a pivotal role in text generation by determining the next linguistic unit in the output sequence. Effective decoding techniques should generate coherent continuations given a context. The significance of decoding techniques has grown in parallel with the increasing complexity of LMs.

Maximization-based decoding seeks the tokens with the highest probability under the model, on the assumption that the model assigns high probability to good text. In greedy search (Zhao et al. 2017; Xu et al. 2017), the token with the greatest probability is selected at every step. Beam search (Li et al. 2016; Vijayakumar et al. 2018; Kulikov et al. 2018) instead retains several partial sequences at each step and favors those with the highest cumulative probability, so that a sequence beginning with a lower-probability but plausible token is not overlooked. Recent work has also introduced trainable decoding methods, such as trainable greedy decoding for neural machine translation (Gu et al. 2017), in which reinforcement learning optimizes the decoding objective to attain the best translation. Sampling-based decoding, by contrast, draws the next token from the model distribution, which can produce low-probability tokens from the unreliable tail of that distribution and lead to nonsensical continuations. This problem motivates Top-k sampling (Fan et al. 2018) and Nucleus sampling (Holtzman et al. 2019), both of which truncate the language model distribution so that sampling is biased towards the most probable tokens. Diverse Beam Search (DBS) (Vijayakumar et al. 2018) builds upon beam search to encourage diversity among beams; it is potentially trainable, with beam diversity settings tailored to different inputs or tasks using reinforcement learning.
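The decoding strategies discussed above can be compared on a toy example. The sketch below is illustrative only: the next-token table `TABLE`, the function names, and all probabilities are invented for the example, and a real LM would supply the distribution instead. It shows greedy search, beam search, and the truncation step used by Top-k and Nucleus sampling.

```python
import math
import random

# Hypothetical next-token distributions, keyed by the previous token.
TABLE = {
    "<s>": {"a": 0.6, "b": 0.4},
    "a": {"x": 0.55, "y": 0.45},
    "b": {"x": 0.9, "y": 0.1},
}


def next_probs(seq):
    return TABLE[seq[-1]]


def greedy_decode(start, steps):
    """Pick the single most probable token at every step."""
    seq = [start]
    for _ in range(steps):
        probs = next_probs(seq)
        seq.append(max(probs, key=probs.get))
    return seq


def beam_search(start, steps, beam_width):
    """Keep the beam_width partial sequences with the highest cumulative log-probability."""
    beams = [([start], 0.0)]
    for _ in range(steps):
        candidates = [(seq + [tok], logp + math.log(p))
                      for seq, logp in beams
                      for tok, p in next_probs(seq).items()]
        beams = sorted(candidates, key=lambda c: -c[1])[:beam_width]
    return beams


def top_k_filter(probs, k):
    """Keep the k most probable tokens and renormalize."""
    kept = sorted(probs.items(), key=lambda kv: -kv[1])[:k]
    total = sum(p for _, p in kept)
    return {tok: p / total for tok, p in kept}


def nucleus_filter(probs, p):
    """Keep the smallest set of top tokens whose total mass reaches p, then renormalize."""
    kept, mass = [], 0.0
    for tok, prob in sorted(probs.items(), key=lambda kv: -kv[1]):
        kept.append((tok, prob))
        mass += prob
        if mass >= p:
            break
    return {tok: prob / mass for tok, prob in kept}


def sample(probs, rng):
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


print(greedy_decode("<s>", 2))        # ['<s>', 'a', 'x'], probability 0.33
print(beam_search("<s>", 2, 2)[0][0])  # ['<s>', 'b', 'x'], probability 0.36

dist = {"the": 0.5, "a": 0.3, "cat": 0.15, "zebra": 0.05}
rng = random.Random(0)
print(sample(top_k_filter(dist, 2), rng))
print(sample(nucleus_filter(dist, 0.9), rng))
```

In this toy table, greedy search commits to "a" and ends with a sequence of probability 0.33, whereas beam search with width 2 recovers the more probable sequence through "b". Both truncation filters remove the unlikely token "zebra" before sampling, which is the mechanism by which they avoid the tail of the distribution.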

7.2 Vision-language models

Researchers have aimed to develop comprehensive models that integrate two distinct types of data, images and text; these are referred to as Vision-Language Models (VLMs). The conceptual framework of these models draws inspiration from the efficacy demonstrated by pre-trained models in CV and NLP (Wen et al. 2023). VLMs can be classified as either fusion-encoder models or dual-encoder models. Fusion-encoder models employ multi-layer cross-modal Transformer encoders to jointly encode image and text pairs, combining their visual and textual representations. Conversely, dual-encoder models encode images and text independently. The interactions between the two modalities are then captured using a dot product or a multi-layer perceptron.
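The dual-encoder scoring step described above can be sketched in a few lines. The example assumes that each encoder has already produced a fixed-length embedding; the embeddings below are made-up numbers, and the function names are hypothetical. Normalizing the vectors before the dot product (giving cosine similarity) is a common choice assumed here, not something the text specifies.

```python
import math


def l2_normalize(vec):
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def similarity_matrix(image_embs, text_embs):
    """Dot products between every normalized image and text embedding."""
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    return [[sum(a * b for a, b in zip(i, t)) for t in txts] for i in imgs]


def best_caption(image_embs, text_embs):
    """For each image, return the index of the most similar text."""
    sims = similarity_matrix(image_embs, text_embs)
    return [max(range(len(row)), key=row.__getitem__) for row in sims]


# Stand-ins for the outputs of an image encoder and a text encoder.
image_embs = [[1.0, 0.1], [0.1, 1.0]]
text_embs = [[0.2, 2.0], [3.0, 0.1]]
print(best_caption(image_embs, text_embs))  # [1, 0]
```

Because the two modalities interact only through this final similarity, image and text embeddings can be computed and cached separately, whereas a fusion encoder must process every image-text pair jointly.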

Fig. 8 Methodology for VisualBERT (Li et al. 2019)

The fusion-encoder modules of these models receive both visual features and text embeddings as input and employ various fusion techniques to capture the interaction between the visual and textual modalities. Following self-attention or cross-attention operations, the latent features of the top layer are considered a merged representation of the multiple modalities. VisualBERT (Li et al. 2019) represents a significant advancement, using self-attention to implicitly align textual components with regions in the corresponding image, as depicted in Fig. 8. By processing image regions and text together in a Transformer, the self-attention mechanism can learn latent correspondences between words and images. The model is first pre-trained on caption data using masked language modeling and sentence-image prediction tasks, and subsequently fine-tuned for specific tasks. It integrates BERT (Devlin et al. 2018) for processing linguistic data and a pretrained Faster R-CNN (Ren et al. 2015) for generating object proposals. To capture complex relationships, the text and the image features from the object proposals are fed into VisualBERT as unordered input tokens and processed jointly by multiple Transformer layers. Following this development, a variety of VLMs, including Uniter (Chen et al. 2020), OSCAR (Li et al. 2020), and InterBert (Lin et al. 2020), utilized BERT as a textual encoder and Faster R-CNN as an object proposal generator to model the dynamic relationship between visual information and language.

Dual-stream architectures integrate a cross-attention mechanism to capture interactions between visual and verbal elements, contrasting with the single-stream designs that manage a single information stream. Typically, the cross-attention layer comprises two unidirectional sub-layers: one processes information from vision to language, and the other processes information from language to vision. These sub-layers enable communication and coordination between the two modalities. For example, ViLBERT (Lu et al. 2019) utilized co-attentional transformer layers to enable cross-modal information sharing by independently processing visual and textual inputs. Subsequent research, such as LXMERT (Tan and Bansal 2019), Visual Parsing (Xue et al. 2021), ALBEF (Li et al. 2021), and WenLan (Huo et al. 2021), further refined this approach by employing distinct transformers before cross-attention to separate intra-modal and cross-modal interactions. Chen et al. (2022) proposed VisualGPT to manage limited image-text data within the same domain by introducing a novel self-resurrecting encoder-decoder attention mechanism into PLMs.
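The two unidirectional sub-layers of a cross-attention layer can be sketched as below. This is a deliberately reduced illustration: it omits the learned query, key, and value projections, multiple heads, residual connections, and feed-forward blocks that models such as ViLBERT use, and the function names and feature values are hypothetical.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(queries, memory):
    """Each query vector attends over the vectors of the other modality."""
    scale = math.sqrt(len(queries[0]))
    dim = len(memory[0])
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, m)) / scale for m in memory]
        weights = softmax(scores)
        outputs.append([sum(w * m[d] for w, m in zip(weights, memory))
                        for d in range(dim)])
    return outputs


def co_attention_layer(vision, language):
    """Two unidirectional sub-layers, one per direction of information flow."""
    # Vision-to-language: text tokens query the image regions.
    language_updated = cross_attend(language, vision)
    # Language-to-vision: image regions query the text tokens.
    vision_updated = cross_attend(vision, language)
    return vision_updated, language_updated


vision = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # three image-region features
language = [[0.9, 0.1], [0.2, 0.8]]            # two token embeddings
vision_out, language_out = co_attention_layer(vision, language)
print(len(vision_out), len(language_out))  # 3 2
```

Each stream keeps its own sequence length, and only its content is updated with information drawn from the other modality, which is the sense in which the two sub-layers coordinate the streams without merging them.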

7.3 Personalised learning

Educators can utilize AI to analyze student performance and behavior data, identify areas of difficulty, and provide personalized guidance for addressing these issues. AI tools are employed to develop adaptive learning systems that adjust the difficulty of assignments and assessments based on each student’s needs and abilities, thereby facilitating a more personalized learning experience and a more accurate assessment of student progress. This approach ensures that students are appropriately challenged without being overwhelmed, which has been demonstrated to enhance both motivation and engagement (Baars et al. 2022). AI provides personalized feedback that highlights specific areas for improvement and suggests strategies, enabling students to better understand their strengths and weaknesses and develop effective study habits. This individualized learning experience and targeted instruction significantly enhance student support and foster a more productive learning environment. By providing timely feedback and assistance, AI and NLP contribute to the development of students’ metacognitive skills, including the ability to reflect on their learning processes and formulate improvement plans (Khan et al. 2023).