Abstract

Imbalanced learning is one of the most formidable challenges in data mining and machine learning. Despite continuous research progress over the past decades, learning from data with an imbalanced class distribution remains a compelling research area. Imbalanced class distributions commonly constrain the practical utility of machine learning and even deep learning models in real applications. Numerous recent studies have made substantial progress in imbalanced learning, deepening our understanding of its nature while uncovering new challenges. Given the field's rapid evolution, this paper summarizes recent breakthroughs in imbalanced learning through an in-depth review of existing strategies for confronting the problem. Unlike most surveys, which primarily address classification tasks in machine learning, we also cover techniques for regression tasks and aspects of deep long-tail learning. Furthermore, we explore real-world applications of imbalanced learning, covering a broad spectrum from management science to engineering, and lastly discuss newly emerging issues and challenges that require further exploration.


1 Introduction

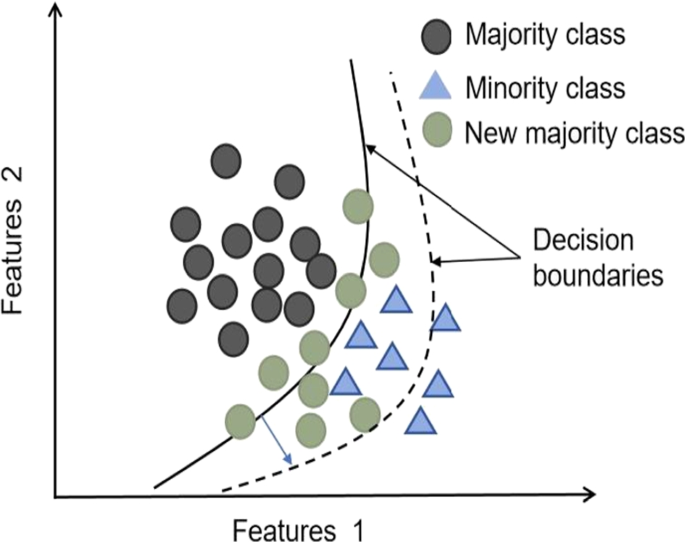

In machine learning, it is commonly assumed that the number of samples in each class under study is roughly equal. In real-life scenarios, however, data generated by enterprise and industrial applications often exhibit an imbalanced distribution. In fault detection, for example, the majority class has a large number of samples, while the other class (the class with faults) has only a small number of samples (Ren et al. 2023). As depicted in Fig. 1, this imbalance presents a challenge because traditional learning algorithms tend to favor the more prevalent classes, potentially overlooking the less frequent ones. From a data mining perspective, however, minority classes often carry valuable knowledge, which makes them crucial. Consequently, the objective of imbalanced learning is to develop intelligent systems that correct this bias, enabling learning algorithms to handle imbalanced data more effectively.

Fig. 1 A binary imbalanced dataset. The decision boundaries learned on such samples tend to be increasingly biased towards the majority class, leading to the neglect of minority class samples. The dashed line is the decision boundary after additional majority class samples are added. Note that the new samples are added arbitrarily, just to show how added samples affect the decision boundary

Over the past two decades, imbalanced learning has garnered extensive research and discussion. Numerous methods have been proposed to tackle imbalanced data, encompassing data pre-processing, modification of existing classifiers, and algorithmic parameter tuning. The issue of imbalanced data classification is prevalent in real-world applications, such as fault detection (Fan et al. 2021; Kuang et al. 2021; Ren et al. 2023), fraud detection, medical diagnosis (Hung et al. 2022; Fotouhi et al. 2019; Behrad and Abadeh 2022), and other fields (Yang et al. 2022; Haixiang et al. 2017; Ding et al. 2021). In these application scenarios, datasets typically exhibit a significant class imbalance, where a few classes contain only a limited number of samples, while the majority class is more abundant. This imbalance leads to varying performance of learning algorithms, known as performance bias, on the majority and minority classes. To effectively address this challenge, imbalanced learning has been extensively examined by both academia and industry, resulting in the proposal of various methods and techniques (Chen et al. 2022a, b; Sun et al. 2022; Zhang et al. 2019).

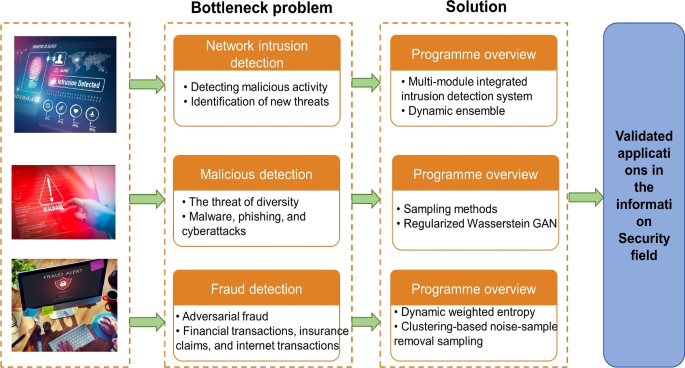

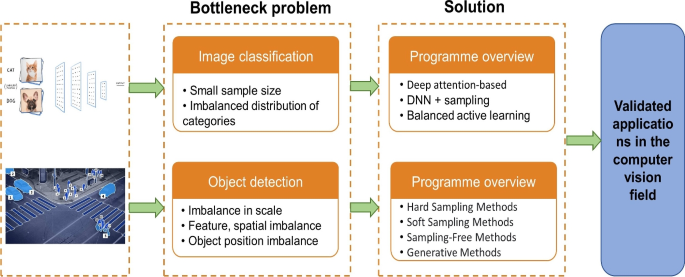

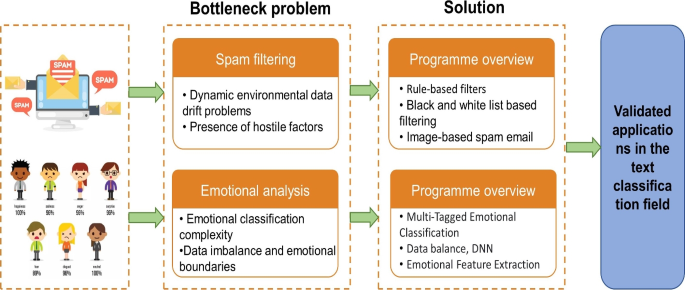

The processing of imbalanced data has gained significant importance due to the escalating volume of data originating from intricate, large-scale, and networked systems in domains such as security (Yang et al. 2022), finance (Wu and Meng 2016) and the Internet (Di Mauro et al. 2021). Despite some notable achievements thus far, there remains a lack of systematic research that reviews and discusses recent progress, emerging challenges, and future directions in imbalanced learning. To bridge this gap, our objective is to present a comprehensive survey of recent research on imbalanced learning. Such an endeavor is crucial for sustaining focused and in-depth exploration of imbalanced learning, facilitating the discovery of more effective solutions, and advancing the field of machine learning and data mining.

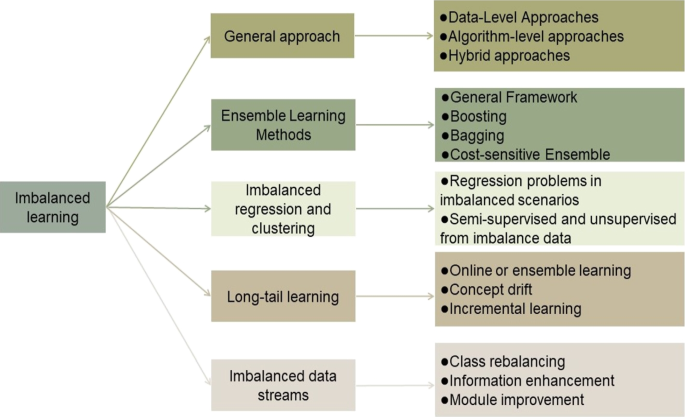

In this paper, we categorize the existing innovative solutions for imbalanced data classification algorithms into five types, as illustrated in Fig. 2. These types encompass general methods, ensemble learning methods, imbalanced regression and clustering, long-tail learning, and imbalanced data streams. Within these categories, we further delve into more detailed methods, including data-level approaches, algorithm-level techniques, hybrid methods, general ensemble frameworks, boosting, bagging, cost-sensitive ensembles, imbalanced regression, imbalanced clustering, online or ensemble learning, concept drift, incremental learning, class rebalancing, information enhancement, and model improvement. Employing this classification scheme, we conduct a comprehensive review of existing imbalanced learning approaches and outline recent practical application directions.

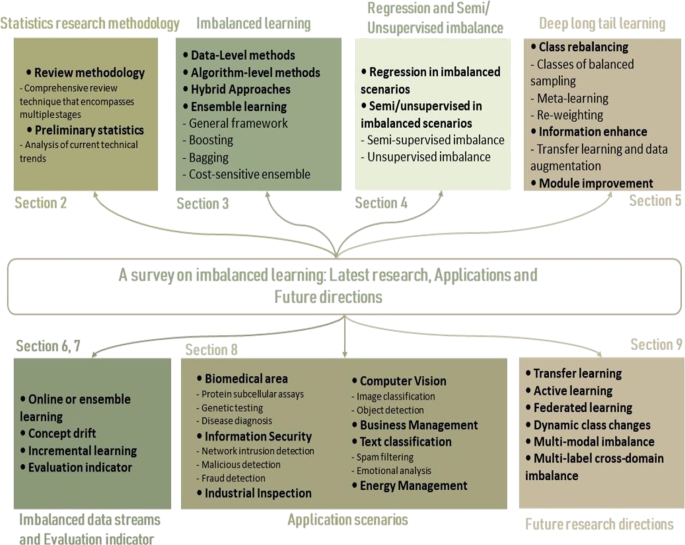

Table 1 summarizes the differences between recent reviews on imbalanced learning and the present investigation. Distinguishing this survey from previous works, we not only summarize recent solutions to the category imbalance problem in traditional machine learning but also address the long-tailed distribution issue in deep learning, which has gained prominence. Additionally, we extensively elaborate on the imbalance problem within the unsupervised or semi-supervised domain. Moreover, building upon current research, we not only encapsulate emerging solutions in imbalanced learning but also outline new challenges and future research directions with practical potential. The organisational framework of this paper is shown in Fig. 3.

We summarize the main contributions of the survey in this paper as follows:

- A unified and comprehensive review of imbalanced learning and deep imbalanced learning. This paper presents the first comprehensive review and summary of existing research outcomes in imbalanced learning and deep imbalanced learning. It systematically consolidates a wide range of methods and techniques, helping researchers develop a comprehensive understanding of the field.

- A comprehensive survey of long-tail learning and imbalanced machine learning applications. This paper surveys and summarizes the applications of long-tail learning and imbalanced machine learning over the past few years, encompassing diverse fields and real-world application scenarios. It gives scholars a deeper understanding of how imbalanced learning is used across fields and serves as a resource for further inquiry.

- Six new research challenges and directions. Drawing upon existing research findings, this paper identifies and proposes six novel research challenges and directions in imbalanced learning. These challenges and directions hold significant potential and research importance, and can contribute to the advancement of the field.

The remainder of this paper is organized as follows: Section 2 outlines the research methodology employed in this study and presents preliminary statistics on imbalanced learning. Section 3 reviews approaches to imbalanced data classification. Section 4 delves into the methodologies associated with imbalanced regression and clustering. Section 5 explores methods specific to long-tail learning. Section 6 discusses relevant research on imbalanced data streams. In Section 8, we categorize existing research on imbalanced learning applications into seven fields and provide an overview of the research in each field. Section 9 compiles and summarizes six future research directions and challenges in imbalanced learning based on the survey and current research trends. Finally, we conclude this paper with a concise summary.

2 Statistics research methodology

2.1 Review methodology

The statistical classification research method used in this paper draws upon the works of Kaur et al. (2019) and Haixiang et al. (2017). We use a thorough multi-stage review strategy to capture a wide range of methods and real-world application domains related to imbalanced learning.

In the initial stage, we focus on imbalanced, sampling, and skewed data to obtain preliminary results. In the second stage, we conduct a thorough search of the existing research literature. In the third stage, we employ a triple keyword search approach. First, we use data mining, machine learning, and classification as keywords to assess the research status of machine learning technologies. Next, we add long-tail distribution and neural network as keywords to examine the research status of deep long-tail learning. Finally, we employ keywords such as "detection," "abnormal," or "medical" to identify practical applications in the literature.

To ensure comprehensive coverage, we conducted searches across seven databases encompassing various domains within the natural and social sciences. These databases include IEEE Xplore, ScienceDirect, Springer, ACM Digital Library, Wiley Interscience, HPCsage, and Taylor & Francis Online.

2.2 Preliminary statistics

Figure 4 illustrates the search framework employed in this study. Initially, we applied the search terms "imbalance" or "unbalance" to filter the initial research works based on these keywords. Subsequently, we employed a trinomial tree search strategy to filter abstracts, conclusions, and full papers, leading to the identification of papers falling into three distinct directions. To ensure comprehensive research coverage, we incorporated the keywords "machine learning," "long-tail learning", and "applications" during the trinomial tree screening stage. Following this, we conducted manual screening to eliminate duplicates and obtain the final results, resulting in a total of 2287 papers. This systematic search process ensures an extensive exploration of the field of imbalanced learning, providing a robust foundation for this paper.

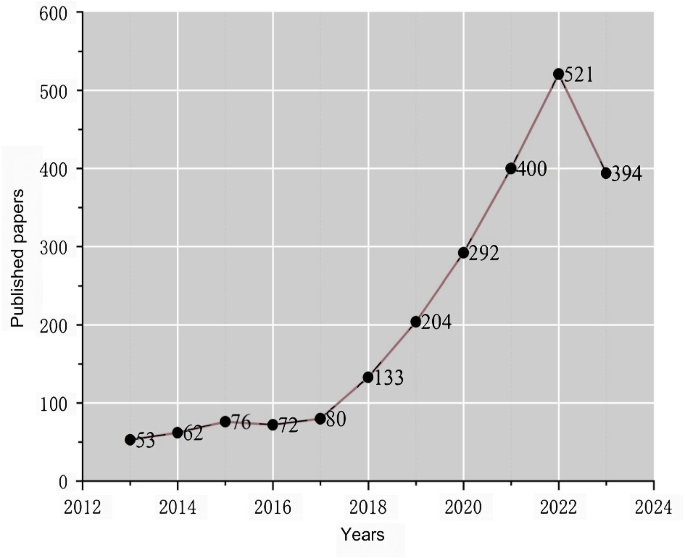

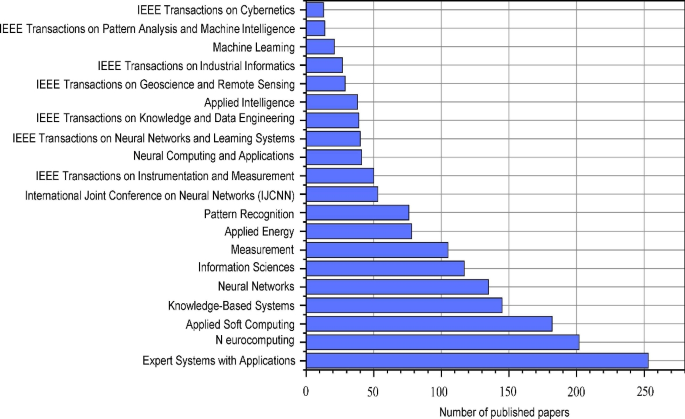

Figure 5 demonstrates the publication trend of papers in the field of imbalanced learning from January 2013 to July 2023. The number of published papers remained relatively stable until 2017, with a slight decline observed during the 2015-2016 period. However, there has been a remarkable surge in the number of published papers from 2018 onwards. This trend indicates the enduring significance of imbalanced learning as a research topic, with ongoing growth in research hotspots. Furthermore, we compiled a list of the top 20 journals or conferences contributing to paper publications. As depicted in Fig. 6, prominent publications are found in fields such as computer science, neural computing, management science, and energy applications.

3 Imbalanced data classification approaches

In this section, we first introduce data-level approaches in imbalanced data classification, then we give various solutions at the algorithmic level as well as hybrid approaches, and finally we introduce more robust ensemble learning methods.

3.1 Data-Level approaches

Data-level approaches to the problem of class imbalance involve techniques and methods that directly modify the training data. These approaches target the challenge of class imbalance by rebalancing the distribution of classes within the training dataset. This rebalancing can be achieved through undersampling, which involves reducing instances from the majority class, or oversampling, which involves increasing instances from the minority class. The objective is to create a more balanced dataset that can effectively train a binary classification model.
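The two basic rebalancing operations described above can be sketched in a few lines. The following is a minimal illustration, not a reference implementation: the function name `random_resample` and its `strategy` parameter are hypothetical, and the sketch assumes samples are stored as plain Python lists.

```python
import random
from collections import Counter


def random_resample(X, y, strategy="over", seed=0):
    """Rebalance (X, y) by random over- or undersampling.

    strategy="over":  replicate randomly chosen samples of the smaller
                      classes until every class matches the largest one.
    strategy="under": randomly drop samples of the larger classes until
                      every class matches the smallest one.
    """
    rng = random.Random(seed)
    by_class = {}
    for xi, yi in zip(X, y):
        by_class.setdefault(yi, []).append(xi)
    sizes = [len(rows) for rows in by_class.values()]
    target = max(sizes) if strategy == "over" else min(sizes)

    X_out, y_out = [], []
    for label, rows in by_class.items():
        if len(rows) >= target:
            # Enough samples: draw `target` of them without replacement.
            chosen = rng.sample(rows, target)
        else:
            # Too few samples: keep all and add random replicas.
            extra = [rng.choice(rows) for _ in range(target - len(rows))]
            chosen = rows + extra
        X_out.extend(chosen)
        y_out.extend([label] * target)
    return X_out, y_out


# Toy data: 8 majority samples (label 0) and 2 minority samples (label 1).
X = [[float(i)] for i in range(10)]
y = [0] * 8 + [1] * 2
_, y_over = random_resample(X, y, "over")
_, y_under = random_resample(X, y, "under")
print(Counter(y_over), Counter(y_under))
```

Random oversampling only duplicates existing minority points, and random undersampling may discard informative majority points, which is part of the motivation for the more selective methods reviewed next.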

The most widely used oversampling methods are random replication of minority samples and the synthetic minority over-sampling technique (SMOTE) (Chawla et al. 2002). However, SMOTE is prone to generating duplicate samples, ignores the sample distribution, may introduce false samples, is poorly suited to high-dimensional data, and is sensitive to noise. Many variants have therefore been proposed to improve on it. Borderline-SMOTE (Han et al. 2005) identifies and synthesizes samples near the decision boundary, ADASYN (He et al. 2008) adapts the generation of synthetic samples based on minority class density, and Safe-Level-SMOTE (Bunkhumpornpat et al. 2009) incorporates a safe-level algorithm to balance the class distribution while reducing misclassification risks. Other methods do not rely solely on synthetic sampling techniques. They explore different strategies for the preprocessing stage or the classification algorithm to suit the characteristics and needs of imbalanced datasets. Stefanowski and Wilk (2008) introduced a method for selective pre-processing of imbalanced data that combines local over-sampling of the minority class with filtering of difficult cases from the majority classes. To enhance the classification performance of SVMs on imbalanced datasets, Akbani et al. (2004) offered several techniques, such as altering class weights, using alternative kernel functions, and adjusting decision thresholds.
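To make the interpolation idea behind SMOTE concrete, the sketch below generates each synthetic point on the line segment between a minority sample and one of its k nearest minority neighbours. It is a simplified illustration under stated assumptions: the function name `smote` and its parameters are hypothetical, base samples are drawn at random rather than oversampled at a fixed rate, and at least two minority samples are assumed.

```python
import math
import random


def smote(minority, n_new, k=5, seed=0):
    """Create n_new synthetic minority points by SMOTE-style interpolation."""
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)  # cannot have more neighbours than peers
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest minority neighbours of `base`, excluding `base` itself.
        neighbours = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(p, base),
        )[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append(
            [b + gap * (n - b) for b, n in zip(base, neighbour)]
        )
    return synthetic


# Four minority points on the corners of the unit square.
minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
new_points = smote(minority, n_new=6, k=2)
print(len(new_points))
```

Because every synthetic point is a convex combination of two minority samples, it stays inside the region already spanned by the minority class. This is also why noisy or borderline minority samples can propagate into the synthetic set, which motivates the variants discussed in this subsection.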

It is worth noting that oversampling methods sometimes introduce redundant or noisy samples, so some oversampling methods combine noise processing with the sampling process to obtain a better balanced dataset. Sáez et al. (2015) introduced SMOTE-IPF, an extension of SMOTE that incorporates an iterative ensemble-based noise filter called IPF, addressing the challenges posed by noisy and borderline examples in imbalanced datasets. To handle class imbalance without generating noise, Douzas et al. (2018) introduced a straightforward and efficient oversampling methodology called k-means SMOTE, which combines k-means clustering with SMOTE. Sun et al. (2022) introduced a disjuncts-robust oversampling (DROS) method that addresses the negative oversampling results arising in the presence of small disjuncts by using light-cone structures to generate new synthetic samples in minority class areas; it is reported to outperform existing oversampling methods in handling class imbalance. Recent sampling methods also combine the regional distribution of the data with random walks based on stochastic mapping to synthesize new samples, or map the data to a higher-dimensional space to generate artificial data points (Sun et al. 2020; Zhang and Li 2014; Douzas and Bacao 2017; Han et al. 2023).

Undersampling is the practice of decreasing the number of examples in the majority class in order to obtain a more balanced class distribution. It seeks to match the number of instances in the majority class with the number in the minority class by randomly or purposefully deleting samples from the majority class. The most widely used undersampling methods are based on k-nearest neighbours (KNN) (Wilson 1972; Mani and Zhang 2003). Wilson (1972) studied the asymptotic properties of the nearest neighbor rule using edited data and discussed the influence of data editing on classification accuracy. Mani and Zhang (2003) proposed a KNN-based method for handling imbalanced data distributions, taking information extraction as a case study. Smith et al. (2014) examined instances that are often misclassified and the factors that contribute to their difficulty, in order to better understand machine learning data and to inform training procedures and algorithms.
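The editing idea attributed to Wilson (1972) can be adapted to undersampling as in the sketch below: a majority sample is removed when most of its k nearest neighbours carry a different label. This is an illustrative adaptation under our own assumptions (only the majority class is edited, Euclidean distance, brute-force neighbour search); the function name `edited_nearest_neighbours` is hypothetical.

```python
import math
from collections import Counter


def edited_nearest_neighbours(X, y, majority_label, k=3):
    """Drop majority samples whose k nearest neighbours mostly disagree.

    Minority samples are always kept.
    """
    keep_X, keep_y = [], []
    for i, (xi, yi) in enumerate(zip(X, y)):
        if yi == majority_label:
            # Indices of all other samples, nearest first.
            others = [j for j in range(len(X)) if j != i]
            others.sort(key=lambda j: math.dist(X[j], xi))
            votes = Counter(y[j] for j in others[:k])
            if votes.most_common(1)[0][0] != yi:
                continue  # neighbourhood disagrees: treat as noisy/borderline
        keep_X.append(xi)
        keep_y.append(yi)
    return keep_X, keep_y


# One majority point (5.05) sits inside the minority cluster around 5.
X = [[0.0], [0.1], [0.2], [0.3], [5.05], [5.0], [5.1], [5.2]]
y = [0, 0, 0, 0, 0, 1, 1, 1]
X_ed, y_ed = edited_nearest_neighbours(X, y, majority_label=0, k=3)
print(X_ed, y_ed)
```

In the toy data, only the majority point lying inside the minority cluster is removed, which cleans the region around the minority class without touching the bulk of the majority class.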

In recent years, some researchers have investigated variants of sampling methods that take into account density information, spatial information, and the intrinsic characteristics of the classes, and combine them with reinforcement learning, deep learning, and clustering algorithms for data enhancement. For example, Kang et al. (2017) introduced WU-SVM, an improved algorithm that uses a weighted undersampling scheme based on space geometry distance to assign different weights to majority samples in subregions, allowing better retention of the original data distribution information in each learning iteration. Yan et al. (2023) proposed Spatial Distribution-based UnderSampling (SDUS), an undersampling method for imbalanced learning that maintains the underlying distribution characteristics by using sphere neighborhoods and sample selection strategies from different perspectives. Fan et al. (2021) proposed a sample selection strategy based on deep reinforcement learning: a reward function is constructed and the model is trained to select more representative samples, optimizing the sample distribution and thereby improving diagnostic accuracy. Kang et al. (2016) introduced an under-sampling scheme that applies a noise filter before resampling, resulting in improved performance of four popular under-sampling methods for imbalanced classification. Other researchers have incorporated clustering algorithms into undersampling. To handle class imbalance, Lin et al. (2017) presented an undersampling strategy called cluster-based instance selection (CBIS), which combines clustering analysis with instance selection. Han et al. (2023) proposed a combination of global and local oversampling methods that distinguishes between minority and majority classes by comparing them to the discretisation values at each class level; instances are synthesised based on the degree of discrete magnitude.

The oversampling method offers several advantages in addressing imbalanced data, including increased representation of the minority class, retention of original information, improved classifier performance, and reduced bias towards the majority class. By artificially increasing the number of instances in the minority class, the dataset becomes more balanced for training, allowing classifiers to better learn the characteristics and patterns of the minority class. However, oversampling can also have drawbacks, such as the potential for overfitting, increased computational complexity, the introduction of noise into the dataset, and limited information gain. These disadvantages can impact the classifier’s generalization ability and performance on unseen data. When using the oversampling approach, it is necessary to take the specific oversampling methodology and the features of the dataset into account. A comparison of algorithmic studies of the latest relevant data levels in recent years is shown in Table 2.

Sampling methods have attracted researchers' interest mainly because (1) they work independently of the learning algorithm, (2) they lead to a balanced redistribution of data, and (3) they can be easily combined with any learning mechanism. Sampling methods can also be categorised by their complexity. Under-sampling typically has faster run times and higher robustness and is less prone to overfitting than oversampling.

3.2 Algorithm-level approaches

Algorithm-level approaches concentrate on adapting or developing machine learning algorithms to effectively handle imbalanced datasets. These techniques prioritize enhancing the algorithms’ capability to accurately classify instances from the minority class. By adjusting or designing algorithms to be more responsive to these underrepresented groups, these techniques contribute to improving the overall performance, fairness, and generalizability of machine learning models when confronted with imbalanced data. Among the most prevalent algorithm-level strategies is cost-sensitive learning, wherein the classification performance is enhanced by modifying the algorithm’s objective function. This alteration ensures that the model receives greater emphasis on learning from underrepresented classes (Castro and Braga 2013; Yang et al. 2021). Table 3 shows the advantages and disadvantages of the main algorithm-level approaches in recent years.

3.2.1 Cost-sensitive learning

Cost-sensitive learning takes into account the different costs associated with different types of misclassification and aims to optimise the model for scenarios where the consequences of errors are uneven. Recently, researchers have combined cost-sensitive learning with other techniques to obtain models with higher classification accuracy and better generalization. For example, Zhang and Hu (2014) proposed cost-free learning (CFL) as a method for achieving optimal classification outcomes without depending on cost information, even when class imbalance exists. The CFL approach maximizes the normalized mutual information between targets and decision outputs, enabling binary or multi-class classification with or without abstaining. To enhance the classification performance of support vector machines (SVMs) on imbalanced datasets, Cao et al. (2020) developed the ATEC approach. By assessing classification performance and adjusting the error cost in the proper direction, ATEC optimizes the error cost for between-class samples. Addressing both concept drift and class imbalance in streaming data, Lu et al. (2019) proposed an adaptive chunk-based dynamic weighted majority technique. Fan et al. (2017) introduced the entropy-based fuzzy support vector machine (EFSVM), which assigns fuzzy memberships to samples based on their class certainty, resulting in improved classification performance compared to other algorithms. Datta and Das (2015, 2019) proposed another idea in which a multi-objective optimisation framework (Datta et al. 2017) is used to train SVMs to efficiently find the best trade-off between objectives on class-imbalanced datasets. Cao et al. (2021) proposed an adaptive error cost adjustment method for class imbalance learning with SVMs, also called ATEC, which has a significant advantage over the traditional grid search strategy in terms of training time because it adjusts the error cost between samples efficiently and automatically. Such approaches can address imbalance problems without expensive parameter tuning.
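The effect of unequal misclassification costs can be illustrated with the standard minimum-expected-cost decision rule for a binary problem. Assuming correct decisions cost nothing, predicting the positive (minority) class is cheaper in expectation whenever p * cost_fn > (1 - p) * cost_fp, that is, whenever the estimated positive-class probability p exceeds cost_fp / (cost_fp + cost_fn). The sketch below applies this rule; the function names and the cost values are illustrative assumptions and are not taken from any of the methods cited above.

```python
def cost_sensitive_threshold(cost_fp, cost_fn):
    """Probability threshold that minimises expected misclassification cost.

    Assumes zero cost for correct decisions.
    """
    return cost_fp / (cost_fp + cost_fn)


def cost_sensitive_predict(prob_pos, cost_fp=1.0, cost_fn=1.0):
    """Label each sample 1 if its positive-class probability beats the threshold."""
    threshold = cost_sensitive_threshold(cost_fp, cost_fn)
    return [1 if p > threshold else 0 for p in prob_pos]


probs = [0.05, 0.2, 0.6]
# Equal costs reduce to the usual 0.5 threshold.
print(cost_sensitive_predict(probs))
# Missing a minority sample is assumed 9 times as costly as a false alarm.
print(cost_sensitive_predict(probs, cost_fp=1.0, cost_fn=9.0))
```

Raising the cost of a false negative lowers the threshold, so more borderline samples are assigned to the minority class. Methods that adapt the error cost during training pursue the same trade-off inside the objective function rather than at decision time.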

3.2.2 Weighted shallow neural networks

Shallow neural networks combined with imbalanced learning algorithms have been developed extensively for imbalanced data classification problems. Because of their shallow structure, these networks train quickly, which benefits applications that must process imbalanced datasets rapidly and return results immediately. For example, to identify and categorize power quality disturbances accurately and efficiently, Sahani and Dash (2019) proposed an FPGA-based online power quality disturbance monitoring system that makes use of the reduced-sample Hilbert-Huang Transform (HHT) and a class-specific weighted Random Vector Functional Link Network (RVFLN). Choudhary and Shukla (2021) proposed an integrated ELM method that decomposes complex imbalance problems into simpler subproblems to address class bias in classification tasks. The technique addresses factors such as class overlap and the number of probability distributions present in the imbalanced classification problem by using cost-sensitive classifiers and cluster assessment. Chen et al. (2022b) proposed the double-kernelized weighted broad learning system (DKWBLS), which addresses the challenges of class imbalance and parameter tuning in broad learning systems (BLS). By using a double-kernel mapping strategy, DKWBLS generates robust features without the need to adjust the number of nodes. Additionally, DKWBLS explicitly considers imbalance problems and achieves improved decision boundaries. Chen et al. (2022a) proposed a double kernel-based class-specific broad learning system (DKCSBLS) to solve the multiclass imbalanced learning problem. DKCSBLS adaptively combines the class distribution with class-specific penalty coefficients and uses a dual kernel mapping mechanism to extract more robust features. Yang et al. (2021) address the imbalance problem in BLS by proposing a weighted BLS and an adaptive weighted BLS (AWBLS) that consider the prior distribution of the data. They additionally propose an incremental weighted ensemble broad learning system (IWEB) to enhance the stability and robustness of AWBLS.
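A common ingredient of weighted shallow networks is a per-sample weight on the training error that grows as the sample's class becomes rarer. The sketch below uses the inverse-frequency heuristic n / (K * n_c), where n is the number of samples, K the number of classes, and n_c the size of the sample's class. This is one simple choice assumed for illustration; the function names are hypothetical, and the weighting schemes of the methods cited above differ in their details.

```python
from collections import Counter


def balanced_sample_weights(y):
    """Weight each sample by n / (K * n_c), so every class has equal total weight."""
    counts = Counter(y)
    n, n_classes = len(y), len(counts)
    return [n / (n_classes * counts[label]) for label in y]


def weighted_squared_error(y_true, y_pred, weights):
    """Weighted mean of squared errors; rare-class errors count for more."""
    total = sum(w * (t - p) ** 2 for w, t, p in zip(weights, y_true, y_pred))
    return total / sum(weights)


y = [0] * 8 + [1] * 2
weights = balanced_sample_weights(y)
always_majority = [0] * 10  # a trivial model that ignores the minority class
plain = sum((t - p) ** 2 for t, p in zip(y, always_majority)) / len(y)
weighted = weighted_squared_error(y, always_majority, weights)
print(plain, weighted)
```

With these weights, a model that always predicts the majority class is penalised as if the two classes were equally frequent, which removes the incentive to ignore the minority class.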

Algorithm-level approaches optimize the loss function associated with the dataset, concentrating on the minority classes to enhance model performance. In contrast to resampling-based methods, these techniques are more computationally efficient and better suited to large data streams. Consequently, when both runtime and the ability to improve AUC and G-mean in imbalanced scenarios are considered, algorithm-level methods may be preferred. Furthermore, they offer flexibility in selecting activation functions and optimization algorithms tailored to specific problem requirements.
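G-mean, mentioned above, is the geometric mean of sensitivity (recall on the positive class) and specificity (recall on the negative class), so it drops to zero whenever one class is ignored entirely. The following minimal sketch computes it for a binary problem; the function name `g_mean` and the toy labels are illustrative.

```python
import math


def g_mean(y_true, y_pred, positive=1):
    """Geometric mean of sensitivity and specificity for binary labels."""
    tp = fn = tn = fp = 0
    for t, p in zip(y_true, y_pred):
        if t == positive:
            if p == positive:
                tp += 1
            else:
                fn += 1
        else:
            if p == positive:
                fp += 1
            else:
                tn += 1
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    return math.sqrt(sensitivity * specificity)


y_true = [0] * 8 + [1] * 2
always_majority = [0] * 10
accuracy = sum(t == p for t, p in zip(y_true, always_majority)) / len(y_true)
print(accuracy, g_mean(y_true, always_majority))
```

On this toy example, the trivial majority-class predictor reaches 80% accuracy but a G-mean of zero, which is why imbalance-aware metrics are preferred when comparing methods.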

These approaches aim to address imbalanced classification problems by adapting existing algorithms or creating new ones specifically designed for imbalanced datasets, with an emphasis on the minority classes. They are easy to implement because they do not require modifications to the dataset or feature space, and they are applicable across a wide range of classification algorithms, potentially enhancing classifier performance on imbalanced datasets. However, they may not fully capture the complexity of imbalanced datasets and can be sensitive to hyperparameter selection. Additionally, they do not explicitly tackle data scarcity in minority classes, and their effectiveness may vary with the characteristics of the dataset.

3.3 Hybrid approaches

To address imbalanced learning, hybrid methods incorporate techniques from both the data-level and the algorithm-level. In an effort to improve performance, these techniques try to combine the advantages of both strategies. Table 4 shows the advantages and disadvantages of the main hybrid methods in recent years.

A common hybrid approach is to combine sampling methods with ensemble learning (Sağlam and Cengiz 2022; Abedin et al. 2022; Razavi-Far et al. 2019; Sun et al. 2018; Liang et al. 2022). For example, Sağlam and Cengiz (2022) proposed a method for the class imbalance problem in classification called SMOTEWB (SMOTE with boosting), which combines a new noise detection method with SMOTE. By combining noise detection and augmentation in an ensemble algorithm, SMOTEWB overcomes the challenges associated with random oversampling (ROS) and SMOTE by adjusting the number of neighbours per observation in the SMOTE algorithm. Abedin et al. (2022) presented WSMOTE-ensemble, an ensemble technique for small company credit risk assessment that addresses the issue of severely imbalanced default and nondefault classes. To build robust and varied synthetic examples, the technique combines WSMOTE and Bagging with sampling composite mixes, thereby eliminating class-skewed limitations. Razavi-Far et al. (2019) presented class-imbalanced learning algorithms that combine oversampling methods with bagging and boosting ensembles, with a particular emphasis on two oversampling strategies based on single and multiple imputation methods. To rebalance the datasets for training ensemble algorithms, these strategies use missing-value estimation to generate synthetic minority class samples that are similar to the actual minority class samples. For the evaluation of imbalanced business credit, Sun et al. (2018) developed the DTE-SBD decision tree ensemble model, which combines SMOTE with the Bagging ensemble learning algorithm using differential sampling rates.

Another approach combines sampling methods with multiple optimisation algorithms or adaptive domain methods. Pan et al. (2020) proposed the Gaussian oversampling and Adaptive SMOTE methods. Adaptive SMOTE takes the Inner and Danger data from the minority class and combines them to form a new minority class that improves the distributional features of the original data. Gaussian oversampling is a technique that combines dimensionality reduction with a Gaussian distribution to make the distribution's tails narrower. Dixit and Mani (2023) proposed an oversampling filter-based method called SMOTE-TLNN-DEPSO, a hybrid method that combines SMOTE for synthetic sample generation with the Differential Evolution-based Particle Swarm Optimisation (DEPSO) algorithm for iterative attribute optimisation. Yang et al. (2019) proposed a hybrid optimum ensemble classifier framework that combines density-based under-sampling and cost-effective techniques to overcome the drawbacks of traditional imbalanced learning algorithms.

To overcome the cost of parameter optimisation in traditional hybrid methods, Mullick et al. (2018, 2019) combined neural networks and heuristic algorithms with sampling methods or traditional classifiers. For example, the performance of the k-nearest neighbour classifier on imbalanced datasets was improved by adjusting the value of k using neural networks or heuristic learning algorithms. Another example is a three-way adversarial strategy that combines a convex generator, a multi-class classifier network, and a discriminator to perform oversampling in deep learning systems.

Hybrid methods can integrate various imbalanced learning techniques, such as combining clustering algorithms with sampling methods and coupling them with cost-sensitive algorithms to enhance model performance. However, this integration can increase model complexity and require more parameter tuning. Therefore, when selecting hybrid methods to address imbalanced learning, it is essential to consider their runtime and model complexity.

3.4 Ensemble learning methods

Ensemble learning is a methodology employed for the classification of imbalanced data, which involves amalgamating multiple classifiers or models to enhance the performance of classification tasks on datasets characterized by imbalanced class distributions. Its primary objective is to tackle the challenges presented by imbalanced class distributions by capitalizing on the strengths of different classifiers, thereby enhancing the predictive accuracy for the minority classes (Yang et al. 2021). Ensemble methods for imbalanced data classification typically encompass the creation of diverse subsets from the imbalanced dataset through resampling techniques. Individual classifiers are then trained on these subsets, and their predictions are combined using voting or weighted averaging schemes. Ensemble learning efficiently reduces the bias towards the majority class and enhances overall classification performance on imbalanced data sets by integrating different models.

Over the past few years, many scholars have summarised the progress, challenges and potential solutions of ensemble learning for imbalanced data classification. These surveys categorise and discuss mainstream methods, clarify open challenges, propose research directions, and explore the possibility of combining ensemble learning with other machine learning techniques (Dong et al. 2020; Yang et al. 2023; Galar et al. 2012). For example, Galar et al. (2012) explored the challenges that imbalanced datasets pose for classifier learning. They reviewed ensemble learning methods for imbalanced classification, proposed a taxonomy, and made empirical comparisons. They demonstrated that random undersampling combined with either bagging or boosting ensembles works well, emphasizing the superiority of ensemble-based algorithms over stand-alone preprocessing methods. Table 5 summarizes the advantages and disadvantages of some main ensemble learning algorithms over the last three years.

3.4.1 General framework

A general ensemble framework is a broadly applicable approach to imbalanced learning that is robust across multiple datasets. Its goal is to use the diversity and complementary qualities of various models to improve the ensemble’s overall performance and resilience. This approach aims to overcome the limitations of a single model by aggregating the models’ predictions through techniques such as voting, averaging or weighted combination. Liu et al. (2020) proposed generating strong ensembles for imbalanced classification by self-paced harmonisation of data hardness through undersampling, to cope with the challenges posed by imbalanced and low-quality datasets. This computationally efficient method takes into account class disproportionality, noise and class overlap to achieve robust performance even in highly skewed distributions and overlapping classes, while being applicable to a variety of existing learning methods. Liu et al. (2020) also presented a novel ensemble imbalanced learning framework called MESA, which adaptively resamples the training set to create multiple classifiers and form a cascade ensemble model. MESA learns the sampling strategy directly from the data, going beyond heuristic assumptions to optimize the final metric. Liu et al. (2009) proposed two undersampling-based algorithms, EasyEnsemble and BalanceCascade, to address the class imbalance problem. EasyEnsemble combines the outputs of multiple learners, each trained on a different subset of the majority class, while BalanceCascade sequentially removes the majority class examples that are correctly classified in each step.
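The EasyEnsemble idea described above can be illustrated with a minimal sketch. It is not the implementation of Liu et al. (2009): the base learner here is a hypothetical one-dimensional threshold rule (`fit_stump`) rather than AdaBoost, the minority class is assumed to carry label 1, and all function names are illustrative.

```python
import random


def fit_stump(xs, ys):
    """Hypothetical base learner: pick the threshold t that maximises
    accuracy of the rule 'predict 1 if x >= t' on 1-D inputs."""
    best_t, best_acc = None, -1.0
    for t in sorted(set(xs)):
        acc = sum((x >= t) == bool(y) for x, y in zip(xs, ys)) / len(xs)
        if acc > best_acc:
            best_t, best_acc = t, acc
    return best_t


def easy_ensemble_fit(xs, ys, n_subsets=5, seed=0):
    """Train one learner per balanced subset: all minority samples plus an
    equally sized random draw (without replacement) from the majority."""
    rng = random.Random(seed)
    minority = [(x, y) for x, y in zip(xs, ys) if y == 1]
    majority = [(x, y) for x, y in zip(xs, ys) if y == 0]
    thresholds = []
    for _ in range(n_subsets):
        subset = minority + rng.sample(majority, len(minority))
        sub_x, sub_y = zip(*subset)
        thresholds.append(fit_stump(sub_x, sub_y))
    return thresholds


def easy_ensemble_predict(thresholds, x):
    """Combine the learners by simple majority voting."""
    votes = sum(x >= t for t in thresholds)
    return int(2 * votes > len(thresholds))
```

Because every subset contains all minority samples, each learner sees a balanced problem, while the ensemble as a whole still uses a large part of the majority class.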

The general framework of ensemble learning offers several advantages in addressing the classification of imbalanced data. Firstly, it provides a flexible and adaptable approach that can incorporate various ensemble methods, such as bagging, boosting, or cost-sensitive techniques, depending on the specific characteristics of the imbalanced dataset. This versatility allows for customization and optimization based on the specific problem at hand. Additionally, ensemble learning can effectively leverage the diversity of multiple classifiers to improve the overall classification performance and handle the challenges posed by imbalanced data, such as class overlap or rare class identification. It can also provide robustness against noise or outliers in the data.

However, there are also noteworthy potential disadvantages to consider when employing ensemble learning approaches. Firstly, such approaches may necessitate additional computational resources and increased training time in comparison to single classifier methods. This is due to the requirement of training and combining multiple models within the ensemble. Secondly, the performance of ensemble learning is highly dependent on several factors, including the selection of appropriate base classifiers, ensuring their diversity, and employing an effective ensemble combination strategy. These factors demand careful consideration and tuning to achieve optimal results. Furthermore, the adaptability of ensemble learning to specific domains is limited. While generic framework models may perform well in certain datasets or domains, their efficacy may be compromised in others. As a result, precise tweaking and optimization of the ensemble models may be necessary to achieve high performance in a variety of settings.

3.4.2 Boosting

Boosting is an ensemble learning technique that iteratively combines multiple weak classifiers to create a strong ensemble model. The most widely used variants are AdaBoost (Freund and Schapire 1997), SMOTEBoost (Chawla et al. 2003) and RUSBoost (Seiffert et al. 2009). Every weak classifier is trained on a subset of the data, prioritizing the samples that were misclassified by the preceding models. This iterative process aims to improve the overall performance of the ensemble by focusing on challenging instances during training. The final prediction is obtained by aggregating the predictions of all weak learners, typically using weighted voting or averaging. Boosting effectively improves the overall performance and generalization of the ensemble by emphasizing the challenging instances and reducing the bias in the learning process.
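The reweighting step that lets boosting focus on misclassified samples can be made concrete with a short sketch of one round of discrete AdaBoost for labels in {-1, +1}. The function name and interface are illustrative assumptions; variants such as SMOTEBoost and RUSBoost add a resampling step around this update that is not shown here.

```python
import math


def adaboost_round(weights, y_true, y_pred):
    """Perform one discrete AdaBoost update for labels in {-1, +1}.

    Returns the classifier weight alpha and the renormalised sample weights.
    """
    total = sum(weights)
    err = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p) / total
    if not 0.0 < err < 0.5:
        raise ValueError("weak learner must have weighted error in (0, 0.5)")
    alpha = 0.5 * math.log((1.0 - err) / err)
    # Correct predictions (t * p = +1) shrink, mistakes (t * p = -1) grow.
    unnormalised = [
        w * math.exp(-alpha * t * p)
        for w, t, p in zip(weights, y_true, y_pred)
    ]
    z = sum(unnormalised)
    return alpha, [u / z for u in unnormalised]
```

After the update, the misclassified samples jointly hold half of the total weight, which is why the next weak learner is pushed towards the hard (often minority) instances.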

In the past several years, researchers have devoted considerable attention to boosting techniques for the class imbalance problem, aiming to improve the classification accuracy of the minority class and the learning performance under class imbalance. For example, Kim et al. (2015) proposed GMBoost, which deals with data imbalance based on geometric means. GMBoost accounts for the learning of both majority and minority classes by using the geometric mean of the two classes in its error rate and accuracy calculations. Razavi-Far et al. (2021) proposed a new class imbalanced learning technique that combines oversampling methods with bagging and boosting ensembles. They propose two strategies, based on single and multiple imputation, that create synthetic minority class samples by estimating missing values in the original minority class to improve classification performance on imbalanced data. Wang et al. (2020) proposed the ECUBoost framework, which combines a boosting-based ensemble with a novel entropy- and confidence-based undersampling method that maintains the validity and structural distribution of the majority samples during undersampling. More recently, Datta et al. (2020) proposed a new boosting method that achieves a trade-off between majority and minority classes without expensive search costs. The method treats the weight assignment of component classifiers as a tug-of-war between classes in the margin space and avoids costly cost-set tuning by implementing efficient class compromises in a two-stage linear programming framework.
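To make the role of the geometric mean concrete, the following sketch computes the G-mean of sensitivity and specificity for a binary problem. It illustrates only the metric, not the GMBoost algorithm itself; the function name and the choice of 1 as the positive (minority) label are assumptions.

```python
import math


def g_mean(y_true, y_pred, positive=1):
    """Geometric mean of sensitivity (minority recall) and specificity."""
    tp = fn = tn = fp = 0
    for t, p in zip(y_true, y_pred):
        if t == positive:
            if p == positive:
                tp += 1
            else:
                fn += 1
        else:
            if p == positive:
                fp += 1
            else:
                tn += 1
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("both classes must be present in y_true")
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return math.sqrt(sensitivity * specificity)
```

A classifier that predicts only the majority class on a 95:5 dataset reaches 95% accuracy but a G-mean of zero, which is why such metrics are preferred when both classes matter.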

In summary, boosting has the following advantages for the classification of imbalanced data. (1) It can effectively deal with class imbalance by focusing on minority classes and assigning higher weights to misclassified instances, thereby improving overall classification performance. (2) It can combine multiple weak classifiers into a strong ensemble, resulting in better generalisation and robustness. (3) It can adaptively adjust the weights of classifiers during boosting iterations, emphasising difficult instances and reducing bias towards majority classes.

However, it is important to acknowledge the limitations of boosting. One notable concern is its relatively high computational cost, particularly on large-scale datasets. Boosting is a serial, iterative strategy: each weak classifier is trained only after the previous one has updated the model, which can significantly increase training time and resource requirements. Furthermore, boosting algorithms are sensitive to noisy or mislabeled data, which can have a detrimental impact on their performance. Runtime and label quality therefore need to be considered, and appropriate mitigation measures taken, when applying boosting in practical settings.

3.4.3 Bagging

Bagging (Breiman 1996) is an ensemble learning technique that creates multiple subsets of the original dataset by random sampling with replacement. Each subset is used to train a separate base learner, such as a decision tree, using the same learning algorithm. The predictions from all the base learners are then combined by majority voting (for classification) or averaging (for regression) to make the final prediction. By averaging multiple independently trained models, bagging helps to reduce the variance of predictions, thereby improving generalisation and robustness (Wang and Yao 2009).
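The two ingredients of bagging, bootstrap sampling and majority voting, can be sketched in a few lines. The base learner below (`fit_midpoint_classifier`) is a hypothetical stand-in for a decision tree: it assumes one-dimensional inputs and that class 1 tends to take larger values than class 0.

```python
import random
from collections import Counter


def bootstrap_sample(data, rng):
    """Draw len(data) items from data uniformly at random, with replacement."""
    return [rng.choice(data) for _ in data]


def fit_midpoint_classifier(sample):
    """Hypothetical base learner: threshold at the midpoint of class means."""
    xs0 = [x for x, y in sample if y == 0]
    xs1 = [x for x, y in sample if y == 1]
    if not xs0 or not xs1:
        # A bootstrap sample may miss a class; fall back to a constant rule.
        constant = 1 if xs1 else 0
        return lambda x: constant
    midpoint = (sum(xs0) / len(xs0) + sum(xs1) / len(xs1)) / 2
    return lambda x: int(x >= midpoint)


def bagging_fit(data, fit, n_estimators=10, seed=0):
    """Train one base learner per bootstrap sample."""
    rng = random.Random(seed)
    return [fit(bootstrap_sample(data, rng)) for _ in range(n_estimators)]


def bagging_predict(models, x):
    """Combine the base learners by majority voting."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]
```

For imbalanced data, the bootstrap step is typically replaced by a class-aware sampler (for example, undersampling the majority class in each bag), which is the common thread of the bagging variants discussed in this section.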

Researchers have recently concentrated on applying bagging ensemble learning techniques to class imbalance. For example, Bader-El-Den et al. (2018) proposed a novel ensemble-based approach, called biased random forest, to address the class imbalance problem in machine learning. Rather than oversampling the minority class in the dataset, the technique increases the number of classifiers representing the minority class in the ensemble by identifying critical areas with the nearest neighbor algorithm and generating additional random trees for them. Błaszczyński and Stefanowski (2015) investigated extensions of bagging ensembles for imbalanced data, compared under-sampling and over-sampling approaches, and proposed Neighbourhood Balanced Bagging, a new method that considers the local characteristics of the minority class distribution. Guo et al. (2022) proposed a dual evolutionary bagging framework that combines resampling techniques and ensemble learning to solve the class imbalance problem. The framework aims to find the most compact and accurate ensemble structure by integrating different base classifiers. After selecting the best base classifiers and using an inner ensemble model to enhance diversity, a multimodal genetic algorithm finds the optimal combination based on the mean G-mean.

In summary, bagging offers several advantages for the classification of imbalanced data. Firstly, random sampling can yield more balanced training subsets and thus improve classification performance. In addition, bagging reduces the variance of the model by creating multiple subsets of the original data and training multiple base classifiers independently, which helps to reduce overfitting and improve generalization. Finally, bagging is simple to implement and can be combined with a variety of base classifiers. It requires neither adjusting a weight update formula nor changing the amount of computation in the algorithm, and it achieves good generalization with a simple structure. Therefore, when using ensemble learning to deal with imbalance problems, bagging may be the better choice if low model complexity, short running time and robustness are important.

Although bagging can improve overall classification performance, it may still struggle to accurately classify minority classes if they are severely under-represented. In addition, bagging may not be effective on datasets with overlapping classes or complex decision boundaries. It is also worth noting that the performance of bagging relies heavily on the choice of the base classifier and the quality of the individual models in the ensemble.

3.4.4 Cost-sensitive ensemble

Cost-sensitive ensemble learning takes into account the imbalance costs associated with different classes and aims to optimise the overall cost of misclassification. Such methods take into account cost factors in the decision making process. By assigning appropriate weights to adjust the decision thresholds of individual classifiers, cost-sensitive ensemble techniques aim to minimise the overall cost of misclassification and to improve the performance of specific classes that bear higher costs.
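The threshold adjustment described above can be sketched as follows. For a binary problem with false-positive cost `cost_fp` and false-negative cost `cost_fn` (and zero cost for correct decisions, an assumption of this sketch), predicting the positive class minimises expected cost whenever the estimated positive probability exceeds `cost_fp / (cost_fp + cost_fn)`. The function names and the weighted-average combiner are illustrative and do not correspond to any specific method cited in this section.

```python
def cost_threshold(cost_fp, cost_fn):
    """Probability threshold above which predicting positive is cheaper."""
    return cost_fp / (cost_fp + cost_fn)


def cost_sensitive_vote(probabilities, weights, cost_fp, cost_fn):
    """Weighted average of the members' positive-class probabilities,
    thresholded at the cost-derived decision threshold."""
    total = sum(weights)
    avg = sum(w * p for w, p in zip(weights, probabilities)) / total
    return int(avg >= cost_threshold(cost_fp, cost_fn))
```

When a missed minority instance is nine times as costly as a false alarm, the threshold drops from 0.5 to 0.1, so an ensemble that is only mildly suspicious of an instance still flags it.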

Presently, an increasing number of scholars are focusing on cost-sensitive ensemble learning. The most widely used method is AdaCost (Fan et al. 1999). AdaCost is a misclassification cost-sensitive boosting method that updates the training distribution based on misclassification costs, aiming to reduce cumulative misclassification costs more effectively than AdaBoost; empirical evaluations demonstrate significant cost reductions without additional computational overhead. Many scholars have since examined cost-sensitive ensembles from various angles. By employing a cascade of simple classifiers trained with a variant of AdaBoost, Viola and Jones (2001) demonstrated a method for fast detection in domains with highly skewed distributions, such as face detection or database retrieval. The approach significantly outperforms traditional AdaBoost in face detection tasks thanks to its high detection rates, extremely low false positive rates, and speed. Ng et al. (2018) introduced a new incremental ensemble learning method that addresses concept drift and class imbalance in a streaming data environment; class imbalance is handled by an imbalance inversion bagging method, and the approach is applied to predicting Australia’s electricity price. Akila and Reddy (2018) developed a cost-sensitive Risk Induced Bayesian Inference Bagging model, RIBIB, for detecting credit card fraud. RIBIB uses a novel bagging architecture that includes a constrained bag creation method, a Risk Induced Bayesian Inference base learner, and a cost-sensitive weighted voting combiner. Zhang et al. (2022) proposed an integrated framework, BPUL-BCSLR, for data-driven mineral prospectivity mapping (MPM) that addresses the challenges of imbalanced geoscience datasets and cost-sensitive classification. The approach integrates Bagging-based positive-unlabeled learning (BPUL) with Bayesian cost-sensitive logistic regression (BCSLR) and was applied to MPM in the Wulong Au district, China.

Cost-sensitive ensemble learning is valuable for imbalanced datasets. It accounts for misclassification costs, which is vital in real-world scenarios where errors on rare classes are expensive. By integrating cost factors, it improves decision-making and resource allocation. These methods balance error types and enhance sensitivity to minority classes, thus improving overall classification and the representation of the class distribution. Despite these advantages, cost-sensitive ensemble learning is challenging to implement. Estimating error costs demands prior knowledge or expert input, which introduces subjectivity. Selecting an effective ensemble combination strategy that considers cost factors can be complex, and it is not easy to optimize cost-based objectives while maintaining diversity. As a result, cost-sensitive ensemble approaches are often affected by bias in the data distribution, which may yield comparatively good performance but poor robustness.

4 Regression and Semi/unsupervised learning in imbalanced data

In this section, we first describe solutions to the regression problem in imbalanced scenarios. We then present solution ideas for semi-supervised and unsupervised learning under imbalance.

4.1 Regression in imbalanced scenarios

There is a notable gap in systematically exploring the imbalanced perspective of machine learning algorithms in the context of the regression problem. The regression problem in an unbalanced scenario arises when predicting continuous values, where the target variables in the dataset exhibit an imbalanced distribution. Traditional regression tasks aim to make accurate predictions for all target values. However, in imbalanced scenarios, certain target values have significantly fewer instances, resulting in skewed datasets (Krawczyk 2016; Rezvani and Wang 2023; Yang et al. 2021). This presents a challenge for regression models as the limited availability of training data may hinder their ability to accurately predict these infrequent target values.

The majority of the research on the imbalanced regression problem has focused on developing assessment metrics (Torgo and Ribeiro 2009) that consider how important individual observations are, as well as resampling techniques and techniques for handling outliers in continuous output prediction. For example, Branco et al. (2017) proposed SMOGN, a novel pre-processing technique tailored to imbalanced regression tasks. SMOGN addresses the performance degradation observed on rare and relevant cases in imbalanced domains. Branco et al. (2019) proposed three new methods to address the problems posed by imbalanced distributions in regression tasks: an adaptation of random oversampling, the introduction of Gaussian noise, and a new method called WERCS (WEighted Relevance-based Combination Strategy). To solve the problem of class imbalance in ordinal regression, Zhu et al. (2019) developed SMOR (Synthetic Minority oversampling for imbalanced Ordinal Regression). SMOR takes the order of the classes into account and gives low selection weights to candidate generation directions that could distort the structure of the ordinal samples.
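The idea of oversampling rare regression cases with added Gaussian noise can be sketched as follows. This is a simplified illustration, not the procedure of Branco et al.: rarity is decided by a user-supplied predicate `is_rare` instead of a relevance function, and a single absolute `noise_scale` is applied to all features and to the target. All names are assumptions.

```python
import random


def oversample_with_noise(samples, is_rare, n_copies=2, noise_scale=0.05, seed=0):
    """Append n_copies jittered replicas of every rare (x, y) pair.

    samples: list of (features, target) pairs, features being a list of floats.
    is_rare: predicate on the target value marking under-represented cases.
    """
    rng = random.Random(seed)
    augmented = list(samples)
    for features, target in samples:
        if not is_rare(target):
            continue
        for _ in range(n_copies):
            noisy_x = [v + rng.gauss(0.0, noise_scale) for v in features]
            noisy_y = target + rng.gauss(0.0, noise_scale)
            augmented.append((noisy_x, noisy_y))
    return augmented
```

In practice the noise scale would be chosen relative to the spread of each feature, and the definition of "rare" is the main modelling decision.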

In recent years, a number of researchers have addressed the imbalanced regression problem at the algorithmic level and at the ensemble learning level. For example, Branco et al. (2018) introduced the REsampled BAGGing (REBAGG) algorithm, an ensemble method designed to address imbalanced domains in regression tasks. REBAGG incorporates data pre-processing strategies and utilizes a bagging-based approach. Gutiérrez-Tobal et al. (2021) proposed a least-squares boosting (LSBoost) model that predicts the apnea-hypopnea index (AHI) from nocturnal pulse oximetry in order to diagnose obstructive sleep apnea. The model achieves high diagnostic performance in both community-based non-referral and clinical referral cohorts, demonstrating its ability to generalize. Kim et al. (2019) introduced Ensemble Learning Regression (ELQ), a novel method for predicting river discharge (Q) from hydraulic variables derived from remotely sensed data. The ELQ method combines multiple functions to reduce errors and outperforms traditional single-rating curve methods. Liu et al. (2022) proposed an ensemble learning assisted method for accurately predicting fuel properties based on their molecular structures. After comparing two descriptors, COMES and CM, an optimized stacking of various base learners is used to efficiently screen potential high energy density fuels (HEDFs) and accurately predict their properties. Steininger et al. (2021) proposed DenseWeight, a sample weighting approach based on kernel density estimation, and DenseLoss, a cost-sensitive learning approach for neural network regression. DenseLoss adjusts the influence of each data point on the loss function according to its rarity, leading to improved model performance for rare data points. Ren et al. (2022) proposed a novel loss function specifically designed for the imbalanced regression task, targeting data imbalance in real-world visual regression. They offer multiple implementations of the balanced MSE, including one that does not require prior knowledge of the training label distribution.
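Density-based sample weighting of the kind described for DenseWeight and DenseLoss can be sketched as follows. The sketch follows the general recipe (estimate the target density with a kernel density estimate, then give rare targets larger weights) but should not be read as the exact formulation of Steininger et al. (2021); the parameters `alpha`, `bandwidth` and `eps` and all function names are assumptions.

```python
import math


def gaussian_kde(targets, bandwidth):
    """Return a Gaussian kernel density estimate of the target distribution."""
    norm = 1.0 / (len(targets) * bandwidth * math.sqrt(2.0 * math.pi))

    def density(y):
        return norm * sum(
            math.exp(-0.5 * ((y - t) / bandwidth) ** 2) for t in targets
        )

    return density


def density_weights(targets, alpha=1.0, bandwidth=1.0, eps=1e-6):
    """Weight each sample by 1 - alpha * (min-max normalised density),
    clipped below at eps and rescaled to have mean 1."""
    density = gaussian_kde(targets, bandwidth)
    dens = [density(y) for y in targets]
    lo, hi = min(dens), max(dens)
    span = (hi - lo) or 1.0  # avoid division by zero for constant density
    raw = [max(1.0 - alpha * (d - lo) / span, eps) for d in dens]
    mean_raw = sum(raw) / len(raw)
    return [r / mean_raw for r in raw]


def weighted_mse(y_true, y_pred, weights):
    """Mean squared error with one weight per sample."""
    return sum(
        w * (t - p) ** 2 for w, t, p in zip(weights, y_true, y_pred)
    ) / len(y_true)
```

With `alpha=0` every sample receives weight 1 and the loss reduces to the ordinary MSE; larger `alpha` shifts the loss towards rare target values.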

Many solutions developed for imbalanced classification can be extended to regression, but they do not yet adequately address the challenges specific to imbalanced regression. Ensemble learning methods hold promise for enhancing the robustness and predictive power of regression models on imbalanced data. By combining the predictions of multiple regression models, it becomes possible to mitigate errors and biases associated with infrequent target values, thereby improving overall prediction performance. The advantage lies in effectively leveraging the collective strengths of multiple models. However, the diversity of the ensemble members must be controlled carefully to prevent overfitting.

4.2 Semi/unsupervised learning in imbalanced scenarios

Semi/unsupervised learning in imbalanced scenarios involves training machine learning models when labeled data is scarce or imbalanced across classes. Semi-supervised approaches leverage both labeled and unlabeled data to improve model performance, addressing the challenges posed by class imbalance. On the unsupervised side, the main concern is imbalanced clustering, where some clusters contain far more points than others. Traditional clustering methods may have difficulty accurately identifying and representing the minority clusters, leading to poor clustering and loss of information about those clusters. In semi-supervised imbalanced learning (Wei et al. 2021; Yang and Xu 2020), the challenge is not only the lack of sufficient labelled samples, but also that the distribution of these labelled samples exhibits class imbalance.

Semi-supervised imbalanced learning (SSIL) is a key problem in dealing with imbalanced data when labelled samples are scarce. For example, Wei et al. (2021) proposed Class-Rebalancing Self-Training, which iteratively retrains the SSIL model and selects pseudo-labelled minority class samples more frequently. Distribution Aligning Refinery of Pseudo-label (DARP) (Kim et al. 2020) refines the pseudo-labels generated by models that are biased towards the majority class, improving the generalisation ability of SSIL under a balanced test criterion. In addition, a scalable SSIL algorithm that introduces an auxiliary balanced classifier (ABC) (Lee et al. 2021) copes with class imbalance by enforcing balance in the auxiliary classifier. Other studies (Yang and Xu 2020; Lee et al. 2021) have argued for the value of imbalanced labels: when more unlabelled data is available, the original labels can be used together with the additional data in semi-supervised learning to reduce label bias and significantly improve the final classifier performance.
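The idea of selecting pseudo-labelled minority samples more frequently can be illustrated with a class-dependent sampling rate. The sketch below uses a mirrored frequency ratio raised to a power `alpha`, which is one schedule of this kind and is given as an assumption rather than as the exact rule of the cited methods; class counts are taken from the labelled set, and all names are illustrative.

```python
import random


def class_rebalancing_rates(class_counts, alpha=1.0):
    """Inclusion rate per class: the most frequent class gets the smallest
    rate, the rarest class gets rate 1.

    The class of frequency rank r (0 = most frequent) receives
    (count of the class with mirrored rank / largest count) ** alpha.
    """
    n_classes = len(class_counts)
    order = sorted(range(n_classes), key=lambda c: class_counts[c], reverse=True)
    largest = class_counts[order[0]]
    rates = [0.0] * n_classes
    for rank, cls in enumerate(order):
        mirrored = order[n_classes - 1 - rank]
        rates[cls] = (class_counts[mirrored] / largest) ** alpha
    return rates


def select_pseudo_labels(pseudo_labels, rates, seed=0):
    """Keep each pseudo-labelled sample with the rate of its predicted class;
    returns the indices of the retained samples."""
    rng = random.Random(seed)
    return [
        i for i, label in enumerate(pseudo_labels)
        if rng.random() < rates[label]
    ]
```

Setting `alpha=0` keeps every pseudo-label (plain self-training), while larger values suppress pseudo-labels of the majority classes more aggressively.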

To address the imbalance problem in the unsupervised case, specialised algorithms and techniques have been developed to ensure that clustering results remain valid and fair. These methods aim to enhance the representation of minority clusters, take imbalance factors into account, and achieve a fairer clustering distribution. For example, Nguwi and Cho (2010) combined support vector machines and ESOM for variable selection and for clustering the ranked features. Zhang et al. (2019), Lu et al. (2019) handled imbalanced clusters by integrating interval type-2 fuzzy local metrics. Zhang et al. (2023) proposed a k-means algorithm with adaptive cluster weights, which optimises the trade-off between the cluster weights to solve the imbalanced clustering problem. Cai et al. (2022) developed a clustering technique for mining fused location data that deals with imbalanced datasets. The OSRCIH method proposed by Wen et al. (2021) combines autonomous learning and spectral rotation clustering to tackle the challenges of imbalanced class distributions and high dimensionality. Together, these methods combine considerations such as variable selection, fuzzy metrics, and local density.

Overall, the methods proposed for clustering and semi-supervised learning of imbalanced data show some promise. Schemes that automatically determine the cluster centres and the number of clusters are well suited to arbitrarily shaped imbalanced data sets. However, unsupervised methods may require careful parameter tuning and evaluation with specialised metrics. Semi-supervised learning can significantly improve model performance on imbalanced data, but the relationship between the unlabelled data and the original data strongly affects its results. Moreover, SSIL does not yet fully integrate strategies for imbalanced learning, so considerable room for improvement remains.

5 Deep learning classification problems under long-tail distribution

The long-tailed class distribution refers to a specific pattern observed in datasets where the occurrence of classes follows a long-tailed or power-law distribution. This distribution exhibits a small number of classes with a high frequency of instances, known as the “head”, while the remaining classes have significantly fewer instances, forming the “tail”. Consequently, the tail classes are commonly referred to as the minority classes, whereas the head classes are considered the majority classes. A comprehensive review of current research on deep long-tailed learning and its future development can be found in Zhang et al. (2023). This distribution pattern is frequently encountered in various real-world scenarios, including image detection (Zang et al. 2021), visual relation learning (Desai et al. 2021), and few-shot learning (Wang et al. 2020), where certain classes are more prevalent than others. Ghosh et al. (2022) demonstrate through extensive experiments that deep learning does not eliminate the class imbalance problem, and they review the solutions offered so far, which are generally categorised into pre-processing, post-processing, and dedicated algorithms.

Long-tail learning and class imbalanced learning are two interrelated yet distinct research areas. Long-tail learning can be viewed as a specialized sub-task of class imbalanced learning, with the main distinction being that in long-tail learning, the samples of tail classes are typically very sparse and do not necessarily exhibit an absolute imbalance in the number of classes. In contrast, class imbalanced learning typically involves some minority class samples (Zhang et al. 2023; Li et al. 2021). Despite these differences, both research areas are dedicated to addressing the challenges posed by class imbalance and share certain ideas and approaches, such as class rebalancing, when developing advanced solutions. Ghosh et al. (2022) also examined whether the effect of class imbalance on deep learning models is related to its effect on their shallow learning counterparts, and whether deep learning has fully solved this problem.

5.1 Class rebalancing

Recent research on long-tail distributions can be divided into three categories: class rebalancing, information enhancement, and module improvement (Zhang et al. 2023). Class rebalancing is one of the main approaches for long-tail learning, which aims to address the negative effects of class imbalance by rebalancing the number of training samples. Recent deep long-tail research has used various class-balanced sampling methods, rather than random resampling, for the mini-batch training of deep models. However, these strategies require prior knowledge of the frequency of training samples for different categories, which may not be available in practice (Zhang et al. 2023; Kang et al. 2019).
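A common way to express such sampling strategies is to draw a class j with probability proportional to n_j^q, where n_j is the number of training samples of class j. The sketch below is illustrative (the function name and the parameter name `q` are assumptions): q = 1 reproduces ordinary instance-balanced sampling, q = 0 gives class-balanced sampling, and q = 1/2 gives square-root sampling in between.

```python
def sampling_probabilities(class_counts, q):
    """Probability of drawing each class, proportional to count ** q."""
    powered = [n ** q for n in class_counts]
    total = sum(powered)
    return [p / total for p in powered]
```

The function takes the class counts as input, which makes the limitation noted above explicit: the sampler can only be configured when the class frequencies are known in advance.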

Recent work argues that rebalancing an imbalanced classification dataset should essentially rebalance only the classifier, and should not let the class distribution alter the distribution of image features used for feature learning (Zhou et al. 2020). Wang et al. (2019) propose a unified framework called Dynamic Curriculum Learning (DCL), which adaptively adjusts the sampling strategy and loss weights in each batch. DCL combines two-level curriculum schedulers for data distribution and learning importance, resulting in improved generalization and discriminative power. Zhang et al. (2021) proposed FrameStack, a frame-level sampling method that dynamically balances the class distribution during training, thereby improving video recognition performance without compromising overall accuracy.

There are also methods that incorporate meta-learning (Liu et al. 2020; Hospedales et al. 2021). Zang et al. (2021) propose a method called Feature Augmentation and Sampling Adaptation (FASA) to address the challenge of data scarcity for rare object classes in long-tail instance segmentation. FASA uses an adaptive feature augmentation and sampling strategy to augment the feature space of rare classes, using information from past iterations and adjusting the sampling process to prevent overfitting. Lee et al. (2019) introduced Bayesian Task-Adaptive Meta-Learning (Bayesian TAML), a novel meta-learning model that tackles the drawbacks of previous techniques by adaptively balancing the impact of meta-learning and task-specific learning within each task. By learning the balancing variables, Bayesian TAML determines whether to rely on meta-knowledge or task-specific learning for obtaining solutions. Dablain et al. (2022) proposed DeepSMOTE, an oversampling algorithm for deep learning models, which addresses the challenge of imbalanced data by combining an encoder/decoder framework, SMOTE-based oversampling, and a penalty-enhanced loss function.

Another approach is re-weighting, which addresses long-tailed or imbalanced distributions by modifying the loss. It is often simple to implement, requiring only a few lines of code to change the loss, and can achieve very competitive results. Cui et al. (2019) proposed a novel theoretical framework for long-tailed data distributions that measures data overlap by associating each sample with a small neighboring region instead of a single point. To establish class balance in the loss function, a re-weighting scheme is built on the effective number of samples, which is computed from the number of samples in each class. Jamal et al. (2020) proposed a meta-learning method to explicitly estimate the differences between class conditional distributions, which enhances classical class-balanced learning by linking class balancing methods to domain adaptation. Cao et al. (2019) proposed the label-distribution-aware margin (LDAM) loss together with a deferred re-weighting training schedule; the loss minimises a margin-based generalisation bound, and the schedule lets the model learn an initial representation before re-weighting is applied, thus improving the performance of imbalanced learning.
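Two of the re-weighting ingredients mentioned above are easy to state in code. The first function computes the effective number of samples E_n = (1 - β^n) / (1 - β) and the resulting class weights, normalised to sum to the number of classes; the second computes class-dependent margins proportional to n_j^(-1/4), as used by the LDAM loss. This is a sketch under assumptions: the function names and the `max_margin` rescaling parameter are illustrative, and the plumbing into an actual loss function is omitted.

```python
def effective_number(n, beta):
    """Effective number of samples E_n = (1 - beta**n) / (1 - beta), 0 <= beta < 1."""
    return (1.0 - beta ** n) / (1.0 - beta)


def class_balanced_weights(class_counts, beta=0.999):
    """Weights proportional to 1 / E_n, normalised to sum to the class count."""
    inverse = [1.0 / effective_number(n, beta) for n in class_counts]
    scale = len(class_counts) / sum(inverse)
    return [w * scale for w in inverse]


def ldam_margins(class_counts, max_margin=0.5):
    """Per-class margins proportional to n ** -0.25, rescaled so that the
    largest margin (rarest class) equals max_margin (a hypothetical knob)."""
    raw = [n ** -0.25 for n in class_counts]
    scale = max_margin / max(raw)
    return [r * scale for r in raw]
```

With β = 0 every class has effective number 1 and the weights are uniform; as β approaches 1 the effective number approaches the raw count n, so the weights approach inverse class frequency.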

Class rebalancing is a simple but well-performing method in long-tail learning, especially when inspired by class-sensitive learning. This makes it an attractive option for real-world applications. However, class rebalancing methods usually involve a performance trade-off: improving the performance of the tail classes may decrease the performance of the head classes. To overcome this problem, different approaches can be combined, but the pipeline needs to be carefully designed to avoid performance degradation (Zhang et al. 2023). This suggests that the long-tail problem may require more information-enhanced approaches to deal effectively with the scarcity of tail class samples.

5.2 Information enhancement

Information enhancement is a strategy for improving the performance of deep learning models in long-tailed learning scenarios by enriching the information available during model training. Transfer learning and data augmentation are the two primary areas that this approach covers (Zhang et al. 2023).

Transfer learning aims to learn generic knowledge from the sample-rich head classes and then transfer it to the sample-poor tail classes. Recently, there has been growing interest in applying transfer learning to scenarios with deep long-tail distributions. For example, Liu et al. addressed the challenge of learning deep features from long-tailed data by proposing a method that expands the distribution of tail classes in the feature space. The method augments each instance of the tail classes with disturbances, creating a “feature cloud” that provides higher intra-class variation. This enhances deep representation learning on long-tailed data because it reduces the distortion of the feature space caused by the unequal distribution between head and tail classes. Xiang et al. (2020) introduced a framework for self-paced knowledge distillation. This approach includes two levels of adaptive learning schedules, namely self-paced expert selection and curriculum instance selection, aiming to effectively transfer knowledge from multiple “experts” to a unified student model. Wang et al. (2020) addressed imbalanced classification on long-tailed data by proposing a classifier called RoutIng Diverse Experts (RIDE). RIDE combines multiple experts, a distribution-aware diversity loss, and a dynamic expert routing module to reduce model variance, model bias, and computing costs. Experimental results further indicate that self-supervised learning plays a positive role in learning a balanced feature space for long-tailed data (Kang et al. 2020). Methods for handling long-tailed data with noisy labels are also being investigated (Karthik et al. 2021).

Data augmentation in the context of deep long-tailed distributions refers to techniques that aim to increase the size and quality of the datasets used for model training. It involves applying pre-defined transformations, such as rotation, scaling, cropping, or flipping, to each data point or feature in the dataset (Shorten and Khoshgoftaar 2019; Zhang et al. 2023). This category is divided into head-to-tail transfer augmentation and non-transfer augmentation. In head-to-tail transfer augmentation, augmented samples are transferred from the head classes to the tail classes. By applying pre-defined transformations to samples from the head classes and adding the results to the tail-class data, the tail classes are enriched, allowing for better representation and learning of these classes. This approach helps to mitigate the class imbalance issue and improve the generalization ability of the model on the tail classes. For example, Kim et al. (2020) proposed to augment less frequent classes by translating samples from more frequent classes, which enables the network to learn more generalisable features for minority classes and thereby addresses class imbalance in deep neural networks. Chen et al. (2022) proposed a reasoning-based implicit semantic data augmentation method to address the performance degradation of existing classification algorithms caused by long-tailed data distributions. By borrowing transformation directions from similar categories using covariance matrices and a knowledge graph, they generate diverse instances for tail categories. Zhang et al. (2022) proposed a data augmentation method based on Bidirectional Encoder Representations from Transformers (BERT) to address the long-tailed and imbalanced distribution problem in Mandarin Chinese polyphone disambiguation. They incorporate weighted sampling and filtering techniques to balance the data distribution and improve prediction accuracy. Dablain et al. (2023) proposed a three-stage CNN training framework with expansive over-sampling (EOS), which aims to close the generalisation gap on minority classes in imbalanced image data by training the network end-to-end, learning data augmentation in the embedding space, and fine-tuning the classifier head.
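The general idea of head-to-tail transfer can be illustrated with a deliberately simplified sketch in feature space: the deviation of a head-class sample from its class mean is added to a tail-class sample, so that the tail class borrows the intra-class variation of the head class. This is not the method of any of the works cited above; the function names, the toy feature vectors, and the choice of an additive transfer are all assumptions made for illustration.

```python
import random


def class_mean(samples):
    """Component-wise mean of a list of equal-length feature vectors."""
    dim = len(samples[0])
    return [sum(s[d] for s in samples) / len(samples) for d in range(dim)]


def head_to_tail_augment(head_samples, tail_samples, n_new, rng=None):
    """Create n_new synthetic tail-class vectors.

    Each synthetic vector is a randomly chosen tail sample shifted by the
    deviation of a randomly chosen head sample from the head-class mean.
    """
    rng = rng or random.Random(0)
    mu_head = class_mean(head_samples)
    synthetic = []
    for _ in range(n_new):
        h = rng.choice(head_samples)
        t = rng.choice(tail_samples)
        synthetic.append([ti + (hi - mi) for ti, hi, mi in zip(t, h, mu_head)])
    return synthetic


# Toy data: a head class with two samples and a tail class with one sample.
head = [[0.0, 0.0], [2.0, 2.0]]
tail = [[10.0, 10.0]]
synthetic = head_to_tail_augment(head, tail, n_new=4)
```

In practice the transfer would operate on learned embeddings, and the synthetic vectors would be added to the tail class only during training.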

Information enhancement is compatible with other methods such as class rebalancing and module improvement. With careful design, its two subtypes, transfer learning and data augmentation, can improve the performance of the tail classes without degrading the performance of the head classes. However, simply applying class-agnostic augmentation techniques may not be effective enough, because they ignore the class imbalance problem, may add more samples to the head classes than to the tail classes, and may introduce additional noise. How to better perform data augmentation for long-tail learning therefore still requires further research.

5.3 Module improvement

In addition to class rebalancing and information enhancement, researchers have in recent years explored ways to improve the model itself. These methods can be divided into representation learning, classifier design, and decoupled training (Zhang et al. 2023). They involve analyzing the challenges posed by the distribution and making targeted modifications to the model’s architecture, loss function, training strategies, or data augmentation techniques to better handle the inherent biases and class imbalances.

Representation learning aims to learn feature representations that capture the underlying structure and discriminative information in the data while also addressing the imbalance issue. For example, Chen et al. (2021) proposed a method based on the principles of causality, leveraging a meta-distributional scenario to enhance sample efficiency and model generalization. Liu et al. (2023) proposed the Transfer Learning Classifier (TLC) to address the challenges of class-imbalanced data and real-time visual data in computer vision. The TLC model incorporates an active sampling module to dynamically adjust the skewed distribution and a DenseNet module for efficient relearning. Kuang et al. (2021) proposed a class-imbalance adversarial transfer learning (CIATL) network to address the challenges of cross-domain fault diagnosis when dealing with class-imbalanced and machine faulty data. The CIATL network incorporates class-imbalanced learning and double-level adversarial transfer learning to learn domain-invariant and class-separable diagnostic knowledge. Recent studies have also explored contrastive learning approaches for long-tailed problems. Methods such as KCL (Kang et al. 2020), PaCo (Cui et al. 2021), Hybrid (Wang et al. 2021), and DRO-LT (Samuel and Chechik 2021) have been proposed, introducing a k-positive contrastive loss, parametric learnable class centers, prototypical contrastive learning, and distributionally robust optimization, respectively. These methods seek to reduce class imbalance, boost model generalization, and strengthen the robustness of the learnt models to distribution change.

Traditional deep learning classification algorithms prioritize the majority class, resulting in poor minority class performance. In addition, the loss functions of most classifiers are based on linear functions. To overcome these problems, various classifier designs have been proposed in recent years for deep long-tail distributions. The Realistic Taxonomic Classifier (RTC) (Wu et al. 2020) uses hierarchical classification, mapping images into a class taxonomic tree structure. Samples are adaptively classified at different levels based on difficulty and confidence, prioritizing correct decisions at intermediate levels. The causal classifier applies a multi-head strategy to capture bias information and mitigate long-tailed bias accumulation (Tang et al. 2020). The GIST classifier (Liu et al. 2021) transfers the geometric structure of head classes to tail classes, improving performance on tail classes by enhancing tail-class weight centers through displacements from head classes. Zhou et al. (2022) proposed a debiased scene graph generation (SGG) approach dubbed DSDI to address the dual imbalance problem in SGG. By adding a biased resistance loss and a causal intervention tree, the strategy addresses the uneven distribution of both foreground-background occurrences and foreground relationship categories in SGG datasets.

Decoupled training divides the training process into two stages: representation learning and classifier learning. This separation allows the model to learn a more discriminative representation of the data, effectively capturing the inherent characteristics of both the majority and minority classes, and such methods have shown promising results in improving classification accuracy and generalization in the context of deep long-tail distributions. Nam et al. (2023) demonstrated the effectiveness of decoupling representation learning from classifier learning in long-tail classification. Their approach trains the feature extractor using stochastic weight averaging (SWA) to obtain a generalised representation, and uses a novel classifier retraining algorithm based on stochastic representations and uncertainty estimation to construct robust decision boundaries. Kang et al. (2020) used a k-positive contrastive loss to create a more balanced and discriminative feature space, which improved long-tailed learning performance. MiSLAS found that data mixup enhances representation learning but has a negative or negligible effect on classifier training, and therefore proposed a two-stage approach with data mixup for representation learning and label-aware smoothing for better classifier generalization.
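One common way to realise the two stages, assumed here for illustration and not prescribed by the works discussed above, is to change only the sampling distribution between them: the representation is learned with instance-balanced sampling, and the classifier is then re-trained with class-balanced sampling while the feature extractor is kept fixed. The sketch below computes the per-class sampling probability p_j = n_j^q / Σ_i n_i^q, where the exponent q and the class counts are hypothetical.

```python
def sampling_probabilities(class_counts, q):
    """Probability of drawing a sample from each class.

    q = 1 gives instance-balanced sampling (proportional to class size),
    q = 0 gives class-balanced sampling (uniform over classes).
    """
    powered = [n ** q for n in class_counts]
    total = sum(powered)
    return [p / total for p in powered]


# Hypothetical long-tailed class sizes.
counts = [900, 90, 10]

# Stage 1: representation learning sees the data as it is distributed.
stage1 = sampling_probabilities(counts, q=1.0)

# Stage 2: classifier re-training draws every class equally often.
stage2 = sampling_probabilities(counts, q=0.0)

print("stage 1:", [round(p, 3) for p in stage1])
print("stage 2:", [round(p, 3) for p in stage2])
```

Intermediate values of q give a compromise between the two regimes, which is one way to trade head-class accuracy against tail-class accuracy.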

Module improvement methods address long-tail problems by changing network modules or objective functions. These techniques complement decoupled training and provide a conceptually simple approach to real-world long-tail applications. However, such methods tend to suffer from high computational complexity, complex model design, limited generalizability, and a risk of overfitting, and they do not guarantee substantial improvements. They therefore require careful consideration and customization for specific scenarios.

6 Imbalanced learning in data streams

Imbalanced data streams refer to continuously arriving data instances in a streaming environment, characterized by a highly skewed class distribution. These data streams present unique challenges because they combine the characteristics of streaming and imbalanced data, including concept drift and evolving class ratios. Effective mining of imbalanced data streams requires algorithms that can swiftly adapt to changing decision boundaries, imbalance ratios, and class roles, while maintaining efficiency and cost-effectiveness. In the last few years, several reviews (Fernández et al. 2018; Aguiar et al. 2023; Alfhaid and Abdullah 2021) have provided comprehensive insights into the development of techniques for imbalanced data streams. These reviews offer an overview of data stream mining methods, discuss learning difficulties, explore data-level and algorithm-level approaches for handling skewed data streams, and address challenges such as emerging and disappearing classes, as well as the limited availability of ground truth in streaming scenarios. Aguiar et al. (2023) presented a comprehensive experimental framework, evaluating the performance of 24 state-of-the-art algorithms on 515 imbalanced data streams. This framework covers various scenarios involving static and dynamic class imbalance and concept drift, and incorporates both real-world and semi-synthetic datasets. Recent methods for addressing imbalanced data streams can be classified into three categories: (1) online or ensemble learning approaches, (2) incremental learning approaches, and (3) concept drift handling methods.

6.1 Online or ensemble learning

Online or ensemble learning for imbalanced data streams refers to algorithms that continuously update and adapt classifiers to the evolving and imbalanced nature of streaming data. They do so either by combining multiple classifiers or by updating a classifier’s model online as new data arrive, with the goal of improving classification performance in imbalanced scenarios. For example, Du et al. (2021) introduced a cost-sensitive online ensemble learning algorithm that incorporates several balancing techniques, such as initializing the base classifier, dynamically calculating misclassification costs, sampling data stream samples, and determining base classifier weights. Other researchers have explored algorithmic techniques for online cost-sensitive learning, integrating online ensemble algorithms with batch-mode methods to obtain cost-sensitive bagging or boosting algorithms (Wang and Pineau 2016; Klikowski and Woźniak 2022; Jiang et al. 2022). Zyblewski et al. (2021) presented a framework that combines non-stationary data stream classification with data analysis of skewed class distributions, using stratified bagging, data preprocessing, and dynamic ensemble selection methods. In addition to high-dimensional imbalanced data streams, recent research has also explored the problem of incomplete imbalanced data streams. You et al. (2023) proposed an algorithm called OLI2DS for learning from incomplete and imbalanced data streams, addressing the limitations of existing approaches. The method uses empirical risk minimisation to detect informative features in the missing data space.
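A generic sketch of an online ensemble that adapts to the current class ratio is given below. It follows the online bagging idea of presenting each arriving instance to every base learner k times with k drawn from a Poisson distribution, and it raises the Poisson rate for classes that are currently rare according to a time-decayed class-size estimate. It is not the algorithm of Du et al. (2021) or of any other work cited above; the class names, the nearest-centroid base learner, the decay factor, and the toy stream are assumptions made for illustration.

```python
import math
import random
from collections import defaultdict


def poisson(lam, rng):
    """Draw from Poisson(lam) with Knuth's method (adequate for small lam)."""
    threshold = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


class CentroidLearner:
    """Tiny incremental nearest-centroid classifier used as a stand-in base learner."""

    def __init__(self):
        self.sums = {}
        self.counts = defaultdict(int)

    def learn_one(self, x, y):
        if y not in self.sums:
            self.sums[y] = [0.0] * len(x)
        self.sums[y] = [s + v for s, v in zip(self.sums[y], x)]
        self.counts[y] += 1

    def predict_one(self, x):
        if not self.sums:
            return None

        def squared_distance(y):
            centroid = [s / self.counts[y] for s in self.sums[y]]
            return sum((a - b) ** 2 for a, b in zip(x, centroid))

        return min(self.sums, key=squared_distance)


class ImbalanceAwareOnlineBagging:
    def __init__(self, n_learners=5, decay=0.99, seed=0):
        self.learners = [CentroidLearner() for _ in range(n_learners)]
        self.decay = decay
        self.class_size = defaultdict(float)  # time-decayed class proportions
        self.rng = random.Random(seed)

    def rate(self, y):
        """Poisson rate: 1 for the currently largest class, higher for rarer ones."""
        return max(self.class_size.values()) / self.class_size[y]

    def learn_one(self, x, y):
        self.class_size[y] += 0.0  # make sure the class has an entry
        for c in self.class_size:
            hit = 1.0 if c == y else 0.0
            self.class_size[c] = self.decay * self.class_size[c] + (1 - self.decay) * hit
        lam = self.rate(y)
        for learner in self.learners:
            for _ in range(poisson(lam, self.rng)):
                learner.learn_one(x, y)

    def predict_one(self, x):
        votes = [v for v in (l.predict_one(x) for l in self.learners) if v is not None]
        return max(set(votes), key=votes.count) if votes else None


# Toy stream with a 9:1 class ratio: class 0 at the origin, class 1 at (5, 5).
model = ImbalanceAwareOnlineBagging()
for i in range(200):
    label = 1 if i % 10 == 0 else 0
    point = [5.0, 5.0] if label == 1 else [0.0, 0.0]
    model.learn_one(point, label)
```

Because the class sizes are decayed over time, the rate for a class follows the stream: if the roles of the majority and minority classes swap, the previously frequent class gradually receives the higher rate.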

The combination of ensemble learning and active learning is a powerful strategy for addressing imbalanced data streams. It not only enhances the learning process by actively selecting informative samples but also uses the collective knowledge of multiple classifiers to improve classification performance. This integrated approach offers a valuable solution for applications where data arrive continuously and exhibit imbalanced characteristics. For example, Halder et al. (2023) introduced an autonomous active learning strategy (AACE-DI) for handling concept drifts in imbalanced data streams. The method incorporates a cluster-based ensemble classifier to select informative instances, minimizing expert involvement and costs. It prioritizes uncertain, representative, and minority class data using an automatically adjusting uncertainty strategy. Zhang et al. (2020) presented a method called Reinforcement Online Active Learning Ensemble for Drifting Imbalanced data stream (ROALE-DI). The approach addresses concept drift and class imbalance by integrating a stable classifier and a dynamic classifier group, prioritizing better performance on the minority class.
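The notion of an uncertainty strategy that adjusts itself can be sketched with a simple query rule for streaming active learning: a label is requested whenever the ensemble’s confidence falls below a threshold, and the threshold shrinks after each query and grows otherwise. This is a generic illustration rather than the strategy used in AACE-DI or ROALE-DI, and it does not by itself prioritise the minority class; the class name, the initial threshold, and the step size are assumptions.

```python
class VariableUncertaintyQuery:
    """Decide, instance by instance, whether to ask an expert for the label."""

    def __init__(self, threshold=1.0, step=0.01):
        self.threshold = threshold  # confidence below this triggers a query
        self.step = step            # relative adjustment applied after each decision

    def should_query(self, class_probabilities):
        confidence = max(class_probabilities)
        if confidence < self.threshold:
            # A label was requested: tighten the threshold to save budget.
            self.threshold *= 1.0 - self.step
            return True
        # No label requested: relax the threshold so drifting regions are not missed.
        self.threshold *= 1.0 + self.step
        return False


# Hypothetical ensemble outputs for two consecutive instances.
strategy = VariableUncertaintyQuery(threshold=0.8, step=0.1)
first = strategy.should_query([0.6, 0.4])   # uncertain prediction
second = strategy.should_query([0.9, 0.1])  # confident prediction
```

A minority-aware variant could, for instance, apply a higher threshold when the predicted class is currently rare, so that instances likely to belong to the minority class are labelled more often.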