Abstract

Deep neural network (DNN) techniques have become pervasive in domains such as natural language processing and computer vision, where they have achieved great success in tasks such as machine translation and image generation. Owing to this success, these data-driven techniques have also been applied in the audio domain. More specifically, DNN models have been applied to speech enhancement and separation to perform speech denoising, dereverberation, speaker extraction and speaker separation. In this paper, we review the current DNN techniques employed for speech enhancement and separation. The review covers the whole pipeline of speech enhancement and separation: feature extraction, how DNN-based tools model both global and local features of speech, model training (supervised and unsupervised), and how the tools address the label ambiguity problem. The review also covers the use of domain adaptation techniques and pre-trained models to boost speech enhancement. By this, we hope to provide an all-inclusive reference to the state-of-the-art DNN-based techniques applied in speech separation and enhancement. We further discuss future research directions. This survey can be used by both academic researchers and industry practitioners working in the speech separation and enhancement domain.


1 Introduction

Techniques for improving monaural speech intelligibility can be categorised as either speech enhancement or speech separation. Speech enhancement involves isolating a target speech signal either from noise (Bando et al. 2018) or from a speech mixture (Xiao et al. 2019), and covers tasks such as dereverberation, denoising and speaker extraction. Speech separation, on the other hand, seeks to estimate each of the independent speech signals contained in a mixture (Wang and Wang 2013). Speech enhancement and separation have applications in multiple domains such as automatic speech recognition, mobile speech communication and the design of hearing aids (Wang et al. 2014). Initial research on speech enhancement and separation exploited techniques such as non-negative matrix factorization (NMF) (Schmidt and Olsson 2006; Wang and Sha 2014; Virtanen and Cemgil 2009), probabilistic models (Virtanen 2006) and computational auditory scene analysis (CASA) (Shao and Wang 2006). However, these techniques are tailored to closed-set speakers (i.e., they do not work well on mixtures with unknown speakers), which significantly restricts their applicability in real environments. Following the success of deep learning models in domains such as natural language processing and computer vision, these data-driven techniques have been introduced to audio processing. In particular, DNN models have become popular in speech enhancement and separation, where they have achieved strong performance both in boosting speech intelligibility and in enhancing speech from unknown speakers (Luo and Mesgarani 2019; Subakan et al. 2021). To be effective in speech enhancement and separation, DNN models must extract important features of speech, maintain the order of audio frames, and exploit both local and global contextual information to achieve coherent separation of speech data. DNN models therefore need to include techniques tailored to these requirements. 
These techniques are the core subject of this review. Further, in the computer vision and text domains, large pre-trained models are used to extract universal representations that benefit downstream tasks; the review discusses the impact of pre-trained models on speech enhancement and separation. It also discusses the DNN techniques that speech enhancement and separation tools adopt to reduce computational complexity so that they can work in low-latency and resource-constrained environments. The review therefore covers the whole pipeline of DNN application to speech enhancement and separation, i.e., feature extraction, model implementation, training and evaluation. Our goal is to uncover the dominant techniques at each level of DNN implementation. In each section, we highlight key emerging features and existing challenges. A recent review (Wang and Chen 2018) only looked at supervised techniques for speech separation; in this review, we discuss both supervised and unsupervised methods. Moreover, with the fast growth of deep learning, new techniques have emerged that necessitate a fresh look at how they have been applied in speech enhancement and separation. The review is restricted to how DNN techniques are applied to monaural speech enhancement and separation, so we do not focus on multi-channel speech separation (which has been covered in Gannot et al. (2017)).

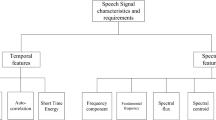

The paper first explains the types of speech enhancement and separation (Sect. 2), highlighting their key elements and the tools that focus on each type. Section 3 discusses the key speech features used by speech enhancement and separation tools, including how the features are derived and how they are used to train DNN models with supervised learning. Section 5 discusses the techniques the tools use to model the long-range dependencies that exist in speech. Section 6 discusses model size compression techniques, and Sect. 7 discusses some of the popular objective functions used in speech enhancement and separation. Section 8 discusses how some tools implement unsupervised techniques to achieve speech enhancement and separation. Section 9 discusses how speech separation and enhancement tools are adapted to the target environment. Section 10 looks at how pre-trained models are utilized in the speech enhancement and separation pipeline. Finally, Sect. 11 looks at future directions. Figure 1 shows the overall organization of the paper and the topics it covers.

2 Types of speech separation and enhancement

2.1 Speech separation

Scenarios arise where more than one target speech signal is contained in a given speech mixture, and the goal is to isolate each independent speech signal in the mixture. This problem is known as speech separation. For a mixture composed of C independent speech signals \(x_c(t)\) with \(c=1,\ldots ,C\), the recording y(t) can be represented as:

$$\begin{aligned} y(t)=\sum _{c=1}^{C}x_c(t) \end{aligned}$$(1)

Here, t indexes time. The goal of speech separation is to estimate each independent speech signal \(x_c\) in y(t). Separating speech from other speech is a daunting task because all speakers belong to the same class and share similar characteristics (Hershey et al. 2016). Some models, such as Wang et al. (2016, 2017), ease this by separating a mixed speech signal according to the genders of the voices present. They exploit the large discrepancy between male and female voices in terms of vocal tract, fundamental frequency contour, timing, rhythm, dynamic range, etc. In most cases this results in a large spectral distance between male and female speakers, which facilitates good gender segregation. When the mixture involves speakers of the same gender, the separation task is much more difficult since their voice pitches lie in the same range (Hershey et al. 2016). Most speech separation tools that solve this task, such as Zeghidour and Grangier (2021), Huang et al. (2011), Weng et al. (2015), Isik et al. (2016), Hershey et al. (2016) and Luo and Mesgarani (2019), cast the problem as multi-class regression. In that case, training a DNN model involves comparing each of its outputs to a source speaker. DNN models output one dimension per target class, and when multiple sources of the same type exist, the system must arbitrarily decide which output dimension to map to each source; this raises the permutation problem (permutation ambiguity) (Hershey et al. 2016). Taking the case of two-speaker separation, if the model estimates \(\hat{a}_1\) and \(\hat{a}_2\) as the reference speech magnitudes \(a_1\) and \(a_2\) respectively, the order in which the model will output the estimates is unclear, i.e., the output can be either \(\{\hat{a}_1 ,\hat{a}_2\}\) or \(\{\hat{a}_2 ,\hat{a}_1\}\). A naive approach, shown in Fig. 2 (Kolbæk et al. 2017a), is to present the reference speech magnitudes in a fixed order and hope that the system outputs its estimates in the same order.

Naive approach to the label matching problem for a two-talker speech separation model. Here, the reference speeches S1 and S2 are presented in a fixed order with the expectation that the separated speeches will be output in the same order. If the orders do not match, training optimizes the wrong error values

In case of a mismatch, the loss is computed on the wrong comparison, resulting in low-quality separated speech. Systems that perform speaker separation therefore carry the extra burden of designing mechanisms to handle the permutation problem, and speech separation tools implement several strategies to tackle it. In Weng et al. (2015), a number of DNN techniques are implemented that estimate the two clean speeches contained in a two-talker mixture; supervised training teaches the DNN models to discriminate the two speeches based on the average energy, pitch and instantaneous energy of a frame. Yu et al. (2017) and Kolbæk et al. (2017a) introduce the permutation invariant training (PIT) technique, in which the loss is computed for every permutation of the reference labels against the output of the system, and the permutation with the lowest loss is adopted as the correct order. For the two-speaker separation system introduced earlier, the reference permutations are \(\{a_1,a_2\}\) and \(\{a_2,a_1\}\), so the possible permutation losses are:

$$\begin{aligned} \mathcal {L}_1&=\ell (\hat{a}_1,a_1)+\ell (\hat{a}_2,a_2)\\ \mathcal {L}_2&=\ell (\hat{a}_1,a_2)+\ell (\hat{a}_2,a_1) \end{aligned}$$

where \(\ell \) is the chosen reconstruction loss, e.g., the mean squared error.

The lower of the two losses is selected as the permutation loss to be minimized (see Fig. 3). For an S-speaker separation system, a total of S! permutations must be evaluated.
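A minimal NumPy/SciPy sketch of utterance-level PIT, assuming a mean-squared-error criterion on equal-length signals; the function names are hypothetical. It scores all S! output orders exhaustively and, for comparison, solves the same problem as a linear sum assignment with `scipy.optimize.linear_sum_assignment`, the formulation that Dovrat et al. (2021) solve with the Hungarian algorithm in \(O(S^3)\). In a real training loop the loss of the selected permutation would be computed on framework tensors and back-propagated; only the selection step is shown here.

```python
import itertools

import numpy as np
from scipy.optimize import linear_sum_assignment


def pairwise_mse(estimates, references):
    """S x S matrix whose (i, j) entry is the MSE between output i and reference j."""
    diff = estimates[:, None, :] - references[None, :, :]
    return np.mean(diff ** 2, axis=-1)


def pit_exhaustive(estimates, references):
    """Evaluate every one of the S! permutations and keep the one with the lowest loss."""
    cost = pairwise_mse(estimates, references)
    num_sources = cost.shape[0]
    best_perm, best_loss = None, np.inf
    for perm in itertools.permutations(range(num_sources)):
        # perm[j] is the index of the reference assigned to output j
        loss = np.mean([cost[j, perm[j]] for j in range(num_sources)])
        if loss < best_loss:
            best_perm, best_loss = perm, loss
    return best_perm, best_loss


def pit_assignment(estimates, references):
    """Find the same minimum-loss permutation as a linear sum assignment problem."""
    cost = pairwise_mse(estimates, references)
    rows, cols = linear_sum_assignment(cost)
    return tuple(int(c) for c in cols), cost[rows, cols].mean()


# Usage: the "network" emits three sources in a scrambled order, plus a little noise.
rng = np.random.default_rng(0)
refs = rng.standard_normal((3, 1000))
ests = refs[[2, 0, 1]] + 0.01 * rng.standard_normal((3, 1000))
print(pit_exhaustive(ests, refs)[0])   # (2, 0, 1)
print(pit_assignment(ests, refs)[0])   # (2, 0, 1)
```

Both routes return the same permutation; the exhaustive loop grows as S!, whereas the assignment solver scales polynomially in S.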

When S is large (e.g., 10), PIT, which has a computational complexity of O(S!), becomes computationally expensive (Tachibana 2021; Dovrat et al. 2021). For this reason, Dovrat et al. (2021) cast the permutation problem as a linear sum assignment problem and exploit the Hungarian algorithm to find the loss-minimizing permutation with a computational complexity of \(O(S^3)\). Tachibana (2021) proposes the SinkPIT loss, which is based on Sinkhorn's matrix balancing algorithm and reduces the complexity of PIT from O(S!) to \(O(kS^2)\), where k is the number of Sinkhorn iterations. Zeghidour and Grangier (2021) compute the minimum-loss permutation at each time step t; the best permutation (argmin) at each time step is exploited to re-order the embedding vectors to be consistent with the training labels. To evade the permutation problem, they train two separate DNN models for each of the two speakers to be identified. Another prominent technique for handling the permutation problem is to employ DNN-based clustering (Hershey et al. 2016; Byun and Shin 2021; Isik et al. 2016; Qin et al. 2020; Lee et al. 2022) to identify the multiple speakers present in a mixed speech signal. The DNN \(f_\theta \) takes the whole spectrogram X as input and generates a D-dimensional embedding for each of its N time-frequency bins, i.e., \(V=f_\theta (X)\in R^{N\times D}\). Here, the embedding V learns the features of the spectrogram X and is considered a permutation- and cardinality-independent encoding of the network's estimate of the signal partition. For the network \(f_\theta \) to learn how to generate the embeddings V given the input X, it is trained to minimize the cost function

$$\begin{aligned} \mathcal {C}_Y(V)=\Vert VV^T-YY^T\Vert _F^2 \end{aligned}$$(2)

Here, \(Y=\{y_{i,c}\}\) represents the target partition that maps each element i of the spectrogram to one of the C clusters, such that \(y_{i,c}=1\) if element i is in cluster c. \(YY^T\) is a binary affinity matrix that represents the cluster assignment in a permutation-independent way. The goal in Eq. (2) is to minimise the distance between the network-estimated affinity matrix \(VV^T\) and the true affinity matrix \(YY^T\), with the minimization carried out over the training examples. \(\Vert A\Vert _F^2\) is the squared Frobenius norm. Once V has been established, its rows \(v_i\) are clustered with the K-means algorithm into partitions that represent binary masks. These masks are applied to the mixed spectrogram X to separate the sources, which are then reconstructed using the inverse short-time Fourier transform (STFT).

Even though PIT is popular in speech separation models, it cannot handle the output dimension mismatch problem, where the number of speakers differs between training and inference (Jiang and Duan 2020), for example, training a speech separation model on n-speaker mixtures but testing it on t-speaker mixtures with \(t\ne n\). PIT-based methods cannot deal with this problem directly because of their fixed output dimension. Most speech separation models, such as Nachmani et al. (2020), Kolbæk et al. (2017a), Liu and Wang (2019) and Luo and Mesgarani (2020), deal with the problem by setting a maximum number of sources C that the model outputs for any given mixture. If an inference mixture has K sources, where \(C> K\), then \(C-K\) outputs are invalid, and the model needs techniques to handle them. Some models, such as Liu and Wang (2019), Nachmani et al. (2020) and Kolbæk et al. (2017a), output silence for invalid sources, while Luo and Mesgarani (2020) output the mixture itself for invalid sources and then discard these outputs by comparing their energy level with that of the mixture. The challenge with models that output silence for invalid sources is that they rely on a pre-defined energy threshold, which may be problematic if the mixture itself has very low energy (Luo and Mesgarani 2020). Other models handle the output dimension mismatch problem by extracting a single speech signal in each iteration and subtracting it from the mixture until no speech is left (Shi et al. 2018; Kinoshita et al. 2018; Takahashi et al. 2019; Neumann et al. 2019; von Neumann et al. 2020). Although trained on mixtures with a small number of sources, this iterative technique can generalize to mixtures with a larger number of sources (Takahashi et al. 2019). However, setting the iteration termination criterion is difficult, and separation performance decreases in later iterations due to degradations introduced in prior iterations (Takahashi et al. 2019). Other speech separation models include Luo et al. (2018), Yul et al. (2017), Chang et al. (2020), Liu and Wang (2019), Weng et al. (2015), Isik et al. (2016) and Wang et al. (2018a).
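The deep clustering objective of Eq. (2) need not form the N x N affinity matrices: expanding the Frobenius norm gives \(\Vert V^TV\Vert _F^2-2\Vert V^TY\Vert _F^2+\Vert Y^TY\Vert _F^2\), which only involves D x D, D x C and C x C products. The NumPy sketch below computes that form and then turns the embedding rows into binary masks with a plain K-means loop. The farthest-point initialisation, the function names and the toy data are assumptions made for illustration, not details taken from Hershey et al. (2016).

```python
import numpy as np


def deep_clustering_loss(V, Y):
    """Eq. (2), ||VV^T - YY^T||_F^2, via the low-rank expansion.

    V: (N, D) embeddings, one row per T-F bin.
    Y: (N, C) one-hot partition, Y[i, c] = 1 if bin i belongs to source c.
    """
    return (np.sum((V.T @ V) ** 2)
            - 2.0 * np.sum((V.T @ Y) ** 2)
            + np.sum((Y.T @ Y) ** 2))


def kmeans_masks(V, num_sources, num_iters=20, seed=0):
    """Cluster the rows of V with K-means and return one binary mask per source, shape (C, N)."""
    rng = np.random.default_rng(seed)
    # Farthest-point initialisation: start from a random row, then repeatedly
    # add the row farthest from all centroids chosen so far.
    centroids = [V[rng.integers(len(V))]]
    for _ in range(1, num_sources):
        dist = np.min([np.sum((V - c) ** 2, axis=1) for c in centroids], axis=0)
        centroids.append(V[dist.argmax()])
    centroids = np.array(centroids)
    for _ in range(num_iters):
        dist = np.sum((V[:, None, :] - centroids[None, :, :]) ** 2, axis=-1)
        labels = dist.argmin(axis=1)
        for c in range(num_sources):
            if np.any(labels == c):
                centroids[c] = V[labels == c].mean(axis=0)
    return np.stack([(labels == c).astype(float) for c in range(num_sources)])


# Usage on toy data: 200 T-F bins, two sources, noisy "ideal" embeddings (D = C = 2).
rng = np.random.default_rng(1)
true_labels = rng.integers(0, 2, size=200)
Y = np.eye(2)[true_labels]
V = Y + 0.05 * rng.standard_normal(Y.shape)
masks = kmeans_masks(V, num_sources=2)
# In a real system each mask would be reshaped to (freq, time) and multiplied with X.
```

The masks come out in arbitrary order, which is precisely why clustering sidesteps the permutation problem: no output slot is tied to a particular speaker during training.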

2.2 Speaker extraction

Some speech enhancement DNN models are designed to extract a single target speech signal from a mixture such as that in Eq. (1). These models focus only on the target speech \(x_{target}\) and treat all other speech signals as interference, so they modify Eq. (1) as shown in Eq. (3):

$$\begin{aligned} y(t)=x_{target}(t)+\sum _{c=1}^{C-1}x_c(t) \end{aligned}$$(3)

where \(x_{target}(t)\) is the target speech at time t and \(x_c(t)\) are the interfering signals. By focusing on a single target speech, the permutation ambiguity problem is avoided. These models formulate speech extraction as a binary classification problem, where the positive class is the target speech and the negative class is formed by the combination of all other speakers. A popular speaker extraction technique is to give the DNN model additional speaker-dependent information that can be used to isolate the target speaker (Veselý et al. 2016). Speaker-dependent information can be injected into a DNN model either by concatenating speaker-dependent auxiliary clues with the input features or by adapting part of the DNN model parameters for each speaker (Wang et al. 2018b). This additional information about a speaker injects a bias that is necessary to differentiate the target speaker from the rest of the mixture (Xiao et al. 2019). Auxiliary clues exploited by DNN models include pre-recorded enrolment utterances of the target speaker (Wang et al. 2018b, c; Xiao et al. 2019; Ji et al. 2020; Zhang et al. 2021a; Delcroix et al. 2018), electroglottographs (EGGs) of the target speaker (Chen et al. 2023a) and i-vectors extracted at speaker level (Miao et al. 2015; Senior and Lopez-Moreno 2014). The tool in Ochiai et al. (2014) adapts parameters for each speaker by allocating a speaker-dependent module to a selected intermediate layer of the DNN. The speech extraction tool in Chen et al. (2017) does not use auxiliary clues of the target speaker; instead, it designs attractor points that are compared with the mixed speech embeddings to generate the mask used to extract the target speech.
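The first injection strategy, concatenating a speaker-dependent auxiliary clue with the input features, can be sketched in a few lines of NumPy. The sketch assumes the clue is a fixed-length embedding of an enrolment utterance; the mean-of-frames embedding below is a hypothetical stand-in for a learned speaker embedding or an i-vector, and both function names are illustrative.

```python
import numpy as np


def enrolment_embedding(enrolment_features):
    """Hypothetical clue: average the (T_e, F) enrolment features over time."""
    return enrolment_features.mean(axis=0)


def append_speaker_clue(mixture_features, embedding):
    """Concatenate the same speaker embedding to every frame of the mixture features.

    mixture_features: (T, F) frames of the mixture, e.g. log-magnitude spectra.
    embedding: (E,) target-speaker clue.
    Returns a (T, F + E) array to be fed to the extraction network.
    """
    tiled = np.broadcast_to(embedding, (mixture_features.shape[0], embedding.shape[0]))
    return np.concatenate([mixture_features, tiled], axis=1)


rng = np.random.default_rng(0)
enrol = rng.standard_normal((300, 257))     # enrolment utterance of the target speaker
mixture = rng.standard_normal((100, 257))   # mixture to be processed
net_input = append_speaker_clue(mixture, enrolment_embedding(enrol))
print(net_input.shape)   # (100, 514)
```

Because the same clue is appended to every frame, the network sees a constant bias that tells it which speaker to keep, which is the role the text ascribes to auxiliary information.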

2.3 Dereverberation

This speech enhancement technique seeks to eliminate the effect of reverberation in speech. When speech is captured in an enclosed space by a microphone at a distance d from the talker, the observed signal is a superposition of many delayed and attenuated copies of the speech, resulting from reflections off the walls of the enclosed space and off objects within it (see Fig. 4) (Naylor 2010). The signal received by the microphone consists of the direct sound, reflections that arrive shortly after the direct sound (within approximately 50 ms), i.e., early reverberation, and reflections that arrive after the early reverberation, i.e., late reverberation (Williamson and Wang 2017a). Early reverberation normally does not affect speech intelligibility much (Arweiler and Buchholz 2011); most of the perceptual degradation of speech is attributed to late reverberation. Speech degradation due to reverberation can be attributed to two types of masking (Nábělek et al. 1989): overlap masking, where the energy of a preceding phoneme overlaps with the one that follows, and self-masking, which refers to the internal time and frequency alterations of an individual phoneme. Formally, reverberant speech is the convolution of the clean speech with the room impulse response (RIR), as represented in Eq. (4):

$$\begin{aligned} y(t)=s(t)*h(t) \end{aligned}$$(4)

Here, \(*\) represents convolution and s(t) is the clean anechoic speech. h(t) is the room impulse response, which comprises the direct path \(h_d(t)\), early reverberation \(h_e(t)\) and late reverberation \(h_l(t)\). Hence h(t) can be represented as

$$\begin{aligned} h(t)=h_d(t)+h_e(t)+h_l(t) \end{aligned}$$(5)

Using the distributive property of convolution (Oppenheim 1999), Eq. (4) becomes:

$$\begin{aligned} y(t)=h_d(t)*s(t)+h_e(t)*s(t)+h_l(t)*s(t) \end{aligned}$$(6)

The goal of dereverberation is therefore to recover s(t) from y(t), so it can be viewed as deconvolving the speech signal and the RIR (Zhou et al. 2022). Dereverberation is considered more challenging than denoising for several reasons. First, it is difficult to distinguish the direct speech from its copies, especially when the reverberation is strong. Second, the key assumption of sparsity and orthogonality of speech representations in the feature domain, commonly used in monaural mask-based speech separation, does not hold for reverberant speech (Cord-Landwehr et al. 2021). Because of these characteristics, most tools designed for denoising or speaker separation are ill-suited to dereverberation (Cord-Landwehr et al. 2021). DNN tools for speaker separation and denoising mostly assume that they operate on reverberation-free speech and hence make no special provision for eliminating reverberation (with the exception of a few, such as Su et al. (2020) and Choi et al. (2020)). For instance, Cord-Landwehr et al. (2021) demonstrate that the performance of SepFormer (Subakan et al. 2021) can improve significantly when it is adjusted to include techniques that handle reverberation. Several deep learning models have been designed to estimate clean speech from reverberant speech. As in speech denoising and speech separation, DNN models performing dereverberation fit a nonlinear function that maps features of reverberant speech to features of clean anechoic speech, either directly (Wang et al. 2019; Han et al. 2015; Jiang et al. 2014; Gamper and Tashev 2018; Zhao et al. 2020; Ueda et al. 2016) or by using a mask (Huang et al. 2011; Williamson and Wang 2017a, b; Jin and Wang 2009; Jiang et al. 2014). 
Therefore, one way of categorising existing DNN dereverberation tools is by the type of target (spectrogram or ratio mask) they employ. Another is by whether a tool performs general dereverberation (i.e., removes the effect of h(t) entirely; see Eq. (4)) or focuses only on eliminating late reverberation (\(h_l(t)*s(t)\); see Eq. (6)). Tools such as León and Tobar (2021), Zhou et al. (2022), Défossez et al. (2020), Isik et al. (2020), Li et al. (2021a) and Valin et al. (2022) focus on eliminating late reverberation, because early reverberation does not affect speech intelligibility much. Finally, DNN dereverberation tools can be categorised by the training technique used (supervised or unsupervised). Tools such as Han et al. (2015) and Huang et al. (2011) perform speech dereverberation with supervised training, where the DNN model is trained to directly estimate features of clean speech given features of reverberant speech.

Here D(k, f) and Y(k, f) are the short-time Fourier transforms (STFT) of the clean and the reverberant speech, respectively, and M(k, f) is the ideal ratio mask, at time frame k and frequency channel f. Fu et al. (2022) exploit a conditional GAN to perform unsupervised dereverberation of reverberant speech.

Dereverberation in the discrete Fourier transform (DFT) magnitude domain When dereverberation is performed in the DFT magnitude domain (see Sect. 3.1), a DFT is applied to Eq. (4) such that

$$\begin{aligned} Y(t,f)=S(t,f)\,H(t,f) \end{aligned}$$(8)

The assumption in Eq. (8) is that the convolution of the clean signal s(t) with the RIR h(t) corresponds to the multiplication of their Fourier transforms in the T-F domain. However, this only holds if the RIR is shorter than the analysis window (Cord-Landwehr et al. 2021). Therefore, when dereverberation is performed in the T-F domain, the choice of analysis window is crucial to the performance of the DNN model (Cord-Landwehr et al. 2021).

Target selection in dereverberation In dereverberation training, most tools use the direct-path speech as the target, so the estimated speech is compared with the direct-path speech via the selected loss function. This can produce large prediction errors, which can cause speech distortion (Zhou et al. 2022). Consequently, recent work (Valin et al. 2022) proposes a target that retains early reverberation, and its experiments demonstrate that allowing early reverberation in the target improves the quality of the enhanced speech.
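To make Eqs. (4) to (6) and the two candidate training targets concrete, the NumPy sketch below builds a synthetic RIR, splits it at an assumed 50 ms boundary after the direct path into \(h_d\), \(h_e\) and \(h_l\), and checks that the reverberant signal equals the sum of the three convolved components. The exponentially decaying noise tail, the 40-sample direct-path delay and the white-noise "speech" are illustrative assumptions, not a realistic room model.

```python
import numpy as np

fs = 16000
rng = np.random.default_rng(0)

# Hypothetical RIR: zero before the direct path, a unit direct-path tap,
# then an exponentially decaying noise tail standing in for reflections.
rir_len = int(0.3 * fs)
direct_idx = 40                      # assumed direct-path delay (2.5 ms)
h = rng.standard_normal(rir_len) * np.exp(-np.arange(rir_len) / (0.05 * fs))
h[:direct_idx] = 0.0
h[direct_idx] = 1.0


def split_rir(h, direct_idx, fs, early_ms=50.0):
    """Split h into direct, early and late parts with h = h_d + h_e + h_l (Eq. 5)."""
    boundary = direct_idx + int(early_ms * 1e-3 * fs)
    h_d = np.zeros_like(h)
    h_e = np.zeros_like(h)
    h_l = np.zeros_like(h)
    h_d[direct_idx] = h[direct_idx]
    h_e[direct_idx + 1:boundary] = h[direct_idx + 1:boundary]
    h_l[boundary:] = h[boundary:]
    return h_d, h_e, h_l


s = rng.standard_normal(fs // 4)     # white-noise stand-in for 0.25 s of clean speech
h_d, h_e, h_l = split_rir(h, direct_idx, fs)
y = np.convolve(s, h)                                              # Eq. (4)
direct, early, late = (np.convolve(s, p) for p in (h_d, h_e, h_l))  # terms of Eq. (6)

target_direct = direct           # conventional direct-path target
target_early = direct + early    # target that keeps early reverberation (cf. Valin et al. 2022)
```

A model trained towards `target_early` only has to remove the `late` term, which matches the late-reverberation focus of the tools listed above.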

2.4 Speech denoising

This speech enhancement technique separates background noise from the target speech. Formally, the noisy speech is represented as:

$$\begin{aligned} y(t)=s(t)+n(t) \end{aligned}$$(9)

where y(t) is the noisy speech signal at time t, s(t) is the target speech signal and n(t) is the noise signal. Speech denoising seeks to isolate a single target speech signal from noise; hence, data-driven DNN models are optimized to predict s(t) from y(t). Since speech denoising has only a single target speech signal, it does not suffer from the global permutation ambiguity problem. DNN tools that perform speech denoising include Leglaive et al. (2018, 2019, 2020), Bando et al. (2018), Kolbæk et al. (2017b), Lu et al. (2021, 2022), Fu et al. (2016, 2018a) and Gao et al. (2016).
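Training pairs for the model of Eq. (9) are commonly created by adding a noise recording to clean speech at a chosen signal-to-noise ratio (SNR). The sketch below assumes that recipe, which is not prescribed by the works cited above: the noise is scaled by a gain g so that \(10\log _{10}(P_s/(g^2P_n))\) equals the requested SNR, where \(P_s\) and \(P_n\) are the mean powers of s(t) and n(t). The function name is hypothetical.

```python
import numpy as np


def mix_at_snr(speech, noise, snr_db):
    """Return y = s + g * n (Eq. 9 with scaled noise) at the requested SNR in dB."""
    noise = noise[: len(speech)]            # assumes the noise is at least as long as the speech
    p_s = np.mean(speech ** 2)
    p_n = np.mean(noise ** 2)
    gain = np.sqrt(p_s / (p_n * 10.0 ** (snr_db / 10.0)))
    return speech + gain * noise


rng = np.random.default_rng(0)
s = rng.standard_normal(16000)   # stand-in for 1 s of clean speech at 16 kHz
n = rng.standard_normal(20000)   # stand-in for a noise recording
y = mix_at_snr(s, n, snr_db=5.0)
achieved = 10 * np.log10(np.mean(s ** 2) / np.mean((y - s) ** 2))
print(round(achieved, 6))   # 5.0
```

The pair (y, s) then serves as (input, target) for a denoising model that learns to predict s(t) from y(t).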

3 Speech separation and enhancement features

Speech enhancement and separation tools’ input features can be categorised into two groups:

1. Fourier spectrum features.
2. Time domain features.

3.1 Fourier spectrum features

Speech enhancement and separation tools that use these features do not work directly on the raw (time-domain) signal; rather, they incorporate the discrete Fourier transform (DFT) in their signal processing pipeline, mostly as the first step, to transform the time-domain signal into the frequency domain. These models recognise that speech signals are highly non-stationary and that their characteristics vary in both time and frequency, so time-frequency features extracted with the DFT better capture the representation of the speech signal (Portnoff 1980). To demonstrate the DFT process, we use the noisy speech signal shown in Eq. (10); the same process applies to speech separation.

$$\begin{aligned} y(t)=x(t)+n(t) \end{aligned}$$(10)

where x(t) and n(t) represent the discrete clean speech and noise, respectively, at time t. Since speech is assumed to be statistically stationary over short periods of time, it is analysed frame-wise using the DFT, as shown in Eq. (11) (Portnoff 1980; Allen 1982; Allen and Rabiner 1977).

Here, k is the index (frequency bin) of the discrete frequency, L is the length of the frequency analysis and w(n) is the analysis window. In speech analysis, the Hamming window is commonly used as w(n) (Paliwal et al. 2011). Once the DFT has been applied to the signal y(t), it is transformed into the time-frequency domain as:

$$\begin{aligned} Y[t,k]=X[t,k]+N[t,k] \end{aligned}$$(12)

Y[t, k], X[t, k] and N[t, k] are the DFT representations of the noisy speech, the clean speech and the noise, respectively. Each term in Eq. (12) can be expressed in terms of its DFT magnitude and phase spectra. For example, the polar form of the noisy signal Y[t, k] is:

$$\begin{aligned} Y[t,k]=|Y[t,k]|\,e^{j\angle {Y[t,k]}} \end{aligned}$$(13)

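|Y[t, k]| and \(\angle {Y[t,k]}\) are the magnitude and phase spectra of Y[t, k], respectively. Equation (13) can be written in Cartesian coordinates as shown in Eq. (14).

$$\begin{aligned} Y[t,k]=|Y[t,k]|\cos {(\angle {Y[t,k]})}+j\,|Y[t,k]|\sin {(\angle {Y[t,k]})} \end{aligned}$$(14)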

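Both the magnitude and the phase are computed from the real and imaginary parts of Y[t, k], i.e.,

$$\begin{aligned} |Y[t,k]|=\sqrt{\mathrm {Re}\{Y[t,k]\}^2+\mathrm {Im}\{Y[t,k]\}^2} \end{aligned}$$(15)$$\begin{aligned} \angle {Y[t,k]}=\arctan {\left( \frac{\mathrm {Im}\{Y[t,k]\}}{\mathrm {Re}\{Y[t,k]\}}\right) } \end{aligned}$$(16)

where the arctangent is evaluated over all four quadrants.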

All models that work with the Fourier spectrum features either use the DFT representations directly as the input of the model or further modify the DFT features. The features based on Fourier spectrum include:

1. Log-power spectrum features.
2. Mel-frequency spectrum features.
3. DFT magnitude features.
4. Complex DFT features.
5. Complementary features.

DFT magnitude features These are features where the mixed raw waveform y(t) is first converted into a time-frequency (TF) representation (spectrogram) using the DFT, specifically the short-time Fourier transform (STFT) (Eq. 11) (Natsiou and O’Leary 2021). The magnitude of the time-frequency representation (Eq. 13) acts as the input to a deep neural network (DNN) model for speech separation. The DNN model is then trained to separate the TF bins such that those belonging to each source speech are grouped together. DNN speech enhancement and separation models that exploit DFT magnitude features include Nossier et al. (2020a), Fu et al. (2018a), Grais and Plumbley (2018), Fu et al. (2019), Jansson et al. (2017) and Kim and Smaragdis (2015). DFT magnitude features require high frequency resolution and hence a long time window, typically more than 32 ms for speech (Isik et al. 2016; Kolbæk et al. 2017a) and more than 90 ms for music separation (Luo et al. 2017). As a result, these models must handle increased computational complexity (Baby et al. 2014). This has motivated other speech separation models to work with features of lower dimension than the DFT magnitude.
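A minimal NumPy sketch of the frame-wise DFT behind DFT magnitude features, assuming a 32 ms Hamming window (512 samples at 16 kHz) and 50% overlap; these values and the function name `stft` are illustrative. A 512-sample frame gives a bin spacing of 16000/512 = 31.25 Hz, which illustrates the trade-off above: finer frequency resolution requires a longer window.

```python
import numpy as np


def stft(y, frame_len, hop):
    """Frame-wise DFT with a Hamming analysis window (cf. Eq. 11).

    Returns Y[t, k] as a complex array of shape (num_frames, frame_len // 2 + 1);
    only the non-negative frequency bins of the real input are kept.
    """
    window = np.hamming(frame_len)
    num_frames = 1 + (len(y) - frame_len) // hop
    frames = np.stack([y[i * hop: i * hop + frame_len] * window for i in range(num_frames)])
    return np.fft.rfft(frames, axis=1)


fs = 16000
frame_len = 512                      # 32 ms at 16 kHz
hop = frame_len // 2                 # assumed 50% overlap
time = np.arange(fs) / fs
y = np.sin(2 * np.pi * 1000 * time)  # 1 kHz test tone standing in for speech
Y = stft(y, frame_len, hop)
magnitude = np.abs(Y)                # DFT magnitude features |Y[t, k]|
print(Y.shape)                       # (61, 257)
peak_bin = magnitude.mean(axis=0).argmax()
print(peak_bin * fs / frame_len)     # 1000.0
```

Each row of `magnitude` is one frame of the spectrogram that a magnitude-domain model would receive as input.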

DFT complex features Unlike DFT magnitude features, which only use the magnitude of the T-F representation, DFT complex features include both the magnitude and the phase of the noisy (mixed) speech signal in the estimation of the enhanced or separated speech. Each T-F unit of a complex feature is therefore a complex number with a real and an imaginary component (see Eq. 14). The magnitude and phase of a signal are computed according to Eqs. (15) and (16), respectively. Tools that use DFT complex features include Fu et al. (2017), Williamson and Wang (2017a) and Kothapally and Hansen (2022a, b).
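Complex features are often fed to a network as two real-valued channels holding the real and imaginary parts; that stacking convention is an assumption here. The sketch also computes the magnitude and phase of Eqs. (15) and (16), using the four-quadrant `np.arctan2`, and confirms that the polar form of Eq. (13) reconstructs the complex spectrum, including the phase information that magnitude-only features discard.

```python
import numpy as np

rng = np.random.default_rng(0)
# Stand-in for a complex STFT Y[t, k] with 10 frames and 257 bins.
Y = rng.standard_normal((10, 257)) + 1j * rng.standard_normal((10, 257))

# Complex features: real and imaginary parts as two input channels, shape (2, T, K).
complex_features = np.stack([Y.real, Y.imag], axis=0)

# Eqs. (15) and (16): magnitude and phase from the real and imaginary parts.
magnitude = np.sqrt(Y.real ** 2 + Y.imag ** 2)
phase = np.arctan2(Y.imag, Y.real)   # four-quadrant arctangent

# Eq. (13): the polar form recovers the complex spectrum, phase included.
reconstructed = magnitude * np.exp(1j * phase)
print(np.allclose(reconstructed, Y))   # True
```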

Mel-frequency cepstral coefficients (MFCC) features Given a mixed speech signal such as that in Eq. (10), MFCC features are extracted in the following steps:

1. Perform the DFT of the input noisy signal: \(DFT(y(t))= Y[t,k]=X[t,k]+N[t,k]\).
2. Given the DFT features Y[t, k] of the input signal, define a filterbank with M filters \(H_m[k]\), \(1\le m\le M\), where each \(H_m[k]\) is a triangular filter given by:

$$\begin{aligned} H_m[k]={\left\{ \begin{array}{ll} 0 &{} k< f[m-1] \\ \frac{2(k-f[m-1])}{(f[m+1]-f[m-1])(f[m]-f[m-1])} &{} f[m-1]\le k\le f[m]\\ \frac{2(f[m+1]-k)}{(f[m+1]-f[m-1])(f[m+1]-f[m])} &{} f[m]\le k \le f[m+1]\\ 0 &{} k> f[m+1] \end{array}\right. } \end{aligned}$$(17)

The filters compute the average spectrum around centre frequencies with increasing bandwidths, as shown in Fig. 5. Here, f[m] are boundary points that are uniformly spaced on the Mel scale, computed according to Eq. (18). The Mel scale B is given by Eq. (19), and its inverse \(B^{-1}\) is computed as shown in Eq. (20).

$$\begin{aligned} f[m]=\frac{N}{F_s}B^{-1}\left( B(f_l)+m\frac{B(f_h)-B(f_l)}{M+1}\right) \end{aligned}$$(18)

\(F_s\) is the sampling frequency, and \(f_l\) and \(f_h\) are the lowest and highest frequencies of the filterbank in Hz. N is the size of the DFT and M is the number of filters.

$$\begin{aligned} B(f)= & {} 1125\ln {\left( 1+\frac{f}{700}\right) } \end{aligned}$$(19)$$\begin{aligned} B^{-1}(b)= & {} 700\left( \exp {\left( \frac{b}{1125}\right) }-1\right) \end{aligned}$$(20)

3. Scale the power spectrum \(|Y[t,k]|^2\) of the noisy signal with the Mel filterbank \(H_m[k]\) and take the logarithm of each filter output:

$$\begin{aligned} X^{\prime }(m)=\log \left( \sum _{k=0}^{N-1}|Y[t,k]|^2H_m[k]\right) \end{aligned}$$(21)

for \(m=1,\ldots ,M\), where M is the number of filters.

4. Compute the Mel-frequency cepstral coefficients by taking the discrete cosine transform of the M log filter outputs, as shown in Eq. (22).

$$\begin{aligned} c[n]=\sum _{m=1}^{M}X^{\prime }(m)\cos {\left( \pi n(m-1/2)/M\right) } \end{aligned}$$(22)

where \(0\le n< M\).

Triangular filters used in the computation of the Mel-cepstrum using Eq. (18)

The motivation for working with MFCCs is that they provide a lower-resolution representation than DFT features. Fewer parameters are easier to learn and may generalise better to unseen speakers and noise (Baby et al. 2014). The challenge with working at a reduced resolution such as MFCC, however, is that the DNN-estimated features must be extrapolated back to the DFT feature space. Because the estimate lives in the reduced feature space, its degrees of freedom are restricted by the dimensionality of that space, which is much lower than that of the DFT space. The resulting low-rank approximation yields a sub-optimal Wiener filter that cannot account for all the added noise and reduces the signal-to-distortion ratio (SDR) (Baby et al. 2014). MFCC features have been exploited in tools such as Liu et al. (2022), Ueda et al. (2016), Du et al. (2020), Fu et al. (2018a), Weninger et al. (2014) and Donahue et al. (2018).
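The four MFCC steps can be sketched in NumPy as follows, following Eqs. (17) to (22) with filters indexed \(m=1,\ldots ,M\) and boundary points f[0] to f[M+1] expressed in (fractional) DFT-bin units. The choices of 26 filters, 13 coefficients and a 1e-10 floor inside the logarithm are common illustrative defaults rather than values from the cited works, and the function names are hypothetical. Only the non-negative frequency bins of a real signal are used; when \(f_h\le F_s/2\) the filters are zero above bin N/2, so the sum in Eq. (21) is unchanged.

```python
import numpy as np


def mel(f):
    """Eq. (19): B(f) = 1125 ln(1 + f / 700)."""
    return 1125.0 * np.log(1.0 + f / 700.0)


def mel_inv(b):
    """Eq. (20): B^{-1}(b) = 700 (exp(b / 1125) - 1)."""
    return 700.0 * (np.exp(b / 1125.0) - 1.0)


def mel_filterbank(num_filters, n_fft, fs, f_low, f_high):
    """Triangular filters of Eq. (17); row m - 1 holds H_m[k] for k = 0..n_fft // 2."""
    m = np.arange(num_filters + 2)                     # boundary indices 0..M+1
    # Eq. (18): boundary points f[m], uniformly spaced on the Mel scale, in DFT-bin units
    f = (n_fft / fs) * mel_inv(mel(f_low) + m * (mel(f_high) - mel(f_low)) / (num_filters + 1))
    k = np.arange(n_fft // 2 + 1)
    H = np.zeros((num_filters, k.size))
    for i in range(1, num_filters + 1):
        left, centre, right = f[i - 1], f[i], f[i + 1]
        rising = (k >= left) & (k <= centre)
        falling = (k >= centre) & (k <= right)
        H[i - 1, rising] = 2 * (k[rising] - left) / ((right - left) * (centre - left))
        H[i - 1, falling] = 2 * (right - k[falling]) / ((right - left) * (right - centre))
    return H


def mfcc(Y, H, num_ceps):
    """Eqs. (21) and (22) for a complex STFT Y of shape (T, n_fft // 2 + 1)."""
    log_mel = np.log(np.abs(Y) ** 2 @ H.T + 1e-10)       # X'(m); floor avoids log(0)
    M = H.shape[0]
    m = np.arange(1, M + 1)
    n = np.arange(num_ceps)[:, None]
    dct_basis = np.cos(np.pi * n * (m - 0.5) / M)        # cos(pi n (m - 1/2) / M)
    return log_mel @ dct_basis.T                         # (T, num_ceps)


fs, n_fft = 16000, 512
H = mel_filterbank(num_filters=26, n_fft=n_fft, fs=fs, f_low=0.0, f_high=fs / 2)
frames = np.random.default_rng(0).standard_normal((5, n_fft)) * np.hamming(n_fft)
Y = np.fft.rfft(frames, axis=1)
coeffs = mfcc(Y, H, num_ceps=13)
print(coeffs.shape)   # (5, 13)
```

Each 257-bin frame is reduced to 13 coefficients, which is the dimensionality reduction the paragraph above weighs against the loss of degrees of freedom.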

Log-power spectra features To compute these features, short-time Fourier analysis is applied to the raw signal by computing the DFT of each overlapping windowed frame (see Eq. 11), and the log-power spectra are then computed from the DFT output. Consider a noisy speech signal in the time-frequency domain, i.e., after the DFT has been applied (see Eq. 12). From Eq. (14), the power spectrum of the noisy signal can be represented as in Eq. (23).

$$\begin{aligned} |Y[k]|^2=|X[k]|^2+|N[k]|^2+2|X[k]||N[k]|\cos \theta \end{aligned}$$(23)

Here, \(\theta \) represents the angle between the two complex variables |X[k]| and |N[k]|. Most models that exploit log-power spectra features ignore the last term ( assume the value to be zero) and employ equation 24.
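The short check below confirms Eq. (23) numerically and computes the log-power feature \(\log |Y[k]|^2\) that is used as the DNN input. It uses synthetic random spectra rather than real speech, and the number of bins and the noise scale are arbitrary assumptions. The point is only to show that dropping the cross term (Eq. 24) is an approximation, and that it is exact only when \(\cos \theta =0\).

```python
import numpy as np

rng = np.random.default_rng(0)
K = 257  # number of one-sided DFT bins (assumed)

# Synthetic clean-speech and noise spectra X[k], N[k] (illustrative only)
X = rng.normal(size=K) + 1j * rng.normal(size=K)
N = 0.5 * (rng.normal(size=K) + 1j * rng.normal(size=K))
Y = X + N  # additive noise in the DFT domain

# Angle theta between the complex variables X[k] and N[k]
theta = np.angle(X) - np.angle(N)

exact = np.abs(X) ** 2 + np.abs(N) ** 2 + 2 * np.abs(X) * np.abs(N) * np.cos(theta)  # Eq. (23)
approx = np.abs(X) ** 2 + np.abs(N) ** 2  # Eq. (24): cross term dropped

# Log-power spectrum feature fed to the DNN (a small floor avoids log(0))
lps = np.log(np.abs(Y) ** 2 + 1e-12)
```

The difference between `approx` and `np.abs(Y) ** 2` is exactly the cross term that log-power-spectra models ignore.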

Figure 6 summarises the process of log-power feature extraction (Du and Huo 2008).

Examples of models that use log-power spectra features include Fu et al. (2017), Du and Huo (2008), Xu et al. (2015) and Du et al. (2014).

Complementary features Since different features capture different acoustic properties of the speech signal, some DNN models exploit a combination of features to perform speech separation. This is based on works such as Garau and Renals (2008) and Zolnay et al. (2007), which demonstrated that complementary features significantly improve performance in speech recognition. The complementary features used in Zolnay et al. (2007), Wang et al. (2013) and Williamson et al. (2016) include perceptual linear prediction, the amplitude modulation spectrogram (AMS), relative spectral transform and perceptual linear prediction (RASTA-PLP), Gammatone frequency cepstral coefficients, MFCC and pitch-based features. The complementary features are combined by concatenation. Williamson et al. (2016) report that complementary features yield better results than the DFT magnitude. The challenge with complementary features is how to combine them effectively, so that features that complement each other are retained while redundant ones are eliminated (Wang et al. 2013).

3.1.1 Supervised speech enhancement and separation training with Fourier spectrum features

DNN models trained via supervised learning on Fourier spectrum features use several strategies to learn how to estimate the clean signal from a noisy (mixed) signal. These strategies can be classified into three categories based on the target of the model.

-

1.

Spectral mapping techniques.

-

2.

Spectral masking techniques.

-

3.

Generative modelling.

3.1.1.1 Spectral mapping techniques

These models fit a nonlinear function to learn a mapping from a mixed signal feature to an estimated clean signal feature (see Fig. 7).

The training dataset of these models consists of pairs of noisy speech (source) and clean speech (target) features. The process of training these models can be generalised into the following steps:

-

1.

Convert the N raw waveforms of mixed (noisy) speech to the desired representation (such as a spectrogram).

-

2.

Convert the respective N clean speech waveforms in the time domain to the same representation as that of the noisy speech.

-

3.

Create an annotated dataset consisting of a pair of noisy speech features and that of clean speech i.e., \(<noisy\_speech\_features_i, clean\_speech\_features_i>\) with \(1 \le i\le N\)

-

4.

Train a deep learning model \(g_\theta \) to estimate the clean features \(clean\_speech\_features_i\) given the noisy speech features \(noisy\_speech\_features_i\) as input, by minimizing an objective function.

-

5.

Given new noisy speech features \(x_j\), the trained model \(g_{\theta }\) estimates the clean speech features \(y_j\).

-

6.

Using the estimated clean speech features \(y_j\), reconstruct its raw waveform by performing the inverse of the feature generation process (such as using the inverse short-time Fourier transform if the features are in time-frequency domain).

The above generalisation has been exploited in Fu et al. (2018a), Grais and Plumbley (2018), Kim and Smaragdis (2015), Xu et al. (2014a, 2015), Lu et al. (2013), Fu et al. (2016) and Gao et al. (2016) to achieve speech enhancement, and in Jansson et al. (2017) and Weninger et al. (2014) to perform speech separation and enhancement. Figure 8 summarises the steps when the time-frequency representation (spectrogram) of noisy speech is used as the input of the speech enhancement model.
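The six steps can be sketched end to end as below. To keep the example self-contained, it makes several simplifying assumptions that are not choices made in the cited works. A linear least-squares regressor stands in for the DNN \(g_\theta \), the paired data are synthetic random signals, and non-overlapping rectangular frames replace a proper windowed STFT.

```python
import numpy as np

rng = np.random.default_rng(1)
FRAME = 64  # samples per frame (assumed; no overlap, rectangular window)


def to_frames(x):
    return x[: len(x) // FRAME * FRAME].reshape(-1, FRAME)


def features(x):
    """Steps 1-2: magnitude spectrogram (frames x bins) and its phase."""
    spec = np.fft.rfft(to_frames(x), axis=1)
    return np.abs(spec), np.angle(spec)


# Hypothetical paired data: clean waveforms and noisy versions of them
clean = [np.cumsum(rng.normal(size=FRAME * 20)) * 0.05 for _ in range(8)]
noisy = [s + 0.3 * rng.normal(size=s.shape) for s in clean]

# Step 3: stack <noisy_features_i, clean_features_i> pairs frame by frame
Xtr = np.vstack([features(y)[0] for y in noisy])
Str = np.vstack([features(s)[0] for s in clean])

# Step 4: "train" g_theta by minimising the MSE (closed-form least squares)
W, *_ = np.linalg.lstsq(Xtr, Str, rcond=None)

# Step 5: estimate clean features for a new noisy utterance
test_clean = np.cumsum(rng.normal(size=FRAME * 20)) * 0.05
test_noisy = test_clean + 0.3 * rng.normal(size=test_clean.shape)
mag, phase = features(test_noisy)
est_mag = np.maximum(mag @ W, 0.0)  # magnitudes must be non-negative

# Step 6: inverse transform, reusing the noisy phase
est_wave = np.fft.irfft(est_mag * np.exp(1j * phase), n=FRAME, axis=1).ravel()
```

Reusing the noisy phase in step 6 mirrors the common practice discussed in Sect. 3.1.3. A DNN would replace the single matrix `W` with a non-linear mapping.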

3.1.1.2 Spectral masking techniques

Here, the task of estimating clean speech features from noisy (mixed) input features is formulated as predicting real-valued or complex-valued masks (Williamson and Wang 2017a). The mask is usually constrained to the range [0, 1], although different types of soft masks have been proposed (see Kolbæk et al. 2017a; Narayanan and Wang 2013; Erdogan et al. 2015; Nossier et al. 2020b). Mask-based source separation is predicated on the assumption that the sources are sparse and orthogonal in the domain in which the masks are computed (Cord-Landwehr et al. 2021). Under the sparsity assumption, the dominant signal in a given region (such as a time-frequency bin) is taken to be the only signal in that region, i.e., all other signals are ignored. The role of the DNN-estimated mask is then to identify the dominant source in each region. To do this, the mask is applied to the input features so that it eliminates portions of the signal (where the mask has a value of 0) while retaining others (where the mask has a value of 1) (Kjems et al. 2009; Wang 2008). Binary masks are typically established by comparing the signal-to-noise ratio (SNR) within each TF bin against a threshold, or local criterion (Kjems et al. 2009). It has been demonstrated experimentally that masks significantly improve speech intelligibility when the original speech is mixed with noise or a masker speech signal (Brungart et al. 2006; Nossier et al. 2020a). For deep learning models working in the time-frequency domain, a model \(g_{\theta }\) is designed so that, given a noisy or mixed speech spectrogram Y[t, n] at time frame t, it estimates the mask \(m_t\) at that time frame. The estimated mask \(m_t\) is then applied to the input spectrogram to estimate the target or denoised spectrogram, i.e., \(\hat{S}_t=m_t \otimes Y[t,n]\) (see Fig. 9). 
Here, \(\hat{S}_t\) is the spectrogram estimate of the clean speech at time frame t and \(\otimes \) denotes element-wise multiplication. There are two main variants of the objective used to train the model \(g_{\theta }\). The first minimizes an objective function D, such as the mean squared error (MSE), between the mask \(\hat{m}_t\) estimated by the model and the target mask (tm):

$$\begin{aligned} J=\sum _{u,t,f} D\left( \hat{m}(t,f),\, tm(t,f)\right) \end{aligned}$$

This approach, however, cannot effectively handle silent regions where \(|Y[t,n]|=0\) and \(|X[t,n]|=0\), because the target mask tm is undefined in those bins. For example, the ideal amplitude mask (IAM), defined as \(IAM(t,n)=\frac{|X[t,n]|}{|Y[t,n]|}\), divides |X[t, n]| by |Y[t, n]|, so silent regions make the target mask undefined (Kolbæk et al. 2017a). This cost function also minimizes the disparity between the masks rather than between the features of the estimated signal and those of the target clean signal (Kolbæk et al. 2017a). The second type of cost function directly minimizes the distance between the features of the estimated signal \(\hat{S}_t=m_t \otimes Y[t,n]\) and those of the target clean signal S, as shown in Eq. (25).

$$\begin{aligned} J=\sum _{u,t,f} D\left( \hat{m}(t,f)\,|Y_u(t,f)|,\; |S_u(t,f)|\right) \end{aligned}$$ (25)

The sum is over all speech signals u and time-frequency bins (t, f). Here, Y and S represent the noisy (mixed) and clean (target) speech, respectively. In short, rather than estimating the clean features directly from the noisy input features, masking-based DNN models first estimate masks, which are then applied to the noisy features to separate the sources (see Fig. 9, where the features are the TF spectrogram). This technique has been applied in Wang and Wang (2013), Isik et al. (2016), Weninger et al. (2014), Fu et al. (2016), Narayanan and Wang (2013), Chen et al. (2015), Huang et al. (2015), Hershey et al. (2016), Grais et al. (2014), Zhang and Wang (2016), Narayanan and Wang (2015), Weninger et al. (2015), Huang et al. (2011) and Liu and Wang (2019).
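The sketch below contrasts the two objectives on synthetic STFTs. It builds an ideal binary mask from the local SNR and a local criterion (0 dB here, an assumed value) and computes the ideal amplitude mask |S|/|Y|. It then shows that the mask-approximation loss becomes undefined in silent bins, whereas the signal-approximation loss of Eq. (25), with D taken to be the MSE, remains finite. The random "estimated" mask is a hypothetical stand-in for a DNN output.

```python
import numpy as np

rng = np.random.default_rng(2)
T, F = 50, 129  # frames x frequency bins (assumed sizes)

S = rng.normal(size=(T, F)) + 1j * rng.normal(size=(T, F))           # clean speech STFT
Nz = 0.7 * (rng.normal(size=(T, F)) + 1j * rng.normal(size=(T, F)))  # noise STFT
S[:5] = 0.0   # a silent segment in the clean speech ...
Nz[:5] = 0.0  # ... and in the noise, so |Y| = 0 there
Y = S + Nz

LC_DB = 0.0  # local criterion in dB (assumed value)
with np.errstate(divide="ignore", invalid="ignore"):
    # Ideal binary mask: keep a bin if its local SNR exceeds the local criterion
    snr_db = 10 * np.log10(np.abs(S) ** 2 / np.abs(Nz) ** 2)
    ibm = (snr_db > LC_DB).astype(float)  # NaN SNR in silent bins -> 0
    # Ideal amplitude mask |S|/|Y|: undefined (NaN) wherever |Y| = 0
    iam = np.abs(S) / np.abs(Y)


def mask_approximation_loss(est_mask, target_mask):
    # MSE between masks; NaN targets in silent bins make this ill-defined
    return np.mean((est_mask - target_mask) ** 2)


def signal_approximation_loss(est_mask, Y, S):
    # Eq. (25) with D = MSE: compare the masked mixture with the clean magnitude
    return np.mean((est_mask * np.abs(Y) - np.abs(S)) ** 2)


est = rng.uniform(size=(T, F))  # stand-in for a DNN's mask estimate
```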

3.1.1.3 Generative modelling

Given an observed sample x, the goal of a generative model is to model its true distribution p(x). The established model can then be used to generate new samples that are similar to the observed samples x. In speech separation and enhancement, these models have been exploited almost exclusively to perform speech denoising. They are required to generate clean speech from a noisy one while maintaining the vocal fingerprint of the noisy input speech, i.e., the models should not collapse to generating the same output even though the input is changing. Several generative models have been employed in a supervised manner to generate clean speech from a noisy one. A generative adversarial network (GAN) (Goodfellow 2016) is an unsupervised model that consists of two key parts: the generator \(\mathcal {G}\) and the discriminator \(\mathcal {D}\), where \(\mathcal {G}\) generates samples that are then judged by \(\mathcal {D}\). The generator \(\mathcal {G}\) generates synthetic data by sampling from a simple prior \(z \sim p(z) \) and then outputs a final sample \(g_{\theta }(z)\), where \(g_{\theta }\) is a non-linear function, typically a DNN. The discriminator \(\mathcal {D}\), on the other hand, must learn to classify synthetic data as fake and real data from p(x) as real. The training objective is shown in Eq. (26).

$$\begin{aligned} \min _{\mathcal {G}}\max _{\mathcal {D}}V(\mathcal {D},\mathcal {G})=\mathbb {E}_{x\sim p(x)}\left[ \log \mathcal {D}(x)\right] +\mathbb {E}_{z\sim p(z)}\left[ \log \left( 1-\mathcal {D}(\mathcal {G}(z))\right) \right] \end{aligned}$$ (26)

The objective function in Eq. (26) is maximised w.r.t. D(.) and minimised w.r.t. G(.). The objective of a GAN is therefore to learn a mapping from a noise vector z to an output x, i.e., \(G:z\rightarrow x\). In speech enhancement, the models seek to map a noisy speech \(x_c\) to a clean speech x while preserving the vocal fingerprints of the input noisy speech. For this reason, most GAN-based speech enhancement models use a conditioned GAN (Isola et al. 2017), i.e., \(G:(x_c,z)\rightarrow x\), as shown in the objective below.

$$\begin{aligned} \min _{\mathcal {G}}\max _{\mathcal {D}}V(\mathcal {D},\mathcal {G})=\mathbb {E}_{x,x_c}\left[ \log \mathcal {D}(x,x_c)\right] +\mathbb {E}_{x_c,z}\left[ \log \left( 1-\mathcal {D}(\mathcal {G}(x_c,z),x_c)\right) \right] \end{aligned}$$

The random noise z is added so that the generator does not produce deterministic outputs, which would prevent it from learning any distribution other than a delta function. To further improve the quality of the enhanced speech, GAN-based denoising models use the least squares GAN (LSGAN) (Mao et al. 2017). In the original GAN objective (Eq. 26), a generated sample that lies on the correct side of the decision boundary incurs almost no loss. LSGAN, in contrast, penalizes samples according to their distance from the decision boundary even when they lie on the correct side. As a result, LSGAN generates speech that maintains the vocal fingerprints of the noisy input speech. CGAN (Donahue et al. 2018) uses a conditioned GAN that accepts input in the T-F domain to generate denoised speech. Since most automatic speech recognition (ASR) tools work in the T-F domain, CGAN hypothesises that a generative model working in the T-F domain will be more robust for ASR than one working on the raw waveform. CGAN can therefore be seen as a speech enhancement tool targeting ASR. To address the mismatch between the training objective used in CGAN and the evaluation metrics, MetricGAN (Fu et al. 2019) integrates the evaluation metric into the discriminator. Instead of outputting discrete false (0) or true (1) labels, the discriminator predicts continuous values based on the evaluation metric, so the generator can be trained to produce speech according to the selected metric score. Through this modification, MetricGAN produces more robust enhanced speech. Both models use a conditioned GAN to preserve the vocal fingerprints of the input speech. Another common group of generative models is the variational auto-encoder (VAE) (Kingma and Welling 2014). Like GAN, VAE is mainly used for denoising, where the mixture is modelled as:

$$\begin{aligned} x_{fn}=\sqrt{g_n}\,s_{fn}+b_{fn} \end{aligned}$$ (27)

Here, \(x_{fn}\) denotes the mixture at frequency index f and time-frame index n, \(g_n \in R_{+}\) is a frequency-independent but frame-dependent gain, and \(s_{fn}\) and \(b_{fn}\) represent the clean speech and the noise, respectively, at frequency index f and time-frame index n. We first give a brief overview of the VAE before discussing how it is adapted for speech enhancement. Mathematically, given an observed sample s, the goal of a generative VAE model is to model the true data distribution p(s). To do this, the VAE assumes that each observed sample s is generated from an associated latent variable z, with joint distribution p(s, z). The model therefore seeks to maximise the likelihood p(s) over all observed data:

$$\begin{aligned} p(s)=\int p(s,z)\,dz \end{aligned}$$

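Integrating out all the latent variables z in the above equation is intractable. However, the evidence lower bound (ELBO), which lower-bounds the log-likelihood of the observed data, can be maximised instead of log p(s). The ELBO is given in Eq. (28) (refer to Lu et al. (2022) for a derivation of the relationship between p(s) and the ELBO).

$$\begin{aligned} \log p(s)\ge \mathrm {ELBO}=\mathbb {E}_{q_\phi (z\mid s)}\left[ \log \frac{p(s,z)}{q_\phi (z\mid s)}\right] \end{aligned}$$ (28)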

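Here, \(q_\phi (z\mid s)\) is a flexible variational distribution whose parameters \(\phi \) are optimised to maximise the ELBO. Using the product rule \(p(s,z)=p_\theta (s\mid z)p(z)\), Eq. (28) can be written as Eq. (29).

$$\begin{aligned} \mathrm {ELBO}=\mathbb {E}_{q_\phi (z\mid s)}\left[ \log \frac{p_\theta (s\mid z)p(z)}{q_\phi (z\mid s)}\right] \end{aligned}$$ (29)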

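Equation (29) can be expanded as:

$$\begin{aligned} \mathrm {ELBO}=\mathbb {E}_{q_\phi (z\mid s)}\left[ \log p_\theta (s\mid z)\right] +\mathbb {E}_{q_\phi (z\mid s)}\left[ \log \frac{p(z)}{q_\phi (z\mid s)}\right] \end{aligned}$$ (30)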

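The second expectation in Eq. (30) is a negative Kullback-Leibler (KL) divergence, so the ELBO can be rewritten as:

$$\begin{aligned} \mathrm {ELBO}=\mathbb {E}_{q_\phi (z\mid s)}\left[ \log p_\theta (s\mid z)\right] -D_{KL}\left( q_\phi (z\mid s)\,\Vert \,p(z)\right) \end{aligned}$$ (31)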

The second term on the right of Eq. (31) regularises the approximate posterior \(q_\phi (z\mid s)\) towards the prior p(z), while the first term reconstructs the data from the learned latent variable z. \(q_\phi (z\mid s)\) is typically modelled by a DNN referred to as the encoder, and the reconstruction term by another DNN referred to as the decoder. The encoder and decoder are trained simultaneously. The encoder is normally chosen to model a multivariate Gaussian with diagonal covariance, and the prior is often selected to be a standard multivariate Gaussian:

$$\begin{aligned} q_\phi (z\mid s)=\mathcal {N}\left( z;\mu _\phi (s),\mathrm {diag}(\delta _\phi (s))\right) ,\qquad p(z)=\mathcal {N}(z;0,I) \end{aligned}$$

To estimate clean speech with a pre-trained variational auto-encoder, existing tools follow several steps that can be generalised as follows:

-

1.

Train a model that maximises the likelihood \(p_\theta (s\mid z)\). Here, s denotes the clean speech dataset composed of F-dimensional samples, i.e., \(s_t\in R^F, 1 \le t\le T\). The variational autoencoder assumes a D-dimensional latent variable \(z_t\in R^D\). The latent variable \(z_t\) and the clean speech \(s_t\) have the following distributions:

$$\begin{aligned} z_t&\sim \mathcal {N}(0,I_D)\\ s_t&\sim p_\theta (s_t\mid z_t) \end{aligned}$$

Here, \(\mathcal {N}(\mu ,\delta )\) denotes a Gaussian distribution with mean \(\mu \) and variance \(\delta \). In other words, a decoder \(p_\theta (s_t\mid z_t)\), parameterized by \(\theta \) and learned by a deep learning model during training, generates the clean speech \(s_t\) given the latent variable \(z_t\). The encoder is trained to estimate the posterior \(q_\phi (z_t\mid s_t)\) using a DNN. The overall objective of variational auto-encoder training is to maximise Eq. (32).

$$\begin{aligned} \theta ^{*},\phi ^{*}=\mathop {\mathrm {argmax}}_{\theta ,\phi }\left[ \frac{1}{L}\sum _{i=1}^{L} \log p_\theta (s\mid z^{i}) - D_{KL}\left( q_\phi (z\mid s)\,\Vert \,p(z)\right) \right] ,\quad z^{i}\sim q_\phi (z\mid s) \end{aligned}$$ (32)

The posterior estimator \(q_\phi (z\mid s)\) is a Gaussian distribution with parameters \(\mu _d\) and \(\delta _d\) for each latent dimension d. These parameters are produced by the encoder deep neural network such that \(\mu _d:R^F\rightarrow R\) and \(\delta _d:R^F\rightarrow R_+\).

-

2.

Set up a noise model using unsupervised techniques such as NMF (Hien et al. 2015). For example, with NMF the noise \(b_{fn}\) in Eq. (27) can be modelled as

$$\begin{aligned} b_{fn}\sim \mathcal {N}\left( 0,(W_bH_b)_{f,n}\right) \end{aligned}$$ (33)

where \(\mathcal {N}(0,\delta )\) is a Gaussian distribution with zero mean and variance \(\delta \), and \(W_b\) and \(H_b\) are the non-negative NMF factors of the noise.

-

3.

Set up a mixture model such that \(p(x\mid z,\theta _s,\theta _u)\) is maximised. Here, x is the noisy speech signal, \(\theta _s=\{\phi ,\theta \}\) are the parameters of the model pre-trained in step 1, and \(\theta _u=\{g_n,W_b,H_b\}\) are the parameters to be optimised. The parameters \(\theta _u\) are optimised with an appropriate Bayesian inference technique.

-

4.

Reconstruct the estimated clean speech \(\hat{s}\) such that \(p(\hat{s}\mid \theta _u,\theta _s,x)\) is maximised, based on the parameters \(\theta _s\) from step 1, the parameters \(\theta _u\) from step 3 and the observed mixed speech x.
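The steps above can be illustrated with the simplified NumPy sketch below, which rests on several assumptions. Step 1 assumes a unit-variance Gaussian decoder for the ELBO. The mixture model is taken to be \(x_{fn}=\sqrt{g_n}s_{fn}+b_{fn}\), with zero-mean circular complex Gaussian speech and noise. Step 2 uses Lee-Seung multiplicative updates for the Euclidean NMF cost; the cited works may use other divergences and inference schemes. Random arrays stand in for the trained decoder's speech variance and for the gains estimated in step 3, so the example shows the shape of the computation rather than a working enhancer. Under these assumptions, the posterior mean of the gain-scaled speech in step 4 is a Wiener filter.

```python
import numpy as np

rng = np.random.default_rng(4)


# Step 1 (training objective): closed-form KL term of Eqs. (31)-(32) for a
# diagonal Gaussian encoder and a standard Gaussian prior
def kl_to_standard_normal(mu, var):
    return 0.5 * np.sum(mu ** 2 + var - 1.0 - np.log(var))


def elbo_estimate(s, mu, var, decode, num_samples=16):
    """Monte Carlo ELBO with a unit-variance Gaussian decoder (an assumption)."""
    recon = 0.0
    for _ in range(num_samples):
        z = mu + np.sqrt(var) * rng.normal(size=mu.shape)  # reparameterisation trick
        recon += -0.5 * np.sum((s - decode(z)) ** 2 + np.log(2 * np.pi))
    return recon / num_samples - kl_to_standard_normal(mu, var)


# Step 2: NMF noise model, fitting (W_b H_b)_{f,n} with Lee-Seung updates (Euclidean cost)
def nmf_euclidean(V, rank, iters=200, eps=1e-12):
    W = rng.uniform(0.1, 1.0, size=(V.shape[0], rank))
    H = rng.uniform(0.1, 1.0, size=(rank, V.shape[1]))
    errors = []
    for _ in range(iters):
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
        errors.append(np.linalg.norm(V - W @ H))
    return W, H, errors


F, N, K = 64, 40, 5  # frequency bins, frames, NMF rank (assumed)
noise_power = rng.gamma(2.0, 1.0, size=(F, N))
W_b, H_b, errs = nmf_euclidean(noise_power, K)
noise_var = W_b @ H_b

# Hypothetical stand-ins for the decoder's speech variance and the gains g_n (step 3)
speech_var = rng.gamma(2.0, 1.0, size=(F, N))
g = rng.uniform(0.5, 2.0, size=N)

# Step 4: the posterior mean of sqrt(g_n) s_fn given x_fn is a Wiener filter
x = rng.normal(size=(F, N)) + 1j * rng.normal(size=(F, N))  # observed mixture STFT
scaled_speech_var = g[None, :] * speech_var
wiener_gain = scaled_speech_var / (scaled_speech_var + noise_var)
s_hat = wiener_gain * x
```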

Works that exploit different versions of the variational auto-encoder technique include Leglaive et al. (2018, 2019, 2020) and Bando et al. (2018). Another generative modelling technique that has been used in speech enhancement is the variational diffusion model (VDM) (Sohl-Dickstein et al. 2015). A VDM is composed of two processes: the diffusion process and the reverse process. The diffusion process perturbs data into noise, and the reverse process seeks to recover data from noise. The goal of diffusion is therefore to transform a given data distribution into a simple prior distribution, usually a standard Gaussian, while the reverse process recovers data by learning a decoder parameterised by a DNN. Formally, representing true data samples and latent variables as \(x_t\), where \(t=0\) represents true data and \(1\le t\le T\) represents a sequence of latent variables, the VDM posterior is represented as:

$$\begin{aligned} q(x_{1:T}\mid x_0)=\prod _{t=1}^{T}q(x_t\mid x_{t-1}) \end{aligned}$$ (34)

Unlike that of the VAE, the VDM encoder \(q(x_t\mid x_{t-1})\) is not learned; rather, it is a predefined linear Gaussian model. The Gaussian encoder is parameterized with mean \(\mu _t(x_{t-1})=\sqrt{\alpha _t}x_{t-1}\) and variance \(\Sigma _t=(1-\alpha _t)I\). Therefore, the encoder \(q(x_t\mid x_{t-1})\) can mathematically be represented as

$$\begin{aligned} q(x_t\mid x_{t-1})=\mathcal {N}\left( x_t;\sqrt{\alpha _t}x_{t-1},(1-\alpha _t)I\right) \end{aligned}$$ (35)

\(\alpha _t\) evolves over time such that the final latent distribution \(p(x_T)\) is a standard Gaussian. The reverse process trains a decoder that starts from the standard Gaussian distribution \(p(x_T)\). Formally, the reverse process can be represented as:

$$\begin{aligned} p(x_{0:T})=p(x_T)\prod _{t=1}^{T}p_\theta (x_{t-1}\mid x_t) \end{aligned}$$ (36)

Here, \(p(x_T)=\mathcal {N}(x_T; 0,I)\). The reverse process learns the parameters \(\theta \) of the decoder conditionals \(p_\theta (x_{t-1}\mid x_t)\). Once the VDM is optimized, a sample from the Gaussian noise \(p(x_T)\) can be iteratively denoised through the transitions \(p_\theta (x_{t-1}\mid x_t)\) for T steps to generate a simulated \(x_0\). Using the reparameterization trick, a sample \(x_t\sim q(x_t\mid x_{t-1})\) can be rewritten as:

$$\begin{aligned} x_t=\sqrt{\alpha _t}x_{t-1}+\sqrt{1-\alpha _t}\,\epsilon \end{aligned}$$ (37)

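where \(\epsilon \sim \mathcal {N}(\epsilon ;0,I)\). Similarly,

$$\begin{aligned} x_{t-1}=\sqrt{\alpha _{t-1}}x_{t-2}+\sqrt{1-\alpha _{t-1}}\,\epsilon ^{\prime } \end{aligned}$$ (38)

where \(\epsilon ^{\prime }\sim \mathcal {N}(0,I)\) is drawn independently of \(\epsilon \).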

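Based on this, and by applying the reparameterization recursively from \(x_t\) back to \(x_0\), it can be shown that:

$$\begin{aligned} x_t=\sqrt{\bar{\alpha }_t}\,x_0+\sqrt{1-\bar{\alpha }_t}\,\epsilon ,\qquad \bar{\alpha }_t=\prod _{i=1}^{t}\alpha _i \end{aligned}$$ (39)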

In the reverse process of Eq. (36), the transition probability \(p_\theta (x_{t-1}\mid x_t)\) can be represented by two parameters \(\mu _\theta \) and \(\delta _\theta \) as \(\mathcal {N}(x_{t-1}; \mu _\theta (x_t,t),\delta _\theta (x_t,t)^2I)\), with \(\theta \) being the learnable parameters. Luo (2022) shows that \(\mu _\theta (x_t,t)\) can be established as:

$$\begin{aligned} \mu _\theta (x_t,t)=\frac{1}{\sqrt{\alpha _t}}x_t-\frac{1-\alpha _t}{\sqrt{1-\bar{\alpha }_t}\sqrt{\alpha _t}}\,\epsilon _\theta (x_t,t) \end{aligned}$$ (40)

Based on Eq. (40), to estimate \(\mu _\theta (x_t,t)\) the DNN \(\epsilon _\theta (x_t,t)\) needs to estimate the Gaussian noise \(\epsilon \) in \(x_t\) that was injected during the diffusion process. Like the VAE, the VDM uses the ELBO objective for optimization; see Luo (2022) for a thorough discussion of VDMs. In speech denoising, Lu et al. (2022) use a conditional diffusion process to model the encoder. Instead of \(q(x_t\mid x_{t-1})\), the conditional encoder is defined as \(q(x_t\mid x_0,y)=\mathcal {N}(x_t;(1-m_t)\sqrt{\bar{\alpha }_t}x_0+m_t\sqrt{\bar{\alpha }_t}y,\delta _tI)\), where \(x_0\) and y represent the clean speech and the noisy speech, respectively. The encoder mean is therefore a linear interpolation between the clean speech \(x_0\) and the noisy speech y, with interpolation ratio \(m_t\). The reverse process is also modified to \(p_\theta (x_{t-1}\mid x_t,y)=\mathcal {N}(x_{t-1};\mu _\theta (x_t,y,t),\delta I)\), where \(\mu _\theta (x_t,y,t)\) is the mean of the conditional reverse process. Similar to Eq. (40), \(\mu _\theta (x_t,y,t)\) is estimated as

$$\begin{aligned} \mu _\theta (x_t,y,t)=c_{xt}x_t+c_{yt}y-c_{\epsilon t}\,\epsilon _\theta (x_t,y,t) \end{aligned}$$ (41)

where \(\epsilon _\theta (x_t,y,t)\) is a DNN model to estimate the combination of Gaussian and non-Gaussian noise. The coefficients \(c_{xt}\), \(c_{yt}\) and \(c_{\epsilon t}\) are established via the ELBO optimization.
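The sketch below checks the diffusion formulas numerically. It assumes a linear schedule for \(1-\alpha _t\), running from 1e-4 to 0.05 over T = 50 steps. It verifies three things. First, iterating the one-step encoder yields the marginal variance \(1-\bar{\alpha }_t\) of Eq. (39). Second, Eq. (40) evaluated with the true injected noise recovers the mean of the posterior \(q(x_{t-1}\mid x_t,x_0)\). Third, it implements the interpolated conditional mean used by Lu et al. (2022). The schedule and array sizes are illustrative choices, not those of the cited work.

```python
import numpy as np

rng = np.random.default_rng(5)
T = 50
betas = np.linspace(1e-4, 0.05, T)  # assumed linear schedule for 1 - alpha_t
alphas = 1.0 - betas                # alpha_t for t = 1..T (stored at index t-1)
alpha_bar = np.cumprod(alphas)      # \bar{alpha}_t = prod_{i<=t} alpha_i


def q_sample(x0, t, eps):
    """Eq. (39): x_t = sqrt(alpha_bar_t) x_0 + sqrt(1 - alpha_bar_t) eps (t is 1-based)."""
    return np.sqrt(alpha_bar[t - 1]) * x0 + np.sqrt(1.0 - alpha_bar[t - 1]) * eps


def marginal_variance(num_steps):
    """Variance of x_t given x_0 obtained by iterating Eq. (37) step by step."""
    var = 0.0
    for a in alphas[:num_steps]:
        var = a * var + (1.0 - a)
    return var


def mu_from_eps(x_t, t, eps_hat):
    """Eq. (40): reverse-process mean from a noise estimate eps_hat."""
    a, ab = alphas[t - 1], alpha_bar[t - 1]
    return (x_t - (1.0 - a) / np.sqrt(1.0 - ab) * eps_hat) / np.sqrt(a)


def posterior_mean(x_t, x0, t):
    """Mean of the true posterior q(x_{t-1} | x_t, x_0), valid for t >= 2."""
    a, ab, ab_prev = alphas[t - 1], alpha_bar[t - 1], alpha_bar[t - 2]
    return (np.sqrt(ab_prev) * (1 - a) * x0 + np.sqrt(a) * (1 - ab_prev) * x_t) / (1 - ab)


def conditional_q_mean(x0, y, t, m_t):
    """Conditional encoder mean: interpolate between clean x0 and noisy y with ratio m_t."""
    return np.sqrt(alpha_bar[t - 1]) * ((1 - m_t) * x0 + m_t * y)


x0 = rng.normal(size=1000)  # stand-in "clean speech" samples
eps = rng.normal(size=1000)
t = 20
x_t = q_sample(x0, t, eps)
```

In training, the true `eps` is unknown at inference time; the DNN \(\epsilon _\theta \) learns to predict it, which is why Eq. (40) is written in terms of a noise estimate.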

3.1.2 Highlights on Fourier spectrum features

-

1.

When performing a DFT on the input signal, an appropriate window length must be selected. The choice of window directly affects the frequency resolution and the latency of the system. To achieve good performance, most systems use a window of about 32 ms, which may limit the use of DFT-based models in environments that require low latency (Luo and Mesgarani 2018).

-

2.

The DFT is a generic signal transformation that may not be optimal for waveform transformation in speech separation and enhancement. It is therefore important to establish to what extent it places an upper bound on the performance of speech enhancement techniques.

-

3.

Accurately reconstructing the clean speech from the estimated features is difficult, and reconstruction errors place an upper bound on the quality of the reconstructed audio.

-

4.

Perhaps the biggest challenge when working in the frequency domain is how to handle the phase. Most DNN models use only the magnitude spectrum of the noisy signal to train the DNN and then reuse the phase of the noisy signal during reconstruction. Works such as Paliwal et al. (2011) have shown that this technique does not generate optimal results.

-

5.

While working in the frequency domain, experimental research has demonstrated that spectral masking generates better results in terms of enhanced speech quality as compared to the spectral mapping method (Nossier et al. 2020a).

3.1.3 Handling of phase in frequency domain

Most DNN models that use Fourier spectrum features assume that phase information is not crucial for human auditory perception. They therefore exploit only the magnitude or power of the input speech to train the DNN models to learn the magnitude spectrum of the clean signal, and factor in the noisy phase during reconstruction of the signal (see Fig. 10) (Xu et al. 2014a; Kumar and Florencio 2016; Du and Huo 2008; Tu et al. 2014; Li et al. 2017). Using the phase of the noisy signal to estimate the clean signal is based on works such as Ephraim and Malah (1984), which demonstrated that the optimal estimator of the clean signal's phase is the phase of the noisy signal. Further, most speech separation models work on frames of 20 to 40 ms and assume that the short-time phase contains little information (Lim and Oppenheim 1979; Oppenheim and Lim 1981; Vary and Eurasip 1985; Wang and Lim 1982) and is therefore not crucial when estimating clean speech. However, Paliwal et al. (2011) demonstrated through experiments that further improvements in the quality of the estimated clean speech can be attained by processing both the short-time phase and magnitude spectra. Moreover, factoring in the noisy input phase during reconstruction has been noted to be a problem. The phase errors in the input interact with the amplitude of the estimated clean signal, causing it to differ from the amplitude of the actual clean signal (Erdogan et al. 2015; Han et al. 2015). Having recognised the importance of phase, some studies avoid reusing the phase of the noisy signal and instead exploit a modified phase to estimate the clean signal. The existing techniques for modifying the phase in DNN models working on Fourier spectrum features can be categorised into two:

Phase learning These models make the phase part of the objective function, i.e., they learn the phase of the estimated clean signal during training. To integrate the phase into the learning process, works such as Erdogan et al. (2015) and Kolbæk et al. (2017a) use a phase-sensitive objective, replacing Eq. (42) with Eq. (43). This phase-sensitive spectrum approximation objective minimises the distance between the complex spectrum of the estimated speech and that of the target clean speech.

$$\begin{aligned} J=\sum _{u,t,f} D\left( \hat{m}(t,f)\,|Y_u(t,f)|,\; |S_u(t,f)|\right) \end{aligned}$$ (42)

$$\begin{aligned} J=\sum _{u,t,f} D\left( \hat{m}(t,f)\,|Y_u(t,f)|,\; |S_u(t,f)|\cos (\theta _y-\theta _s)\right) \end{aligned}$$ (43)

Here, D is a selected objective function such as the MSE, and \(\theta _y\) and \(\theta _s\) represent the phase of the noisy and clean (target) speech, respectively. The sum is over all speech signals u and time-frequency bins (t, f). Experiments based on the objective function in Eq. (43) show superior results in terms of signal-to-distortion ratio (SDR) (Erdogan et al. 2015). Williamson et al. (2016) train a DNN model to generate masks composed of both a real and an imaginary part (see Eq. 44). The complex mask is then applied to a complex representation of the noisy signal to generate the estimated clean signal. By learning a mask with both real and imaginary parts, they integrate the phase into the learning.

$$\begin{aligned} J=\frac{1}{2N}\sum _{t}\sum _{f}\left[ \left( O_r(t,f)-M_r(t,f)\right) ^2+\left( O_i(t,f)-M_i(t,f)\right) ^2\right] \end{aligned}$$ (44)

\(O_r\) is the real part of the mask estimated by the DNN model and \(O_i\) is the imaginary part. \(M_r\) is the real part of the target mask and \(M_i\) is the imaginary part. N is the number of frames and (t, f) is a given TF bin. The complex mask has been exploited in Williamson and Wang (2017a), Erdogan et al. (2015) and Lee et al. (2017), where the targets are formulated in the complex coordinate system, i.e., the magnitude and phase are both part of the learning process. Wang et al. (2018a) propose a model that learns the phase during training via the multiple input spectrogram inversion (MISI) algorithm (Gunawan and Sen 2010). Ai et al. (2021) propose a generative adversarial network (GAN) (Goodfellow 2016) based technique for learning the phase during training. Other works that learn the phase during training include Wang et al. (2019) and Le Roux et al. (2019). Techniques that include the phase in training face the difficulty of processing a phase spectrogram, which is randomly distributed and highly unstructured (Zheng and Zhang 2019). To mitigate this problem and derive highly structured phase-aware target masks, Zheng and Zhang (2019) employ instantaneous frequency (IF) (Friedman 1985) to extract structured patterns from phase spectrograms.
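The following NumPy sketch works on synthetic STFTs. It computes the phase-sensitive target \(|S|\cos (\theta _y-\theta _s)\) from Eq. (43) and the real and imaginary parts of a complex ideal ratio mask \(M=S/Y\), together with an Eq. (44)-style loss. The array sizes and noise level are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(6)
shape = (40, 129)  # frames x bins (assumed)
S = rng.normal(size=shape) + 1j * rng.normal(size=shape)                  # clean STFT
Y = S + 0.5 * (rng.normal(size=shape) + 1j * rng.normal(size=shape))      # noisy STFT

# Phase-sensitive target of Eq. (43): |S| cos(theta_y - theta_s)
theta_y, theta_s = np.angle(Y), np.angle(S)
psa_target = np.abs(S) * np.cos(theta_y - theta_s)

# Complex ideal ratio mask M = S / Y, written with real and imaginary parts
den = Y.real ** 2 + Y.imag ** 2
M_r = (Y.real * S.real + Y.imag * S.imag) / den
M_i = (Y.real * S.imag - Y.imag * S.real) / den


def complex_mask_loss(O_r, O_i, M_r, M_i):
    """Eq. (44)-style loss: squared error on real and imaginary parts, over 2N."""
    n_frames = O_r.shape[0]
    return np.sum((O_r - M_r) ** 2 + (O_i - M_i) ** 2) / (2 * n_frames)
```

The phase-sensitive target is never larger than |S|. It shrinks towards zero, or becomes negative, in bins where the noisy phase deviates strongly from the clean phase, which is how phase errors enter a magnitude-only objective.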

Post-processing phase update Models that use this technique train the DNN using only the magnitude spectrum. Once the model has been trained to estimate the magnitude spectrum of the clean signal, they iteratively update the phase of the noisy signal to bring it as close as possible to that of the target clean signal. The algorithm exploited by these models is based on the Griffin-Lim algorithm (Griffin and Lim 1984). For example, Han et al. (2015) exploit the magnitude \(X^0\) of the target clean signal to iteratively obtain an optimal phase \(\phi \), starting from the phase of the noisy signal (see Algorithm 1). The obtained phase is then used, together with the magnitude \(\hat{X}\) estimated by the DNN, to reconstruct the estimated clean signal. The technique is also used in Zhao et al. (2019). Techniques that implement the Griffin-Lim algorithm, such as Algorithm 1, reconstruct the phase of each source independently. They may therefore not be effective for multiple-source separation, where the sources must sum to the mixture (Wang et al. 2018a). Wang et al. (2018a) therefore propose to jointly reconstruct the phases of all sources in a given mixture from their estimated magnitudes and the noisy phase, using the multiple input spectrogram inversion (MISI) algorithm (Gunawan and Sen 2010). They ensure that the reconstructed time-domain signals sum to the mixture signal after each iteration. Li et al. (2016) and Choi et al. (2020) also use post-processing to update the phase of the noisy signal.
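A generic Griffin-Lim loop in the spirit of the post-processing phase update is sketched below; it is not a transcription of Algorithm 1. The sketch assumes a Hamming-windowed STFT with a 256-sample frame and 64-sample hop, a least-squares overlap-add inverse, and synthetic "clean" and "noisy" signals. The noisy phase initialises the iterations while the clean magnitude X0 is kept fixed.

```python
import numpy as np

N_FFT, HOP = 256, 64
WINDOW = np.hamming(N_FFT)  # no zero end points, so every sample has a non-zero weight


def stft(x):
    n_frames = 1 + (len(x) - N_FFT) // HOP
    frames = np.stack([x[i * HOP : i * HOP + N_FFT] * WINDOW for i in range(n_frames)])
    return np.fft.fft(frames, axis=1)  # full spectrum, one row per frame


def istft(Z, length):
    """Least-squares inverse: windowed overlap-add divided by the summed squared window."""
    x = np.zeros(length)
    norm = np.zeros(length)
    for i, frame in enumerate(np.fft.ifft(Z, axis=1).real):
        x[i * HOP : i * HOP + N_FFT] += WINDOW * frame
        norm[i * HOP : i * HOP + N_FFT] += WINDOW ** 2
    return x / norm


def griffin_lim(target_mag, init_phase, length, iters=50):
    """Keep the target magnitude and iteratively replace the phase with a consistent one."""
    phase = init_phase
    errors = []
    for _ in range(iters):
        x = istft(target_mag * np.exp(1j * phase), length)
        Z = stft(x)
        errors.append(np.linalg.norm(np.abs(Z) - target_mag))
        phase = np.angle(Z)
    return x, errors


rng = np.random.default_rng(7)
length = N_FFT + 60 * HOP
clean = np.sin(2 * np.pi * 0.01 * np.arange(length)) + 0.1 * rng.normal(size=length)
noisy = clean + 0.5 * rng.normal(size=length)
X0 = np.abs(stft(clean))  # target magnitude
x_hat, errs = griffin_lim(X0, np.angle(stft(noisy)), length)
```

Because the inverse is a least-squares projection onto consistent STFTs, the magnitude error in `errs` does not increase from one iteration to the next. This monotonicity is the property that makes the iterative phase update well behaved.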

3.2 Time-domain features

Due to the challenges of working in the time-frequency domain highlighted in Sect. 3.1.2, models such as Luo and Mesgarani (2018), Luo et al. (2020), Luo and Mesgarani (2019), Venkataramani et al. (2018), Zhang et al. (2020a), Subakan et al. (2021), Tzinis et al. (2020a), Tzinis et al. (2020b), Kong et al. (2022), Su et al. (2020), Lam et al. (2021a) and Lam et al. (2021b) explore the idea of designing a deep learning model for speech separation that accepts the speech signal in the time domain. The fundamental concept of these models is to replace the DFT-based input with a data-driven representation that is jointly learned during model training. The models therefore accept the mixed raw waveform as input and generate either the estimated clean sources or masks that are applied to the noisy waveform to generate the clean sources. By working on the raw waveform, these models address two key limitations of DFT-based models. First, they are designed to fully learn the magnitude and phase information of the input signal during training (Luo et al. 2020). Second, they avoid the reconstruction challenges faced when working with DFT features. The time-domain methods can broadly be classified into two categories (Luo et al. 2020).

3.2.1 Adaptive front-end based method

The models in this category can roughly be described as composed of three key modules: the encoder, the separation module and the decoder (see Fig. 11).

-

1.

Encoder The encoder can be regarded as an adaptive front-end that replaces the STFT with a differentiable transform that is jointly trained (learned) with the separation model. It accepts a time-domain mixture signal as input and learns an STFT-like representation (Subakan et al. 2021; Kong et al. 2022). By working directly with the time-domain signal, these models avoid decoupling the magnitude and phase of the input signal (Luo and Mesgarani 2019). Most systems employ a 1-D convolution as the encoder to learn the features of the input signal. The transform generated by the encoder is then passed to the separation module. Kavalerov et al. (2019) demonstrate that bases learned from raw data produce better results for speech/non-speech separation.

-

2.

Separation module This module is fed by the output of the encoder. It implements techniques to identify the different sources present in the input signal.

-

3.

Decoder It accepts input from the separation module and sometimes from the encoder (for residual implementations). It is mostly implemented as an inverse of the encoder in order to reconstruct the separated signals (Luo and Mesgarani 2018, 2019; Subakan et al. 2021).
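To make the encoder/separation/decoder pipeline concrete, the sketch below implements a strided 1-D convolutional encoder with ReLU and a transposed-convolution (overlap-add) decoder in NumPy. Several elements are assumptions: the filter length of 16, the stride of 8, and the random masks that stand in for the separation module. The hand-picked basis \([I; -I]\) is also an assumption, chosen only so that reconstruction can be checked exactly. In the cited models, the bases and masks are learned.

```python
import numpy as np

L, HOP = 16, 8  # filter length and stride in samples (assumed; 50% overlap)


def encoder(x, U):
    """Strided 1-D convolution followed by ReLU: returns (n_frames, n_basis)."""
    n_frames = 1 + (len(x) - L) // HOP
    frames = np.stack([x[i * HOP : i * HOP + L] for i in range(n_frames)])
    return np.maximum(frames @ U.T, 0.0)


def decoder(w, V, length):
    """Transposed 1-D convolution: map each frame back with basis V, then overlap-add."""
    out = np.zeros(length)
    count = np.zeros(length)
    for i, frame in enumerate(w @ V.T):
        out[i * HOP : i * HOP + L] += frame
        count[i * HOP : i * HOP + L] += 1.0
    # Averaging the overlaps is a simplification for this hand-picked basis;
    # in the cited models the decoder basis is learned instead.
    return out / count


# Hand-picked basis: ReLU(u) - ReLU(-u) = u, so the mixture is recovered exactly
U = np.vstack([np.eye(L), -np.eye(L)])  # encoder basis: 2L filters of length L
V = np.hstack([np.eye(L), -np.eye(L)])  # decoder basis: 2L coefficients -> L samples

rng = np.random.default_rng(8)
length = L + 30 * HOP
mixture = rng.normal(size=length)
w = encoder(mixture, U)
masks = rng.uniform(size=(2,) + w.shape)  # stand-in for the separation module's output
sources = [decoder(m * w, V, length) for m in masks]
```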

3.2.2 Waveform mapping

Systems in the second category are end-to-end: they use deep learning models to fit a regression function that maps an input mixed signal to its constituent estimated clean signals without an explicit front-end encoder (see Fig. 12). The models are trained using pairs of mixed (noisy) and clean speech. The model is fed with the features of the mixed signal and estimates the clean speech. Training involves minimising an objective function, such as the mean squared error (MSE), between the features of the clean signal and those of the estimated clean signal generated by the model. This approach has been implemented in Stoller et al. (2018), Fu et al. (2018b) and Lluís et al. (2019).

3.2.3 Generative modelling

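SEGAN (Pascual et al. 2017) is a GAN-based model for speech denoising that conditions both G and D of Eq. (26) on the noisy input speech, with z as the latent input to the generator. To mitigate the vanishing gradient problem associated with optimizing the objective in Eq. (26), they replace the cross-entropy loss with the least-squares loss in Eq. (45), where \(\lambda \) weights the \(l_1\) term.

$$\begin{aligned} \min _{D}V(D)&=\tfrac{1}{2}\mathbb {E}_{x,\bar{x}}\left[ (D(x,\bar{x})-1)^2\right] +\tfrac{1}{2}\mathbb {E}_{z,\bar{x}}\left[ D(G(z,\bar{x}),\bar{x})^2\right] \\ \min _{G}V(G)&=\tfrac{1}{2}\mathbb {E}_{z,\bar{x}}\left[ (D(G(z,\bar{x}),\bar{x})-1)^2\right] +\lambda \Vert G(z,\bar{x})-x\Vert _1 \end{aligned}$$ (45)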

Here, \(\bar{x}\) is the noisy speech, x is the clean speech, z is the latent input representation, and \(||.||_1\) is the \(l_1\) norm distance between the clean sample x and the generated sample \(G(z,\bar{x})\), which encourages the generator G to generate more realistic audio. Pascual et al. (2019) extend SEGAN to handle a more generalised speech distortion case involving distortions such as chunk removal, band reduction, clipping and whispered speech. Phan et al. (2020) improve SEGAN by using multiple generators instead of one and demonstrate that this yields better enhanced-speech quality than a single generator. Adiga et al. (2019) propose a variation of SEGAN that is tailored towards speech synthesis rather than ASR. They replace the original loss function used in SEGAN with the Wasserstein distance with gradient penalty (WGAN-GP) (Gulrajani et al. 2017). They also use the gated linear unit as the activation function, which van den Oord et al. (2016) showed to be more robust in generating realistic speech. Other GAN-based models for speech enhancement in the time domain include Xiao et al. (2021). Other tools that implement supervised conditional GAN include Donahue et al. (2018), Li et al. (2018a), Qin and Jiang (2018) and Fu et al. (2019). In Qian et al. (2017), a Bayesian network is exploited to generate estimated clean speech from a noisy one.
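The sketch below writes the least-squares terms of Eq. (45) as plain NumPy functions of discriminator outputs and, for comparison, the original cross-entropy generator loss. It illustrates the earlier point about LSGAN. Once a generated sample's score is on the "real" side, the cross-entropy loss keeps shrinking, whereas the least-squares term still penalises distance from the target. The weight λ = 100 and the example scores are illustrative assumptions, and the discriminator and generator networks themselves are not shown.

```python
import numpy as np

LAMBDA_L1 = 100.0  # weight of the L1 term (illustrative assumption)


def bce_generator_loss(d_logit):
    """Original (non-saturating) GAN generator loss -log(sigmoid(d_logit))."""
    return np.logaddexp(0.0, -d_logit)


def ls_generator_loss(d_out):
    """Least-squares generator term 0.5 * (D(G(z, x_noisy), x_noisy) - 1)^2."""
    return 0.5 * (d_out - 1.0) ** 2


def segan_d_loss(d_real, d_fake):
    """Least-squares discriminator loss: clean pairs -> 1, enhanced pairs -> 0."""
    return 0.5 * np.mean((d_real - 1.0) ** 2) + 0.5 * np.mean(d_fake ** 2)


def segan_g_loss(d_fake, enhanced, clean, lam=LAMBDA_L1):
    """Least-squares adversarial term plus lambda times the L1 waveform distance."""
    return np.mean(ls_generator_loss(d_fake)) + lam * np.sum(np.abs(enhanced - clean))


# Discriminator scores for generated samples already on the "real" side
scores = np.array([1.0, 2.0, 5.0])
print(bce_generator_loss(scores))  # decreases towards 0 as the score grows
print(ls_generator_loss(scores))   # grows as the score moves past the target 1.0
```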

3.3 Challenges of working with time-domain features

1. Time-domain features lack a direct frequency representation, which hinders them from capturing speech phonetics that are present in the frequency domain. As a result, artefacts tend to be introduced in speech reconstructed in the time domain (Cao et al. 2022).

2. The time-domain waveform has a large input space. Models working with raw waveforms are therefore often deep and complex in order to model the dependencies in the waveform effectively, which is computationally expensive (Défossez et al. 2020; Pascual et al. 2017; Subakan et al. 2021; Wang et al. 2021).
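To put the size of the time-domain input space in perspective, the arithmetic below compares the number of time steps a raw-waveform model must process with the number of short-time Fourier transform frames for the same utterance. The 16 kHz sampling rate and 25 ms window follow the figures used in Sect. 5.1; the 10 ms hop, the 4 s duration and the helper name are illustrative assumptions.

```python
def num_stft_frames(num_samples: int, frame_len: int, hop: int) -> int:
    """Number of complete frames when sliding a frame_len-sample window by hop samples."""
    if num_samples < frame_len:
        return 0
    return 1 + (num_samples - frame_len) // hop


sample_rate = 16_000                      # 16 kHz
duration_s = 4                            # hypothetical 4-second utterance
num_samples = sample_rate * duration_s    # time steps seen by a raw-waveform model

frame_len = sample_rate * 25 // 1000      # 25 ms window -> 400 samples
hop = sample_rate * 10 // 1000            # 10 ms hop (assumed) -> 160 samples
frames = num_stft_frames(num_samples, frame_len, hop)

print(f"raw waveform time steps: {num_samples}")  # 64000
print(f"STFT frames:             {frames}")       # 398
```

Under these assumptions a raw-waveform model sees roughly 160 times as many time steps as a frame-based model, which is one reason waveform models tend to be deep.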

4 Which feature produces superior quality of enhanced speech?

We analysed 500 papers, published from 2018 to 2022, that exploit DNNs to perform speech enhancement (i.e., multi-talker speech separation, denoising or dereverberation). Our aim was to answer the question: which features are most popular with these tools? The summary is presented in Fig. 13. Based on the analysis, the popularity of time-domain features grew rapidly from 2018 to 2022. The use of DFT features dropped slightly but remained popular over the five years, while the popularity of MFCC and LPS features diminished. The popularity of computationally expensive features such as time-domain and DFT features may be attributed to the increased computational power of computers and to efficient sequence modelling techniques such as transformers and temporal convolutional networks (see Sect. 5 for discussion). Features such as MFCC are becoming less popular because of their reduced resolution: the missing detail must be extrapolated during reconstruction, which places an upper bound on the quality of the enhanced speech.

We also investigated whether DFT or time-domain features produce higher quality enhanced speech. Several works have conducted experiments to answer this question, notably Heitkaemper et al. (2020) and Bahmaninezhad et al. (2019). For example, Heitkaemper et al. (2020) investigate the performance of Conv-TasNet (Luo and Mesgarani 2019) under different input representations in the encoder and decoder. Conv-TasNet uses a frame length of 4 ms, a stride of 2 ms and an overlap of 2 ms. Sample results from Heitkaemper et al. (2020) are presented in Table 1, where the evaluation metrics are the scale-invariant signal-to-distortion ratio (SI-SDR), the signal-to-distortion ratio (SDR) and the word error rate (WER). The results in Table 1 show that Conv-TasNet gives marginally better SI-SDR, SDR and WER when the input is in the time domain, with the signal representation learned by the encoder and the output learned by the decoder. All three metrics degrade considerably if the STFT is used in the encoder and its inverse in the decoder. For instance, Conv-TasNet achieves an SDR of 14.7 dB with time-domain features, which drops to 12.8 dB with DFT features. This suggests that, in this setting, working in the time domain may be better than working in the frequency domain. Bahmaninezhad et al. (2019) observe the same trend, with time-domain processing outperforming frequency-domain processing. However, for mixed speech with reverberation, time-domain signals do not yield the same improvement over the frequency domain, and further investigation of the behaviour of time- and frequency-domain features in the presence of reverberation is needed (Bahmaninezhad et al. 2019).
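Since SI-SDR is the headline metric in Table 1, a minimal implementation may help clarify what it measures. The sketch below follows the usual definition, which projects the estimate onto the reference so that rescaling the estimate does not change the score; the function name, the mean removal and the small `eps` guard are our own choices rather than details taken from the cited works.

```python
import numpy as np


def si_sdr(estimate: np.ndarray, reference: np.ndarray, eps: float = 1e-12) -> float:
    """Scale-invariant signal-to-distortion ratio (SI-SDR) in dB."""
    # Remove the means so that a DC offset does not affect the score.
    estimate = estimate - np.mean(estimate)
    reference = reference - np.mean(reference)
    # Project the estimate onto the reference: the scaled reference is the "target".
    alpha = np.dot(estimate, reference) / (np.dot(reference, reference) + eps)
    target = alpha * reference
    distortion = estimate - target
    ratio = (np.dot(target, target) + eps) / (np.dot(distortion, distortion) + eps)
    return float(10.0 * np.log10(ratio))


# Usage: a 440 Hz tone at 16 kHz corrupted by white noise.
rng = np.random.default_rng(0)
clean = np.sin(2.0 * np.pi * 440.0 * np.arange(16000) / 16000.0)
noisy = clean + 0.1 * rng.standard_normal(clean.shape)
print(f"SI-SDR of noisy signal: {si_sdr(noisy, clean):.1f} dB")
```

For example, an estimate equal to the reference plus an orthogonal error of equal energy scores 0 dB, and multiplying that estimate by any non-zero constant leaves the score unchanged.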

5 Long term dependencies modelling

To perform speech separation effectively, separation tools need to model both short- and long-range dependencies within the audio signal. To do this, existing tools have employed several techniques:

5.1 Use of RNN

Early speech separation models such as Gao et al. (2016), Xu et al. (2015) and Xu et al. (2014b) relied on a feedforward DNN to estimate clean speech from a noisy one. However, feedforward DNN models are ill-suited to speech data since they cannot effectively model the long-term dependencies across time that are present in speech. For this reason, researchers progressively introduced recurrent neural networks (RNNs), which have a feedback structure such that the representation at a given time step t is a function of the input at time t and of the hidden state and memory at time \(t-1\). One RNN that has been exploited in speech separation is the long short-term memory (LSTM) network (Hochreiter and Schmidhuber 1997). An LSTM has memory blocks composed of a memory cell, which stores the temporary state, and several gates that control the flow of information and gradients. LSTM structures can be used to build sequential prediction networks that exploit long-term contextual information (Hochreiter and Schmidhuber 1997). Weninger et al. (2014), Chen et al. (2015) and Han et al. (2019) exploit LSTMs to perform speech separation, while Erdogan et al. (2015) use bidirectional long short-term memory (BLSTM) networks to make use of contextual information from both directions of the sequence. Because of their inherently sequential nature, RNN models cannot parallelise computation across time steps, which limits their use with large datasets of long sequences because training is slow (Subakan et al. 2021). Moreover, a typical frame (of input features) in speech separation is 25 ms long, which corresponds to 400 samples at a 16 kHz sampling rate; for an LSTM to work directly on the raw waveform, it would have to be unrolled for an unrealistically large number of time steps to cover an audio of modest length (Sainath et al. 2015). Other models that use different versions of RNN include Parveen and Green (2004).
Models such as Wichern and Lukin (2017) use the gated recurrent unit (GRU) (Cho et al. 2014) to perform speech denoising.
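The recurrence described above can be made concrete with a single LSTM cell. The sketch below uses the common formulation with input, forget and output gates plus a candidate memory (no peephole connections); the gate ordering, weight shapes, random weights and the 257-bin input are illustrative assumptions, and the explicit Python loop over frames highlights why RNN computation cannot be parallelised across time.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One time step of an LSTM cell.

    W: (4H, D) input weights, U: (4H, H) recurrent weights, b: (4H,) biases,
    stacked in the order [input gate, forget gate, output gate, candidate].
    """
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:H])          # input gate: how much new content to write
    f = sigmoid(z[H:2 * H])      # forget gate: how much old memory to keep
    o = sigmoid(z[2 * H:3 * H])  # output gate: how much memory to expose
    g = np.tanh(z[3 * H:4 * H])  # candidate memory content
    c_t = f * c_prev + i * g     # memory cell update
    h_t = o * np.tanh(c_t)       # new hidden state
    return h_t, c_t


def lstm_forward(frames, W, U, b):
    """Run the cell over a sequence of feature frames, one step at a time."""
    H = U.shape[1]
    h, c = np.zeros(H), np.zeros(H)
    outputs = []
    for x_t in frames:           # each step needs the previous one: no parallelism over time
        h, c = lstm_step(x_t, h, c, W, U, b)
        outputs.append(h)
    return np.stack(outputs)


rng = np.random.default_rng(0)
D, H, T = 257, 64, 100           # hypothetical: 257 STFT bins, 64 hidden units, 100 frames
W = 0.1 * rng.standard_normal((4 * H, D))
U = 0.1 * rng.standard_normal((4 * H, H))
b = np.zeros(4 * H)
frames = rng.standard_normal((T, D))
states = lstm_forward(frames, W, U, b)
print(states.shape)              # (100, 64)
```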

5.2 Use of temporal convolution network

Conventional convolutional neural networks (CNNs) have been used to design speech separation models (Jansson et al. 2017; Chandna et al. 2017). However, CNNs are limited in their ability to model long-range dependencies because of their limited receptive fields (Chen et al. 2020), and are therefore mainly suited to learning local features. They use a local window that maintains translation equivariance to learn a shared position-based kernel (Gulati et al. 2020). For a CNN to capture long-range dependencies (i.e., to enlarge its receptive field), many layers need to be stacked, which increases the computation cost because of the large number of parameters. These shortcomings of CNNs and RNNs have motivated the use of dilated temporal convolutional networks (TCNs) in speech separation to encode long-range dependencies using hierarchical convolutional layers (Rethage et al. 2018; Li et al. 2018b; Lea et al. 2017; Zeghidour and Grangier 2021; Zhang et al. 2020b). A TCN has two key distinguishing characteristics: 1) the convolutions in the model are causal, i.e., an activation of layer l at time t is influenced only by activations of the previous layer \(l-1\) at time steps up to and including t; and 2) the model takes a sequence of any length and maps it to an output sequence of the same length. To achieve the second characteristic, TCN models are implemented as 1-dimensional convolutional networks in which each hidden layer has the same length as the input layer; zero padding of length \(k-1\), where k is the filter size, is added so that each layer stays the same length as the previous one (Bai et al. 2018) (see Fig. 14). The first property is achieved through causal convolutions, i.e., an output at time t is convolved only with elements from time t and earlier in the previous layer. To increase the receptive field, models use dilated TCNs.
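Both TCN properties, equal output length and causality, follow from how the input is padded. The minimal sketch below (single channel, fixed rather than learned kernel, hypothetical function name) left-pads the input with k − 1 zeros as described by Bai et al. (2018), so that the output has the same length as the input and each output depends only on the current and earlier samples.

```python
import numpy as np


def causal_conv1d(x: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Causal 1-D convolution whose output has the same length as its input.

    The input is left-padded with (kernel size - 1) zeros, so output[t]
    depends only on x[t], x[t-1], ..., x[t-k+1].
    """
    k = len(kernel)
    padded = np.concatenate([np.zeros(k - 1), x])
    # padded[t:t + k] holds x[t-k+1], ..., x[t]; reversing it aligns x[t - i] with kernel[i].
    return np.array([np.dot(kernel, padded[t:t + k][::-1]) for t in range(len(x))])


x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = causal_conv1d(x, np.array([1.0, 0.5]))  # y[t] = x[t] + 0.5 * x[t-1]
print(y)                                    # values 1.0, 2.5, 4.0, 5.5, 7.0
```

Changing a future sample, say `x[3]`, leaves `y[0]` to `y[2]` untouched, which is exactly the causality property.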
A dilated convolution applies the filter over a region larger than its own size (He and Zhao 2019) by skipping input values with a specified step (see Fig. 10). More formally, for a 1D sequence such as a speech signal, with input \(x\in R^n\) and filter \(f:\{0,\ldots , k-1\}\rightarrow R\), the dilated convolution operation F on an element s of the sequence is defined by Eq. (46) (Bai et al. 2018):

\[
F(s) = (x *_d f)(s) = \sum_{i=0}^{k-1} f(i)\, x_{s-d\cdot i} \tag{46}
\]

where x is the 1D input signal, f is the filter of size k and d is the dilation factor. Dilation expands the receptive field without loss of resolution and without drastically increasing the number of parameters, so stacked dilated convolutions reach a large receptive field with only a few layers. The expanded receptive field allows the network to capture temporal dependencies at various resolutions within the input sequence (Zhang et al. 2020a). In effect, a TCN introduces a time hierarchy: the upper layers model longer input sequences on larger timescales, while local information is modelled by the lower layers and is maintained in the network mainly through residual and skip connections (Zhang et al. 2020a). Because the convolutions are causal, an output of layer l at time step t is computed only from time steps up to and including t in the previous layer. Luo and Mesgarani (2019) exploit a dilated TCN to model the sequences within the input speech signal. Each layer of their TCN is composed of 1-D dilated convolution blocks whose dilation factors increase exponentially over the layers, so that the network covers a large temporal context window and can exploit the long-range dependencies within a speech signal.
\[
y(m,n) = \sum_{i=1}^{M}\sum_{j=1}^{N} x(m + r\cdot i,\; n + r\cdot j)\, w(i,j)
\]

Here, y(m, n) is the output of a given layer of dilated convolution, x(m, n) is the input and w(i, j) is the filter, with length M and width N. The parameter r is the dilation rate. Note that if \(r = 1\), the dilated convolution reduces to a standard convolution.
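Eq. (46) and the receptive-field argument can be checked numerically. The sketch below implements the 1-D dilated causal convolution of Eq. (46) as a direct loop (single channel, samples before the start of the signal taken as zero) and computes the receptive field \(1 + (k-1)\sum_l d_l\) of a stack of such layers. The kernel size of 3 and the eight-layer stack are illustrative choices, not the configuration of any cited model.

```python
import numpy as np


def dilated_causal_conv1d(x: np.ndarray, f: np.ndarray, d: int) -> np.ndarray:
    """Dilated causal convolution F(s) = sum_{i=0}^{k-1} f(i) * x[s - d*i] (Eq. (46)).

    Samples before the start of the signal (s - d*i < 0) are treated as zeros.
    """
    k = len(f)
    y = np.zeros(len(x))
    for s in range(len(x)):
        for i in range(k):
            j = s - d * i  # index of a current or past sample
            if j >= 0:
                y[s] += f[i] * x[j]
    return y


def receptive_field(kernel_size: int, dilations) -> int:
    """Number of input samples seen by one output of a stack of causal convolutions."""
    return 1 + (kernel_size - 1) * sum(dilations)


k = 3
dilations = [2 ** layer for layer in range(8)]  # 1, 2, 4, ..., 128 (doubling per layer)
print(receptive_field(k, dilations))            # 511 samples with 8 dilated layers
print(receptive_field(k, [1] * 8))              # 17 samples with 8 undilated layers

# Check: push an impulse through the dilated stack and count the non-zero outputs.
impulse = np.zeros(600)
impulse[0] = 1.0
response = impulse
for d in dilations:
    response = dilated_causal_conv1d(response, np.ones(k), d)
print(np.count_nonzero(response))               # 511, matching the formula
```

Both stacks have the same number of weights (eight layers of three taps), yet doubling the dilation per layer enlarges the receptive field from 17 to 511 samples.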

5.3 Use of transformers

A transformer (Vaswani et al. 2017) is an attention-based deep learning architecture that has been successful in modelling sequences; it uncovers dependencies within an input regardless of the distance between any two of its elements. Transformers dispense with recurrence and rely only on attention and feed-forward layers, which allows them to exploit the parallel processing capabilities of GPUs and leads to fast training (Vaswani et al. 2017). In speech separation, Subakan et al. (2021) introduce a system that relies fully on transformers to model the dependencies in the mixed audio signal and uses them to estimate a mask for each speaker in the mixture. The transformer captures both short-term dependencies (within a frame) and long-term dependencies (between frames). Chen et al. (2020) also exploit transformers in the encoder to model the dependencies in the mixed audio, while Zhao et al. (2020) use transformers to perform speech dereverberation. Despite their ability to model long-range dependencies and to work well with parallelisation, the attention mechanism of transformers has \(O(N^2)\) complexity, which creates a major memory bottleneck (Subakan et al. 2022a): for a sequence of length N, the transformer must compare \(N^2\) pairs of elements, which is costly for long signals such as speech. Transformers also use many parameters, which aggravates the memory problem further. Several transformer variants, such as Longformer (Beltagy et al. 2020), Linformer (Wang et al. 2020) and Reformer (Kitaev et al. 2020), have been proposed to reduce this computational complexity. Subakan et al. (2022b) investigate the performance of these three variants in speech separation and conclude that they are suitable for speech separation applications since they achieve a highly favourable trade-off between performance and computational requirements. Luo et al. (2022) propose parameter sharing to reduce the computational complexity of the transformer, while Subakan et al. (2022a) reduce complexity by avoiding frame overlap. Chen et al. (2021) propose a teacher-student speech separation model based on transformers, in which the student model, which is much smaller than the teacher, reduces computational complexity. Other transformer-based speech enhancement tools include Wang et al. (2021) and de Oliveira et al. (2022). Another key limitation of transformers is that, while they can model long-range global context, they do not extract fine-grained local feature patterns well. For this reason, transformer-based speech separation tools apply attention within a frame (chunk) to capture local features and between frames (chunks) to capture global features (Subakan et al. 2021; Qiu and Hu 2022).
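The quadratic cost is visible directly in the size of the attention score matrix. The sketch below implements single-head scaled dot-product self-attention (Vaswani et al. 2017) without masking or multiple heads, then counts pairwise scores for full attention versus a simplified within-chunk plus between-chunk scheme. The non-overlapping chunks, the sequence and chunk lengths, the random weights and the function names are illustrative assumptions, not the exact configuration of any cited model.

```python
import numpy as np


def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention over a sequence X of shape (N, D)."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[1])       # (N, N): one score per pair of frames
    scores -= scores.max(axis=1, keepdims=True)  # subtract row maxima for a stable softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ V


def full_attention_scores(n):
    """Pairwise scores needed for attention over the whole sequence: O(N^2)."""
    return n * n


def chunked_attention_scores(n, chunk):
    """Scores for within-chunk plus between-chunk attention with non-overlapping chunks.

    Assumes n is a multiple of chunk (a simplification for illustration).
    """
    num_chunks = n // chunk
    intra = num_chunks * chunk * chunk       # attention among frames inside each chunk
    inter = chunk * num_chunks * num_chunks  # attention across chunks, per position in a chunk
    return intra + inter


rng = np.random.default_rng(0)
N, D = 200, 16
X = rng.standard_normal((N, D))
Wq, Wk, Wv = (rng.standard_normal((D, D)) for _ in range(3))
out = self_attention(X, Wq, Wk, Wv)
print(out.shape)                           # (200, 16)
print(full_attention_scores(4000))         # 16000000
print(chunked_attention_scores(4000, 100)) # 560000
```

For a 4000-frame sequence split into 100-frame chunks, the chunked scheme computes 560,000 scores instead of 16,000,000, which illustrates why chunk-based attention eases the memory bottleneck while still providing a global view through the between-chunk pass.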

6 Model size reduction techniques

To achieve high performance, i.e., to generate highly intelligible speech, DNN models for speech enhancement are becoming larger, with millions of parameters (Lutati et al. 2022). A high number of parameters increases memory requirements, computational complexity and latency. Several techniques are being exploited to reduce the number of parameters significantly without compromising quality, so that speech enhancement tools can run on resource-constrained platforms. The techniques include:

-