Abstract

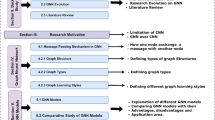

Graph neural networks (GNNs) aim to learn representations of graph-structured data in a low-dimensional space for downstream tasks while preserving the topological structure of the graph. In recent years, the attention mechanism, which has been highly successful in natural language processing and computer vision, has been introduced to GNNs to adaptively select discriminative features and automatically filter out noisy information. To the best of our knowledge, owing to the fast pace of advances in this domain, a systematic overview of attention-based GNNs is still missing. To fill this gap, this paper provides a comprehensive survey of recent advances in attention-based GNNs. First, we propose a novel two-level taxonomy of attention-based GNNs from both developmental and architectural perspectives. Specifically, the upper level reveals the three developmental stages of attention-based GNNs: graph recurrent attention networks, graph attention networks, and graph transformers. The lower level focuses on the typical architectures within each stage. Second, we review these attention-based methods in detail following the proposed taxonomy and summarize the advantages and disadvantages of the various models. A table of model characteristics is also provided for a more comprehensive comparison. Third, we share our thoughts on open issues and future directions for attention-based GNNs. We hope this survey will provide researchers with an up-to-date reference on the applications of attention-based GNNs. In addition, to keep pace with the rapid development of this field, we intend to share the latest relevant papers as an open resource at https://github.com/sunxiaobei/awesome-attention-based-gnns.
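To make the distinction between the graph-attention and graph-transformer stages concrete, the following is a minimal, hypothetical sketch in pure Python, not code from any surveyed model. The GAT-style layer scores each neighbor with e_ij = LeakyReLU(a^T [W h_i || W h_j]) and normalizes with a softmax over the neighborhood only, so attention adaptively weights neighbors. The transformer-style layer applies scaled dot-product self-attention over every pair of nodes and ignores the adjacency. The function names (`gat_layer`, `full_self_attention`), the toy graph, and the random weights are illustrative assumptions; the output nonlinearity, multi-head attention, and structural encodings are deliberately omitted.

```python
import math
import random


def leaky_relu(x, slope=0.2):
    # LeakyReLU used for GAT-style scoring (slope 0.2 is an assumed default)
    return x if x > 0 else slope * x


def matvec(W, h):
    # Multiply a weight matrix W (list of rows) by a feature vector h
    return [sum(w * x for w, x in zip(row, h)) for row in W]


def softmax(scores):
    # Numerically stable softmax over a list of scores
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def gat_layer(H, adj, W, a, slope=0.2):
    """Single-head GAT-style layer (output nonlinearity omitted).

    H: node features; adj[i]: neighbors of node i, including i itself;
    W: shared projection (out_dim x in_dim); a: attention vector (2 * out_dim).
    """
    Z = [matvec(W, h) for h in H]  # z_i = W h_i
    out, alphas = [], []
    for i, nbrs in enumerate(adj):
        # e_ij = LeakyReLU(a^T [z_i || z_j]), computed only for j in N(i)
        e = [leaky_relu(sum(ak * v for ak, v in zip(a, Z[i] + Z[j])), slope)
             for j in nbrs]
        alpha = softmax(e)  # normalize over the neighborhood only
        alphas.append(alpha)
        out.append([sum(alpha[k] * Z[j][c] for k, j in enumerate(nbrs))
                    for c in range(len(Z[i]))])
    return out, alphas


def full_self_attention(H, Wq, Wk, Wv):
    """Transformer-style layer: scaled dot-product attention over ALL node
    pairs; the adjacency is ignored (structural encodings omitted)."""
    Q = [matvec(Wq, h) for h in H]
    K = [matvec(Wk, h) for h in H]
    V = [matvec(Wv, h) for h in H]
    d = len(Q[0])
    out, alphas = [], []
    for q in Q:
        scores = [sum(x * y for x, y in zip(q, k)) / math.sqrt(d) for k in K]
        alpha = softmax(scores)  # normalize over every node in the graph
        alphas.append(alpha)
        out.append([sum(alpha[j] * V[j][c] for j in range(len(V)))
                    for c in range(len(V[0]))])
    return out, alphas


def rand_matrix(rows, cols):
    # Hypothetical random weights for the toy example
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


# Toy example (hypothetical data): path graph 0-1-2 with self-loops
random.seed(0)
H = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
adj = [[0, 1], [0, 1, 2], [1, 2]]
W = rand_matrix(3, 2)
a = [random.uniform(-1, 1) for _ in range(6)]
gat_out, gat_alphas = gat_layer(H, adj, W, a)

Wq, Wk, Wv = rand_matrix(3, 2), rand_matrix(3, 2), rand_matrix(3, 2)
tf_out, tf_alphas = full_self_attention(H, Wq, Wk, Wv)

print([len(al) for al in gat_alphas])  # attention entries per node: [2, 3, 2]
print([len(al) for al in tf_alphas])   # attention entries per node: [3, 3, 3]
```

In this toy graph node 0 is not adjacent to node 2, so changing node 2's features leaves node 0's GAT output unchanged, whereas the transformer-style layer lets every node attend to every other node. This is why graph transformers typically rely on additional positional or structural encodings to recover the graph topology.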

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No.61876186) and the Xuzhou Science and Technology Project (No.KC21300).

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

For ease of reading, we summarize the abbreviations of the methods and their corresponding full names in Table 5.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Sun, C., Li, C., Lin, X. et al. Attention-based graph neural networks: a survey. Artif Intell Rev 56 (Suppl 2), 2263–2310 (2023). https://doi.org/10.1007/s10462-023-10577-2

DOI: https://doi.org/10.1007/s10462-023-10577-2