Abstract

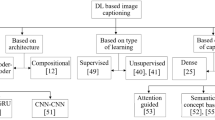

Image captioning is the task of automatically generating sentences that describe an input image as well as possible. The most successful recent techniques for generating image captions use attentive deep learning models, and these models vary in how their attention is designed. In this survey, we review the literature on attentive deep learning models for image captioning. Rather than offering a comprehensive review of all prior work on deep image captioning models, we explain the various types of attention mechanisms that deep learning models use for this task. The most successful deep learning models for image captioning follow the encoder-decoder architecture, although they differ in how they employ attention mechanisms. By analyzing the performance results reported for different attentive deep models, we aim to identify the most successful types of attention mechanisms for image captioning. Soft attention, bottom-up attention, and multi-head attention are the types of attention mechanism widely used in state-of-the-art attentive deep learning models for image captioning. At the time of writing, the best results are achieved by variants of multi-head attention combined with bottom-up attention.

Availability of data and material (data transparency)

The dataset used in all experiments and results reported in this work is publicly available for download. The COCO dataset is available at “cocodataset.org”.

Code availability (software application or custom code)

The code for some of the papers reviewed in this work was originally published by the authors on GitHub. Readers can look up the code for a particular paper online.

Funding

This work has not received any funding.

Author information

Contributions

Zanyar Zohourianshahzadi collected all the research material and wrote the original text of the survey paper. Jugal K. Kalita helped with the article preparation phase and provided suggestions regarding the text and structure of the paper.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest or competing interest in this work.

About this article

Cite this article

Zohourianshahzadi, Z., Kalita, J.K. Neural attention for image captioning: review of outstanding methods. Artif Intell Rev 55, 3833–3862 (2022). https://doi.org/10.1007/s10462-021-10092-2

DOI: https://doi.org/10.1007/s10462-021-10092-2