Abstract

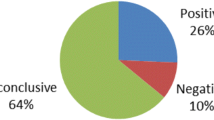

Gamification refers to using game attributes in a non-gaming context. Health professions educators increasingly turn to gamification to optimize students’ learning outcomes. However, little is known about the concept of gamification and its possible working mechanisms. This review focused on empirical evidence for the effectiveness of gamification approaches and theoretical rationales for applying the chosen game attributes. We systematically searched multiple databases, and included all empirical studies evaluating the use of game attributes in health professions education. Of 5044 articles initially identified, 44 met the inclusion criteria. Negative outcomes for using gamification were not reported. Almost all studies included assessment attributes (n = 40), mostly in combination with conflict/challenge attributes (n = 27). Eight studies revealed that this specific combination had increased the use of the learning material, sometimes leading to improved learning outcomes. A relatively small number of studies was performed to explain mechanisms underlying the use of game attributes (n = 7). Our findings suggest that it is possible to improve learning outcomes in health professions education by using gamification, especially when employing game attributes that improve learning behaviours and attitudes towards learning. However, most studies lacked well-defined control groups and did not apply and/or report theory to understand underlying processes. Future research should clarify mechanisms underlying gamified educational interventions and explore theories that could explain the effects of these interventions on learning outcomes, using well-defined control groups, in a longitudinal way. In doing so, we can build on existing theories and gain a practical and comprehensive understanding of how to select the right game elements for the right educational context and the right type of student.

Introduction

Gamification is rapidly becoming a trend in health professions education. This is at least suggested by the number of peer-reviewed scientific publications on gamification in this field, which has increased almost tenfold over the past 5 years. At the same time, there seems to be little shared understanding of what constitutes gamification and how this concept differs from other, related concepts. Furthermore, according to business and education literature, there is still no clear understanding of when and why gamification can be an appropriate learning and instructional tool (Dicheva et al. 2015; Landers et al. 2015). The purpose of this systematic review was to provide a comprehensive overview of the use and effectiveness of gamification in health professions education and to add to the existing research on gamification in several ways. To this end, we first clearly and carefully distinguished gamification studies from studies investigating other types of game-based learning. Then, we summarized the contexts in which the gamification interventions took place and their underlying theories. Finally, we analysed the effects of individual game elements by using a conceptual framework that was originally developed by Landers (2014) to structure game elements in other, non-educational domains.

What is (or is not) gamification?

The use of game design elements to enhance academic performance (e.g., learning attitudes, learning behaviours and learning outcomes) is known as gamification or ‘gamified learning’ (Deterding et al. 2011). Due to the rapidly growing body of information and proliferation of different types of game-based learning (e.g. serious games), authors tend to use different terms for the same concept, or the same term for different concepts (for examples see Amer et al. 2011; Borro-Escribano et al. 2013; Chan et al. 2012; Chen et al. 2015; Frederick et al. 2011; Gerard et al. 2018; Lim and Seet 2008; Stanley and Latimer 2011; Webb and Henderson 2017). In part, this indiscriminate use of terms may be caused by the fact that in the literature of play and gaming there is neither consensus on what a ‘game’ is conceptually (Arjoranta 2014; Caillois 1961; Huizinga 1955; Ramsey 1923; Salen and Zimmerman 2004; Suits 1978; Sutton-Smith 2000), nor on what the essential elements of a game are (Bedwell et al. 2012; Klabbers 2009).

Since there was a lack of uniformity in the definitions of the main forms of game-based learning—gamification, serious games, and simulations—we chose well-known, academically accepted definitions to distinguish among the three concepts that guided the search strategy and enabled selection of articles relevant for this systematic review. First, although various definitions of gamification can be found in various fields of literature such as business, education and information technology (Blohm and Leimeister 2013; Burke 2014; Burkey et al. 2013; De Sousa Borges et al. 2014; Domínguez et al. 2013; Gaggioli 2016; Hamari et al. 2014; Hsu et al. 2013; Huotari and Hamari 2012, 2017; Kumar 2013; Muntean 2011; Perrotta et al. 2013; Robson et al. 2015; Simões et al. 2013; Warmelink et al. 2018; Zuk 2012), a commonly applied definition is that of Deterding et al. (De-Marcos et al. 2014; Deterding et al. 2011; Dicheva et al. 2015; Lister et al. 2014; Mekler et al. 2017; Sailer et al. 2017; Seaborn and Fels 2015). He and his colleagues define gamification as the use of game elements (e.g. points, leader boards, prizes) in non-gaming contexts (Deterding et al. 2011). This implies that, even though game elements are used in a certain context (such as education), there should be no intention of creating a game. Second, this intention is different from the intention in serious games, which are defined as games (in their various forms) in which education is the primary goal, rather than entertainment (Susi et al. 2007). Serious games address real-world topics in a gameplay context. In contrast to gamification, serious game designers’ intention is to create a game. Therefore, the characteristic difference between gamification and serious games lies in their design intention. 
Third, a simulation can be defined as a situation in which a particular set of conditions is created artificially in order to study or experience something that could exist in reality (Oxford English Dictionary 2017). Simulations provide instant feedback on performance, which is delivered as accurately and realistically as possible in a safe environment (Alessi 1988; Vallverdú 2014). Simulations do not need game elements like a scoring system and a win/lose condition. However, game-design techniques and solutions can be employed to create the simulated reality and the experience of something real (Alessi 1988; Jacobs and Dempsey 1993). Simulations are therefore best seen as learning activities that can carry some game intention, but do not use game elements. By explicitly adding the designers’ intentions to the chosen definitions for these three types of game-based learning, we established criteria for distinguishing between them and guiding study inclusion and analysis in this systematic review.

Game elements and game attributes

In academic literature, various game elements have been proposed to improve the learning experience in gamification, e.g. rewards, leader boards and social elements (Petrucci et al. 2015; Van Dongen et al. 2008). In addition, grey literature also lists a vast number of different types of game elements (Marczewski 2017), which, though lacking an academic framework or basis, have been used in previous research (Wells 2018). Different terminology is used for the same type of game element; for example, badges (Davidson and Candy 2016), donuts (Fleiszer et al. 1997) and iPads (Kerfoot and Kissane 2014) are all types of rewards. In a recent systematic review on serious games (and gamification), Gorbanev et al. (2018) ascertained a lack of consensus regarding terminology used in games and welcomed any effort to reduce this terminological variety. Therefore, to make the results of gamification research in health professions education more comprehensive and transferable with regard to the game elements involved, we applied a conceptual framework of aggregated game elements that was originally proposed by Bedwell et al. (2012) and Wilson et al. (2009), and later modified by Landers (2014) (Table 2; “Appendix”). This framework posits that all existing game elements can be described and structured into nine attributes, while avoiding significant overlap between these attributes (Bedwell et al. 2012). The following game attributes are included in the framework: action language, assessment, conflict/challenge, control, environment, game fiction, human interaction, immersion and rules/goals. Instead of only focusing on specific game elements, we chose to use this framework to identify whether there is a class of game elements that holds the highest promise of improving health professions education. In doing so, we added a new perspective to existing reviews on gamification in higher (Caponetto et al. 2014; De Sousa Borges et al. 2014; Dichev and Dicheva 2017; Dicheva et al. 2015; Faiella and Ricciardi 2015; Nah et al. 2014; Subhash and Cudney 2018) and health professions education (Gentry et al. 2016; McCoy et al. 2016). In addition, we attempted to uncover the theory underpinning the gamified interventions reported in this systematic review. In doing so, we responded to the call for more theory-driven medical education research (Bligh and Parsell 1999; Bligh and Prideaux 2002; Cook et al. 2008), which we felt should also apply to research on game-based learning in general and gamification in particular (Caponetto et al. 2014; De Sousa Borges et al. 2014; Faiella and Ricciardi 2015; Gentry et al. 2016; McCoy et al. 2016; Nah et al. 2014).

This study, therefore, was intended to contribute to the literature (including existing systematic reviews) in several ways: (1) by creating a sharper distinction between gamification and other forms of game-based learning; (2) by using a more generic way to structure game elements; and (3) by responding to the call for more theory-driven medical education research.

In sum, this systematic review aimed to provide teachers and researchers with a comprehensive overview of the current state of gamification in health professions education, with a particular focus on the effects of gamification elements on learning, the underlying mechanisms and considerations for future research.

We formulated five principal research questions that guided this systematic review:

1. What are the contexts in which game elements are used in health sciences education?
2. What game elements are tested and what attributes do they represent?
3. Is there empirical evidence for the effectiveness of gamified learning in health professions education?
4. What is the quality of existing research on gamified learning in health professions education?
5. What is the theoretical rationale for implementing gamified learning in health professions education?

Methods

We conducted a systematic review in accordance with the guidelines of the Association for Medical Education in Europe (AMEE) (Sharma et al. 2015).

Search strategy

We systematically searched the literature for publications on the use of game elements in health professions education. First, we consulted two information specialists with expertise in systematic reviews to assist in developing the search strategy. Together, we identified keywords, key phrases, synonyms and alternative keywords for gamification as well as game elements and attributes that were derived from a list of 52 game elements and Landers’ framework. Some of the 52 game elements and attributes were omitted from the search because they were too broad and generated a huge number of hits (e.g., ‘environment’, ‘scarcity’, ‘consequences’), generated irrelevant hits, or did not generate any hits (e.g. ‘Easter eggs’). Based on these main keywords we formulated the search strategy for PubMed.

The first author (AvG) translated the PubMed search strategy for use in other databases and then systematically searched eight databases: Academic Search Premier; CINAHL; EMBASE; ERIC; Psychology and Behavioral Sciences Collection; PsycINFO; PubMed; and the Cochrane Library. The search was performed in April 2018. We used the following search terms: (gamif* OR gameplay* OR game OR games OR gamelike OR gamebased OR gaming OR videogam* OR edugam* OR flow-theor* OR “social network*” OR scoreboard* OR leveling OR levelling OR contest OR contests OR badgification) AND (medical educat* OR medical train* OR medical field training OR medical school* OR medical Intern* OR medical residen* OR medical student* OR dental student* OR nursing student* OR pharmacy student* OR veterinary student* OR clinical education* OR clinical train* OR clinical Intern* OR clinical residen* OR clinical clerk* OR teaching round* OR dental education* OR pharmacy education* OR pharmacy residen* OR nursing education* OR paramedics education* OR paramedic education* OR paramedical education* OR physiotherapy education* OR physiotherapist education* OR emergency medical services educat* OR curricul* OR veterinary education OR allied health personnel).
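The search string has a simple shape: one block of game-related synonyms joined by OR, one block of education-related synonyms joined by OR, and the two blocks combined with AND. The following is a minimal sketch of that shape only, not the tooling used for this review. The function names `or_block` and `build_query` and the shortened term lists are assumptions made for illustration, and the resulting string would still need adapting to the syntax of each database.

```python
def or_block(terms):
    """Join terms with OR and wrap the result in parentheses."""
    return "(" + " OR ".join(terms) + ")"


def build_query(concept_terms, population_terms):
    """Combine two OR blocks with AND, mirroring the shape of the search string."""
    return or_block(concept_terms) + " AND " + or_block(population_terms)


# Shortened, illustrative subsets of the terms listed above (hypothetical selection).
game_terms = ["gamif*", "gameplay*", "game", '"social network*"', "badgification"]
education_terms = ["medical educat*", "nursing student*", "curricul*"]

query = build_query(game_terms, education_terms)
print(query)
```

Keeping the two synonym lists separate makes it straightforward to drop terms that prove too broad without touching the overall AND/OR structure.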

Inclusion criteria

We included peer-reviewed journal articles on the use of gamification or game elements in education for (future) health professionals. We defined health professionals as individuals oriented towards providing curative, preventive and rehabilitative health care for humans as well as animals (e.g. individuals working in the fields of medicine, nursing, pharmacology and veterinary medicine). Real-life contexts (e.g. lectures and practicals) as well as digital contexts (e.g. mobile applications or computer software) were eligible for inclusion if they incorporated gamified learning to improve (future) health professionals’ (bio)medical knowledge or skills. We included quantitative as well as mixed-method studies.

Exclusion criteria

We excluded articles that (a) only described the development of gamified learning activities in educational contexts without reporting the effects of their interventions, (b) only focused on qualitative data, (c) focused on serious games, (d) focused on patient education, (e) focused on simulations, except when the focus was on the effects of gamification in simulations (gamified simulations), (f) described adapted environments such as game shows (e.g., “Jeopardy” or “Who Wants to Be a Millionaire”) and board games (e.g., “Monopoly” or “Trivial Pursuit”), which we considered to be game contexts, and (g) were not written in Dutch or English.

Although the term “gamification” has been used since 2008 (Deterding et al. 2011; Szyma 2014), we did not set a timeframe for our search since individual game elements were used in a non-game context long before the term “gamification” was coined and appeared in scientific literature.

Study selection

After retrieving the search results from the different databases, AvG removed the duplicates and uploaded the remaining articles to Rayyan, a mobile and web-based application developed for systematic reviews (Ouzzani et al. 2016). Then, AvG and JB independently screened all titles and abstracts for preliminary eligibility. In case of uncertainty, the articles in question were retained. Subsequently, AvG read the full text of all retained articles to determine eligibility for inclusion in this systematic review. In case of uncertainty, articles were marked for discussion and independently screened by all researchers. To ensure consistency in the application of selection criteria, we undertook double screening on a 10% random sample of the excluded articles as a form of triangulation. The researchers met on a regular basis to discuss challenges, uncertainties and conflicts with respect to article selection. Disagreement between researchers was resolved by discussion.

In addition, we hand-searched the reference lists of included articles and citations for additional articles.

Data extraction and quality assessment processes

We extracted the following data from the included articles using the extraction methods described in the AMEE guideline (Sharma et al. 2015):

1. General information (e.g., author, title and publication year), participant characteristics (including demographics and sample size) and characteristics of the educational content (including topic and the context in which the topic is presented, e.g. digital or analogue and type of study or profession);
2. Intervention (type of game element(s) and game attributes used);
3. Study outcomes (including satisfaction, attitudes, perceptions, opinions, knowledge, behaviour and patient outcomes);
4. Study quality (see below);
5. Theoretical frameworks used to design or evaluate gamified educational programs.

We used the Medical Education Research Study Quality Instrument (MERSQI) to measure the methodological quality of the selected studies (Reed et al. 2007). MERSQI is designed for measuring the quality of experimental, quasi-experimental and observational studies and consists of ten items covering six domains (study design, sampling, type of data, validity of evaluation instrument, data analysis, and outcomes). The maximum score for each domain is three. Five domains have a minimum score of one, resulting in a range of 5–18 points. We calculated individual total MERSQI scores, mean scores and standard deviations.
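The arithmetic behind the 5–18 range, and the summary statistics computed from the totals, can be illustrated with a short sketch. It is not the scoring procedure of the instrument itself: the per-study domain scores below are hypothetical, the sixth domain is assumed to have a minimum of zero (as the stated range implies), and the use of the sample standard deviation is an assumption, since the text does not say which variant was used.

```python
from statistics import mean, stdev

N_DOMAINS = 6             # domains listed in the text
MAX_PER_DOMAIN = 3        # maximum score per domain
DOMAINS_WITH_MIN_ONE = 5  # five domains have a minimum score of one

# Assumption: the remaining domain can score zero, as implied by the stated range.
min_total = DOMAINS_WITH_MIN_ONE * 1 + (N_DOMAINS - DOMAINS_WITH_MIN_ONE) * 0
max_total = N_DOMAINS * MAX_PER_DOMAIN


def total_score(domain_scores):
    """Sum six domain scores and check that the total lies in the possible range."""
    if len(domain_scores) != N_DOMAINS:
        raise ValueError("expected one score per domain")
    total = sum(domain_scores)
    if not min_total <= total <= max_total:
        raise ValueError("total outside the possible MERSQI range")
    return total


# Hypothetical domain scores for three studies (not data from this review).
studies = [
    [1, 1.5, 1, 1, 2, 1.5],
    [3, 2, 3, 2, 3, 2],
    [2, 1, 3, 0, 2, 1.5],
]
totals = [total_score(scores) for scores in studies]

# Sample standard deviation is an assumption of this sketch.
print(totals, round(mean(totals), 1), round(stdev(totals), 1))
```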

To provide a clear overview of the current state of studies on gamification in health sciences education, we used the framework for classifying the purposes of research in medical education proposed by Cook et al. (2008). They distinguished studies as focusing on description, justification and clarification. Description studies make no comparison, focus on observation and describe what was done. Justification studies make comparisons between interventions, generally lack or do not present a conceptual framework or a predictive model and describe whether the new intervention worked. Clarification studies apply a theoretical framework to understand and possibly explain the processes underlying the observed effects, describe why and how interventions (i.e. gamification) work and illuminate paths of future directions (Cook et al. 2008).

We classified all included studies as descriptive, justification or clarification.
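Read as a decision rule, the three categories can be sketched as follows. This is a simplification for illustration: in practice the classification rests on a reader's judgement of each article, the function name `classify_study` and its two boolean inputs are hypothetical, and giving precedence to the use of theory over the presence of a comparison is an assumption of this sketch.

```python
def classify_study(has_comparison, applies_theory):
    """Return the study purpose, following the three categories of Cook et al. (2008).

    Assumption: a study that applies a theoretical framework to explain its
    results counts as clarification, whether or not it includes a comparison.
    """
    if applies_theory:
        return "clarification"
    if has_comparison:
        return "justification"
    return "description"


# Hypothetical examples.
print(classify_study(has_comparison=False, applies_theory=False))
print(classify_study(has_comparison=True, applies_theory=False))
print(classify_study(has_comparison=False, applies_theory=True))
```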

Results

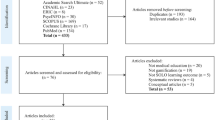

The study selection process is shown in Fig. 1. Our search identified 5044 articles, of which 38 met the inclusion criteria on the basis of full-text screening. Uncertainty about inclusion or exclusion of 20 other articles (Bigdeli and Kaufman 2017; Boysen et al. 2016; Campbell 1967; Courtier et al. 2016; Creutzfeldt et al. 2013; Dankbaar et al. 2016, 2017; Hudon et al. 2016; Inangil 2017; Kaylor 2016; Leach et al. 2016; Lim and Seet 2008; Mishori et al. 2017; Montrezor 2016; Patton et al. 2016; Richey Smith et al. 2016; Sabri et al. 2010) was resolved by consensus discussion among all members of the research team, which yielded three additional studies. Of the 17 studies excluded in this step, one was excluded because no consensus could be reached (Mullen 2018); the others did not meet the inclusion criteria. A hand search of references and citations yielded three additional studies. A total of 44 studies were eligible for inclusion in our systematic review (Table 1).

As a form of triangulation and to assess the level of agreement between the researchers, a random sample of 10% of the excluded articles was double screened by the other members of the team. The overall agreement between JB, JSA, DJ and JRG was 94%. The disagreements about the remaining 6% were resolved by discussion, which led to the conclusion that all studies in the sample had rightly been excluded from the review.
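The text does not state how the agreement figure was calculated. Assuming simple percent agreement (the share of double-screened articles on which the screening decisions matched), the calculation can be sketched as below. The function name `percent_agreement` and the decision lists are hypothetical and chosen only to reproduce a figure of 94%.

```python
def percent_agreement(decisions_a, decisions_b):
    """Percentage of items on which two sets of screening decisions match."""
    if not decisions_a or len(decisions_a) != len(decisions_b):
        raise ValueError("decision lists must be non-empty and of equal length")
    matches = sum(a == b for a, b in zip(decisions_a, decisions_b))
    return 100 * matches / len(decisions_a)


# Hypothetical sample of 50 double-screened articles (not data from this review).
first_screening = ["exclude"] * 50
second_screening = ["exclude"] * 47 + ["include"] * 3

print(percent_agreement(first_screening, second_screening))
```

Percent agreement does not correct for agreement expected by chance, which is one reason some reviews report a chance-corrected statistic alongside it.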

Educational context and student characteristics

The majority of the studies were conducted in the USA (n = 21) or Canada (n = 8). Most studies involved undergraduate (n = 15) and postgraduate medical students (e.g. residency) (n = 15), followed by nursing (n = 7), dental (n = 1), pharmacy (n = 1), osteopathic (n = 1), allied health (n = 1), speech language and hearing pathology students (n = 1) or a mix of students of different professional courses (n = 3) (Table 1). Among the gamification studies in postgraduate medical education, the number of studies in surgical specialties (n = 6) equalled the number of studies in other medical specialties (n = 6). Compared to analogue gamified learning activities (n = 14), twice as many digital learning activities (n = 28) were identified. An example of gamification in a digital environment was a web-based platform where gamification elements were inspired by the Tetris game (Lin et al. 2015). The goal of that pilot study was to collect validity evidence for a gaming platform as training and assessment tool for surgical decision making of general residents. An example of an analogue gamified learning activity was the use of a board game for undergraduate medical students. The game consisted of a board depicting a mitochondrion and cards representing components of the mitochondrial electron transport chain that had to be put in the right order. The goal of that study was to assess the effect of this active learning activity (Forni et al. 2017).

Game attributes

We categorized the identified game elements into the game attributes for learning of Landers’ framework (Landers 2014).

In most studies, the game attributes “assessment” (n = 40) and/or “conflict/challenge” (n = 27) (Table 1) were embedded in the learning environment. Intervention studies with assessment attributes particularly used scoring (n = 26) and rewards (n = 10). Scoring mostly entailed keeping record of points earned for completing a certain task or answering a question correctly. Rewards varied considerably and included digital trophies, donuts, iPads and money. Intervention studies with the conflict/challenge attribute particularly used competition (n = 21).

Combinations of game attributes were most common in our review (n = 36). Assessment and conflict/challenge attributes were often applied together (n = 24), predominantly in the form of leader boards displaying rank orders of participants, thus enabling comparison of students’ achievements. Compared to the other attributes, assessment attributes were more often examined separately (n = 6), followed by “conflict/challenge” (n = 1) and “human-interaction” (n = 1). The other attributes were always studied in combination with other game attributes (Table 1).

Effects of gamified learning interventions

We did not find any negative outcome of the use of gamification in health professions education. All studies reported positive results compared to a control group not using gamification, or similar results for both groups (Table 1).

Multiple studies reported that the (frequently used) combination of assessment and conflict/challenge game attributes could increase the use of gamified learning materials (n = 8), strengthen satisfaction (n = 16) or improve learning outcomes (n = 11) (Table 1). Whether or not increased use ensured improved learning remained uncertain. For instance, two comparable studies using assessment and conflict/challenge attributes each reported increased use of simulators, but did not investigate or report learning outcomes (Kerfoot and Kissane 2014; Van Dongen et al. 2008). Two different studies in which the same gamified elements were used also found that the use of simulators had increased (El-Beheiry et al. 2017; Petrucci et al. 2015), but only one study found improved performance (El-Beheiry et al. 2017).

One study focused on the level of health care outcome (Kerfoot et al. 2014). This randomized controlled trial had the highest MERSQI score and investigated whether gamification in an online learning activity could improve primary care clinicians’ hypertension management. The intervention group participated in a gamified, spaced learning activity comprising three game elements: competition, spaced learning and scoring. The control group received the same spaced education through online postings. The gamification intervention was associated with a modest reduction in the number of days to reach the target blood pressure in a subgroup of already hypertensive patients. That study did not uncover the underlying mechanisms of how gamification supported these positive patient outcomes. A proper theory was also lacking or not presented. Because of the study design, we were not able to disentangle whether competition, spaced learning, scoring, or a combination of them had caused the effect. In fact, adopting and testing a combination of game elements without being able to disentangle their individual effects on learning is quite a general phenomenon in gamification research in health professions education.

Contextual differences and effects of game attributes

We found that certain game attributes were more often applied to a particular context. For instance, a combination of conflict and challenge attributes was relatively more often applied to digital contexts (24 out of 28 digital studies) than to analogue contexts (3 out of 14 analogue studies). A combination of conflict and challenge attributes was also more often chosen by researchers from Europe (6 out of 8 European studies) and the USA (17 out of 21 USA studies) than by researchers from Canada (3 out of 8 Canadian studies). We found differences in use of a combination of conflict and challenge attributes between undergraduate and postgraduate settings (9 out of 15 undergraduate studies and 14 out of 15 postgraduate studies used such a combination). Yet, we could not find a direct indication that the effects of game attributes were dependent on these contextual factors.

Quality of the current gamified learning research

The total MERSQI scores of the 44 studies included in our review ranged between 5 and 17 points (mean 9.8 points, SD 3.1; see Table 1).

Descriptive studies

Most of the included studies (n = 25; Table 1) were descriptive in the sense that they focused on observation and described what was done, without using comparison groups. These descriptive studies typically had low MERSQI scores (mean 8.3, SD 2.3) and only contained post-intervention measurements.

Justification studies

In twelve studies (Table 1), groups involved in gamified learning sessions were compared with control groups to investigate whether gamification enhances learning outcomes. Almost half of these justification studies were confounded in such a way that the outcomes could not be attributed to the gamification intervention under study, because the groups not only differed in treatment, but also with respect to other aspects (Table 1; marked with asterisks). Comparing a group of participants who took part in a gamified learning activity with a group of participants who did not take part in any learning activity is an example of a confounded comparison (Adami and Cecchini 2014). The remaining seven justification studies, which were without confounds, showed an average MERSQI score of 12.5 (SD 2.6), which was the highest study quality in our sample.

Clarification studies

In seven studies, theoretical assumptions were affirmed or refuted, based on the results of the study (Table 1). Three of these studies had a design with a control group (Butt et al. 2018; Van Nuland et al. 2015; Verkuyl et al. 2017), out of which two were confounded by poor design (Table 1; asterisks). The other four studies did not include control groups. With an average MERSQI score of 11.1 (SD 3.5), the seven clarification studies were of medium quality.

The use of theory

The hallmark of clarification studies is the use of theory to explain the processes that underlie observed effects (Cook et al. 2008). In most studies (n = 5), multiple game attributes were related to a chosen theory. In three out of the seven clarification studies, the authors referred to Experiential Learning Theory (El Tantawi et al. 2018; Koivisto et al. 2016; Verkuyl et al. 2017) and in each of the remaining four studies the authors referred to a different theory: Reinforcement Learning Theory (Chen et al. 2017), Social Comparison Theory (Van Nuland et al. 2015), Self-Directed Learning (Fleiszer et al. 1997) and Deliberate Practice Theory (Butt et al. 2018). Each theory will be discussed briefly below, with specific attention to how these theories can be linked to game elements.

Experiential Learning Theory states that concrete experience provides information that serves as a basis for reflection. After this reflection, learners think of ways to improve themselves and, after this abstract conceptualization, they will try to improve their behaviours accordingly (Kolb et al. 2000; Kolb and Boyatzis 2001). Some researchers assumed that by gamifying their courses, students’ experiences and, consequently, their understanding (through reflection and conceptualization) might be enhanced. For instance, dentistry students’ experiences of being a part of an exciting narrative in an academic writing course, including game elements like role-playing, feedback, points, badges, leader boards and a clear storyline, were assumed to improve their performance (El Tantawi et al. 2018).

Self-Directed Learning is the process of diagnosing one’s own learning needs, formulating one’s own learning goals and planning one’s own learning trajectory. The increased autonomy in the pursuit of knowledge is assumed to result in higher motivation (Knowles 1980). Gamification that was inspired by Self-Directed Learning involved quizzes that were fully developed by small groups of medical students or residents about a self-chosen subject of their surgical intensive care unit rotation; right answers were rewarded with donuts (Fleiszer et al. 1997).

Deliberate Practice Theory is based on engaging already motivated students to become experts via well-defined goals, real-world tasks and immediate and informative feedback (Butt et al. 2018; Ericsson et al. 1993). Deliberate Practice Theory was applied to develop an educational tool using game elements (such as points and time constraints) and virtual reality to practice urinary catheterization in nursing education (Butt et al. 2018).

Two studies stood out for the way in which they used theory to explain the effect of a single game attribute. Van Nuland et al. (2015) used Social Comparison Theory to explain the effect of competition and Chen et al. (2017) used Reinforcement Learning Theory to explain the effect of direct feedback in digital learning.

According to Social Comparison Theory, social comparison is a fundamental mechanism for modifying judgment and behaviour through the inner drive individuals have to gain accurate self-evaluations (Corcoran et al. 2011; Festinger 1954). Gamification based on Social Comparison Theory involved the introduction of leader boards. According to Van Nuland et al., improved performance would be achieved by letting students identify discrepancies in their knowledge through upward comparison or validate their assumptions on knowledge through downward comparison (Van Nuland et al. 2015).

Reinforcement Learning Theory relates to a form of behavioural learning that is dependent on rewards and punishments (Börgers and Sarin 1997). If a desired behaviour or action is followed by a reward, individuals’ tendency to perform that action will increase. Punishment will decrease individuals’ tendency to perform that action. The gamification study based on this theory assumed that rewards and punishments (e.g. receiving points or negative, red-coloured responses, respectively) would improve the subjective learning experience and help learners acquire implicit skills in radiology (Chen et al. 2017).

Although different theories predicted the effectiveness of different mechanisms to improve performance, a common assumption seemed to be that the use of game attributes would improve learning outcomes by changing learning behaviours or attitudes towards learning.

Discussion

The purpose of this systematic review was to investigate the current evidence for using gamification in health professions education and to understand which mechanisms are involved and how they can explain the observed effects.

The majority of the included studies (only quantitative and mixed-methods studies) were performed in medical schools in the USA and Canada, and used digital technologies to develop and implement gamified teaching and learning. No negative effects of using gamification were observed. Almost all interventions included assessment game attributes, mostly in combination with conflict/challenge attributes. This combination of attributes in particular was found to increase the use of learning materials, sometimes leading to improved learning outcomes. Our review revealed a relatively small number of studies involving high-quality control groups, which limited recommendations for evidence-based teaching practice. In addition, high-quality clarification studies on how underlying mechanisms could explain the observed effects were uncommon in gamified learning research. In most studies, an explicit theory of learning was not presented and an appropriate control group was lacking. Of the few studies that did refer to theory, most researchers essentially proposed that the game element(s) under study would strengthen attitudes or behaviours towards learning, which in turn might positively influence the learning outcomes.

Empirical evidence for using gamification

At first glance, it may seem that improved or unchanged academic performance (e.g. learning behaviours, attitudes towards learning or learning outcomes) can justify the use of gamification in health professions education. However, caution should be taken in drawing strong conclusions, because most studies were descriptive, (confounded) justification or (confounded) clarification studies, or clarification studies that did not include control groups (total n = 36). In sum, despite the apparently encouraging early results, it remains unclear whether the reported effects on academic performance can be solely attributed to the gamified interventions due to the absence of (non-confounded) control groups. The remaining eight studies included in our review were well-controlled studies using assessment and conflict/challenge attributes, so these study results could be interpreted with more confidence. The use of the learning material was increased in all intervention groups compared to their control groups (El-Beheiry et al. 2017; Kerfoot and Kissane 2014; Kerfoot et al. 2014; Petrucci et al. 2015; Scales et al. 2016; Van Dongen et al. 2008; Van Nuland et al. 2015; Worm and Buch 2014), often in combination with improved learning outcomes (El-Beheiry et al. 2017; Kerfoot et al. 2014; Scales et al. 2016; Van Nuland et al. 2015; Worm and Buch 2014). Using conflict/challenge and assessment attributes, especially competition and scoring, therefore seemed to positively influence learning. The apparently consistent effect of gamification is promising but also warrants further investigation. First, because gamification research is still very much in its infancy, it should be recognized that positive results may have been overreported due to publication bias (Kerr et al. 1977; Møller and Jennions 2001) and that negative results remain un- or underreported.
Second, so far, mainly small-scale and pilot studies have been conducted, which is not just typical of health professions education but also applies to areas where gamification is already more often used, such as computer sciences (Dicheva et al. 2015). Third, it is important to realise that gamification can also have unexpected or unwanted effects (Andrade et al. 2016). Competition, which is one of the most frequently used game elements in this review, is particularly interesting in that regard. In theory, competition can hamper learning by turning projects into a race to the finish line. In this case, participating in a gamified learning activity might diminish learning: winning becomes more important than the internalisation of knowledge and/or skills. This shift in attention from task to competition might, therefore, come at the expense of students’ performance and even their intrinsic motivation to learn (Reeve and Deci 1996). For example, in our review, four studies involving simulators showed that competition leads to increased use of the simulators (e.g., for a longer time and more frequently) (El-Beheiry et al. 2017; Kerfoot et al. 2014; Petrucci et al. 2015; Van Dongen et al. 2008). However, only one of these studies reported improved learning outcomes (El-Beheiry et al. 2017). That this outcome was not found in the other three studies might be attributed to a shift in attention as explained before. Increased use of learning material generally indicated repetition, which is one of the most powerful variables to affect memory, leading to improved learning outcomes and retention (Hintzman 1976; Kerfoot et al. 2009; Murre and Dros 2015; Slamecka and McElree 1983). However, as a corollary from using gamification in learning, repetition may become less effective when students’ attention shifts from the learning task to, for instance, competition. 
So even though repetition is vital for knowledge retention, increased repetition of the learning material in gamified interventions might not necessarily benefit learning, especially when students get distracted by game elements. Similarly, shifts of motivation may occur with different game attributes. In interventions applying the game attribute assessment, for example, the elements scoring and rewards are frequently used. However, giving rewards for a previously unrewarded activity can lead to a shift from intrinsic to extrinsic motivation and even loss of interest in the activity when the rewards are no longer given. This is also called the over-justification effect (Deci et al. 1999, 2001; Hanus and Fox 2015; Landers et al. 2015; Lepper et al. 1973). In such cases, students’ motivation might shift from being internally driven to learn to being externally driven by gamification, possibly ending with amotivation when the gamified activities are over.

We did not find a direct indication that the effects of game attributes were dependent on contextual factors, since all included studies reported positive results. However, we did find that a combination of conflict and challenge attributes was more often used in the context of postgraduate education and in digital modalities. Whether this implies that opting for digital modalities is better suited to postgraduate courses or whether digital modalities are better suited for a combination of conflict and challenge attributes remains uncertain. Future research should investigate whether other game attributes and/or modalities are also applicable to postgraduate students. In addition, researchers might focus on identifying reasons for choosing specific (combinations of) attributes in a specific context.

The use of a conceptual framework

The conceptual framework we used in this study originated from serious games. It was altered by Landers (2014) for gamification purposes and was now, at least to our knowledge, used for the first time to systematically structure gamification studies. It was not the aim of this study to evaluate this method; however, future researchers may want to re-evaluate it before applying it to systematic analyses. Coding was relatively easy, which may imply that game-elements are over-generalized. For instance, points, badges, iPads and money are kinds of rewards and, therefore, confirmed as assessment attributes. However, the timing of the rewards (e.g., immediate versus delayed rewards) as well as the context of the rewards (e.g., negative or positive feedback) might have a different impact on the outcomes (Ashby and O’Brien 2007; Bermudez and Schultz 2014; Butler et al. 2007). Consequently, the claim that assessment attributes can increase or improve learning is insufficiently substantiated, or at least incomplete. Besides, some attributes appeared to have much overlap: immersion and environment attributes were almost always implemented in conjunction. This conceptual framework was helpful in guiding our review and interpreting the results, although some work is needed to refine its contents.

Theory-driven gamification

The purpose of theory is to generate hypotheses, predict (learning) outcomes and explain underlying mechanisms. Unfortunately, most identified studies on gamification in health professions education were not based on theory, or theoretical considerations were not included or not yet elaborated. Our review showed that researchers who did use theory hypothesized that effective gamified learning might strengthen students’ learning behaviours or positive attitudes towards learning, which in turn might improve their learning outcomes. For instance, in studies referring to ELT (El Tantawi et al. 2018; Koivisto et al. 2016; Verkuyl et al. 2017), it was assumed that incorporating gamification into courses could enhance students’ experience and, therefore, their reflection on and conceptualization of that experience (Kolb et al. 2001). For example, El Tantawi et al. (2018) used story-telling and game-terminology (together with other game-elements) to improve students’ attitudes towards academic writing. This way, the researchers intended to modify students’ perceptions of a task and make it seem like an exciting adventure, with a story built around a fictitious organisation.

Next to changing attitudes, the aim of studies that applied theory was also to change behaviours. For example, reinforcement learning theory was applied to increase repetition by reinforcing right answers (Chen et al. 2017). Although all theories we identified in this review were useful in clarifying research findings in the field of gamification of education and learning, it remains difficult to explain on the basis of these theories why specific game attributes or combinations of them should be preferred over others. For instance, Van Nuland et al. (2015) used social comparison theory to develop a digital, competition-based learning environment and explain research outcomes. Social comparison theory posits that individuals compare their performances to those of others to evaluate their abilities and seek self-enhancement (Gruder 1971; Wills 1981). Van Nuland et al. (2015) aimed to trigger social comparison in an intervention group by using leader boards with peer-to-peer competition in a tournament environment. They found that the intervention group outperformed their noncompeting peers on the second term test. Here, the use of social comparison theory helped clarify this effect through the comparative element underlying the competitive features of gamified learning that may have increased participants’ motivation to excel. However, because scientific theories are general statements describing or explaining causes or effects of phenomena, it remains unclear which specific game element has the highest potential of triggering social comparison and whether competition should be the most viable option. Although the use of leader boards appears to be a valid choice, it can also hamper learning when it (1) shifts attention from learning to competition (see earlier), (2) is not liked by all students and (3) is not the only game attribute that triggers social comparison.
Perhaps less competitive game elements (e.g., upgrading avatars, receiving badges, building things) or even different game attributes (e.g., control, game-fiction or human-interaction) could also trigger social comparison.

Theoretical and practical implications

Based on the scarcity of high-quality studies on processes underlying the effects of gamified educational interventions, we urgently call for more high-quality clarification research. Clarification studies could provide researchers with an understanding of the mechanisms that are involved in gamified learning (and illuminate paths for future research) (Cook et al. 2008) and teachers with evidence-based information on how to implement gamification in a meaningful way. Based on our findings, future clarification studies should use and validate existing learning theories in the context of gamification. The theories we identified in this review could, among others, serve as a basis for this research (Landers 2014; Landers et al. 2015). Because theories are general ideas, researchers should focus on (separate) specific game elements to identify the most promising game attributes in relation to a specific theory. Important negative results should be reported as well. In addition, realist evaluation can help provide a deeper understanding by identifying what works for whom, in what circumstances, in what respects and how (Tilley and Pawson 2000). The finding that (a combination of) game attributes may enhance learning outcomes by strengthening learning behaviours and attitudes towards learning could be used as a starting point for such an approach.

In addition, we would like to emphasize the need for design-based research using well-defined control groups to find out whether gamified interventions work (justification research). Although justification research hardly allows for disentangling underlying processes, there should always be room for innovative ideas and interventions (e.g., applying infrequently used game attributes) to inform future research (Cook et al. 2008). Furthermore, design-based research on gamification can shed more light on learning outcomes. For instance, further research could illuminate whether increased repetition results in less positive learning outcomes when students’ attention is distracted from their learning task by one or more game attributes. We would also like to encourage design-based research for interventions that combine different outcome measures, such as learning outcomes and frequency of using gamified educational interventions.

Finally, most studies in this review showed promising results for implementing gamification in health professions education. This opens new ways for educators to carefully experiment with the way they teach and implement gamified learning in their curricula. First, they need to determine whether there are behavioural or attitudinal problems that need attention and can be resolved by integrating game elements into the non-gaming learning environment. Subsequently, they have to determine which game attribute or combination of game attributes and matching game elements may help prevent consolidation of undesirable behaviours and/or attitudes. In this sense, gamification could be seen as an experimental educational tool to resolve behavioural or attitudinal problems towards learning which, therefore, may improve learning outcomes.

Strengths and limitations

The literature in this review represents a broad spectrum of gamified applications, investigated across the health professions education continuum. The strengths of our systematic review are the comprehensive search strategy using multiple databases, the use of explicit in- and exclusion criteria and the transparent approach to collecting data. Furthermore, our study offers a unique analysis approach implying a combination of four core elements—namely (a) an alternative way to distinguish gamification from other forms of game-based learning, (b) structuring game elements in a comprehensive way, (c) uncovering theories underpinning gamified intervention and (d) assessing study quality—which sets our study apart from existing systematic reviews on gamification. Using such an approach, we were better able to make a distinction between the three forms of game-based learning (gamification, serious games and simulations) and, therefore, to make an accurate selection of gamification studies. In doing so, we added a new perspective to literature reviews in health professions education by applying a conceptual framework and using a more generic way of structuring individual game elements into overarching game attributes to investigate whether there is a (combination of) game attribute(s) that holds the highest promise of improving health professions education.

This study had several limitations. First, although we took a systematic approach to identifying relevant articles, it is possible that we unintentionally overlooked some articles that explored the same phenomenon using different keywords. We also may have missed some articles because we had to exclude keywords that were too general and resulted in too many irrelevant articles. Yet, we tried to include as many relevant articles as possible by basing our search on a list of 52 game elements and Landers’ framework (Landers 2014; Marczewski 2017). We kept our search as comprehensive as possible while critically evaluating the output. In addition, we did not set a timeframe for our search since individual game elements were used in a non-game context long before the term “gamification” was coined.

Second, we are aware that other scholars may have different views of what constitutes (serious) games or gamified learning, since there is no consensus on the definition of “game” and, therefore, “gamification” and “serious games” (Arjoranta 2014; Ramsey 1923; Salen and Zimmerman 2004; Suits 1978). Since we explicitly added “game-intention” to our definitions of gamification, serious games and simulations, there may have been subjectivity in our decision-making process for inclusion/exclusion of studies. Views of designers, participating students, teachers and scholars can differ as to whether something is a game or not, because meanings are constructed on the basis of historical, cultural and social circumstances through specific discourse of games or acts of gameplay. This means that even though designers, researchers and teachers may have the intention to not create a game and to only use game-elements, a participant’s view of whether it actually is a game can be quite different, depending on his or her background. For instance, some students or researchers may interpret the inclusion of a leader board as a game element in a non-gaming context, while others may interpret it as a game. So, although the intention of creating a game is the characteristic difference between gamification and serious games, the interpretation of gamified interventions is prone to subjectivity due to a lack of consensus on what the word ‘game’ refers to. This, in turn, suggests that our study selection process may also be prone to subjectivity. However, the distinction we made between the three forms of game-based learning proved to be quite straightforward and we used a form of triangulation to overcome this limitation. Although we underline the need for clarification of terms like (serious) game and gamification, we do feel that our research method was appropriate for this study.
A third limitation may be that only one researcher (AvG) was involved in screening the full texts to confirm the eligibility of each study on the basis of our in- and exclusion criteria. However, in case of uncertainties, the entire team was involved in the decision-making process and we undertook double screening on a 10% random sample of the excluded articles as a form of triangulation. Additionally, all researchers independently reviewed full texts during the process and engaged in joint discussions to resolve uncertainties and reach consensus, when necessary. The fourth limitation is that we included mixed methods studies, but ranked the included studies using MERSQI only. MERSQI is an instrument for assessing the methodological quality of experimental, quasi-experimental and observational studies in medical education, so it does not assess the qualitative parts of mixed methods studies. Although using the MERSQI enabled comparison between all studies included in our study, we realize that our outcomes neglected the quality of the qualitative parts of these studies and may not reflect the quality of each mixed-methods study in its entirety. We acknowledge, however, that the qualitative components of the mixed-methods studies may be very valuable. Fifth, although the applied conceptual framework enabled us to generalize our findings, at times, generalization might have caused too much information loss since the framework could use more refinement. Sixth and finally, we only included articles written in English and Dutch, so there is the potential for language and culture bias, since studies with positive results are more likely to be published in English-language journals (Egger et al. 1997).

Conclusion

Gamification seems a promising tool to improve learning outcomes by strengthening learning behaviours and attitudes towards learning. Satisfaction rates are generally high and positive changes in behaviour and learning have been reported. However, most of the included studies were descriptive in nature and rarely explained what was meant by gamification and how it worked in health professions education. Consequently, the current research status is too limited to provide educators with evidence-based recommendations on when and how specific game elements should be applied. Future clarification research should explore theories that could explain positive or negative effects of gamified interventions with well-defined control groups in a longitudinal way. In this way, we can build on existing theories and gain a practical and comprehensive understanding of how to select the right game elements for the right educational context and the right type of student.

References

Adami, F., & Cecchini, M. (2014). Crosswords and word games improve retention of cardiopulmonary resuscitation principles. Resuscitation, 85(11), 189.

Alessi, S. M. (1988). Fidelity in the design of instructional simulations. Journal of Computer Based Instruction, 15, 40–47.

Amer, R. S., Denehy, G. E., Cobb, D. S., Dawson, D. V., Cunningham-Ford, M. A., & Bergeron, C. (2011). Development and evaluation of an interactive dental video game to teach dentin bonding. Journal of Dental Education, 75, 823–831.

Andrade, F. R. H., Mizoguchi, R., & Isotani, S. (2016). The bright and dark sides of gamification. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics).

Arjoranta, J. (2014). Game definitions: A Wittgensteinian approach. Game Studies, 14(1).

Ashby, G. F., & O’Brien, J. B. (2007). The effects of positive versus negative feedback on information-integration category learning. Perception and Psychophysics, 69, 865–878.

Ballon, B., & Silver, I. (2004). Context is key: An interactive experiential and content frame game. Medical Teacher, 26(6), 525–528.

Bhaskar, A. (2014). Playing games during a lecture hour: Experience with an online blood grouping game. Advances in Physiology Education, 38(3), 277–278.

Bedwell, W. L., Pavlas, D., Heyne, K., Lazzara, E. H., & Salas, E. (2012). Toward a taxonomy linking game attributes to learning: An empirical study. Simulation and Gaming, 43, 729–760.

Bermudez, M. A., & Schultz, W. (2014). Timing in reward and decision processes. Philosophical Transactions of the Royal Society B: Biological Sciences, 369, 20120468.

Bigdeli, S., & Kaufman, D. (2017). Digital games in medical education: Key terms, concepts, and definitions. Medical Journal of the Islamic Republic of Iran, 31, 52.

Bligh, J., & Parsell, G. (1999). Research in medical education: Finding its place. Medical Education, 33, 162.

Bligh, J., & Prideaux, D. (2002). Research in medical education: Asking the right questions. Medical Education, 36, 1114–1115.

Blohm, I., & Leimeister, J. M. (2013). Gamification: Design of IT-based enhancing services for motivational support and behavioral change. Business and Information Systems Engineering, 5, 275–278.

Börgers, T., & Sarin, R. (1997). Learning through reinforcement and replicator dynamics. Journal of Economic Theory, 77, 1–14.

Borro-Escribano, B., Martínez-Alpuente, I., Blanco, A. Del, Torrente, J., Fernández-Manjón, B., & Matesanz, R. (2013). Application of game-like simulations in the Spanish Transplant National Organization. Transplantation Proceedings, 45, 3564–3565.

Boysen, P. G., 2nd, Daste, L., & Northern, T. (2016). Multigenerational challenges and the future of graduate medical education. The Ochsner Journal, 16(1), 101–107.

Burke, B. (2014). Gartner redefines gamification. Gartner.

Burkey, D. D., Anastasio, D. D., & Suresh, A. (2013). Improving student attitudes toward the capstone laboratory course using gamification. In ASEE Annual Conference and Exposition, Conference Proceedings.

Butler, A. C., Karpicke, J. D., & Roediger, H. L. (2007). The effect of type and timing of feedback on learning from multiple-choice tests. Journal of Experimental Psychology: Applied, 13, 273.

Butt, A. L., Kardong-Edgren, S., & Ellertson, A. (2018). Using game-based virtual reality with haptics for skill acquisition. Clinical Simulation in Nursing, 16, 25–32.

Caillois, R. (1961). Man, play, and games. New York: Schocken Books.

Campbell, C. (1967). The examination game. That’s the game I’m in. Journal of Medical Education, 42(10), 974–975.

Caponetto, I., Earp, J., & Ott, M. (2014). Gamification and education: A literature review. In Proceedings of the European Conference on Games-Based Learning.

Chan, W. Y., Qin, J., Chui, Y. P., & Heng, P. A. (2012). A serious game for learning ultrasound-guided needle placement skills. IEEE Transactions on Information Technology in Biomedicine, 16, 1032–1042.

Chia, P. (2013). Using a virtual game to enhance simulation based learning in nursing education. Singapore Nursing Journal, 40(3), 21–26.

Chen, A. M. H., Kiersma, M. E., Yehle, K. S., & Plake, K. S. (2015). Impact of the Geriatric Medication Game® on nursing students’ empathy and attitudes toward older adults. Nurse Education Today, 35(1), 38–43.

Chen, P. H., Roth, H., Galperin-Aizenberg, M., Ruutiainen, A. T., Gefter, W., & Cook, T. S. (2017). Improving abnormality detection on chest radiography using game-like reinforcement mechanics. Academic Radiology, 24(11), 1428–1435.

Cook, D. A., Bordage, G., & Schmidt, H. G. (2008). Description, justification and clarification: A framework for classifying the purposes of research in medical education. Medical Education, 42, 128–133.

Cook, N. F., McAloon, T., O’Neill, P., & Beggs, R. (2012). Impact of a web based interactive simulation game (PULSE) on nursing students’ experience and performance in life support training: A pilot study. Nurse Education Today, 32(6), 714–720.

Corcoran, K., Crusius, J., & Mussweiler, T. (2011). Social comparison: Motives, standards, and mechanisms. In Handbook of theories of social psychology: Volume One.

Courtier, J., Webb, E. M., Phelps, A. S., & Naeger, D. M. (2016). Assessing the learning potential of an interactive digital game versus an interactive-style didactic lecture: The continued importance of didactic teaching in medical student education. Pediatric Radiology, 46(13), 1787–1796.

Creutzfeldt, J., Hedman, L., Heinrichs, L. R., Youngblood, P., & Felländer-Tsai, L. (2013). Cardiopulmonary resuscitation training in high school using avatars in virtual worlds: An international feasibility study. Journal of Medical Internet Research, 15, e9.

Dankbaar, M. E. W., Alsma, J., Jansen, E. E. H., van Merrienboer, J. J. G., van Saase, J. L. C. M., & Schuit, S. C. E. (2016). An experimental study on the effects of a simulation game on students’ clinical cognitive skills and motivation. Advances in Health Sciences Education, 21(3), 505–521.

Dankbaar, M. E. W., Richters, O., Kalkman, C. J., Prins, G., Ten Cate, O. T. J., van Merrienboer, J. J. G., et al. (2017). Comparative effectiveness of a serious game and an e-module to support patient safety knowledge and awareness. BMC Medical Education, 17(1), 30.

Davidson, S. J., & Candy, L. (2016). Teaching EBP using game-based learning: Improving the student experience. Worldviews on Evidence-Based Nursing, 13(4), 285–293.

De Sousa Borges, S., Durelli, V. H. S., Reis, H. M., & Isotani, S. (2014). A systematic mapping on gamification applied to education. In Proceedings of the ACM symposium on applied computing.

Deci, E. L., Koestner, R., & Ryan, R. M. (1999). A meta-analytic review of experiments examining the effects of extrinsic rewards on intrinsic motivation. Psychological Bulletin, 125(6), 627–668.

Deci, E. L., Koestner, R., & Ryan, R. M. (2001). Extrinsic rewards and intrinsic motivation in education: reconsidered once again. Review of Educational Research, 71(1), 1–27.

De-Marcos, L., Domínguez, A., Saenz-De-Navarrete, J., & Pagés, C. (2014). An empirical study comparing gamification and social networking on e-learning. Computers and Education, 75, 82–91.

Deterding, S., Khaled, R., Nacke, L., & Dixon, D. (2011). Gamification: Toward a definition. Chi, 2011, 12–15.

Dichev, C., & Dicheva, D. (2017). Gamifying education: what is known, what is believed and what remains uncertain: A critical review. International Journal of Educational Technology in Higher Education, 14, 9.

Dicheva, D., Dichev, C., Agre, G., & Angelova, G. (2015). Gamification in education: A systematic mapping study. Educational Technology and Society, 18, 75–89.

Domínguez, A., Saenz-De-Navarrete, J., De-Marcos, L., Fernández-Sanz, L., Pagés, C., & Martínez-Herráiz, J. J. (2013). Gamifying learning experiences: Practical implications and outcomes. Computers and Education, 63, 380–392.

Egger, M., Zellweger-Zähner, T., Schneider, M., Junker, C., Lengeler, C., & Antes, G. (1997). Language bias in randomised controlled trials published in English and German. Lancet, 350, 326–329.

El Tantawi, M., Sadaf, S., & AlHumaid, J. (2018). Using gamification to develop academic writing skills in dental undergraduate students. European Journal of Dental Education, 22(1), 15–22.

El-Beheiry, M., McCreery, G., & Schlachta, C. M. (2017). A serious game skills competition increases voluntary usage and proficiency of a virtual reality laparoscopic simulator during first-year surgical residents’ simulation curriculum. Surgical Endoscopy and Other Interventional Techniques, 31(4), 1643–1650.

Ericsson, K. A., Krampe, R. T., & Tesch-Römer, C. (1993). The role of deliberate practice in the acquisition of expert performance. Psychological Review, 100, 363.

Faiella, F., & Ricciardi, M. (2015). Gamification and learning: A review of issues and research. Journal of E-Learning and Knowledge Society, 11(3), 13–21.

Finley, J., Caissie, R., & Hoyt, B. (2012). 046 15 minute reinforcement test restores murmur recognition skills in medical students. Canadian Journal of Cardiology, 28(5), S102.

Festinger, L. (1954). A theory of social comparison processes. Human Relations, 7(2), 117–140.

Fleiszer, D., Fleiszer, T., & Russell, R. (1997). Doughnut Rounds: A self-directed learning approach to teaching critical care in surgery. Medical Teacher, 19(3), 190–193.

Forni, M. F., Garcia-Neto, W., Kowaltowski, A. J., & Marson, G. A. (2017). An active-learning methodology for teaching oxidative phosphorylation. Medical Education, 51(11), 1169–1170.

Frederick, H. J., Corvetto, M. A., Hobbs, G. W., & Taekman, J. (2011). The “simulation roulette” game. Simulation in Healthcare, 6, 244.

Gaggioli, A. (2016). CyberSightings. Cyberpsychology, Behavior, and Social Networking, 19, 635.

Gentry, S., L’Estrade Ehrstrom, B., Gauthier, A., Alvarez, J., Wortley, D., van Rijswijk, J., et al. (2016). Serious Gaming and Gamification interventions for health professional education. Cochrane Database of Systematic Reviews, 2016(6), 1–9.

Gerard, J. M., Scalzo, A. J., Borgman, M. A., Watson, C. M., Byrnes, C. E., Chang, T. P., et al. (2018). Validity evidence for a serious game to assess performance on critical pediatric emergency medicine scenarios. Simulation in Healthcare, 13, 168–180.

Gorbanev, I., Agudelo-Londoño, S., González, R. A., Cortes, A., Pomares, A., Delgadillo, V., et al. (2018). A systematic review of serious games in medical education: Quality of evidence and pedagogical strategy. Medical Education Online, 23(1), 1438718.

Gruder, C. L. (1971). Determinants of social comparison choices. Journal of Experimental Social Psychology, 7, 473–489.

Hamari, J., Koivisto, J., & Sarsa, H. (2014). Does gamification work? A literature review of empirical studies on gamification. In Proceedings of the Annual Hawaii international conference on system sciences.

Hanus, M. D., & Fox, J. (2015). Assessing the effects of gamification in the classroom: A longitudinal study on intrinsic motivation, social comparison, satisfaction, effort, and academic performance. Computers & Education, 80, 152–161.

Henry, B. W., Douglass, C., & Kostiwa, I. M. (2007). Effects of participation in an aging game simulation activity on the attitudes of Allied Health students toward older adults. Internet Journal of Allied Health Sciences and Practice, 5(4), 5.

Hintzman, D. L. (1976). Repetition and memory. Psychology of Learning and Motivation - Advances in Research and Theory, 10, 47–91.

Hsu, S. H., Chang, J.-W., & Lee, C.-C. (2013). Designing attractive gamification features for collaborative storytelling websites. Cyberpsychology, Behavior, and Social Networking, 16(6), 428–435.

Hudon, A., Perreault, K., Laliberté, M., Desrochers, P., Williams-Jones, B., Ehrmann Feldman, D., et al. (2016). Ethics teaching in rehabilitation: Results of a pan-Canadian workshop with occupational and physical therapy educators. Disability and Rehabilitation, 38, 2244–2254.

Huizinga, J. (1955). Homo Ludens: A study of the play element in culture (1st ed.). Boston: The Beacon Press.

Huotari, K., & Hamari, J. (2012). Defining gamification—A service marketing perspective. In Proceedings of the 16th International Academic MindTrek Conference 2012: “Envisioning Future Media Environments”, MindTrek 2012.

Huotari, K., & Hamari, J. (2017). A definition for gamification: Anchoring gamification in the service marketing literature. Electronic Markets, 27, 21–31.

Inangil, D. (2017). Theoretically based game for student success: Clinical education. International Journal of Caring Sciences, 10(1), 464–470.

Jacobs, J. W., & Dempsey, J. V. (1993). Simulation and gaming: Fidelity, feedback, and motivation. In J. V. Dempsey & G. C. Sales (Eds.), Interactive instruction and feedback. Englewood Cliffs, NJ: Educational Technology Publications.

Janssen, A., Shaw, T., Bradbury, L., Moujaber, T., Nørrelykke, A. M., Zerillo, J. A., et al. (2016). A mixed methods approach to developing and evaluating oncology trainee education around minimization of adverse events and improved patient quality and safety. BMC Medical Education, 16(1).

Kalin, D., Nemer, L. B., Fiorentino, D., Estes, C., & Garcia, J. (2016). The Labor Games: A Simulation-Based Workshop Teaching Obstetrical Skills to Medical Students [2B]. Obstetrics & Gynecology.

Kaylor, S. K. (2016). Fishing for pharmacology success: Gaming as an active learning strategy. Journal of Nursing Education, 55(2), 119.

Kerfoot, B. P., Baker, H., Pangaro, L., Agarwal, K., Taffet, G., Mechaber, A. J., et al. (2012). An online spaced-education game to teach and assess medical students: A multi-institutional prospective trial. Academic Medicine, 87(10), 1443–1449.

Kerfoot, B. P., Kearney, M. C., Connelly, D., & Ritchey, M. L. (2009). Interactive spaced education to assess and improve knowledge of clinical practice guidelines: A randomized controlled trial. Annals of Surgery, 249, 744–749.

Kerfoot, B. P., & Kissane, N. (2014). The use of gamification to boost residents’ engagement in simulation training. JAMA Surgery, 149(11), 1208–1209.

Kerfoot, B. P., Turchin, A., Breydo, E., Gagnon, D., & Conlin, P. R. (2014). An online spaced-education game among clinicians improves their patients’ time to blood pressure control: A randomized controlled trial. Circulation: Cardiovascular Quality and Outcomes, 7(3), 468–474.

Kerr, S., Tolliver, J., & Petree, D. (1977). Manuscript characteristics which influence acceptance for management and social science journals. Academy of Management Journal, 20, 132–141.

Klabbers, J. H. G. (2009). Terminological ambiguity: Game and simulation. Simulation and Gaming, 40, 446–463.

Knowles, M. S. (1980). The modern practice of adult education: From pedagogy to andragogy. Wilton, Conn.: Association Press.

Koivisto, J. M., Multisilta, J., Niemi, H., Katajisto, J., & Eriksson, E. (2016). Learning by playing: A cross-sectional descriptive study of nursing students’ experiences of learning clinical reasoning. Nurse Education Today, 45, 22–28.

Kolb, D. A., Boyatzis, R. E., & Mainemelis, C. (2001). Experiential learning theory: Previous research and new directions. Perspectives on Cognitive, Learning, and Thinking Styles, 1, 227–247.

Kow, A. W. C., Ang, B. L. S., Chong, C. S., Tan, W. B., & Menon, K. R. (2016). Innovative Patient Safety Curriculum Using iPAD Game (PASSED) Improved Patient Safety Concepts in Undergraduate Medical Students. World Journal of Surgery, 40(11), 2571–2580.

Kumar, J. (2013). Gamification at work: Designing engaging business software. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics).

Lamb, L. C., DiFiori, M. M., Jayaraman, V., Shames, B. D., & Feeney, J. M. (2017). Gamified Twitter Microblogging to Support Resident Preparation for the American Board of Surgery In-Service Training Examination. Journal of Surgical Education, 74(6), 986–991.

Lameris, A. L., Hoenderop, J. G. J., Bindels, R. J. M., & Eijsvogels, T. M. H. (2015). The impact of formative testing on study behaviour and study performance of (bio)medical students: A smartphone application intervention study. BMC Medical Education, 15(1).

Landers, R. N. (2014). Developing a theory of gamified learning: Linking serious games and gamification of learning. Simulation and Gaming, 45, 752–768.

Landers, R. N., Bauer, K. N., Callan, R. C., & Armstrong, M. B. (2015). Psychological theory and the gamification of learning. In Gamification in education and business, pp. 165–186.

Leach, M. E. H., Pasha, N., McKinnon, K., & Etheridge, L. (2016). Quality improvement project to reduce paediatric prescribing errors in a teaching hospital. Archives of Disease in Childhood: Education and Practice Edition, 101(6), 311–315.

Lepper, M. R., Greene, D., & Nisbett, R. E. (1973). Undermining children’s intrinsic interest with extrinsic reward: A test of the “overjustification” hypothesis. Journal of Personality and Social Psychology, 28(1), 129–137.

Lim, E. C.-H., & Seet, R. C. S. (2008). Using an online neurological localisation game. Medical Education, 42(11), 1117.

Lin, D. T., Park, J., Liebert, C. A., & Lau, J. N. (2015). Validity evidence for Surgical Improvement of Clinical Knowledge Ops: A novel gaming platform to assess surgical decision making. American Journal of Surgery, 209(1), 79–85.

Lister, C., West, J. H., Cannon, B., Sax, T., & Brodegard, D. (2014). Just a fad? Gamification in health and fitness apps. JMIR Serious Games, 2(2), e9.

Lobo, V., Stromberg, A. Q., & Rosston, P. (2017). The Sound Games: Introducing Gamification into Stanford’s Orientation on Emergency Ultrasound. Cureus., 9(9), e1699.

Longmuir, K. J. (2014). Interactive computer-assisted instruction in acid-base physiology for mobile computer platforms. Advances in Physiology Education, 38(1), 34–41.

Mallon, D., Vernacchio, L., Leichtner, A. M., & Kerfoot, B. P. (2016). “Constipation Challenge” game improves guideline knowledge and implementation. Medical Education, 50, 589–590.

Marczewski, A. (2017). 52 gamification mechanics and elements. https://www.gamified.uk/user-types/gamification-mechanics-elements/.

McCoy, L., Lewis, J. H., & Dalton, D. (2016). Gamification and multimedia for medical education: A landscape review. The Journal of the American Osteopathic Association, 116(1), 22.

Mekler, E. D., Brühlmann, F., Tuch, A. N., & Opwis, K. (2017). Towards understanding the effects of individual gamification elements on intrinsic motivation and performance. Computers in Human Behavior, 71, 525–534.

Mishori, R., Kureshi, S., & Ferdowsian, H. (2017). War games: Using an online game to teach medical students about survival during conflict “When my survival instincts kick in, what am I truly capable of in times of conflict?”. Medicine, Conflict, and Survival, 33(4), 250–262.

Møller, A. P., & Jennions, M. D. (2001). Testing and adjusting for publication bias. Trends in Ecology and Evolution, 16, 580–586.

Montrezor, L. H. (2016). Performance in physiology evaluation: Possible improvement by active learning strategies. Advances in Physiology Education, 40(4), 454–457.

Mullen, K. (2018). Innovative learning activity: Toy Closet: A growth and development game for nursing students. Journal of Nursing Education, 57(1), 63.

Muntean, C. C. I. (2011). Raising engagement in e-learning through gamification. In The 6th International Conference on Virtual Learning ICVL 2011, Vol. 1, pp. 323–329.

Murre, J. M. J., & Dros, J. (2015). Replication and analysis of Ebbinghaus’ forgetting curve. PLoS ONE, 10, e0120644.

Nah, F. F. H., Zeng, Q., Telaprolu, V. R., Ayyappa, A. P., & Eschenbrenner, B. (2014). Gamification of education: A review of literature. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics).

Nemer, L. B., Kalin, D., Fiorentino, D., Garcia, J. J., & Estes, C. M. (2016). The labor games. Obstetrics & Gynecology, 128, 1S–5S.

Nevin, C. R., Westfall, A. O., Rodriguez, J. M., Dempsey, D. M., Cherrington, A., Roy, B., et al. (2014). Gamification as a tool for enhancing graduate medical education. Postgraduate Medical Journal, 90(1070), 685–693.

Ouzzani, M., Hammady, H., Fedorowicz, Z., & Elmagarmid, A. (2016). Rayyan—A web and mobile app for systematic reviews. Systematic Reviews, 5(1), 210.

Oxford English Dictionary. (2017). Oxford English Dictionary Online. Oxford English Dictionary.

Pacala, J. T., Boult, C., & Hepburn, K. (2006). Ten years’ experience conducting the aging game workshop: Was it worth it? Journal of the American Geriatrics Society, 54(1), 144–149.

Patton, K. K., Branzetti, J. B., & Robins, L. (2016). Assessment and the competencies: A faculty development game. Journal of Graduate Medical Education, 8(3), 442–443.

Perrotta, C., Featherstone, G., Aston, H., & Houghton, E. (2013). Game-based learning: Latest evidence and future directions. In NFER (National Foundation for Educational Research).

Pettit, R. K., McCoy, L., Kinney, M., & Schwartz, F. N. (2015). Student perceptions of gamified audience response system interactions in large group lectures and via lecture capture technology. BMC Medical Education, 15(1).

Petrucci, A. M., Kaneva, P., Lebedeva, E., Feldman, L. S., Fried, G. M., & Vassiliou, M. C. (2015). You have a message! Social networking as a motivator for FLS training. Journal of Surgical Education, 72(3), 542–548.

Ramsey, F. P. (1923). Tractatus Logico-Philosophicus. By Ludwig Wittgenstein. Mind.

Reed, D. A., Cook, D. A., Beckman, T. J., Levine, R. B., Kern, D. E., & Wright, S. M. (2007). Association between funding and quality of published medical education research. Journal of the American Medical Association, 298, 1002–1009.

Reeve, J., & Deci, E. L. (1996). Elements of the competitive situation that affect intrinsic motivation. Personality and Social Psychology Bulletin, 22, 24–33.

Richey Smith, C. E., Ryder, P., Bilodeau, A., & Schultz, M. (2016). Use of an online game to evaluate health professions students’ attitudes toward people in poverty. American Journal of Pharmaceutical Education, 80(8), 139.

Robson, K., Plangger, K., Kietzmann, J. H., McCarthy, I., & Pitt, L. (2015). Is it all a game? Understanding the principles of gamification. Business Horizons, 58, 411–420.

Rondon, S., Sassi, F. C., & Furquim De Andrade, C. R. (2013). Computer game-based and traditional learning method: A comparison regarding students’ knowledge retention. BMC Medical Education, 13(1).

Sabri, H., Cowan, B., Kapralos, B., Porte, M., Backstein, D., & Dubrowskie, A. (2010). Serious games for knee replacement surgery procedure education and training. Procedia-Social and Behavioral Sciences, 2, 3483–3488.

Sailer, M., Hense, J. U., Mayr, S. K., & Mandl, H. (2017). How gamification motivates: An experimental study of the effects of specific game design elements on psychological need satisfaction. Computers in Human Behavior, 69, 371–380.

Salen, K., & Zimmerman, E. (2004). Rules of play: Game design fundamentals. Cambridge, Mass.: MIT Press.

Scales, C. D., Moin, T., Fink, A., Berry, S. H., Afsar-Manesh, N., Mangione, C. M., et al. (2016). A randomized, controlled trial of team-based competition to increase learner participation in quality-improvement education. International Journal for Quality in Health Care: Journal of the International Society for Quality in Health Care, 28(2), 227–232.

Seaborn, K., & Fels, D. I. (2015). Gamification in theory and action: A survey. International Journal of Human Computer Studies, 74, 14–31.

Shah, S., Lynch, L. M. J., & Macias-Moriarity, L. Z. (2010). Crossword puzzles as a tool to enhance learning about anti-ulcer agents. American Journal of Pharmaceutical Education, 74(7), 1–5.

Sharma, R., Gordon, M., Dharamsi, S., & Gibbs, T. (2015). Systematic reviews in medical education: A practical approach: AMEE Guide 94. Medical Teacher, 37, 108–124.

Simões, J., Redondo, R. D., & Vilas, A. F. (2013). A social gamification framework for a K-6 learning platform. Computers in Human Behavior, 29, 345–353.

Slamecka, N. J., & McElree, B. (1983). Normal forgetting of verbal lists as a function of their degree of learning. Journal of Experimental Psychology: Learning, Memory, and Cognition, 9, 384.

Snyder, E., & Hartig, J. R. (2013). Gamification of board review: A residency curricular innovation. Medical Education, 47(5), 524–525.

Stanley, D., & Latimer, K. (2011). “The Ward”: A simulation game for nursing students. Nurse Education in Practice, 11(1), 20–25.

Subhash, S., & Cudney, E. A. (2018). Gamified learning in higher education: A systematic review of the literature. Computers in Human Behavior, 87, 197–206.