Abstract

Many different medical school selection processes are used worldwide. In this paper, we examine the effects of (1) participation in, and (2) selection through, a voluntary selection process on study performance. We included data from two cohorts of medical students admitted to Erasmus MC, Rotterdam, and VUmc, Amsterdam, The Netherlands, and compared them with previously published data from Groningen medical school, The Netherlands. All included students were admitted through one of three routes: (1) a top pre-university grade point average (GPA), (2) a voluntary selection process, or (3) a weighted lottery. Among lottery-admitted students, we distinguished between those who had participated in the voluntary selection process and been rejected, and those who had not participated. Knowledge test scores, study progress, and professionalism scores were examined using ANCOVA modelling, logistic regression, and Bonferroni post hoc multiple-comparison tests, controlling for gender and cohort. For written test grades, results showed a participation effect at Groningen medical school and Erasmus MC (p < 0.001), and a selection effect at VUmc (p < 0.05). For obtained course credits, results showed a participation effect at all universities (p < 0.01) and a selection effect at Groningen medical school (p < 0.005). At Groningen medical school, a participation effect seemed apparent in on-time first-year completion (p < 0.05). Earlier reported selection and participation effects in professionalism scores at Groningen medical school were not apparent at VUmc. Top pre-university students performed well on all outcome measures. For both the participation effect and the selection effect, results differed between universities. Institutional differences in curricula and in the design of the selection process seem to mediate relations between the different admissions processes and performance.
Further research is needed for a deeper understanding of the influence of institutional differences on selection outcomes.


Introduction

In the search for better ways to select the most suitable students, medical schools worldwide have developed a variety of selection instruments (Salvatori 2001; Siu and Reiter 2009). Most research assessing the effects of such selection tools on performance has to correct for range restriction, because study performance data are generally unavailable for rejected applicants. Correlations between selection scores and performance can therefore only be calculated for applicants who were accepted into medical training. In The Netherlands, however, multiple admissions processes are in effect simultaneously. In this multi-process Dutch admissions system, applicants who are rejected in a voluntary selection process still have a chance of admission through a lottery in the same year (Schripsema et al. 2014; Urlings-Strop et al. 2013). This situation offers unique possibilities to study the effects of selection processes, because the later performance of initially rejected applicants can be observed.

A recent study by our group revealed that lottery-admitted students were more at risk of study delay and low grades than students admitted based on a voluntary selection process or top pre-university grades (Schripsema et al. 2014). However, we found this effect only for lottery-admitted students who had not first participated in the voluntary selection process. The performance of students who were rejected in this process and subsequently admitted through the lottery did not differ significantly from that of selection-accepted students. As such, there seemed to be a self-selection effect in the choice of whether or not to participate in the voluntary selection process. We suggested that the voluntary and time-consuming nature of the selection process may induce self-selection of highly motivated applicants, and called this self-selection a ‘participation effect’. This explanation is in line with earlier research showing lower dropout rates among students who had been selected with admission tests than among students who were admitted based solely on pre-admission grades (O’Neill et al. 2011). Moreover, another study showed that students who were accepted in a selection process reported higher motivation than students who had not participated in such a process (Hulsman et al. 2007). Intrinsic or autonomous motivation is related to higher performance in medical school (Kusurkar et al. 2013a, b). High motivation, and the resulting effort invested in applying to medical school, might therefore indeed explain the participation effect of a voluntary selection process on performance. Under this mechanism, participation in a voluntary selection process would predict better performance regardless of the design and contents of the selection process itself. Efforts to improve selection processes worldwide might then also focus on increasing the time investment that a selection process requires, in order to stimulate self-selection of highly motivated applicants.

In designing a selection process, however, medical schools aim mainly at a selection effect, not at a self-selection effect of participation per se. The goal of any selection process is that students admitted based on high selection scores perform better in medical school than those who were rejected based on low selection scores. In the previous study, such a selection effect was found only for a ‘non-academic’ professionalism course, in which selection-accepted students received the optimal score more often than selection-rejected students. For the academic outcome measures, selection-accepted students did not outperform their initially rejected peers. In terms of selection outcomes, these findings were therefore somewhat disappointing. However, that study was performed at a single medical school, and results might differ depending on the contents of the selection process and on institutional differences.

We wondered whether the effects we found in the first study would generalize to other medical schools within the multi-process admissions system. In this study, we therefore examined whether the participation effect of a voluntary selection process also occurred at two other Dutch medical schools, and whether the voluntary process at these schools did yield a selection effect. We focused on the following research questions:

1. Does a voluntary selection process have a participation effect on performance?

2. Does a voluntary selection process have a selection effect on performance?

Methods

Context

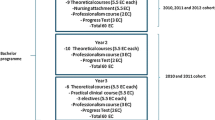

In this study, we compared previously published data from Groningen medical school, The Netherlands (Schripsema et al. 2014), with data from two other Dutch medical schools: Erasmus MC medical school, Rotterdam (Erasmus MC), and VUmc School of Medical Sciences, Amsterdam (VUmc). Annually, approximately 8500 applicants sign up for medical school in The Netherlands. Nationally, 2780 places are available at eight medical schools. Groningen medical school and Erasmus MC each offer 410 places, whereas VUmc admits 350 applicants every year. All Dutch medical schools offer a 3-year pre-clinical Bachelor’s programme followed by a 3-year clinical Master’s programme, and share the same educational blueprint and end terms, i.e. final learning outcomes (Van Herwaarden et al. 2009). Each course in the programme awards a fixed number of course credits under the European Credit Transfer System (ECTS). Each course credit reflects a study load of 28 h, and the study programme for each year contains 60 ECTS.
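For orientation, the ECTS figures above imply the following nominal study loads. This is simple arithmetic on the numbers stated in the text, not an additional data source:

```latex
\begin{aligned}
\text{annual study load} &= 60\ \text{ECTS} \times 28\ \text{h/ECTS} = 1680\ \text{h},\\
\text{whole programme} &= (3 + 3)\ \text{years} \times 60\ \text{ECTS/year} = 360\ \text{ECTS} = 360 \times 28\ \text{h} = 10\,080\ \text{h}.
\end{aligned}
```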

Three-step admission system in The Netherlands

The threshold for completing pre-university education in The Netherlands, and hence for applying to medical school, is a pre-university GPA of 5.5 (on a scale from 1 = poor to 10 = excellent). At the time of data collection, places in Dutch medical schools were assigned in three steps. In the first step, applicants with a pre-university GPA of 8 or higher were offered a place in medical school without further assessment. Pre-university GPA is calculated as the average of pre-university school examinations and national final examinations. Under this national policy, these applicants were offered direct access to the Dutch medical school of their choice. Only around 5% of all pre-university graduates, and around 15% of all medical school applicants, achieve a pre-university GPA this high; these students were therefore deemed top pre-university students.

The second step, in which participation was voluntary, was a selection process organized by each medical school separately. Everyone who met the requirements for application was allowed to participate. Generally, the voluntary selection processes consisted of two phases: a first selection based on written portfolios, and a second selection based on additional tests. Applicants who were rejected in the selection process were automatically entered into the third step of the Dutch admission system, provided that they did not withdraw their application. In this step, the remaining places were assigned through a national weighted lottery, in which the chance of admission increased with pre-university GPA. Four pre-university GPA categories were distinguished: 7.5–7.9, 7.0–7.4, 6.5–6.9, and <6.5. The admission ratio for these categories was 9:6:4:3 (DUO 2013; Schripsema et al. 2014; Urlings-Strop et al. 2009). At the time of data collection, approximately 50% of all places in Dutch medical schools were assigned through the weighted lottery.
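The three steps can be summarised as a short decision procedure. The Python sketch below is a simplified, hypothetical model of a single medical school: the GPA thresholds (5.5 and 8.0) and the 9:6:4:3 lottery ratio come from the text, but the function names, the treatment of the lottery as repeated weighted draws without replacement, and the single-school view of what was in reality a national allocation are illustrative assumptions, not the official DUO procedure.

```python
from __future__ import annotations

import random

# Hypothetical, simplified single-school model of the three-step system.
# Thresholds (5.5, 8.0) and the 9:6:4:3 ratio are taken from the text;
# names and the sampling scheme are illustrative assumptions.
MIN_GPA = 5.5  # minimum GPA for completing pre-university education
TOP_GPA = 8.0  # step 1: direct admission without further assessment


def lottery_weight(gpa: float) -> int:
    """Relative lottery weight for an eligible GPA below 8.0 (ratio 9:6:4:3)."""
    if gpa < MIN_GPA:
        raise ValueError("GPA below 5.5: not eligible to apply")
    if gpa >= TOP_GPA:
        raise ValueError("GPA of 8.0 or higher: admitted directly in step 1")
    if gpa >= 7.5:
        return 9
    if gpa >= 7.0:
        return 6
    if gpa >= 6.5:
        return 4
    return 3


def weighted_lottery(applicants: dict[str, float], places: int, seed: int = 0) -> list[str]:
    """Draw up to `places` winners without replacement; each draw is
    proportional to the remaining applicants' lottery weights."""
    rng = random.Random(seed)
    pool = {name: lottery_weight(gpa) for name, gpa in applicants.items()}
    winners: list[str] = []
    while pool and len(winners) < places:
        names = list(pool)
        chosen = rng.choices(names, weights=[pool[n] for n in names], k=1)[0]
        winners.append(chosen)
        del pool[chosen]  # an applicant can win only once
    return winners


def assign_places(applicants: dict[str, float], selection_accepted: set[str],
                  total_places: int, seed: int = 0) -> dict[str, str]:
    """Map each admitted applicant to an admission route (steps 1 to 3)."""
    routes: dict[str, str] = {}
    # Step 1: a GPA of 8.0 or higher gives direct access.
    for name, gpa in applicants.items():
        if gpa >= TOP_GPA:
            routes[name] = "top pre-university"
    # Step 2: applicants accepted in the voluntary selection process.
    for name in sorted(selection_accepted):
        routes.setdefault(name, "selection")
    # Step 3: all other eligible applicants, including selection-rejected
    # ones, enter the weighted lottery for the remaining places.
    remaining = {n: g for n, g in applicants.items()
                 if n not in routes and g >= MIN_GPA}
    free = max(total_places - len(routes), 0)
    for name in weighted_lottery(remaining, free, seed):
        routes[name] = "lottery"
    return routes
```

For example, `assign_places({"A": 8.3, "B": 6.8, "C": 7.6, "D": 6.1}, {"B"}, 3)` admits A directly and B through selection, and fills the last place by lottery between C and D, with C three times as likely to win as D (weights 9 and 3).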

Participants

We included all 816 students who started medical training at Erasmus MC in 2009 and 2010, and all 700 students who started medical training at VUmc in 2010 and 2011. The Groningen medical school study included all 1055 students who started medical education between 2009 and 2011 (Schripsema et al. 2014). At Erasmus MC, admissions data were inconsistent for 75 students, who were therefore excluded from the analyses. For these students, conflicting data were recorded on participation in the selection process and the basis for placement; for example, some students were recorded as accepted in the selection process, whereas their official placement was based on the lottery. In these cases, the admission group to which the student belonged could not be determined. We had no reason to believe that these exclusions were non-random and therefore did not correct for them in our analyses. Additionally, 59 students were admitted to Erasmus MC through unusual trajectories, such as a pre-university course or a trade-off with another admitted applicant who had been placed at a different medical school in The Netherlands. These students could not be assigned to any of the admission groups under study and were therefore also excluded. Consequently, 682 students at Erasmus MC were included in the analyses. At VUmc, 48 students were admitted through unusual trajectories and excluded on the same grounds, leaving 652 VUmc students in the analyses.

In order to make comparisons with the Groningen medical school study possible, we distinguished four groups based on admissions pathway: (1) top pre-university GPA students, (2) students accepted in the multifaceted selection process, (3) selection-rejected students who were subsequently admitted through the lottery, and (4) lottery-admitted students who had not participated in the selection process.

Multifaceted selection process

Groningen medical school

In the first phase of the voluntary selection process at Groningen medical school, applicants handed in a portfolio with sections on pre-university education, extracurricular activities, and reflection. This portfolio was based on the one used in the selection processes at Erasmus MC and VUmc, but Groningen medical school added the section on reflection. Experience in health care or in management and organisation, and special talents in sports, music or science, yielded points. For all activities, evidence such as letters of reference was required to support the statements made in the portfolio. The portfolios were graded, and the 300 highest-scoring applicants were invited to the second phase, which took place at the university. This phase consisted of four blocks: a writing assignment, a patient lecture, a scientific reasoning block, and a series of short interviews and role-plays similar to a multiple mini-interview (MMI). The characteristics assessed in this phase were medical knowledge, ethical decision-making, professional behaviour, analytic/creative/practical skills, and communication skills (Schripsema et al. 2014). All participants were ranked on their total score, and the available places were allotted according to this ranking. A more detailed description of the selection process at Groningen medical school can be found in a previous publication (Schripsema et al. 2014).

Erasmus MC and VUmc

Erasmus MC and VUmc used the same multifaceted selection process, but handled the scores slightly differently. In the first, ‘non-academic’ phase, applicants handed in a portfolio with sections on pre-university education and extracurricular activities. At Erasmus MC, all participants who had sent in a portfolio were invited to the second phase of the selection process. At VUmc, a cut-off score was applied in the first phase, and only applicants who scored above it were invited to the second phase.

The second, academic phase of the selection process consisted of five written tests on medical and academic subjects, which were preceded by informative lectures and administered at the university. The tests covered five domains (mathematics, the topics of the lectures, logical reasoning, anatomy, and questions on scientific articles) and were taken over three consecutive days (Stegers-Jager et al. 2015). Applicants were admitted if their mean score across all tests was 5.5 or higher (on a scale where 1 = poor and 10 = excellent) and if they scored 5.5 or higher on at least four of the five tests. When this absolute threshold resulted in fewer selected students than available places, the remaining places were assigned through the national weighted lottery. When it resulted in more selected students than available places, students were admitted based on a ranking of their selection scores. The selection process is described in more detail in a previous publication (Stegers-Jager et al. 2015).
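The admission rule of the second phase at Erasmus MC and VUmc can be expressed as a small function. This is a hypothetical sketch: the thresholds (a mean of at least 5.5, and at least four of the five tests at 5.5 or higher) come from the text, whereas using the mean test score as the ranking score, and ignoring ties, are assumptions made only for illustration.

```python
from __future__ import annotations

from statistics import mean

PASS = 5.5    # threshold on the 1 (poor) to 10 (excellent) scale
N_TESTS = 5   # mathematics, lecture topics, logical reasoning, anatomy, articles


def meets_threshold(scores: list[float]) -> bool:
    """Absolute rule: mean >= 5.5 and at least four of five tests >= 5.5."""
    if len(scores) != N_TESTS:
        raise ValueError("expected one score per selection test")
    return mean(scores) >= PASS and sum(s >= PASS for s in scores) >= N_TESTS - 1


def select(applicants: dict[str, list[float]], places: int) -> tuple[list[str], int]:
    """Return (admitted applicants, places left over for the weighted lottery).

    Assumption: when too many applicants pass, they are ranked on their
    mean test score; the text does not specify the ranking score or ties.
    """
    passed = [name for name, scores in applicants.items() if meets_threshold(scores)]
    if len(passed) > places:
        passed.sort(key=lambda name: mean(applicants[name]), reverse=True)
        passed = passed[:places]
    return passed, places - len(passed)
```

An applicant scoring 9, 9, 9, 5 and 5 has a mean of 7.4 but passes only three tests, so under this rule they are not admitted; with 100 places and 80 qualifying applicants, `select` admits all 80 and leaves 20 places for the lottery.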

Outcome measures

Written test grades were examined by averaging each student’s scores on all first-year courses that concluded with a written knowledge test. Only scores from the first opportunity to take each test were included. Test grades are on a scale from 1 = poor to 10 = excellent, with a pass/fail cut-off score of 5.5. At Groningen medical school, students sat four written knowledge tests in the first year. At Erasmus MC, students in the 2009 cohort sat twelve first-year knowledge tests and students in the 2010 cohort sat eleven. At VUmc, students sat six first-year knowledge tests.

Study progress was examined by counting the number of ECTS course credits students obtained in their first year. The maximum attainable number of course credits per year is 60 at all universities.

On-time completion of the first-year programme was assessed by determining whether students had passed all first-year courses within the year. For this variable, we performed additional analyses on the Groningen medical school data so that all three universities could be compared.
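To make the three outcome definitions above concrete, the following sketch derives them from one student’s first-year course records. The record layout (`CourseResult`) and the function names are hypothetical, and the sketch assumes that credits are awarded only for courses passed within the first year.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class CourseResult:
    """One first-year course for one student (hypothetical record layout)."""
    ects: int                                    # credits attached to the course
    passed_in_first_year: bool                   # passed before the end of year 1
    first_attempt_grade: Optional[float] = None  # None if no written test


def written_test_grade(results: list[CourseResult]) -> float:
    """Mean first-attempt grade over courses concluded with a written test."""
    return mean(r.first_attempt_grade for r in results
                if r.first_attempt_grade is not None)


def first_year_credits(results: list[CourseResult]) -> int:
    """ECTS obtained in year 1 (assumes credits are awarded only on passing)."""
    return sum(r.ects for r in results if r.passed_in_first_year)


def completed_on_time(results: list[CourseResult]) -> bool:
    """True if every first-year course was passed within the year."""
    return all(r.passed_in_first_year for r in results)
```

Keeping the first-attempt grade separate from the pass flag reflects the text’s distinction: the grade measure uses only the first test opportunity, while credits and on-time completion count any pass within the year.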

Professionalism was examined at Groningen medical school and VUmc by assessing the percentage of students who received the optimal score (i.e. ‘good’) in a professional development course. This course trains competencies such as collaborating, communicating, presenting and organizing, as well as skills in the physical examination of patients. Students’ professionalism was scored as ‘insufficient’, ‘sufficient’ or ‘good’, and the final grade was based on assessments by various raters in the course, such as tutors and mentors. At Erasmus MC, no professionalism data were available for the analysed cohorts.

Data analysis

We performed analysis of covariance (ANCOVA) with Bonferroni post hoc multiple-comparison tests to assess group differences in written test grades and in the number of first-year ECTS credits students attained. To examine group differences in the percentage of students who obtained fewer ECTS credits than the minimum norm, and in the percentage of students who obtained the optimal score in the professionalism course, we conducted binary logistic regression analyses. Definitions of dropout differed between universities, which made it difficult to replicate the previous analyses using this variable. We therefore analysed the percentage of students who completed the first-year programme within 1 year, and performed this analysis on the Groningen medical school data as well. In all analyses, we controlled for gender and cohort. All analyses were conducted using IBM SPSS Statistics for Windows, Version 22 (IBM Corp. 2015).
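The Bonferroni post hoc tests adjust for the six pairwise comparisons that four admission groups allow. A minimal sketch of the adjustment, assuming the unadjusted pairwise p values are already available (for example from the statistics package), multiplies each p value by the number of comparisons and caps the result at 1, which is equivalent to testing each comparison at α/m. The group labels and the helper names `bonferroni` and `significant` are illustrative.

```python
from __future__ import annotations

from itertools import combinations

# Illustrative labels for the four admission groups.
GROUPS = [
    "top pre-university",
    "selection-accepted",
    "selection-rejected lottery",
    "lottery without selection",
]

# Every unordered pair of groups: 4 * 3 / 2 = 6 post hoc comparisons.
PAIRS = list(combinations(GROUPS, 2))


def bonferroni(p_values: dict[tuple[str, str], float]) -> dict[tuple[str, str], float]:
    """Multiply each unadjusted p value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return {pair: min(1.0, p * m) for pair, p in p_values.items()}


def significant(p_values: dict[tuple[str, str], float],
                alpha: float = 0.05) -> dict[tuple[str, str], bool]:
    """Flag comparisons whose adjusted p value falls below alpha."""
    return {pair: p_adj < alpha for pair, p_adj in bonferroni(p_values).items()}
```

With all six pairs tested, an unadjusted p of 0.01 becomes 0.06 and is no longer significant at α = 0.05; this is how a Bonferroni correction can render post hoc results non-significant even when the omnibus test is significant.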

Data handling and permission

Data were extracted from the student administration and anonymised for analysis. All data were collected as part of the selection process and regular academic activities. Therefore, individual consent was not necessary.

This study was approved by the Ethical Review Board of the Dutch Association for Medical Education (NVMO-ERB, file number 458).

Results

Descriptive statistics

Descriptive statistics for group size, group percentages of females and mean pre-university GPA are depicted in Table 1. The percentage of females differed significantly between groups at Groningen medical school and Erasmus MC [χ²(3) = 3.08, p < 0.05 and χ²(3) = 9.40, p < 0.05, respectively]. At Groningen medical school, the percentage of females was higher in the selection-accepted group than in the lottery-admitted group that had not participated in selection (p < 0.05) (Schripsema et al. 2014). At Erasmus MC, Bonferroni correction for multiple comparisons rendered the results of post hoc tests non-significant. At VUmc, group percentages of females did not differ [χ²(3) = 1.25, p > 0.05]. By definition, mean pre-university GPA was higher in the top pre-university group than in the other groups at all medical schools. The other groups did not differ in mean pre-university GPA.

Written test grades

Mean written test grades are displayed in Table 2. At all medical schools, written test grades differed between groups [Groningen medical school: F(3, 1025) = 63.2, p < 0.001; Erasmus MC: F(3, 676) = 48.3, p < 0.001; VUmc: F(3, 619) = 25.4, p < 0.001]. Post hoc multiple-comparison tests showed that at all universities, the top pre-university group had a higher mean written test grade than all other groups (p < 0.001). At Groningen medical school and Erasmus MC, the group accepted in the multifaceted selection process scored higher than the lottery-admitted group that had not participated in this process (p < 0.01; Schripsema et al. 2014), whereas at VUmc, this group outperformed the selection-rejected lottery group (p < 0.05).

First-year course credits

The mean number of attained first-year course credits differed between groups at all medical schools [Groningen medical school: F(3, 1025) = 17.5, p < 0.001; Erasmus MC: F(3, 653) = 13.2, p < 0.001; VUmc: F(3, 648) = 7.73, p < 0.001] (Table 2). At Groningen medical school, the top pre-university group outperformed all other groups (p < 0.01), whereas the lottery-admitted group that had not participated in the selection process obtained fewer credits than all other groups (p < 0.05; Schripsema et al. 2014). At Erasmus MC and VUmc, the top pre-university group scored higher than both lottery-admitted groups (p < 0.01), whereas the group accepted in the multifaceted selection process outperformed the lottery group that had not participated in this process (Erasmus MC p < 0.001; VUmc p < 0.01).

On-time completion of the first-year programme

At all schools, the percentage of students who completed the first-year programme within the year differed between groups [Groningen medical school: χ²(3) = 42.1, p < 0.001; Erasmus MC: χ²(3) = 26.31, p < 0.001; VUmc: χ²(3) = 19.3, p < 0.001] (Table 3). At Groningen medical school, the top pre-university group outperformed all other groups (p < 0.01), and the selection-accepted group outperformed the lottery-admitted group that had not participated in the voluntary process (p < 0.05; Schripsema et al. 2014). At Erasmus MC and VUmc, the top pre-university group outperformed all other groups (Erasmus MC p < 0.01; VUmc p < 0.05).

Optimal professionalism score

Percentages of students who attained the optimal professionalism score at Groningen medical school and VUmc are displayed in Table 4. At Groningen medical school, the percentage of students who obtained the optimal professionalism score (i.e. ‘good’) differed between groups. The top pre-university group and the group accepted in the selection process outperformed both lottery groups, although the difference between the top pre-university group and the selection-rejected lottery group did not reach statistical significance because of the small group size (Schripsema et al. 2014). At VUmc, the percentage of students who attained the optimal professionalism score did not differ between groups [χ²(3) = 4.5, p > 0.05].

Discussion

In the current study, we examined whether a voluntary multifaceted selection process had a participation effect and/or a selection effect on study performance by comparing performance at three medical schools. For written test grades, results showed a participation effect at Groningen medical school and Erasmus MC and a selection effect at VUmc. For obtained course credits, results showed a participation effect at all universities and a selection effect at Groningen medical school. At Groningen medical school, a participation effect seemed apparent in on-time first-year completion. Earlier reported selection and participation effects for professionalism scores at Groningen medical school were not generalizable to VUmc. Top pre-university students performed well on all outcome measures. For all outcome measures, effects differed between universities.

The previously reported participation effect of the voluntary selection process was not generalizable to the other medical schools. It seems that the voluntary selection process at Erasmus MC and VUmc did not lead to self-selection of high performers in the way it did at Groningen medical school. A possible explanation for the absence of this effect may lie in the design of the voluntary selection process, which differed between Groningen medical school on the one hand and Erasmus MC and VUmc on the other. Both the first and the second phase of the process at Groningen medical school focused more on so-called ‘non-academic’ attributes, whereas the process at Erasmus MC and VUmc focused more on cognitive ability. The process at Groningen medical school may call upon different personal characteristics than the process at the other institutions, and these characteristics may be more predictive of study performance than the variables rewarded in the other process. Personality, for example, might play a role: a recent study at Groningen medical school indicated that selection-accepted students scored high on conscientiousness and extraversion and low on neuroticism, characteristics that have been shown to predict success in medical school (Schripsema et al. 2016). Efficient study strategies and time management skills might also play a role (Schripsema et al. 2015; West and Sadoski 2011). Further research is needed to study the different tools within the selection processes and the applicant characteristics that they call upon.

For the selection effect too, the outcomes differed between institutions. At VUmc, the selection-accepted group achieved higher written test grades than the selection-rejected group, but we did not find such an effect for the other variables. These differences between VUmc and Erasmus MC, where the same selection process was used, might be related to different choices regarding the first, ‘non-academic’ phase of the selection process: Erasmus MC did not apply a cut-off score, whereas VUmc rejected the lowest-scoring applicants before inviting the remaining applicants to the second phase. Applicants who scored low in the first phase had less experience with extracurricular activities such as jobs in health care settings, and might be less capable of combining their time-intensive training programme with their personal life, and therefore perform less well in medical school (Lucieer et al. 2015; Urlings-Strop et al. 2009). Another explanation could be that students accepted in the multifaceted process at VUmc are likely to have had more work experience in a medical context (hence a higher score on the portfolio section on extracurricular activities) and might therefore have a knowledge advantage in the first stages of their training, which would explain the higher written test scores. However, it remains unclear which measurements within the voluntary selection process at VUmc are related to this selection effect.

In studying the effects of the different admissions processes at the different medical schools, it became clear that outcomes differed substantially between universities. A possible explanation is that although the grading system and the ECTS course credit system are standardised, their use differs between universities. For example, one university might offer many courses worth few course credits each, whereas another may offer few courses worth many credits each. This may have affected performance outcomes, because in principle students are expected to reach a minimum number of course credits; judging from the data in our study, this seems more difficult at one university than at another. However, as these effects apply to all students within a university, we do not expect these inter-university differences to influence the intra-university effects we found. It would nevertheless be valuable to examine relations between selection processes and different curricula, as a selection process that is well matched to the educational practice at a given institution will likely lead to better outcomes than one focused on characteristics that are less relevant in its curriculum.

The differences between universities are in line with earlier research showing that relationships between selection processes and medical school performance are influenced by institutional differences. For example, one study examined correlations between medical school performance and a selection process that was the same at three medical schools (UMAT, the Undergraduate Medicine and Health Sciences Admission Test; pre-university GPA; and interview) and found that the predictive validity of these tools differed significantly between schools (Edwards et al. 2013). Our findings support the notion that institutional differences, and differences in how a given selection process is implemented, influence the effects this process has on later performance. This implies that the literature on the effects of selection tools on later performance, which consists mainly of single-site cross-sectional studies (Patterson et al. 2016), should be expanded with multi-site studies in order to draw generalizable conclusions about the predictive validity of different selection tools.

A pattern we consistently found in the data was that the group admitted based on a top pre-university GPA performed best. To date, previous academic achievement appears to be one of the most important predictors used in medical school selection (Benbassat and Baumal 2007; Prideaux et al. 2011; Salvatori 2001; Siu and Reiter 2009), a strategy that is supported by our findings. The previous study showed that students admitted based on a top pre-university GPA were also successful in the ‘non-academic’ domain of medical training. In the current study, this effect was not significant, but the trend was similar, suggesting that previous academic achievement might well be related to suitable ‘non-academic’ skills. This may partly be explained by the capabilities reflected in pre-university GPA: performance in pre-university education might reflect not just academic aptitude, but also ‘non-academic’ qualities such as efficient study strategies, personality, work ethic, and interest in learning (Duvivier et al. 2015; Schripsema et al. 2015). These qualities will likely persist in later education. As such, the term ‘previous academic achievement’ might not fully capture what this kind of achievement entails. All things considered, previous achievement in education remains a valuable criterion in medical school admissions processes.

Some limitations of our study should be taken into account when interpreting its results. First, we included data only from the first, pre-clinical year of medical training. Further research is needed to examine whether the effects of the different admissions processes persist through the transition to the clinical phase and extend to performance as a medical professional. Second, although we found a participation effect and a selection effect for some outcomes, it remains unclear whether these effects can be attributed to the selection process as a whole or only to parts of it. The relation between the individual tools within the process and performance should be analysed to determine which tools are directly related to performance. Third, the admissions processes that we analysed are typical of the Dutch system. The option to choose between a time-intensive selection process and a lottery might influence outcomes, and the effects of the different processes might differ when applicants do not have this option.

Conclusions

For both the participation effect and the selection effect of the voluntary selection process, the findings were inconsistent across the three medical schools. Institutional differences and differences in the design of the selection process seem to influence the relations between the different admissions processes and performance. The group admitted based on a top pre-university GPA consistently performed best of all included groups. Further research is needed to examine which of the selection instruments are most predictive of performance, and how curriculum differences influence these relations.

References

Benbassat, J., & Baumal, R. (2007). Uncertainties in the selection of applicants for medical school. Advances in Health Sciences Education, 12(4), 509–521. doi:10.1007/s10459-007-9076-0.

DUO. (2013). Je kans op inloting. Retrieved from http://www.duo.nl/particulieren/studeren/loten/centrale_selectie/je_kans_op_inloting.asp.

Duvivier, R., Kelly, B., & Veysey, M. (2015). Selection and study performance. Medical Education, 49(6), 638–639. doi:10.1111/medu.12691.

Edwards, D., Friedman, T., & Pearce, J. (2013). Same admissions tools, different outcomes: A critical perspective on predictive validity in three undergraduate medical schools. BMC Medical Education, 13(1), 173.

Hulsman, R. L., van der Ende, J. S. J., Oort, F. J., Michels, R. P. J., Casteelen, G., & Griffioen, F. M. M. (2007). Effectiveness of selection in medical school admissions: Evaluation of the outcomes among freshmen. Medical Education, 41(4), 369–377. doi:10.1111/j.1365-2929.2007.02708.x.

IBM Corp. (2015). IBM SPSS Statistics for Windows, Version 23.0. Armonk, NY: IBM Corp.

Kusurkar, R. A., Croiset, G., Galindo-Garre, F., & Ten Cate, O. (2013a). Motivational profiles of medical students: Association with study effort, academic performance and exhaustion. BMC Medical Education, 13(1), 87.

Kusurkar, R. A., Ten Cate, T., Vos, C. M., Westers, P., & Croiset, G. (2013b). How motivation affects academic performance: A structural equation modelling analysis. Advances in Health Sciences Education: Theory and Practice, 18(1), 57–69.

Lucieer, S. M., Stegers-Jager, K. M., Rikers, R. M., & Themmen, A. P. N. (2015). Non-cognitive selected students do not outperform lottery-admitted students in the pre-clinical stage of medical school. Advances in Health Sciences Education: Theory and Practice. doi:10.1007/s10459-015-9610-4.

O’Neill, L., Hartvigsen, J., Wallstedt, B., Korsholm, L., & Eika, B. (2011). Medical school dropout—Testing at admission versus selection by highest grades as predictors. Medical Education, 45(11), 1111–1120. doi:10.1111/j.1365-2923.2011.04057.x.

Patterson, F., Knight, A., Dowell, J., Nicholson, S., Cousans, F., & Cleland, J. (2016). How effective are selection methods in medical education? A systematic review. Medical Education, 50(1), 36–60. doi:10.1111/medu.12817.

Prideaux, D., Roberts, C., Eva, K., Centeno, A., McCrorie, P., McManus, C., et al. (2011). Assessment for selection for the health care professions and specialty training: Consensus statement and recommendations from the Ottawa 2010 conference. Medical Teacher, 33(3), 215–223. doi:10.3109/0142159X.2011.551560.

Salvatori, P. (2001). Reliability and validity of admissions tools used to select students for the health professions. Advances in Health Sciences Education: Theory and Practice, 6(2), 159–175. doi:10.1023/A:1011489618208.

Schripsema, N. R., van Trigt, A. M., Borleffs, J. C. C., & Cohen-Schotanus, J. (2014). Selection and study performance: Comparing three admission processes within one medical school. Medical Education, 48(12), 1201–1210. doi:10.1111/medu.12537.

Schripsema, N. R., van Trigt, A. M., Borleffs, J. C. C., & Cohen-Schotanus, J. (2015). Underlying factors in medical school admissions. Medical Education, 49(6), 639–640. doi:10.1111/medu.12731.

Schripsema, N. R., van Trigt, A. M., van der Wal, M. A., & Cohen-Schotanus, J. (2016). How different medical school selection processes call upon different personality characteristics. PLoS ONE, 11(3), e0150645. doi:10.1371/journal.pone.0150645.

Siu, E., & Reiter, H. I. (2009). Overview: What’s worked and what hasn’t as a guide towards predictive admissions tool development. Advances in Health Sciences Education: Theory and Practice, 14(5), 759–775. doi:10.1007/s10459-009-9160-8.

Stegers-Jager, K. M., Steyerberg, E. W., Lucieer, S. M., & Themmen, A. P. N. (2015). Ethnic and social disparities in performance on medical school selection criteria. Medical Education, 49(1), 124–133. doi:10.1111/medu.12536.

Urlings-Strop, L. C., Stegers-Jager, K. M., Stijnen, T., & Themmen, A. P. N. (2013). Academic and non-academic selection criteria in predicting medical school performance. Medical Teacher, 35(6), 497–502. doi:10.3109/0142159X.2013.774333.

Urlings-Strop, L. C., Stijnen, T., Themmen, A. P. N., & Splinter, T. A. W. (2009). Selection of medical students: A controlled experiment. Medical Education, 43(2), 175–183. doi:10.1111/j.1365-2923.2008.03267.x.

Van Herwaarden, C. L. A., Laan, R. F. J. M., & Leunissen, R. R. M. (2009). The 2009 framework for undergraduate medical education in The Netherlands. Utrecht: Dutch Federation of University Medical Centres.

West, C., & Sadoski, M. (2011). Do study strategies predict academic performance in medical school? Medical Education, 45(7), 696–703. doi:10.1111/j.1365-2923.2011.03929.x.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Schripsema, N.R., van Trigt, A.M., Lucieer, S.M. et al. Participation and selection effects of a voluntary selection process. Adv in Health Sci Educ 22, 463–476 (2017). https://doi.org/10.1007/s10459-017-9762-5

DOI: https://doi.org/10.1007/s10459-017-9762-5