Abstract

Background

While it is a generally accepted fact that many gambling screening tools are not fit for purpose when employed as part of a public health framework, the evidence supporting this claim is sporadic. The aim of this review is to identify and evaluate the gambling screening tools currently in use and examine their utility as part of a public health approach to harm reduction, providing a holistic snapshot of the field.

Methods

A range of index tests measuring aspects of problem gambling were examined, including the South Oaks Gambling Screen (SOGS) and the Problem Gambling Severity Index (PGSI), among others. This review also examined a range of reference standards including the Diagnostic Interview for Gambling Severity (DIGS) and screening tools such as the SOGS.

Results

The present review supports the belief held by many within the gambling research community that there is a need for a paradigm shift in the way gambling harm is conceptualised and measured, to facilitate early identification and harm prevention.

Discussion

This review has identified a number of meaningful deficits regarding the overall quality of the psychometric testing employed when validating gambling screening tools. Primary among these was the lack of a consistent and reliable reference standard within many of the studies. Currently there are very few screening tools discussed in the literature that show good utility in the domain of public health, due to the focus on symptoms rather than risk factors. As such, these tools are generally ill-suited for identifying preclinical or low-risk gamblers.

Introduction

Increasingly, gambling-related harm is being identified as a global public health concern (Blank et al. 2021; John et al. 2020) linked to substance use (Barnes et al. 2015; Cowlishaw et al. 2014; Jauregui et al. 2016; Petry et al. 2005), domestic and family-related violence (Afifi et al. 2010; Dowling et al. 2014; Dowling et al. 2018a), homelessness (Holdsworth and Tiyce 2012; Lipmann et al. 2004) and certain psychological disorders (Parhami et al. 2014; Stewart et al. 2008; Suomi et al. 2014). A robust public health response is needed to adequately address the issue of gambling-related harm. This requires effective tools aimed at early identification, focusing on the predictors of harm.

The concept of gambling-related harm is poorly defined, with no single robust definition (Langham et al. 2015), and as such it tends to be conceptualised within the confines of a clinical symptomology (Abbott 2020). However, an overly stringent classification for gambling-related harms leads to an inevitable shift in focus towards high-risk gamblers exclusively. In response to this, frameworks have been developed to conceptualise gambling risk and harm in its totality; for example, the taxonomy proposed by Langham et al. (2015), which considers various dimensions of harm and their impact over time. Definitions such as these address the harm that exists outside of a clinical or pathological classification and, by extension, help broaden the scope of who is eligible for intervention. However, the taxonomy proposed by Langham et al. (2015) is an outlier in the literature, and gambling-related harm is generally an ill-defined concept when not understood through the lens of clinical symptomology. The over-reliance on a clinical symptomology for stratifying gambling-related harm has led to the creation of a false dichotomy between “safe” and “dangerous” gambling. Gambling harm exists on a continuum, and harm is not exclusive to those who meet a classification for problem gambling or pathological gambling. As with the development of a public health approach to alcohol-related harm (Heather and Stockwell 2004), the intervention base for gambling-related harm must be broadened to encompass the lower end of this continuum. To achieve this, gambling-related harm must be conceptualised and stratified to better reflect the way in which harm exists on a continuum (Langham et al. 2015). The taxonomy by Langham et al. (2015) is an example of how gambling-related risks and harms can be conceptualised in a way that helps support upstream/harm reduction interventions as part of a public health framework.

The prevalence of problem gambling in the UK is 0.7%, but the healthcare cost attributed to problem gambling sits between £140 and £610 million (GambleAware 2016). These figures likely represent a conservative estimate of the actual costs in light of other international data (Hofmarcher et al. 2020). Data suggest that a disproportionate amount of gambling revenue comes from individuals who are classed as problem gamblers (Cassidy 2020), and this is more evident with riskier forms of gambling (Orford et al. 2012). A Finnish study reported that 28.5% of gambling revenue is attributable to problem and pathological gamblers, while the prevalence of problem gambling in Finland sits as low as 2.3% (Castrén et al. 2018). It is not unreasonable to suggest that a portion of these individuals who make up the revenue data display some level of risky behaviour that could be indicative of gambling-related harm. When looking at harm on a population level, it has been suggested that the quantity of harm in non-problem-gambling samples greatly exceeds that present in samples of problem gamblers, at a ratio of 6:1 (Victorian Responsible Gambling Foundation 2016). Many population surveys fail to meet meaningful epidemiological objectives (Markham and Young 2016) and likely underestimate the true levels of gambling-related harm.

Many gambling screening tools stem from a more clinical understanding of gambling behaviour (Christo et al. 2003; Cox et al. 2004; Pallanti et al. 2005; Petry 2003; Petry 2007; Raylu and Oei 2004; Wickwire et al. 2008) and are less effective at identifying lower-risk gamblers. Broadly speaking, a low-risk gambler is an individual whose behaviour does not reach a clinical threshold but displays some risk factors. However, there is no clear definition that encompasses the gamut of harm experienced by this subclinical population. There is a clear need for an accurate way of identifying individuals who display risky gambling behaviour but do not meet the minimum threshold for a clinical classification. Currently the classification of “problem gambler” is treated and measured as though it is a pathological classification with its own symptomology and not as a collection of risk factors that predict possible harm. In a recent review it was found that most included papers did not examine gambling issues across a continuum of harm and that subclinical presentations of harm are largely unexamined within the literature (Wardle et al. 2021).

The current limitations around gambling screening hinder a key aim of the public health approach, which is prevention. The effectiveness of any preventative approach is reliant on the accurate identification of low-risk cases. However, there is a lack of evidence regarding the early identification of problem gambling, gambling and other gaming disorders (Wardle et al. 2021). Gambling-related harm is a multifaceted issue that should not be conceptualised in line with a restrictive clinical symptomology (Wardle et al. 2019). In a recent review of interventions to reduce the public health burden of gambling-related harms, it was found that studies that focused on screening and identification were not adequately represented in the literature (Blank et al. 2021). Another recent review found that there was a paucity of studies that report findings regarding gambling-related harm across a broad continuum, and that the issue of subclinical gambling harm is poorly understood in primary care settings (Roberts et al. 2021). Indeed, when looking at the screening tools commonly used in the field, none have undergone a meta-analysis to identify their overall diagnostic accuracy in relation to low-risk individuals. While there is a widely held sentiment regarding the flaws in gambling screening, there is no single encompassing piece of evidence that supports this belief. There is a clear need for a comprehensive review of the screening literature to better understand its role in a public health framework and to examine the legitimacy of the inherited wisdom regarding gambling screening tools common in the gambling research sphere.

The aim of this review was to identify and evaluate the gambling screening tools currently in use and to assess their utility as part of a public health approach to addressing gambling-related harm.

Method

Registration

The protocol for this review was registered with PROSPERO on 21 August 2018 (Registration Number: CRD42018106820).

Eligibility criteria

Types of study and design

The review included studies that sought to examine methods of screening for disordered gambling behaviour. Because of the general paucity of such literature, no restriction was placed on type of study design. Papers were excluded if they were not available in English at the time of screening, did not use a standardised method of identifying gambling-related harm, did not include individuals identified as gamblers, or had not undergone peer review. While the exclusion of non-English-language papers is not ideal, the process of accurately translating studies was beyond the scope of this review, and the validation of a tool in one language does not equate to validation in another.

Reference standard

Studies needed to use a valid method of screening for gambling issues as a reference standard. Possible reference standards include semi-structured clinical interviews adhering to the Diagnostic and Statistical Manual of Mental Disorders, Fourth Edition (DSM-IV), DSM-5, International Classification of Diseases, Tenth Revision (ICD-10) or ICD-11 classification of gambling disorder (and previous definitions of the disorder) or other validated screening tools. For a gambling measure to be considered valid it had to have undergone some level of reliability and validity testing elsewhere in the literature.

Population

Gamblers of any age and severity level were included. Studies eligible for inclusion needed to identify the sample as taking part in some level of gambling behaviour; a clinical diagnosis was not required.

Information sources

CINAHL Plus, Embase, MEDLINE, ProQuest Psychology Database, PsycArticles and PsycINFO were used. The databases were searched using a predefined set of terms (see Table 1) that were combined using the Boolean operator “AND”. The searches for the screening papers were combined with the searches for the separate intervention review. These returns were initially screened together, then separated into their respective categories following title and abstract screening. The full list of search terms is given in Table 1 for the sake of transparency. The initial searches were carried out between August 2018 and September 2018, with a subsequent updated search carried out in January 2020.

Study selection

Authors 1 and 3 independently screened prospective papers for inclusion. All retrieved papers were entered into EndNote and then exported to Covidence. Reviewers then independently carried out a title and abstract screening and excluded papers that failed to meet the inclusion criteria. Next, full-text screening took place against a predetermined checklist, and relevant information from each included paper was entered into the data extraction form by both reviewers independently (see Fig. 1). Any unresolvable disagreements were addressed with additional reviewers.

Data collection process

The data extraction form was based on the Cochrane data collection form for intervention reviews of randomised controlled trials (RCTs) and non-RCTs (Higgins et al. 2019). These forms were piloted by authors 1 and 3 independently to gauge their effectiveness. Data were extracted by author 1 and checked for accuracy by author 3.

Data items

The extracted data included (1) participants (including demographics and case severity, sample size, and inclusion and exclusion criteria), (2) screening tool (including description of the tool, method of delivery and comparators/reference standards used) and (3) findings (including accuracy of the tools, testing setting, administration and interpretation).

Risk of bias in individual studies

The reviewers independently assessed the risk of bias using the QUADAS-2 (Whiting et al. 2011), a tool that grades the applicability and risk of bias for primary diagnostic accuracy studies. Papers were graded across four domains: patient selection, index test, reference standard, and flow and timing. Signalling questions were used to highlight areas of the study design that may contain bias, for example, “Could the selection of patients have introduced bias?” and “Is the reference standard likely to correctly classify the target condition?” Following this, questions relating to the applicability of the tool were asked, which included items like “Are there concerns that the index test, its conduct, or its interpretation differ from the review question?” Each domain contained three signalling questions and one applicability question, excluding the flow and timing domain, which did not include an applicability question, with each rated as “low/high/unclear” risk of bias. The QUADAS-2 is a robust and effective tool for assessing the quality of diagnostic accuracy studies as part of a systematic review (Whiting et al. 2011).

Summary measures

The primary outcome measures taken from the papers were the levels of specificity and sensitivity relating to the accuracy of the measure in question. Additionally, measures of validity and reliability were examined.
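
As a reminder of how these two outcome measures are derived, the following minimal sketch computes sensitivity and specificity from a 2 × 2 cross-classification of an index test against a reference standard. The class name, function names and counts are illustrative assumptions and are not taken from any of the included studies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Cross-classification of an index test against a reference standard."""
    true_pos: int   # index test positive, reference standard positive
    false_pos: int  # index test positive, reference standard negative
    false_neg: int  # index test negative, reference standard positive
    true_neg: int   # index test negative, reference standard negative


def sensitivity(c: ConfusionCounts) -> float:
    """Proportion of reference-positive cases that the index test flags."""
    return c.true_pos / (c.true_pos + c.false_neg)


def specificity(c: ConfusionCounts) -> float:
    """Proportion of reference-negative cases that the index test clears."""
    return c.true_neg / (c.true_neg + c.false_pos)


# Hypothetical counts, chosen only for illustration.
example = ConfusionCounts(true_pos=45, false_pos=30, false_neg=5, true_neg=420)
print(f"sensitivity = {sensitivity(example):.2f}")  # 45 / 50
print(f"specificity = {specificity(example):.2f}")  # 420 / 450
```

Both quantities are only as trustworthy as the reference standard that defines the "positive" and "negative" columns, which is why the choice of reference standard matters for every accuracy figure extracted.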

Planned methods of analysis

To gauge the overall accuracy of the various gambling screening methods, a meta-analysis was planned. However, due to the lack of consistency across the papers regarding how various screening tools were psychometrically tested, it was not possible to carry out a meta-analysis.

Results

A total of 54 studies met the inclusion criteria, examining 39 separate tools (screening/diagnostic tools, attitude measures and scales). The search of PsycArticles, PubMed, PsycINFO, ScienceDirect, CINAHL, Embase, ProQuest and MEDLINE returned 7619 studies (with 6178 remaining after duplicate removal). A further 5626 papers were excluded during title and abstract screening. Eighty-nine were identified as screening-specific papers and underwent full-text review. Of these, 35 papers were excluded for not meeting the criteria (see Fig. 1).
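
The selection flow can be checked with simple arithmetic. The sketch below uses only the counts stated in the paragraph above; the variable names are illustrative. The text does not state where the records that passed title and abstract screening but were not screening-specific were routed, so the final comment is an assumption based on the combined search described in the Method.

```python
# Counts as reported in the text.
records_returned = 7619
after_duplicate_removal = 6178
excluded_title_abstract = 5626
screening_full_text = 89
excluded_full_text = 35

duplicates_removed = records_returned - after_duplicate_removal
passed_title_abstract = after_duplicate_removal - excluded_title_abstract
included_studies = screening_full_text - excluded_full_text

# Records that passed title/abstract screening but were not screening-specific.
# Assumption: these belong to the separate intervention review.
not_screening_specific = passed_title_abstract - screening_full_text

print(duplicates_removed)      # 1441
print(passed_title_abstract)   # 552
print(not_screening_specific)  # 463
print(included_studies)        # 54
```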

Study characteristics

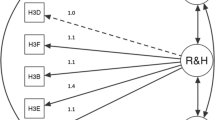

The 54 studies (see Table 2) examined a number of psychometric properties including construct validity (n = 5), concurrent validity (n = 13), convergent validity (n = 21), discriminant validity (n = 13), criterion validity (n = 7), predictive validity (n = 7), known-group validity (n = 2), internal consistency (n = 26), temporal stability (n = 3), differential item functioning (DIF; n = 2), inter-rater reliability (n = 1), test–retest reliability (n = 2), receiver operating characteristics (n = 2), Rasch modelling (n = 5) and classification accuracy (n = 21).

Sixty-two reference standards were used across the 54 studies (see Table 3). In many cases the tool was not explicitly referred to as a reference or gold standard. For the sake of uniformity, any measure that was used in some regard to validate an index test was regarded as a reference standard. The purpose of this holistic approach was to provide a comprehensive view regarding the measures used to test the reliability and validity of different gambling tools.

Participants

The included studies involved 105,061 participants (see Table 4). Of the 54 studies, three used samples of adolescents (n = 5403). Fifty studies used samples of adults (n = 94,344). Lastly, one study used a sample containing both adults (n = 2014) and adolescents (n = 3300).

Risk of bias within the studies

Overall, 51 papers were identified as being at risk of bias and five papers were identified as having concerns regarding applicability (Fig. 2). Of the 54 papers, only two were identified as being at low risk of bias and having no concerns regarding their applicability (Dellis et al. 2014; Goodie et al. 2013) (Table 5).

Patient selection

Twenty-six studies were rated as being at low risk of including bias relating to the participant selection. Twenty-two were identified as being at high risk of including bias, and a further six were deemed to be lacking sufficient detail to rate the paper as either high- or low-risk.

High risk of bias

A number of the studies included all-male (or predominantly male) samples (Johnson et al. 1997; Nelson and Oehlert 2008; Strong et al. 2003; Wickwire et al. 2008). A screening tool/test for gambling behaviour that is only validated with males is not generalisable to females due to the meaningful gender differences found in the literature (Blanco et al. 2006). Two studies used samples which contained individuals with substance use disorders or mental health issues (Dowling et al. 2018a; Petry 2003). The study by Petry (2003) included a range of subsamples, but all were identified as either having a problem with gambling or having a substance use disorder, and the study did not include general population samples. Some studies only drew on treatment-seeking samples and did not include general population samples when appropriate (Beaudoin and Cox 1999; Brett et al. 2014; Sullivan et al. 2007). Sullivan et al. (2007) drew upon an inmate population, while Brett et al. (2014) did not include a general population sample. Lastly, one study (Dowling et al. 2018b) did not comment on the representativeness of its sample.

A portion of the studies also drew upon student samples as a stand-in for a general population sample (Arterberry et al. 2015; Arthur et al. 2008; Christo et al. 2003; Fortune and Goodie 2010; Steenbergh et al. 2002; Strong et al. 2003; Strong et al. 2004). This is an issue, as there is evidence to suggest that some student samples represent a more at-risk group in terms of gambling risk (Nowak 2017). As such, students are not an appropriate subgroup to use when investigating how a measure functions in general population samples. Lastly, one study (Browne et al. 2017) recruited its participants through a survey website that incentivised participation with cash rewards.

Index test

Fifty-two papers were identified as being at low risk of experiencing bias due to the application of the index test. Two studies were identified as high-risk (Arthur et al. 2008; Derevensky and Gupta 2000) due to limitations in the implementation of the index test. In regard to the study by Arthur et al. (2008), many participants did not understand the wording of the measure and were asked to respond in public places on university grounds. Similarly, in the Derevensky and Gupta (2000) study, students completed the index test during school hours in a classroom environment. Finally, one paper was graded as unclear (Chamberlain and Grant 2018), as it lacked a detailed description of the screening tools delivered in relation to the study’s clinical assessment of the participants.

Reference standard

Of the 54 papers, only six were rated as being at low risk of bias, as they employed a clinical interview as a reference standard (Chamberlain and Grant 2018; Dellis et al. 2014; Goodie et al. 2013; Murray et al. 2005; Stinchfield et al. 2017; Weinstock et al. 2007). One study (Sullivan et al. 2007) reported using clinicians to assess/diagnose participants but the procedure was not clearly reported. The remaining 47 papers did not include a gold standard method of identifying problem/gambling disorder; 25 used the South Oaks Gambling Screen (SOGS; Lesieur and Blume 1987) as the reference standard. The SOGS’s issues with accuracy are well documented (Goodie et al. 2013), and it is not an appropriate reference standard. The same is true for any other reference standard that is not a diagnostic clinical interview. As such, any study that did not use a diagnostic clinical interview as a reference standard was automatically rated as high-risk in this domain.

Flow and timing

Forty-nine studies were identified as low-risk, while two studies were identified as high-risk (Petry 2007; Christo et al. 2003). Petry (2007) included an especially long break between follow-up measures (1 month followed by 12 months), while in the Christo et al. (2003) study, the clinical and general population samples were administered different screening procedures. Additionally, three studies were rated as unclear due to reporting and methodological issues (Arterberry et al. 2015; Beaudoin and Cox 1999; Smith et al. 2013).

Discussion

This review has highlighted a number of meaningful deficits in the field of diagnostic testing for gambling issues. At present there is not enough good-quality or consistent data to carry out meta-analyses, and as a result, any evidence supporting the validity, reliability and accuracy of these tests/tools needs to be interpreted conservatively. Furthermore, the ability of these tools to support a public health approach to reducing harm is debatable. However, it is important to reiterate that the aim of this paper is not to draw direct comparisons between the tools, as no such comparison can be made in many cases. The purpose of this review is strictly to provide a broad snapshot of the field that highlights the trends relating to testing, applicability and usefulness. What is clear is that the evidence presented here corroborates the long-held belief in the gambling research sphere that many of the widely used gambling screening tools are not fit for purpose as part of a public health approach to harm prevention.

The majority of the tools examined in the review do not identify risk factors, and instead tend to be overly focused on a clinical diagnostic outcome, creating a false dichotomy between the classification of problem gambler and non-problem gambler. The structured parameters of strict categories may be useful from a clinical standpoint, allowing for the efficient identification of individuals who require immediate treatment and those who do not. However, when using such tools in a public health framework, they do not allow practitioners to achieve the fundamental aim of early intervention (Livingstone and Rintoul 2020). Concerns have been raised regarding the identification of gambling harm, with many prevalence statistics (Markham and Young 2016) and studies tending to concentrate their attention on high-risk samples (Roberts et al. 2021) while overlooking those who sit lower on the continuum of harm. Our research suggests that this is in part due to how gambling harm is measured and conceptualised within the literature. While many researchers likely hold this assertion, this is the first piece of research to provide comprehensive evidence for it. This research has highlighted the need for a paradigm shift in how gambling harm is conceptualised and measured, as the symptom-counting approach is not fit for this purpose and likely contributes to the bias, which adds further credence to the utility of a public health framework adopted by many researchers within the field.

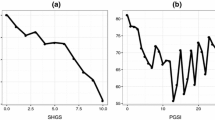

The traditional approach to conceptualising and identifying gambling harm has changed, and the focus has shifted from clinical presentation to the examination of subclinical indicators of harm (Abbott 2020; Wardle et al. 2019). As such, many screening tools discussed in the literature are not fit for this purpose for several reasons ranging from methodological issues to theoretical ones. Such screening tools fall short across two domains, the first being pure clinical accuracy (Otto et al. 2020) and the second relating to their utility as part of a public health approach (Wardle et al. 2019). This is not to say that clinically focused tools are inherently without merit; indeed, this review has identified that some show good accuracy, validity and reliability and have employed rigorous psychometric testing (Dellis et al. 2014; Goodie et al. 2013). Such screening tools have utility when employed in the domain for which they were designed, namely in clinical settings with higher-risk gamblers. However, whilst psychometrically robust, such tools may not be useful for early identification. Screening tools such as the SOGS (Lesieur and Blume 1987) are designed to identify clinical levels of gambling behaviour. Moreover, even tools validated against the SOGS or the Diagnostic Interview for Gambling Severity (DIGS) may share this orientation towards high-risk groups. If the aim is to identify levels of gambling harm across continua of harm as part of a public health approach to harm reduction, then many gambling screening tools in their current form are not able to adequately achieve this. It should be noted that this work aims to highlight the shortage of tools designed specifically for early identification and not to negatively evaluate harm-based tools in a domain in which they were not designed to operate. Employing an addiction-focused tool to quantify the totality of risk and harms constitutes misuse (Browne et al. 2017), a critique that has been levelled at some implementations of the Problem Gambling Severity Index (PGSI; Svetieva and Walker 2008). There is a clear need for new tools to identify risk factors in low-risk samples of gamblers, allowing for earlier identification and upstream harm prevention. Tools such as the Short Gambling Harm Screen (Browne et al. 2017) that examine risks and harms rather than symptomology show the greatest promise. However, many such tools lack robust psychometric testing outside of initial validation.

One prominent issue identified relates to the lack of detailed reporting of the positive and negative rates produced by the screening tool in question. Only 10 of the papers clearly reported these rates. These scores are vital when attempting to carry out a meta-analysis to calculate the aggregate sensitivity and specificity of a given measure across a number of studies. In the present sample, which included 22 papers reporting accuracy scores, it was not possible to run a meta-analysis, the primary reason being the lack of a consistent approach and flaws in reporting.

Another issue related to the large degree of variability in the sample selection. Evidence has shown that the sensitivity and specificity of certain gambling measures vary based on the sample they are being administered to (Strong et al. 2003; Williams and Volberg 2013). Additionally, detail must be given regarding the eventual intended usage and role the measure will fulfil. The accuracy requirement for a screening measure used in triage with a clinical assessment will differ from that of a single-use tool used for screening. This is specifically highlighted by the SOGS, a tool that shows good utility when used in triage with a clinical assessment but falls short due to high false-positive rates when used alone (Goodie et al. 2013). Of the 25 studies that used the SOGS as a reference standard, only two (Stinchfield et al. 2017; Sullivan et al. 2007) employed the SOGS alongside a diagnostic interview. In light of this, researchers should not only focus on reporting the psychometric testing of a measure but also explicitly report the measure’s intended usage (screening, diagnosis, patient monitoring, etc.) and its role (triage, single-use, etc.).
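
The difference between a triage role and a single-use role can be illustrated with a short sketch of serial testing, in which only those who screen positive go on to a confirmatory assessment and a case is counted as positive only if both steps agree. The function name and all accuracy values are hypothetical, and the calculation assumes that the two tests err independently given a person's true status, which will not hold exactly in practice.

```python
def serial_accuracy(se_screen, sp_screen, se_confirm, sp_confirm):
    """Accuracy of 'screen, then confirm positives' (positive only if both agree).

    Assumption: errors of the two tests are independent given true status.
    """
    sensitivity = se_screen * se_confirm
    specificity = 1 - (1 - sp_screen) * (1 - sp_confirm)
    return sensitivity, specificity


# Hypothetical values: a sensitive screen with many false positives,
# followed by a more specific confirmatory assessment.
se, sp = serial_accuracy(se_screen=0.95, sp_screen=0.80,
                         se_confirm=0.90, sp_confirm=0.98)
print(f"screen alone:          sensitivity=0.950 specificity=0.800")
print(f"screen + confirmation: sensitivity={se:.3f} specificity={sp:.3f}")
```

Under these assumed values, the false-positive rate of the screen is largely absorbed by the confirmatory step at some cost in sensitivity, which is consistent with the point that a tool may be acceptable in triage yet fall short when used alone.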

Reference standard selection was also identified as an issue. The reference standard should be a tool that is regarded as being correct 100% of the time, meaning any divergence in the classification between the index test and the reference standard is a result of the underperforming index test. In many cases, however, tools such as the SOGS, which has poor specificity when compared to a clinical interview, are used. This is especially concerning as three accuracy studies (and 21 of the validity and reliability studies) used it as a reference standard. The high false-positive rate could be seriously undermining the reliability of these findings. Another measure that was frequently used as a reference standard (in seven studies) was the PGSI, which has also shown some deficits in accuracy when compared to a clinical interview. The study by Dellis et al. (2014) reported the PGSI as having a positive predictive value (PPV) of .63, meaning that the probability that an individual classified as a low-risk gambler (PGSI score of 3+) had been correctly identified was only 63%. This level of accuracy may be acceptable in a screening setting, but when used as a reference standard this level of inaccuracy is a meaningful weakness.
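
Because a PPV depends on how common the condition is in the sample as well as on the tool itself, the same tool can yield very different PPVs across settings. The sketch below applies Bayes' rule to hypothetical sensitivity, specificity and prevalence values; it does not reproduce the figures reported by Dellis et al. (2014), and the function name is illustrative.

```python
def positive_predictive_value(sens: float, spec: float, prevalence: float) -> float:
    """Probability that a positive result is a true positive (Bayes' rule)."""
    true_pos = sens * prevalence
    false_pos = (1 - spec) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


# Hypothetical tool with 90% sensitivity and 90% specificity,
# applied to samples with different underlying prevalence.
for prevalence in (0.01, 0.10, 0.50):
    ppv = positive_predictive_value(0.90, 0.90, prevalence)
    print(f"prevalence={prevalence:.2f}  PPV={ppv:.2f}")
# prevalence=0.01  PPV=0.08
# prevalence=0.10  PPV=0.50
# prevalence=0.50  PPV=0.90
```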

The final method used to psychometrically evaluate the index tests was a modified set of DSM criteria with dichotomised (yes/no) response options. There are three key issues to be mindful of when employing the DSM criteria in this manner. Firstly, the classification for gambling issues has changed between the DSM-IV and the DSM-5. The diagnosis now requires only four criteria to be met (instead of five previously in the DSM-IV) within a 12-month window. In a study by Weinstock (2013), the prevalence rates of gambling disorder increased by 9% as a result of these changes. Petry et al. (2013) identified a similar finding, with prevalence rates in a treatment facility increasing from 16.2% to 18.1% with the implementation of the DSM-5 criteria. This is an issue, as many screening tools were initially validated against the more conservative DSM-IV criteria. In the present review, a total of 15 studies used the DSM-IV as a reference standard (some using it in conjunction with other tools), while a further two papers used the DSM-IV criteria after the introduction of the DSM-5 criteria in 2013 (Brett et al. 2014; Bouju et al. 2013).
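
The effect of the lower threshold can be shown with a minimal sketch that scores one set of dichotomised (yes/no) answers against both cut-offs. The thresholds are those stated above; the respondent, the function name and the number of items are hypothetical. The item lists themselves differ between the two editions and the 12-month window is not modelled, so this illustrates the cut-off only.

```python
DSM_IV_THRESHOLD = 5  # criteria required under the DSM-IV, per the text
DSM_5_THRESHOLD = 4   # criteria required under the DSM-5, per the text


def meets_threshold(responses, threshold):
    """Return True if the number of endorsed (True) criteria reaches the threshold."""
    return sum(responses) >= threshold


# Hypothetical respondent endorsing exactly four criteria.
responses = [True, True, True, True, False, False, False, False, False]

print("DSM-IV cut-off:", meets_threshold(responses, DSM_IV_THRESHOLD))  # False
print("DSM-5 cut-off: ", meets_threshold(responses, DSM_5_THRESHOLD))   # True
```

A respondent of this kind would be counted as a non-case by a tool validated against the DSM-IV cut-off but as a case under the DSM-5 cut-off, which is one way the change can shift apparent accuracy.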

Secondly, while many of the papers draw on the DSM-IV/DSM-5 criteria, the way in which they implement them varies across the studies. A 12-item DSM-IV dichotomised questionnaire is used in two studies (Johnson et al. 1997; 1998), while a 19-item DSM-IV questionnaire is used in two other studies (Stinchfield 2002; Strong et al. 2004). In all, a total of five versions of the DSM-IV criteria were used among the studies in the review. This evidence would suggest that while most studies are drawing upon a reliable reference standard in the form of the DSM-IV criteria, there is no standardised method of doing so.

Thirdly, the DSM-IV/DSM-5 diagnostic criteria are designed to act as a guide for mental health practitioners when assessing the symptomology of a patient. They are not designed to be delivered as a questionnaire with dichotomous answers. There is an implicit assumption in the literature that delivering the DSM-IV/DSM-5 criteria in this manner is perfectly valid. However, there is very little testing to examine the validity, reliability and accuracy of this approach. While there is evidence to suggest that some of these iterations of the self-report DSM criteria appear to be valid (Beaudoin and Cox 1999; Stinchfield et al. 2005), the method of testing is questionable. The reference standard in these cases was the SOGS and not a diagnostic interview, and as such their accuracy is still debatable. The SOGS is a measure that was initially developed from the DSM criteria, so concordance between the two is expected.

Conclusion

This review has provided a broad picture of the field of gambling screening. Moreover, it highlights several significant weaknesses common in many screening tools, which draw into question their utility as part of a public health framework aimed at prevention. A public health approach to gambling-related harm necessitates screening tools that are fit for purpose. However, this comprehensive review of 39 separate gambling measures has established that the vast majority do not adequately meet the needs of such an approach. This is for several reasons, but principal among them is the focus on clinical symptomology over the identification of risk factors. This fact has been largely assumed in the literature, but this review provides concrete evidence to support that assumption. A paradigm shift in the way disordered gambling is conceptualised must occur so that low-risk individuals might be identified sooner, facilitating the implementation of meaningful harm prevention and reduction strategies. More broadly, this research has also identified a number of issues with the quality and validity of the testing for many screening tools. Whilst many show a great deal of utility, it is important to be mindful of the limitations apparent in much of the literature. Finally, this review had some limitations that must be considered. First, the review included only English-language papers, so non-English-language papers with applicable findings would not have been included; as such, the findings are largely relevant only to Western, English-speaking countries. Therefore, it is important not to generalise the findings discussed within this review outside that specific context. Second, due to the variability in the included papers, no direct comparison could be made regarding the performance of the included screening tools. However, this is a consistent issue within the literature and is not exclusive to this review.

Data availability

All materials are available upon request to the corresponding author.

Code availability

Not applicable.

Change history

18 March 2022

A Correction to this paper has been published: https://doi.org/10.1007/s10389-022-01703-5

References

Abbott M (2020) The changing epidemiology of gambling disorder and gambling-related harm: public health implications. Pub Health 184:41–45. https://doi.org/10.1016/j.puhe.2020.04.003

Afifi T, Brownridge D, MacMillan H, Sareen J (2010) The relationship of gambling to intimate partner violence and child maltreatment in a nationally representative sample. J Psychiatr Res 44:331–337. https://doi.org/10.1016/j.jpsychires.2009.07.010

Arterberry B, Martens M, Takamatsu S (2015) Development and validation of the gambling problems scale. J Gambl Issues 124. https://doi.org/10.4309/jgi.2015.30.5

Arthur D, Tong W, Chen C et al (2008) The validity and reliability of four measures of gambling behaviour in a sample of Singapore University students. J Gambl Stud 24:451–462. https://doi.org/10.1007/s10899-008-9103-y

Barnes G, Welte J, Tidwell M, Hoffman J (2015) Gambling and substance use: co-occurrence among adults in a recent general population study in the United States. Int Gambl Stud 15:55–71. https://doi.org/10.1080/14459795.2014.990396

Beaudoin C, Cox B (1999) Characteristics of problem gambling in a Canadian context: a preliminary study using a DSM-IV-based questionnaire. Can J Psychiatr 44:483–487. https://doi.org/10.1177/070674379904400509

Blanco C, Hasin D, Petry N, Stinson F, Grant B (2006) Sex differences in subclinical and DSM-IV pathological gambling: results from the National Epidemiologic Survey on alcohol and related conditions. Psychol Med 36:943. https://doi.org/10.1017/s0033291706007410

Blank L, Baxter S, Woods H, Goyder E (2021) Interventions to reduce the public health burden of gambling-related harms: a mapping review. Lancet Pub Health 6:e50–e63. https://doi.org/10.1016/s2468-2667(20)30230-9

Bouju G, Hardouin J, Boutin C et al (2013) A shorter and multidimensional version of the gambling attitudes and beliefs survey (GABS-23). J Gambl Stud. https://doi.org/10.1007/s10899-012-9356-3

Brett E, Weinstock J, Burton S et al (2014) Do the DSM-5 diagnostic revisions affect the psychometric properties of the brief biosocial gambling screen? Int Gambl Stud 14:447–456. https://doi.org/10.1080/14459795.2014.931449

Browne M, Goodwin B, Rockloff M (2017) Validation of the short gambling harm screen (SGHS): a tool for assessment of harms from gambling. J Gambl Stud 34:499–512. https://doi.org/10.1007/s10899-017-9698-y

Cassidy R (2020) Vicious games: capitalism and gambling. Pluto Press, London

Castrén S, Heiskanen M, Salonen A (2018) Trends in gambling participation and gambling severity among Finnish men and women: cross-sectional population surveys in 2007, 2010 and 2015. BMJ Open 8:e022129. https://doi.org/10.1136/bmjopen-2018-022129

Chamberlain S, Grant J (2018) Initial validation of a transdiagnostic compulsivity questionnaire: the Cambridge–Chicago Compulsivity Trait Scale. CNS Spectr 23:340–346. https://doi.org/10.1017/s1092852918000810

Christo G, Jones S, Haylett S et al (2003) The shorter PROMIS questionnaire: further validation of a tool for simultaneous assessment of multiple addictive behaviours. Addict Behav 28:225–248. https://doi.org/10.1016/s0306-4603(01)00231-3

Cowlishaw S, Merkouris S, Chapman A, Radermacher H (2014) Pathological and problem gambling in substance use treatment: a systematic review and meta-analysis. J Subst Abus Treat 46:98–105. https://doi.org/10.1016/j.jsat.2013.08.019

Cox B, Enns M, Michaud V (2004) Comparisons between the South Oaks Gambling Screen and a DSM-IV-based interview in a community survey of problem gambling. Can J Psychiatr 49:258–264. https://doi.org/10.1177/070674370404900406

Dellis A, Sharp C, Hofmeyr A et al (2014) Criterion-related and construct validity of the Problem Gambling Severity Index in a sample of South African gamblers. S Afr J Psychol 44:243–257. https://doi.org/10.1177/0081246314522367

Derevensky J, Gupta R (2000) Prevalence estimates of adolescent gambling: a comparison of the SOGS-RA, DSM-IV-J, and the GA 20 questions. J Gambl Stud 16:227–251. https://doi.org/10.1023/a:1009485031719

Dowling N, Ewin C, Youssef G et al (2018a) Problem gambling and family violence: findings from a population-representative study. J Behav Addict 7:806–813. https://doi.org/10.1556/2006.7.2018.74

Dowling N, Jackson A, Suomi A et al (2014) Problem gambling and family violence: prevalence and patterns in treatment-seekers. Addict Behav 39:1713–1717. https://doi.org/10.1016/j.addbeh.2014.07.006

Dowling N, Merkouris S, Manning V et al (2018b) Screening for problem gambling within mental health services: a comparison of the classification accuracy of brief instruments. Addiction 113:1088–1104. https://doi.org/10.1111/add.14150

Fortune E, Goodie A (2010) Comparing the utility of a modified diagnostic interview for gambling severity (DIGS) with the South Oaks Gambling Screen (SOGS) as a research screen in college students. J Gambl Stud 26:639–644. https://doi.org/10.1007/s10899-010-9189-x

GambleAware (2016) Cards on the table: the cost to government associated with people who are problem gamblers in Britain. Institute for Public Policy Research, London. https://www.ippr.org/files/publications/pdf/Cards-on-the-table_Dec16.pdf

Goodie A, MacKillop J, Miller J et al (2013) Evaluating the South Oaks Gambling Screen with DSM-IV and DSM-5 criteria. Assessment 20:523–531. https://doi.org/10.1177/1073191113500522

Heather N, Stockwell T (2004) The essential handbook of treatment and prevention of alcohol problems. Wiley, Chichester, West Sussex, England

Higgins J, Thomas J, Chandler J, Cumpston M, Li T, Page M et al (2019) Cochrane handbook for systematic reviews of interventions. 2nd ed. Wiley, Chichester

Himelhoch S, Miles-McLean H, Medoff D et al (2015) Evaluation of brief screens for gambling disorder in the substance use treatment setting. Am J Addict 24:460–466. https://doi.org/10.1111/ajad.12241

Hofmarcher T, Romild U, Spångberg J et al (2020) The societal costs of problem gambling in Sweden. BMC Pub Health. https://doi.org/10.1186/s12889-020-10008-9

Holdsworth L, Tiyce M (2012) Exploring the hidden nature of gambling problems among people who are homeless. Aust Soc Work 65:474–489. https://doi.org/10.1080/0312407x.2012.689309

Jauregui P, Estévez A, Urbiola I (2016) Pathological gambling and associated drug and alcohol abuse, emotion regulation, and anxious-depressive symptomatology. J Behav Addict 5:251–260. https://doi.org/10.1556/2006.5.2016.038

John B, Holloway K, Davies N et al (2020) Gambling harm as a global public health concern: a mixed method investigation of trends in Wales. Front Pub Health. https://doi.org/10.3389/fpubh.2020.00320

Johnson E, Hamer R, Nora R et al (1997) The lie/bet questionnaire for screening pathological gamblers. Psychol Rep 80:83–88. https://doi.org/10.2466/pr0.1997.80.1.83

Johnson EE, Hamer RM, Nora RM (1998) The lie/bet questionnaire for screening pathological gamblers: a follow-up study. Psychol Rep 83(3_suppl):1219–1224. https://doi.org/10.2466/pr0.1998.83.3f.1219

Langham E, Thorne H, Browne M et al (2015) Understanding gambling related harm: a proposed definition, conceptual framework, and taxonomy of harms. BMC Pub Health. https://doi.org/10.1186/s12889-016-2747-0

Lesieur H, Blume S (1987) The South Oaks Gambling Screen (SOGS): a new instrument for the identification of pathological gamblers. Am J Psychiatry 144:1184–1188. https://doi.org/10.1176/ajp.144.9.1184

Lipmann B, Mirabelli F, Rota-Bartelink A (2004) Homelessness among older people. Wintringham, Melbourne

Livingstone C, Rintoul A (2020) Moving on from responsible gambling: a new discourse is needed to prevent and minimise harm from gambling. Pub Health 184:107–112. https://doi.org/10.1016/j.puhe.2020.03.018

Markham F, Young M (2016) Commentary on Dowling et al. (2016): is it time to stop conducting problem gambling prevalence studies? Addiction 111:436–437. https://doi.org/10.1111/add.13216

Merkouris S, Greenwood C, Manning V et al (2020) Enhancing the utility of the Problem Gambling Severity Index in clinical settings: identifying refined categories within the problem gambling category. Addict Behav 103:106257. https://doi.org/10.1016/j.addbeh.2019.106257

Murray V, Ladouceur R, Jacques C (2005) Classification of gamblers according to the NODS and a clinical interview. Int Gambl Stud 5:57–61. https://doi.org/10.1080/14459790500099463

Nelson K, Oehlert M (2008) Evaluation of a shortened South Oaks Gambling Screen in veterans with addictions. Psychol Addict Behav 22:309–312. https://doi.org/10.1037/0893-164x.22.2.309

Nowak D (2017) A meta-analytical synthesis and examination of pathological and problem gambling rates and associated moderators among college students, 1987–2016. J Gambl Stud 34:465–498. https://doi.org/10.1007/s10899-017-9726-y

Orford J, Wardle H, Griffiths M (2012) What proportion of gambling is problem gambling? Estimates from the 2010 British gambling prevalence survey. Int Gambl Stud 13:4–18. https://doi.org/10.1080/14459795.2012.689001

Otto J, Smolenski D, Garvey Wilson A et al (2020) A systematic review evaluating screening instruments for gambling disorder finds lack of adequate evidence. J Clin Epidemiol 120:86–93. https://doi.org/10.1016/j.jclinepi.2019.12.022

Pallanti S, DeCaria C, Grant J et al (2005) Reliability and validity of the pathological gambling adaptation of the Yale-Brown obsessive-compulsive scale (PG-YBOCS). J Gambl Stud 21:431–443. https://doi.org/10.1007/s10899-005-5557-3

Parhami I, Mojtabai R, Rosenthal R, Afifi T, Fong T (2014) Gambling and the onset of comorbid mental disorders. J Psychiatr Pract 20:207–219. https://doi.org/10.1097/01.pra.0000450320.98988.7c

Petry N (2003) Validity of a gambling scale for the addiction severity index. J Nerv Ment Dis 191:399–407. https://doi.org/10.1097/00005053-200306000-00008

Petry N (2007) Concurrent and predictive validity of the addiction severity index in pathological gamblers. Am J Addict 16:272–282. https://doi.org/10.1080/10550490701389849

Petry N, Blanco C, Auriacombe M et al (2013) An overview of and rationale for changes proposed for pathological gambling in DSM-5. J Gambl Stud 30:493–502. https://doi.org/10.1007/s10899-013-9370-0

Petry N, Stinson F, Grant B (2005) Comorbidity of DSM-IV pathological gambling and other psychiatric disorders. J Clin Psychiatry 66:564–574. https://doi.org/10.4088/jcp.v66n0504

Raylu N, Oei T (2004) The gambling urge scale: development, confirmatory factor validation, and psychometric properties. Psychol Addict Behav 18:100–105. https://doi.org/10.1037/0893-164x.18.2.100

Roberts A, Rogers J, Sharman S, Melendez-Torres GL, Cowlishaw S (2021) Gambling problems in primary care: a systematic review and meta-analysis. Addict Res Theory 29(6):454–468. https://doi.org/10.1080/16066359.2021.1876848

Smith DP, Pols RG, Battersby MW, Harvey PW (2013) The gambling urge scale: reliability and validity in a clinical population. Addict Res Theory 21(2):113–122. https://doi.org/10.3109/16066359.2012.696293

Steenbergh T, Meyers A, May R, Whelan J (2002) Development and validation of the Gamblers' beliefs questionnaire. Psychol Addict Behav 16:143–149. https://doi.org/10.1037/0893-164x.16.2.143

Stewart S, Zack M, Collins P et al (2008) Subtyping pathological gamblers on the basis of affective motivations for gambling: relations to gambling problems, drinking problems, and affective motivations for drinking. Psychol Addict Behav 22:257–268. https://doi.org/10.1037/0893-164x.22.2.257

Stinchfield R (2002) Reliability, validity, and classification accuracy of the South Oaks Gambling Screen (SOGS). Addict Behav 27:1–19. https://doi.org/10.1016/s0306-4603(00)00158-1

Stinchfield R, Govoni R, Ron Frisch G (2005) DSM-IV diagnostic criteria for pathological gambling: reliability, validity, and classification accuracy. Am J Addict 14:73–82. https://doi.org/10.1080/10550490590899871

Stinchfield R, Wynne H, Wiebe J, Tremblay J (2017) Development and psychometric evaluation of the brief adolescent gambling screen (BAGS). Front Psychol. https://doi.org/10.3389/fpsyg.2017.02204

Strong D, Breen R, Lesieur H, Lejuez C (2003) Using the Rasch model to evaluate the South Oaks Gambling Screen for use with nonpathological gamblers. Addict Behav 28:1465–1472. https://doi.org/10.1016/s0306-4603(02)00262-9

Strong D, Lesieur H, Breen R et al (2004) Using a Rasch model to examine the utility of the South Oaks Gambling Screen across clinical and community samples. Addict Behav 29:465–481. https://doi.org/10.1016/j.addbeh.2003.08.017

Sullivan S, Brown R, Skinner B (2007) Pathological and sub-clinical problem gambling in a New Zealand prison: a comparison of the Eight and SOGS gambling screens. Int J Ment Health Addict 6:369–377. https://doi.org/10.1007/s11469-007-9070-z

Svetieva E, Walker M (2008) Inconsistency between concept and measurement: the Canadian problem gambling index (CPGI). J Gambl Issues 22:157. https://doi.org/10.4309/jgi.2008.22.2

Suomi A, Dowling N, Jackson A (2014) Problem gambling subtypes based on psychological distress, alcohol abuse and impulsivity. Addict Behav 39:1741–1745. https://doi.org/10.1016/j.addbeh.2014.07.023

Victorian Responsible Gambling Foundation (2016) Assessing gambling-related harm in Victoria: a public health perspective. Melbourne

Wardle H, Degenhardt L, Ceschia A, Saxena S (2021) The lancet public health commission on gambling. Lancet Pub Health 6:e2–e3. https://doi.org/10.1016/s2468-2667(20)30289-9

Wardle H, Reith G, Langham E, Rogers R (2019) Gambling and public health: we need policy action to prevent harm. BMJ l1807. https://doi.org/10.1136/bmj.l1807

Weinstock J, Rash C, Burton S et al (2013) Examination of proposed DSM-5 changes to pathological gambling in a helpline sample. J Clin Psychol 69:1305–1314. https://doi.org/10.1002/jclp.22003

Weinstock J, Whelan J, Meyers A, McCausland C (2007) The performance of two pathological gambling screens in college students. Assessment 14:399–407. https://doi.org/10.1177/107319110730527

Whiting P, Rutjes A, Westwood M, Mallet S, Deeks J, Reitsma J, Leeflang M, Sterne J, Bossuyt P (2011) QUADAS-2: a revised tool for the quality assessment of diagnostic accuracy studies. Ann Intern Med 155:529. https://doi.org/10.7326/0003-4819-155-8-201110180-00009

Wickwire E, Burke R, Brown S et al (2008) Psychometric evaluation of the National Opinion Research Center DSM-IV screen for gambling problems (NODS). Am J Addict 17:392–395. https://doi.org/10.1080/10550490802268934

Williams R, Volberg R (2013) The classification accuracy of four problem gambling assessment instruments in population research. Int Gambl Stud 14:15–28. https://doi.org/10.1080/14459795.2013.839731

Funding

This project received no formal funding (public, commercial or non-profit).

Author information

Contributions

Nyle Davies: Conceptualisation-(Lead), Data curation-(Lead), Formal analysis-(Lead), Investigation-(Lead), Methodology-(Lead), Validation-(Lead), Writing—original draft-(Lead), Writing—review & editing-(Lead).

Gareth Roderique-Davies: Conceptualisation-(Lead), Investigation-(Lead), Methodology-(Lead), Project administration-(Lead), Resources-(Lead), Supervision-(Lead), Validation-(Lead), Writing—review & editing-(Lead).

Laura Drummond: Data curation-(Supporting), Formal analysis-(Equal), Writing—review & editing-(Equal).

Jamie Torrance: Writing—review & editing-(Equal).

Klara Sabolova: Project administration-(Lead), Resources-(Lead), Supervision-(Lead), Validation-(Lead), Writing—review & editing-(Lead).

Samantha Thomas: Writing—review & editing-(Supporting).

Bev John: Conceptualisation-(Lead), Investigation-(Lead), Methodology-(Lead), Project administration-(Lead), Resources-(Lead), Supervision-(Lead), Validation-(Lead), Writing—review & editing-(Lead).

Ethics declarations

Ethical statement

The research was a systematic review, and as such the ethics committee at the authors' university confirmed that no ethical approval was required.

Conflicts of interest/competing interests

The authors report no conflicts of interest with respect to the content of this manuscript. GRD and BJ have received funding from the personal research budgets of a number of Welsh Parliament members. GRD and BJ have also received funding from European Social Funds/Welsh Government, Alcohol Concern (now Alcohol Change UK) and Research Councils. GRD and BJ are invited observers of the Cross-Party Group on Problem Gambling at the Welsh Parliament and sit on the ‘Beat the Odds’ steering group that is run by Cais Ltd. They receive no remuneration for these roles. ST receives funding from the Australian Research Council Discovery Grant Scheme, the Victorian Responsible Gambling Foundation, and the New South Wales Office of Gaming for research relating to gambling harm prevention. She has previously received funding for gambling research from the Australian Research Council Discovery Grant Scheme, and the Victorian Responsible Gambling Foundation. She has received travel expenses for gambling speaking engagements from the European Union, Beat the Odds Wales, the Office of Gaming and Racing ACT, and the Royal College of Psychiatry Wales. She is a member of the Responsible Gambling Advisory Board for Lotterywest. She does not receive financial reimbursement for this role. JT has received funding from GambleAware for a doctoral research programme unrelated to this work.

Ethical approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Not applicable.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this article was revised due to a retrospective Open Access order.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Davies, N.H., Roderique-Davies, G., Drummond, L.C. et al. Accessing the invisible population of low-risk gamblers, issues with screening, testing and theory: a systematic review. J Public Health (Berl.) 31, 1259–1273 (2023). https://doi.org/10.1007/s10389-021-01678-9

DOI: https://doi.org/10.1007/s10389-021-01678-9