Abstract

The computational utility of inductive linearizations for binary quadratic programs when combined with a mixed-integer programming solver is investigated for several combinatorial optimization problems and established benchmark instances.

1 Introduction

Given a binary quadratic program (BQP) comprising linear (and possibly quadratic) constraints, the inductive linearization technique (Mallach 2021) may serve as a computationally attractive compromise between two alternatives. The first is the well-known “standard” linearization due to Glover and Woolsey (1974). The second is a complete application of the Reformulation Linearization Technique [RLT, see e.g. Adams and Sherali (1999, 1986)]. In several relevant cases, inductive linearizations are more constraint-side compact than the “standard” linearization and provide a continuous relaxation that is at least as tight. Prominent combinatorial optimization problems to which this applies include the Quadratic Assignment, the Quadratic Matching, and the Quadratic Traveling Salesman Problem.

Given this theoretical basis, the central contribution of this paper is a systematic computational study that addresses a number of research questions. Does the combination of compactness and continuous relaxation strength translate into faster solution times of the corresponding mixed-integer programs? How do the obtained relaxation bounds compare with those of the “standard” linearization and the RLT on a broader scope? For which problem structures is the inductive linearization technique (not) well suited, and why? Can an inductive linearization be obtained quickly in practice, and if so, how?

To this end, we investigate the performance of the inductive and the “standard” linearization on various BQPs with linear constraints, both in combination with and in comparison to a professional mixed-integer programming (MIP) solver. More precisely, we consider the Quadratic Assignment Problem, the Quadratic Knapsack Problem, the Quadratic Matching Problem, the Quadratic Shortest Path Problem, and further instances of the well-established QPLIB and MINLPLib. Besides that, we present an algorithmic framework to derive inductive linearizations in practice, which complements the MIP approach from Mallach (2021).

The outline of this paper is as follows. At the beginning of Sect. 2, we briefly review the main concepts of the inductive linearization technique and then focus on its practical application. In Sect. 3, we present the aforementioned applications along with the respective problem formulations and the benchmark instances used for the computational study, and we discuss the respective results in detail. Finally, a conclusion is given in Sect. 4.

2 Inductive linearization

The inductive linearization technique addresses optimization problems that have, or comprise, the structure

\[
\begin{aligned}
\min \quad & x^T C_0 x + c^T x\\
\text{s.t.} \quad & Ax \leqq b\\
& x^T C_k x + g_k^T x \le \beta _k \qquad k \in \{1, \dots , m_Q\}\\
& x \in \{0,1\}^n
\end{aligned}
\]

The following data are given as input, where \(n, m_L \in \mathbb {N} {\setminus } \{0\}\) and \(m_Q \in \mathbb {N}\):

- the matrices \(A \in \mathbb {R}^{m_L \times n}\) and \({C_k \in \mathbb {R}^{n \times n}}\) for \(k \in \{0, \dots , m_Q\}\);
- the vectors \({g_k \in \mathbb {R}^n}\) for \(k \in \{1, \dots , m_Q\}\), \({c \in \mathbb {R}^n}\), and \(b \in \mathbb {R}^{m_L}\);
- the scalars \(\beta _k \in \mathbb {R}\) for \(k \in \{1, \dots , m_Q\}\).

Throughout this paper, we denote by \(N :=\{ 1, \dots , n\}\) the index set of the binary variables \(x \in \{0,1\}^n\). The set P of products truly present in the problem is determined by the objective function and the quadratic restrictions as follows:

\[
P :=\{ (i,j) \in N \times N \mid (C_k)_{ij} \ne 0 \text { for some } k \in \{0, \dots , m_Q\} \}.
\]

Naturally, we assume that P is non-empty and, since we have for binary solutions that \(x_i x_j = x_j x_i\) for \({i, j \in N}\), \(i \ne j\), and \(x_i = x_i^2\) for all \(i \in N\), we assume further that the matrices \(C_k\), \({k \in \{0, \dots , m_Q\}}\), are strictly upper triangular so that we have \(i < j\) for each \({(i,j) \in P}\).
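The triangular convention can be made concrete with a small sketch. The Python code below folds an arbitrary square coefficient matrix into strictly upper triangular form. Diagonal entries move into a linear coefficient vector, since \(x_i^2 = x_i\). The code then collects P as the set of pairs with a non-zero folded coefficient. Nested lists as matrices, 0-based indices and both function names are illustrative assumptions only.

```python
def normalize_quadratic(C):
    """Fold a square matrix C into strictly upper triangular form.

    For binary x: x^T C x = sum_{i<j} (C[i][j] + C[j][i]) x_i x_j + sum_i C[i][i] x_i,
    because x_i x_j = x_j x_i and x_i**2 = x_i.
    Returns (U, lin): U strictly upper triangular, lin the diagonal as linear part.
    """
    n = len(C)
    U = [[0.0] * n for _ in range(n)]
    lin = [0.0] * n
    for i in range(n):
        lin[i] = C[i][i]
        for j in range(i + 1, n):
            U[i][j] = C[i][j] + C[j][i]
    return U, lin


def product_set(matrices):
    """P: all pairs (i, j), i < j, with a non-zero folded coefficient in some C_k."""
    P = set()
    for C in matrices:
        U, _ = normalize_quadratic(C)
        for i in range(len(U)):
            for j in range(i + 1, len(U)):
                if U[i][j] != 0:
                    P.add((i, j))
    return P
```

Symmetric entries of opposite sign cancel during folding, so such a pair does not end up in P.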

While quadratic constraints may or may not be present, some linear constraints on the binary variables are necessary to apply the inductive linearization technique. We assume w.l.o.g. that these are given as equations and less-or-equal inequalities, jointly denoted by \({Ax \leqq b}\). More precisely, consider each binary variable \(x_i\), \(i \in N\), that is a factor of a product in P to be linearized with the proposed technique. Each such variable must appear with a non-zero coefficient in at least one of these constraints. Clearly, this can be assumed (or established) for a binary problem without loss of generality. Suppose a factor \(x_i\), \(i \in N\), is entirely free w.r.t. the linear constraints. Then in principle any linear equation or less-or-equal inequality that is valid for its feasible set may be employed (even the rather undesirable \(x_i \le 1\)). Moreover, a factor left free w.r.t. the linear constraints does not prevent a successful inductive linearization of other products whose factors are linearly constrained. For simplicity, we thus assume from now on that all factors appearing in P are linearly constrained.

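The inductive linearization technique generalizes a principle proposed by Liberti (2007), as well as its later revision (cf. Mallach (2018)). That principle addresses the special case of equations whose right hand side and left hand side coefficients are all equal to one. In his original article, Liberti coined the name “compact linearization” because it typically adds fewer constraints to the problems mentioned than the “standard” linearization due to Glover and Woolsey (1974). For the problem under consideration, a general form of the latter reads:

\[
\begin{aligned}
\min \quad & \sum _{(i,j) \in P} d_{ij} y_{ij} + c^T x\\
\text{s.t.} \quad & Ax \leqq b\\
& \sum _{(i,j) \in P} {h_k}_{ij} y_{ij} + g_k^T x \le \beta _k && k \in \{1, \dots , m_Q\}\\
& y_{ij} \le x_i && (i,j) \in P \qquad (2)\\
& y_{ij} \le x_j && (i,j) \in P \qquad (3)\\
& y_{ij} \ge x_i + x_j - 1 && (i,j) \in P \qquad (4)\\
& y \ge 0, \quad x \in \{0,1\}^n
\end{aligned}
\]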

With \(m :=\vert P \vert \), we use \(y \in \mathbb {R}^m\) to denote the linearized products and \(d \in \mathbb {R}^m\) to denote their objective coefficients. Their coefficients in the original quadratic constraints are denoted by \(h_k \in \mathbb {R}^m\), \(k = 1, \dots , m_Q\). We use the subscript notation \(y_{ij}\) for \(i < j\) and analogously define \(d_{ij} = (C_0)_{ij}\) and \({h_k}_{ij} = (C_k)_{ij}\). Some of the inequalities can be dropped for a product \((i,j) \in P\) that does not occur in any quadratic constraint. If \(d_{ij} < 0\), then (4) can be omitted, and if \(d_{ij} > 0\), then (2) and (3) can be omitted.
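The Python sketch below builds the linking inequalities (2)–(4), either completely or in the reduced form just described. It assumes a minimization objective. The function name and row representation are hypothetical choices for illustration: a dictionary from variable keys to coefficients with an implicit \(\le\) sense. The parameter `quad_pairs` marks products occurring in quadratic constraints, which keep all three rows. The bounds \(y \ge 0\) are not generated.

```python
def standard_linearization(P, d=None, quad_pairs=frozenset()):
    """Linking inequalities (2)-(4) as (terms, rhs) pairs meaning sum(terms) <= rhs.

    Keys are ("x", i) or ("y", i, j).  With d=None the complete variant (SLC) is
    built.  Otherwise, for a minimization objective with coefficients d[(i, j)],
    only the rows required by the objective sign are kept (reduced variant);
    pairs in quad_pairs (occurring in quadratic constraints) keep all rows.
    """
    rows = []
    for i, j in sorted(P):
        y = ("y", i, j)
        full = d is None or (i, j) in quad_pairs
        coef = 0.0 if d is None else d.get((i, j), 0.0)
        if full or coef < 0:
            rows.append(({y: 1, ("x", i): -1}, 0))               # (2): y_ij <= x_i
            rows.append(({y: 1, ("x", j): -1}, 0))               # (3): y_ij <= x_j
        if full or coef > 0:
            rows.append(({("x", i): 1, ("x", j): 1, y: -1}, 1))  # (4): x_i + x_j - y_ij <= 1
    return rows
```

For the complete variant, this yields \(3 \vert P \vert \) rows with \(7 \vert P \vert \) non-zeros in total, matching the counts stated for SLC in Sect. 3.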

As we will see, inductive linearizations are in many cases constraint-side compact as well. However, this cannot be guaranteed for every BQP with linear constraints. Moreover, depending on how the method is applied, more than \(|P |\) linearization variables may be induced, although this can, in principle, always be avoided as described in Sect. 2.3. The technique has been referred to as “inductive linearization” since its generalization was first presented in Mallach (2021). The name distinguishes it clearly from other linearizations called “compact”. It also emphasizes that the method aims at “inducing” the products associated with the set P by multiplying original constraints with original variables.

2.1 Mathematical derivation of inductive linearizations

Given a problem as introduced at the beginning of this section, suppose that we have identified a working (sub-)set of the linear constraints \({Ax \leqq b}\) with which to induce the linearization. Let us denote the index sets of the selected equations and inequalities by \(K_E\) and \(K_I\), respectively. That is, we consider the constraints

\[
\begin{aligned}
\sum _{i \in I_k} a_k^i x_i &= b_k && k \in K_E \qquad (5)\\
\sum _{i \in I_k} a_k^i x_i &\le b_k && k \in K_I \qquad (6)
\end{aligned}
\]

where \(I_k :=\{ i \in N \mid a_k^i \ne 0\}\) denotes the respective support index set for each \(k \in K_E\) or \(k \in K_I\).

As already mentioned, we require w.l.o.g. a choice of \(K :=K_E \cup K_I\) such that for all \((i,j) \in P\) there exist indices \(k, \ell \in K\) with \(i \in I_k\) and \(j \in I_\ell \). To refer to the respective constraints, we use the notation \(K(i) :=\{ k \in K: i \in I_k \}\), and define \(K_E(i)\) and \(K_I(i)\) analogously where more precision is needed. Although we do not require this for the original problem, let us temporarily assume in addition that \(b_k > 0\) for all \(k \in K\) and \(a^i_k > 0\) for all \(i \in I_k\), \(k \in K\). Sect. 2.4 explains how to handle constraints that do not fulfill these prerequisites.

The first step of the inductive linearization approach now associates to each equation \(k \in K_E\) another index set \(M^{E}_k \subseteq N\) that is supposed to specify original variables used as multipliers. To each inequality \(k \in K_I\), two such index sets \(M^{+}_k, M^{-}_k \subseteq N\) are associated. The corresponding interpretation is as follows: If \(j \in M^{E}_k\) (\(j \in M^{+}_k\)) the equation \(k \in K_E\) (inequality \(k \in K_I\)) is multiplied by \(x_j\), and if \(j \in M^{-}_k\), the inequality \(k \in K_I\) is multiplied by \((1 - x_j)\).

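This leads to the following set of first-level RLT constraints:

\[
\begin{aligned}
\sum _{i \in I_k} a_k^i x_i x_j &= b_k x_j && k \in K_E,\ j \in M^{E}_k \qquad (7)\\
\sum _{i \in I_k} a_k^i x_i x_j &\le b_k x_j && k \in K_I,\ j \in M^{+}_k \qquad (8)\\
\sum _{i \in I_k} a_k^i x_i (1 - x_j) &\le b_k (1 - x_j) && k \in K_I,\ j \in M^{-}_k \qquad (9)
\end{aligned}
\]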

Let \(M_k :=M^{E}_k\) if \(k \in K_E\), and \(M_k :=M^{+}_k \cup M^{-}_k\) if \(k \in K_I\). Then

\[
Q :=\{ (\min \{i,j\}, \max \{i,j\}) \mid k \in K,\ i \in I_k,\ j \in M_k \}
\]

is the index set of the products induced by (7)–(9).

For ease of reference, we also define

which is to be regarded as a multiset of multiplier indices.

Remark 1

In the general approach described above, the induced set Q may contain tuples that correspond to squares. It is a simple and worthwhile optimization to replace these by their linear counterparts before actually deriving the respective linearization constraint (see also Sect. 2.2 and Mallach (2021)).
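The induced set Q can be computed directly from the supports \(I_k\) and the multiplier sets \(M_k\). The following Python sketch does so and reports squares separately, since Remark 1 replaces them by linear terms. The dictionary-based input format and the function name are illustrative assumptions.

```python
def induced_products(I, M):
    """Products induced by multiplying constraint k with the variables in M[k].

    I maps k to its support I_k; M maps k to M_k (M^E_k or M^+_k | M^-_k).
    Pairs are stored as (min, max) because x_i x_j = x_j x_i; squares (i == j)
    are returned separately since they can be replaced by x_i (Remark 1).
    """
    Q, squares = set(), set()
    for k, support in I.items():
        for i in support:
            for j in M.get(k, ()):
                if i == j:
                    squares.add(i)
                else:
                    Q.add((min(i, j), max(i, j)))
    return Q, squares
```

For example, multiplying a constraint with support \(\{0, 1, 2\}\) by \(x_1\) induces the pairs (0, 1) and (1, 2) and the square of \(x_1\).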

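We now rewrite (7)–(9), replacing for each \((i,j) \in Q\) the product \(x_i x_j\) by a continuous linearization variable \(y_{ij}\) with explicit bounds \(0 \le y_{ij} \le 1\). This yields the linearization constraints

\[
\begin{aligned}
\sum _{i \in I_k} a_k^i y_{ij} &= b_k x_j && k \in K_E,\ j \in M^{E}_k \qquad (10)\\
\sum _{i \in I_k} a_k^i y_{ij} &\le b_k x_j && k \in K_I,\ j \in M^{+}_k \qquad (11)\\
\sum _{i \in I_k} a_k^i (x_i - y_{ij}) &\le b_k (1 - x_j) && k \in K_I,\ j \in M^{-}_k \qquad (12)
\end{aligned}
\]

where \(y_{ij}\) is identified with \(y_{ji}\) if \(i > j\).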
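As an illustration, the linearized rows (10)–(12) can be generated mechanically for a single constraint and multiplier. The Python sketch below does this, with squares already replaced by the linear term as in Remark 1. The row format (terms, sense, rhs) and the names `linearization_row` and `_prod` are hypothetical.

```python
from collections import defaultdict


def _prod(i, j):
    # x_i * x_j: a square is replaced by x_j (Remark 1), otherwise by y_(min,max).
    return ("x", j) if i == j else ("y", min(i, j), max(i, j))


def linearization_row(kind, a, b, j):
    """Linearized row for constraint sum_i a[i] x_i (= or <=) b and multiplier j.

    kind "E": (10) sum_i a_i y_ij - b x_j  = 0
    kind "+": (11) sum_i a_i y_ij - b x_j <= 0
    kind "-": (12) sum_i a_i (x_i - y_ij) + b x_j <= b
    Returns (terms, sense, rhs) with zero coefficients dropped.
    """
    terms = defaultdict(float)
    if kind in ("E", "+"):
        for i, ai in a.items():
            terms[_prod(i, j)] += ai
        terms[("x", j)] -= b
        sense, rhs = ("=" if kind == "E" else "<="), 0.0
    elif kind == "-":
        for i, ai in a.items():
            terms[("x", i)] += ai
            terms[_prod(i, j)] -= ai
        terms[("x", j)] += b
        sense, rhs = "<=", float(b)
    else:
        raise ValueError(f"unknown kind {kind!r}")
    return {v: c for v, c in terms.items() if c != 0}, sense, rhs
```

In a row of type (12), a square summand \(a_k^j (x_j - x_j)\) cancels, so it contributes nothing.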

As the following theorem states, for all induced \((i, j) \in Q\) and binary \(x_i\), \(x_j\), one has \(y_{ij} = x_i x_j\) if (and only if) the following three consistency conditions are met:

Condition 1

There is a \(k \in K(i)\) such that \(j \in M^{E}_k\) or \(j \in M^{+}_k\), respectively.

Condition 2

There is a \(k \in K(j)\) such that \(i \in M^{E}_k\) or \(i \in M^{+}_k\), respectively.

Condition 3

There is a \(k \in K(i)\) such that \(j \in M^{E}_k\) or \(j \in M^{-}_k\), respectively, or a \(k \in K(j)\) such that \(i \in M^{E}_k\) or \(i \in M^{-}_k\), respectively.

Theorem 4

[Mallach (2021)] For any integer solution \(x \in \{0,1\}^n\), the linearization constraints (10)–(12) imply \({y_{ij} = x_i x_j}\) for all \((i,j) \in Q\) if and only if Conditions 1–3 are satisfied.
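Conditions 1–3 are purely combinatorial and can therefore be checked directly. The following Python sketch takes the supports \(I_k\) and the multiplier sets as dictionaries and returns, for a pair (i, j), whether each of the three conditions holds. Equations are recognized as the keys of `ME`, so `ME` should contain every equation index, possibly with an empty set. The names are hypothetical.

```python
def _hit(k, v, ME, MP, MM, use_minus):
    """Is constraint k multiplied by x_v (use_minus=False) or by 1 - x_v (True)?

    Equations (the keys of ME) impose both implications, so they count either way.
    """
    if k in ME:
        return v in ME[k]
    return v in (MM if use_minus else MP).get(k, set())


def conditions(i, j, I, ME, MP, MM):
    """Return the truth values of Conditions 1, 2 and 3 for the pair (i, j)."""
    K_i = [k for k, support in I.items() if i in support]  # K(i)
    K_j = [k for k, support in I.items() if j in support]  # K(j)
    c1 = any(_hit(k, j, ME, MP, MM, False) for k in K_i)
    c2 = any(_hit(k, i, ME, MP, MM, False) for k in K_j)
    c3 = (any(_hit(k, j, ME, MP, MM, True) for k in K_i)
          or any(_hit(k, i, ME, MP, MM, True) for k in K_j))
    return c1, c2, c3
```

By Theorem 4, a linearization variable \(y_{ij}\) is exact for integer x precisely when all three values are `True`.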

In summary, if we choose M such that the three conditions are satisfied and Q contains P, we obtain a linearization of the original problem. In fact, at the potential expense of some continuous relaxation strength, it is always possible to achieve \(Q=P\), as described in Sect. 2.3.

2.2 Linear relaxation strength of inductive linearizations

An example of practical relevance concerns a constraint set K with \(b_k = 1\) for all \(k \in K\) and \(a^i_k = 1\) for all \(i \in I_k\), \(k \in K\). In this case, the linear programming relaxation of an inductive linearization is provably at least as tight as that of the “standard” linearization. The same holds for equations with a right hand side of two and zero–one left hand side coefficients, provided that squares are ruled out and these equations are multiplied by all variables on their left hand sides (i.e., \(M_k = I_k\)).

More generally, the corresponding proofs in Mallach (2021) make apparent that the tightness of the relaxations of inductively linearized BQPs relates (among other criteria) to the ratio between the right hand side and the left hand side coefficients. That article also provides an example in which an inductive linearization provably has a strictly stronger continuous relaxation than the “standard” linearization.

In our computational study, we experimentally identify further cases in which this relation holds, and we investigate how far the bounds deviate in practice.

2.3 Practical derivation of inductive linearizations and their compactness

Given a problem formulation on paper, a suitable multiset M can often be derived by inspection, once the necessary implications imposed by Conditions 1–3 are understood (see also Sect. 2.4). Such an M induces a set \(Q \supseteq P\) and establishes a consistent linearization. However, the factor pairs in P or the constraints at hand may have a complicated structure. Then it may be non-trivial to find a consistent combination of constraints and multipliers, especially if the outcome is supposed to be compact or to meet other objectives. Moreover, an automated derivation is desirable for larger problem instances, and it allows a linearization framework to be coupled with a mixed-integer programming solver.

Concerning the computation of an inductive linearization, Mallach (2021) shows that the associated optimization problem is NP-hard. This problem makes it possible, e.g., to derive a linearization that is as compact as possible in terms of additional variables and constraints. The hardness result, however, is mainly of theoretical interest, as it covers every possible input program. Practical inputs are usually structured, and the associated “covering problems” often turn out to be simple to solve. Indeed, they can frequently even be solved exactly, e.g., by a mixed-integer program or, for more specifically structured BQPs, by combinatorial polynomial-time algorithms. Moreover, the number of candidate constraints to induce a certain product, or to satisfy one of the three conditions for it, is typically rather small. For many applications, the complexity can also be reduced by carefully preselecting the set K of original constraints considered for inductions.

The hardness proof and the mixed-integer program in Mallach (2021) further show what influences the compactness of the resulting linearization. It depends on the support of the employed constraint set K and on its relation to the factor pairs given by P. In this context, it is also worth mentioning that the presolve routines of a MIP solver may well eliminate some of the variables and constraints of an inductive linearization that is not “most compact”. Moreover, a few additional constraints may sometimes improve the relaxation strength, so compactness need not be the ultimate goal.

Notwithstanding, it is also possible to eliminate (all) variables in \(Q {\setminus } P\) if (all of) these have been generated from inequalities (possibly obtained from equations in a preprocessing step). As is clear from inequalities (11) and (12), removing summands on their left hand sides will neither harm their validity nor their necessary implications on the respective remaining linearization variables. In general, however, the feasible region of the continuous relaxation may of course be enlarged by this procedure (referred to as weakened inductive linearization in Sect. 3).
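The weakening step can be illustrated on a single inequality. In the following Python sketch, a row of type (11) or (12) built from a support \(I_k\) and a multiplier j is restricted to the summands whose product lies in P. Squares are kept, since they need no linearization variable. The sketch then checks by brute force that the weakened rows remain valid for all binary points with \(y_{ij} = x_i x_j\). The function names are hypothetical, and the check is exponential in the support size, so it is meant for tiny examples only.

```python
from itertools import product


def weakened_support(support, j, P):
    """Indices i whose summand survives when a row (11) or (12), built from
    constraint support I_k and multiplier j, is restricted to Q = P.

    Squares (i == j) need no linearization variable and are kept.
    """
    return {i for i in support if i == j or (min(i, j), max(i, j)) in P}


def weakened_rows_valid(a, b, support, j, P):
    """Brute-force check: the weakened rows (11) and (12) hold for every binary x
    with sum_i a[i] x_i <= b when each y_ij is set to x_i * x_j."""
    kept = weakened_support(support, j, P)
    idx = sorted(set(support) | {j})
    for bits in product((0, 1), repeat=len(idx)):
        x = dict(zip(idx, bits))
        if sum(a[i] * x[i] for i in support) > b:
            continue  # x violates the original inequality
        lhs11 = sum(a[i] * x[i] * x[j] for i in kept)
        lhs12 = sum(a[i] * (x[i] - x[i] * x[j]) for i in kept)
        if lhs11 > b * x[j] or lhs12 > b * (1 - x[j]):
            return False
    return True
```

Dropping whole summands is what keeps the rows valid. Dropping only the \(-a_k^i y_{ij}\) part of a summand in (12) would leave the term \(a_k^i x_i\) behind and could cut off feasible points.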

As a particularly practical approach to derive inductive linearizations, we present the heuristic Algorithm 1, which extends a combinatorial algorithm from Mallach (2018) for a special case to the general case. It is also used to derive the inductive linearizations in the computational study in Sect. 3.

Algorithm 1 consists of two simple components. The main routine ConstructSets is supplied with the input sets P and K as well as weights, just as in the mixed-integer program in Mallach (2021). These weights are \(w^E_{kj}\), respectively \(w^+_{kj}\) and \(w^-_{kj}\). They indicate a “cost” of creating a linearization constraint by multiplying constraint \(k \in K\) with the original variable \(x_j\), respectively \(1-x_j\). The main routine invokes the subroutine Append (listed as Algorithm 2), which extends a partial inductive linearization represented by Q and M. Append induces additional linearization constraints in order to satisfy Conditions 1–3 for the variables \(Q_{new}\) added in the previous iteration (initially, \(Q_{new} = P\)). It then appends the variables \(Q_{add}\) newly induced when creating these linearization constraints. Algorithm 1 terminates when a steady state is reached, i.e., when no further constraint-factor multiplications are necessary to satisfy Conditions 1–3 for the current Q. The algorithm then returns Q together with the constraint multiplier set M.
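The following minimal Python sketch follows the spirit of the description above. It is not the authors' listing. In each round, it satisfies Conditions 1–3 for the newly added pairs and collects the products induced by doing so. It stops once a round induces nothing new. The function name `construct_sets`, the data layout and the cost rule are hypothetical choices: a candidate costs the number of products it would newly induce, and smaller is better. The cost rule actually used in the computational study is described in Sect. 3.

```python
def construct_sets(P, I, equations, max_rounds=1000):
    """Greedy sketch: multiplier sets satisfying Conditions 1-3 for all of Q.

    P: pairs (i, j) with i < j; I: dict k -> support set I_k;
    equations: the keys of I that are equations (others are <= inequalities).
    Returns (Q, ME, MP, MM) with ME/MP/MM mapping k to M^E_k / M^+_k / M^-_k.
    """
    ME = {k: set() for k in I if k in equations}
    MP = {k: set() for k in I if k not in equations}
    MM = {k: set() for k in I if k not in equations}
    Q = set(P)

    def target(k, minus):
        # Equations serve both implications; inequalities use M+ or M-.
        if k in ME:
            return ME[k]
        return MM[k] if minus else MP[k]

    def induced(k, v):
        # Products created by multiplying constraint k with x_v (or 1 - x_v);
        # squares are skipped because they become linear (Remark 1).
        return {(min(i, v), max(i, v)) for i in I[k] if i != v}

    def satisfied(u, v, minus):
        # Some constraint containing x_u is multiplied by x_v (or by 1 - x_v).
        return any(v in target(k, minus) for k in I if u in I[k])

    def apply(u, v, minus, added):
        candidates = [k for k in I if u in I[k]]
        if not candidates:
            raise ValueError(f"factor {u} occurs in no selected constraint")
        # Assumed cost: number of products the multiplication would newly induce.
        k = min(candidates, key=lambda c: len(induced(c, v) - Q - added))
        target(k, minus).add(v)
        added.update(induced(k, v) - Q)

    new = set(P)
    for _ in range(max_rounds):
        added = set()
        for i, j in sorted(new):
            if not satisfied(i, j, False):
                apply(i, j, False, added)   # Condition 1
            if not satisfied(j, i, False):
                apply(j, i, False, added)   # Condition 2
            if not (satisfied(i, j, True) or satisfied(j, i, True)):
                apply(i, j, True, added)    # Condition 3
        if not added:
            return Q, ME, MP, MM
        Q |= added
        new = added
    raise RuntimeError("no steady state reached within max_rounds")
```

As an example, take a single knapsack inequality over three items with \(P = \{(0,1), (1,2)\}\). The sketch multiplies the inequality by all three \(x_j\) and by \(1 - x_j\) for two of them. In doing so, it induces the remaining pair (0, 2).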

2.4 Normalization, inductive linearizations with general linear constraint sets

We now discuss the case that some (or all) of the original constraints \(k \in K\) to be employed, i.e., (5) and (6), do not satisfy \(b_k > 0\) and \(a^i_k > 0\) for all \(i \in I_k\). To this end, suppose that

\[
\sum _{i \in I_k} a_k^i x_i \leqq b_k
\]

is an equation or \(\le \)-inequality in K, and let \(I_k^- \subseteq I_k\) be the set of variable indices such that \(a_k^i < 0\) for each \(i \in I_k^-\). For ease of notation, define also \(I_k^+ = I_k {\setminus } I_k^-\).

The explicit approach to deal with such constraints (see, e.g., Hammer et al. (1984)) defines a new complement variable \(\bar{x}_i\) for each \(i \in I_k^-\), \(k \in K\), along with the corresponding equation

\[
x_i + \bar{x}_i = 1. \qquad (13)
\]

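Evidently, the equations (13) have only positive coefficients on the left hand side and a positive right hand side. Moreover, we may now replace any of the original constraints by

\[
\sum _{i \in I_k^+} a_k^i x_i - \sum _{i \in I_k^-} a_k^i \bar{x}_i \leqq b_k - \sum _{i \in I_k^-} a_k^i,
\]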

where the term \(-\sum _{i \in I_k^-} a_k^i\) on the left and on the right hand side is non-negative as well.

Carrying out this procedure for every equation or inequality with negative coefficients on the left hand side yields a normalized system with only positive coefficients on the left. If any of the resulting right hand sides is negative, the system is obviously infeasible. If any of them is zero, then all variables on the respective left hand side can be fixed to zero and thus removed from the formulation. Hence, the prerequisites \(b_k > 0\) for all \(k \in K\) and \(a^i_k > 0\) for all \(i \in I_k\), \(k \in K\), can be satisfied without loss of generality.
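The explicit normalization can be sketched for one constraint at a time. The Python code below complements every variable with a negative coefficient and adjusts the right hand side accordingly. It then classifies the resulting right hand side as just described. The key layout `("x", i)` / `("xbar", i)` and both function names are assumptions made for illustration. A complement variable fixed to zero means that the original variable is fixed to one.

```python
def complement_negatives(a, b):
    """Normalize sum_i a[i] x_i (= or <=) b by complementing negative coefficients.

    For a[i] < 0, substitute x_i = 1 - xbar_i: a[i] x_i = a[i] - a[i] xbar_i, so
    xbar_i gets the positive coefficient -a[i] and the right hand side becomes
    b - sum_{i in I^-} a[i].  Keys are ("x", i) or ("xbar", i).
    """
    coeffs, rhs = {}, b
    for i, ai in a.items():
        if ai > 0:
            coeffs[("x", i)] = ai
        elif ai < 0:
            coeffs[("xbar", i)] = -ai
            rhs -= ai
    return coeffs, rhs


def classify(rhs):
    """Consequence of a normalized right hand side (all coefficients positive)."""
    if rhs < 0:
        return "infeasible"      # no binary point can satisfy the constraint
    if rhs == 0:
        return "fix_to_zero"     # every left hand side variable must be zero
    return "ok"
```

For instance, \(x_0 - x_1 \le -1\) becomes \(x_0 + \bar{x}_1 \le 0\), which fixes \(x_0 = 0\) and \(x_1 = 1\).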

From a computational point of view, however, the explicit approach has some drawbacks. First, it may add up to n variables and equations, while it is not clear a priori which of the resulting normalized constraints will eventually be employed for multiplications at all. Second, the additional equations (13) are rather undesirable candidates for multiplications. The resulting linearization constraints link linearization and original variables about as weakly as the “standard” linearization does.

A more economical strategy is to keep the original constraints as they are, and to consider them for multiplication with each \(x_j\) (and \((1- x_j)\) for case (9)) and each \(\bar{x}_j\) (and \((1 - \bar{x}_j)\) for case (9)) without ever truly introducing the complement variables. Instead, the idea of this implicit approach is to choose, for each \(i \in I_k\), the “right” of the four possible combinations \(x_i x_j\), \(\bar{x}_i x_j\), \(x_i \bar{x}_j\), and \(\bar{x}_i \bar{x}_j\) to be induced, such that the respective linearization constraint imposes the necessary implications on their value.

More precisely, as can be verified from the proof of Theorem 4 in Mallach (2021), the inequalities (8) (respectively their linearized counterparts (11)) have the effect of enforcing all the products (respectively, linearization variables) on the left hand side to be zero if the multiplier \(x_j\) is zero. Similarly, the constraints (9) (respectively, (12)) enforce any \(x_i x_j\) (\(y_{ij}\)) on the left hand side to coincide with \(x_i\) if \(x_j\) is one. The equations (7) respectively (10) directly impose both relationships at once. These implications are exactly what is established by Conditions 1–3 if the coefficients and right hand sides of the original constraints are non-negative respectively positive.

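To achieve the same in the general case, one has to replace (8) by

\[
\sum _{i \in I_k^+} a_k^i x_i x_j - \sum _{i \in I_k^-} a_k^i \bar{x}_i x_j \le \Big (b_k - \sum _{i \in I_k^-} a_k^i\Big ) x_j
\]

respectively by

\[
\sum _{i \in I_k^+} a_k^i x_i \bar{x}_j - \sum _{i \in I_k^-} a_k^i \bar{x}_i \bar{x}_j \le \Big (b_k - \sum _{i \in I_k^-} a_k^i\Big ) \bar{x}_j
\]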

Equations (7) are to be replaced analogously. It is easy to see that, as desired, the linearization variables substituted for the products are forced to zero whenever the multiplier \(x_j\), respectively \(\bar{x}_j\), is zero. In particular, this effect is preserved when the conceptual \(\bar{x}_j\) is replaced by \((1-x_j)\) in linear terms.

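Further, the inequalities (9) need to be replaced by

\[
\sum _{i \in I_k^+} a_k^i x_i (1 - x_j) - \sum _{i \in I_k^-} a_k^i \bar{x}_i (1 - x_j) \le \Big (b_k - \sum _{i \in I_k^-} a_k^i\Big ) (1 - x_j)
\]

respectively by

\[
\sum _{i \in I_k^+} a_k^i x_i (1 - \bar{x}_j) - \sum _{i \in I_k^-} a_k^i \bar{x}_i (1 - \bar{x}_j) \le \Big (b_k - \sum _{i \in I_k^-} a_k^i\Big ) (1 - \bar{x}_j)
\]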

Here, one may again verify the desired effect when \(x_j\), respectively \(\bar{x}_j\), is equal to one. The inequalities then force the linearization variables substituted for the products to equal \(x_i\) if \(i \in I_k^+\) and \((1-x_i)\) if \(i \in I_k^-\). Again, this effect is preserved when replacing the conceptual \(\bar{x}_i\) and \(\bar{x}_j\) by \((1-x_i)\) and \((1-x_j)\), respectively, in linear expressions.

As a result, complement variables and the associated equations need not be introduced. Instead, it suffices to enforce two things for each \((i,j) \in Q\). First, a linearization variable must be induced for at least one of the products \(x_i x_j\), \(\bar{x}_i x_j\), \(x_i \bar{x}_j\), and \(\bar{x}_i \bar{x}_j\). Second, the adapted linearization constraints above must satisfy Conditions 1–3 for it. Original terms may involve other products that refer to the same index pair, such as products \(x_i x_j\) in the objective function or in quadratic constraints without an associated linearization variable. Such terms may then be substituted based on the following relations:

\[
x_i x_j = x_i - x_i \bar{x}_j = x_j - \bar{x}_i x_j = x_i + x_j - 1 + \bar{x}_i \bar{x}_j
\]
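The substitution rules for the four product types can be checked exhaustively over binary values. In the following Python sketch, each rule is written as coefficients (const, c_i, c_j, c_y) with \(x_i x_j = \text{const} + c_i x_i + c_j x_j + c_y\, y\), where y is the linearization variable induced for the named product. The table layout and function names are illustrative assumptions.

```python
from itertools import product

# Coefficients (const, c_i, c_j, c_y) with x_i x_j = const + c_i x_i + c_j x_j + c_y * y,
# where y is the linearization variable induced for the named product.
SUBSTITUTION = {
    "x x": (0, 0, 0, 1),
    "x xbar": (0, 1, 0, -1),     # y = x_i * xbar_j
    "xbar x": (0, 0, 1, -1),     # y = xbar_i * x_j
    "xbar xbar": (-1, 1, 1, 1),  # y = xbar_i * xbar_j
}


def product_value(kind, xi, xj):
    """Value of the product named by kind for binary xi, xj (xbar = 1 - x)."""
    left, right = kind.split()
    vi = xi if left == "x" else 1 - xi
    vj = xj if right == "x" else 1 - xj
    return vi * vj


def all_relations_hold():
    """Check every substitution rule on all four binary assignments."""
    for kind, (c0, ci, cj, cy) in SUBSTITUTION.items():
        for xi, xj in product((0, 1), repeat=2):
            if xi * xj != c0 + ci * xi + cj * xj + cy * product_value(kind, xi, xj):
                return False
    return True
```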

Remark 2

By contrast, back-substitution into the adapted linearization constraints may invalidate the construction derived above and thus the linearization. Such substitutions must leave the consistency conditions satisfied for at least one linearization variable per index pair. Only this ensures, in retrospect, that the substitutions are valid.

We conclude that the implicit approach makes the handling of negative factor coefficients almost effortless. In practice, however, their presence still makes it more complex to derive an inductive linearization. The resulting linearizations may also be less compact, as multiple linearization variables w.r.t. the same index pair may be induced along with further linearization constraints. Nevertheless, the opportunity to eliminate any linearization variable in \(Q {\setminus } P\) as described in Sect. 2.3 is retained. Moreover, consider an original equation with both positive and negative coefficients on its left hand side. Besides splitting it into two inequalities, one may consider both the equation and its equivalent obtained by multiplication with \(-1\) as candidates to create linearization constraints (and to induce linearization variables).

3 A computational study

Some evidence for the computational utility of inductive linearizations is already available in the literature for specific applications [e.g. (Davidović et al. 2007; Liberti 2007; Mallach 2017, 2018, 2019)]. Here, we provide a systematic study and a structured overview of computational results for various well-known binary quadratic programs with linear constraints, including both well-suited and less well-suited ones.

To this end, we identified a number of prominent combinatorial optimization problems and benchmark instances commonly used by the community in order to evaluate inductive linearizations in comparison with “standard” linearizations. More precisely, we consider and distinguish the following linearizations:

- An inductive linearization (IL), if necessary with implicit normalization.
- A weakened inductive linearization (ILW), with \(Q=P\) enforced as described in Sect. 2.3.
- The complete “standard” linearization (SLC) involving \(\vert P \vert \) additional variables, \(3 \vert P \vert \) additional inequalities, and \(7 \vert P \vert \) additional non-zeros.
- The reduced “standard” linearization (SLR), which only comprises those of the inequalities (2)–(4) that are required due to the objective coefficients.

On the one hand, we evaluate the performance in terms of the running times achieved when passing these linearizations to a mixed-integer programming solver. We emphasize that these experiments serve to assess for which problem structures (or classes of constraints) the inductive linearization technique appears particularly suited, or rather not suited. Clearly, the displayed (wall clock) running times can only serve as an indicator for this assessment. They depend on various influences (e.g., seeds, parameters, branching decisions, solver versions), whose possible combinations would justify a computational study of their own.

On the other hand, we compare the optimality gaps obtained with the linear relaxations associated with the different linearizations. The corresponding figures depict this gap in percent on the ordinate axis. It is computed as \(100 (1 - \frac{z_{LP}}{z_{OPT}})\) for a minimization problem and as \(100 (1 - \frac{z_{OPT}}{z_{LP}})\) for a maximization problem. Here, \(z_{LP}\) is the bound obtained by solving the relaxation and \(z_{OPT}\) is the optimum value. Where appropriate, we also relate the results to a first-level RLT. Note, however, that the bound computed by a MIP solver after solving the linear relaxation may still be better due to presolve mechanisms.
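For concreteness, the gap formula can be written as a small Python function. The function name and the check for a positive denominator are assumptions added for illustration, as the ratio is not meaningful otherwise.

```python
def relative_gap(z_lp, z_opt, minimize=True):
    """LP optimality gap in percent as defined above.

    Minimization: 100 * (1 - z_lp / z_opt); maximization: 100 * (1 - z_opt / z_lp).
    Assumes the denominator is positive, as the ratio is not meaningful otherwise.
    """
    denominator = z_opt if minimize else z_lp
    if denominator <= 0:
        raise ValueError("gap formula assumes a positive denominator")
    if minimize:
        return 100.0 * (1.0 - z_lp / z_opt)
    return 100.0 * (1.0 - z_opt / z_lp)
```

A relaxation bound of zero for a minimization problem with positive optimum thus corresponds to a gap of 100%, as reported for SLC on the QAPLIB instances.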

All linearizations were created from the same input formulation, which was preprocessed in two ways. First, all linear greater-or-equal inequalities were turned into less-or-equal ones. Second, if a left hand side had only integer coefficients, fractional right hand sides were rounded down.
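The preprocessing step can be sketched for a single row over binary variables as follows. The row format and function name are hypothetical. Rounding is applied only to less-or-equal rows here, and it is valid because an integral left hand side evaluated at binary points is integral.

```python
import math


def to_leq(coeffs, sense, rhs):
    """Preprocess a linear row over binary variables.

    '>=' rows are multiplied by -1 to obtain '<=' rows; equations are left as is.
    If all coefficients of a '<=' row are integral, a fractional right hand side
    is rounded down, which is valid because the left hand side is then integral.
    """
    if sense == ">=":
        coeffs = {v: -c for v, c in coeffs.items()}
        sense, rhs = "<=", -rhs
    if sense == "<=" and all(float(c).is_integer() for c in coeffs.values()):
        rhs = math.floor(rhs)
    return coeffs, sense, rhs
```

For example, \(2x_0 + 3x_1 \ge 2.5\) becomes \(-2x_0 - 3x_1 \le -3\).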

To derive the inductive linearizations, we employed Algorithm 1. In each iteration of the function Append, the cost coefficients of the constraint-multiplier combinations (see Sect. 2.3) were recomputed. Each cost was set to the negated number of products in \(Q_{\text {add}}\) for which realizing the respective combination would satisfy one of Conditions 1–3. Coefficient ranges, right hand sides and the number of non-zero coefficients were not taken into account. Further, the implementation looks up candidate constraints for multiplications in a fixed order. This imposes a slight implicit preference of equations over inequalities in case of equal costs. In the subsequent subsections, the table columns titled “A1 [s]” display the running time of this algorithm in seconds, i.e., the wall clock time to derive the respective inductive linearization.

To solve the resulting MIPs, we employed Gurobi in version 9.11 with its seed parameter set to one. Its MIPGap parameter was set to \(10^{-6}\), which was sometimes necessary to ensure that the solution process continued until optimality was proven.

All computations were carried out using a single thread on a Debian Linux system equipped with an Intel Xeon E5-2690 CPU (3 GHz) and 128 GB RAM. Each run had a time limit of 48 h. If it was exceeded, this is indicated by “–” in the respective table columns.

3.1 The quadratic assignment problem

Given \(T, D \in \mathbb {R}^{n \times n}\) and \(c \in \mathbb {R}^n\), a quadratic assignment problem (QAP) in the form by Koopmans and Beckmann (1957) can be written as follows.

Frieze and Yadegar (1983) derived a linearization of the QAP that is in fact an inductive one, but not yet a most compact one. Consider the case where all (meaningfully) possible products are of interest. To characterize a most compact inductive linearization for this case, observe first where the variables \(X :=\{ x_{ip} \mid i,p \in \{1, \dots , n\} \}\) occur: each of them appears exactly once in the equation set (14) and exactly once in the equation set (15). Thus, to induce all products and to satisfy Conditions 1 and 2 for them, it suffices to multiply either all of the constraints (14) or all of the constraints (15) with X. Objective coefficients for \(x_{ip}^2\) may be moved to the linear part. Moreover, the variables \(y_{ipiq}\) for all \(p,q \in \{1, \dots , n\}\) and \(y_{ipjp}\) for all \(i,j \in \{1, \dots , n\}\) can be eliminated. Hence it suffices to formulate the resulting constraints only for \(i \ne j\), or \(p \ne q\), respectively. If one further identifies \(y_{jqip}\) with \(y_{ipjq}\) whenever \(i < j\), a most compact inductive linearization of all meaningfully possible products is:

In this formulation, (16) could also be replaced by the constraints

which resembles again the freedom to choose one of (14) and (15) as the basis for inductions.

The total number of additional equations thus amounts to only \(n^3 - n^2\) instead of \({3 \cdot \big ( \frac{1}{2} (n^2 - n) (n^2 -n) \big )} =\frac{3}{2} (n^4 - 2n^3 + n^2)\) inequalities when using the complete “standard” linearization and creating \(y_{ipjq}\) only for \(i < j\) and \(p \ne q\) as well. However, these most compact formulations have a weaker linear programming relaxation than the ones by Frieze and Yadegar (1983) and Adams and Johnson (1994) that comprise more constraints.
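The two counts can be double-checked with a few lines of Python. The inductive count is one equation per variable \(x_{ip}\) and per index \(j \ne i\). The “standard” count is three inequalities per variable \(y_{ipjq}\) with \(i < j\) and \(p \ne q\). The function name is hypothetical.

```python
def qap_counts(n):
    """Additional rows for a size-n QAP with all meaningful products linearized.

    inductive: one equation per x_ip and per j != i, i.e. n * n * (n - 1);
    standard:  three inequalities per y_ipjq with i < j and p != q.
    """
    inductive = n * n * (n - 1)
    products = (n * (n - 1) // 2) * (n * (n - 1))
    return inductive, 3 * products
```

For n = 3, this gives 18 equations versus 54 inequalities.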

If P does not contain all meaningfully possible products, several linearization constraints, i.e., constraint-factor combinations, can be saved. The best possible reduction then depends on the actual factor pairs in P and their “distribution” over the assignment constraints.

Instance Description

We exclude only esc16f, which has \(T=0\). Among the established QAPLIB (Burkard et al. 1997) instances, we report on those that could be solved within the 48 h limit using either IL or one of the standard linearizations SLC and SLR. The density \(100 \vert P \vert / \binom{\vert N \vert }{2}\) of the 42 solved instances ranges between 1 and 87%.
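The density measure is the share of all \(\binom{\vert N \vert }{2}\) variable pairs that appear as products. A one-line Python version (hypothetical name) is:

```python
from math import comb


def density(num_products, num_vars):
    """Product density in percent: 100 * |P| / binom(|N|, 2)."""
    return 100.0 * num_products / comb(num_vars, 2)
```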

LP Relaxation Bounds and MIP Performance

As shown in Fig. 1, the “standard” linearization SLC provides a lower bound of zero for all considered instances, which translates into an optimality gap of \(100\%\). For some \(\texttt {esc}\) instances, neither the computed inductive linearization IL nor even a first-level RLT improves on this bound. Here, the RLT was additionally derived using square reductions as in Remark 1. More typically, a strong first-level RLT bound is obtained, and IL delivers an optimality gap that is closer to that of the RLT than to that of SLC.

As displayed in Table 1, using Gurobi with IL clearly outperforms its combination with either of the two “standard” linearizations SLC and SLR. With the only exception of esc32e, the running times with IL are shorter in all the remaining 41 cases, frequently by orders of magnitude. Supported further by the compactness of the derived inductive linearizations, their usually better relaxation strength translates into a superior performance compared with the “standard” linearizations.

We find that assignment constraints typically (but not always) prove suitable for inductive linearizations. Of course, this approach is not competitive with state-of-the-art methods for the pure QAP. Here, only those QAPLIB instances with up to about 20000 products can be handled within the time limit. Sects. 3.5 and 3.6, however, show that inductive linearizations frequently turn out to be attractive for related problems with additional structure in terms of further variables and constraints. This is also in line with results obtained earlier for, e.g., quadratic semi-assignment problems (Billionnet and Elloumi 2001), graph partitioning (Mallach 2018), multiprocessor scheduling (Mallach 2017), or graph layering (Mallach 2019).

Table 1 further shows that the task of finding a good combination satisfying Conditions 1 and 2 for all induced products could be solved very quickly with Algorithm 1 for the considered QAPLIB instances. In further experiments, Gurobi could also solve the mixed-integer program from Mallach (2021) at the root node of its branch-and-bound tree.

3.2 The quadratic 0–1 knapsack problem

Arguably the simplest inequality-only application of the inductive linearization technique is the quadratic 0–1 knapsack problem (QKP). It is particularly interesting for the computational study because here the left hand side coefficients (item sizes) and the right hand side (knapsack capacity) vary, and they relate to each other at different, and sometimes large, ratios.

The canonical formulation with a capacity \(b \in \mathbb {R}\) and a variable \(x_j\) for each item j of a ground set J with size \(a_j \in \mathbb {R}\) reads:

It has then been observed in the literature that inequalities of type (8) could be used in combination with the “standard” linearization (see e.g., Billionnet and Calmels (1996)), and also inequalities of type (9) have been applied in the context of semidefinite relaxations to improve the obtained dual bounds (Helmberg et al. 2000). A corresponding square-reduced inductive linearization is the following mixed-integer program:

Irrespective of the cardinality of P, Conditions 1–3 must be established using (17) for every product, which implies that all possible products are induced as soon as P is non-empty. Thus, this formulation is constraint-side compact, and its advantage over “standard” linearizations in this respect grows with increasing density. Assuming \(\vert J \vert = n\), even a QKP involving all \(\left( {\begin{array}{c}n\\ 2\end{array}}\right) \) possible products could be linearized using only \(2n-1\) constraints (\(n-1\) inequalities of type (9) suffice to satisfy Condition 3 for all of them, while n inequalities of type (8) are needed for Conditions 1 and 2). However, in the general case of arbitrary \(a_j\), \(j \in J\), and b, the inductive linearization cannot be expected to imply the “standard” linearization inequalities (2)–(4).
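To make the compactness argument concrete, the following Python sketch compares constraint counts for a QKP in which all n(n−1)/2 products occur. It assumes, as an upper-end count, that the “standard” linearization uses all three inequalities (2)–(4) per product (the variants SLC and SLR may use fewer); the inductive count \(2n-1\) is taken from the text above. The function names are hypothetical.

```python
from math import comb


def standard_linearization_constraints(num_products: int, per_product: int = 3) -> int:
    # Assumption: inequalities (2)-(4), i.e. three constraints per product.
    return per_product * num_products


def inductive_qkp_constraints(n: int) -> int:
    # As stated in the text: n inequalities of type (8) plus n - 1 of type (9).
    return n + (n - 1)


for n in (100, 200, 300):
    p = comb(n, 2)  # all possible products among n items
    print(n, p, standard_linearization_constraints(p), inductive_qkp_constraints(n))
# 100 4950 14850 199
# 200 19900 59700 399
# 300 44850 134550 599
```

Under these assumptions, the gap in constraint counts grows quadratically with n, which reflects the growing advantage at high densities mentioned above; it says nothing about the number of non-zeros, where the comparison can turn out the other way.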

Instance Description

As a benchmark set, we selected the 60 largest of the randomly generated instances by Billionnet and Soutif (2004) for presentation, since the remaining ones with 100 items could be solved rather routinely with all methods. Their naming scheme follows the pattern jeu_n_d_i, where n is the number of items, d indicates the approximate product density, and i is a running index.
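When grouping results by size and density as in Table 2, this naming scheme can be parsed mechanically. The following is a minimal Python sketch; the helper names and the example instance name are illustrative only.

```python
import re
from typing import NamedTuple


class QkpInstanceName(NamedTuple):
    n: int  # number of items
    d: int  # approximate product density indicator
    i: int  # running index


_NAME_PATTERN = re.compile(r"jeu_(\d+)_(\d+)_(\d+)")


def parse_qkp_name(name: str) -> QkpInstanceName:
    """Split an instance name of the form jeu_n_d_i into its three fields."""
    match = _NAME_PATTERN.fullmatch(name)
    if match is None:
        raise ValueError(f"not of the form jeu_n_d_i: {name!r}")
    return QkpInstanceName(*(int(g) for g in match.groups()))


print(parse_qkp_name("jeu_200_25_3"))
```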

LP Relaxation Bounds and MIP Performance

The optimality gaps obtained with ILW and SLC are shown in Fig. 2. They confirm what could be expected from theory: due to the comparatively large ratios between the constraint coefficients and the right hand side, the upper bounds obtained with ILW (with an average gap of \(31.65\%\)) are typically significantly weaker than those obtained with SLC (\(2.10\%\)). While being considerably less compact, IL achieves the same bounds as ILW. Since there is only a single inequality comprising all original variables that serves as a basis for inductions, a first-level RLT basically coincides with IL but additionally involves a “standard” linearization. While the latter alone already almost closes the gaps, side experiments showed that even the much larger full first-level RLT did not suffice to close them entirely.

The MIP results are displayed in Table 2. Only in two of the considered cases is it slightly faster to use ILW than to pass a “standard” linearization to the MIP solver, and considerably more timeouts are observed with ILW. As it turns out, the improved linearization variable-constraint linkage does not suffice to compensate for the weaker relaxation bounds of ILW. Moreover, whereas the numbers of linearization constraints are smaller with ILW than with the “standard” linearizations, the converse holds for the induced numbers of non-zeros. However, although these preconditions apply broadly, one still observes a considerable variance in the solution times even within each size and product density group, with positive and negative outliers. Furthermore, for the densest instances with 200 items in Table 2, only those with a comparatively small knapsack capacity remain solvable using ILW, while the ranges of the left hand side coefficients hardly vary over the entire instance set. Even though the correlation is weaker for the other density groups, the theory-predicted sensitivity of the strength of inductive linearizations to the “ratio” between the right hand side and the left hand side coefficients becomes apparent here.
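One way to see this sensitivity is sketched below in Python. It assumes, as a stand-in for the inequalities (8), the textbook row obtained by multiplying the knapsack inequality with \(x_i\) and applying the square reduction \(x_i x_i = x_i\), i.e. \(\sum_{j \ne i} a_j y_{ij} \le (b - a_i) x_i\), with positive sizes \(a_j\). Since all \(y_{ij} \ge 0\), this row implies \(y_{ij} \le \frac{b - a_i}{a_j} x_i\), which is as strong as \(y_{ij} \le x_i\) only if the factor is at most one. The function names and the numbers are hypothetical.

```python
from typing import Dict, Sequence


def implied_link_factors(a: Sequence[float], b: float, i: int) -> Dict[int, float]:
    """Return c_ij such that y_ij <= c_ij * x_i follows from the row
    sum_{j != i} a_j * y_ij <= (b - a_i) * x_i together with y_ij >= 0.

    Assumption: the row is the knapsack inequality multiplied by x_i with
    the square reduction x_i * x_i = x_i, and all sizes a_j are positive.
    """
    rhs = b - a[i]
    return {j: rhs / a[j] for j in range(len(a)) if j != i}


def fraction_linked(a: Sequence[float], b: float, i: int) -> float:
    """Share of partners j for which the row alone already implies y_ij <= x_i."""
    factors = implied_link_factors(a, b, i)
    return sum(1 for c in factors.values() if c <= 1) / len(factors)


sizes = [10, 20, 30, 40]
print(fraction_linked(sizes, 45, 0))   # small capacity: 1 of 3 partners linked
print(fraction_linked(sizes, 100, 0))  # large capacity: no partner linked
```

The larger the capacity relative to the item sizes, the looser the implied links, which matches the weaker ILW bounds observed for large ratios.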

The suitability of inductive linearizations for more complex problems with knapsack constraints may likewise be influenced by the mentioned ratio and, of course, by additional inherent structure. As mentioned above, deriving an inductive linearization is trivial when it is based on the knapsack inequality, and the unique solution was also computed quickly with Algorithm 1 in our experiments.

3.3 The quadratic matching problem

Given an undirected graph \(G=(V,E)\), a canonical BQP that models the quadratic matching problem (QMP) on G [see e.g. (Hupp et al. 2015)] can be expressed as

It is easily observed that each edge (factor) occurs in exactly two constraints, namely those inequalities (18) associated with its endpoints. In this sense, the situation is similar to that of the QAP. However, the constraints need not be as regular, since they depend on the structure of G. Nonetheless, their coefficients and right hand sides ensure that (the relaxation of) an inductive linearization implies the inequalities of a “standard” linearization.
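The following Python sketch illustrates this structure, assuming that (18) has the usual form \(\sum_{e \in \delta(v)} x_e \le 1\) for each \(v \in V\). Multiplying the row of a vertex v by \(x_f\) for an edge f not incident to v gives \(\sum_{e \in \delta(v)} y_{ef} \le x_f\), which, since all \(y_{ef} \ge 0\), implies \(y_{ef} \le x_f\) for every \(e \in \delta(v)\). The sketch simply enumerates all such multiplications; it is not Algorithm 1, which selects a suitable subset, and all names are hypothetical.

```python
from typing import Dict, List, Tuple

Edge = Tuple[int, int]


def incident_edges(edges: List[Edge]) -> Dict[int, List[Edge]]:
    """Map each vertex v to delta(v), the edges appearing in its row of (18)."""
    delta: Dict[int, List[Edge]] = {}
    for e in edges:
        for v in e:
            delta.setdefault(v, []).append(e)
    return delta


def occurrences(edges: List[Edge]) -> Dict[Edge, int]:
    """Number of vertex rows each edge appears in (two for a simple graph)."""
    delta = incident_edges(edges)
    return {e: sum(e in star for star in delta.values()) for e in edges}


def multiplied_vertex_rows(edges: List[Edge]):
    """Return (v, f, products) encoding sum_{e in delta(v)} y_ef <= x_f."""
    delta = incident_edges(edges)
    rows = []
    for v, star in sorted(delta.items()):
        for f in edges:
            if v in f:
                # f incident to v: after x_f * x_f = x_f the row reads
                # sum_{e in delta(v), e != f} y_ef <= 0; skipped here.
                continue
            products = [tuple(sorted((e, f))) for e in star]
            rows.append((v, f, products))
    return rows


path = [(0, 1), (1, 2), (2, 3)]
for row in multiplied_vertex_rows(path):
    print(row)
```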

Instance Description

For our experiments, we first employed all the instances used by Hupp et al. (2015) and finally selected a representative subset of those called “BM” in this reference. The other instances, with fewer variables or products, could be solved quickly with all linearization approaches, and the remaining instances of about the same size produced similar results, as was also the case in the reference. The product densities of the selected instances lie between \(63 \%\) and \(83 \%\), increasing from suffix _80 to _100.

LP Relaxation Bounds and MIP Performance

As can be seen from Fig. 3, the optimality gaps obtained when solving the relaxations of the 50 representative instances with ILW are close to those of the strong RLT, while the upper bounds obtained with SLC are weak. Over the entire “BM” set consisting of 150 instances, the gaps always lie between \(13 \%\) and \(32 \%\) for ILW, between \(5\%\) and \(30\%\) for the RLT, and between \(84 \%\) and \(92 \%\) for SLC.

Concerning the MIP solver performance, the picture is clear: for all the “BM” instances (including the representative ones displayed in Table 3), Gurobi performed better with one of the inductive linearizations than with SLC or SLR. In 101 of the 150 cases, the best results are obtained with ILW, which on average took about \(36 \%\) and \(42 \%\) of the running time required with SLC and SLR, respectively. Besides the stronger relaxations, this performance of the inductive linearizations is further supported by their constraint-side compactness, even though the number of induced non-zeros is typically higher. Although ILW turned out to be more effective than IL, both are displayed in Table 3 to allow an assessment of the model size reduction obtained by the weakening. Compared to IL, the weakening procedure reduced the number of linearization constraints by roughly \(21 \%\), \(12 \%\), and \(2 \%\) for the instances with the suffixes _80, _90, and _100, respectively. The running times indicate only a slight and inconsistent correlation with the three density levels, and with IL and ILW, the numbers of additional constraints and additional non-zeros generated by Algorithm 1 are typically even smaller for the denser instances.

We conclude that matching constraints appear to be rather attractive candidates for inductive linearizations. In additional experiments, computing an inductive linearization with a mixed-integer program turned out to be comparatively difficult for the MIP solver employed. In contrast, Algorithm 1 delivered good linearizations routinely and quickly.

3.4 The quadratic shortest path problem

Given a directed graph \(G=(V,A)\), a source node \(s \in V\) and a target node \(t \in V\), a canonical BQP that models the quadratic shortest s-t-path problem (QSPP) on G (see e.g. Rostami et al. (2018)) can be expressed as

where \(b(i) = 0\) for all \(i \in V {\setminus } \{s, t\}\), \(b(s) = 1\), and \(b(t) = -1\).
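As a small check of the sign convention, the Python sketch below assumes that (19) reads \(\sum_{(i,j) \in A} x_{ij} - \sum_{(j,i) \in A} x_{ji} = b(i)\) for all \(i \in V\) (outflow minus inflow), which matches \(b(s)=1\) and \(b(t)=-1\). Under this assumption, every arc enters two rows, once with coefficient \(+1\) and once with \(-1\). The function names and the small graph are hypothetical.

```python
from typing import Dict, Iterable, Set, Tuple

Arc = Tuple[str, str]


def supply(nodes: Iterable[str], s: str, t: str) -> Dict[str, int]:
    """b(s) = 1, b(t) = -1, and b(i) = 0 for all other nodes."""
    return {i: (1 if i == s else -1 if i == t else 0) for i in nodes}


def satisfies_flow_conservation(nodes, arcs, chosen: Set[Arc], s: str, t: str) -> bool:
    """Check outflow minus inflow of the chosen arcs against b(i) at every node."""
    b = supply(nodes, s, t)
    for i in nodes:
        out_flow = sum(1 for (u, v) in arcs if u == i and (u, v) in chosen)
        in_flow = sum(1 for (u, v) in arcs if v == i and (u, v) in chosen)
        if out_flow - in_flow != b[i]:
            return False
    return True


nodes = ["s", "u1", "u2", "t"]
arcs = [("s", "u1"), ("u1", "t"), ("s", "u2"), ("u2", "t"), ("u1", "u2")]
print(satisfies_flow_conservation(nodes, arcs, {("s", "u1"), ("u1", "t")}, "s", "t"))
print(satisfies_flow_conservation(nodes, arcs, {("s", "u1")}, "s", "t"))
```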

Since the left-hand side coefficients of the constraints (19), the only constraints of this BQP, are from the set \(\{-1, 1\}\), normalization is inevitably required when creating an inductive linearization of this BQP.

Instance Description

For the experiments, we self-generated grid instances as described by Rostami et al. (2018), three for each proposed type and size. Their density is about \(50\%\).

LP Relaxation Bounds and MIP Performance

The given density and instance sizes lead to a relatively large number of products, so that only 24 of the 39 generated instances could be solved within the 48 h time limit when passing one of the linearizations to the MIP solver.

Looking at the optimality gaps obtained for these instances as shown in Fig. 4, the bounds computed with IL tend to get weaker with increasing size, while the bounds derived with the “standard” linearization remain on an even weaker level. More precisely, for the solved grid1 instances, the gaps lie between \(76\%\) and \(85\%\) with IL and between \(90\%\) and \(95\%\) with SLC. For the solved grid2 instances, the optimality gaps are closer to each other and lie between \(89\%\) and \(93\%\). The better but still rather weak IL bounds are attenuated by effects of the normalization, as described further below. This discussion indicates a direction towards potential bound improvements and also explains why further constraint-variable multiplications are not promising; moreover, the model size of a first-level RLT would be excessive here anyway.

As one can see from Table 4, SLC and especially SLR work better with Gurobi for the considered instances. Although the LP bounds obtained with the “standard” linearizations are even weaker than with IL, the running times are orders of magnitude faster than with IL. The negative coefficients, and the model size they entail, play an important role here: due to the required normalization, the numbers of induced products and non-zeros are significantly larger with IL, and many linearization variables referring to the same pair of original variables are generated. Moreover, the constraint linkage among these linearization variables is poor, and so, consequently, is their impact on the bound. Therefore, although the total number of linearization variables could be reduced by a factor of about three by investing more time to derive an inductive linearization using the MIP from Mallach (2021), which improves the solution times considerably, the latter are still not competitive with those obtained with the “standard” linearization, and especially not with those of specialized methods for the QSPP as in Rostami et al. (2018). Moreover, for some instances, even the root linear program of IL turned out to be comparatively challenging to solve with Gurobi.

The suitability of typical “flow conservation” constraints like (19) for deriving inductive linearizations thus appears to be limited so far. However, side experiments indicate that strong bounds could be achieved with an inductive linearization that resolves the poor linearization variable-constraint linkage indicated above while preserving consistency (e.g. using careful back-substitutions as indicated in Sect. 2.4), which is a subject for further research.

Currently, the structure of the present constraints, where each potential factor (arc) occurs on the left hand sides of two constraints (those associated with its endpoints) but with opposite signs, impairs the MIP solution times for deriving an inductive linearization. Algorithm 1 is also affected, but to a lesser extent. Not surprisingly, a noticeable increase in the derivation time occurs for the largest instances with \(\vert P \vert > 10^5\).

3.5 MINLPLib

To further broaden the experimental setting, we incorporated library collections such as the MINLPLib. In addition, these libraries provide instance files that can be passed directly to Gurobi (we used the .lp format), which gives rise to another meaningful comparison.

Instance Description

We employed all linearly constrained BQPs from MINLPLib except for six QSPP instances and one QAP instance. All but ten of these instances have equations only; for these ten exceptions, too, only the equations were employed to create the inductive linearizations. The first two of these exceptions are crossdock_15x7 and crossdock_15x8, which have 30 equations (with only zero–one coefficients and a right hand side of one) and 14 and 16 inequalities, respectively (with larger coefficients and right hand sides). The same applies to the eight generalized assignment problem instances with prefix pb, whose first (second) two digits refer to the number of equations (inequalities). Stemming from Cordeau et al. (2006), they were contributed to the MINLPLib by Monique Guignard. In Pessoa et al. (2010), the instance pb351575 remained unsolved due to a missing optimality certificate for the objective value of 6301723. To the best of our knowledge, its optimal solution has not been reported to date. By investing more computational resources and time in addition to the main experiments presented, we could solve all these instances to optimality using IL and thus confirm their optimal values as listed in Table 5.
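Following the naming convention just described, the dimensions of a pb instance can be read off its name. A minimal Python sketch with a hypothetical helper; any digits beyond the first four are left uninterpreted here, since their meaning is not stated above.

```python
from typing import Tuple


def pb_dimensions(name: str) -> Tuple[int, int]:
    """Return (number of equations, number of inequalities) encoded in the
    first and second pair of digits after the prefix 'pb'."""
    digits = name[2:6]
    if not (name.startswith("pb") and len(digits) == 4 and digits.isdigit()):
        raise ValueError(f"unexpected pb instance name: {name!r}")
    return int(digits[:2]), int(digits[2:])


print(pb_dimensions("pb351575"))  # 35 equations, 15 inequalities
```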

LP Relaxation Bounds and MIP Performance

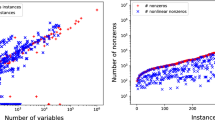

Figure 5 displays the relaxation optimality gaps obtained for the instances that could be solved within 48 h using at least one method. The MIP results are shown in Tables 6 and 7.

Besides the crossdock and pb instances already mentioned, the two color_lab instances are the only ones whose non-zero left hand side coefficients and right hand sides are not all equal to one. As anticipated in Sect. 3.1, an inductive linearization is frequently (but not always) well suited and superior to a “standard” linearization for instances with this structure. In several cases, this is also true when compared with Gurobi as a standalone solver.

On the one hand, for the crossdock and pb instances, the improved bounds of IL translate into better MIP performance, further supported by the fact that IL is also very economical here in terms of the number of linearization equations and additional non-zeros. Moreover, for celar6-sub0, the inductive linearization is orders of magnitude faster, even though there are many products and induced non-zeros, and even though the relaxation optimality gaps give no corresponding indication.

On the other hand, for the maximum constraint satisfiability problems, SLR performs clearly best with Gurobi, while IL requires fewer linearization constraints but induces more non-zeros and linearization variables, and even its root linear program proved difficult to solve with Gurobi. Moreover, the color_lab instances turn out to be difficult when using IL, even though the bound obtained with its continuous relaxation is much better than with SLC and the model size is also favorable.

For the “semi-assignment”-like graph partitioning instances (see also a more comprehensive study in Mallach (2018) with an additional normalization constraint), the picture is diverse as well: while the graphpart-2 and graphpart-3 instances prove rather simple for all methods (which also achieve the same, relatively strong relaxation optimality gaps), the bounds obtained for the graphpart-clique instances are equal to zero, and these instances become especially challenging for IL with increasing size. In the latter instances, each node must be assigned to one of three partitions, and the cost of placing any pair of nodes in the same partition is equal for all partitions. The initial number of products \(\vert P \vert \) as well as the computed inductive linearizations are significantly larger than for the other graphpart instances mentioned before. Additional experiments revealed that the bounds for most of the instances, in particular the graphpart and the maxcsp instances, could not be improved even when multiplying all constraints with all variables, which here yields a (square-reduced) first-level RLT since the “standard” linearization inequalities are then implied for these instances.
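To see why the graphpart-clique instances carry many products, consider a sketch under an assumption not stated explicitly above: the BQP uses a binary variable \(x_{ik}\) for placing node i in partition k, and one product \(x_{ik} x_{jk}\) for every node pair \(\{i,j\}\) and each of the three partitions. The density then follows the definition from Sect. 3.1 with \(\vert N \vert = 3n\). The function names are hypothetical.

```python
from math import comb


def clique_partition_products(num_nodes: int, num_parts: int = 3) -> int:
    # One product x_ik * x_jk per unordered node pair {i, j} and partition k.
    return num_parts * comb(num_nodes, 2)


def clique_partition_density(num_nodes: int, num_parts: int = 3) -> float:
    # 100 * |P| / C(|N|, 2) with |N| = num_parts * num_nodes variables.
    num_vars = num_parts * num_nodes
    return 100.0 * clique_partition_products(num_nodes, num_parts) / comb(num_vars, 2)


for n in (10, 20, 40):
    print(n, clique_partition_products(n), round(clique_partition_density(n), 2))
```

Under this assumption, \(\vert P \vert\) grows quadratically in the number of nodes, and every product is induced from the same three-term assignment equations, which is consistent with the large inductive linearizations reported above.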

3.6 QPLIB

Finally, we look at the QPLIB (Furini et al. 2019), and we again chose the .lp format to pass the selected instances directly to Gurobi.

Instance Description

Here, we selected all BQPs with linear inequality constraints for our testbed, especially since the MINLPLib experiments only employed linear equation constraints, and since most QPLIB instances of that kind are also part of the MINLPLib and QAPLIB experiments.

LP Relaxation Bounds and MIP Performance

For those selected instances that could be solved within 48 h using at least one method, Fig. 6 shows the relaxation optimality gaps. For the majority of the instances, these gaps are large irrespective of the method used. Zero lower bounds (\(100 \%\) gaps) are frequently observed for minimization problems, e.g. for most instances with prefix 100, which have large ratios between the right hand sides and the left hand side coefficients. For a few of these with slightly better gaps, the SLC bound turns out to be superior. Instance 0067 (actually a QKP) is another case where the ILW gap of \(16.25 \%\) is weaker than the \(1.26\%\) gap obtained with SLC, which is in line with Sect. 3.2. Another example is instance 0752, where the ILW gap of \(77.60 \%\) is considerably worse than the \(39.83\%\) gap obtained with SLC. Due to negative coefficients, this instance requires normalization, and so do the instances with first digits 2 and 3. Here, an effect similar to the one in Sect. 3.4 applies, concerning poorly linked linearization variables that refer to the same pair of original variables. Only for the instances with first digit 5 are the ILW bounds slightly superior, albeit on a weak level.

The MIP results are presented in Table 8. Given the above discussion of the relaxation bounds and the impact of normalization, one could expect better results when using Gurobi as a standalone solver, or sometimes also when supplying it with one of the “standard” linearizations, than when using ILW (which still worked better than IL). Indeed, while the instances with prefix 100 and their special coefficient ranges turn out to be unsuited for an inductive linearization, the size of the linearizations for the instances starting with the digits 2 and 3 also turns out to be unfavorable. Only for the instances with first digit 5 are competitive model sizes and solution times achieved with IL.

4 Conclusion

A framework to derive inductive linearizations in practice has been outlined and it has been demonstrated that it can be effectively applied to a variety of binary quadratic programs with linear constraints. The experiments covered the Quadratic Assignment, Knapsack, Matching and Shortest Path Problems, as well as instances from the MINLPLib and QPLIB.

Addressing the research questions mentioned in the introduction, the best results are typically obtained with an inductive linearization if its continuous relaxation is comparatively tight and, at the same time, the numbers of linearization constraints and induced non-zero coefficients remain moderate. These ideal conditions are, for example, observed for Quadratic Assignment and Quadratic Matching Problems. If only the first property is fulfilled, for instance because the original constraints employed have right hand sides equal to one and zero–one left hand sides but the structure of the factor pairs or constraints impedes a compact model extension, or if the formulation is more compact without providing a strong relaxation, the results show that an inductive linearization may or may not compare well with a “standard” linearization. Which of the two cases applies may then depend on further parameters such as the density of the given product terms and the actual distribution of their factors over the set of (employed) constraints, which in turn also influence the achievable compactness and relaxation strength of inductive linearizations. Moreover, if the ratios between right hand sides and (non-negative) left hand side coefficients are large, the relaxations of inductive linearizations tend to become weaker, and “standard” linearizations may lead to superior running times with a mixed-integer programming solver, as has been observed for the Quadratic Knapsack Problem. At present, negative constraint coefficients may also impair the performance achieved with inductive linearizations. One reason is that the corresponding normalization step typically counteracts a compact reformulation; another is that the induced constraints often establish only a poor linkage between linearization variables referring to the same pair of original variables. Improvements in this respect are a natural subject for further research.
With explanations for the positive and negative observations at hand, it should be emphasized that they can only provide a partial picture and that exceptions to the respective rules of thumb naturally exist, especially since a MIP solution process depends on various further influences such as branching decisions or cutting plane effectiveness. Across the instance sets considered, the inductive linearization technique frequently led to a continuous relaxation that is at least as tight as the one provided by a “standard” linearization. In several cases, the bounds also turned out to be equal to or almost as good as those provided by a full first-level RLT, even though the models are typically much more compact. The broad computational experiments further revealed that inductive linearizations can be computed quickly in an automated fashion for the various application instances, and that this can typically be done well even with a simple combinatorial heuristic.

References

Adams WP, Johnson TA (1994) Improved linear programming-based lower bounds for the quadratic assignment problem. In: Pardalos PM, Wolkowicz H (eds) Quadratic assignment and related problems, vol 16. DIMACS Series on Discrete Mathematics and Computer Science. AMS, Providence, pp 43–75

Adams WP, Sherali HD (1986) A tight linearization and an algorithm for zero-one quadratic programming problems. Manag Sci 32(10):1274–1290. https://doi.org/10.1287/mnsc.32.10.1274

Adams WP, Sherali HD (1999) A reformulation-linearization technique for solving discrete and continuous nonconvex problems. In: Nonconvex optimization and its applications, vol 31. Springer. https://doi.org/10.1007/978-1-4757-4388-3

Billionnet A, Calmels F (1996) Linear programming for the 0–1 quadratic knapsack problem. Eur J Oper Res 92(2):310–325. https://doi.org/10.1016/0377-2217(94)00229-0

Billionnet A, Elloumi S (2001) Best reduction of the quadratic semi-assignment problem. Discrete Appl Math 109(3):197–213. https://doi.org/10.1016/S0166-218X(00)00257-2

Billionnet A, Soutif E (2004) An exact method based on Lagrangian decomposition for the 0–1 quadratic knapsack problem. Eur J Oper Res 157(3):565–575. https://doi.org/10.1016/S0377-2217(03)00244-3

Burkard RE, Karisch SE, Rendl F (1997) QAPLIB—a quadratic assignment problem library. J Glob Optim 10(4):391–403. https://doi.org/10.1023/A:1008293323270

Cordeau JF, Gaudioso M, Laporte G, Moccia L (2006) A memetic heuristic for the generalized quadratic assignment problem. INFORMS J Comput 18(4):433–443. https://doi.org/10.1287/ijoc.1040.0128

Davidović T, Liberti L, Maculan N, Mladenović N (2007) Towards the optimal solution of the multiprocessor scheduling problem with communication delays. In: Baptiste P, Kendall G, Munier-Kordon A, Sourd F (eds) Proceedings of the 3rd multidisciplinary international conference on scheduling: theory and applications, École Polytechnique, Paris, pp 128–135

Frieze AM, Yadegar J (1983) On the quadratic assignment problem. Discrete Appl Math 5(1):89–98. https://doi.org/10.1016/0166-218X(83)90018-5

Furini F, Traversi E, Belotti P, Frangioni A, Gleixner A, Gould N, Liberti L, Lodi A, Misener R, Mittelmann H, Sahinidis N, Vigerske S, Wiegele A (2019) QPLIB: a library of quadratic programming instances. Math Program Comput 11:237–265. https://doi.org/10.1007/s12532-018-0147-4

Glover F, Woolsey E (1974) Converting the 0–1 polynomial programming problem to a 0–1 linear program. Oper Res 22(1):180–182. https://doi.org/10.1287/opre.22.1.180

Hammer PL, Hansen P, Simeone B (1984) Roof duality, complementation and persistency in quadratic 0–1 optimization. Math Program 28(2):121–155. https://doi.org/10.1007/BF02612354

Helmberg C, Rendl F, Weismantel R (2000) A semidefinite programming approach to the quadratic knapsack problem. J Comb Optim 4(2):197–215. https://doi.org/10.1023/A:1009898604624

Hupp L, Klein L, Liers F (2015) An exact solution method for quadratic matching: the one-quadratic-term technique and generalisations. Discrete Optim 18:193–216. https://doi.org/10.1016/j.disopt.2015.10.002

Koopmans TC, Beckmann M (1957) Assignment problems and the location of economic activities. Econometrica 25(1):53–76

Liberti L (2007) Compact linearization for binary quadratic problems. 4OR 5(3):231–245. https://doi.org/10.1007/s10288-006-0015-3

Mallach S (2017) Improved mixed-integer programming models for the multiprocessor scheduling problem with communication delays. J Comb Optim. https://doi.org/10.1007/s10878-017-0199-9

Mallach S (2018) Compact linearization for binary quadratic problems subject to assignment constraints. 4OR 16:295–309. https://doi.org/10.1007/s10288-017-0364-0

Mallach S (2019) A natural quadratic approach to the generalized graph layering problem. In: Archambault D, Tóth CD (eds) Graph drawing and network visualization. Springer, Cham, pp 532–544. https://doi.org/10.1007/978-3-030-35802-0_40

Mallach S (2021) Inductive linearization for binary quadratic programs with linear constraints. 4OR 19(4):549–570. https://doi.org/10.1007/s10288-020-00460-z

Pessoa AA, Hahn PM, Guignard M, Zhu YR (2010) Algorithms for the generalized quadratic assignment problem combining Lagrangean decomposition and the reformulation-linearization technique. Eur J Oper Res 206(1):54–63. https://doi.org/10.1016/j.ejor.2010.02.006

Rostami B, Chassein A, Hopf M, Frey D, Buchheim C, Malucelli F, Goerigk M (2018) The quadratic shortest path problem: complexity, approximability, and solution methods. Eur J Oper Res 268(2):473–485. https://doi.org/10.1016/j.ejor.2018.01.054

Funding

Open Access funding enabled and organized by Projekt DEAL.

Ethics declarations

Conflict of interest

The author declares that there is no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mallach, S. Inductive linearization for binary quadratic programs with linear constraints: a computational study. 4OR-Q J Oper Res 22, 47–87 (2024). https://doi.org/10.1007/s10288-023-00537-5
