Abstract

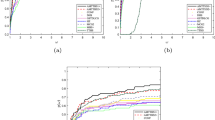

This paper proposes a new spectral three-term conjugate gradient method that combines the favorable features of three-term conjugate gradient methods with the strong theoretical properties of quasi-Newton methods. The spectral parameter is computed from a modified secant condition. The resulting search direction satisfies the sufficient descent condition independently of the line search. Global convergence of the new scheme is established under the strong Wolfe conditions. Preliminary numerical experiments indicate that the method is efficient on unconstrained optimization problems.
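To make the phrase "sufficient descent without any line search" concrete, the sketch below computes a classical three-term Hestenes–Stiefel direction, d_k = -g_k + beta_k d_{k-1} - theta_k y_{k-1}, with beta_k = g_k^T y_{k-1} / (d_{k-1}^T y_{k-1}) and theta_k = g_k^T d_{k-1} / (d_{k-1}^T y_{k-1}). This is the well-known non-spectral variant, not the method proposed in the paper: the abstract does not give the spectral parameter or the modified secant condition, so both are left out. The function name, the `eps` safeguard, and the sample vectors are assumptions made for illustration.

```python
def dot(u, v):
    """Euclidean inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def three_term_hs_direction(g_new, g_old, d_old, eps=1e-12):
    """Illustrative three-term Hestenes-Stiefel direction (hypothetical helper).

    g_new, g_old: gradients at the current and previous iterates.
    d_old: previous search direction.
    eps: assumed safeguard against a vanishing denominator.
    """
    y = [a - b for a, b in zip(g_new, g_old)]  # gradient difference y_{k-1}
    denom = dot(d_old, y)
    if abs(denom) < eps:
        # Assumed fallback: restart with steepest descent.
        return [-a for a in g_new]
    beta = dot(g_new, y) / denom       # Hestenes-Stiefel parameter
    theta = dot(g_new, d_old) / denom  # coefficient of the third term
    return [-gn + beta * d - theta * yi
            for gn, d, yi in zip(g_new, d_old, y)]


# Arbitrary sample data; no line search is involved anywhere.
g_old = [1.0, -2.0, 0.5]
d_old = [-1.0, 2.0, -0.5]
g_new = [0.3, 0.4, -1.2]

d_new = three_term_hs_direction(g_new, g_old, d_old)

# Descent identity: g_k^T d_k + ||g_k||^2 should vanish up to rounding.
descent_gap = dot(g_new, d_new) + dot(g_new, g_new)
```

The beta and theta terms cancel exactly in the inner product with g_k, so g_k^T d_k = -||g_k||^2 holds for any step length. That cancellation is the kind of line-search-independent descent property the abstract refers to; the strong Wolfe conditions are needed only for the convergence analysis.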

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Faramarzi, P., Amini, K. A spectral three-term Hestenes–Stiefel conjugate gradient method. 4OR-Q J Oper Res 19, 71–92 (2021). https://doi.org/10.1007/s10288-020-00432-3

DOI: https://doi.org/10.1007/s10288-020-00432-3

Keywords

- Global convergence

- Three-term conjugate gradient method

- Unconstrained optimization

- Spectral parameter

- Modified secant condition