Abstract

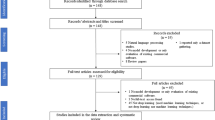

The application of deep learning (DL) in medicine introduces transformative tools with the potential to enhance prognosis, diagnosis, and treatment planning. However, transparent documentation is essential if researchers are to reproduce results and refine techniques. Our study addresses the unique challenges presented by DL in medical imaging by developing a comprehensive checklist, using the Delphi method, to improve reproducibility and reliability in this dynamic field. We compiled a preliminary checklist based on a comprehensive review of existing checklists and relevant literature. A panel of 11 experts in medical imaging and DL assessed these items using Likert scales, with two survey rounds to refine responses and gauge consensus. We also employed the content validity ratio (CVR) with a cutoff of 0.59 to determine item face and content validity. Round 1 included a 27-item questionnaire; 12 items demonstrated high consensus for face and content validity and were therefore left out of round 2. Round 2 involved refining the checklist, resulting in an additional 17 items. In the last round, 3 items were deemed non-essential or infeasible, while 2 newly suggested items received unanimous agreement for inclusion, resulting in a final 26-item DL model reporting checklist derived from the Delphi process. The 26-item checklist facilitates the reproducible reporting of DL tools and enables scientists to replicate a study’s results.
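As a rough illustration of the CVR cutoff mentioned above, the sketch below uses Lawshe's standard formula, CVR = (n_e - N/2) / (N/2), where n_e is the number of panelists rating an item "essential" and N is the panel size. The function names are hypothetical, and how Likert responses were mapped to "essential" votes is not stated in the abstract, so that step is assumed to have happened already.

```python
from typing import Optional


def content_validity_ratio(n_essential: int, n_panelists: int) -> float:
    """Lawshe's CVR: (n_e - N/2) / (N/2), ranging from -1 to 1."""
    if n_panelists <= 0:
        raise ValueError("n_panelists must be positive")
    if not 0 <= n_essential <= n_panelists:
        raise ValueError("n_essential must be between 0 and n_panelists")
    half = n_panelists / 2
    return (n_essential - half) / half


def min_essential_votes(n_panelists: int, cutoff: float) -> Optional[int]:
    """Smallest number of 'essential' votes whose CVR meets the cutoff.

    Returns None if no vote count can reach the cutoff (cutoff > 1).
    """
    for n in range(n_panelists + 1):
        if content_validity_ratio(n, n_panelists) >= cutoff:
            return n
    return None


if __name__ == "__main__":
    # Panel size and cutoff taken from the abstract; vote counts are illustrative.
    panel_size, cutoff = 11, 0.59
    for votes in (8, 9, 11):
        cvr = content_validity_ratio(votes, panel_size)
        verdict = "meets" if cvr >= cutoff else "below"
        print(f"{votes}/{panel_size} essential: CVR = {cvr:.2f} ({verdict} cutoff)")
    print("minimum votes needed:", min_essential_votes(panel_size, cutoff))
```

Under this formula, 9 of 11 "essential" ratings give a CVR of about 0.64 and 8 of 11 give about 0.45, so an item would need at least 9 of the 11 panelists to clear the 0.59 cutoff.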

Abbreviations

- DL: Deep learning
- CVR: Content validity ratio

Acknowledgements

The study was organized through the Society for Imaging Informatics in Medicine (SIIM) Machine Learning Tools/Research and Education subcommittees.

Funding

This study received no funding.

Author information

Contributions

Mana Moassefi and Shahriar Faghani were instrumental in the development and design of the study, wrote the initial draft, incorporated critical revisions of the manuscript, and organized the Delphi study. Yashbir Singh and Gian Marco Conte provided critical reviews of the draft manuscript. Pouria Rouzrokh, Bardia Khosravi, Sanaz Vahdati, Mana Moassefi, and Shahriar Faghani prepared the primary checklist. The remaining authors served as expert panelists who took part in both rounds of the Delphi process and reviewed and commented on the manuscript.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Moassefi, M., Singh, Y., Conte, G.M. et al. Checklist for Reproducibility of Deep Learning in Medical Imaging. J Digit Imaging. Inform. med. (2024). https://doi.org/10.1007/s10278-024-01065-2

DOI: https://doi.org/10.1007/s10278-024-01065-2