Abstract

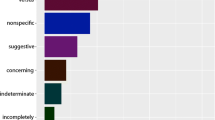

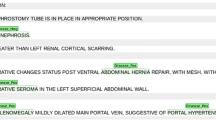

Our goal was to analyze the text of radiology reports for chest radiographs (CXRs) to identify the imaging findings that most affect report length and complexity. Identifying these findings can highlight opportunities for designing CXR AI systems that increase radiologist efficiency. We retrospectively analyzed the text of 210,025 MIMIC-CXR reports and 168,949 reports from our local institution collected from 2019 to 2022. Fifty-nine categories of imaging finding keywords were extracted from the reports using natural language processing (NLP), and their impact on report length was assessed using linear regression with and without LASSO regularization. Regression was also used to assess the impact of other factors contributing to report length, such as the signing radiologist and the use of terms of perception. When CXR report word counts were modeled with regression using only imaging finding keyword features, the mean coefficient of determination (R²) was 0.469 ± 0.001 for local reports and 0.354 ± 0.002 for MIMIC-CXR. When only the use of terms of perception was considered, mean R² was significantly lower: 0.067 ± 0.001 for local reports and 0.086 ± 0.002 for MIMIC-CXR. For a combined model of the local report data accounting for the signing radiologist, imaging finding keywords, and terms of perception, the mean R² was 0.570 ± 0.002. With LASSO, the highest-value coefficients pertained to endotracheal tubes and pleural drains for the local data and to masses, nodules, and cavitary and cystic lesions for MIMIC-CXR. Natural language processing and regression analysis of radiology report text can highlight imaging targets for AI models that offer opportunities to bolster radiologist efficiency.
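The modeling idea in the abstract (keyword indicator features regressed against report word count, with and without LASSO, scored by R²) can be illustrated with a small, self-contained Python sketch. It is not the authors' pipeline: the four keyword categories and regular expressions are hypothetical stand-ins for the study's 59 categories, report length is assumed to be a simple whitespace word count, and LASSO is fit with plain coordinate descent on the objective (1/2n)||y - b0 - Xb||^2 + alpha*||b||_1, where alpha = 0 reduces to ordinary least squares. The R² computed here is in-sample on made-up snippets; reproducing the reported mean R² values would require the study's data and evaluation protocol.

```python
import re
from statistics import mean

# HYPOTHETICAL keyword dictionary: the study used 59 finding categories that are
# not listed in the abstract; these four categories and patterns are illustrative.
KEYWORD_CATEGORIES = {
    "endotracheal_tube": [r"endotracheal tube", r"\bett\b"],
    "pleural_drain": [r"pleural drain", r"chest tube", r"pigtail catheter"],
    "nodule_mass": [r"\bnodules?\b", r"\bmass(es)?\b"],
    "pleural_effusion": [r"pleural effusions?"],
}


def word_count(report):
    """Report length as the number of whitespace-separated tokens (an assumption)."""
    return len(report.split())


def keyword_features(report):
    """0/1 indicator per keyword category: 1.0 if any of its patterns occurs."""
    text = report.lower()
    return [
        1.0 if any(re.search(p, text) for p in patterns) else 0.0
        for patterns in KEYWORD_CATEGORIES.values()
    ]


def soft_threshold(z, gamma):
    """Shrink z toward zero by gamma (proximal operator of the L1 penalty)."""
    if z > gamma:
        return z - gamma
    if z < -gamma:
        return z + gamma
    return 0.0


def fit_lasso(X, y, alpha, n_sweeps=500):
    """Coordinate descent for (1/2n)*||y - b0 - X b||^2 + alpha*||b||_1.

    The intercept b0 is unpenalized (handled by centering). With alpha=0 this
    converges to ordinary least squares when X has full column rank.
    """
    n, p = len(X), len(X[0])
    x_means = [mean(row[j] for row in X) for j in range(p)]
    y_mean = mean(y)
    Xc = [[row[j] - x_means[j] for j in range(p)] for row in X]
    resid = [v - y_mean for v in y]  # residual when all coefficients are 0
    b = [0.0] * p
    for _ in range(n_sweeps):
        for j in range(p):
            col_sq = sum(Xc[i][j] ** 2 for i in range(n)) / n
            if col_sq == 0.0:
                continue  # category always or never present: coefficient stays 0
            # Inner product (scaled by 1/n) of feature j with the residual excluding j.
            rho = sum(Xc[i][j] * (resid[i] + Xc[i][j] * b[j]) for i in range(n)) / n
            new_bj = soft_threshold(rho, alpha) / col_sq
            delta = new_bj - b[j]
            if delta != 0.0:
                for i in range(n):
                    resid[i] -= Xc[i][j] * delta
                b[j] = new_bj
    b0 = y_mean - sum(bj * m for bj, m in zip(b, x_means))
    return b0, b


def predict(b0, b, X):
    return [b0 + sum(bj * xj for bj, xj in zip(b, row)) for row in X]


def r_squared(y, y_hat):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_bar = mean(y)
    ss_res = sum((a - f) ** 2 for a, f in zip(y, y_hat))
    ss_tot = sum((a - y_bar) ** 2 for a in y)
    return 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    # Toy, made-up report snippets (not study data).
    reports = [
        "No acute cardiopulmonary process.",
        "Endotracheal tube terminates 4 cm above the carina. No pneumothorax.",
        "Right chest tube in place. Small residual pleural effusion. No pneumothorax.",
        "New 2 cm nodule in the right upper lobe. Recommend CT for further evaluation.",
        "Stable small left pleural effusion.",
        "Endotracheal tube and right pleural drain in place. Mass in the left hilum.",
    ]
    X = [keyword_features(r) for r in reports]
    y = [word_count(r) for r in reports]
    for alpha in (0.0, 0.5):
        b0, b = fit_lasso(X, y, alpha)
        coefs = {k: round(c, 2) for k, c in zip(KEYWORD_CATEGORIES, b)}
        print(f"alpha={alpha}: R^2={r_squared(y, predict(b0, b, X)):.3f}", coefs)
```

Raising `alpha` drives weaker keyword coefficients to exactly zero, so the coefficients that survive give a rough indication of which findings add the most words. In practice a library implementation such as scikit-learn's `Lasso` (with `LinearRegression` as the unregularized baseline) would replace the hand-written solver.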

Data Availability

Data can be made available upon request to the authors, subject to data use agreement and Institutional Review Board restrictions.

Funding

The authors declare that no funds, grants, or other support were received during the preparation of this manuscript.

Author information

Contributions

All authors contributed to the study’s conception and design. Data collection and analysis were performed by Dr. Carl Sabottke and Dr. Raza Mushtaq. The first draft of the manuscript was written by Dr. Carl Sabottke, and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics Approval

This retrospective study was approved by the Institutional Review Board affiliated with the University of Arizona Tucson College of Medicine and Banner Health.

Consent to Participate

Informed consent for this retrospective study was waived by the Institutional Review Board.

Consent to Publish

The manuscript contains no individual person’s data that would require consent to publish. Informed consent for this retrospective study was waived by the Institutional Review Board.

Competing Interests

The authors declare no competing interests.

Supplementary Information

Electronic supplementary material accompanies the online version of this article.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Sabottke, C., Lee, J., Chiang, A. et al. Text Report Analysis to Identify Opportunities for Optimizing Target Selection for Chest Radiograph Artificial Intelligence Models. J Digit Imaging. Inform. med. 37, 402–411 (2024). https://doi.org/10.1007/s10278-023-00927-5

DOI: https://doi.org/10.1007/s10278-023-00927-5