Abstract

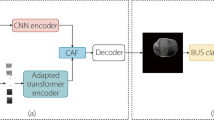

Breast ultrasound (BUS) imaging has become one of the key imaging modalities for medical diagnosis and prognosis. However, manually delineating lesions in ultrasound images is challenging because lesions vary in shape, size, intensity, curvature, and other medical priors. Therefore, computer-aided diagnostic (CADx) techniques incorporating deep learning–based neural networks are used to segment lesions from BUS images automatically. This paper proposes an encoder-decoder architecture to recognize and accurately segment lesions in two-dimensional BUS images. The architecture uses residual connections in both the encoder and decoder paths, and bi-directional ConvLSTM (BConvLSTM) units in the decoder extract fine-grained region-of-interest (ROI) information. The BConvLSTM units and residual blocks help the network weight ROI information more heavily than similar-looking background regions. Two public BUS image datasets are used, one with 163 images and the other with 42 images. The proposed model is trained on images from the first dataset (163 images) augmented in ten forms, and test results are reported on the second dataset and on the test set of the first dataset; the segmentation performance is comparable with that of state-of-the-art segmentation methods. The visual results likewise show that the proposed approach can accurately identify lesion contours and could potentially be applied to similar and larger datasets.


Availability of Data and Material

All BUS image datasets used in this work were acquired from public medical repositories (online sources), which are cited in the preceding sections.

Code Availability

The code is available on request; it has not been released publicly.

References

[1] Bray, F., Ferlay, J., Soerjomataram, I., Siegel, R., Torre, L., Jemal, A.: Global cancer statistics 2018: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries (vol 68, pg 394, 2018). CA: A Cancer Journal for Clinicians 70(4), 313 (2020)

[2] Siegel, R.L., Miller, K.D., Jemal, A.: Cancer statistics, 2019. CA: A Cancer Journal for Clinicians 69(1), 7–34 (2019)

[3] Berg, W.A., Blume, J.D., Cormack, J.B., Mendelson, E.B., Lehrer, D., Böhm-Vélez, M., Pisano, E.D., Jong, R.A., Evans, W.P., Morton, M.J., et al.: Combined screening with ultrasound and mammography vs mammography alone in women at elevated risk of breast cancer. JAMA 299(18), 2151–2163 (2008)

[4] Xian, M., Zhang, Y., Cheng, H.D.: Fully automatic segmentation of breast ultrasound images based on breast characteristics in space and frequency domains. Pattern Recognition 48(2), 485–497 (2015)

[5] Cheng, H.D., Shan, J., Ju, W., Guo, Y., Zhang, L.: Automated breast cancer detection and classification using ultrasound images: A survey. Pattern Recognition 43(1), 299–317 (2010)

[6] Fu, H., Xu, Y., Lin, S., Wong, D.W.K., Liu, J.: DeepVessel: Retinal vessel segmentation via deep learning and conditional random field. In: International Conference on Medical Image Computing and Computer-Assisted Intervention, pp. 132–139. Springer (2016)

[7] Nie, D., Gao, Y., Wang, L., Shen, D.: ASDNet: Attention based semi-supervised deep networks for medical image segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention, pp. 370–378. Springer (2018)

[8] Greenspan, H., Van Ginneken, B., Summers, R.M.: Guest editorial deep learning in medical imaging: Overview and future promise of an exciting new technique. IEEE Transactions on Medical Imaging 35(5), 1153–1159 (2016)

[9] Shen, D., Wu, G., Suk, H.I.: Deep learning in medical image analysis. Annual Review of Biomedical Engineering 19, 221–248 (2017)

[10] Cheng, J.Z., Ni, D., Chou, Y.H., Qin, J., Tiu, C.M., Chang, Y.C., Huang, C.S., Shen, D., Chen, C.M.: Computer-aided diagnosis with deep learning architecture: applications to breast lesions in US images and pulmonary nodules in CT scans. Scientific Reports 6(1), 1–13 (2016)

[11] Shelhamer, E., Long, J., Darrell, T.: Fully convolutional networks for semantic segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence 39(4), 640–651 (2016)

[12] Agarwal, R., Diaz, O., Lladó, X., Gubern-Mérida, A., Vilanova, J.C., Martí, R.: Lesion segmentation in automated 3D breast ultrasound: volumetric analysis. Ultrasonic Imaging 40(2), 97–112 (2018)

[13] Huang, K., Cheng, H.D., Zhang, Y., Zhang, B., Xing, P., Ning, C.: Medical knowledge constrained semantic breast ultrasound image segmentation. In: 2018 24th International Conference on Pattern Recognition (ICPR), pp. 1193–1198. IEEE (2018)

[14] Shareef, B., Xian, M., Vakanski, A.: STAN: Small tumor-aware network for breast ultrasound image segmentation. In: 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI), pp. 1–5. IEEE (2020)

[15] Ibtehaz, N., Rahman, M.S.: MultiResUNet: Rethinking the U-Net architecture for multimodal biomedical image segmentation. Neural Networks 121, 74–87 (2020)

[16] Guan, L., Wu, Y., Zhao, J.: SCAN: Semantic context aware network for accurate small object detection. International Journal of Computational Intelligence Systems 11(1), 951 (2018)

[17] Zhuang, Z., Li, N., Joseph Raj, A.N., Mahesh, V.G., Qiu, S.: An RDAU-NET model for lesion segmentation in breast ultrasound images. PLoS ONE 14(8), e0221535 (2019)

[18] Cui, S., Chen, M., Liu, C.: DSUNET: a new network structure for detection and segmentation of ultrasound breast lesions. Journal of Medical Imaging and Health Informatics 10(3), 661–666 (2020)

[19] Shi, X., Chen, Z., Wang, H., Yeung, D.Y., Wong, W.K., Woo, W.C.: Convolutional LSTM network: A machine learning approach for precipitation nowcasting. arXiv preprint arXiv:1506.04214 (2015)

[20] Yap, M.H., Pons, G., Marti, J., Ganau, S., Sentis, M., Zwiggelaar, R., Davison, A.K., Marti, R.: Automated breast ultrasound lesions detection using convolutional neural networks. IEEE Journal of Biomedical and Health Informatics 22(4), 1218–1226 (2017)

[21] STU-Hospital Dataset. Accessed on: Feb 6, 2020, https://github.com/xbhlk/STU-Hospital

[22] Xu, B., Wang, N., Chen, T., Li, M.: Empirical evaluation of rectified activations in convolutional network. arXiv preprint arXiv:1505.00853 (2015)

[23] Ioffe, S., Szegedy, C.: Batch normalization: Accelerating deep network training by reducing internal covariate shift. In: International Conference on Machine Learning, pp. 448–456. PMLR (2015)

[24] Kingma, D.P., Ba, J.: Adam: A method for stochastic optimization. In: International Conference on Learning Representations (ICLR) (2015)

[25] Budak, Ü., Cömert, Z., Rashid, Z.N., Şengür, A., Çıbuk, M.: Computer-aided diagnosis system combining FCN and BI-LSTM model for efficient breast cancer detection from histopathological images. Applied Soft Computing 85, 105765 (2019)

[26] Chorianopoulos, A.M., Daramouskas, I., Perikos, I., Grivokostopoulou, F., Hatzilygeroudis, I.: Deep learning methods in medical imaging for the recognition of breast cancer. In: 2020 11th International Conference on Information, Intelligence, Systems and Applications (IISA), pp. 1–8. IEEE (2020)

[27] Badrinarayanan, V., Kendall, A., Cipolla, R.: SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence 39(12), 2481–2495 (2017)

[28] Azad, R., Asadi-Aghbolaghi, M., Fathy, M., Escalera, S.: Bi-directional ConvLSTM U-Net with densley connected convolutions. In: Proceedings of the IEEE International Conference on Computer Vision Workshops (2019)

[29] Ronneberger, O., Fischer, P., Brox, T.: U-Net: Convolutional networks for biomedical image segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention, pp. 234–241. Springer (2015)

[30] Hu, J., Shen, L., Sun, G.: Squeeze-and-excitation networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 7132–7141 (2018)

[31] Zhuang, J., Xue, Z., Du, Y.: Ccse 703: Dense U-Net for biomedical image segmentation (2018)

[32] Staal, J., Abràmoff, M.D., Niemeijer, M., Viergever, M.A., Van Ginneken, B.: Ridge-based vessel segmentation in color images of the retina. IEEE Transactions on Medical Imaging 23(4), 501–509 (2004)

[33] PH² Database. Accessed on: May 31, 2022, https://www.fc.up.pt/addi/ph2%20database.html

[34] Kvasir SEG: Segmented Polyp Dataset for Computer Aided Gastrointestinal Disease Detection. Accessed on: May 31, 2022, https://datasets.simula.no/kvasir-seg/

[35] Moreira, I.C., Amaral, I., Domingues, I., Cardoso, A., Cardoso, M.J., Cardoso, J.S.: INbreast: toward a full-field digital mammographic database. Academic Radiology 19(2), 236–248 (2012)

[36] He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778 (2016)

Acknowledgements

The authors would like to thank the Indian Institute of Technology Roorkee for supporting this research work.

Funding

This study was funded by the Ministry of Human Resource Development (MHRD), Government of India, India (grant number OH-31-23-200-428).

Author information


Contributions

Ridhi Arora: conceptualization, methodology, software, validation, data curation, writing (original draft), writing (review and editing). Balasubramanian Raman: supervision.


Ethics declarations

Ethics Approval

This article does not contain any unlisted data or any other information from studies or experiments involving human or animal subjects.

Consent to Participate

Not applicable.

Consent for Publication

Not applicable.

Competing Interests

The authors declare no competing interests.


Appendix

A. Depthwise Separable Convolution

Let the kernel (weight tensor) of a convolution layer be represented as \(W \in \mathbb {R}^{n_i \times n_o \times f_h \times f_w}\), where \(n_i\) and \(n_o\) are the numbers of input and output channels, and \(f_h\) and \(f_w\) denote the height and width of the filter, respectively. When the filter is applied to an image patch x of image I with size \(n_i \times f_h \times f_w\), a response \(y \in \mathbb {R}^{n_o}\) is obtained as:

\[ y = W * x, \]

where \(y_o = \sum _{i=1}^{n_i} W_{i,o} * x_i\) for \(o \in [n_o]\) and \(i \in [n_i]\), and \(*\) denotes the convolution operation. \(W_{i,o} = W[i,o,:,:]\) is the slice of the kernel tensor along the \(i^{th}\) input and \(o^{th}\) output channels. The computational complexity for a single patch x is \(O(n_i \times n_o \times f_h \times f_w)\).
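As a minimal NumPy sketch (not the authors' code), the per-patch response \(y_o = \sum_i W_{i,o} * x_i\) can be written as a single tensor contraction and checked against explicit loops over the channels. The kernel layout \((n_i, n_o, f_h, f_w)\) follows the definition above; the sizes are arbitrary, \(*\) is treated as cross-correlation (as deep-learning libraries implement it), and the function names are hypothetical.

```python
import numpy as np

def standard_conv_patch(W, x):
    """Standard convolution response y in R^{n_o} at a single location.

    W: kernel of shape (n_i, n_o, f_h, f_w); x: patch of shape (n_i, f_h, f_w).
    Each y_o sums, over all input channels i, the element-wise product W[i, o] * x[i].
    """
    return np.einsum("iohw,ihw->o", W, x)

def standard_conv_mults(n_i, n_o, f_h, f_w):
    # One multiply per (input channel, output channel, kernel row, kernel column).
    return n_i * n_o * f_h * f_w

rng = np.random.default_rng(0)
n_i, n_o, f_h, f_w = 3, 4, 3, 3
W = rng.standard_normal((n_i, n_o, f_h, f_w))
x = rng.standard_normal((n_i, f_h, f_w))
y = standard_conv_patch(W, x)

# Explicit loops that mirror y_o = sum_i W_{i,o} * x_i term by term.
y_loop = np.array([sum(np.sum(W[i, o] * x[i]) for i in range(n_i)) for o in range(n_o)])
print(y.shape, np.allclose(y, y_loop), standard_conv_mults(n_i, n_o, f_h, f_w))
# prints: (4,) True 108
```

The multiply count \(3 \times 4 \times 3 \times 3 = 108\) is exactly the per-patch \(O(n_i \times n_o \times f_h \times f_w)\) term.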

In contrast to standard convolution, depthwise separable convolution factorizes the operation into a depthwise convolution (DC) and a pointwise convolution (PC). DC captures the spatial relationships among pixels within each channel, while PC captures the cross-channel relationships through a \(1 \times 1\) convolution. To obtain the same output shape as the standard convolution, the DC kernel is set to \(D \in \mathbb {R}^{n_i \times 1 \times f_h \times f_w}\) and the PC kernel to \(P \in \mathbb {R}^{n_i \times n_o \times 1 \times 1}\). When they are applied to the input patch x of image I, the response vector \(\widehat{y}\) is obtained as:

\[ \widehat{y} = P \circ (D * x), \]

where \(\widehat{y}_o = \sum _{i=1}^{n_i} P_{i,o} (D_i * x_i)\) for \(o \in [n_o]\), and \(\circ\) denotes the compound (composition) operation. \(D_i = D[i,1,:,:]\) and \(P_{i,o} = P[i,o,:,:]\) are the corresponding depthwise and pointwise tensor slices. For an output feature map of spatial size \(H \times W\) (here H and W are the map height and width, not the kernel W), the complexity of the depthwise separable operation is \(O(H \times W \times (n_i \times f_h \times f_w + n_i \times n_o))\), compared with \(O(H \times W \times n_i \times n_o \times f_h \times f_w)\) for standard convolution, so the cost ratio is \(\frac{1}{n_o} + \frac{1}{f_h f_w}\).
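The factorization can be checked numerically. The sketch below is a hypothetical NumPy illustration, not the BUS-Net implementation: it computes \(\widehat{y}\) from D and P, confirms that the result equals a standard convolution whose kernel is \(W_{i,o} = P_{i,o} D_i\), and compares multiply counts for an illustrative \(128 \times 128\) output map with 64 input and 128 output channels (sizes chosen arbitrarily).

```python
import numpy as np

def depthwise_separable_patch(D, P, x):
    """Depthwise separable response y_hat in R^{n_o} at a single location.

    D: depthwise kernel, shape (n_i, 1, f_h, f_w), one spatial filter per input channel.
    P: pointwise kernel, shape (n_i, n_o, 1, 1), a 1x1 convolution that mixes channels.
    x: image patch, shape (n_i, f_h, f_w).
    """
    z = np.einsum("ihw,ihw->i", D[:, 0], x)        # depthwise step: D_i * x_i, one value per channel
    return np.einsum("io,i->o", P[:, :, 0, 0], z)  # pointwise step: sum_i P_{i,o} z_i

def multiply_counts(height, width, n_i, n_o, f_h, f_w):
    """Multiplies over a height x width output map: (standard, depthwise separable)."""
    standard = height * width * n_i * n_o * f_h * f_w
    separable = height * width * (n_i * f_h * f_w + n_i * n_o)
    return standard, separable

rng = np.random.default_rng(0)
n_i, n_o, f_h, f_w = 3, 4, 3, 3
D = rng.standard_normal((n_i, 1, f_h, f_w))
P = rng.standard_normal((n_i, n_o, 1, 1))
x = rng.standard_normal((n_i, f_h, f_w))

# A separable kernel is a constrained standard kernel: W[i, o] = P[i, o] * D[i, 0].
W_equiv = P[:, :, 0, 0][:, :, None, None] * D[:, 0][:, None, :, :]
y_std = np.einsum("iohw,ihw->o", W_equiv, x)
y_hat = depthwise_separable_patch(D, P, x)
print(np.allclose(y_hat, y_std))  # True

std, sep = multiply_counts(128, 128, 64, 128, 3, 3)
print(np.isclose(sep / std, 1 / 128 + 1 / 9))  # True: ratio = 1/n_o + 1/(f_h*f_w)
```

The equivalence check also shows the trade-off: a depthwise separable layer can only represent kernels of the factored form \(P_{i,o} D_i\), which is what buys the roughly 8x saving in this example.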

B. Residual Unit

As a network becomes deeper, it becomes harder to converge from the start of training, and it later suffers from network degradation. To mitigate this, residual connections were introduced; they address the gradient dispersion (vanishing-gradient) problem of deep networks by stacking residual modules in otherwise conventional deep networks [36]. An identity skip connection in a residual module adds no parameters, so the network's depth can be increased without the shortcut itself adding to the parameter count. This structure also improves training efficiency.

Using a pyramidal form of the residual unit improves the network's ability to extract features efficiently. The pyramidal form generalizes well and linearly increases the dimension (number of channels) of the output feature maps. It also substantially reduces the number of trainable parameters and the computational cost. The pyramidal residual unit (bottom of Fig. 3) starts with a LeakyReLU activation function [22] and ends with batch normalization (BN) [23]; BN is also applied before the first separable convolution. In Fig. 3 (residual block portion), \(X_i\) is the input and \(X_{i+1}\) the output of the i-th residual unit, and F denotes the residual function. H denotes the shortcut (residual) connection; if this connection is an identity mapping, then \(H(X_i) = X_i\). Using this notation, the output \(X_{i+1}\) of the basic residual unit is:

\[ X_{i+1} = H(X_i) + F(X_i), \]

which reduces to \(X_{i+1} = X_i + F(X_i)\) for an identity shortcut.
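A minimal NumPy sketch of the basic residual unit \(X_{i+1} = H(X_i) + F(X_i)\) follows. It is illustrative only and rests on several assumptions: F is a hypothetical stand-in (BN, then a \(1 \times 1\) convolution in place of separable convolutions, then LeakyReLU, then BN) rather than the exact BUS-Net layer sequence, BN omits the learned scale and shift, and the zero-padded shortcut used when a pyramidal unit widens the feature map is a PyramidNet-style choice, not something the text specifies.

```python
import numpy as np

def leaky_relu(x, alpha=0.01):
    return np.where(x > 0, x, alpha * x)

def batch_norm(x, eps=1e-5):
    # Per-channel normalization over batch and spatial axes; learned scale/shift omitted.
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def pointwise_conv(x, P):
    # 1x1 convolution: x (N, C_in, H, W), P (C_in, C_out) -> (N, C_out, H, W).
    return np.einsum("nchw,co->nohw", x, P)

def residual_fn(x, P):
    # Hypothetical stand-in for F: BN -> 1x1 conv -> LeakyReLU -> BN.
    return batch_norm(leaky_relu(pointwise_conv(batch_norm(x), P)))

def shortcut(x, c_out):
    # H(X_i): identity when channel counts match; otherwise zero-pad the extra
    # channels so a widening (pyramidal) unit still adds a parameter-free shortcut.
    n, c_in, h, w = x.shape
    if c_out == c_in:
        return x
    pad = np.zeros((n, c_out - c_in, h, w), dtype=x.dtype)
    return np.concatenate([x, pad], axis=1)

def residual_unit(x, P):
    # X_{i+1} = H(X_i) + F(X_i)
    return shortcut(x, P.shape[1]) + residual_fn(x, P)

rng = np.random.default_rng(0)
x = rng.standard_normal((2, 8, 16, 16))
# With F's weights at zero, F(X_i) = 0 and the unit is exactly the identity mapping.
print(np.allclose(residual_unit(x, np.zeros((8, 8))), x))  # True
# A widening unit (8 -> 10 channels) keeps the spatial size.
print(residual_unit(x, rng.standard_normal((8, 10))).shape)  # (2, 10, 16, 16)
```

The first check illustrates why stacking residual units does not by itself cause degradation: a unit can fall back to passing its input through unchanged.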

C. Bi-directional ConvLSTM

ConvLSTM [19] is the convolutional counterpart of the conventional fully connected LSTM. It was introduced to use convolution operations in the input-to-state and state-to-state transitions, since conventional LSTM networks do not consider the spatial correlation among pixels. ConvLSTM uses an input gate \(i_t\), an output gate \(o_t\), and a forget gate \(f_t\), together with a memory cell \(m_t\). The three gates act as control gates for accessing, updating, and clearing (forgetting) the memory cell information. With these definitions, the ConvLSTM operations are:

\[ \begin{aligned} i_t &= \sigma (W_{xi} * X_t + W_{hi} * H_{t-1} + b_i) \\ f_t &= \sigma (W_{xf} * X_t + W_{hf} * H_{t-1} + b_f) \\ o_t &= \sigma (W_{xo} * X_t + W_{ho} * H_{t-1} + b_o) \\ m_t &= f_t \circ m_{t-1} + i_t \circ \tanh (W_{xm} * X_t + W_{hm} * H_{t-1} + b_m) \\ H_t &= o_t \circ \tanh (m_t) \end{aligned} \]

where convolution and the Hadamard product are denoted by \(*\) and \(\circ\), respectively, and \(\sigma\) is the sigmoid function. \(X_t\) and \(H_t\) are the input and hidden-state tensors, and \(m_t\) is the memory-cell tensor. \(W_{x\cdot}\) and \(W_{h\cdot}\) (with \(\cdot \in \{i, f, o, m\}\)) are the convolution filters applied to the input and hidden states, respectively, and \(b_i\), \(b_f\), \(b_o\), and \(b_m\) are the bias terms.
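The sketch below is a small NumPy illustration of one ConvLSTM step and a bi-directional pass. It is written under stated assumptions rather than taken from the authors' code: the gate equations omit peephole terms, convolution is "same"-padded cross-correlation, the forward and backward directions use separate weights and their final hidden states are merged by channel-wise concatenation, and the length-2 input sequence (encoder skip features followed by upsampled decoder features, an arrangement we assume by analogy with BConvLSTM U-Net designs such as [28]) is hypothetical. All function names are illustrative.

```python
import numpy as np

def conv2d_same(x, K):
    """'Same'-padded 2D cross-correlation. x: (N, C_in, H, W); K: (C_out, C_in, k, k), k odd."""
    k = K.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (0, 0), (p, p), (p, p)))  # zero padding keeps H x W
    H, W = x.shape[2:]
    out = np.zeros((x.shape[0], K.shape[0], H, W))
    for dy in range(k):
        for dx in range(k):
            out += np.einsum("oc,nchw->nohw", K[:, :, dy, dx], xp[:, :, dy:dy + H, dx:dx + W])
    return out

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def convlstm_step(X_t, H_prev, m_prev, params):
    """One ConvLSTM step without peephole terms: returns (H_t, m_t)."""
    pre = {g: conv2d_same(X_t, params["Wx" + g]) + conv2d_same(H_prev, params["Wh" + g])
              + params["b" + g][None, :, None, None] for g in ("i", "f", "o", "m")}
    i_t, f_t, o_t = sigmoid(pre["i"]), sigmoid(pre["f"]), sigmoid(pre["o"])
    m_t = f_t * m_prev + i_t * np.tanh(pre["m"])  # '*' on arrays is the Hadamard product
    H_t = o_t * np.tanh(m_t)
    return H_t, m_t

def run_convlstm(seq, params, c_h):
    # seq: list of (N, C_in, H, W) tensors; states start at zero; returns the last H_t.
    n, _, h, w = seq[0].shape
    H_t, m_t = np.zeros((n, c_h, h, w)), np.zeros((n, c_h, h, w))
    for X_t in seq:
        H_t, m_t = convlstm_step(X_t, H_t, m_t, params)
    return H_t

def init_params(rng, c_in, c_h, k=3):
    p = {}
    for g in ("i", "f", "o", "m"):
        p["Wx" + g] = 0.1 * rng.standard_normal((c_h, c_in, k, k))
        p["Wh" + g] = 0.1 * rng.standard_normal((c_h, c_h, k, k))
        p["b" + g] = np.zeros(c_h)
    return p

def bconvlstm(seq, fwd, bwd, c_h):
    # Forward pass over seq, backward pass over the reversed seq, concatenated on channels.
    return np.concatenate([run_convlstm(seq, fwd, c_h), run_convlstm(seq[::-1], bwd, c_h)], axis=1)

rng = np.random.default_rng(0)
c_in, c_h = 4, 6
skip = rng.standard_normal((1, c_in, 8, 8))  # hypothetical encoder skip features
up = rng.standard_normal((1, c_in, 8, 8))    # hypothetical upsampled decoder features
out = bconvlstm([skip, up], init_params(rng, c_in, c_h), init_params(rng, c_in, c_h), c_h)
print(out.shape)  # (1, 12, 8, 8)
```

Because \(o_t \in (0, 1)\) and \(\tanh\) is bounded, every hidden-state entry stays inside \((-1, 1)\); the concatenated output has \(2 \times 6 = 12\) channels, one half per direction.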

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Arora, R., Raman, B. BUS-Net: Breast Tumour Detection Network for Ultrasound Images Using Bi-directional ConvLSTM and Dense Residual Connections. J Digit Imaging 36, 627–646 (2023). https://doi.org/10.1007/s10278-022-00733-5


DOI: https://doi.org/10.1007/s10278-022-00733-5