Abstract

Model-driven engineering (MDE) uses models as first-class artefacts during the software development lifecycle. MDE often relies on domain-specific languages (DSLs) to develop complex systems. The construction of a new DSL requires a deep understanding of a domain, whose relevant knowledge may be scattered across heterogeneous artefacts such as XML documents, (meta-)models, and ontologies. This heterogeneity hampers their reuse during (meta-)modelling processes. Under the hypothesis that reusing heterogeneous knowledge helps in building more accurate models more efficiently, in previous work we built a (meta-)modelling assistant called Extremo. Extremo represents heterogeneous information sources with a common data model, supports querying them uniformly, and allows reusing information chunks for building (meta-)models. To understand whether and how modelling assistants like Extremo help in designing a new DSL, we conducted an empirical study, which we report in this paper. In the study, participants had to build a meta-model, and we measured the accuracy of the artefacts, the perceived usability and utility, and the time to completion of the task. Interestingly, our results show that using assistance did not lead to faster completion times. However, participants using Extremo were more effective and efficient, produced meta-models with higher levels of completeness and correctness, and overall perceived the assistant as useful. The results are relevant beyond Extremo, and we discuss their implications for future modelling assistants.

Notes

UML2-MDT, www.eclipse.org/modeling/mdt.

Epsilon Exeed, http://www.eclipse.org/epsilon/.

https://www.omg.org/fdtf/projects.htm. The OMG is the standardization body behind many modelling standards such as UML, SysML, MOF or BPMN.

References

Brambilla, M., Cabot, J., Wimmer, M.: Model-Driven Software Engineering in Practice, 2nd edn. Morgan & Claypool, San Rafael (2017)

Schmidt, D.C.: Guest editor’s introduction: model-driven engineering. Computer 39(2), 25–31 (2006)

Kelly, S., Pohjonen, R.: Worst practices for domain-specific modeling. IEEE Softw. 26(4), 22–29 (2009)

Eclipse. Eclipse Code Recommenders. https://marketplace.eclipse.org/content/eclipse-code-recommenders (2020)

Mens, K., Lozano, A.: Source code-based recommendation systems. In: Recommendation Systems in Software Engineering, pp. 93–130. Springer (2014)

Steinberg, D., Budinsky, F., Paternostro, M., Merks, E.: EMF: Eclipse Modeling Framework. Addison-Wesley, Boston (2008)

Almonte, L., Guerra, E., Cantador, I., de Lara, J.: Recommender systems in model-driven engineering. Softw. Syst. Model. 21(1), 249–280 (2022)

Mussbacher, G., Combemale, B., Kienzle, J., Abrahão, S., Ali, H., Bencomo, N., Búr, M., Burgueño, L., Engels, G., Jeanjean, P., Jézéquel, J.-M., Kühne, T., Mosser, S., Sahraoui, H.A., Syriani, E., Varró, D., Weyssow, M.: Opportunities in intelligent modeling assistance. Softw. Syst. Model. 19(5), 1045–1053 (2020)

Agt-Rickauer, H., Kutsche, R.-D., Sack, H.: Automated recommendation of related model elements for domain models. In: 6th International Conference on Model-Driven Engineering and Software Development (MODELSWARD), Revised Selected Papers, volume 991 of CCIS, pp. 134–158. Springer (2018)

Dyck, A., Ganser, A., Lichter, H.: A framework for model recommenders—requirements, architecture and tool support. In: MODELSWARD, pp. 282–290 (2014)

Elkamel, A., Gzara, M., Ben-Abdallah, H.: An UML class recommender system for software design. In: 13th IEEE/ACS International Conference of Computer Systems and Applications (AICCSA), pp. 1–8. IEEE Computer Society (2016)

Burgueño, L., Clarisó, R., Gérard, S., Li, S., Cabot, J.: An NLP-Based Architecture for the Autocompletion of Partial Domain Models. In: Advanced Information Systems Engineering—33rd International Conference, CAiSE, volume 12751 of LNCS, pp. 91–106. Springer (2021)

Di Rocco, J., Di Sipio, C., Di Ruscio, D., Nguyen, P.T.: A GNN-based recommender system to assist the specification of metamodels and models. In: ACM/IEEE 24th International Conference on Model Driven Engineering Languages and Systems (MODELS), pp. 70–81 (2021)

Weyssow, M., Sahraoui, H., Syriani, E.: Recommending metamodel concepts during modeling activities with pre-trained language models. Softw. Syst. Model. (2022)

Almonte, L., Pérez-Soler, S., Guerra, E., Cantador, I., de Lara, J.: Automating the synthesis of recommender systems for modelling languages. In: SLE’21: 14th ACM SIGPLAN International Conference on Software Language Engineering, pp. 22–35. ACM (2021)

Pescador, A., de Lara, J.: DSL-maps: from requirements to design of domain-specific languages. In: Proceedings of ASE, pp. 438–443. ACM (2016)

Aquino, E.R., de Saqui-Sannes, P., Vingerhoeds, R.A.: A methodological assistant for use case diagrams. In: 8th International Conference on Model-Driven Engineering and Software Development (MODELSWARD), pp. 227–236. SciTePress (2020)

Cerqueira, T., Ramalho, F., Marinho, L.B.: A content-based approach for recommending UML sequence diagrams. In: 28th International Conference on Software Engineering and Knowledge Engineering (SEKE), pp. 644–649 (2016)

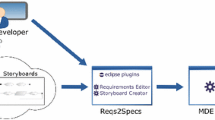

Segura, A.M., de Lara, J., Neubauer, P., Wimmer, M.: Automated modelling assistance by integrating heterogeneous information sources. Comput. Lang. Syst. Struct. 53, 90–120 (2018)

Segura, Á.M., de Lara, J.: Extremo: an Eclipse plugin for modelling and meta-modelling assistance. Sci. Comput. Program. 180, 71–80 (2019)

Stephan, M.: Towards a cognizant virtual software modeling assistant using model clones. In: 41st International Conference on Software Engineering: New Ideas and Emerging Results (NIER@ICSE), pp. 21–24. IEEE/ACM (2019)

Iovino, L., Barriga, A., Rutle, A., Heldal, R.: Model repair with quality-based reinforcement learning. J. Obj. Technol. 19(2), 17:1-17:21 (2020)

Ohrndorf, M., Pietsch, C., Kelter, U., Kehrer, T.: ReVision: a tool for history-based model repair recommendations. In: 40th International Conference on Software Engineering (ICSE), Companion Proceedings, pp. 105–108. ACM (2018)

Steimann, F., Ulke, B.: Generic model assist. In: Proceedings of MODELS, volume 8107 of Lecture Notes in Computer Science, pp. 18–34. Springer (2013)

Sen, S., Baudry, B., Vangheluwe, H.: Towards domain-specific model editors with automatic model completion. Simulation 86(2), 109–126 (2010)

Mussbacher, G., Combemale, B., Abrahão, S., Bencomo, N., Burgueño, L., Engels, G., Kienzle, J., Kühne, T., Mosser, S., Sahraoui, H.A., Weyssow, M.: Towards an assessment grid for intelligent modeling assistance. In: Proceedings of MODELS Companion, pp. 48:1–48:10. ACM (2020)

Brooke, J., et al.: SUS-a quick and dirty usability scale. Usability Evaluat. Ind. 189(194), 4–7 (1996)

Abrahão, S., Bourdeleau, F., Cheng, B.H.C., Kokaly, S., Paige, R.F., Störrle, H., Whittle, J.: User experience for model-driven engineering: Challenges and future directions. In: MODELS, pp. 229–236. IEEE Computer Society (2017)

Robillard, M.P., Walker, R.J., Zimmermann, T.: Recommendation systems for software engineering. IEEE Softw. 27(4), 80–86 (2010)

Jackson, D.: Software Abstractions-Logic, Language, and Analysis. MIT Press, Cambridge (2006)

Macedo, N., Tiago, J., Cunha, A.: A feature-based classification of model repair approaches. IEEE Trans. Software Eng. 43(7), 615–640 (2017)

Reder, A., Egyed, A.: Computing repair trees for resolving inconsistencies in design models. In: IEEE/ACM ASE, pp. 220–229. ACM (2012)

Habel, A., Sandmann, C.: Graph repair by graph programs. In: STAF Workshops, volume 11176 of Lecture Notes in Computer Science, pp. 431–446. Springer (2018)

Dyck, A., Ganser, A., Lichter, H.: Enabling model recommenders for command-enabled editors. In: MDEBE, pp. 12–21 (2013)

Dyck, A., Ganser, A., Lichter, H.: On designing recommenders for graphical domain modeling environments. In: MODELSWARD, pp. 291–299 (2014)

Hajiyev, E., Verbaere, M., de Moor, O., De Volder, K.: Codequest: querying source code with datalog. In: Proceedings of OOPSLA 2005, pp. 102–103. ACM (2005)

Linstead, E., Bajracharya, S.K., Ngo, T.C., Rigor, P., Videira Lopes, C., Baldi, P.: Sourcerer: mining and searching internet-scale software repositories. Data Min. Knowl. Discov. 18(2), 300–336 (2009)

Mendieta, R., de la Vara, J.L., Llorens, J., Álvarez-Rodríguez, J.: Towards effective SysML model reuse. In: Proceedings of MODELSWARD, pp. 536–541. SCITEPRESS (2017)

Álvarez Rodríguez, J.M., Mendieta, R., de la Vara, J.L., Fraga, A., Morillo, J.L.: Enabling system artefact exchange and selection through a linked data layer. J. UCS 24(11), 1536–1560 (2018)

Lucrédio, D., de Mattos Fortes, R.P., Whittle, J.: MOOGLE: a metamodel-based model search engine. Softw. Syst. Model. 11(2), 183–208 (2012)

Hernández López, J.A., Cuadrado, J.S.: An efficient and scalable search engine for models. Softw. Syst. Model. (2021)

Bislimovska, B., Bozzon, A., Brambilla, M., Fraternali, P.: Textual and content-based search in repositories of web application models. TWEB 8(2), 1–11 (2014)

Dijkman, R.M., Dumas, M., van Dongen, B.F., Käärik, R., Mendling, J.: Similarity of business process models: metrics and evaluation. Inf. Syst. 36(2), 498–516 (2011)

Paige, R.F., Kolovos, D.S., Rose, L.M., Drivalos, N., Polack, F.A.C.: The design of a conceptual framework and technical infrastructure for model management language engineering. In: ICECCS, pp. 162–171. IEEE Computer Society (2009)

Carver, J.C., Syriani, E., Gray, J.: Assessing the frequency of empirical evaluation in software modeling research. In: 1st Workshop on Experiences and Empirical Studies in Software Modelling (2011)

Whittle, J., Hutchinson, J.E., Rouncefield, M., Burden, H., Heldal, R.: A taxonomy of tool-related issues affecting the adoption of model-driven engineering. Software Syst. Model. 16(2), 313–331 (2017)

Abrahão, S., Iborra, E., Vanderdonckt, J.: Usability evaluation of user interfaces generated with a model-driven architecture tool. In: Maturing Usability—Quality in Software, Interaction and Value, HCI Series, pp. 3–32. Springer (2008)

Wüest, D., Seyff, N., Glinz, M.: Flexisketch: a lightweight sketching and metamodeling approach for end-users. Softw. Syst. Model. 18(2), 1513–1541 (2019)

Safdar, S.A., Iqbal, M.Z., Khan, M.U.: Empirical evaluation of UML modeling tools-a controlled experiment. In: 11th European Conference on Modelling Foundations and Applications (ECMFA), pp. 33–44 (2015)

Bobkowska, A., Reszke, K.: Usability of UML modeling tools. In: Conference on Software Engineering: Evolution and Emerging Technologies, pp. 75–86 (2005)

Ren, R., Castro, J.W., Santos, A., Pérez-Soler, S., Acuña, S.T., de Lara, J.: Collaborative modelling: Chatbots or on-line tools? An experimental study. In: Proceedings of EASE, pp. 260–269. ACM (2020)

Pérez-Soler, S., Guerra, E., de Lara, J.: Collaborative modeling and group decision making using chatbots in social networks. IEEE Softw. 35(6), 48–54 (2018)

Condori-Fernández, N., Panach, J.I., Baars, A.I., Vos, T.E.J., Pastor, O.: An empirical approach for evaluating the usability of model-driven tools. Sci. Comput. Program. 78(11), 2245–2258 (2013)

Tolvanen, J.-P., Kelly, S.: Model-driven development challenges and solutions—experiences with domain-specific modelling in industry. In: Proceedings of MODELSWARD, pp. 711–719. SciTePress (2016)

Karna, J., Tolvanen, J.-P., Kelly, S.: Evaluating the use of domain-specific modeling in practice. In: Proceedings of the 9th OOPSLA Workshop on Domain-Specific Modeling (2009)

Buezas, N., Guerra, E., de Lara, J., Martín, J., Monforte, M., Mori, F., Ogallar, E., Pérez, O., Cuadrado, J.S.: Umbra designer: Graphical modelling for telephony services. In: Proceedings of ECMFA, volume 7949 of Lecture Notes in Computer Science, pp. 179–191. Springer (2013)

Hutchinson, J., Whittle, J., Rouncefield, M., Kristoffersen, S.: Empirical Assessment of MDE in Industry. In: ICSE, pp. 471–480. ACM (2011)

Cuadrado, J.S., Cánovas Izquierdo, J.L., Molina, J.G.: Applying model-driven engineering in small software enterprises. Sci. Comput. Program. 89, 176–198 (2014)

Green, T.R.G.: Cognitive dimensions of notations. In: People and Computers V, pp. 443–460. Cambridge University Press, Cambridge (1989)

Moody, D.L.: The physics of notations: Toward a scientific basis for constructing visual notations in software engineering. IEEE Trans. Software Eng. 35(6), 756–779 (2009)

Granada, D., Vara, J.M., Bollati, V.A., Marcos, E.: Enabling the development of cognitive effective visual DSLs. In: MODELS, volume 8767 of Lecture Notes in Computer Science, pp. 535–551. Springer (2014)

Barisic, A., Amaral, V., Goulão, M.: Usability driven DSL development with USE-ME. Comput. Lang. Syst. Struct. 51, 118–157 (2018)

Granada, D., Vara, J.M., Brambilla, M., Bollati, V.A., Marcos, E.: Analysing the cognitive effectiveness of the WebML visual notation. Softw. Syst. Model. 16(1), 195–227 (2017)

Atkinson, C., Kennel, B., Goß, B.: The level-agnostic modeling language. In: 3rd International Conference on Software Language Engineering (SLE), pp. 266–275. Springer (2011)

Miller, G.A.: Wordnet: a lexical database for English. Commun. ACM 38(11), 39–41 (1995)

Ko, A.J., LaToza, T.D., Burnett, M.M.: A practical guide to controlled experiments of software engineering tools with human participants. Empir. Softw. Eng. 20(1), 110–141 (2015)

Salman, I., Misirli, A.T., Juristo, N.: Are students representatives of professionals in software engineering experiments? In: Proceedings of the 37th International Conference on Software Engineering - Volume 1, ICSE ’15, pp. 666–676. IEEE (2015)

Falessi, D., Juristo, N., Wohlin, C., Turhan, B., Münch, J., Jedlitschka, A., Oivo, M.: Empirical software engineering experts on the use of students and professionals in experiments. Empirical Softw. Eng. 23(1), 452–489 (2018)

Feldt, R., Zimmermann, T., Bergersen, G.R., Falessi, D., Jedlitschka, A., Juristo, N., Münch, J., Oivo, M., Runeson, P., Shepperd, M., Sjøberg, D.I., Turhan, B.: Four commentaries on the use of students and professionals in empirical software engineering experiments. Empirical Softw. Eng. 23(6), 3801–3820 (2018)

Feitelson, D.G.: Using students as experimental subjects in software engineering research—a review and discussion of the evidence (2015). https://arxiv.org/abs/1512.08409

Likert, R.A.: A technique for measurement of attitudes. Arch. Psychol. 22 (1932)

Wohlin, C., Runeson, P., Höst, M., Ohlsson, M.C., Regnell, B., Wesslén, A.: Experimentation in Software Engineering. Springer, Berlin (2012)

Bangor, A., Kortum, P., Miller, J.: Determining what individual SUS scores mean: adding an adjective rating scale. J. Usability Stud. 4(3), 114–123 (2009)

Singer, J., Vinson, N.G.: Ethical issues in empirical studies of software engineering. IEEE Trans. Softw. Eng. 28(12), 1171–1180 (2002)

Vinson, N.G., Singer, J.: A Practical Guide to Ethical Research Involving Humans, pp. 229–256. Springer, Berlin (2008)

Kitchenham, B.A., Pfleeger, S.L.: Personal Opinion Surveys, pp. 63–92. Springer, Berlin (2008)

Powers, D.: Evaluation: From precision, recall and F-measure to ROC, informedness, markedness and correlation. J. Mach. Learn. Technol. 2, 37–63 (2007)

Saito, T., Rehmsmeier, M.: The precision-recall plot is more informative than the ROC plot when evaluating binary classifiers on imbalanced datasets. PLOS ONE 10(3), 1–21 (2015)

Van Rijsbergen, C.J.: Information Retrieval, 2nd edn. Butterworth-Heinemann, Oxford (1979)

Forman, G., Scholz, M.: Apples-to-apples in cross-validation studies: Pitfalls in classifier performance measurement. SIGKDD Explor. Newsl. 12(1), 49–57 (2010)

Lewis, J.R., Sauro, J.: The factor structure of the system usability scale. In: 1st International Conference on Human Centered Design, HCD, pp. 94–103. Springer, Berlin (2009)

Lewis, J.R., Brown, J., Mayes, D.K.: Psychometric evaluation of the EMO and the SUS in the context of a large-sample unmoderated usability study. Int. J. Human-Comput. Interact. 31(8), 545–553 (2015)

Sauro, J., Lewis, J.R.: When designing usability questionnaires, does it hurt to be positive? In: SIGCHI Conference on Human Factors in Computing Systems, CHI, pp. 2215–2224. ACM (2011)

Lewis, J.R., Utesch, B.S., Maher, D.E.: Measuring perceived usability: the SUS, UMUX-LITE, and AltUsability. Int. J. Human-Computer Interact. 31(8), 496–505 (2015)

Cohen, J.: Statistical Power Analysis for the Behavioral Sciences. Routledge, London (2013)

Banerjee, A., Chitnis, U.B., Jadhav, S.L., Bhawalkar, J.S., Chaudhury, S.: Hypothesis testing, type I and type II errors. Ind. Psychiatry J. 18, 127–31 (2009)

Nickerson, R.: Null hypothesis significance testing: a review of an old and continuing controversy. Psychol. Methods 5, 241–301 (2000)

McGraw, K., Wong, S.C.P.: A common language effect size measure. Psychol. Bull. 111, 361–365 (1992)

Cohen, J.: Statistical Power Analysis for the Behavioral Sciences, 2nd edn. Lawrence Erlbaum Associates, Hillsdale (1988)

Burgueño, L., Cabot, J., Li, S., Gérard, S.: A generic LSTM neural network architecture to infer heterogeneous model transformations. Softw. Syst. Model. 21(1), 139–156 (2022)

Hernández López, J.A., Cánovas Izquierdo, J.L., Cuadrado, J.S.: Modelset: a dataset for machine learning in model-driven engineering. Softw. Syst. Model. (2022)

Wohlin, C., Runeson, P., Höst, M., Ohlsson, M.C., Regnell, B., Wesslén, A.: Experimentation in Software Engineering: An Introduction. Kluwer Academic Publishers, Norwell (2000)

Colquhoun, D.: An investigation of the false discovery rate and the misinterpretation of p-values. R. Soc. Open Sci. 1(3), 140216 (2014)

Forstmeier, W., Wagenmakers, E.-J., Parker, T.H.: Detecting and avoiding likely false-positive findings—a practical guide. Biol. Rev. Camb. Philos. Soc. 92(4), 1941–1968 (2017)

Brooke, J.: SUS: a retrospective. J. Usability Stud. 8(2), 29–40 (2013)

Cronbach, L.J.: Coefficient alpha and the internal structure of tests. Psychometrika 16(3), 297–334 (1951)

Acknowledgements

We would like to thank the reviewers for their valuable comments. This work was supported by the Ministry of Education of Spain (FPU Grant FPU13/02698 and stay EST17/00803); the Spanish Ministry of Science and Innovation (PID2021-122270OB-I00); the R&D programme of the Madrid Region (P2018/TCS-4314); and the Austrian Federal Ministry for Digital and Economic Affairs and the National Foundation for Research, Technology and Development (CDG).

Additional information

Communicated by Lionel Briand.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

A Appendix: Evaluation material

This appendix contains the documents provided to the participants in our evaluation. Section A.1 shows a condensed version of the Informed Consent to participate in the user study. Section A.2 contains the Demographic Questionnaire used in the survey. Section A.3 contains a condensed version of the Description of the Task provided to the subjects of the evaluation. Section A.4 contains the Opinion Questionnaire used in the control group. Finally, Sect. A.5 contains the following documents provided to the participants in the evaluation group: the General Questionnaire (according to the System Usability Scale) shown in Table 12 and the Specific Questionnaire shown in Table 13.

A.1 Informed consent

The Informed Consent had to be signed by all the participants in order to cover the basic ethical aspects [74, 75] considered by the project.

A.2 Demographic questionnaire

The Demographic Questionnaire contained 11 items and was handed out to both groups at the beginning of the experiment.

A.3 Description of the task

The Description of the Task included some statements common to both groups:

Some statements presented to the subjects of the control group:

Some statements presented to the subjects of the experimental group:

A.4 Control group questionnaire

The Opinion Questionnaire contained 6 items and was handed out to the control group after they had performed the task. In addition, subjects had the option to provide a rationale for each answer. For the sake of brevity, we omitted the space for the rationale in this appendix.

A.5 Experimental group questionnaires

The General Questionnaire and the Specific Questionnaire were answered by the experimental group. They measured the usability of the assistant as perceived by language engineers during the modelling task, and the usefulness of Extremo's features. In both cases, all the questions were mandatory.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Mora Segura, Á., de Lara, J. & Wimmer, M. Modelling assistants based on information reuse: a user evaluation for language engineering. Softw Syst Model 23, 57–84 (2024). https://doi.org/10.1007/s10270-023-01094-5

DOI: https://doi.org/10.1007/s10270-023-01094-5