Abstract

To cope with the increased complexity of systems, models are used to capture what is considered the essence of a system. Such models are typically represented as a graph, which is queried to gain insight into the modelled system. Often, the results of these queries need to be adjusted according to updated requirements and are therefore a subject of maintenance activities. It is thus necessary to support writing model queries with adequate languages. However, in order to stay meaningful, the analysis results need to be refreshed as soon as the underlying models change. Therefore, a good execution speed is mandatory in order to cope with frequent model changes. In this paper, we propose a benchmark to assess model query technologies in the presence of model change sequences in the domain of social media. We present solutions to this benchmark in a variety of 11 different tools and compare them with respect to explicitness of incrementalization, asymptotic complexity and performance.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Models are a highly valuable asset in any engineering process as they capture the knowledge of a system in a formal abstraction. This abstraction allows to reason on properties in order to obtain insights on the underlying physical system through analysis.

These insights need to be refreshed as soon as the models of the system change in order to stay meaningful. However, for large systems it is often not viable to recalculate the entire model analysis for every change. Rather, it is desirable to propagate these changes to the analysis results incrementally, i.e. only recalculate those parts of the analysis results that are affected by a given change.

Requirements regarding these insights often change over time. This makes it very important to express such analyses in a maintainable and understandable form. Recently, multiple model query technologies [8, 9, 18, 46, 65] have been proposed to aid this problem by deriving an incremental change propagation from a declarative specification. Further, of course one could also use existing and established non-incremental query technology, particularly if some parts of the analysis are more complex and may not be supported by tools that target incremental change propagation. Finally, it is also an option to translate the model into dedicated analysis methods to reuse query technology not based on models. Given this plethora of options, it is difficult to estimate the differences and find the trade-offs between these approaches in terms of understandability, conciseness, efficiency and others. Does the tool fit into my technology space? Is it useful to rely on the incrementalization of a tool or is it better—at least performance-wise—to implement change propagation explicitly? How long does it take to recover from an application crash? How much development effort will be necessary to implement change propagation? How does it scale? Can I speed it up by adding more CPU cores? Is the tool extensible or can it happen that my analysis is not supported at some point?Footnote 1

To aid this comparison and assess how current modelling technologies are capable of offering a concise and understandable language for model analysis, yet still offer a good performance in the presence of frequent model changes, we propose the “Social Media” benchmark. In this benchmark, two queries should be formulated that analyse a model of a social media network. In social networks, new \(\textsf {Post}\)s , \(\textsf {Comment}\)s and likes arrive at a very high frequency and thus cause analyses of the entire network to invalidate quickly. In our benchmark, the analyses shall find the most influential posts and comments, according to selected criteria. While the first query is rather simple in the sense that it can easily be solved with standard query operators, the second query is more complex as graph algorithms are used that are not directly supported by query technologies.

Graph queries are difficult from an incrementalization point of view [31] as they capture a rich family of algorithms and they are often described in an imperative pseudo-code that usually utilizes state. Using a generic incrementalization system, this often spans a state space that is too large for an incrementalization to be efficient. To aid this situation, dynamic algorithms are known for a number of graph problems that offer strategies to propagate graph changes efficiently, often with a radically different approach than how with the computation was performed in the batch (or initial) scenario. However, there is no comparison yet how and how efficient such dynamic algorithms can be included into incremental query technologies.

We collected 11 implementations of this benchmark that cover most of the possible approaches mentioned above and have mostly been created by authors or active developers of the respective tools. Therefore, we claim that we can compare the tools through the solutions for this benchmark. The tools cover a wide area of model query technology and database management systems; thus, the analysis of the solutions using those tools allows us to reason on the previously mentioned questions.

The benchmark was originally proposed at the Transformation Tool Contest (TTC) 2018 as live contest [51]. At the TTC, several solutions have been submitted [12, 17, 34, 49, 78]. After the TTC, we invited further researchers working in incremental query technology to provide solutions in their favourite tools. In this paper, we present all of these solutions and compare them with respect to their features, complexity of change propagation and overall performance.

The remainder of this paper is structured as follows: Sect. 2 presents the benchmark and the queries that it consists of. Section 3 explains the solutions. Section 4 compares the solutions with respect to the declarativeness of the query language, the used data model, the explicitness of the incrementalization, the durability, support for parallelism and the asymptotic complexity of change propagations. Section 5 presents experimental performance results and their analysis. Section 6 discusses related work, and Sect. 7 concludes the paper.

2 The social media benchmark

In this section, we present the benchmark which we use to compare existing incrementalization approaches. First, Sect. 2.1 explains the metamodel that we use to model a social network graph, and then, Sect. 2.2 presents the two queries used in the benchmark. We describe the change sequences in Sect. 2.3 and the phases of the benchmark in Sect. 2.4.

2.1 Metamodel and change sequences

In this benchmark, we use the data from the 2016 DEBS Grand Challenge,Footnote 2 adapted from the LDBC Social Network Benchmark [5, 30] and the SIGMOD 2014 Programming Contest [27]. For this version of the benchmark, we created an Ecore metamodel to describe the social network, translated the series of events in the original data sources to models and change sequences and manually tuned the obtained change sequences.Footnote 3

The metamodel of the social network is depicted in Fig. 1. The social network consists of \(\textsf {User}\)s, \(\textsf {Post}\)s and \(\textsf {Comment}\)s. \(\textsf {User}\)s may have \(\textsf {friends}\), which is a unidirectional edge (i.e. it behaves as a symmetric relationship). \(\textsf {Submission}\)s form a tree with a \(\textsf {Post}\) in its root and \(\textsf {Comment}\)s as the rest of its nodes. \(\textsf {User}\)s may like \(\textsf {Comment}\)s (\(\textsf {likes}\) edge).

2.2 Queries

In the scope of the proposed benchmark, we focus on two model queries. The first query (Q1) is rather easy and is expected to be directly supported by the tools. It shall return the most controversial \(\textsf {Post}\)s in the social media network. The second query (Q2) is more sophisticated, and we expected it not to be directly supported by tools and force the solutions into case-specific extensions. It shall return the most influential \(\textsf {Comment}\)s. The results of both queries are concatenated to strings in order to use them for automated correctness checks.

2.2.1 Query 1: Most controversial posts

We consider a \(\textsf {Post}\) as controversial, if it starts a debate through its \(\textsf {Comment}\)s. For this, we assign a score of 10 for each \(\textsf {Comment}\) that belongs to a \(\textsf {Post}\). Hereby, we consider a \(\textsf {Comment}\) belonging to a \(\textsf {Post}\), if it is a reply to either (1) the \(\textsf {Post}\) itself or (2) another \(\textsf {Comment}\) that already belongs to the \(\textsf {Post}\). In addition, we also value if \(\textsf {User}\)s liked \(\textsf {Comment}\)s, so we additionally assign a score of 1 for each \(\textsf {User}\) that has liked a \(\textsf {Comment}\) (\(\textsf {User}\)s are counted per \(\textsf {Comment}\) liked, i.e. a \(\textsf {User}\) can contribute multiple times to the overall score). In short, the score of a \(\textsf {Post}\) is calculated by taking all \(\textsf {Comment}\)s which are in the \(\textsf {Submission}\) tree rooted in the \(\textsf {Post}\), and scoring each of them as the 10 plus the number of \(\textsf {User}\)s that liked the \(\textsf {Comment}\), then summing up these scores.

The goal of the query is to find the three \(\textsf {Post}\)s with the highest score. Ties are broken by timestamps, i.e. more recent \(\textsf {Post}\)s should take precedence over older \(\textsf {Post}\)s. The result string of this query is a concatenation of the \(\textsf {Post}\)s ids, separated by the character | (Fig. 2).

2.2.2 Query 2: Most influential comments

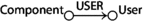

In this query, we aim to find \(\textsf {Comment}\)s that are liked by groups of \(\textsf {User}\)s. We identify groups through the friendship relation. Hereby, \(\textsf {User}\)s that liked a specific \(\textsf {Comment}\) form an induced subgraph where two \(\textsf {User}\)s are connected if they are friends (but still, only \(\textsf {User}\)s who have liked the \(\textsf {Comment}\) are considered). The goal of the second query is to find connected components in that graph. We assign a score to each \(\textsf {Comment}\) which is the sum of its squared component sizes.

Similarly to the previous query, we aim to find the three \(\textsf {Comment}\)s with the highest score. Ties are broken by timestamps, i.e. more recent \(\textsf {Comment}\)s should take precedence over older \(\textsf {Comment}\)s. The result string is again a concatenation of the \(\textsf {Comment}\) IDs, separated by the character | (Fig. 3).

2.3 Change sequences

To measure the incremental performance of solutions, the benchmark uses generated change sequences. The changes are available in the form of models. An excerpt of the change metamodel is depicted in Fig. 4. There are classes for each elementary change operation that distinguish between the type of a feature, whether it is an attribute, association or composition change. Subclasses further specify the kind of operation, i.e. whether elements are added, removed or changed. In these concrete classes, the added, deleted or assigned items are included.Footnote 4 The change metamodel also supports change transactions where a source change implies some other changes, for example, setting opposite references.

The elementary changes can be categorized into the following five change types:

-

A new \(\textsf {User}\) is added

-

A new \(\textsf {Post}\) is added

-

A new \(\textsf {Comment}\) is added as a reply to an existing \(\textsf {Submission}\)

-

A \(\textsf {Comment}\) is liked

-

Two existing \(\textsf {User}\)s become friends

The changes are always additive, i.e. nodes or edges are never deleted. During the contest, these change sequences were made available in NMF and EMF. However, solutions are also allowed to transform the change models into their own, internal change representation. In such case, the transformation of the change representation is excluded from the time measurements.

An example of an update consisting of five elementary changes is depicted in Fig. 5.

2.4 Benchmark phases

The benchmark consists of the following execution phases:

-

1.

Initialization: Setup modelling framework.

-

2.

Loading: Load initial models.

-

3.

Initial evaluation: Compute selected query for initial model.

-

4.

Updates: Change sequences are applied to the model. Each change sequence consists of several elementary change operations. After each change sequence, the query result must be consistent with the changed source models, either by entirely creating the view from scratch (batch evaluation) or by propagating changes to the view result (incremental evaluation).

Solutions are mainly competing on their update performance, but are also expected to keep the times of the loading and initial evaluation at a reasonable level.

3 Solutions

In this section, we present the solutions developed for the Social Media benchmark from Sect. 2. We present the following solutions:

-

Modelling tools and graph databases running in a managed runtime (JVMFootnote 5 or CLRFootnote 6):

-

A solution using the plain .NET query API and NMF to represent the models in memory (Sect. 3.2) and a solution using the incrementalization system of NMF (Sect. 3.3).

-

Multiple solutions using the model management system Hawk (Sect. 3.4).

-

A solution using the incremental reference attribute grammar tool JastAdd (Sect. 3.5).

-

A solution using the model transformation language YAMTL (Sect. 3.6), with a batch variant and two incremental variants (implicit/explicit incrementality).

-

A batch solution using the ATL model transformation language with the EMFTVM batch execution engine (Sect. 3.7).

-

A solution written in Xtend (Sect. 3.8).

-

Two incremental solutions based on AOF (Sect. 3.9): (1) the AOF solution with Xtend surface language, (2) the ATL Incremental solution with ATL surface language.

-

Batch and incremental solutions using the Neo4j graph DBMS (Sects. 3.10–3.11).

-

-

Tools using relational or matrix-based representations, running on native runtimes:

-

Batch and incremental solutions using the PostgreSQL relational DBMS (Sects. 3.12–3.13).

-

Batch and incremental solutions formulated in linear algebra using the GraphBLAS API and the SuiteSparse:GraphBLAS library (Sects. 3.14–3.15), implemented in C++.

-

A solution using the Differential Dataflow programming model implemented in Rust (Sect. 3.16).

-

In broad terms, there have been recurrent approaches for solving the two queries. To help reduce the length of the solution descriptions, a first subsection will provide a broad categorization of the approaches followed by the various solutions for each query. This will be followed by a subsection for each solution: it will start with a description of the used tool, followed by detailed explanations on how they implemented the queries. Given that most solutions have been developed by the tool authors or expert developers, we assume that the solutions represent the best or close to optimal solution possible for each tool.

Solutions created during the contest were described in the proceedings of the event [35]. Preliminary version of the Neo4j and GraphBLAS solutions was discussed in [29] and [28], respectively.

3.1 Common solution approaches

Further examination of the various solutions showed that several common patterns were recurrent through the approaches chosen to solve the two queries. In this section, we will provide a broad classification that will be used in the solution descriptions, in order to allow them to focus on their technology-specific aspects.

3.1.1 Query 1: Most controversial posts

The solutions for this query can largely be characterized across two dimensions: whether they were incremental in their maintenance of the scores, and how they maintained the set of the top three elements. Table 1 summarizes this classification.

Regarding the first dimension, we acknowledge similarly to Giese and Wagner [36] that there can be different degrees of incrementality in a transformation.Footnote 7 Specifically, we consider these three variants:

-

Non-incremental or batch solutions, which re-compute the scores from scratch after each change.

-

Partially incremental solutions, which re-compute scores of \(\textsf {Post}\)s impacted by changes from scratch.

-

Fully incremental solutions that update the scores of the \(\textsf {Post}\)s impacted by changes as needed, without recomputing them from scratch (for instance, a new like would simply increase the previous score by 1).

In regard to the second dimension, four broad variants were found:

-

Full sorting solutions, which use a standard sorting algorithm (typically the one in the standard libraries for the chosen language) to sort the elements by score, incurring a cost of \(O(n \, \log \, n)\).

-

Incremental sorting solutions, which use a dedicated data structure to maintain the elements sorted as they appear and as their individual scores change (e.g. a balanced search tree).

-

Offline top-x solutions, which keep in memory the full list of scores and do a single pass over the elements, maintaining only the top-x elements in an auxiliary list. This has a reduced cost of O(n).

-

Online top-x solutions, which only keep in memory the \(\textsf {Post}\)s with the top-x scores at any time. This effectively has the same sorting cost of O(n), but reduces the amount of memory needed.

Note that the online top-x is only feasible due to the fact that the possible changes mentioned in Sect. 2.3 never remove likes or delete comments: this means that scores are monotonically increasing. This optimization will typically be one that a generic “top-x” incremental operator would not do, as the sorting key could go down as well as up in value in the general case.

3.1.2 Query 2: Most influential comments

The solutions can be characterized across three dimensions: whether they maintained the set of connected components in the graph incrementally, the approach used to compute the set of connected components, and how they sorted the scored results. The general classification is summarized in Table 2.

For the first dimension of incrementality, this is generally easier than in the previous solution, as it is simply whether after each change, they update the set of connected components, or re-compute it. For the second dimension, five broad approaches were followed:

-

The most popular approach was to reuse Tarjan’s algorithm for computing the set of connected components in a graph [84], which has a worst-case performance of \(O(|V| + |E|)\).

-

The next most popular approach was a naive recomputation of the component that each node belonged to after each change, by using breadth-first or depth-first traversals from that node.

-

Some solutions took advantage of the fact that the changes never remove links, using a simpler union-find data structure and using the union operation whenever a new edge was added. In a way, this is similar to how some solutions of query 1 used this “monotonically growing” graph to simplify the way to maintain the top-x elements.

-

There were a few individual solutions that used specialized algorithms, such as FastSV [88], or label propagation.

The third dimension is the approach followed to compute the top scoring results, which uses the same four broad variants as in Sect. 3.1.1.

3.2 Reference solution: C# query syntax

As reference, we use a solution using NMF [45, 50] for the model representation and the standard .NET collection operators through the C# query syntax. During the TTC and throughout the remainder of this paper, this solution is used as a reference in terms of performance and understandability as it represents a solution if one just uses what mainstream programming languages (in this case C#) can provide without a dedicated incremental model query technology.

3.2.1 Tool description

The query syntax and the underlying collection operators are features built into the C# programming language and actively used by millions of developers.

3.2.2 Query 1

The reference solution for Q1 is depicted in Listing 1. It is a full sorting batch implementation. Lines 3–4 of this listing compute the score of a given \(\textsf {Post}\) by summing up the scores for the \(\textsf {Comment}\)s of a given \(\textsf {Post}\). To get all \(\textsf {Comment}\)s of a \(\textsf {Post}\), the solution simply uses the Descendants operation available to any NMF model element and combines it with a simple type filter. This implicitly utilizes the composition hierarchy to obtain a collection of all elements contained somewhere in the given \(\textsf {Post}\). Line 5 simply states that the collection of \(\textsf {Post}\)s should be ordered by the score and the timestamp, before Line 6 specifies that we are only interested in the first three entries.

3.2.3 Query 2

The reference solution for Q2 is depicted in Listing 2, a full sorting batch implementation using Tarjan’s algorithm. It is very similar to Listing 1 in its overall structure except for the slightly more complex score calculation due to the required computation of connected components. For this, we are using the class Layering, an implementation of Tarjan’s algorithm [84]. This implementation requires specifying the underlying graph through its nodes (for each \(\textsf {Comment}\), this is the set of \(\textsf {User}\)s that have liked the \(\textsf {Comment}\)) and a function obtaining incident nodes of a given node (in this case the friends of a given \(\textsf {User}\) that have also liked the \(\textsf {Comment}\)). The result of the scoring computation is then again ordered and the IDs of the first three elements are returned.

3.3 NMF incremental

3.3.1 Tool description

NMF Expressions [46, 52] is an incrementalization system that uses the feature of the C# language to compile lambda expressions into models of the code, instead of machine code. These models are then used to derive a dynamic dependency graph (DDG) from a given expression and observe changes. These changes originate from elementary update notifications and are propagated through the dependency graph.

Based on the underlying formalization as a categorial functor, NMF Expressions is able to include custom incrementalizations of functions and includes a library of such manually incrementalized operators, including most of the Standard Query Operators (SQO).Footnote 8

3.3.2 Query 1

While NMF Expressions includes an incrementalization of most SQOs, the Take method used in the reference solution for Q1 is not supported, same as any other query operators of the SQO that deal with indices. The reason for this is that it is costly to find out the index of an element in, for example, a filtered, unsorted list given the index in the source collection. This always requires a linear scan; meanwhile, the SQO implementations generally try to propagate changes in constant or near constant time (logarithmic effort for updating sorted lists). Therefore, the fact that Take is not supported is simply because it cannot be implemented efficiently, at least not in the general case.

However, we identified top-x queries, i.e. analyses that sort elements for a given criteria and then report on a small number of elements with the best scores, as a rather common pattern. Therefore, we added dedicated support for this kind of analysis operators into NMF. This operator is called TopX. It is essentially a combination of an incremental sort (using balanced binary search trees) and a simple poll for the first x elements upon any change of the balanced binary search tree, assuming that x is small (in comparison with the size of the search tree). The computation of the scores is, however, using the incrementalization system and fully incremental.

The solution to Q1 is shown in Listing 3. We simply calculate the top-3 \(\textsf {Post}\)s where the score of a \(\textsf {Post}\) is calculated as a tuple of the score and the timestamp in order to break ties. The result of the lambda expression in Line 2 is an array of tuples of the \(\textsf {Post}\)s and their scores (actual score and timestamp). Lines 3 to 6 determine how this tuple is created: we iterate over all \(\textsf {Comment}\)s in a \(\textsf {Post}\) and, for each, sum up 10 plus the number of \(\textsf {User}\)s that have liked the \(\textsf {Comment}\).

Lines 1 and 7 surround this lambda expression with a call to NMF Expressions to obtain an incrementalization of this analysis. With that call, we tell NMF Expressions to create a DDG for us. The return value of this function is the root node of this DDG. This node implements a generic interface INotifyValue that provides the current analysis result as well as an event to notify clients when the analysis result changes. In the benchmark solution, we do not make use of this event but repeatedly query the current value of the DDG node. This call is fast, because each node in the DDG always references its current value.

3.3.3 Query 2

The solution for Q2 is very similar to the solution of Q1, except for the fact that for each \(\textsf {Comment}\), it involves finding connected components in a graph spanned by \(\textsf {User}\)s that liked the \(\textsf {Comment}\) and their friendship relations. There is no incremental implementation for finding connected components available that is built into NMF.

From an algorithmic point of view, the incrementalization of the connected components will be rather simple: we cache the current set of connected components and re-compute whenever an edge is added to the graph between two nodes of different components. This incremental algorithm can be isolated into a class ConnectedComponents that is theoretically reusable in different contexts.

With this algorithmics class, we can solve Q2 as in Listing 4. Similar to Q1, the solution is an incremental solution with incrementally maintained full sort and an incremental version of Tarjan’s algorithm. In Lines 1 and 2, we create a function that, given a \(\textsf {Comment}\) and a \(\textsf {User}\), creates the collection of \(\textsf {User}\)s who (1) are friends with the given \(\textsf {User}\) and (2) like the \(\textsf {Comment}\). Because we do not care about changes of the incident function, the function to get the connected \(\textsf {User}\)s is used as compiled code, using the Func class. This is slightly more efficient than the format of lambda expressions that NMF Expressions can use for the incrementalization. However, NMF Expressions currently has some problems to integrate compiled lambda expressions, so we need to specify this function separately, outside the scope of NMF Expressions.

In Lines 4 and 12, we frame the actual analysis with NMF Expressions, allowing it to create the DDG for the inner analysis that is in Lines 5 to 11. In particular, we first iterate all elements in the social network model and filter for \(\textsf {Comment}\)s in Line 5. From these, we pick the topmost elements according to the tuple of scores and timestamps, similar to the solution of Q1. To calculate the score of a \(\textsf {Post}\), we simply run the analysis of connected components where the incident nodes of a given \(\textsf {User}\) are the subset of his friends that also liked that \(\textsf {Comment}\) (Lines 7 to 9). Given these connected components, we calculate the sum of the squared sizes (Line 10) and break ties using the timestamps (Line 11).

3.3.4 Transactions and parallelism

NMF Expressions has some support for transactions and parallelism. The support for transactions means that a DDG node is only processed if all of its dependencies have been processed. Because each transaction may invalidate a different set of DDG nodes, this implies that changes in the DDG need to be processed in a two-pass fashion: in the first pass, the set of potentially affected DDG nodes is calculated, while in the second pass, the changes are actually propagated. Because the transactional behaviour guarantees that each DDG node is only updated at most once in each transaction, the overhead of two passes may be saved. However, whether or not this is the case largely depends on the analysis and the change sequence.

Q1 cannot profit from transactions at all, because whether the changes to any DDG node come at once or one after another does not matter. For Q2, this is slightly different because we are recalculating the connected components in multiple cases. If a change sequence contained multiple events that would cause to re-compute the connected components for a \(\textsf {Comment}\), this calculation is only needed once if the changes are propagated in a transaction.

The transactional support in NMF Expressions is very easy for a developer to use: all that needs to be done is to put the changes inside a transaction. Because in the scope of the benchmark, these changes come in a dedicated change sequence object, we just need to wrap the application of such a change sequence in a transaction as done in Listing 5.

Lastly, NMF Expressions also allows to propagate the changes within such a transaction in parallel. This is done by changing the execution engine implementation as depicted in Listing 6.

The parallel change propagation then allows changes within a transaction to be propagated in parallel on different threads, synchronizing at each DDG node.

3.4 Hawk

3.4.1 Tool description

Eclipse Hawk is a tool to manage models that have been fragmented (e.g. for versioning purposes) by incrementally indexing the various connected fragments into a common graph database [8]. Hawk can watch over a local folder or a version control system and update the graph whenever the model files change. For this case study, Hawk was configured with the ability to index EMF models, maintain a graph database using one of three backends (Neo4j, SQLite, or Greycat [42]) and run queries in a dialect of the Epsilon Object Language (EOL) [61]. Greycat implements a graph-oriented data model on top of existing key-value stores, such as LevelDB or RocksDB. For the present work, LevelDB was chosen as the underlying key-value store.

Further, Hawk provides the concept of “derived attributes”, which extend a type with pre-computed expressions which are updated incrementally as the graph changes. These attributes are also indexed for fast lookup.

3.4.2 Query 1

Several versions of the first query were implemented. For the sake of clarity, we will call these “batch”, “incremental update” (IU) and “incremental update and query” (IUQ). As mentioned in Table 1, all Hawk variants have partial incrementality. Batch and IU use full result sorting, and IUQ uses an “online top-x” approach.

The batch mode is the most direct use of Hawk. In this version, the tool is told to watch a folder that contains the initial version of the model. An EOL script replays the change sequences on the model, and Hawk updates the graph based on the new versions of the model. The \(\textsf {Post}\) type is extended with the score derived attribute, which is updated incrementally by Hawk. The definition of score is as follows, using self as the \(\textsf {Post}\) being extended:

With this derived attribute, it is simple to implement the main query itself. However, in order to sort the \(\textsf {Post}\)s with the Java Collections sort method, a native Java class implementing the Comparator interface is needed:

The incremental update mode uses an alternative graph update class, ChangeSequenceAwareUpdater. This speeds up the process by directly applying the change sequence on the graph, without touching the original model file. The query itself remains the same as the one in the batch mode, using the same derived attribute.

Finally, the incremental update and query mode reuses the same custom updater, while changing the way the query is run. A graph change listener is attached to Hawk: on each new model version, only the updated \(\textsf {Post}\)s are re-scored before selecting the new top-3 elements. The re-scoring is done by invoking the expression in Listing 7 on each \(\textsf {Post}\) that has been updated.

3.4.3 Query 2

The second query goes through the same three versions: batch, incremental update and incremental update and query. The actual query is noticeably more complex, since it essentially requires implementing Tarjan’s strongly connected components algorithm [84] in about 37 lines of EOL. As indicated in Table 2, Tarjan is re-run after each change, and IUQ uses an “online top-x” sorting approach instead of doing a full sort.

In this case, the \(\textsf {Comment}\) class is extended with a score derived attribute. A high-level view of the query shows how it loops over the \(\textsf {User}\)s that liked the \(\textsf {Comment}\), detecting connected components in each of them and computing the score of the \(\textsf {Comment}\) as the sum of the squares of the sizes of each component:

The queries for the batch and incremental update mode are the same, with a collection of all the IDs, scores and timestamps, and sorting to get the top-3 elements:

The incremental update and query mode works the same as in the previous query. A graph change listener detects the \(\textsf {Comment}\)s that should be re-scored, and the new scores are merged with the old ones to keep the top-3 elements up to date.

3.5 JastAdd

3.5.1 Tool description

Attribute Grammars [60] can be used to describe the structure of context-free data along with their static semantics. Reference Attribute Grammars (RAGs) [43] extend this paradigm such that attributes are allowed to return other nodes of the abstract syntax tree (AST) as a result of their computation.

The tool JastAdd [44] is based on Reference Attribute Grammars and offers non-terminal attributes [86] and circular attributes [62], used to represent a part of the model and to compute parts of the second query, respectively. In JastAdd, a grammar specified in BNF with inheritance and attributes specified written in Java are woven together to generate plain Java code. It contains a Java class for every non-terminal specified within the grammar, uses the code of the attributes inside its methods and has additional boilerplate code, e.g. to handle caching.

JastAdd does not work with EMF models directly, but requires users to transform the input metamodel into an AST representation using a dedicated syntax [67].

Listing 11 shows the grammar representing the metamodel of Fig. 1. All identifiable nodes inherit from ModelElement giving them a unique number. Also, the root node SocialNetwork is identifiable to later be able to insert new \(\textsf {User}\)s and \(\textsf {Post}\)s. The two non-containment references \(\textsf {friends}\) and \(\textsf {submissions}\) are replaced by explicit unidirectional relations, whereas the \(\textsf {likes}\) and \(\textsf {likedBy}\) edges are modelled using an explicit bidirectional relation (Line 9 in Listing 11).

3.5.2 Query 1

To solve the first query, all \(\textsf {Comment}\)s referring to a \(\textsf {Post}\) are computed, and afterwards, the score of this \(\textsf {Post}\) can be calculated with the following attribute:

After gathering all scores, a simple iteration over all \(\textsf {Post}\)s and keeping the top-3 is performed to get the final result.

3.5.3 Query 2

For the second query, two variants are evaluated, showing two different approaches within the JastAdd solution. Those variants differ in the way how the \(\textsf {User}\)s liking the same \(\textsf {Comment}\) are calculated. For the first variant, a circular attribute User.getCommentLikerFriends (shown below) is used.

This attribute will start with the set containing the \(\textsf {User}\) it should compute the friends for. Then, if another friend likes the \(\textsf {Comment}\), it adds this friend and calls itself recursively. The recursion always terminates as the number of \(\textsf {User}\)s is finite and the circular attribute is only invoked again if the returned set has changed.

The second variant follows the approach described in [66] highlighting reuse of application-independent analysis. In fact, the algorithm for computing an SCC presented in that work was reused without modification for solving the subproblem of query 2 to compute \(\textsf {User}\)s liking the same \(\textsf {Comment}\). To integrate it, a mapping from \(\textsf {User}\) to Component was established, while the friend relationship served as a basis to connect those components.

To compute the score of a \(\textsf {Comment}\) using the second variant, the squared size of every set of components is summed:

To get the final result, the same iteration as for the first query is used (not shown here).

3.6 YAMTL

3.6.1 Tool description

YAMTL [16] is a model transformation language for EMF models, with support for incremental execution [18], designed as an internal DSL of Xtend. The solution to the Social Media benchmark uses query rules that only consist of input patterns, whose filters define the queries, and uses the YAMTL pattern matcher for evaluating them. Queries are defined using Xtend and the Java Collections Framework. The YAMTL solutions implement the online top-x approach, keeping the best three candidates at all times, with the operation

.

.

Three variants of the solutions have been implemented: batch (YAMTL-B), implicitly incremental (YAMTL-II) and explicitly incremental (YAMTL-EI). YAMTL-B disables dependency tracking enabling a faster initial transformation but subsequent updates compute queries from scratch. YAMTL-II detects which matches need to be re-computed according to updates to the input model, without requiring additional logic in the queries. For each impacted match, the filter expression is re-evaluated from scratch, and the solution is therefore partially incremental. YAMTL-EI exposes model updates affecting an impacted match so that these can be processed explicitly in the filter expression, and the solution is therefore fully incremental. The additional logic that handles incremental updates explicitly in YAMTL-EI has been highlighted in grey. YAMTL-B and YAMTL-II solutions can be obtained by deleting this code. Enabling incremental evaluation and explicit handling of updates is done via configuration parameters.

3.6.2 Query 1

Q1 is implemented as a query rule, whose input pattern is formed by an

element that will match \(\textsf {Post}\)s that contain \(\textsf {Comment}\)s as indicated in the filter. In particular, the EMF method

element that will match \(\textsf {Post}\)s that contain \(\textsf {Comment}\)s as indicated in the filter. In particular, the EMF method

fetches all contained \(\textsf {Comment}\)s within the matched \(\textsf {Post}\). The implementation of the query is shown in Listing 12.

fetches all contained \(\textsf {Comment}\)s within the matched \(\textsf {Post}\). The implementation of the query is shown in Listing 12.

The expression

returns the objects that are added under the \(\textsf {Post}\) object being matched. For each such added object that is a \(\textsf {Comment}\), the score is computed. The solution to Q2 shows how this case could be handled.

returns the objects that are added under the \(\textsf {Post}\) object being matched. For each such added object that is a \(\textsf {Comment}\), the score is computed. The solution to Q2 shows how this case could be handled.

3.6.3 Query 2

The computation of connected components has been implemented using Sedgewick and Wayne’s weighted quick union-find with path compression algorithm [80].Footnote 9 The query, including logic handling updates explicitly, is shown in Listing 13. The instantiation of the class

computes the connected components of the graph whose nodes are the set of \(\textsf {User}\)s who liked the \(\textsf {Comment}\), i.e.

computes the connected components of the graph whose nodes are the set of \(\textsf {User}\)s who liked the \(\textsf {Comment}\), i.e.

. \(\textsf {Comment}\) scores are stored so that they can be subject to updates.

. \(\textsf {Comment}\) scores are stored so that they can be subject to updates.

The expression

returns the collection of features for the \(\textsf {Comment}\) being matched, which have been updated so that they can be handled explicitly. The original union find algorithm has been extended to enable incremental updates of the computed components when a \(\textsf {Comment}\) is liked by a \(\textsf {User}\) (

returns the collection of features for the \(\textsf {Comment}\) being matched, which have been updated so that they can be handled explicitly. The original union find algorithm has been extended to enable incremental updates of the computed components when a \(\textsf {Comment}\) is liked by a \(\textsf {User}\) (

) and when a new friendship is declared (

) and when a new friendship is declared (

). When there are less than three \(\textsf {Comment}\)s with a score different from zero, \(\textsf {Comment}\)s from

). When there are less than three \(\textsf {Comment}\)s with a score different from zero, \(\textsf {Comment}\)s from

are used to complete the list.

are used to complete the list.

3.7 ATL

3.7.1 Tool description

ATL [55] is one of the most common model transformation languages. The solution to the Social Media benchmark only uses ATL queries, i.e. the ATL constructs that allow users to define expressions in the ATL flavour of OCL. ATL queries can call helper OCL functions and libraries and are evaluated over the source model(s) by the ATL virtual machine, that is optimized for model operations.

The vanilla ATL that was used to implement the benchmark does not have support for incremental execution. Classical engines that execute ATL incrementally [56, 63] do not support the incremental evaluation of OCL expressions. Changes on the source model trigger the recomputation only of the impacted OCL expressions, but the whole expressions are re-computed. In Sect. 3.9, we describe a solution that achieves incremental OCL expression evaluation for ATL code, by compiling it towards AOF.

The solution is a pure ATL query and executed on the most recent ATL virtual machine (EMFTVM). Since ATL queries are OCL expressions, this solution includes a complete encoding of the case study as declarative and functional OCL code.

3.7.2 Query 1

The full code for Q1 is presented in Listing 14. The recursive allComments helper gathers the set of \(\textsf {Comment}\) for a given \(\textsf {Post}\), and a score for the \(\textsf {Post}\) is computed by the given formula (Line 11) considering the number of \(\textsf {Comment}\)s and likes to these \(\textsf {Comment}\)s. The main query topPosts sorts the set of \(\textsf {Post}\)s by score (and timestamp) and picks the top-3 \(\textsf {Post}\)s.

3.7.3 Query 2

The code for Q2 is shown in Listing 15. In particular, the allComponents helper implements a one-pass algorithm for the detection of all the connected components. The algorithm iterates on the liker \(\textsf {User}\)s: if the liker has not been visited, then compute a new component by the allFriends helper. The allFriends helper (whose implementation is not shown in the listing) is just a standard depth-first traversal, limited to the subgraph s. Finally, a score is computed for each \(\textsf {Comment}\) (Line 5), and the top-3 \(\textsf {Comment}\)s are identified similarly to Q1 (Lines 1–2).

3.8 Xtend

3.8.1 Tool description

XtendFootnote 10 [13] is a modern Java dialect suited for rapid prototyping thanks to its flexibility and expressiveness. Like ATL, the vanilla Xtend does not support incremental execution.

3.8.2 Query 1

We have written a first batch implementation of Q1 and Q2 in pure Xtend using the Eclipse Modeling Framework (EMF) plugin to perform loading and navigation into models. In a second step, we optimize this solution using Java 8 Streams to parallelize some operations on collections. The Xtend code used for the implementation of Q1 (Listing 16) shows that this mechanism is used two times: (1) to process all \(\textsf {Post}\)s in parallel and (2) to compute the sum of all likes received by \(\textsf {Comment}\)s of a \(\textsf {Post}\) in the

method. For better performance, we have also implemented a specific stream operation, called

method. For better performance, we have also implemented a specific stream operation, called

, to avoid sorting the whole list of \(\textsf {Post}\)s while only the top-3 \(\textsf {Post}\)s can be considered.

, to avoid sorting the whole list of \(\textsf {Post}\)s while only the top-3 \(\textsf {Post}\)s can be considered.

3.8.3 Query 2

The code of Q2 (Listing 17) is similar to the implementation of Q1 except for the

method. Indeed, the second query requires to find connected groups of \(\textsf {User}\)s through the friend relationship. For this purpose, the

method. Indeed, the second query requires to find connected groups of \(\textsf {User}\)s through the friend relationship. For this purpose, the

method uses a connected components algorithm based on Tarjan’s algorithm [84].

method uses a connected components algorithm based on Tarjan’s algorithm [84].

3.9 AOF and ATL incremental

3.9.1 Tool description

Active operations [9] are OCL-like operations equipped with incremental propagation algorithms. They may thus be used to incrementally evaluate OCL expressions [19, Section 5] such as the one found in ATL-like model transformations. It is therefore possible to use active operations to write incremental queries and transformations.

The AOF implementation [53] of active operations supports EMF models and is based on the Observer design pattern, although alternative execution strategies [22] have been explored. It is implemented in Java and can be used from Java, Xtend, or ATL code. Each mutable value is wrapped in an observable box, which is either a collection, or a singleton value.

Though AOF provides enough basic active operations to implement the case study, creating specific operations sometimes helps [54] achieve a better performance. For this case study, we developed four new operations:

-

1.

returns a sorted copy of its source collection using one or more criteria using balanced binary trees.

returns a sorted copy of its source collection using one or more criteria using balanced binary trees. -

2.

returns the n first elements of a collection.

returns the n first elements of a collection. -

3.

retrieves all model elements contained in a given source element, filtering them by type.

retrieves all model elements contained in a given source element, filtering them by type. -

4.

implements an incremental connected component algorithm.

implements an incremental connected component algorithm.

From these,

is more specific to some graph-related transformations, and the others are relatively generic.

is more specific to some graph-related transformations, and the others are relatively generic.

We present two variants of this solution:

-

1.

The AOF solution is written in Xtend, as shown in Listings 18 and 19.

-

2.

The ATL Incremental solution is written in ATL and leverages the ATOL [23] compiler that translates it to Java code that makes use of AOF. It is basically a transliteration of the Xtend code from Listings 18 and 19 into ATL syntax, The main advantage of this variant w.r.t. the AOF variant is that it makes it possible to use the declarative ATL syntax.

3.9.2 Query 1

The implementation of Q1 in AOF is depicted in Listing 18. The actual computation is stored in a hash table such that it does not have to be computed repeatedly. Within the score calculation, we use a method that iterates through the containment hierarchy in conjunction with a type filter, similar to the NMF solution. To calculate the score based on likes, we use the lifting mechanism of OCL that implicitly lifts the property likedBy to collections.

The ATL Incremental implementation of Q1 is shown in [23, Listing 6]. It is very similar to the code from Listing 14, with the most notable difference being that the ATOL compiler does not support the

keyword.

keyword.

3.9.3 Query 2

The solution for Q2 is depicted in Listing 19. Again, the actual score calculation is moved to a helper method. The score calculation itself is then making use of the layering operation.

3.10 Neo4j Batch

3.10.1 Tool description

Neo4j is a graph database management system using the property graph data model. Such graphs consist of labelled entities, i.e. nodes and edges, which can be described with properties encoded as key-value pairs. Neo4j uses the Cypher query language [33] which offers both read and update constructs [37]. While the main focus of Neo4j is to run graph queries in an online transaction processing (OLTP) setup, it also supports graph analytical algorithms with the Graph Data Science libraryFootnote 11 [71].

3.10.2 Query 1

Q1 c Cypher query in Listing 20. The Cypher language uses node labels (e.g. \(\textsf {Post}\), \(\textsf {Comment}\), \(\textsf {User}\)), edge types (e.g. \(\textsf {COMMENTED}\), \(\textsf {LIKES}\)) to express graph patterns. The query matches every node with label \(\textsf {Post}\), then all its \(\textsf {Comment}\)s via a series of \(\textsf {COMMENTED}\) edges, then the \(\textsf {User}\)s via direct \(\textsf {LIKES}\) edges. The

clause denotes an optional pattern, where variables are set to \(\textsf {NULL}\) values if there is no match. The

clause denotes an optional pattern, where variables are set to \(\textsf {NULL}\) values if there is no match. The

clause is used to group and aggregate. The results are grouped by the \(\textsf {id}\) and \(\textsf {timestamp}\) properties of the \(\textsf {Post}\)s, aggregated, and then, the top-3 scores are returned. The aggregation counts the likes using the number of \(\textsf {User}\)s (a \(\textsf {User}\) can like multiple \(\textsf {Comment}\)s) and counts the number of \(\textsf {Comment}\)s (

clause is used to group and aggregate. The results are grouped by the \(\textsf {id}\) and \(\textsf {timestamp}\) properties of the \(\textsf {Post}\)s, aggregated, and then, the top-3 scores are returned. The aggregation counts the likes using the number of \(\textsf {User}\)s (a \(\textsf {User}\) can like multiple \(\textsf {Comment}\)s) and counts the number of \(\textsf {Comment}\)s (

is used to remove duplicate \(\textsf {Comment}\)s).

is used to remove duplicate \(\textsf {Comment}\)s).

3.10.3 Query 2

Listing 21 shows the batch solution for Q2 using the variant of the union-find algorithm [68] implemented in the Neo4j Graph Data Science library. The procedure

is used to find connected components of the subgraph given by Cypher queries matching the nodes and the edges. For each \(\textsf {Comment}\) with likes, the first Cypher query in Lines 3-7 selects \(\textsf {User}\)s who like the \(\textsf {Comment}\), and the second query selects all FRIEND edges as pairs of \(\textsf {User}\)s. The library loads each subgraph into an in-memory projected subgraph before running the computations. The procedure returns the ID of the component containing the \(\textsf {User}\) node. Lines 10–13 calculate the squared sum of the component sizes and select the top-3 scores. On Lines 15–18, the query enumerates the top-3 \(\textsf {Comment}\)s without likes and the

of the two sets are returned. For a detailed comparison of strategies to compute Q2, we refer the reader to [29].

of the two sets are returned. For a detailed comparison of strategies to compute Q2, we refer the reader to [29].

3.11 Neo4j incremental

3.11.1 Tool description

The incremental solution for Neo4j uses node properties and new nodes to materialize the result of previous iterations. For every batch of updates these elements are refreshed, then the top-3 scores are collected. While Q1 can be computed efficiently with only Cypher constructs, the solution for Q2 uses the fixed-point calculation, dynamic node manipulation and reachability procedures of Neo4j’s APOC stored procedure library.Footnote 12

3.11.2 Query 1

To incrementally evaluate Q1, we initially compute the score for each \(\textsf {Post}\) as in the Neo4j Batch solution but, instead of returning it, we store it in the \(\textsf {score}\) property as shown in Listing 22. Based on this property, the current top-3 scores can be computed using Listing 25. The \(\textsf {score}\) property is indexed to improve lookup times. After new elements of an update are inserted, the \(\textsf {score}\) property of new \(\textsf {Post}\)s is initialized to zero, Listings 23-24 maintain the property for new \(\textsf {Comment}\) nodes and \(\textsf {LIKES}\) edges, and then, Listing 25 is used to get the top-3 elements.

3.11.3 Query 2

The incremental Neo4j solution for Q2 materializes the components of the subgraph for each \(\textsf {Comment}\) \( comm \) by converting the  edges to a \(\textsf {Component}\) node and inserting two edges:

edges to a \(\textsf {Component}\) node and inserting two edges:

-

,

, -

,

,

where the \(\textsf {Component}\) node connects all \(\textsf {User}\)s who know each other directly or via friends who also liked the \(\textsf {Comment}\) \( comm \). The conversion is executed for each component one by one using the fixed-point query execution mechanism of APOC. To achieve this, first the solution marks the nodes of each subgraph with dynamically named labels (Listing 41) and finds reachable nodes using the APOC library (Listing 42). The incremental evaluation is performed by merging the components then maintaining their sizes and the resulting scores (Listings 43–44).

3.12 PostgreSQL Batch

3.12.1 Tool description

To study the usability and performance of relational database management systems (RDBMSs), we implemented a batch solution in PostgreSQL. Figure 6 shows the database schema capturing the social network model. Instances of each node type (e.g. \(\textsf {Comment}\)) and the edge type with many-to-many cardinality (\(\textsf {friends}\)) are stored in relations (tables) with the following schemas:

-

\(\textit{comments}(\textsf {id}, \textsf {ts}, \textsf {content}, \textsf {submitterid}, \textsf {parentid})\)

-

\(\textit{posts}(\textsf {id}, \textsf {ts}, \textsf {content}, \textsf {submitterid})\)

-

\(\textit{likes}(\textsf {userid}, \textsf {commentid})\)

-

\(\textit{users}(\textsf {id}, \textsf {name})\)

-

\(\textit{friends}(\textsf {user1id}, \textsf {user2id})\)

Each relation representing a node has a primary key. Many-to-many edges are represented in association tables with two foreign keys. Many-to-one edges are stored as a foreign key in the table representing the node at the endpoint of the edge with a cardinality of “one”. Additionally, indexes were defined on the foreign keys. This supports the SQL optimizer in choosing arbitrary join orders.

Evaluating both Q1 and Q2 require checking transitive reachability between nodes, a common recursive query which cannot be expressed in first-order logic or relational algebra [4]. However, it is possible to express such queries using a relational database by

-

1.

either defining additional data structures and running a sequence of SQL queries in a loop until reaching a fixed point [26] or

-

2.

or using SQL:1999’s

construct, which allows the formulation of recursive queries.

construct, which allows the formulation of recursive queries.

In this solution, we use

as it is widely available in modern SQL implementations [89].

as it is widely available in modern SQL implementations [89].

3.12.2 Query 1

To evaluate Q1, for each \(\textsf {Comment}\), we need to first find the root \(\textsf {Post}\) of the \(\textsf {Comment}\)-chain, which is computed as the transitive closure of the \(\textsf {Comment}\)–parentid–\(\textsf {Comment}\)/\(\textsf {Post}\) edge type.

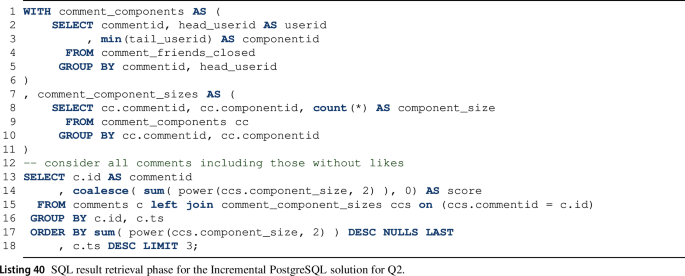

Having the transitive closure enables us to match the corresponding \(\langle \textsf {Post}, \textsf {Comment}, \textsf {User} \rangle \) triples, where the \(\textsf {Comment}\) is a response rooted in the \(\textsf {Post}\) and the \(\textsf {User}\) is someone who liked the \(\textsf {Comment}\). We use two left outer joins to ensure that \(\textsf {Post}\)s without \(\textsf {Comment}\)s and \(\textsf {Comment}\)s without likes are kept with \(\textsf {NULL}\)s. This is followed by an aggregation computing the score and finalized with a top-3 selection as shown in Listing 26.

3.12.3 Query 2

To evaluate Q2, we need to determine the connected components of the induced subgraphs on the \(\textsf {User}\)–friends–\(\textsf {User}\) edge type, which is computed using the transitive closure on the graph.

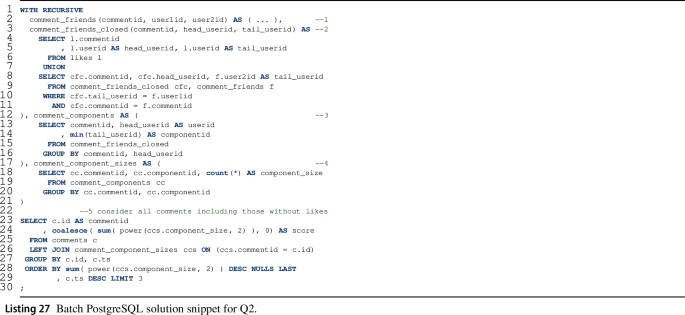

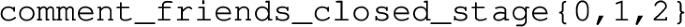

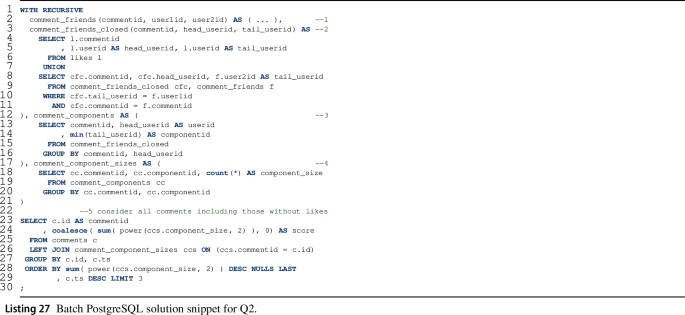

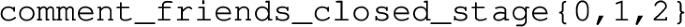

The outline of our Q2 implementation is shown in Listing 27. (The full query is given in Listing 30.) The implementation defines interim views using four subqueries and computes the result with the final (5th) query:

-

1.

comment_friends(commentid, user1id, user2id): for a given \(\textsf {Comment}\), pairs of \(\textsf {User}\)s who liked it and are friends.

-

2.

comment_friends_closed(commentid, head_userid, tail_userid): the transitive closure of cf relations, i.e. for a given \(\textsf {Comment}\), all pairs of \(\textsf {User}\)s who are reachable from each other through \(\textsf {friends}\) edges.

-

3.

connected_components(commentid, userid, componentid): for a given \(\textsf {Comment}\), the \(\textsf {User}\)s who belong to a given component of the friendship graph.

-

4.

comment_component_sizes(commentid, componentid, component_size): for a given \(\textsf {Comment}\), the component IDs and their size.

-

5.

Finally, the resulting relation contains the top-3 \(\textsf {Comment}\)s with the highest scores.

3.13 PostgreSQL incremental

To improve performance for repeated query executions, we have extended the PostgreSQL Batch solution (Sect. 3.12) with support for incremental updates. This section discusses the changes introduced in the schema and presents the queries that maintain the results upon changes.

3.13.1 Tool description

For the incremental solution, we have extended the database schema as shown in Fig. 7. Compared to the batch schema, this has three auxiliary relations and an extra attribute: (1) \(\textit{q1}\_\textit{scoring}\) for maintaining the scores and timestamps of the \(\textsf {Post}\)s as defined in Q1, along with (2) \(\textit{cf}\) and (3) \(\textit{cfc}\) with the same semantics as in the Batch solution of Q2. The attribute (4) \(\textit{comments.postid}\) is where Q1 maintains the root \(\textsf {Post}\) reference for each \(\textsf {Comment}\), which is then used in Q1. Additionally, we have employed a horizontal partitioning strategy [1] on each table. The partitioning uses an attribute status consisting of a single character “B” or “D”. During a particular update phase, rows that were already in the database are stored in the “B” (before) partition, while rows that have just been inserted are temporarily stored in the “D” (diff) partition. At the end of each update phase, rows from the diff partition migrate to the before partition. For both queries, we implemented algebraic incremental view maintenance with delta queries derived using the rules given in [38] and [81, Appendix E].

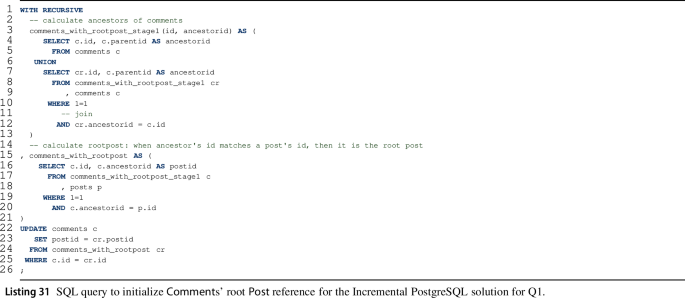

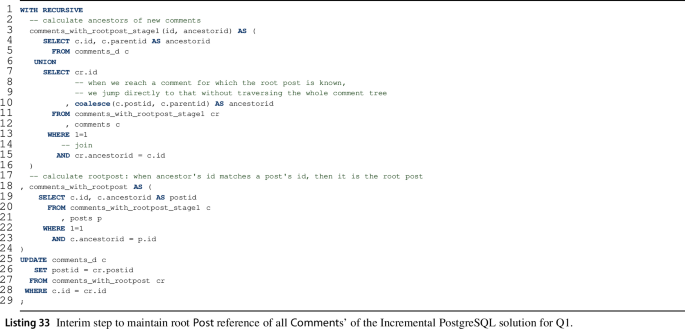

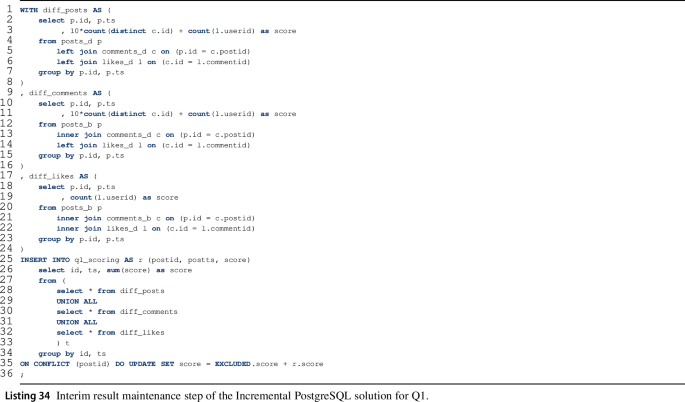

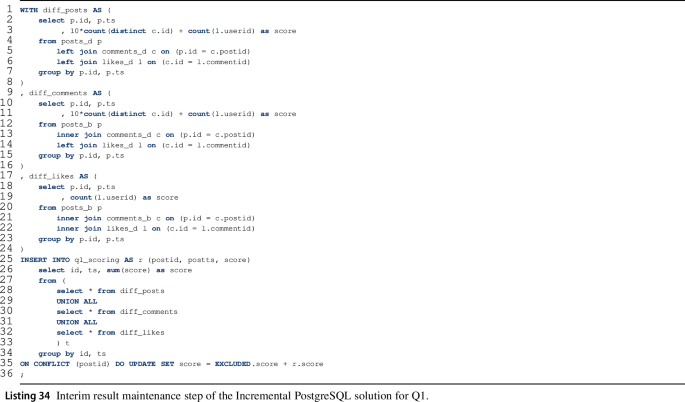

3.13.2 Query 1

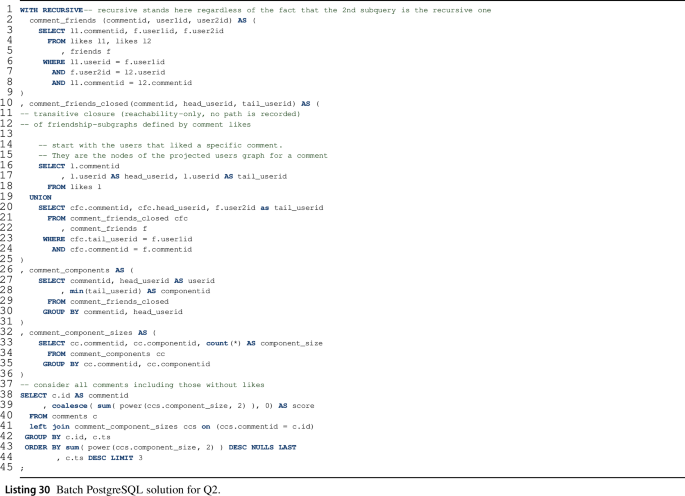

The incremental solution for Q1 consists of three steps, each affecting the \(\textit{q1}\_\textit{scoring}\) auxiliary relation, i.e. an initial step, then, for each update of the graph, a sequence of interim result maintenance and final result retrieval queries.

-

1.

The initial step consists of two substeps:

-

(a)

We compute the root \(\textsf {Post}\) reference for all \(\textsf {Comment}\)s (Listing 31) and materialize in attribute \(\textit{comments.postid}\).

-

(b)

We compute the score for all \(\textsf {Post}\)s (Listing 32)—similarly to the batch solution of Q1 except that we materialize each \(\textsf {Post}\) in relation \(\textit{q1}\_\textit{scoring}\) with its timestamp and score.

-

(a)

-

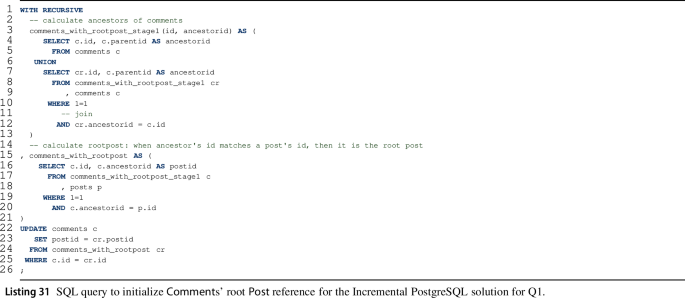

2.

The interim result maintenance is again split into two substeps:

-

(a)

In preparation, we compute the root \(\textsf {Post}\) reference for all new \(\textsf {Comment}\)s (Listing 33) and materialize in attribute \(\textit{comments.postid}\).

-

(b)

\(\textsf {Post}\)s ’ score maintenance (Listing 34) is implemented using three subqueries, each computing the increment in score for certain types of changes:

-

The \(\textit{diff}\_\textit{posts}\) subquery calculates the score of the new \(\textsf {Post}\)s. The \(\textsf {Comment}\)s and likes of the new \(\textsf {Post}\)s must also be new, so the diff partition of all 3 relations are joined together. A left outer join is applied to include each new \(\textsf {Post}\) regardless of whether it has received any \(\textsf {Comment}\)s and/or likes yet.

-

The \(\textit{diff}\_\textit{comments}\) subquery calculates the extra score for old \(\textsf {Post}\)s gained by new \(\textsf {Comment}\)s and the likes for them. This is expressed as the inner join of the before partition of \(\textsf {Post}\)s and diff partition of \(\textsf {Comment}\)s. Then, a left outer join of the diff partition of likes is applied, as only new likes can refer to new \(\textsf {Comment}\)s, and we need to calculate scores of all new \(\textsf {Comment}\)s regardless of whether it received any likes.

-

The \(\textit{diff}\_\textit{likes}\) subquery computes extra score for old \(\textsf {Post}\)s based for the new likes of their old \(\textsf {Comment}\)s. This is expressed as the inner join of the before partition of \(\textsf {Post}\)s and \(\textsf {Comment}\)s, and the diff partition of likes.

In each subquery, one operand of the inner join is the diff partition of either the \(\textit{posts}\), \(\textit{comments}\) or \(\textit{likes}\) relations. Their usually small record count can be exploited by the SQL query optimizer to speed up joins. Using the calculations above, \(\textit{q1}\_\textit{scoring}\) is updated by increasing the scores of old \(\textsf {Post}\)s and inserting new \(\textsf {Post}\)s along with their scores.

The relational algebraic formula for this interim result maintenance query is given in Fig. 13 and proved in Fig. 14.

-

-

(a)

-

3.

The retrieval step is a simple top-3 query on \(\textit{q1}\_\textit{scoring}\) (Listing 35).

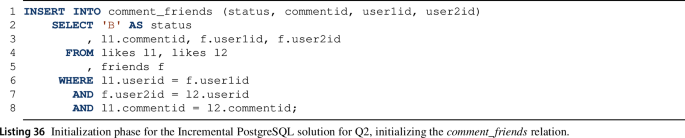

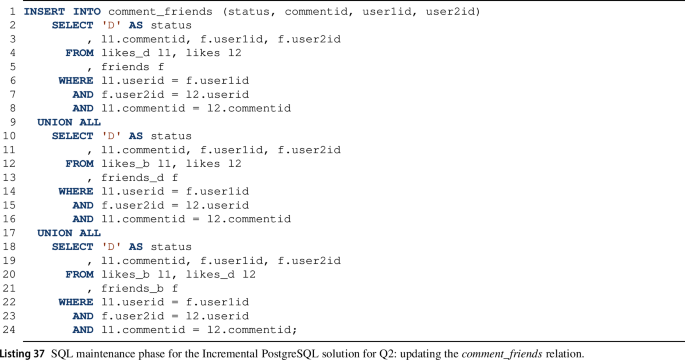

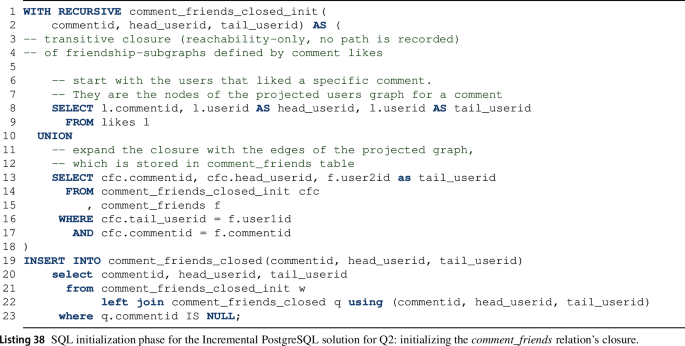

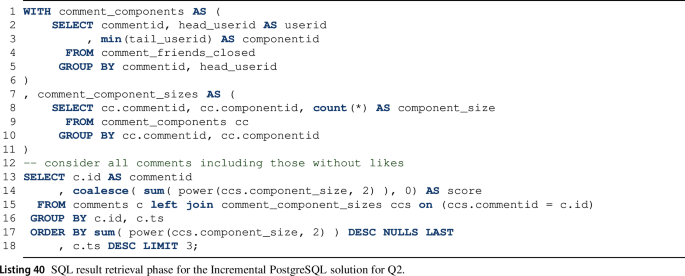

3.13.3 Query 2

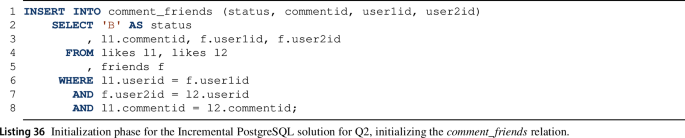

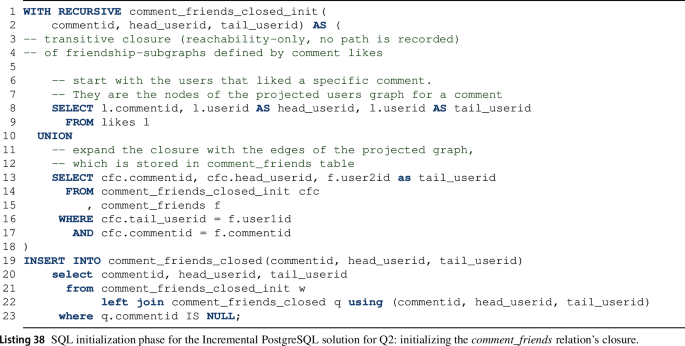

Similarly to Q1, the incremental solution for Q2 again consists of three steps, i.e. an initial step, then, for each update of the graph, an alternating sequence of interim result maintenance and final result retrieval queries. Both the initial and the maintenance steps are further divided into two queries affecting the \(\textit{cf}\) and \(\textit{cfc}\) interim relations.

-

1.

During the initial step, we first compute the before partition of the \(\textit{cf}\) relation (Listing 36), then its closure in the \(\textit{cfc}\) relation (Listing 38). The queries to perform this are analogous to the corresponding subqueries of the PostgreSQL Batch solution for Q2 (Listing 27).

-

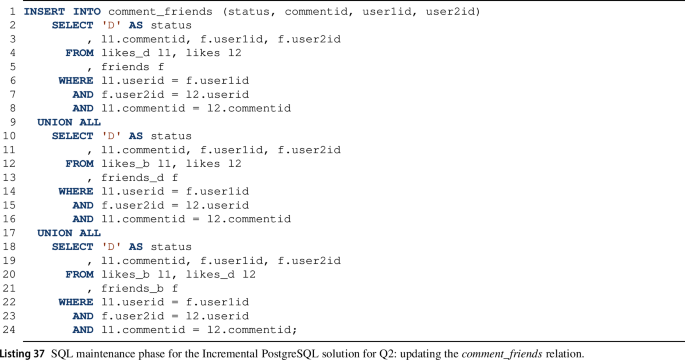

2.

The maintenance step consists of two substeps:

-

(a)

Updating the \(\textit{cf}\) relation with a union of new \(\textit{comment}\_{} \textit{friends}\) edges induced (Listing 37). Considering the \(\textit{friends.user1id}\) as the left side on the join, and \(\textit{friends.user2id}\) as its right side, a new edge is included by either:

-

i.

the new likes on the left side of all friendships with all likes on the right side,

-

ii.

the old likes on the left side of new friendships and all likes on the right side, and

-

iii.

old likes on the left side of old friendships and new likes on the right side.

-

i.

-

(b)

Updating the transitive closure is done in three successive subqueries (denoted as

in Listing 39):

-

i

Each new like on a particular \(\textsf {Comment}\) is a new zero-length path in the closure.

-

ii

Instead of performing the computation on the \( cf \) graph, we use new edges (\(\textit{comment}\_\textit{friends}\_{} \textit{diff}\)) to reach a fixed point in fewer steps.

-

iii

The optimization in the previous step omitted the edges in the existing transitive closure, and hence, we add these to the result as a final step.

-

i

-

(a)

-

3.

The retrieval step, similarly to the PostgreSQL Batch solution for Q2, computes the component sizes, and then, the scores for all \(\textsf {Comment}\)s are computed to retrieve the top-3 scored \(\textsf {Comment}\)s (Listing 40).

3.14 GraphBLAS Batch

3.14.1 Tool description

GraphBLAS is a recently proposed standard built on the theoretical framework of matrix operations on semirings [57], which allows concise and portable formulation of graph algorithms [58]. The goal of GraphBLAS is to create a layer of abstraction between the graph algorithms and the graph analytics framework, separating the concerns of the algorithm developers from those of the framework developers and hardware designers. The GraphBLAS standard defines a C API [20] that can be implemented on a variety of hardware components including GPUs.

Data format An untyped graph can be represented as an adjacency matrix \(\mathbf {A} \in \mathbb {N}^{| V | \times | V |}\), where rows and columns both represent nodes of the graph and element \(A_{ij}\) represents the number of edges from node i to node j. If the number of edges is not important, the adjacency matrix is defined over Boolean values, i.e. \(\mathbf {A} \in \mathbb {B}^{| V | \times | V |}\), with \(A_{ij} = 1\) if there is an edge from node i to node j and \(A_{ij} = 0\) otherwise. Bipartite graphs can be represented with a non-square adjacency matrix \(\mathbf {A} \in \mathbb {N}^{|V_1| \times |V_2|}\), where \(V_1\) and \(V_2\) are the sets of vertices in the two partitions. Typed graphs such as the ones used in this paper can be represented as using a bipartite adjacency matrix for each edge type, where \(V_1\) represent the source nodes and \(V_2\) represents the target nodes, e.g. \(\mathbf {Likes} \in \mathbb {B}^{| users | \times | comments |}\). The graphs used in practical applications such as social networks are sparse. The adjacency matrices representing these graphs are also sparse, i.e. most elements in their adjacency matrix are zero values. These sparse matrices can be represented efficiently using matrix compression techniques such as CSR (Compressed Sparse Row).

A graph can also be stored as an incidence matrix \(\mathbf {B} \in \mathbb {B}^{| V | \times | E |}\), where rows and columns represent nodes and edges (resp.). For undirected graphs, each column contains two 1 values in the positions of the source and the target nodes of the edge, and other elements are 0. Incidence matrices are sparse for all graphs with more than a few nodes.

Notation We follow the notation conventions of GraphBLAS as presented in [25]. Table 3 contains the list of GraphBLAS operations and methods used in this paper. Matrices are typeset in bold and start with an uppercase letter, e.g. \(\mathbf {Friends}\). Vectors are typeset in bold and start with a lowercase letter, e.g. \(\mathbf {scores}\). Additionally, sets are typeset in italic and start with a lowercase letter, e.g. \( posts \).

Implementation For this solution, we used SuiteSparse:GraphBLAS [25], a parallel GraphBLAS implementation and LAGraph [64], a library of GraphBLAS algorithms. We also implemented an incremental solution in GraphBLAS, described in Sect. 3.15.

Execution of the GraphBLAS algorithms on the example graph (Fig. 5): the Initial evaluation step (used by both the batch and incremental solutions) and the Update and re-evaluation step (used by the incremental solution). Recall that the update in the example inserts the following relevant entities (highlighted with grey background): a \(\textsf {friends}\) edge between \(\textsf {User}\)s u1 and u4, a \(\textsf {likes}\) edge from \(\textsf {User}\) u2 to \(\textsf {Comment}\) c2, a \(\textsf {Comment}\) node c4 with a root \(\textsf {Post}\) of p1 and an incoming \(\textsf {likes}\) edge from \(\textsf {User}\) u4

3.14.2 Query 1

Q1 (Algorithm 1) computes the score for each \(\textsf {Post}\), then selects the top-3 \(\textsf {Post}\)s. We use an auxiliary \(\mathbf {RootPost}\) \(^\mathsf{T}\) adjacency matrix to denote the \(\textsf {Post}\) a \(\textsf {Comment}\) belongs to (possibly through a series of \(\textsf {Comment}\)s). The algorithm receives direct \(\textsf {Comment}\)s on \(\textsf {Post}\)s (\(\mathbf {CommentedP}\) \(^\mathsf{T}\)) and \(\textsf {Comment}\)s on other \(\textsf {Comment}\)s (\(\mathbf {CommentedC}\) \(^\mathsf{T}\)) separately. Starting from the former (Line 7), BFS is used to find child \(\textsf {Comment}\)s level by level and collect them to the \(\textsf {Post}\) they belong to until there are no more left (Lines 8-10). In Line 12, the row-wise summation of \(\mathbf {RootPost}\) \(^\mathsf{T}\) matrix produces the number of (direct and indirect) \(\textsf {Comment}\)s per \(\textsf {Post}\), and then, an ’apply’ operation multiplies the vector elements by 10. Line 14 sums up the number of likes the \(\textsf {Post}\) has via its \(\textsf {Comment}\)s. For each \(\textsf {Post}\), the \(\mathbf {RootPost}\) \(^\mathsf{T}\) adjacency matrix selects the elements of the \(\mathbf {likesCount}\) vector corresponding to the \(\textsf {Comment}\)s of the \(\textsf {Post}\) and then sums up the values. The score for each \(\textsf {Post}\) is the element-wise sum of the two vectors (Line 15). Figure 8a shows an example calculation.

3.14.3 Query 2

A batch evaluation example of Q2 (Algorithm 2) is depicted in the upper part of Fig. 8b. Q2 computes the score for each \(\textsf {Comment}\) and then selects the top-3 \(\textsf {Comment}\)s. To collect the \(\textsf {User}\)s of each subgraph, in Lines 8 and 10,

extracts the elements of the \(\mathbf {Likes}\) \(^\mathsf{T}\) matrix as \( ( c, u, 1 ) \) tuples and collects them into sets of \(\textsf {User}\) IDs (u) per \(\textsf {Comment}\) (c). To produce the subgraph for each \(\textsf {Comment}\),

extracts the elements of the \(\mathbf {Likes}\) \(^\mathsf{T}\) matrix as \( ( c, u, 1 ) \) tuples and collects them into sets of \(\textsf {User}\) IDs (u) per \(\textsf {Comment}\) (c). To produce the subgraph for each \(\textsf {Comment}\),

extracts a submatrix based on the \(\textsf {User}\)s selected (Line 11).

extracts a submatrix based on the \(\textsf {User}\)s selected (Line 11).

finds connected components in the induced subgraph using the FastSV algorithm [88] of the LAGraph library (Line 12). This produces a vector containing the component ID for each \(\textsf {User}\).

finds connected components in the induced subgraph using the FastSV algorithm [88] of the LAGraph library (Line 12). This produces a vector containing the component ID for each \(\textsf {User}\).

yields the squared sum of component sizes, i.e. the score for each \(\textsf {Comment}\) (Line 14).

yields the squared sum of component sizes, i.e. the score for each \(\textsf {Comment}\) (Line 14).

3.15 GraphBLAS incremental

3.15.1 Tool description

To incrementalize the GraphBLAS Batch solution (Sect. 3.14), we have reworked the solution to compute changes to the results upon updates instead of running a full re-evaluation.

Approach The incremental version performs a full batch evaluation for the first run and computes the scores. Then, for each update only those parts of the model are re-evaluated which might be affected by the update. Finally, the previous top-3 scores and the new ones are compared to maintain the result. Incremental computation of Q1 uses fine-grained maintenance as it stores the scores of each \(\textsf {Post}\) and updates them when new \(\textsf {Comment}\) nodes and \(\textsf {likes}\) edges appear. The granularity of the incremental version of Q2 is coarser, as it collects the \(\textsf {Comment}\)s whose scores can change and re-evaluates them.

Notation The updated variables are denoted with prime, e.g. the updated version of vector \(\mathbf {scores}\) is \(\mathbf {scores'}\), which contains the scores for the new nodes and the updated scores for the existing ones. The changes can be stored as increment matrices/vectors, denoted with a superscript plus symbol (\(\mathbf {^+}\)) and are applied with the \(\oplus \) operation to the original values, e.g. \({ \mathbf {scores'} = \mathbf {scores} \oplus \mathbf {scores^+} }\). Another option is to store the changed values, denoted with \({\Delta }\), and apply them by overwriting the existing values: the new vector is initialized with the original one: \({ \mathbf {scores'} = \mathbf {scores} }\), then the new values overwrite the existing ones via a mask, which preserves the unaffected values from modification: \({ \mathbf {scores'} \langle \!\langle {\Delta }\mathbf {scores} \rangle \!\rangle = {\Delta }\mathbf {scores} }\).

3.15.2 Query 1

To incrementally evaluate Q1, Algorithm 3 updates the scores as follows. Lines 9 and 10 compute the increment of the score induced by new \(\textsf {Comment}\)s. In Line 11, the number of likes the \(\textsf {Comment}\)s newly received is summed up per \(\textsf {Post}\). The two types of increments are summed up in Line 12. For subsequent evaluations, the scores are updated using the increment vector (Line 13). To find the top-3 scores, only the previous maximum values and the \(\textsf {Post}\)s with updated scores are considered. Line 14 yields the updated score values by assigning the \(\mathbf {scores'}\) vector via the \(\mathbf {scores^+}\) increment vector as a mask, which allows changes in the result only if the mask has an element at the corresponding position. Figure 8a shows an example calculation. Using the output of this algorithm, merging the previous top-3 scores and the new ones yields the new result.Footnote 13

3.15.3 Query 2

The incremental evaluation of Q2 is depicted in the lower part of Fig. 8b. Algorithm 4 returns the \(\textsf {Comment}\)s with new scores (\( {\Delta }\mathbf {scores} \)) by re-evaluating the \(\textsf {Comment}\)s which the updates might impact on. Merging the previous top-3 scores and the new ones yields the new result. (New scores overwrite existing ones.) The first phase of the algorithm (Steps

–

–

, Lines 14–20) collects the \(\textsf {Comment}\)s which might be affected by the updates (affected comments, \( acSet \) set), and then, the second phase (Steps

, Lines 14–20) collects the \(\textsf {Comment}\)s which might be affected by the updates (affected comments, \( acSet \) set), and then, the second phase (Steps

–

–

, Line 21) computes the new scores of these \(\textsf {Comment}\)s using the batch algorithm described in Algorithm 2.

, Line 21) computes the new scores of these \(\textsf {Comment}\)s using the batch algorithm described in Algorithm 2.

A \(\textsf {Comment}\) might be affected by an update if (1) it is a new \(\textsf {Comment}\), or (2) the \(\textsf {Comment}\) receives a new incoming \(\textsf {likes}\) edge from a \(\textsf {User}\), resulting in a new component or the expansion of an existing one, or (3) two \(\textsf {User}\)s who like the \(\textsf {Comment}\) become friends, which merges the components the \(\textsf {User}\)s belong to (if they previously belonged to different components). Case (3) is covered by Lines 14-18, where Steps

–

–

compute the \(\textsf {Comment}\)s which might be affected by new \(\textsf {friends}\) edges. \(\mathbf {NewFriends}\) incidence matrix represents each new friendship by a column having two 1-/valued elements for the two \(\textsf {User}\)s. For each new friendship (i.e. pair of \(\textsf {User}\)s),

compute the \(\textsf {Comment}\)s which might be affected by new \(\textsf {friends}\) edges. \(\mathbf {NewFriends}\) incidence matrix represents each new friendship by a column having two 1-/valued elements for the two \(\textsf {User}\)s. For each new friendship (i.e. pair of \(\textsf {User}\)s),

computes how many \(\textsf {User}\) of the pair likes each \(\textsf {Comment}\) (\(0, 1, \text {or } 2\)). During the matrix–matrix multiplication, each column of new friendships selects two columns of \(\mathbf {Likes'}\) \(^\mathsf{T}\) matrix and sums them up into \(\mathbf {AC}\) matrix (Line 15). In Line 16,

computes how many \(\textsf {User}\) of the pair likes each \(\textsf {Comment}\) (\(0, 1, \text {or } 2\)). During the matrix–matrix multiplication, each column of new friendships selects two columns of \(\mathbf {Likes'}\) \(^\mathsf{T}\) matrix and sums them up into \(\mathbf {AC}\) matrix (Line 15). In Line 16,

keeps only 2-/valued elements, i.e. where both \(\textsf {User}\)s of a friendship liked the \(\textsf {Comment}\), so both of them are present in the subgraph and the new friendship might merge components. Then,

keeps only 2-/valued elements, i.e. where both \(\textsf {User}\)s of a friendship liked the \(\textsf {Comment}\), so both of them are present in the subgraph and the new friendship might merge components. Then,

produces a row-wise sum using the logical or operator \(\vee \) (Line 17).

produces a row-wise sum using the logical or operator \(\vee \) (Line 17).

extracts \( ( c, 1 ) \) tuples from the result vector and collects the \(\textsf {Comment}\) IDs from these tuples, then