Abstract

There exists a plethora of claims about the advantages and disadvantages of model transformation languages compared to general-purpose programming languages. With this work, we aim to create an overview of these claims in the literature and to systematize the evidence for them. For this purpose, we conducted a systematic literature review, following a systematic process for searching and selecting relevant publications and extracting data. We selected a total of 58 publications, categorized claims about model transformation languages into 15 separate groups and conceived a representation to track claims and evidence through the literature. From our results, we conclude that (i) the current literature claims many advantages of model transformation languages but also points towards certain deficits, (ii) there is insufficient evidence for claimed advantages and disadvantages and (iii) there is a lack of research interest in the verification of claims.

1 Introduction

Ever since the dawn of model-driven engineering at the beginning of the century, model transformations, supported by dedicated transformation languages [31], have been an integral part of model-driven development. Model transformation languages (MTLs), being domain-specific languages, have ever since been associated with advantages in areas like productivity, expressiveness and comprehensibility compared to general-purpose programming languages (GPLs) [50, 55, 60]. Such claims are reiterated time and time again in the literature, often without any actual evidence. Nowadays, such an abundance of claims runs through the whole body of literature that one can be forgiven for losing track of which claims verifiably apply and which are still purely visionary.

The goal of this study is to identify and categorize claims about advantages and disadvantages of model transformation languages made throughout the literature and to gather available evidence thereof. We do not intend to provide a complete overview over the current state of the art in research. For this purpose, we performed a systematic review of claims and evidence in the literature.

The main contributions of our study are:

- a systematic review and overview over the advantages and disadvantages of model transformation languages as claimed in the literature;

- insights into the state of verification of aforementioned advantages and disadvantages.

This study is intended for researchers to (i) raise awareness of the current state of research and (ii) incentivise further research in areas where we identified gaps. The study can also be of interest to practitioners who wish to gain an overview of what research claims about MTLs compared to a practitioner's view of the matter.

To systematize information from the literature, we performed a systematic literature review [14, 41] based on the research questions we defined (see Sect. 3.1). As a first step, during the review we selected 58 publications from which to extract claims and evidence for advantages and disadvantages of model transformation languages. Afterwards, we categorized claims and systematized the evidence to produce (i) a categorization of claimed advantages and disadvantages into 15 separate categories (namely analysability, comprehensibility, conciseness, debugging, ease of writing a transformation, expressiveness, extendability, just better, learnability, performance, productivity, reuse and maintainability, tool support, semantics and verification, versatility) and (ii) a systematic representation of which claims are verified through what means. From our results, we conclude that:

1. The current literature claims many advantages and disadvantages of model transformation languages.

2. A large portion of claims are very broad.

3. There is insufficient or no evidence for a large portion of claims.

4. There are a number of claims that originate in claims about DSLs without proper evidence of why they hold for MTLs too.

5. There is a lack of research interest in evaluation and especially verification of claimed advantages and disadvantages.

We hope our results can provide an overview over what MTLs are envisioned to achieve, what current research suggests they do and where further research to validate the claimed properties is necessary.

The remainder of this paper is structured as follows: Sect. 2 introduces the background of this research, model-driven engineering and model transformation languages. In Sect. 3, we will detail the methodology used for the conducted literature review. We present our findings in Sect. 4. Afterwards, in Sect. 5, we discuss the results of our findings. This section will also include propositions for much needed validation of claims about model transformation languages synthesized from the literature review. Section 6 contains information about related work, and in Sect. 7 potential threats to the validity of this research are discussed. Lastly, Sect. 8 draws a conclusion for our research.

2 Background

In this section, we provide the necessary background for our study and explain the context into which our study integrates.

2.1 Model-driven engineering

In 2001, the Object Management Group published the software design approach called Model-Driven Architecture [52] as a means to cope with the ever-growing complexity of software systems. MDA placed models at the centre of development rather than using them as mere documentation artefacts. The approach envisions an automated, continuous specialization from abstract models towards code. Starting with the so-called Computation Independent Models (CIMs), each specialization step should provide the models with more specific information about the intended system, transforming them from CIM into Platform Independent Models (PIMs) and then into Platform Specific Models (PSMs) and finally into production ready source code.

The different abstraction levels were designed to enable practitioners to be as platform, system and language independent as possible. The notion of using models as the central artefact during development is what is commonly referred to as Model-Driven (Software-) Engineering (MDE/MDSE) or Model-Based (Software-) Engineering (MBE/MBSE) [20].

The structure of a model is defined by a so-called meta-model, whose structure is in turn defined by a meta-model of its own.

2.2 Domain-specific languages

“A domain-specific language (DSL) provides a notation tailored towards an application domain and is based on relevant concepts and features of that domain” [61]. The idea behind this design philosophy is to increase expressiveness and ease of use through more specific syntax. As such, DSLs provide an auspicious alternative for solving tasks associated with a specific domain. Representative DSLs include HTML for designing Web pages or SQL for database querying and manipulation.

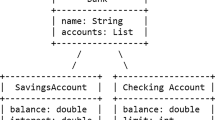

2.3 Model transformation languages

Models are transformed into different models of the same or a different meta-model via so-called model transformations. Driven by the appeal of DSLs, a plethora of dedicated MTLs have been introduced since the emergence of MDE as a software development approach [3, 7, 38, 43]. Unlike general-purpose programming languages, MTLs are designed for the sole purpose of enabling developers to transform models. As a result, model transformation languages provide explicit language constructs for tasks performed during model transformation such as model matching. Similar to GPLs, model transformation languages can differ vastly in several aspects, ranging from features that can be found in GPLs as well, like language paradigm and typing, all the way to transformation-specific features such as directionality [22]. There are numerous features that can be used to distinguish model transformation languages from one another. For a complete classification of these features, please refer to Kahani et al. [39], Mens and Gorp [49] or Czarnecki and Helsen [22].

Model transformation languages, being DSLs, promise dedicated syntax tailored to enhance the development of model transformations.
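To make the notion of model matching more tangible, the following minimal sketch shows a model-to-model transformation written by hand in a GPL (Python). The meta-model classes `Class`, `Attribute`, `Table` and `Column`, the `persistent` flag and the type mapping are hypothetical and chosen for illustration only; they are not taken from any of the reviewed publications. The sketch merely indicates the kind of selection and construction logic for which MTLs offer dedicated constructs.

```python
from dataclasses import dataclass, field

# Hypothetical source meta-model: a tiny class model.
@dataclass
class Attribute:
    name: str
    type: str

@dataclass
class Class:
    name: str
    persistent: bool
    attributes: list = field(default_factory=list)

# Hypothetical target meta-model: a tiny relational model.
@dataclass
class Column:
    name: str
    sql_type: str

@dataclass
class Table:
    name: str
    columns: list = field(default_factory=list)

# Illustrative type mapping; unknown types fall back to TEXT.
TYPE_MAP = {"String": "VARCHAR", "Integer": "INT"}

def class_to_table(source_model):
    """Transform every persistent Class into a Table with one Column per Attribute."""
    tables = []
    for element in source_model:
        # Hand-written model matching: select the elements the rule applies to.
        if isinstance(element, Class) and element.persistent:
            columns = [Column(a.name, TYPE_MAP.get(a.type, "TEXT"))
                       for a in element.attributes]
            tables.append(Table(element.name.lower(), columns))
    return tables

model = [
    Class("Person", True, [Attribute("name", "String"), Attribute("age", "Integer")]),
    Class("Session", False),
]
tables = class_to_table(model)
```

In this sketch, the loop and the `isinstance` check play the role that a rule's source pattern would play in a rule-based MTL.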

3 Methodology

Our review procedures are based on the descriptions of literature and mapping reviews from Booth, Sutton and Papaioannou [14]. First of all, a protocol for the review was defined. The protocol, as defined in Booth, Sutton and Papaioannou [14], describes (I) the research background (see Sect. 2), (II) the objective of the review and review questions (see Sect. 3.1), (III) the search strategy (see Sect. 3.2), (IV) selection criteria for the studies (see Sect. 3.3), (V) a quality assessment checklist and procedures (see Sect. 3.4), (VI) the strategy for data extraction (see Sect. 3.5) and (VII) a description of the planned synthesis procedures (see Sect. 3.6). A complete overview of all steps of our literature review can be found in Fig. 1.

The remainder of this section will describe in detail each of the introduced protocol elements, with the exception of the research background, which we already covered in Sect. 2.

3.1 Objective and research questions

To formulate the objective as well as to derive the research questions for our review, we first applied the Goal-Question-Metric approach [11] which splits the overall goal into four separate concerns, namely purpose, issue, object and viewpoint.

- Purpose: Find and categorize

- Issue: claims of and evidence for advantages and disadvantages

- Object: of model transformation languages

- Viewpoint: from the standpoint of researchers and practitioners.

Based on the described goal, we then extracted the two main research questions for our literature review:

- RQ1: What advantages and disadvantages of model transformation languages are claimed in the literature?

- RQ2: What advantages and disadvantages of model transformation languages are validated through empirical studies or by other means?

The aim of RQ1 is to provide an extensive overview of what kinds of advantages or disadvantages are explicitly attributed to using dedicated model transformation languages compared to using general-purpose programming languages. We consider such an overview to be necessary, because the number of claims and their repetition in the literature to date make it difficult to keep track of which claims verifiably apply and which are still purely visionary. Naturally, to be able to distinguish between substantiated and unsubstantiated claims, it is also necessary to record which claims are supported by evidence. With RQ2, we aim to do exactly that. Combining the results of RQ1 and RQ2 then makes it possible to determine if, and how, a positive or negative claim about MTLs is verified. Additionally, this also enables us to identify those claims that have yet to be investigated.

3.2 Search strategy

Our search strategy consists of seven consecutive steps. A visual overview of the complete search process is shown in Fig. 3. The figure visualizes steps Database search to Snowballing from Fig. 1 in more detail.

In the first step, we defined the search string to be used for automatic database searches. For this, we identified major terms concerning our research questions. Each new term was made more specific than the previous one. The resulting terms and justifications for including them were:

- Model-driven engineering: The overall context we are concerned with. This was included to ensure only papers from the relevant context were found.

- Model transformation: The more specific context we are concerned with.

- Model transformation language: Since our focus is on the languages to express model transformations.

We used a thesaurus to identify relevant synonyms for each term in order to enhance our search string. In addition, we included one representative model transformation language with graphical syntax, one imperative language, one declarative language and one hybrid language as well as the term domain-specific language and its synonyms. The selection of the representative languages was made on the basis of their widespread use, active development and in the case of QVT because it is the standard for model transformations adopted by the Object Management Group. All these additional terms were included as synonyms for the model transformation language term.

We dropped the terms advantage and disadvantage after initial searches, because they resulted in too narrow a result set, which excluded key publications [29, 33] manually identified by the authors.

To combine all keywords, we followed the advice of Kofod-Petersen [42] to use the Boolean OR (\(\vee \)) to group together synonyms and the Boolean AND (\(\wedge \)) to link our major term groups.

This resulted in the search string shown in Fig. 2 which was applied in full text searches.
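The combination scheme described above can be sketched as follows. This is only an illustration of the OR-within-group, AND-between-groups construction; the synonym lists are hypothetical and shortened, and the function name `build_search_string` is our own. The search string actually used is the one shown in Fig. 2.

```python
def build_search_string(term_groups):
    """Join synonyms with OR inside each group and join the groups with AND."""
    clauses = []
    for synonyms in term_groups:
        quoted = [f'"{term}"' for term in synonyms]
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)

# Hypothetical, shortened synonym lists (not the full lists used in the review).
groups = [
    ["model-driven engineering", "MDE"],
    ["model transformation"],
    ["model transformation language", "domain-specific language", "QVT"],
]
search_string = build_search_string(groups)
print(search_string)
```

Individual search engines use their own query syntax, so a string built this way would still need to be adapted to each database.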

We decided on the following four search engines to use for automated literature search:

- ACM Digital Library

- IEEE Xplore

- Springer Link

- Web of Science

Search engines were chosen based on their overall coverage, completeness, the availability of accessible publications and usage in other literature reviews in this field such as Loniewski, Insfran, and Abrahão [8, 48]. The online library Science Direct, which is often used in this domain, was excluded from our list because we only had limited access to the publications in the database. We decided that the overhead of requesting access to all publications for which our procedure would require a full text review (see step 4) would take up too much time; thus, we excluded the database from our automatic search process. Badampudi, Wohlin, and Petersen [6] also show that combining automatic database searches with an additional snowballing process can make up for a reduced list of searched databases. We also decided against using Google Scholar as a search engine because, in our experience, it produces too many irrelevant results and has a large overlap with ACM Digital Library and IEEE.

We conducted several preliminary searches on all four databases during the construction of the search string, to validate that the resulting publications included key publications.

After the definition and validation of the search string, the second step consisted of full text searches using the search engines of ACM Digital Library, IEEE Xplore Digital Library and Web of Science.

For the Springer Link database, we realized early on that a full text search would result in too many hits. We instead opted to query only the titles for the keyword model transformation language and its synonyms, and filtered these results by applying a full text search based on the remaining keywords and their synonyms. The remaining results still far exceeded those of all other databases combined. We further realized during preliminary sifting that neither the titles nor the abstracts of publications beyond the first 200 results suggested a relevance to our study. For that reason, we decided to cap our search at 500 publications, more than doubling the size of results from the point where the relevance of publications started to slide. This decision is supported by the fact that every publication which ended up in our data extraction set was found within the first 200 results.

All automated database searches were conducted between June 17 and June 28, 2019.

In the third step, all duplicates that resulted from using multiple search engines were filtered out based on the publication title and date. This also included the removal of publications that had extended versions published in a journal. This resulted in a total of 935 publications.
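A minimal sketch of such a duplicate filter is given below. It assumes that each search hit is a record with a `title` and a `year` field and that titles are compared case-insensitively with collapsed whitespace; both the record layout and the normalization are assumptions made for illustration. The removal of publications with extended journal versions is not covered by the sketch.

```python
def deduplicate(records):
    """Keep the first record for every (normalized title, year) pair."""
    seen = set()
    unique = []
    for record in records:
        # Assumed normalization: lower-case and collapse whitespace.
        key = (" ".join(record["title"].lower().split()), record["year"])
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique

# Hypothetical hits returned by different search engines.
hits = [
    {"title": "Model  Transformation Languages", "year": 2010, "source": "ACM"},
    {"title": "model transformation languages", "year": 2010, "source": "IEEE"},
    {"title": "Model Transformation Languages", "year": 2012, "source": "Springer"},
]
unique_hits = deduplicate(hits)
```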

During the fourth step, two researchers independently applied the selection criteria (see Sect. 3.3) to the titles and abstracts to select a set of relevant publications. The researchers categorized literature as either relevant or irrelevant. In cases where they could not deduce the relevance based on the title and abstract, the publication was marked as undecidable.

Afterwards, in step 5 the results for each publication of the independent selection processes were compared. In cases where the two researchers agreed on relevant or irrelevant, the paper was included or excluded from the final set of publications. In cases of either a disparity between the categorizations or an agreement on undecidable, the full text of the publications was consulted using adaptive reading techniques to decide whether it should be included or excluded. Adaptive reading in this context meant going from reading the introduction to reading the conclusion and if a decision was still not reached reading the paper from start to finish until a decision could be reached. The step resulted in a total of 99 publications to use as a start set for the sixth step.
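The decision rule of steps 4 and 5 can be summarized in a few lines. The sketch below assumes that each researcher's categorization is encoded as one of three strings; the encoding and the function name `reconcile` are illustrative and not part of the review protocol.

```python
RELEVANT, IRRELEVANT, UNDECIDABLE = "relevant", "irrelevant", "undecidable"

def reconcile(vote_a, vote_b):
    """Combine two independent categorizations into a decision for one publication."""
    if vote_a == vote_b and vote_a in (RELEVANT, IRRELEVANT):
        # Agreement on relevant or irrelevant settles the case directly.
        return "include" if vote_a == RELEVANT else "exclude"
    # Disagreement, or agreement on undecidable, triggers a full text review.
    return "full text review"

decisions = {
    "agree relevant": reconcile(RELEVANT, RELEVANT),
    "agree irrelevant": reconcile(IRRELEVANT, IRRELEVANT),
    "disagree": reconcile(RELEVANT, IRRELEVANT),
    "both undecidable": reconcile(UNDECIDABLE, UNDECIDABLE),
}
```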

In the sixth step, we applied exhaustive backward and forward snowballing, that is, as in many previous studies [5, 59], we repeated the procedure until no new publication was selected. The snowballing procedures followed the guidelines laid out by Wohlin [67]. Our start set comprised all 99 publications from step 5. We then applied backward and forward snowballing to the set. For backward snowballing, we used the reference lists contained in the publications, and for forward snowballing we used Google Scholar, as suggested by Wohlin [67] and because, in our experience, it provides the most reliable source for the cited-by statistic. We then applied our inclusion and exclusion criteria, as described in step 4, to the cited and citing publications. All publications deemed relevant were then used as the start set for the next round of snowballing, until no new publications were selected as relevant. The result of this step was a set of 107 relevant publications.
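The iteration described above amounts to a fixed-point computation, which can be sketched as follows. The sketch assumes that reference lists and cited-by lists are available as dictionaries and that the selection criteria are given as a predicate; these inputs and the publication IDs in the example are hypothetical.

```python
def snowball(start_set, references, cited_by, is_relevant):
    """Repeat backward and forward snowballing until no new publication is selected."""
    selected = set(start_set)
    frontier = set(start_set)
    while frontier:
        candidates = set()
        for publication in frontier:
            candidates |= set(references.get(publication, ()))  # backward snowballing
            candidates |= set(cited_by.get(publication, ()))    # forward snowballing
        # Only newly found, relevant publications seed the next round.
        frontier = {c for c in candidates - selected if is_relevant(c)}
        selected |= frontier
    return selected

# Hypothetical citation data: A cites B, B cites C, D cites A, E cites D.
references = {"A": ["B"], "B": ["C"], "C": []}
cited_by = {"A": ["D"], "D": ["E"]}
result = snowball({"A"}, references, cited_by, is_relevant=lambda p: p != "E")
```

In the example, the loop ends after the third round because publication C contributes no further candidates and E is rejected by the predicate.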

Lastly, in step 7, we filtered out all publications that did not explicitly mention advantages or disadvantages of model transformation languages by reading the full text of all publications. This step was introduced to filter out the noise that arose from a broader search string and less restrictive inclusion criteria (see Sect. 3.3). The remaining 58 publications form the final set on which data synthesis was performed. (A list of all included publications with a unique assigned ID can be found in “Appendix B”.)

3.3 Selection criteria

We decided that a publication is marked as relevant if it satisfies at least one inclusion criterion and does not satisfy any exclusion criterion. The inclusion criteria were chosen to include as many papers that potentially contain advantages or disadvantages as possible. A publication was included if:

- IC1: The publication introduces a model transformation language.

- IC2: The publication analyses or evaluates properties of one or multiple model transformation languages.

- IC3: The publication describes the application of one or multiple model transformation languages.

IC1 is an inclusion criterion, because the introduction of a new language should include a motivation for the language and possibly even a section on potential shortcomings of the language. Such shortcomings can be attributed either to the design of the language or to the concept of model transformation languages as a whole.

A publication that is covered by IC2 can help answer both RQ1 and RQ2 depending on the analysed/evaluated properties.

IC3 forms our third inclusion criterion since experience reports can be a good source for both strengths and weaknesses of any applied technique or tool.

Our exclusion criteria were:

- EC1: Publications written in a language other than English.

- EC2: Publications that are tutorial papers, poster papers or lecture slides.

- EC3: Publications that are a Doctoral/Bachelor/Master thesis.

EC1 ensures that the scientific community is able to verify our extracted data from publications.

Because tutorial papers, poster papers and lecture slides are less reliable and do not provide enough information to work with, they are excluded with EC2.

Lastly, to reduce the required workload, we excluded all thesis publications with EC3 as full text reviews would take up too much time. We also argue that relevant thesis findings are most likely also published in journal or conference papers.
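The selection rule (at least one inclusion criterion, no exclusion criterion) can be expressed as a simple predicate. The sketch below assumes that the properties relevant to IC1 to IC3 and EC1 to EC3 have already been judged by a reviewer and are stored as fields of a record; the field names and the `kind` values are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Publication:
    introduces_mtl: bool = False   # IC1
    evaluates_mtl: bool = False    # IC2
    applies_mtl: bool = False      # IC3
    in_english: bool = True        # EC1 applies if False
    kind: str = "paper"            # EC2 and EC3 apply to the kinds listed below

EXCLUDED_KINDS = {"tutorial", "poster", "slides", "thesis"}

def is_relevant(pub):
    """Relevant if at least one inclusion and no exclusion criterion is satisfied."""
    meets_inclusion = pub.introduces_mtl or pub.evaluates_mtl or pub.applies_mtl
    meets_exclusion = (not pub.in_english) or pub.kind in EXCLUDED_KINDS
    return meets_inclusion and not meets_exclusion

language_paper = Publication(introduces_mtl=True)
thesis = Publication(evaluates_mtl=True, kind="thesis")
unrelated = Publication()
```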

3.4 Quality assessment checklist and procedures

Assessing the quality of publications found during the selection process is an essential part of a literature review [14].

For that reason, we adopted a list of six quality attributes for studies. The quality attributes (seen in Table 1) are taken from Shevtsov et al. [57], who adapted quality criteria from Weyns et al. [64]. Each quality item has a set of three characteristics, each of which corresponds to a value between 0 and 2. The quality score of a publication is calculated by summing up the values assigned for each quality item, making 12 the maximum quality score for a publication. The quality score did not influence the decision to include or exclude a publication.
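The score computation can be sketched as follows, assuming that each of the six quality items receives exactly one value between 0 and 2, which is the reading consistent with the stated maximum of 12. The function name and the example values are hypothetical.

```python
QUALITY_ITEM_COUNT = 6
MAX_VALUE_PER_ITEM = 2

def quality_score(item_values):
    """Sum the values (0, 1 or 2) assigned to the six quality items."""
    if len(item_values) != QUALITY_ITEM_COUNT:
        raise ValueError("expected one value per quality item")
    if any(v not in range(MAX_VALUE_PER_ITEM + 1) for v in item_values):
        raise ValueError("each value must be 0, 1 or 2")
    return sum(item_values)

# Hypothetical assessment of a single publication.
score = quality_score([2, 2, 1, 1, 0, 2])
max_score = QUALITY_ITEM_COUNT * MAX_VALUE_PER_ITEM
```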

3.5 Data extraction strategy

Based on our research questions, and general documentation concerns, we devised a total of eight data items to extract from each selected publication. Table 2 lists all extracted data items.

Data items D1–D3 are recorded for documentation purposes.

To gather explicitly claimed advantages and disadvantages of model transformation languages, D4 and D5 are necessary items to include.

Another goal of our literature review is to find out which advantages or disadvantages are empirically verified. It is therefore necessary to extract information about whether empirical evidence exists and which advantage or disadvantage it is concerned with (D6). Similarly, citations used to back up claimed advantages or disadvantages are also documented (D7). Our goal is both to track down references that provide evidence and to find the sources of common claims about advantages and disadvantages of model transformation languages.

Lastly, in order to evaluate the quality of publications the quality score D8 for each publication is recorded.

All data items were extracted during full text reviews of all selected publications.

3.6 Synthesis procedures

The synthesis of the collected data was split into multiple parts with multiple results for each research question.

3.6.1 RQ1: What advantages and disadvantages of model transformation languages are claimed in the literature?

The first part of the synthesis for RQ1 was a simple collection of all claimed advantages and disadvantages. This was done in order to create a basic overview.

Next, an analysis of all collected items was performed in order to devise categories for the advantages and disadvantages. To develop categories, we used initial coding and focused coding as described by Charmaz [19]. First, all claims were analysed claim by claim to extract common phrases or similar topics. These were then used to group together claims and to develop descriptive terms, which then served as the names of the categories formed by the grouped claims. The categories themselves were split into a positive section and a negative section to contrast negative and positive mentions with each other.

Using the devised categorization allows for quick identification of contradictory claims. Such claims then have to be further analysed in terms of origin, context and supporting evidence.

3.6.2 RQ2: What advantages and disadvantages of model transformation languages are validated through empirical studies or by other means?

To analyse evidence of claimed advantages and disadvantages, we started by assessing the quality of each respective publication using the quality score system from Sect. 3.4.

Afterwards, we devised a visual representation for claims and evidence thereof in publications. The representation allows a straightforward identification of substantiated and unsubstantiated claims and tracking of citations back to the origin of cited claims. This in turn enabled us to easily identify whether citations back up stated claims or serve as nothing more than a reference to a publication which claims the same thing.
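The tracking of citations back to their origin can be thought of as following a chain of references until either evidence or a dead end is reached. The sketch below illustrates this idea for a single claim; the data layout (one support entry per publication) and the publication IDs are hypothetical simplifications and do not reproduce the visual representation used in this study.

```python
def trace_claim(publication, support):
    """Follow citations for one claim; return the chain and whether evidence was found.

    `support` maps a publication to ("evidence", description),
    ("citation", cited_publication) or None if the claim is unsupported.
    """
    chain = [publication]
    visited = {publication}
    while True:
        entry = support.get(chain[-1])
        if entry is None:
            return chain, False          # the claim is merely stated
        kind, target = entry
        if kind == "evidence":
            return chain, True           # the chain ends in actual evidence
        if target in visited:
            return chain, False          # circular citation, no evidence
        chain.append(target)
        visited.add(target)

# Hypothetical support data: X cites Y, Y cites Z, Z only states the claim.
support = {"X": ("citation", "Y"), "Y": ("citation", "Z"), "Z": None,
           "V": ("citation", "W"), "W": ("evidence", "experiment")}
unsupported_chain = trace_claim("X", support)
supported_chain = trace_claim("V", support)
```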

4 Findings

In this section, we provide a summary of the synthesized data as well as an analysis of the demographics and quality of publications. The summary will be in narrative form, supported by plots and graphs as suggested by Booth, Sutton and Papaioannou [14]. Before describing our findings with regard to the research questions from Sect. 3.1, we first offer statistics and information about the demographic data of the collected literature as well as an overview of their quality, which we assessed using the quality criteria from Sect. 3.4.

4.1 Demographics

Figure 4 provides an overview over the quantity of included publications per year. An interesting thing to note is that it took only two years from the introduction of the Model-Driven Architecture in 2001 to the first mentions of advantages of model transformation languages. One of the most cited papers about model transformations in our literature review was published that year too (P63). Its title shapes introductions of publications in the community even today: Model transformation: The heart and soul of model-driven software development.

Scrutinizing claims about MTLs, however, just recently started to be a focus of research, with the first study (P59) dedicated to evaluating advantages of MTLs being published in 2018. To us, this suggests that research might be slowly catching on to the fact that evaluation of specific properties of MTLs is necessary instead of relying on broad claims. Simply relying on the fact that model transformation languages are DSLs and that DSLs in general fare better compared to non-domain-specific languages [12, 28, 40] is not enough.

Industrial case studies about the adoption of MDSE were performed much earlier than 2018, but such studies mainly focus on the complete MDSE workbench and do not analyse the impact of the used MTLs in great detail. The case study P670, for example, while stating that “The technology used in the company should provide advanced features for developing and executing model transformations”, does not go into detail about either current shortcomings or any other specifics of the model transformation languages used during the development process.

Overall, there are 32 publications that mention advantages and 36 publications that mention disadvantages. Moreover, four publications provide empirical evidence for either advantages or disadvantages, while 12 publications use citations to support their claims and 14 publications use other means such as examples and experience (more on this in Sect. 4.4).

Lastly, Table 3 shows which transformation languages were directly involved in publications used in our data extraction. We counted a transformation language as being involved if it was used, analysed or introduced in the publication. Simply being mentioned during enumerations of example MTLs was not sufficient.

The table paints an interesting picture. ATL far exceeds all other model transformation languages in involvement, and most languages are only discussed in a single publication.

4.2 Quality of publications

The results from the quality assessment, summarized in Fig. 5, show that both the problem context and definition as well as the overall contributions are well defined in a majority of publications. Insights drawn from the work described in these publications, while less comprehensive in many cases, are also described most often. However, thorough descriptions of the research design, the used methods or the steps taken are less common, a trend which is even more prominent for the presentation and discussion of limitations that affect the studies. Similar observations have already been made by other literature reviews in different domains [26, 57].

4.3 RQ1: Advantages and disadvantages of model transformation languages

We used data items D4 and D5 to answer our first research question, namely which advantages or disadvantages of dedicated model transformation languages are claimed in the literature. The resulting statements were sorted into 15 different categories (seen in Fig. 6), which arose naturally from the collected statements. An overview of all claims sorted into the different categories is given in Table 4. The table assigns each claim a unique ID (Cxx) for reference throughout this work. The table also contains the evidence used to support a claim (if existent), to which we will come back later in Sect. 4.4. For almost all categories, there exist publications that describe model transformation languages as being advantageous as well as publications that describe them as disadvantageous in the category. In the following, we discuss the statements made in publications for each category.

4.3.1 Analysability

Throughout our gathered literature, there is only one publication, P45, that mentions analysability. According to its authors, a declarative transformation language comes with the added advantage of being automatically analysable, which enables optimizations and specialized tool support (C1). While the publication does not discuss this claim in detail, the authors provide examples of how static analysis allows the engine to implicitly construct an execution order. While our literature review found only a single publication that explicitly mentions analysability as an advantage of model transformation languages, there do exist multiple publications [2, 3, 63] that contain analysis procedures for model transformations.

4.3.2 Comprehensibility

Comprehensibility is a much disputed and multifaceted issue for model transformation languages. A total of eleven publications touch on several different aspects of how the use of MTLs influences the understandability of written transformations.

The first aspect is the use of graphical syntax compared to a textual one, which is typically used in general-purpose programming languages. In P63, the authors talk about “perceived cognitive gains” of graphical representations of models when compared to textual ones (C6). This is echoed in P43, which states that graphical syntax for transformations is more intuitive and beneficial when reading transformation programs (C2).

While all these claims about graphical notation increasing the comprehensibility of transformations stand undisputed in our gathered literature, there are other facets in which graphical notation is said to be disadvantageous. We will come back to them later on in Sect. 4.3.5.

Declarative textual syntax is another commonly used syntax for defining model transformations. The authors of P45 contend that a declarative syntax makes it easy to understand transformation rules in isolation and combination (C4). However, declarative transformation languages are typically based on graph transformation approaches, which can become complex and hard to read according to P70 (C13). The authors of P70 additionally assert that the use of abstract syntax hampers the comprehensibility of transformation rules (C12). Furthermore, P22 insists that the use of graph patterns results in only parts of a meta-model being revealed in the transformation rules and that current transformation languages exhibit a general lack of facilities for understanding transformations (C8). P22 also reports that understanding transformations in current model transformation languages is hampered especially by the fact that many of the involved artefacts, such as meta-models, models and transformation rules, are scattered across multiple views (C9). P29 brings forward the concern that large models are also a factor that hampers comprehensibility, since there exist no language concepts to master this complexity (C11). Adding to this point, P27 describes that for non-experts (e.g. stakeholders) transformations written in a traditional model transformation language are “very complex to understand” because they lack the necessary skills (C10). The authors of P95, on the other hand, claim that the usage of dedicated MTLs, which incorporate high-level abstractions, produces transformations that are more concise and more understandable (C7). This sentiment is shared in P44, which explains the belief that using GPLs for defining synchronizations brings disadvantages in comprehensibility compared to model transformation languages (C3).

Understanding a transformation requires, among other things, understanding which elements are affected by it and in which context a transformation is placed. Using a model transformation language is beneficial for this as shown in the study described in P59 (C5).

4.3.3 Conciseness

Interestingly, there seems to be a consensus on the conciseness of model transformation languages compared to GPLs.

In general, dedicated model transformation languages are seen as more concise (P63 C17, P95 C21) which, apart from textual languages, is also stated for graphical languages in P75 (C18).

The claim that the higher level of abstraction of MTLs makes them more concise, and thus better, appears multiple times, namely in P80 (C19), P52 (C15), P3 (C14) and P95 (C20), while P673 claims that the abstraction in MTLs helps to reduce their overall complexity (C22).

The SLOC metric has also been drawn upon as a way to compare MTLs with other MTLs and even GPLs. According to an experiment described in P59, using a rule-based model transformation language reduces the transformation code by up to 48% (C16). Whether or not this is any indication of superiority is a disputed subject [9].

4.3.4 Debugging

Debugging support is much less disputed than comprehensibility. Of the five publications that talk about debugging in model transformation languages, none praise the current state of debugging support.

P22 (C24, C25) and P90 (C27) both describe that currently no sufficient debugging support exists for MTLs. And while P95 states that debugging of transformations in a dedicated language is likely better than when the transformation is written in a general-purpose language (C23), the authors fail to bring forth a single example for their assertion.

Lastly, the authors of P45 laud declarative syntax for its benefit in comprehension but also note that imperative syntax is easier to debug in general (C26).

4.3.5 Ease of writing a transformation

The main purpose of model transformation languages is to improve the ease with which developers are able to define transformations. Hence, this should also be a main benefit when compared to general-purpose languages. However, the authors of the study described in P59 found “no sufficient (statistically significant evidence) of general advantage of the specialized model transformation language QVTO over the modern GPL Xtend” (C39). This is not to say that there are none, as the authors admit the conclusions were “made under narrow conditions”, but it is still a concerning finding, all the more so because claims about such benefits of using MTLs persist through the literature. Claims such as those described in P29 (C29), P672 (C32) and P50 (C30) state that their simpler syntax makes it easier to handle and transform models. These claims draw from statements about the expressiveness, to which we will come in the next section, and reason that better expressiveness must lead to an easier time in writing transformations. A potential reason that hampers model transformation languages from evidentially being better for writing transformations is cited in P27 (C34) and P28 (C35). They both state that using a model transformation language requires skill, experience and a deep knowledge of the meta-models involved (P56 C38). In our opinion, however, this holds true regardless of the language used to transform models.

Moreover, many model transformation languages use declarative syntax which, according to P45 (C37) and P63 (C40), can be unfamiliar to many programmers, who are much more familiar with the status quo, i.e. imperative languages. The authors of P22, on the other hand, state that imperative MTLs often require additional code since many issues have to be accomplished explicitly compared to implicitly in declarative languages (C33).

Lastly, graphical syntax is said to make writing model transformations easier as the syntax is purported to be more intuitive for this task compared to a textual one in P3. In P43 (C36) and P672 (C41), however, the authors claim that graphical syntax can be complicated to use and that textual syntax is more compact and does not force users to spend time to beautify the layout of diagrams.

4.3.6 Expressiveness

As described in Sect. 2.2, the idea behind domain-specific languages is to design languages around a specific domain, thus making them more expressive for tasks within the domain [50]. Since model transformation languages are DSLs, it should not be a surprise that their expressiveness in the domain of model transformations is mentioned almost exclusively positively by a total of 19 different publications found in our literature review.

A large portion of the publications that refer to expressiveness (P95, P80, P94, P63, P15, P40, P52, P70) state that the higher level of abstraction that results from specific language constructs for model manipulation increases the conciseness and expressiveness of MTLs. P80 additionally asserts that model transformation languages are just easier to use (C61).

Another portion (P2, P15, P45, P677, P27, P63, P95, P27) explains that the expressiveness is increased by the fact that model transformation engines can hide complexity from the developer. One such complex task is pattern matching and the source model traversal as mentioned in P2 (C42), P15 (C43) and P45 (C53), respectively. According to them, not having to write the matching algorithms increases the expressiveness and ease of writing transformations in MTLs. Implicit rule ordering and rule triggering is another aspect that P15 (C46), P45 (C51) and P677 (C65) claim increases the expressiveness of a transformation language. Related to rule ordering is the internal management and resolution of trace information which is stated by P15 (C44), P45 (C50), P677 (C65) and P95 (C64) to be a major advantage of model transformation languages. Furthermore, P45 asserts that implicit target creation is another expressiveness advantage that MTLs can have over general-purpose languages (C52). Lastly, the study described in P59 observed that copying complex structures can be done more effectively in MTLs (C56).
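To make the notion of hidden complexity more tangible, the following minimal Python sketch shows the bookkeeping that a developer would have to write by hand in a GPL: source model traversal, matching, target creation and trace resolution. All names (`Clazz`, `Table`, `transform`) are hypothetical and are not taken from any of the reviewed publications; the sketch only assumes that an MTL engine would perform these steps implicitly.

```python
from dataclasses import dataclass


@dataclass
class Clazz:
    """Hypothetical source model element."""
    name: str
    persistent: bool
    parent: "Clazz | None" = None


@dataclass
class Table:
    """Hypothetical target model element."""
    name: str
    parent: "Table | None" = None


def transform(classes):
    """Map every persistent class to a table and mirror parent links."""
    trace = {}  # explicit trace links: id(source element) -> target element

    # Pass 1: hand-written traversal, matching and target creation.
    for c in classes:
        if c.persistent:  # the "pattern" that selects source elements
            trace[id(c)] = Table(name=c.name.lower())

    # Pass 2: hand-written trace resolution to connect the created targets.
    for c in classes:
        if id(c) in trace and c.parent is not None and id(c.parent) in trace:
            trace[id(c)].parent = trace[id(c.parent)]

    return [trace[id(c)] for c in classes if id(c) in trace]
```

The two passes and the `trace` dictionary correspond to the aspects named above (traversal and matching, target creation, trace management); whether hiding them actually improves expressiveness is exactly the kind of claim that the reviewed publications leave largely unsubstantiated.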

However, we also uncovered some shortcomings in current syntaxes. P10 argues that the lack of expressions for transforming a single element into fragments of multiple targets is a detriment to the expressiveness of transformation languages, going as far as to allege that without such constructs model transformation languages are not expressive enough (C68). P32 implies that MTLs are unable to transform OCL constraints on source model elements to target model elements (C69). And lastly P33 critiques that model transformation languages lack mechanisms for describing and storing information about the properties of transformations (C70).

4.3.7 Extendability

Being able to extend the capabilities of a model transformation language seems to be less of a concern to the community. This can be seen by the fact that only P50 touches this issue. They explain that external MTLs can only be extended (“if at all”) with a specific general-purpose language (C71). Internal model transformation languages of course do not suffer from this problem since they can be extended using the host language [21, 32, 46].

4.3.8 Just better

Apart from specific aspects in which the literature ascribes advantages or disadvantages to model transformation languages, there are also several instances where a much broader claim is made.

P86 for example states that there exists a consensus that MTLs are most suitable for defining model transformations (C78). This claim is also reiterated in several other publications using statements such as “the only sensible way” or “most potential due to being tailored to the purpose” (P9, P23, P63, P64, P66). However, one publication claims that both GPLs and MTLs are not well suited for model migrations and that instead dedicated migration languages are required (P34 C80).

4.3.9 Learnability

The learnability issues of tools have been shown to positively correlate with usability defects [1] and thus their general acceptance.

However, the learnability of model transformation languages is rarely discussed in detail. P30 (C81), P58 (C83) and P81 (C84) all express concerns about the steep learning curve of model transformation languages, and P52 explains that transformation developers are often required to learn multiple languages, which requires both time and effort (C82).

4.3.10 Performance

The execution performance of transformations is an important aspect of model transformations. Oftentimes, the goal is to trigger a chain of multiple transformations with each change to a model. Hence, good transformation performance is paramount to the success of model transformation languages.

Opinion on performance in the literature is divided. On the one hand, there are publications such as P52 (C88) and P80 (C89) which describe that the performance of dedicated MTLs is worse than that of compiled general-purpose programming languages, while on the other hand there is P95 which states that some introduced transformation languages are more performant (C85), citing articles from the Transformation Tool Contest (TTC), and P675 which shows a performance comparison of transformations written in Java and GrGen where GrGen performs better than Java (C86). There are also more nuanced views on the subject. P45 describes that practitioners sometimes perceive the performance as worse and that there exist factors that hamper the performance (C87). The listed factors are the fact that the transformation languages are often interpreted, a mismatch with hardware and less control over the algorithms that are used. However, they also describe that specialized optimizations can bridge the performance gap.

4.3.11 Productivity

Increased productivity through the use of DSLs is a much cited advantage [50] (C6D). Unsurprisingly, it resurfaces in various forms in the context of model transformation languages as well. For instance, in P45 it is described that the use of declarative MTLs improves the productivity of developers (C91). P29 goes even further, claiming that the use of any model transformation language results in higher productivity (C90).

This is contrasted by the hypothesis that productivity in general-purpose programming languages might be higher due to the fact that it is easier to hire expert users, which was put forward in P59 (C93). Lastly, P32 raises the concern that some of the interviewed subjects perceive model transformation languages as not effective, i.e. not helpful for the productivity of developers (C92).

4.3.12 Reuse and maintainability

In our gathered literature, maintainability is used as a motivation for modularization and reuse concepts. P29, P60 and P95 all claim that reuse mechanisms are necessary to keep model transformations maintainable. Combined with a total of eight publications (P4, P10, P29, P33, P41, P60, P95, P78) that state that reuse is hardly, if at all, established in current model transformation languages, this paints a bleak picture for both maintainability and reuse. The need for reuse mechanisms has already been recognized in the research community, as stated by P77, in which the authors explain that a plethora of mechanisms have been introduced (C95) but are hindered by several barriers such as insufficient abstraction from meta-models and platform or missing repositories of reusable artefacts (C103).

There exists only a single claim that directly addresses maintainability. P44 states that bidirectional model transformation languages have an advantage when it comes to maintenance (C94).

Apart from the maintainability of written code, there is also the maintainability of languages and their ecosystems. Surprisingly, this is hardly discussed in the literature at all. Only P52 explains that evolving and maintaining a model transformation language is difficult and time-consuming (C101).

4.3.13 Semantics and verification

Three publications (P39, P23, P58) all suggest that most model transformation languages do not have well-defined semantics which in turn makes verification and verification support difficult (P22 C109). P44, however, explains that bidirectional transformations are advantageous with regards to verification (C107).

4.3.14 Tool support

Tools are another important aspect in the MDE life cycle according to Hailpern and Tarr [28]. They are essential for efficient transformation development. Regrettably, MTLs lack good tool support according to P23, P45, P52 and P80 and if tools exist, they are not close to as mature as those of general-purpose languages as stated in P74 (C119). Additionally, the authors of P94 explain that developers of MTLs need to put extra effort into the creation of tool support for the language (C121). This might, however, be worthwhile, because P44 presumes that dedicated tools for model transformation languages have the potential to be more powerful than tools for GPLs in the context of transformations (C114). And due to the high analysability of MTLs, P45 explains that tool support could potentially thrive (C115). Internal MTLs, on the other hand, are able to inherit tool support from their host languages as reported by P23 (C113). This helps to mitigate the overall lack of tool support, at least for internal MTLs.

An interesting discussion to be held is how important tool support for the acceptance of MTLs actually is. Whittle et al. [65] describe that organizational effects are far more impactful on the adoption of MDE, while the results of Cabot and Gérard [16] contradict this observation citing interviewees from commercial tool vendors that stopped the development of tools due to lack of customer interest.

4.3.15 Versatility

It should be self-evident that languages that are designed for a special purpose do not possess the same level of versatility and area of applicability as general-purpose languages. Hence, it is not surprising that all mentions of versatility of model transformation languages in our gathered literature paint MTLs as less versatile compared to GPLs (P52 (C124), P80 (C125), P94 (C127)).

4.4 RQ2: Supporting evidence for advantages and disadvantages of MTLs

We found a number of different ways used by authors of our gathered literature to support their assertions. The largest portion of “supporting evidence” is made up of cited literature, i.e. a claim is followed by a citation that supposedly supports the claim.

The second way claims are supported is by example, i.e. authors implemented transformations in MTLs and/or GPLs and reported on their findings. Another aspect of this is relying on experience, i.e. authors state that from experience it is clear that some pronouncement is true or that it is a well-established fact within the community that a claim is true.

Third, there is empirical evidence, i.e. studies designed to measure specific effects of model transformation languages or case studies designed to gather the state of MTL usage in industry.

Last, there are those assertions that are not supported by any means. Authors simply suggest that an advantage or disadvantage exists. We assume that some claims made in this way implicitly rely on experience but do not state so. Nevertheless, since there is no way of testing this assumption we have to record such claims exactly the way they are made, without any evidence.

In the following sections, we will talk in detail about how each group of evidence is used in the literature to support claims about advantages or disadvantages of model transformation languages. As mentioned previously, Table 4 contains a complete overview over each claim and through what evidence the claim is supported.

4.4.1 Citation as evidence

Using citations to support statements is a core principle in research. It should therefore come as no surprise that citations are used to support claims about model transformation languages. An interesting aspect to explore for us was to trace how the cited literature supports the claim. For that, as stated in Sect. 3, we created a graphical representation to trace citations used as evidence through literature. The graph is shown in Fig. 7. It is inspired by UML syntax for object diagrams. The head of an “object” contains a publication id, while the body contains the categories for which advantages (+) or disadvantages (–) are claimed in the publication. Each category within the body is accompanied by an ID which can be used to find the corresponding claim within Table 4. We use different borders around publications to denote the type of evidence provided by the publication and arrows from one category within a publication to a different publication stand for the use of a citation to support a claim. Lastly, if the content of a publication does not concern itself with model transformation languages but instead with DSLs, the publication id is followed by “(DSL)”.

Our graph makes it easy to gauge information about the following things:

- What publication claims an advantage or disadvantage of MTLs in which category?
- What type of evidence (if any) is used to support claims in a publication?
- Which exact claims are supported through the citation of what publication?

In the following, we discuss observations about citations as evidence that can be made with help from the citation graphs.

First, only a total of 25 citations, split among 12 out of the 58 gathered publications, are used to support claims. This constitutes less than ten percent of all assertions found during our literature review. Seven of the 25 citations cite a publication that itself only states claims without any evidence thereof (P63, P94, P673, P674, P800). A further 11 end in a publication that uses examples or experience (see also Sect. 4.4.3) (P664, P665, P667, P671, P672, P676, P77, P64, P804, P801). Next, there are 3 citations that cite publications which in turn cite further publications to support their claims (P677, P675), leaving only 4 citations that cite empirical studies (P669, P670, P803) (see also Sect. 4.4.2). To us, this is worrying because the practice of citing literature that only restates an assertion corrodes the confidence readers can have in citations as supporting evidence.
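The breakdown of the 25 citations can be checked with a few lines of Python. The counts are taken from the paragraph above; the dictionary keys and variable names are ours and serve illustration only.

```python
# Number of citations by the kind of publication they end in (from the text).
citation_targets = {
    "claims without evidence": 7,
    "examples or experience": 11,
    "further citations": 3,
    "empirical studies": 4,
}

total = sum(citation_targets.values())

# Share of each kind among all citations, in whole percent.
shares = {kind: round(100 * n / total) for kind, n in citation_targets.items()}

for kind, share in shares.items():
    print(f"{kind}: {citation_targets[kind]} of {total} ({share}%)")
```

Under these counts, fewer than one in five citations used as evidence leads to an empirical study.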

The graph shows that there exists no single commonly cited source for claims about model transformation languages. Only five publications (P63, P77, P673, P675, P803) are cited more than once, each exactly twice. Moreover, of those five publications, P675 and P803 are each cited by only a single publication: P675 is cited twice by P80 and P803 twice by P675. Relatedly, nearly every claim, even within the same category, is supported through different citations.

Furthermore, only claims about conciseness, expressiveness, reuse & maintainability, tool support, performance and statements that MTLs are just better are supported using citations. It is interesting to note that claims within these categories which are supported by citations are either all positive or all negative. This is not to say that there are no contrasting claims, see for example C113 and C116 in P23, only that, if citations are used for a category the supported claims are either all positive or all negative.

Another thing to note is that in some instances claims about model transformation languages are being supported by citing publications on domain-specific languages in general. This can be seen in P80. The claims C60 and C61 are both supported by a citation of P675, which is a publication that concerns itself with DSLs. Interestingly, P675 itself then cites both publications about DSLs (P800, P801, P803) and a publication about model transformation languages (P804) to support claims stated within the publication.

Coming back to citations of empirical studies, we have to report that while there exist 4 citations of empirical studies only a single claim about model transformation languages (C116 in P23) is actually supported thereby. This is due to P803 being an empirical study about DSLs and P669 and P670 both being cited as evidence for C116.

Lastly, apart from those publications that only make a single claim, no publication supports all their claims using citations. Extreme cases of this can be seen in P45 and P52 which make a total of 16 claims, only supporting three of them with citations while leaving the other 13 unsubstantiated.

4.4.2 Empirical evidence

To our disappointment, we have to report a lack of overall empirical evidence for properties of model transformation languages. Only four publications (P32, P59, P669, P670) in our gathered literature assess characteristics of model transformations using empirical means (see Fig. 7 and Table 4). Of those four, only P59 focuses on MTLs as its central research object, while the other three are case studies about MDA that happen to contain results about transformation languages. P803 too is an empirical study, but as mentioned in Sect. 4.4.1 focuses on domain-specific languages in general not on MTLs. In order to provide the necessary context for scrutinizing the claims extracted from the publications, we provide a short overview over the central aspects of P32, P59, P669, P670 in the following.

The study described in P59 comprised a large-scale controlled experiment with 78 subjects from two universities as well as a preliminary study with a single individual. Subjects had to solve 231 tasks using three different languages (ATL, QVT-O and Xtend). The tasks focused on one of three aspects in transformation development, namely comprehending an existing transformation, changing a transformation and creating a transformation from scratch. After analysing the results, the authors come to the disillusioning conclusion that there is “no statistically significant benefit of using a dedicated transformation language over a modern general-purpose language”.

The authors of P32 report on an empirical study on the efficiency and effectiveness of MDA. A total of 38 subjects, selected from a model-driven engineering course, were asked to implement the book-purchasing functionality of an e-book store system. Afterwards, the subjects evaluated the perceived efficiency and effectiveness of the used methodology. This also included questions about the used QVT language which was perceived as only marginally efficient.

Both P669 and P670 are reports of industrial case studies. The objective of the study in P669 was to investigate the state of practice of applying MDSE in industry. To achieve this, the authors collected data from tool evaluations, interviews and a survey. Four different companies were consulted to collect the data. Again, while some reported results concerned themselves with transformations, model transformation languages were not explicitly discussed. Similarly, P670 reports on an industrial case study involving two companies aiming to collect factors that influence the decision to adopt MDE. For that purpose, multiple preselected individuals at both companies were interviewed. Just as in P669, the study did not directly focus on transformations or transformation languages.

As evident from Fig. 7, the results from P32 and P59 have yet to be used in the literature for supporting claims about MTLs. Since both of them have only been published recently, we are, however, optimistic about this prospect.

4.4.3 Evidence by example/experience

Using examples to demonstrate shortcomings of any kind has a long-standing tradition not only in informatics. Using examples to demonstrate an advantage, however, can result in less robust claims (especially with toy or textbook examples; see Shaw [56]). As such, it is important to differentiate whether a claim is made by demonstrating a shortcoming or a benefit.

In our gathered literature, ten publications use examples to support a claim. Interestingly, examples are mainly used to support broad claims about model transformation languages. This can be observed by the fact that P34 and P64 use examples to try and demonstrate that GPLs are not well suited for transforming models, while P664, P665, P667, P672, P804 and P676 try to demonstrate the general superiority of MTLs by showing examples of transformations written in MTLs. Other claims that are supported through examples are a demonstration of the reduction in code size when using rule-based MTLs in P59 and statements about the extensive amount of reuse mechanisms for MTLs through listing gathered publications about the proposed mechanisms in P77.

Long-time practitioners of model transformation languages or programming languages in general often rely on their experience to make assertions about aspects of the language. And while the experience of long-term users can create valuable insights, it is still subjective and can therefore vary in accuracy. In our case, six publications directly state that their assertions come from experience. P3 report on their experiences using different languages to implement transformations, coming to the conclusion that graphical rule definition is more intuitive, an experience shared by P40. P43 name user feedback as grounds for claiming that visual syntax has advantages in comprehension but makes writing transformations more difficult. And P672 share that they are under the impression that graph transformations are the superior method for defining refactorings.

Since experience is subjective, contradicting experiences are bound to occur at some point. While the authors of P10 believe from experience that current MTLs are not abstract enough for expressing transformations, the authors of P671 feel that the difficulty of writing transformations in an MTL stems from the chosen MDD method rather than the syntax of the language.

4.4.4 No evidence

Figure 7 and especially Table 4 make it clear that a large portion of both positive and negative claims about model transformations are never substantiated. In fact, of the 127 claims ~69% are unsubstantiated. Adding those that are supported by a citation that in the end turns out to be unsupported as well brings the number up to ~77%. Particularly, the categories concerning the usability of MTLs such as comprehensibility, ease of writing a transformation and productivity lack meaningful evidence. That all three of them are cornerstones of language engineers' arguments for the superiority of model transformation languages makes this especially worrisome.

We believe that a realization in the community about this fact is necessary. The necessity or superiority of model transformations has to be properly motivated. This means that it is not sufficient to claim advantages or disadvantages without providing at least some form of explanation on why this claim is valid (more on this in Sect. 5.3).

5 Discussion

In this section, we reflect on the previously presented findings. Our focus for this is fourfold. First, we feel it is necessary to draw parallels between our categorization and attributes of product quality. Next, we want to briefly discuss how claims are made in regards to transformation language features. Afterwards, a discussion about lack of empirical studies about properties of model transformation languages is warranted. And last we feel a discussion about the research direction for the community is also necessary.

5.1 Claims about model transformation languages in context of software quality

There are undeniable parallels between the categories we developed for claims and characteristics of software quality as defined by ISO/IEC 25010:2011 [35]. This can be seen by the fact that many of our categories can be directly placed within the characteristics of the software product quality model (namely functional suitability, performance efficiency, compatibility, usability, reliability, security, maintainability, portability).

Both expressiveness and semantics and verification are part of functional suitability. Performance and productivity can be classified under performance efficiency. Furthermore, comprehensibility, conciseness, debugging, ease of writing a transformation, learnability and tool support are part of usability. Maintainability covers analysability and reuse & maintainability. And lastly, extendability and versatility can be classified under portability. This leaves only our generic category just better without a corresponding characteristic, which is to be expected.

However, there are also compatibility, reliability and security which have no corresponding categories from our categorization. This does not necessarily mean that the current research is not focused on aspects related to these quality criteria. It instead suggests a lack of concrete statements regarding them. And while security is justifiably less of a concern for model transformation languages, both the compatibility of different approaches and their reliability should definitely be focused on (see also Sect. 5.4).

Lastly, even though most claims we collected during our review could be categorized within the software product quality model we opted to develop a classification based on the claims alone since we believe the resulting categories to be more specialized and allow for a more nuanced view on the subject matter than the generic characteristics defined by ISO/IEC 25010:2011 [35].

5.2 Claims about model transformation languages in context of language features

An effort by us to categorize the extracted claims along an existing taxonomy of model transformation language features such as the one by Czarnecki and Helsen [22] failed because a large portion of claims (~70%) are made broadly without reference to specific features of MTLs that aid the advantage or disadvantage.

We suggest that claims on benefits and disadvantages of model transformation languages be made more specific and include mentions of the features that aid or hamper the benefits. For example, incrementality aids the performance of model transformations since only parts of a transformation have to be re-executed and bidirectional transformation languages provide special support for incremental execution giving them an edge in performance.
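The incrementality part of this example can be sketched in a few lines of Python. The sketch is ours and purely illustrative: the `rule` function, the `IncrementalTransformation` class and the dictionary-based model are hypothetical, and no reviewed MTL is claimed to work this way. It only shows why re-executing rules for changed elements alone saves work; it does not model bidirectionality.

```python
def rule(element):
    """Hypothetical transformation rule: maps one source element to a target."""
    return {"table": element["name"].lower()}


class IncrementalTransformation:
    def __init__(self):
        self.targets = {}    # source key -> cached target element
        self.executions = 0  # number of rule applications, for illustration

    def run(self, model, changed=()):
        """Apply the rule only to new or changed source elements."""
        # Remove cached targets whose source element no longer exists.
        for key in list(self.targets):
            if key not in model:
                del self.targets[key]
        # Re-execute the rule only where the cached result may be outdated.
        for key, element in model.items():
            if key not in self.targets or key in changed:
                self.targets[key] = rule(element)
                self.executions += 1
        return self.targets


transformation = IncrementalTransformation()
model = {"a": {"name": "A"}, "b": {"name": "B"}, "c": {"name": "C"}}
transformation.run(model)                 # initial run: three rule applications
model["b"]["name"] = "Book"
transformation.run(model, changed={"b"})  # update: one further rule application
```

A claim phrased at this level of detail names the feature (incremental execution) and the mechanism (fewer rule applications per change), which makes it testable.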

5.3 Lack of evidence for MTL advantages and disadvantages

The current literature exhibits a deficit in evidence (empirical or otherwise) for asserted properties of model transformation languages. We believe there to be several factors which can explain this lack of evidence.

First, designing and conducting rigorous studies to examine model transformation languages requires a substantial amount of time and effort. Studies are further complicated by the lack of easily available study subjects due to the community being relatively small compared to the body of general-purpose programming language users. The study described in P59, for example, had to be conducted over the timespan of three semesters and at two universities just to attain 78 subjects. And even when a pertinent number of study subjects is found, ensuring comparable levels of experience within the subjects is another challenge, even more so when collaborating with industrial partners [58].

Relying on the fact that transformation languages are DSLs and hence bear all the benefits that are proclaimed for those might also be a factor. Describing the advantages of DSLs in the introduction of a paper about transformation languages is far from uncommon in the literature. And while we too believe that there are benefits when using DSLs, we would caution against broad usage of the fact that model transformation languages are DSLs to claim them advantageous over general-purpose languages (as is done in publications such as P29, P63 or P804), especially because the manpower that goes into the development of the ecosystems of GPLs far exceeds that of MTLs.

Another problem is that statements can become “established” facts by virtue of being cited by a paper which is in turn cited. Suppose one author claims that model transformation languages are more expressive than GPLs. A second author claims the same thing and references the first author to provide context. Next, a third author, assuming that the second author verifies their claim via the citation, cites the second author to support a similar claim. Over time, this can lead to the statement being treated as a fact rather than an assumption made multiple times. This can be seen on multiple occasions in Fig. 7. P63 makes an unsubstantiated claim (C57) that the expressiveness of MTLs is superior to that of GPLs. This claim is then reiterated by P677 (C65) citing P63. Lastly, P677 is cited by P45 to support their assertion about the expressiveness of model transformation languages (C50-53). Such a chain is not even the worst case in our results. The chain P80 \(\rightarrow\) P675 \(\rightarrow\) P801-804 is even more worrisome, in that some of the claims stated in P80 (C75) actually originate in claims about domain-specific languages from P675 (C1D). P80 claims two advantages of MTLs using P675 as reference. P675 again uses citations to support its claims. However, the papers cited by P675 do not make statements about model transformation languages but about DSLs in general. This shows how such chains can create a blurred factual picture. Moreover, in the presented cases it is still possible to find the origin of claims and realize how the claims were changed throughout the citation chains. If authors deem it unnecessary to support claims that are “established” facts, this is no longer possible. Quite likely this is the case for a non-negligible number of publications (see Table 4) where no citations or any other substantiation for claimed properties of MTLs is given.
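Tracing such a chain back to its origin amounts to following citation links until a publication is reached that cites nothing further. The following Python sketch illustrates this for the first chain discussed above, under the assumption that P677's citation points to the P63 claim that starts the chain. The dictionaries and the function `trace_origin` are hypothetical helpers and not part of our review tooling.

```python
# Claim-level citation links for the expressiveness chain (simplified):
# each publication maps to the publication it cites as support.
cites = {"P45": "P677", "P677": "P63"}

# Evidence offered by the publication at the end of the chain (from the text).
evidence = {"P63": "none"}


def trace_origin(publication, cites):
    """Follow citation links until a publication cites nothing further."""
    chain = [publication]
    seen = {publication}
    while chain[-1] in cites:
        nxt = cites[chain[-1]]
        if nxt in seen:  # guard against circular citations
            break
        chain.append(nxt)
        seen.add(nxt)
    return chain


chain = trace_origin("P45", cites)
origin = chain[-1]
print(" -> ".join(chain), "| evidence at origin:", evidence.get(origin, "unknown"))
```

The point of the sketch is that the strength of a cited claim is determined by the last element of the chain, not by the number of hops leading to it.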

As previously described, it is not uncommon for authors to ascribe properties to model transformation languages due to them being DSLs. However, a language does not necessarily have to be more expressive, easier to use or easier to maintain simply by being domain specific. In fact we believe that everything about a DSL stands and falls with the domain itself as well as the design of the language. As a result, all advantages and disadvantages for DSLs, described in the literature, only define potential properties. Thus, it is necessary to evaluate advantages and disadvantages anew for each domain, especially in complex domains such as model transformations.

5.4 Research direction

In our opinion, the research community has to acknowledge that the current way of language development is not expedient. There needs to be a shift away from constant development of new features and transformation languages with, at best, prototypical evaluation. Tomaž Kosar, Bohra and Mernik [44] share this sentiment after a mapping study on the development of DSLs in general (see Sect. 6).

Instead, it is necessary to extensively evaluate current transformation languages, first to identify their actual strengths and weaknesses and then to compare these results with the expected (and desired) results to determine which aspects of MTLs still need improving.

We believe the categories from Sect. 4 to be a good reference for possible areas to evaluate.

It is not necessary to evaluate each category empirically: For some categories, empirical evaluation might not be sensible at all. Such categories include analysability, and semantics and verification for example, since there exist no universally accepted measures to base evaluation on. Additional literature reviews are also conceivable. Analogous to how P77 gathered different reuse mechanisms, a comprehensive review of verification and analysis approaches can be useful to assess the analysability and verifiability of model transformation languages.

Designing and executing appropriate studies also entails significant effort which is why it becomes necessary to carefully weigh up which properties should be evaluated. Additionally, some categories should also be examined more urgently than others.

The ease of writing a transformation and comprehensibility are two such categories for which evaluation is most pressing. Suitable study designs can be drawn from the domain of programming languages (especially object-oriented programming), where many studies exploring comprehensibility and ease of use already exist, such as Burkhardt et al. [15], Rein et al. [54], and Kurniawan and Xue [47]. Study designs similar to the one described in P59 are in our opinion most suitable for this purpose. This is supported by the fact that many studies for comparing programming languages follow a similar structure, in that a common problem or task is solved in multiple languages and the resulting code is analysed [4, 30, 53]. It may also be useful to design the cases in such a way that the complete capabilities of the transformation languages under study have to be used. In the study described in P59, for example, advanced features such as QVT's late resolve were not part of the evaluation. Such a design can help to better understand whether the most “advanced” features of transformation languages have practical value and how complex the equivalent GPL code for these features is.

Comprehensibility can also be tested in isolation by requiring subjects to describe functionality of given transformations written in both a dedicated model transformation language and a GPL.

According to Mohagheghi et al. [51], one of the main motivations for adopting MDE in industry is to improve productivity; hence, we believe that evaluation of the productivity when using model transformation languages should be a focus too. Admittedly, measuring productivity is a challenging task, a fact that has been observed as early as 1978 [37]. Since then, however, numerous ways of measuring it have been proposed and tested in practice [10, 13], which should allow productivity studies on MTLs to be carried out. A potential study into productivity could require subjects to develop transformations in either a model transformation language or a general-purpose language within a certain time frame, followed by measuring and comparing how productive the subjects were in both cases. Researchers can also draw from the large corpus of productivity studies on different aspects of programming, such as Wiger and Ab [66], Frakes and Succi [25] and Dieste et al. [23].
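How the final comparison step of such a study might look can be sketched as follows. This is a minimal sketch under assumptions of our own: productivity is reduced to a single number per subject (for example tasks completed within the time frame), and the two groups are compared with an exact permutation test on the difference of means. Neither the measure nor the test is prescribed by the cited literature, and the function name `permutation_p_value` is hypothetical.

```python
from itertools import combinations
from statistics import mean
from typing import Sequence


def permutation_p_value(group_a: Sequence[float], group_b: Sequence[float]) -> float:
    """Exact two-sided permutation test on the absolute difference of group means.

    Enumerates every way of splitting the pooled scores into groups of the
    original sizes, so it is only practical for small groups.
    """
    pooled = list(group_a) + list(group_b)
    observed = abs(mean(group_a) - mean(group_b))
    n, k = len(pooled), len(group_a)
    extreme = 0
    total = 0
    for idx in combinations(range(n), k):
        chosen = set(idx)
        a = [pooled[i] for i in chosen]
        b = [pooled[i] for i in range(n) if i not in chosen]
        total += 1
        # Small tolerance guards against floating-point rounding in the means.
        if abs(mean(a) - mean(b)) >= observed - 1e-12:
            extreme += 1
    return extreme / total


# Placeholder scores for illustration only, not measured data.
mtl_scores = [1, 2]
gpl_scores = [3, 4]
print(permutation_p_value(mtl_scores, gpl_scores))
```

With realistic group sizes, the exhaustive enumeration would have to be replaced by random sampling of splits or by an established statistics package.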

The performance of model transformations can have a huge impact on development, especially when multiple transformations have to be executed in succession. Many language engineers already acknowledge this by providing performance comparisons between their languages and other MTLs or general-purpose languages such as Java [32, 46]. In addition, the Transformation Tool Contest (TTC) provides a venue for comparing MTLs. However, we believe extensive comparisons between the performance of model transformation languages and general-purpose programming languages to be necessary to abolish the prejudice that dedicated transformation languages cannot outperform current compilers. Comparison of performance between different programming languages that are used for the same purpose is a well-established practice, demonstrated by comparisons between Java and C++ for robotics programming done by Gherardi, Brugali and Comotti [27] or between C++ and F90 for scientific programming by Cary et al. [18]. Performance comparisons are also common practice in other domains such as GPU programming, where specialized DSLs are used and performance is of high importance (Karimi et al. [24]). It is conceivable to compare the performance of transformations written in dedicated MTLs and GPLs either by manually solving the same tasks as described previously or by using existing mechanisms (for example Calvar et al. [17]) for transforming transformation scripts written in an MTL into GPL code.
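A comparison of this kind needs a harness that runs both implementations on the same input, checks that they produce the same output model, and only then compares run times. The following is a minimal sketch of such a harness. The function `compare_runtimes`, the toy model format and the two stand-in implementations are hypothetical and do not correspond to any existing tool or benchmark. In a real study the callables would wrap an MTL engine and a hand-written or generated GPL program.

```python
import statistics
import time
from typing import Any, Callable, Dict, List

Model = List[Dict[str, Any]]


def compare_runtimes(
    impls: Dict[str, Callable[[Model], Model]],
    model: Model,
    repeats: int = 5,
) -> Dict[str, float]:
    """Return the median wall-clock time in seconds for each implementation."""
    # Timings are only comparable if all implementations solve the same task.
    outputs = [impl(model) for impl in impls.values()]
    if any(out != outputs[0] for out in outputs):
        raise ValueError("implementations disagree on the output model")

    timings: Dict[str, float] = {}
    for name, impl in impls.items():
        samples = []
        for _ in range(repeats):
            start = time.perf_counter()
            impl(model)
            samples.append(time.perf_counter() - start)
        timings[name] = statistics.median(samples)
    return timings


# Hypothetical stand-ins for "the same transformation written twice".
def handwritten_gpl(model: Model) -> Model:
    return [{"name": e["name"].upper(), "kind": "Table"} for e in model]


def generated_from_mtl(model: Model) -> Model:
    result = []
    for element in model:
        result.append({"name": element["name"].upper(), "kind": "Table"})
    return result


source_model = [{"name": "class" + str(i)} for i in range(1000)]
timings = compare_runtimes(
    {"gpl": handwritten_gpl, "mtl": generated_from_mtl}, source_model
)
print(timings)
```

The median is used instead of the mean to reduce the influence of outliers such as warm-up runs. A serious benchmark would additionally control for start-up cost, model loading and input size.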

We also believe that special focus needs to be given to the question of what model transformation languages are expected to achieve (such as easy synchronization of multiple artefacts or fast transformations through incremental transformations): first, because this allows more resources to be directed towards evaluating relevant aspects of MTLs; and second, because model transformation languages will appear more streamlined and mature when the focus of development lies in improving their core features instead of overloading them with “experimental” features. Tomaž Kosar et al. [44] likewise hold that this can enable practitioners to truly understand the effectiveness and efficiencies of DSLs.

6 Related work

To the best of our knowledge, there exists no other literature review that explores advantages and disadvantages of model transformation languages. There does, however, exist some literature that can be related to our work.

A closely related survey and open discussion about the future of model transformation languages was held by Cabot and Gérard [16]. They report on the results of an online survey and subsequent open discussion during the 12th edition of the International Conference on Model Transformations (ICMT’2019). The survey was designed to gather information about why developers use MTLs or why they hesitate to do so, and what their predictions about the future of these languages are. An open discussion was held after the results of the online survey were presented to the audience at ICMT’2019. The results of the study point towards MTLs becoming less popular not only because of technical issues but also due to tooling and social issues, as well as the fact that some GPLs have assimilated ideas from MTLs, which makes them more viable alternatives to writing transformations in dedicated languages.

Hutchinson et al. [34] conducted an empirical study into MDSE in industry. The authors used questionnaires and interviews to explore different factors that influence the success of MDSE in organizations and attempt to provide empirical evidence for hailed benefits of MDSE. They report on a total of over 250 questionnaire responses as well as interviews with 22 practitioners from 17 different companies. While the main focus of the study was on MDSE adoption in general, the authors do report on some findings regarding model transformations, such as negative influences of writing and testing transformations on the productivity and influences of transformations on the portability. However, no results regarding used transformation languages are included.

Mens and Gorp [49] propose a taxonomy for model transformation languages. They define groups of transformation languages based on answers to a set of questions. The answers are themselves split into multiple subgroups. The authors describe the different possible characteristics within the groups in great detail. In part, this also includes listings of properties for transformation languages that fall into specific groups. The authors, however, do not provide any evidence or more precise explanations for these properties. Similarly, Czarnecki and Helsen [22] propose a classification framework for model transformation approaches based on several approaches such as VIATRA, ATL and QVT. The framework is given as a feature diagram, which makes it possible to explicitly highlight different design choices for transformations. At the top level, the feature model contains features such as rule organization, incrementality, directionality and tracing. Each feature and its sub-components are extensively discussed and demonstrated with examples of transformation tools that exhibit different aspects of the features. In contrast to the two described classifications, our study categorizes claims about MTLs on a qualitative dimension rather than on language features.

Kahani et al. [39] describe a classification and comparison of a total of 60 model transformation tools. Their classification differentiates tools based on two levels. The first level describes whether the tool is a model-to-model (M2M) or model-to-text (M2T) tool. The second level differentiates M2M tools based on their transformation approach (relational, operational or graph-based) and M2T tools based on the underlying implementation approach (visitor-based, template-based or hybrid). Unlike our study, the described comparison focuses on comparing different model transformation tools on a technical basis using six categories (general, model level, transformation, user experience, collaboration support and runtime requirements), while we focus on qualitative aspects of claims made throughout the literature about any kind of dedicated model transformation language.

Van Deursen et al. [62] gathered an annotated bibliography on the premise of domain-specific languages versus generic programming languages. The bibliography contains 73 different DSLs differentiated by their application domains: Software Engineering, Systems Software, Multi-Media, Telecommunication and Miscellaneous. Additionally, they provide a discussion of terminology as well as risks and benefits of DSLs. And while parts of the listed risks and benefits such as enhanced productivity or cost of education can be found in the listed advantages and disadvantages of our literature review, their bibliography does not contain any model transformation languages.

Tomaž Kosar et al. [44] report on the results of a systematic mapping study they conducted to understand the DSL research field, to identify research trends and to detect open issues. Their data comprised a total of 1153 candidates which they condensed into 390 publications for classification. The results from the study corroborate observations made during our literature review. The research community is mainly concerned with the development of new techniques, while research into the effectiveness of languages and empirical evaluations is lacking.