Abstract

We propose a method for characterizing the local structure of weighted multivariate time series networks. We draw on the intensity and coherence of network motifs, i.e. statistically recurrent subgraphs, to characterize the system behavior via higher-order structures derived from effective transfer entropy networks. Effective transfer entropy is a model-free methodology that corrects for the small sample biases affecting Shannon transfer entropy and allows inference on the estimated directional information flows between time series. We demonstrate the usefulness of our proposed method with an application to a set of global commodity prices. Our main result shows that, although simple triadic structures are the most intense, coherent and statistically recurrent over time, their intensity suddenly decreases after the Global Financial Crisis, in favor of more complex triadic structures, while all types of subgraphs tend to become more coherent thereafter.


1 Introduction

Network models received early recognition as useful tools in the social science community, when Social Networks began to publish in 1978, and several articles on network analysis appeared in the Journal of the American Statistical Association directly after - see Fienberg (2012). Since then, the number of papers by social scientists and statisticians has been surpassed by those of computer scientists and statistical physicists. Nevertheless, the study of network connectedness is gaining much importance in statistics, econometrics and risk management (see Lauritzen and Wermuth 1989; Billio et al. 2012; Giudici and Spelta 2016; Bosma et al. 2019; Baruník et al. 2020; Chen et al. 2021; Han et al. 2021; Giudici et al. 2023).

Time series network models have become a cornerstone approach in the financial literature to study shock transmission across asset prices (see e.g. Billio et al. 2012; Pagnottoni and Spelta 2022; Pagnottoni 2023). Such models widen the category of statistical models applied in social sciences, and many of them respond to the need for modeling strategies that are capable of investigating nonlinear market interactions and responses to large, exogenous shocks such as climate and pandemic shocks (see e.g. Pagnottoni et al. 2021, 2022; De Giuli et al. 2022; Spelta and Pagnottoni 2021b). Amongst these, transfer entropy, which builds on Shannon entropy, has emerged as a fundamental tool for quantifying the statistical coherence between systems evolving in time (Schreiber 2000). From a statistical viewpoint, transfer entropy is regarded as a nonlinear generalization of Granger causality (Barnett et al. 2009). A number of papers show the exact equivalence between Granger causality and transfer entropy under given assumptions on the data generating processes: this makes it possible to view transfer entropy as a nonparametric test of pure Granger causality under certain conditions (Barnett et al. 2009; Hlavácková-Schindler 2011; Barnett and Bossomaier 2012). These topics drew the attention of many statisticians and empirical researchers, who have widely investigated properties and applications of transfer entropy - see, e.g. Gupta et al. (2007); Dimpfl and Peter (2013); Toomaj (2017); Caserini and Pagnottoni (2022). Particularly, Dimpfl and Peter (2013) examine the information flow between two time series by means of effective transfer entropy, a bias-corrected measure (Marschinski and Kantz 2002) which underpins our proposed method. Effective transfer entropy makes it possible to eliminate the small sample biases that affect traditional transfer entropy measures, and the bootstrap procedure of Dimpfl and Peter (2013) allows statistical inference on the estimated information flows.

Transfer entropy is a convenient metric to characterize the evolution of temporal networks, as it is a model-free approach able to detect statistical dependencies without being restricted to linear dynamics, and to construct adjacency matrices representing information flows across asset prices. In our paper, transfer entropy is the building block to derive time series networks. In particular, we consider networks whose nodes are time-varying random variables and whose edges are the mutual transfer entropy between these variables. This makes it possible to model the evolution of network linkages across financial assets over time and to analyze them through the lens of network theory.

In temporal networks, the notion of centrality measures (Newman et al. 2006; Newman 2018) makes it possible to rank nodes and links over time. This concept is of utmost importance, and is summarized by node degree and strength, i.e. the number of directly connected neighbours of a node, and the sum of the weights of its links. Despite their simplicity in terms of interpretation, degree, strength and similar centrality measures often stem from pairwise relationships among variables, disregarding the fact that interactions might simultaneously occur across a group of nodes. This is equivalent to saying that dyads are not always suitable to describe the interactions across nodes in a network.

Against this background, we characterize the local structure of weighted effective transfer entropy networks by means of statistically validated measures of intensity and coherence on their recurrent subgraphs. While “intensity” is defined as the geometric mean of link weights, “coherence” is defined as the ratio of the geometric to the corresponding arithmetic mean. These two measures generalize motif scores and clustering coefficients to weighted directed networks, which are suitable structures to analyze financial price connectedness and might considerably modify the conclusions obtained from the study of unweighted characteristics (Onnela et al. 2005). In other words, intensity and coherence allow us to identify statistically recurrent subgraphs, i.e. network motifs, their dynamic properties and significance over time, so as to characterize the system behavior via higher-order structures which might heavily drive time series co-movements.

We apply our methodology to study the interconnected dynamics of a set of 63 global commodity prices over a 30-year period ranging from January 1990 to May 2020. The application to the set of commodity prices is illustrative of the usefulness of the methodology in detecting “unexpected” or “anomalously exceptional” edges, i.e. connections between commodity prices. Our results show how different types of motifs occur at starkly diverse rates of intensity in the commodity transfer entropy network. Particularly, the intensity of simple triadic structures decreases in the aftermath of the Global Financial Crisis in favor of that of more complex ones, whereas the coherence of all types of subgraphs tends to increase. This suggests that, while on average link intensity decreases (increases) for simple (complex) subgraphs, the weights of links attached to nodes become internally more coherent, i.e. they become less heterogeneous as a consequence of such a shock.

Our study is cast in the network theory literature (Squartini and Garlaschelli 2012; Squartini et al. 2013) which concentrates on dyadic or, at most, triadic structures. The fundamental reason for this is that there are only 13 conceivable combinations of three nodes, whereas there are 64 if one is interested in 4-way motifs, which makes interpretation highly challenging. 4-way motifs are studied in different disciplines, such as genetics and chemistry. Only a portion of them is considered since, a priori, some 4-way motifs, such as the feed-forward, the bi-fan and the bi-parallel, are linked to particular roles of genes in regulatory networks or of cells in neuronal networks (Milo et al. 2002).

The remainder of the paper is structured as follows. Section 2 introduces the methodological framework. In Section 3 we develop our empirical application. Section 4 concludes.

2 Methodology

2.1 Effective transfer entropy

Shannon (1948) derived a measure of uncertainty by averaging, over all possible states of a random variable, the amount of information gained from observing each state. The formulation of transfer entropy in the context of time series is founded on Shannon entropy, and has been outlined by Schreiber (2000). Let us consider a discrete random variable X whose probability distribution is \(p_X (x)\), where x denotes the possible outcomes of X. The entropy formulated by Shannon (1948) corresponds to the average number of bits needed to optimally encode independent draws from the distribution of X, formally:

\[H_X = - \sum _{x} p_X (x) \, \log \, (p_X (x)) \tag{1}\]

where the sum goes over all possible states x the random variable X can assume, and \(\log (\cdot )\) represents the logarithm function, whose base varies across applications (base 2 is one of the most commonly used, and gives the unit of bits, or “Shannons”). From the interpretation viewpoint, Shannon’s entropy can be seen as the average level of uncertainty associated with the variable’s possible outcomes.
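As a small numerical illustration of Shannon's entropy, the following Python sketch evaluates it for a fair and a biased coin. The function name `shannon_entropy` and the example probabilities are ours and purely illustrative.

```python
import numpy as np

def shannon_entropy(probs, base=2.0):
    """Entropy -sum_x p(x) log p(x) of a discrete distribution (bits for base 2)."""
    p = np.asarray(probs, dtype=float)
    p = p[p > 0]  # convention: states with zero probability contribute nothing
    return float(-(p * np.log(p)).sum() / np.log(base))

# Illustrative distributions (not data from the paper)
fair_coin = shannon_entropy([0.5, 0.5])    # maximal uncertainty for two states
biased_coin = shannon_entropy([0.9, 0.1])  # lower uncertainty
```

The fair coin attains the maximum of one bit, while any departure from uniformity lowers the entropy.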

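The Kullback–Leibler distance (Kullback and Leibler 1951) establishes the link between uncertainty and information by defining the excess number of bits in the encoding in case of an erroneous assumption on the probability distribution of X. In a bivariate setting, let Y be a second discrete random variable with probability distribution \(p_Y (y)\), and let \(p_{XY} (x,y)\) be the joint probability distribution of X and Y. The mutual information of the two random variables, i.e. the Kullback–Leibler (KL) distance between their joint distribution and the product of their marginals, is

\[M_{XY} = \sum _{x,y} p_{XY} (x,y) \, \log \, \left( \frac{p_{XY} (x,y)}{p_X (x) \, p_Y (y)} \right) \tag{2}\]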

where \(p_X (x)\) and \(p_Y (y)\) denote the marginal probability distributions of X and Y. Although mutual information measures any form of statistical dependency between two random variables, its symmetry makes it unsuitable to measure directional information exchange.

In the time series framework, Schreiber (2000) outlined a dynamic structure based on transition probabilities built upon such measures. The underlying assumption is that the dynamic structure of a discrete random variable Y corresponds to a stationary Markov process of order k. This implies that the probability of observing Y at time \(t+1\) in state y, conditional on k previous observations, is \(p(y_{t+1}|y_t,...,y_{t-k+1})=p(y_{t+1}|y_t,...,y_{t-k})\). Then, the average number of bits required to encode an additional time series observation if the previous values are known is \(h_Y(k)=- \sum _{y}^{}p(y_{t+1},y_t^{(k)}) \, \log \, (p(y_{t+1}|y_t^{(k)}))\), where \(y_t^{(k)}=(y_t,...,y_{t-k+1})\). In the bivariate case, Schreiber (2000) measures the information flow from time series X to time series Y as the deviation from the generalized Markov property, namely the transfer entropy

\[T_{X \rightarrow Y}(k,l) = \sum p(y_{t+1},y_t^{(k)},x_t^{(l)}) \, \log \, \left( \frac{p(y_{t+1}|y_t^{(k)},x_t^{(l)})}{p(y_{t+1}|y_t^{(k)})} \right) \tag{3}\]

where \(x_t^{(l)}=(x_t,...,x_{t-l+1})\) and the sum runs over all states of \(y_{t+1}\), \(y_t^{(k)}\) and \(x_t^{(l)}\). Notice that this measure is asymmetric, meaning that it detects the dominant direction of the information flow when computed for both directions.
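To make the definition concrete, the sketch below gives a plug-in (relative frequency) estimate of the transfer entropy for the simplest case \(k=l=1\). It is an illustration under our own assumptions rather than the estimator used in the empirical section: the function name `transfer_entropy`, the restriction to one lag and the synthetic series are all hypothetical.

```python
from collections import Counter
import numpy as np

def transfer_entropy(x, y, base=2.0):
    """Plug-in estimate of T_{X->Y}(1,1) for two symbolic series of equal length."""
    x, y = np.asarray(x).tolist(), np.asarray(y).tolist()
    triples = list(zip(y[1:], y[:-1], x[:-1]))         # (y_{t+1}, y_t, x_t)
    n = len(triples)
    c_full = Counter(triples)
    c_yx = Counter((yt, xt) for _, yt, xt in triples)  # counts of (y_t, x_t)
    c_yy = Counter((y1, yt) for y1, yt, _ in triples)  # counts of (y_{t+1}, y_t)
    c_y = Counter(yt for _, yt, _ in triples)          # counts of y_t
    te = 0.0
    for (y1, yt, xt), c in c_full.items():
        p_given_both = c / c_yx[(yt, xt)]              # p(y_{t+1} | y_t, x_t)
        p_given_own = c_yy[(y1, yt)] / c_y[yt]         # p(y_{t+1} | y_t)
        te += (c / n) * np.log(p_given_both / p_given_own)
    return te / np.log(base)

# Synthetic example: Y copies X with a one-period delay
rng = np.random.default_rng(1)
x = rng.integers(0, 2, size=5000)
y = np.roll(x, 1)                 # y_{t+1} = x_t
te_xy = transfer_entropy(x, y)    # close to one bit
te_yx = transfer_entropy(y, x)    # close to zero
```

Computing both directions on this toy pair shows the asymmetry: information flows from X to Y but not the other way around.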

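Given that equation (3) is designed for discrete time series, whereas in economic and financial applications time series are mostly continuous, time series are generally partitioned into discretized values. The discretized series \(S_t\) is obtained via a partition of the generic time series \(z_t\) into n bins delimited by thresholds \(q_1< \cdots < q_{n-1}\), typically chosen as empirical quantiles:

\[S_t = \begin{cases} 1 & \text{for } z_t \le q_1 \\ 2 & \text{for } q_1 < z_t \le q_2 \\ \vdots & \\ n & \text{for } z_t > q_{n-1} \end{cases} \tag{4}\]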

where the choice of the number of bins and quantiles is, in general, motivated by the data and the aims of the analysis.
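A minimal sketch of such a symbolic encoding with three bins is given below. The 5% and 95% quantiles mirror the choice made in our application, whereas the function name `discretize` and the simulated Gaussian returns are illustrative assumptions.

```python
import numpy as np

def discretize(z, lower_q=0.05, upper_q=0.95):
    """Map a continuous series to symbols 1, 2, 3 using two empirical quantiles."""
    z = np.asarray(z, dtype=float)
    q1, q2 = np.quantile(z, [lower_q, upper_q])
    # digitize(..., right=True): 0 if z <= q1, 1 if q1 < z <= q2, 2 if z > q2
    return np.digitize(z, [q1, q2], right=True) + 1

rng = np.random.default_rng(0)
returns = rng.standard_normal(1000)   # simulated returns, not the commodity data
symbols = discretize(returns)
```

Symbols 1 and 3 collect the lower and upper tails, while symbol 2 gathers the bulk of the observations.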

2.1.1 Inference on time series transfer entropy

Marschinski and Kantz (2002) show that, due to small sample effects, the transfer entropy estimates derived in equation (3) are likely to be biased. Therefore, the authors propose an alternative measure, i.e. the effective transfer entropy, obtained by subtracting from the original transfer entropy in equation (3) a transfer entropy computed on a shuffled version of the time series of the variable X, namely

\[ET_{X \rightarrow Y}(k,l) = T_{X \rightarrow Y}(k,l) - T_{X_{s} \rightarrow Y}(k,l) \tag{5}\]

with \(T_{X_{s} \rightarrow Y}(k,l)\) denoting the transfer entropy determined on a shuffled version of the series X. As shuffling is implemented via a random drawing of the realizations from the distribution of X, the statistical dependencies between the two time series are annihilated. This is equivalent to stating that \(T_{X_{s} \rightarrow Y}(k,l)\) converges to zero as the sample size increases, and that any non-null value of \(T_{X_{s} \rightarrow Y}(k,l)\) can be attributed to small sample bias. Averaging the transfer entropy estimates across repeated shuffles is a common practice to determine the effective transfer entropy.
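The shuffling correction can be sketched as follows for \(k=l=1\). The code is an illustration under our own assumptions: the helper names, the number of shuffles and the synthetic series are hypothetical, and random permutation of X is used as the shuffling scheme.

```python
from collections import Counter
import numpy as np

def transfer_entropy(x, y):
    """Plug-in estimate (in bits) of T_{X->Y}(1,1) for symbolic series."""
    x, y = np.asarray(x).tolist(), np.asarray(y).tolist()
    triples = list(zip(y[1:], y[:-1], x[:-1]))         # (y_{t+1}, y_t, x_t)
    c_full = Counter(triples)
    c_yx = Counter((yt, xt) for _, yt, xt in triples)
    c_yy = Counter((y1, yt) for y1, yt, _ in triples)
    c_y = Counter(yt for _, yt, _ in triples)
    return sum(
        (c / len(triples))
        * np.log2((c / c_yx[(yt, xt)]) / (c_yy[(y1, yt)] / c_y[yt]))
        for (y1, yt, xt), c in c_full.items()
    )

def effective_transfer_entropy(x, y, n_shuffles=50, seed=0):
    """Transfer entropy minus its average over shuffled versions of X."""
    rng = np.random.default_rng(seed)
    shuffled = [transfer_entropy(rng.permutation(x), y) for _ in range(n_shuffles)]
    return transfer_entropy(x, y) - float(np.mean(shuffled))

rng = np.random.default_rng(2)
x = rng.integers(0, 2, size=5000)
y_driven = np.roll(x, 1)                      # y_{t+1} = x_t
y_indep = rng.integers(0, 2, size=5000)       # unrelated to x
ete_driven = effective_transfer_entropy(x, y_driven)
ete_indep = effective_transfer_entropy(x, y_indep)
```

For the independent pair the raw estimate is small but positive, and subtracting the shuffled average brings it close to zero.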

In order to conduct statistical inference on the estimated information flows, we bootstrap the underlying Markov process building on Dimpfl and Peter (2013), rather than simply shuffling the time series and computing the corresponding effective transfer entropy. Like shuffling, this procedure annihilates the statistical dependencies between the two time series; the main difference is that it preserves the dependencies within the variables X and Y. Indeed, when shuffling the series, single discretized values of the time series are randomly rearranged into blocks which may not even occur in the actual sample. When bootstrapping, instead, the discretized values are rearranged based on the probabilities with which they occur in the actual sample. As a result, the joint probabilities of specific blocks of length k which need to be estimated within the series X correspond more closely to the observed ones in the sample.

In summary, this procedure yields the distribution of the estimates under the null hypothesis of no information flow, which is obtained by repeating the estimation of the transfer entropy on the bootstrapped time series. This yields Z-scores and p-values, which can be used to conduct standard inference on the effective information flows exchanged between random variables. While the effective transfer entropy measure is the basis to construct the adjacency matrix of our temporal network of asset price connectedness, standard p-values are used in order to retain in the network structure solely the significant flows of information across time series.
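The inference step can be illustrated with the following sketch, which simulates X from a first-order Markov chain estimated on the sample and compares the observed transfer entropy with its bootstrap distribution. This is a simplified reading of the procedure, not the exact algorithm of Dimpfl and Peter (2013): the chain order, the one-sided p-value, the helper names and the synthetic data are all assumptions made for illustration.

```python
from collections import Counter
import numpy as np

def transfer_entropy(x, y):
    """Plug-in estimate (in bits) of T_{X->Y}(1,1) for symbolic series."""
    x, y = np.asarray(x).tolist(), np.asarray(y).tolist()
    triples = list(zip(y[1:], y[:-1], x[:-1]))
    c_full = Counter(triples)
    c_yx = Counter((yt, xt) for _, yt, xt in triples)
    c_yy = Counter((y1, yt) for y1, yt, _ in triples)
    c_y = Counter(yt for _, yt, _ in triples)
    return sum(
        (c / len(triples))
        * np.log2((c / c_yx[(yt, xt)]) / (c_yy[(y1, yt)] / c_y[yt]))
        for (y1, yt, xt), c in c_full.items()
    )

def markov_bootstrap(x, rng):
    """Simulate a series from the first-order Markov chain estimated on x."""
    x = np.asarray(x)
    states = np.unique(x)
    idx = np.searchsorted(states, x)                 # symbols mapped to 0..m-1
    m = len(states)
    counts = np.zeros((m, m))
    np.add.at(counts, (idx[:-1], idx[1:]), 1)        # transition counts
    marginal = np.bincount(idx, minlength=m) / len(idx)
    row_sums = counts.sum(axis=1, keepdims=True)
    # states never followed by another observation fall back to the marginal
    probs = np.where(row_sums > 0, counts / np.maximum(row_sums, 1), marginal)
    sim = np.empty(len(x), dtype=int)
    sim[0] = rng.choice(m, p=marginal)
    for t in range(1, len(x)):
        sim[t] = rng.choice(m, p=probs[sim[t - 1]])
    return states[sim]

def te_inference(x, y, n_boot=100, seed=0):
    """Observed TE with bootstrap Z-score and one-sided p-value under no flow."""
    rng = np.random.default_rng(seed)
    observed = transfer_entropy(x, y)
    null = np.array([transfer_entropy(markov_bootstrap(x, rng), y)
                     for _ in range(n_boot)])
    return {"te": observed,
            "z_score": float((observed - null.mean()) / null.std()),
            "p_value": float((null >= observed).mean())}

rng = np.random.default_rng(3)
x = rng.integers(0, 2, size=400)
result = te_inference(x, np.roll(x, 1))   # Y is driven by lagged X
```

Because the simulated series retains the transition structure of X but is generated independently of Y, the bootstrap estimates trace out the distribution under the null of no information flow.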

2.2 Statistically recurrent subgraphs in weighted directed networks

The concept of a motif was originally introduced by Milo et al. (2002) as “patterns of interconnections occurring in complex networks at numbers that are significantly higher than those in randomized networks”. In other words, the frequency with which a subgraph emerges in a network can be used to describe the local structure of unweighted networks.

On the other hand, when dealing with weighted networks, it becomes more natural to approach this concept in terms of intensities (Onnela et al. 2005), as opposed to the number of occurrences, where the latter is obtained as a special case of the former. In this setup, one can determine the “intensity” of a subgraph as the geometric mean of its link weights, and a motif’s “coherence” as the ratio of the geometric to the equivalent arithmetic mean. Then, motifs showing statistically significant deviation from some reference system can be referred to as high or low intensity motifs.

To extend the motif detection method to weighted networks, these are generally thought of as a fully connected graph with some links having zero weights. We introduce the intensity I(g) of subgraph g with vertices \(v_g\) and links \(l_g\) as the geometric mean of its weights

\[I(g) = \left( \prod _{(ij) \in l_g} w_{ij} \right) ^{1/\left| l_g\right| }\]

where \(\left| l_g\right|\) is the number of links in \(l_g\), and \(w_{ij}\) represents the generic element (i, j) of the weighted adjacency matrix W constructed via pairwise transfer entropy. The aforementioned definition implies a shift in perspective from viewing subgraphs as discrete objects (either they exist or do not exist) to a continuum of subgraph intensities, with zero or very low intensity values implying that the subgraph in question does not exist or exists at a practically insignificant intensity level. Due to the nature of the geometric mean, the subgraph intensity I(g) may be low because one of the weights is very small, or because all of the weights are low. In order to distinguish between these two extremes, we apply the concept of subgraph coherence Q(g), which is defined as the ratio of the geometric mean to the arithmetic mean of the weights

\[Q(g) = \frac{I(g) \left| l_g\right| }{\sum _{(ij) \in l_g} w_{ij}}\]

where \(Q \in [0,1]\) and it is close to unity only if the subgraph weights do not differ much, namely when they are internally coherent.
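The two measures can be illustrated on a pair of hypothetical three-link subgraphs sharing the same arithmetic mean weight; the function names and the weights below are chosen purely for illustration.

```python
import numpy as np

def intensity(weights):
    """Geometric mean of the link weights of a subgraph."""
    w = np.asarray(weights, dtype=float)
    return float(np.prod(w) ** (1.0 / w.size))

def coherence(weights):
    """Ratio of the geometric to the arithmetic mean of the link weights."""
    w = np.asarray(weights, dtype=float)
    mean = w.mean()
    return intensity(w) / mean if mean > 0 else 0.0

balanced = [0.2, 0.2, 0.2]       # homogeneous weights
unbalanced = [0.58, 0.01, 0.01]  # same arithmetic mean, one dominant link
```

Both subgraphs have an arithmetic mean weight of 0.2, yet the unbalanced one has a much lower intensity and a coherence well below one, which is exactly the distinction the two measures are meant to capture.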

We define the total intensity \(I_M\) of a motif M in the network as the sum of its subgraph intensities \(I_M=\Sigma _{g \in M} I(g)\). Similarly, the total coherence of a motif \(Q_M\) is computed as the sum of its subgraph coherences \(Q_M=\Sigma _{g \in M} Q(g)\).
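One possible way to compute total intensity and coherence per triadic structure is sketched below. Every connected node triple is assigned to an isomorphism class through a canonical encoding of its binary adjacency pattern, and intensities and coherences are accumulated by class. The function names, the canonical codes (which do not correspond to the labels \(m=1,...,13\) used later) and the toy matrix are assumptions made for illustration; the convention that W[i, j] is the flow from i to j is also assumed.

```python
from itertools import combinations, permutations
import numpy as np

def canonical_code(sub):
    """Smallest bit-encoding of a 3x3 binary adjacency over all node relabelings."""
    codes = []
    for perm in permutations(range(3)):
        p = sub[np.ix_(perm, perm)]
        bits = [p[i, j] for i in range(3) for j in range(3) if i != j]
        codes.append(sum(int(b) << n for n, b in enumerate(bits)))
    return min(codes)

def is_weakly_connected(sub):
    """Three nodes are connected iff at least two undirected pairs are linked."""
    und = (sub + sub.T) > 0
    return int(und[0, 1]) + int(und[0, 2]) + int(und[1, 2]) >= 2

def motif_totals(W):
    """Map each triadic class code to its (total intensity, total coherence)."""
    W = np.array(W, dtype=float)
    np.fill_diagonal(W, 0.0)                 # self-loops are ignored
    A = (W > 0).astype(int)
    totals = {}
    for nodes in combinations(range(W.shape[0]), 3):
        sub = A[np.ix_(nodes, nodes)]
        if not is_weakly_connected(sub):
            continue
        w = W[np.ix_(nodes, nodes)][sub > 0]         # weights of existing links
        g = float(np.prod(w) ** (1.0 / w.size))      # subgraph intensity
        code = canonical_code(sub)
        tot_i, tot_q = totals.get(code, (0.0, 0.0))
        totals[code] = (tot_i + g, tot_q + g / w.mean())
    return totals

# Toy network: 0 -> 1 (0.4), 0 -> 2 (0.1), 2 -> 3 (0.2)
W = np.zeros((4, 4))
W[0, 1], W[0, 2], W[2, 3] = 0.4, 0.1, 0.2
totals = motif_totals(W)
out_star = canonical_code(np.array([[0, 1, 1], [0, 0, 0], [0, 0, 0]]))
```

In the toy network only two triples are connected, an out-star on nodes (0, 1, 2) and a chain on nodes (0, 2, 3), so `totals` contains two classes.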

2.3 Maximum likelihood inference via null models with strength preserving constraints

In order to conduct standard inference, the statistical significance of the presence of motifs can be determined by comparing the distributions of motif intensity and coherence to those generated from an ensemble of adequately randomized networks. Such randomized networks are regarded as constituents of a null hypothesis, since their topological structure is produced via a method in which no selection is applied to the network’s component motifs. In order to conduct a thorough comparison, we employ randomized networks that are identical to the real network in terms of single-node characteristics. Specifically, since we are dealing with weighted directed networks, we preserve the in-strength and out-strength of each node, namely the sum of incoming and outgoing link weights. In this way, we are able to control for patterns that only occur due to the network’s single-node characteristics.

In other words, we aim at discriminating whether the \(I_M\) and \(Q_M\) statistics are fully determined by the local centrality measures. Accordingly, we construct null models by preserving the strength of nodes to investigate whether \(I_M\) and \(Q_M\) are significantly over- (or under-) represented in the real network compared to the null models.

To achieve this goal, we opt for a family of statistically randomized benchmarks, i.e. ensembles of graphs where the local heterogeneity is the same as in the real network, while the topology is random in any other respect. Nontrivial relevant motifs are then detected in the form of empirical deviations from the theoretical expectations of the null model (see Garlaschelli and Loffredo 2008, 2009; Squartini and Garlaschelli 2011). To build graph ensembles, we use maximum likelihood estimation, where the model parameters are fixed such that the expected value of the constrained quantity, e.g. the node strength, matches the empirically observed one.

Let \(P(G \mid \mathbf {\theta })\) be the conditional probability of occurrence of a graph G, depending on the set of parameters \(\mathbf {\theta }\). For a realization of the graph \(G=G^{\prime }\), \(P(G^{\prime } \mid \mathbf {\theta })\) is the likelihood that \(G^{\prime }\) is generated by the parameter choice \(\mathbf {\theta }\). Therefore, for fixed \(G^{\prime }\), the maximum likelihood estimator of \(\mathbf {\theta }\) is the value \(\mathbf {\theta }^{*}\) maximizing \(P(G^{\prime } \mid \mathbf {\theta })\) or, equivalently, \(\lambda (\mathbf {\theta }) \equiv \ln P(G^{\prime }\mid \mathbf {\theta })\).

Considering our weighted directed network, in the case of strength preserving, each set of nodes is characterized by strength vectors, whose components are \(k_{v} \equiv \sum _{u} TE(v,u)\) for the in-strength and \(k_{u}\equiv \sum _{v} TE(v,u)\) for the out-strength. The model constrains the strength sequences to those of the real effective transfer entropy network.

The unknown parameter vectors \(\mathbf {\theta }_{v}\) and \(\mathbf {\theta }_{u}\) can be determined by maximizing the log-likelihood function

where

and \(p_{v,u} \equiv \mathbf {\theta }_{v}\mathbf {\theta }_{u}\). Once solutions are found, we can draw weighted links from vertex v to vertex u using the corresponding geometric distribution.
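For concreteness, one specification consistent with the description above is the weighted directed configuration model of Squartini and Garlaschelli (2011). The expressions below are a sketch under that assumption and under the further assumption of integer-valued (discretized) link weights \(w_{vu}\); the symbol \(q_{v,u}\) is introduced here for illustration only. In this case each weight is geometrically distributed,

\[q_{v,u}(w) = p_{v,u}^{\,w} \, (1-p_{v,u}), \qquad w = 0,1,2,\ldots ,\]

so that the log-likelihood of the observed network takes the form

\[\lambda (\mathbf {\theta }) = \sum _{v \ne u} \left[ w_{vu} \, \ln p_{v,u} + \ln (1-p_{v,u}) \right] ,\]

and the expected weight of the link from v to u is \(p_{v,u}/(1-p_{v,u})\). Setting the derivatives of \(\lambda (\mathbf {\theta })\) to zero then equates, for every node, the expected in- and out-strength under the model to the observed ones, which is the strength preserving constraint described above.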

Motif intensity and coherence are considered statistically significant if their values, computed on the original graph, are exceptionally higher (lower) than their mean derived from random networks under the null model. To assess such exceptionality, Milo et al. (2002) propose to employ standard inference to derive the statistical significance of motif occurrences. The standard Z-score can be defined as

\[Z_M = \frac{N_M - \left\langle n_M\right\rangle }{\sigma _M}\]

where \(N_M\) is the number of subgraphs in motif M in the empirical network, \(\left\langle n_M\right\rangle\) is the expectation of their number in the reference ensemble, and \(\sigma _M\) is the standard deviation of the latter. Replacing the number of subgraphs by their intensities, the Z-score becomes

\[\tilde{Z}_M = \frac{I_M - \left\langle i_M\right\rangle }{\sqrt{\left\langle i_M^2\right\rangle - \left\langle i_M\right\rangle ^2}}\]

where \(i_M\) is the total intensity of motif M in one realization of the reference system and angle brackets denote averages over the reference ensemble. In an analogous way, we introduce the motif coherence score as

\[\tilde{Z}^{\prime }_M = \frac{Q_M - \left\langle q_M\right\rangle }{\sqrt{\left\langle q_M^2\right\rangle - \left\langle q_M\right\rangle ^2}}\]

where \(Q_M\) and \(q_M\) are the total coherence for motif M in the empirical network and in one realization of the reference system, respectively. The intensity (coherence) of a 3-node subgraph (motif) is thus considered statistically significant if its value in the original graph is exceptionally high compared to that produced by random networks under the null model. The Z-score, defined as the difference between the intensity (coherence) of a motif M in the target network and its average value in a sufficiently large set of randomized networks, divided by the corresponding standard deviation, measures the statistical significance of the intensity (coherence) of a particular motif.
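Given the total intensity (or coherence) of a motif in the empirical network and in a set of null model realizations, the score can be computed as in the sketch below. The function name and the numerical values are hypothetical, and the population standard deviation is used so as to match the ensemble variance.

```python
import numpy as np

def motif_z_score(observed, null_values):
    """(observed - ensemble mean) / ensemble standard deviation."""
    null_values = np.asarray(null_values, dtype=float)
    sigma = null_values.std(ddof=0)   # sqrt(<i^2> - <i>^2)
    if sigma == 0:
        return float("nan")           # degenerate ensemble: score undefined
    return float((observed - null_values.mean()) / sigma)

# Hypothetical total intensities of one motif in five null realizations
null_intensities = [1.0, 2.0, 3.0, 4.0, 5.0]
z = motif_z_score(3.0 + 2.0 * np.sqrt(2.0), null_intensities)
```

Here the ensemble mean is 3 and its standard deviation is \(\sqrt{2}\), so the observed value lies two standard deviations above the null expectation.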

3 Application to commodity price co-movements

We apply our methodology to analyze data provided by the World Bank on selected monthly prices of 63 globally widespread commodities belonging to different sectors: Energy (Coal, Crude Oil, Natural Gas), Agriculture (Food, Beverages and Agricultural Raw Materials) and Fertilizers, Metals and Minerals, Precious Metals. The sample period ranges from January 1990 to May 2020. We refer the reader to Table 1 for the complete list and description of commodities. We perform a dynamic analysis in which we derive the effective transfer entropy measures and related network statistics on a rolling window basis, setting the estimation width to 250 monthly observations. Effective transfer entropy is obtained by discretizing the observations into three bins, using the 5% and 95% empirical quantiles of the return time series as lower and upper bounds for binning. This results in a symbolic encoding where the first (third) bin includes the extreme negative (positive) returns, so that tail observations are singled out (Dimpfl and Peter 2013). Throughout our analysis, we select only statistically significant values of the transfer entropy, meaning values for which the associated p-value does not exceed the \(\alpha =0.1\) significance level.
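The rolling window scheme and the significance filter can be sketched as follows. The window width and the significance level follow the text, while the function names, the one-observation step between windows, the treatment of negative effective transfer entropy as an absent link and the small matrices are assumptions made for illustration.

```python
import numpy as np

def rolling_windows(n_obs, width=250):
    """Yield (start, stop) index pairs of windows shifted by one observation."""
    for start in range(n_obs - width + 1):
        yield start, start + width

def significant_adjacency(ete, pvalues, alpha=0.1):
    """Keep effective transfer entropy only where the p-value does not exceed alpha."""
    ete, pvalues = np.asarray(ete, dtype=float), np.asarray(pvalues, dtype=float)
    W = np.where(pvalues <= alpha, ete, 0.0)
    np.fill_diagonal(W, 0.0)          # no self-links
    return np.clip(W, 0.0, None)      # assumption: negative values mean no link

# Hypothetical estimates for three series (rows: source, columns: target)
ete = np.array([[0.5, 0.2, 0.1], [0.3, 0.0, -0.05], [0.4, 0.6, 0.0]])
pvalues = np.array([[0.0, 0.05, 0.5], [0.1, 0.0, 0.01], [0.2, 0.09, 0.0]])
W = significant_adjacency(ete, pvalues)
windows = list(rolling_windows(365))   # 365 observations, chosen for illustration
```

The resulting matrix W is the weighted adjacency matrix of one window, on which motif intensity and coherence are then computed.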

Before entering into the details of the empirical application of weighted motif detection in transfer entropy networks, we report in Fig. 1 all possible 3-node sub-graphs, i.e. triadic structures for unweighted networks. The figure associates each possible motif, labeled as \(m = 1,..., 13\), with its corresponding triadic structure.

Fig. 2 Closed triangle motif. The figure illustrates the difference between motif frequency (unweighted case) and motif intensity (weighted case) for the reciprocated triangle \(m=13\). The link weight among nodes j and k gradually decreases from left to right. The motif frequency is equal for the first three triangles and drops to zero suddenly for the fourth triangle, while the intensity tends smoothly to zero when the link weight decreases

Moreover, we report in Fig. 2 a schematic illustration of the difference between the frequency of the reciprocated triangle \(m=13\) in the unweighted framework and its intensity in the weighted one. The weight of the link among nodes j and k gradually decreases from left to right. The motif occurrence, i.e. the frequency in the unweighted framework, is equal for the first three triangles, whereas it suddenly drops to zero for the fourth triangle. In contrast, the intensity of the motif in the weighted case tends smoothly to zero when the link weight decreases.

We start our analysis by investigating the topological structure of the transfer entropy network recorded at different points in time. As an illustrative example we show in Fig. 3 two snapshots of the network topology during a “business as usual” phase (left panel) and during the Global Financial Crisis (right panel). The figure shows how, during a crisis period, multiple hubs belonging to different commodity classes emerge, such as those related to metals and food commodities. During a normal business period, instead, the transfer entropy network tends to become more randomly oriented, with fewer nodes functioning as hubs.

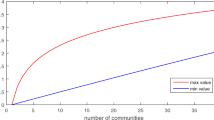

Moreover, we explore the evolution of the aggregate structure of the transfer entropy network by reporting, in Fig. 4, the dynamics of the total number of links and of the total network flow, i.e. the sum of link weights, over the analyzed period. We observe an increase of the system interconnectedness during the Global Financial Crisis. Indeed, the increase in oil prices in the period of 2007 to 2008 was a significant cause of the recession which led commodity prices to rise by roughly 75% in real terms on average during mid-2008 (see Spatafora and Tytell 2009). This was the broadest surge in terms of the number and groups of commodities involved (Baffes and Haniotis 2010). Secondly, network connections remain quite stable after a decrease that occurred in 2010–2012, when the economic slowdown in China was a key factor driving the commodity price bust. Moreover, we observe a sharp decrease in 2017–2018. This suggests that overall interconnectedness and, therefore, systemic risk of global commodity prices has considerably decreased over recent times.

Finally, we report in Figs. 5 and 6 the degree and strength distributions for the pooled network. These measures represent the number of links attached to a node and the sum of link weights, respectively, and are indicative of the importance of each node in the network. Moreover, since we are dealing with a directed network, we split these statistics into incoming and outgoing (in- and out-) centrality measures to assess the importance of each node as a receiver or as a transmitter of systemic risk. Notice that, on average, oil and natural gas, together with raw materials, have higher out-degree (strength) than in-degree (strength), meaning that they are mainly shock propagators in terms of transmitted transfer entropy, while food commodities present higher in-degree (strength), thereby mainly being shock receivers.
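In- and out-degree and strength follow directly from the weighted adjacency matrix, as in the sketch below. The convention that W[i, j] is the flow from node i to node j, the function name and the toy matrix are assumptions made for illustration.

```python
import numpy as np

def centralities(W):
    """In/out degree and strength of each node of a weighted directed network."""
    W = np.asarray(W, dtype=float)
    A = W > 0                              # binary adjacency
    return {
        "out_degree": A.sum(axis=1),       # number of outgoing links
        "in_degree": A.sum(axis=0),        # number of incoming links
        "out_strength": W.sum(axis=1),     # total transmitted weight
        "in_strength": W.sum(axis=0),      # total received weight
    }

# Toy network: node 0 transmits to 1 and 2, node 2 transmits to 0
W = np.array([[0.0, 0.3, 0.2],
              [0.0, 0.0, 0.0],
              [0.1, 0.0, 0.0]])
c = centralities(W)
```

In the toy example node 0 has an out-strength larger than its in-strength and thus acts mainly as a transmitter, whereas node 1 only receives.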

We now turn our attention to the dynamic evolution of network motifs. Firstly, we investigate the behavior of the motif intensity and coherence computed on the transfer entropy network. Secondly, we study the patterns of the variables in deviation from the results obtained from 1000 bootstrap replications of the weighted directed configuration model that is employed as a null model benchmark. Figure 7 shows the intensity and coherence dynamics for each triadic structure. The motifs are labelled \(m=1,...,13\) and we refer the reader to Fig. 1 for the associated triadic structures. From Fig. 7, it is evident how diverse types of motifs occur at starkly different rates of intensity in the commodity transfer entropy network. In particular, “v”-like motifs (motifs from 1 to 4) are the most intense structures, whereas more complex sub-structures are less present in the network. Moreover, we observe that the Global Financial Crisis has caused an instantaneous growth in the intensity of triangle sub-graphs, including the most complex triadic structures, with a consequent decrease of the simpler “v”-like motifs. Additionally, as opposed to the patterns observed for the intensity, coherence generally increases during the 2009 crisis, meaning that during this turbulent market phase motif weights do not differ much, i.e. they become internally coherent. Finally, during the years 2017–2018 we observe a decrease in the coherence of all motifs, but only the intensity of closed motifs, i.e. triangles, decreases, while “v”-like motif intensity remains at the pre-crisis level.

We further investigate whether the emerging triadic configurations can be explained in terms of the resulting heterogeneity of the network vertices. Before comparing the observed frequencies with the expected ones, we report in Fig. 8 the intensity and coherence distribution of each motif for the last window of the sample. The distributions of statistics related to motifs are generally bell shaped, centered on different averages, with a more prominent right tail, especially for the “v”-like triadic structures. This is a common feature of motif intensity and coherence in many kinds of networks, particularly when networks exhibit a large number of links with low weights (Fig. 9).

The presence of statistically significant recurrent sub-structures in the transfer entropy network is determined through standard inference on their observed intensity and coherence as opposed to those of randomized networks. Indeed, our most informative findings will correspond to a deviation from, rather than an agreement with, null models. To this aim, Fig. 10 shows the dynamics of the Z-scores associated with the different types of motifs which emerge from the transfer entropy network.

Motifs on transfer entropy networks are highly dynamic but their significance does not dramatically change over time. Interestingly, single link “v”-like motifs, which represent the simplest triadic structures, exhibit relatively high Z-scores over the whole sample, and the 2008 crisis only produces a decrease in their intensity Z-score, but an increase in the coherence one. On the other hand, the most complex sub-graphs, such as reciprocated “v”-like motifs and triangles, show the opposite behaviour. Another compelling result is that motifs number 7 and 8 show a spike in their intensity Z-score with the advent of the Global Financial Crisis, which made these structures statistically over-represented.

Finally, we have aggregated the motif intensity and coherence Z-scores by node. We observe that, overall, the average node intensity is positive, while the opposite holds for the coherence. Negative values are mostly given by the most complex triadic structures, while positive Z-scores are associated with simpler “v”-like motifs. Moreover, we observe that the highest values are associated with food and materials commodities. As far as energy commodities are concerned, only oil exhibits relatively high values, while the opposite happens for natural gas, particularly for the US commodity.

One of the main limitations of our analysis is that the null model considered in the paper constrains the expected strength sequence, regardless of the degree. We have performed a replication exercise constraining the degree sequence, rather than the strength sequence, whose results are contained in the Appendix.

4 Conclusion

In this paper we propose a method for characterizing the local structure of weighted multivariate time series networks. In particular, we exploit the topological structure outlined by effective transfer entropy to derive statistically validated measures of intensity, i.e. the geometric mean of link weights, and coherence, namely the ratio of the geometric to the corresponding arithmetic mean, of multivariate time series observations. Effective transfer entropy enables us to correct for the small sample biases which affect traditional transfer entropy measures, and to make inference on the estimated directional information flows. Intensity and coherence allow us to identify statistically recurrent subgraphs, i.e. network motifs, their dynamic properties and significance over time, so as to characterize the system behavior via higher-order structures which are arguably important drivers of time series co-movements.

Our methodology is employed to study the network connectedness of a set of commodity prices. Our main result shows that simple triadic structures are the most intense, coherent and statistically recurrent ones. However, their intensity suddenly decreases after the Global Financial Crisis, in favor of the most complex triadic structures. Despite that, the coherence of all types of subgraphs tends to surge after this event, meaning that link weights became less heterogeneous and remained so for a considerable period of time thereafter.
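
The link between coherence and weight heterogeneity can be illustrated with a short sketch of the two measures as defined above, i.e. intensity as the geometric mean of the link weights of a subgraph and coherence as the ratio of the geometric to the arithmetic mean. The weights below are hypothetical and the function names are ours.

```python
import math


def intensity(weights):
    """Geometric mean of the link weights of a subgraph."""
    if not weights or any(w <= 0 for w in weights):
        raise ValueError("weights must be a non-empty sequence of positive numbers")
    return math.exp(sum(math.log(w) for w in weights) / len(weights))


def coherence(weights):
    """Ratio of the geometric mean to the arithmetic mean; at most 1."""
    return intensity(weights) / (sum(weights) / len(weights))


# Two hypothetical three-link subgraphs with the same arithmetic mean (0.2).
homogeneous = [0.2, 0.2, 0.2]
heterogeneous = [0.01, 0.2, 0.39]

# Equal weights give a coherence of one (up to rounding);
# unequal weights push it below one.
print(coherence(homogeneous), coherence(heterogeneous))
```

Because the geometric mean never exceeds the arithmetic mean, coherence approaches one only when the link weights of the subgraph are close to equal, which is why a rise in coherence signals less heterogeneous weights.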

We remark that there is room for further investigation in this field by means of alternative modelling strategies for the multivariate time series connectivity matrices - see, e.g., Spelta and Pagnottoni (2021a), Celani et al. (2023), Spelta and Pecora (2023), Pagnottoni et al. (2023), Celani and Pagnottoni (2023). Effective transfer entropy is just one measure within a broader class of techniques from which economic and financial theory could still benefit considerably, and other multivariate time series models can be employed and compared. Moreover, other techniques borrowed from network theory can unveil useful network properties of time series co-movements, which could be employed as statistically validated measures of systemic risk and/or early warning signals of market crises, possibly enhancing extant portfolio selection strategies - see, e.g., Spelta et al. (2022).

The interdisciplinary future trajectories of research call for a more in-depth investigation of such topics from a wider variety of viewpoints and disciplines.

Data Availability

Data are available at https://www.worldbank.org/en/research/commodity-markets

References

Baffes J, Haniotis T (2010) Placing the 2006/08 commodity price boom into perspective. World Bank Policy Research Working Paper (5371)

Barnett L, Bossomaier T (2012) Transfer entropy as a log-likelihood ratio. Phys Rev Lett 109(13):138105

Barnett L, Barrett AB, Seth AK (2009) Granger causality and transfer entropy are equivalent for Gaussian variables. Phys Rev Lett 103(23):238701

Baruník J, Bevilacqua M, Tunaru R (2020) Asymmetric network connectedness of fears. The Review of Economics and Statistics pp 1–41

Billio M, Getmansky M, Lo A, Pelizzon L (2012) Econometric measures of connectedness and systemic risk in the finance and insurance sectors. J Financ Econ 104:535–559

Bosma JJ, Koetter M, Wedow M (2019) Too connected to fail? inferring network ties from price co-movements. J Bus Econ Stat 37(1):67–80

Caserini NA, Pagnottoni P (2022) Effective transfer entropy to measure information flows in credit markets. Stat Methods Appl 31(4):729–757

Celani A, Pagnottoni P (2023) Matrix autoregressive models: generalization and Bayesian estimation. Stud Nonlinear Dyn Econom. https://doi.org/10.1515/snde-2022-0093

Celani A, Cerchiello P, Pagnottoni P (2023) The topological structure of panel variance decomposition networks. J Financ Stab, Forthcoming

Chen CYH, Okhrin Y, Wang T (2021) Monitoring network changes in social media. J Bus Econ Stat (just-accepted):1–34

De Giuli ME, Flori A, Lazzari D, Spelta A (2022) Brexit news propagation in financial systems: multidimensional visibility networks for market volatility dynamics. Quant Financ 22(5):973–995

Dimpfl T, Peter FJ (2013) Using transfer entropy to measure information flows between financial markets. Stud Nonlinear Dyn Econom 17(1):85–102

Fienberg SE (2012) A brief history of statistical models for network analysis and open challenges. J Comput Graph Stat 21(4):825–839

Garlaschelli D, Loffredo MI (2008) Maximum likelihood: extracting unbiased information from complex networks. Phys Rev E 78(1):015101

Garlaschelli D, Loffredo MI (2009) Generalized Bose-Fermi statistics and structural correlations in weighted networks. Phys Rev Lett 102(3):038701

Giudici P, Spelta A (2016) Graphical network models for international financial flows. J Bus Econ Stat 34(1):128–138

Giudici P, Pagnottoni P, Spelta A (2023) Network self-exciting point processes to measure health impacts of COVID-19. J R Stat Soc Ser A: Stat Soc. https://doi.org/10.1093/jrsssa/qnac006

Gupta AK, Harrar SW, Pardo L (2007) On testing homogeneity of variances for nonnormal models using entropy. Stat Methods Appl 16(2):245–261

Han X, Hsieh CS, Ko SI (2021) Spatial modeling approach for dynamic network formation and interactions. J Bus Econ Stat 39(1):120–135

Hlavácková-Schindler K (2011) Equivalence of Granger causality and transfer entropy: A generalization. Appl Math Sci 5(73):3637–3648

Kullback S, Leibler RA (1951) On information and sufficiency. Ann Math Stat 22(1):79–86

Lauritzen SL, Wermuth N (1989) Graphical models for associations between variables, some of which are qualitative and some quantitative. The annals of Statistics pp 31–57

Marschinski R, Kantz H (2002) Analysing the information flow between financial time series. Eur Phys J B-Condens Matter Complex Syst 30(2):275–281

Milo R, Shen-Orr S, Itzkovitz S, Kashtan N, Chklovskii D, Alon U (2002) Network motifs: simple building blocks of complex networks. Science 298(5594):824–827

Newman M (2018) Networks. Oxford University Press, Oxford, England

Newman ME, Barabási ALE, Watts DJ (2006) The structure and dynamics of networks. Princeton University Press, Oxford, England

Onnela JP, Saramäki J, Kertész J, Kaski K (2005) Intensity and coherence of motifs in weighted complex networks. Phys Rev E 71(6):065103

Pagnottoni P (2023) Superhighways and roads of multivariate time series shock transmission: application to cryptocurrency, carbon emission and energy prices. Physica A 615:128581

Pagnottoni P, Spelta A (2022) The motifs of risk transmission in multivariate time series: application to commodity prices. Socio-Economic Planning Sciences p 101459

Pagnottoni P, Spelta A, Pecora N, Flori A, Pammolli F (2021) Financial earthquakes: SARS-CoV-2 news shock propagation in stock and sovereign bond markets. Physica A 582:126240

Pagnottoni P, Spelta A, Flori A, Pammolli F (2022) Climate change and financial stability: natural disaster impacts on global stock markets. Physica A 599:127514

Pagnottoni P, Famà A, Kim J-M (2023) Financial networks of cryptocurrency prices in time-frequency domains. Qual Quant. https://doi.org/10.1007/s11135-023-01704-w

Schreiber T (2000) Measuring information transfer. Phys Rev Lett 85(2):461

Shannon CE (1948) A mathematical theory of communication. Bell Syst Tech J 27(3):379–423

Spatafora N, Tytell I (2009) Commodity terms of trade: The history of booms and busts

Spelta A, Pagnottoni P (2021a) An alternative approach for nowcasting economic activity during COVID-19 times. Book of Short Papers SIS 2021:126–131

Spelta A, Pagnottoni P (2021b) Mobility-based real-time economic monitoring amid the COVID-19 pandemic. Sci Rep 11(1):13069

Spelta A, Pecora N (2023) Wasserstein barycenter for link prediction in temporal networks. J R Stat Soc Ser A: Stat Soc. https://doi.org/10.1093/jrsssa/qnad088

Spelta A, Pecora N, Pagnottoni P (2022) Chaos based portfolio selection: a nonlinear dynamics approach. Expert Syst Appl 188:116055

Squartini T, Garlaschelli D (2011) Analytical maximum-likelihood method to detect patterns in real networks. New J Phys 13(8):083001

Squartini T, Garlaschelli D (2012) Triadic motifs and dyadic self-organization in the world trade network. In: International Workshop on Self-Organizing Systems, Springer, pp 24–35

Squartini T, Van Lelyveld I, Garlaschelli D (2013) Early-warning signals of topological collapse in interbank networks. Sci Rep 3(1):1–9

Toomaj A (2017) On the effect of dependency in information properties of series and parallel systems. Stat Methods Appl 26(3):419–435

Acknowledgements

We thank Prof. Paolo Giudici for useful discussions on topics concerning risk measurement and management. We also thank the participants in the 51st Scientific Meeting of the Italian Statistical Society (SIS 2022) for useful comments on an earlier version of the paper.

Funding

Open access funding provided by Università degli Studi di Pavia within the CRUI-CARE Agreement.

Ethics declarations

Conflict of interests

The authors declare no conflict of interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pagnottoni, P., Spelta, A. Statistically validated coeherence and intensity in temporal networks of information flows. Stat Methods Appl 33, 131–151 (2024). https://doi.org/10.1007/s10260-023-00724-y
