Abstract

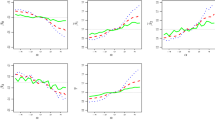

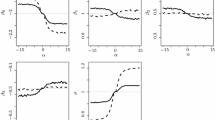

In the framework of generalized linear models for binary responses, we develop parametric methods that yield estimators for regression coefficients less compromised by an inadequate posited link function. The improved inferences are obtained without correcting a misspecified model, and thus are referred to as wrong-model inference. A byproduct of the proposed methods is a simple test for link misspecification in this class of models. Simulation studies demonstrate impressive bias reduction in the estimators for the regression coefficients and promising power of the proposed test to detect link misspecification. We also apply these methods to a classic data example frequently analyzed in the existing literature concerning this class of models.

Acknowledgements

I would like to thank the Associate Editor and the two anonymous referees for their helpful suggestions and insightful comments, which greatly improved the manuscript.

Appendix: Proof of equation (2)

Because the assumed GLM is specified by \(P(Y=1|X)=H(\eta )\), where \(\eta =\beta _0+\beta _1 X\), and the reclassified response is generated according to \(P(Y^*=Y|Y, X)=\pi \), one has
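A sketch of this step, assuming only the two model statements above: conditioning on \(Y\) and applying the law of total probability gives what is presumably the identity numbered (15),

\[
P(Y^*=1|X)=\pi H(\eta )+(1-\pi )\{1-H(\eta )\}=(2\pi -1)H(\eta )+1-\pi .
\]

This form agrees with the expression for \(\mu _0\) quoted later in the appendix, with the assumed link \(H\) in place of the true link \(G\).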

It follows that the likelihood function based on the assumed primary model for \(Y^*\) evaluated at one data point \((Y^*, X)\) is \(L(\pi , \varvec{\beta })=P(Y^*=1|X)^{Y^*}\{1-P(Y^*=1|X)\}^{1-Y^*}\), and the log-likelihood function is \(\ell (\pi , \varvec{\beta })=Y^*\log P(Y^*=1|X)+(1-Y^*)\log \{1-P(Y^*=1|X)\}\).

Differentiating (15) with respect to each element in \((\pi , \varvec{\beta })\) gives
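As a sketch, assume that (15) is the identity \(P(Y^*=1|X)=(2\pi -1)H(\eta )+1-\pi \), the form consistent with \(\mu _0\) in (4), and that \(H\) is differentiable with derivative \(H'\) (a symbol introduced here for illustration). The partial derivatives would then be

\[
\frac{\partial P(Y^*=1|X)}{\partial \pi }=2H(\eta )-1,\qquad
\frac{\partial P(Y^*=1|X)}{\partial \beta _0}=(2\pi -1)H'(\eta ),\qquad
\frac{\partial P(Y^*=1|X)}{\partial \beta _1}=(2\pi -1)H'(\eta )X.
\]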

Using (16), one can show that the three normal score functions associated with \(\ell (\pi , \varvec{\beta })\) are given by

To simplify the notation further, let \(\mu =P(Y^*=1|X)\). The above three score functions can be re-expressed as
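A sketch of the compact form, assuming \(\mu =(2\pi -1)H(\eta )+1-\pi \) and writing \(H'\) for the derivative of \(H\) (notation introduced here for illustration): since \(\partial \ell /\partial \mu =(Y^*-\mu )/\{\mu (1-\mu )\}\), the chain rule suggests

\[
\frac{\partial \ell }{\partial \pi }=\frac{Y^*-\mu }{\mu (1-\mu )}\{2H(\eta )-1\},\qquad
\frac{\partial \ell }{\partial \beta _0}=\frac{Y^*-\mu }{\mu (1-\mu )}(2\pi -1)H'(\eta ),\qquad
\frac{\partial \ell }{\partial \beta _1}=\frac{Y^*-\mu }{\mu (1-\mu )}(2\pi -1)H'(\eta )X.
\]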

The expectation of the first score in (17) with respect to the true distribution of \((Y^*, X)\) is
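As a sketch under the assumption that the first score is \(\{2H(\eta )-1\}(Y^*-\mu )/\{\mu (1-\mu )\}\) with \(\mu =P(Y^*=1|X)\) under the assumed model: taking the conditional expectation of \(Y^*\) given \(X\) first, and using \(E(Y^*|X)=\mu _0\) under the true model, the expectation would take the form

\[
E_X\left[ \frac{\mu _0-\mu }{\mu (1-\mu )}\{2H(\eta )-1\}\right] ,
\]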

where \(\eta _0\) is equal to \(\eta \) evaluated at the true value of \(\varvec{\beta }\), and \(\mu _0 =(2\pi -1)G(\eta _0)+1-\pi \), as defined in (4), is the mean of \(Y^*\) given X under the correct model evaluated at the true parameter values. Setting this expectation equal to zero gives the first estimating equation in (2). Similarly, the expectations of the second and the third score functions in (17) with respect to the true distribution of \((Y^*, X)\) are given by
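By the same conditioning argument, and again writing \(H'\) for the derivative of \(H\) (an assumed notation), a sketch of the two expectations is

\[
(2\pi -1)E_X\left[ \frac{\mu _0-\mu }{\mu (1-\mu )}H'(\eta )\right] \quad \text{and}\quad
(2\pi -1)E_X\left[ \frac{\mu _0-\mu }{\mu (1-\mu )}H'(\eta )X\right] ,
\]

where the constant factor \(2\pi -1\) can be dropped from the resulting estimating equations whenever \(\pi \ne 1/2\).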

respectively. Setting these two expectations equal to zero gives the second and the third equations in (2).
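The score functions in this appendix can be checked numerically. The following Python sketch compares analytic derivatives of \(\ell (\pi , \varvec{\beta })\) with central finite differences at a single data point. The logistic choice for \(H\), the function names, and the parameter values are illustrative assumptions and are not taken from the article.

```python
import numpy as np

def H(eta):
    """Assumed link H: logistic CDF (an illustrative choice only)."""
    return 1.0 / (1.0 + np.exp(-eta))

def H_prime(eta):
    """Derivative of the logistic CDF."""
    h = H(eta)
    return h * (1.0 - h)

def mu(pi, b0, b1, x):
    """P(Y*=1|X) = (2*pi - 1) * H(eta) + 1 - pi, with eta = b0 + b1 * x."""
    return (2.0 * pi - 1.0) * H(b0 + b1 * x) + 1.0 - pi

def loglik(pi, b0, b1, y_star, x):
    """Log-likelihood of one reclassified observation (y_star, x)."""
    m = mu(pi, b0, b1, x)
    return y_star * np.log(m) + (1 - y_star) * np.log(1 - m)

def scores(pi, b0, b1, y_star, x):
    """Analytic scores with respect to (pi, b0, b1) via the chain rule."""
    eta = b0 + b1 * x
    m = mu(pi, b0, b1, x)
    w = (y_star - m) / (m * (1 - m))  # d loglik / d mu
    return np.array([
        w * (2 * H(eta) - 1),
        w * (2 * pi - 1) * H_prime(eta),
        w * (2 * pi - 1) * H_prime(eta) * x,
    ])

def numeric_scores(pi, b0, b1, y_star, x, eps=1e-6):
    """Central finite-difference approximation to the same three scores."""
    theta = np.array([pi, b0, b1], dtype=float)
    grad = np.zeros(3)
    for j in range(3):
        step = np.zeros(3)
        step[j] = eps
        up = loglik(*(theta + step), y_star, x)
        down = loglik(*(theta - step), y_star, x)
        grad[j] = (up - down) / (2 * eps)
    return grad
```

Calling `scores(0.8, -0.5, 1.2, 1, 0.7)` and `numeric_scores(0.8, -0.5, 1.2, 1, 0.7)` should give vectors that agree to within finite-difference error. Any other differentiable CDF could replace the logistic `H`, provided `H_prime` is changed to match.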

Cite this article

Huang, X. Improved wrong-model inference for generalized linear models for binary responses in the presence of link misspecification. Stat Methods Appl 30, 437–459 (2021). https://doi.org/10.1007/s10260-020-00529-3

DOI: https://doi.org/10.1007/s10260-020-00529-3