Abstract

MITCA (homework implementation method) was designed to turn homework into an educational resource capable of improving students’ self-regulation of learning and school engagement. In this article, following the current theoretical framework, we evaluate the impact of the MITCA method on school engagement in students in the 5th and 6th years of Primary Education. While the control group of students who did not participate in the 12 weeks of MITCA (N = 431; 61% of 5th grade) worsened significantly in emotional, behavioral, and cognitive engagement, the pre-post differences did not reach significance for the group that participated in MITCA, which even showed a tendency to improve. After the intervention, the students who participated in MITCA (N = 533; 50.6% of 5th grade) reported greater emotional and behavioral engagement than the students in the control group. MITCA students showed positive emotions, were happier in school and more interested in the classroom, paid more attention in class, and were more attentive to school rules. The conditions for homework prescription proposed by MITCA would not only restrain the decline in engagement found in the last years of Primary Education but would also improve students’ emotional and behavioral engagement in school. In the light of the results, a series of educational strategies related to the characteristics of these tasks, such as the frequency of prescription and the type of correction, are proposed.

Introduction

Teachers prescribe homework with diverse intentions, such as revising or practicing what has been worked on in class and/or expanding academic skills, preparing new content, or ensuring that every student participates in their learning processes (Epstein & Van Voorhis, 2001). At this point, achieving a homework prescription that effectively favors school engagement and promotes student autonomy in their learning process has become a challenge (Rodríguez et al., 2020). With the ultimate purpose of turning school homework into a valuable educational resource, this quasi-experimental investigation was developed to verify to what extent the implementation of the MITCA method (homework implementation method) (Valle & Rodríguez, 2020) contributes to students’ school engagement.

The study of school engagement has acquired great relevance in education in the last decade (Boekaerts, 2016; Christenson et al., 2012), to the extent that it has been linked, among other variables, to early school dropout (Wang & Fredricks, 2014) and the disruptive behavior of adolescents (O’Toole & Due, 2015; Wang & Fredricks, 2014). Indeed, the available evidence has linked school engagement with satisfaction in school (King & Gaerlan, 2014) and even life satisfaction among students (Liang et al., 2016; Martin et al., 2014).

In the academic field, previous research has shown that achievement and learning are positively linked to school engagement (e.g., Froiland & Worrell, 2016; Motti-Stefanidi et al., 2015; Tomás et al., 2016). The interaction of school engagement with academic motivation has also been evidenced in terms of perception of competence (Li et al., 2010), dedication of effort, and persistence (Skinner et al., 2009).

Prescription of quality homework

In an attempt to ensure that homework contributes to promoting a more positive attitude toward school (Buijs & Admiraal, 2013; Ramdass & Zimmerman, 2011), favors academic involvement, and protects the emotional well-being and perception of student competence (Dettmers et al., 2011; Ramdass & Zimmerman, 2011), different authors have identified conditions for the prescription of quality homework (see, e.g., Coutts, 2004; Marzano & Pickering, 2007; Rosário et al., 2018; Rosário et al., 2019; Vatterott, 2010, among others). Taking these contributions into consideration, along with other studies that have incorporated homework as a topic within teaching programs aimed at improving organizational habits and student autonomy (Akioka & Gilmore, 2013; Breaux et al., 2019; Flunger et al., 2021; Gambill et al., 2008; Langberg, 2011), evidence related to homework characteristics and correction practices is compiled below.

Evidence on the conditions for prescribing quality homework

One of the conditions that seems to have the greatest consensus when prescribing quality homework is the need to establish and make explicit the purpose of the tasks (Coutts, 2004; Marzano & Pickering, 2007; Rosário et al., 2019). Ensuring that the goal of the assignments is clear and consistent with the type of tasks would be a fundamental element for the prescription of quality homework. Furthermore, it seems relevant to design tasks that students perceive as meaningful, useful, and interesting. In this line, assigning varied activities that both attend to the needs and preferences of the students and are adjusted to the academic curriculum could be understood as a condition for the prescription of quality homework (Akioka & Gilmore, 2013; Flunger et al., 2021; Marzano & Pickering, 2007; Rosário et al., 2019; Vatterott, 2010).

Evidence on homework correction practices

Making homework a valuable educational resource surely requires feedback that incorporates frequent and personalized comments on the homework itself, including the correct answers, if applicable. Also, teachers’ feedback on homework should include notes to improve and/or advice that can be extrapolated to exam situations (Akioka & Gilmore, 2013). In addition to constituting a reference for the teacher, homework correction and feedback would contribute to the student’s own self-assessment (Rosário et al., 2019), and, specifically, to the recognition of their difficulties (Bang, 2012; Epstein & Van Voorhis, 2001).

In summary, understanding that quality homework must consider, at least, conditions related to its prescription and correction, and given that, as far as we know, there are no programs or methods that specify the set of guidelines to follow, the MITCA method (Valle & Rodríguez, 2020) is designed.

MITCA and school engagement

The MITCA method has been designed by establishing five conditions:

1) Not only revision or post-topic tasks should be prescribed, but also it is necessary to assign similar proportions of revision, organization, and production tasks that also include pre-topic tasks (Varied Tasks),

2) Tasks are described by the mental work they involve and the content they address (Specific Tasks),

3) The teacher must convey the usefulness, interest, importance and/or applicability of homework (Worthwhile Tasks),

4) Tasks are prescribed weekly and the students establish their timeslots in which to do them (Weekly Tasks), and

5) Tasks are corrected weekly, either in the classroom or individually, differentiating between aspects to improve and positive points (Evaluated Tasks).

These five conditions for the prescription of homework are synthesized as Varied, Specific, Worthwhile, Weekly, and Evaluated. Thus, the method contemplates that, when dealing with homework at home, students not only carry out information identification tasks (e.g., marking, writing, and/or reviewing literal information) and organizational tasks (e.g., differentiating and ordering ideas) but are also involved in more constructive forms of participation (e.g., paraphrasing or writing an opinion) and interactive ones (e.g., preparing an explanation for others or defending an argument in public) (Varied Tasks—STEP 1 of the MITCA method).

The Varied Tasks condition, which implicitly calls for the increased prescription of more elaborate and generally more challenging extension tasks for learners, should positively affect learners’ cognitive and behavioral engagement (see, e.g., Dunlosky et al., 2013; Fiorella & Mayer, 2015).

On the other hand, taking into account the evidence around the need to establish and explain the purpose of the tasks and based on the TASC conditions developed by McCardle et al. (2016) for the establishment of learning purposes, MITCA refers the teacher to the need to define the tasks that are prescribed in terms of cognitive operation and content (Specific Tasks—STEP 2 of the MITCA method). It is expected that setting clear purposes for homework allows progress to be monitored, difficulties to be recognized, and revision opportunities to increase (McCardle et al., 2016). Furthermore, it has been linked to student engagement in school (Shernoff, 2013).

MITCA maintains that the subjective value attributed to the tasks that are prescribed can be improved when expectations are adjusted, the intrinsic interest is adjusted, as far as possible, and the instrumental value of the same is identified (Worthwhile Tasks—STEP 3 of the MITCA method) (Eccles & Wigfield, 2002). Aligning homework with the syllabus and integrating it into classroom activities would enhance students’ perception of its usefulness, thereby contributing to their motivation and behavioral engagement in academic tasks (Núñez et al., 2019).

In this sense, it is understood that attributing some kind of recognition to tasks—for example, “this type of task will be in the exam” or “the best ones will be presented in class”—or instrumental value, e.g., “they will help you learn to buy well at sales or to learn to speak in public”, will improve affective school engagement (Katz & Assor, 2006).

In summary, based on the literature around the characteristics of the tasks that are prescribed, it is hypothesized that the Varied Tasks, Specific Tasks, and Worthwhile Tasks conditions of the MITCA method improve, or at least help to sustain, cognitive, behavioral, and/or emotional engagement with the school.

The MITCA method proposes a weekly task prescription, instigating the teacher to collaborate with students in establishing their own timetable to complete them in the first six weeks of implementation of the presented method (Weekly Tasks—STEP 4 of the MITCA method). In various programs that aim to promote students’ autonomy and self-regulation through homework, certain conditions have been considered, such as planning schoolwork outside of school hours, estimating the time required to complete tasks (Langberg, 2011), and providing instructions for organizing agendas and materials (Gambill et al., 2008).

Finally, MITCA also includes the informative and motivating feedback condition as a feedback strategy (Evaluated Tasks—STEP 5 of the MITCA method). It is understood that feedback that provides individual information on improvements and guides on aspects to improve—informative feedback—becomes an educational resource capable of optimizing the learner’s self-regulatory skills and increasing their academic engagement. In fact, we have evidence to suggest that feedback that incorporates both criticism and praise, directed at controllable aspects, such as effort or dedication, will contribute to students’ motivational engagement (Cunha et al., 2018; Fong et al., 2019).

Likewise, it is expected that the weekly assignment of tasks (Weekly Tasks—STEP 4 of the MITCA method), together with regular informative and motivating feedback (Evaluated Tasks—STEP 5 of the MITCA method), contributes to improving or sustaining students’ school engagement.

In order to verify if the prescription of homework following these five conditions of the MITCA method during 12 school weeks contributes, indeed, to the motivational, cognitive, and behavioral engagement of the students, this quasi-experimental research is proposed with a control group (CG) and measures pre- and post-intervention.

Materials and methods

This study presents a quasi-experimental research design composed of a control group and an experimental group evaluated in two stages (pre-test and post-test). Specifically, the MITCA method was implemented for 12 weeks (a full term of the Spanish academic year) in the subjects of Spanish, Galician, and Mathematics. These three subjects were chosen for this study as they are core subjects in the Spanish academic curriculum. They are common across the different grades explored and are given greater weight within the academic curriculum.

A quasi-experimental study (with classes assigned by convenience to the experimental group, EG, or the control group, CG) was designed to observe the impact of using the MITCA method for 12 school weeks on school engagement, differentiating between cognitive, motivational, and behavioral engagement with the school, in 5th- and 6th-grade Primary School students. Data were collected at two measurement time points (pre-test and post-test) for the dependent variables. Therefore, the two groups who participated in this research are characterized by the following conditions:

- Control condition: A group of teachers with their respective pupils who assign and perform homework under their convictions, without prior training.

- Experimental condition: A group of teachers with their respective pupils who assign and perform homework under the characteristics of the MITCA method, with previous training in this method and weekly follow-ups by the researchers.

Participants

In this study, a total of 43 teachers participated, teaching either Spanish or Galician Language and/or Mathematics to the 5th- and 6th-grade students in Primary Education. Specifically, there were 23 teachers from the 5th grade and 20 teachers from the 6th grade. The student sample comprised 964 individuals, consisting of 469 boys and 495 girls. These participants were drawn from 20 Primary Education schools located in the Autonomous Community of Galicia (Spain). While convenience sampling was employed to select the participants, the research implementation procedure utilized official channels associated with the teacher training centers of the government of Galicia. This approach ensured that all Primary Schools within the target population had an equal opportunity to be included in the sample.

There were 17 schools in the experimental group, consisting of 11 public schools and 6 subsidized schools. All these schools were situated in an urban context, and the socio-economic level of the area was classified as medium–high according to the Spanish national statistics (National Institute of Statistics, 2020).

In this research, the decision was made to focus the analysis on students in the 5th and 6th grades of Primary Education. These grades were chosen as they represent the final years required to complete this educational stage before transitioning to Secondary Education, which often takes place in a different school center. In Spain, Primary school students have one teacher as their tutor for all or most subjects, except for specialized areas such as English or Physical Education. Meanwhile, Secondary Education students have different teachers for each subject.

The sample was differentiated into an experimental group and a control group. The experimental group is made up of 24 teachers (12 from the 5th year and 12 from the 6th year) and 533 students (270 students from the 5th year and 262 students from the 6th year), while the control group is made up of 19 teachers (11 from the 5th grade and 8 from the 6th grade) and 431 students (263 students from the 5th grade and 168 students from the 6th grade).

All participants were evaluated before and after the intervention. Ethical and biosafety implications were previously approved within the framework of the project developed for this research. Specifically, the study adhered to the guidelines outlined in the Declaration of Helsinki and was conducted in accordance with the ethical standards set by the Ethics Committee of the University of A Coruña, given its involvement with human participants.

Instruments

Students’ school engagement was measured through the Ramos-Díaz et al. (2016) validated Spanish version of The School Engagement Measure (SEM) by Fredricks et al. (2005), which contains 19 items with a 5-point Likert-type response format (where 1 is “never” and 5 is “always”). The exploratory factor analysis of the items in the Spanish adaptation allows us to replicate the original structure, differentiating behavioral engagement (α = .82) (example items: In class I pay attention; When I am in class I dedicate myself to work (study); I follow the rules that mark in my school), emotional engagement (α = .80) (example items: I have fun in class; I am happy at school; I like being at school), and cognitive engagement (α = .72) (example items: I read extra books about things we do at school; I try to watch TV shows about things we do at school; When I read a book I ask myself questions to make sure I understand what I read).
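
The reliability coefficients reported above are Cronbach's alpha values. As a reminder of what that coefficient summarizes, the following is a minimal sketch of the standard formula, α = k/(k − 1) · (1 − Σ item variances / variance of total scores). The function name `cronbach_alpha` and the `toy_responses` data are hypothetical illustrations, not the study's data or the authors' analysis script.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(responses[0])                      # number of items in the subscale
    item_columns = list(zip(*responses))       # one tuple of scores per item
    sum_item_variances = sum(variance(col) for col in item_columns)
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum_item_variances / total_variance)


# Hypothetical answers of four students to three 5-point items (not study data).
toy_responses = [
    [4, 5, 4],
    [2, 3, 2],
    [5, 5, 4],
    [3, 3, 3],
]
alpha = cronbach_alpha(toy_responses)
print(f"alpha = {alpha:.2f}")
```

Values closer to 1 indicate that the items of a subscale vary together, which is why each engagement dimension is reported with its own coefficient.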

Procedure

Several channels were used to recruit the sample. The participation of teachers who taught either Spanish or Galician and/or Mathematics in the 5th and 6th year of Primary Education was requested through the six Training and Resource Centers (CFRs) dependent on the Xunta de Galicia (Government of the Autonomous Community of Galicia, Spain). The official social networks of the Autonomous Center for Training and Innovation (CAFI) of this same institution and the social networks of the research group in Educational Psychology of the University of A Coruña were also used. Four meetings were arranged, one in each of the provinces of the autonomous community of Galicia (Spain), to make the objective of the research known to the teachers who showed interest in participating. Twenty-four of the teachers who attended this first meeting agreed to implement the homework prescription method for 12 weeks during the second school term of the academic year (experimental group); the academic year for Primary Education in Spain is divided into three terms.

Nineteen teachers agreed to participate as a control group, committing themselves to continue with their conventional homework prescription without incorporating any change to their usual practice during the 12 weeks of implementation of the method. All the teachers who participated in the intervention received a training seminar of approximately one hour on the principles and conditions for the prescription and correction of homework following the MITCA method (Vieites, 2022).

In the middle of the intervention, 6 weeks after the implementation of the method began, the teachers that constituted the experimental group attended a second meeting whose purpose was to collect impressions on the suitability of the method, reporting on how it was articulated in their habits, routines, and particular characteristics.

On Monday or Tuesday of each of the 12 weeks of the intervention, teachers in the experimental group prescribed homework for their students in the classroom. The prescription included (a) the mental process, e.g., identify, organize, and solve, (b) the academic content involved, e.g., adjectives, numbers, and quantity problems, and (c) the value that had been expressly attributed to those tasks, e.g., usefulness, interest, importance, and/or applicability of homework. The prescription of different types of tasks (review vs organization vs production tasks; post-topic vs pre-topic tasks) had to be proportional at the end of the 12-week intervention. From week two of the intervention, the teachers in the experimental group reported not only the tasks they prescribed but also the evaluation procedure adopted. During the 12 weeks of intervention, the correction of the prescribed tasks had to be either individualized (the notebooks were collected and corrected, indicating errors and strong points) or carried out in class, with tasks solved out loud and/or on the blackboard, one by one. The use of both correction procedures was to be proportional by the end of the intervention.

Throughout the intervention period, the teachers in the experimental group reported the homework they prescribed to their students to the research group via email. They were encouraged to ask questions and share any difficulties they encountered while incorporating the method into their teaching practice. Moreover, they received constructive feedback from the research group on the content of the tasks assigned, along with suggestions for improvement and corrections.

The data referring to the variables under study were collected during school hours by research collaborators, with the prior consent of the management team and the students’ families. The variables related to homework and student school engagement were obtained in the second term of 2020–21.

Data analysis

To address the hypotheses of this research, intergroup differences were analyzed both before and after a 12-week intervention. Pre-test and post-test comparisons were conducted considering the type of prescription (conventional vs MITCA) as a factor and measuring school engagement through cognitive engagement, behavioral engagement, and emotional engagement as dependent variables. The magnitude of the differences was interpreted using Cohen’s (1988) criteria, where Cohen’s d values below 0.20 indicate a negligible effect, values from 0.20 to 0.49 indicate a small effect, values from 0.50 to 0.79 indicate a moderate effect, and values of 0.80 or above indicate a large effect.
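
To make the effect-size criteria concrete, the sketch below computes Cohen's d for two independent groups and attaches a verbal label. It assumes the pooled-standard-deviation form of d and contiguous bins at Cohen's conventional benchmarks of 0.20, 0.50, and 0.80; the article does not state which variant of d was used, and the names `cohens_d` and `effect_label` are hypothetical.

```python
import math
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Cohen's d for two independent samples, using the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled_var)


def effect_label(d):
    """Verbal label for |d|, assuming contiguous bins at 0.20, 0.50, and 0.80."""
    size = abs(d)
    if size < 0.20:
        return "negligible"
    if size < 0.50:
        return "small"
    if size < 0.80:
        return "moderate"
    return "large"


# Hypothetical engagement scores for two small groups (not study data).
d = cohens_d([2, 4, 6], [1, 3, 5])
print(round(d, 2), effect_label(d))
```

Applied to the d values reported in the Results, `effect_label(0.28)` and `effect_label(0.24)` would both fall in the small range, while `effect_label(0.14)` would be negligible.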

Results

Since the sample selection procedure was convenience-based, our initial objective was to examine whether there were any baseline differences in cognitive, behavioral, and emotional engagement between the control group (n = 431) and the experimental group (n = 533).

Table 1 contains pre-test and post-test data, including means, standard deviations, skewness, kurtosis, and the number of students in each group.

While no significant differences were found in cognitive or behavioral engagement between the two groups, there were reported differences in emotional engagement favoring the experimental group (t = 2.147, p < .05, d = .14) (see Fig. 1).

After the intervention, the comparison of means shows significant differences between the students who participated in the 12 weeks of MITCA and those who did not, both in emotional engagement (t = 4.185, p < .001, d = .28) and in behavioral engagement (t = 3.610, p < .001, d = .24). No significant differences were found in cognitive engagement (see Fig. 2).

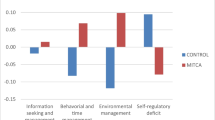

According to our results, the group of students who did not participate in the 12-week MITCA significantly worsened their emotional (t = 4.084, p < .001, d = .21), behavioral (t = 3.743, p < .001, d = .20), and cognitive (t = 2.17, p < .05, d = .11) engagement (see Fig. 3).

Although the pre-post differences for the group that participated in MITCA did not reach statistical significance, higher average scores were observed for all three dimensions of school engagement after 12 weeks (see Fig. 4).
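
The two kinds of comparison reported in this section can be sketched as follows: a between-group t statistic (experimental vs control at one time point) and a within-group t statistic (pre vs post for the same students). This is an illustration only. It assumes Student's equal-variance test for the independent groups and a paired-samples test for the pre-post contrast, neither of which the article specifies, and the function names and toy scores are hypothetical.

```python
import math
from statistics import mean, stdev, variance


def independent_t(group_a, group_b):
    """Student's t for two independent groups (equal variances assumed)."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    standard_error = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(group_a) - mean(group_b)) / standard_error


def paired_t(pre, post):
    """Paired-samples t for the same students measured before and after."""
    diffs = [after - before for before, after in zip(pre, post)]
    return mean(diffs) / (stdev(diffs) / math.sqrt(len(diffs)))


# Hypothetical scores (not study data).
t_between = independent_t([2, 4, 6], [1, 3, 5])
t_within = paired_t(pre=[3, 4, 5, 4], post=[2, 4, 3, 3])
print(round(t_between, 3), round(t_within, 3))
```

A negative `t_within` corresponds to scores that dropped from pre-test to post-test, the pattern described for the control group.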

Discussion

Previous research suggests that the decline in school engagement typically begins in the last years of primary school, which is the focus of this study (Archambault & Dupéré, 2017; Bae et al., 2020; Fredricks et al., 2019). In line with this, our findings indicate a significant decline in school engagement among participants in the control group, whereas no such decline is observed among MITCA students. Similarly, some studies have indicated that school engagement tends to progressively decrease as students progress through their schooling (see, e.g., Rosário et al., 2019). To examine the potential impact of appropriate homework prescription on students’ school engagement, this research aims to evaluate the effectiveness of the MITCA method in the two final grades of Primary Education (Vieites, 2022).

Overall, the findings of this study support the hypothesis of previous literature regarding the usefulness of homework as a strategy to improve students’ school engagement (Fong et al., 2016). Specifically, after 12 weeks of MITCA implementation, participants followed the rules set by their school, paid attention in class, and were more engaged in studying or performing teacher-mandated tasks—behavioral engagement—than students in the control group. The students in the experimental group of this research also reported being happier at school, having more fun, and enjoying themselves more in the classroom—emotional engagement—after 12 weeks than the students who functioned as controls.

As suggested by previous literature, the diversification of tasks assigned for home completion (Varied Tasks—STEP 1 of the MITCA method) may have contributed to consolidating behavioral engagement and facilitating cognitive engagement in the experimental group. This diversification allows for better adaptation of tasks to students’ learning rhythms and styles (e.g., Rosário et al., 2019; Vatterott, 2010). Furthermore, the specification of processes and content (Specific Tasks—STEP 2 of the MITCA method) and the emphasis on the value of tasks when assigned (Worthwhile Tasks—STEP 3 of the MITCA method) enable students to establish clear connections between homework, classroom activities, and academic achievement. These factors may explain the sustained sense of responsibility and behavioral disposition observed among students in the experimental group after 12 weeks of intervention (e.g., Katz & Assor, 2006; Shernoff, 2013). The observed improvement in behavioral and emotional school engagement may also be attributed to the feedback condition proposed by the MITCA method (Evaluated Tasks—STEP 5 of the MITCA method), as it contributes to students’ sense of control over the learning process and enhances their confidence (Fong et al., 2019).

In this study, it was expected that cognitive engagement would be higher among students in the experimental group after the intervention. This expectation was based on the cognitive operationalization of homework (Specific Tasks—STEP 2 of the MITCA method) and the inclusion of varied tasks (Varied Tasks—STEP 1 of the MITCA method), which implicitly promote more active, constructive, and interactive learning approaches (Dunlosky et al., 2013; Fiorella & Mayer, 2015). While cognitive disengagement was observed in the control group, no significant differences in cognitive engagement were observed between the groups after 12 weeks of intervention.

We interpret that the ability to self-regulate and the use of deep learning strategies associated with cognitive engagement (Fredricks et al., 2004; Wang et al., 2016) may require more extensive intervention. In this regard, we note that studies such as Wang and Fredricks (2014) and Quin et al. (2017) in Secondary Education obtained significant results in their interventions for emotional and behavioral engagement, but not for cognitive engagement. The absence of lagged measures in the research design, which would allow us to observe long-term trends, is acknowledged as a limitation of this work. At the same time, the incorporation of resources or instructional strategies specifically aimed at cognitive support to approach tasks at home is proposed as a future line of work.

In any case, we note at this point that different authors have warned about a certain disarticulation of the construct of cognitive engagement, which could also be more specific to the subject or content than other dimensions of school engagement (Quin et al., 2017).

The items measuring the cognitive engagement focus on tasks such as revision work, self-monitoring strategies, and self-evaluation. However, the cognitive operationalization of the MITCA method may not fully capture the cognitive engagement construct after only 12 weeks, lacking qualitative aspects. In a study by Li and Lajoie (2022), where they present an integrated model of cognitive engagement in self-regulated learning, they highlight the importance of incorporating the qualitative aspect of cognitive engagement, concerning students’ learning strategies and their adaptation. Future research could explore interventions that consider both quantitative and qualitative aspects of cognitive engagement, integrating them into the intervention design.

Additionally, it is worth noting that the intervention time for this dimension of engagement may not have been sufficient. It would be valuable to investigate whether longer intervention periods (e.g., the entire school year instead of one term) would lead to more significant changes. Furthermore, considering delayed measures could provide further insights into the long-term effects of the intervention.

Finally, while positive trends are observed when comparing the results of the control group and experimental group, the absence of statistically significant results may be attributed to the relatively short intervention time of 12 weeks, during which only the prescription and correction conditions of homework were affected. Notably, measures of engagement, where significant changes are yet to occur, tend to require more time to exhibit noticeable effects. Additionally, it is important to acknowledge the limitation of this study, which is the absence of delayed measures. Further research with extended intervention periods and delayed measurements could provide deeper insights into the long-term impact of the MITCA method on students’ engagement.

Conclusion

Educational practices that aim at improving and/or maintaining school engagement appear to be key to securing the foundations of learning and preventing disengagement or dropout later on. Beyond the potential effect on academic performance, the results of this investigation should be interpreted with regard to the relevance of school engagement for the prevention of school failure or dropout, satisfaction in school, and even personal happiness and self-fulfillment (Clark & Malecki, 2019; Gutiérrez et al., 2017; Liang et al., 2016; Martin et al., 2014; Rodríguez-Fernández et al., 2016).

Previous research has sought to promote student engagement in Primary Education through various means, such as providing classroom support, fostering curiosity, or emphasizing self-awareness of one’s engagement with school tasks (e.g., Schardt et al., 2019; Vaz et al., 2015). In this context, the MITCA method could specify and complement practices and educational strategies specifically aimed at promoting or maintaining school engagement, by differentiating the characteristics of the prescribed tasks, the frequency of prescription, and the type of feedback provided.

In terms of the characteristics of the prescribed tasks, two specific strategies can be proposed to improve and/or maintain student engagement in the final years of Primary Education. First, ensuring that students understand the purpose of the assigned task by specifying the mental processes, academic content involved, and the attributed value (Coutts, 2004; Marzano & Pickering, 2007; Rosário et al., 2018; Rosário et al., 2019; Vatterott, 2010). Second, prescribing varied tasks, specifically incorporating elaborative and pre-topic tasks (Akioka & Gilmore, 2013; Flunger et al., 2021; Rosário et al., 2019; Vatterott, 2010). These strategies can be considered as specific approaches to enhance and/or contain student engagement at the end of this educational stage.

Similarly, the weekly prescription of tasks, along with teacher support in learning time management (Gambill et al., 2008; Langberg, 2011), and the combination of individualized correction, including personalized comments on the homework itself, pointing out errors and strong points, and solving them in class, either by reading aloud or through class discussion, one task at a time (Akioka & Gilmore, 2013; Rosário et al., 2019), can be understood as specific steps to promote school engagement in light of the findings of this study.

The absence of follow-up measures, which prevents considering the long-term effects of the intervention in general and the potential changes in cognitive engagement specifically, is understood as a limitation in this study. Although the current results suggest the promising value of this intervention, which involved simple and feasible modifications in the practices of participating teachers, future research should consider including follow-up measures.

While we understand that the end of the Primary Education stage can provide a crucial framework for the promotion of self-regulatory skills and is particularly sensitive to the decline in school engagement, the impact of quality homework prescription in other educational stages remains open for future investigation.

Data Availability

The data that support the findings of this study are available from the corresponding author, upon reasonable request.

References

Akioka, E., & Gilmore, L. (2013). An intervention to improve motivation for homework. Australian Journal of Guidance and Counselling, 23(1), 34–48. https://doi.org/10.1017/jgc.2013.2

Archambault, I., & Dupéré, V. (2017). Joint trajectories of behavioral, affective, and cognitive engagement in elementary school. The Journal of Educational Research, 110(2), 188–198. https://doi.org/10.1080/00220671.2015.1060931

Bae, C. L., Les DeBusk-Lane, M., & Lester, A. M. (2020). Engagement profiles of elementary students in urban schools. Contemporary Educational Psychology, 62, 1–13. https://doi.org/10.1016/j.cedpsych.2020.101880

Bang, H. (2012). Promising homework practices: teachers’ perspectives on making homework for newcomer immigrant students. The High School Journal, 95(2), 3–31. https://doi.org/10.1353/hsj.2012.0001

Boekaerts, M. (2016). Engagement as an inherent aspect of the learning process. Learning and Instruction, 43, 76–83. https://doi.org/10.1016/j.learninstruc.2016.02.001

Breaux, R. P., Langberg, J. M., Bourchtein, E., Eadeh, H.-M., Molitor, S. J., & Smith, Z. R. (2019). Brief homework intervention for adolescents with ADHD: Trajectories and predictors of response. School Psychology, 34(2), 201–211. https://doi.org/10.1037/spq0000287

Buijs, M., & Admiraal, W. (2013). Homework assignments to enhance student engagement in secondary education. European Journal of Psychology of Education, 28(3), 767–779. https://doi.org/10.1007/s10212-012-0139-0

Christenson, S. L., Reschly, A. L., & Wylie, C. (Eds.). (2012). Handbook of research on student engagement. Springer Science + Business Media. https://doi.org/10.1007/978-1-4614-2018-7

Clark, K. N., & Malecki, C. K. (2019). Academic determination scale: Psychometric properties and associations with achievement and life satisfaction. Journal of School Psychology, 72, 49–66. https://doi.org/10.1016/j.jsp.2018.12.001

Cohen, J. (1988). Statistical Power Analysis for the Behavioral Sciences. LEA.

Coutts, P. M. (2004). Meanings of homework and implications for practice. Theory Into Practice, 43(3), 182–188. https://doi.org/10.1207/s15430421tip4303_3

Cunha, J., Rosário, P., Núñez, J. C., Nunes, A. R., Moreira, T., & Nunes, T. (2018). “Homework feedback is…”: Elementary and middle school teachers’ conceptions of homework feedback. Frontiers in Psychology, 9, 1–20. https://doi.org/10.3389/fpsyg.2018.00032

Dettmers, S., Trautwein, U., Lüdtke, O., Goetz, T., Frenzel, A. C., & Pekrun, R. (2011). Students’ emotions during homework in mathematics: Testing a theoretical model of antecedents and achievement outcomes. Contemporary Educational Psychology, 36(1), 25–35. https://doi.org/10.1016/j.cedpsych.2010.10.001

Dunlosky, J., Rawson, K. A., Marsh, E. J., Nathan, M. J., & Willingham, D. T. (2013). Improving students’ learning with effective learning techniques: Promising direction from cognitive and educational psychology. Psychological Science and the Public Interest, 14, 4–58. https://doi.org/10.1177/1529100612453266

Eccles, J. S., & Wigfield, A. (2002). Motivational beliefs, values, and goals. Annual Review of Psychology, 53, 109–132. https://doi.org/10.1146/annurev.psych.53.100901.135153

Epstein, J. L., & Van Voorhis, F. L. (2001). More than minutes: Teachers’ roles in designing homework. Educational Psychologist, 36(3), 181–193. https://doi.org/10.1207/S15326985EP3603_4

Fiorella, L., & Mayer, R. E. (2015). Learning as a generative activity: Eight learning strategies that promote understanding. Cambridge University Press. https://doi.org/10.1017/CBO9781107707085

Flunger, B., Gaspard, H., Häfner, I., Brisson, B. M., Dicke, A. L., Parrisius, C., Nagengast, B., & Trautwein, U. (2021). Relevance interventions in the classroom: A means to promote students’ homework motivation and behavior. AERA Open, 7(1), 1–20. https://doi.org/10.1177/23328584211052049

Fong, C. J., Warner, J. R., Williams, K. M., Schallert, D. L., Chen, L., Williamson, Z. H., & Lin, S. (2016). Deconstructing constructive criticism: the nature of academic emotions associated with constructive, positive, and negative feedback. Learning and Individual Differences, 49, 393–399. https://doi.org/10.1016/j.lindif.2016.05.019

Fong, C. J., Patall, E. A., Vasquez, A. C., & Stautberg, S. (2019). A meta-analysis of negative feedback on intrinsic motivation. Educational Psychology Review, 31, 121–162. https://doi.org/10.1007/s10648-018-9446-6

Fredricks, J. A., Blumenfeld, P. C., & Paris, A. (2004). School engagement: Potential of the concept, state of the evidence. Review of Educational Research, 74(1), 59–109. https://doi.org/10.3102/00346543074001059

Fredricks, J. A., Blumenfeld, P. C., Friedel, J., & Paris, A. (2005). School engagement. In K. A. Moore & L. Lippman (Eds.), What do children need to flourish? The search institute series on developmentally attentive community and society (Vol. 3, pp. 305–321). Springer. https://doi.org/10.1007/0-387-23823-9_19

Fredricks, J. A., Reschly, A. L., & Christenson, S. L. (Eds.). (2019). Handbook of student engagement interventions: Working with disengaged students. Academic Press.

Froiland, J. M., & Worrell, F. C. (2016). Intrinsic motivation, learning goals, engagement, and achievement in a diverse high school. Psychology in the Schools, 53(3), 321–336. https://doi.org/10.1002/pits.21901

Gambill, J. M., Moss, L. A., & Vescogni, C. D. (2008). The impact of study skills and organizational methods on student achievement [Unpublished master’s thesis]. University of Saint Xavier.

Gutiérrez, M., Tomás, J. M., Romero, I., & Barrica, J. M. (2017). Perceived social support, school engagement and satisfaction with school. Revista de Psicodidáctica (English ed.), 22(2), 111–117. https://doi.org/10.1016/j.psicoe.2017.05.001

Katz, I., & Assor, A. (2006). When choice motivates and when it does not. Educational Psychology Review, 19, 429–442.

King, R. B., & Gaerlan, M. J. M. (2014). High self-control predicts more positive emotions, better engagement, and higher achievement in school. European Journal of Psychology of Education, 29, 81–100. https://doi.org/10.1007/s10212-013-0188-z

Langberg, J. M. (2011). Homework, organization and planning skills (HOPS) Interventions: A treatment manual. National Association of School Psychologists (NASP) Publications.

Li, S., & Lajoie, S. P. (2022). Cognitive engagement in self-regulated learning: An integrative model. European Journal of Psychology of Education, 37(3), 833–852. https://doi.org/10.1007/s10212-021-00565-x

Li, Y., Lerner, J., & Lerner, R. (2010). Personal and ecological assets and academic competence in early adolescence: The mediating role of school engagement. Journal of Youth and Adolescence, 39(7), 801–815. https://doi.org/10.1007/s10964-010-9535-4

Liang, B., Lund, T. J., Mousseau, A. M., & Spencer, R. (2016). The mediating role of engagement in mentoring relationships and self-esteem among affluent adolescent girls. Psychology in the Schools, 53(8), 848–860. https://doi.org/10.1002/pits.21949

Martin, A. J., Papworth, B., Ginns, P., & Liem, G. A. D. (2014). Boarding school, academic motivation and engagement, and psychological well-being: A large-scale investigation. American Educational Research Journal, 51(5), 1007–1049. https://doi.org/10.3102/0002831214532164

Marzano, R. J., & Pickering, D. J. (2007). The case for and against homework. Educational Leadership, 64(6), 74–79.

McCardle, L., Webster, E. A., Haffey, A., & Hadwin, A. F. (2016). Examining students’ self-set goals for self-regulated learning: Goal properties and patterns. Studies in Higher Education, 42(11), 2153–2169. https://doi.org/10.1080/03075079.2015.1135117

Motti-Stefanidi, F., Masten, A., & Asendorpf, J. B. (2015). School engagement trajectories of immigrant youth: Risks and longitudinal interplay with academic success. International Journal of Behavioral Development, 39(1), 32–42. https://doi.org/10.1177/0165025414533428

National Institute of Statistics (INE). (2020). Average income per person. National Institute of Statistics. Retrieved July 23, 2023, from https://inespain.maps.arcgis.com/apps/webappviewer/index.html?id=2ed4829bedbf438fa1c78dee5ab6cb10

Núñez, J. C., Regueiro, B., Suárez, N., Piñeiro, I., Rodicio, M. L., & Valle, A. (2019). Student perception of teacher and parent involvement in homework and student engagement: The mediating role of motivation. Frontiers in Psychology, 10, 1–16. https://doi.org/10.3389/fpsyg.2019.01384

O’Toole, N., & Due, C. (2015). School engagement for academically at-risk students: a participatory research project. The Australian Educational Researcher, 42, 1–17. https://doi.org/10.1007/s13384-014-0145-0

Quin, D., Hemphill, S. A., & Heerde, J. A. (2017). Associations between teaching quality and secondary students’ behavioral, emotional, and cognitive engagement in school. Social Psychology of Education, 20(4), 807–829. https://doi.org/10.1007/s11218-017-9401-2

Ramdass, D., & Zimmerman, B. J. (2011). Developing self-regulation skills: The important role of homework. Journal of Advanced Academics, 22(2), 194–218. https://doi.org/10.1177/1932202X1102200202

Ramos-Díaz, E., Rodríguez-Fernández, A., & Revuelta, L. (2016). Validation of the Spanish version of the School Engagement Measure (SEM). The Spanish Journal of Psychology, 19, E86. https://doi.org/10.1017/sjp.2016.94

Rodríguez, S., Piñeiro, I., Regueiro, B., & Estévez, I. (2020). Intrinsic motivation and perceived utility as predictors of student homework engagement. Revista de Psicodidáctica (English ed.), 25(2), 93–99. https://doi.org/10.1016/j.psicoe.2019.11.001

Rodríguez-Fernández, A., Ramos-Díaz, E., Ros, I., Fernández-Zabala, A., & Revuelta, L. (2016). Resiliencia e implicación escolar en función del sexo y del nivel educativo en educación secundaria. Aula abierta, 44(2), 77–82. https://doi.org/10.1016/j.aula.2015.09.001

Rosário, P., Núñez, J. C., Vallejo, G., Nunes, T., Cunha, J., Fuentes, S., & Valle, A. (2018). Homework purposes, homework behaviors, and academic achievement. Examining the mediating role of students’ perceived homework quality. Contemporary Educational Psychology, 53, 168–180. https://doi.org/10.1016/j.cedpsych.2018.04.001

Rosário, P., Cunha, J., Nunes, A. R., Moreira, T., Núñez, J. C., & Xu, J. (2019). “Did you do your homework?” Mathematics teachers’ homework follow-up practices at middle school level. Psychology in the Schools, 56(1), 92–108. https://doi.org/10.1002/pits.22198

Schardt, A. A., Miller, F. G., & Bedesem, P. L. (2019). The effects of CellF-monitoring on students’ academic engagement: A technology-based self-monitoring intervention. Journal of Positive Behavior Interventions, 21(1), 42–49. https://doi.org/10.1177/1098300718773462

Shernoff, D. (2013). Optimal learning environments to promote student engagement. Springer.

Skinner, E., Kindermann, T., Connell, J., & Wellborn, J. (2009). Engagement and disaffection as organizational constructs in the dynamics of motivational development. In K. Wentzel & A. Wigfield (Eds.), Handbook of motivation at school (pp. 223–245). Routledge.

Tomás, J. M., Gutiérrez, M., Sancho, P., Chireac, S. M., & Romero, I. (2016). El compromiso escolar (school engagement) de los adolescentes: Medida de sus dimensiones. Enseñanza & Teaching, 34(1), 119–135. https://doi.org/10.14201/et2016341119135

Valle, A., & Rodríguez, S. (2020). MITCA: Homework Implementation Method. University of A Coruña, Publication Service. https://doi.org/10.17979/spudc.9788497496360

Vatterott, C. (2010). Five hallmarks of good homework. Educational Leadership, 68(1), 10–15.

Vaz, S., Falkmer, M., Ciccarelli, M., Passmore, A., Parsons, R., Tan, T., & Falkmer, T. (2015). The personal and contextual contributors to school belongingness among primary school students. PLoS One, 10(4), e0123353. https://doi.org/10.1371/journal.pone.0123353

Vieites, T. (2022). Diseño e implementación de propuestas de prescripción de deberes escolares que mejoren el compromiso y la autorregulación del estudiante [Doctoral dissertation, University of A Coruña]. University of A Coruña, Publication Service. http://hdl.handle.net/2183/31240

Wang, M. T., & Fredricks, J. A. (2014). The reciprocal links between school engagement, youth problem behaviors, and school dropout during adolescence. Child Development, 85(2), 722–737. https://doi.org/10.1111/cdev.12138

Wang, M. T., Fredricks, J. A., Ye, F., Hofkens, T. L., & Linn, J. S. (2016). The math and science engagement scales: Scale development, validation, and psychometric properties. Learning and Instruction, 43, 16–26. https://doi.org/10.1016/j.learninstruc.2016.01.008

Acknowledgements

This study was funded by research projects EDU2013-44062-P (MINECO), EDU2017-82984-P (MEIC), and PID2021-125898NB-100 (MCI). The research was also supported by an FPI predoctoral grant (PRE2018-084938), an FPU predoctoral grant (FPU18-02191), and a predoctoral grant from the Xunta de Galicia (ED481A 2021/35), awarded to three of the authors.

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature (Universidade da Coruña/CISUG).

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Current Themes of Research:

Self-Regulation (Rodríguez, S., Rodríguez-Llorente, C., & Vieites, T.); Motivational Psychology (Rodríguez, S., & Valle, A.); Homework (Díaz-Freire, F., Rodríguez, S., Rodríguez-Llorente, C., Valle, A., & Vieites, T.); School engagement (Díaz-Freire, F., Rodríguez, S., Rodríguez-Llorente, C., Valle, A., & Vieites, T.).

Relevant Publications:

Rodríguez, S., Piñeiro, I., Regueiro, B., & Estévez, I. (2020). Intrinsic motivation and perceived utility as predictors of student homework engagement. Revista de Psicodidáctica, 25(2), 93–99. https://doi.org/10.1016/j.psicod.2019.11.001

Impact Factor JCR (2020): 3.225 (Q2).

Rodríguez, S., Núñez, J. C., Valle, A., Freire, C., Ferradás, M., & Rodríguez-Llorente, C. (2019). Relationship between students’ prior academic achievement and homework behavioral engagement: The mediating/moderating role of learning motivation. Frontiers in Psychology, 10, 1047. https://doi.org/10.3389/fpsyg.2019.01047

Impact Factor JCR (2019): 2.067 (Q2).

Valle, A., Piñeiro, I., Rodríguez, S., Regueiro, B., Freire, C., & Rosário, P. (2019). Time spent and time management on homework in elementary school students: A person-centered approach. Psicothema, 31(4), 422–428. https://doi.org/10.7334/psicothema2019.191

Impact Factor JCR (2019): 2.632 (Q1).

Rosário, P., Núñez, J. C., Vallejo, G., Nunes, T., Cunha, J., Fuentes, S., & Valle, A. (2018). Homework purposes, homework behaviors, and academic achievement: Examining the mediating role of students’ perceived homework quality. Contemporary Educational Psychology, 53, 168–180. https://doi.org/10.1016/j.cedpsych.2018.04.001

Impact Factor JCR (2018): 2.484 (Q1).

Núñez, J. C., Epstein, J. L., Suárez, N., Rosário, P., Vallejo, G., & Valle, A. (2017). How do student prior achievement and homework behaviors relate to perceived parental involvement in homework? Frontiers in Psychology, 8, 1217. https://doi.org/10.3389/fpsyg.2017.01217

Impact Factor JCR (2018): 2.089 (Q2).

Valle, A., Pan, I., Regueiro, B., Suárez, N., Tuero, E., & Nunes, A. R. (2015). Predicting approach to homework in Primary school students. Psicothema, 27(4), 334–340. https://doi.org/10.7334/psicothema2015.118

Impact Factor JCR (2015): 1.245 (Q2).

Núñez, J. C., Suárez, N., Rosário, P., Vallejo, G., Valle, A., & Epstein, J. L. (2015). Relationships between perceived parental involvement in homework, student homework behaviors, and academic achievement: Differences among elementary, junior high, and high school students. Metacognition and Learning, 10, 375–406. https://doi.org/10.1007/s11409-015-9135-5

Impact Factor JCR (2015): 2.400 (Q1).

Regueiro, B., Suárez, N., Valle, A., Núñez, J. C., & Rosário, P. (2015). La motivación e implicación en los deberes escolares a lo largo de la escolaridad obligatoria. Revista de Psicodidáctica, 20(1), 47–63. https://doi.org/10.1387/RevPsicodidact.12641

Impact Factor JCR (2015): 2.400 (Q1).

Valle, A., Rodríguez, S., Rosário, P., & Moledo, M. L. (Eds.). Homework, learning and academic success: The role of family and contextual variables. Frontiers Media SA. https://doi.org/10.3389/978-2-88963-492-7

Rodríguez, S., Piñeiro, I., Regueiro, B., & Valle, A. (Eds.). (2022). Handbook of homework: Theoretical principles and practical applications. Nova Science Publishers. https://doi.org/10.52305/YLYW6263

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Vieites, T., Díaz-Freire, F., Rodríguez, S. et al. Effects of a homework implementation method (MITCA) on school engagement. Eur J Psychol Educ 39, 1283–1298 (2024). https://doi.org/10.1007/s10212-023-00743-z

DOI: https://doi.org/10.1007/s10212-023-00743-z