Abstract

The aim of the study is to investigate the measurement invariance of mathematics self-concept and self-efficacy across 40 countries that participated in the Programme for International Student Assessment (PISA) 2003 and 2012 cycles. The sample of the study consists of 271,760 students in PISA 2003 and 333,804 students in PISA 2012. First, traditional measurement invariance testing was applied using multiple-group confirmatory factor analysis (MGCFA). Then, alignment analyses were performed, which estimate all of the parameters while keeping the amount of non-invariance to a minimum. Results from MGCFA indicate that mathematics self-concept and self-efficacy hold metric invariance across the 80 groups (cycle by country). The alignment method results suggest that a large proportion of non-invariance exists in both mathematics self-concept and self-efficacy factors, and the factor means cannot be compared across all participating countries. Results of the Monte Carlo simulation show that the alignment results are trustworthy. Implications and limitations are discussed, and some recommendations for future research are proposed.

Introduction

One of the main goals of using the international large-scale assessments (ILSAs) is to make cross-national comparisons concerning different educational systems and diverse cultural backgrounds. Therefore, valid comparison across countries and time is crucial in facilitating inferences on differences and trends. The discussions of methodological issues in using the data for comparisons have increasingly arisen (e.g., Kim et al., 2017; Rutkowski & Svetina, 2014). One of the fundamental and critical issues is the establishment of measurement invariance. Invariance is a statistical property of measurement, indicating the measured construct is equivalent across time or groups (Vandenberg & Lance, 2000). In psychological measurement, invariance is based on the idea that a measurement instrument designed to capture a specific construct should work equivalently under different conditions (van de Vijver, 2018). In other words, the variation observed in the construct under the different conditions should be irrelevant to the instrument itself but reflect the true difference across the conditions.

In ILSAs such as the Programme for International Student Assessment (PISA), self-reported questionnaire data are used to assess student non-cognitive constructs across different education systems and measurement cycles. The observed difference in these constructs should reflect the group difference in the measured constructs across countries and cycles. It should not be due to the questionnaire items functioning inconsistently over the countries and measurement occasions. Additionally, given the cultural differences in understanding non-cognitive constructs and the possibility of translation bias, claims of comparative differences might be untrustworthy without evidence of measurement invariance (Rutkowski & Svetina, 2014; Vandenberg & Lance, 2000). He et al. (2019) carried out a study on the cross-cultural comparability of non-cognitive constructs (instrumental motivation, enjoyment of science and sense of belonging to school) from the Trends in International Mathematics and Science Study (TIMSS) and PISA. They found that ignoring measurement invariance might result in misleading conclusions. One classical example was the paradoxical correlation between students’ motivation and achievement in the ILSAs: several East Asian countries outperform others in science, but students in these countries tend to report lower levels of motivation. Because scalar invariance did not hold, the researchers advised against directly comparing scale mean scores across cultures. It is necessary to test measurement invariance as a basic psychometric check when using ILSA data in order to reach sound conclusions (He et al., 2019).

Academic self-concept and self-efficacy are two important psychological factors of student self-beliefs that are associated with academic achievement (Bandura, 1986; Bong & Skaalvik, 2003; Parker et al., 2014). Several attempts have been made to explore the generalizability of the effect of academic self-concept and self-efficacy in a cross-cultural context. By using data from PISA 2000, Marsh and Hau (2003) investigated the big-fish-little-pond effect (BFLPE) on academic self-concept. Their results demonstrated that the BFLPE maintained cross-cultural generalizability. Similar conclusions were generated by Marsh et al. (2015) when they used data from two age cohorts in Western, Asian and Middle Eastern Islamic countries from TIMSS. Chen and Zimmerman (2007) compared the accuracy of mathematics self-efficacy regarding mathematics achievement across American and Taiwanese middle-school students. In their study, paradoxical results were presented, where Taiwanese students outperformed in mathematics but tended to have a lower level of mathematics self-efficacy. These attempts seemed not to pay attention to the measurement issues.

More recent studies tend to recognize the importance of validation and measurement invariance for these two factors concerning cross-group comparisons (e.g., gender, race, culture). However, these published studies are limited to local surveys (Cicero, 2020; Niehaus & Adelson, 2013), a single-country context (Chen et al., 2019; Garcia et al., 2018; Uysal & Arıkan, 2018), or a small number of groups (Teo & Kam, 2014).

To avoid providing divergent conclusions based on the paradoxical results, it is essential to test the measurement invariance of self-reported constructs, so that the inferences drawn from the cross-national and cross-cycle analyses involving these constructs would be more valid and trustworthy.

In this study, the literature on self-concept and self-efficacy is first reviewed, focusing on previous research on academic self-concept and self-efficacy in large-scale studies, followed by a literature review on the importance of measurement invariance and on analytical methods for testing it. The purposes, the methods used to investigate the problem, and the results are then outlined. Finally, the results, implications, and limitations are discussed, and recommendations for future research are proposed.

Previous research

Self-concept and self-efficacy

Self-beliefs or self-related perceptions have been widely studied by researchers for quite a long time (Bandura, 1997; Bong & Skaalvik, 2003; Marsh & Shavelson, 1985; Marsh et al., 2019; Schunk, 1989; Shavelson et al., 1976). Previous research has shown that individuals’ beliefs in their capabilities have a major impact on their actions in different situations (e.g., Bandura, 1986; Marsh et al., 2018; Usher & Pajares, 2006). Students with different self-beliefs demonstrate different levels of cognitive engagement, motivation, and well-being in school (Bong & Skaalvik, 2003), and these self-beliefs play a vital role in their academic achievements. Self-belief is a broad concept that involves several related facets, among which self-concept and self-efficacy have been given considerable attention in their prediction of academic achievements (Bong & Skaalvik, 2003; Marsh, 1987; Shavelson et al., 1976).

Self-concept, dating back to the 1950s, was defined by phenomenologists as a global perception of oneself (Rogers, 1951). About two decades afterwards, a group of theorists tried to reconceptualize self-concept as a hierarchical construct with multiple facets (Harter, 1978; Marsh & Shavelson, 1985). Self-concept is considered people’s general perception of themselves, which is established through experiences, interpretations of social environment, and regarding their peers (Marsh, 1987; Parker et al., 2014; Rosenberg, 1979). It was argued to be an influential and important factor that is closely related to individuals’ emotional and cognitive outcomes, and their behaviours (Marsh & Martin, 2011).

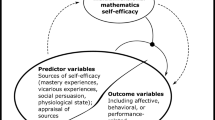

Self-efficacy is one of the closest constructs to self-concept. Perceived self-efficacy, as defined by Bandura (1986, p.391), is “people’s judgments of their capabilities to organise and execute courses of action required to attain designated types of performances.” Self-efficacy is developed by previous experiences and refers to one’s perception of and belief in the capability of performing a certain task related to a specific subject.

Compared with self-efficacy, self-concept can be context-dependent but is less task-specific (Pajares, 1996). Self-concept describes a more general feeling of self-knowledge and self-evaluation, while self-efficacy focuses more precisely on the expectations that an individual has about a specific task. Unlike self-efficacy, self-concept captures the perceived general competence in a subject matter, untied to any specific task in that subject. A self-efficacy question in a mathematics-domain survey could be “How confident are you when you have to calculate the price of goods with discounts?”, whereas a self-concept question is quite general, such as “Are you good at math?”. However, several researchers have recently argued that the high correlation between self-concept and self-efficacy indicates that the two may be taken as one construct when measured empirically (Bong & Skaalvik, 2003; Marsh et al., 2019).

Academic self-concept and self-efficacy in large-scale studies

More recent studies concerning academic self-concept and self-efficacy address the distinctions between the two self-beliefs (Bong & Skaalvik, 2003; Marsh et al., 2019), the predictive power of self-concept and self-efficacy on academic achievements (McConney & Perry, 2010; Parker et al., 2014; Randhawa et al., 1993), the big-fish-little-pond effect regarding self-concept (Marsh & Hau, 2003; Marsh et al., 2008), and the reciprocal relationships between these two constructs and academic achievements (Marsh & O’Mara, 2008). Research has also been done in the mathematics field in terms of mathematics self-concept and self-efficacy (Chiu, 2017; Eklöf, 2007; Githua & Mwangi, 2003; Zhang & Wang, 2020). Against this background, the gap in research on student self-concept and self-efficacy lies in the lack of examination of the measurement itself and of its equivalence across groups.

The accessibility of PISA data provides a unique chance to examine the mechanisms of such constructs across educational systems and over time. In the PISA 2003 and 2012 cycles, the participating students were asked to report, among other things, their perceived mathematics self-concept and self-efficacy in the student questionnaire.

Lee (2009) investigated universals and specific traits of mathematics self-efficacy, self-concept and anxiety in PISA 2003, and established valid comparability of these constructs across all the countries. For mathematics self-concept and self-efficacy in the PISA 2012 survey, OECD (2013) stated,

“Self-efficacy and self-concept, both these motivation scales have scalar invariance, allowing the use of these variables as non-cognitive, mathematics-related outcome indicators, establishing trend, as well as cross-national comparative analyses” (OECD, 2013: 191).

Changes have been observed between the 2003 and 2012 PISA cycles in the two constructs with regard to students’ gender and socio-economic status (OECD, 2013). In all participating countries, boys and socio-economically advantaged students tend to have higher levels of self-efficacy than girls and socio-economically disadvantaged students. However, for studies aiming to draw valid conclusions about mechanisms across countries and over time, the measurement invariance of the constructs involved must be investigated. So far, little attention has been paid to evaluating the measurement invariance of mathematics self-concept and self-efficacy across participating countries and the two PISA cycles.

Measurement invariance (testing)

Measurement invariance, alternatively measurement equivalence, refers to the property that the latent variable is understood and measured equivalently across groups (Rutkowski & Svetina, 2014). Lack of measurement invariance may risk comparing “apples and oranges” and lead to misinterpretation of the results and conclusions by methodological artefacts. The conventional strategy to test measurement invariance is to use multiple-group confirmatory factor analysis (MGCFA, e.g., Putnick & Bornstein, 2016). As a starting point for measurement invariance testing, the factor structure of the construct should be the same over the groups (i.e., configural invariance). When the configural invariance holds for all groups, a stronger invariance can be tested, assuming that factor loadings of the latent construct indicators are equal (i.e., metric invariance). Built on the metric invariance, the scalar invariance embraces the intercepts of the latent variable indicators being equal. Conventionally, only when the scalar invariance is reached, can the latent variable means be compared across groups (e.g., Millsap, 2012; Schmitt et al., 2011). The accessibility and advantages of using MGCFA for measurement invariance testing across groups are well-documented (e.g., Chen, 2007; Cheung & Rensvold, 2002; Meade et al., 2008; Vandenberg & Lance, 2000; Widaman & Reise, 1997).

However, MGCFA has its disadvantages and limitations in testing measurement invariance when the number of groups and the sample size in the data are large. Many possible violations of invariance may occur, and it may be difficult to achieve acceptable model fits. Beierlein et al. (2012) found that the model fit results were overall poor when estimating MGCFA models with 26 countries and nearly 50 000 individuals in the sample. Moreover, the scalar invariance is typically hard to reach in the traditional CFA, especially when the group size is large (Davidov et al., 2012; He et al., 2019; Marsh et al., 2018).

Muthen and Asparouhov (2013) proposed the alignment method, considered an extension of the underlying MGCFA, which is preferably used when conducting analyses of measurement invariance across many groups. Compared to MGCFA which often fails due to too many model modifications, the alignment method can perform the estimations of factor means and variances across groups by allowing for approximate measurement invariance (Asparouhov & Muthén, 2014; Muthen & Asparouhov, 2013). Marsh et al. (2018) argued that scalar measurement invariance might be hard to achieve in the traditional MGCFA and using approaches such as partial invariance could be questionable. Conversely, the alignment method was argued to be considerably flexible and practical for testing measurement invariance and comparing the latent factor means in ILSAs across many groups. In another study (Coromina & Peral, 2020), the researchers compared MGCFA and the alignment method in testing the measurement invariance of institutional trust with the European Social Survey data. They proposed that the alignment method was more convenient than MGCFA with less strictness in the invariance parameters. Recent research applying the alignment method showed its practicability when comparing the construct among many groups (e.g., Glassow et al., 2021; Munck et al., 2018; Odell et al., 2021). The advantage of the alignment method, as argued in Asparouhov and Muthén (2014), is that it minimizes the amount of measurement non-invariance by estimating the factor means and factor variance.

A further step in the alignment method is to conduct a Monte Carlo simulation to examine how well the alignment method works under conditions where the number of groups, the group sample size, and the degree of measurement non-invariance vary. Muthen and Asparouhov (2013) suggested that simulation studies can be conducted to validate the alignment results whether the proportion of non-invariance is above or below 25%.

Purposes

The study aims to investigate the measurement invariance of mathematics self-concept and self-efficacy measured in PISA. The traditional method multiple-group confirmatory factor analysis, followed by the more recent alignment method, was used to test the measurement invariance of mathematics self-concept and self-efficacy across 40 countries that participated in both PISA 2003 and 2012 cycles, namely 80 groups in total by cycle and by country.

Method

Data source and participants

This study is based on the data from the PISA 2003 and 2012 cycles. In all, 40 countries and economies participated in both cycles, and 605,564 15-year-old students were included. Table 1 shows the sample size of each country from the two PISA cycles.

Variables

The items for measuring mathematics self-concept and self-efficacy are consistent in the PISA 2003 and 2012, as well as in all participating countries. In Table 2, the indicators and the response alternatives for the two constructs are presented. It should be noted that the indicators measuring mathematics self-concept and self-efficacy are Likert scale variables. In the current analysis, they were treated as continuous variables in a confirmatory factor model. The assumption of continuity in the Likert scale items seemed to work well for measurement invariance testing in cross-cultural comparisons when full information maximum likelihood estimators are used (Munck et al., 2018).

Mathematics self-efficacy

Mathematics self-efficacy (MSE) in PISA is based on eight items, reflecting students’ perceived abilities in specific mathematics problems. The students were asked to report whether they felt very confident, confident, not very confident or not at all confident (a 4-point Likert scale) on tasks such as “using a train timetable,” “calculating TV discount,” and “calculating square metres of tiles.”

Mathematics self-concept

Mathematics self-concept (MSC) in PISA is based on five items reflecting students’ perceived competence in mathematics. The students were asked to report whether they strongly agree, agree, disagree, or strongly disagree (a 4-point Likert scale) with statements such as “get good grades,” “learn quickly,” and “not good at math.”

Analytical method

Multiple-group confirmatory factor analysis

Traditionally, testing of measurement invariance follows a sequential procedure. It starts from the least stringent form of invariance, configural invariance, which assumes that the indicators of the construct of interest share a common factor structure (equivalent model form) across groups. If configural invariance is achieved, metric invariance is tested by constraining the factor loadings of all indicators to be equal across groups. Finally, if metric invariance is supported, scalar invariance is tested by additionally constraining the intercepts of the factor indicators to be equal (see, e.g., Millsap, 2012). For factor means to be comparable, scalar invariance must be satisfied. However, such a restrictive invariance model is often rejected (Asparouhov & Muthén, 2014).
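
The nested sequence can be written compactly in conventional factor-analytic notation. The symbols below are introduced here for illustration and are not taken from the source: \(y_{ipg}\) is the response of student \(i\) in group \(g\) to item \(p\), \(\nu_{pg}\) the intercept, \(\lambda_{pg}\) the loading, and \(\eta_{ig}\) the latent factor with mean \(\alpha_g\) and variance \(\psi_g\).

\[
y_{ipg} = \nu_{pg} + \lambda_{pg}\,\eta_{ig} + \varepsilon_{ipg}, \qquad E(\eta_{ig}) = \alpha_g, \quad \mathrm{Var}(\eta_{ig}) = \psi_g
\]

\[
\text{configural: } \nu_{pg},\ \lambda_{pg} \text{ free}; \qquad \text{metric: } \lambda_{pg} = \lambda_p; \qquad \text{scalar: } \lambda_{pg} = \lambda_p,\ \nu_{pg} = \nu_p
\]

Under the scalar model, the expected item response is \(E(y_{ipg}) = \nu_p + \lambda_p\,\alpha_g\), so a difference in item means between two groups can only come from a difference in \(\alpha_g\). This is one way to see why scalar invariance is the conventional requirement for comparing factor means.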

As the point of departure, the factor structures of MSE and MSC were tested by confirmatory factor analysis models (CFA, Brown, 2015). The chi-square test was used to evaluate whether the model fits the data. However, since the chi-square test is sensitive to sample size (see, e.g., Bollen, 1989; Cheung & Rensvold, 2002; Meade et al., 2008; Svetina et al., 2020), it is recommended that multiple model-fit indices be examined. These additional model fit indices are the root mean square error of approximation (RMSEA), the standardized root mean square residual (SRMR) and the comparative fit index (CFI). For an acceptable model fit, RMSEA and SRMR values should be less than 0.08, and CFI should be greater than 0.95 (e.g., Hu & Bentler, 1999; Van de Schoot et al., 2012). A one-factor CFA model was fitted to each country in both the PISA 2003 and 2012 cycles. This step examined whether a common factor structure can be found in all the groups (i.e., testing configural invariance).
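
As a rough illustration of how these cutoffs are applied, the sketch below computes RMSEA and CFI from chi-square statistics using the common textbook formulas for a single group and then checks the three cutoffs quoted above. The function names and input values are hypothetical, and software packages may use slightly different variants (for example, N instead of N − 1, or a multi-group correction), so this is not a reproduction of the Mplus output.

```python
import math

def rmsea(chi2, df, n):
    """Textbook single-group RMSEA point estimate from the model chi-square."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))

def cfi(chi2_model, df_model, chi2_base, df_base):
    """Comparative fit index relative to the baseline (independence) model."""
    d_model = max(chi2_model - df_model, 0.0)
    d_base = max(chi2_base - df_base, d_model, 0.0)
    return 1.0 if d_base == 0 else 1.0 - d_model / d_base

def acceptable_fit(rmsea_value, srmr_value, cfi_value):
    """Cutoffs quoted in the text: RMSEA and SRMR < 0.08, CFI > 0.95."""
    return rmsea_value < 0.08 and srmr_value < 0.08 and cfi_value > 0.95

# Hypothetical values for one country, for illustration only.
r = rmsea(chi2=100.0, df=50, n=501)
c = cfi(chi2_model=100.0, df_model=50, chi2_base=2000.0, df_base=66)
fit_ok = acceptable_fit(r, 0.03, c)  # SRMR of 0.03 is also hypothetical
print(round(r, 3), round(c, 3), fit_ok)
```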

In the MGCFA model, constraints were imposed on the model parameters to test the degree of invariance in the measurement model of mathematics self-efficacy and self-concept. When a common factor structure was found, a multi-group confirmatory factor analysis with the configural invariant factor structure was used for testing metric and scalar invariance across groups.

Chen (2007) suggested that, for metric invariance to be supported, the change in CFI (ΔCFI) relative to the configural model has to be less than 0.01, supplemented by a change in RMSEA (ΔRMSEA) of less than 0.015 and a change in SRMR (ΔSRMR) of less than 0.030. For scalar invariance, ΔCFI relative to the metric model has to be less than 0.01, ΔRMSEA less than 0.015 and ΔSRMR less than 0.010 (Chen, 2007). A stricter criterion has also been proposed, namely a decrease in CFI of no more than 0.002 (Meade et al., 2008). Chen (2007) also stated that CFI was chosen as the main criterion because changes in RMSEA are more likely to be affected by sample size and model complexity. Rutkowski and Svetina (2014) relaxed the cutoff values of ΔRMSEA and ΔCFI to 0.03 and 0.02, respectively, for metric invariance.

The alignment method

The current study followed the three sequential steps recommended by Asparouhov and Muthén (2014) when conducting the alignment analysis (see also Muthen & Asparouhov, 2013).

Configural model estimation

Factor loadings and thresholds are set free across groups, factor means fixed at zero, and factor variance fixed at one.

Alignment optimization

The factor means and variances are set free, the values of which are chosen to minimize the total amount of non-invariance. In this step, the alignment method “does not assume measurement invariance and can estimate the factor mean and variance parameters in each group while discovering the most optimal measurement invariance pattern” by using a simplicity function (Asparouhov & Muthén, 2014, p. 496). The FREE alignment approach should be initially applied but the alignment model might be poorly identified or even not converge. If so, the FIXED approach has to be used by setting the group with the factor mean closest to zero as a reference.
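
To make the idea of a simplicity function concrete, the following is a minimal sketch of the total loss described by Asparouhov and Muthén (2014), as we understand it: configural loadings and intercepts are transformed by a candidate factor mean and variance per group, and pairwise differences between groups are penalised with a component loss function. The toy parameter values, the function names, and the value of `eps` are assumptions for illustration; this is not the Mplus implementation, and no optimizer is included.

```python
import math
from itertools import combinations

def component_loss(x, eps=0.01):
    """Component loss f(x) = sqrt(sqrt(x^2 + eps)); eps is an assumed small constant."""
    return math.sqrt(math.sqrt(x * x + eps))

def align(loadings0, intercepts0, alpha, psi):
    """Transform one group's configural parameters given a factor mean and variance."""
    sd = math.sqrt(psi)
    loadings = [l / sd for l in loadings0]
    intercepts = [v - alpha * l / sd for v, l in zip(intercepts0, loadings0)]
    return loadings, intercepts

def simplicity(config, sizes, alphas, psis, eps=0.01):
    """Total non-invariance: size-weighted loss over all group pairs and all items."""
    aligned = [align(l0, v0, a, p) for (l0, v0), a, p in zip(config, alphas, psis)]
    total = 0.0
    for g1, g2 in combinations(range(len(config)), 2):
        weight = math.sqrt(sizes[g1] * sizes[g2])
        # First pass compares loadings, second pass compares intercepts.
        for params1, params2 in zip(aligned[g1], aligned[g2]):
            for p1, p2 in zip(params1, params2):
                total += weight * component_loss(p1 - p2, eps)
    return total

# Toy example: two groups with identical items but different factor mean/variance.
base_loadings = [1.0, 0.8, 1.2]
base_intercepts = [0.0, 0.5, -0.3]
true_alpha, true_psi = 0.5, 4.0
group1 = (base_loadings, base_intercepts)
# Configural estimates for group 2 absorb its factor mean and variance.
group2 = ([l * math.sqrt(true_psi) for l in base_loadings],
          [v + true_alpha * l for v, l in zip(base_intercepts, base_loadings)])
config, sizes = [group1, group2], [500, 500]

f_naive = simplicity(config, sizes, alphas=[0.0, 0.0], psis=[1.0, 1.0])
f_true = simplicity(config, sizes, alphas=[0.0, true_alpha], psis=[1.0, true_psi])
print(f_true < f_naive)
```

In this toy case the loss is lower at the generating mean and variance than at the configural values, which is the behaviour the optimization step exploits when it searches over factor means and variances.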

Monte Carlo simulations

Muthén and Asparouhov (2013) recommended that when the level of non-invariance is high, the alignment analysis should be complemented with Monte Carlo investigations. Several Monte Carlo simulations under different conditions should be conducted to confirm the validity of the alignment optimization and assess the quality of the alignment procedure (Asparouhov & Muthén, 2014). The parameters estimated from the alignment optimization were saved and used as the data-generating population parameter values in the simulation command. One hundred replications (nrep = 100) and three group sizes (N = 500, N = 1000, N = 10,000) were planned for the simulation study. The key statistic in this step is the correlation between the true factor means and the estimated factor means. A correlation above 0.98 indicates good quality of the alignment optimization.
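
The quality check described above can be sketched as follows, assuming the statistic is the Pearson correlation between the generating (true) factor means and the estimated means across groups, averaged over replications. The numbers are made up for illustration and the function names are hypothetical; the actual simulation in the study was run in Mplus.

```python
import math

def pearson(xs, ys):
    """Pearson correlation between two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def alignment_quality(true_means, estimates_by_rep, cutoff=0.98):
    """Average the true-vs-estimated correlation over replications and compare to the cutoff."""
    rs = [pearson(true_means, est) for est in estimates_by_rep]
    mean_r = sum(rs) / len(rs)
    return mean_r, mean_r > cutoff

# Hypothetical factor means for five groups and two replications.
true_means = [-0.4, -0.1, 0.0, 0.3, 0.6]
estimates_by_rep = [
    [-0.38, -0.12, 0.02, 0.27, 0.63],
    [-0.41, -0.07, -0.02, 0.33, 0.58],
]
mean_r, trustworthy = alignment_quality(true_means, estimates_by_rep)
print(round(mean_r, 3), trustworthy)
```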

In this study, a 40-group alignment analysis of the two constructs MSE and MSC was performed for the 40 countries and economies in each of the two PISA cycles in 2003 and 2012, followed by an 80-group alignment analysis of the two cycles jointly. The joint analysis makes it possible to compare factor means and variances across countries, as well as across the two cycles (Asparouhov & Muthén, 2014). In addition, missing values were dealt with by using the maximum-likelihood estimation, which enables the usage of all available information. The weight variable was used to compute unbiased statistics at the country level.

Data management was performed in SPSS 28, and measurement invariance testing was done in Mplus Version 8 (Muthén & Muthén, 1998–2017).

Results

A single-factor model of MSE and of MSC was fitted separately for each country and cycle, examining the relationships between the latent variables MSE and MSC and their corresponding indicators. Neither of the single-factor models fitted the data well. Based on the modification indices for MSE, correlated residuals were added between item “MSE5: Solving an equation like 3x + 5 = 17” and item “MSE7: Solving an equation like 2(x + 3) = (x + 3)(x − 3),” and between item MSE5 and item “MSE8: Calculating the petrol consumption rate of a car,” which improved the model fit to an acceptable level according to the model fit criteria. For MSC, the residual covariance of items “MSC2: I am just not good at mathematics” and “MSC4: I get good marks in mathematics” also had a high modification index value, and was added to obtain a well-fitting model (see Fig. 1).

Results from MGCFA

An MGCFA of MSE and MSC was performed for the 40 countries in each of the two PISA cycles separately, and for the joint data with 80 groups in total (cycle by country, see Table 3).

As is shown in Table 3, the configural and metric invariance models of MSE fitted the data well, in both PISA 2003 and 2012. The scalar invariance model, however, fitted neither MSE nor MSC. The differences in RMSEA, CFI and SRMR between the configural and metric models (for MSE: \(\Delta \mathrm{RMSEA} = .000\), \(\Delta \mathrm{CFI} = -.009\), \(\Delta \mathrm{SRMR} = .021\); for MSC: \(\Delta \mathrm{RMSEA} = .011\), \(\Delta \mathrm{CFI} = -.014\), \(\Delta \mathrm{SRMR} = .052\)) fulfilled the critical values proposed by Chen (2007) and Rutkowski and Svetina (2014), indicating that MSE and MSC were metric invariant.
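
The comparison above can be checked mechanically. The sketch below applies the ΔCFI and ΔRMSEA cutoffs quoted in the Method section to the reported changes. SRMR is deliberately left out, and the function name and data layout are hypothetical choices made for illustration.

```python
def metric_invariance_supported(delta_cfi, delta_rmsea, cfi_cutoff, rmsea_cutoff):
    """True when the drop in CFI and the rise in RMSEA both stay below the cutoffs."""
    cfi_drop = -delta_cfi  # a negative delta means CFI decreased
    return cfi_drop < cfi_cutoff and delta_rmsea < rmsea_cutoff

# Changes from the configural to the metric model, as reported in the text.
changes = {
    "MSE": {"delta_cfi": -0.009, "delta_rmsea": 0.000},
    "MSC": {"delta_cfi": -0.014, "delta_rmsea": 0.011},
}
# (ΔCFI cutoff, ΔRMSEA cutoff) as quoted in the Method section.
cutoffs = {
    "Chen (2007)": (0.01, 0.015),
    "Rutkowski & Svetina (2014)": (0.02, 0.03),
}

results = {}
for construct, d in changes.items():
    for source, (cfi_cut, rmsea_cut) in cutoffs.items():
        ok = metric_invariance_supported(d["delta_cfi"], d["delta_rmsea"], cfi_cut, rmsea_cut)
        results[(construct, source)] = ok
        print(construct, source, ok)
```

On these two indices alone, MSE meets both sets of cutoffs, whereas the ΔCFI of −.014 for MSC exceeds the 0.01 cutoff and is only within the relaxed 0.02 cutoff.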

Similar patterns could be found both in PISA 2012 and in the joint data with the 80 groups: as presented in Table 3, model fit evaluation of the different invariance models from MGCFA revealed that configural and metric models of both MSE and MSC fit the data well. MSE and MSC held configural and metric invariance across 80 groups (cycle by country), but scalar invariance was not achieved. The scalar invariance could be supported in neither the cross-country within cycle comparison nor the cross-country between cycle comparisons.

Results from the alignment method

This section presents the results from the alignment analysis. The estimates were achieved by the FIXED alignment model, taking the country whose factor mean was the closest to zero as the reference.

In Table 4, the alignment optimization results are presented. Column 1 presents the fit function values for each item intercept and factor loading of MSE and MSC, which add up to the total optimized simplicity function value. Among the intercepts for MSE, the item MSE2 contributes the most non-invariance, while the item MSE7 contributes the least. Among the factor loadings, the item MSE5 contributes the largest amount of non-invariance and the item MSE3 contributes the least. For MSC, the item MSC2 contributes the most non-invariance in both the intercepts and the factor loadings, while the item MSC6 contributes the least.

The \({R}^{2}\) values in the second column suggest that MSE2 and MSE3 are the most invariant indicators in the intercept and the factor loadings, respectively, while MSE7 is the least invariant in the intercept, and MSE1 and MSE5 are the least invariant in the factor loadings. Among intercepts for MSC, MSC6 is the most invariant item while MSC2 is the least invariant. Among factor loadings for MSC, MSC9 is the most invariant item while MSC7 is the least invariant.

The means and standard deviations of the intercepts and loadings for MSE and MSC are reported in the next two columns of Table 4, followed by the groups with the minimum and the maximum estimated intercepts and loadings for MSE and MSC.

Weighted average estimates of intercepts and factor loadings across invariant groups for MSE and MSC are presented in Table 5. For MSE, the number of groups with approximate measurement invariance among intercepts ranges from 22 (item MSE7) to 43 (item MSE8), whereas among factor loadings the number ranges from 37 (item MSE5) to 69 (item MSE8). In total, 63.59% of the intercepts and 33.28% of the factor loadings are non-invariant, which results in 48.43% of total non-invariance in MSE. For MSC, the number of groups with approximate measurement invariance among intercepts ranges from 23 (item MSC2) to 35 (item MSC6), whereas among factor loadings the number ranges from 36 (item MSC9) to 45 (items MSC2, MSC4 and MSC6). In total, 65.5% of the intercepts and 48% of the factor loadings are non-invariant, which results in 56.75% of total non-invariance in MSC.
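
The totals reported above appear consistent with pooling the intercept and loading percentages over all item-by-group parameters. The sketch below reproduces that arithmetic under the assumption that each construct has one intercept and one loading per item and group (8 items for MSE, 5 for MSC, 80 groups). The function name and the pooling rule are assumptions, not a description of how Mplus reports these figures.

```python
def pooled_noninvariance(intercept_pct, loading_pct, n_items, n_groups):
    """Pool two non-invariance percentages, weighting by the number of parameters."""
    n_intercepts = n_items * n_groups
    n_loadings = n_items * n_groups
    weighted = intercept_pct * n_intercepts + loading_pct * n_loadings
    return weighted / (n_intercepts + n_loadings)

LIMIT = 25.0  # rule-of-thumb limit for non-invariance quoted in the text

mse_total = pooled_noninvariance(63.59, 33.28, n_items=8, n_groups=80)
msc_total = pooled_noninvariance(65.5, 48.0, n_items=5, n_groups=80)

for name, total in [("MSE", mse_total), ("MSC", msc_total)]:
    print(name, round(total, 3), "exceeds limit" if total > LIMIT else "within limit")
```

Because intercepts and loadings are equally numerous here, the pooled figure is simply the mean of the two percentages, roughly 48.4% for MSE and 56.75% for MSC, both well above the 25% limit.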

Asparouhov and Muthén (2014) proposed a 25% non-invariance rate as the limit for partial invariance, within which the estimated mean and relations among latent constructs could be compared. However, the results from the current alignment analyses showed that the non-invariance rates were too high to allow such comparisons across countries and cycles.

In the alignment method, the latent means are estimated regardless of the degree of measurement non-invariance. It is important to note that these estimates are reliable only if approximate measurement invariance is present. In the present study, the comparison of factor means was not performed due to the large proportion of measurement non-invariance.

Monte Carlo simulation

As mentioned above, the proportion of non-invariance for both MSE and MSC is above 25%, and several measurement parameters show large non-invariance in various groups. It might be a concern that the factor means are not well enough estimated to satisfy a valid comparison across groups (Asparouhov & Muthén, 2014; Muthen & Asparouhov, 2013). It is necessary to carry out a Monte Carlo simulation study to check the reliability and quality of the alignment model results.

The results of the simulation study are presented in Table 6. In the column “N = 10,000 nrep = 100” (close to the real population), the correlations of true (real-data population) means, and estimated means were all above 0.99 for factor means and factor variances for both mathematics self-concept and self-efficacy, higher than the suggested cut-off value 0.98 (Muthen & Asparouhov, 2013). Therefore, despite the existence of a large proportion of measurement non-invariance of items, the parameters achieved in the alignment analysis for both mathematics self-efficacy and self-concept are trustworthy.

Some further investigations

Due to the large amount of non-invariance in the intercepts and the factor loadings of mathematics self-efficacy and self-concept, some further attempts were made. Firstly, the indicators that were non-invariant in most of the countries were deleted and the alignment analyses were re-run. The results still displayed an undesirable amount of non-invariance in both constructs. Secondly, the analyses were performed separately for each cycle without the non-invariant indicators, but the amount of non-invariance still exceeded one quarter. Since self-concept and self-efficacy are likely affected by cultural tradition and upbringing (He et al., 2019; Marsh et al., 2006), another attempt was made that focused only on the Nordic countries (Denmark, Finland, Iceland, Norway, Sweden). These countries are not only geographically close but also to a great extent similar in their culture and educational systems. Surprisingly, the proportion of non-invariance was still over 25%. In none of the attempts did mathematics self-efficacy or mathematics self-concept reach scalar invariance.

In summary, the results show that mathematics self-efficacy and self-concept are metric invariant across countries in PISA 2003 and 2012 cycles but scalar invariance fails to hold for both constructs. A large proportion of non-invariance exists in intercepts and factor loadings of both constructs, and factor mean comparisons fail across the groups.

Discussion and conclusions

The aim of the study is to investigate the measurement invariance of mathematics self-concept and self-efficacy across education systems and PISA cycles, by using multiple-group confirmatory factor analysis and the alignment method. Overall, this study indicates that measurement invariance is unjustified and there exists a great extent of variation in the measurement of the two constructs across countries and between the PISA 2003 and 2012 cycles. The results call for caution in comparative studies where the self-concept and self-efficacy factors are involved.

The measurement structure of mathematics self-concept and self-efficacy

Configural invariance of the single-factor CFA models with correlated residuals for both mathematics self-concept and self-efficacy constructs confirmed that this model structure fitted the data very well across all countries and the PISA cycles. The results suggested that the one-factor structure would be valid for both constructs, revealing that the latent structures of the constructs were consistent with the general understanding of the nature of the factors.

The measurement invariance of mathematics self-concept and self-efficacy

Using multi-group CFA and in comparison with previous findings

Mathematics self-concept and self-efficacy hold metric invariance across countries in the PISA 2003 and 2012 cycles. Scalar invariance could not be established for either construct; poor model fit is a recurrent problem when exact invariance is assessed with large-scale data (Rutkowski & Svetina, 2014).

These findings are not in line with the results of a previous study (Lee, 2009), in which nested multiple-group models with certain constraints for mathematics self-concept and self-efficacy were reported to fit the all-country PISA 2003 data. It was not quite clear, however, whether the same model structure was applied; for example, we observed a slight difference in the correlated residuals. Lee (2009) presented a comparison of different measurement invariance models, but the model fit indices CFI and RMSEA did not fulfil the required criteria. As mentioned in the previous section, CFI is suggested to be above 0.95 for acceptable model fit and RMSEA to be below 0.08 (e.g., Hu & Bentler, 1999). In Lee’s (2009) study, CFI was below 0.90 and RMSEA was equal to 0.08. No explanation was given for the unacceptable model fit, yet the results were interpreted on the basis of the ill-fitting model. Furthermore, Lee (2009) did not present or discuss the changes in CFI and RMSEA when comparing models with different levels of measurement invariance, which is essential evidence for assessing measurement invariance (e.g., Chen, 2007). Hence, this raises concern about using a comparative approach to these two constructs without rigorous testing for measurement invariance.
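The two checks discussed here, the absolute fit of each model and the change in fit between nested models, can be sketched as follows. The absolute cutoffs (CFI above 0.95, RMSEA below 0.08) are those quoted in the text. The change cutoffs (a CFI drop of at most 0.010 and an RMSEA rise of at most 0.015) are values commonly attributed to Chen (2007) for large samples and are an assumption here, since the thresholds applied in this study are given in an earlier section. All fit values below are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Fit:
    """Fit indices of one invariance model."""
    name: str
    cfi: float
    rmsea: float


def acceptable(fit, cfi_min=0.95, rmsea_max=0.08):
    """Absolute fit check using the cutoffs quoted in the text."""
    return fit.cfi > cfi_min and fit.rmsea < rmsea_max


def invariance_holds(less_restricted, more_restricted,
                     max_cfi_drop=0.010, max_rmsea_rise=0.015):
    """Change-in-fit check between two nested models (assumed cutoffs)."""
    cfi_drop = less_restricted.cfi - more_restricted.cfi
    rmsea_rise = more_restricted.rmsea - less_restricted.rmsea
    return cfi_drop <= max_cfi_drop and rmsea_rise <= max_rmsea_rise


# Hypothetical fit indices, ordered from least to most restricted model.
models = [
    Fit("configural", cfi=0.970, rmsea=0.050),
    Fit("metric", cfi=0.964, rmsea=0.055),
    Fit("scalar", cfi=0.900, rmsea=0.090),
]

for previous, current in zip(models, models[1:]):
    print(current.name,
          "| acceptable fit:", acceptable(current),
          "| holds relative to", previous.name + ":",
          invariance_holds(previous, current))
```

With these invented numbers the metric model passes both checks, whereas the scalar model fails both. Reporting both the absolute fit and the change in fit is what allows a reader to judge each level of invariance.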

The alignment method

The alignment method, on the other hand, is a recently developed technique for testing measurement invariance. Its strength lies in comparisons across a large number of groups, and it is convenient for estimating models with multiple factors and many indicators (Asparouhov & Muthén, 2014). It focuses on approximate measurement invariance, can be used to determine the most invariant items in the measurement instruments, and gives estimates of parameter invariance for each model parameter in every single group (Asparouhov & Muthén, 2014; Muthén & Asparouhov, 2013). Results from the alignment method indicated that a large proportion of non-invariance existed in both mathematics self-concept and self-efficacy. The factor means of the two constructs are thus not comparable across countries and over time. The quality of the alignment analyses and the reliability of the results were confirmed by the Monte Carlo simulations.
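To make the idea behind alignment more concrete, the following sketch evaluates the total loss function described by Asparouhov and Muthén (2014) for a candidate set of group factor means and variances. It is a simplified illustration, not the Mplus implementation used in this study: it only evaluates the loss and performs no optimisation, the value of the small constant eps is an assumption, and all parameter values are made up.

```python
import math
from itertools import combinations


def component_loss(x, eps=0.01):
    """Component loss f(x) = sqrt(sqrt(x^2 + eps)); eps is an assumed small constant."""
    return math.sqrt(math.sqrt(x * x + eps))


def alignment_loss(loadings0, intercepts0, alphas, psis, sizes, eps=0.01):
    """Total alignment loss for candidate factor means (alphas) and variances (psis).

    loadings0[g][p] and intercepts0[g][p] are configural estimates for item p
    in group g, obtained with factor mean 0 and variance 1 in every group.
    """
    n_groups = len(loadings0)
    n_items = len(loadings0[0])
    # Re-express the configural parameters under the candidate means/variances.
    lam = [[loadings0[g][p] / math.sqrt(psis[g]) for p in range(n_items)]
           for g in range(n_groups)]
    nu = [[intercepts0[g][p] - alphas[g] * lam[g][p] for p in range(n_items)]
          for g in range(n_groups)]
    total = 0.0
    for g1, g2 in combinations(range(n_groups), 2):
        weight = math.sqrt(sizes[g1] * sizes[g2])  # pairs of larger groups count more
        for p in range(n_items):
            total += weight * component_loss(lam[g1][p] - lam[g2][p], eps)
            total += weight * component_loss(nu[g1][p] - nu[g2][p], eps)
    return total


# Toy configural estimates for two groups and three items (illustrative only).
# Group 2 was generated from the same items with factor mean 0.5 and variance 4.
loadings0 = [[1.0, 0.8, 1.2], [2.0, 1.6, 2.4]]
intercepts0 = [[0.0, 0.5, -0.3], [0.5, 0.9, 0.3]]
sizes = [1000, 1000]

baseline = alignment_loss(loadings0, intercepts0, [0.0, 0.0], [1.0, 1.0], sizes)
aligned = alignment_loss(loadings0, intercepts0, [0.0, 0.5], [1.0, 4.0], sizes)
print(f"loss at baseline: {baseline:.1f}, loss at generating values: {aligned:.1f}")
```

In this toy case the loss is smaller at the generating means and variances than at the configural baseline, because the re-expressed loadings and intercepts coincide across groups. The alignment method searches for the means and variances that minimise this loss, which favours solutions with many near-invariant parameters and a few clearly non-invariant ones.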

Our conclusion that neither mathematics self-efficacy nor mathematics self-concept can be compared across the PISA countries in 2003 and 2012 does not agree with the statement in the PISA reports (OECD, 2013, 2014), where scalar invariance of mathematics self-concept and self-efficacy was claimed to hold. Several circumstances might account for the gap between the current study and the PISA reports, for example, the use of different software for analysis, the specification of the model structure and the choice of estimators. Because of the limited information on the analytical methods in the PISA reports, it is difficult to provide further explanations. OECD (2013) argued that “alternative measures were tried out to further improve cross-cultural comparability” (p. 191); however, no clarification was given on which measures were tried, nor on how cross-cultural comparability could be improved.

Given the large proportion of non-invariance found in the alignment analyses, comparisons of mathematics self-concept and self-efficacy across space and time should be made with caution. The measurement properties reflected by the intercepts and factor loadings of the indicators of mathematics self-concept and self-efficacy are very unlikely to be the same across such a variety of PISA countries and cycles. The results of the current study imply that a direct comparison of the two constructs might be problematic. This may partly be due to the diversity of educational systems and the prerequisites and characteristics of the systems in different contexts and countries, and partly to the different cultural backgrounds, which may foster different mentalities in viewing one’s general and subject-specific ability (He et al., 2019; Marsh et al., 2006). Despite the incomparability across the countries and cycles included in this study, the measurement of mathematics self-concept and self-efficacy may be invariant in a small set of countries or in a single country across different PISA cycles.

Exact versus approximate measurement invariance

The current study covers 80 groups (40 education systems in each of two PISA cycles) and over 600,000 students. Striving for exact measurement invariance at the scalar level across many groups and with a large sample size has been shown to be an impossible endeavor, since the chance of violating invariance increases as the number of groups and the sample size grow (e.g., Asparouhov & Muthén, 2014; Davidov et al., 2014; Kim et al., 2017; Millsap, 2012; Rutkowski & Svetina, 2014). According to the exact measurement invariance test in multiple-group confirmatory factor analysis, the mathematics self-concept and self-efficacy constructs cannot be directly compared. Even though different strategies have been proposed for between-group comparison allowing approximate measurement invariance of the constructs, criteria on the tolerable level of non-invariance in different conditions are ambiguous and cumbersome to apply in practice (e.g., Putnick & Bornstein, 2016). Built on the well-fitting configural model from the traditional multiple-group confirmatory factor analysis, the alignment approach estimates group-specific factor means and variances while allowing approximate measurement invariance, which is more applicable and convenient for many groups (Asparouhov & Muthén, 2014; for applications, see, e.g., Glassow et al., 2021; Munck et al., 2018). Using this approach, the current study detected the most non-invariant indicators in the two measured constructs. The indicators “Calculating how much cheaper a TV would be after a 30% discount” and “Solving an equation like 3x + 5 = 17” in the mathematics self-efficacy construct, and the indicator “I am just not good at mathematics” in the mathematics self-concept construct, seem to contribute the most non-invariance. Whether these indicators are good measures of the given constructs could be investigated further.

Further application of the mathematics self-concept and self-efficacy constructs in a comparative setting is challenging when neither exact nor approximate measurement invariance holds. One possible explanation is that there is unobserved heterogeneity in the response patterns to the instruments that measure the constructs, both among individuals within each education system and among systems. One way to test this is to apply a multilevel factor mixture model (Kim et al., 2017) to identify subgroups of individuals and education systems that are homogeneous in the construct measurement. As a next step, comparisons of the factor means or other model parameters, e.g., regression coefficients, can be conducted based on the subset of homogeneous individuals and groups. Another way is to apply multilevel structural equation modelling with a between-level variable, which would help researchers to understand why measurement invariance is absent (Davidov et al., 2012).

Implications and suggestions for future research

International large-scale survey studies monitor the quality and development of education systems and facilitate evidence-based policymaking (e.g., Harju-Luukkainen et al., 2020). Findings from cross-national comparisons are often taken as motivation for educational decisions and reforms. Therefore, the reliability and validity of the statistical analyses behind policy arguments are especially crucial. Ignoring measurement invariance may have important consequences for education.

The findings from this study have, first and foremost, methodological significance. On the one hand, they stress the necessity of measurement invariance testing before making trustworthy comparative inferences among many countries. On the other hand, they support, as other recent studies do (Marsh et al., 2018; Munck et al., 2018), the possibility of using the alignment method for investigating measurement invariance. It is a valuable alternative to the commonly used MGCFA when many indicator variables and many groups are involved (Asparouhov & Muthén, 2014). Given the identification of the most non-invariant items in the measurement instruments from the alignment analyses, this study has the potential to help improve the mathematics self-concept and self-efficacy scales in PISA to achieve comparability across space and over time. We recommend that researchers conduct measurement invariance tests to ensure a solid ground for comparison. Once measurement invariance is established across the selected countries, the latent factor means and other parameters can be estimated and compared.

References

Asparouhov, T., & Muthén, B. (2014). Multiple-group factor analysis alignment. Structural Equation Modeling: A Multidisciplinary Journal, 21(4), 495–508.

Bandura, A. (1986). Social foundations of thought and action: A social cognitive theory. Prentice-Hall.

Bandura, A. (1997). Self-efficacy: The exercise of control. W. H. Freeman.

Beierlein, C., Davidov, E., Schmidt, P., Schwartz, S. H., & Rammstedt, B. (2012). Testing the discriminant validity of Schwartz’ Portrait Value Questionnaire items – A replication and extension of Knoppen and Saris (2009). Survey Research Methods, 6(1), 25–36. https://doi.org/10.18148/srm/2012.v6i1.5092

Bollen, K. A. (1989). A new incremental fit index for general structural equation models. Sociological Methods & Research, 17(3), 303–316.

Bong, M., & Skaalvik, E. M. (2003). Academic self-concept and self-efficacy: How different are they really? Educational Psychology Review, 15(1), 1–40.

Brown, T. A. (2015). Confirmatory factor analysis for applied research. Guilford Publications.

Chen, F. F. (2007). Sensitivity of goodness of fit indexes to lack of measurement invariance. Structural Equation Modeling: A Multidisciplinary Journal, 14(3), 464–504.

Chen, H., Dai, J., & Gao, Y. (2019). Measurement invariance and latent mean differences of the Chinese version physical activity self-efficacy scale across gender and education levels. Journal of Sport and Health Science, 8(1), 46–54. https://doi.org/10.1016/j.jshs.2017.01.004

Chen, P., & Zimmerman, B. (2007). A cross-national comparison study on the accuracy of self-efficacy beliefs of middle-school mathematics students. The Journal of Experimental Education, 75(3), 221–244.

Cheung, G. W., & Rensvold, R. B. (2002). Evaluating goodness-of-fit indexes for testing measurement invariance. Structural Equation Modeling, 9(2), 233–255.

Chiu, M. (2017). Self-Concept, Self-Efficacy, and Mathematics Achievement: Students in 65 Regions Including the US and Asia (pp. 267–288). https://doi.org/10.1007/978-3-319-51187-0_15

Cicero, D. C. (2020). Measurement invariance of the self-concept clarity scale across race and sex. Journal of Psychopathology and Behavioral Assessment, 42(2), 296–305. https://doi.org/10.1007/s10862-019-09770-x

Coromina, L., & Peral, E. B. (2020). Comparing alignment and multiple group CFA for analysing political trust in Europe during the crisis. Methodology, 16(1), 21–40. https://doi.org/10.5964/meth.2791

Davidov, E., Dülmer, H., Schlüter, E., Schmidt, P., & Meuleman, B. (2012). Using a multilevel structural equation modeling approach to explain cross-cultural measurement noninvariance. Journal of Cross-Cultural Psychology, 43(4), 558–575.

Davidov, E., Meuleman, B., Cieciuch, J., Schmidt, P., & Billiet, J. (2014). Measurement equivalence in cross-national research. Annual Review of Sociology, 40, 55–75.

Eklöf, H. (2007). Self-concept and valuing of mathematics in TIMSS 2003: Scale structure and relation to performance in a Swedish setting. Scandinavian Journal of Educational Research, 51(3), 297–313.

Garcia, F., Martínez, I., Balluerka, N., Cruise, E., Garcia, O. F., & Serra, E. (2018). Validation of the five-factor self-concept questionnaire AF5 in Brazil: Testing factor structure and measurement invariance across language (Brazilian and Spanish), gender, and age. Frontiers in Psychology, 9, 2250. https://doi.org/10.3389/fpsyg.2018.02250

Githua, B. N., & Mwangi, J. G. (2003). Students’ mathematics self-concept and motivation to learn mathematics: Relationship and gender differences among Kenya’s secondary-school students in Nairobi and Rift valley provinces. International Journal of Educational Development, 23(5), 487–499.

Glassow, L. N., Rolfe, V., & Yang Hansen, K. (2021). Assessing the comparability of teacher-related constructs in TIMSS 2015 across 46 education systems: An alignment optimization approach. Educational Assessment, Evaluation and Accountability, 33(1), 105–137.

Harju-Luukkainen, H., Stang, J., & McElvany, N. (2020). Introduction to monitoring student achievement in the twenty-first century. In H. Harju-Luukkainen, N. McElvany, & J. Stang (Eds.), Monitoring Student Achievement in the 21st Century: European Policy Perspectives and Assessment Strategies (pp. 1–6). Springer International Publishing. https://doi.org/10.1007/978-3-030-38969-7_1

Harter, S. (1978). Effectance motivation reconsidered: Toward a developmental model. Human Development, 21(1), 34–64. https://doi.org/10.1159/000271574

He, J., Barrera-Pedemonte, F., & Buchholz, J. (2019). Cross-cultural comparability of noncognitive constructs in TIMSS and PISA. Assessment in Education: Principles, Policy & Practice, 26(4), 369–385.

Hu, L., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling: A Multidisciplinary Journal, 6(1), 1–55. https://doi.org/10.1080/10705519909540118

Kim, E. S., Cao, C., Wang, Y., & Nguyen, D. T. (2017). Measurement invariance testing with many groups: A comparison of five approaches. Structural Equation Modeling: A Multidisciplinary Journal, 24(4), 524–544.

Lee, J. (2009). Universals and specifics of math self-concept, math self-efficacy, and math anxiety across 41 PISA 2003 participating countries. Learning and Individual Differences, 19(3), 355–365. https://doi.org/10.1016/j.lindif.2008.10.009

Marsh, H. W. (1987). The big-fish-little-pond effect on academic self-concept. Journal of Educational Psychology, 79(3), 280.

Marsh, H. W., Abduljabbar, A. S., Morin, A. J. S., Parker, P., Abdelfattah, F., Nagengast, B., & Abu-Hilal, M. M. (2015). The big-fish-little-pond effect: Generalizability of social comparison processes over two age cohorts from Western, Asian, and Middle Eastern Islamic countries. Journal of Educational Psychology, 107(1), 258–271. https://doi.org/10.1037/a0037485

Marsh, H. W., Guo, J., Parker, P. D., Nagengast, B., Asparouhov, T., Muthén, B., & Dicke, T. (2018). What to do when scalar invariance fails: The extended alignment method for multi-group factor analysis comparison of latent means across many groups. Psychological Methods, 23(3), 524.

Marsh, H. W., & Hau, K.-T. (2003). Big-fish-little-pond effect on academic self-concept: A cross-cultural (26-country) test of the negative effects of academically selective schools. American Psychologist, 58(5), 364–376. https://doi.org/10.1037/0003-066X.58.5.364

Marsh, H. W., Hau, K.-T., Artelt, C., Baumert, J., & Peschar, J. L. (2006). OECD’s brief self-report measure of educational psychology’s most useful affective constructs: Cross-cultural, psychometric comparisons across 25 countries. International Journal of Testing, 6(4), 311–360.

Marsh, H. W., & Martin, A. J. (2011). Academic self-concept and academic achievement: Relations and causal ordering. British Journal of Educational Psychology, 81(1), 59–77.

Marsh, H. W., & O’Mara, A. (2008). Reciprocal effects between academic self-concept, self-esteem, achievement, and attainment over seven adolescent years: Unidimensional and multidimensional perspectives of self-concept. Personality and Social Psychology Bulletin, 34(4), 542–552.

Marsh, H. W., Pekrun, R., Parker, P. D., Murayama, K., Guo, J., Dicke, T., & Arens, A. K. (2019). The murky distinction between self-concept and self-efficacy: Beware of lurking jingle-jangle fallacies. Journal of Educational Psychology, 111(2), 331.

Marsh, H. W., Seaton, M., Trautwein, U., Lüdtke, O., Hau, K. T., O’Mara, A. J., & Craven, R. G. (2008). The big-fish–little-pond-effect stands up to critical scrutiny: Implications for theory, methodology, and future research. Educational Psychology Review, 20(3), 319–350. https://doi.org/10.1007/s10648-008-9075-6

Marsh, H. W., & Shavelson, R. (1985). Self-concept: Its multifaceted, hierarchical structure. Educational Psychologist, 20(3), 107–123. https://doi.org/10.1207/s15326985ep2003_1

McConney, A., & Perry, L. (2010). Socioeconomic status, self-efficacy, and mathematics achievement in Australia: A secondary analysis. Educational Research for Policy and Practice, 9, 77–91. https://doi.org/10.1007/s10671-010-9083-4

Meade, A. W., Johnson, E. C., & Braddy, P. W. (2008). Power and sensitivity of alternative fit indices in tests of measurement invariance. Journal of Applied Psychology, 93(3), 568.

Millsap, R. E. (2012). Statistical approaches to measurement invariance. Routledge.

Munck, I., Barber, C., & Torney-Purta, J. (2018). Measurement invariance in comparing attitudes toward immigrants among youth across Europe in 1999 and 2009: The alignment method applied to IEA CIVED and ICCS. Sociological Methods & Research, 47(4), 687–728.

Muthén, B., & Asparouhov, T. (2013). New methods for the study of measurement invariance with many groups. Mplus www.statmodel.com.

Muthén, L. K., & Muthén, B. (1998). Mplus user’s guide: Statistical analysis with latent variables. Muthén & Muthén.

Niehaus, K., & Adelson, J. L. (2013). Self-concept and native language background: A study of measurement invariance and cross-group comparisons in third grade. Journal of Educational Psychology, 105(1), 226–240. https://doi.org/10.1037/a0030556

Odell, B., Gierl, M., & Cutumisu, M. (2021). Testing measurement invariance of PISA 2015 mathematics, science, and ICT scales using the alignment method. Studies in Educational Evaluation, 68, 100965. https://doi.org/10.1016/j.stueduc.2020.100965

OECD. (2013). PISA 2012 assessment and analytical framework: Mathematics, reading, science, problem solving and financial literacy. Organisation for Economic Co-Operation and Development. https://doi.org/10.1787/19963777

OECD. (2014). PISA 2012 technical report. Organisation for Economic Co-Operation and Development. https://www.oecd.org/pisa/pisaproducts/PISA-2012-technical-report-final.pdf.

Pajares, F. (1996). Self-efficacy beliefs in academic settings. Review of Educational Research, 66(4), 543–578.

Parker, P. D., Marsh, H. W., Ciarrochi, J., Marshall, S., & Abduljabbar, A. S. (2014). Juxtaposing math self-efficacy and self-concept as predictors of long-term achievement outcomes. Educational Psychology, 34(1), 29–48.

Putnick, D. L., & Bornstein, M. H. (2016). Measurement invariance conventions and reporting: The state of the art and future directions for psychological research. Developmental Review, 41, 71–90.

Randhawa, B. S., Beamer, J. E., & Lundberg, I. (1993). Role of mathematics self-efficacy in the structural model of mathematics achievement. Journal of Educational Psychology, 85(1), 41.

Rogers, C. R. (1951). Client-centered therapy; its current practice, implications, and theory (pp. xii, 560). Houghton Mifflin.

Rosenberg, M. (1979). Conceiving the self. RE Krieger.

Rutkowski, L., & Svetina, D. (2014). Assessing the hypothesis of measurement invariance in the context of large-scale international surveys. Educational and Psychological Measurement, 74(1), 31–57. https://doi.org/10.1177/0013164413498257

Schmitt, N., Golubovich, J., & Leong, F. T. (2011). Impact of measurement invariance on construct correlations, mean differences, and relations with external correlates: An illustrative example using Big Five and RIASEC measures. Assessment, 18(4), 412–427.

Schunk, D. H. (1989). Self-efficacy and achievement behaviors. Educational Psychology Review, 1(3), 173–208. https://doi.org/10.1007/BF01320134

Shavelson, R. J., Hubner, J. J., & Stanton, G. C. (1976). Self-concept: Validation of construct interpretations. Review of Educational Research, 46(3), 407–441.

Svetina, D., Rutkowski, L., & Rutkowski, D. (2020). Multiple-group invariance with categorical outcomes using updated guidelines: An illustration using Mplus and the lavaan/semtools packages. Structural Equation Modeling: A Multidisciplinary Journal, 27(1), 111–130.

Teo, T., & Kam, C. (2014). A measurement invariance analysis of the general self-efficacy scale on two different cultures. Journal of Psychoeducational Assessment, 32(8), 762–767. https://doi.org/10.1177/0734282914531707

Usher, E. L., & Pajares, F. (2006). Sources of academic and self-regulatory efficacy beliefs of entering middle school students. Contemporary Educational Psychology, 31(2), 125–141.

Uysal, N. K., & Arıkan, Ç. A. (2018). Measurement invariance of science self-efficacy scale in PISA. 5(2), 14.

Van de Schoot, R., Lugtig, P., & Hox, J. (2012). A checklist for testing measurement invariance. European Journal of Developmental Psychology, 9(4), 486–492.

van de Vijver, F. J. (2018). Towards an integrated framework of bias in noncognitive assessment in international large-scale studies: Challenges and prospects. Educational Measurement: Issues and Practice, 37(4), 49–56.

Vandenberg, R. J., & Lance, C. E. (2000). A review and synthesis of the measurement invariance literature: Suggestions, practices, and recommendations for organizational research. Organizational Research Methods, 3(1), 4–70.

Widaman, K. F., & Reise, S. P. (1997). Exploring the measurement invariance of psychological instruments: Applications in the substance use domain. In The science of prevention: Methodological advances from alcohol and substance abuse research (pp. 281–324). American Psychological Association. https://doi.org/10.1037/10222-009

Zhang, D., & Wang, C. (2020). The relationship between mathematics interest and mathematics achievement: Mediating roles of self-efficacy and mathematics anxiety. International Journal of Educational Research, 104, 101648.

Funding

Open access funding provided by University of Gothenburg.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Yi Ding. Department of Education and Special Education, University of Gothenburg, Gothenburg, Sweden. E-mail: yi.ding@gu.se

Current themes of research:

Educational psychology. Cross-cultural psychology. Comparative education. Quantitative research methods. Psychometric methods.

Most relevant publications in the field of Psychology of Education:

Ding, Y., Klapp, A., & Yang Hansen, K. (2021a). Construct Validity and Measurement Invariance of Mathematics Self-Efficacy and Self-Concept in Swedish PISA 2003 and 2012. [Paper presentation]. World Education Research Association (WERA) 2021 Virtual Focal Meeting, Santiago de Compostela, Spain. https://gup.ub.gu.se/publication/305664.

Ding, Y., Klapp, A., & Yang Hansen, K. (2021b). Measurement Invariance in Comparing Mathematics Self-Efficacy and Self-Concept across 40 Countries and Economies in PISA 2003 and 2012. [Paper presentation]. European Conference on Educational Research (ECER) 2021, European Educational Research Association (EERA), Geneva (online), Switzerland. https://eera-ecer.de/ecer-programmes/conference/26/contribution/51059/.

Kajsa Yang Hansen. Department of Education and Special Education, University of Gothenburg, Gothenburg, Sweden. E-mail: kajsa.yang-hansen@ped.gu.se

Current themes of research:

Educational equity. Large-scale assessment. Psychometric methods. Academic resilience. Motivation in mathematics.

Most relevant publications in the field of Psychology of Education:

Thorsen, C., Yang Hansen, K., & Johansson, S (2021). The Mechanisms of Interest and Perseverance among Academically Resilient and Non-Resilient Students: Evidence from Swedish Longitudinal Data. British Journal of Educational Psychology e12431. https://doi.org/10.1111/bjep.12431.

Thorsen, C., Johansson, S. & Yang Hansen, K. (2021). Do you need to be gritty to learn English? The importance of the performance domain. Journal for the Psychology of Language Learning, 3(2), 61-85.

Alli Klapp. Department of Education and Special Education, University of Gothenburg, Gothenburg, Sweden. E-mail: alli.klapp@ped.gu.se

Current themes of research:

Educational psychology. Educational assessment. Educational evaluation. Teacher education. Psychometric methods.

Most relevant publications in the field of Psychology of Education:

Klapp, A. (2018). Does academic and social self-concept and motivation explain the effect of grading on students’ achievement? European Journal of Psychology of Education, 33(2), 355–376.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ding, Y., Yang Hansen, K. & Klapp, A. Testing measurement invariance of mathematics self-concept and self-efficacy in PISA using MGCFA and the alignment method. Eur J Psychol Educ 38, 709–732 (2023). https://doi.org/10.1007/s10212-022-00623-y

DOI: https://doi.org/10.1007/s10212-022-00623-y