Abstract

Sign languages (SLs) are the natural languages used by Deaf communities to communicate with each other. Signers use visible parts of their bodies, such as their hands, to convey messages without sound. Because of this change in modality, SLs have to be represented differently in natural language processing (NLP) tasks: inputs are usually presented as video data rather than text or sound, which makes even simple tasks computationally intensive. Moreover, the applicability of NLP techniques to SL processing is limited by the linguistic characteristics of SLs. For instance, current research in SL recognition has centered on lexical sign identification. However, SLs tend to exhibit smaller vocabularies than vocal languages, as signers encode part of their message through highly iconic signs that are not lexicalized. Thus, a lot of potentially relevant information is lost to most NLP algorithms. Furthermore, most documented SL corpora contain fewer than a hundred hours of video, far from enough to train most non-symbolic NLP approaches. This article proposes a method for the unsupervised identification of phonetic units in SL videos, based on image thresholding using the Liddell and Johnson Movement-Hold Model [13]. The procedure strives to identify the smallest possible linguistic units that may carry relevant information, in an effort to avoid losing sub-lexical data that would otherwise be missed by most NLP algorithms. Furthermore, the process enables the elimination of noisy or redundant video frames from the input, decreasing overall computation costs. The algorithm was tested on a collection of Mexican Sign Language videos, and the relevance of the extracted segments was assessed by human judges. Further comparisons were carried out against French Sign Language (LSF) resources, to explore how well the algorithm performs across different SLs.
The results show that the frames selected by the algorithm contained enough information to remain comprehensible to human signers. In some cases, as much as 80% of the available frames could be discarded without loss of comprehensibility, which may have direct repercussions on how SLs are represented, transmitted and processed electronically in the future.
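The abstract does not spell out the procedure, so the following is only an illustrative sketch and not the authors' algorithm. It assumes that hold segments show up as runs of near-identical frames, measures motion as the mean absolute difference between consecutive grayscale frames, and separates low-motion from high-motion transitions with an Otsu-style threshold. The function names (`motion_energy`, `otsu_threshold`, `select_hold_frames`) and the synthetic clip are hypothetical.

```python
import numpy as np


def motion_energy(frames):
    """Mean absolute pixel change between consecutive grayscale frames."""
    frames = np.asarray(frames, dtype=float)   # shape (T, H, W); float avoids uint8 wraparound
    return np.abs(np.diff(frames, axis=0)).mean(axis=(1, 2))   # length T - 1


def otsu_threshold(values, bins=64):
    """Cut that maximises the between-class variance of a 1-D sample."""
    hist, edges = np.histogram(np.asarray(values, dtype=float), bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    weights = hist / hist.sum()
    w0 = np.cumsum(weights)                    # mass of the low-motion class
    w1 = 1.0 - w0                              # mass of the high-motion class
    cum_mean = np.cumsum(weights * centers)
    valid = (w0 > 0) & (w1 > 0)
    between = np.zeros_like(w0)
    between[valid] = (cum_mean[-1] * w0[valid] - cum_mean[valid]) ** 2 / (
        w0[valid] * w1[valid]
    )
    return edges[1:][np.argmax(between)]       # upper edge of the best bin


def select_hold_frames(frames):
    """Keep one representative frame index per low-motion (assumed hold) run."""
    energy = motion_energy(frames)
    is_hold = energy < otsu_threshold(energy)
    keep, start = [], None
    for i, hold in enumerate(is_hold):
        if hold and start is None:
            start = i                          # a still run begins at frame i
        elif not hold and start is not None:
            keep.append((start + i) // 2)      # middle frame of the still run
            start = None
    if start is not None:                      # clip ends during a still run
        keep.append((start + len(is_hold)) // 2)
    return keep


# Synthetic clip (an assumption, not real SL data): 10 still dark frames,
# 10 flickering frames standing in for movement, then 10 still bright frames.
still_a = np.zeros((10, 8, 8), dtype=np.uint8)
moving = np.stack([np.full((8, 8), 255 if j % 2 == 0 else 0, dtype=np.uint8)
                   for j in range(10)])
still_b = np.full((10, 8, 8), 255, dtype=np.uint8)
video = np.concatenate([still_a, moving, still_b])
kept = select_hold_frames(video)
```

On this toy input the sketch keeps a single frame from each still stretch and drops the rest. Real footage would likely need smoothing of the motion signal and a minimum run length before such a rule became usable.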

References

Akmeliawati, R., Ooi, M.P.L., Kuang, Y.C.: Real-time Malaysian sign language translation using colour segmentation and neural network. In: 2007 IEEE Instrumentation & Measurement Technology Conference IMTC 2007, pp. 1–6. IEEE (2007)

Alvarez Hidalgo, A., Acosta Arrellano, A., Moctezuma Contreras, C., Sanabria Ramos, E., Maya Ortega, E., Alvárez Hidalgo, G., Márquez Vaca, M., Sanabria Ramos, M., Romero Rojas, N.: Dielseme 2 diccionario de lengua de señas mexicana (2009). http://educacionespecial.sepdf.gob.mx/dielseme.aspx

Aronoff, M., Meir, I., Padden, C., Sandler, W.: Morphological universals and the sign language type. In: Booij, G., van Marle, J. (eds.) Yearbook of Morphology 2004, Yearbook of Morphology, pp. 19–39. Springer, Netherlands, Dordrecht (2005). https://doi.org/10.1007/1-4020-2900-4_2

Aronoff, M., Meir, I., Sandler, W.: The paradox of Sign Language morphology. Language 81(2), 301–344 (2005)

Bradski, G.: The OpenCV Library. Dr. Dobb’s Journal of Software Tools (2000)

Brentari, D.: Sign language phonology: the word and sub-lexical structure. In: Pfau, R., Steinbach, M., Woll, B. (eds.) Handbook of Sign Language Linguistics. Mouton, Berlin (2012)

Brentari, D., Fenlon, J., Cormier, K.: Sign Language Phonology (2018). https://doi.org/10.1093/acrefore/9780199384655.013.117. ISBN: 9780199384655

Fenk-Oczlon, G., Pilz, J.: Linguistic complexity: relationships between phoneme inventory size, syllable complexity, word and clause length, and population size. Front. Commun. (2021). https://doi.org/10.3389/fcomm.2021.626032

Filhol, M.: Zebedee: a lexical description model for sign language synthesis. Internal, LIMSI (2009)

Fleming, L.: Phoneme inventory size and the transition from monoplanar to dually patterned speech. J. Lang. Evolut. 2(1), 52–66 (2017). https://doi.org/10.1093/jole/lzx010

Gonzalez, M., Filhol, M., Collet, C.: Semi-automatic sign language corpora annotation using lexical representations of signs. In: LREC, pp. 2430–2434 (2012)

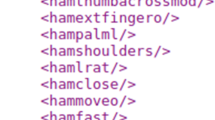

Hanke, T.: HamNoSys: representing sign language data in language resources and language processing contexts. In: LREC, vol. 4, pp. 1–6 (2004)

Johnson, R.E., Liddell, S.K.: A segmental framework for representing signs phonetically. Sign Lang. Stud. 11(3), 408–463 (2011)

Johnson, R.E., Liddell, S.K.: Toward a phonetic representation of signs: sequentiality and contrast. Sign Lang. Stud. 11(2), 241–274 (2011)

Jurafsky, D., Martin, J.H.: Speech and Language Processing: An Introduction to Natural Language Processing, Computational Linguistics, and Speech Recognition. Prentice Hall (2009)

Kacorri, H.: A survey and critique of facial expression synthesis in sign language animation

Liddell, S.K., Johnson, R.E.: American sign language: the phonological base. Sign Lang. Stud. 64(1), 195–277 (1989)

Lu, P., Huenerfauth, M.: Learning a vector-based model of American sign language inflecting verbs from motion-capture data. In: Proceedings of the Third Workshop on Speech and Language Processing for Assistive Technologies, pp. 66–74. Association for Computational Linguistics (2012)

Manning, C.D., Schütze, H.: Foundations of Statistical Natural Language Processing, 1st edn. The MIT Press, Cambridge (1999)

Martínez-Guevara, N., Cruz-Ramírez, N., Rojano-Cáceres, J.R.: Robust algorithm of clustering for the detection of hidden variables in Bayesian networks. Res. Comput. Sci. 148, 267–276 (2019)

McCarty, A.L.: Notation Systems for Reading and Writing Sign Language. Anal. Verbal Behav. 20(1), 129–134 (2004). https://doi.org/10.1007/BF03392999

Nazari, Z., Kang, D., Asharif, M.R., Sung, Y., Ogawa, S.: A new hierarchical clustering algorithm. In: 2015 International Conference on Intelligent Informatics and Biomedical Sciences (ICIIBMS), pp. 148–152. IEEE (2015)

Ong, S.C.W., Ranganath, S.: Automatic sign language analysis: a survey and the future beyond lexical meaning. IEEE Trans. Pattern Anal. Mach. Intell. 27(6), 873–891 (2005). https://doi.org/10.1109/TPAMI.2005.112

Perniss, P., Özyürek, A., Morgan, G.: The influence of the visual modality on language structure and conventionalization: insights from sign language and gesture. Top. Cogn. Sci. 7(1), 2–11 (2015). https://doi.org/10.1111/tops.12127

Quer, J., Steinbach, M.: Handling sign language data: the impact of modality. Front. Psychol. 10, 483 (2019). https://doi.org/10.3389/fpsyg.2019.00483

Rastgoo, R., Kiani, K., Escalera, S.: Sign language recognition: a deep survey. Expert Syst. Appl. 164, 113794 (2021). https://doi.org/10.1016/j.eswa.2020.113794

Reagan, T.: Sign language and the deaf-world: ‘listening without hearing’. In: Linguistic Legitimacy and Social Justice, pp. 135–174. Springer (2019)

Sandler, W.: The phonological organization of Sign Languages. Lang. Linguist. Compass 6(3), 162–182 (2012). https://doi.org/10.1002/lnc3.326

SEMATOS: SEMATOS portail européen des langues de signes (2013). http://www.sematos.eu/lsf.html

Sutton, V.: SignWriting. Sign languages are written languages. The SignWriting Press Lsf, La Jolla (2009)

Sutton-Spence, R., Woll, B.: The Linguistics of British Sign Language: An Introduction. Cambridge University Press, Cambridge (1999)

Takkinen, R.: Some observations on the use of HamNoSys (Hamburg Notation System for Sign Languages) in the context of the phonetic transcription of children’s signing. Sign Lang. Linguist. 8(1–2), 99–118 (2005). https://doi.org/10.1075/sll.8.1.05tak

Valli, C., Lucas, C.: Linguistics of American Sign Language: An Introduction. Gallaudet University Press, Washington (1995)

Verma, V.K., Srivastava, S.: A perspective analysis of phonological structure in Indian sign language. In: Proceedings of First International Conference on Smart System, Innovations and Computing, pp. 175–180. Springer, Berlin (2018)

Vigliocco, G., Perniss, P., Vinson, D.: Language as a multimodal phenomenon: implications for language learning, processing and evolution. Philos. Trans. R. Soc. B Biol. Sci. (2014). https://doi.org/10.1098/rstb.2013.0292

Vogler, C., Metaxas, D.: A framework for recognizing the simultaneous aspects of American sign language. Comput. Vis. Image Underst. 81(3), 358–384 (2001)

Yousefi, J.: Image Binarization Using Otsu Thresholding Algorithm. University of Guelph, Ontario (2011)

Zhou, Z., Menne, T., Li, K., Xu, K., Feng, Z., Lee, Ch.: System and method for automated sign language recognition (2018). US Patent App. 10/109,219

Zwitserlood, I.: Sign language lexicography in the early 21st century and a recently published dictionary of sign language of the Netherlands. Int. J. Lexicogr. 23(4), 443–476 (2010). https://doi.org/10.1093/ijl/ecq031

Acknowledgements

We thank CONACyT for supporting the preparation of this paper; the “Secretaria de Educacion Especial de la SEP” of México for providing access to, and permission to use, the DIELSEME database; and the doctorate in Computer Science of the Veracruzana University for supporting the development of this project. Niels Martínez is supported by a CONACyT doctoral scholarship, No. 711994. Arturo Curiel is supported by the Cátedras CONACYT project “Infraestructura para agilizar el desarrollo de sistemas centrados en el usuario”, Ref. 3053.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Martínez-Guevara, N., Rojano-Cáceres, JR. & Curiel, A. Unsupervised extraction of phonetic units in sign language videos for natural language processing. Univ Access Inf Soc 22, 1143–1151 (2023). https://doi.org/10.1007/s10209-022-00888-6

DOI: https://doi.org/10.1007/s10209-022-00888-6