Abstract

The protection of private information is of vital importance in data-driven research, business and government. The conflict between privacy and utility has triggered intensive research in the computer science and statistics communities, who have developed a variety of methods for privacy-preserving data release. Among the main concepts that have emerged are anonymity and differential privacy. Today, another solution is gaining traction: synthetic data. However, the road to privacy is paved with NP-hard problems. In this paper, we focus on the NP-hard challenge to develop a synthetic data generation method that is computationally efficient, comes with provable privacy guarantees and rigorously quantifies data utility. We solve a relaxed version of this problem by studying a fundamental, but at first glance completely unrelated, problem in probability concerning the concept of covariance loss. Namely, we find a nearly optimal and constructive answer to the question of how much information is lost when we take conditional expectation. Surprisingly, this excursion into theoretical probability produces mathematical techniques that allow us to derive constructive, approximately optimal solutions to difficult applied problems concerning microaggregation, privacy and synthetic data.


1 Introduction

“Sharing is caring”, we are taught. But if we care about privacy, then we had better think twice about what we share. As governments and companies are increasingly collecting vast amounts of personal information (often without the consent or knowledge of the user [32]), it is crucial to ensure that the fundamental right to privacy of the subjects the data refer to is guaranteed (see Footnote 1). We are facing the problem of how to release data that are useful for making accurate decisions and predictions without disclosing sensitive information about specific identifiable individuals.

The conflict between privacy and utility has triggered intensive research in the computer science and statistics communities, who have developed a variety of methods for privacy-preserving data release. Among the main concepts that have emerged are anonymity and differential privacy [8]. Today, another solution is gaining traction: synthetic data [34]. However, the road to privacy is paved with NP-hard problems. For example, finding the optimal partition into k-anonymous groups is NP-hard [1]. Optimal multivariate microaggregation is NP-hard [13, 28] (although the error metric used in these papers differs from the one used in our paper). Moreover, assuming the existence of one-way functions, there is no polynomial time, differentially private algorithm for generating Boolean synthetic data that preserves all two-dimensional marginals with accuracy o(1) [18].

No matter which privacy preserving strategy one pursues, in order to implement that strategy the challenge is to navigate this NP-hard privacy jungle and develop a method that is computationally efficient, comes with provable privacy guarantees and rigorously quantifies data utility. This is the main topic of our paper.

1.1 State of the Art

Anonymity captures the understanding that it should not be possible to re-identify any individual in the published data [8]. One of the most popular ways to ensure anonymity is the concept of k-anonymity [35, 36]. A dataset has the k-anonymity property if the information for each person contained in the dataset cannot be distinguished from that of at least \(k-1\) other individuals whose information also appears in the dataset. Although the privacy guarantees offered by k-anonymity are limited, its simplicity has made it a popular part of the arsenal of privacy enhancing technologies, see e.g. [8, 12, 21, 23]. k-anonymity is often implemented via the concept of microaggregation [7, 8, 22, 27, 33]. The principle of microaggregation is to partition a data set into groups of at least k similar records and to replace the records in each group by a prototypical record (e.g. the centroid).

Finding the optimal partition into k-anonymous groups is an NP-hard problem [1]. Several practical algorithms exist that produce acceptable empirical results, albeit without any theoretical bounds on the information loss [6,7,8]. In light of the popularity of k-anonymity, it is thus quite surprising that designing a computationally efficient algorithm for k-anonymity that comes with theoretical utility guarantees is still an open problem.

As k-anonymity is prone to various attacks, differential privacy is generally considered a more robust type of privacy.

Differential privacy formalizes the intuition that the presence or absence of any single individual record in the database or data set should be unnoticeable when looking at the responses returned for the queries [10]. Differential privacy is a popular and robust method that comes with a rigorous mathematical framework and provable guarantees. It can protect aggregate information, but not sensitive information in general. Differential privacy is usually implemented via noise injection, where the noise level depends on the query sensitivity. However, the added noise negatively affects the utility of the released data.

As pointed out in [8], microaggregation is a useful primitive to find bridges between privacy models. It is a natural idea to combine microaggregation with differential privacy [31, 33] to address some of the privacy limitations of k-anonymity. As before, the fundamental question is whether there are computationally efficient methods to implement this scheme while also maintaining utility guarantees.

Synthetic data are generated (typically via some randomized algorithm) from existing data such that they maintain the statistical properties of the original data set, but do so without risk of exposing sensitive information. Combining synthetic data with differential privacy is a promising means to overcome key weaknesses of the latter [25, 26, 34, 37]. Clearly, we want the synthetic data to be faithful to the original data, so as to preserve utility. To quantify the faithfulness, we need some similarity metrics. A common and natural choice for tabular data is to try to (approximately) preserve low-dimensional marginals [5, 11, 20].

We model the true data \(x_1,\ldots ,x_n\) as a sequence of n points from the Boolean cube \(\{0,1\}^p\), which is a standard benchmark data model [5, 16, 18, 26]. For example, these can be n health records of patients, each containing p binary parameters (smoker/non-smoker, etc.; see Footnote 2). We seek to transform the true data into synthetic data \(y_1,\ldots ,y_m \in \{0,1\}^p\) that are both differentially private and accurate.

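As mentioned before, we measure accuracy by comparing the marginals of true and synthetic data. Writing \(x_k(j)\) for the j-th coordinate of \(x_k\), a d-dimensional marginal of the true data has the form
\[ \frac{1}{n}\sum_{k=1}^{n} x_k(j_1)\cdots x_k(j_d) \]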

for some given indices \(j_1,\ldots ,j_d \in [p]\). In other words, a low-dimensional marginal is the fraction of the patients whose d given parameters all equal 1. The one-dimensional marginals encode the means of the parameters, and the two-dimensional marginals encode the covariances.

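The accuracy of the synthetic data for a given marginal can be defined as
\[ E(j_1,\ldots,j_d) = \frac{1}{n}\sum_{k=1}^{n} x_k(j_1)\cdots x_k(j_d) - \frac{1}{m}\sum_{k=1}^{m} y_k(j_1)\cdots y_k(j_d). \tag{1.1} \]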

Clearly, the accuracy is bounded by 1 in absolute value.
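As a small illustration (not code from the paper), the marginals and the accuracy \(E(j_1,\ldots,j_d)\) in (1.1) can be computed directly with NumPy. The function names are hypothetical, and column indices are 0-based rather than the 1-based indices in [p] used in the text.

```python
from typing import Sequence

import numpy as np


def marginal(data: np.ndarray, idx: Sequence[int]) -> float:
    """Fraction of rows of a 0/1 array whose entries in the columns idx all equal 1."""
    return float(np.mean(np.prod(data[:, list(idx)], axis=1)))


def marginal_error(x: np.ndarray, y: np.ndarray, idx: Sequence[int]) -> float:
    """E(j_1, ..., j_d) from (1.1): true marginal minus synthetic marginal."""
    return marginal(x, idx) - marginal(y, idx)


# n = 4 true records and m = 3 synthetic records, each with p = 3 binary parameters.
x = np.array([[1, 1, 0], [1, 0, 1], [1, 1, 1], [0, 1, 1]])
y = np.array([[1, 1, 1], [0, 0, 1], [1, 0, 1]])

print(marginal(x, (0, 1)))                      # 0.5
print(round(marginal_error(x, y, (0, 1)), 4))   # 0.1667
```

Since each marginal is a fraction in [0, 1], every value returned by `marginal_error` lies in [-1, 1].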

1.2 Our Contributions

Our goal is to design a randomized algorithm that satisfies the following list of desiderata:

- (i) (synthetic data): the algorithm outputs a list of vectors \(y_1,\ldots ,y_m \in \{0,1\}^p\);
- (ii) (efficiency): the algorithm requires only polynomial time in n and p;
- (iii) (privacy): the algorithm is differentially private;
- (iv) (accuracy): the low-dimensional marginals of \(y_1,\ldots ,y_m\) are close to those of \(x_1,\ldots ,x_n\).

There are known algorithms that satisfy any three of the above four requirements if we restrict the accuracy condition (iv) to two-dimensional marginals.

Indeed, if (i) is dropped, one can first compute the mean \(\frac{1}{n}\sum _{k=1}^{n}x_{k}\) and the covariance matrix \(\frac{1}{n}\sum _{k=1}^{n}x_{k}x_{k}^{T}-(\frac{1}{n}\sum _{k=1}^{n}x_{k})(\frac{1}{n}\sum _{k=1}^{n}x_{k})^{T}\), add some noise to achieve differential privacy, and output i.i.d. samples from the Gaussian distribution with the noisy mean and covariance.
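The Gaussian route that drops requirement (i) can be sketched as follows. This is a hypothetical illustration, not a calibrated mechanism: `noise_scale` is an uncalibrated placeholder (the text does not specify how much noise is needed for a given privacy level), and the perturbed covariance is projected onto the positive-semidefinite cone so that the Gaussian is well defined. The output consists of real vectors rather than points of \(\{0,1\}^p\), which is exactly why (i) fails.

```python
import numpy as np


def gaussian_surrogate(x: np.ndarray, m: int, noise_scale: float,
                       rng: np.random.Generator) -> np.ndarray:
    """Sample m points from a Gaussian with perturbed empirical mean and covariance.

    noise_scale is a hypothetical, uncalibrated parameter; choosing it to reach
    a target privacy level is not shown here.
    """
    n, p = x.shape
    mean = x.mean(axis=0)
    cov = x.T @ x / n - np.outer(mean, mean)        # (1/n) sum x x^T - mean mean^T
    noisy_mean = mean + rng.laplace(scale=noise_scale, size=p)
    e = rng.laplace(scale=noise_scale, size=(p, p))
    noisy_cov = cov + (e + e.T) / 2                  # keep the perturbation symmetric
    w, v = np.linalg.eigh(noisy_cov)                 # clip negative eigenvalues (PSD projection)
    noisy_cov = (v * np.clip(w, 0.0, None)) @ v.T
    return rng.multivariate_normal(noisy_mean, noisy_cov, size=m)


rng = np.random.default_rng(0)
x = rng.integers(0, 2, size=(500, 4)).astype(float)
samples = gaussian_surrogate(x, m=1000, noise_scale=0.01, rng=rng)
print(samples.shape)  # (1000, 4)
```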

Suppose (ii) is dropped. It suffices to construct a differentially private probability measure \(\mu \) on \(\{0,1\}^{p}\) so that \(\int _{\{0,1\}^{p}}x\,d\mu (x)\approx \frac{1}{n}\sum _{k=1}^{n}x_{k}\) and \(\int _{\{0,1\}^{p}}xx^{T}\,d\mu (x)\approx \frac{1}{n}\sum _{k=1}^{n}x_{k}x_{k}^{T}\). After \(\mu \) is constructed, one can generate i.i.d. samples \(y_{1},\ldots ,y_m\) from \(\mu \). The measure \(\mu \) can be constructed as follows: first add Laplace noise to \(\frac{1}{n}\sum _{k=1}^{n}x_{k}\) and \(\frac{1}{n}\sum _{k=1}^{n}x_{k}x_{k}^{T}\) (see Lemma 2.4 below) and then let \(\mu \) be a probability measure on \(\{0,1\}^{p}\) that minimizes \(\Vert \int _{\{0,1\}^{p}}x\,d\mu (x)-(\frac{1}{n}\sum _{k=1}^{n}x_{k}+\mathrm {noise})\Vert _{\infty }+\Vert \int _{\{0,1\}^{p}}xx^{T}\,d\mu (x)-(\frac{1}{n}\sum _{k=1}^{n}x_{k}x_{k}^{T}+\mathrm {noise})\Vert _{\infty }\), where \(\Vert \cdot \Vert _{\infty }\) is the \(\ell ^{\infty }\) norm on \({\mathbb {R}}^{p}\) or \({\mathbb {R}}^{p^{2}}\). However, this requires time exponential in p, since the set of all probability measures on \(\{0,1\}^{p}\) can be identified with a convex subset of \({\mathbb {R}}^{2^{p}}\). See [5].

If (iii) or (iv) is dropped, the problem is trivial: in the former case, we can simply output the true data; in the latter, all zeros.

While there are known algorithms that provably satisfy (i)–(iii) and empirically satisfy (iv) in simulations (see e.g., [15, 19, 27, 30]), the challenge is to develop an algorithm that provably satisfies all four conditions.

Ullman and Vadhan [18] showed that, assuming the existence of one-way functions, one cannot achieve (i)–(iv) even for \(d=2\), if we require in (iv) that all of the d-dimensional marginals be preserved accurately. More precisely, there is no polynomial time, differentially private algorithm for generating synthetic data in \(\{0,1\}^{p}\) that preserves all of the two-dimensional marginals with accuracy o(1) if one-way functions exist. This remarkable no-go result by Ullman and Vadhan could already put an end to our quest for an algorithm that rigorously achieves conditions (i)–(iv).

Surprisingly, however, a slightly weaker interpretation of (iv) suffices to put our quest on a more successful path. Indeed, we will show in this paper that one can achieve (i)–(iv) if we require in (iv) that most of the d-dimensional marginals be preserved accurately. Remarkably, our result holds not only for two-dimensional marginals, but for marginals of any given degree.

Note that even if the differential privacy condition in (iii) is replaced by the condition of anonymous microaggregation, it is still a challenging open problem to develop an algorithm that fulfills all these desiderata. In this paper we will solve this problem by deriving a computationally efficient anonymous microaggregation framework that comes with provable accuracy bounds.

Covariance loss. We approach the aforementioned goals by studying a fundamental, but at first glance completely unrelated, problem in probability. This problem is concerned with the most basic notion of probability: conditional expectation. We want to answer the fundamental question:

“How much information is lost when we take conditional expectation?”

The law of total variance shows that taking conditional expectation of a random variable underestimates the variance. A similar phenomenon holds in higher dimensions: taking conditional expectation of a random vector underestimates the covariance (in the positive-semidefinite order). We may ask: how much covariance is lost? And what sigma-algebra of given complexity minimizes the covariance loss?

Answering this fundamental probability question amounts to finding, among all sigma-algebras of given complexity, the one that minimizes the covariance loss. We will derive a nearly optimal bound based on a careful explicit construction of a specific sigma-algebra. Amazingly, this excursion into theoretical probability produces mathematical techniques that are well suited to solving the previously discussed challenging practical problems concerning microaggregation and privacy.

1.3 Private, Synthetic Data?

Now that we have described the spirit of our main results, let us introduce them in more detail.

As mentioned before, it is known from Ullman and Vadhan [18] that it is generally impossible to efficiently make private synthetic data that accurately preserves all low-dimensional marginals. However, as we will prove, it is possible to efficiently construct private synthetic data that preserves most of the low-dimensional marginals.

To state our goal mathematically, we average the accuracy (in the \(L^2\) sense) over all \(\left( {\begin{array}{c}p\\ d\end{array}}\right) \) subsets of indices \(\{i_1,\ldots ,i_d\} \subset [p]\), then take the expectation over the randomness in the algorithm. In other words, we would like to see
\[ \mathbb{E}\, \binom{p}{d}^{-1} \sum_{1 \le i_1 < \cdots < i_d \le p} E(i_1,\ldots,i_d)^2 \le \delta^2 \tag{1.2} \]
for some small \(\delta \), where \(E(i_1,\ldots ,i_d)\) is defined in (1.1). If this happens, we say that the synthetic data is \(\delta \)-accurate for d-dimensional marginals on average. Using Markov's inequality, we can see that the synthetic data is o(1)-accurate for d-dimensional marginals on average if and only if with high probability, most of the d-dimensional marginals are asymptotically accurate; more precisely, with probability \(1-o(1)\), a \(1-o(1)\) fraction of the d-dimensional marginals of the synthetic data is within o(1) of the corresponding marginals of the true data.
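For small p, the average of \(E(i_1,\ldots,i_d)^2\) over all d-subsets (the quantity inside the expectation in (1.2)) can be evaluated by brute force. The sketch below uses hypothetical helper names, 0-based indices, and one fixed realization of the synthetic data, so the outer expectation is omitted. It also checks the Markov-type consequence mentioned above: the fraction of d-subsets with \(|E| > t\) is at most the mean of \(E^2\) divided by \(t^2\).

```python
import itertools

import numpy as np


def marginal_errors(x: np.ndarray, y: np.ndarray, d: int) -> np.ndarray:
    """E(i_1, ..., i_d) for every index set i_1 < ... < i_d (0-based)."""
    p = x.shape[1]
    return np.array([
        x[:, list(idx)].prod(axis=1).mean() - y[:, list(idx)].prod(axis=1).mean()
        for idx in itertools.combinations(range(p), d)
    ])


rng = np.random.default_rng(0)
x = rng.integers(0, 2, size=(200, 8))   # true data, n = 200, p = 8
y = rng.integers(0, 2, size=(100, 8))   # stand-in "synthetic" data, m = 100

errs = marginal_errors(x, y, d=2)
mean_sq = float(np.mean(errs ** 2))     # binom(p, d)^{-1} * sum of E^2
t = 0.1
frac_bad = float(np.mean(np.abs(errs) > t))
print(len(errs), frac_bad <= mean_sq / t ** 2)   # 28 True
```

The brute-force loop visits all \(\binom{p}{d}\) index sets, so it is only meant for checking small examples.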

Let us state our result informally.

Theorem 1.1

(Private synthetic Boolean data) Let \(\varepsilon ,\kappa \in (0,1)\) and \(n,m \in {\mathbb {N}}\). There exists an \(\varepsilon \)-differentially private algorithm that transforms input data \(x_1,\ldots ,x_n \in \{0,1\}^p\) into output data \(y_1,\ldots ,y_m \in \{0,1\}^p\). Moreover, if \(d=O(1)\), \(d \le p/2\), \(m \gg 1\), \(n \gg (p/\varepsilon )^{1+\kappa }\), then the synthetic data is o(1)-accurate for d-dimensional marginals on average. The algorithm runs in time polynomial in p, n and linear in m, and is independent of d.

Theorem 5.15 gives a formal and non-asymptotic version of this result.

Our method is not specific to Boolean data. It can be used to generate synthetic data with any predefined convex constraints (Theorem 5.14). If we assume that the input data \(x_1,\ldots ,x_n\) lies in some known convex set \(K \subset {\mathbb {R}}^p\), one can make private and accurate synthetic data \(y_1,\ldots ,y_m\) that lies in the same set K.

1.4 Covariance Loss

Our method is based on a new problem in probability theory, a problem that is interesting on its own. It is about the most basic notion of probability: conditional expectation. And the question is: how much information is lost when we take conditional expectation?

The law of total expectation states that for a random variable X and a sigma-algebra \({\mathcal {F}}\), the conditional expectation \(Y = {{\,\mathrm{{\mathbb {E}}}\,}}[X|{\mathcal {F}}]\) gives an unbiased estimate of the mean: \({{\,\mathrm{{\mathbb {E}}}\,}}X = {{\,\mathrm{{\mathbb {E}}}\,}}Y\). The law of total variance, which can be expressed as
\[ \operatorname{Var}(X) - \operatorname{Var}(Y) = \mathbb{E}(X-Y)^2, \]

shows that taking conditional expectation underestimates the variance.

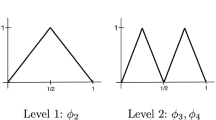

Heuristically, the simpler the sigma-algebra \({\mathcal {F}}\) is, the more variance gets lost. What is the best sigma-algebra \({\mathcal {F}}\) with a given complexity? Among all sigma-algebras \({\mathcal {F}}\) that are generated by a partition of the sample space into k subsets, which one achieves the smallest loss of variance, and what is that loss?

If X is bounded, say \(|X| \le 1\), we can decompose the interval \([-1,1]\) into k subintervals of length 2/k each, take \(F_i\) to be the preimage of each interval under X, and let \({\mathcal {F}}= \sigma (F_1,\ldots ,F_k)\) be the sigma-algebra generated by these events. Since X and Y take values in the same subinterval a.s., we have \(|X-Y| \le 2/k\) a.s. Thus, the law of total variance gives
\[ \operatorname{Var}(X) - \operatorname{Var}(Y) = \mathbb{E}(X-Y)^2 \le \frac{4}{k^2}. \tag{1.3} \]
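The one-dimensional construction behind (1.3) can be checked numerically. In this sketch (not from the paper; the helper name is hypothetical) X is uniform over a sample of points in [-1, 1], and Y replaces each point by the mean of the points in its subinterval, which is the conditional expectation under the empirical measure.

```python
import numpy as np


def interval_conditional_expectation(x: np.ndarray, k: int) -> np.ndarray:
    """Y = E[X | F] under the empirical measure of x, where F is generated by
    splitting [-1, 1] into k intervals of length 2/k."""
    cells = np.minimum(((x + 1.0) * k / 2.0).astype(int), k - 1)  # x = 1 joins the last cell
    y = np.empty_like(x)
    for c in np.unique(cells):
        y[cells == c] = x[cells == c].mean()
    return y


rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=10_000)
for k in (2, 5, 20):
    y = interval_conditional_expectation(x, k)
    loss = x.var() - y.var()        # Var(X) - Var(Y), population variances
    print(k, loss <= 4 / k ** 2)    # the bound (1.3) holds
```

For uniformly spread data the observed loss is well below \(4/k^2\); the bound only uses \(|X-Y| \le 2/k\).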

Let us try to generalize this question to higher dimensions. If X is a random vector taking values in \({\mathbb {R}}^p\), the law of total expectation holds unchanged. The law of total variance becomes the law of total covariance:
\[ \Sigma_X - \Sigma_Y = \mathbb{E}\,(X-Y)(X-Y)^{\mathsf T}, \]

where \(\Sigma _X = {{\,\mathrm{{\mathbb {E}}}\,}}(X-{{\,\mathrm{{\mathbb {E}}}\,}}X)(X-{{\,\mathrm{{\mathbb {E}}}\,}}X)^{\mathsf {T}}\) denotes the covariance matrix of X, and similarly for \(\Sigma _Y\) (see Lemma 3.1 below). Just like in the one-dimensional case, we see that taking conditional expectation underestimates the covariance (in the positive-semidefinite order).
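The law of total covariance is easy to check numerically on a finite sample space. In this sketch (not from the paper) X is uniform over the rows of a data matrix, \({\mathcal F}\) is generated by an arbitrary partition of the rows, and \(Y = {\mathbb E}[X|{\mathcal F}]\) replaces each row by the mean of its block; the helper names are hypothetical.

```python
import numpy as np


def block_means(x: np.ndarray, labels: np.ndarray) -> np.ndarray:
    """Replace each row of x by the mean of the rows sharing its block label."""
    y = np.empty_like(x, dtype=float)
    for b in np.unique(labels):
        y[labels == b] = x[labels == b].mean(axis=0)
    return y


def population_cov(z: np.ndarray) -> np.ndarray:
    """Covariance matrix with normalization 1/n, matching E (Z - EZ)(Z - EZ)^T."""
    return np.cov(z, rowvar=False, bias=True)


rng = np.random.default_rng(1)
x = rng.normal(size=(200, 4))
labels = rng.integers(0, 10, size=200)        # an arbitrary partition into at most 10 blocks
y = block_means(x, labels)

loss = population_cov(x) - population_cov(y)  # Sigma_X - Sigma_Y
rhs = (x - y).T @ (x - y) / len(x)            # E (X - Y)(X - Y)^T
print(np.allclose(loss, rhs))                    # True
print(np.linalg.eigvalsh(loss).min() >= -1e-12)  # True: Sigma_X >= Sigma_Y in PSD order
```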

However, if we naively attempt to bound the loss of covariance as we did to get (1.3), we would face a curse of dimensionality. The unit Euclidean ball in \({\mathbb {R}}^p\) cannot be partitioned into k subsets of diameter, say, 1/4, unless k is exponentially large in p (see e.g. [2]). The following theorem (see Footnote 3) shows that a much better bound can be obtained that does not suffer from the curse of dimensionality.

Theorem 1.2

(Covariance loss) Let X be a random vector in \({\mathbb {R}}^p\) such that \(\left\| X\right\| _2 \le 1\) a.s. Then, for every \(k \ge 3\), there exists a partition of the sample space into at most k sets such that for the sigma-algebra \({\mathcal {F}}\) generated by this partition, the conditional expectation \(Y = {{\,\mathrm{{\mathbb {E}}}\,}}[X|{\mathcal {F}}]\) satisfies
\[ \left\| \Sigma_X - \Sigma_Y \right\|_2 \le C \sqrt{\frac{\log \log k}{\log k}}. \tag{1.4} \]

Here C is an absolute constant. Moreover, if the probability space has no atoms, then the partition can be made with exactly k sets, all of which have the same probability 1/k.

Remark 1.3

(Optimality) The rate in Theorem 1.2 is in general optimal up to a \(\sqrt{\log \log k}\) factor; see Proposition 3.14.

Remark 1.4

(Higher moments) Theorem 1.2 can be automatically extended to higher moments via the following tensorization principle (Theorem 3.10), which states that for all \(d \ge 2\),

Remark 1.5

(Hilbert spaces) The bound (1.4) is dimension-free. Indeed, Theorem 1.2 can be extended to hold for infinite-dimensional Hilbert spaces.

1.5 Anonymous Microaggregation

Let us apply these abstract probability results to the problem of making synthetic data. As before, denote the true data by \(x_1,\ldots ,x_n \in {\mathbb {R}}^p\). Let \(X(i)=x_i\) be the random variable on the sample space [n] equipped with the uniform probability distribution. Obtain a partition \([n]=I_1 \cup \cdots \cup I_m\) from the Covariance Loss Theorem 1.2, where \(m\le k\), and let us assume for simplicity that \(m=k\) and that all sets \(I_j\) have the same cardinality \(|I_j| = n/k\) (this can be achieved whenever k divides n, a requirement that can easily be dropped, as we will discuss later). The conditional expectation \(Y = {{\,\mathrm{{\mathbb {E}}}\,}}[X|{\mathcal {F}}]\) on the sigma-algebra \({\mathcal {F}}= \sigma (I_1,\ldots ,I_k)\) generated by this partition takes values
\[ y_j = \frac{k}{n}\sum_{i \in I_j} x_i, \qquad j = 1,\ldots,k, \tag{1.6} \]

with probability 1/k each. In other words, the synthetic data \(y_1,\ldots ,y_k\) is obtained by taking local averages, or by microaggregation of the input data \(x_1,\ldots ,x_n\). The crucial point is that the synthetic data is obviously generated via (n/k)-anonymous microaggregation. Here, we use the following formal definition of r-anonymous microaggregation.

Definition 1.6

Let \(x_{1},\ldots ,x_{n}\in {\mathbb {R}}^{p}\) be a dataset. Let \(r\in {\mathbb {N}}\). An r-anonymous averaging of \(x_{1},\ldots ,x_{n}\) is a dataset consisting of the points \(\frac{1}{|I_{1}|}\sum _{i\in I_{1}}x_{i},\ldots ,\frac{1}{|I_{m}|}\sum _{i\in I_{m}}x_{i}\) for some partition \([n]=I_{1}\cup \ldots \cup I_{m}\) such that \(|I_{i}|\ge r\) for each \(1\le i\le m\). An r-anonymous microaggregation algorithm \({{{\mathcal {A}}}}(\,)\) with input dataset \(x_{1},\ldots ,x_{n}\in {\mathbb {R}}^{p}\) is the composition of an r-anonymous averaging procedure followed by an arbitrary algorithm.
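The averaging step can be sketched as a small function that checks the size constraint and returns the average of each block (multiplying by \(|I_j|\) recovers the block sums). The partition heuristic `sorted_chunks` is hypothetical and used only to have some valid partition: it sorts by the first coordinate and cuts the records into consecutive chunks. It is not the covariance-loss partition of Theorem 1.2.

```python
import numpy as np


def r_anonymous_averaging(x: np.ndarray, blocks: list, r: int) -> np.ndarray:
    """Average x over each block of a partition of range(n); every block needs >= r rows."""
    if sorted(i for b in blocks for i in b) != list(range(len(x))):
        raise ValueError("blocks must partition range(n)")
    if min(len(b) for b in blocks) < r:
        raise ValueError("every block must contain at least r records")
    return np.array([x[list(b)].mean(axis=0) for b in blocks])


def sorted_chunks(x: np.ndarray, r: int) -> list:
    """Hypothetical partition heuristic: sort by the first coordinate and cut into
    n // r consecutive chunks, each of size at least r (not the paper's construction)."""
    order = np.argsort(x[:, 0], kind="stable")
    return [chunk.tolist() for chunk in np.array_split(order, len(x) // r)]


x = np.arange(12.0).reshape(6, 2)
y = r_anonymous_averaging(x, sorted_chunks(x, r=3), r=3)
print(y.tolist())  # [[2.0, 3.0], [8.0, 9.0]]
```

Any further processing of `y` (rescaling, rounding, resampling) still counts as r-anonymous microaggregation in the sense of the definition, since it only touches the averaged points.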

For any notion of privacy, any post-processing of a private dataset should still be considered private. While a post-processing of an r-anonymous averaging of a dataset is not necessarily an r-anonymous averaging of the original dataset (it might not even consist of vectors), the notion of r-anonymous microaggregation allows a post-processing step after r-anonymous averaging.

What about the accuracy? The law of total expectation \({{\,\mathrm{{\mathbb {E}}}\,}}X = {{\,\mathrm{{\mathbb {E}}}\,}}Y\) becomes \(\frac{1}{n} \sum _{i=1}^n x_i = \frac{1}{k}\sum _{j=1}^k y_j\). As for higher moments, assume that \(\left\| x_i\right\| _2 \le 1\) for all i. Then the Covariance Loss Theorem 1.2 together with the tensorization principle (1.5) yields

Thus, if \(k \gg 1\) and \(d=O(1)\), the synthetic data are accurate in the sense of the mean square average of marginals.

This general principle can be specialized to Boolean data. Using appropriate rescaling, bootstrapping (Section 4.2) and randomized rounding (Section 4.3), we can conclude the following:

Theorem 1.7

(Anonymous synthetic Boolean data) Suppose k divides n. There exists a randomized (n/k)-anonymous microaggregation algorithm that transforms input data \(x_1,\ldots ,x_n \in \{0,1\}^p\) into output data \(y_1,\ldots ,y_m \in \{0,1\}^p\). Moreover, if \(d=O(1)\), \(d \le p/2\), \(k \gg 1\), \(m \gg 1\), the synthetic data is o(1)-accurate for d-dimensional marginals on average. The algorithm runs in time polynomial in p, n and linear in m, and is independent of d.

Theorem 4.6 gives a formal and non-asymptotic version of this result.

1.6 Differential Privacy

How can we pass from anonymity to differential privacy and establish Theorem 1.1? The microaggregation mechanism by itself is not differentially private. However, it reduces the sensitivity of the synthetic data. If a single input data point \(x_i\) is changed, microaggregation (1.6) suppresses the effect of such a change on the synthetic data \(y_j\) by the factor k/n. Once the data have low sensitivity, the classical Laplace mechanism can make them private: one simply has to add Laplacian noise.

This is the gist of the proof of Theorem 1.1. However, several issues arise. One is that we do not know how to make all blocks \(I_j\) of the same size while preserving their privacy, so in the application to privacy we allow them to have arbitrary sizes. Small blocks \(I_j\), however, may cause instability of microaggregation and diminish its beneficial effect on sensitivity. We resolve this issue by downplaying, or damping, the small blocks (Sect. 5.4). The second issue is that adding Laplacian noise to the vectors \(y_j\) may move them outside the set K where the synthetic data must lie (for Boolean data, K is the unit cube \([0,1]^p\)). We resolve this issue by metrically projecting the perturbed vectors back onto K (Sect. 5.5).
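The two mechanisms just described (reduced sensitivity through microaggregation, then noise followed by metric projection onto K) can be illustrated in a toy computation. This is a hypothetical sketch, not the paper's algorithm: the partition is fixed in advance and does not depend on the data, all blocks have equal size so no damping is needed, and the noise scale `sigma` is not calibrated to any privacy level. For \(K = [0,1]^p\), the Euclidean metric projection acts coordinatewise and reduces to clipping.

```python
import numpy as np

rng = np.random.default_rng(2)
n, k, p = 1000, 10, 5
x = rng.integers(0, 2, size=(n, p)).astype(float)   # Boolean input data
blocks = np.arange(n).reshape(k, n // k)            # hypothetical fixed partition, blocks of size n/k


def microaggregate(data: np.ndarray) -> np.ndarray:
    """y_j = (k/n) * sum_{i in I_j} x_i for the fixed blocks above."""
    return data[blocks].mean(axis=1)


def project_onto_unit_cube(v: np.ndarray) -> np.ndarray:
    """Metric projection onto K = [0, 1]^p: clip every coordinate to [0, 1]."""
    return np.clip(v, 0.0, 1.0)


# 1) Sensitivity: changing one record moves the block averages by k/n times as much (L1).
x_neighbor = x.copy()
x_neighbor[0] = 1.0 - x_neighbor[0]                 # flip every bit of record 0
change_out = np.abs(microaggregate(x) - microaggregate(x_neighbor)).sum()
change_in = np.abs(x[0] - x_neighbor[0]).sum()
print(np.isclose(change_out, (k / n) * change_in))  # True

# 2) Noise, then projection back onto K (sigma is a hypothetical, uncalibrated scale).
sigma = 0.05
noisy = microaggregate(x) + rng.laplace(scale=sigma, size=(k, p))
synthetic = project_onto_unit_cube(noisy)
print(synthetic.min() >= 0.0 and synthetic.max() <= 1.0)  # True
```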

1.7 Related Work

There exists a large body of work on privately releasing answers in the interactive and non-interactive query setting. But a major advantage of releasing a synthetic data set instead of just the answers to specific queries is that synthetic data opens up a much richer toolbox (clustering, classification, regression, visualization, etc.), and thus much more flexibility, to analyze the data.

In [4], Blum, Ligett, and Roth gave an \(\varepsilon \)-differentially private synthetic data algorithm whose accuracy scales logarithmically with the number of queries, but the complexity scales exponentially with p. The papers [17, 26] propose methods for producing private synthetic data with an error bound of about \(\tilde{{\mathcal {O}}}(\sqrt{n} p^{1/4})\) per query. However, the associated algorithms have running time that is at least exponential in p. In [5], Barak et al. derive a method for producing accurate and private synthetic Boolean data based on linear programming with a running time that is exponential in p. This should be contrasted with the fact that our algorithm runs in time polynomial in p and n, see Theorem 1.7.

We emphasize that our method is designed to produce synthetic data. But, as suggested by the reviewers, we briefly discuss how well our method preserves d-way marginals compared with methods in the non-synthetic data regime. Here, we consider the dependence of n on p as well as the accuracy we achieve versus existing methods.

Dependence of n on p: In order to release 1-way marginals with any non-trivial accuracy on average and with \(\varepsilon \)-differential privacy, one already needs \(n \gtrsim p\) [10, Theorem 8.7]. Our main result Theorem 1.1 on differential privacy only requires n to grow slightly faster than linearly in p.

If we just want to privately release the d-way marginals without creating synthetic data, and moreover relax \(\varepsilon \)-differential privacy to \((\varepsilon ,\delta )\)-differential privacy, one might relax the dependence of n on p. Specifically, \(n\gg p^{\lceil d/2\rceil /2}\sqrt{\log (1/\delta )}/\varepsilon \) suffices [11]. In particular, when \(d=2\), this means that \(n\gg \sqrt{p\log (1/\delta )}/\varepsilon \) suffices. On the other hand, our algorithm does not depend on d. Moreover, for \(d\ge 5\), the dependence of n on p required in Theorem 1.1 is less restrictive than in [11].

Accuracy in n: As mentioned above, Theorem 5.15 gives a formal and non-asymptotic version of Theorem 1.1. The average error of d-way marginals we achieve has decay of order \(\sqrt{\log \log n/\log n}\) in n. In Remark 5.16, we show that even for \(d=1,2\), no polynomial time differentially private algorithm can have average error of the marginals decay faster than \(n^{-a}\) for any \(a>0\), assuming the existence of one-way functions.

However, if we only need to release d-way marginals with differential privacy but without creating synthetic data, then one can have the average error of the d-way marginals decay at the rate \(1/\sqrt{n}\) [11].

1.8 Outline of the Paper

The rest of the paper is organized as follows. In Sect. 2, we provide basic notation and other preliminaries. Section 3 is concerned with the concept of covariance loss. We give a constructive and nearly optimal answer to the problem of how much information is lost when we take conditional expectation. In Sect. 4, we use the tools developed for covariance loss to derive a computationally efficient microaggregation framework that comes with provable accuracy bounds regarding low-dimensional marginals. In Sect. 5, we obtain analogous versions of these results in the framework of differential privacy.

2 Preliminaries

2.1 Basic Notation

The approximate inequality signs \(\lesssim \) hide absolute constant factors; thus \(a \lesssim b\) means that \(a \le C b\) for a suitable absolute constant \(C>0\). A list of elements \(\nu _{1},\ldots ,\nu _{k}\) of a metric space M is an \(\alpha \)-covering, where \(\alpha >0\), if every element of M has distance less than \(\alpha \) from one of \(\nu _{1},\ldots ,\nu _{k}\). For \(p\in {\mathbb {N}}\), define
\[ [p] := \{1,\ldots,p\}. \]

2.2 Tensors

The marginals of a random vector can be conveniently represented in tensor notation. A tensor is a d-way array \(X \in {\mathbb {R}}^{p \times \cdots \times p}\). In particular, 1-way tensors are vectors, and 2-way tensors are matrices. A simple example of a tensor is the rank-one tensor \(x^{\otimes d}\), which is constructed from a vector \(x \in {\mathbb {R}}^p\) by multiplying its entries:
\[ x^{\otimes d}(i_1,\ldots,i_d) = x(i_1)\cdots x(i_d), \qquad i_1,\ldots,i_d \in [p]. \]

In particular, the tensor \(x^{\otimes 2}\) is the same as the matrix \(xx^{\mathsf {T}}\).

The \(\ell ^2\) norm of a tensor X can be defined by regarding X as a vector in \({\mathbb {R}}^{p^d}\), thus
\[ \|X\|_2 = \Big( \sum_{i_1,\ldots,i_d=1}^{p} X(i_1,\ldots,i_d)^2 \Big)^{1/2}. \]

Note that when \(d=2\), the tensor X can be identified as a matrix and \(\Vert X\Vert _{2}\) is the Frobenius norm of X.
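In NumPy, the rank-one tensor \(x^{\otimes d}\) can be built by repeated outer products, and the \(\ell^2\) norm is the Euclidean norm of the flattened array. A short sketch with hypothetical function names; the last line checks the identity \(\|x^{\otimes d}\|_2 = \|x\|_2^d\), which follows by factoring the sum of squares.

```python
from functools import reduce

import numpy as np


def tensor_power(x: np.ndarray, d: int) -> np.ndarray:
    """Rank-one tensor x^{(x)d}: entry (i_1, ..., i_d) equals x[i_1] * ... * x[i_d]."""
    return reduce(np.multiply.outer, [x] * d)


def tensor_l2_norm(t: np.ndarray) -> float:
    """l2 norm of a d-way tensor, viewed as a vector in R^{p^d}."""
    return float(np.linalg.norm(t.ravel()))


x = np.array([1.0, 2.0, 3.0])
t2 = tensor_power(x, 2)
print(np.allclose(t2, np.outer(x, x)))                           # True: x^{(x)2} = x x^T
print(np.isclose(tensor_l2_norm(t2), np.linalg.norm(t2, "fro")))  # True: Frobenius norm
print(np.isclose(tensor_l2_norm(tensor_power(x, 3)), np.linalg.norm(x) ** 3))  # True
```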

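The errors of the marginals (1.1) can be thought of as the coefficients of the error tensor
\[ E = \frac{1}{n}\sum_{k=1}^{n} x_k^{\otimes d} - \frac{1}{m}\sum_{k=1}^{m} y_k^{\otimes d}. \tag{2.1} \]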

A tensor \(X \in {\mathbb {R}}^{p \times \cdots \times p}\) is symmetric if the values of its entries are independent of the permutation of the indices, i.e., if
\[ X(i_{\pi(1)},\ldots,i_{\pi(d)}) = X(i_1,\ldots,i_d) \quad \text{for all } i_1,\ldots,i_d \in [p] \]
for any permutation \(\pi \) of [d]. It often makes sense to count each distinct entry of a symmetric tensor once instead of d! times. To make this formal, we may consider the restriction operator \(P_{\mathrm {sym}}\) that preserves the \(\left( {\begin{array}{c}p\\ d\end{array}}\right) \) entries whose indices satisfy \(1 \le i_1< \cdots < i_d \le p\), and zeroes out all other entries. Thus,
\[ \|P_{\mathrm{sym}} X\|_2^2 = \sum_{1 \le i_1 < \cdots < i_d \le p} X(i_1,\ldots,i_d)^2. \]

Therefore, the goal we stated in (1.2) can be restated as follows: for the error tensor E in (2.1), we would like to bound the quantity
\[ \mathbb{E}\, \binom{p}{d}^{-1} \|P_{\mathrm{sym}} E\|_2^2. \]

The operator \(P_\mathrm {sym}\) is related to another restriction operator \(P_\mathrm {off}\), which retains the \(\left( {\begin{array}{c}p\\ d\end{array}}\right) d!\) off-diagonal entries, i.e., those for which all indices \(i_1, \ldots , i_d\) are distinct, and zeroes out all other entries. Thus,
\[ \|P_{\mathrm{sym}} X\|_2^2 = \frac{1}{d!}\, \|P_{\mathrm{off}} X\|_2^2 \tag{2.3} \]
for every symmetric tensor X.

Lemma 2.1

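If \(p \ge 2d\), we have
\[ \binom{p}{d}^{-1} \|P_{\mathrm{sym}} X\|_2^2 \le \Big(\frac{2}{p}\Big)^{d} \|P_{\mathrm{off}} X\|_2^2 \le \Big(\frac{2}{p}\Big)^{d} \|X\|_2^2 \tag{2.4} \]
for every symmetric d-way tensor X.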

Proof

According to (2.3), the left hand side of (2.4) equals \(\big ( \left( {\begin{array}{c}p\\ d\end{array}}\right) d! \big )^{-1} \left\| P_\mathrm {off}X\right\| _2^2\), and \(\left( {\begin{array}{c}p\\ d\end{array}}\right) d! = p(p-1) \cdots (p-d+1) \ge (p/2)^d\) if \(p \ge 2d\). This yields the desired bound. \(\square \)
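The restriction operators can be checked numerically on a small symmetric tensor. This sketch (hypothetical function names, 0-based indices) symmetrizes a random tensor by averaging over all axis permutations, then verifies the identity (2.3) and the bound of Lemma 2.1 in the form \(\binom{p}{d}^{-1}\|P_{\mathrm{sym}}X\|_2^2 \le (2/p)^d \|P_{\mathrm{off}}X\|_2^2 \le (2/p)^d \|X\|_2^2\) for \(p = 6\), \(d = 3\).

```python
import itertools
import math

import numpy as np


def symmetrize(t: np.ndarray) -> np.ndarray:
    """Average a d-way tensor over all d! permutations of its axes."""
    perms = list(itertools.permutations(range(t.ndim)))
    return sum(np.transpose(t, perm) for perm in perms) / len(perms)


def restrict(t: np.ndarray, index_sets) -> np.ndarray:
    """Keep the entries whose index tuples are in index_sets; zero out the rest."""
    out = np.zeros_like(t)
    for idx in index_sets:
        out[idx] = t[idx]
    return out


def p_sym(t: np.ndarray) -> np.ndarray:
    """P_sym: keep entries with i_1 < ... < i_d."""
    return restrict(t, itertools.combinations(range(t.shape[0]), t.ndim))


def p_off(t: np.ndarray) -> np.ndarray:
    """P_off: keep entries whose indices are pairwise distinct."""
    return restrict(t, itertools.permutations(range(t.shape[0]), t.ndim))


def sq_norm(t: np.ndarray) -> float:
    return float(np.sum(t ** 2))


p, d = 6, 3                                     # Lemma 2.1 needs p >= 2d
x = symmetrize(np.random.default_rng(0).normal(size=(p,) * d))
lhs = sq_norm(p_sym(x)) / math.comb(p, d)
print(np.isclose(sq_norm(p_sym(x)), sq_norm(p_off(x)) / math.factorial(d)))  # True, (2.3)
print(lhs <= (2 / p) ** d * sq_norm(p_off(x)) <= (2 / p) ** d * sq_norm(x))   # True
```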

2.3 Differential Privacy

We briefly review some basic facts about differential privacy. The interested reader may consult [10] for details.

Definition 2.2

(Differential Privacy [10]) A randomized function \({{\mathcal {M}}}\) gives \(\varepsilon \)-differential privacy if for all databases \(D_1\) and \(D_2\) differing on at most one element, and all measurable \(S \subseteq {{\,\mathrm{range}\,}}({{{\mathcal {M}}}})\),
\[ \mathbb{P}\big[{\mathcal M}(D_1) \in S\big] \le e^{\varepsilon}\, \mathbb{P}\big[{\mathcal M}(D_2) \in S\big], \]

where the probability is with respect to the randomness of \({{\mathcal {M}}}\).

Almost all existing mechanisms to implement differential privacy are based on adding noise to the data or the data queries, e.g. via the Laplacian mechanism [5]. Recall that a random variable has the (centered) Laplacian distribution \({{\,\mathrm{Lap}\,}}(\sigma )\) if its probability density function at x is \(\frac{1}{2\sigma }\exp (-|x|/\sigma )\).

Definition 2.3

For \(f: {{{\mathcal {D}}}} \rightarrow {\mathbb {R}}^d\), the \(L_1\)-sensitivity is
\[ \Delta f = \max \|f(D_1) - f(D_2)\|_1, \]
where the maximum is taken over all \(D_1, D_2\) differing in at most one element.

Lemma 2.4

(Laplace mechanism, Theorem 2 in [5]) For any \(f: {{{\mathcal {D}}}} \rightarrow {\mathbb {R}}^d\), the addition of \({{\,\mathrm{Lap}\,}}(\sigma )^{d}\) noise preserves \((\Delta f/\sigma )\)-differential privacy.
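A minimal sketch of the Laplace mechanism of Lemma 2.4: to reach \(\varepsilon\)-differential privacy, choose \(\sigma = \Delta f/\varepsilon\) and add independent \({\mathrm{Lap}}(\sigma)\) noise to each coordinate. The function name is hypothetical. The example releases the mean of Boolean records: changing one record moves each coordinate of \(f(D) = \frac{1}{n}\sum_i x_i\) by at most 1/n, so \(\Delta f = p/n\).

```python
import numpy as np


def laplace_mechanism(value: np.ndarray, l1_sensitivity: float, epsilon: float,
                      rng: np.random.Generator) -> np.ndarray:
    """Add i.i.d. Lap(sigma) noise with sigma = l1_sensitivity / epsilon to each coordinate."""
    sigma = l1_sensitivity / epsilon
    return value + rng.laplace(loc=0.0, scale=sigma, size=np.shape(value))


# Example: releasing the mean of n Boolean records in {0, 1}^p with L1-sensitivity p/n.
rng = np.random.default_rng(0)
n, p, epsilon = 1000, 5, 0.5
data = rng.integers(0, 2, size=(n, p))
private_mean = laplace_mechanism(data.mean(axis=0), l1_sensitivity=p / n,
                                 epsilon=epsilon, rng=rng)
print(private_mean.round(3))
```

Here \(\sigma = (p/n)/\varepsilon = 0.01\), so the released mean is typically within a few hundredths of the true mean in each coordinate.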

The proof of the following lemma, which is similar in spirit to the Composition Theorem 3.14 in [10], is left to the reader.

Lemma 2.5

Suppose that an algorithm \({{{\mathcal {A}}}}_1: {\mathcal {D}} \rightarrow {{{\mathcal {Y}}}}_1\) is \(\varepsilon _1\)-differentially private and an algorithm \({{{\mathcal {A}}}}_2: {\mathcal {D}}\times {{{\mathcal {Y}}}}_1 \rightarrow {{{\mathcal {Y}}}}_2\) is \(\varepsilon _2\)-differentially private in the first component in \({\mathcal {D}}\). Assume that \({{{\mathcal {A}}}}_1\) and \({{{\mathcal {A}}}}_2\) are independent. Then, the composition algorithm \({{{\mathcal {A}}}} = {{{\mathcal {A}}}}_2(\,\cdot \,, {{{\mathcal {A}}}}_1(\cdot ))\) is \((\varepsilon _1+\varepsilon _2)\)-differentially private.

Remark 2.6

As outlined in [5], any function applied to private data, without accessing the raw data, is privacy-preserving.

The following observation is a special case of Lemma 2.5.

Lemma 2.7

Suppose the data \(Y_1\) and \(Y_2\) are independent with respect to the randomness of the privacy-generating algorithm and that each is \(\varepsilon \)-differentially private. Then \((Y_1,Y_2)\) is \(2\varepsilon \)-differentially private.

3 Covariance Loss

The goal of this section is to prove Theorem 1.2 and its higher-order version, Corollary 3.12. We establish the main part of Theorem 1.2 in Sects. 3.1–3.4, the “moreover” part (equipartition) in Sects. 3.5–3.6, and the tensorization principle (1.5) in Sect. 3.7, which immediately yields Corollary 3.12. Finally, we show optimality in Sect. 3.8.

3.1 Law of Total Covariance

Throughout this section, X is an arbitrary random vector in \({\mathbb {R}}^p\), \({\mathcal {F}}\) is an arbitrary sigma-algebra and \(Y = {{\,\mathrm{{\mathbb {E}}}\,}}[X|{\mathcal {F}}]\) is the conditional expectation.

Lemma 3.1

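(Law of total covariance) We have

$$\begin{aligned} \Sigma _X-\Sigma _Y = {{\,\mathrm{{\mathbb {E}}}\,}}XX^{\mathsf {T}}- {{\,\mathrm{{\mathbb {E}}}\,}}YY^{\mathsf {T}}= {{\,\mathrm{{\mathbb {E}}}\,}}(X-Y)(X-Y)^{\mathsf {T}}. \end{aligned}$$

In particular, \(\Sigma _X \succeq \Sigma _Y\).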

Proof

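The covariance matrix can be expressed as

$$\begin{aligned} \Sigma _X = {{\,\mathrm{{\mathbb {E}}}\,}}XX^{\mathsf {T}}- ({{\,\mathrm{{\mathbb {E}}}\,}}X)({{\,\mathrm{{\mathbb {E}}}\,}}X)^{\mathsf {T}}, \end{aligned}$$

and similarly for Y. Since \({{\,\mathrm{{\mathbb {E}}}\,}}X = {{\,\mathrm{{\mathbb {E}}}\,}}Y\) by the law of total expectation, we have \(\Sigma _X-\Sigma _Y = {{\,\mathrm{{\mathbb {E}}}\,}}XX^{\mathsf {T}}- {{\,\mathrm{{\mathbb {E}}}\,}}YY^{\mathsf {T}}\), proving the first equality in the lemma. Next, one can check that

$$\begin{aligned} {{\,\mathrm{{\mathbb {E}}}\,}}[XX^{\mathsf {T}}|{\mathcal {F}}] - YY^{\mathsf {T}} = {{\,\mathrm{{\mathbb {E}}}\,}}[(X-Y)(X-Y)^{\mathsf {T}}|{\mathcal {F}}] \end{aligned}$$

by expanding the product in the right hand side and recalling that Y is \({\mathcal {F}}\)-measurable. Finally, take expectation on both sides to complete the proof. \(\square \)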
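For a discrete uniform distribution, conditioning on a partition replaces each point by the mean of its block, so Lemma 3.1 can be checked numerically. The sketch below assumes NumPy; the data, the random partition, and the helper names `conditional_expectation` and `covariance` are hypothetical. It verifies \(\Sigma _X-\Sigma _Y = {{\,\mathrm{{\mathbb {E}}}\,}}XX^{\mathsf {T}}- {{\,\mathrm{{\mathbb {E}}}\,}}YY^{\mathsf {T}}\), the identity with \({{\,\mathrm{{\mathbb {E}}}\,}}(X-Y)(X-Y)^{\mathsf {T}}\) obtained from the expansion in the proof, and \(\Sigma _X \succeq \Sigma _Y\).

```python
import numpy as np

# Illustrative sketch; data, partition, and helper names are hypothetical.
def conditional_expectation(x, labels):
    """E[X | F] for X uniform on the rows of x and F generated by the partition
    {i : labels[i] == c}: each row is replaced by the mean of its block."""
    y = np.empty_like(x, dtype=float)
    for c in np.unique(labels):
        mask = labels == c
        y[mask] = x[mask].mean(axis=0)
    return y

def covariance(z):
    # Population covariance of the uniform distribution on the rows of z.
    zc = z - z.mean(axis=0)
    return zc.T @ zc / len(z)

rng = np.random.default_rng(1)
x = rng.normal(size=(200, 4))
labels = rng.integers(0, 5, size=200)       # a random partition into <= 5 blocks
y = conditional_expectation(x, labels)

loss = covariance(x) - covariance(y)                     # Sigma_X - Sigma_Y
second_moment_gap = x.T @ x / len(x) - y.T @ y / len(y)  # E XX^T - E YY^T
residual = (x - y).T @ (x - y) / len(x)                  # E (X-Y)(X-Y)^T
```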

Lemma 3.2

(Decomposing the covariance loss) For any orthogonal projection P in \({\mathbb {R}}^p\) we have

Proof

By the law of total covariance (Lemma 3.1), the matrix

is positive semidefinite. Then, we can use the following inequality, which holds for any positive semidefinite matrix A (see, e.g., [3, p. 157]):

Let us bound the two terms in the right hand side. Jensen’s inequality gives

Next, since the matrix \({{\,\mathrm{{\mathbb {E}}}\,}}YY^{\mathsf {T}}\) is positive-semidefinite, we have \(0 \preceq A \preceq {{\,\mathrm{{\mathbb {E}}}\,}}XX^{\mathsf {T}}\) in the semidefinite order, so \(0 \preceq (I-P)A(I-P) \preceq (I-P)({{\,\mathrm{{\mathbb {E}}}\,}}XX^{\mathsf {T}})(I-P)\), which yields

Substitute the previous two bounds into (3.1) to complete the proof. \(\square \)

3.2 Spectral Projection

The two terms in Lemma 3.2 will be bounded separately. Let us start with the second term. It simplifies if P is a spectral projection:

Lemma 3.3

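(Spectral projection) Assume that \(\left\| X\right\| _2 \le 1\) a.s. Let \(t\in {\mathbb {N}}\cup \{0\}\). Let P be the orthogonal projection in \({\mathbb {R}}^p\) onto the t leading eigenvectors of the second moment matrix \(S = {{\,\mathrm{{\mathbb {E}}}\,}}XX^{\mathsf {T}}\). Then,

$$\begin{aligned} \left\| (I-P)S(I-P)\right\| _2 \le \frac{1}{\sqrt{t}}. \end{aligned}$$

Proof

We have

$$\begin{aligned} \left\| (I-P)S(I-P)\right\| _2^2 = \sum _{i>t} \lambda _i(S)^2, \end{aligned}$$

where \(\lambda _i(S)\) denote the eigenvalues of S arranged in non-increasing order. Using linearity of expectation and trace, we get

$$\begin{aligned} \sum _{i=1}^p \lambda _i(S) = {{\,\mathrm{tr}\,}}S = {{\,\mathrm{{\mathbb {E}}}\,}}{{\,\mathrm{tr}\,}}(XX^{\mathsf {T}}) = {{\,\mathrm{{\mathbb {E}}}\,}}\left\| X\right\| _2^2 \le 1. \end{aligned}$$

It follows that at most t eigenvalues of S can be larger than 1/t. Since the eigenvalues are arranged in non-increasing order, this yields \(\lambda _i(S) \le 1/t\) for all \(i > t\). Combining this with the bound above, we conclude that

$$\begin{aligned} \sum _{i>t} \lambda _i(S)^2 \le \frac{1}{t} \sum _{i>t} \lambda _i(S) \le \frac{1}{t}. \end{aligned}$$

Substitute this bound into (3.2) to complete the proof. \(\square \)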
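The eigenvalue argument in the proof of Lemma 3.3 can be checked on random data. This is a sketch assuming NumPy, with hypothetical sizes n, p, t and synthetic rows rescaled to norm at most 1; it confirms that \({{\,\mathrm{tr}\,}}S \le 1\), that at most t eigenvalues exceed 1/t, and that the Frobenius norm of \((I-P)S(I-P)\) is at most \(1/\sqrt{t}\).

```python
import numpy as np

# Illustrative sketch; sizes and data are hypothetical.
rng = np.random.default_rng(2)
n, p, t = 500, 30, 5
x = rng.normal(size=(n, p))
# Rescale rows so that ||x_i||_2 <= 1, i.e. ||X||_2 <= 1 a.s. for X uniform on rows.
x /= np.maximum(1.0, np.linalg.norm(x, axis=1, keepdims=True))

s = x.T @ x / n                          # second moment matrix S = E XX^T
eigvals, eigvecs = np.linalg.eigh(s)     # eigh returns ascending eigenvalues
eigvals, eigvecs = eigvals[::-1], eigvecs[:, ::-1]   # non-increasing order

proj = eigvecs[:, :t] @ eigvecs[:, :t].T      # P: projection onto t leading eigenvectors
q = np.eye(p) - proj                          # I - P
tail_norm = np.linalg.norm(q @ s @ q, "fro")  # Frobenius norm of (I-P) S (I-P)
```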

3.3 Nearest-Point Partition

Next, we bound the first term in Lemma 3.2. This is the only step that does not hold for an arbitrary sigma-algebra; it requires a specific one, which we generate by a nearest-point partition.

Definition 3.4

(Nearest-point partition) Let X be a random vector taking values in \({\mathbb {R}}^p\), defined on a probability space \((\Omega , \Sigma , {\mathbb {P}})\). A nearest-point partition \(\{F_1,\ldots ,F_s\}\) of \(\Omega \) with respect to a list of points \(\nu _1,\ldots ,\nu _s \in {\mathbb {R}}^p\) is a partition \(\{F_1,\ldots ,F_s\}\) of \(\Omega \) such that

$$\begin{aligned} \left\| X(\omega )-\nu _j\right\| _2 = \min _{1\le i\le s} \left\| X(\omega )-\nu _i\right\| _2 \end{aligned}$$

for all \(\omega \in F_j\) and \(1\le j\le s\). (Some of the \(F_j\) could be empty.)

Remark 3.5

A nearest-point partition can be constructed as follows: For each \(\omega \in \Omega \), choose a point \(\nu _j\) nearest to \(X(\omega )\) in the \(\ell ^2\) metric and put \(\omega \) into \(F_j\). Break any ties arbitrarily as long as the \(F_j\) are measurable.
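The construction in Remark 3.5 can be sketched for finitely many sample points as follows, assuming NumPy. The function name and the toy points are hypothetical; ties are broken toward the smallest index, which is one admissible rule. In Lemma 3.6 the same assignment would be applied to the projected points \(PX(\omega )\).

```python
import numpy as np

# Illustrative sketch; function name and data are hypothetical.
def nearest_point_partition(points, centers):
    """Label each point with the index of a nearest center in the l2 metric.

    np.argmin returns the first minimizer, so ties go to the smallest index,
    one admissible tie-breaking rule in the sense of Remark 3.5.
    """
    # Pairwise squared distances, shape (n_points, n_centers).
    d2 = ((points[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)
    return np.argmin(d2, axis=1)

points = np.array([[0.0, 0.0], [0.9, 0.1], [0.5, 0.0], [-0.2, 0.8]])
centers = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
labels = nearest_point_partition(points, centers)   # the third point is a tie
```

Here `labels` is `[0, 1, 0, 2]`: the point \((0.5, 0)\) is equidistant from the first two centers and is assigned to the first.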

Lemma 3.6

Let X be a random vector in \({\mathbb {R}}^p\) such that \(\left\| X\right\| _2 \le 1\) a.s. Let P be an orthogonal projection on \({\mathbb {R}}^p\). Let \(\nu _1,\ldots ,\nu _s \in {\mathbb {R}}^p\) be an \(\alpha \)-covering of the unit Euclidean ball of \({{\,\mathrm{ran}\,}}(P)\). Let \(\Omega =F_1 \cup \cdots \cup F_s\) be a nearest-point partition for PX with respect to \(\nu _1,\ldots ,\nu _s\). Let \({\mathcal {F}}= \sigma (F_1,\ldots ,F_s)\) be the sigma-algebra generated by the partition. Then, the conditional expectation \(Y = {{\,\mathrm{{\mathbb {E}}}\,}}[X|{\mathcal {F}}]\) satisfies

Proof

If \(\omega \in F_j\) then, by the definition of the nearest-point partition, \(\Vert \nu _j-PX(\omega )\Vert _2=\min _{1\le i\le s}\Vert \nu _i-PX(\omega )\Vert _2\). So by the definition of the \(\alpha \)-covering, we have \(\left\| PX(\omega )-\nu _j\right\| _2 \le \alpha \). Hence, by the triangle inequality we have

Furthermore, by definition of Y, we have

Thus, for such \(\omega \) we have

Applying the projection P, taking norms on both sides, and then using Jensen’s inequality, we conclude that

where in the last step we used (3.4). Since the bound holds for each \(\omega \in F_j\) and the events \(F_j\) form a partition of \(\Omega \), it holds for all \(\omega \in \Omega \). The proof is complete. \(\square \)

3.4 Proof of the Main Part of Theorem 1.2

The following simple (and possibly known) observation will come in handy to bound the cardinality of an \(\alpha \)-covering that we will need in the proof of Theorem 1.2.

Proposition 3.7

(Number of lattice points in a ball) For all \(\alpha >0\) and \(t\in {\mathbb {N}}\),

In particular, for any \(\alpha \in (0,1)\), it follows that

Proof

The open cubes of side length \(\alpha /\sqrt{t}\) that are centered at the points of the set \({\mathcal {N}}:=B_2^t \cap \frac{\alpha }{\sqrt{t}} {\mathbb {Z}}^t\) are all disjoint. Thus, the total volume of these cubes equals \(|{\mathcal {N}}| (\alpha /\sqrt{t})^t\).

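On the other hand, since each such cube is contained in a ball of radius \(\alpha /2\) centered at some point of \({\mathcal {N}}\), the union of these cubes is contained in the ball \((1+\alpha /2) B_2^t\). So, comparing the volumes, we obtain

$$\begin{aligned} |{\mathcal {N}}| \Big (\frac{\alpha }{\sqrt{t}}\Big )^t \le {{\,\mathrm{vol}\,}}\big ((1+\alpha /2) B_2^t\big ) = \Big (1+\frac{\alpha }{2}\Big )^t {{\,\mathrm{vol}\,}}(B_2^t), \end{aligned}$$

or

$$\begin{aligned} |{\mathcal {N}}| \le \Big (\frac{(1+\alpha /2)\sqrt{t}}{\alpha }\Big )^t {{\,\mathrm{vol}\,}}(B_2^t). \end{aligned}$$

Now, it is well known that [9]

$$\begin{aligned} {{\,\mathrm{vol}\,}}(B_2^t) = \frac{\pi ^{t/2}}{\Gamma (t/2+1)}. \end{aligned}$$

Using Stirling’s formula we have

$$\begin{aligned} \Gamma (t/2+1) \ge \Big (\frac{t}{2e}\Big )^{t/2}. \end{aligned}$$

This gives

$$\begin{aligned} {{\,\mathrm{vol}\,}}(B_2^t) \le \Big (\frac{2\pi e}{t}\Big )^{t/2}. \end{aligned}$$

Substitute this into the bound on \(|{\mathcal {N}}|\) above to complete the proof. \(\square \)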

It follows now from Proposition 3.7 that for every \(\alpha \in (0,1)\), there exists an \(\alpha \)-covering in the unit Euclidean ball of dimension t of cardinality at most \((7/\alpha )^t\).
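To get a feel for the bound \((7/\alpha )^t\), one can count the lattice points \(B_2^t \cap \frac{\alpha }{\sqrt{t}}{\mathbb {Z}}^t\) by brute force for tiny t. This pure-Python sketch is only an illustration (the function name is hypothetical, and the enumeration is exponential in t).

```python
import itertools
import math

# Illustrative sketch; function name and test cases are hypothetical.
def lattice_points_in_ball(t, alpha):
    """Count the points of (alpha / sqrt(t)) Z^t that lie in the unit ball B_2^t."""
    h = alpha / math.sqrt(t)      # lattice spacing
    m = math.floor(1.0 / h)       # each integer coordinate satisfies |c| * h <= 1
    count = 0
    for z in itertools.product(range(-m, m + 1), repeat=t):
        if h * h * sum(c * c for c in z) <= 1.0:
            count += 1
    return count

# Compare exact counts with the bound (7 / alpha)^t for a few small cases.
results = [
    (t, alpha, lattice_points_in_ball(t, alpha), (7 / alpha) ** t)
    for t, alpha in [(1, 0.5), (2, 0.5), (3, 0.7), (4, 0.9)]
]
```

For t = 1 and \(\alpha = 1/2\) the lattice is \(\frac{1}{2}{\mathbb {Z}}\), which has 5 points in \([-1,1]\), well below the bound 14.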

Fix an integer \(k \ge 3\) and choose

The choice of t is made so that we can find an \(\alpha \)-covering of the unit Euclidean ball of \({{\,\mathrm{ran}\,}}(P)\) of cardinality at most \((7/\alpha )^t \le k\).

We decompose the covariance loss \(\Sigma _{X}-\Sigma _{Y}\) in Lemma 3.1 into two terms as in Lemma 3.2 and bound the two terms as in Lemmas 3.3 and 3.6. This way we obtain

where the last bound follows from our choice of \(\alpha \) and t. If \(t=0\) then \(k\le C\), for some universal constant \(C>0\), so \(\Vert \Sigma _X-\Sigma _Y\Vert _{2}\) is at most O(1) and \(\sqrt{\log \log k/\log k}=O(1)\). The main part of Theorem 1.2 is proved. \(\square \)

3.5 Monotonicity

Next, we prove the “moreover” (equipartition) part of Theorem 1.2. This part is crucial in the application to anonymity, but it can be skipped if the reader is only interested in differential privacy.

Before we proceed, let us first note a simple monotonicity property:

Lemma 3.8

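(Monotonicity) Conditioning on a larger sigma-algebra can only decrease the covariance loss. Specifically, if Z is a random vector in \({\mathbb {R}}^p\) and \({\mathcal {B}}\subset {\mathcal {G}}\) are sigma-algebras, then

$$\begin{aligned} \left\| \Sigma _Z-\Sigma _{{{\,\mathrm{{\mathbb {E}}}\,}}[Z|{\mathcal {G}}]}\right\| _2 \le \left\| \Sigma _Z-\Sigma _{{{\,\mathrm{{\mathbb {E}}}\,}}[Z|{\mathcal {B}}]}\right\| _2. \end{aligned}$$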

Proof

Denoting \(X = {{\,\mathrm{{\mathbb {E}}}\,}}[Z|{\mathcal {G}}]\) and \(Y = {{\,\mathrm{{\mathbb {E}}}\,}}[Z|{\mathcal {B}}]\), we see from the law of total expectation that \(Y = {{\,\mathrm{{\mathbb {E}}}\,}}[X|{\mathcal {B}}]\). The law of total covariance (Lemma 3.1) then yields \(\Sigma _Z \succeq \Sigma _X \succeq \Sigma _Y\), which we can rewrite as \(0 \preceq \Sigma _Z-\Sigma _X \preceq \Sigma _Z-\Sigma _Y\). From this relation, it follows that \(\left\| \Sigma _Z-\Sigma _X\right\| _2 \le \left\| \Sigma _Z-\Sigma _Y\right\| _2\), as claimed. \(\square \)

Passing to a smaller sigma-algebra may in general increase the covariance loss. The additional covariance loss can be bounded as follows:

Lemma 3.9

(Merger) Let Z be a random vector in \({\mathbb {R}}^p\) such that \(\left\| Z\right\| _2 \le 1\) a.s. If a sigma-algebra is generated by a partition, merging elements of the partition may increase the covariance loss by at most the total probability of the merged sets. Specifically, if \({\mathcal {G}}= \sigma (G_1,\ldots ,G_m)\) and \({\mathcal {B}}= \sigma (G_1 \cup \cdots \cup G_r, G_{r+1},\ldots ,G_m)\), then the random vectors \(X = {{\,\mathrm{{\mathbb {E}}}\,}}[Z|{\mathcal {G}}]\) and \(Y = {{\,\mathrm{{\mathbb {E}}}\,}}[Z|{\mathcal {B}}]\) satisfy

$$\begin{aligned} \left\| \Sigma _Z-\Sigma _X\right\| _2 \le \left\| \Sigma _Z-\Sigma _Y\right\| _2 \le \left\| \Sigma _Z-\Sigma _X\right\| _2 + {\mathbb {P}}(G_1 \cup \cdots \cup G_r). \end{aligned}$$

Proof

The lower bound follows from monotonicity (Lemma 3.8). To prove the upper bound, we have

where the first bound follows by triangle inequality, and the second from Lemmas 3.1 and 3.2 for \(P=I\).

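Denote by \({{\,\mathrm{{\mathbb {E}}}\,}}_G\) the conditional expectation on the set \(G = G_1 \cup \cdots \cup G_r\), i.e., \({{\,\mathrm{{\mathbb {E}}}\,}}_G[Z] = {{\,\mathrm{{\mathbb {E}}}\,}}[Z|G] = {\mathbb {P}}(G)^{-1} {{\,\mathrm{{\mathbb {E}}}\,}}[Z {{{\textbf {1}}}}_G]\). Then

$$\begin{aligned} Y = {\left\{ \begin{array}{ll} {{\,\mathrm{{\mathbb {E}}}\,}}_G[X] & \text{on } G,\\ X & \text{on } G^c. \end{array}\right. } \end{aligned}$$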

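Indeed, to check the first case, note that since \({\mathcal {B}}\subset {\mathcal {G}}\), the law of total expectation yields \(Y = {{\,\mathrm{{\mathbb {E}}}\,}}[X|{\mathcal {B}}]\); then the case follows since \(G \in {\mathcal {B}}\). To check the second case, note that the sets \(G_{r+1}, \ldots ,G_m\) belong to both sigma-algebras \({\mathcal {G}}\) and \({\mathcal {B}}\), so the conditional expectations X and Y must agree on each of these sets and thus on their union \(G^c\). Hence

$$\begin{aligned} {{\,\mathrm{{\mathbb {E}}}\,}}\left\| X-Y\right\| _2^2 = {{\,\mathrm{{\mathbb {E}}}\,}}\big [\left\| X-{{\,\mathrm{{\mathbb {E}}}\,}}_G[X]\right\| _2^2 {{{\textbf {1}}}}_G\big ] \le {{\,\mathrm{{\mathbb {E}}}\,}}\big [\left\| X\right\| _2^2 {{{\textbf {1}}}}_G\big ] \le {\mathbb {P}}(G). \end{aligned}$$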

Here, we bounded the variance by the second moment and used that \(\left\| X\right\| _2 \le 1\) a.s., which follows from the assumption \(\left\| Z\right\| _2 \le 1\) by Jensen’s inequality. Substitute this bound into (3.6) to complete the proof. \(\square \)
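Lemmas 3.8 and 3.9 can be sanity-checked on a discrete example. The sketch below assumes NumPy; the data, the 20-block partition, the choice r = 4, and the helper names are hypothetical. It merges the first r blocks and checks that the covariance loss (in the Frobenius norm) does not decrease and grows by at most the probability of the merged blocks.

```python
import numpy as np

# Illustrative sketch; data, partition, and helper names are hypothetical.
def block_means(z, labels):
    # E[Z | sigma(partition)] for Z uniform on the rows of z.
    out = np.empty_like(z, dtype=float)
    for c in np.unique(labels):
        out[labels == c] = z[labels == c].mean(axis=0)
    return out

def cov(z):
    zc = z - z.mean(axis=0)
    return zc.T @ zc / len(z)

rng = np.random.default_rng(3)
z = rng.normal(size=(400, 6))
z /= np.maximum(1.0, np.linalg.norm(z, axis=1, keepdims=True))   # ||Z||_2 <= 1

fine = rng.integers(0, 20, size=400)    # partition G_1, ..., G_20 (labels 0..19)
r = 4
coarse = np.where(fine < r, 0, fine)    # merge G_1 u ... u G_r into one set
p_merged = np.mean(fine < r)            # P(G_1 u ... u G_r)

loss_fine = np.linalg.norm(cov(z) - cov(block_means(z, fine)), "fro")
loss_coarse = np.linalg.norm(cov(z) - cov(block_means(z, coarse)), "fro")
```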

3.6 Proof of Equipartition (the “Moreover” Part of Theorem 1.2)

Let \(k' = \lfloor \sqrt{k} \rfloor \). Assume that \(k'\ge 3\). (Otherwise \(k<9\), and the result holds trivially for an arbitrary partition into k sets of equal probability.) Applying the first part of Theorem 1.2 for \(k'\) instead of k, we obtain a sigma-algebra \({\mathcal {F}}'\) generated by a partition of the sample space into at most \(k'\) sets \(F_i\) such that

Divide each set \(F_i\) into subsets of probability 1/k each using division with remainder. Thus, we partition each \(F_i\) into a certain number of subsets (possibly none) of probability 1/k each and one residual subset of probability less than 1/k. By the Monotonicity Lemma 3.8, such a refinement cannot increase the covariance loss.

This process results in many good subsets, each of probability 1/k, and at most \(k'\) residual subsets of probability less than 1/k each. Merge all residuals into one new “residual subset.” While a merger may increase the covariance loss, Lemma 3.9 guarantees that the additional loss is bounded by the total probability of the merged sets. Since we chose \(k' = \lfloor \sqrt{k} \rfloor \), the probability of the residual subset is less than \(k' \cdot (1/k) \le 1/\sqrt{k}\). So the additional covariance loss is bounded by \(1/\sqrt{k}\).

Finally, divide the residual subset into further subsets of probability 1/k each. By monotonicity, this refinement cannot increase the covariance loss. At this point, we have partitioned the sample space into subsets of probability 1/k each and one remaining subset of probability less than 1/k. Since the total probability 1 is an integer multiple of 1/k, so is the probability of the remaining subset; being less than 1/k, it must be zero. Hence this subset can be added to any other subset without affecting the covariance loss.

Let us summarize. We partitioned the sample space into k subsets of equal probability such that the covariance loss is bounded by

The proof is complete. \(\square \)
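For a sample space of n atoms of probability 1/n each with k dividing n (the setting of Theorem 4.1), the divide-and-merge procedure above becomes a simple index manipulation. This pure-Python sketch is hypothetical (the function name and input format are not from the paper) and only tracks which atoms go into which set; the covariance-loss bookkeeping is what Lemmas 3.8 and 3.9 provide.

```python
# Illustrative sketch; function name and input format are hypothetical.
def equipartition(blocks, n, k):
    """Divide-and-merge on n atoms of probability 1/n each.

    blocks: list of lists of atom indices forming a partition of range(n).
    Assumes k divides n, so probability 1/k corresponds to n // k atoms.
    """
    if n % k != 0:
        raise ValueError("this sketch assumes k divides n")
    size = n // k
    parts, residual = [], []
    for block in blocks:
        q = len(block) // size
        # Division with remainder: q chunks of probability 1/k each ...
        parts.extend(block[j * size:(j + 1) * size] for j in range(q))
        # ... plus one residual chunk of probability < 1/k (Lemma 3.8: no loss increase).
        residual.extend(block[q * size:])
    # Merge all residuals (Lemma 3.9 bounds the extra loss), then divide again.
    # The merged size n - len(parts) * size is a multiple of size: nothing is left over.
    parts.extend(residual[j:j + size] for j in range(0, len(residual), size))
    return parts

parts = equipartition([[0, 1, 2], [3, 4, 5, 6, 7], [8, 9, 10, 11]], n=12, k=4)
```

The residual atoms 6, 7 and 11 from the last two blocks are merged into one new set, so `parts` consists of four sets of three atoms each.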

3.7 Higher Moments: Tensorization

Recall that Theorem 1.2 provides a bound on the covariance loss (see Footnote 4)

Perhaps counterintuitively, the bound on the covariance loss can automatically be lifted to higher moments, at the cost of multiplying the error by at most \(4^d\).

Theorem 3.10

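(Tensorization) Let X be a random vector in \({\mathbb {R}}^p\) such that \(\left\| X\right\| _2 \le 1\) a.s., let \({\mathcal {F}}\) be a sigma-algebra, and let \(d \ge 2\) be an integer. Then the conditional expectation \(Y = {{\,\mathrm{{\mathbb {E}}}\,}}[X|{\mathcal {F}}]\) satisfies

$$\begin{aligned} \left\| {{\,\mathrm{{\mathbb {E}}}\,}}X^{\otimes d} - {{\,\mathrm{{\mathbb {E}}}\,}}Y^{\otimes d}\right\| _2 \le 2^{d-2}\,(2^d-d-1) \left\| {{\,\mathrm{{\mathbb {E}}}\,}}X^{\otimes 2} - {{\,\mathrm{{\mathbb {E}}}\,}}Y^{\otimes 2}\right\| _2. \end{aligned}$$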

For the proof, we need an elementary identity:

Lemma 3.11

Let U and V be independent and identically distributed random vectors in \({\mathbb {R}}^p\). Then,

Proof

We have

\(\square \)

Proof of Theorem 3.10

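Step 1: binomial decomposition. Denoting

$$\begin{aligned} X_0 :=Y, \qquad X_1 :=X-Y, \end{aligned}$$

we can represent

$$\begin{aligned} X^{\otimes d} = (X_0+X_1)^{\otimes d} = \sum _{i_1,\ldots ,i_d \in \{0,1\}} X_{i_1} \otimes \cdots \otimes X_{i_d}. \end{aligned}$$

Since \(Y^{\otimes d} = X_0 \otimes \cdots \otimes X_0\), it follows that

$$\begin{aligned} X^{\otimes d} - Y^{\otimes d} = \sum _{(i_1,\ldots ,i_d) \ne (0,\ldots ,0)} X_{i_1} \otimes \cdots \otimes X_{i_d}. \end{aligned}$$

Taking expectation on both sides and using triangle inequality, we obtain

$$\begin{aligned} \left\| {{\,\mathrm{{\mathbb {E}}}\,}}X^{\otimes d} - {{\,\mathrm{{\mathbb {E}}}\,}}Y^{\otimes d}\right\| _2 \le \sum _{(i_1,\ldots ,i_d) \ne (0,\ldots ,0)} \left\| {{\,\mathrm{{\mathbb {E}}}\,}}X_{i_1} \otimes \cdots \otimes X_{i_d}\right\| _2. \end{aligned}$$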

Let us look at each summand on the right hand side separately.

Step 2: Dropping trivial terms. First, let us check that all summands for which \(i_1+\cdots +i_d = 1\) vanish. Indeed, in this case exactly one term in the product \(X_{i_1} \otimes \cdots \otimes X_{i_d}\) is \(X_1\), while all other terms are \(X_0\). Let \({{\,\mathrm{{\mathbb {E}}}\,}}_{\mathcal {F}}\) denote conditional expectation with respect to \({\mathcal {F}}\). Since \({{\,\mathrm{{\mathbb {E}}}\,}}_{\mathcal {F}}X_1 = {{\,\mathrm{{\mathbb {E}}}\,}}_{\mathcal {F}}[X-{{\,\mathrm{{\mathbb {E}}}\,}}_{\mathcal {F}}X] = 0\) and \(X_0=Y={{\,\mathrm{{\mathbb {E}}}\,}}_{\mathcal {F}}X\) is \({\mathcal {F}}\)-measurable, it follows that \({{\,\mathrm{{\mathbb {E}}}\,}}_{\mathcal {F}}X_{i_1} \otimes \cdots \otimes X_{i_d} = 0\). Thus, \({{\,\mathrm{{\mathbb {E}}}\,}}X_{i_1} \otimes \cdots \otimes X_{i_d} = 0\) as we claimed.

Step 3: Bounding non-trivial terms. Next, we bound the terms for which \(r = i_1+\cdots +i_d \ge 2\). Let \((X'_0,X'_1)\) be an independent copy of the pair of random vectors \((X_0,X_1)\). Then, \({{\,\mathrm{{\mathbb {E}}}\,}}X_{i_1} \otimes \cdots \otimes X_{i_d} = {{\,\mathrm{{\mathbb {E}}}\,}}X'_{i_1} \otimes \cdots \otimes X'_{i_d}\), so

By assumption, we have \(\left\| X\right\| _2 \le 1\) a.s., which implies by Jensen’s inequality that \(\left\| X_0\right\| _2 = \left\| {{\,\mathrm{{\mathbb {E}}}\,}}_{\mathcal {F}}X\right\| _2 \le {{\,\mathrm{{\mathbb {E}}}\,}}_{\mathcal {F}}\left\| X\right\| _2 \le 1\) a.s. These bounds imply by the triangle inequality that \(\left\| X_1\right\| _2 = \left\| X-X_0\right\| _2 \le 2\) a.s. By identical distribution, we also have \(\left\| X'_0\right\| _2 \le 1\) and \(\left\| X'_1\right\| _2 \le 2\) a.s. Hence,

Returning to the term we need to bound, this yields

Step 4: Conclusion. Let us summarize. The sum on the right side of (3.8) has \(2^d-1\) terms. The d terms corresponding to \(i_1+\cdots +i_d=1\) vanish. The remaining \(2^d-d-1\) terms are bounded by \(K :=2^{d-2} \left\| {{\,\mathrm{{\mathbb {E}}}\,}}X^{\otimes 2} - {{\,\mathrm{{\mathbb {E}}}\,}}Y^{\otimes 2}\right\| _2\) each. Hence, the entire sum is bounded by \((2^d-d-1) K\), as claimed. The theorem is proved. \(\square \)
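The bound from Step 4, namely \(\left\| {{\,\mathrm{{\mathbb {E}}}\,}}X^{\otimes d}-{{\,\mathrm{{\mathbb {E}}}\,}}Y^{\otimes d}\right\| _2 \le (2^d-d-1)\,2^{d-2}\left\| {{\,\mathrm{{\mathbb {E}}}\,}}X^{\otimes 2}-{{\,\mathrm{{\mathbb {E}}}\,}}Y^{\otimes 2}\right\| _2\), can be checked numerically for small d. This sketch assumes NumPy; the data, the partition, and the helper names are hypothetical, and tensors are stored as dense \(p^d\) arrays, so only small p and d are practical.

```python
import numpy as np

# Illustrative sketch; data, partition, and helper names are hypothetical.
def tensor_moment(z, d):
    """E Z^{(x)d} for Z uniform on the rows of z, returned as a p^d array."""
    acc = np.zeros((z.shape[1],) * d)
    for row in z:
        power = row
        for _ in range(d - 1):
            power = np.multiply.outer(power, row)   # row (x) row (x) ... (x) row
        acc += power
    return acc / len(z)

def block_means(z, labels):
    out = np.empty_like(z, dtype=float)
    for c in np.unique(labels):
        out[labels == c] = z[labels == c].mean(axis=0)
    return out

rng = np.random.default_rng(4)
x = rng.normal(size=(120, 4))
x /= np.maximum(1.0, np.linalg.norm(x, axis=1, keepdims=True))   # ||X||_2 <= 1
y = block_means(x, rng.integers(0, 6, size=120))                 # Y = E[X | F]

# np.linalg.norm of a d-dimensional array (ord=None) is the norm of its ravel.
gap2 = np.linalg.norm(tensor_moment(x, 2) - tensor_moment(y, 2))
checks = {}
for d in (3, 4):
    lhs = np.linalg.norm(tensor_moment(x, d) - tensor_moment(y, d))
    rhs = (2 ** d - d - 1) * 2 ** (d - 2) * gap2
    checks[d] = (lhs, rhs)
```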

Combining the Covariance Loss Theorem 1.2 with Theorem 3.10 in view of (3.7), we conclude:

Corollary 3.12

(Tensorization) Let X be a random vector in \({\mathbb {R}}^p\) such that \(\left\| X\right\| _2 \le 1\) a.s. Then, for every \(k \ge 3\), there exists a partition of the sample space into at most k sets such that for the sigma-algebra \({\mathcal {F}}\) generated by this partition, the conditional expectation \(Y = {{\,\mathrm{{\mathbb {E}}}\,}}[X|{\mathcal {F}}]\) satisfies for all \(d\in {\mathbb {N}}\),

Moreover, if the probability space has no atoms, then the partition can be made with exactly k sets, all of which have the same probability 1/k.

Remark 3.13

A similar bound can be deduced for the higher-order version of covariance matrix, \(\Sigma ^{(d)}_X :={{\,\mathrm{{\mathbb {E}}}\,}}(X-{{\,\mathrm{{\mathbb {E}}}\,}}X)^{\otimes d}\). Indeed, applying Theorems 1.2 and 3.10 for \(X-{{\,\mathrm{{\mathbb {E}}}\,}}X\) instead of X (and so for \(Y-{{\,\mathrm{{\mathbb {E}}}\,}}Y\) instead of Y), we conclude that

(The extra \(2^d\) factor appears because from \(\left\| X\right\| _2 \le 1\) we can only conclude that \(\left\| X-{{\,\mathrm{{\mathbb {E}}}\,}}X\right\| _2 \le 2\), so the bound needs to be normalized accordingly.)

3.8 Optimality

The following result shows that the rate in Theorem 1.2 is in general optimal up to a \(\sqrt{\log \log k}\) factor.

Proposition 3.14

(Optimality) Let \(p>16\ln (2k)\). Then, there exists a random vector X in \({\mathbb {R}}^{p}\) such that \(\left\| X\right\| _2 \le 1\) a.s. and for any sigma-algebra \({\mathcal {F}}\) generated by a partition of a sample space into at most k sets, the conditional expectation \(Y = {{\,\mathrm{{\mathbb {E}}}\,}}[X|{\mathcal {F}}]\) satisfies

$$\begin{aligned} \left\| \Sigma _X-\Sigma _Y\right\| _2 \ge \frac{1}{80\sqrt{\ln (2k)}}. \end{aligned}$$

We will make X uniformly distributed on a well-separated subset of the Boolean cube \(p^{-1/2}\{0,1\}^p\) of cardinality \(n=2k\). The following well-known lemma states that such a subset exists:

Lemma 3.15

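(A separated subset) Let \(p>16\ln n\). Then, there exist points \(x_1,\ldots ,x_n \in p^{-1/2}\{0,1\}^p\) such that

$$\begin{aligned} \left\| x_i-x_j\right\| _2 > \frac{1}{2} \quad \text {for all distinct } i,j \in [n]. \end{aligned}$$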

Proof

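Let X and \(X'\) be independent random vectors uniformly distributed on \(\{0,1\}^p\). Then, \(\left\| X-X'\right\| _2^2 = \sum _{r=1}^p (X(r)-X'(r))^2\) is a sum of p i.i.d. Bernoulli random variables with parameter 1/2, so its mean is p/2. Hoeffding’s inequality [14] yields

$$\begin{aligned} {\mathbb {P}}\left\{ \left\| X-X'\right\| _2^2 \le p/4 \right\} \le \exp (-p/8). \end{aligned}$$

Let \(X_1,\ldots ,X_n\) be independent random vectors uniformly distributed on \(\{0,1\}^p\). Applying the above inequality for each pair of them and then taking the union bound, we conclude that

$$\begin{aligned} {\mathbb {P}}\left\{ \exists \, i\ne j: \left\| X_i-X_j\right\| _2^2 \le p/4 \right\} \le \binom{n}{2} e^{-p/8} < 1 \end{aligned}$$

due to the assumption \(p>16\ln n\). Therefore, there exists a realization of these random vectors that satisfies

$$\begin{aligned} \left\| X_i-X_j\right\| _2 > \frac{\sqrt{p}}{2} \quad \text {for all } i\ne j. \end{aligned}$$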

Divide both sides by \(\sqrt{p}\) to complete the proof. \(\square \)
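The probabilistic proof of Lemma 3.15 translates directly into a sample-and-check procedure. This sketch assumes NumPy; the function name, the retry limit, and the choice k = 8 are hypothetical. When \(p>16\ln n\), a single draw fails with probability at most \(\binom{n}{2}e^{-p/8} < 1/2\), so a few retries suffice.

```python
import math
import numpy as np

# Illustrative sketch; function name, retry limit, and parameters are hypothetical.
def separated_boolean_points(n, p, rng, max_tries=100):
    """Draw n points of p^{-1/2} {0,1}^p whose pairwise distances all exceed 1/2.

    If p > 16 ln n, one uniform draw fails with probability at most
    C(n, 2) exp(-p/8) < 1/2 (Hoeffding plus a union bound), so we retry.
    """
    for _ in range(max_tries):
        pts = rng.integers(0, 2, size=(n, p)) / math.sqrt(p)
        diff = pts[:, None, :] - pts[None, :, :]
        dist = np.sqrt((diff ** 2).sum(axis=-1))
        if np.all(dist[~np.eye(n, dtype=bool)] > 0.5):
            return pts
    raise RuntimeError("no separated set found; increase max_tries")

k = 8
n = 2 * k                               # Proposition 3.14 uses n = 2k points
p = math.floor(16 * math.log(n)) + 1    # smallest integer with p > 16 ln n
pts = separated_boolean_points(n, p, np.random.default_rng(7))
```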

We will also need a high-dimensional version of the identity \({{\,\mathrm{Var}\,}}(X) = \frac{1}{2}{{\,\mathrm{{\mathbb {E}}}\,}}(X-X')^{2}\) where X and \(X'\) are independent and identically distributed random variables. The following generalization is straightforward:

Lemma 3.16

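Let X and \(X'\) be independent and identically distributed random vectors taking values in \({\mathbb {R}}^p\). Then,

$$\begin{aligned} \Sigma _X = \frac{1}{2} {{\,\mathrm{{\mathbb {E}}}\,}}(X-X')(X-X')^{\mathsf {T}}. \end{aligned}$$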

Proof of Proposition 3.14

Let \(n=2k\). Consider the sample space [n] equipped with uniform probability and the sigma-algebra that consists of all subsets of [n]. Define the random variable X by

$$\begin{aligned} X(i) = x_i, \quad i \in [n], \end{aligned}$$

where \(\{x_1,\ldots ,x_n\}\) is the (1/2)-separated subset of \(p^{-1/2}\{0,1\}^p\) from Lemma 3.15. Hence, X is uniformly distributed on the set \(\{x_1,\ldots ,x_n\}\).

Now, if \({\mathcal {F}}\) is the sigma-algebra generated by a partition \(\{F_1,\ldots ,F_{k_0}\}\) of [n] with \(k_0\le k\), then

where the random variable \(X_j\) is uniformly distributed on the set \(\{x_i\}_{i \in F_j}\).

where \(X'_j\) is an independent copy of \(X_j\), by Lemma 3.16. Since the \(X_j\) and \(X'_j\) are independent and uniformly distributed on the set of \(|F_j|\) points, \(\left\| X_j-X'_j\right\| _2\) can either be zero (if both random vectors hit the same point, which happens with probability \(1/|F_j|\)) or it is greater than 1/2 by separation. Hence,

Moreover, \({\mathbb {P}}(F_j) = |F_j|/{n}\), so substituting in the bound above yields

where we used that the sets \(F_j\) form a partition of [n] so their cardinalities sum to n, our choice of \(n=2k\) and the fact that \(k_0\le k\).

We proved that

If \(p \le 25\ln n\), this quantity is further bounded below by \(1/(80 \sqrt{\ln n}) = 1/(80 \sqrt{\ln (2k)})\), completing the proof in this range. For larger p, the result follows by appending enough zeros to X and thus embedding it into higher dimension. Such an embedding does not change \(\left\| \Sigma _X-\Sigma _Y\right\| _2\). \(\square \)
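Lemma 3.16, used in the proof above, is easy to confirm numerically for a uniform distribution on finitely many points, where \((X, X')\) ranges over all \(n^2\) equally likely pairs. A minimal sketch, assuming NumPy and hypothetical random data:

```python
import numpy as np

# Illustrative sketch; the data are hypothetical.
rng = np.random.default_rng(5)
pts = rng.normal(size=(50, 3))        # X uniform on these 50 points

# Left side: population covariance of X.
centered = pts - pts.mean(axis=0)
sigma = centered.T @ centered / len(pts)

# Right side: (1/2) E (X - X')(X - X')^T over all n^2 equally likely pairs.
diff = pts[:, None, :] - pts[None, :, :]          # shape (n, n, p)
half_pair = 0.5 * np.einsum("ijk,ijl->kl", diff, diff) / len(pts) ** 2
```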

4 Anonymity

In this section, we use our results on the covariance loss to make anonymous and accurate synthetic data by microaggregation. To this end, we can interpret microaggregation probabilistically as conditional expectation (Sect. 4.1) and deduce a general result on anonymous microaggregation (Theorem 4.1). We then show how to make synthetic data with custom size by bootstrapping (Sect. 4.2) and Boolean synthetic data by randomized rounding (Sect. 4.3).

4.1 Microaggregation as Conditional Expectation

For discrete probability distributions, conditional expectation can be interpreted as microaggregation, or local averaging.

Consider a finite sequence of points \(x_1,\ldots ,x_n \in {\mathbb {R}}^p\), which we can think of as true data. Define the random variable X on the sample space [n] equipped with the uniform probability distribution by setting

$$\begin{aligned} X(i) = x_i, \quad i \in [n]. \end{aligned}$$

Now, if \({\mathcal {F}}= \sigma (I_1,\ldots ,I_k)\) is the sigma-algebra generated by some partition \([n]=I_1 \cup \cdots \cup I_k\), the conditional expectation \(Y = {{\,\mathrm{{\mathbb {E}}}\,}}[X|{\mathcal {F}}]\) must take a constant value on each set \(I_j\), and that value is the average of X on that set. In other words, Y takes values \(y_j\) with probability \(w_j\), where

$$\begin{aligned} y_j = \frac{1}{|I_j|} \sum _{i \in I_j} x_i, \qquad w_j = \frac{|I_j|}{n}, \qquad j=1,\ldots ,k. \end{aligned}$$

The law of total expectation \({{\,\mathrm{{\mathbb {E}}}\,}}X = {{\,\mathrm{{\mathbb {E}}}\,}}Y\) in our case states that

$$\begin{aligned} \frac{1}{n} \sum _{i=1}^n x_i = \sum _{j=1}^k w_j y_j. \end{aligned}$$

Higher moments are handled using Corollary 3.12. This way, we obtain an effective anonymous algorithm that creates synthetic data while accurately preserving most marginals:

Theorem 4.1

(Anonymous microaggregation) Suppose k divides n. There exists a (deterministic) algorithm that takes input data \(x_1,\ldots ,x_n \in {\mathbb {R}}^p\) such that \(\left\| x_i\right\| _2 \le 1\) for all i, and computes a partition \([n]=I_1 \cup \cdots \cup I_k\) with \(|I_j| = n/k\) for all j, such that the microaggregated vectors

$$\begin{aligned} y_j = \frac{k}{n} \sum _{i \in I_j} x_i, \quad j=1,\ldots ,k, \end{aligned}$$

satisfy for all \(d\in {\mathbb {N}}\),

The algorithm runs in time polynomial in p and n and is independent of d.

Proof

Most of the statement follows directly from Corollary 3.12 in light of the discussion above. However, the “moreover” part of Corollary 3.12 requires the probability space to be atomless, while our probability space [n] does have atoms. Nevertheless, if the sample space consists of n atoms of probability 1/n each and k divides n, then the divide-and-merge argument of Sect. 3.6 still works, since every piece it cuts off has probability 1/k, i.e., consists of exactly n/k atoms; so the “moreover” part of Corollary 3.12 also holds in this case. Thus, we obtain (n/k)-anonymity from the microaggregation procedure. It is also clear that the algorithm (which is independent of d) runs in time polynomial in p and n. See the Microaggregation part of Algorithm 1.

\(\square \)
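The local averaging of Sect. 4.1 can be sketched as follows, assuming NumPy. The partition below is an arbitrary, hypothetical equal-size partition chosen by hand; in Theorem 4.1 the partition would instead be computed from the data (spectral projection, nearest-point partition, and divide-and-merge). The sketch checks the law of total expectation \(\frac{1}{n}\sum _i x_i = \sum _j w_j y_j\) and that averaging does not increase norms.

```python
import numpy as np

# Illustrative sketch; the partition and data are hypothetical.
def microaggregate(x, blocks):
    """Local averaging: y_j = mean of x over block I_j, w_j = |I_j| / n."""
    n = len(x)
    y = np.array([x[idx].mean(axis=0) for idx in blocks])
    w = np.array([len(idx) / n for idx in blocks])
    return y, w

rng = np.random.default_rng(6)
x = rng.uniform(-0.5, 0.5, size=(12, 3))
blocks = [np.array([0, 1, 2, 3]), np.array([4, 5, 6, 7]), np.array([8, 9, 10, 11])]
y, w = microaggregate(x, blocks)   # equal blocks of size n/k = 4, so w_j = 1/3
```

Each released \(y_j\) is an average of n/k = 4 records, which is the source of the (n/k)-anonymity in Theorem 4.1.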

Remark 4.2

The requirement that k divides n appearing in Theorem 4.1 as well as in other theorems makes it possible to partition [n] into k sets of exactly the same cardinality. While convenient for proof purposes, this assumption is not strictly necessary. One can drop this assumption and make one set slightly larger than others. The corresponding modifications are left to the reader.

The use of spectral projection in combination with microaggregation has also been proposed in [6], although without any theoretical analysis regarding privacy or utility.

4.2 Synthetic Data with Custom Size: Bootstrapping

A seeming drawback of Theorem 4.1 is that the anonymity strength n/k and the cardinality k of the output data \(y_1,\ldots ,y_k\) are tied to each other. To produce synthetic data of arbitrary size, we can use the classical technique of bootstrapping, which consists of sampling new data \(u_1,\ldots ,u_m\) from the data \(y_1,\ldots ,y_k\) independently and with replacement. The following general lemma establishes the accuracy of resampling:

Lemma 4.3

(Bootstrapping) Let Y be a random vector in \({\mathbb {R}}^p\) such that \(\left\| Y\right\| _2 \le 1\) a.s. Let \(Y_1,\ldots ,Y_m\) be independent copies of Y. Then, for all \(d \in {\mathbb {N}}\) we have

Proof

We have

Using the assumption \(\left\| Y\right\| _2 \le 1\) a.s., we complete the proof. \(\square \)

Going back to the data \(y_1,\ldots ,y_k\) produced by Theorem 4.1, let us consider a random vector Y that takes values \(y_j\) with probability 1/k each. Then obviously \({{\,\mathrm{{\mathbb {E}}}\,}}Y^{\otimes d} = \frac{1}{k} \sum _{j=1}^k y_j^{\otimes d}\). Moreover, the assumption that \(\left\| x_i\right\| _2 \le 1\) for all i implies that \(\left\| y_j\right\| _2 \le 1\) for all j, so we have \(\left\| Y\right\| _2 \le 1\) as required in Bootstrapping Lemma 4.3. Applying this lemma, we get

Combining this with the bound in Theorem 4.1, we obtain:

Theorem 4.4

(Anonymous microaggregation: custom data size) Suppose k divides n. Let \(m\in {\mathbb {N}}\). There exists a randomized (n/k)-anonymous microaggregation algorithm that transforms input data \(x_1,\ldots ,x_n \in B_2^p\) to the output data \(u_1,\ldots , u_m \in B_2^p\) in such a way that for all \(d\in {\mathbb {N}}\),

The algorithm consists of anonymous averaging (described in Theorem 4.1) followed by bootstrapping (described above). It runs in time polynomial in p and n, and is independent of d.
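The bootstrapping step can be sketched as resampling with replacement, assuming NumPy. The stand-in values for \(y_1,\ldots ,y_k\), the sample size m, and the function name `bootstrap` are hypothetical; the sketch compares the empirical second moment of the resampled data with \({{\,\mathrm{{\mathbb {E}}}\,}}Y^{\otimes 2} = \frac{1}{k}\sum _j y_j^{\otimes 2}\), in the spirit of Lemma 4.3.

```python
import numpy as np

# Illustrative sketch; inputs and names are hypothetical.
def bootstrap(y, m, rng):
    """Sample m rows from y independently, uniformly, and with replacement."""
    idx = rng.integers(0, len(y), size=m)
    return y[idx], idx

rng = np.random.default_rng(8)
y = rng.uniform(-0.5, 0.5, size=(4, 3))     # stand-in for microaggregated y_1..y_k
u, idx = bootstrap(y, 10_000, rng)

# Second moments: (1/m) sum u_i u_i^T versus E Y^{(x)2} = (1/k) sum y_j y_j^T.
second_u = u.T @ u / len(u)
second_y = y.T @ y / len(y)
deviation = np.linalg.norm(second_u - second_y)
```

Because every \(u_i\) is one of the \(y_j\), resampling releases nothing beyond the microaggregated data, so it does not weaken anonymity.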

Remark 4.5

(Convexity) Microaggregation respects convexity. If the input data \(x_1,\ldots ,x_n\) lies in some given convex set K, the output data \(u_1,\ldots , u_m\) will lie in K, too. This can be useful in applications where one often needs to preserve some natural constraints on the data, such as positivity.

4.3 Boolean Data: Randomized Rounding

Let us now specialize to Boolean data. Suppose the input data \(x_1,\ldots ,x_n\) is taken from \(\{0,1\}^p\). Since \(\left\| x_i\right\| _2 \le \sqrt{p}\), we can apply Theorem 4.4 to the rescaled vectors \(x_i/\sqrt{p}\) (and undo the rescaling afterwards) to make (n/k)-anonymous synthetic data \(u_1,\ldots ,u_m\) that satisfies

According to Remark 4.5, the output data \(u_1,\ldots ,u_m\) lie in the cube \(K=[0,1]^p\). In order to transform the vectors \(u_i\) into Boolean vectors, i.e., points in \(\{0,1\}^p\), we can apply the known technique of randomized rounding [29]. We define the randomized rounding of a number \(x \in [0,1]\) as a random variable \(r(x) \sim {{\,\mathrm{Ber}\,}}(x)\). Thus, to compute r(x), we flip a coin that comes up heads with probability x and output 1 for a head and 0 for a tail. It is convenient to think of \(r: [0,1] \rightarrow \{0,1\}\) as a random function. The randomized rounding r(x) of a vector \(x \in [0,1]^p\) is obtained by randomized rounding on each of the p coordinates of x independently.

Theorem 4.6

(Anonymous synthetic Boolean data) Suppose k divides n. There exists a randomized (n/k)-anonymous microaggregation algorithm that transforms input data \(x_1,\ldots ,x_n \in \{0,1\}^p\) into output data \(z_1,\ldots ,z_m \in \{0,1\}^p\) in such a way that the error \(E = \frac{1}{n} \sum _{i=1}^n x_i^{\otimes d} - \frac{1}{m} \sum _{i=1}^m z_i^{\otimes d}\) satisfies

for all \(d \le p/2\). The algorithm consists of anonymous averaging and bootstrapping (as in Theorem 4.4) followed by independent randomized rounding of all coordinates of all points. It runs in time polynomial in p, n and linear in m, and is independent of d.

For the convenience of the reader, Algorithm 1 gives a pseudocode description of the algorithm described in Theorem 4.6.

To prove Theorem 4.6, we first note the following (see Footnote 5):

Lemma 4.7

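(Randomized rounding is unbiased) For any \(x \in [0,1]^p\) and \(d \in {\mathbb {N}}\), all off-diagonal entries of the tensors \({{\,\mathrm{{\mathbb {E}}}\,}}r(x)^{\otimes d}\) and \(x^{\otimes d}\) match:

$$\begin{aligned} P_\mathrm {off}\, {{\,\mathrm{{\mathbb {E}}}\,}}r(x)^{\otimes d} = P_\mathrm {off}\, x^{\otimes d}, \end{aligned}$$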

where \(P_\mathrm {off}\) is the orthogonal projection onto the subspace of tensors supported on the off-diagonal entries.

Proof

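For any tuple of distinct indices \(i_1,\ldots ,i_d \in [p]\), the definition of randomized rounding implies that \(r(x)_{i_1}, \ldots , r(x)_{i_d}\) are independent \({{\,\mathrm{Ber}\,}}(x_{i_1}), \ldots , {{\,\mathrm{Ber}\,}}(x_{i_d})\) random variables. Thus,

$$\begin{aligned} {{\,\mathrm{{\mathbb {E}}}\,}}\big [r(x)_{i_1} \cdots r(x)_{i_d}\big ] = {{\,\mathrm{{\mathbb {E}}}\,}}r(x)_{i_1} \cdots {{\,\mathrm{{\mathbb {E}}}\,}}r(x)_{i_d} = x_{i_1} \cdots x_{i_d}, \end{aligned}$$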

completing the proof. \(\square \)
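Randomized rounding and Lemma 4.7 can be illustrated with a short simulation, assuming NumPy. The vector x, the number of draws, and the function name are hypothetical. Off-diagonal products are unbiased, \({{\,\mathrm{{\mathbb {E}}}\,}}r(x)_1 r(x)_2 = x_1x_2\), while diagonal entries are not: \({{\,\mathrm{{\mathbb {E}}}\,}}r(x)_1^2 = x_1 \ne x_1^2\), which is why the lemma only concerns \(P_\mathrm {off}\).

```python
import numpy as np

# Illustrative sketch; inputs and names are hypothetical.
def randomized_rounding(u, rng):
    """Replace each coordinate u_i in [0, 1] by an independent Ber(u_i) bit."""
    u = np.asarray(u, dtype=float)
    # For U ~ Unif[0, 1), P(U < u_i) = u_i, so each output bit is Ber(u_i).
    return (rng.random(u.shape) < u).astype(np.int8)

rng = np.random.default_rng(9)
x = np.array([0.2, 0.7, 0.5])
bits = randomized_rounding(np.tile(x, (200_000, 1)), rng).astype(float)

off_diag = (bits[:, 0] * bits[:, 1]).mean()   # estimates x_1 * x_2 = 0.14
diag = (bits[:, 0] * bits[:, 0]).mean()       # estimates x_1 = 0.2, not x_1^2 = 0.04
```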

Proof of Theorem 4.6

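Condition on the data \(u_1,\ldots ,u_m\) obtained in Theorem 4.4. The output data of our algorithm can be written as \(z_i = r_i(u_i)\), where the index i in \(r_i\) indicates that we perform randomized rounding on each point \(u_i\) independently. Let us bound the error introduced by randomized rounding, which is

$$\begin{aligned} P_\mathrm {off}\Big (\frac{1}{m}\sum _{i=1}^m r_i(u_i)^{\otimes d} - \frac{1}{m}\sum _{i=1}^m u_i^{\otimes d}\Big ) = \frac{1}{m}\sum _{i=1}^m Z_i, \end{aligned}$$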

where \(Z_i :=P_\mathrm {off}\big ( r_i(u_i)^{\otimes d} - u_i^{\otimes d} \big )\) are independent mean zero random variables due to Lemma 4.7. Therefore,

Since the variance is bounded by the second moment, we have

since \(r_i(u_i) \in \{0,1\}^p\). Hence

Lifting the conditional expectation (i.e., taking expectation with respect to \(u_1,\ldots ,u_m\)) and combining this with (4.3) via triangle inequality, we obtain

Finally, we can replace the off-diagonal norm by the symmetric norm using Lemma 2.1. If \(p \ge 2d\), it yields

In view of (2.2), the proof is complete. \(\square \)

5 Differential Privacy

Here, we pass from anonymity to differential privacy by noisy microaggregation. In Sect. 5.1, we construct a “private” version of the PCA projection using repeated applications of the “exponential mechanism” [24]. This “private” projection is needed to make the PCA step in Algorithm 1 in Sect. 4.3 differentially private. In Sects. 5.2–5.6, we show that the microaggregation is sufficiently stable with respect to additive noise, as long as we damp small blocks \(F_j\) (Sect. 5.4) and project the weights \(w_j\) and the vectors \(y_j\) back to the unit simplex and the convex set K, respectively (Sect. 5.5). We then establish differential privacy in Sect. 5.7 and accuracy in Sect. 5.6, with Theorem 5.13 being the most general result on private synthetic data. Just like we did for anonymity, we then show how to make synthetic data with custom size by bootstrapping (Sect. 5.9) and Boolean synthetic data by randomized rounding (Sect. 5.10).

5.1 Differentially Private Projection

If A is a self-adjoint linear transformation on a real inner product space, then the ith largest eigenvalue of A is denoted by \(\lambda _{i}(A)\); the spectral norm of A is denoted by \(\Vert A\Vert \); and the Frobenius norm of A is denoted by \(\Vert A\Vert _{2}\). If \(v_{1},\ldots ,v_{t}\in {\mathbb {R}}^{p}\), then \(P_{v_{1},\ldots ,v_{t}}\) denotes the orthogonal projection from \({\mathbb {R}}^{p}\) onto \(\mathrm {span}\{v_{1},\ldots ,v_{t}\}\). In particular, if \(v\in {\mathbb {R}}^{p}\), then \(P_{v}\) denotes the orthogonal projection from \({\mathbb {R}}^{p}\) onto \(\mathrm {span}\{v\}\).

In this section, for any given \(p\times p\) positive semidefinite matrix A and \(1\le t\le p\), we construct a random projection P that behaves like the projection onto the t leading eigenvectors of A and, at the same time, is “differentially private” in the sense that if A is perturbed a little, the distribution of P changes a little. Something similar is done in [24]. However, in [24] the output is a PCA approximation of A rather than a projection, and the error of this approximation is estimated in the operator norm, whereas in this paper we need to estimate the error in the Frobenius norm.

Thus, we will modify the construction in [24]. But the general idea is the same: first construct a vector that behaves like the principal eigenvector (i.e., 1-dimensional PCA) and, at the same time, is “differentially private.” Repeating this procedure gives a “differentially private” version of the t-dimensional PCA projection.

The following algorithm is referred to as the “exponential mechanism” in [24]. As shown in Lemma 5.1, this algorithm outputs a random vector that behaves like the principal eigenvector (see part 1) and is “differentially private” in the sense of part 3.

Lemma 5.1

([24]) Suppose that A is a positive semidefinite linear transformation on a finite-dimensional vector space V.

(1) If v is an output of \(\mathrm {PVEC}(A)\), then

$$\begin{aligned} {{\,\mathrm{{\mathbb {E}}}\,}}\langle Av,v\rangle \ge (1-\gamma ) \lambda _1(A) \end{aligned}$$

for all \(\gamma >0\) such that \(\lambda _{1}(A)\ge C \dim (V)\frac{1}{\gamma }\log (\frac{1}{\gamma })\), where \(C>0\) is an absolute constant.

(2) \(\mathrm {PVEC}(A)\) can be implemented in time \(\mathrm {poly}(\mathrm {dim}\, V,\lambda _{1}(A))\).

(3) Let \(B: V \rightarrow V\) be a positive semidefinite linear transformation. If \(\Vert A-B\Vert \le \beta \), then

$$\begin{aligned} {\mathbb {P}}_{} \left\{ \mathrm {PVEC}(A)\in {\mathcal {S}} \right\} \le e^{\beta } \cdot {\mathbb {P}}_{} \left\{ \mathrm {PVEC}(B)\in {\mathcal {S}} \right\} \end{aligned}$$

for any measurable subset \({\mathcal {S}}\) of V.

Let us restate part 1 of Lemma 5.1 more conveniently:

Lemma 5.2

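Suppose that A is a positive semidefinite linear transformation on a finite-dimensional vector space V. If v is an output of \(\mathrm {PVEC}(A)\), then

$$\begin{aligned} \lambda _{1}(A)^{2}-{\mathbb {E}}\langle Av,v\rangle ^{2}\le 2\gamma \lambda _{1}(A)^{2}+C^{2}(\mathrm {dim}\,V)^{2}\frac{1}{\gamma ^{2}}\log ^{2}\Big (\frac{1}{\gamma }\Big ) \end{aligned}$$

for all \(\gamma >0\), where \(C>0\) is an absolute constant.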

Proof

Fix \(\gamma >0\), and let us consider two cases.

If \(\lambda _1(A) \ge C\dim (V)\frac{1}{\gamma }\log (\frac{1}{\gamma })\), then by part 1 of Lemma 5.1, we have \(\lambda _1(A) - {{\,\mathrm{{\mathbb {E}}}\,}}\langle Av,v\rangle \le \gamma \lambda _{1}(A)\). Therefore, keeping in mind that the inequalities \(0\le \langle Av,v\rangle \le \lambda _1(A)\) always hold (A is positive semidefinite and v is a unit vector), we obtain \(\lambda _{1}(A)^{2}- {{\,\mathrm{{\mathbb {E}}}\,}}\langle Av,v\rangle ^2{=}{{\,\mathrm{{\mathbb {E}}}\,}}\left[ \!(\lambda _{1}(A){+}\langle Av,v\rangle )(\lambda _{1}(A){-}\langle Av,v\rangle )\!\right] \le 2\gamma \lambda _{1}(A)^{2}\).

If \(\lambda _1(A) < C\dim (V)\frac{1}{\gamma }\log (\frac{1}{\gamma })\), then \(\lambda _1(A)^{2}- {{\,\mathrm{{\mathbb {E}}}\,}}\langle Av,v\rangle ^2 \le \lambda _1(A)^{2} \le C^{2}(\mathrm {dim}\,V)^{2}\frac{1}{\gamma ^{2}}\log ^{2}(\frac{1}{\gamma })\). The proof is complete. \(\square \)

We now construct a “differentially private” version of the t-dimensional PCA projection. This is done by repeated applications of PVEC in Algorithm 2.
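Algorithm 2 is not reproduced here, but the recursion it performs is described in this section: each \(v_{k+1}\) is drawn from the unit sphere of \(\mathrm {ran}(I-P_{v_{1},\ldots ,v_{k}})\) by a PVEC-type sampler, and the output is \(P=P_{v_{1},\ldots ,v_{t}}\). The sketch below follows that description. The callback `sample_unit_vector` and the name `proj_sketch` are hypothetical; a private run would pass a PVEC sampler, while the usage example passes the exact principal eigenvector, in which case the sketch reduces to ordinary t-dimensional PCA.

```python
import numpy as np


def proj_sketch(A, t, sample_unit_vector):
    """Build P = P_{v_1,...,v_t} by drawing each v_{k+1} from the unit sphere of
    ran(I - P_{v_1,...,v_k}), as in the description of PROJ.

    sample_unit_vector(M) must return a unit vector in R^m for an m x m PSD
    matrix M (hypothetical interface, e.g. a PVEC sampler).
    """
    A = np.asarray(A, dtype=float)
    p = A.shape[0]
    V = np.zeros((p, 0))  # columns v_1, ..., v_k chosen so far
    for _ in range(t):
        # Orthonormal basis Q of the orthogonal complement of span{v_1, ..., v_k}.
        evals, evecs = np.linalg.eigh(np.eye(p) - V @ V.T)
        Q = evecs[:, evals > 0.5]
        # For v = Q u we have <A_k v, v> = <A v, v> = <Q^T A Q u, u>,
        # so sampling u for Q^T A Q samples v on the sphere of ran(Q).
        u = sample_unit_vector(Q.T @ A @ Q)
        V = np.column_stack([V, Q @ u])
    return V @ V.T


def top_eigvec(M):
    """Non-private stand-in for PVEC: the exact principal eigenvector."""
    return np.linalg.eigh(M)[1][:, -1]
```

Working in an orthonormal basis of the complement keeps every draw inside \(\mathrm {ran}(I-P_{v_{1},\ldots ,v_{k}})\), which is what makes \(v_1,\ldots ,v_t\) orthonormal. With `top_eigvec`, the output is the projection onto the t leading eigenvectors, so \(\Vert (I-P)A(I-P)\Vert _{2}^{2}=\sum _{i=t+1}^{p}\lambda _{i}(A)^{2}\) can be checked directly.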

The following lemma shows that the algorithm PROJ is “differentially private” in the sense of part 3 of Lemma 5.1, except that \(e^{\beta }\) is replaced by \(e^{t\beta }\).

Lemma 5.3

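Suppose that A and B are \(p\times p\) positive semidefinite matrices and \(1\le t\le p\). If \(\Vert A-B\Vert \le \beta \), then

$$\begin{aligned} {\mathbb {P}}\left\{ \mathrm {PROJ}(A,t)\in {\mathcal {S}} \right\} \le e^{t\beta } \cdot {\mathbb {P}}\left\{ \mathrm {PROJ}(B,t)\in {\mathcal {S}} \right\} \end{aligned}$$

for any measurable subset \({\mathcal {S}}\) of \({\mathbb {R}}^{p\times p}\).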

Proof

Fix \(\beta \). We first define a notion of privacy similar to the one in [24]. A randomized algorithm \({\mathcal {M}}\) whose input is a \(p\times p\) positive semidefinite real matrix is \(\theta \)-DP if, whenever \(\Vert A-B\Vert \le \beta \), we have \({\mathbb {P}}\{{\mathcal {M}}(A)\in {\mathcal {S}}\}\le e^{\theta }{\mathbb {P}}\{{\mathcal {M}}(B)\in {\mathcal {S}}\}\) for all measurable subsets \({\mathcal {S}}\) of \({\mathbb {R}}^{p\times p}\).

In the algorithm PROJ, the computation of \(v_{1}\) as an algorithm is \(\beta \)-DP by Lemma 5.1(3).

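Similarly, for each fixed \(v_{1}\), the computation of \(v_{2}\) is also \(\beta \)-DP: \(v_{2}\) is an output of PVEC applied to \((I-P_{v_{1}})A(I-P_{v_{1}})\), and since \(I-P_{v_{1}}\) is an orthogonal projection, \(\Vert (I-P_{v_{1}})A(I-P_{v_{1}})-(I-P_{v_{1}})B(I-P_{v_{1}})\Vert \le \Vert A-B\Vert \le \beta \). So by a version of Lemma 2.5, the computation of \((v_{1},v_{2})\) (without fixing \(v_{1}\)) is \(2\beta \)-DP.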

And so on. By induction, we have that the computation of \((v_{1},\ldots ,v_{t})\) as an algorithm is \(t\beta \)-DP. Thus, \(\mathrm {PROJ}(\cdot ,t)\) is \(t\beta \)-DP. The result follows. \(\square \)
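To make the composition step concrete, here is a sketch of the two-step case, assuming the relevant conditional probabilities are measurable in \(v_{1}\). The notation is introduced only for this sketch: \(\mu _A\) is the distribution of \(v_{1}=\mathrm {PVEC}(A)\), \(A_1(v_1)=(I-P_{v_{1}})A(I-P_{v_{1}})\) and similarly \(B_1(v_1)\), and \({\mathcal {S}}_{v_1}=\{v_2:(v_1,v_2)\in {\mathcal {S}}\}\).

$$\begin{aligned} {\mathbb {P}}\{(v_1,v_2)\in {\mathcal {S}}\text { under } A\} &=\int {\mathbb {P}}\{\mathrm {PVEC}(A_1(v_1))\in {\mathcal {S}}_{v_1}\}\,d\mu _A(v_1)\\ &\le e^{\beta }\int {\mathbb {P}}\{\mathrm {PVEC}(B_1(v_1))\in {\mathcal {S}}_{v_1}\}\,d\mu _A(v_1)\\ &\le e^{2\beta }\int {\mathbb {P}}\{\mathrm {PVEC}(B_1(v_1))\in {\mathcal {S}}_{v_1}\}\,d\mu _B(v_1) =e^{2\beta }\,{\mathbb {P}}\{(v_1,v_2)\in {\mathcal {S}}\text { under } B\}. \end{aligned}$$

The first inequality uses Lemma 5.1(3) together with \(\Vert A_1(v_1)-B_1(v_1)\Vert \le \Vert A-B\Vert \le \beta \). The second uses \(\mu _A({\mathcal {E}})\le e^{\beta }\mu _B({\mathcal {E}})\) for measurable \({\mathcal {E}}\) (Lemma 5.1(3) again), which extends to every measurable integrand with values in [0, 1].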

Next, we show that the output of the algorithm PROJ behaves like the t-dimensional PCA projection in the sense of Lemma 5.5 below. Observe that if P is the projection onto the t leading eigenvectors of a \(p\times p\) positive semidefinite matrix A, then \(\Vert (I-P)A(I-P)\Vert _{2}^{2}=\sum _{i=t+1}^{p}\lambda _{i}(A)^{2}\). To prove Lemma 5.5, we first prove the following lemma and then we apply this lemma repeatedly to obtain Lemma 5.5.

Lemma 5.4

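Let A be a \(p\times p\) positive semidefinite matrix. Let \(v\in {\mathbb {R}}^{p}\) with \(\Vert v\Vert _{2}=1\). Then

$$\begin{aligned} \sum _{i=j}^{p}\lambda _{i}\big ((I-P_{v})A(I-P_{v})\big )^{2}\le \sum _{i=j+1}^{p}\lambda _{i}(A)^{2}+\lambda _{1}(A)^{2}-\langle Av,v\rangle ^{2} \end{aligned}$$

for every \(1\le j\le p\).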

Proof

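For every \(p\times p\) real symmetric matrix B and every \(1\le i\le p\), we have

$$\begin{aligned} \lambda _{i}(B)=\inf _{W}\sup _{x\in W,\,\Vert x\Vert _{2}=1}\langle Bx,x\rangle , \end{aligned}$$

where the infimum is over all subspaces W of \({\mathbb {R}}^{p}\) with dimension \(p-i+1\). Since \(P_{v}\) is a rank-one orthogonal projection, every such W meets \(\ker P_{v}\) in a subspace of dimension at least \(p-i\), on which \((I-P_{v})A(I-P_{v})\) and A define the same quadratic form. Thus,

$$\begin{aligned} \lambda _{i}\big ((I-P_{v})A(I-P_{v})\big )\ge \lambda _{i+1}(A) \end{aligned}$$

for every \(1\le i\le p-1\). Thus, since A is positive semidefinite,

$$\begin{aligned} \sum _{i=1}^{j-1}\lambda _{i}\big ((I-P_{v})A(I-P_{v})\big )^{2}\ge \sum _{i=2}^{j}\lambda _{i}(A)^{2}, \end{aligned}$$

so

$$\begin{aligned} \sum _{i=j}^{p}\lambda _{i}\big ((I-P_{v})A(I-P_{v})\big )^{2}&=\Vert (I-P_{v})A(I-P_{v})\Vert _{2}^{2}-\sum _{i=1}^{j-1}\lambda _{i}\big ((I-P_{v})A(I-P_{v})\big )^{2}\\ &\le \Vert (I-P_{v})A(I-P_{v})\Vert _{2}^{2}-\Vert A\Vert _{2}^{2}+\lambda _{1}(A)^{2}+\sum _{i=j+1}^{p}\lambda _{i}(A)^{2}. \end{aligned}$$

Since \(\Vert A\Vert _{2}^{2}-\Vert (I-P_{v})A(I-P_{v})\Vert _{2}^{2}\ge \Vert P_{v}AP_{v}\Vert _{2}^{2}=\langle Av,v\rangle ^{2}\), the result follows. \(\square \)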
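As a numerical sanity check (an illustration, not a proof), the sketch below evaluates the bound that the argument above yields, namely \(\sum _{i=j}^{p}\lambda _{i}((I-P_{v})A(I-P_{v}))^{2}\le \sum _{i=j+1}^{p}\lambda _{i}(A)^{2}+\lambda _{1}(A)^{2}-\langle Av,v\rangle ^{2}\) for \(1\le j\le p\), on random positive semidefinite matrices. The function name `lemma_5_4_gap` is ours.

```python
import numpy as np


def lemma_5_4_gap(A, v, j):
    """Return RHS - LHS of the Lemma 5.4 bound for PSD A, unit vector v, 1 <= j <= p.

    A nonnegative value means the inequality holds for this instance.
    """
    p = A.shape[0]
    Q = np.eye(p) - np.outer(v, v)               # I - P_v
    lam_A = np.linalg.eigvalsh(A)[::-1]          # eigenvalues of A, decreasing
    lam_C = np.linalg.eigvalsh(Q @ A @ Q)[::-1]  # eigenvalues of (I-P_v)A(I-P_v), decreasing
    lhs = np.sum(lam_C[j - 1:] ** 2)             # sum_{i=j}^{p}
    rhs = np.sum(lam_A[j:] ** 2) + lam_A[0] ** 2 - (v @ A @ v) ** 2  # sum_{i=j+1}^{p} + ...
    return rhs - lhs
```

For j = 1 the gap equals \(\Vert A\Vert _{2}^{2}-\Vert (I-P_{v})A(I-P_{v})\Vert _{2}^{2}-\langle Av,v\rangle ^{2}\), which is nonnegative without any eigenvalue interlacing; the interlacing step is what handles larger j.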

Lemma 5.5

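Suppose that A is a \(p\times p\) positive semidefinite matrix and \(1\le t\le p\). If P is an output of \(\mathrm {PROJ}(A,t)\), then

$$\begin{aligned} {\mathbb {E}}\Vert (I-P)A(I-P)\Vert _{2}^{2}\le \sum _{i=t+1}^{p}\lambda _{i}(A)^{2}+t\Big (2\gamma \Vert A\Vert ^{2}+C^{2}p^{2}\frac{1}{\gamma ^{2}}\log ^{2}\Big (\frac{1}{\gamma }\Big )\Big ) \end{aligned}$$

for all \(\gamma >0\), where \(C>0\) is an absolute constant.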

Proof

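Let \(v_{1},\ldots ,v_{t}\) be the vectors defined in the algorithm \(\mathrm {PROJ}(A,t)\). Let \(A_{0}=A\). For \(1\le k\le t\), let \(A_{k}=(I-P_{v_{1},\ldots ,v_{k}})A(I-P_{v_{1},\ldots ,v_{k}})\). Since \(v_{k+1}\) is an output of \(\mathrm {PVEC}(A_{k})\) on a space of dimension at most p, by Lemma 5.2, we have

$$\begin{aligned} \lambda _{1}(A_{k})^{2}-{\mathbb {E}}_{v_{k+1}}\langle A_{k}v_{k+1},v_{k+1}\rangle ^{2}\le 2\gamma \lambda _{1}(A_{k})^{2}+C^{2}p^{2}\frac{1}{\gamma ^{2}}\log ^{2}\Big (\frac{1}{\gamma }\Big ) \end{aligned}$$

for all \(0\le k\le t-1\), where the expectation \({\mathbb {E}}_{v_{k+1}}\) is over \(v_{k+1}\) conditioning on \(v_{1},\ldots ,v_{k}\). By Lemma 5.4, we have

$$\begin{aligned} \sum _{i=j}^{p}\lambda _{i}\big ((I-P_{v_{k+1}})A_{k}(I-P_{v_{k+1}})\big )^{2}\le \sum _{i=j+1}^{p}\lambda _{i}(A_{k})^{2}+\lambda _{1}(A_{k})^{2}-\langle A_{k}v_{k+1},v_{k+1}\rangle ^{2} \end{aligned}$$

for all \(1\le j\le p\) and \(0\le k\le t-1\). Therefore,

$$\begin{aligned} {\mathbb {E}}_{v_{k+1}}\sum _{i=j}^{p}\lambda _{i}\big ((I-P_{v_{k+1}})A_{k}(I-P_{v_{k+1}})\big )^{2}\le \sum _{i=j+1}^{p}\lambda _{i}(A_{k})^{2}+2\gamma \lambda _{1}(A_{k})^{2}+C^{2}p^{2}\frac{1}{\gamma ^{2}}\log ^{2}\Big (\frac{1}{\gamma }\Big ) \end{aligned}$$

for all \(1\le j\le p\) and \(0\le k\le t-1\).

In the algorithm \(\mathrm {PROJ}(A,t)\), each \(v_{k+1}\) is chosen from the unit sphere of \(\mathrm {ran}(I-P_{v_{1},\ldots ,v_{k}})\). Hence, the vectors \(v_{1},\ldots ,v_{t}\) are orthonormal, so \(I-P_{v_{1},\ldots ,v_{k+1}}=(I-P_{v_{k+1}})(I-P_{v_{1},\ldots ,v_{k}})\) for all \(1\le k\le t-1\). Thus, \((I-P_{v_{k+1}})A_{k}(I-P_{v_{k+1}})=A_{k+1}\) for all \(0\le k\le t-1\) (for \(k=0\) this is the definition of \(A_{1}\)). So we have

$$\begin{aligned} {\mathbb {E}}_{v_{k+1}}\sum _{i=j}^{p}\lambda _{i}(A_{k+1})^{2}\le \sum _{i=j+1}^{p}\lambda _{i}(A_{k})^{2}+2\gamma \lambda _{1}(A_{k})^{2}+C^{2}p^{2}\frac{1}{\gamma ^{2}}\log ^{2}\Big (\frac{1}{\gamma }\Big ) \end{aligned}$$

for all \(1\le j\le p\) and \(0\le k\le t-1\). Taking the full expectation \({\mathbb {E}}\) on both sides, we get

$$\begin{aligned} {\mathbb {E}}\sum _{i=j}^{p}\lambda _{i}(A_{k+1})^{2}\le {\mathbb {E}}\sum _{i=j+1}^{p}\lambda _{i}(A_{k})^{2}+2\gamma \Vert A\Vert ^{2}+C^{2}p^{2}\frac{1}{\gamma ^{2}}\log ^{2}\Big (\frac{1}{\gamma }\Big ) \end{aligned}$$

for all \(1\le j\le p\) and \(0\le k\le t-1\), where we used the fact that \(\lambda _{1}(A_{k})=\Vert A_{k}\Vert \le \Vert A\Vert \). Applying this inequality with \((j,k)=(1,t-1),(2,t-2),\ldots ,(t,0)\) yields

$$\begin{aligned} {\mathbb {E}}\sum _{i=1}^{p}\lambda _{i}(A_{t})^{2}\le \sum _{i=t+1}^{p}\lambda _{i}(A)^{2}+t\Big (2\gamma \Vert A\Vert ^{2}+C^{2}p^{2}\frac{1}{\gamma ^{2}}\log ^{2}\Big (\frac{1}{\gamma }\Big )\Big ). \end{aligned}$$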

Note that \(A_{t}=(I-P_{v_{1},\ldots ,v_{t}})A(I-P_{v_{1},\ldots ,v_{t}})\) and \(P=P_{v_{1},\ldots ,v_{t}}\) is the output of \(\mathrm {PROJ}(A,t)\). Thus, the left hand side is equal to \({\mathbb {E}}\Vert A_{t}\Vert _{2}^{2}={\mathbb {E}}\Vert (I-P)A(I-P)\Vert _{2}^{2}\). The result follows. \(\square \)

5.2 Microaggregation with More Control

We will protect privacy by adding noise to the microaggregation mechanism. To make this happen, we will need a version of Theorem 4.1 with more control.

We adapt the microaggregation mechanism from (4.1) to the current setting. Given a partition \([n]=F_1 \cup \cdots \cup F_s\) (where some \(F_j\) could be empty), we define for \(1\le j\le s\) with \(F_j\) non-empty

$$\begin{aligned} w_{j}=\frac{|F_{j}|}{n},\qquad y_{j}=\frac{1}{|F_{j}|}\sum _{i\in F_{j}}x_{i}, \end{aligned}\tag{5.1}$$

and when \(F_j\) is empty, we set \(w_j=0\) and let \(y_j\) be an arbitrary point.
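The sketch below implements the nearest-point partition and the mechanism described in this section: \(w_j=|F_j|/n\), and \(y_j\) is the mean of the \(x_i\) with \(i\in F_j\). The function name, the tie-breaking rule (lowest index), and the choice \(y_j=0\) for empty blocks are our assumptions; the grid of centers in the usage example is a hypothetical stand-in for the \(\alpha \)-covering \(\nu _1,\ldots ,\nu _s\). Because \(Y={\mathbb {E}}[X|{\mathcal {F}}]\) is a conditional expectation, the check confirms that the first moment is preserved and that \(S-\sum _j w_j y_j y_j^{\mathsf {T}}\) is positive semidefinite.

```python
import numpy as np


def microaggregate(x, P, centers):
    """Nearest-point microaggregation.

    x: (n, p) data with rows x_i; P: (p, p) orthogonal projection;
    centers: (s, p) points nu_j. Block F_j collects the indices i whose
    projection P x_i is closest to nu_j (ties go to the lowest index).
    Returns weights w_j = |F_j| / n, block means y_j (0 for empty blocks),
    and the block label of each data point.
    """
    n, p = x.shape
    px = x @ P.T  # rows are P x_i (P is symmetric)
    d2 = ((px[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    labels = d2.argmin(axis=1)
    s = centers.shape[0]
    counts = np.bincount(labels, minlength=s)
    sums = np.zeros((s, p))
    np.add.at(sums, labels, x)  # sum of x_i over each block
    y = np.divide(sums, counts[:, None], out=np.zeros_like(sums), where=counts[:, None] > 0)
    return counts / n, y, labels
```

A small usage example with data in the unit ball of \({\mathbb {R}}^3\) and P the projection onto the first two coordinates:

```python
rng = np.random.default_rng(2)
x = rng.standard_normal((200, 3))
x /= np.maximum(1.0, np.linalg.norm(x, axis=1, keepdims=True))  # ensure ||x_i|| <= 1
P = np.diag([1.0, 1.0, 0.0])
grid = np.linspace(-1.0, 1.0, 5)
centers = np.array([[a, b, 0.0] for a in grid for b in grid])
w, y, labels = microaggregate(x, P, centers)
```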

Theorem 5.6

(Microaggregation with more control) Let \(x_1,\ldots ,x_n \in {\mathbb {R}}^p\) be such that \(\left\| x_i\right\| _2 \le 1\) for all i. Let \(S = \frac{1}{n} \sum _{i=1}^n x_i x_i^{\mathsf {T}}\). Let P be an orthogonal projection on \({\mathbb {R}}^p\). Let \(\nu _1,\ldots ,\nu _s \in {\mathbb {R}}^p\) be an \(\alpha \)-covering of the unit Euclidean ball of \({{\,\mathrm{ran}\,}}(P)\). Let \([n]=F_1 \cup \cdots \cup F_s\) be a nearest-point partition of \((Px_i)\) with respect to \(\nu _1,\ldots ,\nu _s\). Then, the weights \(w_j\) and vectors \(y_j\) defined in (5.1) satisfy for all \(d \in {\mathbb {N}}\):

Proof of Theorem 5.6

We explained in Sect. 4.1 how to realize microaggregation probabilistically as conditional expectation. To reiterate, we consider the sample space [n] equipped with the uniform probability distribution and define a random variable X on [n] by setting \(X(i)=x_i\) for \(i=1,\ldots ,n\). If \({\mathcal {F}}= \sigma (F_1,\ldots ,F_s)\) is the sigma-algebra generated by some partition \([n]=F_1 \cup \cdots \cup F_s\), the conditional expectation \(Y = {{\,\mathrm{{\mathbb {E}}}\,}}[X|{\mathcal {F}}]\) is a random vector that takes values \(y_j\) with probability \(w_j\) as defined in (5.1). Then, the left hand side of (5.2) equals

\(\square \)

5.3 Perturbing the Weights and Vectors

Theorem 5.6 makes the first step toward noisy microaggregation. Next, we will add noise to the weights \((w_j)\) and vectors \((y_j)\) obtained by microaggregation. To control the effect of such noise on the accuracy, the following two simple bounds will be useful.

Lemma 5.7

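Let \(u,v \in {\mathbb {R}}^n\) be such that \(\left\| u\right\| _2 \le 1\) and \(\left\| v\right\| _2 \le 1\). Then, for every \(d \in {\mathbb {N}}\),

$$\begin{aligned} \left\| u^{\otimes d}-v^{\otimes d}\right\| _2\le d\left\| u-v\right\| _2. \end{aligned}$$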

Proof

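For \(d=1\), the result is trivial. For \(d \ge 2\), we can represent the difference as a telescoping sum

$$\begin{aligned} u^{\otimes d}-v^{\otimes d}=\sum _{k=0}^{d-1}u^{\otimes k}\otimes (u-v)\otimes v^{\otimes (d-1-k)}. \end{aligned}$$

Then, by the triangle inequality,

$$\begin{aligned} \left\| u^{\otimes d}-v^{\otimes d}\right\| _2\le \sum _{k=0}^{d-1}\left\| u\right\| _2^{k}\left\| u-v\right\| _2\left\| v\right\| _2^{d-1-k}\le d\left\| u-v\right\| _2, \end{aligned}$$

where we used the assumption on the norms of u and v in the last step. The lemma is proved. \(\square \)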
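The telescoping identity and the bound \(\Vert u^{\otimes d}-v^{\otimes d}\Vert _2\le d\Vert u-v\Vert _2\) can be checked numerically by representing a d-fold tensor power as a flattened Kronecker product, whose Euclidean norm equals the Frobenius norm of the tensor. The helper names are ours; this is a sanity check, not part of the method.

```python
from functools import reduce

import numpy as np


def tensor_power(u, d):
    """Flattened d-fold tensor power u ⊗ ... ⊗ u (d = 0 gives the scalar 1)."""
    return reduce(np.kron, [u] * d, np.ones(1))


def telescoping_sum(u, v, d):
    """sum_{k=0}^{d-1} u^{⊗k} ⊗ (u - v) ⊗ v^{⊗(d-1-k)}, flattened."""
    return sum(
        np.kron(np.kron(tensor_power(u, k), u - v), tensor_power(v, d - 1 - k))
        for k in range(d)
    )
```

Each term of the telescoping sum has norm \(\Vert u\Vert _2^{k}\Vert u-v\Vert _2\Vert v\Vert _2^{d-1-k}\le \Vert u-v\Vert _2\), which is exactly how the d in the bound arises.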

Lemma 5.8

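Consider numbers \(\lambda _j, \mu _j \in {\mathbb {R}}\) and vectors \(u_j, v_j \in {\mathbb {R}}^p\) such that \(\left\| u_j\right\| _2 \le 1\) and \(\left\| v_j\right\| _2 \le 1\) for all \(j=1,\ldots ,m\). Then, for every \(d \in {\mathbb {N}}\),

$$\begin{aligned} \Big \Vert \sum _{j=1}^{m}\lambda _j u_j^{\otimes d}-\sum _{j=1}^{m}\mu _j v_j^{\otimes d}\Big \Vert _2\le d\sum _{j=1}^{m}\left| \lambda _j\right| \left\| u_j-v_j\right\| _2+\sum _{j=1}^{m}\left| \lambda _j-\mu _j\right| . \end{aligned}\tag{5.3}$$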

Proof

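Adding and subtracting the cross term \(\sum _j \lambda _j v_j^{\otimes d}\) and using the triangle inequality, we can bound the left-hand side of (5.3) by

$$\begin{aligned} \sum _{j=1}^{m}\left| \lambda _j\right| \left\| u_j^{\otimes d}-v_j^{\otimes d}\right\| _2+\sum _{j=1}^{m}\left| \lambda _j-\mu _j\right| \left\| v_j^{\otimes d}\right\| _2. \end{aligned}$$

It remains to use Lemma 5.7 and note that \(\left\| v_j^{\otimes d}\right\| _2 = \left\| v_j\right\| _2^d\le 1\). \(\square \)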
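Similarly, the sketch below checks numerically the bound that the add-and-subtract argument gives, \(\Vert \sum _j\lambda _j u_j^{\otimes d}-\sum _j\mu _j v_j^{\otimes d}\Vert _2\le d\sum _j|\lambda _j|\Vert u_j-v_j\Vert _2+\sum _j|\lambda _j-\mu _j|\), on random vectors in the unit ball and arbitrary real coefficients. The function names are ours.

```python
from functools import reduce

import numpy as np


def tensor_power(u, d):
    """Flattened d-fold tensor power of a vector."""
    return reduce(np.kron, [u] * d)


def lemma_5_8_sides(lam, mu, U, V, d):
    """Return (LHS, RHS) of the bound; rows of U and V are the vectors u_j and v_j."""
    lhs = np.linalg.norm(
        sum(l * tensor_power(u, d) for l, u in zip(lam, U))
        - sum(m * tensor_power(v, d) for m, v in zip(mu, V))
    )
    rhs = d * np.sum(np.abs(lam) * np.linalg.norm(U - V, axis=1)) + np.sum(np.abs(lam - mu))
    return lhs, rhs
```

In the noisy setting, \(\lambda _j,u_j\) play the role of the original weights and vectors and \(\mu _j,v_j\) of their perturbed versions.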

5.4 Damping

Although the microaggregation mechanism (5.1) is stable with respect to additive noise in the weights \(w_j\) or the vectors \(y_j\) as shown in Sect. 5.3, there are still two issues that need to be resolved.

The first issue is the potential instability of the microaggregation mechanism (5.1) for small blocks \(F_j\). For example, if \(|F_j|=1\), microaggregation does nothing for that block and returns the original input vector \(y_j=x_i\). Protecting the privacy of such a vector requires a lot of noise, which might harm accuracy.

One may wonder why we cannot make all blocks \(F_j\) the same size, as we did in Theorem 4.1. Indeed, in Sect. 3.6 we showed how to transform a potentially imbalanced partition \([n]=F_1 \cup \cdots \cup F_s\) into an equipartition (where all \(F_j\) have the same cardinality) using a divide-and-merge procedure; could we not apply it here? Unfortunately, an equipartition might be too sensitive to changes in even a single data point \(x_i\). The original partition \(F_1,\ldots ,F_s\), on the other hand, is sufficiently stable.

We resolve this issue by suppressing, or damping, the blocks \(F_j\) that are too small: whenever the cardinality of \(F_j\) drops below a predefined level b, we divide by b rather than by \(|F_j|\) in (5.1). In other words, instead of vanilla microaggregation (5.1), we consider the following damped microaggregation:

$$\begin{aligned} w_{j}=\frac{|F_{j}|}{n},\qquad {\tilde{y}}_{j}=\frac{1}{\max (|F_{j}|,b)}\sum _{i\in F_{j}}x_{i}. \end{aligned}\tag{5.4}$$
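A minimal sketch of damped microaggregation, assuming (as the text describes) that the weights remain \(w_j=|F_j|/n\) while the block sum is divided by \(\max (|F_j|,b)\) instead of \(|F_j|\). The block labels are taken as given (for example, from a nearest-point partition), b is assumed to be a positive integer, and the function name is hypothetical.

```python
import numpy as np


def damped_microaggregate(x, labels, s, b):
    """Damped microaggregation: w_j = |F_j| / n and
    y~_j = (1 / max(|F_j|, b)) * sum_{i in F_j} x_i  (b >= 1 assumed).
    """
    n, p = x.shape
    counts = np.bincount(labels, minlength=s)
    sums = np.zeros((s, p))
    np.add.at(sums, labels, x)  # block sums; empty blocks stay 0
    w = counts / n
    y_tilde = sums / np.maximum(counts, b)[:, None]
    return w, y_tilde
```

Blocks with at least b points return the plain block mean, while a singleton block is shrunk toward the origin by the factor 1/b, so a single data point can move each \({\tilde{y}}_j\) by at most \(2/b\) in norm when \(\Vert x_i\Vert _2\le 1\).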

5.5 Metric Projection

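And here is the second issue. Recall that the numbers \(w_j\) returned by microaggregation (5.4) are probability weights: the weight vector \(w = (w_j)_{j=1}^s\) belongs to the unit simplex

$$\begin{aligned} \Delta =\Big \{ w\in {\mathbb {R}}^{s}:\ w_{j}\ge 0 \text { for all } j,\ \sum _{j=1}^{s}w_{j}=1\Big \} . \end{aligned}$$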

This feature may be lost if we add noise to \(w_j\). Similarly, if the input vectors \(x_i\) are taken from a given convex set K (for Boolean data, \(K = [0,1]^p\)), we would like the synthetic data to belong to K, too. The microaggregation mechanism (5.1) respects this feature: by convexity, the vectors \(y_j\) belong to K. However, this property may be lost if we add noise to \(y_j\).

We resolve this issue by projecting the perturbed weights and vectors back onto the unit simplex \(\Delta \) and the convex set K, respectively. For this purpose, we use metric projections, i.e., mappings that return a nearest point in a given set. Formally, we let

(If the minimum is not unique, break ties arbitrarily. One valid choice of \(\pi _{\Delta ,1}(w)\) is obtained by setting all negative entries of w to 0 and then normalizing the result so that it lies in \(\Delta \). When all entries of w are nonpositive, every point of \(\Delta \) is equally close to w, so we may set \(\pi _{\Delta ,1}(w)\) to be any point in \(\Delta \).)
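The tie-breaking rule above can be justified in one line: for \(v\in \Delta \), \(\Vert w-v\Vert _1\ge N+|s-1|\), where N is the total magnitude of the negative entries of w and s is the sum of its positive entries, and the normalized positive part attains this bound whenever \(s>0\). The sketch below implements that choice (the function name is ours; returning the uniform vector when no entry is positive is one arbitrary admissible choice) and, in the usage check, compares it with random points of \(\Delta \).

```python
import numpy as np


def project_simplex_l1(w):
    """One l1-nearest point of the unit simplex to w.

    Zero out the negative entries and renormalize; if no entry is positive,
    every simplex point is equally close, and the uniform vector is returned.
    """
    w = np.asarray(w, dtype=float)
    pos = np.clip(w, 0.0, None)
    total = pos.sum()
    if total <= 0.0:
        return np.full(w.shape, 1.0 / w.size)
    return pos / total
```

Unlike the Euclidean projection onto \(\Delta \), which subtracts a common threshold from all entries, this \(\ell _1\) choice keeps the ratios between the positive entries of w.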

Thus, here is our plan: given input data \((x_i)_{i=1}^n\), we apply damped microaggregation (5.4) to compute weights and vectors \((w_j, {\tilde{y}}_j)_{j=1}^s\), add noise, and project the noisy weights and vectors back onto the unit simplex \(\Delta \) and the convex set K, respectively. In other words, we compute

where \(\rho \in {\mathbb {R}}^s\) and \(r_j \in {\mathbb {R}}^p\) are noise vectors (which we will later take to be random Laplacian noise).
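A sketch of this plan: add Laplace noise to the damped weights and vectors, then map the weights back to \(\Delta \) with the \(\ell _1\) rule described above and the vectors back to K. For concreteness, the sketch assumes that K is the unit Euclidean ball, for which the Euclidean metric projection is \(y\mapsto y/\max (1,\Vert y\Vert _2)\). The noise scales `scale_w` and `scale_y` and the function name are hypothetical placeholders, since the calibration of the noise for differential privacy is not part of this section.

```python
import numpy as np


def noisy_release(w, y_tilde, scale_w, scale_y, rng):
    """Perturb (w_j, y~_j) with Laplace noise and project back.

    Assumes K is the unit Euclidean ball; scale_w and scale_y are
    hypothetical noise levels (not calibrated here).
    """
    s, p = y_tilde.shape
    # Noisy weights, then the l1 rule: clip negatives and renormalize.
    w_noisy = w + rng.laplace(0.0, scale_w, size=s)
    pos = np.clip(w_noisy, 0.0, None)
    total = pos.sum()
    w_bar = pos / total if total > 0 else np.full(s, 1.0 / s)
    # Noisy vectors, then Euclidean projection onto the unit ball.
    y_noisy = y_tilde + rng.laplace(0.0, scale_y, size=(s, p))
    norms = np.linalg.norm(y_noisy, axis=1, keepdims=True)
    y_bar = y_noisy / np.maximum(norms, 1.0)
    return w_bar, y_bar
```

Whatever the noise, the released weights lie in \(\Delta \) and the released vectors lie in K; with very small noise scales, the output stays close to \((w_j,{\tilde{y}}_j)\).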

5.6 The Accuracy Guarantee

Here is the accuracy guarantee of our procedure. This is a version of Theorem 5.6 with noise, damping, and metric projection:

Theorem 5.9

(Accuracy of damped, noisy microaggregation) Let K be a convex set in \({\mathbb {R}}^p\) that lies in the unit Euclidean ball \(B_2^p\). Let \(x_1,\ldots ,x_n \in K\). Let \(S = \frac{1}{n} \sum _{i=1}^n x_i x_i^{\mathsf {T}}\). Let P be an orthogonal projection on \({\mathbb {R}}^p\). Let \(\nu _1,\ldots ,\nu _s \in {\mathbb {R}}^p\) be an \(\alpha \)-covering of the unit Euclidean ball of \({{\,\mathrm{ran}\,}}(P)\). Let \([n]=F_1 \cup \cdots \cup F_s\) be a nearest-point partition of \((Px_i)\) with respect to \(\nu _1,\ldots ,\nu _s\). Then, the weights \({\bar{w}}_j\) and vectors \({\bar{y}}_j\) defined in (5.6) satisfy for all \(d \in {\mathbb {N}}\):

Proof

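Adding and subtracting the cross term \(\sum _j w_j y_j^{\otimes d}\) and using the triangle inequality, we can bound the left-hand side of (5.7) by

$$\begin{aligned} \Big \Vert \frac{1}{n}\sum _{i=1}^{n}x_{i}^{\otimes d}-\sum _{j=1}^{s}w_{j}y_{j}^{\otimes d}\Big \Vert _{2}+\Big \Vert \sum _{j=1}^{s}w_{j}y_{j}^{\otimes d}-\sum _{j=1}^{s}{\bar{w}}_{j}{\bar{y}}_{j}^{\otimes d}\Big \Vert _{2}. \end{aligned}$$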

The first term can be bounded by Theorem 5.6. For the second term, we can use Lemma 5.8 and note that