Abstract

We study the geometric Whitney problem on how a Riemannian manifold (M, g) can be constructed to approximate a metric space \((X,d_X)\). This problem is closely related to manifold interpolation (or manifold reconstruction) where a smooth n-dimensional submanifold \(S\subset {{\mathbb {R}}}^m\), \(m>n\) needs to be constructed to approximate a point cloud in \({{\mathbb {R}}}^m\). These questions arise in differential geometry, machine learning, and many inverse problems encountered in applications. The determination of a Riemannian manifold includes the construction of its topology, differentiable structure, and metric. We give constructive solutions to the above problems. Moreover, we characterize the metric spaces that can be approximated by Riemannian manifolds with bounded geometry: we give sufficient conditions to ensure that a metric space can be approximated, in the Gromov–Hausdorff or quasi-isometric sense, by a Riemannian manifold of a fixed dimension and with bounded diameter, sectional curvature, and injectivity radius. Also, we show that similar conditions, with modified values of parameters, are necessary. As an application of the main results, we give a new characterization of Alexandrov spaces with two-sided curvature bounds. Moreover, we characterize the subsets of Euclidean spaces that can be approximated in the Hausdorff metric by submanifolds of a fixed dimension and with bounded principal curvatures and normal injectivity radius. We develop algorithmic procedures that solve the geometric Whitney problem for a metric space and the manifold reconstruction problem in Euclidean space, and estimate the computational complexity of these procedures. The above interpolation problems are also studied for unbounded metric sets and manifolds.
The results for Riemannian manifolds are based on a generalization of the Whitney embedding construction where approximative coordinate charts are embedded in \({{\mathbb {R}}}^m\) and interpolated to a smooth submanifold.


1 Introduction and the Main Results

1.1 Geometrization of Whitney’s Extension Problem

In this paper, we develop a geometric version of Whitney’s extension problem. Let \(f : K \rightarrow {\mathbb {R}}\) be a function defined on a given (arbitrary) set \(K \subset {\mathbb {R}}^n\), and let \(m \ge 1\) be a given integer. The classical Whitney problem asks whether f extends to a function \(F \in C^m({\mathbb {R}}^n)\) and, if such an F exists, what the optimal \(C^m\) norm of the extension is. Furthermore, one asks whether the derivatives of F, up to order m, at a given point can be estimated, and whether one can construct the extension F so that it depends linearly on f.

These questions go back to the work of Whitney [95,96,97] in 1934. In the decades since Whitney’s seminal work, fundamental progress was made by Glaeser [50], Brudnyi and Shvartsman [19,20,21,22,23,24] and [86,87,88], and Bierstone et al. [10]. (See also Zobin [101, 102] for the solution of a closely related problem.)

The above questions have been answered in the last few years, thanks to work of Bierstone et al. (see [10, 18, 19, 21, 22, 24, 37,38,39,40,41,42]). Along the way, the analogous problems with \(C^m({\mathbb {R}}^n)\) replaced by \(C^{m,\omega }({\mathbb {R}}^n)\), the space of functions whose mth derivatives have a given modulus of continuity \(\omega \), (see [41, 42]), were also solved.

The solution of Whitney’s problems has led to a new algorithm for interpolation of data, due to Fefferman and Klartag [45, 46], where the authors show how to compute efficiently an interpolant F(x), whose \(C^m\) norm lies within a factor C of least possible, where C is a constant depending only on m and n.

In recent years, attention has shifted to the case where the measurements \({\widetilde{f}}:K\rightarrow {\mathbb {R}}\) of the function f are given with errors bounded by \(\varepsilon >0\). Then, the task is to find a function \(F:{\mathbb {R}}^n\rightarrow {\mathbb {R}}\) such that \(\sup _{x\in K} |F(x)-{\widetilde{f}}(x)| \le \varepsilon \). Since the solution is not unique, one wants to find the extensions that have the optimal norm in \(C^m({\mathbb {R}}^n)\), see, e.g., [45, 46]. Finding F can be considered as the task of finding a graph \(\varGamma (F)=\{(x,F(x)):\ x\in {\mathbb {R}}^n\}\subset {\mathbb {R}}^{n+1}\) of a function in \(C^m({\mathbb {R}}^n)\) that passes near the points \(\{(x,{\widetilde{f}}(x)):\ x\in K\}\). To formulate the above problems in geometric (i.e., coordinate-invariant) terms, instead of a graph set \(\varGamma (F)\), we aim to construct a general submanifold or a Riemannian manifold that approximates the given data. Also, instead of the \(C^m({\mathbb {R}}^n)\)-norms, we will measure the optimality of the solution in terms of invariant bounds for the curvature and the injectivity radius.

In this paper, we consider the following two geometric Whitney problems:

A. Let E be a separable Hilbert space, e.g., \({\mathbb {R}}^N\), and assume that we are given a set \(X\subset E\). When can one construct a smooth n-dimensional submanifold \(M\subset E\) that approximates X with given bounds for the geometry of M and the Hausdorff distance between M and X? How can the submanifold M be efficiently constructed when X is given?

B. Let \((X,d_X)\) be a metric space. When does there exist a Riemannian manifold (M, g) that has given bounds for geometry and approximates X well? How can the manifold (M, g) be constructed when X is given? What is an algorithm that constructs (M, g) when X is finite?

In Question B, by ‘approximation’ we mean Gromov–Hausdorff or quasi-isometric approximation, see definitions in Definition 3 and Sect. 2.1.

We answer Question A in Theorem 2, by showing that if \(X\subset E\) is locally (i.e., at a certain small scale) close to affine n-dimensional planes, see Definition 4, there is a submanifold \(M\subset E\) such that the Hausdorff distance of X and M is small and the second fundamental form and the normal injectivity radius of M are bounded.

The answer to Question B is given in Theorem 1. Roughly speaking, it asserts that the following natural conditions on X are necessary and sufficient: Locally, X should be close to \({\mathbb {R}}^n\), and globally, the metric of X should be almost intrinsic.

The conditions in Theorem 1 are optimal, up to multiplying the obtained bounds by a constant factor depending on n. Theorem 1 gives sufficient conditions for metric spaces that approximate smooth manifolds. In Corollary 1, we show that similar conditions, with modified values of parameters, are necessary.

The result of Theorem 2 is optimal, up to multiplying the obtained bounds by a constant factor depending on n.

The proofs of Theorems 1 and 2 are constructive and give rise to manifold reconstruction algorithms when X is a finite set. Moreover, we give algorithms that verify if a finite data set X satisfies the characterizations given in Theorems 1 and 2. We analyze in Sect. 7.2 the computational complexity of these algorithms, but emphasize that, to keep the algorithms simple, we have not optimized them for minimal complexity.

Finally, we note that this paper is related to two rather different theorems of Whitney: One is the Whitney embedding theorem of smooth manifolds into a Euclidean space and the other is the Whitney extension theorem for functions in \({\mathbb {R}}^n\).

Next we formulate the definitions needed to state the results rigorously.

Notations For a metric space X and sets \(A,B\subset X\), we denote by \(d_H^X(A,B)\), or just by \(d_H(A,B)\), the Hausdorff distance between A and B in X.

By \(d_{\mathrm{GH}}(X,Y)\), we denote the Gromov–Hausdorff (GH) distance between metric spaces X and Y. For the reader’s convenience, we collect definitions and elementary facts about the GH distance in Sect. 2.1. For a more detailed account of the topic, see, e.g., [26, 79, 85]. In most cases, we work with pointed GH distance between pointed metric spaces \((X,x_0)\) and \((Y,y_0)\), where \(x_0\in X\) and \(y_0\in Y\) are distinguished points. For the definition of pointed GH distance, see [79, §1.2 in Ch. 10] or Sect. 2.1.

For a metric space X, \(x\in X\) and \(r>0\), we denote by \(B^X_r(x)\) or \(B_r(x)\) the ball of radius r centered at x. For \(X={\mathbb {R}}^n\), we use notation \(B_r^n(x)=B_r^{{\mathbb {R}}^n}(x)\) and \(B_r^n=B_r^n(0)\). For a set \(A\subset X\) and \(r>0\), we denote by \({{\mathcal {U}}}_r^X(A)\) or \({{\mathcal {U}}}_r(A)\) the metric neighborhood of A of radius r, that is, the set of points within distance r from A.
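For a finite metric space given by a distance matrix, the notions above can be computed directly. The following is a minimal sketch, not part of the paper: the function names are hypothetical, and the use of strict inequalities (open balls and open neighborhoods) is an assumption, since the text does not fix the convention.

```python
import numpy as np

def ball(dist, center, r):
    """Indices of the points at distance < r from the point `center`."""
    return np.flatnonzero(dist[center] < r)

def neighborhood(dist, A, r):
    """Indices of the points at distance < r from the index set A."""
    A = np.asarray(A)
    return np.flatnonzero(dist[:, A].min(axis=1) < r)

def hausdorff(dist, A, B):
    """Hausdorff distance between the finite index sets A and B."""
    A, B = np.asarray(A), np.asarray(B)
    sub = dist[np.ix_(A, B)]           # sub[a, b] = d(A[a], B[b])
    a_to_B = sub.min(axis=1).max()     # farthest point of A from B
    b_to_A = sub.min(axis=0).max()     # farthest point of B from A
    return max(a_to_B, b_to_A)

# Toy example: four points 0, 1, 2, 3 on the real line.
pts = np.array([0.0, 1.0, 2.0, 3.0])
dist = np.abs(pts[:, None] - pts[None, :])
```

In this toy example, `hausdorff(dist, [0, 1], [2, 3])` compares the sets {0, 1} and {2, 3}. The Gromov–Hausdorff distance is a different and much harder quantity to compute, and it is not addressed by this sketch.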

When speaking about GH distance between metric balls \(B_r^X(x)\) and \(B_r^Y(y)\), we always mean the pointed GH distance where the centers x and y are distinguished points of the balls. We abuse notation and write \(d_{\mathrm{GH}}(B_r^X(x),B_r^Y(y))\) to denote this pointed GH distance.

For a Riemannian manifold M, we denote by \({\text {Sec}}_M\) its sectional curvature and by \({{\,\mathrm{inj}\,}}_M\) its injectivity radius.

Small metric balls in a Riemannian manifold are GH close to Euclidean balls. More precisely, let M be a Riemannian n-manifold with \(|{\text {Sec}}_M|<K\) where K is a positive constant, and \(0<r\le \frac{1}{2}\min \{\frac{\pi }{\sqrt{K}},{{\,\mathrm{inj}\,}}_M\}\). Then, for every \(x\in M\), the metric balls \(B^M_r(x)\) in M and \(B_r^n\) in \({\mathbb {R}}^n\) satisfy

For a proof of this estimate, see Sect. 4.

If M is a submanifold of \({\mathbb {R}}^N\), one can write a similar estimate for the Hausdorff distance in \({\mathbb {R}}^N\). Namely, if the principal curvatures of M are bounded by \(\kappa >0\), then M deviates from its tangent space by at most \(\tfrac{1}{2} \kappa r^2\) within a ball of radius r. Thus, the Hausdorff distance between r-ball \(B^M_r(x)\) in M and the ball \(B_r^{T_xM}(x)=B_r^N(x)\cap T_xM\) of the affine tangent space of M at x satisfies

Note the different order of the above estimates for the intrinsic distances (1) and the extrinsic distances (2).
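The quadratic deviation \(\tfrac{1}{2}\kappa r^2\) from the tangent space can be checked numerically in the simplest case, a circle of radius \(1/\kappa \) in the plane. This is only an illustrative sketch: the values of `kappa` and `r` are arbitrary, and the distance along the curve is measured by arc length.

```python
import numpy as np

kappa = 2.0          # curvature of the circle; its radius is 1 / kappa
r = 0.3              # radius of the intrinsic ball around the base point
R = 1.0 / kappa

# Circle tangent to the x-axis at the origin, parametrized by arc length s.
s = np.linspace(0.0, r, 1001)
x = R * np.sin(s / R)
y = R * (1.0 - np.cos(s / R))    # distance from the tangent line {y = 0}

max_deviation = y.max()
bound = 0.5 * kappa * r ** 2
```

Here `max_deviation` stays below `bound`, as expected from the elementary inequality \(1-\cos t\le t^2/2\).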

With (1) in mind, we give the following definition.

Definition 1

Let X be a metric space, \(r>\delta >0, \, n \in {\mathbb {N}}\). We say that X is \(\delta \)-close to \({\mathbb {R}}^n\) at scale r if, for any \(x \in X\),

Condition (3) can be effectively verified, up to a constant factor, see Algorithm GHDist. The condition can be also formulated for finite subsets: If sequences \((y_j)_{j=1}^N\subset B_r^n\) and \((x_j)_{j=1}^N\subset B_r^X(x)\) are \(\frac{\delta }{4}\)-nets such that \(|d_{{\mathbb {R}}^n}(y_j,y_k)-d_X(x_j,x_k)|<\frac{\delta }{4}\) for all \(j,k=1,2,\dots ,N\), then (3) is valid by [26, Prop. 7.3.16 and Corollary 7.3.28]. On the other hand, if X is \(\frac{\delta }{16}\)-close to \({\mathbb {R}}^n\) at scale r, then such \(\frac{\delta }{4}\)-nets exist.
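The distortion condition on the two finite sequences can be tested directly. The sketch below is not Algorithm GHDist from the paper. It only evaluates \(\max _{j,k}|d_{{\mathbb {R}}^n}(y_j,y_k)-d_X(x_j,x_k)|\) for given data and compares it with \(\delta /4\). It does not verify that the sequences are \(\frac{\delta }{4}\)-nets, and the function names are hypothetical.

```python
import numpy as np

def max_distortion(Y, dX):
    """Largest value of | |y_j - y_k| - d_X(x_j, x_k) | over all pairs j, k.

    Y  : (N, n) array, the points y_j in R^n
    dX : (N, N) array, dX[j, k] = d_X(x_j, x_k)
    """
    Y = np.asarray(Y, dtype=float)
    dY = np.linalg.norm(Y[:, None, :] - Y[None, :, :], axis=-1)
    return np.abs(dY - np.asarray(dX, dtype=float)).max()

def passes_distortion_test(Y, dX, delta):
    """True if all pairwise distances agree up to an error below delta / 4."""
    return max_distortion(Y, dX) < delta / 4

# Toy data: three planar points and a slightly perturbed distance matrix.
Y = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
dY = np.linalg.norm(Y[:, None, :] - Y[None, :, :], axis=-1)
dX = dY + 0.01 * (1.0 - np.eye(3))   # every off-diagonal entry is off by 0.01
```

With these toy data the test passes for `delta = 0.1` and fails for `delta = 0.02`.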

In a Riemannian manifold, large-scale distances are determined by small-scale ones through the lengths of paths. However, Definition 1 does not impose any restrictions on distances larger than 2r in X. To rectify this, we need to make the metric ‘almost intrinsic’ as explained below.

Definition 2

Let \(X=(X,d)\) be a metric space and \(\delta >0\). A \(\delta \)-chain in X is a finite sequence \(x_1,x_2,\dots ,x_N\in X\) such that \(d(x_i,x_{i+1})<\delta \) for all \(1\le i\le N-1\). A sequence \(x_1,x_2,\dots ,x_N\in X\) is said to be \(\delta \)-straight if

for all \(1\le i<j<k\le N\). We say that X is \(\delta \)-intrinsic if for every pair of points \(x,y\in X\) there is a \(\delta \)-straight \(\delta \)-chain \(x_1,\dots ,x_N\) with \(x_1=x\) and \(x_N=y\).
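For a finite sequence, both properties in Definition 2 can be checked by brute force. In the sketch below the chain condition is taken verbatim from the definition. The displayed straightness inequality is not reproduced in this text, so the code assumes the natural reading \(d(x_i,x_j)+d(x_j,x_k)<d(x_i,x_k)+\delta \). This is an assumption that should be checked against the original formula, and the function names are hypothetical.

```python
from itertools import combinations

def is_delta_chain(d, seq, delta):
    """Consecutive points of seq are at distance < delta."""
    return all(d(seq[i], seq[i + 1]) < delta for i in range(len(seq) - 1))

def is_delta_straight(d, seq, delta):
    """ASSUMED inequality: d(xi,xj) + d(xj,xk) < d(xi,xk) + delta for i<j<k."""
    return all(
        d(seq[i], seq[j]) + d(seq[j], seq[k]) < d(seq[i], seq[k]) + delta
        for i, j, k in combinations(range(len(seq)), 3)
    )

def line_metric(a, b):
    """Toy metric: the usual distance on the real line."""
    return abs(a - b)

monotone = [0.0, 0.4, 0.8, 1.2]      # moves steadily to the right
backtrack = [0.0, 0.4, 0.0, 0.4]     # returns to its starting point
```

Both sequences are 0.5-chains, but under the assumed inequality only `monotone` is 0.5-straight: the backtracking sequence violates it for the triple of its first three points.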

Clearly, every Riemannian manifold (more generally, every length space) is \(\delta \)-intrinsic for any \(\delta >0\). Moreover, if X lies within GH distance \(\delta \) from a length space, then X is \(C\delta \)-intrinsic. In fact, this property characterizes \(\delta \)-intrinsic metrics, see Lemma 2.

In order to conveniently compare metric spaces at both small scale and large scale, we need the notion of quasi-isometry.

Definition 3

Let X, Y be metric spaces, \(\varepsilon >0\) and \(\lambda \ge 1\). A (not necessarily continuous) map \(f:X\rightarrow Y\) is said to be a \((\lambda ,\varepsilon )\)-quasi-isometry if the image f(X) is an \(\varepsilon \)-net in Y and

for all \(x,y\in X\), where \(d_X\) and \(d_Y\) denote the distances in X and Y, resp.

Unlike the use of quasi-isometries in, e.g., geometric group theory, in this paper we consider quasi-isometries with parameters \(\varepsilon \approx 0\) and \(\lambda \approx 1\). The quasi-isometry relation is almost symmetric: If there is a \((\lambda ,\varepsilon )\)-quasi-isometry from X to Y, then there exists a \((\lambda ,{3}\lambda \varepsilon )\)-quasi-isometry from Y to X. We say that metric spaces X and Y are \((\lambda ,\varepsilon )\)-quasi-isometric if there are \((\lambda ,\varepsilon )\)-quasi-isometries in both directions.

The existence of a \((\lambda ,\varepsilon )\)-quasi-isometry \(f:X\rightarrow Y\) implies that

See Sect. 2.1 for the proof.

Now we formulate our main result.

Theorem 1

For every \(n\in {\mathbb {N}}\), there exist \(\sigma _1=\sigma _1(n)>0\) and \(C_1=C_1(n),C_2=C_2(n)>0\) such that the following holds. Let \(r>0\), X be a metric space with \({{\,\mathrm{diam}\,}}(X)>r\), and

Suppose that X is \(\delta \)-intrinsic and \(\delta \)-close to \({\mathbb {R}}^n\) at scale r, see Definitions 1 and 2. Then, there exists a complete n-dimensional Riemannian manifold M such that

1. X and M are \((1+C_1\delta r^{-1},C_1\delta )\)-quasi-isometric. Moreover, when the diameter of X is finite, we have

$$\begin{aligned} d_{\mathrm{GH}}(X,M) \le {2C_1}\delta r^{-1}{{\,\mathrm{diam}\,}}(X). \end{aligned} \tag{8}$$

2. The sectional curvature \({\text {Sec}}_M\) of M satisfies \(|{\text {Sec}}_M|\le C_2\delta r^{-3}\).

3. The injectivity radius of M is bounded below by r / 2.

By following the steps of the proof, one can obtain explicit formulas for the values of \(\sigma _1(n)\), \(C_1(n)\), and \(C_2(n).\)

The estimate (8) follows from the existence of a \((1+C_1\delta r^{-1},C_1\delta )\)-quasi-isometry from X to M due to (6) and the fact that \({{\,\mathrm{diam}\,}}(X)>r\). The proof of Theorem 1 is given in Sect. 5.

The quasi-isometry parameters and sectional curvature bound in Theorem 1 are optimal up to constant factors depending only on n, see Remark 9.

Remark 1

The assumption that X is \(\delta \)-intrinsic in Theorem 1 is not crucial. Without this assumption, the following more technical variant of the theorem holds:

If a metric space X is \(\delta \)-close to \({\mathbb {R}}^n\) at scale r, where \(\delta /r\) is bounded above by a constant depending on n, then there exists a complete (possibly not connected) Riemannian n-manifold M which satisfies properties (2) and (3) from Theorem 1 and approximates X in the following sense: There is a map \(f:X\rightarrow M\) such that

for all \(x,y\in X\) such that \(d_X(x,y)<r\) or \(d_M(f(x),f(y))<r\).

This variant follows from Theorem 1 and the fact that one can modify ‘large’ distances in X so that the resulting metric is \(C\delta \)-intrinsic and coincides with d within balls of radius r. The new distances are measured along ‘discrete shortest paths’ in X, see (32) and Lemma 3 in Sect. 2.2.

This procedure may split X into several ‘components’ with modified distances between them being infinite. In the original metric space, these components are subsets separated by distances greater than r. They correspond to connected components of the approximating manifold M.

For \(\delta \)-intrinsic metrics, an approximation f as above is a \((1+C\delta r^{-1},C\delta )\)-quasi-isometry and vice versa. This follows from Lemmas 1 and 4, see Sect. 2.
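For a finite metric space, one standard way to realize such ‘discrete shortest path’ distances is a shortest-path computation on the graph whose edges join points at distance less than r. The sketch below uses the Floyd–Warshall scheme. It is an illustration under that assumption: formula (32) is not part of this text, so the paper's precise definition may differ in details, and `path_metric` is a hypothetical name.

```python
import numpy as np

def path_metric(dist, r):
    """Shortest-path distances using only the original distances below r.

    Pairs of points that cannot be joined by a chain of such short steps
    get distance inf, so they lie in different 'components'.
    """
    dist = np.asarray(dist, dtype=float)
    D = np.where(dist < r, dist, np.inf)   # keep short distances only
    np.fill_diagonal(D, 0.0)
    for k in range(len(D)):                # Floyd-Warshall relaxation
        D = np.minimum(D, D[:, [k]] + D[[k], :])
    return D

# Toy example: two clusters on the real line, far apart from each other.
pts = np.array([0.0, 1.0, 2.0, 10.0, 11.0])
dist = np.abs(pts[:, None] - pts[None, :])
D = path_metric(dist, r=1.5)
```

In the toy example, the points 0, 1, 2 form one component and the points 10, 11 another: `D[0, 2]` is 2, while `D[0, 3]` is infinite.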

Furthermore, Theorem 1 gives a characterization result for metric spaces that GH approximate smooth manifolds with certain geometric bounds. The precise formulation is the following.

Let \({\mathcal {M}}(n,K,i_0,D)\) denote the class of n-dimensional compact Riemannian manifolds M satisfying \(|{\text {Sec}}_M|\le K\), \({{\,\mathrm{inj}\,}}_M\ge i_0\), and \({{\,\mathrm{diam}\,}}(M)\le D\). Denote by \(\mathcal M_\varepsilon (n,K,i_0,D)\) the class of metric spaces X such that \(d_{\mathrm{GH}}(X,M)<\varepsilon \) for some \(M\in {\mathcal {M}}(n,K,i_0,D)\). Also, let \({\mathcal {X}}(n,\delta ,r,D)\) denote the class of metric spaces X that are \(\delta \)-intrinsic and \(\delta \)-close to \({\mathbb {R}}^n\) at scale r, and satisfy \({{\,\mathrm{diam}\,}}(X)\le D\). Theorem 1 has the following corollary that concerns neighborhoods of smooth manifolds and the class of metric spaces that satisfy a weak \(\delta \)-flatness condition at the scale of the injectivity radius and a strong \(\delta \)-flatness condition at a small scale r.

Corollary 1

For every \(n\in {\mathbb {N}}\), there exist \({\sigma _1}={\sigma _1}(n)>0\) and \(C_3=C_3(n),C_4=C_4(n)>0\) such that the following holds. Let \(K,i_0,D>0\) and assume that \(i_0<\sqrt{{\sigma _1}/K}\). Let \(\delta _0=Ki_0^3\), \(0<\delta <\delta _0\), and \(r=(\delta /K)^{\frac{1}{3}}\). Let \({\mathcal {X}}\) be the class of metric spaces defined by

Then,

where \(\varepsilon _1=\delta /6\) and \(\varepsilon _2=C_4DK^{1/3}\delta ^{2/3}\).

For a metric space X, the first inclusion in (9) means that the condition \(X\in {\mathcal {X}}\) is necessary for X to approximate a manifold from \(\mathcal M(n,K/2,2i_0,D-\delta )\) with accuracy \(\varepsilon _1\). Likewise, the second inclusion in (9) says that \(X\in {\mathcal {X}}\) is a sufficient condition for X to approximate a manifold from \({\mathcal {M}}(n,C_3K,i_0/{2},D)\) with accuracy \(\varepsilon _2\).

The optimal values of \(\varepsilon _1\) and \(\varepsilon _2\) in Corollary 1 remain an open question. The proof of Corollary 1 is given in Sect. 6. It is based on Theorem 1 and Proposition 2.
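As a rough consistency check of the scalings (a sketch, not a substitute for the proof in Sect. 6), one can substitute \(r=(\delta /K)^{1/3}\) into the conclusions of Theorem 1:

$$\begin{aligned} \delta r^{-3} = K, \qquad \delta r^{-1} = K^{1/3}\delta ^{2/3}. \end{aligned}$$

Hence the curvature bound \(|{\text {Sec}}_M|\le C_2\delta r^{-3}\) becomes \(|{\text {Sec}}_M|\le C_2K\), and for \({{\,\mathrm{diam}\,}}(X)\le D\) the estimate (8) becomes

$$\begin{aligned} d_{\mathrm{GH}}(X,M) \le 2C_1\delta r^{-1}{{\,\mathrm{diam}\,}}(X) \le 2C_1DK^{1/3}\delta ^{2/3}. \end{aligned}$$

These expressions have the same form as the curvature bound \(C_3K\) and the accuracy \(\varepsilon _2=C_4DK^{1/3}\delta ^{2/3}\) in Corollary 1. The injectivity radius bound r / 2 of Theorem 1, with \(r<i_0\) because \(\delta <\delta _0=Ki_0^3\), is weaker than the bound \(i_0/2\) appearing in the second inclusion, which is presumably where Proposition 2 enters.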

Another application of Theorem 1 is the following characterization of Alexandrov spaces with two-sided curvature bounds.

Corollary 2

For a complete geodesic metric space X and \(n\in {\mathbb {N}}\), the following two conditions are equivalent:

1. There exists \(K>0\) such that for all \(x\in X\) and \(r>0\),

$$\begin{aligned} d_{\mathrm{GH}}(B^X_r(x),B^n_r) \le Kr^3 . \end{aligned} \tag{10}$$

2. X is an n-dimensional manifold, its metric has two-sided bounded curvature in the sense of Alexandrov, and its injectivity radius is bounded away from 0.

Furthermore, if (1) holds, then X has Alexandrov curvature bounds between \(-C_5K\) and \(C_5K\) and injectivity radius at least \(1/(C_6\sqrt{K})\), where \(C_5\) and \(C_6\) are positive constants depending only on n.

The proof of Corollary 2 is given in Sect. 6. We refer to [17, 26, 27] or [8] for the definition and basic properties of Alexandrov curvature bounds. Here we only mention the fact that finite-dimensional boundaryless Alexandrov spaces with two-sided curvature bounds are Riemannian manifolds with \(C^{1,\alpha }\) metrics ([71], see also [8, Theorem 14.1]).

1.1.1 On the Proof of Theorem 1

In the proof of Theorem 1, M is constructed as a submanifold of a separable Hilbert space E, which is either \({\mathbb {R}}^N\) with a large N (in case when X is bounded) or \(\ell ^2\) endowed with the standard \(\Vert \,\cdot \,\Vert _{\ell ^2}\) norm. However, the Riemannian metric on M is different from the one inherited from E.

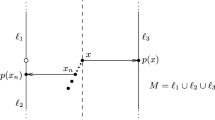

We note that an algorithm based on Theorem 1, which also summarizes some of the main objects used in the proof, is given in Sect. 7, see also Fig. 1 in Sect. 5.

A schematic visualization of the interpolation algorithm ‘ManifoldConstruction’ based on Theorem 1, see Sect. 7. Assume that a finite metric space \((X,d_X)\) is given. First we construct a maximal (r / 100)-separated subset \(X_0=\{q_i,\ i=1,2,\dots ,N\}\subset X\) and r-neighborhoods \(B^X_r(q_i)\subset X\) of points \(q_i\in X_0\). Then, we construct balls \(D_i\subset {\mathbb {R}}^n\) approximating the r-balls \(B^X_r(q_i)\) and local embeddings \(f_i:B^X_r(q_i)\rightarrow D_i\). The balls \(D_i\) are considered as local coordinate charts. We embed these local charts into a Euclidean space \(E={\mathbb {R}}^m\) using Whitney-type embeddings \({F^{(i)}=F|_{D_i^{r/10}}:D_i^{r/10}\rightarrow \varSigma _i}\). Submanifolds \(\varSigma _i\subset E\) are denoted by blue curves. Using the algorithm SubmanifoldInterpolation, the union \(\bigcup _i\varSigma _i\) is interpolated to a red submanifold \(M\subset E\). When \(P_M\) is the normal projector onto M, denoted by the red arrows, we can determine a metric tensor \(g_i\) on \(P_M(\varSigma _i)\) by pushing forward the Euclidean metric from \(D_i\) to \(P_M(\varSigma _i)\) by the map \(P_M\circ F|_{D_i}\). The metric tensor g on M is obtained by computing a smooth weighted average of tensors \(g_i\).
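The first step described in the caption, choosing a maximal separated subset of a finite metric space, can be done greedily. The sketch below is one standard way to do this and is not taken from the paper's Sect. 7. The function name is hypothetical, and ‘s-separated’ is read as ‘pairwise distances at least s’, which is an assumption about the convention.

```python
import numpy as np

def maximal_separated_subset(dist, s):
    """Greedily pick indices whose pairwise distances are all >= s.

    The result is maximal: every point that was not picked lies at
    distance < s from some picked point.
    """
    chosen = []
    for i in range(len(dist)):
        if all(dist[i, j] >= s for j in chosen):
            chosen.append(i)
    return chosen

# Toy example: the points 0, 1, ..., 6 on the real line, separation 2.5.
pts = np.arange(7, dtype=float)
dist = np.abs(pts[:, None] - pts[None, :])
X0 = maximal_separated_subset(dist, 2.5)
```

In the setting of the caption one would call the function with `s = r / 100`. The greedy pass makes one sweep over the points and compares each point with the points already chosen.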

Here is the idea of the proof of Theorem 1. Since the r-balls in X are GH close to the Euclidean ball \(B_r^n\), they admit nice maps (\(2\delta \)-isometries) to \(B_r^n\). These maps can be used as a kind of coordinate charts for X, allowing us to argue about X as if it were a manifold. In particular, we can mimic the proof of the Whitney embedding theorem (on the classical Whitney embedding, see [98, 99]). If X were a manifold, this would give us a diffeomorphic submanifold of a higher-dimensional Euclidean space E. In our case, we get a set \(\varSigma \subset E\) which is a Hausdorff approximation of a submanifold \(M\subset E\). In order to prove this, we use Theorem 2 (see Sect. 1.3) which characterizes sets approximable by (nice) submanifolds. We emphasize that the resulting submanifold \(M\subset E\) is the image of a Whitney embedding but not of a Nash isometric embedding [68, 69]. As the last step of the construction (see Sect. 5.4), we construct a Riemannian metric g on M so that a natural map from X to (M, g) is almost isometric at scale r. The construction is explicit and can be performed in an algorithmic manner, see Sect. 7. Then, with the assumption that X is \(\delta \)-intrinsic, it is not hard to show that X and (M, g) are quasi-isometric with small quasi-isometry constants (Table 1).

Convention Here and later, we fix the notation n for the dimension of a (sub)manifold in question. Throughout the paper, we denote by \(c,\,C\), \(C_1,C_2,\) etc., various constants depending only on n and, when dealing with derivative estimates, on the order of the derivative involved. The same letter C can be used to denote different constants, even within one formula. The numbered constants are used for two reasons: first, to make it possible to compute the values of these constants when needed, and second, to make the presentation easier, so that the reader can see what earlier estimates are involved in each formula. However, to keep the presentation simpler, some constants that are not needed in later estimates or are not the main focus of this paper are not numbered. To indicate dependence on other parameters, when we introduce a new constant, we use notation like C(M, k) or \(C_j{(M,k)}\) for numbers depending on a manifold M and a number k. The locations where the constants first appear are listed in Table 2. Constants that do not depend on any parameters, including the dimension n of the manifold, are called universal constants. Most of the constants \(C_j\) depend on the intrinsic dimension n, and we do not usually indicate it (except in the introduction where main results are stated), that is, we have \(C_j=C_j(n)\), \(C(M,k)= C(M,k,n)\), etc. We note that several constants \(C_j\) depend exponentially on n. One of the reasons for this is that in a manifold having a negative sectional curvature, e.g., in the hyperbolic space, a ball of radius r contains \(e^{cr/\delta }\) points that are \(\delta \)-separated. We emphasize that n is the intrinsic dimension of the manifold, which is relatively small in several applications, and the constants do not depend on the dimension of an ambient space in which the manifold may be embedded.

1.2 Manifold Reconstruction and Inverse Problems

Theorem 1 and Corollary 1 give quantitative estimates on how one can use discrete metric spaces as models of Riemannian manifolds, for example for the purposes of numerical analysis. With this approach, a data set representing a Riemannian manifold is just a matrix of distances between points of some \(\delta \)-net. Naturally, the distances can be measured with some error. In fact, only ‘small-scale’ distances need to be known, see Corollary 3.

The statement of Theorem 1 provides a verifiable criterion to tell whether a given data set approximates any Riemannian manifold (with certain bounds for curvature and injectivity radius). See Sect. 2.4 for an explicit algorithm.

The proof of Theorem 1 is constructive. It provides an algorithm, although a rather complicated one, to construct a Riemannian manifold approximated by a given discrete metric space X. See Sect. 7 for an outline of the algorithm.

Next we formulate results that describe properties of the manifold M constructed from data X that approximates some smooth manifold \({\widetilde{M}}\) and discuss how this result is used in inverse problems.

1.2.1 Reconstructions with Data that Approximate a Smooth Manifold

When dealing with inverse problems, it is assumed that the data set X comes from some unknown Riemannian manifold \({\widetilde{M}}\), and moreover, some a priori bounds on the geometry of this manifold are given. Applying Theorem 1 to this data set yields another manifold M which is \((1+C\delta r^{-1},C\delta )\)-quasi-isometric to \({\widetilde{M}}\). One naturally asks what information about the original manifold \({\widetilde{M}}\) can be recovered, in particular, if the topological and differentiable type of the manifold \({\widetilde{M}}\) can be determined using the set X. An answer is given by the following proposition.

Proposition 1

(cf. Theorem 8.19 in [51]) There exist \({\kappa _0}>0\) and \(C_7>0\) such that the following holds. Let M and \({\widetilde{M}}\) be complete Riemannian n-manifolds with \(|{\text {Sec}}_M|\le K\) and \(|{\text {Sec}}_{\widetilde{M}}|\le K\), where \(K>0\).

Let \(0<{\kappa }<{\kappa _0}\) and assume that M and \({\widetilde{M}}\) are \((1+{\kappa },{\kappa } r)\)-quasi-isometric, where \( r < \min \{({\kappa }/K)^{1/2}, {{\,\mathrm{inj}\,}}_M, {{\,\mathrm{inj}\,}}_{{\widetilde{M}}} \} . \)

Then, M and \({\widetilde{M}}\) are diffeomorphic. Moreover, there exists a bi-Lipschitz diffeomorphism \(\varPsi \) between M and \({\widetilde{M}}\) with bi-Lipschitz constant bounded by \(1+C_7{\kappa }\),

We do not prove Proposition 1 because it is essentially the same as Theorem 8.19 in [51], except that the approximation is quasi-isometric rather than GH. To prove Proposition 1, one can apply the same arguments as in [51, 8.19] using coordinate neighborhoods of size r. The estimates are not given explicitly in [51], but they follow from the argument. These results can be regarded as quantitative versions of Cheeger’s Finiteness Theorem [30], see [79, Ch. 10] and [78] for different proofs.

Remark 2

Using results of [2], one can show that for any \(\alpha <1\), M and \({\widetilde{M}}\) in Proposition 1 are close to each other in \(C^{1,\alpha }\) topology. However, we do not know explicit estimates in this case.

1.2.2 An Improved Estimate for the Injectivity Radius

The injectivity radius estimate provided by Theorem 1 is not good enough in the context of manifold reconstruction. Indeed, in order to obtain a good approximation one has to begin with a small r. (Recall that for Theorem 1 to work, \(\delta \) should be of order \(Kr^3\) where K is the curvature bound.) However, Theorem 1 guarantees only a lower bound of order r for \({{\,\mathrm{inj}\,}}_M\), so a priori one could end up with an approximating manifold M with a very small injectivity radius. In order to rectify this, we need the following result.

Proposition 2

There exists a universal constant \(C_8>0\) such that the following holds. Let \(K>0\) and let \(M, {\widetilde{M}}\) be complete n-dimensional Riemannian manifolds with \(|{\text {Sec}}_M|\le K\) and \(|{\text {Sec}}_{{\widetilde{M}}}|\le K\).

1. Let \(x\in M\), \({\widetilde{x}}\in {\widetilde{M}}\), and \( 0 < \rho \le \min \{{{\,\mathrm{inj}\,}}_{{\widetilde{M}}}({\widetilde{x}}) , \tfrac{\pi }{\sqrt{K}} \} . \) Then,

2. Suppose that M and \({\widetilde{M}}\) are \((1+\varepsilon ,\delta )\)-quasi-isometric where \(\varepsilon ,\delta \ge 0\). Then,

This result is important for the inverse problems of an approximate recovery of an unknown manifold \({\widetilde{M}}\). It is often the case that we a priori know bounds for the sectional curvature, injectivity radius, etc., of \({\widetilde{M}}\). On the other hand, any other manifold M described by Theorem 1 is \((1+C\delta r^{-1},C\delta )\)-quasi-isometric to \({\widetilde{M}}\). Thus, the second part of Proposition 2 gives a better estimate for \({{\,\mathrm{inj}\,}}_M\) than Theorem 1.

The proof of Proposition 2 is given in Sect. 4.

1.2.3 An Approximation Result with Only One Parameter

We summarize the manifold reconstruction features of Theorem 1 in the following corollary where all approximations, errors in data, as well as the errors in the reconstruction are given in terms of a single parameter \({\widehat{\delta }}\). Essentially, the corollary states that a manifold N can be approximately reconstructed from a \({\widehat{\delta }}\)-net X of N and the information about local distances between points of X containing small errors. Results of this type are useful, e.g., in the inverse problems discussed below.

Corollary 3

Let \(K>0\), \(n\in {\mathbb {Z}}_+\) and (N, g) be a compact n-dimensional manifold with sectional curvature bounded by \(|{\text {Sec}}_N|\le K\). There exist \(\delta _0=\delta _0(n,K)\) and \(C_9=C_9(n),C_{10}=C_{10}(n)>0\) such that if \(0<\widehat{\delta }<\delta _0\), then the following holds:

Let \(r=({\widehat{\delta }}/K)^{1/3}\) and suppose that the injectivity radius \({{\,\mathrm{inj}\,}}_N\) of N satisfies \({{\,\mathrm{inj}\,}}_N>2r\). Also, let \(X=\{x_j:\ j=1,2,\dots ,J\}\subset N\) be a \({\widehat{\delta }}\)-net of N and \(\widetilde{d} :X\times X\rightarrow {\mathbb {R}}_+\cup \{0\}\) be an approximate local distance function that satisfies for all \(x,y\in X\)

and

Given the set X and the function \({\widetilde{d}}\), one can effectively construct a compact, smooth n-dimensional Riemannian manifold \((M,g_M)\), with distance function \(d_M\). This manifold approximates the manifold (N, g) in the following way:

1. There is a diffeomorphism \(F:M\rightarrow N\) satisfying

$$\begin{aligned} \frac{1}{L}\le \frac{d_N(F(x),F(y))}{d_M(x,y)}\le L,\quad \hbox {for all }x,y\in M, \end{aligned} \tag{15}$$

where \(L=1+C_{10}K^{1/3}{\widehat{\delta }}\,{}^{2/3}\).

2. The sectional curvature \({\text {Sec}}_M\) of M satisfies \(|{\text {Sec}}_M|\le C_9K\).

3. The injectivity radius \({{\,\mathrm{inj}\,}}_M\) of M satisfies

$$\begin{aligned} {{\,\mathrm{inj}\,}}_M\ge \min \{(C_9K)^{-1/2}, (1-C_{10}K^{1/3}\widehat{\delta }\,{}^{2/3}){{\,\mathrm{inj}\,}}_N\} . \end{aligned}$$

The proof of Corollary 3 is given at the end of Sect. 6.

We call the function \({\widetilde{d}}:X\times X\rightarrow {\mathbb {R}}_+\cup \{0\}\), defined on the \({\widehat{\delta }}\)-net X and satisfying the assumptions of Corollary 3, an approximate local distance function with accuracy \({\widehat{\delta }}\). Many inverse problems can be reduced to a setting where one can determine the distance function \(d_N(x_j,x_k)\), with measurement errors \(\epsilon _{j,k}\), in a discrete set \(\{x_j\}_{j\in J}\subset N\). Thus, if the set \(\{x_j\}_{j\in J}\) is a \({\widehat{\delta }}\)-net in N, the errors \(\epsilon _{j,k}\) satisfy conditions (14), and \({\widehat{\delta }}\) is small enough, then the diffeomorphism type of the manifold can be uniquely determined by Corollary 3. Moreover, the bi-Lipschitz condition (15) means that the distance function can also be determined with small errors. We emphasize that in (14) one needs to approximately know only the distances smaller than \(r=({\widehat{\delta }}/K)^{1/3}\). The larger distances can be computed as in (32).
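To get a feeling for the scales in Corollary 3, one can compute the local scale \(r=({\widehat{\delta }}/K)^{1/3}\) and the dimensionless quantity \(K^{1/3}{\widehat{\delta }}\,{}^{2/3}\) that controls the bi-Lipschitz constant L. The sketch below is illustrative only. The constants \(C_9\) and \(C_{10}\) are not given explicitly in the text, so L itself is not computed, and the function name and sample values are hypothetical.

```python
import math

def corollary3_scales(K, delta_hat):
    """Return (r, eta) with r = (delta_hat / K)^(1/3) and
    eta = K^(1/3) * delta_hat^(2/3), so that L = 1 + C_10 * eta."""
    r = (delta_hat / K) ** (1.0 / 3.0)
    eta = K ** (1.0 / 3.0) * delta_hat ** (2.0 / 3.0)
    return r, eta

# Sample values: curvature bound K = 1 and data accuracy delta_hat = 1e-6.
K, delta_hat = 1.0, 1e-6
r, eta = corollary3_scales(K, delta_hat)
```

For these sample values r is about 0.01 and the quantity `eta` is about 0.0001. Note that `eta` equals both \({\widehat{\delta }}/r\) and \(Kr^2\), so the relative error in (15) is of the order of the data accuracy divided by the local scale. The corollary also requires \({{\,\mathrm{inj}\,}}_N>2r\), which limits how large \({\widehat{\delta }}\) may be.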

1.2.4 Manifold Reconstructions in Imaging and Inverse Problems

Recently, geometric models have become a focus of research in inverse problems. As an example of such problems, one may consider an object with a variable speed of wave propagation. The travel time of a wave between two points defines a natural non-Euclidean distance between the points. This is called the travel time metric, and it corresponds to the distance function of a Riemannian metric. In many topical inverse problems, the task is to determine the Riemannian metric inside an object from external measurements, see, e.g., [61, 62, 75, 76, 89,90,91, 93]. These problems are idealizations of practical imaging tasks encountered in medical imaging or in Earth sciences. Also, the relation of discrete and continuous models for these problems is an active topic of research, see, e.g., [9, 13, 14, 57]. In these results, discrete models have been reconstructed from various types of measurement data. However, a rigorously analyzed technique to construct a smooth manifold from these discrete models to complete the construction has been missing until now.

In practice, the measurement data always contain measurement errors and the amount of these data is limited. This is why the problem of the approximate reconstruction of a Riemannian manifold and the metric on it from discrete or noisy data is essential for several geometric inverse problems. Earlier, various regularization techniques have been developed to solve noisy inverse problems in the PDE setting, see, e.g., [36, 65], but most of such methods depend on the coordinates used and, therefore, are not invariant. One of the purposes of this paper is to provide invariant tools for solving practical imaging problems.

An example of problems with limited data is an inverse problem for the heat kernel, where the information about the unknown manifold (M, g) is given in the form of discrete samples \((h_M(x_j, y_k, t_i))_{j,k\in J,i\in I}\) of the heat kernel \(h_M(x, y, t)\), satisfying

$$\begin{aligned} (\partial _t-\varDelta _g)h_M(x,y,t)=0\quad \hbox {for }t>0,\qquad h_M(x,y,0)=\delta _y(x), \end{aligned}$$

where the Laplace operator \(\varDelta _g\) operates in the x variable, see, e.g., [56]. Here \(y_j=x_j\), where \(\{x_j:\ j\in J\}\) is a finite \(\varepsilon \)-net in an open set \(\varOmega \subset M\), while \(\{t_i:\ i\in I\}\) is an \(\varepsilon \)-net of the time interval \((t_0, t_1)\). It is also natural to assume that one is given measurements \(h_M^{(m)}(x_j, y_k, t_i)\) of the heat kernel with errors satisfying \(|h_M^{(m)}(x_j, y_k, t_i)-h_M(x_j, y_k, t_i)|<\varepsilon \). Several inverse problems for the wave equation lead to a similar problem for the wave kernel \(G_M(x,y,t)\) satisfying

see, e.g., [53, 56, 72]. In the case of complete data (corresponding to the case when \(\varepsilon \) vanishes), the inverse problem for heat kernel and wave kernel is equivalent to the inverse interior spectral problem, see [55]. In this problem, one considers the eigenvalues \(\lambda _k\) of \(-\varDelta _g\), counted by their multiplicity, and the corresponding \(L^2(M)\)-orthonormal eigenfunctions, \(\varphi _k(x)\), that satisfy

$$\begin{aligned} -\varDelta _g\varphi _k(x)=\lambda _k\varphi _k(x),\quad x\in M. \end{aligned}$$

In the inverse interior spectral problem, one assumes that we are given approximations \({\widetilde{\lambda }}_k\), \(k=0,1, 2,\dots , N-1\), to the N smallest eigenvalues of \(-\varDelta _g\), and values \(\varphi _k^{\prime }(x_j)\), at points \(x_j\), of approximations to the eigenfunctions \(\varphi _k\). Here the points \(x_j\) form an \(\varepsilon \)-net \(\{x_j:\ j\in J\}\subset \varOmega \), where \(\varOmega \subset M\) is open, and \(|{\widetilde{\lambda }}_k -\lambda _k| \le \varepsilon \) and \(|\varphi _k^{\prime }(x_j)-\varphi _k(x_j)|<\varepsilon \).

It is shown in [15] that these data determine a metric space \((X, d_X)\) which is a \(\delta \)-approximation (in the Gromov–Hausdorff distance) to the unknown manifold M, where \(\delta = \delta (\varepsilon ,N; \varOmega )\) tends to 0 as \(\varepsilon \rightarrow 0\) and \(N\rightarrow \infty \). It may be noted that the earlier works [3, 57] dealt with similar approximations (under other geometric conditions) for the case of manifolds with boundary and the Laplace operators with some classical boundary conditions.

Returning to the case when M has no boundary, Theorem 1 completes the solution of the above inverse problems by constructing a smooth manifold that approximates M.

1.3 Interpolation of Manifolds in Hilbert Spaces

As already mentioned, in the proof of Theorem 1 we need to approximate a set in a Hilbert space by an n-dimensional submanifold (with bounded geometry). At small scale, the set in question should be close to affine subspaces in the following sense.

Definition 4

Let E be a Hilbert space, \(X\subset E\), \(n\in {\mathbb {N}}\) and \(r,\delta >0\). We say that X is \(\delta \)-close to n-flats at scale r if for any \(x\in X\), there exists an n-dimensional affine space \(A_x\subset E\) through x such that

$$\begin{aligned} d_H(X\cap B_r(x),A_x\cap B_r(x))\le \delta . \end{aligned}$$

To formulate our result for the sets in Hilbert spaces, we recall some definitions. By a closed submanifold of a Hilbert space E, we mean a finite-dimensional smooth submanifold which is a closed subset of E.

Let \(M\subset E\) be a closed submanifold. The reach of M, denoted by \({{\,\mathrm{Reach}\,}}(M)\), is the supremum of all \(r>0\) such that for every \(x\in {{\mathcal {U}}}_r(M)\) there exists a unique nearest point in M. We denote this nearest point by \(P_M(x)\) and refer to the map \(P_M:{{\mathcal {U}}}_r(M)\rightarrow M\) as the normal projection.

For \(x\in M\), we denote by \(T_xM\) the tangent space of M at x. The tangent space is regarded as an affine subspace of E containing x. We denote by \(\mathbf {T}_xM\) the linear subspace of E parallel to \(T_xM\).

Theorem 2

For every \(n,k\in {\mathbb {N}}\), there exist positive constants \({\sigma _2}\), \(C_{11}\), \(C_{12}\) depending only on n and a positive constant \(C_{13}({n,k})>0\) such that the following holds. Let E be a separable Hilbert space, \(X\subset E\), \(r>0\) and

$$\begin{aligned} 0<\delta <{\sigma _2}r. \end{aligned}$$

Suppose that X is \(\delta \)-close to n-flats at scale r (see Definition 4). Then, there exists a closed n-dimensional smooth submanifold \(M\subset E\) such that:

1. \(d_H(X,M)\le 5\delta \).

2. The second fundamental form of M at every point is bounded by \(C_{11}\delta r^{-2}\).

3. \({{\,\mathrm{Reach}\,}}(M)\ge r/3\).

4. The normal projection \(P_M:{{\mathcal {U}}}_{r/3}(M)\rightarrow M\) is smooth and satisfies for all \(x\in {{\mathcal {U}}}_{r/3}(M)\)

$$\begin{aligned} \Vert d^k_x P_M\Vert < C_{13}(n,k) \delta r^{-k}, \qquad k\ge 2 , \end{aligned}$$ (18)

and

$$\begin{aligned} \Vert d_x P_M - P_{\mathbf {T}_yM}\Vert < C_{13}(n,1) \delta r^{-1} \end{aligned}$$ (19)

where \(y=P_M(x)\) and \(P_{\mathbf {T}_yM}\) is the orthogonal projector to \(\mathbf {T}_yM\).

5. The tangent spaces of M approximate subspaces \(A_x\) from Definition 4 in the following sense. If \(x\in X\) and \(y=P_M(x)\), then the angle between \(A_x\) and the tangent space \(T_yM\) satisfies

$$\begin{aligned} \angle (A_x,T_yM) < C_{12}\delta r^{-1} . \end{aligned}$$ (20)

Notations In (18), (19) and throughout the paper, \(d_x\) and \(d^k_x\) denote the first and kth differentials of a smooth map at a point x. The norm of the kth differential is derived from the inner product norm on E in the standard way. As usual, we define the \(C^k\)-norm of a map f defined on an open set \(U\subset E\), by

where \(d_x^0f=f(x)\).

The angle \(\angle (A_1,A_2)\) between n-dimensional linear subspaces \(A_1,A_2\subset E\) is defined by

where \(\angle (u_1,u_2) = \arccos \frac{\langle u_1,u_2\rangle }{|u_1||u_2|}\) and \(\langle \,\cdot ,\cdot \,\rangle \) is the inner product in E. The angle between affine subspaces is defined as the angle between their parallel translates containing the origin. Note that if \(A_1\) and \(A_2\) are linear subspaces and \(P_{A_1}\) and \(P_{A_2}\) are orthogonal projectors onto \(A_1\) and \(A_2\), respectively, then

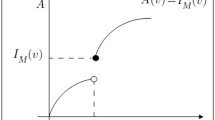
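The relation between projectors and angles can be checked numerically. The following is a minimal sketch, assuming that \(\angle (A_1,A_2)\) is the largest principal angle between the subspaces (the displayed definition is not reproduced here) and using the standard fact that \(\Vert P_{A_1}-P_{A_2}\Vert =\sin \angle (A_1,A_2)\) for subspaces of equal dimension. Whether this is the exact form of the relation displayed above is an assumption, and the function names are illustrative only.

```python
import numpy as np

def orthonormal_basis(vectors):
    """Orthonormal basis (as columns) of the span of the given columns."""
    q, _ = np.linalg.qr(vectors)
    return q

def largest_principal_angle(basis_1, basis_2):
    """Largest principal angle between subspaces given by orthonormal bases."""
    # The singular values of Q1^T Q2 are the cosines of the principal angles.
    cosines = np.linalg.svd(basis_1.T @ basis_2, compute_uv=False)
    return float(np.arccos(np.clip(cosines.min(), -1.0, 1.0)))

def projector(basis):
    """Orthogonal projector onto the span of an orthonormal basis."""
    return basis @ basis.T

theta = 0.3
# Two 2-planes in R^3 sharing the direction e_1 and tilted by theta.
q1 = orthonormal_basis(np.array([[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]))
q2 = orthonormal_basis(
    np.array([[1.0, 0.0], [0.0, np.cos(theta)], [0.0, np.sin(theta)]])
)

angle = largest_principal_angle(q1, q2)
gap = np.linalg.norm(projector(q1) - projector(q2), ord=2)  # operator norm
```

In this example both `angle` and `np.arcsin(gap)` recover the tilt `theta`, up to rounding.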

The proof of Theorem 2 is given in Sect. 3. An algorithm based on Theorem 2, which also summarizes the main objects used in its proof, is given in Sect. 7, see also Fig. 2.

The above question of submanifold interpolation has recently attracted much interest in geometry and machine learning. For example, an interpolation problem similar to Theorem 2 has been considered in the recent paper of Kleiner and Lott concerning Perelman’s proof of the geometrization conjecture, see [60, Lemma B.2]. Compared to these results, our method provides explicit geometric bounds for M in claims (2)–(4) of Theorem 2, whereas the bounds in [60, Lemma B.2] arise from a contradiction argument and have the form of an unknown \(\varepsilon =\varepsilon (\delta ,\dots )\) which just goes to 0 along with \(\delta \). In particular, Theorem 2 provides explicit curvature bounds that are linear in \(\delta \). This is essential in the proof of Theorem 1 as well as in applications.

In Remark 7, we show that the bounds in claims (2) and (3) in Theorem 2 are optimal, up to constant factors depending on n. Thus, Theorem 2 gives necessary and sufficient conditions (up to multiplication of the bounds by a constant factor) for a set \(X\subset E\) to approximate a smooth submanifold with given geometric bounds.

Fig. 2 A schematic visualization of the interpolation algorithm ‘SubmanifoldInterpolation’ based on Theorem 2. In the figure, on the top, the black data points \(X\subset E={\mathbb {R}}^m\) have a \(\delta \)-neighborhood \(U={\mathcal U}_\delta (X)\). The boundary of U is marked in blue. In the figures below, we determine, near points \(x_i\in X\), \(i=1,2,3\), the approximating n-dimensional planes \(A_i\), marked by red lines. Then, we map the set U by iteratively applying to it the functions \(\varphi _i:E\rightarrow E\), defined in (71). The maps \(\varphi _i\) are convex combinations of the projector \(P_{A_i}\), onto \(A_i\), and the identity map. The three figures on the bottom show the sets \(\varphi _1(U)\), \(\varphi _2(\varphi _1(U))\), and \(\varphi _3(\varphi _2(\varphi _1(U)))\), respectively. In the limit, these sets converge to the n-dimensional submanifold \(M\subset E\) (Color figure online)

In order to approximate a submanifold M as in Theorem 2, the set X must contain as many points as a \(C\delta \)-net in M. This is an unreasonably large number of points when \(\delta \) is small. The following corollary allows one to reconstruct M from a smaller approximating set. It involves two parameters \(\varepsilon \) and \(\delta \) where \(\varepsilon \) is a ‘density’ of a net and \(\delta \) is a ‘measurement error.’ Note that \(\delta \) may be much smaller than \(\varepsilon \). A similar generalization is possible for Theorem 1, but we omit these details.

Corollary 4

For every \(n\in {\mathbb {N}}\), there exist \({\sigma _2}={\sigma _2}(n)>0\) and \(C_{14}=C_{14}(n)>0\) such that the following holds. Let E be a Hilbert space, \(X\subset E\), \(0<\varepsilon <r/10\) and \(0<\delta <{\sigma _2}r\). Suppose that for every \(x\in X\) there exists an n-dimensional affine subspace \(A_x\subset E\) such that the set \(X\cap B_r(x)\) is within Hausdorff distance \(\delta \) from an \(\varepsilon \)-net of the affine n-ball \(A_x\cap B_r(x)\).

Then, there exists a closed n-dimensional submanifold \(M\subset E\) satisfying properties 2–4 of Theorem 2 and an \(\varepsilon \)-net Y of M such that

Proof sketch

Consider the set \( X' = \bigcup _{x\in X} (A_x \cap B_r(x)) \subset E . \) A suitably modified version of Lemma 9 implies that \(\angle (A_x,A_y)<C\delta r^{-1}\) for all \(x,y\in X\) such that \(|x-y|<r\). It then follows that \(X'\) is \(C\delta \)-close to n-flats at scale \(r-C\delta \). Now the corollary follows from Theorem 2 applied to \(X'\). \(\square \)

1.4 Submanifold Interpolation and Machine Learning

The construction of a manifold that approximates, in some suitable sense, the given data points is a classical problem of machine learning. We emphasize that we consider the reconstruction of manifolds regarded either as (differentiable) Riemannian manifolds or as embedded submanifolds of a Euclidean space. Immersed submanifolds of a Euclidean space (i.e., submanifolds that may intersect themselves) are outside the scope of this paper.

Next we give a short review of existing methods and discuss how Theorem 2 is applied to problems of manifold learning.

1.4.1 Literature on Submanifold Interpolation

The question of fitting a manifold to data has been of interest to data analysts and statisticians of late. There are several results dealing exclusively with sample complexity such as [1, 48, 64, 66, 67]. We will restrict our attention to results that provide an algorithm for describing a manifold to fit the data together with upper bounds on the sample complexity.

A work in this direction, [49], building on [74], provides an upper bound on the Hausdorff distance between the output manifold and the true manifold equal to \(O((\frac{\log N}{N})^{\frac{2}{D+8}}) + \widetilde{O}(\sigma ^2\log (\sigma ^{-1}))\). In order to obtain a Hausdorff distance of \(c\varepsilon \), one needs more than \(\varepsilon ^{-D/2}\) samples, where D is the ambient dimension. The results of [43] guarantee (for sufficiently small \(\sigma \)) a Hausdorff distance of

with less than

samples, where d is the dimension of the submanifold, V is an upper bound on the d-dimensional volume, and \(\sigma \) is the standard deviation of the noise projected in one dimension. The question of fitting a manifold \({{\mathcal {M}}}_o\) to data with control both on the reach \(\tau _o\) and mean squared distance of the data to the manifold was considered in [44]. The paper [44] did not assume a generative model for the data and had to use an exhaustive search over the space of candidate manifolds whose time complexity was doubly exponential in the intrinsic dimension d of \({{\mathcal {M}}}_o\). In [43], the construction of \({{\mathcal {M}}}_o\) has a sample complexity that is singly exponential in d, made possible by the generative model, while [44] did not specify the bound on \(\tau _o\), beyond stating that the multiplicative degradation \(\frac{\tau }{\tau _o}\) in the reach depends on the intrinsic dimension alone. In [43], this degradation is pinned down to within \((0, C d^7]\), where C is an absolute constant and d is the dimension of \({{\mathcal {M}}}\).

There are also methods which map high-dimensional data points to low-dimensional piecewise linear manifolds. Cheng, Dey and Ramos present an algorithm [32] to reconstruct a smooth k-dimensional manifold \({\mathcal {M}}\) embedded in a Euclidean space from a sufficiently dense point sample on the manifold. The algorithm outputs a simplicial manifold that is homeomorphic to \({\mathcal {M}}\) and close to \({\mathcal {M}}\) in Hausdorff distance (see also the related work using witness complexes in [12]). In recent work, Aamari and Levrard [1] derive optimal rates for the estimation of tangent spaces, the second fundamental form, and the submanifold \({\mathcal {M}}\), given a sample drawn from a submanifold \({\mathcal {M}}\) of Euclidean space. Unlike this paper or [43, 44, 49], they do not, however, provide a method to produce a single consistent manifold from finitely many samples. In other recent work [11], Boissonnat et al. presented an algorithm for producing Delaunay triangulations of manifolds. Given a set of sample points and an atlas on a compact manifold, a manifold Delaunay complex is produced for a perturbed point set provided the transition functions are bi-Lipschitz with a constant close to 1, and the original sample points meet a local density requirement. The output complex is endowed with a piecewise-flat metric which is a close approximation of the original Riemannian metric. This is similar to our present work, except that our metric is \(C^\infty \), and not just piecewise linear.

1.4.2 Literature on Manifold Learning

The following methods aim to transform data lying near a d-dimensional manifold in an N-dimensional space into a set of points in a low-dimensional space close to a d-dimensional manifold. During transformation, all of them try to preserve some geometric properties, such as appropriately measured distances between points of the original data set. Usually the Euclidean distance to the ‘nearest’ neighbors of a point is preserved. In addition, some of the methods preserve, for points farther away, some notion of geodesic distance capturing the curvature of the manifold.

Perhaps the most basic of such methods is ‘principal component analysis’ (PCA), [52, 77] where one projects the data points onto the span of the d eigenvectors corresponding to the top d eigenvalues of the (\(N\times N\)) covariance matrix of the data points.
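As an illustration of the PCA projection just described, here is a minimal NumPy sketch. The function name `pca_project` and the synthetic data (noisy samples of a line in three dimensions) are assumptions made for this example only.

```python
import numpy as np

def pca_project(points, d):
    """Project the rows of `points` (samples x N) onto the top-d principal directions."""
    centered = points - points.mean(axis=0)
    covariance = centered.T @ centered / len(points)         # N x N covariance matrix
    eigenvalues, eigenvectors = np.linalg.eigh(covariance)   # ascending eigenvalues
    top = eigenvectors[:, ::-1][:, :d]                       # top-d eigenvectors as columns
    return centered @ top, eigenvalues[::-1][:d]

# Synthetic data: noisy samples of a line in R^3 (hypothetical example).
rng = np.random.default_rng(0)
t = rng.uniform(-1.0, 1.0, size=200)
points = np.outer(t, [3.0, 4.0, 0.0]) / 5.0 + 1e-3 * rng.normal(size=(200, 3))

coords, variances = pca_project(points, d=1)
```

The one-dimensional coordinates in `coords` recover the line parameter `t` up to sign and a small error caused by the noise.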

An important variation is the ‘Kernel PCA’ [84] where one defines a feature map \(\varphi (\cdot )\) mapping the data points into a Hilbert space called the feature space. A ‘kernel matrix’ K is built whose (i, j)th entry is the dot product \(\langle \varphi (x_i), \varphi (x_j)\rangle \) between the data points \(x_i,x_j.\) From the top d eigenvectors of this matrix, the corresponding eigenvectors of the covariance matrix of the image of the data points in the feature space can be computed. The data points are projected onto the span of these eigenvectors of this covariance matrix in the feature space.

In the case of ‘multi-dimensional scaling’ (MDS) [34], only pairwise distances between points are attempted to be preserved. One minimizes a certain ‘stress function’ which captures the total error in pairwise distances between the data points and between their lower-dimensional counterparts. For instance, a raw stress function could be \(\varSigma (\Vert x_i-x_j\Vert -\Vert y_i-y_j\Vert )^2,\) where \(x_i\) are the original data points, \(y_i,\) the transformed ones, and \(\Vert x_i-x_j\Vert ,\) the distance between \(x_i, x_j.\)
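The raw stress function can be evaluated directly. The sketch below assumes that the sum runs over unordered pairs \(i<j\) (the text does not specify the index range); the helper names are illustrative.

```python
import numpy as np

def pairwise_distances(points):
    """Matrix of Euclidean distances between the rows of `points`."""
    diff = points[:, None, :] - points[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))

def raw_stress(original, embedded):
    """Sum over pairs i < j of (||x_i - x_j|| - ||y_i - y_j||)^2."""
    gap = pairwise_distances(original) - pairwise_distances(embedded)
    upper = np.triu_indices(len(original), k=1)
    return float((gap[upper] ** 2).sum())

x = np.array([[0.0, 0.0, 0.0], [3.0, 4.0, 0.0], [0.0, 0.0, 1.0]])
y_isometric = x[:, [1, 0, 2]]   # permuting coordinates preserves all distances
y_flattened = x[:, :2]          # dropping the third coordinate distorts some distances

stress_isometric = raw_stress(x, y_isometric)
stress_flattened = raw_stress(x, y_flattened)
```

An isometric rearrangement has zero stress, while the flattened configuration has positive stress because two of the three pairwise distances change.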

‘Isomap’ [92] attempts to improve on MDS by trying to capture geodesic distances between points while projecting. For each data point, a ‘neighborhood graph’ is constructed using its k neighbors (k could be varied based on various criteria), the edges carrying the length between points. Now the shortest distance between points is computed in the resulting global graph containing all the neighborhood graphs using a standard graph theoretic algorithm such as Dijkstra’s. Let \(D = [d_{ij}]\) be the \(n\times n\) matrix of graph distances. Let \(S = [d_{ij}^2]\) be the \(n \times n\) matrix of squared graph distances. Form the matrix \(A =-\frac{1}{2}HSH,\) where \(H = I - n^{-1}{\mathbf {1}}{\mathbf {1}}^T\). The matrix A is of rank \(t < n\), where t is the dimension of the manifold. Let \(A^Y =-\frac{1}{2}HS^YH\), where \([S^Y]_{ij} = \Vert y_i - y_j\Vert ^2.\) Here the \(y_i\) are arbitrary t-dimensional vectors. The embedding vectors \({\widehat{y}}_i\) are chosen to minimize \(\Vert A - A^Y\Vert \). The optimal solution is given by the eigenvectors \(v_1, \dots , v_t\) corresponding to the t largest eigenvalues \(\lambda _1\ge \dots \ge \lambda _t\) of A. The vertices of the graph G are embedded by the \(t \times n\) matrix

$$\begin{aligned} {\widehat{Y}}=({\widehat{y}}_1,\dots ,{\widehat{y}}_n)=(\sqrt{\lambda _1}\,v_1,\dots ,\sqrt{\lambda _t}\,v_t)^T. \end{aligned}$$
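The Isomap steps described above (neighborhood graph, graph distances, double centering, eigendecomposition) can be sketched as follows. This is an illustrative implementation under stated assumptions: it uses the standard double centering \(A=-\frac{1}{2}HSH\), the usual scaling of the eigenvectors by \(\sqrt{\lambda _i}\), and Floyd–Warshall instead of Dijkstra’s algorithm for brevity. The function names and the test curve are hypothetical.

```python
import numpy as np

def knn_graph_distances(points, k):
    """Shortest-path distances in the symmetrized k-nearest-neighbor graph."""
    n = len(points)
    euclid = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    graph = np.full((n, n), np.inf)
    np.fill_diagonal(graph, 0.0)
    for i in range(n):
        neighbors = np.argsort(euclid[i])[1:k + 1]   # skip the point itself
        graph[i, neighbors] = euclid[i, neighbors]
        graph[neighbors, i] = euclid[i, neighbors]
    # Floyd-Warshall relaxation; Dijkstra's algorithm yields the same distances.
    for m in range(n):
        graph = np.minimum(graph, graph[:, [m]] + graph[[m], :])
    return graph

def classical_mds(graph_distances, t):
    """Embed a distance matrix into R^t; returns a t x n matrix."""
    n = len(graph_distances)
    s = graph_distances ** 2                          # squared graph distances
    h = np.eye(n) - np.ones((n, n)) / n               # centering matrix H
    a = -0.5 * h @ s @ h                              # double centering
    eigenvalues, eigenvectors = np.linalg.eigh(a)
    order = np.argsort(eigenvalues)[::-1][:t]         # t largest eigenvalues
    lam, v = eigenvalues[order], eigenvectors[:, order]
    return (v * np.sqrt(np.maximum(lam, 0.0))).T

# Hypothetical test data: points on a half circle, a curved 1-dimensional manifold.
angles = np.linspace(0.0, np.pi, 40)
arc = np.column_stack([np.cos(angles), np.sin(angles)])
d = knn_graph_distances(arc, k=2)
y = classical_mds(d, t=1)
```

For the half circle, the one-dimensional embedding `y` orders the points along the arc, since the graph distances approximate arc length rather than chord length.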

‘Maximum variance unfolding’ (MVU) [94] also constructs the neighborhood graph as in the case of Isomap but tries to maximize distance between projected points keeping distance between the nearest points unchanged after projection. It uses semidefinite programming for this purpose.

In ‘Diffusion Maps’ [33], a complete graph on the data points is built and each edge is assigned a weight based on a Gaussian: \(w_{ij}\equiv \exp ({-\frac{\Vert x_i-x_j\Vert ^2}{\sigma ^2}}).\) Normalization is performed on this matrix so that the entries in each row add up to 1. This matrix is then used as the transition matrix P of a Markov chain. \(P^t\) is therefore the transition probability between data points in t steps. The d nontrivial eigenvalues \(\lambda _i\) and their eigenvectors \(v_i\) of \(P^t\) are computed, and the data are now represented by the matrix \([\lambda _1v_1, \cdots , \lambda _dv_d], \) with the row i corresponding to data point \(x_i.\)
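A minimal sketch of the diffusion map construction follows, using the usual Gaussian weight \(\exp (-\Vert x_i-x_j\Vert ^2/\sigma ^2)\). As an implementation choice not taken from the text, the eigenvectors of P are obtained from the symmetric matrix \(D^{-1/2}WD^{-1/2}\), which is conjugate to P. The eigenvalues of \(P^t\) are then the t-th powers of those of P. All names and the two-cluster data set are illustrative assumptions.

```python
import numpy as np

def diffusion_map(points, sigma, d, t):
    """Return the transition matrix P, the eigenvalues of P, and d diffusion coordinates."""
    sq = ((points[:, None, :] - points[None, :, :]) ** 2).sum(axis=-1)
    w = np.exp(-sq / sigma ** 2)                  # Gaussian weights
    degree = w.sum(axis=1)
    p = w / degree[:, None]                       # rows of P sum to 1
    # P = D^{-1} W is conjugate to the symmetric matrix D^{-1/2} W D^{-1/2}.
    sym = w / np.sqrt(np.outer(degree, degree))
    eigenvalues, u = np.linalg.eigh(sym)
    order = np.argsort(eigenvalues)[::-1]
    eigenvalues, u = eigenvalues[order], u[:, order]
    v = u / np.sqrt(degree)[:, None]              # right eigenvectors of P
    # Skip the trivial pair (eigenvalue 1, constant eigenvector).
    coords = v[:, 1:d + 1] * eigenvalues[1:d + 1] ** t
    return p, eigenvalues, coords

# Hypothetical data: two well-separated clusters in the plane.
rng = np.random.default_rng(1)
cluster_a = rng.normal(loc=0.0, scale=0.1, size=(15, 2))
cluster_b = rng.normal(loc=2.0, scale=0.1, size=(15, 2))
points = np.vstack([cluster_a, cluster_b])

p, eigenvalues, coords = diffusion_map(points, sigma=1.0, d=2, t=3)
```

In this example the first diffusion coordinate takes opposite signs on the two clusters, reflecting that a random walk rarely crosses between them.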

The following are essentially local methods of manifold learning in the sense that they attempt to preserve local properties of the manifold around a data point.

‘Local linear embedding’ (LLE) [81] preserves solely local properties of the data. Let \(N_i\) be the neighborhood of \(x_i\), consisting of k points. Find optimal weights \({\widehat{w}}_{ij}\) by solving \( {\widehat{W}} := \arg \min _W \sum _{i=1}^n \Vert x_i - \sum _{j=1}^n w_{ij}x_j\Vert ^2,\) subject to the constraints (i) \(\forall i, \sum _j w_{ij} = 1\), (ii) \(\forall i,j, w_{ij} \ge 0,\) (iii) \(w_{ij} = 0 \) if \(j \not \in N_i.\) Once the weight matrix \({\widehat{W}}\) is found, a spectral embedding is constructed using it. More precisely, a matrix \({\widehat{Y}}\) is a \(t \times n\) matrix constructed satisfying \( {\widehat{Y}} = \arg \min _Y Tr( {YMY^T}),\) under the constraints \(Y{\mathbf {1}} = 0\) and \(YY^T = nI_t\), where \(M = (I_n - {\widehat{W}})^T(I_n - {\widehat{W}})\). \({\widehat{Y}}\) is used to get a t-dimensional embedding of the initial data.

In the case of the ‘Laplacian eigenmap’ [4, 54] again, a nearest neighbor graph is formed. The details are as follows. Let \(n_i\) denote the neighborhood of i. Let \(W = (w_{ij})\) be a symmetric \((n \times n)\) weighted adjacency matrix defined by (i) \(w_{ij} = 0\) if j does not belong to the neighborhood of i; (ii) \(w_{ij} = \exp (-\Vert x_i - x_j\Vert ^2/{2\sigma ^2}),\) if \(x_j\) belongs to the neighborhood of \(x_i\). Here \(\sigma \) is a scale parameter. Let G be the corresponding weighted graph. Let \(D = (d_{ij})\) be a diagonal matrix whose ith diagonal entry is given by \((W {\mathbf {1}})_i\). The matrix \(L = D - W\) is called the Laplacian of G. We seek a solution in the set of \(t \times n\) matrices \( {\widehat{Y}} = \arg \min _{Y:YDY^T = I_t}\hbox {Tr}(YLY^T).\) The rows of \({\widehat{Y}}\) are given by solutions of the equation \(Lv = \lambda D v\).
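The generalized eigenproblem \(Lv=\lambda Dv\) can be solved with NumPy alone by passing to the symmetric matrix \(D^{-1/2}LD^{-1/2}\). The sketch below is one way to do this, assuming Gaussian weights \(\exp (-\Vert x_i-x_j\Vert ^2/2\sigma ^2)\) on a symmetrized k-nearest-neighbor graph that is connected. The function name and the sample curve are hypothetical.

```python
import numpy as np

def laplacian_eigenmap(points, k, sigma, t):
    """Return eigenvalues, the t x n embedding Y, the graph Laplacian L and D."""
    n = len(points)
    sq = ((points[:, None, :] - points[None, :, :]) ** 2).sum(axis=-1)
    w = np.zeros((n, n))
    for i in range(n):
        neighbors = np.argsort(sq[i])[1:k + 1]        # k nearest neighbors of x_i
        w[i, neighbors] = np.exp(-sq[i, neighbors] / (2.0 * sigma ** 2))
    w = np.maximum(w, w.T)                            # make W symmetric
    degree = w.sum(axis=1)
    laplacian = np.diag(degree) - w
    # If (D^{-1/2} L D^{-1/2}) u = lambda u, then v = D^{-1/2} u solves L v = lambda D v.
    inv_sqrt = 1.0 / np.sqrt(degree)
    sym = laplacian * np.outer(inv_sqrt, inv_sqrt)
    eigenvalues, u = np.linalg.eigh(sym)              # ascending eigenvalues
    v = u * inv_sqrt[:, None]
    # Drop the trivial eigenvalue 0 (constant eigenvector) and keep the next t.
    return eigenvalues[1:t + 1], v[:, 1:t + 1].T, laplacian, np.diag(degree)

# Hypothetical data: points along three quarters of a circle.
angles = np.linspace(0.0, 1.5 * np.pi, 30)
curve = np.column_stack([np.cos(angles), np.sin(angles)])
eigenvalues, y, laplacian, degree_matrix = laplacian_eigenmap(curve, k=3, sigma=0.5, t=2)
```

By construction the rows of `y` satisfy the constraint \(YDY^T=I_t\) and each row solves \(Lv=\lambda Dv\) with the corresponding entry of `eigenvalues`.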

Hessian LLE (HLLE) (also called Hessian eigenmaps) [35] and ‘local tangent space alignment’ (LTSA) [100] attempt to improve on LLE by also taking into consideration the curvature of the higher-dimensional manifold while preserving the local pairwise distances. We describe LTSA below.

LTSA attempts to compute coordinates of the low-dimensional data points and align the tangent spaces in the resulting embedding. It starts with computing bases for the approximate tangent spaces at the data points \(x_i\) by applying PCA on the neighboring data points. The coordinates of the low-dimensional data points are computed by carrying out a further minimization \(\min _{Y_i,L_i}\sum _i \Vert Y_iJ_k -L_i\varTheta _i\Vert ^2\). Here \(Y_i\) has as its columns the lower-dimensional vectors, \(J_k\) is a ‘centering’ matrix, \(\varTheta _i\) has as its columns the projections of the k neighbors onto the d eigenvectors obtained from the PCA, and \(L_i\) maps these coordinates to those of the lower-dimensional representation of the data points. The minimization is again carried out through suitable spectral methods.

The alignment of local coordinate mappings also underlies some other methods such as ‘local linear coordinates’ (LLC) [82] and ‘manifold charting’ [16].

Each of the algorithms is based on strong domain-based intuition and in general performs well in practice at least for the domain for which it was originally intended. PCA is still competitive as a general method.

Some of the algorithms are known to perform correctly under the hypothesis that data lie on a manifold of a specific kind. In Isomap and LLE, the manifold has to be an isometric embedding of a convex subset of Euclidean space. When the data approximate a manifold, one can recover the geometry of this manifold, in the limit as the number of data points tends to infinity, by computing an approximation of the Laplace–Beltrami operator. Laplacian eigenmaps and diffusion maps rest on this idea. LTSA works for parameterized manifolds, and detailed error analysis is available for it.

1.4.3 Theorems 1 and 2 and the Problems of Machine reconstruction

Theorem 1 addresses the fundamental question of when a given metric space \((X,d_X)\), corresponding to data points and their ‘abstract’ mutual distances, approximates a Riemannian manifold with bounded sectional curvature and injectivity radius. In the context of Theorem 1, the distances are measured in the intrinsic sense in M and X.

Theorem 2 deals with approximating a subset of a Hilbert space E satisfying certain local constraints by a manifold having bounded second fundamental form and reach. In the context of Theorem 2, the distances are measured in the extrinsic sense in E. Such approximations have been extensively considered in machine learning or, more precisely, manifold learning and nonlinear dimensionality reduction, where the goal is to approximate the set of data lying in a high-dimensional space like E by a submanifold in E of a low enough dimension in order to visualize these data, see, e.g., references of Sect. 1.4.2.

The results of this paper provide for the observed data an abstract low-dimensional representation of the intrinsic manifold structure that the data may possess. In particular, the topology of the manifold structure is determined, assuming that the sampling density has been sufficient. As described in Sect. 3, the proof of Theorem 2 is of a constructive nature and provides an algorithm to perform such visualization. Note that this algorithm starts with tangent-type planes which makes it distantly similar to the LTSA method in machine learning, see, e.g., [63, 100]. In paper [44], the authors provide a method of visualization of given data using a probabilistic setting. In comparison, Theorem 2 helps us visualize data in a deterministic setting.

The results of this paper are also related to dimensionality reduction considered extensively in machine learning, see, e.g., [4,5,6, 80]. Using the constructions of Sect. 5.2, we can associate with given data not only the metric structure but also point measures. Combining this with the constructions of [28], one could analyze the approximate determination of the eigenvalues and eigenfunctions of a manifold that approximates the data set.

2 Approximation of Metric Spaces

In this section, we collect preliminaries about GH and quasi-isometric approximation of metric spaces. In Sects. 2.4 and 2.5, we present algorithms that can be used to verify the assumptions of Theorems 1 and 2.

2.1 Gromov–Hausdorff Approximations

Let X be a metric space. Recall that the Hausdorff distance between sets \(A,B\subset X\) is defined by

$$\begin{aligned} d_H(A,B)=\inf \{r>0:\ A\subset {{\mathcal {U}}}_r(B)\hbox { and }B\subset {{\mathcal {U}}}_r(A)\}, \end{aligned}$$

where \({{\mathcal {U}}}_r\) denotes the r-neighborhood of a set.
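For finite subsets of a Euclidean space, the Hausdorff distance reduces to a maximum over nearest-neighbor distances in both directions. The following sketch illustrates this; the function name and the sample sets are illustrative.

```python
import numpy as np

def hausdorff_distance(a, b):
    """Hausdorff distance between finite point sets given as arrays of rows."""
    dist = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    a_to_b = dist.min(axis=1).max()   # smallest r with A inside the closed r-neighborhood of B
    b_to_a = dist.min(axis=0).max()   # smallest r with B inside the closed r-neighborhood of A
    return float(max(a_to_b, b_to_a))

a = np.array([[0.0, 0.0], [1.0, 0.0]])
b = np.array([[0.0, 0.0], [1.0, 0.0], [1.0, 2.0]])
d_h = hausdorff_distance(a, b)
```

Here every point of `a` belongs to `b`, but the point (1, 2) of `b` lies at distance 2 from `a`, so the Hausdorff distance equals 2. This shows that both inclusions in the definition matter.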

The Gromov–Hausdorff (GH) distance \(d_{\mathrm{GH}}(X,Y)\) between metric spaces X and Y is the infimum of all \(\varepsilon >0\) such that there exist a metric space Z and subsets \(X',Y'\subset Z\) isometric to X and Y, resp., such that \(d_H(X',Y')<\varepsilon \). One can always assume that Z is the disjoint union of X and Y with a metric extending those of X and Y. The pointed GH distance between pointed metric spaces \((X,x_0)\) and \((Y,y_0)\) is defined in the same way with an additional requirement that \(d_Z(x_0,y_0)<\varepsilon \). See, e.g., [79, §1.2 in Ch. 10] or [26] for details.

Example 1

(Distorted net) Recall that a subset S of a metric space X is called an \(\varepsilon \)-net if \({{\mathcal {U}}}_\varepsilon (S)=X\). Let S be an \(\varepsilon \)-net in X and imagine that we have measured the distances between points of S with an absolute error \(\varepsilon \), that is, we have a distance function \(d'\) on \(S\times S\) such that \(|d'(x,y)-d(x,y)|<\varepsilon \) for all \(x,y\in S\). Then, the GH distance between X and \((S,d')\) is bounded by \(2\varepsilon \). This follows from the fact that the inclusion \(S\hookrightarrow X\) is an \(\varepsilon \)-isometry from \((S,d')\) to (X, d), see below.

Strictly speaking, the ‘measurement errors’ in this example may break the triangle inequality so that \((S,d')\) is no longer a metric space. This can be fixed by adding \(3\varepsilon \) to all \(d'\)-distances.
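The reason why \(3\varepsilon \) suffices is that \(d'(x,z)<d(x,z)+\varepsilon \le d(x,y)+d(y,z)+\varepsilon <d'(x,y)+d'(y,z)+3\varepsilon \). A small numerical check of this repair is sketched below, under the assumptions that \(d'\) is symmetric, vanishes on the diagonal, and that \(3\varepsilon \) is added only to distances between distinct points. The names and the three-point example are hypothetical.

```python
import numpy as np

def triangle_violation(dist):
    """Largest value of d(x,z) - d(x,y) - d(y,z) over all triples; 0 for a metric."""
    via = dist[:, :, None] + dist[None, :, :]      # via[x, y, z] = d(x,y) + d(y,z)
    return float((dist[:, None, :] - via).max())

eps = 0.1
# True distances between the points 0, 1, 2 on the real line.
true = np.abs(np.subtract.outer([0.0, 1.0, 2.0], [0.0, 1.0, 2.0]))
# Symmetric measurement errors of absolute value below eps.
error = np.array([[0.0, -0.09, 0.09],
                  [-0.09, 0.0, -0.09],
                  [0.09, -0.09, 0.0]])
measured = true + error
# Add 3*eps to all distances between distinct points.
repaired = measured + 3 * eps * (1 - np.eye(3))

violation_before = triangle_violation(measured)
violation_after = triangle_violation(repaired)
```

In this example the measured distances violate the triangle inequality by 0.27, while the repaired distances satisfy it.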

Let X, Y be metric spaces, \(f:X\rightarrow Y\) a (not necessarily continuous) map, and \(\varepsilon >0\). The distortion of f, denoted by \({{\,\mathrm{dis}\,}}f\), is defined by

$$\begin{aligned} {{\,\mathrm{dis}\,}}f=\sup _{x,x'\in X}|d_Y(f(x),f(x'))-d_X(x,x')|, \end{aligned}$$

and f is called an \(\varepsilon \)-isometry if \({{\,\mathrm{dis}\,}}f<\varepsilon \) and f(X) is an \(\varepsilon \)-net in Y.
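For finite metric spaces given by distance matrices, the distortion and the \(\varepsilon \)-isometry condition can be checked directly. The sketch below assumes the standard definition of distortion as the supremum of \(|d_Y(f(x),f(x'))-d_X(x,x')|\) and represents the map f as an array of indices; these conventions and all names are choices made for the example.

```python
import numpy as np

def distortion(dist_x, dist_y, f):
    """dis f = max |d_Y(f(x), f(x')) - d_X(x, x')| for a map given as an index array."""
    image = dist_y[np.ix_(f, f)]
    return float(np.abs(image - dist_x).max())

def is_eps_isometry(dist_x, dist_y, f, eps):
    """Check that dis f < eps and that f(X) is an eps-net in Y."""
    covering_radius = float(dist_y[:, f].min(axis=1).max())
    return distortion(dist_x, dist_y, f) < eps and covering_radius < eps

# Y: five points on a line; X: three points whose distances are slightly perturbed.
positions_y = np.array([0.0, 0.5, 1.0, 1.5, 2.0])
positions_x = np.array([0.0, 1.05, 2.0])
dist_y = np.abs(positions_y[:, None] - positions_y[None, :])
dist_x = np.abs(positions_x[:, None] - positions_x[None, :])
f = np.array([0, 2, 4])                 # f maps X onto every other point of Y

dis_f = distortion(dist_x, dist_y, f)
```

Here the distortion is 0.05, but the image of f leaves points of Y at distance 0.5 uncovered, so f is an \(\varepsilon \)-isometry for \(\varepsilon =0.6\) and not for \(\varepsilon =0.4\).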

If \(d_{\mathrm{GH}}(X,Y)<\varepsilon \), then there exists a \(2\varepsilon \)-isometry from X to Y, and conversely, if there is an \(\varepsilon \)-isometry from X to Y, then \(d_{\mathrm{GH}}(X,Y)<2\varepsilon \). Moreover,

Also, if f(X) is an \(\varepsilon \)-net in Y, then

See [26, §7.3.3] for proofs of these facts. They also hold for the pointed GH distance between pointed metric spaces \((X,x_0)\) and \((Y,y_0)\), provided that \(f(x_0)=y_0\). Throughout the paper, we use these properties without explicit reference.

If f is a \((\lambda ,\varepsilon )\)-quasi-isometry (see Definition 3), then \({{\,\mathrm{dis}\,}}f\le (\lambda -1){{\,\mathrm{diam}\,}}(X)+\varepsilon \). This together with (25)–(26) implies (6). The next lemma is a variant of (6) for metric balls.

Lemma 1

Let \(f:X\rightarrow {M}\) be a \((\lambda ,\varepsilon )\)-quasi-isometry and suppose that \((M,d_M)\) is a Riemannian manifold. Then, every r-ball in M is within GH distance \(2(\lambda -1) r + {5}\varepsilon \) from some r-ball in X. More precisely,

for all \(x\in X\) and \(y\in {M}\) such that \(d_{M}(f(x),y)<\varepsilon \).

Proof

Let x and y be as in the formulation. Then,

for all \(r_1,r_2>0\).

Fix \(r>0\) and denote \({X_1}=B^X_r(x)\) and \({{M}_1}={B^{M}_r(y)}\). Since \({{\,\mathrm{diam}\,}}({X_1})\le 2r\), the distortion of \(f|_{{X_1}}\) is bounded by \(2(\lambda -1)r+\varepsilon \). Hence, by (26),

where \(f({X_1})\) is regarded as a pointed metric space with distinguished point f(x). Now we estimate the Hausdorff distance \(d_H(f({X_1}),{{M}_1})\) in M. By (5) and (28),

To prove that \({{M}_1}\) is contained in a suitable neighborhood of \(f({X_1})\), let \(r_1=\lambda ^{-1}r-3\varepsilon \) and consider \(z\in {B^{M}_{r_1}(y)}\). Since f(X) is an \(\varepsilon \)-net in M, there is \(x'\in X\) such that \(d_{M}(z,f(x'))<\varepsilon \), and hence, \(d_{M}(f(x),f(x'))<r_1+2\varepsilon \). This and (5) imply that

hence, \(x'\in {X_1}\). Thus, \( {B^{M}_{r_1}(y)} \subset \mathcal U_{\varepsilon }(f({X_1})) \). This and (28) imply that

Thus, \(d_H(f({X_1}),{{M}_1}) \le \max (\varepsilon ,\varepsilon _1,\varepsilon _2) < (\lambda -1)r+4\varepsilon \). Since the Hausdorff distance is an upper bound for the GH distance, this and (29) imply (27). \(\square \)

2.2 Almost Intrinsic Metrics

Here we discuss properties of \(\delta \)-intrinsic metrics and related notions from Definition 2. First observe that, if \(x_1,x_2,\dots ,x_N\) is a \(\delta \)-straight sequence, then its ‘length’ satisfies

This follows by induction from (4) and the triangle inequality.

The next lemma characterizes almost intrinsic metrics as those that are GH close to Riemannian manifolds. However, manifolds provided by this lemma may have extremely large curvatures and tiny injectivity radii.

Lemma 2

Let X be a metric space and \(\delta >0\). 1. If there exists a length space Y such that \(d_{\mathrm{GH}}(X,Y)<\delta \), then X is \(6\delta \)-intrinsic. 2. Conversely, if X is compact and \(\delta \)-intrinsic, then there exists a two-dimensional Riemannian manifold M such that

where \(C_{15}\) is a universal constant.

Proof

1. By the definition of the GH distance, there exists a metric d on the disjoint union \(Z:=X\sqcup Y\) such that d extends \(d_X\) and \(d_Y\) and \(d_H(X,Y)<\delta \) in (Z, d). Let \(x,x'\in X\). Since \(d_H(X,Y)<\delta \), there exist \(y,y'\in Y\) such that \(d(x,y)<\delta \) and \(d(x',y')<\delta \). Connect y to \(y'\) by a minimizing geodesic and let \(y=y_1,y_2,\dots ,y_N=y'\) be a sequence of points along this geodesic such that \(d(y_i,y_{i+1})<\delta \) for all i. For each \(i=2,\dots ,N-1\), choose \(x_i\in X\) such that \(d(x_i,y_i)<\delta \). Then, \(x,x_2,\dots ,x_{N-1},x'\) is a \(6\delta \)-straight \(3\delta \)-chain connecting x and \(x'\). Since x and \(x'\) are arbitrary points of X, the claim follows.

2. Since we do not use this claim, we do not give a detailed proof of it. Here is a sketch of the construction. First, arguing as in [26, Proposition 7.5.5], one can approximate X by a metric graph. If X is \(\delta \)-intrinsic, the graph can be made GH \(C_{15}\delta \)-close to X. Consider a piecewise-smooth arcwise isometric embedding of the graph into \({\mathbb {R}}^3\), and let M be a smoothed boundary of a small neighborhood of the image. Then, M is a two-dimensional Riemannian manifold which can be made arbitrarily close to the graph and hence \(C_{15}\delta \)-close to X. \(\square \)

Now we describe a construction that makes a \(C\delta \)-intrinsic metric out of a metric which is \(\delta \)-close to \({\mathbb {R}}^n\) at scale r (see Definition 1). More generally, let \(X=(X,d)\) be a metric space in which every ball of radius r is \(\delta \)-intrinsic, where \(r>\delta >0\). For \(x,y\in X\), define the new distance \(d'(x,y)\) by

$$\begin{aligned} d'(x,y)=\inf \sum _{i=1}^{N-1} d(x_i,x_{i+1}), \end{aligned}$$(32)

where the infimum is taken over all finite sequences \(x_1,\dots ,x_N\) connecting x to y and such that every pair of subsequent points \(x_i,x_{i+1}\) is contained in a ball of radius r in (X, d).

In order to avoid infinite \(d'\)-distances, we need to assume that any two points can be connected by such a sequence. If this is not the case, X divides into components separated from one another by distance at least r. For our purposes, such components are unrelated to one another just like disconnected components of a manifold.
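For a finite metric space, the distance \(d'\) can be computed as a shortest-path distance. The following is a minimal sketch under stated assumptions: the space is given by a symmetric distance matrix, balls are open, and two points are regarded as lying in a common ball of radius r when some center c in X satisfies \(d(c,x)<r\) and \(d(c,y)<r\). The function name `chain_metric` is hypothetical.

```python
import math


def chain_metric(d, r):
    """Return the matrix of d'(x, y) for a finite metric space.

    d is a symmetric list-of-lists matrix of pairwise distances.
    Pairs that cannot be joined by an admissible chain get math.inf.
    """
    n = len(d)
    dp = [[math.inf] * n for _ in range(n)]
    for i in range(n):
        dp[i][i] = 0.0
    # One-step links: pairs contained in a common open r-ball keep distance d.
    for c in range(n):
        ball = [i for i in range(n) if d[c][i] < r]
        for i in ball:
            for j in ball:
                dp[i][j] = min(dp[i][j], d[i][j])
    # Floyd-Warshall: minimal total length over all admissible chains.
    for k in range(n):
        for i in range(n):
            for j in range(n):
                if dp[i][k] + dp[k][j] < dp[i][j]:
                    dp[i][j] = dp[i][k] + dp[k][j]
    return dp


# Example: eight equally spaced points on the unit circle, chord metric.
pts = [(math.cos(k * math.pi / 4), math.sin(k * math.pi / 4)) for k in range(8)]
d = [[math.dist(p, q) for q in pts] for p in pts]
d_prime = chain_metric(d, r=0.8)
# Antipodal points: d is 2, while d' is about 2.83 (two chords of length sqrt(2)).
print(d[0][4], d_prime[0][4])
```

An entry equal to `math.inf` corresponds to the case discussed above, where two points lie in different components and no admissible chain connects them.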

Lemma 3

Under the above assumptions, the function \(d'\) given by (32) is an \(8\delta \)-intrinsic metric on X. Furthermore, d and \(d'\) coincide within any ball of radius r.

Proof

The triangle inequality for d implies that \(d'\) is a metric, \(d'\ge d\), and \(d'(x,y)=d(x,y)\) if x and y belong to an r-ball in (X, d). It remains to verify that \((X,d')\) is \({8}\delta \)-intrinsic. Let \(x,y\in X\) and let \(x=x_1,\dots ,x_N=y\) be a sequence realizing the infimum in (32) with an error less than \(\delta \). Then,

$$\begin{aligned} \sum _{i=1}^{N-1} d'(x_i,x_{i+1})=\sum _{i=1}^{N-1} d(x_i,x_{i+1})<d'(x,y)+\delta , \end{aligned}$$

hence, the sequence \(\{x_i\}\) is \(\delta \)-straight with respect to \(d'\). Recall that every pair \(x_i,x_{i+1}\) belongs to an r-ball and this ball is \(\delta \)-intrinsic. Hence, there is a \(\delta \)-straight \(\delta \)-chain \(S_i=\{z_j^{(i)}\}_{j=1}^{N_i}\) connecting \(x_i\) to \(x_{i+1}\) and contained in an r-ball. Joining the sequences \(S_i\) together yields a \(\delta \)-chain \(\{y_k\}_{k=1}^{N'}\), \(N'=\sum N_i\), connecting x to y.

It suffices to prove that the sequence \(\{y_k\}\) is \(8\delta \)-straight with respect to \(d'\). Note that the chains \(S_i\) are \(\delta \)-straight with respect to both d and \(d'\) since the two metrics coincide in any r-ball. Let \(a_i=d'(x,x_i)\) for \(i=1,\dots ,N\). Then, for \(i\le j\) we have

$$\begin{aligned} a_j-a_i\le d'(x_i,x_j)<a_j-a_i+\delta \end{aligned}$$(33)

by the triangle inequality and the \(\delta \)-straightness of \(\{x_i\}\). For \(k\in \{1,\dots ,N'\}\), define

$$\begin{aligned} b_k=a_i+d'(x_i,y_k), \end{aligned}$$(34)

where \(i=i(k)\) is the index such that \(y_k\) belongs to \(S_i\). Note that, for \(y_k\in S_i\),

$$\begin{aligned} b_k+d'(y_k,x_{i+1})<a_{i+1}+2\delta \end{aligned}$$(35)

due to the \(\delta \)-straightness of \(S_i\) and (33). We claim that

$$\begin{aligned} b_m-b_k-2\delta<d'(y_k,y_m)<b_m-b_k+3\delta \end{aligned}$$(36)

for all \(k,m\in \{1,\dots ,N'\}\) such that \(k\le m\). If both \(y_k\) and \(y_m\) are from one sub-chain \(S_i\), then (36) follows from the \(\delta \)-straightness of \(S_i\). Assume that \(y_k\in S_i\) and \(y_m\in S_j\) where \(i<j\). Then, the triangle inequality

$$\begin{aligned} d'(y_k,y_m)\le d'(y_k,x_{i+1})+d'(x_{i+1},x_j)+d'(x_j,y_m) \end{aligned}$$

and relations \(d'(y_k,x_{i+1})<a_{i+1} - b_k + 2\delta \) (cf. (35)), \(d'(x_{i+1},x_j)< a_j-a_{i+1} + \delta \) (cf. (33)), and \(d'(x_j,y_m)=b_m-a_j\) (cf. (34)) imply the upper bound in (36). Similarly, the lower bound in (36) follows from the triangle inequality

$$\begin{aligned} d'(x_i,x_{j+1})\le d'(x_i,y_k)+d'(y_k,y_m)+d'(y_m,x_{j+1}) \end{aligned}$$

and relations \(d'(x_i,x_{j+1}) \ge a_{j+1}-a_i\) (cf. (33)), \(d'(x_i,y_k) = b_k-a_i\) (cf. (34)), and \(d'(y_m,x_{j+1}) < a_{j+1}-b_m+2\delta \) (cf. (35)). This finishes the proof of (36).

For \(k,m,n\in \{1,\dots ,N'\}\) such that \(k\le m\le n\), (36) implies that

$$\begin{aligned} d'(y_k,y_m)+d'(y_m,y_n)<b_n-b_k+6\delta <d'(y_k,y_n)+8\delta . \end{aligned}$$

Thus, \(\{y_k\}\) is an \(8\delta \)-straight sequence and the lemma follows. \(\square \)

The next lemma shows that if a map is almost isometric at small scale, then it is a quasi-isometry with small constants. It is used in the proof of Theorem 1.

Lemma 4

Let \(r>{15}\delta >0\). Let X and Y be \(\delta \)-intrinsic metric spaces and \(f:X\rightarrow Y\) a map such that f(X) is a \(\delta \)-net in Y and

for all \(x,y\in X\) such that

Then, f is a \((1+{10}r^{-1}\delta ,{3\delta })\)-quasi-isometry.

Proof

Let \(p,q\in X\) and \(D=d_X(p,q)\). We have to verify that

Since X is \(\delta \)-intrinsic, p and q can be connected by a \(\delta \)-straight \(\delta \)-chain, see Definition 2. This chain contains a subsequence \(p=x_1,x_2,\dots ,x_N=q\) such that \( r-\delta< d_X(x_i,x_{i+1}) < r \) for all \(i=1,\dots ,N-2\) and \(d_X(x_{N-1},q)<r\). Since the subsequence is also \(\delta \)-straight, by (30) we have

Since \(d_X(x_i,x_{i+1})>r-\delta \) for each \(i\le N-2\), the left-hand side of (39) is bounded below by \((N-2)(r-\delta )\). Hence,

By (37), we have \(d_Y(f(x_i),f(x_{i+1})) < d_X(x_i,x_{i+1})+\delta \) for all i. Therefore,

Thus,

Since \(r-2\delta >r/2\), the second inequality in (38) follows.

To prove the first inequality in (38), interchange the roles of X and Y and apply the same argument to an ‘almost inverse’ map \(g:Y\rightarrow X\) constructed as follows: For each \(y\in Y\), let g(y) be an arbitrary point from the set \(f^{-1}(B_\delta (y))\). This map satisfies the assumptions of the lemma with \(3\delta \) in place of \(\delta \) and \(r-2\delta \) in place of r. We may assume that \(g(f(p))=p\) and \(g(f(q))=q\); then, (41) for g takes the form

This implies the first inequality in (38) and the lemma follows. \(\square \)
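The subsequence \(p=x_1,\dots ,x_N=q\) used at the start of the proof can be extracted greedily from a \(\delta \)-chain. The following is a minimal sketch, assuming \(r>\delta \) and that consecutive points of the input are at distance less than \(\delta \); the function name `coarse_subsequence` is hypothetical. From the current point, the chain is followed up to the first point at distance at least r, and its predecessor is selected; by the triangle inequality, that predecessor lies at distance greater than \(r-\delta \) and less than r.

```python
def coarse_subsequence(chain, dist, r):
    """Select a subsequence of a delta-chain whose steps have length in (r - delta, r).

    The last step, which ends at chain[-1], is only guaranteed to be shorter than r.
    """
    sub = [chain[0]]
    i = 0
    while True:
        # Advance to the first later point at distance >= r from chain[i].
        j = i + 1
        while j < len(chain) and dist(chain[i], chain[j]) < r:
            j += 1
        if j == len(chain):
            # Every remaining point, including the endpoint, is within distance r.
            if i != len(chain) - 1:
                sub.append(chain[-1])
            return sub
        if j - 1 == i:
            raise ValueError("consecutive points must be closer than r")
        i = j - 1
        sub.append(chain[i])


# Example: integer points on a line form a 1.5-chain; take r = 30.
steps = coarse_subsequence(list(range(101)), lambda a, b: abs(a - b), r=30)
print(steps)  # [0, 29, 58, 87, 100]
```

The greedy choice keeps the number of selected points small, which is what the counting argument in the proof requires.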

2.3 GH Approximations of the Disk

Here we prove a technical lemma (Lemma 6) about \(\delta \)-isometries to subsets of \({\mathbb {R}}^n\). For a matrix \(A\in {\mathbb {R}}^{n\times n}\), the norm \(\Vert A\Vert \) is the operator norm of the map \(A:{\mathbb {R}}^n\rightarrow {\mathbb {R}}^n\), unless stated otherwise. First we need the following estimate.

Lemma 5

Let \(\varepsilon >0\) and \(v_1,\dots ,v_n\in {\mathbb {R}}^n\) be such that \(\left| |v_i|^2-1\right| < \varepsilon \) and \(|\langle v_i,v_j \rangle | < \varepsilon \) if \(i\ne j\), for all \(i,j\in \{1,\dots ,n\}\). Define a linear map \(L:{\mathbb {R}}^n\rightarrow {\mathbb {R}}^n\) by \( L(v) = (\langle v,v_i\rangle )_{i=1}^n \). Then, there exists an orthogonal operator \(U:{\mathbb {R}}^n\rightarrow {\mathbb {R}}^n\) such that \( \Vert L-U\Vert < n\varepsilon \).

Proof

We regard L as an \(n\times n\) matrix whose ith row consists of coordinates of \(v_i\). The inner products \(\langle v_i,v_j\rangle \) are elements of the matrix \(LL^t\). By assumptions of the lemma, all elements of the matrix \(LL^t-I\) are bounded by \(\varepsilon \). Therefore, the operator norm \(\Vert LL^t-I\Vert \) is bounded by \(n\varepsilon \). Decompose L as \(L=U_1DU_2\) where \(U_1\) and \(U_2\) are orthogonal matrices and D is a diagonal matrix with nonnegative entries. Then, \(LL^t=U_1D^2U^{-1}_1\) and

$$\begin{aligned} \Vert L-U_1U_2\Vert =\Vert D-I\Vert \le \Vert D^2-I\Vert =\Vert LL^t-I\Vert <n\varepsilon . \end{aligned}$$

Thus, the operator \(U=U_1U_2\) satisfies the desired inequality. \(\square \)
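The passage from an entrywise bound to an operator-norm bound in the proof above can be made explicit. The following is a sketch; the notation \(A=(a_{ij})\) is introduced here only for illustration. If \(|a_{ij}|<\varepsilon \) for all \(i,j\in \{1,\dots ,n\}\), then for every \(v\in {\mathbb {R}}^n\) the Cauchy–Schwarz inequality gives

$$\begin{aligned} |Av|^2=\sum _{i=1}^n\Bigl (\sum _{j=1}^n a_{ij}v_j\Bigr )^2\le \Bigl (\sum _{i,j=1}^n a_{ij}^2\Bigr )|v|^2<n^2\varepsilon ^2|v|^2 \end{aligned}$$

for \(v\ne 0\), so \(\Vert A\Vert <n\varepsilon \). Applied to \(A=LL^t-I\), this is the bound \(\Vert LL^t-I\Vert <n\varepsilon \). The comparison between D and \(D^2\) rests on the elementary inequality \(|t-1|\le |t-1|(t+1)=|t^2-1|\), valid for \(t\ge 0\), applied to each diagonal entry of D.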

Lemma 6

There is a universal constant \(C_{16}>0\) such that the following holds. Let X be a metric space, \(x_0\in X\), and \(f,g:X\rightarrow {\mathbb {R}}^n\) maps with \(f(x_0)=g(x_0)=0\). Let \(R\ge r\ge \delta > 0\) and assume that f and g are \(\delta \)-isometries to sets \(Y_1\subset {\mathbb {R}}^n\) and \(Y_2\subset {\mathbb {R}}^n\), resp., such that \(B_r^n\subset Y_i\subset B_R^n\) for \(i=1,2\).

Then, there exists an orthogonal operator \(U:{\mathbb {R}}^n\rightarrow {\mathbb {R}}^n\) such that

for all \(x\in X\).

Proof

The statement of the lemma is scale invariant, i.e., one can multiply the parameters \(R,r,\delta \), the maps f, g, and the distances in X by the same scale factor. Thus, we may assume that \(r=1\). Since f and g are \(\delta \)-isometries, we have

for all \(x,y\in X\). In particular, \( \bigl ||f(x)| - |g(x)|\bigr | < 2\delta \) since \(f(x_0)=g(x_0)=0\). Hence,

and

for all \(x,y\in X\). These inequalities and the polarization identity

$$\begin{aligned} \langle u,v\rangle =\tfrac{1}{2}\bigl (|u|^2+|v|^2-|u-v|^2\bigr ),\qquad u,v\in {\mathbb {R}}^n, \end{aligned}$$

imply that

for all \(x,y\in X\).

Since \(B_1^n\subset Y_1\), there exist \(x_1,\dots ,x_n\in X\) such that \(|f(x_i)-e_i|<\delta \) for all i, where \((e_i)_{i=1}^n\) is the standard basis of \({\mathbb {R}}^n\). Let \(v_i=g(x_i)\), \(i=1,\dots ,n\). Then, by (44) applied to \(x=x_i\) and \(y=x_j\), for all \(i,j\in \{1,\dots ,n\}\) we have

since \(|f(x_i)|<1+\delta \) and \(|g(x_i)|<1+3\delta \). Therefore,

since \(|\langle f(x_i),f(x_j)\rangle -\langle e_i,e_j\rangle |<2\delta +\delta ^2\). Thus, the vectors \(v_i\) satisfy the assumptions of Lemma 5 with \(\varepsilon =\delta _1\). As in Lemma 5, define \(L(v)=(\langle v ,v_i\rangle )_{i=1}^n\) for all \(v\in {\mathbb {R}}^n\) and let U be an orthogonal operator such that \(\Vert L-U\Vert <n\delta _1\).

Since f(X) and g(X) are contained in \(B_R^n\), the right-hand side of (44) is bounded by \(8R\delta \). Hence, by (44) applied to \(y=x_i\),

for all \(x\in X\) and \(i\in \{1,\dots ,n\}\). We also have

This and (45) imply that

The term \(\langle g(x),v_i\rangle \) is the ith coordinate of the vector L(g(x)) (recall the definition of L above), and \(\langle f(x),e_i\rangle \) is the ith coordinate of f(x). Hence, (46) implies that

Since \(\Vert L-U\Vert <n\delta _1\), we also have

Therefore,

since \(\delta _1\le 27\delta \). Thus, (42) holds with \(C_{16}=36\). \(\square \)

2.4 Verifying GH Closeness to the Disk

Here we present an algorithm that can be used to verify the main assumption of Theorem 1. Namely, given a discrete metric space X, \(n\in {\mathbb {N}}\) and \(r>0\), one can approximately (i.e., up to a factor \(C=C(n)\)) find the smallest \(\delta \) such that X is \(\delta \)-close to \({\mathbb {R}}^n\) at scale r (see Definition 1). Due to rescaling, it suffices to handle the case \(r=1\).

Thus, the problem boils down to the following: Given a point \(x_0\in X\), find approximately the (pointed) GH distance between the metric ball \(B^X_1(x_0)\subset X\) of radius 1 centered at \(x_0\) and the Euclidean unit ball \(B_1^n\subset {\mathbb {R}}^n\). When X is finite, the following algorithm solves this problem.

Algorithm GHDist: Assume that we are given n, the point \(x_0\in X\), and the ball \({X_1}=B^X_1(x_0)\subset X\). We regard \({X_1}\) as a metric space with metric \(d=d_X|_{{X_1}\times {X_1}}\). We implement the following steps:

1. Let \(x_1\in {X_1}\) be a point that minimizes \( |1 - d(x_0, x)|\) over all \(x\in {X_1}\).

2. Given \(x_1, x_2,\dots ,x_m\) for \(m \le n\), we define the coordinate function

$$\begin{aligned} f_m(x)=\tfrac{1}{2} \bigl (d(x,x_0)^2-d(x,x_m)^2+d(x_0,x_m)^2\bigr ). \end{aligned}$$(47)

3. Given \(x_1, x_2,\dots ,x_m\) and coordinate functions \(f_1(x),f_2(x),\dots ,f_m(x)\) for \(m \le n-1\), choose \(x_{m+1}\) as a solution of the minimization problem

$$\begin{aligned} \min _{x\in {X_1}} K_m(x),\quad K_m(x)=\max (|1-{d(x_0, x)^2}|, |f_1(x)|,\dots ,|f_{m}(x)| ). \end{aligned}$$

4. When \(x_1, x_2,\dots ,x_n\) and coordinate functions \(f_1(x),f_2(x),\dots ,f_n(x)\) are determined, compute the map \({\mathbf{F}}:{X_1}\rightarrow B_1^n\) defined by

$$\begin{aligned} {\mathbf{F}}(x)=P(f_1(x),\dots ,f_n(x)), \end{aligned}$$(48)

where P is the map from \({\mathbb {R}}^n\) to \(B_1^n\) defined as follows: \(P(v)=v\) if \(|v|\le 1\); otherwise, \(P(v)=v/|v|\).

5. Let \(\ell _1=\# X_1\) be the number of elements in \(X_1\) and compute the values

$$\begin{aligned}&\delta _1=\sup _{x,x'\in {X_1}} \bigg |d(x',x)-|{\mathbf{F}}(x')-{\mathbf{F}}(x)|\bigg |,\nonumber \\&\delta _2=\sup _{y\in Y(\ell _1)} \inf _{x\in {X_1}} |{\mathbf{F}}(x)-y| + \ell _1^{-1/n},\nonumber \\&\qquad \delta _a=\max (\delta _1,\delta _2), \end{aligned}$$(49)

where \(Y(\ell _1)=(h{\mathbb {Z}}^n)\cap B^n_1\) is the set of points in the unit ball whose coordinates are integer multiples of \(h=\ell _1^{-1/n}/\sqrt{n}\). Finally, the algorithm outputs the value of \(\delta _a\) and the map \({\mathbf{F}}\).
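The steps of Algorithm GHDist can be sketched in code as follows. This is an illustrative sketch under stated assumptions, not a reference implementation: the ball \(X_1\) is given by its full distance matrix, ties in the minimizations are broken by taking the first minimizer, and the grid \(Y(\ell _1)\) is intersected with the closed unit ball. The function name `gh_dist` and its signature are hypothetical.

```python
import math
from itertools import product


def gh_dist(d, x0, n):
    """Return (delta_a, F) for a finite ball X_1 with distance matrix d.

    d[i][j] is the distance between points i and j, x0 is the index of the
    center, n is the target dimension. F[i] is the image of point i in R^n.
    """
    size = len(d)
    pts = range(size)

    def coord(m):
        # Coordinate function (47) attached to the landmark with index m.
        return [0.5 * (d[x][x0] ** 2 - d[x][m] ** 2 + d[x0][m] ** 2) for x in pts]

    # Step 1: a point whose distance to x0 is as close to 1 as possible.
    landmarks = [min(pts, key=lambda x: abs(1 - d[x0][x]))]
    fs = [coord(landmarks[0])]

    # Steps 2-3: add landmarks minimizing K_m until n coordinates are available.
    while len(landmarks) < n:
        def k_m(x):
            return max([abs(1 - d[x0][x] ** 2)] + [abs(f[x]) for f in fs])
        landmarks.append(min(pts, key=k_m))
        fs.append(coord(landmarks[-1]))

    # Step 4: F = P(f_1, ..., f_n), with P the radial projection onto the unit ball.
    def project(v):
        norm = math.sqrt(sum(t * t for t in v))
        return v if norm <= 1 else tuple(t / norm for t in v)

    F = [project(tuple(f[x] for f in fs)) for x in pts]

    # Step 5: distortion delta_1 and covering term delta_2 over the grid Y(l_1).
    delta1 = max(abs(d[a][b] - math.dist(F[a], F[b])) for a in pts for b in pts)
    scale = size ** (-1.0 / n)
    h = scale / math.sqrt(n)
    kmax = int(1 / h)
    grid = (tuple(h * k for k in ks) for ks in product(range(-kmax, kmax + 1), repeat=n))
    # Assumption: grid points are kept when they lie in the closed unit ball.
    grid = [y for y in grid if math.sqrt(sum(t * t for t in y)) <= 1]
    delta2 = max(min(math.dist(fx, y) for fx in F) for y in grid) + scale
    return max(delta1, delta2), F


# Example: a quarter-step grid in the planar unit disk with the Euclidean metric.
disk = [(i / 4, j / 4) for i in range(-4, 5) for j in range(-4, 5) if i * i + j * j <= 16]
dm = [[math.dist(p, q) for q in disk] for p in disk]
delta_a, F = gh_dist(dm, disk.index((0.0, 0.0)), 2)
print(delta_a)
```

In this example the coordinate functions are inner products with two orthogonal unit vectors, so the distortion \(\delta _1\) vanishes up to rounding and \(\delta _a\) is determined by the covering term \(\delta _2\). The grid \(Y(\ell _1)\) has on the order of \(n^{n/2}\ell _1\) points, so the sketch is only practical for small n.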

Lemma 7

There is a universal constant \(C_{17}>0\) such that the following holds. Let \({X_1}\), \(x_0\) be as in the above algorithm, \(\delta >0\), and suppose that \(d_{\mathrm{GH}}({X_1},B_1^n)<\delta \) where \({X_1}\) and \(B_1^n\) are regarded as pointed metric spaces with distinguished points \(x_0\) and 0, resp. Then,

1. The output value \(\delta _a\) of the algorithm satisfies \(\delta _a<C_{17}n\delta \).

2. The output map \({\mathbf{F}}:{X_1}\rightarrow B_1^n\) is a \(\delta _a\)-isometry with \({\mathbf{F}}(x_0)=0\).

Proof

First we make some preliminary considerations. Let \(\delta _1\) be as in (49), and define

Here, \(\delta _a'\) is considered as a better approximation of the Gromov–Hausdorff distance \(d_{\mathrm{GH}}({X_1},B_1^n)\) than \(\delta _a\), but it is computationally more difficult to obtain.

Next we show that